LiDAR-Inertial Odometry in Dynamic Driving Scenarios using Label Consistency Detection

Abstract

In this paper, a LiDAR-inertial odometry (LIO) method that eliminates the influence of moving objects in dynamic driving scenarios is proposed. This method constructs binarized labels for 3D points of current sweep, and utilizes the label difference between each point and its surrounding points in map to identify moving objects. Firstly, the binarized labels, i.e., ground and non-ground are assigned to each 3D point in current sweep using ground segmentation. In actual driving scenarios, dynamic objects are always located on the ground. For most points scanned from moving objects, they cannot coincide with any existing structures in space. For a minority of moving objects’ points that are close to the ground, their labels exhibit differences with surrounding ground points. Thus, the points on moving objects are identified due to lacking of nearest neighbors in map or inconsistency with the labels of surround ground points. The nearest neighbors from global map are localized by voxel-location-based nearest neighbor search and the consistency is evaluated by comparing the label consistency with nearest neighbors, without involving any massive computations. Finally, the points on moving objects are removed. The proposed method is embeded into a self-developed LIO system (i.e., Dynamic-LIO), evaluated with six public datasets, and tested in both dynamic and static environments. Experimental results demonstrate that our method can identify moving objects with extremlely low computational overhead (i.e., 19ms/sweep), and our Dynamic-LIO can achieve state-of-the-art pose estimation accuracy in both static and dynamic scenarios. We have released the source code of this work for the development of the community.

Index Terms:

SLAM, localization, sensor fusion.I Introduction

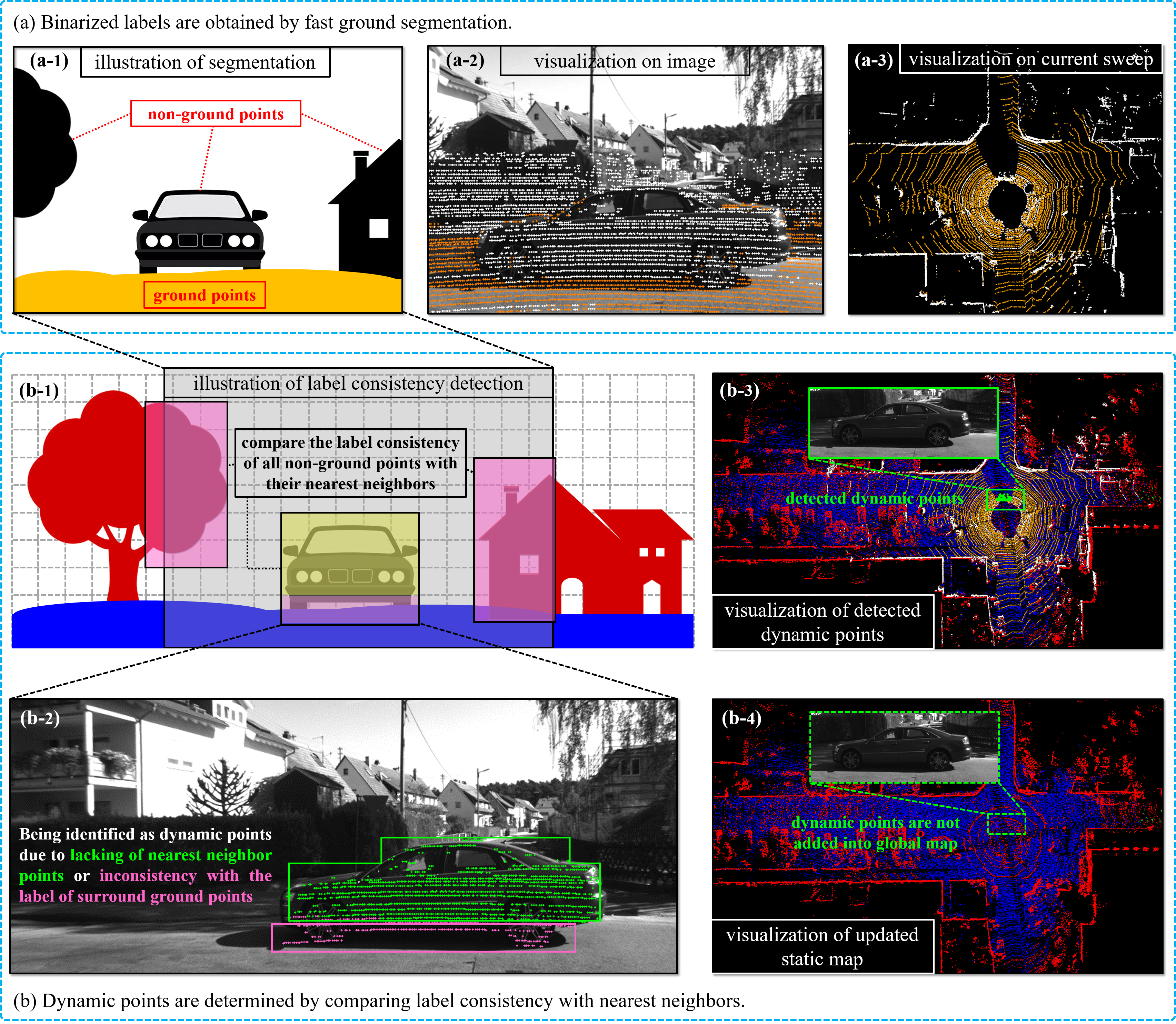

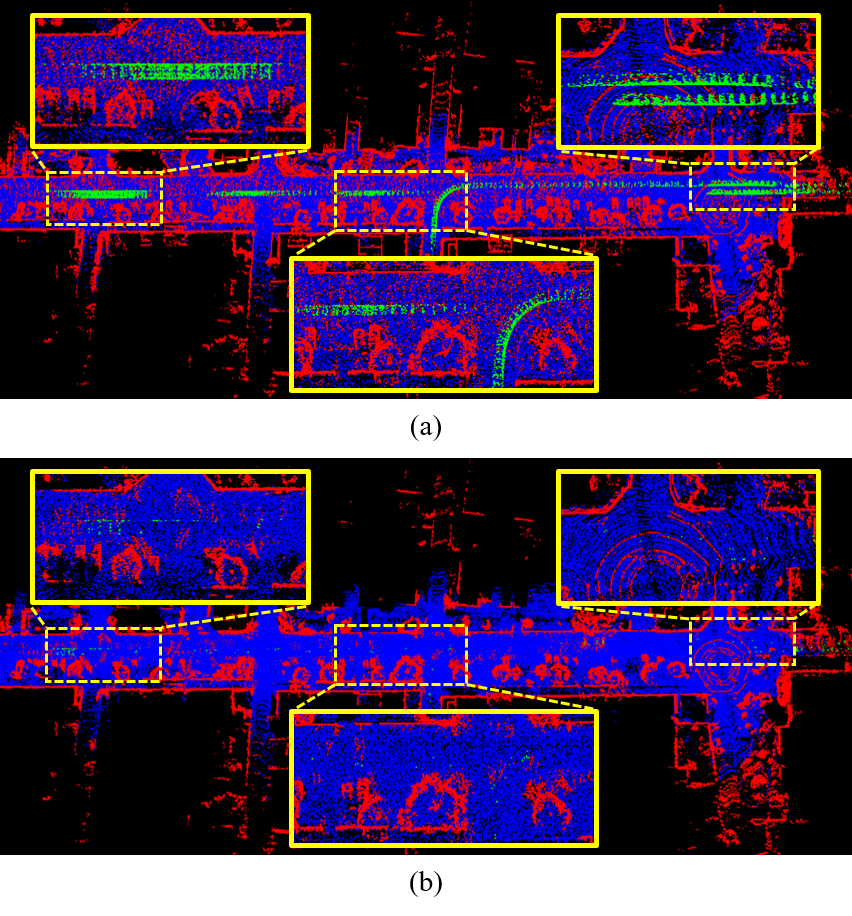

In recent years, 3D light detection and ranging (LiDAR) based state estimation methods, including LiDAR-inertial odometry (LIO), have played an important role in autonomous driving thanks to the strong strength of LiDAR to perceive 3D space. Theses methods can provide 15 degree-of-freedom (DOF) state estimation of vehicle platform and recover 3D structure of surrounding environment in real time. However, the scenarios where they apply are strictly limited by the assumption of static environment. In actual driving scenarios, moving vehicles or pedestrians can leave ghost tracks in the map (as shown in Fig. 1 (a)), leading to cumulative errors in state estimation and providing erroneous observational information for obstacle avoidance. Given the necessity for real-time state estimation and mapping in a LIO system, the computational cost of removing dynamic objects must be within the processing time budget for a single sweep. Hence, efficiently identifying moving objects from 3D point clouds is crucial.

To address the issues of poor map reconstruction and inaccurate state estimation caused by ghost tracks, researchers employ a range of approaches such as point correlation, visibility, occupancy probability and semantic information to identify and remove dynamic 3D points from input LiDAR sweeps. For point correlation [8], the correlation exists between static points, but there is no correlation between dynamic and static points. The connectivity of map points can be exploited to separate moving objects from the static environments. However, the construction and maintenance of a correlation graph involve computing pairwise Euclidean distances of batch 3D points, and the entire process requires substantial computational resources. For visibility [18, 39, 10], the static pixels on re-projected image planes remain invariant across multiple sweeps, whereas those dynamic pixels undergo displacements. However, the generation of re-projected image plane entails a computational process to map large number of LiDAR points from their original 3D space onto the 2D image plane. For occupancy probability [28, 38], the occupancy status of voxels corresponding to static environments remains constant over time, whereas the occupancy status of voxels occupied by dynamic objects will change with time. However, the estimation of an occupancy grid map need to combine multiple nearest static submaps and count the occupancy status of each voxel in each submap. The three aforementioned methods leverage extensive quantitative geometric computations and global statistics to identify moving objects in 3D point clouds, and they incur high computational costs, which limits their integration into LIO systems. Deep learning methods [7, 21] use learned semantic information for rapid qualitative separation of dynamic objects. However, they depend on extensive labeled data, risk failure with unlabeled classes, and necessitate powerful Graphic Processing Units (GPUs) for real-time operation, which can detract from resources available for subsequent tasks such as trajectory planning.

In contrast to existing approaches involving extensive quantitative geometric computations, global statistics or prior semantic information to classify moving objects and static environments, in this study, a qualitative identification criterion based on label difference with nearest neighbors is used to identify 3D points on moving objects. Since moving vehicles and pedestrians are both situated on the ground, the potential dynamic points only exist in non-ground space, while the bases of moving objects are adjacent to the ground. Based on this characteristic, a label consistency detection method, which can fastly identify moving objects without any prior semantic informations, is proposed to classify moving objects and static environments in driving scenarios. The core idea of the proposed label consistency detection method consists of two parts. First, a fast 2D connected components [14] is utilized to divide the 3D points of current sweep into binarized labels, i.e., ground points and non-ground points. Then, dynamic points are determined by comparing label consistency with nearest neighbors, which are directly localized by a voxel-location-based nearest neighbor search method. The whole dynamic point identification process including voxel-location-based nearest neighbor search and label consistency comparison, is devoid of any operations about geometric computations or global statistics. This characteristic ensures that the proposed method has the advantage of a expremely low computational overhead (i.e., 19ms/sweep). Finally, a self-developed LIO system (i.e., Dynamic-LIO) that uses this label consistency detection method is proposed to eliminate the influence of moving objects in driving scenarios. In order to guarantee that the final reconstructed map comprises solely static points, any detected dynamic points will be excluded from the global map (as shown in Fig. 1 (b)). Subsequently, the clean static global map can be used to perform label consistency detection for the next sweep, ensuring the sustainability of the proposed method.

Experimental results on three public datasets of dynamic environments, i.e., - [1], - [32] and -, demonstrate that our method achieves comparable preservation rate (PR), rejection rate (RR) and absolute trajectory error (ATE), and sighnificantly outperforms online dynamic point detection methods [10, 38, 25, 33] in terms of expremely low computational overhead (19ms/sweep). In addition, experimental results on three public datasets of static environments, i.e., [3], [37] and - [32], demonstrate that our Dynamic-LIO also outperforms state-of-the-art LIO systems for static scenes in terms of smaller ATE.

To summarize, the main contributions of this work are three aspects: 1) We propose a label consistency detection method for fast identification of 3D dynamic points. It circumvents the operations of extensive geometric computations or global statistics and thus achieves lightweight; 2) We develop a LIO system and integrate the proposed label consistency detection method into this LIO in a unified manner, improving the accuracy of pose estimation in dynamic scenes by eliminating the ghost tracks in the reconstructed map; 3) We have released the source code of our approach to facilitate the development of the community111https://github.com/ZikangYuan/dynamic_lio.

The rest of this paper is structured as follows. Sec. II reviews the relevant literature. Sec. III provides preliminaries. Secs. IV details our label consistency detection method and Sec. V introduces our system Dynamic-LIO, followed by experimental evaluation in Sec. VI. Sec. VII concludes the paper.

II Related Work

In this section, we review the related work about existing 3D dynamic point detection approaches without deep learning [24, 8, 39, 18, 15, 27, 20, 5, 28, 38] and current mainstream LIO systems for static and dynamic scenes [30, 26, 36, 12, 4, 6, 42, 41, 23, 25, 33, 34, 44]. Although there are some deep learning based 3D dynamic point detection methods [7, 21], they are weakly relevant to this work so we omit the detailed discussion of them.

Pomerleau el. al. [24] utilized the motion pattern of point to represent the correlation. They calculated the motion pattern of each 3D point and infer the dominant motion patterns within the map, then determined the points that do not fit the motion pattern as dynamic points. Dai et. el. [8] utlized the relative position between two points to represent the correlation, and then utilized the amplitude of relative position change over time as the criterion of consistency to identify dynamic points. However, the computational overhead of calculating motion pattern and maintaining map point correlation in large-scale outdoor scenarios is prohibitive. Yoon et. al. [39] proposed to simply query one sweep against another, and identify point with evident visibility difference as dynamic point. Removert [18] proposed a multi-resolution range image-based false prediction reverting algorithm. This method first conservatively retained definite static points and iteratively recover more uncertain static points by enlarging the query-to-map association window size. However, visibility-based approaches usually suffers from incidence angle ambiguity and occlusion issues. In addition, the generation of multiple projections on the spherical image plane and the assignment of a static value to each point in visibility-based approaches require high computational overhead. OctoMap [15] firstly proposed a framework to generate volumetric 3D environment model, which is based on octrees and uses probabilistic occupancy estimation. Given a registered set of 3D points, Schauer et. al. [27] build a regular voxel occupancy grid and then traverse it along the lines of sight between the sensor and the measured points to identify the differences in volumetric occupancy between multiple sweeps. Erasor [20] proposed the concept called pseudo occupancy to express the occupancy of unit space and then discriminate spaces of varying occupancy. Then, the region-wise ground plane fitting (R-GPF) method is adopted to distinguish static points from dynamic points within the candidate bins that potentially contain dynamic points. DORF [5] proposed a novel coarse-to-fine offline framework that exploits global 4D spatial-temporal LiDAR information to achieve clean static point cloud map generation. DORF first conservatively preserved the definite static points leveraging the receding horizon sampling (RHS) mechanism, then gradually recovered more ambiguous static points, guided by the inherent characteristic of dynamic objects in urban environments. [28] proposed to incrementally estimate high confidence free-space areas by modeling and accounting for sensing, state estimation, and mapping limitations during online robot operation. It can achieve robust moving object detection in complex unstructured environments. RH-Map [38] proposed a novel map construction framework based on 3D region-wise hash map structure, which adopts the two-layer 3D region-wise hash map structure and the region-wise ground plane estimation for dynamic object removal. Occupancy map-based approaches are usually accompanied by the nearest neighbor search, confidence calculation, occupancy probability statistics, relative spatial position calculation and other operations requiring batch geometric computation, which cause a significant computational burden.

In recent years, various LIO systems have been proposed in the robotics community. LIO-SAM [30] firstly formulated LIO as a factor graph, which allows the incorporation of a multitude of relative and absolute measurements, including loop closures, as factors from different sources into the system. In LINs [26], a pioneering integration of 6-axis IMU and 3D LiDAR was accomplished within an error state iterated Kalman filter (ESIKF) framework. This design ensures that the computational demands of the system remain tractable. Based on mathematical foundations, Fast-LIO [36] adapted a technique of solving Kalman gain [31], circumventing the need for high-order matrix inversion, thereby significantly alleviating the computational load. Building upon the advancements of Fast-LIO, Fast-LIO2 [35] introduced an innovative ikd-tree algorithm [2]. Compared to the conventional kd-tree, this algorithm offers reduced temporal expenditure in processes such as tree construction, traversal, and element removal. Point-LIO [12] proposed a point-by-point LIO framework that updates the state at each LiDAR point measurement, which allows an extremely high-frequency output. DLIO [4] proposed to preserve a third-order minimum within the realms of state prediction and point distortion calibration, thereby facilitating the acquisition of more precise pose estimation. IG-LIO [6] integrated the generalized-ICP (GICP) constraints and inertial constraints into a unified estimation framework. In addition, iG-LIO employed a voxel-based surface covariance estimator to estimate the surface covariances of scans, and utilized an incremental voxel map to represent the probabilistic models of surrounding environments. Semi-Elastic-LIO [42] proposed a semi-elastic optimization-based LiDAR-inertial state estimation method, which imparts sufficient elasticity to the state to allow it be optimized to the correct value. SR-LIO [41] adapted the sweep reconstruction method [43, 40], which segments and reconstructs raw input sweeps from spinning LiDAR to obtain reconstructed sweeps with higher frequency. Consequently, the frequency of estimated pose is also increased. Pfreundschuh et. al. [23] proposed an end-to-end occupancy grid based pipeline that can automatically label a wide variety of arbitrary dynamic objects, and embeded this network into a LiDAR odometry system. RF-LIO [25] utilized an adaptive multi-resolution range images to first remove dynamic objects, and then match LiDAR sweeps to the map for state estimation. ID-LIO [33] proposed a LiDAR-inertial odometry based on indexed point and delayed removal strategy for dynamic scenes, which builds on LIO-SAM. Although RF-LIO and ID-LIO have the ability to perform state estimation in dynamic scenarios, huge computational overhead makes them unable to run stably in real time.

III Preliminary

III-A Coordinate Systems

We denote , and as a 3D point in the world coordinate, the LiDAR coordinate and the IMU coordinate respectively. The world coordinate is coinciding with at the starting position.

We denote the LiDAR coordinate for taking the sweep at time as and the corresponding IMU coordinate at as , then the transformation matrix (i.e., external parameters) from to is denoted as , which consists of a rotation matrix and a translation vector . The external parameters are usually calibrated once offline and remain constant during online pose estimation. Therefore, we can represent as for simplicity. The pose from the IMU coordinate to the world coordinate is strictly defined as .

III-B Voxel map Management

The entire system maintains two global maps: the tracking-map and the output map. The former is utilized for state estimation, while the latter is utilized for label consistency detection and serves as the final reconstruction outcome. In Dynamic-LIO, the tracking-map has already removed out the vast majority of dynamic points. However, to prevent over-filtering that could lead to insufficient geometric information for LIO, we refrain from further processing the tracking-map and instead focus on the output map (as illustrated in Sec. IV-E). Thus compared to the tracking-map, the dynamic points in the output map are removed more thoroughly. Both the tracking-map and the output map are managed by voxel, whose voxel resolution is (unit: m) and each voxel contains a maximum of 20 points.

IV Label Consistency Detection

Label consistency detection aims to circumvent batch geometric computations and global statistics, which are prevalent in existing dynamic point detection approaches, thereby facilitating rapid identification of 3D dynamic points. To our knowledge, most existing methods primarily involve batch geometric computations and global statistics in aspects of nearest neighbor search and consistency evaluation. Consequently, we are committed to achieve the lightweight of these two aspects in our method.

The core premise of label consistency detection is that the moving objects in driving scenarios are in contact with the ground. Under this premise, we first construct the binarized labels (i.e., ground label and non-ground label) for each 3D point by segmenting the ground points from current input sweep (as illustrated in Fig. 2 (a)). All ground points are inherently static, and the potential dynamic points are exclusively found among non-ground points. If we have already prepared the static global map at the previous moment, aside from the new points to be added at a greater distance, each static point at current moment can find its corresponding nearest neighbor within the global map during map update. For LiDAR points scanned from moving objects, the lack of structural informations within the global map prevents the current position from coinciding with any existing static geometric structures in space. (as illustrated in the green area of Fig. 2 (b-1)). Thus, most LiDAR points scanned from moving objects are often unable to find nearest neighbors during registration and we identify thoes points as dynamic points (shown as the green points in Fig. 2 (b-2)). As for the remaining small subset of LiDAR points (shown as the pink points in Fig. 2 (b-2)), they may find ground points as their nearest neighbors. We then determine whether to classify them as dynamic points according to the proportion of ground points within the nearest neighbors. It is evident that throughout the process of evaluating label consistency, we only need to calculate the proportion of ground points among the nearest neighbors, without engaging in any batch geometric computations and global statistics. In addition, we utilize the voxel-location-based nearest neighbor search to obtain nearest neighbors, which can be directly located without any quantitative geometric distance calculation. The lightweight of these two core aspects ensures the low computational overhead of our method. Once the dynamic points at the current moment are identified, we utilize the estimated pose from LIO to register the static points into the global map to finish the map update, which can be used to identify dynamic points of next sweep, thereby ensuring the sustainability of our method.

Specially, the label consistency detection method is divided into five steps: binarized label construction, background separation, voxel-location-based nearest neighbor search, dynamic point determination and undetermined-point re-determination. In the following, we will provide a detailed description of each step.

IV-A Binarized Label Construction

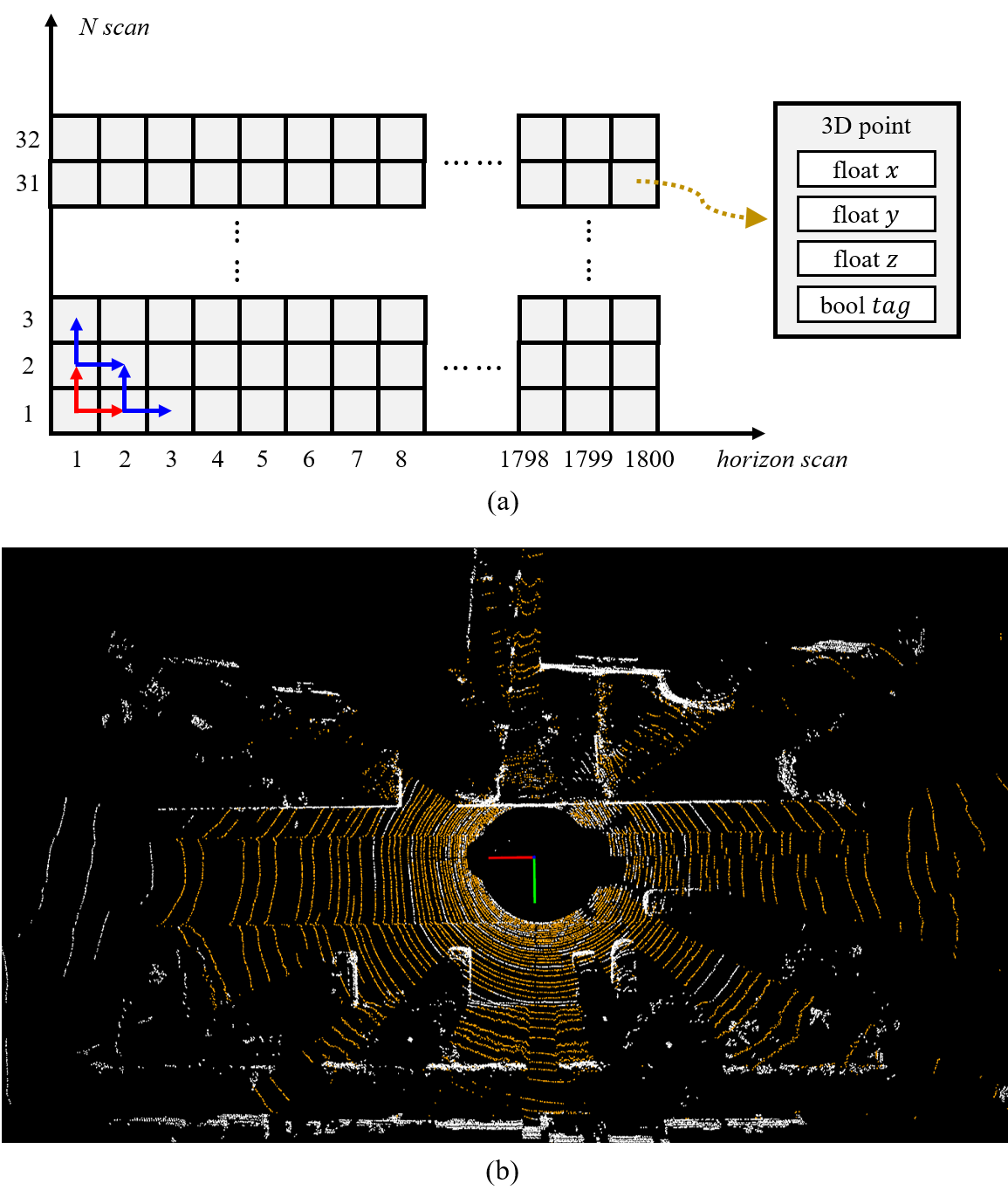

Before dynamic point identification, we first construct binarized descriptors, i.e., ground label and non-ground label, for each 3D point of current sweep. We utilize a fast 2D connected component method [14], which is the same as LeGO-LOAM [29], to separate ground points from current input sweep with very low computational cost. By default, the LiDAR is mounted horizontally on the vehicle, and the LiDAR’s -axis is perpendicular to the ground. If the LiDAR is equipped at an inclined angle, external parameters can be introduced to ensure the -axis remains perpendicular. After changing the -axis to perpendicular, we configure the range image [22] with the number of LiDAR lines ( in Fig. 3 (a)) as the vertical axis and the horizontal resolution ( in Fig. 3 (a)) as the horizontal axis, and position the 3D points of current sweep in their corresponding locations within the range image according to their horizontal and vertical line indices. In the range image, each point is equipped with , , coordinates in , as well as a Boolean variable , which is used to denote whether the point is a ground point. Initially, all are set to false. We set the of point at to . Then following the red arrows indicated in Fig. 3 (a), we calculate the pitch angles of two 3D points at the adjacent positions and to that at respectively. Then the pitch angle of to can be calculated as:

| (1) |

If the calculated pitch angle is less than a certain threshold (e.g., 5 degree in our system), is set as , which means the corresponding 3D point is determined as ground point. Similarily, we can calculate the pitch angle of to , and set value for . If the 3D point located at or is determined as ground point, we follow the blue arrows indicated in Fig. 3 (a) to indentify other points adjacent to them. The entire process is executed recursively, continuing until all pixels in the range image have been visited or the recursive exit condition is met. The visualization of segmented ground points is illustrated in Fig. 3 (b), where the orange points are labeled as “ground points” and the white points are labeled as “non-ground points”.

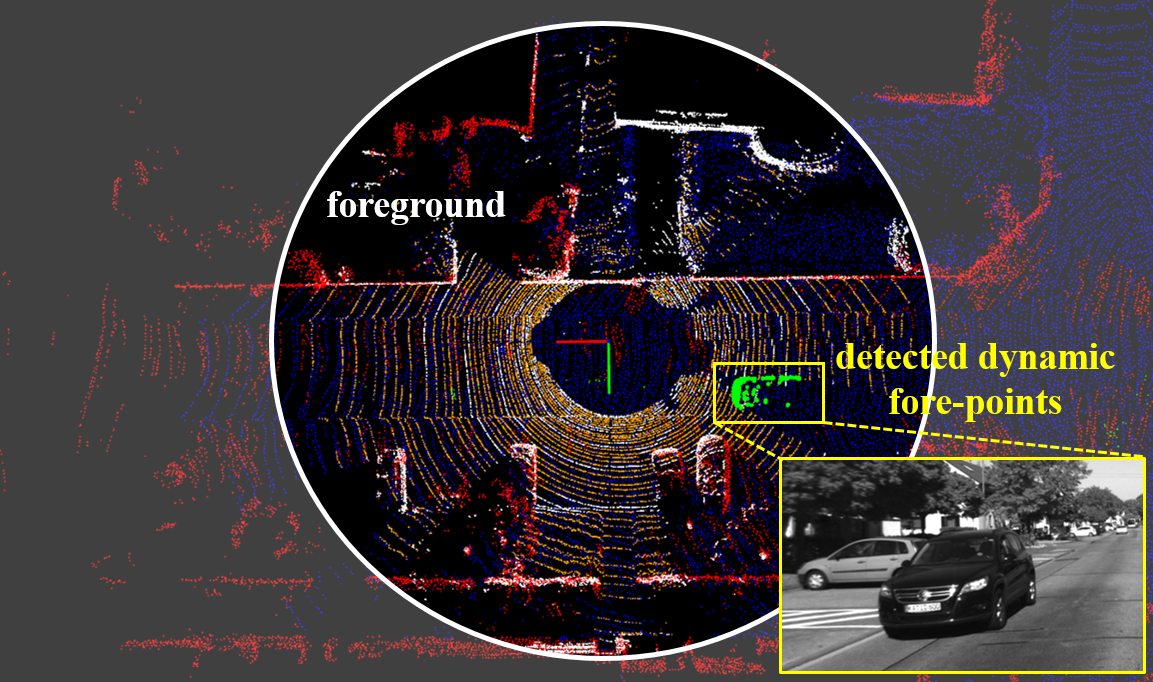

IV-B Background Separation

In the process of executing label consistency detection, it is necessary to find the nearest neighbors for each point of current sweep. Points that are close to the vehicle platform can reliably find their nearest neighbors, whereas points that are farther may fail due to the incomplete reconstruction of their locations. In outdoor driving scenarios (excluding extreme occlusion), the map structures within a 30 meter radius around the vehicle platform are usually already reconstructed. Therefore, we set a empirical threshold of 30 meters, and define points within 30 meters of the vehicle platform as fore-points and those beyond 30 meters as back-points. For fore-points and back-points, we employ determinations that are specifically tailored to their characteristics.

IV-C Voxel-Location-Based Nearest Neighbor Search

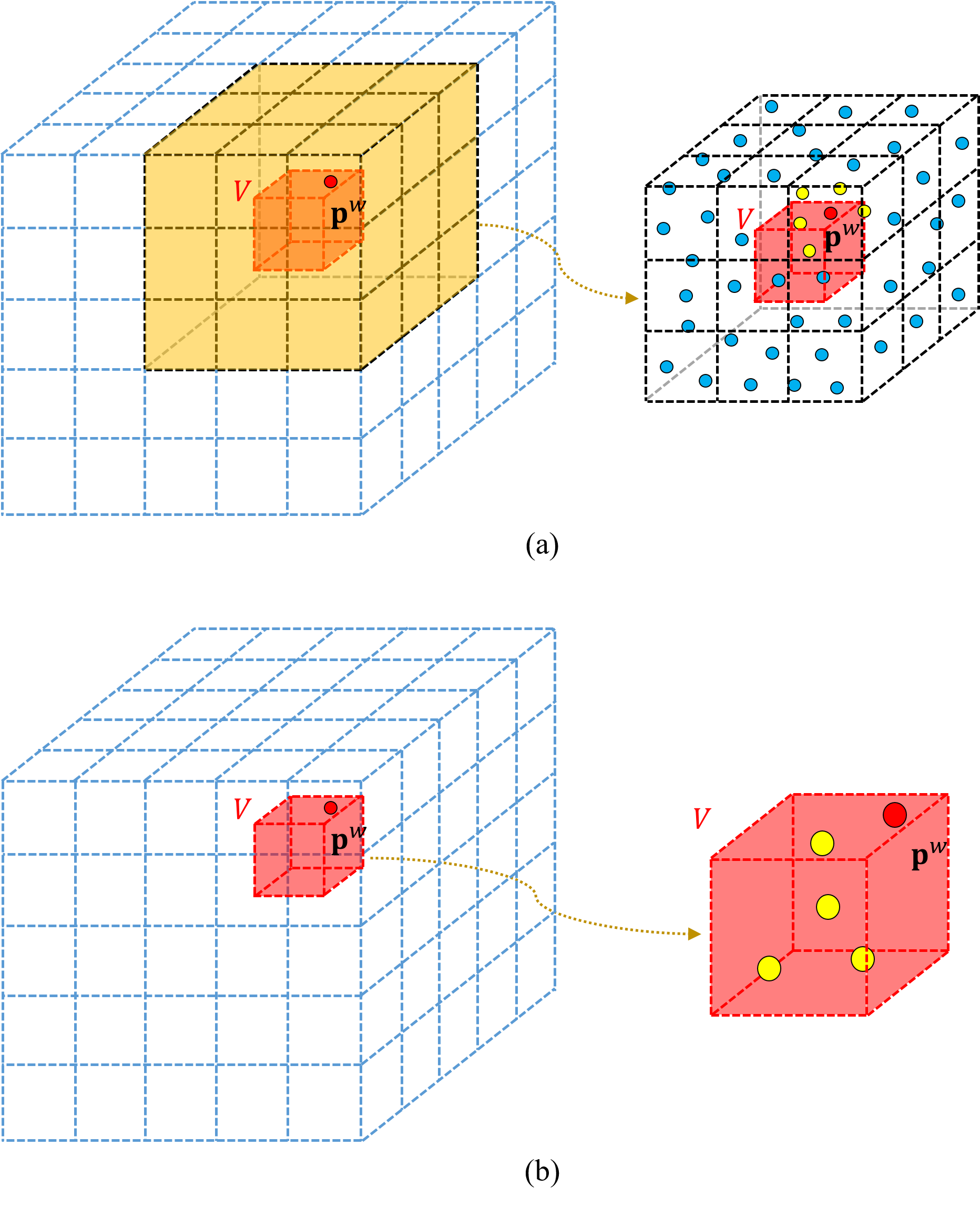

For a specific point with the “non-ground point” label, to determine whether its label is consistent with surrounding points in the global map, we first need to search for the nearest neighbors of . An intuitive alternative is to utilize the 8-nearest neighbor search which is the same as the nearest neighbor search method for point-to-plane distance computation (as illustrated in Fig. 4 (a)) in LIO. Specially, we locate the voxel to which belongs and the 8 voxels adjacent to , and set all points in these voxels as candidate points. Subsequently, the 20 nearest points of are identified from 9 candidate voxels by comparing the magnitudes of Euclidean distances to .

However, calculating the Euclidean distance of each candidate point to is an extremely time-consuming process. When LIO builds the point-to-plane distance residuals, in order to ensure that the fitted plane can reflect as much of the geometric information around as possible, we have to utilize the conventional 8-nearest neighbor search. Fortunately, only 600 point-to-plane distance residuals are required for estimating the pose of each sweep in our system, so the total computational overhead is acceptable. However, in order to ensure that the final output map does not contain dynamic points, it is necessary to determine each point of the current sweep, which requires searching the nearest neighbors for each point from the global map. A single sweep of one 32-line LiDAR can yield more than 50,000 points, making the conventional 8-nearest neighbor search inapplicable here.

In the proposed label consistency detection method, we qualitatively assess whether the non-ground point is a dynamic point by comparing its label with those of its surround points. Since the surround points are not involved in quantitative calculations, it is not necessary to strictly satisfy the concept of nearest neighbors. Instead, an approximate approach, i.e., voxel-location-based nearest neighbor search, can be adopted to significantly reduce the computational cost. As illustrated in Fig. 4 (b), we locate the voxel to which belongs and consider the other points within as the approximate nearest neighbors. Since the voxel map has a query operation with a computational complexity of , the entire nearest neighbor search process is extremely fast. In addition, the computational overhead associated with Euclidean distance is also saved. The voxel-location-based nearest neighbor search plays a crucial role in ensuring the low computational cost of label consistency detection, as evidenced by the results of the ablation study, which are documented in Sec. VI-G.

IV-D Dynamic Point Determination

In Sec. IV-B, we categorize the points of current sweep into fore-points and back-points based on their distance from the vehicle platform. For dynamic point determination of fore-points and back-points, we employ the following two distinct modes.

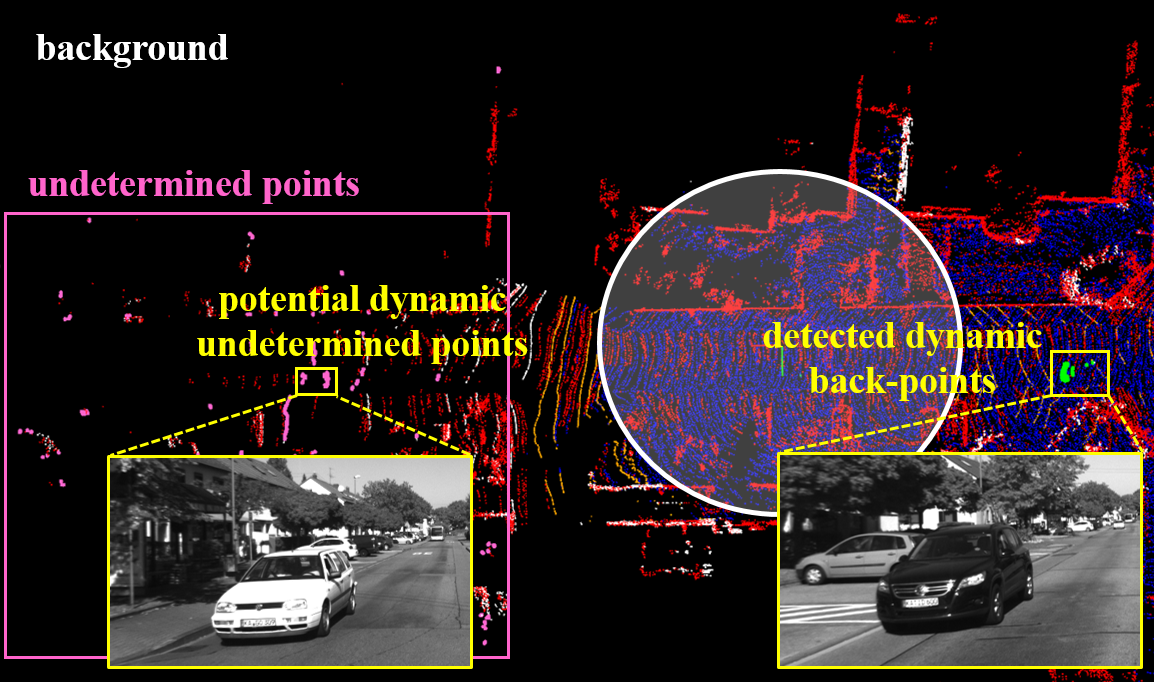

Mode for fore-points. If the number of nearest neighbors is below a certain threshold (5 in our system), it indicates that the location of was originally unoccupied, and thus is classified as a dynamic point. If the number of nearest neighbors is sufficiently large (greater than 5), we calculate the proportion of non-ground points among all nearest neighbors. If this proportion is sufficiently low (less than 30), is classified as a static point and added to both the tracking-map and the output map. Conversely, if the proportion is larger than 30, is classified as a dynamic point and excluded in the map. Inevitably, this determination criteria potentially results in the erroneous removal of some static points in close proximity to the ground. Nonetheless, the points most commonly affected by this misfiltration are situated at the transition between walls and the ground. Despite the possibility of such misfiltration, the overall geometric integrity of the scene remains intact, and it does not affect the performance of the LIO system. The visualization of dynamic point determination results for fore-points is shown in Fig. 5.

Mode for back-points. If the number of nearest neighbors is below a certain threshold (5 in our system), we cannot identify the back-point as a dynamic point, because it is possible that the location has not yet been reconstructed, preventing the obtainment of the nearest neighbors. Such points are labeled as undetermined-points, and a determination will be made once the vehicle platform continues to move and the geometric structures of the locations of these points are recovered. To ensure that newly acquired point clouds can be properly registered during state estimation, it is necessary to incorporate the undetermined-points into the tracking-map. This will not significantly affect the accuracy of state estimation, as even if there are dynamic objects among the back-points, the number of LiDAR points scanned onto them is very sparse. As for the final output map, it is imperative to ensure that it contains as few dynamic points as possible, hence the determination for undetermined-points will be conducted subsequently. When the number of nearest neighbors is sufficiently large (greater than 5), the processing approach is the same as that for fore-points, and the static points are added to both the tracking-map and the output map. The visualization of dynamic point determination results for back-points is shown in Fig. 6.

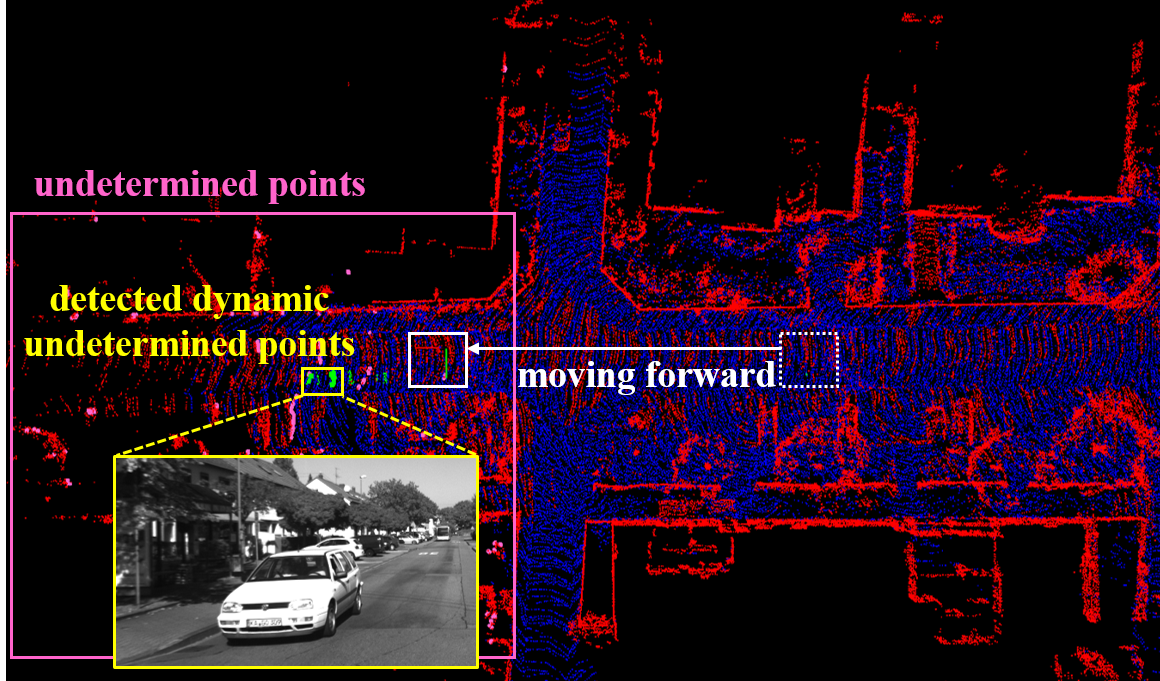

IV-E Undetermined-Point Re-Determination

As mentioned in Sec. IV-D, some back-points may fail to find nearest neighbors due to the incomplete reconstruction of their locations. For such points, we label them as undetermined points and place them in a container. As the vehicle platform continues to move forward, the geometric structure informations of previously unreconstructed positions are recovered (as shown in Fig. 7). Then we can make re-determination for those undetermined-points. When a point in the undetermined point container is close to the current position of the vehicle platform (less than 30 meter), it is highly likely that the geometric structure information around has been reconstructed. We can then determine whether is a dynamic point. If the number of nearest neighbors is below a certain threshold (5 in our system), it suggests that the location of was originally unoccupied, leading to the classification of as a dynamic point. If the number of nearest neighbors is larger than the threshold of 5, we calculate the proportion of non-ground points among all nearest neighbors. If this proportion is sufficiently low (less than 30), it is classified as a static point and added to the output map. On the contrary, if the proportion is not smaller than the threshold of 30, it is classified as a dynamic point and would not be included in output map. If an undetermined-point is more than 30 meters away from the vehicle platform’s position for 10 consecutive sweeps, it is likely to be a sparse background point at far distance. Thus, we directly classify it as a static point and add it to the output map.

V Our System Dynamic-LIO

V-A System Overview

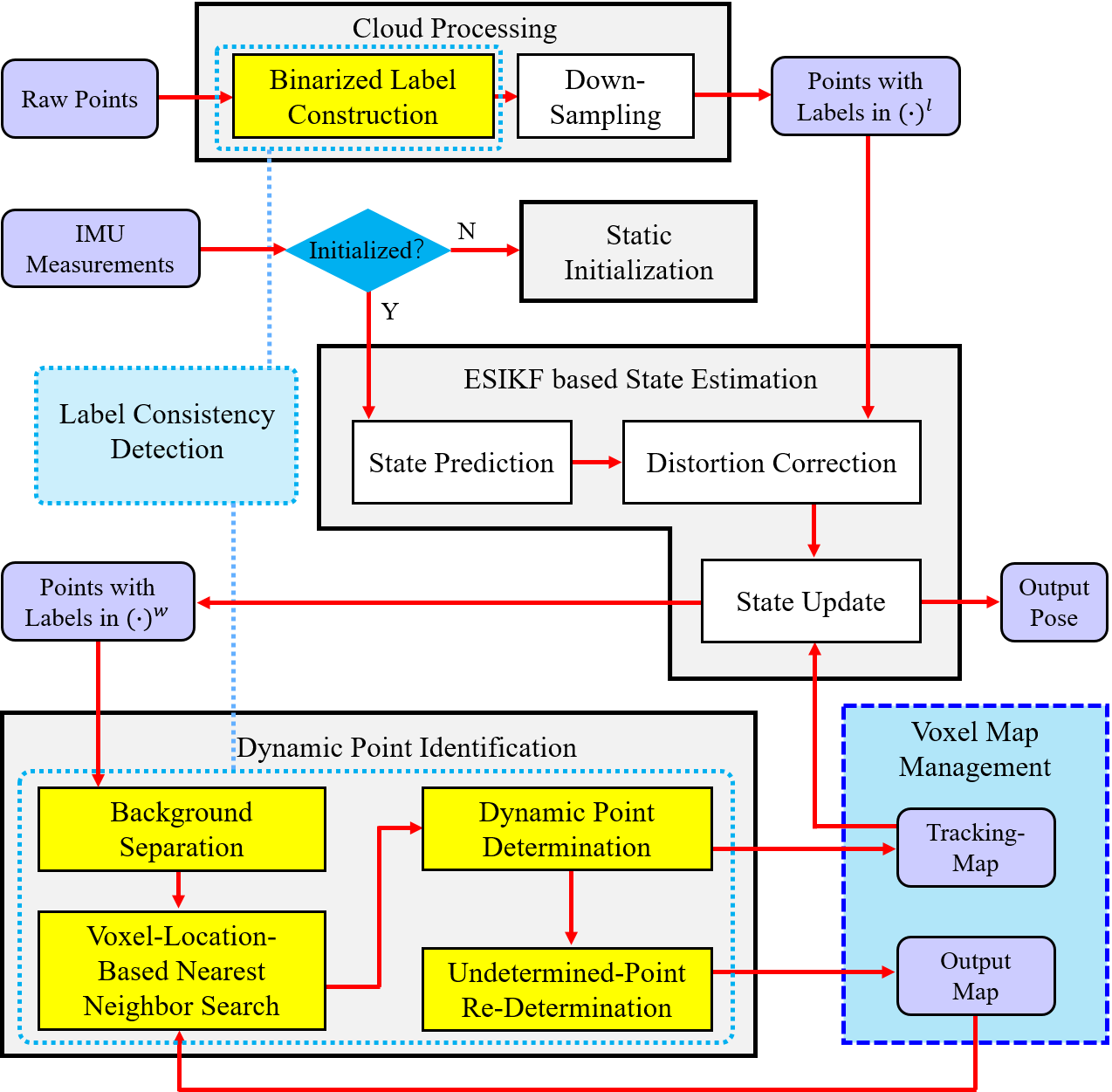

Fig. 8 illustrates the framework of our self-developed system Dynamic-LIO which consists of four main modules: cloud processing, static initialization, ESIKF based state estimation and dynamic point identification. The cloud processing module constructs binarized label (i.e., ground label or non-ground label) for each 3D point of current sweep. Subsequently, it performs spatial down-sampling to ensure uniform density of current point cloud. The static initialization module [11] utilizes the IMU measurements to estimate some state parameters such as gravitational acceleration, accelerometer bias, gyroscope bias and initial velocity. The ESIKF based state estimation module estimates the state of current sweep, and utilize the estimated pose to transform all points of current sweep from to . The dynamic point identification module identifies dynamic points from current input point cloud data, to ensure that the global map contains only static points. The yellow rectangles indicate the specific locations of five steps of label consistency detection within the overall system framework.

V-B Down-Sampling

To alleviate the substantial computational load caused by the overwhelming number of 3D points collected by a LiDAR in a single sweep, we implement a decimation strategy on point cloud. Initially, a uniform subsampling approach is applied to retain a single point for every set of four. Subsequently, we integrate the uniformly subsampled points into a voxel grid defined by a (unit: m) resolution, ensuring that each voxel is occupied by a solitary point.

It is worth noting that the down-sampling of current input sweep must be performed after binarized label construction. The reason is that down-sampling can disrupt the adjacency relationships of 3D points in range images, which can affect the execution of binarized label construction.

V-C Static Initialization

In our Dynamic-LIO, a static initialization procedure is employed to estimate essential parameters, including initial poses, initial velocities, gravitational forces, as well as biases in both accelerometer and gyroscope measurements. For a detailed elucidation of the methodology, please refer to reference [11].

V-D ESIKF based State Estimation

We adapt the error state iterated Kalman filter (ESIKF) to perform state estimation, which is the same as Fast-LIO2 [35]. Fast-LIO2 utilized their own self-developed toolbox IKFoM [13] for the implementation of on-manifold Kalman filter, and we utilized the more widely recognized Eigen3 library [9] to implement this function. We have documented the entire execution process of our ESIKF in Appendices for readers to refer the details of implementation.

It is worth mentioning that during state estimation, we need to find the nearest neighbors for randomly selected 600 points of current sweep from the global map, and fit a plane with these nearest neighbors to construct point-to-plane distance constraints. To ensure that the final fitted plane as accurately as possible reflect the surrounding geometric information, we still use the conventional 8-nearest neighbor search method here. Additionally, we search for nearest neighbors based on the tracking-map. Compared to the output map, the tracking-map is more conducive to the accurate and robust running of LIO. Because the tracking-map retains enough geometric details while removing out the vast majority of dynamic points.

VI Experiments

We evaluate the overall performance of our method on six autonomous driving scenario datasets: - [1], - [32], - [16], [3], [37] and - [32]. Among them, -, - and - are three public datasets collected at dynamic scenes, , and - are three public datasets collected at static scenes. - is collected by a 64-line Velodyne LiDAR and each LiDAR point has its unique semantic label. Thus, - is used to evaluate the preservation rate (PR) and rejection rate (RR) of proposed label consistency based dynamic point detection and removal method. - is collected by a 32-line Robosense LiDAR and IMU, and - is collected by a 32-line Velodyne LiDAR and IMU. These two datasets are used to evaluate the improvement of dynamic point detection and removal method on pose estimation in terms of absolute trajectory error (ATE). Both , and - are collected by a 32-line Velodyne LiDAR and IMU. These three datasets are used to show the outstanding performance of our self-developed LIO system, and demonstrate that the proposed dynamic point detection and removal method has no negative effect on the accuracy of LIO in static scenes. Details of all the 24 sequences used in this section, including name, duration, and whether they contain dynamic objects, are listed in Table I. A consumer-level computer equipped with an Intel Core i7-11700 and 32 GB RAM is used for all experiments.

| Name |

|

|

|||||

|---|---|---|---|---|---|---|---|

| semantic-kitti-00 | 00:14 | Yes | |||||

| semantic-kitti-01 | 00:10 | Yes | |||||

| semantic-kitti-02 | 00:09 | Yes | |||||

| semantic-kitti-05 | 00:33 | Yes | |||||

| semantic-kitti-07 | 00:20 | Yes | |||||

| CA-MarktStreet | 24:35 | Yes | |||||

| CA-RussianHill | 26:57 | Yes | |||||

| TST | 13:05 | Yes | |||||

| Whampoa | 25:37 | Yes | |||||

| 2012-01-08 | 92:16 | No | |||||

| 2012-02-02 | 98:37 | No | |||||

| 2012-02-04 | 77:39 | No | |||||

| 2012-05-11 | 83:36 | No | |||||

| 2012-05-26 | 97:23 | No | |||||

| 2012-06-15 | 55:10 | No | |||||

| 2012-08-04 | 79:27 | No | |||||

| 2012-09-28 | 76:40 | No | |||||

| 2018-07-19 | 15:26 | No | |||||

| 2019-01-31 | 16:00 | No | |||||

| 2019-04-18 | 11:59 | No | |||||

| 2018-07-20 | 16:45 | No | |||||

| 2018-07-13 | 16:59 | No | |||||

| HK-2019-01-17 | 05:18 | No | |||||

| HK-2019-04-26-1 | 02:30 | No |

VI-A PR and RR Comparison with the State-of-the-Arts

We compare our label consistency based dynamic point detection method with three state-of-the-art 3D point-based dynamic point detection methods, i.e., Removert [18], Erasor [20] and Dynamic Filter [10], on - dataset [1]. Among them, Removert and Erasor are offline methods which need the pre-built map as input, Dynamic Filter and our method are online methods which do not rely on any prior information.

| offline | online | |||

|---|---|---|---|---|

| Removert | Erasor | Dynamic Filter | Ours | |

| 86.83 | 93.98 | 90.07 | 90.36 | |

| 95.82 | 91.49 | 87.95 | 88.43 | |

| 83.29 | 87.73 | 88.02 | 88.25 | |

| 88.17 | 88.73 | 90.17 | 90.31 | |

| 82.04 | 90.62 | 87.94 | 89.28 | |

| offline | online | |||

|---|---|---|---|---|

| Removert | Erasor | Dynamic Filter | Ours | |

| 90.62 | 97.08 | 91.09 | 90.73 | |

| 57.08 | 95.38 | 87.69 | 88.41 | |

| 88.37 | 97.01 | 86.10 | 86.22 | |

| 79.98 | 98.26 | 84.65 | 85.84 | |

| 95.50 | 99.27 | 86.80 | 87.34 | |

VI-B ATE Comparison with the State-of-the-Arts

We compare our Dynamic-LIO with two state-of-the-art LIO systems for dynamic scenes, i.e., RF-LIO [25] and ID-LIO [33], on - [32] and - [16] datasets. Both RF-LIO, ID-LIO have loop detection module, and use GTSAM [17] to optimize the factor graph. Thus, we also add the same loop detection module and global optimization module to Dynamic-LIO when comparing with them. Both results of RF-LIO and ID-LIO are recorded from their literatures because they have not released the code. The selected four sequences both encompass highly dynamic scenarios and can effectively evaluate the performance of LIO systems in dynamic scenes.

| RF-LIO | ID-LIO | Ours | |

|---|---|---|---|

| 15.89 | 28.02 | 12.96 | |

| 12.17 | 15.34 | 4.84 | |

| - | 1.06 | 3.90 | |

| - | 3.45 | 6.66 |

-

Denotations: ”-” means the corresponding value is not available.

Results in Table IV demonstrate that the accuracy of our Dynamic-LIO is superior to that of RF-LIO and ID-LIO on and . Since RF-LIO is neither open-sourced nor tested on the - dataset, we are unable to obtain its results on sequence and . Although ID-LIO achieves smaller ATE than our system on - dataset, our act of open-sourcing the code better substantiates the reproducibility of our results.

We also compare our Dynamic-LIO with six state-of-the-art LIO systems for static scenes, i.e., LiLi-OM [19], LIO-SAM [30], Fast-LIO2 [35], DLIO [4], IG-LIO [6] and Point-LIO [12], on [3], [37] and - [32] datasets, to demonstrate that our Dynamic-LIO still has excellent pose estimation performance in static scenes. The six methods we compared have released corresponding code, thus we obtain the results of them based on the source code provided by the authors.

| LiLi- OM | LIO- SAM | Fast- LIO2 | DLIO | IG- LIO | Point- LIO | Ours | |

|---|---|---|---|---|---|---|---|

| 50.71 | 1.85 | 3.57 | 3.27 | 1.85 | 2.55 | 1.54 | |

| 91.86 | 7.18 | 2.00 | 1.80 | 1.72 | 2.45 | 1.74 | |

| 92.93 | 2.16 | 2.77 | 5.35 | 2.92 | 5.31 | 2.23 | |

| 185.24 | 2.46 | 3.14 | 1.84 | 11.24 | 1.67 | ||

| 141.83 | 2.60 | 12.44 | 2.12 | 14.89 | 2.24 | ||

| 50.42 | 2.97 | 2.37 | 2.98 | 1.82 | 4.39 | 2.05 | |

| 137.05 | 2.26 | 2.59 | 7.84 | 2.40 | 16.28 | 2.13 | |

| 2.65 | 7.72 | 1.72 | 16.22 | 1.66 | |||

| 67.16 | - | 15.13 | 14.25 | 17.37 | 22.71 | 13.92 | |

| 38.17 | - | 21.21 | 13.85 | 21.27 | 23.02 | 16.09 | |

| 10.70 | - | 10.81 | 55.28 | 13.75 | 13.81 | 9.10 | |

| 70.98 | - | 15.20 | 18.05 | 16.44 | 21.76 | 9.63 | |

| 62.57 | - | 13.24 | 14.95 | 19.88 | 9.63 | ||

| 1.68 | 1.20 | 2.44 | 1.15 | 1.07 | 1.03 | ||

| 3.11 | 3.13 | 3.24 | 3.31 | 2.82 | 3.08 |

-

Denotations: “” means the system fails to run entirely on the corresponding sequence, and “-” means the corresponding value is not available.

| Ours w/o Considering Undetermined-Points | Ours | |

|---|---|---|

| 90.09 | 90.36 | |

| 88.17 | 88.43 | |

| 87.59 | 88.25 | |

| 89.31 | 90.31 | |

| 88.43 | 89.28 |

| Ours w/o Considering Undetermined-Points | Ours | |

|---|---|---|

| 90.05 | 90.73 | |

| 87.86 | 88.41 | |

| 86.22 | 86.22 | |

| 84.08 | 85.84 | |

| 87.30 | 87.34 |

|

Ours | ||||

| Dynamic Scenes | 13.98 | 12.96 | |||

| 4.88 | 4.84 | ||||

| 5.21 | 3.90 | ||||

| 9.19 | 6.66 | ||||

| Static Scenes | 1.60 | 1.54 | |||

| 1.77 | 1.74 | ||||

| 2.23 | 2.23 | ||||

| 2.55 | 1.67 | ||||

| 2.44 | 2.24 | ||||

| 1.93 | 2.05 | ||||

| 2.06 | 2.13 | ||||

| 1.92 | 1.66 | ||||

| 13.76 | 13.92 | ||||

| 17.05 | 16.09 | ||||

| 9.66 | 9.10 | ||||

| 12.97 | 9.63 | ||||

| 9.74 | 9.63 | ||||

| 1.04 | 1.03 | ||||

| 3.31 | 3.08 |

| Dynamic Filter (Front-End) | RH-Map | Ours | |

| 55.71 | 63.96 | 41.60 | |

| 93.23 | 34.93 | ||

| 66.98 | 46.00 | ||

| 64.61 | 34.87 | ||

| 51.02 | 27.61 | ||

| CPU model | i7-8559U | i7-12700H | i7-11700 |

| clock speed | 2.7GHz | 2.7Ghz | 2.5GHz |

| RF-LIO | ID-LIO | Ours | |

| 96 | 121 | 23.33 | |

| 121 | 100 | 21.50 | |

| - | 96 | 16.46 | |

| - | 99 | 16.41 | |

| CPU model | i5 | i7-10700K | i7-11700 |

| clock speed | 1.33.7GHz | 3.8GHz | 2.5GHz |

-

Denotations: “-” means the corresponding value is not available.

| Cloud Processing | State Estimation | Label Consistency Detection | Sum | |||

|---|---|---|---|---|---|---|

| Binarized Label Construction | Dynamic Point Identification | Total | ||||

| 7.49 | 27.98 | 1.85 | 4.28 | 6.13 | 41.60 | |

| 2.01 | 24.57 | 1.84 | 6.51 | 8.35 | 34.93 | |

| 7.11 | 36.07 | 1.88 | 0.94 | 2.82 | 46.00 | |

| 7.66 | 18.93 | 1.85 | 6.43 | 8.28 | 34.87 | |

| 7.94 | 19.67 | 1.80 | 1.21 | 3.01 | 27.61 | |

| 5.45 | 13.48 | 0.53 | 3.87 | 4.40 | 23.33 | |

| 8.30 | 13.20 | 0.68 | 0.45 | 1.13 | 21.50 | |

| 6.07 | 7.31 | 1.27 | 1.81 | 3.08 | 16.46 | |

| 7.14 | 7.03 | 1.33 | 0.91 | 2.24 | 16.41 | |

| 5.76 | 21.78 | 0.70 | 0.65 | 1.35 | 28.89 | |

| 6.38 | 23.12 | 0.75 | 0.50 | 1.25 | 30.75 | |

| 5.91 | 22.20 | 0.72 | 0.75 | 1.47 | 29.58 | |

| 5.09 | 20.31 | 0.67 | 0.59 | 1.26 | 26.66 | |

| 5.12 | 19.81 | 0.68 | 0.60 | 1.28 | 26.21 | |

| 6.52 | 20.48 | 0.78 | 0.76 | 1.54 | 28.54 | |

| 5.97 | 24.43 | 0.74 | 0.58 | 1.32 | 31.72 | |

| 5.07 | 19.64 | 0.65 | 0.74 | 1.39 | 26.10 | |

| 5.03 | 14.23 | 1.01 | 1.51 | 2.52 | 21.78 | |

| 4.79 | 16.91 | 0.97 | 1.83 | 2.80 | 24.50 | |

| 4.45 | 14.27 | 0.90 | 2.28 | 3.18 | 21.90 | |

| 4.92 | 14.51 | 0.99 | 1.20 | 2.19 | 21.62 | |

| 5.01 | 15.14 | 0.97 | 1.42 | 2.39 | 22.54 | |

| 6.54 | 11.05 | 0.98 | 0.39 | 1.37 | 18.96 | |

| 6.91 | 9.22 | 1.28 | 2.03 | 3.31 | 19.44 | |

|

|

|||||

|---|---|---|---|---|---|---|

| 78.53 | 6.13 | |||||

| 115.99 | 8.35 | |||||

| 81.04 | 2.82 | |||||

| 43.22 | 8.28 | |||||

| 46.63 | 3.01 | |||||

| 21.69 | 4.40 | |||||

| 21.58 | 1.13 | |||||

| 15.20 | 3.08 | |||||

| 15.50 | 2.24 | |||||

| 8.76 | 0.65 | |||||

| 9.04 | 0.50 | |||||

| 8.78 | 0.75 | |||||

| 8.88 | 0.59 | |||||

| 9.35 | 0.60 | |||||

| 9.42 | 0.76 | |||||

| 9.05 | 0.58 | |||||

| 8.63 | 0.74 | |||||

| 11.50 | 1.51 | |||||

| 10.56 | 1.83 | |||||

| 11.44 | 2.28 | |||||

| 12.47 | 1.20 | |||||

| 10.92 | 1.42 | |||||

| 9.54 | 0.39 | |||||

| 18.16 | 2.03 |

-

Denotations: ”LCD” is the abbreviation of ”Label Consistency Detection”.

Results in Table V demonstrate that our Dynamic-LIO outperforms state-of-the-arts for more than half sequences in terms of smaller ATE. Although IG-LIO achieves comparable results to our system on dataset, it shows poor accuracy on dataset. In addition, although our accuracy is not the best on , , , and , we are very close to the best accuracy. “-” means the corresponding value is not available. LIO-SAM needs 9-axis IMU data as input, while the dataset only provides 6-axis IMU data. Therefore, we cannot provide the results of LIO-SAM on the dataset. “” means the system fails to run entirely on the corresponding sequence. Except for our system, Fast-LIO2 and Point-LIO, other systems break down on several sequences, which also demonstrate the robustness of our system.

VI-C Ablation Study of Undetermined-Points

In our system, the purpose of incorporating undetermined-points is to remove dynamic points as much as possible, thereby increasing the proportion of static points in the output map. In this section, we validate the necessity of incorporating undetermined-points through comparing the PR and RR value of our Dynamic-LIO with and without considering undetermined-points.

VI-D Ablation Study of Dynamic Point Removal for Pose Estimation

In this section, we evaluate the effectiveness of removing dynamic points for pose estimation by comparing the ATE results of our Dynamic-LIO with and without removing dynamic points.

Results in Table VIII demonstrate that removing dynamic points can enhance the pose estimation accuracy of our Dynamic-LIO in dynamic scenes, especially on dataset. In static scenes, our label consistency based dynamic point detection and removal has no negative effect on the pose estimation accuracy, which also reflects the robustness and practicability of the proposed method.

VI-E Time Consumption Comparison with the State-of-the-Arts

We compare the time consumption of our label consistency based dynamic point detection and removal method with two state-of-the-art online 3D point-based dynamic point detection and removal methods, i.e., Dynamic Filter [10] and RH-Map [38], on - dataset [1]. Then, we compare the time consumption of our Dynamic-LIO with RF-LIO and ID-LIO. Both results of other approaches are recorded from their literatures because they have not released the code.

Results in Table IX demonstrate that the time consumption of our dynamic point detection and removal method is much smaller than Dynamic Filter and RH-Map. The front-end of Dynamic Filter requires 55.71ms to process the data of a single sweep. When accounting for the back-end overhead, the total duration for processing a single sweep will be even longer. Given that the current LiDAR acquisition frequency is typically between 1020Hz, this implies that the processing time for a single sweep must be within 50ms to ensure the real-time performance. It can be seen that neither Dynamic Filter nor RH-Map can guarantee real-time capability, whereas our method can rum in real time stably. Since the - dataset does not include IMU data, we complete pose estimation and mapping in a LiDAR-only odometry mode while simultaneously detecting and removing dynamic objects online. Therefore, the time consumption recorded in Table IX represents the total time cost for both LiDAR-only odometry and dynamic point detection and removal, and the results for Dynamic Filter and RH-Map are the same. Although the testing platforms are not entirely the same, the CPUs used for testing Dynamic Filter and RH-Map have a higher clock speed than the one we used. This also serves to a certain extent as evidence of the reference value of our experimental results. Results in Table X demonstrate that the time consumption of our Dynamic-LIO is much smaller than RF-LIO [25] and ID-LIO [33], while our system operates at a speed approximately 5X faster than that of RF-LIO and ID-LIO. Since RF-LIO is neither open-sourced nor tested on the - dataset, we are unable to obtain its results on sequence and . As stated in ID-LIO [33], the authors tested their system using an i5 CPU. However, no specific model was provided in [33], thus we record the range of clock speeds for the entire i5 series of CPUs in Table X. Furthermore, although the i7-11700 has an advantage over the i5 series and i7-8559U in terms of thread count, our entire Dynamic-LIO system is implemented based on a single thread and does not take advantage of the multi-threading capability.

VI-F Time Consumption of Each Module

We evaluate the runtime breakdown (unit: ms) of our system for all testing sequences. For each sequence, we test the time consumption of cloud processing (except for binarized label construction), state estimation and label consistency detection. The label consistency detection module can be further decomposed into two sub-steps: binarized label construction and dynamic point identification. Results in Table XI show that our system takes only 19ms to identify dynamic points of a sweep, while the total duration for completing all tasks of LIO is 1646ms. This implies that our method can accomplish the dynamic point detection and removal with extremely low computational overhead in LIO systems.

VI-G Ablation Study of Nearest Neighbor Search

As mentioned in Sec. IV-C, compared to the conventional 8-nearest neighbor search, the voxel-location-based nearest neighbor search can significantly reduce the computational cost required for nearest neighbor search in label consistency detection. This subsection will provides quantitative comparative results to substantiate this conclusion.

Table XII demonstrates that the voxel-location-based nearest neighbor search we employ has achieved an order-of-magnitude reduction in time consumption compared to the conventional 8-nearest neighbor search, primarily for the following two reasons: (1) The voxel-location-based nearest neighbor search only requires to process the voxel to which the current point belongs, whereas the 8-nearest neighbor search requires to process the voxel to which the current point belongs and its eight adjacent voxels; (2) The voxel-location-based nearest neighbor search does not necessitate the computation of the Euclidean distance between candidate points and the current point.

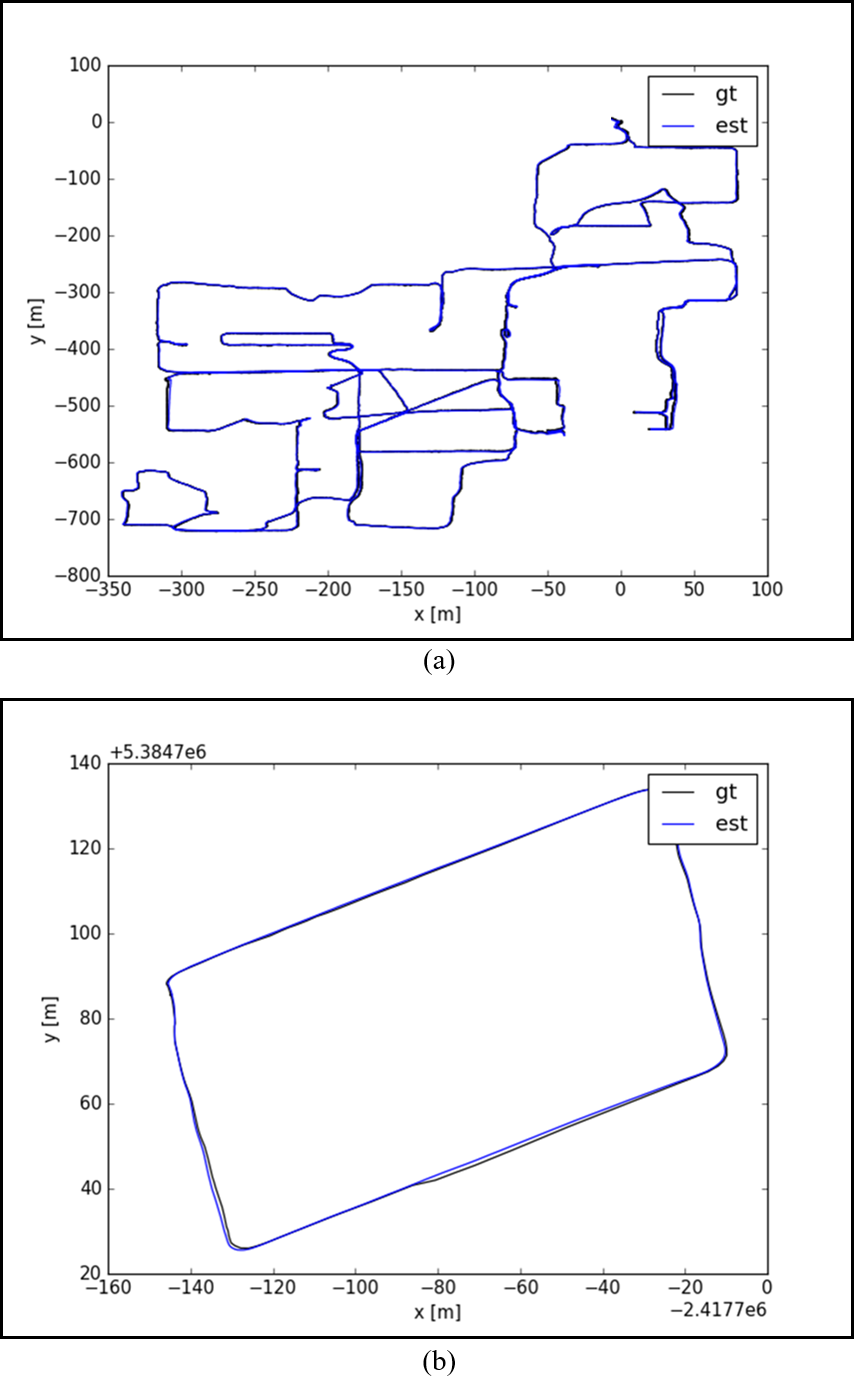

VI-H Visualization for Trajectory and Map

We visualize the trajectories and the local point cloud map estimated by our system. The comparison results between our estimated trajectories and ground truth of the exemplar sequences and are shown in Fig. 9 (a) and (b), where our estimated trajectories and ground truth almost exactly coincide. Fig. 10 shows the ability of our Dynamic-LIO to reconstruct a static point cloud map on the exemplar sequence . As illustrated in Fig. 10 (a), before removing dynamic points, the ghost tracks of moving objects (green points) are clearly visible on the map. As illustrated in Fig. 10 (b), after removing dynamic points, the output map almost no longer contains ghost tracks.

VII Conclusion

This paper proposes a LIO system with label consistency detection, which can fastly eliminate the influence of moving objects in driving scenarios. Different from existing approaches involving batch geometric computation or global statistics to identify moving objects, the proposed method is more lightweight. Specifically, the proposed method constructs binarized labels for each point of current sweep, and utilizes the label difference between each point and its surrounding points in map to identify moving objects. Firstly, a fast 2D connected component method is employed to construct binarized labels, i.e., ground and non-ground, for each point of current sweep. Given that moving objects in driving scenes are located on ground, the inconsistency arises when comparing the label of a non-ground point in current sweep with its nearest neighbors in map. Meanwhile, we propose to utilize the voxel-location-based nearest neighbor to achieve fast nearest neighbor search. Furthermore, we embed the proposed label consistency detection method into a self-developed LIO, which can accurately estimate state and exclude the interference of dynamic objects with extremely low time consumption.

Experimental results show that the proposed label consistency detection method can achieve comparable PR and RR to state-of-the-art dynamic point detection and removal methods, while ensuring a lower computational cost. In addition, our Dynamic-LIO operates at a speed approximately 5X faster than state-of-the-art LIO systems for dynamic scenes, and achieve state-of-the-art pose estimation accuracy on both dynamic and static scenes.

References

- [1] J. Behley, M. Garbade, A. Milioto, J. Quenzel, S. Behnke, C. Stachniss, and J. Gall, “Semantickitti: A dataset for semantic scene understanding of lidar sequences,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 9297–9307.

- [2] Y. Cai, W. Xu, and F. Zhang, “ikd-tree: An incremental kd tree for robotic applications,” arXiv preprint arXiv:2102.10808, 2021.

- [3] N. Carlevaris-Bianco, A. K. Ushani, and R. M. Eustice, “University of michigan north campus long-term vision and lidar dataset,” The International Journal of Robotics Research, vol. 35, no. 9, pp. 1023–1035, 2016.

- [4] K. Chen, R. Nemiroff, and B. T. Lopez, “Direct lidar-inertial odometry: Lightweight lio with continuous-time motion correction,” in 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2023, pp. 3983–3989.

- [5] Z. Chen, K. Zhang, H. Chen, M. Y. Wang, W. Zhang, and H. Yu, “Dorf: A dynamic object removal framework for robust static lidar mapping in urban environments,” IEEE Robotics and Automation Letters, 2023.

- [6] Z. Chen, Y. Xu, S. Yuan, and L. Xie, “ig-lio: An incremental gicp-based tightly-coupled lidar-inertial odometry,” IEEE Robotics and Automation Letters, vol. 9, no. 2, pp. 1883–1890, 2024.

- [7] T. Cortinhal, G. Tzelepis, and E. Erdal Aksoy, “Salsanext: Fast, uncertainty-aware semantic segmentation of lidar point clouds,” in Advances in Visual Computing: 15th International Symposium, ISVC 2020, San Diego, CA, USA, October 5–7, 2020, Proceedings, Part II 15. Springer, 2020, pp. 207–222.

- [8] W. Dai, Y. Zhang, P. Li, Z. Fang, and S. Scherer, “Rgb-d slam in dynamic environments using point correlations,” IEEE transactions on pattern analysis and machine intelligence, vol. 44, no. 1, pp. 373–389, 2020.

- [9] Z. Eugene, “Eigen3: a c++ template library for linear algebra: matrices, vectors, numerical solvers, and related algorithms,” https://gitlab.com/libeigen/eigen, 2011.

- [10] T. Fan, B. Shen, H. Chen, W. Zhang, and J. Pan, “Dynamicfilter: an online dynamic objects removal framework for highly dynamic environments,” in 2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022, pp. 7988–7994.

- [11] P. Geneva, K. Eckenhoff, W. Lee, Y. Yang, and G. Huang, “Openvins: A research platform for visual-inertial estimation,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 4666–4672.

- [12] D. He, W. Xu, N. Chen, F. Kong, C. Yuan, and F. Zhang, “Point-lio: Robust high-bandwidth light detection and ranging inertial odometry,” Advanced Intelligent Systems, p. 2200459, 2023.

- [13] D. He, W. Xu, and F. Zhang, “Kalman filters on differentiable manifolds,” arXiv preprint arXiv:2102.03804, 2021.

- [14] M. Himmelsbach, F. V. Hundelshausen, and H.-J. Wuensche, “Fast segmentation of 3d point clouds for ground vehicles,” in 2010 IEEE Intelligent Vehicles Symposium. IEEE, 2010, pp. 560–565.

- [15] A. Hornung, K. M. Wurm, M. Bennewitz, C. Stachniss, and W. Burgard, “Octomap: An efficient probabilistic 3d mapping framework based on octrees,” Autonomous robots, vol. 34, pp. 189–206, 2013.

- [16] L.-T. Hsu, N. Kubo, W. Wen, W. Chen, Z. Liu, T. Suzuki, and J. Meguro, “Urbannav: An open-sourced multisensory dataset for benchmarking positioning algorithms designed for urban areas,” in Proceedings of the 34th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS+ 2021), 2021, pp. 226–256.

- [17] M. Kaess, H. Johannsson, R. Roberts, V. Ila, J. J. Leonard, and F. Dellaert, “isam2: Incremental smoothing and mapping using the bayes tree,” The International Journal of Robotics Research, vol. 31, no. 2, pp. 216–235, 2012.

- [18] G. Kim and A. Kim, “Remove, then revert: Static point cloud map construction using multiresolution range images,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 10 758–10 765.

- [19] K. Li, M. Li, and U. D. Hanebeck, “Towards high-performance solid-state-lidar-inertial odometry and mapping,” IEEE Robotics and Automation Letters, vol. 6, no. 3, pp. 5167–5174, 2021.

- [20] H. Lim, S. Hwang, and H. Myung, “Erasor: Egocentric ratio of pseudo occupancy-based dynamic object removal for static 3d point cloud map building,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 2272–2279, 2021.

- [21] A. Milioto, I. Vizzo, J. Behley, and C. Stachniss, “Rangenet++: Fast and accurate lidar semantic segmentation,” in 2019 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, 2019, pp. 4213–4220.

- [22] V. Nguyen, S. Gächter, A. Martinelli, N. Tomatis, and R. Siegwart, “A comparison of line extraction algorithms using 2d range data for indoor mobile robotics,” Autonomous Robots, vol. 23, pp. 97–111, 2007.

- [23] P. Pfreundschuh, H. F. Hendrikx, V. Reijgwart, R. Dubé, R. Siegwart, and A. Cramariuc, “Dynamic object aware lidar slam based on automatic generation of training data,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 11 641–11 647.

- [24] F. Pomerleau, P. Krüsi, F. Colas, P. Furgale, and R. Siegwart, “Long-term 3d map maintenance in dynamic environments,” in 2014 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2014, pp. 3712–3719.

- [25] C. Qian, Z. Xiang, Z. Wu, and H. Sun, “Rf-lio: Removal-first tightly-coupled lidar inertial odometry in high dynamic environments,” arXiv preprint arXiv:2206.09463, 2022.

- [26] C. Qin, H. Ye, C. E. Pranata, J. Han, S. Zhang, and M. Liu, “Lins: A lidar-inertial state estimator for robust and efficient navigation,” in 2020 IEEE international conference on robotics and automation (ICRA). IEEE, 2020, pp. 8899–8906.

- [27] J. Schauer and A. Nüchter, “The peopleremover—removing dynamic objects from 3-d point cloud data by traversing a voxel occupancy grid,” IEEE robotics and automation letters, vol. 3, no. 3, pp. 1679–1686, 2018.

- [28] L. Schmid, O. Andersson, A. Sulser, P. Pfreundschuh, and R. Siegwart, “Dynablox: Real-time detection of diverse dynamic objects in complex environments,” IEEE Robotics and Automation Letters, 2023.

- [29] T. Shan and B. Englot, “Lego-loam: Lightweight and ground-optimized lidar odometry and mapping on variable terrain,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 4758–4765.

- [30] T. Shan, B. Englot, D. Meyers, W. Wang, C. Ratti, and D. Rus, “Lio-sam: Tightly-coupled lidar inertial odometry via smoothing and mapping,” in 2020 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, 2020, pp. 5135–5142.

- [31] H. W. Sorenson, “Kalman filtering techniques,” in Advances in control systems. Elsevier, 1966, vol. 3, pp. 219–292.

- [32] W. Wen, Y. Zhou, G. Zhang, S. Fahandezh-Saadi, X. Bai, W. Zhan, M. Tomizuka, and L.-T. Hsu, “Urbanloco: A full sensor suite dataset for mapping and localization in urban scenes,” in 2020 IEEE international conference on robotics and automation (ICRA). IEEE, 2020, pp. 2310–2316.

- [33] W. Wu and W. Wang, “Lidar inertial odometry based on indexed point and delayed removal strategy in highly dynamic environments,” Sensors, vol. 23, no. 11, p. 5188, 2023.

- [34] J. R. Xu, S. Huang, S. Qiu, L. Zhao, W. Yu, M. Fang, M. Wang, and R. Li, “Lidar-link: Observability-aware probabilistic plane-based extrinsic calibration for non-overlapping solid-state lidars,” IEEE Robotics and Automation Letters, 2024.

- [35] W. Xu, Y. Cai, D. He, J. Lin, and F. Zhang, “Fast-lio2: Fast direct lidar-inertial odometry,” IEEE Transactions on Robotics, vol. 38, no. 4, pp. 2053–2073, 2022.

- [36] W. Xu and F. Zhang, “Fast-lio: A fast, robust lidar-inertial odometry package by tightly-coupled iterated kalman filter,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 3317–3324, 2021.

- [37] Z. Yan, L. Sun, T. Krajník, and Y. Ruichek, “Eu long-term dataset with multiple sensors for autonomous driving,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 10 697–10 704.

- [38] Z. Yan, X. Wu, Z. Jian, B. Lan, and X. Wang, “Rh-map: Online map construction framework of dynamic object removal based on 3d region-wise hash map structure,” IEEE Robotics and Automation Letters, 2024.

- [39] D. Yoon, T. Tang, and T. Barfoot, “Mapless online detection of dynamic objects in 3d lidar,” in 2019 16th Conference on Computer and Robot Vision (CRV). IEEE, 2019, pp. 113–120.

- [40] Z. Yuan, J. Deng, R. Ming, F. Lang, and X. Yang, “Sr-livo: Lidar-inertial-visual odometry and mapping with sweep reconstruction,” IEEE Robotics and Automation Letters, vol. 9, no. 6, pp. 5110–5117, 2024.

- [41] Z. Yuan, F. Lang, T. Xu, and X. Yang, “Sr-lio: Lidar-inertial odometry with sweep reconstruction,” arXiv preprint arXiv:2210.10424, 2022.

- [42] Z. Yuan, F. Lang, T. Xu, C. Zhao, and X. Yang, “Semi-elastic lidar-inertial odometry,” arXiv preprint arXiv:2307.07792, 2023.

- [43] Z. Yuan, Q. Wang, K. Cheng, T. Hao, and X. Yang, “Sdv-loam: Semi-direct visual–lidar odometry and mapping,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 9, pp. 11 203–11 220, 2023.

- [44] T. Zhang, x. Zhang, z. Liao, X. Xia, and Y. Li, “As-lio: Spatial overlap guided adaptive sliding window lidar-inertial odometry for aggressive fov variation,” arXiv preprint arXiv:2408.11426, 2024.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/17af4448-5dda-4bed-8386-dd2f3456b207/zikangyuan.jpg) |

Zikang Yuan received his PhD degree from Huazhong University of Science and Technology (HUST), Wuhan, China, in 2024. He has published one paper on TPAMI, one paper on RA-L, two papers on IROS, two papers on ACM MM and three papers on TMM. His research interests include monocular dense mapping, RGB-D simultaneous localization and mapping, visual-inertial state estimation, visual-LiDAR pose estimation and LiDAR-inertial state estimation. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/17af4448-5dda-4bed-8386-dd2f3456b207/xiaoxiangwang.jpg) |

Xiaoxiang Wang is currently a 2nd year M.S. student of Huazhong University of Science and Technology (HUST), School of Electronic Information and Communications. His research interests include 3D point based dynamic point indentification and object tracking. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/17af4448-5dda-4bed-8386-dd2f3456b207/jingyingwu.jpg) |

Jingying Wu is currently a 3rd year M.S. student of Huazhong University of Science and Technology (HUST), School of Electronic Information and Communications. Her research interests include 3D point based dynamic point indentification and loop closure identification. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/17af4448-5dda-4bed-8386-dd2f3456b207/jundacheng.jpg) |

Junda Cheng is currently a 2nd year PhD student at the Department of Electronic Information and Communications at Huazhong University of Science and Technology (HUST). He is supervised by Prof. Xin Yang. He received the B.Eng. degree from Huazhong University of Science and Technology in 2020. His research interests include stereo matching, multi-view stereo, and deep visual odometry. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/17af4448-5dda-4bed-8386-dd2f3456b207/xinyang.jpg) |

Xin Yang received her PhD degree in University of California, Santa Barbara in 2013. She worked as a Post-doc in Learning-based Multimedia Lab at UCSB (2013-2014). She is current Professor of Huazhong University of Science and Technology School of Electronic Information and Communications. Her research interests include simultaneous localization and mapping, augmented reality, and medical image analysis. She has published over 90 technical papers, including TPAMI, IJCV, TMI, MedIA, CVPR, ECCV, MM, etc., co-authored two books and holds 3 U.S. Patents. Prof. Yang is a member of IEEE and a member of ACM. |