LF-3PM: a LiDAR-based Framework

for Perception-aware Planning with Perturbation-induced Metric

Abstract

Just as humans can become disoriented in featureless deserts or thick fogs, not all environments are conducive to the Localization Accuracy and Stability (LAS) of autonomous robots. This paper introduces an efficient framework designed to enhance LiDAR-based LAS through strategic trajectory generation, known as Perception-aware Planning. Unlike vision-based frameworks, the LiDAR-based requires different considerations due to unique sensor attributes. Our approach focuses on two main aspects: firstly, assessing the impact of LiDAR observations on LAS. We introduce a perturbation-induced metric to provide a comprehensive and reliable evaluation of LiDAR observations. Secondly, we aim to improve motion planning efficiency. By creating a Static Observation Loss Map (SOLM) as an intermediary, we logically separate the time-intensive evaluation and motion planning phases, significantly boosting the planning process. In the experimental section, we demonstrate the effectiveness of the proposed metrics across various scenes and the feature of trajectories guided by different metrics. Ultimately, our framework is tested in a real-world scenario, enabling the robot to actively choose topologies and orientations preferable for localization. The source code is accessible at https://github.com/ZJU-FAST-Lab/LF-3PM.

I Introduction

Accurate localization is important for autonomous robots to perform complex tasks, especially in GPS-denied environments. Although localization algorithms [1, 2, 3, 4] are reliable in most cases, scenarios still exist where Localization Accuracy and Stability (LAS) can get poor. One solution is to make the robot actively choose trajectories conducive to improving LAS, known as Perception-aware Planning.

In general, a Perception-aware Planning framework pivots on two essential components: observation evaluation and trajectory generation. By evaluating the accumulated loss of observations simulated along the candidate trajectories, the robots can choose one that is most conducive to improving LAS. Recently, considerable progress [5, 6, 7, 8, 9] has been made on vision-based Perception-aware Planning frameworks, yet related research based on LiDAR remains limited. To improve the LiDAR-based LAS of robots, it’s intuitive to adapt the design principles from vision-based frameworks. However, the essential components require significant modifications due to the distinct attributes of cameras and LiDAR.

On the one hand, the focus of observation evaluation in the framework of the two kinds is different. The main factors that affect the vision-based LAS are texture richness [5] and lighting conditions [10], while for the LiDAR-based LAS, it is the geometric structure of the surroundings [11].

On the other hand, the efficiency of simulating visual feature points is much higher than that of simulating a LiDAR scan. The visual feature points are sparse enough to allow real-time simulation [5], even enabling the construction of visibility functions for each feature point [12]. However, LiDAR points are dense, making it impractical to simulate scans during motion planning.

Therefore, we need a new metric for LiDAR-based observation loss evaluation to produce reliable guidance in trajectory generation and an efficient framework that rationally separates observation evaluation from motion planning to prevent the time-consuming simulation from blocking the trajectory generation process.

We note that the LiDAR-based LAS primarily depends on the geometric structure of the environment, which is inherently more stable than vision-based LAS due to its invariance to lighting conditions. This stability permits the pre-evaluation of the loss of LiDAR observation at the robot’s potential state space like or . In addition, since observations are roughly the same between two similar poses, the loss does not drastically change within a small area with a slight orientation difference. Thus, we can discretize the state space into grids, evaluate the observation loss once at the center of each grid, and then use interpolation to obtain an evaluation of the intermediate states. We name the grid structure as Static Observation Loss Map (SOLM).

As mentioned earlier, we need to tailor a new metric to evaluate LiDAR observation loss at each grid in SOLM. While covariance is a widely used metric [6] to quantify the observation loss by localization uncertainty, it does not offer independent evaluation at one single location because the result depends on historical observations collected in other grids. Additionally, some metrics like pre-defining the empirical principle [13] show insights into the localization process. However, lacking theoretical support may diminish the effectiveness of the metrics across diverse scenarios and with varying LiDAR configurations.

Therefore, a theoretically well-founded metric is required to evaluate LiDAR observation loss at a single location. Given that Mainstream filtering-based [2] or optimization-based [4] localization methods are essentially solving a Least Squares Problem, our analysis starts from the same form. Inspired by the sensitivity analysis of linear Least Squares Problem [14, 15], we propose a metric by introducing perturbations into LiDAR observations.

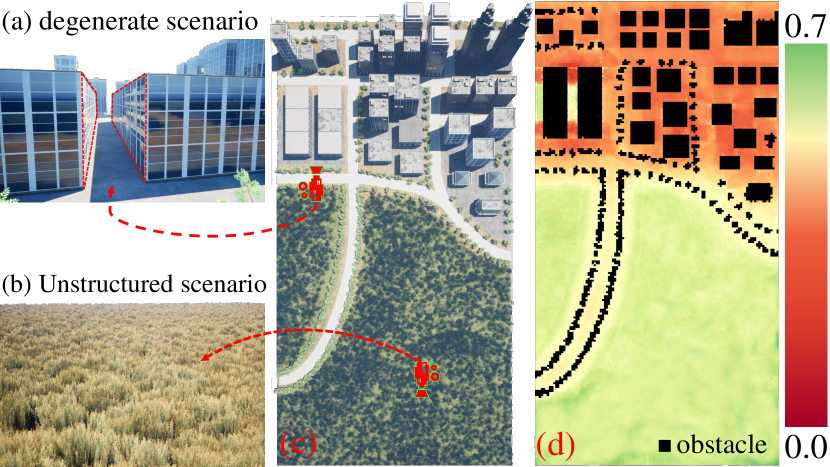

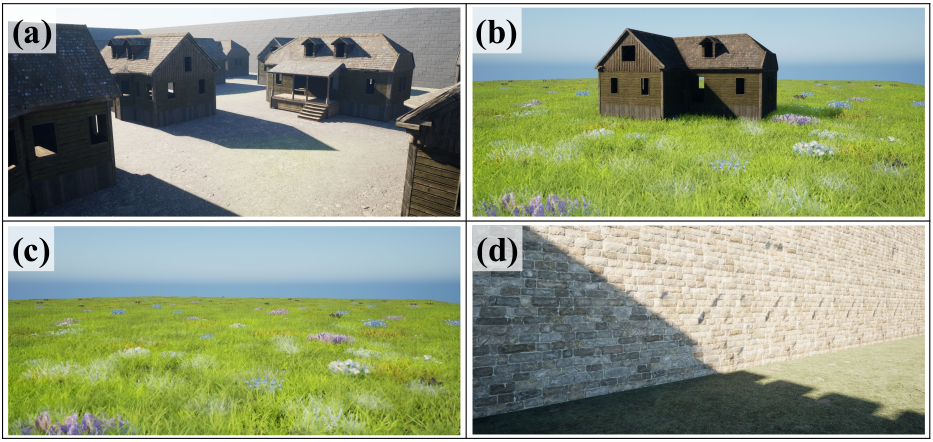

Fig. 1 shows an example of SOLM. A ground robot with a rotating LiDAR localizes itself in the environment, as shown in Fig. 1(c). Since the yaw angle does not affect observations with a 360-degree LiDAR, we calculate SOLM in space to eliminate unnecessary computations. In Sec. V, a case with a limited field of view will be presented. According to the SOLM in Fig. 1(d), degenerate corridors (Fig. 1(a)) and unstructured grasslands (Fig. 1(b)) are identified as less favorable for improving LAS, whereas places with rich structured features are deemed beneficial.

During motion planning, we consider SOLM alongside other factors, such as obstacle avoidance, trajectory smoothness, and agility, to generate trajectories favorable for localization. We apply the proposed framework in more scenarios in Sec.V to validate the effectiveness. The experiments show that benefiting from the reliable metric and well-designed framework, the robot can improve its LAS by selecting topologies and orientations with low observation loss.

In summary, this paper makes the following contributions:

-

1)

We propose a novel perturbation-induced metric to evaluate LiDAR observation loss in SOLM calculation.

-

2)

We design an efficient LiDAR-based Perception-aware Planning framework leveraging LiDAR attributes to decouple observation evaluation and motion planning.

-

3)

We conduct extensive experiments to validate the effectiveness of the proposed framework. The entire project is open-sourced to promote further research in this field.

II Related Work

In the realm of metric design for LiDAR-based Perception-aware Planning frameworks, empirical metrics [13] have been explored to evaluate LiDAR observation loss, offering insights into the localization process. However, these metrics are not well theoretically supported, which may lead to diminished effectiveness across various scenarios and different sensor configurations, highlighting the need for more universally applicable metrics.

Some researchers have proposed metrics [7, 14, 16] that evaluate observation loss at a specific location by examining the observability of observations. These metrics are adept at identifying degenerate scenarios, such as open fields or tunnels. Yet, degenerate scenarios are not the sole contributors to the diminished LAS. Factors like dynamic objects [17], unstructured environments [18], and sensor noise [19] interference also play significant roles.

Cognetti et al. [20] recognized it is insufficient to focus only on observability without considering noise and use pose estimation covariance as the metric. It works in vision-based frameworks, but as mentioned in Sec.I, covariance is not an ideal metric to provide independent evaluation for SOLM calculation due to its historical observations. Without the help of SOLM, we have to simulate the LiDAR scan for covariance calculation during motion planning, which will reduce the efficiency of the entire framework.

Therefore, a theoretically founded and independent metric for evaluating LiDAR observation loss is necessary. This paper proposes a novel metric via perturbation and sensitivity analysis [15]. In contrast to the approach in work [14], where perturbations are applied to additional constraints, we directly apply perturbations to the observations and analyze their effect on the solution. We find that the degeneracy factor derived in work [14] is a special case of the proposed metric.

Regarding the framework for Perception-aware Planning, we focus on how existing strategies incorporate the observation loss into motion planning. Strategy [7, 12] models observation loss as a differentiable function concerning the robot’s pose. This approach assumes feature points are always visible, which may not hold in complex environments. Another strategy [6] selects the final trajectory with the lowest covariance from several candidate trajectories that meet both obstacle avoidance and kinematic requirements. Strategy [5] uses covariance as a heuristic in exploring paths with Rapid Random Trees. The above three strategies work well in vision-based frameworks, while it is impractical for LiDAR-based Perception-aware Planning, primarily because vision feature points are sparse and can be simulated in real-time, whereas LiDAR point clouds are dense and simulating LiDAR scans is time-consuming, making trajectory generation has a long time span.

We decouple the evaluation process and motion planning into a two-phase pipeline to ensure the efficiency of motion planning. At first, the proposed perturbation-induced metric is employed to calculate SOLM, which is then stored as gray-scale images. When a new trajectory needs to be generated, the motion planning module will use the stored SOLM in the front- and back-end, finally optimizing a trajectory that can balance LAS, obstacle avoidance, and agility.

III Metric derivation

In this section, we derive a new metric to evaluate LiDAR observation loss, facilitating SOLM computation. In Sec. III-A, we state the problem to be solved and give the intuition behind our method. In Sec. III-B, we present an overview of the derivation process to outline our methodology and put details in the appendix for better readability.

III-A Problem statement and method intuition

Assume the robot’s pose is denoted by , which has dimensions. Data captured at pose by sensors can be utilized to construct error-based observations, denoted as . An example of is given by Eq.(25) in Sec. V, which means the distance from point to its nearest plane. We aim to develop a metric to evaluate LiDAR observation loss that quantifies the effect of a LiDAR observation on the robot LAS. The metric can be formulated as:

| (1) |

where denotes the metric, and scalar is the observation loss. Regions with low values are more conducive to maintaining good LAS.

As mentioned in Sec. I, mainstream localization algorithms [2, 4] are essentially solving a Least Square Problem. To derive a concrete form of , we explore how observations influence robots’ LAS in the linear Least Square Problem context. Consider the pose estimation problem as the following nonlinear Least Square Problem:

| (2) |

Usually, we solve Eq.(2) iteratively with , an initial estimate of . Suppose we iterate one step using Gauss-Newton method[21] to obtain , which is equivalent to solving the following optimization problem:

| (3) |

where . Each row of is , each row of is , and optimal pose estimation is directly affected by observations .

In fact, the observations usually contain sensor noise, erroneous associations, etc., which can be regarded as a perturbation applied to the exact observations. So we actually solve for under disturbed observations . Naturally, we expect the displacement of the solution caused by perturbations as small as possible.

According to the linear Least Square Problem sensitivity theory [15], given a certain disturbance on observations, the displacement of depends on . In other words, the attributes of decide the robustness of the solution. Hence, if the metric effectively captures the perturbation-induced displacement of , it can serve as a reliable measurement of observation loss at the given pose .

III-B Metric derivation

If we have a smooth enough perturbation mapping and a perturbation that change the observations to , where , will be changed to . Note that the perturbation mapping with different expressions will lead to different changes in . In this work, we assume that the mapping has the form of a linear transformation with a bias, which can be expressed as the following equations:

| (4) | ||||

| (5) | ||||

| (6) |

where . The role of is to resize the vector to the matrix in row-major order [22]. Substituting into Problem (3), can be obtained by solving the following optimization problem:

| (7) |

Let , we have:

| (8) | ||||

| (9) |

where and

What we care is how much small and can affect the displacement norm of the solution . We find that this problem can be converted to a sensitivity analysis problem for the optimal solution of a linear Least Square Problem. Work[15] summarizes a number of methods for obtaining the upper bound on the variation of the solution caused by and . Let the expression for the upper bound be , we have

| (10) |

Writing the perturbations and as: , where , and substituting them into the upper bound , we have:

| (11) |

Treating as constants first and linearizing near (which means the intensities of the perturbations tend to zero), we have

| (12) |

We note that and serve as an amplifier of perturbations, which, in other words, denotes the sensitivity of to the perturbations. As the upper bound of the displacement of the solution , we expect less sensitive to the perturbations, which will constrain the sensitivity of displacement to the perturbations at a level no more than the sensitivity of , because the inequality sign still holds for the derivation of both sides of Inequality (10) at zero. This can be easily proved by considering the functions on the left and right sides of Inequality (10) as and in Lemma 1, respectively. Thus, we define the metric as the sensitivity of the upper bound to the perturbations:

| (13) |

where are user-chosen directions of perturbations, are the weights of , respectively. Here, instead of performing sensitivity analysis on the derivation of directly, we perform sensitivity analysis on the upper bound because the expanded expression for is too complicated to be analyzed. After simplifying, what we need to do is pursue observations with lower value to keep low sensitivity of displacement to perturbations, which is conducive to improving the LAS.

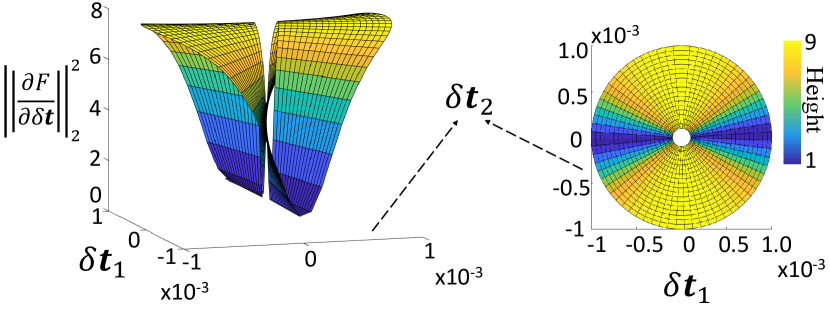

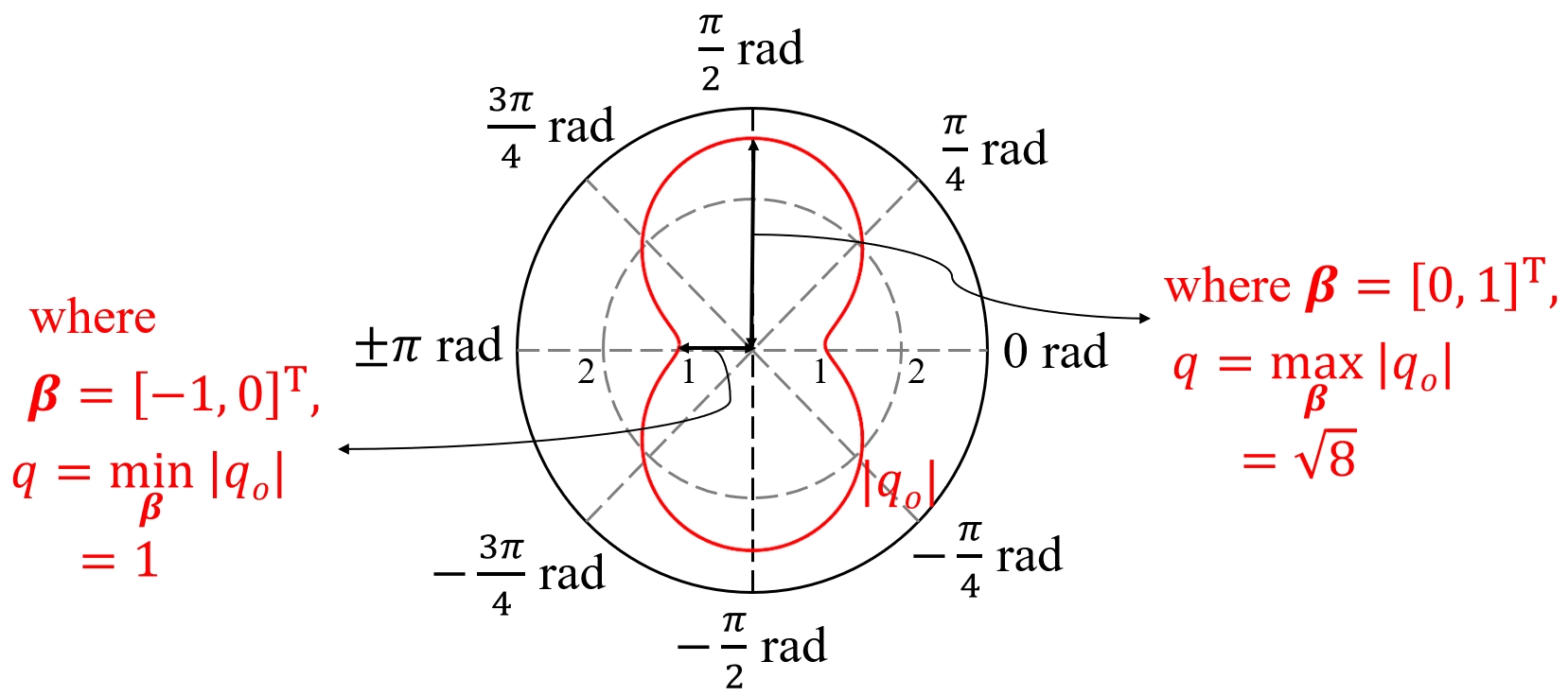

The intuition for defining the metric as Eq.(13) is that the directions of the perturbations can affect the sensitivity of the upper bound . From a mathematical point of view, it is impossible to obtain the sensitivity of by direct derivation of and without treating as constants first, since the derivatives of the norms usually do not exist at zero. To enhance the clarity of the abstract mathematical procedure, we present an example when , visualizing and in Fig. 2.

In this work, we choose one of the upper bounds derived in the work [23] to compute the metric. According to the results of the work[23], suppose that has full column rank and , where is the smallest singular value of , we have

| (14) |

where .

Writing as and calculating the corresponding derivatives (Details can be found in Appendix. -B), we can obtain:

| (15) |

where .

Noting that is a symmetric semi-positive definite matrix and , we can diagonalize it: , where , are eigenvalues of . Since , Eq.(15) is equivalent to:

| (16) |

As illustrated in Fig. 2, the evaluation is affected by the direction of perturbations. With eigenvalue analysis, we can obtain the range of evaluation result: and .

Thus, choosing different value of , we can obtain different forms of . The three most representative forms are:

| (17) |

| (18) |

| (19) |

Different strategies actually represent different conservativeness. is the most conservative estimate, choosing the direction in which the perturbation has the greatest impact. On the contrary, is the least conservative strategy, which is actually the degradation factor in literature [14]. is an intermediate strategy between and , where denotes the dimension of the state to be estimated. In Sec. V, we will show the effect of strategies with different levels of conservativeness in observation evaluation and trajectory generation.

IV Framework design

In this section, we show the framework of the proposed LiDAR-based Perception-aware Planning, including SOLM calculation with the metrics derived in Sec. III, trajectory planning based on SOLM, and the relation of these two parts.

IV-A SOLM Calculation

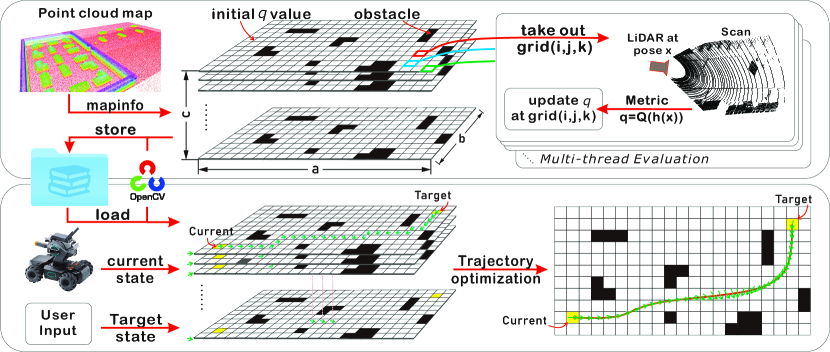

The upper part of Fig. 3 shows the process of SOLM calculation, including initialization, updating, and saving.

To start with, we initialize the SOLM according to the robot’s potential state space. Assume the robot’s state is . Given the resolution on each dimension, we discretize the state space a grid structure with the size of , and for dimensions , , and , respectively. The obstacles within the given point cloud map will be marked on the SOLM, where the observation evaluation will be skipped to reduce unnecessary calculations.

Then, we evaluate the observation loss at each grid. Firstly, the information of a non-obstacle is taken out from the SOLM. Secondly, we simulate a LiDAR scan at the state represented by the center of this grid. A ray-casting-based scan simulator with GPU acceleration can significantly speed up the simulation process, like the one used in work [24]. The simulated scan will formulate observations follow the observation model like Eq. (25). Thirdly, we evaluate the observation loss at state with the metric derived in Sec. III. Finally, we update the evaluation result into . It is noted that since the evaluation process at different grids is independent of each other, the process can be performed in parallel. Once all the non-obstacle grids have been evaluated, we obtain the SOLM that can be used for motion planning. In practice, due to the similar structure of the picture, we store the SOLM as multi-channel images for the case. For higher-dimensional state spaces, like , we store the SOLM as a tensor.

IV-B Motion planning

The lower part of Fig. 3 shows the process of motion planning, including path searching and trajectory optimization.

After loading SOLM from stored images, we restore the knowledge of observation loss in the robot’s state space. For front-end path searching, we adopt the SOLM as the cost function and perform Breadth-First-Search (BFS) in the grid map, which allows for full consideration of all topologies and orientations in the environment to emphasize the effectiveness in improving the robot’s LAS of the proposed Perception-aware Planning framework.

For trajectory optimization, similar to the work[25], we use piecewise polynomials to represent state trajectories, where denotes the position of the robot in the world frame, denotes the yaw angle of the robot, as mentioned in last subsection. In this work, the cubic spline is chosen to represent the trajectory for the smoothness. We formulate the perception-aware trajectory planning problem for ground robots as the following optimization problem:

| (20) | |||

| (21) | |||

| (22) | |||

| (23) | |||

| (24) |

where denote coefficient matrix, N denotes the number of segments of the piecewise polynomials. Each element in denotes the duration of a piece of the trajectory. In the object function, denote observation loss at state , is the total duration of the trajectory, is a positive weight to ensure the aggressiveness of the trajectory.

Conditions (21) denote the continuity constraints of the cubic spline at interpolation points and the positive time, where the inequality is taken element-wise. Conditions (22) denote the possible nonholonomic constraints of the robot. For car-like robots or differential robots, it is . While for omnidirectional robots, this constraint does not exist. Conditions (23) are dynamic feasibility constraints, where the inequality and square operation are also taken element-wise. denote maximum longitude velocity, latitude velocity, and angular velocity, respectively. Condition (24) denotes safety constraint, where denotes the signed distance function (SDF) at position to the nearest obstacle. denotes the safety distance related to the size of the robot. In this work, we compute the SDF by the algorithm proposed in work[26].

To make the above optimization problem easier to solve, we adopt the MINCO trajectory class and differential homogeneous mapping used in work[27] to eliminate the constraints (21). For ease of solving the problem, we also replace the integral in the objective function with a summation approximation. When the equation constraint (22) exists, PHR Augmented Lagrange Multiplier method[28] (PHR-ALM) is used to solve the simplified problem for higher accuracy. Conversely, we utilize the penalty function method, which is sufficient to obtain an acceptable solution. Besides, an efficient quasi-Newton method L-BFGS[29] is chosen as the unconstrained optimization algorithm to work with PHR-ALM and the penalty function method.

V Experiments

V-A Implementation details

To validate the effectiveness and efficiency, we apply the proposed Perception-aware Planning framework on an omnidirectional vehicle (RoboMaster AI-2020) with a 70-degree Field of View (FoV) LiDAR (Livox Mid-70111https://www.livoxtech.com/mid-70). In real-world experiments, the odometry is provided by FAST-LIO2 [2], a high-precision Lidar-Inertial Odometry (LIO) system. The ground truth of robot pose is provided by the motion tracking system (NOKOV222https://www.nokov.com/). All the computation is performed on an on-board computer with Intel i7-1165G7. The observation model takes the distance from the scan point to the nearest plane, the same model defined in work [2]:

| (25) |

where indicates the robot position and orientation to be estimated, is a LiDAR point, is the normal vector of the nearest plane, is a point on the nearest plane.

To see if the metric is reliable in evaluating observation loss, we adopt an indicator Mean Disturbance-induced Error (MDE) serving as the evaluation ground truth, which is similar to defined in work [30] as follows:

| (26) |

where denotes the number of disturbances, is the registration result start from a disturbed with iterated point-to-plane registration.

V-B Metric Effectiveness Validation

In this subsection, we demonstrate the effectiveness and characteristics of the proposed metrics in evaluating observation loss in several representative scenes built in UE5333https://www.ue5.com.

As shown in Fig. 4, scene (a) is rich in planar features where the robot can localize itself stably and robustly to observation perturbations. Scene (d) is a typical degenerate scenario. Scene (c) is an unstructured scenario with roughly the same features, where the robot’s pose estimation is sensitive to the initial guess . Scene (b) shows some structured features in the unstructured grasslands.

We first simulate a LiDAR scan at each scene to obtain observations with the LiDAR model described in Eq. (25). Then we use metrics Eq. (17)-(19) to evaluate the loss of the simulated observation. Besides, we calculate MDE for each scene as the evaluation’s ground truth. The result is shown in TABLE. I. By comparing the ground truth with the evaluations by different metrics, we can judge which metric can provide the most reliable evaluation. It’s important to note that we are only concerned with the relative loss of different scenarios by the same metric.

| Metric | ||||

|---|---|---|---|---|

| 0.320 | 0.28 | 0.31 | 12.32 | |

| 0.547 | 0.91 | 1.20 | 12.34 | |

| 12.15 | 17.2 | 16.3 | 568.8 | |

| MDE | 0.733 | 2.81 | 3.80 | 4.63 |

In Table. I, all of the three metrics can tell the observation loss in scene (d) is the worst, while only the evaluation with metric is consistent with the ground truth in the four scenes. For , which is actually the degeneracy factor proposed in literature [14], cannot distinguish scenarios (), (), and () well because this metric only focuses on the structural richness of scenarios. In addition, the reason why the evaluation with does not distinguish scenarios () and () well is the introduction of over-conservative maximum eigenvalues, which leads to the evaluation influenced by the best-observed dimension of the state to be estimated.

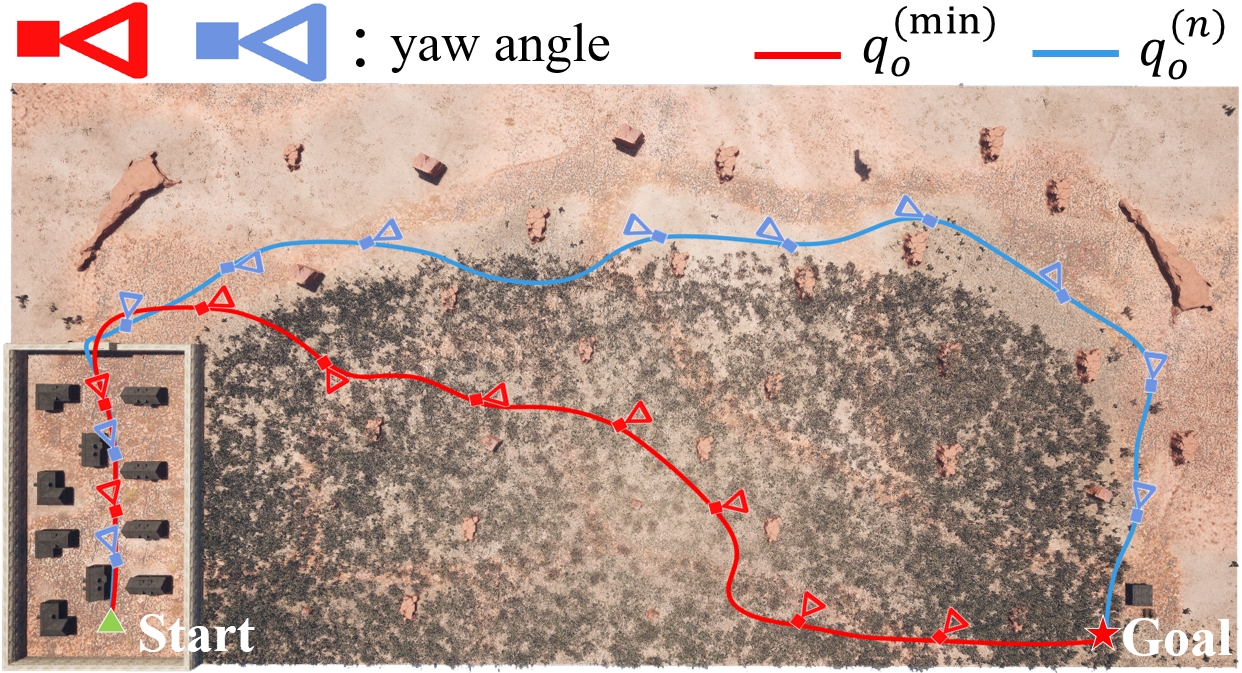

V-C Experiment in Large Scale Map

To demonstrate the effectiveness of the proposed framework in scenarios with large ranges, we build a Gobi desert in UE5 as shown in Fig. 5. We require the robot with the configuration in Sec. V-A to reach a distant warehouse from the inside of a small town. Stones are evenly distributed on the ground outside of towns to help robots locate themselves while traveling, and part of the stones are surrounded by weeds and bushes. To further illustrate the characteristics of the framework with different metrics, we show two trajectories guided by metrics and respectively.

As we can see in Fig. 5, the planner utilizing chooses a trajectory with grass and bush, whereas the planner utilizing prefers the path with well-structured features and devoid of grass and bush, which is because only considers the feature richness of observations and make the robot pursuit area with rich features no matter whether it is preferable for robot localization.

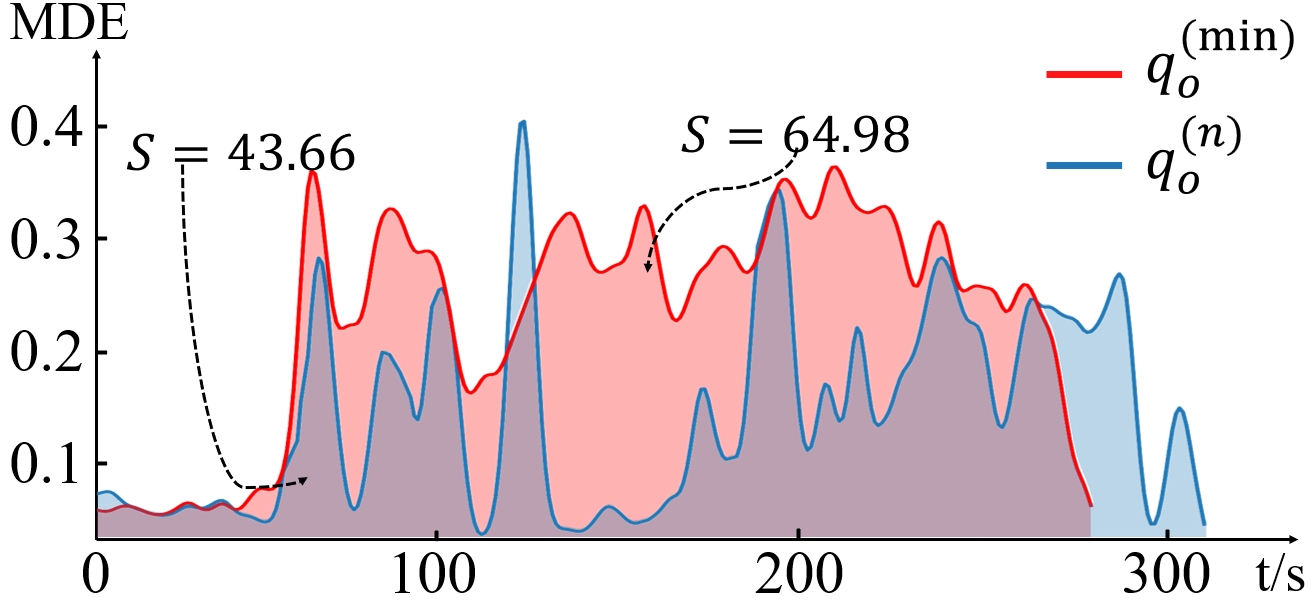

To evaluate how much effect the observations along the trajectories have on LAS, we calculate MDE for each pose sampled along the trajectories at a constant time interval. As shown in Fig. 6, while the trajectory guided by is less rich in features compared to , the clear geometrical features result in smaller accumulated MDE () compared to the trajectory guided by ().

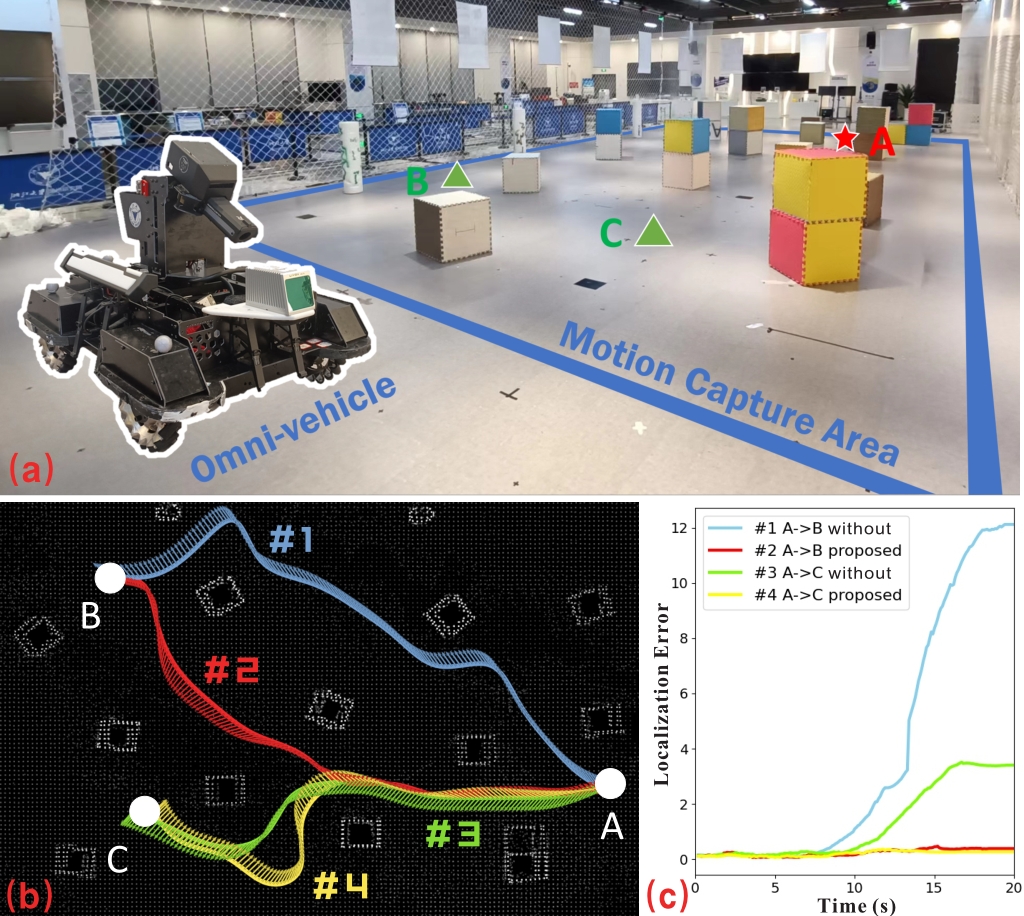

V-D Real-world Experiment

After showing the different features of the proposed metrics, we adapt our Perception-aware Planning framework to the real-world scenario. As shown in Fig. 7(a), a vehicle with configurations in Sec. V-A is required to navigate from point A to point B or C, during which process it has to localize itself with boxes and floor within the motion capture area. According to Sec. V-B and V-C, metric has more holistic performance that provides reliable evaluations. Here, we show trajectories (#2 and #4) guided by metric and trajectories (#1 and #3) without metrics for comparison.

As shown in Fig. 7(b), the vehicle guided by is able to choose topologies and orientations with low observation loss. We calculate the localization error of the robot when moving along each trajectory by , where is given by Motion Tracking System and is given by FAST-LIO2 [2]. As we can see in Fig. 7(c), trajectories #2 and #4 have lower localization error than #1 and #3, which demonstrates that the trajectories generated by the proposed framework are conducive to improving LAS.

VI Conclusion

In this paper, we propose a LiDAR-based Perception-aware Planning framework and perturbation-induced metrics. Through experiments, we show different features of the proposed metrics and validate the effectiveness of our framework. At the same time, there is still space for improvement in our work. Firstly, we assume that the localization algorithm essentially addresses a least square problem. As a result, the proposed metrics may not be suitable for all localization algorithms, such as learning-based methods. In addition, extensive real-world experiments across a broader range of scenarios are necessary to explore the limits of the proposed framework. In the future, we will conduct more experiments to investigate the effectiveness of the framework and evolve the current SOLM into a dynamically updated grid structure for online replanning applications.

-A Lemma for Formula (10) (13)

Lemma 1.

, are continuously differentiable, and . If , we have .

Proof.

Let , then ,

Thus,

∎

-B Derivations of Eq.(14)

| (27) |

| (28) | ||||

| (29) |

References

- [1] P. Geneva, K. Eckenhoff, W. Lee, Y. Yang, and G. Huang, “Openvins: A research platform for visual-inertial estimation,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 4666–4672.

- [2] W. Xu, Y. Cai, D. He, J. Lin, and F. Zhang, “Fast-lio2: Fast direct lidar-inertial odometry,” IEEE Transactions on Robotics, vol. 38, no. 4, pp. 2053–2073, 2022.

- [3] T. Qin, P. Li, and S. Shen, “Vins-mono: A robust and versatile monocular visual-inertial state estimator,” IEEE Transactions on Robotics, vol. 34, no. 4, pp. 1004–1020, 2018.

- [4] T. Shan, B. Englot, D. Meyers, W. Wang, C. Ratti, and D. Rus, “Lio-sam: Tightly-coupled lidar inertial odometry via smoothing and mapping,” in 2020 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, 2020, pp. 5135–5142.

- [5] G. Costante, C. Forster, J. Delmerico, P. Valigi, and D. Scaramuzza, “Perception-aware path planning,” IEEE Transactions on Robotics, pp. Epub–ahead, 2016.

- [6] Z. Zhang and D. Scaramuzza, “Perception-aware receding horizon navigation for mavs,” in 2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2018, pp. 2534–2541.

- [7] ——, “Beyond point clouds: Fisher information field for active visual localization,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 5986–5992.

- [8] L. Bartolomei, L. Teixeira, and M. Chli, “Perception-aware path planning for uavs using semantic segmentation,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 5808–5815.

- [9] X. Chen, Y. Zhang, B. Zhou, and S. Shen, “Apace: Agile and perception-aware trajectory generation for quadrotor flights,” arXiv preprint arXiv:2403.08365, 2024.

- [10] D. Falanga, P. Foehn, P. Lu, and D. Scaramuzza, “Pampc: Perception-aware model predictive control for quadrotors,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 1–8.

- [11] J. Zhang and S. Singh, “Loam: Lidar odometry and mapping in real-time.” in Robotics: Science and systems, vol. 2, no. 9. Berkeley, CA, 2014, pp. 1–9.

- [12] Z. Zhang and D. Scaramuzza, “Fisher information field: an efficient and differentiable map for perception-aware planning,” arXiv preprint arXiv:2008.03324, 2020.

- [13] R. Takemura and G. Ishigami, “Perception-aware receding horizon path planning for uavs with lidar-based slam,” in 2022 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI). IEEE, 2022, pp. 1–8.

- [14] J. Zhang, M. Kaess, and S. Singh, “On degeneracy of optimization-based state estimation problems,” in 2016 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2016, pp. 809–816.

- [15] J. F. Grcar, “Optimal sensitivity analysis of linear least squares,” Lawrence Berkeley National Laboratory, Report LBNL-52434, vol. 99, pp. 27–34, 2003.

- [16] P. Salaris, R. Spica, P. R. Giordano, and P. Rives, “Online optimal active sensing control,” in 2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2017, pp. 672–678.

- [17] W. Ding, S. Hou, H. Gao, G. Wan, and S. Song, “Lidar inertial odometry aided robust lidar localization system in changing city scenes,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 4322–4328.

- [18] J. Guivant, E. Nebot, J. Nieto, and F. Masson, “Navigation and mapping in large unstructured environments,” The International Journal of Robotics Research, vol. 23, no. 4-5, pp. 449–472, 2004.

- [19] C. Yuan, W. Xu, X. Liu, X. Hong, and F. Zhang, “Efficient and probabilistic adaptive voxel mapping for accurate online lidar odometry,” IEEE Robotics and Automation Letters, vol. 7, no. 3, pp. 8518–8525, 2022.

- [20] M. Cognetti, P. Salaris, and P. R. Giordano, “Optimal active sensing with process and measurement noise,” in 2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2018, pp. 2118–2125.

- [21] S. J. Wright, Numerical optimization. Springer, pp. 254–257, 2006.

- [22] D. E. Knuth, The Art of Computer Programming: Fundamental Algorithms, volume 1. Addison-Wesley Professional, pp. 298–305, 1997.

- [23] C. L. Lawson and R. J. Hanson, Solving least squares problems. SIAM, pp. 41–52, 1995.

- [24] F. Kong, X. Liu, B. Tang, J. Lin, Y. Ren, Y. Cai, F. Zhu, N. Chen, and F. Zhang, “Marsim: A light-weight point-realistic simulator for lidar-based uavs,” IEEE Robotics and Automation Letters, vol. 8, no. 5, pp. 2954–2961, 2023.

- [25] L. Xu, K. Chai, Z. Han, H. Liu, C. Xu, Y. Cao, and F. Gao, “An efficient trajectory planner for car-like robots on uneven terrain,” in 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2023, pp. 2853–2860.

- [26] P. F. Felzenszwalb and D. P. Huttenlocher, “Distance transforms of sampled functions,” Theory of computing, vol. 8, no. 1, pp. 415–428, 2012.

- [27] Z. Wang, X. Zhou, C. Xu, and F. Gao, “Geometrically constrained trajectory optimization for multicopters,” IEEE Transactions on Robotics, vol. 38, no. 5, pp. 3259–3278, 2022.

- [28] R. T. Rockafellar, “Augmented lagrange multiplier functions and duality in nonconvex programming,” SIAM Journal on Control, vol. 12, no. 2, pp. 268–285, 1974.

- [29] D. C. Liu and J. Nocedal, “On the limited memory bfgs method for large scale optimization,” Mathematical programming, vol. 45, no. 1, pp. 503–528, 1989.

- [30] J. Nubert, E. Walther, S. Khattak, and M. Hutter, “Learning-based localizability estimation for robust lidar localization,” in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022, pp. 17–24.