Leveraging Adaptive Implicit Representation Mapping for Ultra High-Resolution Image Segmentation

Abstract

Implicit representation mapping (IRM) can translate image features to any continuous resolution, showcasing its potent capability for ultra-high-resolution image segmentation refinement. Current IRM-based methods for refining ultra-high-resolution image segmentation often rely on CNN-based encoders to extract image features and apply a Shared Implicit Representation Mapping Function (SIRMF) to convert pixel-wise features into segmented results. Hence, these methods exhibit two crucial limitations. Firstly, the CNN-based encoder may not effectively capture long-distance information, resulting in a lack of global semantic information in the pixel-wise features. Secondly, SIRMF is shared across all samples, which limits its ability to generalize and handle diverse inputs. To address these limitations, we propose a novel approach that leverages the newly proposed Adaptive Implicit Representation Mapping (AIRM) for ultra-high-resolution Image Segmentation. Specifically, the proposed method comprises two components: (1) the Affinity Empowered Encoder (AEE), a robust feature extractor that leverages the benefits of the transformer architecture and semantic affinity to model long-distance features effectively, and (2) the Adaptive Implicit Representation Mapping Function (AIRMF), which adaptively translates pixel-wise features without neglecting the global semantic information, allowing for flexible and precise feature translation. We evaluated our method on the commonly used ultra-high-resolution segmentation refinement datasets, i.e., BIG and PASCAL VOC 2012. The extensive experiments demonstrate that our method outperforms competitors by a large margin. The code is provided in supplementary material.

1 Introduction

Image segmentation, the process of partitioning pixels of an input image into distinct regions, has shown notable progress. While prior methods excel in the low-resolution segmentation task [long2015fully, krizhevsky2012imagenet, simonyan2014very, lin2017feature, chen2017deeplab, chen2016attention], addressing the task of ultra-high-resolution segmentation still poses a persistent challenge due to substantial computational demands.

To address the ultra-high-resolution image segmentation problem, [cheng2020cascadepsp] proposes a refinement network with a cascade scheme, where the intermediate refinement outcomes are iteratively upsampled to higher resolutions. Despite achieving surprising results, this progressive process is quite time-consuming. To improve the computational efficiency and reduce the inference time, CRM [shen2022high] draws inspiration from LIIF [chen2021learning] and uses Implicit Representation Mapping (IRM) to achieve the refinement of ultra-high-resolution image segmentation. While exhibiting promising performance, existing IRM-based methods, e.g., CRM and LIIF, typically rely on a CNN-based encoder to extract image features and a Shared Implicit Representation Mapping Function (SIRMF) to translate the pixel-wise features, overlooking two critical limitations: (1) the CNN-based encoder may struggle to effectively capture long-distance information, leading to a lack of global semantic information in pixel-wise features. (2) SIRMF is shared across all samples, limiting its generalization capability to handle diverse inputs. As a result, these two limitations significantly impact the performance of current IRM-based methods (see the green circle in Fig. 1).

To address these limitations, our work begins by conducting comprehensive experiments to examine the impact of receptive fields on IRM. We observe that larger receptive fields significantly enhance the performance of IRM. Additionally, we analyze the weaknesses of the existing SIRMF and find that it lacks the generalization capability to handle diverse inputs. Based on these observations, we introduce a novel approach that Leveraging Adaptive Implicit Representation Mapping (AIRM) for Ultra High-Resolution Image Segmentation. The proposed network comprises two components: the Affinity Empowered Encoder (AEE) and the Adaptive Implicit Representation Mapping Function (AIRMF). The AEE, responsible for feature extraction, leverages the advantages of transformer architecture and semantic affinity to model long-distance features effectively. Unlike using shared mapping parameters, AIRMF’s parameters are predicted by a separate network based on the entire feature extracted by AEE. This enables AIRMF to adaptively translate pixel-wise features without neglecting global semantic information, allowing for flexible and precise feature translation. As a result, the proposed method can extract pixel-wise features with a significantly larger receptive field and translate them into the segmentation result in an adaptive manner, collaborating with global semantic information. Our main contribution is the following.

-

•

We conduct comprehensive experiments to explore the impact of receptive fields on IRM and analyze the limitations of the existing SIRMF. The experimental results demonstrate two key findings: (1) Larger receptive fields significantly enhance the performance of IRM, and (2) the existing SIRMF lacks the generalization capability to handle diverse inputs and tends to overlook the global semantic information.

-

•

We introduce a novel approach named Leveraging Adaptive Implicit Representation Mapping for Ultra-High-Resolution Image Segmentation, which comprises two components: the Affinity Empowered Encoder (AEE) and the Adaptive Implicit Representation Mapping Function (AIRMF). The proposed method can extract pixel-wise features with a significantly larger receptive field and translate them into the segmentation result in an adaptive manner, collaborating with global semantic information.

-

•

The extensive experiment results on multiple ultra-high-resolution segmentation refinement datasets demonstrate that our method outperforms competitors by a large margin.

2 Related Work

2.1 Refining Semantic Segmentation

The progress in semantic segmentation, which aims to assign distinct categories or classes to individual pixels within an image, has been significantly propelled by the achievements of deep neural networks [long2015fully, krizhevsky2012imagenet, simonyan2014very, lin2017feature, chen2017deeplab, chen2016attention, farabet2012learning, he2004multiscale, mostajabi2015feedforward, shotton2009textonboost, zhang2018context]. However, these methods are primarily focused on tackling the issue of low-resolution image segmentation but fall short of producing high-quality segmentation results. Thus, the task of refining segmentation is introduced to improve the quality of segmentation outcomes for high-resolution (1K2K) (HR) or even ultra high-resolution (4K6K) (UHR) images. Unlike low-resolution images, HR and UHR require a deeper understanding of semantic details. Conventional techniques rely on graphical models like CRF [chen2014semantic, chen2017deeplab, lin2016efficient, krahenbuhl2011efficient, liu2015semantic] or region growing [dias2019semantic] techniques to achieve HR segmentation. But they mainly focus on low-level color boundaries [zheng2015conditional], limiting their segmentation performance. In contrast, Multi-scale Cascade Networks [chen2019collaborative, cheng2020cascadepsp, he2019bi, qi2018sequential, sun2013deep, zhao2018icnet] employ a recursive approach to progressively integrate multi-scale information. Nevertheless, such a recursive approach inadvertently introduces more computational burdens. Therefore, a Continuous Refinement Network [shen2022high] is proposed to improve the efficiency by continually aligning the feature map with the refinement target. However, it tends to overlook the significance of the limited receptive field of the Convolution Operation in the CNN backbone and also lacks the generalization capability to effectively handle specific categories. To address these concerns, we employ a Transformer-based adaptive implicit representation function to mitigate these limitations and improve segmentation accuracy.

2.2 Transformer in Segmentation

Vision Transformers (ViT) [dosovitskiy2020image], as demonstrated by their remarkable success, is adapted for various computer vision tasks such as semantic segmentation [liu2021swin, zheng2021rethinking] and object segmentation [zhu2020deformable, dai2021dynamic]. However, applying plain Vision Transformer (ViT) models directly to segmentation tasks is challenging due to their absence of segmentation-specific heads. To solve this problem, SETR [zheng2021rethinking] reframes semantic segmentation as a sequence-to-sequence prediction task. [liu2021swin] proposes a versatile hierarchical Vision Transformer backbone, known as Swin Transformer (Hierarchical Vision Transformer using Shifted Windows), using shifted windows to efficiently capture long-range dependencies while maintaining scalability. Segmenter [strudel2021segmenter] employs pre-trained image classification models fine-tuned on moderate-sized segmentation datasets for semantic segmentation tasks. Instead of ViT, [ru2022learning] utilizes Transformers to generate more integral initial pseudo labels for the end-to-end weakly-supervised semantic segmentation task and proposes an Affinity from Attention (AFA) module to learn semantic affinity from the multi-head self-attention (MHSA) in Transformers. Similar to [ru2022learning], we propose to integrate the Transformer architecture into our UHR image refinement segmentation task and leverage the learned semantic affinity from multi-head self-attention to enhance the precision of coarse masks.

2.3 Hypernetworks

Hypernetworks or meta-models, as referenced in [article, littwin2019deep], involve designing models specifically tailored for generating parameters for other models. This parameterization approach not only enhances the model’s expressiveness, as highlighted in [galanti2020comparing, galanti2020modularity], but it also supports data compression through weight sharing across a meta-model [article]. Unlike conventional deep neural networks, where the weights remain fixed during inference, hypernetworks possess the ability to generate adaptive weights or parameters for target networks [li2022misf, guo2021jpgnet, Li_2023_ICCV, zhang2023superinpaint]. We leverage this technique and propose an Adaptive Implicit Representation Mapping Function. Essentially, an additional Convolution Network is responsible for learning the parameters of the implicit function in the segmentation network, enhancing its adaptability to a variety of high-resolution image segmentation tasks.

3 Discussion and Motivation

In this section, we conduct several comprehensive experiments to explore the impact of receptive fields on IRM (see Sec. 3.1) and analyze the limitation of the existing SIRMF (see Sec. 3.2). We observe that (1) larger receptive fields significantly enhance the performance of IRM, and (2) the existing SIRMF lacks the generalization capability to handle diverse inputs and tends to overlook global semantic information. Building on these observations, we introduce a novel approach named Leveraging Adaptive Implicit Representation Mapping for Ultra-High-Resolution Image Segmentation.

3.1 The Impact of Receptive Fields on IRM

Image encoder. To investigate the impact of receptive fields on IRM, we construct a straightforward image encoder, i.e. , comprising only several convolutional layers, followed by ReLU and batch normalization operations. We then utilize the encoder to extract features from the input image and coarse segmentation mask , \linenomathAMS

| (1) |

SIRMF. Following [chen2021learning], the SIRMF is implemented by an MLP network, denotes as , and the refined segmentation result at location , can be represented as \linenomathAMS

| (2) |

where f is the mapping function, is the trainable parameter of f, are neighbor locations around , and is the pixel-wise feature vector related to location . The aggregation weights, i.e. area value are calculated based on the relative coordinate offset between and .

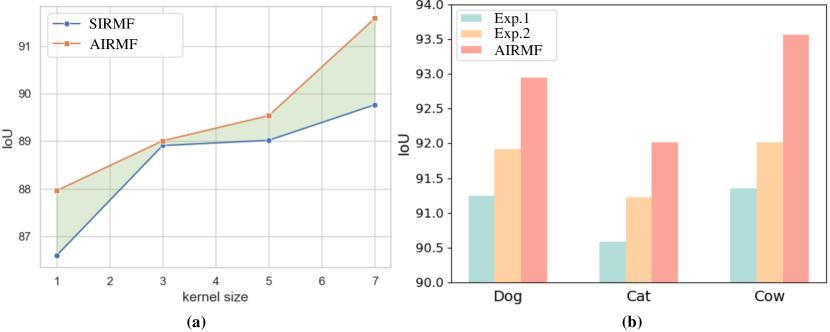

We train the image encoder with various kernel sizes and the SIRMF on the high-resolution image segmentation dataset. Subsequently, we evaluate the performance based on the Intersection over Union (IoU) and illustrate the results in Fig. 2 (a) (see the blue line). We can observe a substantial performance improvement in the segmentation results when enlarging the kernel size. This highlights that larger receptive fields significantly enhance the performance of IRM in the context of image segmentation tasks.

3.2 Limitation of The existing SIRMF

To further analyze the limitations of the current SIRMF, we randomly select three categories of images from the segmentation dataset. Subsequently, we conduct the following experiments:

Exp.1 We utilize the pre-trained image encoder from Sec. 3.1 to extract image features. We train a SIRMF on the entire selected image set and then evaluate the performance of the SIRMF on each category separately (see Fig. 2 (b) blue bar).

Exp.2 We use the same encoder as in Exp.1 to extract image features. But we train three SIRMF, each dedicated to one of the selected categories, and then evaluate the performance of each SIRMF on its corresponding category (see Fig. 2 (b) yellow bar).

From Fig. 2 (b) we can observe that training three SIRMF separately for each category yields better performance compared to training one SIRMF across all three categories. This observation indicates that the existing SIRMF lacks the generalization capability to effectively handle specific categories and tends to overlook global semantic information.

4 Methodology

Building upon the observations in Sec. 3, in this section, we introduce a novel approach that leverages adaptive implicit representation mapping for ultra-high-resolution image segmentation to effectively address the previous limitations, as shown in Fig. 3. Specifically, the proposed method AIRM comprises two components: the Affinity Empowered Encoder (AEE) (see Sec. 4.1) and the Adaptive Implicit Representation Mapping Function (AIRMF) (see Sec. 4.2). The AEE is responsible for effectively modeling long-distance features by taking advantage of the transformer architecture and semantic affinity. The AIRMF is responsible for adaptively translating pixel-wise features without neglecting the global semantic information, allowing for flexible and precise feature translation.

4.1 Affinity Empowered Encoder

As discussed in Sec. 3, larger receptive fields significantly enhance the performance of IRM. Therefore, instead of employing a CNN-based image encoder like CRM [shen2022high] and LIIF [chen2021learning], we propose an affinity-empowered image encoder that takes advantage of the transformer architecture and semantic affinity. Specifically, given the RGB image and the corresponding coarse segmentation mask , we employ the transformer-based encoder to extract features , which can be represented as follows:

| (3) |

where denotes the attention map produced by and represents the flattened spatial dimensions and is the number of channels. Note that the coarse segmentation mask is obtained by applying an existing segmentation method such as [long2015fully, chen2018encoder, lin2017refinenet].

To further empower the capability of the transformer-based encoder, we propose learning the affinity from the attention map to intentionally impose constraints on the encoder during training. Specifically, we obtain the predicted affinity by feeding the sum of and its transpose into an MLP network, i.e., , which can be represented as:

| (4) |

We expect to be close to the ground truth affinity obtained from the ground truth segmentation mask . Specifically, we resize to to align with the shape of and the affinity between two coordinates and is assigned as 1 if their classes are identical, and 0 otherwise.

4.2 Adaptive Implicit Representation Mapping Function (AIRMF)

In Sec. 3.2, we identified the limitation in the existing SIRMF (2) — it lacks the capacity to effectively generalize across diverse inputs (see Fig. 2 (b)). To tackle this issue, we introduce SIRMF which can be represented as \linenomathAMS

| (5) |

where denotes a hypernetwork that is used to predict the adaptive mapping parameters. Instead of directly optimizing the shared parameter in (2), we employ to predict it adaptively by inputting the feature . Intuitively, the predicted mapping parameter should capture global semantic information from the input feature . Consequently, AIRMF can leverage both local information from pixel-wise features and global information from the entire image feature. This design allows AIRMF to yield precise segmentation results (see the red points in Fig. 2 (a) and the red bar in Fig. 2 (b)).

Assume we aim to generate the weight and bias of the l-th linear layer of . To optimize computational efficiency, we do not directly predict the parameter. Instead, we adopt a strategy similar to [skorokhodov2021adversarial], predicting two intermediary rectangular matrices and through , where r = 20 for all the layers of . The linear modulating projection weight is then modulated by multiplying these matrices as follow,

| (6) |

Bias parameter is directly produced due to its small volume. We streamline the AIRMF in Fig. 3.

4.3 Implementation Details

Network architectures. We employ the Mix Transformer (MiT) introduced in Segformer [xie2021segformer]) as the backbone of image encoder . MiT is a more suitable backbone for image segmentation tasks compared to the vanilla ViT [dosovitskiy2020image]. In brief, MiT incorporates overlapped patch embedding to maintain local consistency, spatial-reductive self-attention to accelerate computation, and employs FFN with convolutions to safely replace position embedding. The network of to predict the semantic affinity is implemented by an MLP layer. The deep network employed to generate the flattened parameters of the mapping function is constructed by several Conv+ReLU+BatchNorm layers, followed by a linear layer.

Loss. During training, we use the combined loss functions to optimize overall performance. To improve AIRMF, we utilize the cross-entropy loss , L1 loss , L2 loss and gradient loss

| (7) |

where , , and balance the contributions in .

To calculate the affinity loss , we concentrate on adjacent coordinates during training to leverage their sufficient context while minimizing computational costs. We use a search mask on with a predefined radius as a threshold, efficiently limiting the distance between selected coordinate pairs. This approach ensures that only pixel pairs within the same local search mask are considered, excluding the affinity between distant pixel pairs. The affinity loss term is formulated as follows:

| (8) | ||||

where and refer to two subsets of positive and negative affinity pixel pairs:

| (9) |

| (10) |

Our final loss can be written as:

| (11) |

| IoU/mBA(%) | Coarse Mask | SegFix [yuan2020segfix] | CascadePSP [cheng2020cascadepsp] | CRM [shen2022high] | AIRM(Ours) |

|---|---|---|---|---|---|

| BIG | |||||

| FCN-8s [long2015fully] | 72.39/53.63 | 72.69/55.21 | 77.87/67.04 | 79.62/69.47 | 80.59/69.98 |

| RefineNet [chen2018encoder] | 90.20/62.03 | 90.73/65.95 | 92.79/74.77 | 92.89/75.50 | 93.59/75.26 |

| DeepLabV3+ [lin2017refinenet] | 89.42/60.25 | 89.95/64.34 | 92.23/74.59 | 91.84/74.96 | 92.99/74.77 |

| PSPNet [zhao2017pyramid] | 90.49/59.63 | 91.01/63.25 | 93.93/75.32 | 94.18/76.09 | 94.95/76.88 |

| PASCAL VOC 2012 | |||||

| FCN-8s [long2015fully] | 68.85/54.05 | 70.02/57.63 | 72.70/65.36 | 73.34/67.17 | 74.59/67.26 |

| RefineNet [chen2018encoder] | 90.20/62.03 | 86.71/66.15 | 87.48/71.34 | 87.18/71.54 | 88.03/71.89 |

| DeepLabV3+ [lin2017refinenet] | 87.13/61.18 | 88.03/66.35 | 89.01/72.10 | 88.33/72.25 | 90.01/72.01 |

| PSPNet [zhao2017pyramid] | 90.92/60.51 | 91.98/66.03 | 92.86/72.24 | 92.52/72.48 | 93.56/73.39 |

Training details. During training, we utilize the Adam optimizer [kingma2017adam] with an initial learning rate to train our network. The learning rate is decayed to one-tenth at iterations 22,500 and 37,500 in a total of 45,000 iterations. We randomly crop input with a resolution of 224x224 from the original images. During the training, the coarse masks are generated by introducing random perturbations to the ground truth masks with a random IoU threshold ranging from 0.8 to 1.0. The radius of the local window size when computing the affinity loss is set to 14.

We follow the multi-resolution inference strategy proposed in [shen2022high], which can be seen as progressively coarse-to-fine operations. Specifically, the inference begins at a very low resolution around the training image resolution and gradually increases the input’s resolution along the continuous ratio axis . At each refinement stage, the refined mask is concatenated as a coarse mask for the next refinement step.

5 Experiments

5.1 Setups

Datasets. To acquire object information and evaluate our models, we adhere to a dataset setup similar to that of CascadePSP [cheng2020cascadepsp]. Our training dataset comprises a combination of several individual datasets, totaling 36,572 RGB images accompanied by their corresponding ground truth masks. These images encompass a wide spectrum of over 1,000 categories, drawing from MSRA-10K [6871397], DUT-OMRON [6619251], ECSSD [7182346], and FSS-1000 [Wei2019FSS1000A1]. For testing, CascadePSP introduced a high-resolution image segmentation dataset, named BIG, ranging from 2K to 6K. Additionally, recognizing that the commonly used original PASCAL VOC 2012 dataset lacks pixel-perfect segmentations and designates regions near object boundaries as ’void’, [cheng2020cascadepsp] relabeled 500 objects to create a new dataset suitable for the task of UHR image segmentation.

Metrics. We evaluate our model using Intersection over Union (IoU) and mean Boundary Accuracy (mBA) [cheng2020cascadepsp], which robustly computes the segmentation accuracy across different image sizes within a specified radius from the ground truth boundary. Our model is evaluated without resorting to finetuning in various settings, demonstrating the enhancements it achieves.

5.2 Quantitative Results

Table 1 provides a comprehensive comparison of class-agnostic IoU and mBA among our AIRM, SegFix [yuan2020segfix], CascadePSP [cheng2020cascadepsp] and CRM [shen2022high]. All models are trained on low-resolution images and evaluated on their original high-resolution counterparts. Across both datasets, our method consistently outperforms other methods in terms of IoU and mBA. It’s worth noting that SegFix’s refinement performances are comparatively lower, as it is not specifically designed for the UHR image segmentation task. In the BIG dataset, our method exhibits an average improvement of around 1.7% for IoU and 1.2% for mBA based on four fundamental segmentation approaches. Similarly, in the relabeled PASCAL VOC 2012 dataset, our method achieves an average increase of approximately 1.1% for IoU and 0.6% for mBA compared to CRM and CascadePSP upon four approaches. These quantitative findings underscore the effectiveness of our model in capturing global semantic features and exploring pixel-wise affinity interrelationships in UHR image segmentation.

5.3 Qualitative Results

In Fig. 4, we present a qualitative comparison of the performance of CascadePSP, CRM, and our AIRM. Remarkably, even without any prior exposure to high-resolution training images, our method consistently produces refined results of exceptional quality across various scales. When employing four distinct input coarse masks, it becomes evident that our approach excels at reconstructing missing parts in comparison to CascadePSP and CRM. For instance, as depicted in Fig. 4, our method accurately distinguishes the pilot and the passenger seated in the rear of the aeroplane (the second row) from the segmented object. Additionally, our method precisely delineates the shape of the right rear-view mirror (the third row), achieving a notably high level of precision when compared to the ground truth, which other methods fail to accomplish.

Furthermore, Fig. 5 underscores the substantial visual enhancements achieved by our refinement technique on the relabeled PASCAL VOC 2012 dataset, which consists of low-resolution images. A direct comparison highlights the generation of finer details in our outputs, particularly along the boundary region, such as the gap in the back of the chair and intricate feather textures. More results in supplementary material further demonstrate the efficacy of our refinement algorithm.

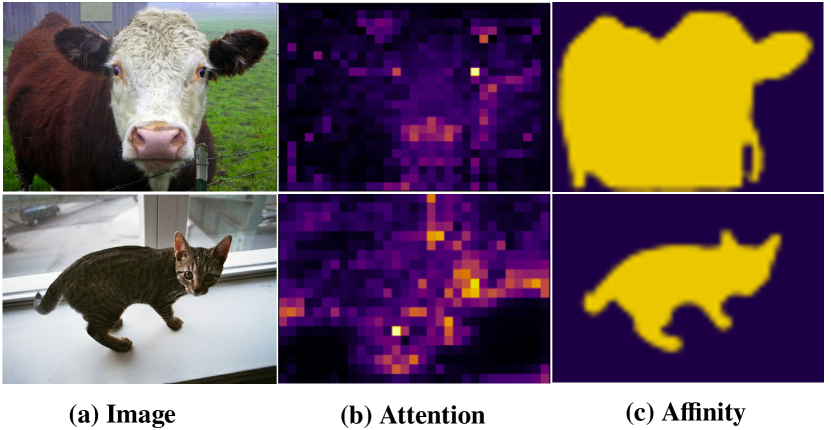

5.4 Ablation Study

Affinity learning. Fig. 6 illustrates that the attention map Fig. 6 (b) can only represent the coarse-level semantic affinity relations, prompting us to investigate the reliable semantic affinities Fig. 6 (c). In Table 2, we showcase the implementation of affinity learning across diverse backbones. For simplicity, We follow the method in [ahn2018learning] to acquire the affinity prediction map in CNN-based backbone. In this approach, the semantic affinity between a pair of feature vectors is defined in terms of their L1 distance. Notably, leveraging affinity from attention within the Transformer encoder yields a performance boost, averaging 1.8%/3.2% higher than its counterpart in the CNN-based encoder. This outcome strongly validates both our initial motivation and the effectiveness of the AEE module.

| Backbone | w Affinity | w/o Affinity |

|---|---|---|

| R50 | 90.14/68.29 | 89.99/67.96 |

| R101 | 90.57/72.19 | 90.03/72.99 |

| WR38 | 90.99/70.67 | 90.84/69.11 |

| Mit-B1(Ours) | 92.99/74.77 | 91.25/72.96 |

Adaptive implicit representation mapping function. We employ the TSNE [van2008visualizing] to reduce the dimension of the predicted adaptive parameters and illustrate that in Fig. 7. We observe that for input images with a similar semantic appearance, the corresponding mapping parameters are closely related. This demonstrates that the predicted adaptive parameters possess the capability to capture global semantic information.

| AEE | AIRMF | IoU | mBA |

|---|---|---|---|

| ✘ | ✘ | 88.75 | 68.34 |

| ✔ | ✘ | 90.99 | 70.77 |

| ✘ | ✔ | 91.08 | 71.09 |

| ✔ | ✔ | 92.99 | 74.77 |

Table 3 showcases the effect of the presence of AIRMF. For a fair comparison, we adopt ResNet50 [he2016deep] as an alternative image encoder for extracting image features when AEE is not employed. Additionally, we utilize a naive 5-layer MLP network as the substitute implicit function for AIRMF to decode the extracted latent code, following the setup outlined in [chen2021learning]. As shown in Table 3, we have the following observations: ❶ Without our proposed empowered encoder and adaptive implicit function, the segmentation results decrease by 2.24%/2.43% and 2.33%/2.75%, respectively, compared with a CNN-based encoder and conventional implicit function (see the first-third rows). This highlights our empowered encoder can efficiently capture strong and dependable global semantic information than the CNN-based encoder. Furthermore, our adaptive implicit function effectively utilizes these global features to compute the adaptive parameters, cooperatively enhancing segmentation performance. ❷ Particularly noteworthy is the synergistic performance achieved by the cohesive implementation of both modules in our proposed method, achieving the highest performance at 92.99%/74.77% (see the fourth row). This underscores the essential roles of AEE and AIRMF within AIRM, showcasing their synergistic effects.

Search mask radius in affinity loss. In Table 4, we also study the segmentation performance on different search mask radius when generating affinity label for computing affinity loss. Through comparison, we observe that a small value of may not yield sufficient affinity pairs, whereas a large might compromise the reliability of distant affinity pairs. Thus, we choose = 14 as our priority.

| 6 | 10 | 14 | 18 | 20 | |

|---|---|---|---|---|---|

| IoU | 91.28 | 91.38 | 91.47 | 90.99 | 90.85 |

6 Conclusion

Our study dives deep into how receptive fields influence implicit representation mapping and explores the shortcomings of current shared mapping functions through extensive experiments. Building on our findings, we introduce an innovative approach titled ”Leveraging Adaptive Implicit Representation Mapping for Ultra-High-Resolution Image Segmentation.” This paradigmatic framework comprises two integral components: the Affinity Empowered Encoder (AEE) and the Adaptive Implicit Representation Mapping Function (AIRMF). Together, this methodological approach facilitates the extraction of pixel-wise features with a much larger receptive field, translating it into a segmentation result enriched with global semantic information. Our meticulous experimentation substantiates the remarkable efficacy that our approach outperforms competitors by a significant margin. Fundamentally, we are shedding light on the limitations of existing methods and offering a promising avenue for ultra-high-resolution image segmentation with improved accuracy and performance.