Let Students Take the Wheel: Introducing Post-Quantum Cryptography with Active Learning

Abstract

Quantum computing presents a double-edged sword: while it has the potential to revolutionize fields such as artificial intelligence, optimization, and healthcare, it simultaneously poses a threat to current cryptographic systems, such as public-key encryption. To address this threat, post-quantum cryptography (PQC) has been identified as the solution for securing existing software systems, prompting a national initiative to prepare the next generation with the necessary knowledge and skills. However, PQC is an emerging interdisciplinary topic, presenting significant challenges for educators and learners. To tackle these challenges, this research introduces a novel active learning approach and assesses best practices for teaching PQC to undergraduate and graduate students in the discipline of information systems.

Our contributions are two-fold. First, we compare two instructional methods: 1) traditional faculty-led lectures and 2) student-led seminars, both integrated with active learning techniques such as hands-on coding exercises and game-based quizzes using Kahoot. The effectiveness of these methods is evaluated through student assessments and surveys. Second, we have published our lecture video, slides, and findings so that other researchers and educators can reuse the courseware and materials to develop their own PQC learning modules.

We employ statistical analyses (e.g., t-tests and chi-square tests) to compare the learning outcomes and student feedback between the two learning methods in each course. Our findings suggest that student-led seminars significantly enhance learning outcomes, particularly for graduate students, for whom a notable improvement in comprehension and engagement is observed. Moving forward, we aim to scale these modules to diverse educational contexts and explore additional active and experiential learning strategies for teaching complex concepts in quantum information science.

1 Introduction

Quantum computing offers revolutionary potential across multiple fields, such as artificial intelligence, optimization, and healthcare, where it may outperform classical computing (a prospect known as quantum advantage). However, it also poses a significant threat to cybersecurity. Current encryption schemes, including asymmetric (public-key) and symmetric (private-key) algorithms, are vulnerable to quantum attacks. Shor’s algorithm [16] can efficiently break widely used asymmetric schemes such as RSA, ECC, and Diffie-Hellman, while Grover’s algorithm [5] weakens symmetric ciphers such as AES by effectively halving their key length, so larger keys are required to maintain equivalent security.
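To make the two threats concrete, the following minimal Python sketch (our illustration, not part of the lecture materials) uses the textbook toy RSA parameters n = 3233 = 61 × 53 and e = 17. Trial division stands in for Shor’s algorithm: it only succeeds because n is tiny, whereas Shor’s algorithm would perform the factoring step efficiently for realistic key sizes. The helper names `break_toy_rsa` and `grover_security_bits` are hypothetical, and the Grover estimate assumes the idealized quadratic speedup of brute-force key search (about 2^(k/2) steps for a k-bit key).

```python
import math


def break_toy_rsa(n: int, e: int) -> int:
    """Recover an RSA private exponent d by factoring the modulus n.

    Trial division is a classical stand-in for Shor's algorithm and is only
    feasible because n is tiny; the rest of the attack is the same.
    """
    for p in range(2, math.isqrt(n) + 1):
        if n % p == 0:
            q = n // p
            phi = (p - 1) * (q - 1)   # Euler's totient of n = p * q
            return pow(e, -1, phi)    # modular inverse (Python 3.8+)
    raise ValueError("n has no nontrivial factor; not an RSA modulus")


def grover_security_bits(key_bits: int) -> int:
    """Idealized Grover search needs about 2**(k/2) steps for a k-bit key."""
    return key_bits // 2


n, e = 3233, 17                    # toy modulus (61 * 53) and public exponent
message = 65
ciphertext = pow(message, e, n)    # encrypt with the public key
d = break_toy_rsa(n, e)            # attacker derives the private key
print(d, pow(ciphertext, d, n))    # recovered exponent and decrypted message
for bits in (128, 256):
    print(f"AES-{bits}: ~{grover_security_bits(bits)}-bit security vs. Grover")
```

Once the modulus is factored, the private key follows directly, which is why Shor’s algorithm forces RSA, ECC, and Diffie-Hellman to be replaced, whereas Grover’s algorithm can be countered by larger symmetric keys (e.g., AES-256).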

Although full-scale quantum computers are not yet available, immediate action is required because of the risk of harvest-then-decrypt attacks, in which encrypted data is collected now and decrypted later once quantum capabilities mature [24, 25, 9]. The solution lies in quantum-safe cryptography, which comprises two approaches: quantum cryptography and post-quantum cryptography (PQC). Unlike quantum cryptography, PQC can be implemented on classical devices and has been prioritized by the National Institute of Standards and Technology (NIST) for securing existing systems [19]. The urgency is underscored by recent initiatives, such as the National Security Agency’s adoption of the Commercial National Security Algorithm Suite 2.0 [13], which mandates PQC for national security systems by 2033. This leaves a migration window of approximately 10 years, consistent with previous cryptographic migrations that also took more than a decade (e.g., 3DES to AES and RSA to ECC) [24].

The migration to PQC represents a complex software evolution challenge and an emerging research priority. As researchers and educators, our goal is to raise awareness of quantum cybersecurity threats and promote best practices for PQC migration. Our initial step is to educate scholars, students, and cybersecurity practitioners, which aligns with the objectives of the Quantum Information Science and Technology Workforce Development National Strategic Plan [20] and the National Quantum Initiative Act [12].

In traditional STEM (science, technology, engineering, and mathematics) education, researchers have explored various pedagogical approaches to improve learning outcomes, e.g., active learning [3, 21, 1] and student-led seminars [11, 8, 2, 7]. With recent breakthroughs in quantum computing, educational research has started to address quantum-related topics [15, 4, 14]. However, there is a notable gap in the literature on integrating active learning approaches into quantum cryptography education, particularly for PQC. The challenge stems from the interdisciplinary nature of quantum cryptography, which requires foundational knowledge of linear algebra, cybersecurity, and quantum mechanics. Given this complexity, it is challenging to introduce PQC concepts effectively and efficiently to STEM students within limited instructional time.

In this study, we evolve existing STEM curricula by integrating PQC learning modules and evaluate the effectiveness of active learning for teaching PQC to both undergraduate and graduate students. The ultimate goal of this research is to raise awareness about quantum threats and effectively promote PQC solutions to the next generation of STEM professionals.

Active learning strategies can be student-led (e.g., student-led seminars, role-playing) or faculty-led (e.g., case studies, interactive quizzes). We are interested in exploring the effectiveness of both. Given the challenges of teaching PQC and the identified gaps in the literature, we pose two research questions (RQs) to guide our study.

RQ1. How do student-led seminars, combined with faculty-led active learning, enhance students’ understanding of PQC concepts compared to faculty-led sessions alone?

RQ2. How do student-led seminars and faculty-led active learning strategies affect student engagement in PQC lectures?

To answer these RQs, we conduct two iterations of lectures. The first iteration consists of faculty-led active learning activities (e.g., hands-on coding exercises), and the second iteration adds student-led seminars to all the faculty-led active learning activities of the first iteration. To evaluate learning outcomes, we use a Kahoot game [6] (game-based learning) as a self-cognitive assessment (see Section 4.1 for results) and an end-of-lecture course evaluation (using Google Forms) to capture students’ perspectives (see Section 4.2 for details). We then employ statistical and manual approaches to analyze the Kahoot results and students’ feedback and compare them between the two iterations.

Our contributions are two-fold. First, we introduce an active learning approach for teaching PQC to both undergraduate and graduate students and evaluate its effectiveness through comparison testing, demonstrating that student-led seminars can significantly enhance learning outcomes. Second, we have made our lecture videos and slides publicly available to support other researchers and educators in developing their own PQC learning modules. Our artifacts are publicly available at https://zenodo.org/records/13909017, and our courseware is available at https://est.umbc.edu/teaching/pqc-teaching-module/.

The rest of this paper is organized as follows. Section 2 reviews existing work on active learning, student-led seminars, and quantum education. Section 3 describes how we construct the lectures and our evaluation plan. Section 4 presents our findings and discussion, including the Kahoot game results and the analysis of student lecture evaluations. Section 5 discusses threats to validity, and Section 6 concludes the paper.

2 Related Work

Due to rapid technological change, effective and efficient learning paradigms have become an important factor in the education system. This section presents related literature on active learning methodologies, student-led seminars, and quantum computing in higher education.

2.1 Active Learning

Studies that incorporate active learning approaches report better student engagement and satisfaction. Cundell and Sheepy [3] evaluated student perceptions of online learning activities in a graduate seminar course that integrated 3.5 hours of online study through peer reviews, collaborative tasks, problem-solving, content sharing, and other activities. A survey of 59 students found that discussion forums were the highest-rated online activities, with 63% of students rating peer review of the assessment forum as the most engaging activity, while online readings from academic websites were rated the lowest. The study concludes that emphasizing learner-to-learner interaction is key to fostering collaborative learning in online environments.

Beemt et al. [21] examined students’ engagement in active learning through a virtual lab program that included three physical experimental setups integrated into systems and control courses. The remote lab featured both live interactive and queued automated experiments, allowing students to perform tasks in both virtual and physical environments. A total of 73 end-of-course evaluations revealed that two-thirds of students found the remote lab valuable and user-friendly, and many highlighted its flexibility as a major benefit. Although increased engagement was observed, some students pointed out the need for more hands-on tutorials to effectively navigate the remote lab setups.

Aji and Khan [1] examined “flipped classrooms,” in which students were provided with interactive audio-visual materials explaining various concepts before class. The study was conducted in four classes in lower-level mathematics and aerospace engineering courses, with 25 to 30 students in each class. End-of-semester grades showed significant improvements in overall grades and passing rates across all flipped courses compared with traditional classes.

2.2 Student-led Seminars

Studies on the effectiveness of student-led seminars generally report positive outcomes, often using surveys for feedback and evaluation. Minhas et al. [11] examined the impact of passive versus active learning in an Animal Physiology course with 72 undergraduates over two years. Each year was split into two halves—the first half used instructor-led classes, and the second used student-led seminars. Survey results showed an increase in preference for student-led seminars, from 22.7% to 33.3% over the years. In general, students rated seminars highly and felt more confident in retaining information through self-study. The study suggested that active learning enhanced performance, though comparing distinct student groups over the years would have yielded more robust results than dividing the curriculum over the two time periods during each year.

Kurczek and Johnson [8] discussed their experience with a seminar-based approach in a course of nine students who conducted weekly seminars, prepared presentations, and engaged in group discussions. The students were encouraged to present their arguments and questions after the seminars to promote a better understanding of the subject. Scores from the mid-term and final surveys indicated that the students felt the instructor’s effectiveness improved over time and that they learned more as the course progressed. Overall, the students showed a preference for the seminar format, with increased participation and better learning outcomes. However, the authors observed that more instructor intervention in explaining complex theories and guiding discussions would further improve the classroom experience.

Casteel and Bridges [2] investigated collaborative learning using a student-led seminar approach in advanced psychology courses. The authors taught five classes across three different courses over two years, with four to ten students per class. The courses used weekly reading assignments, which student leaders presented and discussed in class. Standard end-of-course university surveys were compared with those of other courses taught by the same instructor and of the same courses taught on a different campus using traditional approaches. The survey results indicated that the student-led approach enhanced the overall quality of the learning experience. In addition, 71% of the students favored the seminar-based format, suggesting positive impacts of collaborative learning on student understanding and satisfaction.

Kassab et al. [7] evaluated the educational outcomes and student perceptions of student-led tutorials in a problem-based learning course by comparing student-led and faculty-led tutorial groups. Based on 290 feedback responses, students felt that student tutors provided better feedback than faculty tutors during discussions. Although examination scores showed no significant difference between the two groups, the student-led groups were rated better in terms of tutorial atmosphere, decision-making, and support for the group leader. However, student tutors faced challenges in analyzing the problems at the beginning. The study confirmed the value of peer tutoring in promoting collaborative learning but recommended proper tutor training to improve learning outcomes.

2.3 Quantum Education

Research in quantum education is on the rise, highlighting the need for educators to apply effective teaching approaches to cutting-edge technologies. Salehi et al. [15] introduced quantum computing through a computer science and linear algebra approach rather than through traditional quantum mechanics and physics concepts. A total of 430 participants from various backgrounds attended the two- to three-day task-based workshops. Pre- and post-test scores of 317 participants showed significant knowledge gains across all participants. However, participants reported insufficient time to complete the tasks. Moreover, the study did not explore or compare different teaching strategies.

Fox et al. [4] explored various quantum career opportunities and skills required, focusing on how higher education can bridge the gap. They interviewed 22 hiring managers and supervisors from firms engaged in quantum computing. Employers highlighted the need for individuals from different educational backgrounds with skills such as coding, statistical analysis, and laboratory experience to support quantum software and hardware. Additionally, 33% of companies suggested the inclusion of quantum awareness courses targeted at engineers and computer scientists along with more hands-on, practical training through internships and capstone projects. The study emphasized the importance of education programs in preparing students for the quantum industry, which aligns with our goal.

Nita et al. [14] proposed a visualization tool to help non-experts understand quantum computation concepts without requiring in-depth mathematical expertise. The tool allowed learners to visualize quantum phenomena such as interference and superposition, promoting active learning through hands-on experimentation. The authors identified advanced mathematical skills as a barrier to quantum education, a finding that supports the motivation of our study.

In summary, while educators recognize the urgency of imparting quantum computing knowledge to students, there is a lack of diverse educational practices and limited research on effective teaching strategies in this emerging field.

3 Our Method

In this section, we outline the approach adopted for our study, which compares the effectiveness of faculty-led lectures (FLL) and hybrid lectures (HL, a combination of student-led seminars and faculty-led lectures).

As shown in Figure 1, we develop two learning modules and integrate them into a graduate course (IS636, “Structured Systems Analysis and Design”) and an undergraduate course (IS471, “Data Analytics for Cybersecurity”), respectively, in the Department of Information Systems. The graduate lecture is 2.5 hours long, and the undergraduate lecture is 1 hour long. Because the graduate lecture is longer, it includes more introductory material on quantum systems, whereas the undergraduate lecture focuses mainly on PQC.

Table 1: Activities in the two iterations of the PQC lectures.

| Iteration | Graduate (2.5 hours) | Undergraduate (1 hour) |
|---|---|---|
| 1 | Spring 2023: Coding, Kahoot | Spring 2023: Coding, Kahoot |
| 2 | Fall 2023: Student-led seminar, Coding, Kahoot | Spring 2024: Student-led seminar, Coding, Kahoot |

We provide the PQC lectures in two iterations from the spring of 2023 to the spring of 2024 (see Table 1). Both iterations contain multiple active learning approaches, such as hands-on coding exercises and Kahoot games. However, only the second iteration introduces student-led seminars prior to faculty-led lectures. We assess the impact of both methods on students’ learning and engagement through the results of Kahoot quizzes and anonymous surveys.

Lecture Development and Design. In the spring semester of 2023, we developed a PQC learning module based on active learning. This module includes faculty-led lectures with active learning activities, such as hands-on coding exercises and Kahoot quizzes (see Table 1). An example of a hands-on exercise is shown in Figure 2. We tailor and incorporate the PQC learning module into two courses in our department, as follows:

- The graduate course “Structured Systems Analysis and Design,” with a 2.5-hour PQC learning module.
- The undergraduate course “Data Analytics for Cybersecurity,” with a 1-hour PQC learning module.

After completing the initial PQC learning modules, we aimed to improve student learning outcomes and engagement by incorporating more active learning approaches, motivated by the learning challenges observed in the graduate course. We therefore integrated a student-led seminar into the PQC learning module and implemented this second iteration in the same courses in the fall semester of 2023 (graduate) and the spring semester of 2024 (undergraduate). The new PQC lecture begins with a student-led seminar, followed by the faculty-led lecture with hands-on coding exercises, and concludes with a Kahoot quiz (see Table 1). For the student-led seminars, participants choose a quantum computing topic (e.g., “What is a qubit?”) from a provided list or propose their own, and deliver an elevator pitch individually or in groups. The graduate and undergraduate courses use the same structure. As in the first iteration, the graduate lecture is longer than the undergraduate lecture and contains a more detailed introduction to quantum systems.

To assess the effectiveness of active learning approaches, specifically student-led seminars, we compare the two iterations of PQC lectures (FLL versus HL). We adopt Kahoot to assess student learning outcomes and an end-of-lecture survey to analyze students’ perspectives and engagement.

Table 2: Kahoot quiz questions used in all PQC lectures.

| Question # | Question Type | Question |
|---|---|---|
| 1 | True or False | Symmetric encryption involves both public and private keys. |
| 2 | True or False | Quantum computing is the use of quantum mechanics to perform computation. |
| 3 | True or False | Shor’s algorithm is a quantum algorithm that can crack RSA schemes in polynomial time. |
| 4 | True or False | Post-quantum cryptography is a group of classical algorithms running on classical computers. |
| 5 | Multiple Choice | Three qubits in superposition can represent ___ quantum states simultaneously. |

Self-Cognitive Assessment (Kahoot Game). Previous research shows that Kahoot games provide an effective self-cognitive assessment for participants [18]. We therefore employ Kahoot games at the end of each lecture to assess students’ understanding of the material and give them an opportunity to reflect on their learning progress. The Kahoot questions focus on distinguishing between quantum-safe and traditional cryptographic methods, understanding the significance of Shor’s and Grover’s algorithms, and the implications of quantum computing for cybersecurity. An example of a Kahoot game can be found in Figure 3. Our midterm feedback also indicates that Kahoot is one of the most popular interactive learning activities used in class. To ensure consistency, the same set of Kahoot questions is used in all PQC lectures. Table 2 lists all the questions, including four “True or False” questions and one “Multiple Choice” question. These questions are designed to test key concepts covered in the lectures and provide immediate feedback to both students and instructors. We employ a t-test [17] to compare responses (in terms of scores and response times) between the FLL and HL groups. More detailed results and analysis can be found in Section 4.1.
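Question 5 in Table 2 rests on the fact that an n-qubit register is described by 2^n amplitudes. The minimal plain-Python sketch below (an illustration we add, assuming no quantum SDK) applies a Hadamard gate to each of three qubits initialized to |000> by building the Kronecker product H ⊗ H ⊗ H, which yields an equal-amplitude superposition over 2^3 = 8 basis states. The function names `kron` and `hadamard_on_all` are hypothetical.

```python
import math

# Single-qubit Hadamard gate.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]


def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]


def hadamard_on_all(num_qubits):
    """Return the state vector (H tensored n times)|0...0> as 2**n amplitudes."""
    op = [[1.0]]
    for _ in range(num_qubits):
        op = kron(op, H)
    # |0...0> is the first basis vector, so the result is the first column of op.
    return [row[0] for row in op]


state = hadamard_on_all(3)
for index, amplitude in enumerate(state):
    print(f"|{index:03b}>  amplitude {amplitude:.4f}  probability {amplitude ** 2:.3f}")
print(len(state), "basis states")
```

Measuring this state yields each of the eight 3-bit strings with probability 1/8, which is the sense in which three qubits in superposition “represent” eight states at once.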

Table 3: End-of-lecture survey questions.

| Question # | Question | Choice |
|---|---|---|
| 1 | Lecture pace is: | 1. Too slow 2. Just right 3. Too fast |
| 2 | Amount of material presented is: | 1. Too little 2. Just right 3. Too much |
| 3 | Difficulty of material presented is: | 1. Too easy 2. Just right 3. Too hard |
| 4 | Is the topic interesting? | 1. Agree 2. Neutral 3. Disagree |
| 5 | Does the instructor explain the material clearly? | 1. Strongly agree 2. Agree 3. Neutral 4. Disagree 5. Strongly disagree |
| 6 | What do you like about the lecture? | Open-ended question |
| 7 | What do you dislike about the lecture? | Open-ended question |
| 8 | Your general comments about the lecture: | Open-ended question |

Student Lecture Evaluation. To collect comprehensive feedback from students on the lecture formats, we use Google Forms to conduct anonymous surveys at the end of each lecture. The questionnaire asks students to evaluate various aspects of the lecture, including the lecture pace, the amount and difficulty of the presented material, the clarity of the content, their likes and dislikes, and their overall satisfaction with the learning experience. Table 3 provides an overview of all survey questions. This feedback is crucial for understanding students’ perspectives and identifying areas for further improvement. We perform a chi-square test [10] and sentiment analysis to quantify the survey data and evaluate students’ engagement and satisfaction in the FLL and HL groups. Results and analysis can be found in Section 4.2.

4 Results and Discussions

This section analyzes student performance and lecture feedback for the two PQC lecture formats, FLL and HL, in both undergraduate and graduate courses. The effectiveness of each format is assessed through Kahoot quiz results, measuring both student scores and response times, alongside course evaluations collected through questionnaires. Statistical analyses, including t-tests and chi-square tests, are used to determine whether differences between the FLL and HL formats are significant. The findings provide insights into the impact of student-led seminars on learning outcomes and overall student satisfaction.

4.1 Kahoot Results Analysis

In the undergraduate course, both the FLL and HL groups have 12 students. In the graduate course, 15 students are enrolled in the FLL group, while 13 students participate in the HL group. All undergraduate students take the Kahoot quizzes; however, one student in the graduate FLL group misses the quiz. The Kahoot results for each group are reported as mean ± standard deviation (SD), along with the coefficient of variation (CV = SD / mean), which measures the relative variability in students’ performance. The statistical significance of differences between groups is assessed using the p-value, with values below 0.05 considered statistically significant.
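As a quick check of the CV definition, the sketch below computes mean, SD, and CV for a list of per-student scores and confirms that the ratio SD / mean reproduces the CVs reported in Table 4. We assume the sample SD (n - 1 denominator), since the text does not state which variant was used; the function name `summarize` is hypothetical.

```python
import statistics


def summarize(scores):
    """Return (mean, SD, CV) for a list of per-student Kahoot scores.

    Uses the sample standard deviation (n - 1 denominator), an assumption.
    """
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    return mean, sd, sd / mean


# (group, mean, SD, reported CV) copied from Table 4.
table4 = [
    ("Undergraduate FLL", 3540.84, 1006.96, 0.28),
    ("Undergraduate HL", 5216.84, 5954.70, 1.14),
    ("Graduate FLL", 2759.20, 1159.96, 0.42),
    ("Graduate HL", 3683.91, 1009.41, 0.27),
]
for group, mean, sd, reported_cv in table4:
    print(f"{group}: SD/mean = {sd / mean:.2f} (reported {reported_cv})")
```

`summarize` applies to raw per-student scores, while the loop works directly from the published summary statistics.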

Table 4: Kahoot scores by course and lecture format.

| Course | Group | Mean ± SD | CV | p-value |
|---|---|---|---|---|
| Undergraduate | FLL | 3540.84 ± 1006.96 | 0.28 | 0.367 |
| Undergraduate | HL | 5216.84 ± 5954.70 | 1.14 | |
| Graduate | FLL | 2759.20 ± 1159.96 | 0.42 | 0.046* |
| Graduate | HL | 3683.91 ± 1009.41 | 0.27 | |

* Statistically significant (p < 0.05).

Kahoot Scores. As presented in Table 4, the HL group in the undergraduate course achieves a higher mean score (5216.84 ± 5954.70) than the FLL group (3540.84 ± 1006.96), though this difference is not statistically significant (p-value = 0.367), likely in part because the HL scores are highly variable (CV = 1.14). In contrast, in the graduate course, the HL group not only has a higher mean score (3683.91 ± 1009.41) but also a lower SD and CV than the FLL group (2759.20 ± 1159.96), with a p-value of 0.046, indicating a statistically significant improvement in the HL group. This suggests that student-led seminars have a positive impact on graduate students’ learning outcomes.

This improvement is further supported by the graduate students’ Kahoot correctness rate, which rose from 58% (FLL) to 78% (HL). A potential explanation for the low correctness rate in the FLL group is the diverse educational backgrounds of our graduate students (e.g., some hold degrees from non-STEM disciplines). In contrast, graduate students in the HL group had extra self-directed learning time before the lecture (to prepare their seminars), which may have better equipped them for independent learning, as reflected in their higher average Kahoot scores.
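The score comparison above relies on a two-sample t-test, but the text does not state which variant underlies Tables 4 and 5. As an assumption, the sketch below implements Welch’s t-test (which does not assume equal variances) from summary statistics using only the Python standard library; the two-sided p-value is obtained by numerically integrating the Student t density with Simpson’s rule. It is illustrative only, so its output is not guaranteed to reproduce the reported p-values, and the function names are hypothetical.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm) * (1 + x * x / df) ** (-(df + 1) / 2)


def t_two_sided_p(t, df, steps=20_000):
    """Two-sided p-value 1 - 2 * integral_0^|t| pdf, via Simpson's rule."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return max(0.0, 1.0 - 2.0 * total * h / 3)


def welch_t_test(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic, Welch-Satterthwaite df, and two-sided p-value."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    t = (mean2 - mean1) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df, t_two_sided_p(t, df)


# Sanity check: 2.228 is the two-sided 5% critical value for df = 10.
print(round(t_two_sided_p(2.228, 10), 3))
```

Working from summary statistics makes the sketch applicable to rows like those in Tables 4 and 5; with raw per-student data, a library routine such as SciPy’s two-sample t-test would be the more usual choice.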

Table 5: Kahoot response times in seconds by course and lecture format.

| Course | Group | Mean ± SD | CV | p-value |
|---|---|---|---|---|
| Undergraduate | FLL | 4.93 ± 4.09 | 0.83 | 0.183 |
| Undergraduate | HL | 3.09 ± 1.77 | 0.57 | |
| Graduate | FLL | 4.44 ± 5.09 | 1.50 | 0.318 |
| Graduate | HL | 2.83 ± 1.51 | 0.53 | |

* Statistically significant (p < 0.05).

Kahoot Response Times. Besides accuracy, Kahoot also measures fluency, i.e., how quickly a participant answers a question. Table 5 presents the students’ average response times. The HL group responds faster than the FLL group in both the undergraduate and graduate courses, with a lower CV in both. In the undergraduate lecture, the mean response time of the HL group is 3.09 seconds, compared to 4.93 seconds for the FLL group. In the graduate lecture, the mean response time of the HL group is 2.83 seconds, compared to 4.44 seconds for the FLL group. However, these differences are not statistically significant at the 0.05 threshold (p-values of 0.183 and 0.318, respectively). This suggests that while the HL format may enhance overall learning outcomes, it does not significantly affect response times.

RQ1. How do student-led seminars, combined with faculty-led active learning, enhance students’ understanding of PQC concepts compared to faculty-led sessions alone?

Our Findings. Kahoot results indicate that student-led seminars in the HL format significantly improve learning outcomes for graduate students. For undergraduate students, the student-led seminars also show a positive effect on learning outcomes, but the improvement is not statistically significant.

4.2 Student Lecture Evaluation Analysis

We design an anonymous questionnaire to assess the learning experience of both our undergraduate and graduate students following each iteration of lectures. This questionnaire aims to gather detailed feedback on various aspects of the students’ learning experiences. The list of questions in the questionnaire can be found in Table 3. We adopt both statistical and manual approaches to analyze the responses from both undergraduate and graduate students in FLL and HL groups, respectively.

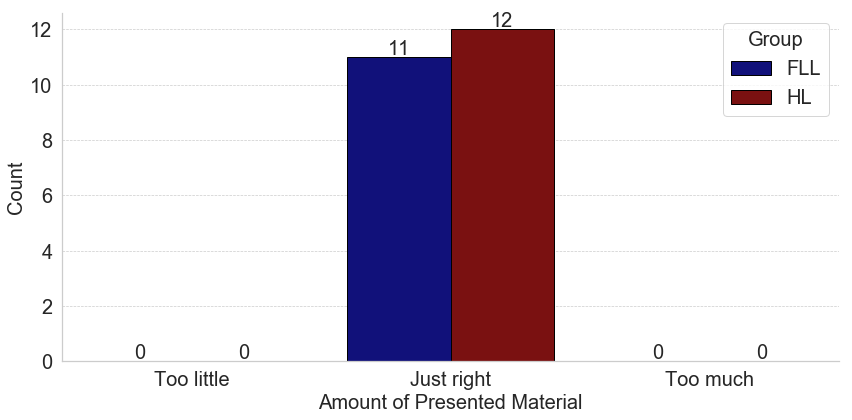

Multiple-Choice Questions. Figures 4 to 8 illustrate the distribution of responses to the five multiple-choice questions.

For the lecture pace evaluation, as depicted in Figure 4(a), all undergraduate students in the FLL group (100%) and the majority of HL students (83.33%) agree that the pace is ‘just right,’ while 16.67% of HL students feel that it is ‘too fast.’ Graduate students show a similar pattern (Figure 4(b)): 69.23% of FLL students and 84.61% of HL students agree that the lecture pace is ‘just right,’ while 30.77% of FLL and 15.38% of HL students find it ‘too fast.’

In terms of the amount of material presented, both the undergraduate FLL and HL groups unanimously (100%) report that the amount is ‘just right’ (Figure 5(a)). At the graduate level (Figure 5(b)), 61.54% of FLL students and 84.61% of HL students agree that the amount is ‘just right,’ while 30.77% of FLL students and 7.69% of HL students feel that there is ‘too much’ material, and 7.69% of both groups believe that there is ‘too little.’

According to Figure 6(a), all FLL students and 91.67% of HL students in the undergraduate lectures find the difficulty ‘just right,’ with only 8.33% of HL students finding it ‘too hard.’ At the graduate level, Figure 6(b) shows that 69.23% of FLL students and 92.31% of HL students find the difficulty ‘just right,’ while 30.77% of FLL students and 7.69% of HL students find it ‘too hard.’

While all undergraduate FLL and HL students (100%) agree that the topic is interesting (see Figure 7(a)), 61.54% of graduate FLL students and 92.31% of graduate HL students find the topic interesting, and 38.46% of FLL and 7.69% of HL students respond neutrally (see Figure 7(b)).

Finally, in terms of clarity, 100% of undergraduate HL students either ‘strongly agree’ or ‘agree’ that the instructor explains the material clearly, compared to 72.73% of undergraduate FLL students (Figure 8(a)); the remaining 27.27% of FLL students ‘strongly disagree.’ In the graduate course, 92.31% of both FLL and HL students either ‘strongly agree’ or ‘agree’ that the material is clear, while 7.69% of each group respond ‘neutral.’

Table 6: p-values of the chi-square tests of independence between lecture format and responses to the multiple-choice survey questions.

| Course | Group | Lecture Pace | Amount of the Material | Difficulty of the Material | Is the Topic Interesting | Clarity of the Material |
|---|---|---|---|---|---|---|
| Undergraduate | FLL versus HL | 0.498 | 1.0 | 0.964 | 1.0 | 0.089 |
| Graduate | FLL versus HL | 0.641 | 0.320 | 0.319 | 0.162 | 0.709 |

* Statistically significant (p < 0.05).

We also perform a chi-square test of independence to determine whether there is a significant association between the lecture format (FLL versus HL) and the responses to the five multiple-choice questions. Table 6 presents the resulting p-values. At the 0.05 significance threshold, our analysis indicates no significant association between lecture format and students’ reported learning experiences in either the graduate or the undergraduate course. This suggests that the lecture format (FLL or HL) did not significantly influence students’ feedback on the investigated aspects.
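The sketch below shows a chi-square test of independence on a contingency table (rows = lecture format, columns = response options) using only the Python standard library, with Yates’ continuity correction applied when the table has one degree of freedom. Whether the original analysis used this correction is our assumption, and the function names are hypothetical. As an illustration, we reconstruct the graduate lecture-pace counts from the Figure 4(b) percentages, assuming 13 respondents per group (9 ‘just right’ and 4 ‘too fast’ for FLL; 11 and 2 for HL, with the empty ‘too slow’ option dropped). Under these assumptions the sketch yields p ≈ 0.64, in line with the 0.641 reported in Table 6.

```python
import math


def regularized_lower_gamma(a, x):
    """P(a, x) via its power series; adequate for the small statistics here."""
    if x <= 0:
        return 0.0
    term = total = 1.0 / a
    n = 0
    while abs(term) > 1e-15 * total and n < 10_000:
        n += 1
        term *= x / (a + n)
        total += term
    return total * math.exp(a * math.log(x) - x - math.lgamma(a))


def chi2_sf(stat, dof):
    """Upper-tail probability of the chi-square distribution."""
    return 1.0 - regularized_lower_gamma(dof / 2, stat / 2)


def chi_square_independence(table):
    """Chi-square test of independence; Yates-corrected when dof == 1.

    `table` holds observed counts; every row and column total must be positive.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    dof = (len(table) - 1) * (len(col_totals) - 1)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            diff = abs(observed - expected)
            if dof == 1:
                diff = max(diff - 0.5, 0.0)  # Yates' continuity correction
            stat += diff ** 2 / expected
    return stat, dof, chi2_sf(stat, dof)


# Graduate lecture pace, counts inferred from Figure 4(b):
# columns = ["just right", "too fast"], rows = [FLL, HL].
stat, dof, p = chi_square_independence([[9, 4], [11, 2]])
print(f"chi2 = {stat:.3f}, dof = {dof}, p = {p:.3f}")
```

With cell counts this small, the continuity correction noticeably raises the p-value, which is one reason a lack of significance here should be read cautiously.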

Open-Ended Questions. For the three open-ended questions (see Table 3), we manually inspect students’ responses about what they like or dislike about our lectures and their overall comments or suggestions.

For the question “What do you like about the lecture?”, students’ comments generally highlight two topics: 1) the lecture presentation and structure, and 2) the active learning activities. We observe that 72.73% of undergraduate FLL students like the way the lecture is presented, compared to 91.67% of undergraduate HL students, a noticeable increase in satisfaction with the HL format. A similar trend appears at the graduate level, where 38.46% of FLL students express satisfaction with the presentation, compared to 53.85% of HL students. Although satisfaction rates are generally lower among graduate students, HL participants report higher satisfaction than FLL participants at both academic levels.

In the undergraduate course, 45.45% of FLL students give positive feedback on the active learning methods used, while slightly more HL students (50.00%) report the same, indicating a similar level of approval of the active learning activities in the two groups. In the graduate course, 23.08% of HL students comment favorably on the student-led seminars, whereas no FLL student mentions the active learning activities at all. This suggests that graduate HL students may have found the student-led component more noteworthy than graduate FLL students found the faculty-led activities.

For the question “What do you dislike about the lecture?”, we do not observe any negative feedback regarding the presentation style or the active learning methods adopted. Among undergraduate students, 63.64% of FLL and 58.34% of HL participants indicate that they do not dislike anything about the lecture. At the graduate level, the corresponding shares are lower: 15.38% of FLL students and 38.46% of HL students report that they do not dislike any aspect of our lectures. Since none of the reported dislikes concern the presentation style or the active learning approach, these results indicate general satisfaction with both. A few students suggest changes to the pace and the amount of presented material, which aligns with their responses to the multiple-choice questions (see Figures 4(b) and 5(b)).

For the question “Your general comments about the lecture:”, we manually review the participants’ feedback to evaluate their overall learning experience. In the undergraduate course, where attendance rates are 92% for the FLL group and 100% for the HL group, all comments from both sessions (100%) are positive about the active learning approaches employed or the structure of the HL.

For example, one undergraduate student noted,

“The student presentations at the beginning were an interesting inclusion, as it allowed them to gain a basic understanding beforehand. The Kahoot was also very fun.”

Another undergraduate student remarked,

“I liked the lecture and it was engaging. I knew very little coming in and I came out learning interesting things.”

This positive trend extends to the graduate course. In the graduate FLL and HL lectures, with response rates of 60% and 100%, respectively, all comments (100%) are likewise positive about the active learning strategies or the structure of the HL sessions. For example, one graduate student described the advantages of our teaching approach as follows:

“Live demo with the code execution really showed me the impact of quantum computers when our classical computers weren’t able to match upto the speed and computing prowess. And getting to learn about advanced technology even more earlier in this stage was an interesting part of this course altogether.”

Overall, the positive feedback highlights the value of structured preparation and interactive elements, such as student presentations and tools like Kahoot. The absence of negative comments on the HL lectures further suggests that this format is engaging and may be a preferred instructional method for enhancing the overall learning experience.

RQ2. How do student-led seminars and faculty-led active learning strategies affect student engagement in PQC lectures?

Our Findings. Manual examination and sentiment analysis of the lecture evaluations from both undergraduate and graduate students reveal that both the FLL and HL groups responded positively. However, the chi-square tests in Table 6 show no statistically significant difference between the FLL and HL groups in their responses to the multiple-choice questions.

4.3 Discussions

To summarize, the results of the Kahoot self-cognitive assessments suggest that the student-led seminar, combined with other active learning activities, is an effective method for teaching PQC concepts to both undergraduate and graduate students. In addition, students’ feedback on the PQC lectures is strongly positive: they describe the topics as engaging, the course presentation as attractive, and the interactive learning experience as positive. Students also express satisfaction with the amount of content and the clarity of instruction.

These findings motivate us to continue offering PQC lectures and expand this teaching approach to a wider range of courses. By promoting PQC education, we aim to deepen students’ understanding of emerging technologies and better prepare them for challenges in the evolving quantum computing landscape. Furthermore, the use of active learning strategies, such as student-led seminars, has shown particularly promising results in enhancing engagement and learning outcomes at the graduate level.

5 Threats to Validity

Internal and construct validity. The dynamic nature of teaching introduces variability in instructional quality, which could influence learning outcomes. Despite our efforts to keep the lecture content consistent, unintended variations in delivery, particularly across the two courses taught between spring 2023 and spring 2024, may have influenced the outcomes. To mitigate this, we documented all course materials before the first round of lectures and reused them in all subsequent sessions so that both the content and the teaching methods remained consistent.

Furthermore, the intrinsic motivation and prior knowledge of the participants, which were not systematically assessed at the onset of the study, may have influenced their engagement and absorption of the material. However, since the graduate course is a core course in the department, all students enrolled had completed the required fundamental courses, including the courses “Introduction to Object-Oriented Programming Concepts”, “Database Program Development”, and “Data Communications and Networks”. These courses provided participants with the essential prior knowledge in programming and networking necessary for understanding PQC. Also, our undergraduate students had completed three years of relevant coursework from the department, such as the course “Introduction to Database Design”, prior to taking the undergraduate course.

External and conclusion validity. This study’s findings may be constrained by its focus on PQC and by the varied educational backgrounds of potential participants. Our undergraduate course consisted of students from a STEM program, and our graduate course included students from human-centered computing and information systems programs. Since all participants were STEM students, we ensured that they had a baseline understanding of key concepts before introducing PQC. However, this approach may not generalize to students without a STEM background: because our course is rooted in STEM disciplines, the review session may not be sufficient for those without foundational knowledge of computer systems, limiting the applicability of our teaching methods to non-STEM participants.

Additionally, the findings from this study are based on a relatively small sample size within a single institution, with an average of 12.75 participants and a standard deviation of 1.30. These factors may limit the ability to capture the full range of variability in student performance and engagement. To mitigate these limitations, we aim to expand our methods to other institutions, larger groups of students, and various subjects in future research.

6 Conclusions and Future Work

In this paper, we investigated the effectiveness of active learning approaches in delivering the concepts of PQC to STEM students. We adopted self-cognitive assessments and surveys to analyze the learning outcomes and students’ feedback. The analytical results showed that the active learning approaches, especially the student-led seminars, significantly improved students’ learning outcomes, while students in both lecture formats reported positive learning experiences. Our future directions include, but are not limited to, 1) expanding the one-week PQC learning module into a six-week online course that will include introductory materials on programming, quantum computing, and PQC; and 2) incorporating more active learning approaches into the course, e.g., role-playing and case studies.

References

- [1] Chadia A Aji and M Javed Khan. The impact of active learning on students’ academic performance. Open Journal of Social Sciences, 7(03), 2019.

- [2] Mark A Casteel and K Robert Bridges. Goodbye lecture: A student-led seminar approach for teaching upper division courses. Teaching of Psychology, 34(2):107–110, 2007.

- [3] Alicia Cundell and Emily Sheepy. Student perceptions of the most effective and engaging online learning activities in a blended graduate seminar. Online Learning, 22(3):87–102, 2018.

- [4] Michael FJ Fox, Benjamin M Zwickl, and HJ Lewandowski. Preparing for the quantum revolution: What is the role of higher education? Physical Review Physics Education Research, 16(2):020131, 2020.

- [5] Lov K Grover. A fast quantum mechanical algorithm for database search. In Proceedings of the Twenty-Eighth Annual ACM Symposium on Theory of Computing, pages 212–219, 1996.

- [6] Kahoot! Kahoot! Learning games. https://kahoot.com/.

- [7] Salah Kassab, Marwan F Abu-Hijleh, Qasim Al-Shboul, and Hossam Hamdy. Student-led tutorials in problem-based learning: educational outcomes and students’ perceptions. Medical Teacher, 27(6):521–526, 2005.

- [8] Jake Kurczek and Jacob Johnson. The student as teacher: reflections on collaborative learning in a senior seminar. Journal of Undergraduate Neuroscience Education, 12(2):A93, 2014.

- [9] Atefeh Mashatan and Douglas Heintzman. The complex path to quantum resistance: is your organization prepared? Queue, 19(2):65–92, 2021.

- [10] Mary L McHugh. The chi-square test of independence. Biochemia Medica, 23(2):143–149, 2013.

- [11] Paras Singh Minhas, Arundhati Ghosh, and Leah Swanzy. The effects of passive and active learning on student preference and performance in an undergraduate basic science course. Anatomical Sciences Education, 5(4):200–207, 2012.

- [12] National Quantum Initiative. About the National Quantum Initiative. https://www.quantum.gov/about/#NQIA.

- [13] National Security Agency. Announcing the Commercial National Security Algorithm Suite 2.0. https://media.defense.gov/2022/Sep/07/2003071834/-1/-1/0/CSA_CNSA_2.0_ALGORITHMS_.PDF.

- [14] Laurentiu Nita, Laura Mazzoli Smith, Nicholas Chancellor, and Helen Cramman. The challenge and opportunities of quantum literacy for future education and transdisciplinary problem-solving. Research in Science & Technological Education, 41(2):564–580, 2023.

- [15] Özlem Salehi, Zeki Seskir, and Ilknur Tepe. A computer science-oriented approach to introduce quantum computing to a new audience. IEEE Transactions on Education, 65(1):1–8, 2021.

- [16] Peter W Shor. Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM Review, 41(2):303–332, 1999.

- [17] Student. The probable error of a mean. Biometrika, pages 1–25, 1908.

- [18] Rosaline Tandiono. Gamifying online learning: An evaluation of Kahoot’s effectiveness in promoting student engagement. Education and Information Technologies, pages 1–18, 2024.

- [19] The National Institute of Standards and Technology. Post-quantum cryptography. https://csrc.nist.gov/projects/post-quantum-cryptography.

- [20] The National Science & Technology Council. Quantum information science and technology workforce development national strategic plan. Executive Office of the President of the United States, 2022.

- [21] Antoine Van den Beemt, Suzanne Groothuijsen, Leyla Ozkan, and Will Hendrix. Remote labs in higher engineering education: engaging students with active learning pedagogy. Journal of Computing in Higher Education, 35(2):320–340, 2023.

- [22] C. Wohlin, P. Runeson, M. Höst, M.C. Ohlsson, B. Regnell, and A. Wesslén. Experimentation in Software Engineering. Computer Science. Springer Berlin Heidelberg, 2012.

- [23] R.K. Yin. Case Study Research: Design and Methods. Applied Social Research Methods. SAGE Publications, 2009.

- [24] Lei Zhang, Andriy Miranskyy, and Walid Rjaibi. Quantum advantage and the Y2K bug: A comparison. IEEE Software, 38(2):80–87, 2020.

- [25] Lei Zhang, Andriy Miranskyy, Walid Rjaibi, Greg Stager, Michael Gray, and John Peck. Making existing software quantum safe: A case study on IBM Db2. Information and Software Technology, 161:107249, 2023.