Least-Squares Method for Inverse Medium Problems

Abstract

We present a two-stage least-squares method to inverse medium problems of reconstructing multiple unknown coefficients simultaneously from noisy data. A direct sampling method is applied to detect the location of the inhomogeneity in the first stage, while a total least-squares method with mixed regularization is used to recover the medium profile in the second stage. The total least-squares method is designed to minimize the residual of the model equation and the data fitting, along with an appropriate regularization, in an attempt to significantly improve the accuracy of the approximation obtained from the first stage. We shall also present an analysis on the well-posedness and convergence of this algorithm. Numerical experiments are carried out to verify the accuracies and robustness of this novel two-stage least-squares algorithm, with great tolerance of noise.

Keywords: Inverse medium problem, least-squares method, reconstruction algorithm, mixed regularization

Mathematics Subject Classification (MSC2000): 35R30, 65F22, 65N21, 65N06

1 Introduction

In this work, we propose a total least-squares formulation for recovering multiple medium coefficients for a class of inverse medium problems that are governed by the forward model of the form:

| (1.1) |

where is a bilinear operator on , is the state variable, while represents one or multiple unknown coefficients in the model that are to be recovered, under some measurement data of . Here , and are three Hilbert spaces, and is an observation map from to .

In many applications, it is often required to recover multiple coefficients simultaneously. For instance, the diffusive optical tomography (DOT) aims at recovering the diffusion and absorption coefficients and from the governing equation [1, 2]:

| (1.2) |

using the Cauchy data collected at the boundary of :

| (1.3) |

Another example would be the inverse electromagnetic medium problem to recover the unknown magnetic and electric coefficients and in the Maxwell’s system [3, 4]:

using some electric or magnetic measurement data or .

The inverse reconstruction of multiple medium coefficients is generally much more technical and difficult than the single coefficient case. We shall proposed a total least-squares formulation with appropriate regularization to transform the inverse problem into an optimization problem. The total least-squares philosophy is not uncommon. One conventional approach for inverse medium problems is to seek an optimal parameter from a feasible set such that it minimizes an output least-squares functional of the form

| (1.4) |

where solves the forward model (1.1) when is given, is a regularization term and is a regularization parameter. We refer the readers to [2, 5, 6] for more details about this traditional approach. A relaxed variational method of the least-squares form was proposed and studied in [7, 8] for the impedance computed tomography. The least-squares functional consists of a residual term associated with the governing equation while the measurement data is enforced at the feasible set. Different from the aforementioned approaches, we shall follow some basic principle of a total least-squares approach from [9] and treat the governing equation and the data fitting separately, along with a regularization. That is, we look for an optimal parameter from and a state variable from together such that they minimize an extended functional of the form

| (1.5) |

This functional combines the residual of model equation, data fitting and constraints on parameters in the least-squares criterion. The combination in (1.5) results in a regularization effect to treat the model equation and allows a robustness as a quasi-reversibility method [10]. Compared with the conventional approaches, the domain of is much more regular and the semi-norm defined by the formulation is much stronger. More precisely, the total least-squares formulation aims to find a solution pair simultaneously in a smooth class and is less sensitive to the noise and uncertainty in the inverse model. Another important feature of this formulation is that the functional is quadratical and convex with respect to each variable and , if the regularization is chosen to be quadratical and convex, while the traditional one in (1.4) is highly nonlinear and non-convex in general. This special feature facilitates us naturally to minimize the functional effectively by the alternating direction iterative (ADI) method [11, 12] so that only two quadratical and convex suboptimizations are required in terms of the variables and respectively at each iteration.

In addition to the functional (1.5) that uses the residual of the forward model (1.1), we will also address another least-squares functional that makes use of the equivalent first-order system of the forward model (1.1) and replaces the first term in (1.5) by the residuals of the corresponding first-order system. Using the first-order system has been the fundamental idea in modern least-squares methods in solving second-order PDEs [13, 14, 15]. The advantages of using first-order formulations are much more significant to the numerical solutions of inverse problems, especially when we aim at simultaneously reconstructing multiple coefficients as we do in this work. First, the multiple coefficients appear in separated first-order equations, hence are naturally decoupled. This would greatly reduce the nonlinearity and enhance the convexity of the resulting optimization systems. Second, the first-order formulation relaxes the regularity requirement of the solutions in the resulting analysis.

A crucial step to an effective reconstruction of multiple coefficients is to seek some reasonable initial approximations to capture some general (possibly rather inaccurate) geometric and physical profiles of all the unknown multiple coefficients. This is a rather technical and difficult task in numerical solutions. For this purpose, we shall propose to adopt the direct sampling-type method (DSM) that we have been developing in recent years (cf. [17, 18, 26, 27]). Using the index functions provided by DSM, we shall determine a computational domain that is often much smaller than the original physical domain, then the restricted index functions on the computational domain serve as the initial guesses of the unknown coefficients. In this work, we will apply a newly developed DSM [19], where two groups of probing and index functions are constructed to identify and decouple the multiple inhomogeneous inclusions of different physical nature, which is different from the classical DSMs targeting the inhomogeneous inclusions of one single physical nature. As we shall see, DSMs turn out to be very effective and fast solvers to provide some reasonable initial approximations.

The rest of the paper is structured as follows. In Section 2, we justify the well-posedness of the least-squares formulation for the general inverse medium problems. In Section 3, we propose an alternating direction iterative method for solving the minimization problem and prove the convergence of the ADI method. We illustrate in Section 4 how this total least-squares method applies to a concrete inverse problem, by taking the DOT problem as a benchmark problem. We present numerical results in Section 5 for a couple of different types of inhomogeneous coefficients for the DOT problem to demonstrate the stability and effectiveness of this proposed method. Throughout the paper, , and denote generic constants which may differ at each occurrence.

2 Well-posedness of the least-squares formulation for inverse medium problem

Recall that to solve the inverse medium problems modeled by (1.1), we propose the following least-squares formulation

| (2.1) |

This section is devoted to the well-posedness of the total least-squares formulation (2.1), namely, the existence of a solution to (2.1) and the conditional stability of the reconstruction with respect to the measurement. To provide a rigorous justification of the well-posedneness, we present several assumptions on the least-squares formulation, which are minimal for the proof. We will verify these checkable assumptions in Section 4 for a concrete example of such inverse medium problems.

Let us first introduce several notations. For simplicity, for a given (resp. ), we will write (resp. ) as

| (2.2) |

We denote the subdifferential of the regularization term at by , and denote the inner products of the Hilbert spaces , and by , and respectively.

2.1 Existence of a minimizer

We present the following assumptions on the regularization term and operators and in the forward model:

Assumption 1.

The regularization term is strictly convex and weakly lower semicontinuous. Furthermore, is also coercive [6], i.e., .

This assumption implies that the level set defines a bounded set in .

Assumption 2.

Given a constant , for in the level set , , where with a specific side constraint that is not in the least-squares formulation (2.1), is a closed linear operator and is uniformly coercive, i.e., the graph norm satisfies uniformly in for some constant , and thus has a unique solution in .

Under Assumption 2, we can define the inverse operator , which is uniformly bounded by the coercivity of . We also need the following assumption on the sequentially closedness of operators and .

Assumption 3.

The operators and are weakly sequentially closed, i.e., if a sequence converges to weakly in , then the sequence converges to weakly in and the sequence converges to weakly in .

Then we can verify the existence of the minimizers to the least-squares formulation (2.1).

Theorem 1.

Proof.

Since and are nonempty, there exists a minimizing sequence in such that

| (2.3) |

By Assumptions 1–2, is a coercive functional and the graph norm is uniformly coercive, thus it follows (1.5) that the sequence is uniformly bounded. Then there exists a subsequence of , still denoted by , and some such that converges to weakly in and converges to weakly in . As and are weakly sequentially closed by Assumption 3, there hold

| (2.4) |

From the weak lower semicontinuity of the norms and , we have

Together with the lower semicontinuity of the regularization term , we can deduce that

Hence it follows (2.3) that is indeed a minimizer of the functional in . ∎

2.2 Conditional stability

In this subsection, we present some conditional stability estimates of the total least-squares formulation (2.1) for the general inverse medium problems. First we introduce two pairs and that satisfy

| (2.5) |

Letting be the unique minimizer of (2.1) in a neighborhood of , we study the approximation error to illustrate the stability of the least-squares formulation (2.1) with respect to the measurement and also the term in the governing equation (1.1). Denote the residual of the governing equation by . As is the local minimizer of functional in (1.5), we have . Therefore, by the definition of we have the inequality

| (2.6) |

which directly leads to the following observation on :

| (2.7) |

If is coercive, (2.7) provides a rough estimate of the reconstruction with respect to the data noise.

We can further derive an estimate of the approximation error , under the following assumption on the operator .

Assumption 4.

There exists a norm on such that for any ,

Assumption 4 holds when belongs to a finite rank subspace of and for all non-zero . We can now deduce the following result of the approximation error .

Lemma 1.

Proof.

Using the bilinear property of , one can rewrite the difference as

| (2.9) |

By Assumption 2, admits an inverse operator from to , which, together with (2.5), (2.9) and the definition of , implies

| (2.10) |

Plugging (2.10) into (2.6) leads to an inequality:

| (2.11) |

It follows Assumption 4 that there exists a norm such that

| (2.12) |

Then we can deduce from (2.11), the triangle inequality, the boundedness of and (2.12) that

where is a constant, which completes the proof. ∎

The rest of this section is devoted to verifying the consistency of the least-squares formulation (2.1) as the noise level of measurement goes to zero, which is an essential property of a regularization scheme. If we choose an appropriate regularization parameter according to the noise level of the data, we can deduce the convergence result of the reconstructed coefficients associated with the regularization parameter . More precisely, given a set of exact data , we consider a parametric family such that . In the rest of this section, we denote the functional in (1.5) with and by , and the minimizer of by . Then we justify the consistency of the least-squares formulation (2.1) by proving the convergence of the sequence of minimizers to the minimum norm solution [6] of the system (2.5) as .

Theorem 2.

Proof.

As is the minimizer of , there holds

| (2.13) |

By definition, , and thus is uniformly bounded. Following the similar argument in the proof of Theorem 1, there exist a subsequence of , still denoted as , and some such that converges to weakly in . By Assumption 3, we have

From (2.13) one can also derive that

| (2.14) |

which implies

Therefore, as , will converge to satisfying

| (2.15) |

Recall that one has an estimate of from (2.14) that

which leads to

as . Using the lower semicontinuity of functional , one obtain that

Together with (2.15), we conclude that is a minimum norm solution of (2.5). ∎

3 ADI method and convergence analysis

An important feature of the least-squares formulation (2.1) is that the functional is quadratical and convex with respect to each variable and . This unique feature facilitates us naturally to minimize the functional effectively by the alternating direction iterative (ADI) method [11, 12] so that only two quadratical and convex suboptimizations of one variable are required at each iteration. We shall carry out the convergence analysis of the ADI method in this section for general inverse medium problems.

Alternating direction iterative method for the minimization of (1.5).

Given an initial pair , find a sequence of pairs for as below:

-

•

Given , find by solving

(3.1) -

•

Given , find by solving

(3.2)

We shall establish the convergence of the sequence generated by the ADI method, under Assumptions 1–3 on the least-squares formulation (2.1). For this purpose, we would like to introduce the Bregman distance [21] with respect to ,

| (3.3) |

which is always nonnegative for convex function . Now we are ready to present the following lemma on convergence of the sequence generated by (3.1)–(3.2).

Lemma 2.

Proof.

Using the optimality condition satisfied by the minimizer of (3.1), we deduce

| (3.5) |

Similarly, from the optimality condition satisfied by the minimizer of (3.2), we obtain

Taking , and in (3.3), we can derive that

| (3.6) |

The following equality hold for the first term in (3.6):

| (3.7) |

Plugging (3.7) into (3.6), it follows that

| (3.8) |

As the sequence is generated by ADI method (3.1)–(3.2), in each iteration the updated (resp. ) minimizes the functional (resp. ), which would lead to

for all . Then we further derive from (3.5) and (3.8) that for any , satisfies

| (3.9) |

which implies that is bounded. Then we can conclude using Assumption 2 that forms a Cauchy sequence and thus converges to some . Since is uniformly bounded for all , we can derive that converges to some from the strict convexity of . As the sequence is monotone decreasing, there exists a limit . Following the similar argument in the proof of Theorem 1, we conclude that by Assumption 3. This completes the proof of the convergence. ∎

Remark 2.

4 Diffusive optical tomography

We take the diffusive optical tomography (DOT) as an example to illustrate the total least-squares approach for the concrete inverse medium problem in this section. We will introduce the mixed regularization term, present the least-squares formulation of the first-order system of DOT, and then verify the assumptions in Section 2 for the proposed formulation. We shall use the standard notations for Sobolev spaces. The objective of the DOT problem is to determine the unknown diffusion and absorption coefficients and simultaneously in a Lipschitz domain () from the model equation

| (4.1) |

with a pair of Cauchy data on the boundary , i.e.,

Throughout this section, we shall use the notation in the total least-squares formulation, where denotes the Neumann to source map. To define the Neumann to source map, we first introduce the boundary restriction mapping on , i.e., denotes the boundary value of . Then we use to denote the trace operator [22], which is formally defined to be the unique linear continuous extension of as an operator from onto . Using Riesz representation theorem, there exists a function in , denoted by , such that for any ,

which will be denoted by in the least-squares formulation.

4.1 Mixed regularization

In this subsection, we present the mixed regularization term for the DOT problem. As the regularization term in the least-squares formulation (2.1) shall encode the priori information, e.g., sparsity, continuity, lower or upper bound and other properties of the unknown coefficients, it is essential to choose an appropriate regularization term for a concrete inverse problem to ensure satisfactory reconstructions. In this work, we introduce a mixed – regularization term for a coefficient :

| (4.2) |

In this revision we change the semi-norm of into the full norm in the regularization to ensure the strict convexity of . where is given by

and and are the lower and upper bounds of the coefficient respectively. The first term is the regularization term, the second term corresponds to the regularization, and the third term enforces the reconstruction to meet the constrains of the coefficient.

In practice, the regularization enhances the reconstruction and helps find geometrically sharp inclusions, but might cause spiky results. The regularization generates the reconstructions with overall clear structures, while the retrieved background may be blurry. Compared with other more conventional regularization methods, this mixed regularization technique in (4.2) combines two penalty terms and effectively promotes multiple features of the target solution, that is, it enhances the sparsity of the solution while it still preserves the overall structure at the same time. The scalar parameters and need to be chosen carefully to compromise the two regularization terms.

4.2 First-order formulation of DOT

As we have emphasized in the Introduction, it may have some advantages to make use of the residuals of the first-order system of the model equation (4.1), instead of the residual of the original equation in the formulation (2.1), when we aim at recovering two unknown coefficients and simultaneously. Similarly to the formulation (2.1), we now have , and the operator is given by

| (4.3) |

where we have introduced an auxiliary vector flux and each entry of is of a first-order form such that two coefficients are separated naturally. Clearly, is still bilinear with respect to the state variables and coefficients . Using the first-order system, we can then come to the following total least-squares functional:

| (4.4) |

where is the trace operator, and and are the corresponding mixed regularization terms of and defined as in (4.2), , are lower and upper bounds of , and , are lower and upper bounds of . We shall minimize (4.4) over , that is, , .

We will apply the ADI method to solve the least-squares formulation of and :

| (4.5) |

Given an initial guess , we find a sequence for as below:

-

•

Given , , find , by solving

-

•

Given , , find , by solving

4.3 Well-posedness of the least-squares formulation for DOT

Recall that we have proved the well-posedness of the least-squares formulation in Section 2 for general inverse medium problems. This part is devoted to the verification of assumptions in Section 2 for the formulation (4.5) to ensure its well-posedness.

Firstly we consider Assumption 1 on the regularization terms. It is observed from the formula (4.2) that each term of and in (4.5) is convex and weakly lower semicontinuous. As the first term of (4.2) is the regularization term, and are strictly convex by definition. One can also observe that there exists a positive constant such that

| (4.6) | |||

which imply that and are coercive in -norm.

Next we verify Assumption 2 on the closedness of and the coercivity of its graph norm. For fixed , we denote the entries of by , , i.e., for ,

Applying Hölder’s inequality leads to

| (4.7) |

for in the level set for some constant . Then we can deduce from (4.7) that is a closed linear operator. Next, we verify the coercivity of the graph norm. For simplicity, we consider the model problem with homogeneous Neumann boundary condition for the state variable, and set a side constraint for as on . Introduce the following notations

| (4.8) |

From (4.8) and integration by part, we can derive

which implies

| (4.9) |

for some constant when satisfies and for some constant . In this way we verify that the graph norm corresponding to defined as

is uniformly coercive.

Then we consider Assumption 3 on the weakly sequentially closedness of operators and . It is noted that Assumption 3 is applied in Section 2 for general inverse medium problems to prove that the operator maps a subsequence of a bounded sequence to a converging sequence with its limit equal to , where is the limit of . In the analysis of the concrete DOT problem (4.1), the corresponding sequence is actually bounded in a stronger norm than and , as shown in (4.6) and (4.9). As stated in Remark 1, to prove the well-poseness of the least-squares formulation (4.5), it suffices to verify that for a sequence bounded in , there exists a subsequence weakly converging to , and operator defined in (4.3) satisfies weakly converges to in .

The verification of the desired property of is as follows. Given the bounded sequence , there exists a subsequence, still denoted as , weakly converging to in . Denoting by and by , there holds for any that

where we have used the weak convergence of and Hölder’s inequality. Therefore, weakly converges to in . On the other hand, as is the trace operator on in DOT problem, it is linear and thus weakly sequentially closed, which completes our verification.

5 Numerical experiments

In this section, we carry out some numerical experiments on the DOT problem in different scenarios to illustrate the efficiency and robustness of the proposed two-stage algorithm in this work. Throughout these examples, we shall assume that we apply the Neumann boundary data on and measure the corresponding Dirichlet data to reconstruct the diffusion coefficient and the absorption coefficient simultaneously. The basic algorithm involves two stages: we apply the direct sampling method (DSM) in the first stage to get some initial approximations of the two unknown coefficients, and then adopt the total least-squares method to achieve more accurate reconstructions of the coefficients.

5.1 Direct sampling method for initialization

For all the numerical experiments, we shall use the DSM in the first stage of our algorithm, in an attempt to effectively locate the multiple inclusions inside the computational domain with limited measurement data. Here we give a brief description of DSM and refer the readers to [19] for more technical details about this DSM that can identify multiple coefficients. The DSM develops two separate families of probing functions, i.e., the monopole and dipole probing functions, for constructing separate index functions for multiple physical coefficients. The inhomogeneities of coefficients can be approximated based on index functions due to the following two observations: the difference of scattered fields caused by inclusions can be approximated by the sum of Green’s functions of the homogeneous medium and their gradients; and the two sets of probing functions have the mutually almost orthogonality property, i.e., they only interact closely with the Green’s functions and their gradients respectively. Thus we can decouple the monopole and dipole effects and derive index functions for separate physical properties.

In practice, if the value of an index function for one physical coefficient at a sampling point is close to , the sampling point is likely to stay in the support of inhomogeneity; whereas if is close to , the sampling point probably stays outside of the support. Hence the index functions give an image of the approximate support of the inhomogeneity, and we can determine a subdomain to locate the support from index functions. The subdomain could be chosen as with being a suitable cut-off value, then we restrict the index function on . We adopt this choice in the numerical experiments to remove the spurious oscillations in the homogeneous background. Once we have the restricted index function , we set the value of the approximation in as , where the constant is some priori estimate of the true coefficient, and set the value of outside of as the background coefficient. In this way, we obtain as the initial guess for the total least-squares method for our further reconstruction in the second stage.

5.2 Examples

We present numerical results for some two-dimensional examples and showcase the proposed two-stage least-squares method for inverse medium problems using both exact and noisy data. Here the objective functional in (4.4) is discretized by a staggered finite difference scheme [23, 24]. The computational domain is divided into a uniform mesh consisting of small squares of width . The noisy measurements are generated point-wise by the formula

where is the relative noise level and follows the standard Gaussian distribution. The subdomain is chosen using the formula

| (5.1) |

where is the index function from DSM and the cutoff value is taken in the range . As there exist limited theoretical results for the choice of regularization parameters for mixed regularization that we use, the regularization parameters in the functional for reconstructions are determined in a trial-and-error manner, and we present them for different examples in Table 1. The maximum number of alternating iterations is set at 50.

All the computations were performed in MATLAB (R2018B) on a desktop computer.

Example 1.

We shall use only one set of measurements to reconstruct the diffusion and absorption coefficients. It is shown in Fig. 1(b) and 1(f) that the index functions from the DSM separate inclusions of different physical nature well and give approximated locations, while the exact locations of small inclusions are difficult to detect. If we simply take the maximal points of the index functions in Fig. 1(b) and 1(f) as locations of the reconstructed inclusions, we may not be able to identify the true locations of inhomogeneity. Then we set the subdomain using information from the first stage by (5.1), see Fig. 1(c), 1(g), and set the value of approximation out of the subdomain as equal to the background coefficients. As in Fig. 1(d), 1(h), this example illustrates that the least-squares formulation in the second stage works very well to improve the reconstruction and provides a much more accurate location than that provided by DSM. With noise in the measurements, the reconstructions remain accurate as in Fig. 2, which shows that the two-stage algorithm gives quite stable final reconstructions with respect to the noise even though the DSM reconstructions become more blurry in Figs. 2(b) and 2(f). The regularization parameters are presented in Table 1.

Compared with the reconstructions derived from the DSM, the improvement of the approximations for both and is significant: the recovered background is now mostly homogeneous, and the magnitude and size of the inhomogeneity approximate those of the true coefficients well. These results indicate clearly the significant potential of the proposed least-squares formulation with mixed regularization for inverse medium problems.

From Table 1 we obtain an insightful observation about the mixed regularization: the magnitude of parameter is much larger than that of . We can conclude that the penalty plays a predominant role in improving the performance of reconstruction for such inhomogeneous coefficients, whereas the penalty yields a locally smooth structure.

| Noise level | ||||

|---|---|---|---|---|

| Example | ||||

| 1 | (1.0e-2, 2.0e-2 ) | (5.0e-4, 5.0e-4) | (1.0e-2, 2.0e-2) | (5.0e-4, 1.0e-3) |

| 2.1 | (1.0e-3, 5.0e-3) | N/A | (1.0e-3, 1.0e-2) | N/A |

| 2.2 | (1.0e-6, 1.0e-3) | N/A | (1.0e-6, 2.0e-3) | N/A |

| 3 | (1.0e-3, 1.0e-2) | (1.0e-2, 5.0e-3) | (1.0e-3, 2.0e-2) | (1.0e-2, 5.0e-3) |

| 4 | (1.0e-5, 5.0e-4) | N/A | (1.0e-5, 1.0e-3) | N/A |

Example 2.

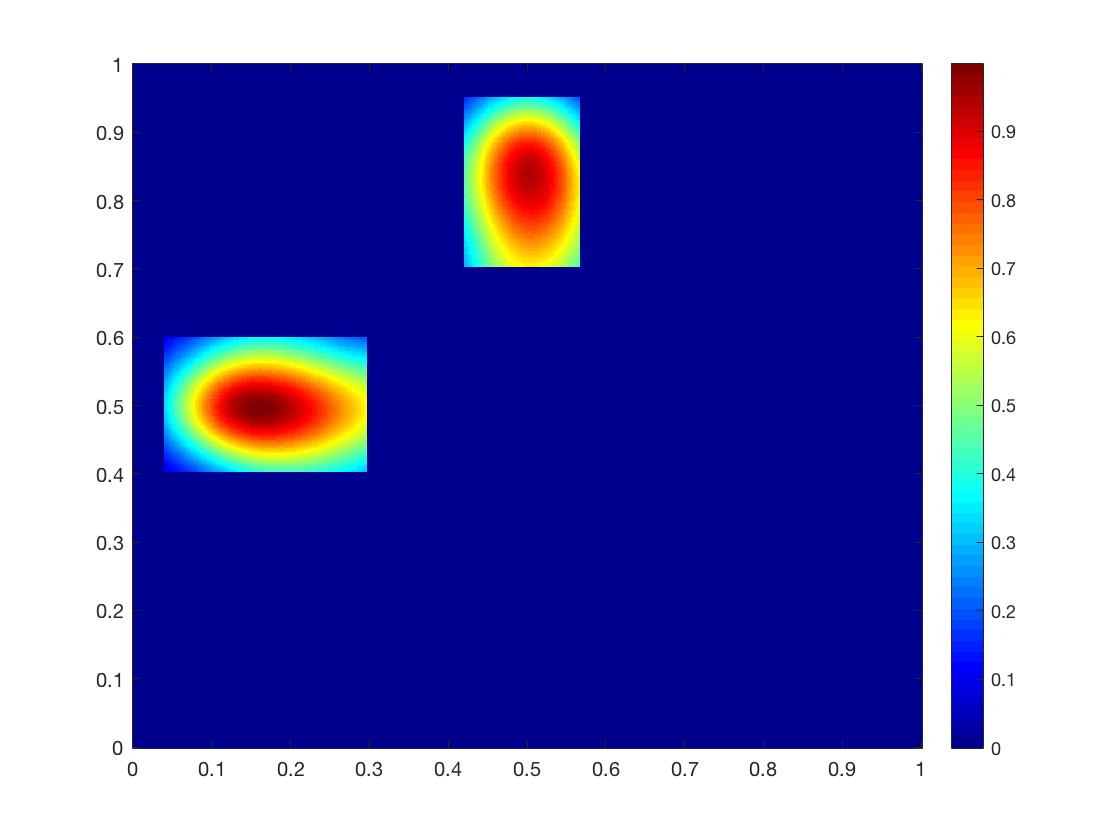

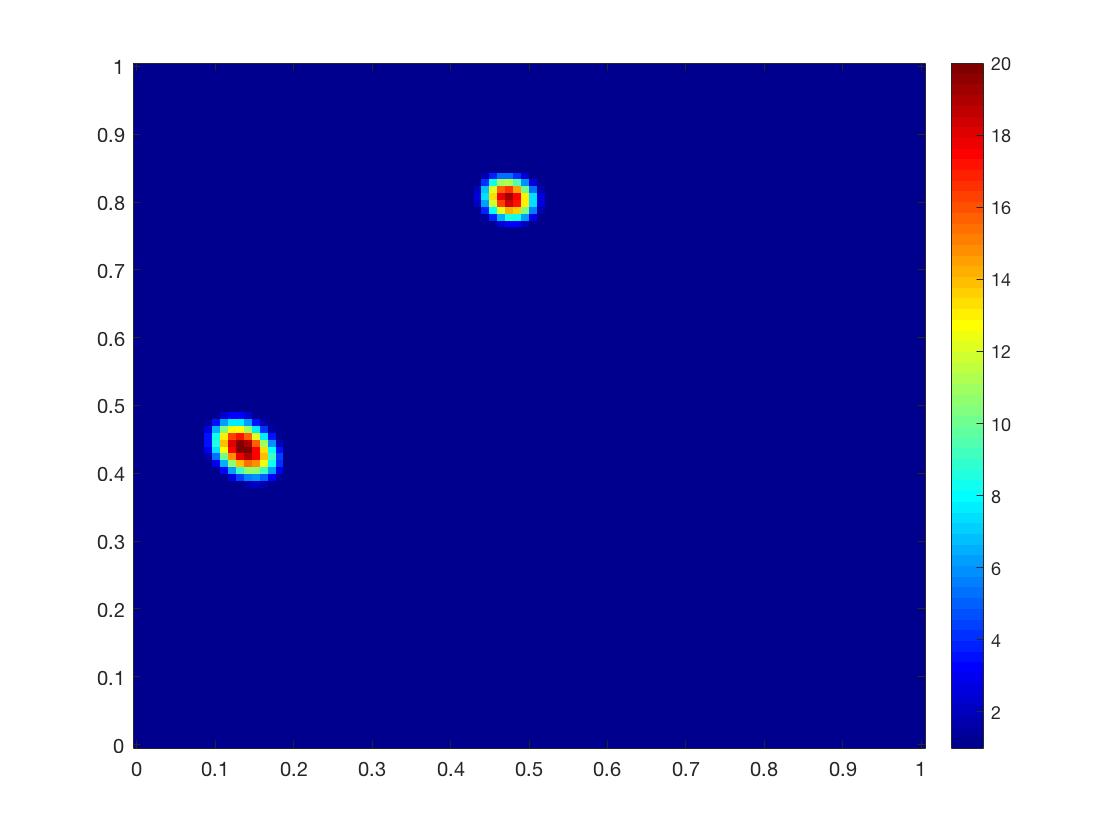

We implement the algorithm to reconstruct the discontinuous diffusion coefficient with two inclusions. We assume that is known and equal to the background coefficient in this example. One set of data is measured to locate two inclusions of , which are centered at and respectively and of width . is taken to be inside the regions as shown in Fig. 3(a).

The reconstructions for using exact measurements and measurements with noise are shown in Fig. 3. In the first stage, the DSM gives the index function that separates these two inclusions well using only one set of data as in Fig. 3(b), while some inhomogeneity is observed in the background. This phenomenon comes from the ill-posedness of inverse medium problems and also the oscillation of fundamental solutions used in the DSM. Even so, the approximations in Fig. 3(b) and 3(f) still provide the basic modes of the inhomogeneity. We can identify that there are two inclusions, and capture the subdomain for the second stage in Figs. 3(c) and 3(g). The least-squares formulation with mixed regularization significantly improves the reconstruction: the locations of both inclusions are captured better with clear background and accurate size in Figs. 3(d), 3(h). Comparing with Figs. 3(d) and 3(h), one can observe that the reconstruction deteriorates only slightly in that the left inclusion shrinks a little bit when the noise level increases from 0 to . This example verifies that the two-stage algorithm is very robust with respect to the data noise.

Example 3.

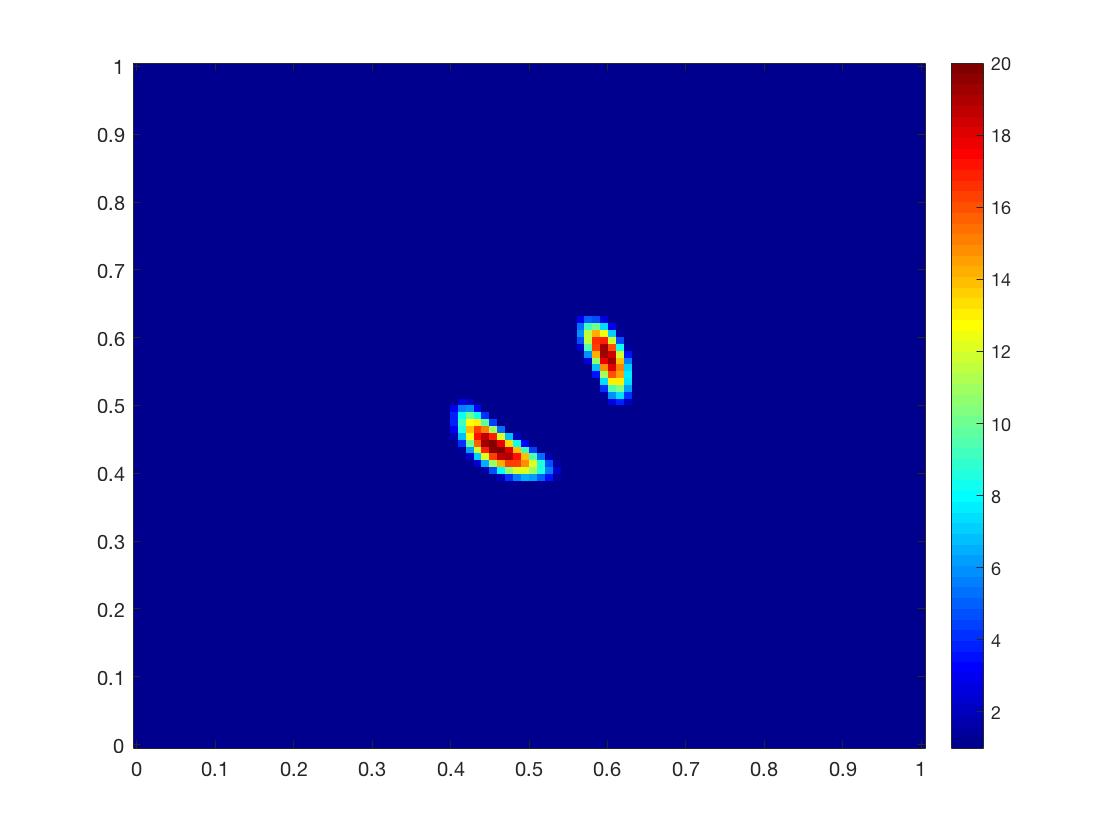

In this example we reconstruct two inclusions of diffusion coefficient that stay very close to each other. The two inclusions of diffusion coefficient are centered at and respectively and of width as shown in Fig. 4(a). The coefficient is in both regions.

The two inclusions in Example 2 are relatively far from each other, while in Example 3 we consider the case when two inclusions are quite close to each other, which is more challenging as it would be difficult to distinguish these two separated inclusions and reconstruct their locations and magnitudes precisely. As shown in Fig. 4(b), the index function from DSM presents limited information on the diffusion coefficient with only one set of data, and shows one connected inclusion. With noise, the index function is blurred a lot as shown in Fig. 4(f). In both noisy and noiseless cases, only one subdomain can be detected from this index function from the first stage. The second stage still presents well separated reconstructions with the size and magnitude that match the exact diffusion coefficient well as shown in Fig. 4(d). For the case with noise, this two-stage algorithm also gives satisfying reconstruction in Fig. 4(h). Compared with Fig. 4(d), it is observable that the left inclusion moves towards x-axis and elongates a little bit, while we can still tell the sizes and locations of two inclusions from the reconstruction. This shows that the least-squares method provides much more details than DSM and is relatively robust with respect the the noise in the measurement.

Note that for the noise level higher than , the DSM can not provide a feasible initial guess for the least-squares method in the second stage with only one set of data, but with an initial guess that reflects the mode of the true coefficient, the least-squares method in the second stage has great tolerance of noise as shown in other examples.

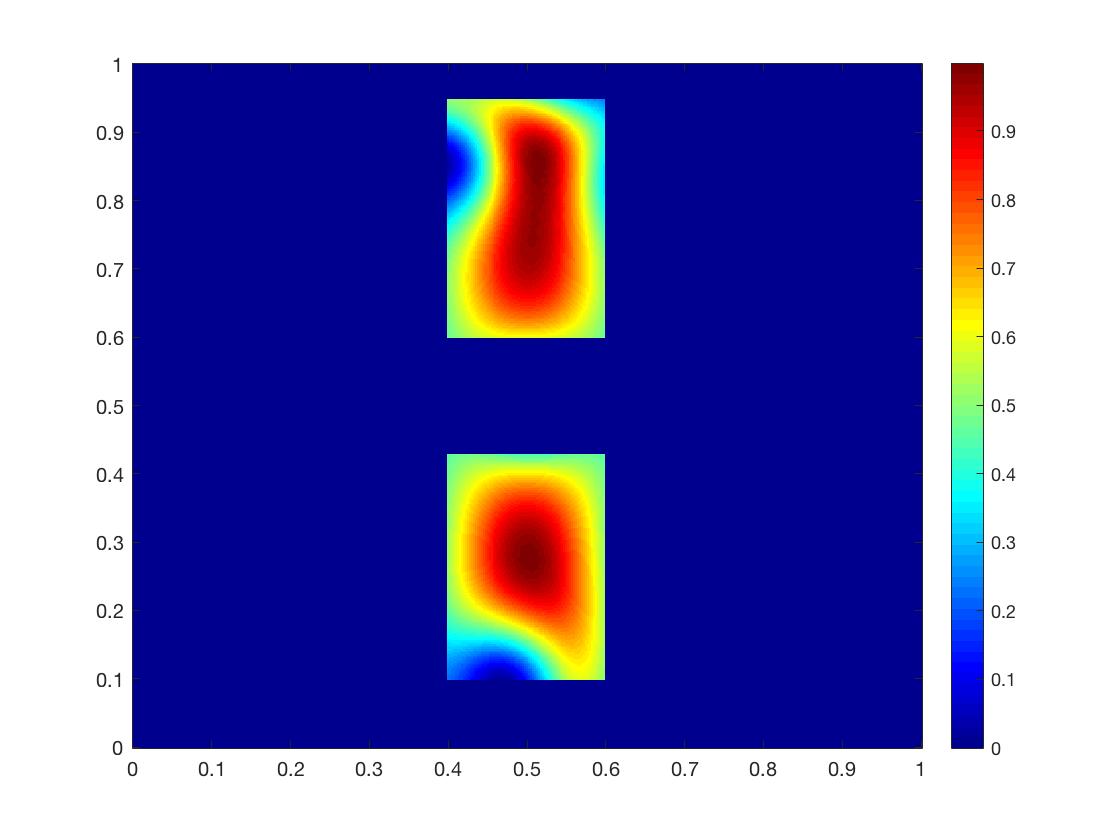

Example 4.

With this example, we reconstruct and simultaneously, with two inclusions for each coefficient. The inclusions are in the following scenario: The inclusions of diffusion coefficient are of width and centered at , respectively, and the magnitude inside the region is ; The inclusions of absorption coefficient are of width and centered at , respectively, and the magnitude inside the region is , as shown in Figs. 5(a) and 5(e).

In Example 1, we have one inclusion for coefficients and each, and it is shown that this algorithm can reconstruct both medium coefficients well. Example 4 is more challenging than Example 1, as the existence of two inclusions for each coefficient will influence the reconstruction of the other coefficient. As shown in Figs. 5(b) and 5(f), the index functions separate these inclusions well and give a rough approximation for their locations with only one set of data. However, it can be observed that the maximal points of index functions actually differ from the exact coefficients, and one can not capture the information of inclusions by simply taking square of the index functions. In the second stage of the proposed algorithm, the locations are modified as shown in Figs. 5(d) and 5(h). When we consider the case with noise, DSM provides blurry approximations as shown in Figs. 6(b) and 6(f). In the second stage of reconstruction, Figs. 6(d) and 6(h) present results almost the same as Figs. 5(d) and 5(h), which once again prove the robust performance of the least-squares method.

Example 5.

In this example we reconstruct diffusion coefficient with ring-shaped square inclusion as shown in Fig. 7(a) with two sets of measurement. The outer and inner side length of the ring are and , and the rectangle ring is centered at . The coefficient is taken to be inside the region.

We use two sets of measurement from different directions for the two-stage least-squares method. For this challenging case, the index function from the DSM only reflects an approximated location of inclusion as in Fig. 7(b), and we have no clue about the shape of inclusion from only DSM reconstruction. With two sets of measurement, the least-squares formulation can reconstruct the edges of the ring-shape inclusion as shown in Fig. 7(d). After adding noise, one has the reconstruction (see Fig. 7(h)) that is very similar to the result without noise, which shows the approximation is quite stable with respect to the noise.

References

- [1] S. R. Arridge, Optical tomography in medical imaging, Inverse Problems, 15(2):R41, 1999.

- [2] A. P. Gibson, J. C. Hebden, and S. R. Arridge, Recent advances in diffuse optical imaging, Phys. Med. Biol., 50(4):R1, 2005.

- [3] O. Dorn, H. Bertete-Aguirre, J. G. Berryman, and G. C. Papanicolaou, A nonlinear inversion method for 3d electromagnetic imaging using adjoint fields, Inverse Problems, 15(6):1523, 1999.

- [4] G. Bao and P. Li, Inverse medium scattering problems for electromagnetic waves, SIAM J. Appl. Math., 65(6):2049–2066, 2005.

- [5] M. Cheney, D. Isaacson, and J. C. Newell, Electrical impedance tomography, SIAM Rev., 41(1):85–101, 1999.

- [6] K. Ito and B. Jin, Inverse problems: Tikhonov theory and algorithms, World Scientific, Hackensack, NJ, 2014.

- [7] R. V. Kohn and M. Vogelius, Relaxation of a variational method for impedance computed tomography, Commun. Pure Appl. Math., 40(6):745–777, 1987.

- [8] A. Wexler, B. Fry, and M. R. Neuman, Impedance-computed tomography algorithm and system, Appl. Opt., 24(23):3985–3992, 1985.

- [9] E. Chung, K. Ito and M. Yamamoto, Least square formulation for ill-posed inverse problems and applications, Appl. Anal., 1–15, 2021.

- [10] R. Lattes, J.L. Lions, The method of quasi-reversibility. applications to partial differential equations, Elsevier, New York, 1969.

- [11] G. Tusnady, I. Csiszar, Information geometry and alternating minimization procedures, Statistics and Decisions, Supp.1:205–237, 1984.

- [12] C. L. Byrne, Iterative optimization in inverse problems, CRC Press, 2014.

- [13] D. C. Jespersen, A least squares decomposition method for solving elliptic equations, Math. Comput., 31(140):873–880, 1977.

- [14] P. B. Bochev and M. D. Gunzburger, Finite element methods of least-squares type, SIAM Rev., 40(4):789–837, 1998.

- [15] A. I. Pehlivanov, G. F. Carey, and R. D. Lazarov, Least-squares mixed finite elements for second-order elliptic problems, SIAM J. Numer. Anal., 31(5):1368–1377, 1994.

- [16] P. B. Bochev and M. D. Gunzburger, Least-squares finite element methods, volume 166, Springer Science & Business Media, 2009.

- [17] K. Ito, B. Jin, and J. Zou, A two-stage method for inverse acoustic medium scattering, J. Comput. Phys., 2013, 237: 211–223, 2012.

- [18] Y. T. Chow, K. Ito, and J. Zou, A direct sampling method for electrical impedance tomography, Inverse Problems, 30(9):095003, 2014.

- [19] Y. T. Chow, F. Han, and J. Zou, A direct sampling method for simultaneously recovering inhomogeneous inclusions of different nature, arXiv preprint arXiv:2005.05499, 2020.

- [20] B. Harrach, On uniqueness in diffuse optical tomography, Inverse Problems, 25(5):055010, 2009.

- [21] L. M. Bregman, The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming, USSR Comput. Math. and Math. Phys., 7(3):200–217, 1967.

- [22] V. Girault and P. A. Raviart, Finite element methods for Navier-Stokes equations: theory and algorithms, volume 5, Springer Science & Business Media, 2012.

- [23] J. Virieux, Sh-wave propagation in heterogeneous media: Velocity-stress finite-difference method, Geophysics, 49(11):1933–1942, 1984.

- [24] J. O. A. Robertsson, J. O. Blanch, and W. W. Symes, Viscoelastic finite-difference modeling, Geophysics, 59(9):1444–1456, 1994.

- [25] M. Burger and S. Osher, Convergence rates of convex variational regularization, Inverse Problems, 20(5):1411, 2004.

- [26] Y. T. Chow, K. Ito, K. Liu, and J. Zou, Direct sampling method for diffusive optical tomography, SIAM J. Sci. Comput., 37(4):1658-A1684, 2015.

- [27] Y. T. Chow, K. Ito, and J. Zou, A time-dependent direct sampling method for recovering moving potentials in a heat equation, SIAM J. Sci. Comput., 40(4):2720-A2748, 2018.