Learning with Noisy Ground Truth: From 2D Classification to 3D Reconstruction

Abstract.

Deep neural networks have been highly successful in data-intensive computer vision applications, but this success relies heavily on massive amounts of clean data. In real-world scenarios, clean data is often difficult to obtain. For example, in image classification and segmentation tasks, precisely annotating millions of samples is generally very expensive and time-consuming. In 3D static scene reconstruction, most NeRF-related methods rely on the foundational assumption of a static scene (e.g. consistent lighting conditions and persistent object positions), which is often violated in real-world scenarios. To address these problems, learning with noisy ground truth (LNGT) has emerged as an effective learning method and shows great potential. In this short survey, we propose a formal definition that unifies the analysis of LNGT across different machine learning tasks (classification and regression). Based on this definition, we propose a novel taxonomy that classifies existing work according to the error decomposition under the fundamental definition of machine learning. Further, we provide an in-depth analysis of the memorization effect and a discussion of potential future research opportunities from 2D classification to 3D reconstruction, in the hope of providing guidance to follow-up research.

1. Introduction

“Can machines think?” This question was raised in Alan Turing’s paper “Computing Machinery and Intelligence” (Turing, 2009). Considering a machine player in an “imitation game”, he stated that the best strategy for the machine is to try to provide answers that would naturally be given by a man. In other words, the ultimate goal of machines is to be as intelligent as humans. Over the past few decades, with the emergence of advanced models and algorithms (e.g. convolutional neural networks (CNNs) (Krizhevsky et al., 2012), transformers (Vaswani et al., 2017), GPTs (Radford et al., 2018)), large-scale datasets (e.g. ImageNet (Deng et al., 2009) with 1000 image classes), and powerful computing frameworks and devices (e.g. GPUs and distributed platforms), AI has rapidly approached human-like capabilities and now supports many areas of daily life, such as search engines, autonomous driving cars, industrial robots and the recently popular ChatGPT based on GPT-4 (Achiam et al., 2023).

Despite this prosperity, the superior performance of current deep neural networks (DNNs) owes much to the availability of large-scale, correctly annotated datasets, especially in supervised learning tasks. For example, image classification and segmentation typically assume a perfectly annotated large-scale training set. However, it is extremely time-consuming and expensive, and sometimes even impossible, to label a new dataset with fully correct annotations. Typically, creating a dataset requires two steps, data collection and annotation, which involve two kinds of noise discussed in the literature: feature noise and ground truth noise (Zhu and Wu, 2004). Feature noise corresponds to the corruption of input data features, while ground truth noise refers to a change of the ground truth from its actual value (e.g. in image classification, incorrectly assigning a dog label to a cat image). Both noise types can cause a significant decrease in performance, but ground truth noise is considered more harmful (Frénay and Verleysen, 2013), as the ground truth is unique for each sample while features are multiple. For example, in video classification, video data contain audio, script and vision features. The importance of each feature varies, while the ground truth label always has a significant impact.

To alleviate this problem, one may efficiently obtain data with lower-quality annotations through online keyword queries (Li et al., 2017). Similarly, the expensive annotation process can be crowdsourced with the help of platforms such as Amazon Mechanical Turk (http://www.mturk.com) and Crowdflower (http://crowdflower.com), which effectively decreases the cost. Another widely used approach is to annotate data with automated systems. However, all these approaches inevitably introduce noisy ground truth. Moreover, noisy ground truth can occur even with expert annotators, for example in brain images (https://adni.loni.usc.edu/). Even domain experts make mistakes because data can be extremely complex to classify correctly (Lloyd et al., 2004). Also, label noise can be injected intentionally to protect patients’ privacy in image classification (van den Hout and van der Heijden, 2002).

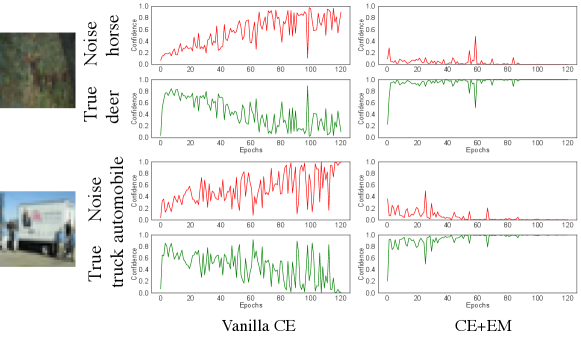

In classification tasks, when a DNN model is trained on a noisy training set consisting of clean and mislabeled samples, it has been widely observed that the model outputs tend to fluctuate severely (Lu, 2022) before the model memorizes the noise. In Figure 1, we plot the softmax output probabilities corresponding to the noisy label and the true label throughout training. During vanilla training with the cross-entropy (CE) loss, the outputs oscillate strongly. Take the first row as an example, where a deer image is mislabeled as a horse. Early in training, the model assigns a high probability to the deer class, since the clean deer samples encourage the model to predict this image as a deer. However, as learning continues, the deer samples with horse labels pull the model back toward predicting this deer image as a horse. Consequently, the model memorizes the wrong labels, which degrades the prediction accuracy of the classifier. Lu (2022) showed experimentally that simply adding a weighted entropy term, which minimizes the prediction entropy, restricts the randomness of model predictions and allows the model to produce consistent and correct predictions. The right column in Figure 1 shows the results after adding the entropy term to CE. It can be observed that the output probability of the latent true label becomes more stable than with CE alone.
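The entropy-regularized objective described above can be sketched as follows. This is a minimal illustration, assuming the objective is the cross entropy on the given label plus a weight `alpha` times the Shannon entropy of the softmax output; the function names and the value of `alpha` are hypothetical and are not taken from (Lu, 2022).

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ce_with_entropy(logits, label, alpha=0.5):
    """Cross entropy on the (possibly noisy) label plus alpha * prediction entropy.

    alpha is an illustrative weight, not a value from the cited work.
    """
    probs = softmax(logits)
    eps = 1e-12
    ce = -math.log(probs[label] + eps)
    entropy = -sum(p * math.log(p + eps) for p in probs)
    return ce + alpha * entropy

# A confident prediction is penalized less than a near-uniform one.
confident = ce_with_entropy([4.0, 0.0, 0.0], label=0)
uncertain = ce_with_entropy([0.2, 0.0, 0.0], label=0)
```

Minimizing the added entropy term pushes the softmax output toward a single class, which is the mechanism the paragraph credits for the more stable curves in Figure 1.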

In addition to the extensive use of DNNs in 2D classification tasks, emerging 3D applications of novel view synthesis, in fields such as robotics for action planning, 3D scene reconstruction, and AR/VR, are gradually being explored with DNNs. Specifically, 3D scene reconstruction methods such as Neural Radiance Fields (NeRF) (Mildenhall et al., 2020; Barron et al., 2022) and 3D Gaussian Splatting (3DGS) (Kerbl et al., 2023) have greatly propelled the development of novel view synthesis. For example, NeRF-based methods have recently revolutionized this classical 3D task by storing 3D representations within the weights of a neural network. These representations are optimized by back-propagating image differences. When the fields store view-dependent radiance and volumetric rendering is employed, 3D scenes can be captured with photo-realistic accuracy. However, these methods are built upon certain critical assumptions, including consistent lighting conditions and persistent object positions. These assumptions are frequently violated in real-world scenarios, which leads to artifacts and significant degradation in rendering quality. Thus, a popular recent challenge in novel view synthesis is reconstructing the static, clean scene from noisy images containing distractors, which violate the static-scene assumption. In this survey, we show that this problem is similar to learning with noisy labels in 2D classification and propose a simple idea to solve it.

Contributions of this survey can be summarized as follows:

- We explore and connect the memorization effect of LNGT in 2D classification to 3D reconstruction. To the best of our knowledge, this is the first work to investigate the memorization effect in the optimization process of 3D scene reconstruction (e.g. NeRF and 3DGS).

- We provide a formal definition of LNGT, which naturally connects to the classic definition of machine learning. The definition is not only general enough to include existing LNGT works but also specific enough to clarify what the goal of LNGT is and how we can solve it.

- Based on our definition, we point out that the core issue of LNGT is the unreliable empirical risk minimizer, which is analyzed based on error decomposition in classic machine learning. This provides insights for improving LNGT in a more organized and systematic way and helps us perform an extensive literature review.

2. Memorization Effect

2.1. 2D Classification

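Supervised Classification. Consider a supervised classification problem with $K$ classes. Let $\mathcal{X} \subseteq \mathbb{R}^d$ be the input space and $\mathcal{Y} = \{0,1\}^K$ be the ground-truth label space in a one-hot manner. In practice, the joint distribution $\mathcal{P}$ over $\mathcal{X} \times \mathcal{Y}$ is unknown. We have a training set $D = \{(x_i, y_i)\}_{i=1}^{N}$ whose samples are independently drawn from the joint distribution $\mathcal{P}$. Assuming a mapping function class $\mathcal{F}$ wherein each $f: \mathcal{X} \rightarrow \mathbb{R}^K$ maps the input space to a $K$-dimensional score space, we seek $f^* \in \mathcal{F}$ that minimizes the empirical risk $\frac{1}{N}\sum_{i=1}^{N} \ell(y_i, f(x_i))$ for a certain loss function $\ell$.

Classification with Noisy Labels. Our goal is to learn from a noisy training distribution $\mathcal{P}_{\eta}$ where the labels are corrupted, with probability $\eta$, from their true distribution $\mathcal{P}$. Given a noisy training set $\tilde{D} = \{(x_i, \tilde{y}_i)\}_{i=1}^{N}$, the observable noisy label $\tilde{y}_i$ has a probability of $\eta$ to be incorrect. Suppose the mapping function $f$ is a deep neural network classifier parameterized by $\Theta$. $f$ maps an input $x_i$ to $K$-dimensional logits $z_i = f(x_i, \Theta)$. We obtain the conditional probability of each class by using a softmax function $\mathcal{S}(\cdot)$, thus $p_i = \mathcal{S}(z_i)$. Then the empirical risk on $\tilde{D}$ using the cross-entropy loss is

$$\mathcal{L}_{ce} = \frac{1}{N}\sum_{i=1}^{N} \ell_{ce}(\tilde{y}_i, p_i) = -\frac{1}{N}\sum_{i=1}^{N} \tilde{y}_i^{\top} \log p_i. \tag{1}$$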

When the cross-entropy risk in Equation (1) is directly optimized by stochastic gradient descent (SGD), DNNs have been observed to eventually fit the entire training set, including the mislabeled samples (see the rightmost column of Fig. 2), resulting in degraded test performance in the later stage of training. In addition, the clean samples tend to have smaller loss values than the mislabeled samples in the early stage (Lu et al., 2022b). We analyze the normalized loss distribution over different training epochs in the top row of Fig. 2. Intriguingly, the two distributions are merged at initialization, then start to separate, but merge again after a certain point.

To alleviate the impact of noisy labels in the training data, Bootstrap (Reed et al., 2015a) generates a soft target by interpolating between the original noisy label and the model prediction, $\beta \tilde{y}_i + (1-\beta) p_i$, where $\tilde{y}_i$ is the one-hot noisy label of sample $i$, $p_i$ is its softmax output, and $\beta \in [0, 1]$ weights the degree of interpolation. The cross-entropy loss using Bootstrap thus becomes

$$\mathcal{L}_{bs} = -\frac{1}{N}\sum_{i=1}^{N} \big(\beta \tilde{y}_i + (1-\beta) p_i\big)^{\top} \log p_i. \tag{2}$$

A static weight $\beta$ is applied as an approximate measure for the correction of a hypothetical noisy label. M-correction (Arazo et al., 2019b) instead makes the weight dynamic for different samples, i.e., it uses a noise model to weight each sample individually:

$$\mathcal{L}_{mc} = -\frac{1}{N}\sum_{i=1}^{N} \big(w_i \tilde{y}_i + (1-w_i) p_i\big)^{\top} \log p_i. \tag{3}$$

Here $w_i$ is dynamically set to the posterior probability that sample $i$ is clean, conditioned on its loss value; the Gaussian Mixture Model (GMM) that provides this posterior is estimated after each training epoch using the normalized cross-entropy loss of each sample. Thus, correct samples rely on their given label ($w_i$ is large), while incorrect ones let their loss be dominated by their class prediction or predicted probabilities ($1 - w_i$ is large). In the early mature stage of training, the CNN model should provide a good estimate of the true class for noisy samples, as shown in Fig. 2 at epoch 45.
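The soft-target losses above can be illustrated with a small sketch. It assumes a single sample, a one-hot noisy label, and a softmax output given as a plain list; the helper name `soft_target_ce` and the weight values are hypothetical. A static weight plays the role of the Bootstrap weight, and a per-sample weight plays the role of the dynamic M-correction weight.

```python
import math

def soft_target_ce(probs, noisy_onehot, weight):
    """Cross entropy against weight * noisy label + (1 - weight) * prediction."""
    eps = 1e-12
    target = [weight * y + (1.0 - weight) * p for y, p in zip(noisy_onehot, probs)]
    return -sum(t * math.log(p + eps) for t, p in zip(target, probs))

probs = [0.7, 0.2, 0.1]          # model prediction, favors class 0
noisy_label = [0.0, 1.0, 0.0]    # given label says class 1

plain_ce = soft_target_ce(probs, noisy_label, weight=1.0)     # weight 1 recovers plain CE
static_loss = soft_target_ce(probs, noisy_label, weight=0.8)  # illustrative static weight
distrusted = soft_target_ce(probs, noisy_label, weight=0.1)   # sample judged likely noisy
```

When the weight on the given label is small, the target is dominated by the model prediction, so a sample whose label disagrees with the prediction contributes a much smaller loss than under plain cross entropy.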

2.2. 3D Scene Reconstruction

Neural Radiance Fields (NeRF) (Mildenhall et al., 2020) made a breakthrough in novel view synthesis and is capable of synthesizing photo-realistic images at arbitrary views. NeRF represents a 3D scene as a continuous radiance field and synthesizes images with differentiable volumetric rendering. Recently, 3D Gaussian Splatting (Kerbl et al., 2023) has emerged as another promising paradigm for novel view synthesis, which synthesizes an image by rendering a set of learnable Gaussian points. Despite this progress in novel view synthesis, the aforementioned methods are limited to static scenes with constant lighting conditions. They struggle with multi-view images that contain distractors.

Existing novel view synthesis methods represent a 3D scene as a parameterized model , while facilitating a differentiable rendering technique to render an image as following:

| (4) |

where is the image pose. Concretely, Neural Radiance Fields (Mildenhall et al., 2020) leverages the Multilayer Perceptron (MLP) and volumetric rendering, whereas 3D Gaussian Splatting (Kerbl et al., 2023) is built upon a collection of learnable Gaussian points and splatting rasterization. However, both of them optimize a loss between the ground truth image and rendered image:

| (5) |

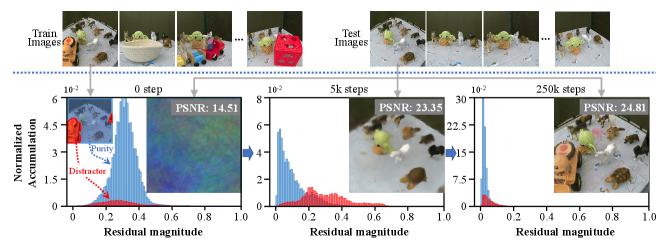

where and are the rendered color and ground truth color at pixel , respectively. In the training of NeRF and 3DGS with multi-view images containing distractors (noise), we observed the similar phenomenon of memorization effect as in classification task. This phenomenon refers to the parameters initially fitting purity and excluding distractor pixels in the early stages of training, gradually overfitting to distractor pixels in the later stages, as evidenced by the rendered image and histogram in Fig. 3 (a). It degrades the quality of rendered image in novel views. Most of the 3D reconstruction methods do not consider the case of distractor noise, while the most related work is RoubstNeRF (Sabour et al., 2023).

To improve on this, we can simply generate a dynamic weight $w_{\mathbf{r}}$ that indicates the distractor pixels, similar to Equation (3). We treat the noisy ground truth color $C(\mathbf{r})$ as the analogue of the noisy ground truth label and the rendered color $\hat{C}(\mathbf{r})$ as the analogue of the predicted probabilities. The loss of the new mask-NeRF is then

$$\mathcal{L}_{mask} = \sum_{\mathbf{r}} \ell\big(\hat{C}(\mathbf{r}),\ w_{\mathbf{r}}\, C(\mathbf{r}) + (1 - w_{\mathbf{r}})\, \hat{C}(\mathbf{r})\big). \tag{6}$$

Similar to M-correction in classification, $w_{\mathbf{r}}$ leverages the memorization effect to effectively prevent the model from overfitting to distractors, keeping the position of the red bars unchanged as much as possible. As in classification, the early mature stage (5k steps) enables a clear distinction between purity and distractor pixels. Subsequently, we can promote the fitting of purity pixels and suppress the learning of distractor pixels throughout the Mip-NeRF 360 training process to achieve sharp and clean view rendering in a noisy input scenario. To estimate $w_{\mathbf{r}}$, we can use a GMM or another unsupervised model to fit the loss distribution and differentiate the clean pixels from the distractor (noisy) pixels. Results for one case (BabyYoda) with a GMM on the RobustNeRF dataset (Sabour et al., 2023) are shown in Fig. 3 (b). More qualitative comparisons with existing methods are shown in Fig. 4. This research direction is barely explored in the 3D reconstruction area, and more work needs to be done to make the algorithm more robust.
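The weight estimation described above can be sketched with a two-component Gaussian mixture fitted to per-pixel losses. This is only an illustration under stated assumptions: the losses are a short hand-written list rather than real rendering residuals, the mixture is fitted with a plain EM loop written for this example, and all function names are hypothetical. The weight of each pixel is taken to be the posterior probability of the low-loss component.

```python
import math

def component_densities(v, means, variances, mix):
    """Weighted Gaussian density of value v under each of the two components."""
    return [
        mix[k] * math.exp(-0.5 * (v - means[k]) ** 2 / variances[k])
        / math.sqrt(2.0 * math.pi * variances[k])
        for k in range(2)
    ]

def fit_two_gaussians(values, iters=50):
    """Plain EM for a 1D two-component Gaussian mixture."""
    lo, hi = min(values), max(values)
    means = [lo, hi]                                   # start at the extremes
    variances = [max((hi - lo) ** 2 / 4.0, 1e-6)] * 2
    mix = [0.5, 0.5]
    for _ in range(iters):
        # E-step: responsibility of each component for each value
        resp = []
        for v in values:
            dens = component_densities(v, means, variances, mix)
            total = sum(dens) + 1e-300
            resp.append([d / total for d in dens])
        # M-step: re-estimate mixture weight, mean and variance per component
        for k in range(2):
            nk = sum(r[k] for r in resp) + 1e-12
            means[k] = sum(r[k] * v for r, v in zip(resp, values)) / nk
            var = sum(r[k] * (v - means[k]) ** 2 for r, v in zip(resp, values)) / nk
            variances[k] = max(var, 1e-6)
            mix[k] = nk / len(values)
    return means, variances, mix

def clean_pixel_weights(pixel_losses):
    """Posterior probability that each pixel belongs to the low-loss component."""
    means, variances, mix = fit_two_gaussians(pixel_losses)
    clean = 0 if means[0] <= means[1] else 1
    weights = []
    for v in pixel_losses:
        dens = component_densities(v, means, variances, mix)
        weights.append(dens[clean] / (sum(dens) + 1e-300))
    return weights

# Toy per-pixel losses: six small (clean) values and three large (distractor) values.
pixel_losses = [0.010, 0.020, 0.015, 0.030, 0.025, 0.020, 0.60, 0.55, 0.70]
weights = clean_pixel_weights(pixel_losses)
plain_loss = sum(pixel_losses) / len(pixel_losses)
masked_loss = sum(w * l for w, l in zip(weights, pixel_losses)) / len(pixel_losses)
```

In this toy setting the distractor pixels receive weights near zero, so they contribute almost nothing to the masked loss. In practice a library mixture model could replace the hand-written EM loop.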

3. Formal Definition of LNGT

LNGT is a sub-area of machine learning. Before giving the formal definition of LNGT, let us recall how machine learning is defined in the existing literature.

Definition 3.1 (Machine Learning (Mitchell et al., 1997)).

A computer program is said to learn from experience $E$ with respect to some classes of task $T$ and performance measure $P$, if its performance at tasks in $T$, as measured by $P$, can improve with $E$.

The above definition can be generalized to a very wide range of practical applications. For example, consider an image classification task ($T$): a machine learning program improves its classification accuracy ($P$) through $E$ obtained by training on a large number of labeled images (e.g. ImageNet). Typically, existing machine learning applications, especially those using deep neural networks as in the example above, require many data samples with correct supervision information. However, this may be difficult or sometimes even impossible in real-world applications. LNGT is a special case of machine learning that targets good learning performance given noisy supervised information in the training set, which consists of examples of inputs $x_i$ along with their corresponding outputs $y_i$. Formally, we define LNGT in Definition 3.2.

Definition 3.2.

Learning with Noisy Ground Truth (LNGT) is a type of machine learning problem (specified by $E$, $T$ and $P$), where $E$ is a corrupted version of the invisible clean experience and consists of clean and wrong examples for the target task $T$.

In any machine learning problem, usually there are prediction errors and one cannot obtain perfect predictions. In this section, we illustrate the core issue of LNGT based on error decomposition in supervised machine learning (Bottou and Bousquet, 2007). This analysis applies to LNGT including classification and regression tasks.

3.1. Notations

Consider a learning task $T$. LNGT deals with a dataset $D = \{\tilde{D}_{train}, D_{test}\}$ consisting of a noisy training set $\tilde{D}_{train} = \{(x_i, \tilde{y}_i)\}_{i=1}^{N}$ and a clean testing set $D_{test}$. Let $p(x, y)$ be the ground truth joint probability distribution of input $x$ and output $y$, and let $\tilde{p}(x, \tilde{y})$ be the corrupted joint probability distribution of input $x$ and output $\tilde{y}$. Let $\hat{h}$ be the optimal hypothesis from $x$ to $y$. LNGT learns to discover $\hat{h}$ by fitting $\tilde{D}_{train}$ and testing on $D_{test}$. For clarity, let $D_c$ be the set of clean training samples (i.e. inputs with correct labels) and $D_m$ be the set of mislabeled training samples (i.e. inputs with wrong labels), with $N_c$ and $N_m$ samples respectively. We have $\tilde{D}_{train} = D_c \cup D_m$. Note that $D_c$ and $D_m$ are imaginary sets that are unobservable. We define them only for clarity of explanation.

To approximate $\hat{h}$, the LNGT model determines a hypothesis space $\mathcal{H}$ of hypotheses $h(\cdot; \theta)$, where $\theta$ denotes all the parameters used by $h$. An LNGT algorithm is an optimization strategy that searches $\mathcal{H}$ to find the $\theta$ that parameterizes the best $h \in \mathcal{H}$. The LNGT performance is measured by a loss function $\ell(h(x; \theta), y)$ defined over the prediction $h(x; \theta)$ and the observed output $y$ over the test set.

3.2. Empirical Risk Minimization with Error Decomposition

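Given a hypothesis $h$, we want to minimize its expected risk $R$, which is the loss measured with respect to $p(x, y)$. Specifically,

$$R(h) = \int \ell(h(x), y)\, dp(x, y) = \mathbb{E}\big[\ell(h(x), y)\big]. \tag{7}$$

As $p(x, y)$ is unknown, similar to regular machine learning tasks, the empirical risk, i.e., the average of sample losses over the noisy training set $\tilde{D}_{train}$ of $N$ samples,

$$R_{N}(h) = \frac{1}{N}\sum_{i=1}^{N} \ell(h(x_i), \tilde{y}_i), \tag{8}$$

is usually used as a proxy for $R(h)$, leading to empirical risk minimization (Mohri et al., 2018). However, in this case, minimizing $R_{N}(h)$ usually leads to an estimate of the corrupted distribution $\tilde{p}(x, \tilde{y})$, which can be very different from $p(x, y)$. Therefore, directly training models without any adjustment has been observed to lead to poor generalization performance (Zhang et al., 2018a). Here we can decouple $R_{N}(h)$ into $R_{N_c}(h)$ and $R_{N_m}(h)$, the empirical risks over the $N_c$ clean samples in $D_c$ and the $N_m$ mislabeled samples in $D_m$, so that $R_{N}(h) = \frac{N_c}{N} R_{N_c}(h) + \frac{N_m}{N} R_{N_m}(h)$. Since $D_c$ is a set of clean samples, finding a hypothesis that only minimizes $R_{N_c}(h)$ rather than $R_{N}(h)$ leads to a better estimate of $p(x, y)$. For better illustration, let

- $\hat{h} = \arg\min_{h} R(h)$ be the function that minimizes the expected risk;

- $h^{*} = \arg\min_{h \in \mathcal{H}} R(h)$ be the function in $\mathcal{H}$ that minimizes the expected risk;

- $h_{N} = \arg\min_{h \in \mathcal{H}} R_{N}(h)$ be the function in $\mathcal{H}$ that minimizes the empirical risk;

- $h_{N_c} = \arg\min_{h \in \mathcal{H}} R_{N_c}(h)$ be the function in $\mathcal{H}$ that only minimizes the empirical risk of $D_c$ rather than $\tilde{D}_{train}$ (we assume this state is achievable during learning).

As $\hat{h}$ is unknown, one has to approximate it by searching some $h \in \mathcal{H}$. $h^{*}$ is the best approximation for $\hat{h}$ in $\mathcal{H}$. $h_{N}$ is the best hypothesis in $\mathcal{H}$ obtained by minimizing the whole empirical risk $R_{N}(h)$, while $h_{N_c}$ is the optimal hypothesis in $\mathcal{H}$ that only minimizes $R_{N_c}(h)$. For simplicity, we assume that $\hat{h}$, $h^{*}$, $h_{N}$ and $h_{N_c}$ are unique. The total error can be decomposed as

$$\mathbb{E}\big[R(h_{N}) - R(\hat{h})\big] = \underbrace{\mathbb{E}\big[R(h^{*}) - R(\hat{h})\big]}_{\mathcal{E}_{app}(\mathcal{H})} + \underbrace{\mathbb{E}\big[R(h_{N_c}) - R(h^{*})\big]}_{\mathcal{E}_{est}(\mathcal{H}, N_c)} + \underbrace{\mathbb{E}\big[R(h_{N}) - R(h_{N_c})\big]}_{\mathcal{E}_{fit}(\mathcal{H}, N_m)},$$

where the expectation is with respect to the random choice of $\tilde{D}_{train}$. The approximation error $\mathcal{E}_{app}(\mathcal{H})$ measures how closely the functions in $\mathcal{H}$ can approximate the optimal hypothesis $\hat{h}$. The estimation error $\mathcal{E}_{est}(\mathcal{H}, N_c)$ measures the effect of minimizing the clean empirical risk $R_{N_c}(h)$ instead of the expected risk $R(h)$ within $\mathcal{H}$. The fitting error $\mathcal{E}_{fit}(\mathcal{H}, N_m)$ measures the effect of minimizing the full empirical risk $R_{N}(h)$ instead of only the clean empirical risk $R_{N_c}(h)$.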

3.3. Unreliable Empirical Risk Minimizer

As can be observed, the total error is influenced by $\mathcal{H}$ (hypothesis space), $N_c$ (number of samples in the clean set $D_c$) and $N_m$ (number of samples in the mislabeled set $D_m$). A special case is $N_m = 0$, where LNGT reduces to a regular learning problem.

Therefore, reducing the total error can be attempted from the perspectives of (1) data, which provides the clean samples $D_c$ and mislabeled samples $D_m$; (2) model, which determines $\mathcal{H}$; and (3) algorithms, which search for the optimal hypothesis that only fits $D_c$.

In LNGT, the model would easily fit all noisy samples. The empirical risk over the noisy training set may then be far from a good approximation of the expected risk, and the resultant empirical risk minimizer overfits. Indeed, this is the core issue of LNGT, i.e., the empirical risk minimizer is no longer reliable. Therefore, LNGT is much harder. A comparison of learning with clean data and noisy data is shown in Figure 5. Compared to learning with clean data, both the estimation error and the fitting error of LNGT increase.
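A toy example may make the unreliable empirical risk minimizer concrete. The sketch below is purely illustrative and rests on assumptions that are not part of the survey: inputs are uniform on [0, 1], the true label is 1 exactly when the input is at least 0.5, the hypothesis space is a small set of threshold classifiers, and the mislabeled samples are hand-placed just above the true boundary.

```python
def predict(threshold, x):
    """Threshold classifier: label 1 when x is at least the threshold."""
    return 1 if x >= threshold else 0

def empirical_risk(threshold, samples):
    """Fraction of samples whose observed label disagrees with the prediction."""
    return sum(predict(threshold, x) != y for x, y in samples) / len(samples)

def expected_risk(threshold):
    """True 0-1 risk when x ~ Uniform[0, 1] and the true label is 1 iff x >= 0.5."""
    return abs(threshold - 0.5)

# Hypothesis space: eleven thresholds 0.0, 0.1, ..., 1.0.
thresholds = [i / 10 for i in range(11)]

# Clean samples carry correct labels; mislabeled samples sit above the boundary
# but are observed with label 0 (hand-picked for illustration).
clean = [(0.05 + 0.1 * i, 1 if i >= 5 else 0) for i in range(10)]
mislabeled = [(0.55, 0), (0.55, 0), (0.65, 0), (0.65, 0)]
noisy = clean + mislabeled

h_clean = min(thresholds, key=lambda t: empirical_risk(t, clean))
h_noisy = min(thresholds, key=lambda t: empirical_risk(t, noisy))

# Gap in expected risk caused by also fitting the mislabeled samples.
fitting_gap = expected_risk(h_noisy) - expected_risk(h_clean)
```

Here the minimizer over the clean subset recovers the true boundary, while the minimizer over the full noisy set shifts the threshold to accommodate the wrong labels, so its expected risk is strictly larger.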

4. Solutions

Almost all existing works aim to reduce the learning errors to achieve noise robustness. We summarize them into three categories (see Fig. 6).

4.1. Reduce estimation error

Similar to learning with clean data, the estimation error can be reduced by increasing the number of samples. Therefore, some methods use prior knowledge to augment the training set. For example, Mixup (Zhang et al., 2018b) constructs virtual training samples by linearly combining the features and labels of two random samples. Nishi et al. (2021) evaluated multiple augmentation strategies and found that using one set of augmentations for loss modeling tasks and another set for learning is the most effective in LNGT. MixNN (Lu and He, 2021) dynamically mixes a sample with its nearest neighbors to generate synthetic samples for noise robustness. Other methods leverage unlabeled data to improve the performance of learning with noisy labels. For instance, Garg et al. (2021) augmented the training data with randomly labeled data and provided a theoretical analysis that ensures the true risk is lower. Iscen et al. (2022) utilized unlabeled data to enforce the consistency of model predictions, which improves performance. Combined with curriculum learning, more complex Mixup-based methods (Li et al., 2020a; Cordeiro et al., 2023; Nagarajan et al., 2024) have been proposed recently.
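The linear combination used by Mixup can be sketched in a few lines. The example below assumes features and one-hot labels stored as plain lists and a mixing coefficient drawn from a Beta distribution with both parameters equal to `alpha`; the value of `alpha` and the function name are illustrative choices.

```python
import random

def mixup(x1, y1, x2, y2, alpha=0.4, rng=random):
    """Build one virtual sample as a convex combination of two samples.

    alpha is an illustrative Beta parameter; rng may be the random module
    or a random.Random instance for reproducibility.
    """
    lam = rng.betavariate(alpha, alpha)
    mixed_x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    mixed_y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return mixed_x, mixed_y, lam

rng = random.Random(0)
x, y, lam = mixup([1.0, 0.0, 2.0], [1.0, 0.0],
                  [0.0, 4.0, 2.0], [0.0, 1.0], rng=rng)
```

Because the same coefficient mixes features and labels, the mixed label remains a valid probability vector, and a wrong label on one of the two samples only enters the target with partial weight.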

4.2. Reduce fitting error

These methods aim to prevent the model from overfitting to mislabeled samples.

4.2.1. Regularization

These methods implicitly restrict the model parameters or adjust the gradients to prevent the model from memorizing mislabeled samples. For example, Li et al. (2020b) proved that gradient descent with early stopping is an effective regularization for achieving robustness to label noise. Hu et al. (2019) added a regularizer that limits the distance between the model parameters and their initialization for noise robustness. ELR (Liu et al., 2020) estimates the target by temporal ensembling (Laine and Aila, 2016) and adds a regularization term to the cross-entropy loss to avoid memorization of mislabeled samples. NAL (Lu et al., 2022b) scales the gradients according to the cleanliness of different samples to achieve noise robustness.

4.2.2. Robust loss functions

These methods develop loss functions that are inherently robust to label noise, including DMI (Xu et al., 2019), MAE (Ghosh et al., 2017), GCE (Zhang and Sabuncu, 2018), SCE (Wang et al., 2019), NCE (Ma et al., 2020), TCE (Feng et al., 2021), GJS (Englesson and Azizpour, 2021) and CE+EM (Lu, 2022). They hypothesize noise models and then develop robust algorithms based on them. Two typical noise assumptions are symmetric and asymmetric label noise (Natarajan et al., 2013; Patrini et al., 2017), where the labels are corrupted by a noise transition matrix $T \in [0, 1]^{K \times K}$ (where $K$ is the number of classes in the classification task), i.e., $T_{ij} = P(\tilde{y} = j \mid y = i)$, where $y$ denotes the true label and $\tilde{y}$ denotes the noisy label. Suppose the noise rate is $\eta$. For symmetric noise, the flip probability to each of the other labels is constant, i.e., $T_{ii} = 1 - \eta$ and $T_{ij} = \frac{\eta}{K-1}$ for $i \neq j$. Asymmetric noise is a simulation of real-world label noise, where labels are only replaced by similar classes.
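As an illustration of the symmetric noise model, the sketch below builds a transition matrix and uses it to corrupt a list of labels. It assumes the common convention that the diagonal holds one minus the noise rate and the remaining mass is spread evenly over the other classes; the function names are hypothetical.

```python
import random

def symmetric_transition_matrix(num_classes, noise_rate):
    """Row i gives the distribution of the noisy label when the true label is i."""
    off_diagonal = noise_rate / (num_classes - 1)
    return [
        [1.0 - noise_rate if i == j else off_diagonal for j in range(num_classes)]
        for i in range(num_classes)
    ]

def corrupt_labels(labels, transition, rng):
    """Sample a noisy label for each true label from its row of the matrix."""
    classes = list(range(len(transition)))
    return [rng.choices(classes, weights=transition[y])[0] for y in labels]

rng = random.Random(0)
T = symmetric_transition_matrix(num_classes=10, noise_rate=0.4)
true_labels = [i % 10 for i in range(10000)]
noisy_labels = corrupt_labels(true_labels, T, rng)
flip_fraction = sum(a != b for a, b in zip(true_labels, noisy_labels)) / len(true_labels)
```

An asymmetric matrix can be plugged into the same `corrupt_labels` helper by placing the off-diagonal mass only on classes that are considered similar.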

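| Methods | Loss expression | Symmetric | Gradient |
| --- | --- | --- | --- |
| CE | $-\log p_y$ | No | $-\frac{1}{p_y}\nabla_{\theta} p_y$ |
| FL | $-(1-p_y)^{\gamma}\log p_y$ | No | $\big(\gamma (1-p_y)^{\gamma-1}\log p_y - \frac{(1-p_y)^{\gamma}}{p_y}\big)\nabla_{\theta} p_y$ |
| MAE | $2(1-p_y)$ | Yes | $-2\nabla_{\theta} p_y$ |
| RCE | $-A(1-p_y)$ | Yes | $A\,\nabla_{\theta} p_y$ |
| GCE | $\frac{1-p_y^{q}}{q}$ | No | $-p_y^{q-1}\nabla_{\theta} p_y$ |
| TCE | $\sum_{i=1}^{t}\frac{(1-p_y)^{i}}{i}$ | No | $-\sum_{i=1}^{t}(1-p_y)^{i-1}\nabla_{\theta} p_y$ |
| NCE | $\frac{\log p_y}{\sum_{k=1}^{K}\log p_k}$ | Yes | $\frac{1}{S}\frac{\nabla_{\theta} p_y}{p_y} - \frac{\log p_y}{S^{2}}\sum_{k=1}^{K}\frac{\nabla_{\theta} p_k}{p_k}$, with $S = \sum_{k=1}^{K}\log p_k$ |

Here $p_k = p(k \mid x; \theta)$ is the predicted probability of class $k$, $y$ is the given label, $\gamma$, $q$ and $t$ are the hyperparameters of FL, GCE and TCE, and $A < 0$ is the constant that replaces $\log 0$ in RCE.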

Given a symmetric noise rate $\eta$, a loss function $\mathcal{L}$ is proved to be noise-tolerant if it satisfies the following symmetric condition (Ghosh et al., 2017):

$$\sum_{k=1}^{K} \mathcal{L}(f(x), k) = C, \quad \forall x \in \mathcal{X},\ \forall f \in \mathcal{F}, \tag{9}$$

where $C$ is a constant and $\mathcal{F}$ is the hypothesis class. Writing $R_{\mathcal{L}}(f)$ and $R^{\eta}_{\mathcal{L}}(f)$ for the risk of $f$ under clean and noisy labels, respectively, we can easily obtain

$$R^{\eta}_{\mathcal{L}}(f) = \Big(1 - \frac{\eta K}{K-1}\Big) R_{\mathcal{L}}(f) + \frac{\eta C}{K-1}. \tag{10}$$

Since $1 - \frac{\eta K}{K-1} > 0$ whenever $\eta < \frac{K-1}{K}$, if $f^{*}$ is the global minimizer of $R_{\mathcal{L}}(f)$, then it is also the global minimizer of $R^{\eta}_{\mathcal{L}}(f)$. Therefore, a symmetric loss function is theoretically noise-tolerant if the global minimizer can be learned. However, reaching the global optimum is a strong assumption. In practice, many robust loss functions have been observed to suffer from underfitting on complicated datasets (Zhang and Sabuncu, 2018; Ma et al., 2020). We review the existing loss functions and derive their gradients in Table 1. The CE loss and focal loss (FL) (Lin et al., 2017) are not robust to noisy labels but have the advantage of sufficient learning ability. Both of them put more weight on the gradients of ambiguous (hard) samples. In contrast, MAE and Reverse CE (RCE) (Wang et al., 2019) are robust to noisy labels but make training more difficult, as they put the same weight on the gradients of all training samples. To balance learning sufficiency and noise robustness, a generalized version of the CE loss (GCE) (Zhang and Sabuncu, 2018) was proposed, which reduces to MAE and CE when $q = 1$ and $q \to 0$, respectively. Similarly, the Taylor cross entropy (TCE) loss (Feng et al., 2021) was proposed, which is also a generalized mixture of CE (when $t \to \infty$) and MAE (when $t = 1$). Recently, Ma et al. (2020) have demonstrated that any loss can be made robust to noisy labels by applying a simple normalization, e.g., normalized cross entropy (NCE). However, the normalization operation alters the gradient of the CE loss so that NCE no longer retains the original fitting ability. In Table 1, the gradient of NCE is weighted by an additional term that depends on the predicted probabilities of all classes. During training, this term may increase even when the predicted probability of the given label is fixed. When the term reaches its maximum value, the corresponding gradient reaches its minimum value, which hinders convergence and causes underfitting.
To solve this problem, Active Passive Loss (APL) (Ma et al., 2020) was proposed for both robust and sufficient learning by combining two loss terms.
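The symmetric condition can be checked numerically for a few of the losses discussed above. The sketch below uses the standard textbook forms of CE, MAE, GCE and NCE for a single prediction vector; the function names and the default value of `q` are illustrative. For each loss it sums the value over all possible labels and compares the sum across two different predictions.

```python
import math

def ce(p, y):
    """Cross entropy for prediction p and label index y."""
    return -math.log(p[y])

def mae(p, y):
    """Mean absolute error between p and the one-hot vector of y."""
    return sum(abs((1.0 if k == y else 0.0) - pk) for k, pk in enumerate(p))

def gce(p, y, q=0.7):
    """Generalized cross entropy; q is an illustrative hyperparameter."""
    return (1.0 - p[y] ** q) / q

def nce(p, y):
    """Normalized cross entropy: CE divided by the sum of CE over all labels."""
    return math.log(p[y]) / sum(math.log(pk) for pk in p)

def sum_over_labels(loss, p):
    """Left-hand side of the symmetric condition for one prediction vector."""
    return sum(loss(p, k) for k in range(len(p)))

p1 = [0.7, 0.2, 0.1]
p2 = [0.4, 0.35, 0.25]

mae_sums = (sum_over_labels(mae, p1), sum_over_labels(mae, p2))  # constant: 2 * (K - 1)
nce_sums = (sum_over_labels(nce, p1), sum_over_labels(nce, p2))  # constant: 1
ce_sums = (sum_over_labels(ce, p1), sum_over_labels(ce, p2))     # depends on the prediction
```

MAE and NCE give the same sum for both predictions, while CE does not, which matches the distinction between symmetric and non-symmetric losses drawn in the text. The same helpers also show the two limits of GCE: `q = 1` gives half of MAE, and a very small `q` approaches CE.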

4.2.3. Sample selection

The key idea is to select clean samples or reweight the samples during training. During the early learning stage, the samples with smaller loss values are more likely to be clean. Based on this observation, MentorNet (Jiang et al., 2018) pre-trains a mentor network that assigns weights to samples to guide the training of the student network. Decoupling (Malach and Shalev-Shwartz, 2017) updates two networks using the samples on which their predictions differ. Co-teaching (Han et al., 2018) trains two networks that each select small-loss samples within every mini-batch to train the other. Co-teaching+ (Yu et al., 2019) improves on it by updating the networks on disagreement data to keep the two networks diverged. Ren et al. (2018) reweighted samples based on their gradient directions. JoCoR (Wei et al., 2020) jointly trains two networks with the examples on which the two networks agree in their predictions. Co-matching (Lu et al., 2022a) uses a framework with two networks fed with inputs augmented at different strengths to achieve a better ensemble effect.
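The small-loss selection step shared by several of these methods can be sketched as follows. This is a simplified illustration, assuming per-sample losses for one mini-batch are already available from two networks and that a fixed fraction `keep_ratio` of samples is kept; the function names are hypothetical, and the actual methods schedule the kept fraction over training.

```python
def select_small_loss(losses, keep_ratio):
    """Indices of the keep_ratio fraction of samples with the smallest loss."""
    num_keep = max(1, int(round(keep_ratio * len(losses))))
    order = sorted(range(len(losses)), key=lambda i: losses[i])
    return sorted(order[:num_keep])

def exchange_selections(losses_a, losses_b, keep_ratio):
    """Each network is updated on the samples its peer considers small-loss."""
    update_a_on = select_small_loss(losses_b, keep_ratio)
    update_b_on = select_small_loss(losses_a, keep_ratio)
    return update_a_on, update_b_on

# Toy per-sample losses of two networks on the same mini-batch of six samples.
losses_a = [0.10, 2.30, 0.20, 1.90, 0.05, 0.30]
losses_b = [0.20, 0.15, 2.50, 1.70, 0.10, 0.25]
update_a_on, update_b_on = exchange_selections(losses_a, losses_b, keep_ratio=0.5)
```

Exchanging the selections means that a sample one network has already memorized can still be filtered out by its peer, which is the intuition behind training two networks jointly.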

4.2.4. Loss correction

4.3. Reduce both estimation error and fitting error

Some methods focus on correcting the noisy labels, so that the model is gradually refined. Reed et al. (2015b) proposed a bootstrapping method that modifies the loss with model predictions. Ma et al. (2018) improved the bootstrapping method by exploiting the dimensionality of feature subspaces to dynamically reweight the samples. Arazo et al. (2019a) improved bootstrapping with a dynamic weighting scheme based on unsupervised learning techniques. PLC (Zhang et al., 2020) progressively corrects the labels when the prediction confidence exceeds a dynamic threshold. SELC (Lu and He, 2022) gradually corrects noisy labels using ensemble predictions.

References


- Achiam et al. (2023) Josh Achiam, Steven Adler, Sandhini Agarwal, Lama Ahmad, Ilge Akkaya, Florencia Leoni Aleman, Diogo Almeida, Janko Altenschmidt, Sam Altman, Shyamal Anadkat, et al. 2023. Gpt-4 technical report. arXiv preprint arXiv:2303.08774 (2023).

- Arazo et al. (2019a) Eric Arazo, Diego Ortego, Paul Albert, Noel O’Connor, and Kevin McGuinness. 2019a. Unsupervised Label Noise Modeling and Loss Correction. In Proceedings of the 36th International Conference on Machine Learning. PMLR, 312–321.

- Arazo et al. (2019b) Eric Arazo, Diego Ortego, Paul Albert, Noel O’Connor, and Kevin McGuinness. 2019b. Unsupervised Label Noise Modeling and Loss Correction. In Proceedings of the 36th International Conference on Machine Learning. PMLR, 312–321.

- Barron et al. (2022) Jonathan T Barron, Ben Mildenhall, Dor Verbin, Pratul P Srinivasan, and Peter Hedman. 2022. Mip-NeRF 360: Unbounded anti-aliased neural radiance fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5470–5479.

- Bottou and Bousquet (2007) Léon Bottou and Olivier Bousquet. 2007. The tradeoffs of large scale learning. Advances in neural information processing systems 20 (2007).

- Cordeiro et al. (2023) Filipe R Cordeiro, Ragav Sachdeva, Vasileios Belagiannis, Ian Reid, and Gustavo Carneiro. 2023. LongReMix: Robust learning with high confidence samples in a noisy label environment. Pattern Recognition 133 (2023), 109013.

- Deng et al. (2009) Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. 2009. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition. Ieee, 248–255.

- Englesson and Azizpour (2021) Erik Englesson and Hossein Azizpour. 2021. Generalized Jensen-Shannon Divergence Loss for Learning with Noisy Labels. Advances in Neural Information Processing Systems 34 (2021).

- Feng et al. (2021) Lei Feng, Senlin Shu, Zhuoyi Lin, Fengmao Lv, Li Li, and Bo An. 2021. Can cross entropy loss be robust to label noise?. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence. 2206–2212.

- Frénay and Verleysen (2013) Benoît Frénay and Michel Verleysen. 2013. Classification in the presence of label noise: a survey. IEEE transactions on neural networks and learning systems 25, 5 (2013), 845–869.

- Garg et al. (2021) Saurabh Garg, Sivaraman Balakrishnan, Zico Kolter, and Zachary Lipton. 2021. Ratt: Leveraging unlabeled data to guarantee generalization. In International Conference on Machine Learning. PMLR, 3598–3609.

- Ghosh et al. (2017) Aritra Ghosh, Himanshu Kumar, and PS Sastry. 2017. Robust loss functions under label noise for deep neural networks. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence. 1919–1925.

- Han et al. (2018) Bo Han, Quanming Yao, Xingrui Yu, Gang Niu, Miao Xu, Weihua Hu, Ivor Tsang, and Masashi Sugiyama. 2018. Co-teaching: Robust training of deep neural networks with extremely noisy labels. Advances in Neural Information Processing Systems 31 (2018).

- Hendrycks et al. (2018) Dan Hendrycks, Mantas Mazeika, Duncan Wilson, and Kevin Gimpel. 2018. Using trusted data to train deep networks on labels corrupted by severe noise. In NIPS. 10456–10465.

- Hu et al. (2019) Wei Hu, Zhiyuan Li, and Dingli Yu. 2019. Simple and effective regularization methods for training on noisily labeled data with generalization guarantee. arXiv preprint arXiv:1905.11368 (2019).

- Iscen et al. (2022) Ahmet Iscen, Jack Valmadre, Anurag Arnab, and Cordelia Schmid. 2022. Learning with Neighbor Consistency for Noisy Labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4672–4681.

- Jiang et al. (2018) Lu Jiang, Zhengyuan Zhou, Thomas Leung, Li-Jia Li, and Li Fei-Fei. 2018. Mentornet: Learning data-driven curriculum for very deep neural networks on corrupted labels. In International Conference on Machine Learning. 2304–2313.

- Kerbl et al. (2023) Bernhard Kerbl, Georgios Kopanas, Thomas Leimkühler, and George Drettakis. 2023. 3D gaussian splatting for real-time radiance field rendering. ACM Transactions on Graphics 42, 4 (2023).

- Krizhevsky et al. (2012) Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. 2012. Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems 25 (2012).

- Laine and Aila (2016) Samuli Laine and Timo Aila. 2016. Temporal ensembling for semi-supervised learning. arXiv preprint arXiv:1610.02242 (2016).

- Li et al. (2020a) Junnan Li, Richard Socher, and Steven CH Hoi. 2020a. DivideMix: Learning with noisy labels as semi-supervised learning. arXiv preprint arXiv:2002.07394 (2020).

- Li et al. (2020b) Mingchen Li, Mahdi Soltanolkotabi, and Samet Oymak. 2020b. Gradient descent with early stopping is provably robust to label noise for overparameterized neural networks. In International conference on artificial intelligence and statistics. PMLR, 4313–4324.

- Li et al. (2017) Wen Li, Limin Wang, Wei Li, Eirikur Agustsson, and Luc Van Gool. 2017. WebVision database: Visual learning and understanding from web data. arXiv preprint arXiv:1708.02862 (2017).

- Lin et al. (2017) Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. 2017. Focal loss for dense object detection. In Proceedings of the IEEE international conference on computer vision. 2980–2988.

- Liu et al. (2020) Sheng Liu, Jonathan Niles-Weed, Narges Razavian, and Carlos Fernandez-Granda. 2020. Early-Learning Regularization Prevents Memorization of Noisy Labels. Advances in Neural Information Processing Systems 33 (2020).

- Lloyd et al. (2004) Ricardo V Lloyd, Lori A Erickson, Mary B Casey, King Y Lam, Christine M Lohse, Sylvia L Asa, John KC Chan, Ronald A DeLellis, H Ruben Harach, Kennichi Kakudo, et al. 2004. Observer variation in the diagnosis of follicular variant of papillary thyroid carcinoma. The American journal of surgical pathology 28, 10 (2004), 1336–1340.

- Lu (2022) Yangdi Lu. 2022. Robust Approaches for Learning with Noisy Labels. Ph. D. Dissertation.

- Lu et al. (2022a) Yangdi Lu, Yang Bo, and Wenbo He. 2022a. An Ensemble model for combating label noise. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining. 608–617.

- Lu et al. (2022b) Yangdi Lu, Yang Bo, and Wenbo He. 2022b. Noise Attention Learning: Enhancing Noise Robustness by Gradient Scaling. Advances in Neural Information Processing Systems 35 (2022), 23164–23177.

- Lu and He (2021) Yangdi Lu and Wenbo He. 2021. MixNN: Combating Noisy Labels in Deep Learning by Mixing with Nearest Neighbors. In 2021 IEEE International Conference on Big Data (Big Data). IEEE, 847–856.

- Lu and He (2022) Yangdi Lu and Wenbo He. 2022. SELC: Self-ensemble label correction improves learning with noisy labels. arXiv preprint arXiv:2205.01156 (2022).

- Ma et al. (2020) Xingjun Ma, Hanxun Huang, Yisen Wang, Simone Romano, Sarah Erfani, and James Bailey. 2020. Normalized loss functions for deep learning with noisy labels. In International Conference on Machine Learning. PMLR, 6543–6553.

- Ma et al. (2018) Xingjun Ma, Yisen Wang, Michael E Houle, Shuo Zhou, Sarah Erfani, Shutao Xia, Sudanthi Wijewickrema, and James Bailey. 2018. Dimensionality-Driven Learning with Noisy Labels. In International Conference on Machine Learning. 3355–3364.

- Malach and Shalev-Shwartz (2017) Eran Malach and Shai Shalev-Shwartz. 2017. Decoupling "when to update" from "how to update". In Advances in Neural Information Processing Systems. 960–970.

- Mildenhall et al. (2020) Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2020. NeRF: Representing scenes as neural radiance fields for view synthesis.

- Mitchell et al. (1997) Tom M Mitchell et al. 1997. Machine learning.

- Mohri et al. (2018) Mehryar Mohri, Afshin Rostamizadeh, and Ameet Talwalkar. 2018. Foundations of machine learning. MIT press.

- Nagarajan et al. (2024) Bhalaji Nagarajan, Ricardo Marques, Eduardo Aguilar, and Petia Radeva. 2024. Bayesian DivideMix++ for Enhanced Learning with Noisy Labels. Neural Networks 172 (2024), 106122.

- Natarajan et al. (2013) Nagarajan Natarajan, Inderjit S Dhillon, Pradeep K Ravikumar, and Ambuj Tewari. 2013. Learning with noisy labels. Advances in Neural Information Processing Systems 26 (2013), 1196–1204.

- Nishi et al. (2021) Kento Nishi, Yi Ding, Alex Rich, and Tobias Hollerer. 2021. Augmentation strategies for learning with noisy labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8022–8031.

- Patrini et al. (2017) Giorgio Patrini, Alessandro Rozza, Aditya Krishna Menon, Richard Nock, and Lizhen Qu. 2017. Making deep neural networks robust to label noise: A loss correction approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1944–1952.

- Radford et al. (2018) Alec Radford, Karthik Narasimhan, Tim Salimans, Ilya Sutskever, et al. 2018. Improving language understanding by generative pre-training. (2018).

- Reed et al. (2015a) Scott E Reed, Honglak Lee, Dragomir Anguelov, Christian Szegedy, Dumitru Erhan, and Andrew Rabinovich. 2015a. Training Deep Neural Networks on Noisy Labels with Bootstrapping. In ICLR (Workshop).

- Reed et al. (2015b) Scott E Reed, Honglak Lee, Dragomir Anguelov, Christian Szegedy, Dumitru Erhan, and Andrew Rabinovich. 2015b. Training Deep Neural Networks on Noisy Labels with Bootstrapping. In ICLR (Workshop).

- Ren et al. (2018) Mengye Ren, Wenyuan Zeng, Bin Yang, and Raquel Urtasun. 2018. Learning to Reweight Examples for Robust Deep Learning. In International Conference on Machine Learning. 4334–4343.

- Sabour et al. (2023) Sara Sabour, Suhani Vora, Daniel Duckworth, Ivan Krasin, David J Fleet, and Andrea Tagliasacchi. 2023. RobustNeRF: Ignoring distractors with robust losses. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 20626–20636.

- Turing (2009) Alan M Turing. 2009. Computing machinery and intelligence. In Parsing the Turing Test. Springer, 23–65.

- van den Hout and van der Heijden (2002) Ardo van den Hout and Peter GM van der Heijden. 2002. Randomized response, statistical disclosure control and misclassification: a review. International Statistical Review 70, 2 (2002), 269–288.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in neural information processing systems 30 (2017).

- Wang et al. (2019) Yisen Wang, Xingjun Ma, Zaiyi Chen, Yuan Luo, Jinfeng Yi, and James Bailey. 2019. Symmetric cross entropy for robust learning with noisy labels. In Proceedings of the IEEE International Conference on Computer Vision. 322–330.

- Wei et al. (2020) Hongxin Wei, Lei Feng, Xiangyu Chen, and Bo An. 2020. Combating noisy labels by agreement: A joint training method with co-regularization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 13726–13735.

- Xu et al. (2019) Yilun Xu, Peng Cao, Yuqing Kong, and Yizhou Wang. 2019. L_DMI: A Novel Information-theoretic Loss Function for Training Deep Nets Robust to Label Noise. Advances in Neural Information Processing Systems 32 (2019), 6225–6236.

- Yu et al. (2019) Xingrui Yu, Bo Han, Jiangchao Yao, Gang Niu, Ivor W Tsang, and Masashi Sugiyama. 2019. How does disagreement help generalization against label corruption? In International Conference on Machine Learning.

- Zhang et al. (2018a) Chiyuan Zhang, Samy Bengio, Moritz Hardt, Benjamin Recht, and Oriol Vinyals. 2018a. Understanding deep learning requires rethinking generalization.

- Zhang et al. (2018b) Hongyi Zhang, Moustapha Cisse, Yann N Dauphin, and David Lopez-Paz. 2018b. mixup: Beyond Empirical Risk Minimization. In International Conference on Learning Representations.

- Zhang et al. (2020) Yikai Zhang, Songzhu Zheng, Pengxiang Wu, Mayank Goswami, and Chao Chen. 2020. Learning with Feature-Dependent Label Noise: A Progressive Approach. In International Conference on Learning Representations.

- Zhang and Sabuncu (2018) Zhilu Zhang and Mert Sabuncu. 2018. Generalized cross entropy loss for training deep neural networks with noisy labels. In Advances in neural information processing systems. 8778–8788.

- Zhu and Wu (2004) Xingquan Zhu and Xindong Wu. 2004. Class noise vs. attribute noise: A quantitative study. Artificial intelligence review 22, 3 (2004), 177–210.