Learning Weighting Map for Bit-Depth Expansion within a Rational Range

Abstract

Bit-depth expansion (BDE) is one of the emerging technologies to display high bit-depth (HBD) image from low bit-depth (LBD) source. Existing BDE methods have no unified solution for various BDE situations, and directly learn a mapping for each pixel from LBD image to the desired value in HBD image, which may change the given high-order bits and lead to a huge deviation from the ground truth. In this paper, we design a bit restoration network (BRNet) to learn a weight for each pixel, which indicates the ratio of the replenished value within a rational range, invoking an accurate solution without modifying the given high-order bit information. To make the network adaptive for any bit-depth degradation, we investigate the issue in an optimization perspective and train the network under progressive training strategy for better performance. Moreover, we employ Wasserstein distance as a visual quality indicator to evaluate the difference of color distribution between restored image and the ground truth. Experimental results show our method can restore colorful images with fewer artifacts and false contours, and outperforms state-of-the-art methods with higher PSNR/SSIM results and lower Wasserstein distance. The source code will be made available at https://github.com/yuqing-liu-dut/bit-depth-expansion

Index Terms:

Bit-depth expansion, optimization method, progressive training, Wasserstein distance, image restoration.I Introduction

Bit depth expansion (BDE) is an emerging issue to generate and display a high bit-depth (HBD) image from the low bit-depth (LBD) source. With the rapid development of television technology, there are numerous ultra high-definition display terminals supporting 10-bit or even 12-bit luminance levels, while the mainstream video and image sources are just 8-bit [34]. Thus, expanding the bit depth becomes a popular topic for high-dynamic-range (HDR) display [22], display acceleration on mobile (SoC) [8] and video compression [43, 9, 24]. Given a LBD image, the task of BDE is to replenish the missing low-order bits according to the given high-order bits, and restore a suitable HBD representation [48]. Fig. 1 shows an example that restores an 8-bit HBD image from a 2-bit LBD instance. Existing methods still suffer from blur, artifacts and false contours caused by color quantization [47, 48].

Convolutional neural networks (CNNs) offer a fresh chance to break the deadlock of BDE [48, 44, 18, 21], which directly learn a desired value for each pixel in the HBD image. For example, BitNet [5] considers the bit information as a prior to fit different bit-depth situations. LBDEN [47] utilizes a simple but effective network for BDE. However, these methods may modify the given high-order bits and lead to a huge deviation from the ground truth. Fig. 1 shows restoring a 2-bit image to 8-bit by a representative state-of-the-art CNN-based method RMFNet [20]. The result on top part demonstrates obvious banding effect and false contours. Before and after recovery, the bit value of a pixel instance indicates that the highest two bits are totally changed, demonstrating severe color distortions.

Moreover, most of the existing works have no adaptability on all bit-depth situations, and models are trained separately for specific bit-depth recovery. The degradation changes along with the number of missing low-order bits [29], and previous works employ training different models for different bit-depth degradation [48, 20]. Recently, Punnappurath and Brown train several independent networks to predict each missing bit for BDE [29]. However, to our best knowledge, no previous work finds an unified solver for all degradation situations, or designs a general-purpose network with good performance on all bit-depth degradation.

In this paper, we consider BDE in an information replenishing perspective. By keeping the correct high-order bits, we can restore more accurate value and generate the desired HBD image, as shown on bottom part of Fig. 1. To find an unified representation for any bit-depth degradation, we learn the weight between the theoretical lower and upper bounds which is independent to the number of missing bits. Thus, the outputs from all bit depths hold an unified representation and we can use only one model to adaptively fit all degradation situations.

By considering BDE as an unified representation, it can be solved in the optimization perspective. However, the restoration task is usually a non-convex issue with a complex solution space and hard to find an optimal result. Thus, inspired by curriculum learning [2], we propose a progressive training strategy to gradually find a feasible solution with the increase of missing bits. Specifically, we design an end-to-end network for bit restoration (BRNet) and learn a weighting map for replenishing the LBD image to HBD image. Meanwhile, we devise an optimization block (OptBlock) based on the proximal gradient descent algorithm [40] and fourth-order Runge-Kutta (RK-4) method [11]. Besides, we introduce Wasserstein distance [32] to estimate the restored HBD image visual quality, which can reflect the pixel value distribution of the whole image other than pixel-wise evaluation as PSNR and SSIM. Experimental results show our network achieves better objective and subjective performances than state-of-the-art methods. The visual comparisons show our BRNet can efficiently reconstruct the details without false contour artifacts.

Our contributions are concluded as follows:

-

•

To our best knowledge, this weighting map learning network is the first to explore a general model to effectively restore any bit-depth image for BDE without modifying the given information.

-

•

We analyze the BDE problem in an optimization perspective and devise an optimization block (OptBlock) based on the proximal gradient descent algorithm and forth-order Runge-Kutta method, invoking an unified representation for any bit-depth degradation.

-

•

By gradually increasing the number of missing bits, the tailored progressive training manner boosts the training phase and improves the network performance over full bit-depth recovery.

-

•

Experimental results show our network achieves better objective and subjective performances than state-of-the-art methods with more satisfactory details and colors.

II Relate Works

There are classical methods smoothing the banding effect of recovery image by filtering [28], contour interpolation [36], and dithering [4]. Recently, IPAD [22] proposed by Liu et al. introduces an intensity potential field to model the complicated relationships among pixels and utilizes adaptive de-quantization to convert LBD image to HBD, which achieves better performance than other traditional works. However, lacking of the learning and supervision process, there is still a big gap between the recovery image of classical methods and acceptable visual quality.

In recent years, CNN demonstrates great superiority on super-resolution [45, 16, 6], inpainting [7, 41, 46], denoising [12] and other tasks [10, 15]. Therefore, there are CNN-based BDE methods for effective restoration, which obtain the state-of-the-art performance. BitNet [5] uses an encoder-decoder architecture with dilated convolutions to reconstruct the HBD images GG-DCNN [38] utilizes an UNet-style design to restore image with very low bit-depth. To avoid the information loss, single-scale networks without down-sampling operation are considered for better performance. BE-CNN [33] enjoys a residual connection design to suppress the artifacts. BE-CALF [19] uses densely connections to directly generate the high quality images. Besides the single-scale designs, RMFNet [20] introduces a multi-scale network with residual-guided information fusion and achieves the state-of-the-art performance. These works treat different areas of the input image equally and generate the HBD results or residual images straightforwardly. BDEN [48] considers the flat and non-flat areas separately, and uses a two-stream restoration network to restore the information. LBDEN [47] is proposed for lighter but efficient bit-depth expansion, which achieves competitive performance to the heavier works. Recently, BitPlane [29] separates the image into different bit planes, and uses several independent networks to predict the missing bits from different positions. Besides the methods that restores the missing information, there are also GAN-based networks for BDE, aiming to generate the satisfactory results, such as BDGAN [23] and TSGAN [39].

III Methodology

In the following, we introduce the methodology for BDE in detail. Firstly we describe the pipeline of learning the weighting map for BDE. Then, the overall design of BRNet and component design of OptBlock are discussed in detail. Finally, we introduce the proposed progressive training strategy and the quality assessment.

III-A Problem Formulation

Let , be the LBD and restored HBD images, respectively. Our method aims to find the missing information from lost bits and keep the given high-order bits. Let be the residual image of the replenished low-order bits, then there is

| (1) |

The residual image is gained based on the learned weighting map . Let be the theoretical upper bound of the missing bits. Then, is a 3-channel tensor denoting the RGB color channels separately. The value of is the decimal representation of the missing low-order bits. For example, if the number of missing bits , each value of is . Thus, the residual image is

| (2) |

where denotes the point-wise multiplication, and is the weighting map with each value in the range of .

Further, we design a bit restoration network (BRNet), jointly considering and the upper bound to infer the adaptive weighting map . Let represent the network, we can formulate the learning process as

| (3) |

where is the concatenation operation.

III-B Network Design

Fig. 2 illustrates the entire framework of the proposed BRNet. BRNet is mainly composed of encoder and decoder that follows an UNet-style design [30]. The encoder contains an input convolutional layer and three optimization blocks. The input layer converts the input tensor to a series of feature maps for optimization. The optimization blocks (OptBlock) explore the feature distribution in different scale and find a feasible solution in the feature space. The optimized feature maps are down-scaled successively by MaxPooling and explored by the next stage. The smaller size of feature equivalently increases the receptive field of network and learns different scales of feature distributions. The decoder part integrates the multi-scale solutions and produces the desired weighting map.

The design of optimization block (OptBlock) follows the mathematical analysis of BDE in the optimization perspective. Let be the LBD feature, the task of OptBlock is to find a restored HBD feature satisfying

| (4) |

where is the operation that removes the information of last bits, is the prior term, is a weighting factor. It is worth noting that Eq. 4 is an unconstrained optimization issue, and the solution space of has no extra boundary. By converting the feature into weighting map, we can find a suitable mapping within the rational range.

An ideal prior should be a function related to the number of bits in , which can be regarded as a statistical-relative indicator. However, it is difficult for derivation. Thus, we employ proximal gradient descent algorithm to solve Eq. 4 in an iterative formulation by

| (5) |

where

| (6) |

and

| (7) |

, , and are placeholders.

Eq. 6 has a similar formulation to traditional image restoration problem, and thereby we design a residual block ProxBlock composed of three convolutional layers and two ReLU activation to find the solution, as demonstrated in Fig. 3(a).

Further, can be regarded as a quantization step to remove the information of last bits in the feature space, which is difficult to find an explicit quantization expression. To address this issue, we denote , , and , then there is

| (8) |

where , are placeholders.

Eq. 8 holds a similar formulation to the Euler method for finding an approximation of ordinary differential equation (ODE). Thus, we consider Eq. 8 as a dynamical system, and find the solution by a one-step approximation over an interval . To find a more accurate numerical solution, we utilize fourth-order Runge-Kutta (RK-4) method [11] to calculate the result and there is

| (9) |

where

| (10) |

Fig. 3(b) shows the design for finding the solution by RK-4 (RK-4 Block). We emulate the of Eq. 10 by the color blocks of Fig. 3(b) where each block consists of two convolutional layers and a ReLU activation.

As shown in Fig. 3(c), each optimization step consists of a group of one RK-4 Block and one ProxBlock, that optimizes the feature map according to Eq. 9 and Eq. 5, separately. After optimization steps in the OptBlock, we design a residual block with two convolutional layers and a ReLU activation for better gradient transmission.

There are also three stages in the decoder. For each decoder stage, a convolutional layer and a residual block (ResBlock) integrate the information from the current stage of the encoder and the former decoder stage. The ResBlock follows the same design as EDSR [17] which is composed of two convolutional layers and a ReLU activation. The up-scaling method in the decoder is devised by a convolutional layers and a sub-pixel convolution [31]. The convolution expands the number of channels and the sub-pixel convolution reshapes the feature maps and increases the resolution. At the end of the decoder, there is a block group to restore the weighting map from the feature. Two convolutional layers, a ReLU activation and a Sigmoid activation are utilized to explore the decoded feature maps and generate the weighting map .

In this paper, the filter number is set as 64 for all convolutional layers, except for the convolutions and the last convolutional layer. For the first and second stages of the encoder, the number of optimization step is set as 1. For the third stage, there are 6 optimization steps in the OptBlock. The BRNet is designed for bit-depth expansion on standard dynamic range (SDR) image with 8 bits. For HDR bit-depth expansion (with 16 bits), we use two parallel block groups at the end of the decoder for restoration, since it has double information than SDR. Similar to SDR, each group aims to restore at most 8-bit information.

III-C Progressive Training Strategy

It is obvious that replenishing a small number of bits is easier than restoring many of bits. Inspired by the human’s learning behavior from easy to hard, we propose a progressive training strategy by gradually increasing the missing bits of the training data to boost the learning step [2]. Let be datasets with different difficulty. The difficulty rises with the increase of the indicator . Then, the training steps are regarded as a sequence , where , meaning to update the set of network parameter with dataset . The indicator of training steps is sequentially increasing, and the set of network parameters is progressively optimized. Specially, the difficulty of is defined as the upper bound of missing bits. With the increase of missing bits, the information suffers severer loss, and the restoration becomes harder.

The detail of progressive training is as follows. For -th epoch, we generate the LBD image from the original image for training. Specifically, we increase the difficulty of training data for every 20 epochs. Let be the maximum bit depth of image, the upper bound of missing bits for -th epoch is calculated as . is set as 8 for SDR image, and 16 for HDR image. Then, we randomly remove bits for progressive training from and generate the LBD image , where . The overall progressive learning procedure is outlined in Algorithm 1. Overall, we gradually increase the training difficulty to simulate the human’s learning behavior for better performance.

Input: The current epoch , the maximum image bit depth , and the original HBD image for -th epoch.

Output: The degraded LBD image for training.

III-D Quality Assessment

Besides the network design, how to evaluate the performance of restored HBD image is also a vital issue for BDE [27]. By delving into the evaluation method of the human visual system, we hold the hypothesis that if the restored image has the color distribution closer to the natural image, it is more coincident with visual experience. However, the existing PSNR and SSIM are both pixel-wise evaluations [26, 39], having no capability of reflecting the distribution of the whole image. Thus, a distribution distance metric is more suitable for visual performance evaluation.

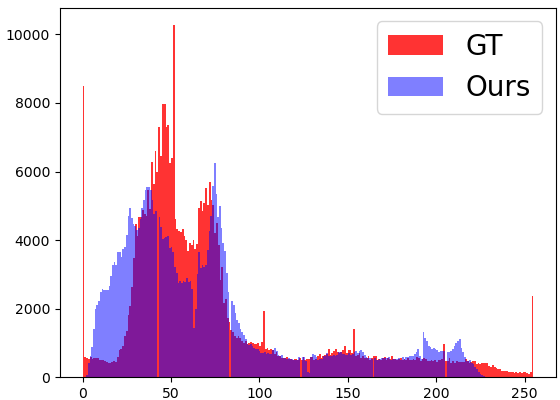

We use Wasserstein distance (W-dis) to indicate the difference between restored image and the ground truth. Wasserstein distance describes the physical transportation cost between two distributions. Similar to PSNR and SSIM, Wasserstein distance is a symmetric metric, which is more suitable for image quality assessment than KL-divergence and other asymmetrical methods. Fig. 8 shows comparisons between PSNR/SSIM and W-dis. In the first row of Fig. 8, The PSNR of BE-CALF is 0.1 dB higher than RMFNet, but there are more artifacts from BE-CALF in the background of the parrot. RMFNet holds lower W-dis than BE-CALF and has fewer unnatural textures, indicating that W-dis consists with the image quality.

To further investigate the superiority of W-dis than traditional metrics, we choose two representative examples to compare PSNR/SSIM and W-dis, as shown in Figure 5. In the first row of the figure, we focus on the color of the ship. The result of BE-CALF is more different to the original image than the result of RMFNet. Correspondingly, although the PSNR of BE-CALF is higher than RMFNet, the W-dis of RMFNet is lower. Similar situation can be observed in the second row of Figure 5. In the sky area, we can find that L-BDEN generates more artifacts than the RMFNet. Although the SSIM of L-BDEN is higher, the W-dis of RMFNet is lower, and the perceptual experience is better. In this point of view, W-dis can well describe the perceptual difference between two images.

IV Experiment

We train and test our BRNet on five widely used datasets: DIV2K [1], Kodak [14], Set5 [3], Set14 [42], and B100 [25]. We train our method on 900 images from DIV2K for SDR restoration. DIV2K is a high-resolution color image dataset, and has been widely used for image restoration tasks. For test, we choose 5 images from Set5, 14 images from Set14, 100 images from B100, and 24 images from Kodak datasets seperately for comparing the performances of models. We use Adam optimizer [13] to update the model with L1-loss, and halve the learning rate for every epochs. We choose PSNR, SSIM and Wasserstein distance (W-dis) as metrics.

| BD | PSNR | SSIM | W-dis | |||

|---|---|---|---|---|---|---|

| w PT | w/o PT | w PT | w/o PT | w PT | w/o PT | |

| 1 | 19.14 | 19.15 | 0.6854 | 0.6827 | 13.68 | 14.02 |

| 2 | 27.91 | 27.71 | 0.8779 | 0.8784 | 3.03 | 3.63 |

| 3 | 34.22 | 34.03 | 0.9495 | 0.9489 | 1.22 | 1.41 |

| 4 | 39.80 | 39.61 | 0.9794 | 0.9789 | 0.59 | 0.64 |

IV-A Model Analysis

IV-A1 Analysis on Progressive Training Strategy

To validate the performance of progressive training (PT), we train the network with and without PT and compare the performances, respectively. The training data without PT are randomly dropped different number of bits from to , and all other settings of the two models are totally the same. Fig. 4 shows the training loss comparison between the two training strategies. The red curve is the loss variation without PT and the blue one is the loss changes with PT. The dash lines in the figure note the converged losses of the two models. In the figure, we can find the loss with PT rises in the first 60 epochs. This is because we increase the difficulty according to the progressive training. The converged loss is calculated by averaging the losses of the last 20 epochs. The converged L1-loss of model with PT is 5.22 which is lower than 5.30 without PT. In this point of view, the blue dash line is lower than the red one, indicating the proposed PT improves the capacity.

Tab. I shows the quantitative comparisons with and without PT in terms of PSNR, SSIM and W-dis on Kodak dataset. In the table, we compare the results of restoring 1, 2, 3, and 4 bits to 8-bit. In all situations, the model trained with PT achieves better W-dis than the model without PT. When the expended bit depth is 1, the model with PT achieves competitive performance to the model without PT. In other situations, the model with PT surpasses the model without PT near 0.2 dB in average on PSNR, separately.

| BD | PSNR | SSIM | W-dis | |||

|---|---|---|---|---|---|---|

| Weight | Value | Weight | Value | Weight | Value | |

| 1 | 19.14 | 18.97 | 0.6854 | 0.6766 | 13.68 | 14.09 |

| 3 | 34.22 | 33.55 | 0.9495 | 0.9469 | 1.22 | 1.65 |

| 5 | 44.32 | 43.43 | 0.9909 | 0.9904 | 0.32 | 0.54 |

| 7 | 52.90 | 47.07 | 0.9998 | 0.9978 | 0.06 | 0.99 |

IV-A2 Analysis on Weighting Map Learning

To validate the effectiveness of learning weighting map for replenishment, we modify BRNet by removing the last Sigmoid layer and the input , which makes the network the same as other CNNs and directly learns desired values. We keep other settings unchanged so that the modified network is trained in all bit-depth situations. Tab. II shows the PSNR/SSIM/W-dis comparison on Kodak dataset between learning weight and learning direct values. “Weight” indicates the weighting learning manner in the paper, while “Value” denotes the modified version. In the table, the weighting map learning outperforms the modified version in all situations. The PSNR of “Weight” is 44.32 dB when bit depth is 5, which is near 1 dB higher than that of the “Value” manner. When the bit-depth is 7, “Weight” achieves near 6 dB PSNR improvement than “Value”.

We also visualize the learned weighting map to investigate the effectiveness of our network. Fig. 6 shows the learned weighting maps of RGB channels for replenishment. The darker color means the lower weight value. In Fig. 6(c), the blue channel plays an important role with higher value in the sky region, while the red channel and green channel reconcile the pure blue color to a gradual changes in the restored image. As such, the learned weighting maps can adaptively find the missing information from LBD images and generate a natural color transaction image.

| Bit-Depth | Indicator | Zero Padding | IPAD [22] | BitNet [5] | LBDEN [47] | BE-CALF [19] | RMFNet [20] | BRNet |

|---|---|---|---|---|---|---|---|---|

| 1 | PSNR | 10.79 | 17.51 | 12.03 | 17.61 | 18.11 | 18.31 | 19.14 |

| SSIM | 0.3067 | 0.5451 | 0.3946 | 0.6090 | 0.6383 | 0.6370 | 0.6854 | |

| W-dis | 64.82 | 17.84 | 53.77 | 18.45 | 17.06 | 16.99 | 13.68 | |

| 3 | PSNR | 22.77 | 29.20 | 32.68 | 32.09 | 32.56 | 32.05 | 34.22 |

| SSIM | 0.8559 | 0.9000 | 0.9339 | 0.9355 | 0.9360 | 0.9294 | 0.9495 | |

| W-dis | 16.00 | 2.59 | 2.2883 | 1.96 | 2.24 | 1.79 | 1.22 | |

| 4 | PSNR | 29.06 | 34.90 | 38.48 | 38.20 | 38.44 | 37.75 | 39.80 |

| SSIM | 0.9484 | 0.9595 | 0.9740 | 0.9750 | 0.9743 | 0.9712 | 0.9794 | |

| W-dis | 7.68 | 1.11 | 1.07 | 0.83 | 1.02 | 0.79 | 0.59 | |

| 5 | PSNR | 35.55 | 40.35 | 43.30 | 43.08 | 43.51 | 42.63 | 44.32 |

| SSIM | 0.9839 | 0.9843 | 0.9887 | 0.9896 | 0.9898 | 0.9882 | 0.9909 | |

| W-dis | 3.50 | 0.69 | 0.60 | 0.45 | 0.59 | 0.45 | 0.32 | |

| 7 | PSNR | 51.02 | 51.02 | 47.13 | 45.74 | 48.79 | 47.66 | 52.90 |

| SSIM | 0.9985 | 0.9985 | 0.9978 | 0.9971 | 0.9976 | 0.9974 | 0.9982 | |

| W-dis | 0.52 | 0.51 | 0.86 | 0.62 | 0.61 | 0.36 | 0.03 |

IV-B Comparison with State-of-the-Art Methods

We compare our BRNet with 6 state-of-the-art methods, including a recent traditional method (IPAD [22]) and five CNN-based methods: BitNet [5], LBDEN [47], BE-CALF [19], and RMFNet [20]. It should be noticed that vanilla RMFNet, BE-CALF and LBDEN have no solution for different bit depths. For a fair comparison, we re-train these models with our whole training datasets covering the same bit expansion situations with our model.

Following the comparison in previous works, we first investigate the performances of state-of-the-art methods on restoring 4-bit image to 8-bit on Kodak dataset. Tab. III shows the PSNR/SSIM/W-dis results on Kodak dataset. The best results are shown in bold. “Zero Padding” means to set the missing bits as zero. In the table, our method achieves the best performance than other works in all evaluation terms. Specifically, our network gains 0.3 dB higher than BitPlane and 2 dB higher than RMFNet in term of PSNR. The W-dis of our method is 0.2 lower than BitPlane and RMFNet, which indicates a better visual performance.

Fig. 7 shows the visual comparisons with two competitive methods on restoring 4-bit image to 8-bit. As can be observed, BRNet generates more natural textures on the sky and cloud while other works suffer from artifacts and false contours. In the zoomed-in sky area, our result contains fewer noises and produces more correct colors than other works, which is the closest to the ground truth. It is worth noting that the PSNR/SSIM results of RMFNet is lower than the BE-CALF. In contrast, RMFNet holds better W-dis result than BE-CALF with higher visual quality, indicating W-dis is a suitable metric for visual quality assessment.

To show the general applicability of BRNet, we compare the performances in different bit-depth situations. We set the bit depth as 1, 3, 5, and, 7, and demonstrate the PSNR/SSIM/W-dis results in Tab. III. In the table, BRNet outperforms the state-of-the-art methods in all bit expansion situations. In general, with the increase of bit depth, the superiority of BRNet becomes more obvious. It should be noticed that BitPlane has no capability on restoring 1-bit image. IPAD is a traditional method that intrinsically satisfies different bit-depth situations. Compared with IPAD, BRNet achieves near 4 dB improvements in term of PSNR when the bit-depth is 5.

Fig. 8 shows the visual comparisons on restoring 2-bit to 8-bit. In the figure, our method achieves the best visual performance than other works with superior PSNR/SSIM/W-Dis results. We can find that BRNet can still generate satisfactory results when suffering severe information loss. In the flat area where banding effect is obvious, such as the background of the parrot and the sky over sea, BRNet can remove the false contours and estimate the correct colors and textures more effectively.

To further investigate the effectiveness of BRNet, we compare the color distributions restored by our network and RMFNet respectively, as shown in Fig. 9. The histograms are constructed in red, blue and green channels respectively. The distributions recovered by our network are much close to the ground truth, while the histograms of RMFNet has huge difference with ground truth. The overlapping area between our distribution and the ground truth is larger than that of RMFnet, which explains why we achieve the best W-dis performance.

We also compare the performances on Set5, Set14, and BSD100 datasets, where BRNet outperforms other works near 2 dB, 3 dB, 1.5 dB separately in term of PSNR on restoring 4-bit image to 8-bit. Table IV, V and VI shows the PSNR [35]/SSIM [37]/W-dis [32] comparisons on different datasets separately. We can find that our BRNet achieves the best performance on all testing benchmarks with different settings of bit depth. With the increase of bit depth, BRNet outperforms other works with much higher PSNR results. Specially, when the bit-depth is 7, our BRNet gains more than 5 dB, 10 dB, and 3 dB PSNR improvement than other works on Set5, Set14, and B100 datasets separately.

Figure 10 shows the visual comparisons on restoring images from 2-bit to 8-bit on B100 dataset. In the first row of the figure, we can find that our BRNet produces the most satisfactory visual results. Especially in the sky area, BRNet contains fewer false contours and more correct colors than other methods. In the second row, other works are suffering from the false blurs on the snow, while BRNet can effectively suppress the unpleasant artifacts.

Figure 11 shows the visual comparison on restoring 2-bit image to 8-bit on Set14 dataset. In the figure, BRNet outputs fewer artifacts around the logo than BE-CALF, while LBDEN and RMFNet suffer from the severe false contours. All the competitive methods exhibit obvious banding effect on the blue circle within the green rectangle, while our BRNet has perfect color transition with accurate textures.

| Bit-Depth | BE-CALF [19] | LBDEN [47] | RMFNet [20] | BRNet |

|---|---|---|---|---|

| 1 | 19.04 / 0.6603 / 15.68 | 19.67 / 0.6565 / 12.37 | 18.98 / 0.6598 / 16.06 | 18.86 / 0.6719 / 18.74 |

| 2 | 25.61 / 0.8162 / 6.93 | 25.96 / 0.8191 / 6.47 | 25.87 / 0.8230 / 6.18 | 27.05 / 0.8470 / 5.06 |

| 3 | 32.07 / 0.9104 / 3.00 | 32.28 / 0.9155 / 2.46 | 32.11 / 0.9204 / 2.23 | 34.07 / 0.9460 / 1.20 |

| 4 | 36.59 / 0.9501 / 2.08 | 36.86 / 0.9625 / 1.33 | 36.35 / 0.9572 / 1.51 | 38.80 / 0.9740 / 0.82 |

| 5 | 40.27 / 0.9631 / 2.29 | 41.18 / 0.9840 / 1.42 | 40.05 / 0.9766 / 1.68 | 43.22 / 0.9749 / 0.64 |

| 6 | 43.81 / 0.9639 / 2.38 | 44.50 / 0.9841 / 1.60 | 43.48 / 0.9827 / 1.69 | 47.93 / 0.9965 / 0.30 |

| 7 | 46.37 / 0.9649 / 2.49 | 47.57 / 0.9880 / 1.53 | 45.63 / 0.9838 / 1.74 | 52.83 / 0.9987 / 0.10 |

| Bit-Depth | BE-CALF [19] | LBDEN [47] | RMFNet [20] | BRNet |

|---|---|---|---|---|

| 1 | 17.96 / 0.6174 / 18.79 | 18.34 / 0.6169 / 14.69 | 18.40 / 0.6333 / 16.49 | 18.60 / 0.6487 / 15.92 |

| 2 | 23.83 / 0.7862 / 10.33 | 24.79 / 0.8084 / 5.93 | 24.73 / 0.8022 / 5.56 | 26.21 / 0.8348 / 4.50 |

| 3 | 29.40 / 0.8966 / 5.84 | 30.56 / 0.9153 / 3.09 | 29.83 / 0.9078 / 3.22 | 32.79 / 0.9362 / 1.71 |

| 4 | 33.94 / 0.9505 / 3.85 | 34.47 / 0.9608 / 2.30 | 33.68 / 0.9544 / 2.58 | 37.64 / 0.9739 / 1.06 |

| 5 | 37.88 / 0.9688 / 3.07 | 36.56 / 0.9761 / 2.75 | 36.32 / 0.9698 / 2.93 | 42.11 / 0.9837 / 0.80 |

| 6 | 41.06 / 0.9740 / 3.09 | 37.69 / 0.9792 / 3.31 | 38.23 / 0.9760 / 3.22 | 47.21 / 0.9965 / 0.43 |

| 7 | 43.49 / 0.9753 / 3.18 | 37.94 / 0.9807 / 3.70 | 38.77 / 0.9777 / 3.56 | 52.19 / 0.9987 / 0.17 |

| Bit-Depth | BE-CALF [19] | LBDEN [47] | RMFNet [20] | BRNet |

|---|---|---|---|---|

| 1 | 19.41 / 0.6423 / 15.12 | 19.17 / 0.6285 / 15.28 | 18.96 / 0.6330 / 15.65 | 19.91 / 0.6724 / 13.82 |

| 2 | 26.41 / 0.8518 / 5.57 | 26.49 / 0.8529 / 5.21 | 26.40 / 0.8496 / 5.05 | 28.27 / 0.8811 / 3.57 |

| 3 | 32.16 / 0.9347 / 3.22 | 32.88 / 0.9425 / 1.92 | 32.68 / 0.9390 / 1.88 | 34.62 / 0.9553 / 1.16 |

| 4 | 37.38 / 0.9715 / 1.88 | 38.42 / 0.9772 / 0.99 | 38.25 / 0.9755 / 0.94 | 39.72 / 0.9807 / 0.67 |

| 5 | 42.47 / 0.9897 / 1.09 | 43.56 / 0.9922 / 0.58 | 43.33 / 0.9911 / 0.56 | 44.35 / 0.9932 / 0.42 |

| 6 | 46.90 / 0.9960 / 0.70 | 47.83 / 0.9969 / 0.39 | 47.47 / 0.9965 / 0.37 | 48.42 / 0.9972 / 0.32 |

| 7 | 50.16 / 0.9981 / 0.53 | 50.92 / 0.9985 / 0.26 | 50.24 / 0.9983 / 0.28 | 53.30 / 0.9989 / 0.12 |

V Conclusion

In this paper, we proposed a novel network for bit-depth expansion (BDE) for any bit-depth degradation, termed as BRNet. Different from directly finding suitable values for high bit-depth (HBD) images from low bit-depth (LBD) instance, we investigated the BDE in an optimization perspective and learned a weighting map to replenish LBD image to HBD within a rational range. We designed an UNet-style network for missing bits restoration, which is termed as BRNet. Specially, proximal gradient descent algorithm and Runge-Kutta method were utilized for designing the block. Progressive training was considered in the training phase to improve the capacity. Experimental results show our BRNet achieves better subjective and objective performances than state-of-the-arts on all degradation situations. When the number of missing bits is larger, BRNet is much superior to the other works with satisfactory visual performance.

In furture, we try to focus on the texture restoration in BDE. Suffering from the severe information loss, the restored images of the network may loss some texture details from the ground truth when the number of missing bits is too large, as observed in the wings of parrot and the sky over sea in Fig. 8. How to apply the structure information as a prior for effective restoration is an open issue for BDE.

References

- [1] Eirikur Agustsson and Radu Timofte. Ntire 2017 challenge on single image super-resolution: Dataset and study. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, July 2017.

- [2] Yoshua Bengio, Jérôme Louradour, Ronan Collobert, and Jason Weston. Curriculum learning. In International Conference on Machine Learning (ICML), volume 382, pages 41–48, 2009.

- [3] Marco Bevilacqua, Aline Roumy, Christine Guillemot, and Marie line Alberi Morel. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In British Machine Vision Conference (BMVC), pages 135.1–135.10, 2012.

- [4] Sitaram Bhagavathy, Joan Llach, and Jiefu Zhai. Multiscale probabilistic dithering for suppressing contour artifacts in digital images. IEEE Transactions on Image Processing (TIP), 18(9):1936–1945, 2009.

- [5] Junyoung Byun, Kyujin Shim, and Changick Kim. Bitnet: Learning-based bit-depth expansion. In C. V. Jawahar, Hongdong Li, Greg Mori, and Konrad Schindler, editors, Asian Conference on Computer Vision (ACCV), volume 11362, pages 67–82, 2018.

- [6] Kelvin C.K. Chan, Xintao Wang, Xiangyu Xu, Jinwei Gu, and Chen Change Loy. Glean: Generative latent bank for large-factor image super-resolution. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 14240–14249, 2021.

- [7] Stanley H. Chan, Xiran Wang, and Omar A. Elgendy. Plug-and-play admm for image restoration: Fixed-point convergence and applications. IEEE Transactions on Computational Imaging (TCI), 3(1):84–98, 2017.

- [8] Qiubo Chen, Hengyu Zhao, Hongbin Sun, and Nanning Zheng. Exploiting bit-depth scaling for quality-scalable energy efficient display processing. In IEEE International Symposium on Circuits and Systems (ISCAS), pages 2357–2360, 2015.

- [9] Jui-Chiu Chiang and Wen-Ting Kuo. Bit-depth scalable video coding using inter-layer prediction from high bit-depth layer. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 649–652, 2009.

- [10] Wenchao Du, Hu Chen, and Hongyu Yang. Learning invariant representation for unsupervised image restoration. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 14471–14480, 2020.

- [11] Abbas I. Abdel Karim. The stability of the fourth order runge-kutta method for the solution of systems of differential equations. Communications of the ACM (CACM), 9(2):113–116, 1966.

- [12] Youngjung Kim, Hyungjoo Jung, Dongbo Min, and Kwanghoon Sohn. Deeply aggregated alternating minimization for image restoration. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 284–292, 2017.

- [13] Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In Yoshua Bengio and Yann LeCun, editors, International Conference on Learning Representations (ICLR), 2015.

- [14] Eastman Kodak. Kodak lossless true color image suite (photocdpcd0992). http://r0k.us/graphics/kodak/, 1999.

- [15] Kinam Kwon, Eunhee Kang, Sangwon Lee, Su-Jin Lee, Hyong-Euk Lee, ByungIn Yoo, and Jae-Joon Han. Controllable image restoration for under-display camera in smartphones. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 2073–2082, 2021.

- [16] Xiaoming Li, Wenyu Li, Dongwei Ren, Hongzhi Zhang, Meng Wang, and Wangmeng Zuo. Enhanced blind face restoration with multi-exemplar images and adaptive spatial feature fusion. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 2703–2712, 2020.

- [17] Bee Lim, Sanghyun Son, Heewon Kim, Seungjun Nah, and Kyoung Mu Lee. Enhanced deep residual networks for single image super-resolution. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, pages 1132–1140, 2017.

- [18] Jing Liu, Pingping Liu, Yuting Su, Peiguang Jing, and Xiaokang Yang. Spatiotemporal symmetric convolutional neural network for video bit-depth enhancement. IEEE Transactions on Multimedia (TMM), 21(9):2397–2406, 2019.

- [19] Jing Liu, Wanning Sun, Yuting Su, Peiguang Jing, and Xiaokang Yang. Be-calf: Bit-depth enhancement by concatenating all level features of dnn. IEEE Transactions on Image Processing (TIP), 28(10):4926–4940, 2019.

- [20] Jing Liu, Xin Wen, Weizhi Nie, Yuting Su, Peiguang Jing, and Xiaokang Yang. Residual-guided multiscale fusion network for bit-depth enhancement. IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), pages 1–1, 2021.

- [21] Jing Liu, Ziwen Yang, Yuting Su, and Xiaokang Yang. Tanet: Target attention network for video bit-depth enhancement. IEEE Transactions on Multimedia (TMM), pages 1–1, 2021.

- [22] Jing Liu, Guangtao Zhai, Anan Liu, Xiaokang Yang, Xibin Zhao, and Chang Wen Chen. Ipad: Intensity potential for adaptive de-quantization. IEEE Transactions on Image Processing (TIP), 27(10):4860–4872, 2018.

- [23] Di Ma, Fan Zhang, and David R. Bull. Gan-based effective bit depth adaptation for perceptual video compression. In IEEE International Conference on Multimedia and Expo (ICME), pages 1–6, 2020.

- [24] Zicong Mai, Hassan Mansour, Rafal Mantiuk, Panos Nasiopoulos, Rabab Ward, and Wolfgang Heidrich. Optimizing a tone curve for backward-compatible high dynamic range image and video compression. IEEE Transactions on Image Processing (TIP), 20(6):1558–1571, 2011.

- [25] D. Martin, C. Fowlkes, D. Tal, and J. Malik. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In IEEE International Conference on Computer Vision. ICCV 2001, volume 2, pages 416–423 vol.2, 2001.

- [26] Akira Mizuno and Masayuki Ikebe. Bit-depth expansion for noisy contour reduction in natural images. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 1671–1675, 2016.

- [27] Soo-Chang Pei and Li-Heng Chen. Image quality assessment using human visual dog model fused with random forest. IEEE Transactions on Image Processing (TIP), 24(11):3282–3292, 2015.

- [28] Changmeng Peng, Maohan Xia, Zhizhong Fu, Jin Xu, and Xiaofeng Li. Bilateral false contour elimination filter-based image bit-depth enhancement. IEEE Signal Processing Letters (SPL), 28:1585–1589, 2021.

- [29] Abhijith Punnappurath and Michael S Brown. A little bit more: Bitplane-wise bit-depth recovery. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), pages 1–1, 2021.

- [30] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI), volume 9351, pages 234–241. Springer, 2015.

- [31] Wenzhe Shi, Jose Caballero, Ferenc Huszar, Johannes Totz, Andrew P. Aitken, Rob Bishop, Daniel Rueckert, and Zehan Wang. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 1874–1883, 2016.

- [32] Guillaume Staerman, Pierre Laforgue, Pavlo Mozharovskyi, and Florence d’Alché Buc. When ot meets mom: Robust estimation of wasserstein distance. In International Conference on Artificial Intelligence and Statistics (AISTAS), pages 136–144, 13–15 Apr 2021.

- [33] Yuting Su, Wanning Sun, Jing Liu, Guangtao Zhai, and Peiguang Jing. Photo-realistic image bit-depth enhancement via residual transposed convolutional neural network. Neurocomputing, 347:200–211, 2019.

- [34] Michael Teutsch, Simone Sedelmaier, Sebastian Moosbauer, Gabriel Eilertsen, and Thomas Walter. An evaluation of objective image quality assessment for thermal infrared video tone mapping. In IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pages 488–497, 2020.

- [35] Video Quality Experts Group (VQEG). Final report from the video quality experts group on the validation of objective models of video quality assessment. http://www.vqeg.org/, 2000.

- [36] Pengfei Wan, Oscar C. Au, Ketan Tang, Yuanfang Guo, and Lu Fang. From 2d extrapolation to 1d interpolation: Content adaptive image bit-depth expansion. In IEEE International Conference on Multimedia and Expo (ICME), pages 170–175, 2012.

- [37] Zhou Wang, A.C. Bovik, H.R. Sheikh, and E.P. Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing (TIP), 13(4):600–612, 2004.

- [38] Yi Xiao, Chao Pan, Yan Zheng, Xianyi Zhu, Zheng Qin, and Jin Yuan. Gradient-guided DCNN for inverse halftoning and image expanding. In Asian Conference on Computer Vision (ACCV), volume 11364, pages 207–222, 2018.

- [39] Zhen Yang, Li Song, Rong Xie, Wenjun Zhang, Lin Li, and Yanan Feng. Tsgan: A two-stream generative adversarial network for bit-depth expansion. In IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), pages 1–6, 2020.

- [40] Haishan Ye, Ziang Zhou, Luo Luo, and Tong Zhang. Decentralized accelerated proximal gradient descent. In Annual Conference on Neural Information Processing Systems (NeurIPS), 2020.

- [41] Zili Yi, Qiang Tang, Shekoofeh Azizi, Daesik Jang, and Zhan Xu. Contextual residual aggregation for ultra high-resolution image inpainting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 7505–7514, 2020.

- [42] Roman Zeyde, Michael Elad, and Matan Protter. On single image scale-up using sparse-representations. In International conference on curves and surfaces, pages 711–730. Springer, 2010.

- [43] Fan Zhang, Mariana Afonso, and David R. Bull. Enhanced video compression based on effective bit depth adaptation. In IEEE International Conference on Image Processing (ICIP), pages 1720–1724, 2019.

- [44] Jing Zhang, Qianqian Dou, Jing Liu, Yuting Su, and Wanning Sun. BE-ACGAN: photo-realistic residual bit-depth enhancement by advanced conditional GAN. Displays, 69:102040, 2021.

- [45] Kai Zhang, Yawei Li, Wangmeng Zuo, Lei Zhang, Luc Van Gool, and Radu Timofte. Plug-and-play image restoration with deep denoiser prior. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), pages 1–1, 2021.

- [46] Lei Zhao, Qihang Mo, Sihuan Lin, Zhizhong Wang, Zhiwen Zuo, Haibo Chen, Wei Xing, and Dongming Lu. Uctgan: Diverse image inpainting based on unsupervised cross-space translation. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 5740–5749, 2020.

- [47] Yang Zhao, Ronggang Wang, Yuan Chen, Wei Jia, Xiaoping Liu, and Wen Gao. Lighter but efficient bit-depth expansion network. IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 31(5):2063–2069, 2021.

- [48] Yang Zhao, Ronggang Wang, Wei Jia, Wangmeng Zuo, Xiaoping Liu, and Wen Gao. Deep reconstruction of least significant bits for bit-depth expansion. IEEE Transactions on Image Processing (TIP), 28(6):2847–2859, 2019.