Learning to Detect Few-Shot-Few-Clue Misinformation

Abstract.

A large volume of false textual information has been disseminating for a long time since the prevalence of social media. The potential negative influence of misinformation on the public is a growing concern. Therefore, it is strongly motivated to detect online misinformation as early as possible. Few-shot-few-clue learning applies in this misinformation detection task when the number of annotated statements is quite few (called few shots) and the corresponding evidence is also quite limited in each shot (called few clues). Within the few-shot-few-clue framework, we propose a task-aware Bayesian meta-learning algorithm to extract shared patterns among different topics (i.e. different tasks) of misinformation. Moreover, we derive a scalable method, i.e., amortized variational inference, to optimize the Bayesian meta-learning algorithm. Empirical results on three benchmark datasets demonstrate the superiority of our algorithm. This work focuses more on optimizing parameters than deigning detection models, and will generate fresh insights into data efficient detection of online misinformation at early stages.

1. Introduction

Online misinformation is affecting a wider range of individuals as an increasing number of people tend to use digital sources to access textual news. However, unlike the traditional news published by credible institutions, digital information on the web has not proven its quality. Individuals and organizations publish and share news on popular social media platforms, such as Facebook and Twitter, without accurate content checking. Due to financial or political purposes, some social media accounts publish biased and even false information around popular topics. Some other social accounts spread such misinformation to a wider range, intentionally (for those who know the news is fake) or unintentionally (for those who believe the news content is true).

Online misinformation can be defined as made-up statements on the web that are verifiably false, and could mislead and deceive readers (Allcott and Gentzkow, 2017). Misinformation, when done on a large scale, can influence the public by depicting a false picture of the reality. Hence, detecting misinformation effectively has become one of the most severe challenges faced by social media platforms.

The task of misinformation detection aims to identify whether a statement is True or False. Researchers have been rectifying this epidemic in two ways. One way is manual-checking that has been tackled by some news websites, such as Snopes and FactCheck. However, such a manual way is expensive to be able to check all the daily generated statements appearing on the web. Automatic tools, especially machine learning models, have been developed to accelerate the verification procedure (Potthast et al., 2018). Nonetheless, these models are usually data hungry and require considerable annotations. How to alleviate this requirement is of high research interest. Also, to mitigate negative influence of misinformation, detection should be conducted as early as possible. This puts forward another requirement on the detection models because clues, such as social engagements, are relatively rare at early stages. We refer to, the task of learning to detect misinformation meeting these two requirements, as the few-shot-few-clue learning task, which is the main focus of this paper.

Previous works tried to either generally increase data efficiency of machine learning models (Weiss et al., 2016)or detect misinformation with limited clues at early stages (Wang et al., 2018). Notwithstanding, there has not been a unified framework for data efficient detection of misinformation at early stages. We systematically review previous works and propose a new learning framework for misinformation detection, i.e., few-shot-few-clue learning. This framework extends the conventional few-shot learning by incorporating the concept of clue. As a shot (e.g., the statement and its social engagements in Figure 1) is a datum, clue can be the evidential signal (e.g., social engagement behavior and commenting words in Figure 1) inside the shot. Few-shot-few-clue learning applies when there are a limited number of annotated shots and a limited number of clues in each shot.

Within the framework of few-shot-few-clue learning, we first define a topic-centered misinformation detection as a task. Different tasks indicate misinformation detection of different events/topics (e.g., presidential election and COVID-19). It has been found that tasks share transferable patterns in misinformation detection, such as linguistic features of statements and stance patterns of engaged users in social platforms (Potthast et al., 2018; Wang et al., 2018). Based on these findings, we propose a task-aware Bayesian meta-learning algorithm to recognize the shared patterns between various tasks and improve detection performance on new topics. Finally, for sake of scalable optimization of the meta-learning algorithm, we derive an amortized variational inference method. To sum up, this study advances the state-of-the-art in four aspects:

-

(1)

We define a new framework of few-shot-few-clue learning for misinformation detection at early stages.

-

(2)

We develop a task-aware Bayesian meta-learning algorithm to tackle the task of few-shot-few-clue misinformation detection.

-

(3)

We derive an amortized variational inference method for scalable optimization of the meta-learning algorithm.

-

(4)

We conduct a systematic experimentation on three real-world datasets. Empirical results demonstrate that our algorithm outperforms the state-of-the-art detection methods.

The remainder of the paper is organized as follows: § 2 summarizes the related work; § 3 presents preliminary concepts of few-shot learning and meta-learning; § 4 defines the new few-shot-few-clue learning framework; § 5 details the base model for misinformation detection and the proposed task-aware Bayesian meta-learning algorithm; § 6 derives the amortized variational inference method; § 7 describes the used datasets and experimental setup; § 8 is devoted to experimental results; and § 9 concludes the paper.

2. Related Work

We provide a brief review of four lines of recent works on misinformation detection, early misinformation detection, transferable patterns of misinformation and meta-learning.

2.1. Misinformation Detection Methods

Content features

Misinformation is fabricated to mislead the public for financial or political gains, not to objectively report a fact. Hence they often contain opinionated or inflammatory language and images, which motivates content-based detection methods. In order to reveal linguistic differences between true and false statements, textual methods have studied the lexical, synthetic and topic features at the word, sentence and document levels (Potthast et al., 2018). Some sentiment features, such as positive words (e.g., love, sweet), negating words (e.g., not, never), cognitive action words (e.g., cause, know) and inferring action words (e.g., maybe, perhaps), are reported to help detect rumour (Kwon et al., 2013). Sensational or even fake images provoke anger or other emotional response of consumers. For example, Deepfake (Floridi, 2018) employ deep learning methods to generate fake images and videos to convey misleading information. Visual-based features are extracted from images and videos to capture the characteristics of misinformation. Recently, various visual and statistical features have been extracted for news verification.Yang et al. (2018) developed a convolutional neural network to extract text and visual features simultaneously. Khattar et al. (2019) propose a multimodal variational auto-encoder to extract visual features and detect fake news.

Social context

On social media platforms, every piece of news is correlated to other posts and users. Interactions among users and contents (e.g., commenting, reposting, tagging) provide rich reference evidence for misinformation detection. One type of interactions is post-based, which replies on users’ commentary posts towards the original statement (Zhang et al., 2018b, 2019a). People react to a piece of statement by expressing their stances or emotions in social media posts. Stances can be categorized as supportive, opposing, and neutral, which can be used to infer statement veracity.As the attention mechanism has led to improved performance in various areas (Zhang et al., 2018a, 2020, 2021b), this observation motivated the development of an attention-based recurrent neural network (RNN) model. Zhang et al. (2019b) propose a probabilistic deep learning model to utilize user replies as auxiliary evidence. Yang et al. (2019) consider statement veracity and user credibility as latent variables to predict their stances towards statement veracity. Reinforcement learning has also been tried to provide weak supervision in Wang et al. (2020). The other interaction type is statement propagation over time. Ma et al. (2018b) learns features of non-sequential propagation structure of tweets. A top-down and a bottom-up recursive neural networks were used to predict statement veracity. Monti et al. (2019) harness Graph Convolutional Networks (GCN) to encapsulate the propagation structure of heterogeneous data. Lu and Li (2020) propose Graph-Aware Co-Attention Network (GCAN) whileZhang et al. (2021a) uses temporal point processes for dynamic engagement modeling.

2.2. Early Misinformation Detection

Misinformation is created to mislead the public and can lead to severe consequences if widely propagated. Therefore, early detection is crucial. Convolutional neural networks (CNN) are used to extract interaction features between temporally sequential posts. Nguyen et al. (2017) propose another CNN-based model to learn latent representations of tweets and predict event veracity by aggregating predictions of all related statements. Liu et al. (2018) find that only a part of comments on social platforms are helpful to classify whether a statement is fake. Then they design an attention-based detection model to evaluate relative importance of comments according to their attention values. They also conclude that the attention mechanism contributes to early misinformation detection. Liu and Wu (2018) use recurrent and convolutional networks to extract propagation features while Shu et al. (2020) leverage weak social supervision from multiple sources for early detection.

2.3. Transferable Patterns

Misinformation detection models may not generalize well across topics and cultures (Horne et al., 2020), therefore transferable patterns have attracted researchers’ interests. Potthast et al. (2018) have analyzed the similarity of the writing style of hyperpartisan news and its connection to misinformation. Castelo et al. (2019) consider the changing discourse of emerging events, and propose a topic-agnostic approach that uses linguistic and web-markup features to identify misinformation. Huang et al. (2020) point out that the word/topic distribution of articles from different media sources may be different, so they develop a synthetic network that focuses on function words and syntactic structures for a more generalized representation. Wang et al. (2018) propose an event adversarial neural network where an event discriminator component aims to remove event-specific features. All these methods aim for features, while our work aims for model parameters that allow for strong generalization.

2.4. Meta-Learning

Fast learning is a hallmark of human intelligence. Due to high efficiency of recognizing shared patterns, meta-learning has been drawing increasing research attention (Lemke et al., 2015). There are different approaches to meta-learning: metric learning-based, optimization-based and probabilistic. The first approach to meta-learning is based on metric learning in the embedding space. Vinyals et al. (2016)propose to match the embeddings of query examples with those of the support ones. Zhou et al. (2018) propose to learn a concept space where the concept matching of categories is conducted. One optimization-based work in (Finn et al., 2017) is model-agnostic meta-learning (MAML) algorithms that learns a proper model initialization. Through a few steps of gradient descent-based fine-tuning, the initialization can be adapted to different tasks. From the Bayesian point of view, probabilistic meta-learning is studied in (Ravi and Beatson, 2018),where the initialization is treated as the prior of model parameter distributions. The posterior distributions are derived from the dataset of each learning task. We customize meta-learning by using topic similarity to adaptively control how the meta parameter will initialize the topic-specific parameter. One concurrent work (Wang et al., [n.d.]) combines MAML and neural processes to deal with misinformation. We differ from this work from two aspects: (1) the proposed task-aware Bayesian meta-learning algorithm can provide uncertainty estimation that is infeasible for optimization-based meta-learning including MAML, and is less prone to be under-fitting than neural processes; and (2) we use topic similarity when adapting global knowledge to tasks, which addresses the concern of dissimilar task distributions in (Wang et al., [n.d.]).

3. Preliminaries

Supervised deep learning usually suffers from the desideratum of a large-scale of annotated dataset. However, labels for some tasks are usually limited. To improve data efficiency and generality of neural networks, there have been research efforts on how to utilize the transferable patterns between different learning tasks. One typical view is to recognize such patterns from a set of tasks that enable efficient learning of new unknown tasks. The few-shot learning problem specifies scenarios where there are limited annotated data per class in classification tasks. Few-shot classification is an instantiation of meta-learning under the paradigm of supervised learning.

In the regime of meta-learning, there are multiple learning tasks drawn from a certain distribution . The key assumption of meta-learning is that tasks from this distribution share common patterns. The goal of meta-learning is to discover such patterns by training a model on multiple tasks from the distribution . To this end, the model is trained during the so-called meta-training step on a meta-training set that includes multiple tasks, and is evaluated during the meta-test step.

Suppose a task is a supervised learning problem, and the training and test datasets of this task can be represented as and respectively. Commonly, there are only a few labeled data points in the training set . The meta-training set is made up of multiple tasks from , i.e., , where denotes the number of tasks used during meta-training. While during meta-test, one or multiple new tasks can be used; for simplicity and without loss of generality, we use one task to denote the meta-test set .

The learning procedure of meta-learning algorithms is split into two steps. First, meta-learning aims to learn the meta-parameter from ,

| (1) |

Using as prior, the second step is to obtain the task-specific model parameter that is able to generalize well on after a few trials,

| (2) |

The meta-parameter can be viewed as the shared feature patterns among tasks from . After slight modifications, builds the internal representation that is suitable for new tasks and produces promising results. Meta-learning allows quick model adaptation from a small number of training data, and continuous adaption as more data becomes available. It is fast and flexible learning and avoid overfitting to new information.

4. Problem formulation

In this section, we first connect few-shot learning to misinformation detection, then extend few-shot learning to a new few-shot-few-clue learning framework that is formulated for efficient detection of misinformation at early stages. Table 1 shows the symbols used in this paper.

| Symbol | Description |

|---|---|

| a task of topic-centered misinformation detection | |

| the distribution of tasks | |

| the total number tasks | |

| a shot consisting of a statement and clues | |

| the total number of shots of the task | |

| the statement in the shot of the task | |

| the set of statements of all the shots of the task | |

| the label in the shot of the task | |

| the set of labels of all the shots of the task | |

| the set of clues in the shot of the task | |

| the -th clue in the shot of the task | |

| the total number of clues in the shot of the task | |

| the set of clues of all the shots of the task | |

| the training dataset for the task | |

| the test dataset for the task | |

| the local model parameter for the task | |

| the global model parameter shared across all tasks |

4.1. Few-Shot Misinformation Detection

Annotating statement veracity is expensive and time-consuming. This leads to the challenge of how to improve efficiency of annotated data. Section 3 discusses the few-shot classification task for a few labeled examples per category, the key of which is to properly recognize transferable patterns among different tasks, e.g., linguistic features and user stances (Potthast et al., 2018; Wang et al., 2018). Thus, the challenge of data efficiency can be tackled by few-shot learning. From this viewpoint, we formulate misinformation detection as a few-shot learning problem. As usual, the concept “few” indicates a specific number such as 5. For a statement and clues , the veracity is predicted by a model parameterized by . The model parameter for different tasks shares the same prior . To improve data efficiency and convenient adaptation to unseen events/topics, misinformation detection models need to extract salient patterns, i.e., , that can be transferred across topics and events.

4.2. Few-Shot-Few-Clue Learning

Besides the limited annotations, early misinformation detection faces a new challenge that has not been considered in previous studies. At the early stage of misinformation emerging on social media, there is limited evidential content. Therefore, it is important to study the shared structure of how to utilize limited evidence.

Inspired by few-shot learning, we formulate the early detection of online misinformation as a new few-shot-few-clue learning framework. As the input of each datum is made up of a statement and its evidential clues, the concept of few-shot-few-clue describes scenarios of limited size of data in two levels: (1) the number of annotated data (consisting of labels, statements and their accompanied clues) and (2) the number of clues per statement. Like few-shot misinformation detection, the concept “few” of few-clue indicates a specific number like 5-clue misinformation detection. Few-shot-few-clue learning differs from conventional few-shot learning because it considers fewness at two levels: shot and clue inside each shot.

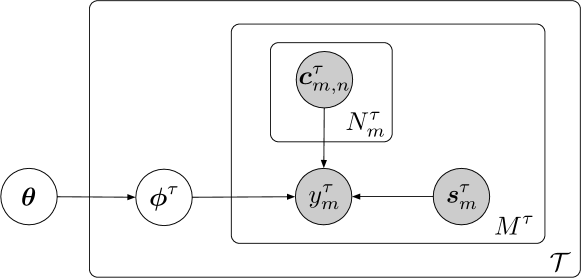

Figure 2 is a graphical representation of variables of interest. Similar to few-shot misinformation detection, the statement veracity is decided by a detection model with: (1) the parameter and (2) the input of the statement content and the corresponding clues . What makes few-shot-few-clue learning different is the decomposition of the clue variable. The variable is decomposed into a set of clues with the set size being . This is in line with our interest in cases when there are limited available clues per statement during the early stages, i.e.,the effect of . Formally, we give definitions of relevant terms with reference to Figure 2.

Definition 4.1.

A task is defined as the topic-centered detection of misinformation on the web.

Definition 4.2.

A shot is defined as a data instance consisting of a label , a statement and its set of evidential clues . denotes the number of shots in the task .

Definition 4.3.

A clue is defined as evidence that contributes to misinformation detection. denotes the number of clues in the shot of the task .

We make three further declarations: (1) misinformation about different events/topics is mapped to different tasks; (2) a statement is in the form of text; (3) a clue is an user engagement. Based on the above definitions, the research problem of few-shot-few-clue applies when there are few statements (small ) and few clues per statement (small ) for the early detection of misinformation regarding.

The meta-parameter can be interpreted as the common patterns shared by detection models across different topics; while the parameter can be interpreted as features specific to certain topics. For early detection when the size of is limited, machine learning models usually perform poorly on ; the meta-knowledge contained in can be helpful.

5. Methodology

After formulating early misinformation detection as a few-shot-few-clue learning framework, we propose a task-aware Bayesian meta-learning algorithm as a solution.

5.1. Base Model

Content feature extractor

We employ the pre-trained Bert model proposed by Devlin et al. (2019) to extract text features. The input to Bert is a sequence of word tokens and the output is a text embedding that summarizes linguistic and semantic features. We chose the base version of the pre-trained Bert for the sake of computation complexity; a fully connected layer converts the Bert output to a lower-dimension embedding. We freeze the parameters of Bert to avoid overfitting; only the fully connected layer is counted in the trainable parameters . For a task with data points, the statement of the -th () datum is input to a function to obtain the embedding vector:

| (3) |

Social context encoder

We consider a set of clues in the temporal order , where denotes a clue. For the social context encoder, we use the state-of-the-art GCAN (Lu and Li, 2020) model that extracts user features from their profiles and social engagements, and uses CNN and RNN to generate embeddings of repost propagation. A graph is constructed to describe the engagements between users, and a GCN is used to learn the graph representation. We use the function to denote this encoder:

| (4) |

The output is a concatenation of source-interaction co-attention embeddings, source-propagation embeddings, and propagation graph embeddings. All trainable GCAN parameters are counted in .

Aggregator

After obtaining and , we investigate how to incorporate clues to predict statement veracity. Veracity is predicted by combining the statement and clue information via an aggregator . We implement it as a multi-layer perceptron (MLP). Basically, takes the concatenation of the statement embedding and the social context embedding as inputs,

| (5) |

All of the three modules , and are parameterized by the topic-specific parameter . Trainable MLP parameters are counted in .

5.2. Task-Aware Bayesian Meta-Learning

To efficiently learn the model parameters, we design a task-aware Bayesian meta-learning algorithm. One concern of meta-learning’s application to misinformation detection is that tasks might be dissimilar and meta-parameters are not transferable. To address this concern, we introduce topic similarity to compute semantic similarity between topics, which determines how much we rely on the meta-parameter to learn the task-specific parameter . We expect depends heavily on when a topic is similar to topics in the meta-training set.

Specifically, we compute a topic embedding using a word embedding (based on Word2Vec (Mikolov et al., 2013)) and its weight (the normalized frequency of a word in the topic), where indicates the -th word in the vocabulary :

| (6) |

For all topics in the meta-training set, we compute an embedding:

| (7) |

where and are the sizes of the dataset and the meta-training set respectively. To avoid overfitting of for a topic , we introduce an adaptive parameter based on topic similarity:

| (8) |

where is the cosine function. Then we use to determine the initialization of the task-specified model parameter :

| (9) |

where is randomly sampled from a normal distribution. When is more similar to meta-training set, depends more on learnt from the meta-training set, otherwise there will be more randomness brought by .

The task-aware Bayesian meta-learning algorithm can provide two benefits: (1) uncertainty estimation that is infeasible for alternative optimization-based meta-learning including MAML, and is less prone to be under-fitting than neural processes; and (2) topic similarity when adapting global knowledge to tasks, which addresses the concern of dissimilar task distributions in (Wang et al., [n.d.]).

6. Optimization

6.1. Evidence Lower Bound

To optimize the task-aware Bayesian meta-learning algorithm with variational inference, we first need to derive the Evidence Lower BOund (ELBO) of the data likelihood:

| (10) |

Derivation of Eq. 10 can be found in Appendix A. Here, and are the variational parameters (such as a mean and standard deviation of each weight) of the approximate posteriors over the global latent variables and the local latent variables . If we maintain distinct variational parameters , each of which indexes a distribution over task-specific model parameters , the number of variational parameters is in the scale of .

6.2. Scaling Meta-Learning with Amortized Variational Inference

To scale the optimization process, we adopt the Amortized Variational Inference (AVI) for meta-learning proposed by Ravi and Beatson (2018) to infer the posterior distributions of and . As shown by Model-Agnostic Meta-Learning (Finn et al., 2017), a proper initialization can produce the decent task-specific parameters. Hence, from a global initialization , we produce the variational parameter by conducting several steps of gradient descent.

First, we need to specify the loss function on ,

| (11) |

Then, we modify the procedure of stochastic gradient descent, , based on the adaptive parameter , to produce from the the global initialization :

| (12) |

is the learning rate and is the number of steps of gradient descents that is determined by the adaptive parameter . and denote the lower and upper bounds of , and is a ceil function. Hereby . With this form of the variational distribution, serves as both the global initialization of local variational parameters and the parameters of the prior .

Finally, we estimate . We let be a dirac delta function , where is the solution to the optimization problem. This conduction removes the need for global variational parameters and simplifies the optimization problem of Eq. 10 to

| (13) |

Algorithm 1 provides the pseudo code for the task-aware Bayesian meta-training algorithm.

| Dataset | Twitter 15 | Twitter 16 | Pheme |

|---|---|---|---|

| # Source Tweets | 742 | 412 | 5,152 |

| # True | 372 | 205 | 3,142 |

| # False | 370 | 207 | 2,010 |

| # Users | 190,868 | 115,036 | 36,647 |

| avg. retweets per story | 292.19 | 208.70 | 64.24 |

7. Experiments

This section presents the experiments to evaluate the effectiveness of our task-aware Bayesian meta-learning (TABML 111The source code of TABML is publicly available from: https://github.com/BML2021/bml.) algorithm. The research questions that guide the remainder of the paper are:

-

RQ1

How is the misinformation detection performance of the proposed TABML algorithm compared to state-of-the-art misinformation detection baselines when full life-cycle data are available?

-

RQ2

What is the effect of the number of annotated statements on the performance of TABML and baselines in the setting of few-shot misinformation detection?

-

RQ3

What is the effect of the number of clues per statement on the performance of TABML and baselines in the setting of few-clue misinformation detection?

-

RQ4

Ablation study: what is the influence of the variable on the model’s performance?

| Model | Twitter15 | Twitter16 | Pheme | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Rec(%) | Prec(%) | Acc(%) | Rec(%) | Prec(%) | Acc(%) | Rec(%) | Prec(%) | Acc(%) | |

| RFC | 61.29 | 57.18 | 61.85 | 65.87 | 73.15 | 59.20 | 56.27 | 61.14 | 60.53 |

| CRNN | 53.05 | 52.96 | 66.19 | 64.33 | 65.19 | 65.76 | 63.14 | 59.98 | 61.01 |

| CSI | 68.67 | 69.91 | 65.37 | 63.09 | 63.21 | 76.12 | 64.66 | 62.33 | 63.12 |

| dFEND | 66.11 | 65.84 | 65.83 | 63.84 | 63.65 | 76.16 | 61.45 | 67.55 | 68.33 |

| GCAN | 82.99 | 81.37 | 81.41 | 73.47 | 81.61 | 82.52 | 58.42 | 63.26 | 70.41 |

| EANN | 64.56 | 82.51 | 71.51 | 65.23 | 81.04 | 79.05 | 65.45 | 66.36 | 69.79 |

| MetaFEND | 78.35 | 81.51 | 82.44 | 76.58 | 73.14 | 79.11 | 70.54 | 73.24 | 70.04 |

| Ours | 82.621.71 | 83.861.26 | 85.651.93 | 80.772.02 | 82.323.16 | 86.141.41 | 71.221.21 | 76.221.47 | 75.652.43 |

For evaluation purposes, we use three well-established benchmark datasets, i.e., Twitter15, Twitter16 (Ma et al., 2018a) and Pheme (Zhang et al., 2019b). Statement veracity is manually annotated by fact-checkers. These datasets also provide rich social context on top of the original statements: (1) users that are involved in the propagation of statements, and (2) social engagements in the temporal order. Table 2 summarizes statistics of these datasets. For datasets without split of topics/events, including Twitter15 and Twitter16, we use Tweeter-LDA (Diao et al., 2012), an LDA variant widely used for short and noisy tweets, to determine topic clusters as well as important words with their weights. The Pheme dataset has been split based on events by its builders, so we compute word frequencies per topic as their weights, and keep the most frequent 10 words. Among these clusters, we assign of them into a meta-training, and the rest into the meta-test subset to ensure there is no data overlap in subsets. From each cluster in Twitter15 and Twitter16, we randomly sample 6 true shots and 6 false shots to make a data batch; the average number of clue in a shot is 30. This can be denoted as 2-way-6-shot-30-clue learning. Similarly, the learning process on Pheme can be denoted as 2-way-6-shot-25-clue learning. We set the inner and outer learning rate and to be and , respectively. The lower and upper bound of update steps and are 2 and 8 respectively, and the dropout rate is 0.6 to avoid overfitting.

7.1. Baselines

We compare the TABML algorithm and the following state-of-the-art models. RFC (Kwon et al., 2017) is a random forest with features from the source tweets and engaged user profiles. CRNN (Liu and Wu, 2018) combines convolutional and recurrent neural networks to extract features from engaged users and retweet texts. CSI (Ruchansky et al., 2017) incorporates relevant articles and analyses group behaviour of engaged users. dEFEND (Shu et al., 2019) uses a co-attention mechanism to study source claims and user features. GCAN (Lu and Li, 2020) utilises the co-attention graph networks to encapsulate the propagation structure of heterogeneous data. EANN (Wang et al., 2018) contains a event discriminator that removes event-specific features and discovers the common pattern among events. MetaFEND (Wang et al., [n.d.]) is a meta neural process that combines meta-learning and neural processes to detect few-shot misinformation.

8. Results and Analysis

8.1. Results of Topic Clustering

We first examine the effectiveness of Twitter-LDA (Diao et al., 2012) on determining the topic cluster that each tweet belongs to. We present the results of topic clustering in the form of word cloud on Twitter15 and Twitter16 in Figure 5. From this figure, it is clear that each topic cluster focuses well on a social event. For instance, the first topic of Twitter15 is about the “MH17” accident of Malaysia Airline, and the second topic of Twitter16 is more about politics. Moreover, there are sill overlap words between clusters, which indicates similarity in terms of semantics. This further verifies our assumption of similar tasks and serves as the basis of using semantic similarity to modify the Bayesian meta-learning based misinformation detection (that is, Equation 9).

The Pheme dataset has clearly split statement tweets into various events, hence there is no need to do topic clustering. These events are “Charlie Hebdo”, “Sydney siege”, “Ferguson”, “Ottawa shooting”, “Germanwings-crash”, “Putin missing”, “Prince Toronto”, “Gurlit” and “Ebola Essien”.

8.2. Performances under all Available Statements and Clues (RQ1)

Subsequently, we aim to evaluate the effectiveness of the proposed task-aware Bayesian meta-learning algorithm with all available statements and clues. Table 3 summarizes the four evaluation metrics of all models on the three datasets. The reported numbers in Table 3 are the means and standard deviations of TABML model running with different weights sampled from the same distribution that is learned at the meta-training phase. Our model outperforms all the baselines on most of all the metrics across the three datasets, achieving a performance improvement of around 4% in terms of the accuracy. As for recall and precision, our model obtains the best values in most cases or the second best with a fairly minor gap in other cases. On the larger Pheme dataset where state-of-the-art GCAN has severely discounted performance, our model enjoys a more significant improvement. Compared to the baseline with transferable patterns, i.e., EANN and MetaFEND, our model outperforms them in terms of all metrics over three datasets. Therefore, our model is able to generalize better to new topics.

8.3. Performances under Limited Available Statements (RQ2)

Annotating statement veracity is expensive and time-consuming. To understand the effect of the number of training statements, we conduct experiments using a different number of annotated statements. Specifically, we use five different percentages (i.e., 60%, 70%, 80%, 90% and 100%) of all training data to train the models. From each topic cluster in the dataset Twitter15 and Twitter16, we still randomly sample 6 true and 6 false shots to make a batch, with each shot containing all available clues. So it is still 2-way-6-shot-30-clue learning on Twitter15 and Twitter16. In the same way, it is 2-way-6-shot-25-clue learning on Pheme. Evaluation performance is reported in Figure 6. We have three observations: First, it is clear that the size of training dataset exerts a significant effect on the performance of all models. Specifically, the performance increases as there are more training data in most cases. Therefore, the most effective way to improve misinformation detection model is by increasing the number of annotated statements. Second, our TABML is able to outperform the state-of-the-art baselines with different numbers of shots. Third, our TABML gains more stable performance improvement compared with baselines when more training data are available.

8.4. Performances under Few Clues (RQ3)

Next, we study early misinformation detection when there are a limited number of clues per statement. From each topic cluster, we fix the number of shots at 6. Then we change the number of clues in each shot, where can be [5, 10, 15, 20, 25, 30] and [5, 10, 15, 20, 25] for Twitter datasets and Pheme dataset respectively. Figure 7 illustrates how the detection performance changes over different numbers of clues. Obviously, our proposed TABML consistently shows better performance when varying the clues. Another obvious finding is that, despite of the overall increasing accuracy with more clues, performance is improvement with different pace. This indicates that some clues hinder veracity prediction, and should be excluded. We leave this in the future work. Baseline models show greater variances, which means instability and lower performance, while our TABML has more stability. In fact, Figure 7 shows TABML is stable enough to deal with few-clue problems.

8.5. Ablation Study (RQ4)

Finally, we study how the adaptive parameter affects the performance of our model. Figure 8 shows the comparative result. As we can see, our TABML model with the adaptive parameter always outperforms the counterpart without across three datasets no matter what metric it is, leading to improvements. The result illustrates that TABML with can adaptively learn about the meta information among the topics and then effectively apply them into the learning of new tasks, avoiding the phenomenon of overfitting and the reduced performance in the learning of new tasks, which always contain the out of distributional training data.

9. Conclusion

In this paper, we have proposed TABML, a model for misinformation detection at early stages. We have investigated misinformation detection with limited statements and clues. Considering two levels of limited resources, we formulate a new few-shot-few-clue learning task. Solving this problem needs to recognize the shared patterns among different events and topics. We then develop a task-aware Bayesian meta-learning algorithm to discover such patterns. An amortized variational inference method is derived for scalable parameter optimization. Experimental results confirm the superiority of our algorithm against the state-of-the-art methods for early misinformation detection. The findings highlight the importance of shared patterns among different events/topics and provide insights into misinformation detection in low-resource languages.

Appendix A Appendix: Derivation of ELBO

The posterior distributions of meta-parameters and task-specific parameters in this paper are intractable. To approximate the these posterior distributions, we resort to the variational inference (VI) method. We present a detailed derivation of the objective function of Evidence Lower Bound (ELBO) in the VI method, i.e., Eq. 10.

References

- (1)

- Allcott and Gentzkow (2017) Hunt Allcott and Matthew Gentzkow. 2017. Social media and fake news in the 2016 election. Journal of Economic Perspectives 31, 2 (2017), 211–36.

- Castelo et al. (2019) Sonia Castelo, Thais Almeida, Anas Elghafari, Aécio Santos, Kien Pham, Eduardo Nakamura, and Juliana Freire. 2019. A topic-agnostic approach for identifying fake news pages. In Companion Proceedings of The Web Conference. 975–980.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In NAACL. 4171–4186.

- Diao et al. (2012) Qiming Diao, Jing Jiang, Feida Zhu, and Ee Peng LIM. 2012. Finding bursty topics from microblogs. Acl.

- Finn et al. (2017) Chelsea Finn, Pieter Abbeel, and Sergey Levine. 2017. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the 34th International Conference on Machine Learning. 1126–1135.

- Floridi (2018) Luciano Floridi. 2018. Artificial intelligence, deepfakes and a future of ectypes. Philosophy & Technology 31, 3 (2018), 317–321.

- Horne et al. (2020) Benjamin D Horne, Maurício Gruppi, and Sibel Adalı. 2020. Do All Good Actors Look The Same? Exploring News Veracity Detection Across The US and The UK. arXiv preprint arXiv:2006.01211 (2020).

- Huang et al. (2020) Yen-Hao Huang, Ting-Wei Liu, Ssu-Rui Lee, Fernando Henrique Calderon Alvarado, and Yi-Shin Chen. 2020. Conquering Cross-source Failure for News Credibility: Learning Generalizable Representations beyond Content Embedding. In Proceedings of The Web Conference 2020. 774–784.

- Khattar et al. (2019) Dhruv Khattar, Jaipal Singh Goud, Manish Gupta, and Vasudeva Varma. 2019. Mvae: Multimodal variational autoencoder for fake news detection. In The World Wide Web Conference. 2915–2921.

- Kwon et al. (2017) Sejeong Kwon, Meeyoung Cha, and Kyomin Jung. 2017. Rumor detection over varying time windows. PloS one 12, 1 (2017), e0168344.

- Kwon et al. (2013) Sejeong Kwon, Meeyoung Cha, Kyomin Jung, Wei Chen, et al. 2013. Prominent features of rumor propagation in online social media. In International Conference on Data Mining. IEEE.

- Lemke et al. (2015) Christiane Lemke, Marcin Budka, and Bogdan Gabrys. 2015. Meta learning: a survey of trends and technologies. Artificial intelligence review 44, 1 (2015).

- Liu et al. (2018) Qiang Liu, Feng Yu, Shu Wu, and Liang Wang. 2018. Mining significant microblogs for misinformation identification: an attention-based approach. ACM Transactions on Intelligent Systems and Technology (TIST) 9, 5 (2018), 1–20.

- Liu and Wu (2018) Yang Liu and Yi-Fang Brook Wu. 2018. Early detection of fake news on social media through propagation path classification with recurrent and convolutional networks. In Thirty-Second AAAI Conference on Artificial Intelligence.

- Lu and Li (2020) Yi-Ju Lu and Cheng-Te Li. 2020. GCAN: Graph-aware Co-Attention Networks for Explainable Fake News Detection on Social Media. arXiv preprint arXiv:2004.11648 (2020).

- Ma et al. (2018a) Jing Ma, Wei Gao, and Kam-Fai Wong. 2018a. Detect rumor and stance jointly by neural multi-task learning. In Companion Proceedings of the The Web Conference 2018. 585–593.

- Ma et al. (2018b) Jing Ma, Wei Gao, and Kam-Fai Wong. 2018b. Rumor Detection on Twitter with Tree-structured Recursive Neural Networks. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics.

- Mikolov et al. (2013) Tomas Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. 2013. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781 (2013).

- Monti et al. (2019) Federico Monti, Fabrizio Frasca, Davide Eynard, Damon Mannion, and Michael M Bronstein. 2019. Fake news detection on social media using geometric deep learning. arXiv preprint arXiv:1902.06673 (2019).

- Nguyen et al. (2017) Tu Ngoc Nguyen, Cheng Li, and Claudia Niederée. 2017. On early-stage debunking rumors on twitter: Leveraging the wisdom of weak learners. In International Conference on Social Informatics. Springer, 141–158.

- Potthast et al. (2018) Martin Potthast, Johannes Kiesel, Kevin Reinartz, Janek Bevendorff, and Benno Stein. 2018. A Stylometric Inquiry into Hyperpartisan and Fake News. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 231–240.

- Ravi and Beatson (2018) Sachin Ravi and Alex Beatson. 2018. Amortized bayesian meta-learning. (2018).

- Ruchansky et al. (2017) Natali Ruchansky, Sungyong Seo, and Yan Liu. 2017. Csi: A hybrid deep model for fake news detection. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management. ACM, 797–806.

- Shu et al. (2019) Kai Shu, Limeng Cui, Suhang Wang, Dongwon Lee, and Huan Liu. 2019. defend: Explainable fake news detection. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 395–405.

- Shu et al. (2020) Kai Shu, Guoqing Zheng, Yichuan Li, Subhabrata Mukherjee, Ahmed Hassan Awadallah, Scott Ruston, and Huan Liu. 2020. Leveraging Multi-Source Weak Social Supervision for Early Detection of Fake News. arXiv preprint arXiv:2004.01732 (2020).

- Vinyals et al. (2016) Oriol Vinyals, Charles Blundell, Timothy Lillicrap, Daan Wierstra, et al. 2016. Matching networks for one shot learning. In Advances in neural information processing systems. 3630–3638.

- Wang et al. (2018) Yaqing Wang, Fenglong Ma, Zhiwei Jin, Ye Yuan, Guangxu Xun, Kishlay Jha, Lu Su, and Jing Gao. 2018. Eann: Event adversarial neural networks for multi-modal fake news detection. In Proceedings of the 24th acm sigkdd international conference on knowledge discovery & data mining. 849–857.

- Wang et al. ([n.d.]) Yaqing Wang, Fenglong Ma, Haoyu Wang, Kishlay Jha, and Jing Gao. [n.d.]. Multi-modal Emergent Fake News Detection via Meta Neural Process Networks. ([n. d.]).

- Wang et al. (2020) Yaqing Wang, Weifeng Yang, Fenglong Ma, Jin Xu, Bin Zhong, Qiang Deng, and Jing Gao. 2020. Weak supervision for fake news detection via reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence. 516–523.

- Weiss et al. (2016) Karl Weiss, Taghi M Khoshgoftaar, and DingDing Wang. 2016. A survey of transfer learning. Journal of Big data 3, 1 (2016), 9.

- Yang et al. (2019) Shuo Yang, Kai Shu, Suhang Wang, Renjie Gu, Fan Wu, and Huan Liu. 2019. Unsupervised fake news detection on social media: A generative approach. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 33. 5644–5651.

- Yang et al. (2018) Yang Yang, Lei Zheng, Jiawei Zhang, Qingcai Cui, Zhoujun Li, and Philip S Yu. 2018. TI-CNN: Convolutional Neural Networks for Fake News Detection. arXiv preprint arXiv:1806.00749 (2018).

- Zhang et al. (2021a) Qiang Zhang, Jonathan Cook, and Emine Yilmaz. 2021a. Detecting and Forecasting Misinformation via Temporal and Geometric Propagation Patterns.. In ECIR (2). 455–462.

- Zhang et al. (2019a) Qiang Zhang, Shangsong Liang, Aldo Lipani, Zhaochun Ren, and Emine Yilmaz. 2019a. From Stances’ Imbalance to Their HierarchicalRepresentation and Detection. In The World Wide Web Conference. 2323–2332.

- Zhang et al. (2018a) Qiang Zhang, Shangsong Liang, and Emine Yilmaz. 2018a. Variational self-attention model for sentence representation. arXiv preprint arXiv:1812.11559 (2018).

- Zhang et al. (2020) Qiang Zhang, Aldo Lipani, Omer Kirnap, and Emine Yilmaz. 2020. Self-Attentive Hawkes Process. ICML.

- Zhang et al. (2019b) Qiang Zhang, Aldo Lipani, Shangsong Liang, and Emine Yilmaz. 2019b. Reply-Aided Detection of Misinformation via Bayesian Deep Learning. In The World Wide Web Conference. ACM, 2333–2343.

- Zhang et al. (2021b) Qiang Zhang, Aldo Lipani, and Emine Yilmaz. 2021b. Learning Neural Point Processes with Latent Graphs. In Proceedings of the Web Conference 2021. 1495–1505.

- Zhang et al. (2018b) Qiang Zhang, Emine Yilmaz, and Shangsong Liang. 2018b. Ranking-based Method for News Stance Detection. In Companion Proceedings of the The Web Conference 2018. ACM Press.

- Zhou et al. (2018) Fengwei Zhou, Bin Wu, and Zhenguo Li. 2018. Deep meta-learning: Learning to learn in the concept space. arXiv preprint arXiv:1802.03596 (2018).