Learning to Affiliate: Mutual Centralized Learning for Few-shot Classification

Abstract

Few-shot learning (FSL) aims to learn a classifier that can be easily adapted to accommodate new tasks, given only a few examples. To handle the limited-data in few-shot regimes, recent methods tend to collectively use a set of local features to densely represent an image instead of using a mixed global feature. They generally explore a unidirectional paradigm, e.g., finding the nearest support feature for every query feature and aggregating local matches for a joint classification. In this paper, we propose a novel Mutual Centralized Learning (MCL) to fully affiliate these two disjoint dense features sets in a bidirectional paradigm. We first associate each local feature with a particle that can bidirectionally random walk in discrete feature space. To estimate the class probability, we propose the dense features’ accessibility that measures the expected number of visits to the dense features of that class in a Markov process. We relate our method to learning a centrality on an affiliation network and demonstrate its capability to be plugged in existing methods by highlighting centralized local features. Experiments show that our method achieves the new state-of-the-art.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x1.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x2.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x3.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x4.png) |

| (a) ) | (b) | (c) | (d) |

1 Introduction

Few-shot classification aims to learn a classifier that can be readily adapted to novel classes given just a small number of labeled instances. To address this problem, a line of previous literature adopts metric-based methods [30, 26, 28] that learn a global image representation in an appropriate feature space and use a distance metric to predict their labels.

Recent approaches [35, 16, 18] have demonstrated that the significant intra-class variations would inevitably drive the image-level embedding from the same category far apart in a given metric space under low-data regimes. In contrast, densely representative local features can provide transferrable information across categories that have shown promising performances in the few-shot scenario. Among those methods illustrated in Figure 1, DN4 [16] finds the nearest neighbor support feature for each query feature and accumulates all the local matches in a Naive-Bayes way to represent an image-to-class similarity; DeepEMD [35] uses the earth mover distance to compare the complex structured representations composed of local features. FRN [33] reconstructs each of query dense features with a linear combination of support dense features in a latent space and use the reconstruction distance to measure the image-to-class relevance. They all follow a unidirectional query-to-support paradigm, whose evident character is an accumulation over all query features followed by a softmax probability.

In this paper, we incorporate an extra support-to-query connection as a complement to thoroughly affiliate two disjoint sets of dense features. The potential offered by this bidirectional paradigm stems from the intuition that, except for using query features to find related support features, it is also plausible to estimate the task-relevance of query features according to the support features. Specifically, we associate dense features with particles that could bidirectionally random walk to the opposite dense features set in the discrete feature space. The prediction probability for each class is then estimated by the dense features’ accessibility, i.e., the expected number of visits to the dense features of that class in a time-homogeneous Markov process.

The contributions are as follows: (1) We propose to learn mutual affiliations between the query and support dense features instead of following the unidirectional query-to-support paradigm in FSL. (2) We introduce the dense features’ accessibility to FSL and demonstrate that traditional transductive methods could be easily adapted to the inductive setting if we treat dense features from a single query image as a set of unlabeled data. (3) We propose a novel bidirectional random walk based method in FSL and draw its connection to the single-mode eigenvector centrality of an affiliation network. (4) The underlying centrality investigated in this work can be plugged in existing global feature based methods like ProtoNet and RelationNet by highlighting task-centralized local features instead of global average pooling.

2 Related Work

Dense Feature based FSL. Those methods focus on learning image-to-image similarities by encoding each input into a set of dense feature vectors (or a feature map). Among them, DC [18] proposes to predict for each local features and average their probabilities. DeepEMD [35] adopts the earth mover’s distance to compute an image-to-class distance. DN4 [16] uses the top-k nearest neighbors in a Naive-Bayes way to represent image-level similarities. ADM [15] and SAML [12] use extra network layers for dense classifications. FRN [33] reconstructs the dense query features from support features of a given class to predict their relevance. Lifchitz et al. [17] propagates labels from support sets to query features. MCL is also among the family of dense feature based frameworks. A major difference is that we consider the mutual affiliations between the query and support features instead of the unidirectional one in previous work.

Graphical models in FSL. Another branch of methods learns graphs on FSL. Among them, [11] build graph neural network (GNN) from a collection of images. [9] use the degree-centrality as part of graph features to adjust the weight of graph vertex in transductive FSL. Our proposed feature accessibility of bidirectional random walks is well-related to the eigenvector centrality. Differently, we use the single-mode centrality of bipartite data as a novel criterion for the inductive classification.

From Transductive to Inductive. TPN [20] first introduces the well-known label propagation [36] to the transductive FSL that transfers information from labeled data to unlabeled one and shows substantial improvements over inductive methods. Lifchitz et al. [17] first demonstrates it feasible to adopt label for inductive FSL by treating the query dense features as numerous unlabeled data. Different from unidirectional label propagation, our MCL further considers the set of query and support features as bipartite data where the self-reinforcements (i.e., query-to-query and support-to-support random walks) are avoided.

3 Method

3.1 Formulation of Dense Features based FSL

In a -way -shot episode, the goal is to predict a class label for a single query image given support classes, each of which contains support images for reference.

We first encode each input image into number of -dimensional dense features . We use to denote dense features from a query image. Inspired by prototype learning [26], we average feature vectors from the same spatial locations of different images into support dense features for each category.

We use the bold font (e.g., and ) to denote a set of feature vectors and use the normal font (e.g., and ) to denote a single feature vector. We use to represent the union set of dense features from all supporting classes. The cardinalities for the sets , and are , and respectively.

The target of dense features based FSL is to find the label of a query image, given its dense query features and the union set of dense support features from all categories.

3.2 Bidirectional Random Walks on Dense Features

We start by using the cosine similarity to measure the closeness between the two feature vectors where is the Frobenius inner product.

Given two dense features sets and , we learn inter similarity matrix between those two disjoint sets where the entry of indicates the similarity between vectors and .

We build query-to-support affiliations from every query feature to all support features by a random walk probability . The probability matrix from all query to support features can be written by

| (1) |

where is the element-wise exponential function, is a scaling temperature for a softmax like probability and is a diagonal normalization matrix with its -value to be the sum of the -th column of . The subscript serves both as the matrix size and as an indication for the feature vector with which it is associated. For example, is of size and it includes random walk probabilities from query features to support features .

Likewise, we connect every support feature to all by a similar probability matrix:

| (2) |

where is the analogous diagonal matrix with its -value to be the sum of the -th column of .

It should be noted that we use two different scaling parameters since is a non-square matrix due to the different cardinalities of dense feature sets and . Although the matrices and would be symmetric if we ignore their different scaling parameters, the probability matrices , are both column-normalized by different , respectively and are thus directional.

To learn the mutual affiliations between the two dense feature sets, we first associate each feature vector with a particle that forms a discrete feature space . Next, we let particles bidirectionally random walk between particles in that space according to and (i.e., particles at query features set are only allowed to move to the support features set, and vice versa). We assume it time-homogeneous in a Markov process and the random walk probability from to for all , in can be formulated by:

|

|

(3) |

To write it in matrix, we can obtain the Markov transition matrix :

| (4) |

that consists of the column-normalized and .

It can be proved (in supplementary) that, after infinity times of bidirectional random walks, the Markov chain with anti-diagonal reaches the periodic stationary distribution:

| (5) | ||||

where , are the stationary distributions with equations and respectively. is a vector of ones with different length indicated by its subscript.

The motivation of using these stationary distributions to encode mutual affiliations is straightforward: support features would be frequently visited in the long times of bidirectional random walk if they shared the same local characteristics with the query ones. In other words, the support class that owns the most mutual affiliations with querying image would be predicted due to their mutual closeness.

3.3 Classification by Dense Features’ Accessibility

To formulate it in FSL, we first assume particles are uniformly distributed in the discrete finite space . Then, we use the expected number of visits from all particles to support features of class in the long times of bidirectional random walks to measure the amount of local characteristics that class owns for the query image:

|

|

(6) |

where is an indicator function that equals 1 if its argument is true and zero otherwise. indicates the entry of the matrix from particle to particle and indicates the entry of the vector that associated to feature .

We give the derivation when the Markov chain length is even in the first equality of Eqn.(LABEL:eq:MEL) and prove that the odd reaches the same result in the supplementary material. The second equality is from the absorbing property of the periodic Markov chain where the power of matrix gets saturated to Eqn.(5) for the increasing . Since is the stationary distribution of column-stochastic under the probability constraint , is a valid distribution for classifications.

3.4 Reinterpretation as a Graph Centrality

If we interpret the random walk probability matrix as an adjacency matrix of a directed bipartite graph , we find that the dense features’ accessibility in the time-homogeneous bidirectional random walk would be equivalent to learning a single-mode eigenvector centrality on the graph . The bipartite graph is also called the affiliation network in social network analysis [3, 4, 5] that models two types of entities "actors" and "society" related by affiliation of the former in the latter. The concept of centrality in social network analysis is generally used to investigate the acquaintanceships among people that often stem from one or more shared affiliations.

To see this in graph theory, we start with a brief overview of the eigenvector centrality that reflects score of vertex for both and in the affiliation network with adjacency :

| (7) | ||||

where is a set of neighbors for the given vertex and is a constant.

With a small rearrangement, Eqn.(7) can be rewritten in vector notation with an eigenvector equation . The additional requirement that all the entries of the eigenvector be non-negative implies (by the Perron–Frobenius theorem [21]) that only the greatest eigenvalue results in the desired centrality measure. For the column-stochastic adjacency matrix in our method, the largest eigenvalue is 1.

Single-mode centrality [5] is a special form of graph centrality that measures the extent to which nodes in one vertex set are relatively central only to other nodes in the same vertex set on bipartite graph. For example, the single-mode centrality for different in is defined by . Lemma 1 (proved in supplementary material) shows that dense features’ accessibility of bidirectional random walks in Eqn.(LABEL:eq:MEL) is equivalent to the single-mode eigenvector centrality of support set on bipartite data.

Lemma 1.

Assume is the affiliation network of bipartite data , with the adjacency matrix defined by the anti-diagonal Markov transition matrix in Eqn.(4). The single-mode eigenvector centrality of vertex set is equivalent to the dense features’ accessibility on in a time-homogeneous Markov process.

Based on this reinterpretation, it is straightforward to introduce the attenuation (damping) factor in Markov bidirectional random walks motivated by the Katz centrality [13], where connections made with distant neighbors are penalized by . The dense features’ accessibility with attenuation factor for few-shot classifications is defined by

| (8) | ||||

where is a constant for a valid distribution.

Although we simply consider the single-mode centrality on for an end-to-end classification purpose, it is also beneficial to learn its conjugate centrality on in the affiliation network (by the analogous in Lemma 1). We will show that both single-mode centralities on two dense features sets could serve as plug-and-play for finding centralized local characteristics in existing methods (hence the term Mutual Centralized Learning, MCL).

3.5 End-to-End Training by Katz Approximation

The algorithm of Mutual Centralized Learning (MCL) in Eqn.(LABEL:eq:MEL) involves the computation of Markov stationary distribution with equation . Theoretically, the is the eigenvector of with the eigenvalue 1 under a probability constraint .

The above constraints could lead to a solution of by solving the overdetermined linear system

| (9) |

where is an identity matrix and is a vector of zeros. Although we can solve it by various methods like pseudo-inverse or QR decomposition and back substitution, it is empirically found time-consuming as these operators are either numerically unstable or not paralleled well in modern deep-learning packages.

To handle it, we present an alternative solution based on Lemma 1 as follows: We first calculate the Katz centrality by its closed-form solution [13]:

| (10) |

and then solve single-mode Katz centrality in Eqn.(8) by the definition of the single-mode centrality:

| (11) |

Since Katz centrality degrades to the eigenvector centrality when approaches 1 [13] (indicates no attenuation), we can obtain the approximation of eigenvector centrality by a large :

| (12) | ||||

and the analogous single-mode eigenvector centrality in Eqn.(LABEL:eq:MEL) can then be approximated by:

| (13) |

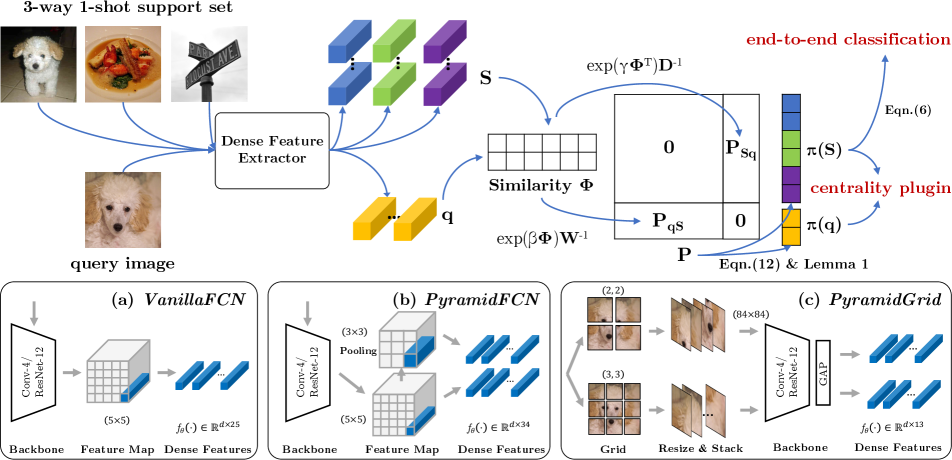

We use the negative log-likelihood loss to update parameters in different feature extractors and the whole picture of MCL for few-shot classifications is shown in Figure 2. We provide the detailed algorithm/pseudo-codes in the supplementary material for reference.

3.6 Plugin for Global Feature based FSL.

Since the underlying centrality learned by MCL reveals the different importance of local features on an affiliation network, it is thus plausible to plug it into global feature based methods by replacing their native global average pooling (GAP) with the centrality weighted pooling as follows:

a. Extract dense features for each input image.

b. Compute the eigenvector centrality by Eqn.(12).

c. Compute and according to definition of the single-mode centrality in Lemma 1.

d. Use as weights on query dense features and weighted accumulate them to a single feature vector.

e. Use the class-wise normalized centrality from to accumulate dense features for each supporting class.

Once we get the centrality weighted features for the query image and support classes, it is straightforward to perform traditional global feature based methods like before.

4 Experiments

We conduct experiments on two widely-used FSL datasets and three fine-grained datasets: (1) miniImageNet [30] contains 600 images per class over 100 classes. We follow the split used by [23] that takes 64, 16 and 20 classes for train/val/test respectively; (2) tieredImageNet [22] is much larger compared to miniImageNet with 608 classes. The 351, 97 and 160 classes are used for train/val/test respectively. (3) CUB [31] consists 11,788 images from 200 bird classes. 100/50/50 classes are used for train/val/test and each image is first cropped to a human-annotated bounding box. (4) meta-iNat [32] is a benchmark of animal species in the wild. We follow the same class split proposed by [32] that uses 908/227 classes for training/evaluation respectively. (5) tiered-meta-iNat [32] is a more difficult version of meta-iNat where a large domain gap is introduced. The 354 test classes are populated by insects and arachnids, while the remaining 781 classes form the training set.

Backbone networks. We conduct experiments with both widely-used four layer convolutional Conv-4 [30] and deep ResNet-12 [27] backbones. As is commonly implemented in the state-of-the-art FSL literature, we adopt a pre-training stage for the ResNet-12 before the episode meta-training while directly meta-train from scratch for the simple Conv-4.

Dense feature extractor . We explore three dense feature extractors in our experiments as shown in Figure 2: (1) VanillaFCN simply treats the feature map output of fully convolutional network as dense features. (2) PyramidFCN uses an extra adaptative average pooling layer to obtain 34 dense features for each image. (3) PyramidGrid crops the image evenly into the grid of size and encodes each grid cell to a feature vector individually. The feature vectors from all the cells constitute the set of dense features.

| Method | Conv-4 | ResNet-12 | |||||||

| miniImageNet | tieredImageNet | miniImageNet | tieredImageNet | ||||||

| 1-shot | 5-shot | 1-shot | 5-shot | 1-shot | 5-shot | 1-shot | 5-shot | ||

| Global features | DSN [25] | 51.78 | 68.99 | 53.22 | 71.06 | 62.64 | 78.83 | 67.39 | 82.85 |

| MetaOptNet [14] | 52.87 | 68.76 | 54.71 | 71.76 | 62.64 | 78.63 | 65.99 | 81.56 | |

| Negative Margin [19] | 52.84 | 70.41 | - | - | 63.85 | 81.57 | - | - | |

| FEAT [34] | 55.15 | 71.61 | - | - | 66.78 | 82.05 | 70.80 | 84.79 | |

| Meta-Baseline [7] | - | - | - | - | 63.17 | 79.26 | 68.62 | 83.29 | |

| RelationNet‡ [28] | 52.12 | 66.90 | 54.33 | 69.95 | 60.97 | 75.12 | 64.71 | 78.41 | |

| RelationNet+MCL | 54.50 | 70.63 | 57.73 | 74.46 | 61.70 | 75.53 | 65.93 | 80.27 | |

| ProtoNet‡ [26] | 52.32 | 69.74 | 53.19 | 72.28 | 62.67 | 77.88 | 68.48 | 83.46 | |

| ProtoNet+MCL | 54.31 | 69.84 | 56.67 | 74.36 | 64.40 | 78.60 | 70.62 | 83.84 | |

| Dense features (VanillaFCN) | DN4‡ [16] | 54.66 | 71.26 | 56.86 | 72.16 | 65.35 | 81.10 | 69.60 | 83.41 |

| DeepEMD† [35] | 52.15 | 65.52 | 50.89 | 66.12 | 65.91 | 82.41 | 71.16 | 83.95 | |

| FRN† [33] | 54.87 | 71.56 | 55.54 | 74.68 | 66.45 | 82.83 | 71.16 | 86.01 | |

| Label Propagation | 52.24 | 67.68 | 54.56 | 70.08 | 65.00 | 80.07 | 71.12 | 83.89 | |

| MCL (ours) | 55.38 | 70.02 | 57.63 | 74.25 | 67.36 | 83.63 | 71.76 | 86.01 | |

| MCL-Katz (ours) | 55.55 | 71.74 | 57.78 | 74.77 | 67.51 | 83.99 | 72.01 | 86.02 | |

| Dense features (PyramidFCN) | DN4‡ [16] | 54.54 | 70.94 | 57.05 | 72.90 | 63.54 | 79.04 | 71.10 | 84.22 |

| DeepEMD‡ [35] | 50.67 | 64.94 | 51.26 | 65.64 | 66.27 | 82.41 | 70.76 | 84.20 | |

| FRN‡ [33] | 54.40 | 70.75 | 57.30 | 75.58 | 65.94 | 81.97 | 70.56 | 85.44 | |

| Label Propagation | 53.38 | 68.69 | 55.21 | 71.22 | 65.71 | 75.78 | 71.00 | 78.01 | |

| MCL (ours) | 55.13 | 70.77 | 57.93 | 74.36 | 67.45 | 84.36 | 72.01 | 86.31 | |

| MCL-Katz (ours) | 55.77 | 71.24 | 58.20 | 74.73 | 67.85 | 84.47 | 72.13 | 86.32 | |

| Dense features (PyramidGrid) | DN4‡ [16] | 57.17 | 70.91 | 56.71 | 70.92 | 67.86 | 80.08 | 71.29 | 82.60 |

| DeepEMD‡ [35] | 55.68 | 70.75 | 55.88 | 70.06 | 67.83 | 81.32 | 73.13 | 84.18 | |

| FRN‡ [33] | 55.80 | 71.52 | 55.68 | 72.87 | 67.00 | 82.20 | 71.42 | 85.58 | |

| Label Propagation | 55.12 | 68.43 | 56.05 | 72.39 | 67.18 | 81.07 | 73.18 | 85.19 | |

| MCL (ours) | 57.50 | 73.03 | 57.57 | 73.81 | 69.31 | 85.11 | 73.62 | 86.29 | |

| MCL-Katz (ours) | 57.88 | 74.03 | 57.63 | 73.96 | 69.25 | 84.71 | 73.38 | 86.21 | |

4.1 General Few-shot Classification Results

Table 1 details the comparisons of MCL with global feature based methods [25, 14, 34, 7, 19] as well as dense feature based methods [16, 35, 33] on mini-/tieredImageNet.

Besides these methods, we also exploit label propagation [36] in dense feature based inductive FSL like in [17] to demonstrate that traditional transductive methods could be easily adapted to inductive settings if we treat dense feature vectors as a set of unlabeled data. As shown, our MCL is highly competitive with recent state-of-the-art results. In particular, our MCL-Katz achieves 67.51% (1-shot) and 83.99% (5-shot) on miniImageNet with the simplest VanillaFCN.

Comparisons with dense feature based methods. The last three panes of Table 1 illustrate that proposed bidirectional MCL outperforms query-to-support DN4’s nearest neighboring, DeepEMD’s optimal matching and FRN’s latent feature reconstructions in various tasks. Although the optimal matching flow is unidirectional in DeepEMD, they adopt a cross-reference attention that encodes the bidirectional relevance to a certain extent. If we treat dense features as nodes in the graph and use their similarities as edges, a major difference is that they treat nodes equally to derive different weights on edges while we use the fixed edges to derive the centrality of nodes directly for classifications.

Comparisons with global feature based methods. The centrality investigated in this work is also capable to be plugged into global feature based methods as local features are not equally important before global average pooling. As shown in the first pane of Table 1, our proposed centrality weighted pooling could provide at most 3.4% and 4.5% performance gains for ProtoNet and RelationNet respectively. We show in Table 4 that the improvements are also consistent if we solely apply it on the query and support dense features.

| Method | CUB | meta-iNat | tiered-meta-iNat | |||||||||

| Conv-4 | ResNet-12 | Conv-4 | ResNet-12 | Conv-4 | ResNet-12 | |||||||

| 1-shot | 5-shot | 1-shot | 5-shot | 1-shot | 5-shot | 1-shot | 5-shot | 1-shot | 5-shot | 1-shot | 5-shot | |

| ProtoNet [26] | 71.64 | 81.50 | 82.98 | 91.38 | 53.78 | 73.80 | 76.47 | 90.20 | 35.47 | 54.85 | 47.61 | 71.06 |

| ProtoNet + MCL | 76.87 | 86.95 | 84.59 | 91.71 | 57.52 | 74.33 | 78.75 | 90.53 | 39.09 | 59.16 | 51.22 | 72.50 |

| DSN [25] | 66.01 | 85.41 | 80.80 | 91.19 | 58.08 | 77.38 | 78.80 | 89.77 | 36.82 | 60.11 | 46.61 | 72.79 |

| CTX [10] | 69.64 | 87.31 | 78.47 | 90.90 | 60.03 | 78.80 | 69.04 | 88.39 | 36.83 | 60.84 | 42.91 | 69.88 |

| DN4 [16] | 78.31 | 88.43 | 85.44 | 92.51 | 62.32 | 79.76 | 79.58 | 91.37 | 43.82 | 64.17 | 48.99 | 72.29 |

| DeepEMD [35] | 75.34 | 85.68 | 83.35 | 91.60 | 54.48 | 68.36 | 76.05 | 86.82 | 36.05 | 48.55 | 48.14 | 66.27 |

| FRN [33] | 73.48 | 88.43 | 83.16 | 92.59 | 62.42 | 80.45 | 73.52 | 91.83 | 43.91 | 63.36 | 48.86 | 76.62 |

| MCL | 77.80 | 88.71 | 83.64 | 92.18 | 64.66 | 81.31 | 80.17 | 91.59 | 44.08 | 64.61 | 51.35 | 76.87 |

| MCL-Katz | 79.61 | 90.56 | 85.63 | 93.18 | 63.92 | 81.09 | 79.34 | 91.84 | 44.00 | 64.24 | 49.68 | 76.05 |

| miniImageNet | tieredImageNet | ||||

| 1-shot | 5-shot | 1-shot | 5-shot | ||

| \hlineB2 unidirectional | VanillaFCN | 54.88 | 69.98 | 55.31 | 68.87 |

| MCL | 55.38 | 70.02 | 57.63 | 74.25 | |

| MCL-Katz | 55.55 | 71.74 | 57.78 | 74.77 | |

| unidirectional | PyramidFCN | 54.92 | 69.17 | 55.69 | 69.26 |

| MCL | 55.13 | 70.77 | 57.93 | 74.36 | |

| MCL-Katz | 55.77 | 71.24 | 58.20 | 74.73 | |

| unidirectional | PyramidGrid | 56.61 | 71.40 | 55.12 | 69.19 |

| MCL | 57.50 | 73.03 | 57.57 | 73.81 | |

| MCL-Katz | 57.88 | 74.03 | 57.63 | 73.96 | |

4.2 Fine-grained Few-shot Classification Results

We follow the same setting (shown in supplementary) as in FRN [33] to train all the models from scratch on those fine-grained datasets. We re-implement DN4 [16], DeepEMD [35] to thoroughly compare with dense features based methods. Apart from the basic comparisons (both backbones on CUB and Conv-4 backbone on meta-iNat/tiered-meta-iNat) in [33], we conduct extra ResNet-12 experiments for all the comparing methods on meta-iNat and tiered-meta-iNat in our unified framework for a thorough comparison.

Results in Table 2 demonstrate that both our end-to-end MCL (VanillaFCN) and the centrality plugin are broadly effective in various fine-grained few-shot classification tasks.

5 Analysis

5.1 Ablation Study

Different choices of ,. Since is non-square due to the different cardinalities of set and , we use two different scaling parameters in Eqn.(1) and Eqn.(2). By the column-wise normalization, and can be interpreted as the reciprocal of temperatures in softmax random walk probability. Large , will have a hard random walk probability that leads to a concentrated centrality in the affiliation network. However, extremely large , (e.g., one-hot probability when they approaching infinity) would inevitably bias the episodic training due to the potential gradient explosion.

Since the cardinality of set is larger than that of , we empirically use a larger than as we need a harder probability in random walks from query to the large set of support features. In the experiments, we carefully select and (generally = and = for 1-shot models and = and = for 5-shot models) from several combinations of parameters by their validation performance.

| +MCL | miniImageNet | tieredImageNet | ||||

| 1-shot | 5-shot | 1-shot | 5-shot | |||

| \hlineB2 ProtoNet [26] | 52.32 | 69.74 | 53.19 | 72.28 | ||

| ✓ | 53.74 | 69.61 | 55.36 | 73.17 | ||

| ✓ | 53.97 | 69.74 | 56.60 | 74.18 | ||

| ✓ | ✓ | 54.31 | 69.84 | 56.67 | 74.36 | |

| RelationNet [28] | 52.12 | 66.90 | 54.33 | 69.95 | ||

| ✓ | 53.25 | 66.79 | 54.46 | 70.71 | ||

| ✓ | 54.37 | 70.57 | 57.68 | 74.24 | ||

| ✓ | ✓ | 54.50 | 70.63 | 57.73 | 74.46 | |

| ProtoNet [26] | |||||||

| ProtoNet +MCL | |||||||

| query | ground truth | confounding support classes | query | ground truth | confounding support classes | ||

| (a) MCL as a plugin for feature centralization | (b) MCL as an end-to-end classification method | ||||||

Influence of Katz attenuation factor . When is small, the contribution given by paths longer than one rapidly declines, and the centrality will be determined by short paths (mostly in-degrees). When is large, long paths are devalued smoothly, and the centrality would be more influenced by endogenous topology. When approaching 0, the classification will be only determined by the unidirectional query-to-support random walk where the contributions from paths longer than one just vanished. We show comparisons in Table 3 to demonstrate that performances are improved by introducing mutual affiliations in a bidirectional manner.

In the experiments, we simply use for MCL-Katz methods, and the performances could be further improved by selecting different as shown in supplementary.

5.2 Computational Speed

Intuitively, bidirectional methods would be slower than unidirectional ones since their back-and-forth computations. However, our MCL would definitely reach to the periodic equilibrium state as shown in Eqn.(5). Thus, we could directly solve it by the Katz approximation.

At first glance, the most expensive step in Eqn.(10) is inverting that costs where is the number of supporting classes and is the number of dense features for each image input. Fortunately, is a very special matrix that equals a diagonal matrix minus an anti-diagonal matrix. In this case, we can reformulate it by block-wise matrix inversion:

|

|

(14) |

where is defined by . The most expensive step then becomes a inversion of and the time complexity is reduced to .

As shown in Table 6, we compare the inference speed between MCL and other unidirectional dense feature based methods with different image resolutions on 5-way 1-shot FSL tasks with ResNet-12. Each class contains 15 query images in an episode. It can be observed that our bidirectional MCL did not introduce many computational overheads compared to unidirectional methods, and Katz approximation does accelerate computing the stationary distribution.

5.3 Qualitative Feature Centralization

To better understand our centrality based methods, we visualize the Grad-CAM [24] of the last convolutional layer in ResNet-12 for both plugin and end-to-end methods. We show in Table 5(a) that our MCL helps ProtoNet concentrate on the most relevant regions of interest. Table 5(b) demonstrates that the most centralized support features in MCL are not only from the ground truth but also mutually affiliated with task-relevant objects in the query images. We consider it qualitatively validates our underlying idea that support features would be frequently visited in the long times of bidirectional random walks if they shared the same local characteristics with the query image.

| Model | VanillaFCN resolution | |||

| 55 | 88 | 1010 | 1212 | |

| DN4 [16] | 11.16 | 13.41 | 16.82 | 23.45 |

| DeepEMD [35] | 262.0 | 814.6 | 2781.9 | 8035.4 |

| FRN [33] (Ridge regression) | 14.55 | 20.42 | 25.81 | 30.65 |

| FRN [33] (Woodbury identity) | 36.74 | 42.33 | 47.31 | 55.84 |

| MCL (torch.pinverse) | 55.91 | 119.0 | 292.2 | 464.8 |

| MCL (QR decomposition) | 600.0 | 658.6 | 719.4 | 802.6 |

| MCL (Katz approximation) | 12.59 | 14.59 | 18.92 | 29.10 |

6 Conclusions

We present a novel dense feature based framework: Mutual Centralized Learning (MCL) to highlight the mutual affiliations of bipartite dense features in FSL. We introduce a novel dense features’ accessibility criterion and demonstrate classic transductive methods like label propagation could be easily adapted to the inductive setting if we treat dense features from a single image as the set of unlabeled data. We propose bidirectional random walk to learn mutual affiliations in FSL and prove its features’ accessibility in a long time-homogeneous Markov process is equivalent to the single-mode eigenvector centrality of an affiliation network. We show that such centrality could not only serve as a noval end-to-end classification criterion but also as a plugin in existing methods. Experimental results demonstrate MCL achieves the state-of-the-art on various datasets.

References

- [1] Arman Afrasiyabi, Jean-François Lalonde, and Christian Gagn’e. Associative alignment for few-shot image classification. In European Conference on Computer Vision, pages 18–35. Springer, 2020.

- [2] Luca Bertinetto, Joao F Henriques, Philip Torr, and Andrea Vedaldi. Meta-learning with differentiable closed-form solvers. In International Conference on Learning Representations, 2018.

- [3] Phillip Bonacich. Factoring and weighting approaches to status scores and clique identification. Journal of mathematical sociology, 2(1):113–120, 1972.

- [4] Phillip Bonacich. Simultaneous group and individual centralities. Social networks, 13(2):155–168, 1991.

- [5] Stephen P Borgatti and Martin G Everett. Network analysis of 2-mode data. Social networks, 19(3):243–269, 1997.

- [6] Wei-Yu Chen, Yen-Cheng Liu, Zsolt Kira, Yu-Chiang Frank Wang, and Jia-Bin Huang. A closer look at few-shot classification. In International Conference on Learning Representations, 2019.

- [7] Yinbo Chen, Xiaolong Wang, Zhuang Liu, Huijuan Xu, and Trevor Darrell. A new meta-baseline for few-shot learning. arXiv preprint arXiv:2003.04390, 2020.

- [8] Guneet Singh Dhillon, Pratik Chaudhari, Avinash Ravichandran, and Stefano Soatto. A baseline for few-shot image classification. In International Conference on Learning Representations, 2019.

- [9] Kaize Ding, Jianling Wang, Jundong Li, Kai Shu, Chenghao Liu, and Huan Liu. Graph prototypical networks for few-shot learning on attributed networks. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, pages 295–304, 2020.

- [10] Carl Doersch, Ankush Gupta, and Andrew Zisserman. Crosstransformers: spatially-aware few-shot transfer. arXiv preprint arXiv:2007.11498, 2020.

- [11] Victor Garcia and Joan Bruna. Few-shot learning with graph neural networks. arXiv preprint arXiv:1711.04043, 2017.

- [12] Fusheng Hao, Fengxiang He, Jun Cheng, Lei Wang, Jianzhong Cao, and Dacheng Tao. Collect and select: Semantic alignment metric learning for few-shot learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 8460–8469, 2019.

- [13] Leo Katz. A new status index derived from sociometric analysis. Psychometrika, 18(1):39–43, 1953.

- [14] Kwonjoon Lee, Subhransu Maji, Avinash Ravichandran, and Stefano Soatto. Meta-learning with differentiable convex optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10657–10665, 2019.

- [15] Wenbin Li, Lei Wang, Jing Huo, Yinghuan Shi, Yang Gao, and Jiebo Luo. Asymmetric distribution measure for few-shot learning. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, pages 2957–2963, 2021.

- [16] Wenbin Li, Lei Wang, Jinglin Xu, Jing Huo, Yang Gao, and Jiebo Luo. Revisiting local descriptor based image-to-class measure for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7260–7268, 2019.

- [17] Yann Lifchitz, Yannis Avrithis, and Sylvaine Picard. Local propagation for few-shot learning. In 2020 25th International Conference on Pattern Recognition (ICPR), pages 10457–10464. IEEE, 2021.

- [18] Yann Lifchitz, Yannis Avrithis, Sylvaine Picard, and Andrei Bursuc. Dense classification and implanting for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9258–9267, 2019.

- [19] Bin Liu, Yue Cao, Yutong Lin, Qi Li, Zheng Zhang, Mingsheng Long, and Han Hu. Negative margin matters: Understanding margin in few-shot classification. In European Conference on Computer Vision, pages 438–455. Springer, 2020.

- [20] Yanbin Liu, Juho Lee, Minseop Park, Saehoon Kim, Eunho Yang, Sung Ju Hwang, and Yi Yang. Learning to propagate labels: Transductive propagation network for few-shot learning. In International Conference on Learning Representations, 2019.

- [21] Oskar Perron. Zur theorie der matrices. Mathematische Annalen, 64(2):248–263, 1907.

- [22] Mengye Ren, Eleni Triantafillou, Sachin Ravi, Jake Snell, Kevin Swersky, Joshua B Tenenbaum, Hugo Larochelle, and Richard S Zemel. Meta-learning for semi-supervised few-shot classification. In International Conference on Learning Representations, 2018.

- [23] Ravi Sachin and Larochell Hugo. Optimization as a model for few-shot learning. ICLR, 2017.

- [24] Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, pages 618–626, 2017.

- [25] Christian Simon, Piotr Koniusz, Richard Nock, and Mehrtash Harandi. Adaptive subspaces for few-shot learning. In CVPR, pages 4136–4145, 2020.

- [26] Jake Snell, Kevin Swersky, and Richard Zemel. Prototypical networks for few-shot learning. In NIPS, pages 4077–4087, 2017.

- [27] Qianru Sun, Yaoyao Liu, Tat-Seng Chua, and Bernt Schiele. Meta-transfer learning for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 403–412, 2019.

- [28] Flood Sung, Yongxin Yang, Li Zhang, Tao Xiang, Philip HS Torr, and Timothy M Hospedales. Learning to compare: Relation network for few-shot learning. In CVPR, pages 1199–1208, 2018.

- [29] Hung-Yu Tseng, Hsin-Ying Lee, Jia-Bin Huang, and Ming-Hsuan Yang. Cross-domain few-shot classification via learned feature-wise transformation. In International Conference on Learning Representations, 2020.

- [30] Oriol Vinyals, Charles Blundell, Timothy Lillicrap, Koray Kavukcuoglu, and Daan Wierstra. Matching networks for one shot learning. In Proceedings of the 30th International Conference on Neural Information Processing Systems, pages 3637–3645, 2016.

- [31] Peter Welinder, Steve Branson, Takeshi Mita, Catherine Wah, Florian Schroff, Serge Belongie, and Pietro Perona. Caltech-ucsd birds 200. 2010.

- [32] Davis Wertheimer and Bharath Hariharan. Few-shot learning with localization in realistic settings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6558–6567, 2019.

- [33] Davis Wertheimer, Luming Tang, and Bharath Hariharan. Few-shot classification with feature map reconstruction networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8012–8021, 2021.

- [34] Han-Jia Ye, Hexiang Hu, De-Chuan Zhan, and Fei Sha. Few-shot learning via embedding adaptation with set-to-set functions. In CVPR, pages 8808–8817, 2020.

- [35] Chi Zhang, Yujun Cai, Guosheng Lin, and Chunhua Shen. Deepemd: Differentiable earth mover’s distance for few-shot learning. arXiv e-prints, pages arXiv–2003, 2020.

- [36] Dengyong Zhou, Olivier Bousquet, Thomas N. Lal, Jason Weston, and Bernhard Schölkopf. Learning with local and global consistency. In Advances in Neural Information Processing Systems 16, volume 16, pages 321–328, 2003.

Appendix A Proof of Eqn.(5)

Proof of periodic:

Consider the eigenvalue of by the determinant equation

| (A.1) | ||||

it can be found that the eigenvalues of are the square roots of eigenvalues of .

Since both and are column-normalized matrices, their product is still column-stochastic that can be proved by:

| (A.2) |

where is a vector of ones with different length indicated by its subscript. and are the cardinalities of and , respectively.

We know (by the definition of stochastic matrix) that is the largest eigenvalue of , and its uniqueness is guaranteed since there is no zero entry in both and . According to Eqn.(A.1), we get another eigenvalue for stochastic matrix . From the Perron–Frobenius theorem that the period of equals to the number of eigenvalue whose absolute value is equal to the spectral radius of , we prove its stationary distribution is of period 2.

Proof for even periods:

We give the limit of matrix for the extremely large number of as follows:

| (A.3) | ||||

Since we have shown in Eqn.(A.2) that is also column-stochastic, we use to denote its stationary distribution vector by equation .

By analogy, the infinity power of could also reach a similar stationary distribution with equation .

Substituting the two stationary vectors into Eqn.(A.3), we can prove the stationary distributions of for the even periods in Eqn.(5).

Proof for odd periods:

From the definition of matrix product, we first have

| (A.4) | ||||

Next, according to the definition of and , we can get

| (A.5) | ||||

If we right matrix product of on both sides of Eqn.(A.5), we have

| (A.6) |

By analogy, a symmetric equation can also be easily proved in the same way as from Eqn.(A.5) to Eqn.(A.7).

Substituting and into Eqn.(A.4), we can prove the stationary distributions of for the odd periods in Eqn.(5).

Appendix B Proof of Lemma 1

We have shown in Appendix A that there exists an eigenvalue for the column-stochastic matrix with equation . If we interpret the transition matrix as an adjacency matrix for the directed bipartite graph, the eigenvector centrality of that graph is .

We split the eigenvector into , for the bipartite vertex set respectively and the single-mode eigenvector centralities of the single vertex set can therefore be formulated by:

| (B.1) |

If we left matrix product on both sides of , we will have . To write it in matrix notation, we have

| (B.2) |

Consider the first row of matrix product with in Eqn.(B.2), we have . Since is the eigenvector of of eigenvalue 1 with probability constraint , is exactly equivalent to the single-mode eigenvector centrality in Eqn.(B.1).

By analogy, if we consider the matrix product between the second row of and in Eqn.(B.2), we can prove equivalent to the conjugate single-mode eigenvector centrality of the bipartite graph.

Appendix C Proof of Eqn.(6)

Eqn.(6) to prove:

We first define . Since we have proof that Markov process of transition matrix is of 2 period in Appendix A, the proof of Eqn.(6) is thus equivalent to prove:

| (C.1) |

Proof for even period:

From the definition, we have

| (C.2) | ||||

where is the number of visits from particles in to support features in after steps of Markov bidirectional random walk. is the number of visits starting from particles in to support features in . The second equality is derived from the diagonal/anti-diagonal property of / respectively where the sub-matrices are ignored in summation.

Proof for odd period

: From the definition, we have

| (C.4) | ||||

where equals . Take Eqn.(C.4) to the extreme, we have

| (C.5) | ||||

where is ignored when approaches the infinity.

Appendix D Codes

We use block-wise inversion in Eqn.(14) that is more computational-efficient than directly inverting Eqn.(10) when the number of support classes is large. The corresponding Pytorch pseudo code can be found below where the whole calculation is performed in parallel via batched matrix multiplication and inversion. Our unified FSL training and testing framework is publicly available at https://github.com/LouieYang/MCL.

Appendix E Implementation details

Preprocessing: During training on CUB, miniImageNet and tieredImageNet, images are randomly cropped to and then resized into . For meta-iNat and tiered-meta-iNat, images are randomly padded then cropped into . Unlike previous methods , we only random horizontal flip the image during training.

During inference, images are center cropped to and then resized into for CUB, miniImageNet and tieredImageNet. For meta-iNat and tiered-meta-iNat, images are already and are fed into the models directly.

Network backbones: We use two backbones in our experiments: Conv-4 and ResNet-12. Conv-4 contains four convolutional blocks, each of which consists of a convolution, a BatchNorm, a LeakyReLU(0.2) and an additional max-pooling. ResNet-12 consist four residual blocks, each with three convolutional layers, with LeakyReLU(0.1) and max-pooling on the main stem. Given the image of input size , Conv-4 outputs a feature map of size while ResNet-12 outputs that of size .

Re-implemented baselines: We re-implement ProtoNet [26] and RelationNet [28] in our unified framework as two global feature based baselines for our centrality plugin. We borrow most of codes from their official implementations and introduce slight modifications to improve their performances inspired their subsequent work [33, 34]. For ProtNet, we introduce a fixed temperature scaling with before in softmax function. For RelationNet, we change the original MSELoss to CrossEntropyLoss.

| Model | Backbone | 1-shot | 5-shot |

| Baseline [6] | ResNet-10 | - | 65.57 0.70 |

| Baseline++ [6] | ResNet-18 | - | 62.04 0.76 |

| MetaOptNet [14] | ResNet-12 | 44.79 0.75 | 64.98 0.68 |

| MatchingNet+FT [29] | ResNet-10 | 36.61 0.53 | 55.23 0.83 |

| RelationNet+FT [29] | ResNet-10 | 44.07 0.77 | 59.46 0.71 |

| GNN+FT [29] | ResNet-10 | 47.47 0.75 | 66.98 0.68 |

| Neg-margin [19] | ResNet-18 | - | 69.30 0.73 |

| Centroid et al. [1] | ResNet-18 | 46.85 0.75 | 70.37 1.02 |

| FRN [33] | ResNet-12 | 51.60 0.21 | 72.97 0.18 |

| ProtoNet† [26] | ResNet-12 | 40.05 0.18 | 55.29 0.19 |

| ProtoNet+MCL | ResNet-12 | 42.02 0.19 | 64.76 0.20 |

| MCL | ResNet-12 | 55.48 0.22 | 75.93 0.18 |

| MCL-Katz | ResNet-12 | 53.22 0.22 | 77.39 0.18 |

Pre-training: We use the pre-training + meta-training procedure for ResNet-12 backbones on miniImageNet and tieredImageNet like most of the methods in the literature [35, 16, 33]. We follow the same pre-training technique from the dense features based FRN [33] to learn spatially distinctive dense features, e.g., run 350 epochs of batch size 128 on miniImageNet, using SGD with initial learning rate 0.1 and decaying by a factor of 10 at epochs 200 and 300.

Meta-training on mini-/tieredImageNet: We follow the earliest dense feature based DN4 [16] that randomly sample 20,000/200 episodes in an epoch for Conv-4/ResNet-12 backbone, respectively. Since we didn not use pre-training for Conv-4, the number of episodes per epoch of Conv-4 is far larger than ResNet-12 for convergences. In each episode, besides support images in each class, 15 query images will also be selected from each class.

For Conv-4, we adopt Adam optimizer with initial learning rate of 1e-3 to train for 30 epochs (on both datasets) and reduce it by a factor of 10 every 10 epochs.

For ResNet-12, we adopt SGD with initial learning rate of 5e-4 (40 epochs on miniImageNet and 60 epochs on tieredImageNet) and cut it by half every 10 epochs.

Unlike the latest dense feature based FRN that adopts larger way during meta-training (e.g., 25-way for training 1-shot models and 20-way for 5-shot models), we did not use that setting in widely used mini-/tireredImageNet as we thought it would be unfair in comparing with other methods.

Meta-training on fine-grained datasets: We follow the latest fine-grained few-shot classification setting from FRN [33] as most of the comparing performances in Table 2 are from them. Although practitioners agree on the train/validation/test split ratio (i.e., 100/50/50) on CUB dataset, there is no official class split. In our experiments, we use the same train/val/test class split as in [33] for a fair comparison.

For CUB, we train all our Conv-4 models for 1200 epochs using SGD with Nesterov momentum of 0.9 and an initial learning rate of 0.1. The learning rate decreases by a factor of 10 at epoch 400 and 800. ResNet-12 backbone trains for 600 epochs and scale down the learning rate by 10 at epoch 300, 400 and 500. Conv-4 backbone is trained with standard 20-way for 5-shot models and is trained with 30-way for 1-shot models like [33], while ResNet-12 backbone is trained with 10-way for the both shots models.

For meta-iNat and tiered-meta-iNat, we train our Conv-4 and ResNet-12 models for 100 epochs using Adam with initial learning rate 1e-3. We set 0.5 learning rate decay every 20 epochs. Both Conv-4 and ResNet-12 are trained with 10-way for both 1-shot and 5-shot models.

| Methods | 1-shot | 5-shot | |

| Baseline [6] | Global feature | 53.99 0.20 | 78.78 0.15 |

| Baseline++ [6] | 55.03 0.20 | 78.79 0.15 | |

| ProtoNet [26] | 53.99 0.20 | 79.70 0.15 | |

| ProtoNet+MCL | 58.62 0.20 | 80.99 0.14 | |

| DN4 [16] | VanillaFCN | 58.95 0.19 | 78.01 0.15 |

| FRN [33] | 59.71 0.19 | 74.05 0.15 | |

| MCL | 60.94 0.20 | 80.20 0.14 | |

| MCL-Katz | 61.55 0.20 | 81.09 0.14 |

Appendix F Cross-Domain Few-shot Classification

We also evaluate on the challenging cross-domain setting proposed by [6], where models trained on miniImageNet base classes are directly evaluated on test classes from CUB. We use the same test class split as in [33] for fair comparisons, which is much harder than the test class split in [6].

As shown in Table S7, our MCL outperforms previous state-of-the-art methods by large margins of 3.9% on the 1-shot task and 5.4% on the 5-shot task, respectively.

Appendix G Evaluation without Meta-training

Given that an increasing number of methods simply use standard supervised learning to pre-train the feature extractor and then use their methods directly for evaluation without meta-training [6, 8], we also evaluate our methods under this setting with the same pre-trained feature extractor we used in the Table 1. As shown in Table S8, global feature based methods are likely to misclassify images under the extremely 1-shot scenario, where the significant intra-class variations would inevitably drive the image-level embedding from the same category far apart. In contrast, dense feature based methods provide more information across categories that shows promising performances in that scenario.

Our end-to-end Katz centrality based MCL outperforms previous methods by a margin of 1.8% and 1.3% on 1-shot and 5-shot tasks, respectively. It is interested to note that our MCL plugin help centralize the task-relevant local features in ProtoNet [26] by a large margin of 4.6% in 1-shot task and 1.2% in 5-shot task without bell and whistles.

| 5-way 1-shot | 5-way 5-shot | |

| (unidirectional) | 66.60 0.20 | 81.76 0.13 |

| 67.13 0.20 | 83.20 0.13 | |

| 67.28 0.20 | 83.89 0.13 | |

| (MCL-Katz) | 67.51 0.20 | 83.99 0.13 |

| 67.27 0.20 | 84.05 0.13 | |

| 67.41 0.20 | 83.96 0.13 | |

| (MCL) | 67.36 0.20 | 83.63 0.13 |

| 66.12 | 66.15 | 65.87 | 65.39 | |

| 67.21 | 67.20 | 66.78 | 66.23 | |

| 67.53 | 67.36 | 66.70 | 66.00 | |

| 67.05 | 66.77 | 65.84 | 65.08 |

Appendix H Ablation on parameters , and

Ablation on Katz attenuation factor . Table S9 shows results with different in bidirectional random walks. As discussed in Sec.5, the centrality with large is more influenced by the endogenous topology while that with small is more influenced by the in-degree paths. It could be observed in Table S9 that, the optimal differs across various FSL tasks (that with different shot on different datasets).

In the experiments, we use = to approximate single-mode eigenvector centrality in Eqn.(6) and simply use = to represent general Katz centrality in Eqn.(8).

Ablation on scaling parameters and . Table S10 shows MCL results with different scaling parameters and . As discussed in Sec.5 that a larger scaling parameter (i.e., a smaller temperature in softmax-like random walk probability) will lead to a more concentrated eigenvector centrality. However, a larger scaling parameter would inevitably bias the meta-training. Thus, there exists a trade-off to select optimal parameters according to each task.

In the experiments, we find its empirically effective to select and according to their pre-trained models (similar to Table S10). In most cases, we use =, = and =, = for 1-shot and 5-shot tasks, respectively.

Appendix I Additional Plugin Experiments

We have shown that our proposed centrality weighted pooling has a consistent performance gain (especially in the extreme 1-shot scenario) over global average pooling on ProtoNet [26] and RelationNet [28] by concentrating on more task-relevant local features. Besides Table 1, 2, 4 and S8, we give additional results in Table S11 to show that MCL can be easily plugged into those methods that need no extra parameters except for the feature extractor.

The experiments are conducted by the following rules: all comparing methods are evaluated with the same pre-trained backbone ResNet-12 as in Sec.G without meta-training; we only use centrality weighted pooling to aggregate local features from query image as different methods have different operations on support features; we fixed the parameters = and = to MCL plugins for all comparing methods.

As shown in Table S11, our proposed centrality plugin consistently improves the performances of all the global feature based methods without bells and whistles.

| Method | miniImageNet | tieredImageNet | ||

| 1-shot | 5-shot | 1-shot | 5-shot | |

| Baseline[6] | 53.99 | 78.78 | 67.75 | 85.23 |

| +MCL | 56.21+2.22 | 80.44+1.66 | 68.53+0.78 | 85.75+0.52 |

| Baseline++[6] | 55.03 | 78.79 | 61.86 | 84.31 |

| +MCL | 56.93+1.90 | 79.93+1.14 | 63.00+1.14 | 84.61+0.30 |

| ProtoNet[26] | 56.50 | 79.68 | 64.27 | 84.01 |

| +MCL | 58.62+2.12 | 80.99+1.30 | 66.48+2.21 | 84.83+0.82 |

| MatchingNet[30] | 58.41 | 79.52 | 68.68 | 85.16 |

| +MCL | 59.85+1.44 | 80.79+1.27 | 69.45+0.97 | 85.49+0.33 |

| R2D2[2] | 59.82 | 78.97 | 70.22 | 85.30 |

| +MCL | 60.86+1.04 | 80.65+1.68 | 70.64 +0.42 | 85.84+0.54 |

| MetaOptNet[14] | 59.59 | 79.77 | 69.55 | 85.25 |

| +MCL | 60.66+1.07 | 81.27+1.50 | 70.11+0.55 | 85.76+0.51 |

| DSN[25] | 58.70 | 79.01 | 69.13 | 85.14 |

| +MCL | 60.08+1.38 | 80.66+1.65 | 69.59+0.46 | 85.61+0.47 |

| Neg-cosine[19] | 58.82 | 79.63 | 69.44 | 84.94 |

| +MCL | 60.20+1.38 | 81.04+1.41 | 69.85+0.41 | 85.31+0.37 |

Appendix J Additional Visualization

The additional Grad-CAM visualizations as in Table 5 of the main paper are presented as follows: Table S12 illustrates end-to-end MCL-Katz like in Table 5(b). Table S13 illustrates MCL plugin on ProtoNet [26] like in Table 5(a).

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x24.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x25.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x26.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x27.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x28.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x29.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x30.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x31.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x32.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x33.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x34.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x35.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x36.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f3b060a7-8225-4998-bac4-5fcba99f96ad/x37.png) |