
Learning Theory for Inferring Interaction Kernels in Second-Order Interacting Agent Systems

Abstract

Modeling the complex interactions of systems of particles or agents is a fundamental scientific and mathematical problem that is studied in diverse fields, ranging from physics and biology, to economics and machine learning. In this work, we describe a very general second-order, heterogeneous, multivariable, interacting agent model, with an environment, that encompasses a wide variety of known systems. We describe an inference framework that uses nonparametric regression and approximation theory based techniques to efficiently derive estimators of the interaction kernels which drive these dynamical systems. We develop a complete learning theory which establishes strong consistency and optimal nonparametric min-max rates of convergence for the estimators, as well as provably accurate predicted trajectories. The estimators exploit the structure of the equations in order to overcome the curse of dimensionality and we describe a fundamental coercivity condition on the inverse problem which ensures that the kernels can be learned and relates to the minimal singular value of the learning matrix. The numerical algorithm presented to build the estimators is parallelizable, performs well on high-dimensional problems, and is demonstrated on complex dynamical systems.

Keywords: Machine learning; dynamical systems; agent-based dynamics; inverse problems; regularized least squares; nonparametric statistics.

1 Introduction

Physical, biological, and social systems across all scales of complexity and size can often be described as dynamical systems written in terms of interacting agents (e.g. particles, cells, humans, planets, …). Rich theories have been developed to explain the collective behavior of these interacting agents across many fields including astronomy, particle physics, economics, social science, and biology. Examples include predator-prey systems, molecular dynamics, coupled harmonic oscillators, flocking birds or milling fish, human social interactions, and celestial mechanics, to name a few. In order to encompass many of these examples, we will describe a very general second-order, heterogeneous (the agents can be of different types), interacting (the acceleration of an agent is a function of properties of the other agents) agent system that includes external forces, masses of the agents, multivariable interaction kernels, and an additional environment variable that is a dynamical property of the agent (for example, a firefly having its luminescence varying in time). We propose a learning approach that combines machine learning and dynamical systems in order to provide highly accurate dynamical models of the observation data from these systems.

The model and learning framework presented in sections 2-5 cover a large number of relevant systems. Opinion dynamics [45, 27, 10, 56] is a simple first-order case that exhibits clustering. Flocking of birds [32, 29, 28] provides a simple example of a second-order system that exhibits an emergent shared velocity of all agents. Milling of fish [25, 1, 2, 24] is another second-order model; it presents both a 2- and 3-dimensional milling pattern over long time and introduces a non-collective force from the environment. A model of oscillators (fireflies) that sync and swarm together, and have their dynamics governed by their positions and a phase variable, was studied in [73, 59, 58, 57]. There are also models that include both energy and alignment interaction kernels; a particular case is the anticipation dynamics model from [71], which we study in this work.

One can also consider a collection of celestial bodies interacting via the gravitational potential, which was initially studied in [83] and is further studied in the upcoming [54]. All of these models fit into our framework, and we present detailed studies of these and others in this work as well as in [83, 51, 52]. These dynamics exhibit a wide range of emergent behaviors, and as shown in [79, 74, 29, 38, 23, 5, 56], the behaviors can be studied when the governing equations are known. However, if the equations are not known and the data consists of only trajectories, we still wish to develop a model that can both make accurate predictions of the trajectories and discover a dynamical form that accurately reflects their emergent properties. To achieve this, we present a theoretically optimal learning algorithm that is accurate, captures emergent behavior for large time, and, by exploiting the structure of the collective dynamical system, avoids the curse of dimensionality.

The application of machine learning to the sciences has grown tremendously in recent years. A small selection of general applications related to the ideas in this work includes: learning PDEs ([4, 68, 46]), modeling dynamical systems ([37, 6, 47]), governing equations ([20, 80]), biology ([21]), fluid mechanics ([63, 41]), many-body problems in quantum systems ([19]), mean-field games ([67]), meteorology ([40]), and dynamical systems ([26, 17, 81, 11]). These, and the references therein, give a flavor of the diverse range of applications. A vast literature exists in the context of learning dynamical systems. In the case of a general nonlinear dynamical system, symbolic regression has been developed to learn the underlying form of the equations from data, see [12, 69]. Sparse regression techniques fit an extremely large collection of functions, often containing most major mathematical functions, to the data under a sparsity condition that allows only a few terms to appear in the final model. Detailed study and development of these approaches can be found for SINDy in ([16, 66, 15]), a LASSO-type penalty ([42, 43]), and sparse Bayesian regression ([82]). Other approaches consider multiscale methods, statistical mechanics, or force-based models, see [3, 8]. Deep learning has also been applied to learn dynamical systems, for ODEs see [62, 65] and for PDEs see [60, 61, 50], as well as the references therein.

The majority of the earliest work in inferring interaction kernels in systems of the type (1.1), (2.2) occurred in the Physics literature, going back to the works of Newton. From the viewpoint of purely data-driven analysis of the equations, requiring limited or no physical reasoning, foundational work includes [53, 44]. In these works, the interaction kernels are assumed to be in the span of a known family of functions and parameters are estimated. In statistics, the problem of parameter estimation in dynamical systems from observations is classical, e.g. [78, 14, 49, 18, 64]. The question of identifiability of the parameter emerges, see e.g. [34, 55]. Our work is closely related to this viewpoint but our parameter is now infinite-dimensional, with identifiability discussed in section 6.

Our learning approach is based on exploiting the structure of collective dynamical systems and nonparametric estimation techniques (see [30, 76, 39, 36, 9]). We focus here on second-order models and the form of the equations, generalizing the first order models (see discussion in Appendix F), derived from Newton’s second law: for

| (1.1) |

Here, is the mass of the agent, is its position, is a non-collective force, and , are known as the interaction kernels. A significant amount of research on modeling collective dynamics is concerned with inducing desired collective behaviors (flocking, clustering, milling, etc.) from relatively simple, local and non-local interaction kernels, often from known or specific parametric families of relatively simple functions. Here we consider a non-parametric, inverse-problem-based approach to infer the interaction kernels from observations of trajectory data, especially within short-time periods. In [13], a convergence study of learning unknown interaction kernels from observation of first-order models of homogeneous agents was done for increasing , the number of agents. The estimation problem with fixed, but the number of trajectories varying, for first-order and second-order models of heterogeneous agents was numerically studied in [52] and learning theory on these first-order models was developed in [51, 48]. Further extension of the model and algorithm to more complicated second-order systems, with particular emphasis on emergent collective behaviors, was discussed in [83]. A big data application to real celestial motion ephemerides is developed and discussed in [54]. In this work, we provide a rigorous learning theory covering the models presented in [83], as well as the second-order models introduced in [52]. We consider generalizations of the models in [83], to include models with higher-dimensional interaction kernels, that do not depend only on pairwise distances. Compared to the theories studied in [51, 48], our theory focuses on second-order models with interaction kernels of the form (with and representing norms of differences of positions and, respectively, velocities of pairs of agents); additionally, we discuss the identifiability and separability of and from the sum.

The overall objective of the algorithm can be stated as: given trajectory data generated from an interacting agent system, we wish to learn the underlying interaction kernels, from which we will understand its long-term and emergent behavior, and ultimately build a highly accurate approximate model that faithfully captures the dynamics. We make minimal assumptions on the form of the interaction kernels and the various forces involved, and in some cases the assumptions are made for purely theoretical reasons and the algorithm can still perform well when they do not hold for a given system. We offer a learning approach to address these collective systems by first discovering the governing equations from the observational data, and then using the estimated equations for large-time predictions.

The approach in this paper builds on many of these ideas and uses observation data coming from collective dynamical systems of the form (2.2) to learn the underlying interaction functions. This variational approach was initially developed in [13, 52] and further studied and extended in [51, 83]. Our analysis of this system blends differential equations, inverse problems, nonparametric regression, and (statistical) learning theory. A central insight is that we exploit the form of (2.2) to move the inference task to just the unknown interaction functions, allowing us to avoid the curse of dimensionality incurred if we were to regress directly against the high-dimensional phase space and trajectory data of the system. Treating each trajectory as a single observation also provides independence of observations.

To use the trajectory data to derive estimators, we consider appropriate hypothesis spaces in which to build our estimators, measures adapted to the dynamics, norms, and other performance metrics, and ultimately an inverse problem built from these tools. Once we have obtained this estimated interaction kernel, we want to study its properties as a function of the amount of trajectory data we receive, which is the trajectories sampled from different initial conditions from the same underlying system. Here we study properties of the error functional, establish the uniqueness of its minimizers, and use the probability measures to define a dynamics-adapted norm to measure the error of our estimators over the hypothesis spaces. In comparing the estimators to the true interaction kernels, we first establish concentration estimates over the hypothesis space.

Our first main result is the strong consistency of our learned estimators asymptotically. For the relevant definitions see section 4 and for the full theorem see section 7.2, which for the model (1.1) yields,

| (1.2) |

where is a dynamics-adapted measure on pairwise distances, and we use a weighted space detailed in section 5, particularly (5.3). Perhaps most importantly, we give a rate of convergence in terms of the trajectories. We achieve the minimax rate of convergence for any number of variables in the interaction kernels. See section 7.3 for the full theorem (and section 4 for relevant definitions), which is given by:

| (1.3) |

In the case of model (1), , as in the results for first-order systems [13, 52, 51].

This means that our estimators converge at the same rate in as the best possible estimator (up to a logarithmic factor) one could construct when the initial conditions are randomly sampled from some underlying initial condition distribution denoted throughout this work, see (section 7).

To solve the inverse problem, we give a detailed discussion of an essential link between these three aspects, the notion of coercivity of the system - detailed in section 6. Coercivity plays a key role in the approximation properties, the ability to approximate the interaction kernel and the learning theory. We also present numerical examples, see the detailed numerical study in [83], which help to explain why the particular norms we define are the right choice, as well as show excellent performance on complex dynamical systems in section 9.

Our paper is structured as follows. The first part of the paper describes the model, learning framework, inference problem, and the basic tools needed for the learning theory. These ideas are all explained in detail in sections 2-5. For readers who wish to jump quickly to the theoretical sections and refer back to the definitions as needed, we have provided tables 1 and 3, which explain the model equations and outline the definitions and concepts needed for the learning theory and general theoretical results, respectively. The theoretical part of the paper (sections 6-8) discusses fundamental questions of identifiability and solvability of the inverse problem, consistency, and rate of convergence of the estimators, and the ability to control trajectory error of the evolved trajectories using our estimators. Some key highlights of our theoretical contributions are described in section 4.3, with full details in the corresponding sections. Lastly, we consider applications in section 9 and provide many additional proofs and details in appendices A-H.

1.1 Comparison with existing work

Our learning approach discovers the governing structure of a particular subset of dynamical systems of the form,

from observations , by implicitly inferring the right hand side, . The main difficulties in establishing an effective theory of learning are the curse of dimensionality caused by the size of , which is often , where is the number of agents, the dimension of physical space; and the dependence of the observation data, for example depends on .

There are many techniques which can be used to tackle the high dimensionality of the data set: sparsity assumptions, dimension reduction, reduced-order modeling, and machine learning techniques trained using gradient-based optimization. The dependent nature of the data prevents traditional regression-based approaches, see the discussion in [51], but many of the approaches above successfully address this. Our work, however, exploits the interacting-agent structure of collective dynamical systems, which is driven by a collection of two-body interactions where each interaction depends only on pairwise data between the states of agents, as in (1.1). With this structure in mind, we are able to reduce the ambient dimension of the data to the dimension of the variables in the interaction kernels, which is independent of . We also naturally incorporate the dependence in the data in an appropriate manner by considering trajectories generated from different initial conditions.

Our theoretical results focus on the joint learning of that takes into account their natural weighted direct sum structure that is described in the following sections, which is different from the learning theory on single ’s considered in [52, 51]. The current theoretical framework is not able to conclusively show that and can be learned separately; however we demonstrate in various numerical experiments that by learning and jointly, we still achieve strong performance. Finally, we note that the first-order theory developed in [51] is a special case of our second-order theory, see details in appendix F.

2 Model description

In order to motivate the choice of second-order models considered in this paper, we begin our discussion with a simple second-order model derived from the classical mechanics point of view. Let us consider a closed system of homogeneous agents (or particles) equipped with a certain type of Lagrangian energy, namely for the whole system, given as follows,

Here is a potential energy depending on pairwise distance. From the Lagrange equation, , we obtain a simple second-order collective dynamics model

| (2.1) |

Here, represents an energy-based interaction between agents. For example, taking , it becomes the celebrated model for Newton’s universal gravity. In order to incorporate more complicated behaviors into the model equation, we consider alignment-based interactions (to align velocities, so that short-range repulsion, mid-range alignment, and long-range attraction are all present), auxiliary variables describing internal states of agents (emotion, excitation, phases, etc.), and non-collective forces (interaction with the environment). We also consider a system of heterogeneous agents, such that the agents belong to disjoint types , with being the number of agents of type . They interact according to the system of ODEs

| (2.2) |

for , where are the indices of the agent type of the agents and respectively; the interaction kernels, , are in general different for interacting agents of different types, and they not only depend on pairwise distance , but also on other features, given by . For example, the interactions between birds can also depend on the field of vision, not just the distance between pairs of birds. Note that we will often suppress the explicit dependence on time when it is clear from context. The unknowns, for which we will construct estimators, in these equations, are the functions , and ; everything else is assumed given.
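To make the structure of such a model concrete, the following is a minimal sketch of the right-hand side for a simplified special case. It assumes a single agent type, no environment variable, no non-collective force, kernels depending on pairwise distance only, and a 1/N normalization of the interaction sum. The function name `acceleration` and the two example kernels are hypothetical and chosen only for illustration.

```python
import numpy as np

def acceleration(x, v, phi_E, phi_A, masses):
    """Accelerations of N agents; x, v have shape (N, d), masses has shape (N,).

    Assumed simplified model (single type, distance-based kernels):
      m_i a_i = (1/N) sum_j [ phi_E(r_ij) (x_j - x_i) + phi_A(r_ij) (v_j - v_i) ]
    """
    N = x.shape[0]
    dx = x[None, :, :] - x[:, None, :]      # dx[i, j] = x_j - x_i
    dv = v[None, :, :] - v[:, None, :]      # dv[i, j] = v_j - v_i
    r = np.linalg.norm(dx, axis=2)          # pairwise distances r_ij
    w_E = np.array(phi_E(r), dtype=float)   # energy kernel evaluated on each pair
    w_A = np.array(phi_A(r), dtype=float)   # alignment kernel evaluated on each pair
    np.fill_diagonal(w_E, 0.0)              # exclude self-interaction
    np.fill_diagonal(w_A, 0.0)
    force = (w_E[:, :, None] * dx + w_A[:, :, None] * dv).sum(axis=1) / N
    return force / masses[:, None]

# Two agents with a constant energy kernel and a Cucker-Smale-like alignment kernel.
x = np.array([[0.0, 0.0], [1.0, 0.0]])
v = np.array([[0.0, 1.0], [0.0, -1.0]])
m = np.ones(2)
a = acceleration(x, v, lambda r: np.ones_like(r), lambda r: 1.0 / (1.0 + r**2), m)
```

Because both interaction terms are antisymmetric in the pair of agents, the total momentum change `(m[:, None] * a).sum(axis=0)` vanishes in this sketch, which is a quick sanity check on any implementation of the pairwise sums.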

Table 1 gives a detailed explanation for the definition of the variables used in (2.2). We note that in what follows the notation, , attached to any expression means that there is one of those maps, functions, etc. for each element in the set . It is a convenient way to avoid excessive repetition of similar definitions.

| Variable | Definition |
|---|---|
| | index of agent, from |
| | mass of agent |
| | position/velocity/acceleration vector of agent at time |
| | auxiliary variable, and its derivative |
| | Euclidean norm in |
| | number of types of agents |
| | index of type of agents, from |
| | number of agents in type |
| | type index of agent |
| | set of indices for type agent, subset of |
| (or ) | interaction kernel: influence of agent (or ) on agent |
| | non-collective forces on and respectively |
| | energy, alignment, and environment-based interaction kernels respectively |
| | Features, |
| | Feature map, |
| | Feature evaluation, |

The specific instances of the feature map together with corresponding projections include a variety of systems that have found a wide range of applications in physics, biology, ecology, and social science; see the examples in the chart below. We assume that the function is Lipschitz and known and so are all the ’s. The Lipschitz assumption is necessary for us to control the trajectory error and ensure the well-posedness of the system. The function is a uniform way to collect all of the different variables (functions of the inputs) used across any of the pairs over all of the functions in the system. This uniformity is helpful when discussing the rate of convergence, among other places. Examples of where this generality matters emerge naturally, say when one has a different number of variables across interaction kernels for different pairs , or when the energy and alignment kernels depend on and then additional but distinct other variables. From this uniform set of variables, we then project (which of course implies that the feature maps are all Lipschitz) to arrive at the relevant function for each pair and each of the elements of the wildcard. Lastly, we can then evaluate this map at the specific pair of agents , that leads to the feature evaluation, which is the expression used in the model equation (2.2).

The models encompassed by the form (2.2) are quite diverse. For a concrete example, please see section 9.2. We summarize many examples in table 2, with a shaded cell indicating that the model has that characteristic and an empty cell indicating that it does not. A numeric value gives the number of unique variables used within the EA or portions of the system. The number of these unique variables specifies the dimension in the minimax convergence rate, see section 7.3.

| Properties | ||||||||||||
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Model | ||||||||||||
| Anticipation Dynamics | 2 | |||||||||||
| Celestial Mechanics | 1 | |||||||||||
| Cucker-Smale | 1 | |||||||||||
| Fish Milling 2D | 1 | |||||||||||
| Fish Milling 3D | 1 | |||||||||||
| Flocking w. Ext. Poten. | 1 | |||||||||||
| Phototaxis | 1 | 1 | ||||||||||
| Predator-Swarm ( Order) | 1 | |||||||||||
| Lennard-Jones | 1 | |||||||||||
| Opinion Dynamics | 1 | |||||||||||
| Predator-Swarm ( Order) | 1 | |||||||||||
| Synchronized Oscillator | 2 | 2 | ||||||||||

Our second-order model equations cover the first-order models considered in [52, 51, 83] as special cases, see Appendix F, which is why we choose second-order models as the main focus of this work. Furthermore, the dynamical characteristics produced by second-order models are much richer and can model more complicated collective motions and emergent behavior of the agents. Note that our second-order model in (2.2), even when written as a first-order system in more variables, is a strict generalization of the previous first-order analysis.

Rate of convergence notation

For the system (2.2), depending on the number of variables, we will have a different rate of convergence as the dimension of the function(s) we are learning changes. In order to present a unified theorem, we adopt the following notation. Let denote the set of features in the range of that are arguments of or across each of the pairs. For many collective dynamical systems . In the case where the system has both and but , it is easy to see from Theorem 9 below that we still only pay the -dimensional rate. Analogously, we define to be the set of all features in the range of that are arguments involved in the part of (2.2) across all of the pairs. This notation is used to frame the convergence rate theorem on the variable as well.

3 Preliminaries and notation

We vectorize the models given in (2.2) in order to give them a more compact description. Letting , we take the following notations

We introduce a weighted norm to measure the system variables, denoted and given by,

| (3.1) |

for with each or . Here is the same norm used in the construction of pairwise distance data for the interaction kernels. In the subsequent equations we drop the explicit dependence on for simplicity. The weight factor, , is introduced so that agents of different types are considered equally and we learn well even in the case that the number of agents in the classes is highly non-uniform. With these vectorized notations, the model in (2.2) becomes,

Here , is the Hadamard product, and we use boldface fonts to denote the vectorized form of our estimators (with some once-for-all-fixed ordering of the pairs ):

| (3.2) |

We also use the shorthand,

| (3.3) |

to denote the element of the direct sum of the function spaces containing . This notation will be used throughout on the energy and alignment ( for short) portion of the system in order to simplify the notation.

We denote by , the vectorized notation for the non-collective force defined as follows, , and

We omit the analogous definitions for and .

3.1 Trajectory Performance Measurement

We will also consider another measurement to assess the learning performance of the estimated kernels in terms of trajectory error. We compare the observed trajectories to the estimated trajectories evolved from the same initial conditions but with the estimated interaction kernels. Let be the trajectory from dynamics generated by the true/unknown interaction kernels with initial condition, ; and be the trajectory from dynamics generated by the estimated interaction kernels learned from observation of with the same initial condition, (i.e., ). We define a norm for the difference between and at time :

| (3.4) |

and a corresponding norm on the trajectory ():

| (3.5) |

We also consider a relative version, invariant under changes of units of measure:

Lastly, we report errors between and ,

Similar re-scaled norms are used for the difference between and , and for the difference between and .
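The trajectory error measures above can be sketched numerically as follows. This is a sketch under stated assumptions, not the paper's exact definition: it assumes the weighted state norm takes the form sqrt(sum_i ||X_i||^2 / N_{k_i}), where N_{k_i} is the number of agents sharing the type of agent i, that the trajectory norm is the supremum of this quantity over the observation times, and that the relative version divides by the trajectory norm of the true trajectory. The names `state_norm` and `trajectory_error` are hypothetical.

```python
import numpy as np

def state_norm(X, types):
    """Assumed type-weighted norm; X has shape (..., N, d), types has shape (N,)."""
    counts = np.bincount(types)             # number of agents of each type
    w = 1.0 / counts[types]                 # weight 1 / N_{k_i} for agent i
    return np.sqrt(np.sum(w * np.sum(X**2, axis=-1), axis=-1))

def trajectory_error(X, X_hat, types, relative=False):
    """Max over observation times of the weighted norm of X - X_hat.

    X, X_hat: arrays of shape (L, N, d) holding true and estimated trajectories.
    """
    err = state_norm(X - X_hat, types).max()
    if relative:
        err = err / state_norm(X, types).max()   # unit-invariant version
    return err

# Three agents: two of type 0 and one of type 1, observed at three times.
types = np.array([0, 0, 1])
X = np.ones((3, 3, 2))
X_hat = X.copy()
X_hat[1, 2] += np.array([3.0, 4.0])         # perturb the type-1 agent at one time
abs_err = trajectory_error(X, X_hat, types)
rel_err = trajectory_error(X, X_hat, types, relative=True)
```

The per-type weights mean that a single agent of a rare type contributes as much to the error as an entire large class, which matches the stated purpose of the weight factor.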

3.2 Function spaces

We begin by describing some basic ideas about measures and function spaces. Consider a compact or precompact set for some , then we define the infinity norm as,

Further define, as the space of real valued functions defined on with finite -norm. A key function space we need to consider is,

defined as the space of compactly supported, times continuously differentiable functions with a -th derivative that is Hölder continuous of order . We can then consider vectorizations of these spaces as

which has the vectorized infinity norm given by

Similarly, we consider direct sums of measures, with corresponding vectorized function spaces. This is done explicitly in section 5.1 where we define a weighted space (under a particular measure with an associated norm that induces a weighting) and consider vectorized versions of it.

In order to develop a theoretical foundation, and in line with the literature, we make assumptions on the class of functions that can arise as interaction kernels in the model (2.2). As the agents get farther and farther apart, they eventually should have no influence on each other. This is an approximation to the vanishing, or rapidly decreasing to zero, nature of pairwise interaction as distance increases that is observed in many physical models. We thus assume a maximum interaction radius for the interaction kernels which represents the maximal distance at which one agent can influence another. Similar assumptions will be made on the feature maps.

More precisely, for each pair we consider the following spaces,

| (3.6) |

for where recall that the notation means that there is an admissible space (or more generally an expression) for each element of the set . Here, are the minimum or maximum, respectively, possible interaction radii for agents in influencing agents in . Similarly, are compact sets in which contain the ranges of the feature maps, and .

We can also define the vectorizations of these spaces, subsets of which will serve as the admissible spaces defined below, given by

In order to provide uniform bounds, we introduce the following sets:

| (3.7) |

Next, we introduce notation to bound the interaction radii on the pairwise distances and pairwise velocities.

Remark 1

Here we note an important distinction. There is the range of the norms of pairwise interactions generated by the dynamics, and the underlying support of the interaction kernels themselves. These two notions of interaction radius are distinct; we will comment on the estimation and subtleties of both in the numerical algorithm section.

We let

| (3.8) | ||||

| (3.9) |

Notice that a uniform support for all interaction kernels on the pairwise distance variable is .

We denote the distribution of the initial conditions by . This measure is unknown and is the source of randomness in our system. It reflects that we will observe trajectories which start at different initial conditions, but that evolve from the same dynamical system, which allows for learnability. For the numerical experiments we will choose our initial conditions to be sampled uniformly over a system dependent range.

Due to the form of (2.2), and the norms defined below, we consider the following dynamics-induced ranges of the variables. Note that the first supremum is taken over the initial conditions, each of which generates a different solution that is used in the second supremum.

| (3.10) | ||||

| (3.11) |

We assume in this work that both of these quantities are finite. This will be easily satisfied if the measures (specifying the distribution of the initial conditions on the velocities and the environment variable) are compactly supported. This follows by the assumptions on the interaction kernels below and that we only consider finite final time .

In order for the second-order systems given by (2.2) to be well-posed, we assume that the interaction kernels lie in admissible sets. For each of the kernels, let and define

| (3.12) | ||||

When estimating the part of the system, we will consider the direct sum admissible space, for ,

| (3.13) |

The admissibility assumptions allow us to establish properties such as existence and uniqueness of solutions to (2.2) as well as to have control on the trajectory errors in finite time . It further allows us to show regularity and absolute continuity with respect to Lebesgue measure of the appropriate performance measures defined in section 5.1.

In the learning approach, we will consider hypothesis spaces that we will search in order to estimate the various interaction kernels. The hypothesis spaces corresponding to are denoted as and we vectorize them as,

| (3.14) |

Analogous to our simplified notation for described in (3.3), we define the direct sum of the hypothesis spaces as,

| (3.15) |

We will consider specific hypothesis spaces during the learning theory and numerical algorithm sections.

4 Inference problem and learning approach

In this section, we first introduce the problem of inferring the interaction kernels from observations of trajectory data and give a brief review and generalization of the learning approach proposed in the works [52] and [83].

4.1 Problem setting

Our observation data is given by for . Here and . For simplicity, we only consider equidistant observation points: for . The proposed algorithm with slight modifications also works for non-equispaced points. The sets of discrete trajectory data are generated by the system (2.2) with the unknown set of interaction kernels, i.e. , whose initial conditions are drawn i.i.d. from , a probability measure defined on the space $\mathbb{R}^{N(2d+2)}$. The goal is to infer the unknown interaction kernels directly from data.
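As an illustration of this data layout, the sketch below generates M trajectories observed at L equispaced times, each started from an independently drawn initial condition. Everything specific here is an assumption made for the example: a single-type alignment-only system with the hypothetical kernel 1/(1+r^2), uniform initial conditions on [-1, 1], and a semi-implicit Euler integrator with a fixed number of substeps between observation times.

```python
import numpy as np

def accel(x, v):
    """Alignment-only acceleration with the hypothetical kernel 1 / (1 + r^2)."""
    dx = x[None] - x[:, None]                # dx[i, j] = x_j - x_i
    dv = v[None] - v[:, None]                # dv[i, j] = v_j - v_i
    r = np.linalg.norm(dx, axis=2)
    return (dv / (1.0 + r**2)[:, :, None]).mean(axis=1)   # (1/N) sum over j

def observe(M, L, T, N=5, d=2, substeps=20, seed=0):
    """Return observation times (L,), positions and velocities (M, L, N, d)."""
    rng = np.random.default_rng(seed)
    t_obs = np.linspace(0.0, T, L)           # equidistant observation points
    h = (t_obs[1] - t_obs[0]) / substeps     # integration step (requires L >= 2)
    X = np.empty((M, L, N, d))
    V = np.empty((M, L, N, d))
    for m in range(M):
        # i.i.d. initial condition for trajectory m (assumed uniform distribution)
        x = rng.uniform(-1.0, 1.0, (N, d))
        v = rng.uniform(-1.0, 1.0, (N, d))
        for l in range(L):
            X[m, l], V[m, l] = x, v
            if l < L - 1:
                for _ in range(substeps):    # semi-implicit Euler steps
                    v = v + h * accel(x, v)
                    x = x + h * v
    return t_obs, X, V

t_obs, X, V = observe(M=3, L=5, T=1.0)
```

Different trajectories are independent because their initial conditions are drawn independently, while the L observations within one trajectory are dependent; this is the structure the learning theory is built around.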

4.2 Loss functionals

Given observations, the references [52, 51, 83] proposed an empirical error functional, recalling the notational convention (3.3),

The estimators of interaction kernels are defined as the minimizers of the error functionals and over and respectively, i.e.

| (4.3) |

For the learning theory, we will consider the following error functionals. On the energy and alignment portion, we consider,

| (4.4) |

Similarly, on the portion, we consider,

| (4.5) |

We can relate these error functionals to the natural empirical error functionals introduced at the start of the section as follows. By the Strong Law of Large Numbers we have that,

The relationship between the theoretical and empirical error functionals will play a key role in the learning theory.
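An empirical error functional of this kind can be sketched as an average, over trajectories and observation times, of the squared mismatch between observed accelerations and the accelerations predicted by a candidate kernel. The version below is an assumption-laden simplification: a single agent type, an alignment kernel only, unit masses, and a 1/N weight in place of the type-dependent weights. Random snapshots stand in for trajectory data, and the names `alignment_force` and `empirical_error` are hypothetical.

```python
import numpy as np

def alignment_force(x, v, phi):
    """(1/N) sum_j phi(r_ij) (v_j - v_i) for each agent i; x, v have shape (N, d)."""
    dv = v[None] - v[:, None]
    r = np.linalg.norm(x[None] - x[:, None], axis=2)
    return (phi(r)[:, :, None] * dv).mean(axis=1)

def empirical_error(X, V, Acc, phi):
    """Average squared residual over M trajectories and L times.

    X, V, Acc: arrays of shape (M, L, N, d); phi: candidate kernel on distances.
    """
    M, L, N, _ = X.shape
    total = 0.0
    for m in range(M):
        for l in range(L):
            resid = Acc[m, l] - alignment_force(X[m, l], V[m, l], phi)
            total += np.sum(resid**2) / N
    return total / (M * L)

# Synthetic snapshots with accelerations produced by a hypothetical true kernel.
rng = np.random.default_rng(1)
M, L, N, d = 50, 4, 6, 2
X = rng.uniform(-1.0, 1.0, (M, L, N, d))
V = rng.uniform(-1.0, 1.0, (M, L, N, d))
phi_true = lambda r: 1.0 / (1.0 + r**2)
Acc = np.array([[alignment_force(X[m, l], V[m, l], phi_true)
                 for l in range(L)] for m in range(M)])

E_true = empirical_error(X, V, Acc, phi_true)
E_wrong = empirical_error(X, V, Acc, lambda r: np.ones_like(r))
```

The functional vanishes at the true kernel and is strictly positive for the mismatched one. Since it is an average of i.i.d. per-trajectory terms, its value stabilizes as M grows, which is the content of the Strong Law of Large Numbers statement above.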

4.3 Overview of theoretical contributions

The papers [83, 52, 51] have applied this learning approach to a variety of systems, and the extensive numerical simulations demonstrate the effectiveness of the approach. However, theoretical guarantees of the proposed approach for second-order systems have not been fully developed and are the main focus of this paper. Our theory contains the first-order theory in [51] as a special case, as discussed in Appendix F. We focus on the regime where is fixed but . We provide a learning theory that answers the fundamental questions:

- Quantitative description of estimator errors. We will introduce measures to describe how close the estimators are to the true interaction kernels, that lead to novel dynamics-adapted norms. See section 5.

- Identifiability of kernels. We will establish the existence and uniqueness of the estimators as well as relate the solvability of our inverse problem to a fundamental coercivity property. See section 6.

- Consistency and optimal convergence rate of the estimators. We will prove theorems on strong consistency and optimal minimax rates of convergence of the estimators, which exploit the separability of the learning on the energy and alignment from the learning on the environment variable. See section 7.

- Trajectory prediction. We prove a theorem that describes the performance of the estimated dynamics using the estimated kernels compared to the true dynamics. Our result demonstrates how the expected supremum error (over the entire time interval) of our trajectories is controlled by the norm of the difference between the true and estimated kernels, further justifying our choice of norms and estimation procedure. See section 8.

4.4 Hypothesis Space and Algorithm

First, we choose finite-dimensional subspaces of , i.e., , whose basis functions are piecewise polynomials of varying degrees (other types of basis functions are also possible, e.g., clamped B-splines as shown in [52]); similarly for . Hence, each test function, , can be expressed as a linear combination of the basis functions as follows

Substituting this linear combination back into (4.2), we obtain a system of linear equations,

Here, with and being the collection of or respectively. Moreover, and . See Sec. H for full details.
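As an illustration of how a basis expansion turns the minimization into a linear system, the following is a minimal sketch for a deliberately simplified setting: a homogeneous system with a single radial, energy-type kernel, where the acceleration of agent i is assumed to be (1/N) Σ_j φ(r_ij)(x_j − x_i), and piecewise-constant basis functions. The function names (`basis_responses`, `learn_coefficients`), the synthetic data, and the model itself are assumptions made for this example; it is not the full algorithm of Appendix H.

```python
import numpy as np

def basis_responses(x, edges):
    """Response of each piecewise-constant basis function at one observation.

    x: (N, d) agent positions; edges: increasing bin edges on the distance axis.
    Column k holds (1/N) * sum_j 1{r_ij in bin k} (x_j - x_i), flattened over (i, d).
    Distances beyond the last edge are clipped into the last bin.
    """
    N, d = x.shape
    n = len(edges) - 1
    diff = x[None, :, :] - x[:, None, :]            # diff[i, j] = x_j - x_i
    r = np.linalg.norm(diff, axis=-1)               # pairwise distances
    idx = np.clip(np.searchsorted(edges, r, side="right") - 1, 0, n - 1)
    Phi = np.zeros((N, d, n))
    for k in range(n):
        mask = (idx == k) & ~np.eye(N, dtype=bool)  # exclude self-interaction
        Phi[:, :, k] = (mask[:, :, None] * diff).sum(axis=1) / N
    return Phi.reshape(N * d, n)

def learn_coefficients(states, accels, edges):
    """states, accels: (M, L, N, d). Solves A alpha = b in the least-squares sense."""
    M, L = states.shape[:2]
    n = len(edges) - 1
    A, b = np.zeros((n, n)), np.zeros(n)
    for m in range(M):
        for l in range(L):
            Phi = basis_responses(states[m, l], edges)
            A += Phi.T @ Phi / (M * L)
            b += Phi.T @ accels[m, l].reshape(-1) / (M * L)
    alpha, *_ = np.linalg.lstsq(A, b, rcond=None)
    return alpha, A, b

# Synthetic check: data generated by a kernel that lies in the hypothesis space.
rng = np.random.default_rng(0)
M, L, N, d = 20, 3, 5, 2
edges = np.array([0.0, 0.3, 0.6, 0.9, 1.5])
alpha_true = np.array([1.0, -0.5, 0.25, 2.0])
states = rng.uniform(0.0, 1.0, size=(M, L, N, d))
accels = np.stack([
    np.stack([(basis_responses(x, edges) @ alpha_true).reshape(N, d) for x in traj])
    for traj in states
])
alpha_hat, A, b = learn_coefficients(states, accels, edges)
```

In this noise-free toy problem the true kernel lies in the hypothesis space, so the recovered coefficients should match `alpha_true` up to rounding.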

The overhead memory storage needed is , with needed for trajectory data, (here , the sum of the number of basis functions on and ) for learning matrices, and for right hand side vectors. Hence if , we can consider parallelization in in order to reduce the overhead memory, ending up with with . The final storage of and only needs .

The computational cost of for solving the system is , with for computing pairwise data, for constructing the learning matrix and right hand side vector, and for solving the linear system. In the case of choosing the optimal number of basis functions, i.e., , we end up with the total computational cost, of the order , which is slightly super linear in , but less than quadratic in the common situation when .

Similar analysis on solving also shows that the computational cost is slightly super linear in .
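The parallelization over trajectories mentioned in the memory discussion relies on the fact that a least-squares normal matrix and right hand side are sums of per-trajectory contributions. The sketch below illustrates this additivity with generic design matrices; the names `normal_equations` and `accumulate_in_chunks` are hypothetical, and the chunks stand in for batches of trajectories that could be processed on separate workers.

```python
import numpy as np

def normal_equations(Phi, y):
    """Contribution of one chunk of observations to the normal equations."""
    return Phi.T @ Phi, Phi.T @ y

def accumulate_in_chunks(Phi_chunks, y_chunks):
    """Sum per-chunk contributions; only one chunk is held in memory at a time."""
    n = Phi_chunks[0].shape[1]
    A, b = np.zeros((n, n)), np.zeros(n)
    for Phi, y in zip(Phi_chunks, y_chunks):
        A_c, b_c = normal_equations(Phi, y)
        A += A_c
        b += b_c
    return A, b

# Toy data: 12 observations, 3 basis functions, split into 4 chunks.
rng = np.random.default_rng(0)
Phi_full = rng.standard_normal((12, 3))
y_full = rng.standard_normal(12)
A_chunked, b_chunked = accumulate_in_chunks(
    np.array_split(Phi_full, 4), np.array_split(y_full, 4)
)
A_full, b_full = normal_equations(Phi_full, y_full)
```

Only the small accumulated matrix and vector need to be kept at the end, which is consistent with the final storage remark above.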

5 Learning theory

Given estimators, how should we best measure the estimation error? This is the first question to address in order to have a full understanding of the performance. In earlier works, a set of probability measures adapted to the dynamical system and learning setting was introduced to describe how close the estimators are to the true interaction kernels. We will generalize these ideas and measures to our learning problem.

5.1 Probability measures

The variables we are working with have a natural distribution on the space of pairwise distances and features. Together with the fact that our functions have as arguments the variables () which are functions of the state space, we are led to consider probability measures which account for the distribution of the data, while respecting the interaction structure of the system. For further intuition into these measures, see [51, 52, 13]. For each interacting pair , we introduce the following probability measures,

| (5.1) |

where for and for , and the Dirac measures are defined as

We use a superscript to denote that the variable is calculated from the data from that trajectory. For example, denotes the feature maps between agents and at time along the trajectory.

The measure is the discrete counterpart of at the observation time instances. In practice, we can use to approximate since it can be computed from observational data and will converge to as .
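To make the empirical measure concrete, the following sketch pools all pairwise distances over trajectories, observation times, and agent pairs, and bins them into a histogram. It covers only the distance variable of a homogeneous system (no velocities or feature maps), and the function name `empirical_distance_measure` and the array layout `(M, L, N, d)` are assumptions made for this example.

```python
import numpy as np

def empirical_distance_measure(states, bins):
    """Histogram approximation of the empirical measure of pairwise distances.

    states: (M, L, N, d) positions for M trajectories, L times, N agents.
    bins: bin edges (or a bin count) passed to np.histogram.
    Returns bin probabilities summing to one, and the bin edges.
    """
    M, L, N, d = states.shape
    diff = states[:, :, None, :, :] - states[:, :, :, None, :]
    r = np.linalg.norm(diff, axis=-1)            # (M, L, N, N) distances
    off_diag = ~np.eye(N, dtype=bool)            # drop the i == i' pairs
    samples = r[:, :, off_diag]                  # (M, L, N * (N - 1))
    counts, edges = np.histogram(samples, bins=bins)
    return counts / counts.sum(), edges

# Two agents at distance 1 in one dimension: all mass falls in the middle bin.
demo_states = np.zeros((1, 1, 2, 1))
demo_states[0, 0, 1, 0] = 1.0
probs, bin_edges = empirical_distance_measure(demo_states, bins=[0.0, 0.5, 1.5, 2.0])
```

The normalization assumes that at least one sample falls inside the chosen bin range.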

We also consider the marginal distributions

| (5.2) |

and , , , defined analogously as above. The empirical measures, , are the ones used in the actual algorithm to quantify the learning performances of the estimators and respectively. They are also crucial in discussing the separability of and .

For ease of notation, we introduce the following measures to handle the heterogeneity of the system, and which are used to describe error over all of the pairs .

| (5.3) |

5.2 Learning performance

We now discuss the performance measures for the estimated interaction kernels. We have already treated the trajectory estimation error in section 3.1. We use weighted -norms (with mild abuse of notation, we omit the weight from the notation) based on the dynamics-adapted measures introduced above, and with analogous definitions when discrete over :

| (5.4) | |||||

Our learning theory focuses on minimizing the difference between and in the joint norm given by (5.4). As long as the joint norm is small, our estimators produce faithful approximations of the right hand side function of the original system and trajectories. However, it does not necessarily imply that both ’s and ’s are small in their corresponding energy- and alignment-based norms, since the joint norm is a weaker norm. It would be interesting to study if there is any equivalence between these two norms, but the problem appears to be quite delicate. The theoretical investigation is still ongoing.

Now, we have all the tools needed to establish a theoretical framework: dynamics induced probability measures, performance measurements in appropriate norms, and loss functionals. These will allow us to discuss the convergence properties of our estimators. Full details of the numerical algorithm are given in Appendix H.

Notational summary

A summary of the learning theory notation introduced in sections 3, 4, and the notation above, is given below in table 3.

| Notation | Definition | Ref |
|---|---|---|
| | number of trajectories | Sec. 1 |
| | number of times in for each trajectory | Sec. 2 |
| | full state space vector containing | Sec. 2 |
| | wildcard, means the notation applies for all variables | Sec. 2 |
| | | (3.1) |
| | distribution on the initial conditions | Sec. 3.2 |
| | vectorized true interaction kernels | (3.2) |
| | with | (3.2) |
| | shorthand denoting energy and alignment part of system | (3.3) |
| | represents the joint function | (3.3) |
| | | (3.4) |
| | | (3.7) |
| | | (3.8) |
| | | (3.8) |
| | admissible spaces for the kernels | (3.12) |
| | the hypothesis spaces for | (3.14) |
| | the hypothesis spaces for | (3.14) |
| | direct sum of hypothesis spaces | (3.15) |
| | empirical error functional, error functional | Sec. 4.2 |
| | | (4.3) |
| | | (4.3) |
| | basis for | Sec. 4.4 |
| , | measure for , with continuous time, infinite trajectories | (5.3), G |
| , | measure for , discrete in time, infinite trajectories | (5.3), G |
| | | (5.3) |
| , | coercivity constant on the , hypothesis spaces | Def. 4 |
| , | hypothesis spaces on depending on | Sec. 7 |
| , | Learning matrices for the inverse problem | Sec. 9 |
| | -covering number, under the -norm, of a set | [77] |

6 Identifiability of kernels from data

In this section we introduce a technical condition on the dynamical system that relates to the solvability of the inverse problem and plays a key role in the learning theory. We establish theorems in two directions:

1. Showing how identifiability of the kernels can be derived from the coercivity condition by relating the coercivity constant to the singular values of the learning matrices associated to our inverse problem, for both finitely and infinitely many trajectories.

2. Establishing the coercivity condition for a wide class of dynamical systems of the form (2.2), under mild assumptions on the distribution of the initial conditions. From our numerical experiments, we expect that coercivity holds even more generally.

We will make the following assumptions on the hypothesis spaces used in the learning approach for the remainder of the paper:

Assumption 2

is a compact convex subset of (see 3.13) which implies that the infinity norm of all elements in is bounded above by a constant .

Assumption 3

is a compact convex subset of (see 3.12) and is bounded above by .

It is easy to see that can be naturally embedded as a compact subset of and that can be naturally embedded as a compact subset of (recall these measures are defined in section 5.1). Assumptions 2, 3 ensure the existence of minimizers to the loss functionals defined in (4.2) and (4.2), which will be proven in Appendix B.

In order to ensure learnability we introduce a coercivity condition, with terminology coming from the Lax-Milgram theorem. In the second-order case we will have two coercivity conditions, one for the energy and alignment, and the other for the variable. These conditions serve the same purpose in both cases. They first ensure that the minimizers to the error functionals are unique, and second that when the expected error functional is small, then the distance from the estimator to the true kernels is small in the appropriate norm.

Due to their connection to the error functional and the learnability of the kernels, the coercivity conditions play an important role in the theorems of Section 7.

Definition 4 (Coercivity condition)

The dynamical system (2.2), observed at time instants and with initial condition distributed on $\mathbb{R}^{(2d+1)N}$, satisfies the coercivity condition on the hypothesis space with constant if

| (6.1) |

An analogous definition holds for continuous observations on the time interval , by replacing the sum over observations at discrete times with an integral over . Similarly, the system satisfies the coercivity condition on the hypothesis space with constant if

| (6.2) |

An analogous definition holds for continuous observations on the time interval , by replacing the sum over observations at discrete times with an integral over .

In the following, we prove the coercivity condition on general compact sets of under suitable hypotheses. Our result is independent of , which implies that the finite sample bounds of Theorem 9 can be dimension free – in that the coercivity constant has no dependence on . This result implies that coercivity may be a fundamental property of the dynamical system, including in the mean field regime ().

6.1 Identifiability from coercivity

By choosing the hypothesis space to be compact and convex, we are able to show that the error functional has a unique minimizer. However, many possible bases exist that could potentially yield good performance (in terms of the error functional and error to the true kernel). We want to choose a basis such that the regression matrix, defined in Appendix H, is well-conditioned, which ensures that the inverse problem can be solved and thus that an estimator with good performance can be learned. In the proposition below we establish two results in this direction. The key for both results is that the basis is chosen to be orthonormal in , versus the naive choice of basis in the underlying direct sum of spaces that the interaction kernels live in. The first result is theoretical and shows that, under appropriate assumptions on the basis, the minimal singular value of the expected regression matrix (denoted ) equals the coercivity constant. The second result is critical for practical implementation, as it lower bounds the minimal singular value of the regression matrix by the coercivity constant with high probability. In both cases, the numerical performance is affected by the size of the coercivity constant of ; if the hypothesis space is well chosen, then the coercivity constant will be sufficiently large and the regression matrix will be well-conditioned.

To ease the notation, we introduce the bilinear functional on , defined by

| (6.3) | ||||

for any , and . For every pair let be a basis of

satisfying the orthonormality and boundedness conditions

| (6.4) |

We note that multivariable basis functions arise naturally in this setting due to the model. A tensor product basis of splines or piecewise polynomials can be used, as one explicit example. The notation allows multivariable functions, different choices for the number of basis functions across pairs , and a different number of basis functions within a pair with respect to the underlying coordinates of the tensor product.
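One way to obtain a basis that is orthonormal with respect to a data-driven measure, sketched here under simplifying assumptions, is to evaluate a starting basis on samples from that measure and whiten its empirical Gram matrix. The example uses a one-dimensional monomial basis and uniform samples as a stand-in for draws from the dynamics-adapted measure; the function `orthonormalize` and this sampling choice are illustrative, and the inner product used is a plain unweighted empirical one rather than the specific inner product of (6.4).

```python
import numpy as np

def orthonormalize(Phi):
    """Whiten a basis with respect to the empirical measure of the sample points.

    Phi: (n_samples, n_basis) values of the starting basis at the samples.
    Returns Psi = Phi @ T, whose empirical Gram matrix Psi.T @ Psi / n_samples
    is the identity, together with the change-of-basis matrix T.
    """
    n_samples = Phi.shape[0]
    G = Phi.T @ Phi / n_samples        # empirical Gram matrix
    Lc = np.linalg.cholesky(G)         # G = Lc @ Lc.T (requires G positive definite)
    T = np.linalg.inv(Lc).T            # so that T.T @ G @ T = I
    return Phi @ T, T

# Monomials 1, r, r^2 evaluated at samples standing in for draws from the measure.
rng = np.random.default_rng(2)
r = rng.uniform(0.0, 1.0, size=2000)
Phi = np.stack([np.ones_like(r), r, r**2], axis=1)
Psi, T = orthonormalize(Phi)
gram_before = Phi.T @ Phi / len(r)
gram_after = Psi.T @ Psi / len(r)
```

The Gram matrix of the monomials is poorly conditioned, while the whitened basis has an identity Gram matrix by construction.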

By convention, we use the lexicographic ordering to order within pairs (with order ), and then across pairs (with the lexicographic ordering on pairs of integers). Set ; then for any function , we can write

Under the setting above, we have the following relationship between the coercivity constant and the minimal singular value of the empirical and expected learning matrix:

Proposition 5

Consider the matrices

and choose the hypothesis spaces as and . Then the coercivity constants for are the smallest singular value of , , respectively:

| (6.5) |

with defined in (6.1) and defined in (6.2). Additionally, for large , the smallest singular value of satisfies the inequality

with probability at least with . Similarly, for large , the smallest singular value of satisfies the inequality

with probability at least with . Therefore, for a system and associated hypothesis space satisfying the coercivity condition, and for sufficiently large, the inverse problem will be solvable with high probability, with condition number controlled by the coercivity constant.

Proof We prove the result in the case; the proof of the results about the part of the system is analogous. The orthonormality of the component functions given in (6.4) implies that . Expand in this basis as . Let the vector , and notice that

This lower bound is achieved by the singular vector corresponding to the singular value , so that by definition (6.1) we have that .

For the second statement, we consider the learning matrix (defined in section H), which we can also write as to emphasize the dependence on as needed, built from the observed trajectories. By construction, for each , and by the Strong Law of Large Numbers. Next we will derive some important properties of the learning matrix that will allow us to apply the matrix Bernstein inequality (see [75], Theorem 6.1.1, Corollary 6.1.2). Note that we will use the notation from this reference. First we note an elementary matrix analysis result (see [7] Problem III.6.13); for any two square matrices ,

All norms in this proof are the spectral norm, unless otherwise specified. Thus if we get a concentration inequality of the form we will get the desired result relating the minimal singular values of to . First, notice that . Additionally, using the definition of the regression matrix, and our assumptions on the kernels and the dynamics, we can bound every entry by . This immediately implies the bound

Next, we upper bound the matrix variance statistic (in our case where ), defined as

Using a similar analysis to bound each entry of the matrices, we can arrive at the result that . Now, we apply the matrix Bernstein inequality to see that

Note that the in the numerator appears because carries a factor of .

Lastly, choose , which together with the results above yield the desired inequality.
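The mechanism of this proof can be checked numerically on a toy problem. The sketch below assumes that the elementary matrix result invoked above is the standard perturbation bound |σ_min(A) − σ_min(B)| ≤ ‖A − B‖ in the spectral norm, and uses averages of i.i.d. rank-one Gaussian matrices (whose expectation is the identity) as a stand-in for the empirical and expected learning matrices. The helper names are hypothetical.

```python
import numpy as np

def sigma_min(X):
    """Smallest singular value (np.linalg.svd returns them in descending order)."""
    return np.linalg.svd(X, compute_uv=False)[-1]

def empirical_matrix(M, n, rng):
    """Average of M i.i.d. matrices v v^T with v ~ N(0, I_n); its expectation is I_n."""
    V = rng.standard_normal((M, n))
    return V.T @ V / M

rng = np.random.default_rng(1)
n = 5
A_inf = np.eye(n)                     # stand-in for the expected matrix
results = []
for M in (50, 500, 5000):
    A_M = empirical_matrix(M, n, rng)
    gap = abs(sigma_min(A_M) - sigma_min(A_inf))
    dev = np.linalg.norm(A_M - A_inf, 2)   # spectral norm of the deviation
    results.append((M, gap, dev))
```

In each case the gap between the minimal singular values is bounded by the spectral-norm deviation, which is the quantity a matrix concentration inequality controls.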

From Proposition 5 we see that, for each hypothesis space , it is important to choose a basis that is well-conditioned in , instead of in the corresponding spaces. If not, the learning matrices, defined in Appendix H, may be ill-conditioned or even singular. This would lead to fundamental numerical challenges in solving for the kernels. In order to mitigate these issues, one can use piecewise polynomials on a partition of the support of the empirical measure and/or use the pseudo-inverse with an adaptive tolerance.
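A minimal sketch of the pseudo-inverse remedy follows, assuming a simple relative threshold on the singular values. The function name `solve_truncated` and the threshold rule `rel_tol * s[0]` are assumptions for illustration; the adaptive tolerance actually used is not specified here.

```python
import numpy as np

def solve_truncated(A, b, rel_tol=1e-8):
    """Solve A c = b through a truncated SVD pseudo-inverse.

    Singular values below rel_tol times the largest one are discarded, so
    directions that the data do not constrain get a zero coefficient.
    Returns the coefficients and the numerical rank that was kept.
    """
    U, s, Vt = np.linalg.svd(A)
    keep = s > rel_tol * s[0]
    coeffs = Vt[keep].T @ ((U[:, keep].T @ b) / s[keep])
    return coeffs, int(keep.sum())

# A basis function with no data in its support yields a zero row and column.
A_demo = np.diag([2.0, 1.0, 0.0])
b_demo = np.array([2.0, 3.0, 0.0])
coeffs_demo, rank_demo = solve_truncated(A_demo, b_demo)
```

For this singular example a direct solve would fail, while the truncated solve returns the minimum-norm solution with the unconstrained coefficient set to zero.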

6.2 Discussions on the coercivity condition

The coercivity condition is key to the identifiability of the kernels from data. It is determined by the distribution of the solution to the agent system and introduces constraints on the hypothesis space. For the second-order system, it is therefore related to the distribution of the initial conditions, the true interaction kernels, and the non-collective force. The coercivity condition has been studied for first-order systems in [52, 51, 48]. Below, we give a brief review.

For homogeneous systems, [52, 51] showed that the coercivity condition holds true on any compact subset of the corresponding space for the case of . This result has been generalized to cover heterogeneous systems in [51] and a few examples of the stochastic homogeneous system including linear systems and nonlinear systems with stationary distributions for general in [48].

In this paper, we shall employ a similar idea as for first-order systems and extend the result to second-order systems. One key in the proof is to show the positiveness of integral operators that arise in the expectation in Eq. (6.1). We focus on a representative model of second-order homogeneous systems,

| (6.6) |

which includes the first-order systems considered in [13, 52, 51] as special cases and various second-order system examples in [52, 83] as specific applications. We shall prove the coercivity condition holds true for the case :

Theorem 6

Consider the system (C.1) at time with the initial distribution , where is exchangeable Gaussian with for a constant , are exchangeable with finite second moment, and they are independent of . Then

where

- , where () and and are non-negative constants independent of , and are strictly positive for compact of .

- with () and is a non-negative constant independent of , which is strictly positive for compact of .

This exhibits a particular case in which even the coercivity constant is independent of the number of agents . Consequently, the estimation errors of our estimators are independent of , and therefore not only is the convergence rate of our estimators independent of the dimension of the phase space, but even the constants in front of the rate term are independent of . Our results extend those for first-order systems from [52, 51, 83]. The numerical experiments on second-order systems, already conducted in [83], support the claim that the coercivity condition is satisfied by large classes of second-order systems, and is “generally” satisfied for general on relevant hypothesis spaces, with a constant independent of the number of agents thanks to the exchangeability of the distribution of the initial conditions, and of the agents at any time . The proof of the result above is given in Appendix C.

7 Consistency and optimal convergence rate of estimators

The final preparatory results for our main theorems combine concentration with a union bound. Here we control the probability that the supremum of the difference between the expected and empirical normalized errors over the whole hypothesis space is large.

7.1 Concentration

Our first main result is a concentration estimate that relates the coercivity condition to an appropriate bias-variance tradeoff in our setting. Let be the -covering number, with respect to the -norm, of the set .

Theorem 7 (Concentration)

Suppose that . Consider convex, compact (with respect to the -norm) hypothesis spaces

bounded above by respectively. Additionally, assume that the coercivity condition (6.1) holds on and condition (6.2) on .

Then for all , with probability (with respect to ) at least , we have the estimates

| (7.1) | ||||

provided that, for the first bound to hold,

and similarly for the second inequality, using .

Proof [of Theorem 7] We start by setting in Proposition 21; it is easy to see that this value yields the tightest bound in the argument below. To ease the notation we let and similarly for . From the Proposition, we have that

holds true with probability

| (7.2) |

This immediately implies, by choosing and reorganizing, that with probability

By definition of as the minimizer of the empirical error functional , we see that

and combining this result with equation (B.13) from Proposition 15, we have

| (7.3) |

with the final inequality holding with probability . Now we bound the error of the empirical estimator to the true interaction kernel, so that with probability

The first inequality follows from the coercivity condition (6.1) and the definition of . The second follows by the definition of the norms. Now for a chosen , let

and solve for . The proof for the part of the system result is similar.

7.2 Consistency

In the regime where , we will choose an increasing sequence of hypothesis spaces, each satisfying the conditions of Theorem 7. By our assumptions on the kernels, we can also choose the sequence of ’s such that the approximation error goes to zero as . This enables us to control the infimum on the right hand side of (7.1). From here we can apply Theorem 7 on each to prove the consistency of our estimators with respect to the norm and derive the following consistency theorem.

Theorem 8 (Strong Consistency)

Suppose that

is a family of compact and convex subsets such that the approximation error goes to zero,

Further suppose that the coercivity condition holds on , and that is compact in . Then the estimator is strongly consistent with respect to the norm:

An analogous consistency result holds for the estimator in the variable.

These two results together provide a consistency result on the full estimation of the triple and thus consistency of our estimation procedure on the full system (2.2).

Proof [of Theorem 8 ] To simplify the notation, we use the same conventions as the proof of Theorem 7 and let . By definition of the coercivity constant in (6.1), we have the inequality . From an analogous argument used to arrive at equation (7.3) in the proof of Theorem 7, we obtain that

| (7.4) |

Let , the inequality (7.4) gives us that

We now bound the two terms in the above expression separately. For the first term, the proof of Theorem 7 shows that

where , , and is finite because of the compactness assumption on .

Summing this bound in we get that,

For the second term, the bound (B.3) yields that

Since is fixed, the above result, together with our assumption on the sequence of hypothesis spaces, implies that for sufficiently large. So we have . The finiteness of the two sums above implies, by the first Borel-Cantelli Lemma, that

As was arbitrary, we have the desired strong consistency of the estimator. An exactly analogous argument gives the result on the part of the system.

7.3 Rate of convergence

Given data collected from trajectories, we would like to choose the best hypothesis space to maximize the accuracy of the estimators. Theorem 7 highlights the classical bias-variance tradeoff in our setting. On the one hand, we would like the hypothesis space to be large so that the bias

is small. Simultaneously, we would like to be small enough so that the covering number is small. Just as in nonparametric regression, our rate of convergence depends on a regularity condition of the true interaction kernels and properties of the hypothesis space, as is demonstrated in the following theorem. We establish the optimal (up to a log factor) min-max rate of convergence by choosing a hypothesis space in a sample size dependent manner.

Comments

Parts (a) and (c) of the theorem concern an approximation theory type rate of convergence where plays no role in the choice of hypothesis space, whereas parts (b) and (d) of the theorem present a minimax rate of convergence that chooses an adaptive hypothesis space depending on to achieve the optimal rate.

The splitting of the convergence result, between and parts, emphasizes a common theme of the paper: we leverage that the system can be decoupled for the learning process to improve the rate of convergence and performance in learning the estimators, but analytically we study it as the full coupled system, see the trajectory prediction result in Theorem 10.

A final important comment is that even though the dimension of the space in which we measure the error may be large, namely the dimension of (which can be calculated as ), we exploit the structure of the system in such a way that our convergence rate only depends on the number of unique variables across all of the estimators. This number is given by the number of variables in , denoted as , for the portion and by for the portion of the system. We note that we are not predicting the number of variables or their form; both are assumed known, for the pairwise interaction variables as well as the feature maps.

Theorem 9 (Rate of Convergence)

Let

denote the minimizer of the empirical error functional (defined in (4.2)) over the hypothesis space .

(a) Let the hypothesis space be chosen as the direct sum of the admissible spaces, namely and assume that the coercivity condition (6.1) holds true on it.

Then, there exists a constant depending only on such that

(b) Assume that is a sequence of finite-dimensional linear subspaces of satisfying the dimension and approximation constraints

| (7.5) |

for some fixed constants representing dimension-independent approximation characteristics of the linear subspaces, and related to the regularity of the kernels. The value can be thought of as the number of basis functions along each of the axes. Suppose the coercivity condition holds true on the set . Define to be the closed ball centered at the origin of radius in . Let . If we choose the hypothesis space as , then there exists a constant depending on such that we achieve the convergence rate,

| (7.6) |

(c) Under the corresponding assumptions as in (a), there exists a constant depending only on such that

(d) Under the corresponding assumptions as in (b), there exists a constant depending only on such that, and for ,

| (7.7) |

We in fact prove bounds not only in expectation, but also with high probability, for every fixed large-enough , as the proof will show.

Proof [of Theorem 9] For part (a), let . Standard results on covering numbers of function spaces (see theorem 2.7.1 of [77]) give us that the covering number of satisfies

for some absolute constant depending only on and . By assumption on the hypothesis space, we have that

From this, the concentration estimate (7.1) together with the covering number bound imply that,

| (7.8) |

where and . Next, define the function

which we will minimize to achieve the desired probability bound.

By direct calculation, if we choose , where . It is then an easy computation to see that the derivative of is for all . Thus, we can put this result into the bound (7.8) to arrive at the probability bound,

| (7.9) |

Integrating this bound over and using the elementary inequality for all , we get that

Now, bringing the coercivity part from (7.1) back in, we achieve the rate,

where is an absolute constant that only depends on .

For part (b), we note the following basic result on the covering number of by -balls (see [31, Proposition 5]),

Using (7.1), and the approximation assumption, we bound the probability as

| (7.10) | ||||

where , , , and are absolute constants independent of . Define

To find the optimal in terms of , we minimize in . By taking a derivative, and solving the corresponding equation, we see that the optimal is

with constant independent of and only depending on . For convenience we will choose . Now let and consider

As before, let and consider . It is easy to see that and . Together with the continuity of , these facts imply that there exists a constant , depending on such that . We further need that for all . By taking the derivative of , setting it , we find that this condition eventually holds by basic calculus on . Therefore, if needed to satisfy the derivative condition, we can enlarge the constant to a constant (independent of ) such that and for all . These results imply the probability bound,

| (7.11) |

Integrating this bound over and using the elementary inequality for all , we get that

where is a constant depending on . Now with and using (7.1), we have shown the convergence rate,

where is an absolute constant that only depends on .

In both theorems, the convergence rates and coincide with the minimax rate of convergence for nonparametric regression in the corresponding dimension, up to the logarithmic factor. It is possible that this logarithmic factor could be removed (see techniques in Chapters 11-15 of [39]), but with considerable additional complexity of the proof. Achieving the same rate of convergence as if we had observed the noisy values of the interaction kernels directly, rather than through the dynamics, is a major strength of our approach. The strong consistency results show the asymptotic optimality of our method, and for wide classes of systems the assumptions of the theorems apply. Specifically, for part (b) of the theorems, the dimension and approximation conditions can be explicitly achieved by piecewise polynomials or splines appropriately adapted to the regularity of the kernel. In the conditions of Theorem 9, can be the number of partitions along each axis of the variables in . Then, using multivariate splines or piecewise polynomials we will have a fixed constant (corresponding to the number of parameters to estimate for each function) times as the dimension of the linear space. Furthermore, by standard approximation theory results, see [70] (Chapters 12, 13), [33], [35], for the regularity of the interaction kernels we achieve the desired approximation condition with piecewise polynomials of degree . In our admissible spaces we have ; note that the theorems are stronger if we have a kernel of higher regularity.
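To give a feel for how the number of variables enters such rates, the sketch below evaluates the classical form of the minimax rate for nonparametric regression of an s-smooth function in a given dimension, with a logarithmic factor, namely (log M / M)^{2s/(2s + dim)}. This closed form is the standard textbook rate and is an assumption here about the shape of the bounds (7.6) and (7.7), whose exact expressions and constants are not reproduced; the function names are hypothetical.

```python
import math

def rate_exponent(s, dim):
    """Exponent 2s / (2s + dim) of the classical nonparametric regression rate."""
    return 2.0 * s / (2.0 * s + dim)

def minimax_rate(M, s, dim):
    """(log M / M) ** (2s / (2s + dim)), ignoring multiplicative constants."""
    return (math.log(M) / M) ** rate_exponent(s, dim)

# Same number of trajectories and smoothness, increasing number of variables.
M_demo, s_demo = 1000, 1.0
rates = {dim: minimax_rate(M_demo, s_demo, dim) for dim in (1, 2, 3)}
```

With the sample size and smoothness held fixed, the predicted error bound degrades as the number of variables grows, which is why sharing variables across kernels matters.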

We next briefly examine the convergence rate on a few systems of fundamental interest. Recall that in table 2 we have as the final two columns the values . These correspond directly to the rate of convergence of each of the systems under our learning approach.

Some specific highlights:

- For Anticipation Dynamics (AD), even though we are learning both an energy and alignment kernel, because there are only unique variables shared across both of them we learn at the -dimensional rate.

- For the Synchronized Oscillator we achieve the -dimensional optimal learning rate on each of the and portions (rather than a -dimensional rate) due to the decoupled nature of the system. Similarly, we only pay the -dimensional rate twice for the Phototaxis system. This is a key reason for splitting our learning theory between - and -interaction kernels and accounting for shared and non-shared variables: this enables us to substantially improve the performance guarantees in actual applications.

- Due to the design of the measures, norms and the associated learning algorithm, even in the heterogeneous case for celestial mechanics and predator-swarm, we only pay the -dimensional learning rate, although the constants are of course affected by the heterogeneity and the algorithm requires a larger learning matrix.

One downside of the results above is the lack of dependence on , as it seems natural that finer time samples in each trajectory should improve the results. Indeed, the numerical experiments of [83, 52, 51] demonstrate that more data in may help improve the performance. One technique used in [83] for very long trajectory data (large , medium to small ) is to split each trajectory into larger with smaller in each. In this way, one can explicitly show the desired convergence rate in a form agreeing with the above theorems. We do not believe that this leads to significantly different performance compared to using the original data, only that we can easily transform the data, with no loss of performance, into a form to which the theorems apply. Explicit dependence on is not the objective of this work, see [13], but further study of the mean-field regime is of interest to the authors and work is ongoing.
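The trajectory-splitting transformation described above amounts to a reshape of the data array. The sketch below assumes observations stored as an array of shape `(M, L, N, d)` and cuts each trajectory into consecutive segments of equal length; the function name `split_trajectories` and the storage layout are assumptions made for this example.

```python
import numpy as np

def split_trajectories(states, pieces):
    """Cut each trajectory into `pieces` consecutive segments of equal length.

    states: (M, L, ...) array; returns an array of shape (M * pieces, L // pieces, ...),
    where segment k of trajectory m becomes row m * pieces + k.
    """
    M, L = states.shape[:2]
    if L % pieces != 0:
        raise ValueError("the number of observation times must be divisible by pieces")
    return states.reshape(M * pieces, L // pieces, *states.shape[2:])

# Two trajectories with six observation times each, split into three segments.
demo = np.arange(12.0).reshape(2, 6, 1, 1)
shorter = split_trajectories(demo, pieces=3)
```

Each new trajectory starts from a state visited along the original one, so its initial condition is no longer a direct draw from the initial distribution.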

8 Performance of trajectory prediction

Once estimators are obtained, a natural question is the accuracy of the evolved trajectory based on these estimated kernels. The next theorem shows that the error in prediction is (i) bounded trajectory-wise by a continuous-time version of the error functional, and (ii) bounded on average by the , respectively, error of the estimator. This further validates the effectiveness of our error functional and -metric to assess the quality of the estimator. In particular, this emphasizes that although the system is a coupled system of ODEs, our decoupled learning procedure with our choice of norm will lead to control of the expected supremum error as long as we minimize the norms in obtaining our estimators.

Theorem 10

Suppose that , and . Denote by and the solutions of the systems with kernels , and and respectively, both with the same initial condition. Then

where . The constants are and , with any unspecified constants made precise in the proof and only depending on the Lipschitz constants of the noncollective forces and the feature maps, as well as the values coming from the admissible spaces. It is bounded on average, with respect to the initial distribution , by

| (8.1) |

with the measures defined in (5.1, G.1). Expression (8.1) shows that by minimizing the right-hand side, we can control the expected -supremum error of the estimated trajectories.

We postpone the somewhat lengthy proof to Appendix A.

9 Applications

Our learning theory, as well as measures, norms, functionals etc. can be applied to study all the examples considered in the works [52, 51, 83]. These examples, particularly those of [83], can thus be considered as applications of the theoretical results as well as of the algorithm in section H.

We choose to study two new dynamics, not considered in [52, 83], because they exhibit some unique features of our generalized model. In particular, we choose them for their special form, which has both energy-based and alignment-based interactions. These are the flocking with external potential (FwEP) model in [72] and the anticipation dynamics (AD) model in [71].

Table 4 shows the value of learning parameters for these dynamics.

| Num. of learning trials | | | | | | |
|---|---|---|---|---|---|---|

The setup of the learning experiment is as follows. We use different initial conditions to evolve the dynamics from to (using the built-in MATLAB integrating routine, , with relative tolerance at and absolute tolerance at ) to generate a good approximation to and . Then we use another set of ( for FwEP and for AD) initial conditions to generate training data to learn the corresponding and from the empirical distributions, ’s, etc. We report the relative learning errors calculated via (5.4) for , (5.4) for , and (5.4) for , along with a pictorial comparison of those interaction kernels as well as a visualization of the pairwise data used to learn the estimated kernels. Then we evolve the dynamics either from the training set of initial conditions or from another set of randomly chosen initial conditions with and from to , and report the trajectory errors calculated using (3.5) on (the whole system), and for (the position) and (the velocity). A pictorial comparison of the trajectories is also shown. We report the trajectory errors over and . The learning results are shown in the following sections.
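To make the notion of a relative trajectory error concrete, the following sketch computes one plausible version of it. The exact definition is the one in (3.5), which is not reproduced in this section, so the formula used here (largest Euclidean deviation over the sampled times, divided by the largest Euclidean norm of the true state over the same times) is an assumption, as are the names `state_norm` and `relative_sup_error`.

```python
import math


def state_norm(state):
    """Euclidean norm of a state given as a flat list of floats."""
    return math.sqrt(sum(c * c for c in state))


def relative_sup_error(true_traj, est_traj):
    """Relative supremum-in-time error between two sampled trajectories.

    true_traj, est_traj: lists of states observed at the same times.
    Assumed form (may differ from (3.5)):
        max_t |X(t) - Xhat(t)| / max_t |X(t)|.
    """
    if len(true_traj) != len(est_traj):
        raise ValueError("trajectories must be sampled at the same times")
    max_diff = max(
        state_norm([a - b for a, b in zip(x, x_hat)])
        for x, x_hat in zip(true_traj, est_traj)
    )
    max_true = max(state_norm(x) for x in true_traj)
    return max_diff / max_true


# The estimate deviates by 1 at the second sample; the true state has norm at most 10.
error = relative_sup_error([[3.0, 4.0], [6.0, 8.0]], [[3.0, 4.0], [6.0, 9.0]])
```

Applying the same function to the position components only, or to the velocity components only, would give the separate position and velocity errors.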

9.1 Learning results for flocking with external potential

We consider the FwEP model for its simplicity and clustering behavior in both position and velocity (hence flocking occurs). The dynamics of the FwEP model are given as follows,

Here is a constant representing an attraction force, and with (this choice of interaction for alignment is not mandatory; it gives a good comparison between this FwEP model and the Cucker-Smale model). To fit into our learning regime, we take , , no , no non-collective force, and

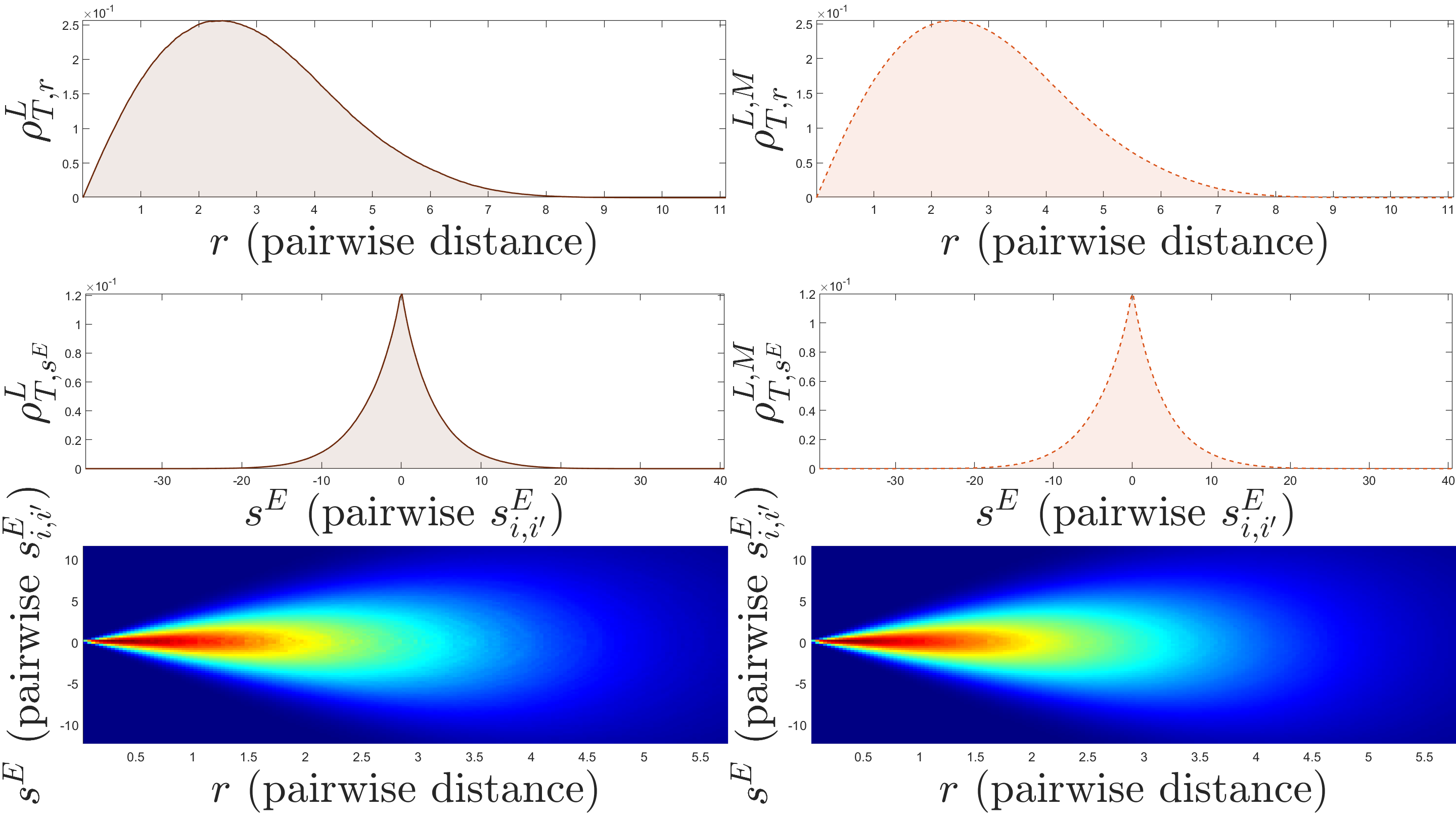

For the FwEP model, we use the space of degree piece-wise polynomials with dimension for learning ; and for , we use the same space. First, consider the comparison of energy-based interactions shown in Fig. 1.
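As a rough illustration of the kind of hypothesis space described above, the sketch below evaluates a basis of discontinuous piecewise polynomials on a uniform partition of an interval. The uniform partition, the local monomial basis, the resulting dimension `n_intervals * (degree + 1)`, and the names `piecewise_poly_features` and `evaluate_kernel` are assumptions of this sketch, not details taken from the algorithm in section H.

```python
def piecewise_poly_features(r, r_max, n_intervals, degree):
    """Evaluate all basis functions of a piecewise-polynomial space at r.

    The interval [0, r_max] is cut into n_intervals equal pieces; on each
    piece the basis is 1, s, ..., s**degree in the local coordinate s in [0, 1].
    Only the basis functions of the piece containing r are nonzero.
    """
    if not 0.0 <= r <= r_max:
        raise ValueError("r must lie in [0, r_max]")
    width = r_max / n_intervals
    k = min(int(r / width), n_intervals - 1)  # r == r_max belongs to the last piece
    s = (r - k * width) / width
    features = [0.0] * (n_intervals * (degree + 1))
    for p in range(degree + 1):
        features[k * (degree + 1) + p] = s ** p
    return features


def evaluate_kernel(coeffs, r, r_max, n_intervals, degree):
    """Value at r of the piecewise polynomial with the given coefficients."""
    feats = piecewise_poly_features(r, r_max, n_intervals, degree)
    return sum(c * f for c, f in zip(coeffs, feats))


# A constant kernel equal to 2 lies exactly in the piecewise linear space:
# set the degree-0 coefficient to 2 and the degree-1 coefficient to 0 on every piece.
constant_coeffs = [2.0, 0.0] * 4
value = evaluate_kernel(constant_coeffs, 0.3, 1.0, 4, 1)
```

Because a constant function is represented exactly in such a space, any error in recovering a constant interaction comes from the data and the conditioning of the regression rather than from approximation error.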

Fig. 1 shows promising results for learning constant functions with piecewise linear polynomials. However, we still have trouble learning the behavior of the interaction at , partly due to the weight of in the model and partly due to the lack of available data towards . Next, we show the comparison of alignment-based interactions in Fig. 2, together with the distribution of the pairwise data.

Again, Fig. 2(a) shows that our estimated kernels give a faithful approximation. The error is: . The comparison of trajectories is shown in Fig. 3.

Fig. 3 shows little visual difference between the learned and observed trajectories. A more quantitative description of the trajectory errors is given in Table 5.

| on | | |
|---|---|---|
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |

We are maintaining a relative four-digit accuracy in estimating the position, and a relative three-digit accuracy in estimating the velocity of the agents in the system. Although we are able to reconstruct with a -digit accuracy, we are not able to do the same for . The error in reflects this discrepancy by considering the two functions together.

9.2 Learning results for anticipation dynamics with

The energy-based interactions are constants in the FwEP model. If we want to consider more complicated models, i.e., interactions depending on pairwise distance and more, the AD models are suitable candidates. The dynamics of the AD model are given as follows,

| (9.1) |

Compared to the original model in [71], we take . In order to fit the model into our learning regime, we take

Here we have no , , , and

We also use .

It is shown in [71] that if is bounded when with , then unconditional flocking occurs. We take for , so that the system shows unconditional flocking. We choose for our learning trials ( induces constant forces on the dynamics). We use a tensor grid of degree piecewise standard polynomials with for learning , then a set of degree piecewise standard polynomials with for learning . For the energy-based interactions we have the following results.

As is shown in Fig. 4(b), the concentration of pairwise distance data is away from , making the estimation of the behavior of at close to extremely difficult. Moreover, since is also weighted by the pairwise difference, , the information is also lost at close to . Next, we present the alignment-based interaction kernels.

We have less trouble estimating the behavior of at . However, we have trouble estimating at the other end of the spectrum of , since the agents have aligned their velocities and hence the weight is close to the zero vector. The overall learning performance for estimating is better compared to estimating . The error is: . The comparison of trajectories between the true kernels (LHS) and the estimators (RHS) is shown below.

Visually, there is no difference between the true dynamics and the estimated dynamics. We offer more quantitative insight into the difference between the two in Table 6.

| on | | |
|---|---|---|
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |
| on | | |

We maintain a -digit relative accuracy in estimating the position/velocity of the agents, even though for the interaction kernels, we are only able to maintain a -digit relative accuracy.

10 Conclusion and further directions

We have described a second-order model of interacting agents that incorporates multiple agent types, an environment, external forces, and multivariable interaction kernels. The inference procedure described exploits the structure of the system to achieve a learning rate that only depends on the dimension of the interaction kernels, which is much smaller than the full ambient dimension . Our estimators are strongly consistent, and in fact have learning rates that are min-max optimal within the nonparametric class, under mild assumptions on the interaction kernels and the system. We described how one can relate the expected supremum error of the trajectories for the system driven by the estimated interaction kernels to the difference between the true interaction kernels and the estimated ones; this result gives strong support to the use of our weighted norms as the correct way to measure performance and derive estimators. A detailed discussion of the full numerical algorithm, including the inverse problem derived from data and a coercivity condition to ensure learnability, was presented along with complex examples, and we showed how the formulation covers a very wide range of systems coming from many disciplines.

There are various ways that one could build on this work to handle different systems and for many of these further directions, the theoretical framework, techniques, and theorems presented here would be directly useful. In particular, one could consider second-order stochastic systems or a similar system but on a manifold, more complex environments, having more unknowns within the model beyond just the interaction kernels (say estimating the non-collective forces as well), identifying the best feature maps to model the data, and considering semiparametric problems where there are hidden parameters within the interaction kernels or other parts of the model that we wish to estimate along with the interaction kernels. The generality of the model and its broad coverage of models across the sciences, together with the scalability and performance of the algorithm, could inspire new models – both explicit equations and nonparametric estimators learned from data – which are theoretically justified and highly practical.

Acknowledgments and contributions

MM is grateful for discussions with Fei Lu and Yannis Kevrekidis, and for partial support from NSF-1837991, NSF-1913243, NSF-1934979, NSF-Simons-2031985, AFOSR-FA9550-17-1-0280 and FA9550-20-1-0288, ARO W911NF-18-C-0082, and to the Simons Foundation for the Simons Fellowship for the year ’20-’21; ST for support from an AMS Simons travel grant; JM for support from NIH - T32GM11999. Please direct correspondence to any of the first three authors.

All authors jointly designed research and wrote the manuscript; JM and ST derived theoretical results; MZ developed algorithms and applications; JM and MZ analyzed data.

A Control of trajectory error

Proof [of Theorem 10] We introduce the function

defined on for functions . Similarly, let . Now we have by assumption that all trajectories

start from the same initial conditions on both the position and velocity, which implies that and . For every we have that, by the fundamental theorem of calculus and the triangle inequality,

| (A.1) | ||||

Here we have introduced the term,

which can be expressed explicitly as,

| (A.2) |

Note that in , is the index of the type among the and indexes within each type . This holds similarly in later expressions . For the third term of (A.1), we exploit the Lipschitz property of the non-collective force:

So that we have the bound

| (A.3) |

First we introduce the convenient notations of

| (A.4) |

with analogous formulae for . Now we break up using the triangle inequality and get that where

So using the Lipschitz property of we get that, since

then,

By the assumptions on the feature maps, we have that

Combining these bounds we see that,

| (A.5) |

Let , , and then let and we get by Young’s inequality that,

| (A.6) |

and performing a similar analysis we get that

So gathering terms, we can reexpress (A.1) as

| (A.7) |

where . Performing an analogous analysis on , with some additional effort, one can get the following result on the phase variable

| (A.8) |

where and where . Similarly, we have that,

| (A.9) |

where we denote the last three lines by and notice that this is a nondecreasing function in . We also denote and . We now use Theorem 30, which appears in [22] and is originally due to Bainov and Simeonov. With this notation, we can rewrite the above bound as

| (A.10) |

And so in the notation of Theorem 30 we have , , and , so that for all we have

and we have the simple bounds

| (A.11) | |||

| (A.12) |

So that,

So that we can immediately conclude the first assertion of the theorem,

Lastly, we can use the results of section B.1 to get the key result on the expected supremum error. We take expectation on each of the three terms of and normalize them so they are in the form of the results of B.1.

| (A.13) | |||

| (A.14) |

We similarly get that,

| (A.15) |

and can get an analogous bound for the remaining term of . These bounds together lead to

which implies the desired result.
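For orientation, the integral inequality step in the proof above is of Grönwall type. Theorem 30 is not reproduced in this section and may be more general; the block below only states the classical Grönwall inequality with a nondecreasing forcing term, using generic placeholder symbols $u$, $a$, $b$ that are not the paper's notation.

```latex
% Classical Gronwall inequality (integral form); u, a, b are generic placeholders.
% Assume u, b : [0,T] \to [0,\infty) are continuous and a : [0,T] \to [0,\infty) is nondecreasing.
\[
  u(t) \le a(t) + \int_0^t b(s)\,u(s)\,ds \quad \text{for all } t \in [0,T]
  \;\Longrightarrow\;
  u(t) \le a(t)\,\exp\!\Big(\int_0^t b(s)\,ds\Big) \quad \text{for all } t \in [0,T].
\]
```

The monotonicity of the forcing term is what allows it to be pulled outside the exponential factor, which matches the remark in the proof that the collected terms form a nondecreasing function of time.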

B Learning theory - technical tools

B.1 Continuity of the error functionals

For any , consider the two random variables,

| (B.1) |

| (B.2) |