Learning the Dynamical Response of Nonlinear Non-Autonomous Dynamical Systems with Deep Operator Learning Neural Networks

Abstract

We propose using operator learning to approximate the dynamical system response with control, such as nonlinear control systems. Unlike classical function learning, operator learning maps between two function spaces, does not require discretization of the output function, and provides flexibility in data preparation and solution prediction. Particularly, we apply and redesign the Deep Operator Neural Network (DeepONet) to recursively learn the solution trajectories of the dynamical systems. Our approach involves constructing and training a DeepONet that approximates the system’s local solution operator. We then develop a numerical scheme that recursively simulates the system’s long/medium-term dynamic response for given inputs and initial conditions, using the trained DeepONet. We accompany the proposed scheme with an estimate for the error bound of the associated cumulative error. Moreover, we propose a data-driven Runge-Kutta (RK) explicit scheme that leverages the DeepONet’s forward pass and automatic differentiation to better approximate the system’s response when the numerical scheme’s step size is small. Numerical experiments on the predator-prey, pendulum, and cart pole systems demonstrate that our proposed DeepONet framework effectively learns to approximate the dynamical response of non-autonomous systems with time-dependent inputs.

keywords:

Neural operator for dynamical systems, operator learning for dynamical systems and control, non-autonomous systems, control systems.[label1]organization=Department of Mechanical Engineering, Purdue University, addressline=610 Purdue Mall, city=West Lafayette, postcode=47907, state=IN, country=USA \affiliation[label2]organization=Department of Mathematics, Purdue University, addressline=610 Purdue Mall, city=West Lafayette, postcode=47907, state=IN, country=USA

1 Introduction

High-fidelity numerical schemes are the primary computational tools for simulating and predicting complex dynamical systems, such as climate modeling, robotics, or the modern power grid. However, these schemes may be too expensive for control, optimization, and uncertainty quantification tasks, which often require a large number of forward simulations and consume significant computational resources. Therefore, there is a growing interest in developing tools that can accelerate the numerical simulation of complex dynamical systems without compromising accuracy.

By providing faster alternatives to traditional numerical schemes, machine learning-based computational tools hold the promise of accelerating the rate of innovation for complex dynamical systems. Hence, a recent wave of machine and deep learning-based tools has demonstrated the potential of using observational data to construct fast surrogates of complex systems Efendiev et al. (2021); Qin et al. (2021) and providing efficient solutions to complex engineering tasks such as fault diagnosis Choudhary et al. (2023); Mishra et al. (2022b, a) and power engineering Moya and Lin (2023); Moya et al. (2023). These tools aim to (i) learn the governing equations of a dynamical system or (ii) learn to predict the system’s response from data.

On the one hand, several works Brunton et al. (2016a, b); Schaeffer (2017); Sun et al. (2020) use observational data to discover the unknown governing equations of the underlying system. For example, Brunton et al. Brunton et al. (2016a) proposed identifying nonlinear systems using sparse schemes and a large set of dictionaries. They then extended their work in Brunton et al. (2016b) to identify input/output mappings that describe control systems. In Sun et al. (2020), the authors used sparse approximation schemes to recover the governing partial or ordinary differential equations of unknown systems.

On the other hand, there is growing interest in predicting the future response of a dynamical system using time-series data Qin et al. (2019); Raissi et al. (2018); Qin et al. (2021); Proctor et al. (2018). For instance, the authors of Efendiev et al. (2021); Chung et al. (2021) trained a transformer with data from the early stages of time-dependent partial differential equations to recursively predict the solution of future stages. Qin et al. Qin et al. (2019) used a recurrent residual neural network (ResNet) to approximate the mapping from the current state to the next state of an unknown autonomous system. Similarly, in Raissi et al. (2018), the authors used feed-forward neural networks (FNN) as a building block of a multi-step scheme that predicts the response of an autonomous system. In Qin et al. (2021), the authors extended their previous work Qin et al. (2019) to non-autonomous systems with time-dependent inputs. To this end, they parametrized the input locally within small time intervals.

Many of the works mentioned above require large amounts of training data to avoid overfitting. However, acquiring this data may be prohibitively expensive for complex dynamical systems. Additionally, if one fails to avoid overfitting when using traditional neural networks for next-state methods, the predicted response may drift and accumulate errors over time. It is therefore crucial to develop deep learning-based frameworks that can efficiently handle the infinite-dimensional nature of predicting the response of non-autonomous systems with time-dependent inputs for long time horizons, as studied in this paper.

Operator Learning Chen and Chen (1995); Lu et al. (2019); Li et al. (2020); Zhang et al. (2022) was first addressed by the seminal work of Chen and Chen (1995). Unlike classical function learning, operator learning involves approximating an operator between two function spaces. Recently, the seminal paper Lu et al. (2021) extended the work of Chen and Chen (1995) and proposed the Deep Operator Neural Network (DeepONet) framework. DeepONet exhibits small generalization errors and learns with limited training data Zhang et al. (2022). This has been demonstrated in many application areas, such as power engineering Moya et al. (2023), multi-physics problems Cai et al. (2021), and turbulent combustion problems Ranade et al. (2021). Extensions to the original DeepONet Lu et al. (2021) have enabled the incorporation of physics-informed models Wang et al. (2021b), handling noisy data Lin et al. (2023), and designing novel optimization methods Lin et al. (2023, 2021).

DeepONet is a prediction-free and domain-free tool Zhang et al. (2022). Specifically, DeepONet can predict the target function value at any point in its domain, and the input and output functions are not necessarily required to share the same domain. This property allows for handling incomplete datasets during the training phase. In Zhang et al. (2022), it was further relaxed the assumptions on input function discretization, improving flexibility in data preparation and prediction accuracy for operator learning.

In this paper, we focus on extending the This paper focuses on extending the original DeepONet framework to learn the solution operator of non-autonomous systems with time-dependent inputs for long-time horizons. By using such a data-driven operator framework, control policies can be designed for continuous nonlinear systems, among other applications.

In particular, one of our motivations behind learning to approximate the solution operator of a non-autonomous system is its application in control, and Model-Based Reinforcement Learning (MBRL) Wang et al. (2019); Sutton (1990). In MBRL, one learns to approximate the system to control (from data) and then uses the learned model to seek an optimal policy without extensive interaction with the actual system. A common approach is to learn a discrete-time forward model that predicts the next state using the current state and selected control action. Such an MBRL framework has delivered successful and efficient results in discrete-time problems such as games Kaiser et al. (2019). However, most control systems in science and engineering (e.g., robotics Nagabandi et al. (2020), unmanned vehicles Hafner et al. (2019), or laminar flows Fan et al. (2020)) are continuous. Of course, one can always discretize the continuous dynamics and apply discrete-time MBRL using traditional neural networks (e.g., see Brockman et al. (2016)). However, if one fails to handle the inherent epistemic uncertainty Deisenroth et al. (2013), such a strategy may lead to error accumulation (due to model bias) and poor asymptotic performance. To alleviate these drawbacks, we build on the original DeepONet Lu et al. (2021) to design an effective and efficient framework that can learn the solution operator of a nonlinear non-autonomous system with time-dependent inputs, which one can then apply within the framework of control or continuous MBRL Du et al. (2020).

Formally, the objectives of this paper are twofold.

-

1.

Approximation of the local solution operator: Our goal is to create a neural operator-based framework that can learn to map (i) the current state of the dynamical system with control and (ii) a local approximation of the input to the next state of the non-autonomous dynamical system.

-

2.

Long/Medium-term simulation: We aim to design an efficient scheme that uses the operator learning framework to simulate the dynamical system’s response to a given input over a long or medium-term horizon.

The contributions that will achieve the above objectives are summarized below.

-

1.

We model the non-autonomous/control dynamical system in the deep operator learning framework and propose a DeepONet-based numerical scheme that effectively and recursively simulates the solution of the system.

-

2.

We also propose a novel data-driven Runge-Kutta (RK) DeepONet-based method that reduces the cumulative error and improves recursive prediction accuracy in long-term simulations.

-

3.

We provide an estimation of the cumulative error for the proposed numerical scheme based on DeepONet. Our estimated error bounds are tighter than those presented in Qin et al. (2021). Additionally, we demonstrate that the novel data-driven RK DeepONet-based scheme achieves better stability bounds than the DeepONet-based scheme.

-

4.

We test the proposed frameworks on state-of-the-art models, such as the predator-prey system, the pendulum, the cart-pole system, and a power engineering task. The effectiveness of the methods is observed in all experiments.

We organize the rest of this paper as follows. In Section 2, we review operator learning and introduce the proposed DeepONet-based algorithms. Next, we present the novel data-driven Runge-Kutta DeepONet-based scheme and estimate its corresponding error bound in Section 3. We present the numerical experiments in Section 4. We provide a discussion of our results and future work in Section 5, and conclude the paper in Section 6.

2 Problem Formulation

We consider the problem of learning from data the solution operator of the following continuous-time non-autonomous system with time-dependent inputs

| (1) |

where is the state vector, the input vector, and an unknown function. Additional assumptions on the input function u and the function will be discussed later. Also, throughout this paper, we assume is a scalar input, i.e., . Extending the proposed framework to the vector-valued case is straightforward.

Solution Operator. Let denote the solution operator of (1). takes as input the initial condition and the sequence of input function values , and outputs the state at time . We compute the solution operator via

| (2) |

Approximate System. In practice, to learn the operator , we only have access to an approximate/discretized representation of the input function . Let denote this approximate representation, which yields the following approximate system

| (3) |

whose solution operator is

| (4) |

In the above, with a slight abuse of notation, we denoted as the input discretized using sensors or interpolation points within the interval , and the approximate state function as .

Remark.

In practice, the approximate system (3) with the solution operator (4) can represent, for example, sampled-data control systems Wittenmark et al. (2002) or semi-Markov Decision Processes Du et al. (2020). Therefore, the methods introduced in this paper can be used to design optimal control policies within the framework of model-based reinforcement learning Wang et al. (2019).

2.1 Learning the Solution Operator

To learn the solution operator , we use the Deep Operator Network (DeepONet) framework introduced in Lu et al. (2021). In Lu et al. (2021), the authors used a DeepONet to learn a simplified version of the solution operator (2) with , i.e.,

The DeepONet takes as inputs the (i) input discretized using interpolation points (known as sensors in Lu et al. (2019)) and (ii) time , and outputs the state . Clearly, this DeepONet prediction is one-shot; it requires knowledge of the input in the whole interval . For small values of , the DeepONet’s prediction is very accurate. However, as we increase , the accuracy deteriorates. To improve accuracy, one can increase the number of interpolation points. This, however, makes DeepONet’s training more challenging.

To alleviate this drawback, we take a different approach in this paper. First, we train a DeepONet , with the vector of trainable parameters , to learn the local solution operator of the system. Then, we design a DeepONet-based numerical scheme that recursively uses the trained DeepONet to predict long/medium-term horizons, i.e., for large values of .

Learning the Local Solution Operator. We let denote the possibly irregular and arbitrary time partition

where for all and let . Then, within the local interval , the solution operator is given by

| (5) |

In the above, with a slight abuse of notation, we use to denote the local discretized representation of the input function , within the interval , using interpolation/sensor points or basis.

The DeepONet . Next, we design a Deep Operator Network (DeepONet) , with the vector of trainable parameters , to approximate the local solution operator (5). Figure 1 illustrates the proposed DeepONet that has two neural networks: the Branch Net and the Trunk Net.

The Branch Net maps the vector that concatenates the (i) current state and (ii) input function , discretized using the mesh of local sensors , to the branch output feature vector . Meanwhile, the Trunk Net maps the scalar step size to the trunk output feature vector . We compute the DeepONet’s output for the th component of the state vector using the dot product:

Remark.

Sensor locations. One of the problems with the original DeepONet Lu et al. (2019) is that the sensor locations, , are fixed. If we fix these sensor locations, then we cannot predict the response of the system using the arbitrary and irregular partition . To enable prediction with , we let the input to the branch be the concatenation of the discretized input with the corresponding (flexible) relative sensor locations .

Remark.

The case of sensors, i.e., the piece-wise constant approximation of . If we let sensor, then the discretized input function within the interval corresponds to the singleton . Such a case is the most challenging to learn because it introduces the largest error that propagates over time. However, it also represents one of the target applications: continuous model-based reinforcement learning with semi-Markov decision processes. We will show (in Section 4) that the proposed DeepONet can effectively handle having only sensor.

Training the DeepONet . We train the proposed DeepONet model by minimizing the loss function

over of training data triplets

generated by the unknown ground truth local solution operator .

2.2 Predicting the System’s Response

We predict the response of the system (1) over a long/medium-term horizon (i.e., within the interval , with ) using the DeepONet-based numerical scheme detailed in Algorithm 1 and illustrated in Figure 2. Algorithm 1 takes as inputs the (i) initial condition , (ii) partition , (iii) discretized representation of the input , for , and (iv) trained DeepONet . Then, Algorithm 1 outputs the predicted response of the system over the partition , i.e., . Let us conclude this section by estimating a bound for the cumulative error of the proposed DeepONet-based numerical scheme described in Algorithm 1.

2.3 Error Bound for the DeepONet-based Numerical Scheme

Assumptions. We let the input function where is compact. We also assume the unknown vector field is Lipschitz in and , i.e.,

where is a constant, and are in some proper space. Such an assumption is common in engineering, as is often differentiable with respect to and .

The following lemma, presented in Qin et al. (2021), provides us with an alternative form of the local solution operator (4). Such a form will be used later when estimating the error bound.

Lemma 2.1.

Consider the local solution operator . Then, there exists a function , which depends on , such that

| (6) |

for any . In the above, locally characterizes on the mesh .

We now provide the error estimation of the proposed DeepONet prediction scheme detailed in Algorithm 1. For the ease of notation, we denote and for all .

Lemma 2.2.

For any , we have

| (7) |

where , , and .

Proof.

Let and in the solution operators (2) and (4). Then, subtracting (4) from (2) gives

where is the local approximation error of the input within the interval :

such that

We refer the interested reader to equation (4) of Lu et al. (2019) for details of such an approximation for the input. Set and apply Gronwall’s inequality, we then have,

| (8) |

Taking gives

The bound then follows immediately due to . ∎

Before we estimate the cumulative error of the DeepONet-assisted solution , we review the universal approximation theorem of neural networks for high-dimensional functions Cybenko (1989). To this end, given , we define the following vector-valued continuous function

where . Then, by the universal approximation theorem, for , there exist , , and such that

| (9) |

Here, the two-layer network represents the DeepONet for a given , i.e.,

The following lemma estimates the cumulative error between the DeepONet-assisted solution (obtained via Algorithm 1) and the solution of the approximate system that satisfies (6).

Lemma 2.3.

Assume is Lipschitz in with Lipschitz constant . Suppose the DeepONet is well trained so that the network satisfies (9). Then, we have the following estimate:

| (10) |

where .

Proof.

It follows from the universal approximation theorem of neural networks (9) and being Lipschitz that,

The result follows immediately from . ∎

The following theorem summarizes the error of the proposed DeepONet scheme.

Theorem 2.4.

We conclude this section by observing that the error bound found in this section is tighter than the error found in Qin et al. (2021), which behaves like where is a positive constant.

3 Data-Driven Runge-Kutta DeepONet Prediction Scheme

In this section, we propose a data-driven Runge-Kutta explicit DeepONet-based scheme Iserles (2009) that predicts the new state vector using the current state value , i.e.,

Here and are, respectively, the estimates of at and . We compute these estimates (see equation (13)) using (i) the forward pass of trained DeepONet and (ii) automatic differentiation. Note that in (13b), we use the notation for the estimate of the state at obtained using the DeepONet’s forward pass.

We detail the proposed data-driven RK explicit DeepONet-based scheme in Algorithm 2 and Figure 3. Two remarks about our algorithm are provided next. (i) For simplicity, we only present our scheme for the improved Euler method or RK-2 Iserles (2009). However, we remark that we can extend our idea to any explicit Runge-Kutta scheme. (ii) If is available at , then we can compute as follows:

| (12) |

Then, equations (13a) and (12) will work as a predictor-corrector scheme with the updated input information. Other strategies can also be adopted within the proposed RK scheme. However, we let the design of such strategies for our future work.

| (13a) | ||||

| (13b) | ||||

| (14) |

3.1 Error Bound for the Data-Driven Runge-Kutta Scheme

Here we derive an improved conditional error bound estimate for . To that end, we start by rephrasing the universal approximation theorem of neural network for high-dimensional functions Cybenko (1989), which we introduced in Section 2.3. For , there exist , , and such that

| (15) |

where and is constant. As before, the two-layer network represents the DeepONet for a given .

The following lemma estimates the error between , predicted using the RK scheme (Algorithm 2), and , obtained using the solution operator (4) of the approximate system (3).

Lemma 3.1.

Assume is Lipschitz in with Lipschitz constant . Suppose the DeepONet is well trained so that (15) holds. Then, we have the estimate

| (16) |

where , and .

Proof.

Two remarks about the error bound are as follows. (i) Note that the proposed data-driven RK DeepONet-based scheme provides an improved error bound (16) when compared to the bound obtained in (10). More specifically, the growth factor behaves like in (10). However, when , a smaller factor can be derived. (ii) We can extend the proof provided here for the RK-2 scheme to any other RK explicit scheme. We will analyze this in our future work. Let us conclude this section with the following theorem that summarizes the error of the proposed data-driven RK scheme.

4 Numerical Experiments

To evaluate our framework, we tested the proposed DeepONets on five tasks: the autonomous Lorentz 63 system (in Section 4.1), the predator-prey dynamics with control (in Section 4.2), the pendulum swing-up (in Section 4.3), the cart-pole system (in Section 4.4), and a power engineering application (in Section 4.5). For the three control tasks, we used only sensor. The reasons for selecting only one sensor are twofold. First, we want to show that DeepONet is effective even when the input signal is encoded with minimal information. For reference, in Qin et al. (2021), the authors encoded the input signals (used in their experiments) with at least interpolation points (sensors). Second, the sensor scenario resembles the scenario of sampled-data control systems Wittenmark et al. (2002) or reinforcement learning tasks Sutton and Barto (2018) with continuous action space. Finally, for the power engineering application task, we used sensors.

Training dataset. For each of the three continuous control tasks (predator-prey, pendulum, and cart-pole systems), we generate the training dataset as follows. We use Runge-Kutta (RK-4) Iserles (2009) to simulate trajectories of size two. For each trajectory, the input to RK-4 is the initial condition uniformly sampled from and the input uniformly sampled from the set . The output from the RK-4 algorithm is the state , where is uniformly sampled from the interval . Such a procedure gives the dataset:

Training protocol and neural networks. We implemented our framework using JAX Bradbury et al. (2018)555We will publish the code on GitHub after publication. The neural networks for the Branch and Trunk Nets are the modified fully-connected networks proposed in Wang et al. (2021a) and used in our previous paper Moya et al. (2023). We trained the parameters of the networks using Adam Kingma and Ba (2014). Moreover, we selected (i) the default hyper-parameters for the Adam algorithm and (ii) an initial learning rate of that exponentially decays every 2000 epochs.

4.1 The Autonomous Lorentz 63 System

To evaluate the proposed framework, we first consider the autonomous and chaotic Lorenz 63 system with the following dynamics:

| (21) |

with parameters and Notice that, compared to non-autonomous systems, autonomous systems only require the previous state to predict the next state, simplifying the learning problem. Nevertheless, we do recognize that the chaotic nature of the Lorentz system can pose a challenge to error accumulation over time. To address this, we have arbitrarily increased the size of the training dataset, which is described next.

The training dataset for this autonomous system is provided as a collection of 20000 scatter one-step responses, or trajectories of size two. Specifically, , where is sampled from the state space , and the step sizes are sampled from the interval . Note that we keep the step size small to track the error accumulation of the chaotic system, but this requires us to increase the size of our training dataset and normalize the state space.

The trained DeepONet is used to predict the response of the autonomous Lorentz 63 system over the time-domain seconds, for the initial condition , using a uniform partition with a fixed step size of . Figure 4 shows the comparison between the recursive DeepONet prediction obtained from Algorithm 1 and the true response of the Lorentz system’s state variables and . The results demonstrate excellent agreement between the proposed method and the true values, despite the chaotic nature of the autonomous Lorentz system.

4.2 The Predator-Prey Dynamics with Control

To evaluate our framework, we now consider the following Lotka-Volterra Predator-Prey system with input signal :

| (22) |

The system (22) was also studied in Qin et al. (2021), where the authors encoded using three interpolation points. To train our DeepONet, we generated trajectories with the initial condition (resp. input signal ) sampled from the state space (resp. input space ).

We use the trained DeepONet to predict the response of the predator-prey system (see equation (22)) to the input signal within a partition (s) with a constant step size , where . Figure 5 compares DeepONet’s long-term prediction with the true trajectory. Note that the predicted trajectory agrees very well with the true trajectory for both states, . The -relative errors for and are summarized in Table 1.

| relative error | 2.42 % | 0.93 % |

|---|

4.3 Pendulum Swing-Up

Let us now consider the following pendulum swing-up control system:

| (23) |

where is the state vector, the pendulum’s angle, the angular velocity, and the control torque. We set the parameters to the following values. The pendulum’s mass is (kg), the length is (m), the moment of inertia of the pendulum around the midpoint is , and the friction coefficient is (sNm/rad).

We trained the proposed framework using samples, each consisting of a tuple . The initial condition, (the state of the non-autonomous pendulum system), was sampled from the uniform distribution . The step size was obtained by uniformly sampling from the interval . The control torque, , was constant on the interval and was sampled from the uniform distribution . Finally, we obtained the terminal state, , by evolving according to the non-autonomous dynamics (see equation (23)). It is worth noting that the the proposed operator learning setting allows us to handle datasets with incomplete data, as is not identical for all training samples. Our methods thus have much more flexibility in terms of data preparation.

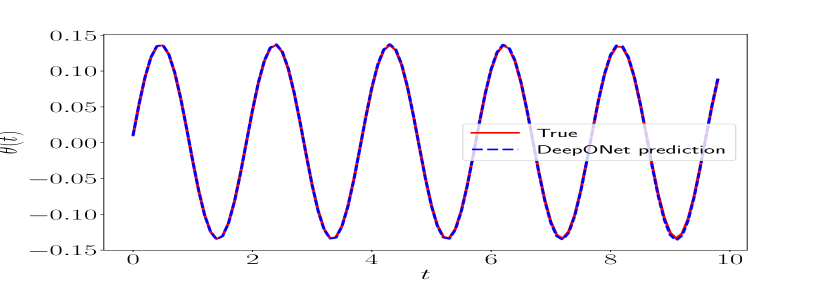

Stable response. We begin by using the trained DeepONet to predict the pendulum’s response to the input within the partition (s) with a step size of (s). This input yields state trajectories that settle to an asymptotic equilibrium point. Figure 6 shows the excellent agreement between the predicted and actual trajectories.

To test the predictive power of the proposed framework, we compute the average and standard deviation (st. dev.) of the -relative error between the predicted and actual response of the pendulum to the following: (i) the control torque , and (ii) initial conditions sampled from the set . Table 2 reports the results, demonstrating that DeepONet consistently maintains an average -relative error of below and for the angle and the angular velocity trajectories, respectively. Please refer to Table 2 for a summary.

| angle | angular velocity | |

|---|---|---|

| mean | 1.056 % | 3.356 % |

| st.dev. | 2.509 % | 8.234% |

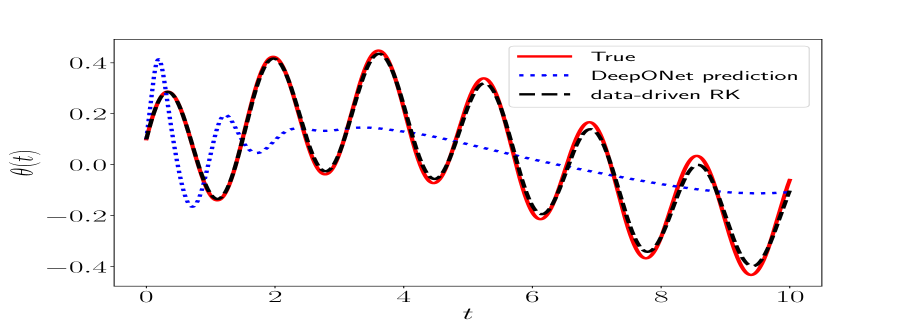

Oscillatory response. We now test the trained DeepONet for the control torque within the partition (s) with a constant step size of . Figure 7 depicts the excellent agreement between the predicted and actual oscillatory trajectory.

Let us now consider a different partition with a smaller step size . Figure 8 shows that the proposed DeepONet fails to keep up with the oscillatory response. To improve our prediction, we employ the proposed data-driven Runge-Kutta DeepONet method described in Algorithm 2. Figure 8 depicts the agreement between the actual trajectory and the trajectory predicted using the data-driven RK DeepONet method. The corresponding -relative errors are and for the angle and the angular velocity , respectively.

Comparison with other benchmarks. We compare the proposed DeepONet numerical scheme (Algorithm 1) with other benchmarks. The authors are only aware of the work developed in Qin et al. (2021), which can approximate the dynamic response of continuous nonlinear non-autonomous systems over an arbitrary time partition for arbitrary input functions. Below, we will compare our method with the approach in Qin et al. (2021), which we refer to as FNN.

Additionally, a related field that attempts to learn an environment model of a discrete, non-linear, non-autonomous system is model-based reinforcement learning (MBRL) Wang et al. (2019). However, since they can only approximate the response over a uniform partition, a comparison of traditional MBRL benchmarks with the proposed work is not possible. To address this problem, we transform the ensemble method Wang et al. (2019), a simple but effective MBRL method to learn the environment dynamics in MBRL, into a continuous approach using ideas from Qin et al. (2021). Thus, we also compare the proposed method with this continuous ensemble approach.

We begin by comparing the average and standard deviation of the relative error between the predicted response from DeepONet, FNN, and Ensemble models. These models were all trained using one-step responses, and compared with the actual response trajectories of the pendulum system (23). The pendulum trajectories are the responses to (i) the control torque and (ii) initial conditions uniformly sampled from the set . Table 3 shows that DeepONet greatly improves generalization when compared with FNN and the continuous ensemble.

| angle | angular velocity | |

|---|---|---|

| mean (DeepONet) | 0.833 % | 1.061 % |

| st.dev. (DeepONet) | 0.604 % | 0.756% |

| mean (FNN) | 10.076 % | 13.318 % |

| st.dev. (FNN) | 7.626 % | 9.817% |

| mean (Ensemble) | 5.820 % | 7.055 % |

| st.dev. (Ensemble) | 3.817 % | 4.654% |

In addition, we compared the predictions of DeepONet, FNN, and Ensemble with the actual trajectories of the pendulum (23) system’s state . We analyzed the response to the input signal within the partition (s) with a constant step size of . Figure 9 (the left plot of Figure 9 shows the angle , and the right plot shows the velocity ) illustrates that only DeepONet can accurately predict oscillatory behavior.

4.4 Cart-Pole

In this section, we consider the following cart-pole system Florian (2007) with control:

| (24) |

In the above, the state of the cart-pole system is , where is the angle of the pendulum, the angular velocity of the pendulum, and are, respectively, the position and the velocity of the cart. The input is the horizontal force that makes the cart move to the left or the right. We selected the parameters of the cart-pole system as follows. The pendulum’s length is (m), the pendulum’s mass is (kg), the cart’s mass is (kg), and the friction coefficient is (sNm/rad).

To train the DeepONet, we generated trajectories with the initial condition (resp. input ) sampled from the state space (resp. ).

We used a trained DeepONet to predict the response of the cart-pole system to a time-dependent input signal within the partition (s) with a constant step size of (i.e., ) for all . In Figure 10, we compare the predicted and true state trajectories. We observe a high degree of agreement between the predicted and true state trajectories, with -relative errors of , , , and for , , , and , respectively. Based on these results, we conclude that the proposed DeepONet framework is effective in predicting the response of a nonlinear control system, such as the cart-pole, to given inputs and different initial conditions.

| relative error | % | % |

|---|

4.5 A Power Engineering Application

The dynamics of the future power grid will fundamentally differ from today’s grid due to widespread installation of energy storage systems, offshore wind power plants, and electric vehicle fast-charging sites. These devices will connect to the power grid via power electronic devices. This scenario differs greatly from the current paradigm where robust models of traditional power systems exist. Thus, simulating future power grids for planning, optimization, and control will require the interplay of legacy simulators and models of power electronics-based devices (e.g., wind turbines) that can be learned from data.

In this final experiment, we aim to demonstrate that the proposed method can potentially interact with a traditional power engineering simulator, such as the Power System Toolbox. To achieve this, we begin with a simple application of recursive DeepONet that uses Algorithm 1 to approximate the dynamic response of a simple second order model of a generator. We use data collected with the Power System Toolbox (PST) Chow et al. (2000) of generator 1 within the classic two area system. Note that the response of the generator can be modeled as a non-autonomous system (1) by assuming the input function corresponds to the interface variables (stator currents) of the bus where the target generator connects.

Training data. We generated training data by simulating disturbance experiments on PST. Each experiment consisted of simulating the two area system system using a uniform partition (s), with a constant step size of . The disturbances occur at (s) with a duration sampled from the interval (s). Trajectory data was collected after each experiment, including the interacting input trajectories and state trajectory data .

We constructed the training dataset by interpolating and sampling this trajectory data (i.e., a time-series). In particular, we discretized the inputs using interpolation and sensors, i.e., , where and was uniformly sampled from the open interval . The final training dataset of size is:

We tested the proposed DeepONet using a PST test trajectory that was not included in the training dataset and which experienced a disturbance of duration 0.01 (s). For these test trajectory, we used an uniform partition of size . As shown in Figure 11, DeepONet-based Algorithm 1 accurately predicted the dynamic response of generator 1 in the classic two area system.

In our future work, we plan to (i) extend this approach to allow for the full interaction of DeepONets and traditional numerical schemes, (ii) enable transfer learning for approximating more than one generator, and (iii) design learning protocols that can withstand error accumulation when predicting more complex and severe discontinuous disturbances.

5 Discussion

This section discusses our main results and outlines our plans for future work.

We have demonstrated through all five tasks outlined in Section 4 that the proposed recursive and data-driven RK DeepONets can effectively approximate the dynamic response of nonlinear, non-autonomous systems for varying inputs and initial conditions. Furthermore, in Section 4.3, we compared the proposed recursive method with two other benchmarks. The first is a neural network approach for approximating non-autonomous systems developed in Qin et al. (2021). The second is an ensemble method Wang et al. (2019) that we extended to the continuous scenario for comparison purposes. Table 3 shows that the proposed recursive DeepONet outperforms the other two benchmarks when the training dataset size is small. In particular, the recursive method keeps the mean -relative error for 100 test trajectories below %. Additionally, Figure 9 demonstrates that among all benchmarks, the proposed recursive DeepONet is the only one that can effectively approximate challenging oscillatory solution trajectories. In conclusion, when the dataset is small, which often occurs in engineering systems, the proposed recursive DeepONet provides the best method for approximating the dynamic response of non-autonomous systems.

In our future work, we aim to extend the proposed framework to include (i) reduced-order, (ii) stochastic, and (iii) networked non-autonomous and control systems. We also plan to apply the DeepONet framework to model-based reinforcement learning and control design. Specifically, we aim to use it for learning semi-Markov decision processes, which can be applied to learn suboptimal offline policies. Moreover, we aim to apply the recursive and data-driven RK DeepONets to complex engineering applications such as fluid dynamics, materials engineering, and future power systems.

6 Conclusion

We introduced a Deep Operator Network (DeepONet) framework that can learn (from data) the dynamic response of nonlinear, non-autonomous systems with time-dependent inputs for long-term horizons. The proposed framework approximates the system’s solution operator locally using the DeepONet and then recursively predicts the system’s response for long/medium-term horizons using the trained network. We also estimated the error bound for this DeepONet-based numerical scheme. To improve the predictive accuracy of the scheme when the step size is small, we designed and theoretically validated a data-driven Runge-Kutta DeepONet scheme. This scheme uses estimates of the vector field computed with the DeepONet forward pass and automatic differentiation. Finally, we validated the proposed framework using an autonomous and chaotic system, three continuous control tasks, and a power engineering application.

Acknowledgments

We gratefully acknowledge the support of the National Science Foundation (DMS-1555072, DMS-2053746, and DMS-2134209), Brookhaven National Laboratory Subcontract 382247, and U.S. Department of Energy (DOE) Office of Science Advanced Scientific Computing Research program DE-SC0021142 and DE-SC0023161.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Bradbury et al. (2018) Bradbury, J., Frostig, R., Hawkins, P., Johnson, M.J., Leary, C., Maclaurin, D., Necula, G., Paszke, A., VanderPlas, J., Wanderman-Milne, S., Zhang, Q., 2018. JAX: composable transformations of Python+NumPy programs. URL: http://github.com/google/jax.

- Brockman et al. (2016) Brockman, G., Cheung, V., Pettersson, L., Schneider, J., Schulman, J., Tang, J., Zaremba, W., 2016. Openai gym. arXiv preprint arXiv:1606.01540 .

- Brunton et al. (2016a) Brunton, S.L., Proctor, J.L., Kutz, J.N., 2016a. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proceedings of the national academy of sciences 113, 3932–3937.

- Brunton et al. (2016b) Brunton, S.L., Proctor, J.L., Kutz, J.N., 2016b. Sparse identification of nonlinear dynamics with control (sindyc). IFAC-PapersOnLine 49, 710–715.

- Cai et al. (2021) Cai, S., Wang, Z., Lu, L., Zaki, T.A., Karniadakis, G.E., 2021. Deepm&mnet: Inferring the electroconvection multiphysics fields based on operator approximation by neural networks. Journal of Computational Physics 436, 110296.

- Chen and Chen (1995) Chen, T., Chen, H., 1995. Universal approximation to nonlinear operators by neural networks with arbitrary activation functions and its application to dynamical systems. IEEE Transactions on Neural Networks 6, 911–917.

- Choudhary et al. (2023) Choudhary, A., Mishra, R.K., Fatima, S., Panigrahi, B., 2023. Multi-input cnn based vibro-acoustic fusion for accurate fault diagnosis of induction motor. Engineering Applications of Artificial Intelligence 120, 105872.

- Chow et al. (2000) Chow, J., Rogers, G., Cheung, K., 2000. Power system toolbox. Cherry Tree Scientific Software 48, 53.

- Chung et al. (2021) Chung, E., Leung, W.T., Pun, S.M., Zhang, Z., 2021. A multi-stage deep learning based algorithm for multiscale model reduction. Journal of Computational and Applied Mathematics 394, 113506.

- Cybenko (1989) Cybenko, G., 1989. Approximation by superpositions of a sigmoidal function. Mathematics of control, signals and systems 2, 303–314.

- Deisenroth et al. (2013) Deisenroth, M.P., Fox, D., Rasmussen, C.E., 2013. Gaussian processes for data-efficient learning in robotics and control. IEEE transactions on pattern analysis and machine intelligence 37, 408–423.

- Du et al. (2020) Du, J., Futoma, J., Doshi-Velez, F., 2020. Model-based reinforcement learning for semi-markov decision processes with neural odes. Advances in Neural Information Processing Systems 33, 19805–19816.

- Efendiev et al. (2021) Efendiev, Y., Leung, W.T., Lin, G., Zhang, Z., 2021. Hei: hybrid explicit-implicit learning for multiscale problems. arXiv preprint arXiv:2109.02147 .

- Fan et al. (2020) Fan, D., Yang, L., Wang, Z., Triantafyllou, M.S., Karniadakis, G.E., 2020. Reinforcement learning for bluff body active flow control in experiments and simulations. Proceedings of the National Academy of Sciences 117, 26091–26098.

- Florian (2007) Florian, R.V., 2007. Correct equations for the dynamics of the cart-pole system. Center for Cognitive and Neural Studies (Coneural), Romania .

- Hafner et al. (2019) Hafner, D., Lillicrap, T., Ba, J., Norouzi, M., 2019. Dream to control: Learning behaviors by latent imagination. arXiv preprint arXiv:1912.01603 .

- Iserles (2009) Iserles, A., 2009. A first course in the numerical analysis of differential equations. 44, Cambridge university press.

- Kaiser et al. (2019) Kaiser, L., Babaeizadeh, M., Milos, P., Osinski, B., Campbell, R.H., Czechowski, K., Erhan, D., Finn, C., Kozakowski, P., Levine, S., et al., 2019. Model-based reinforcement learning for atari. arXiv preprint arXiv:1903.00374 .

- Kingma and Ba (2014) Kingma, D.P., Ba, J., 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 .

- Li et al. (2020) Li, Z., Kovachki, N., Azizzadenesheli, K., Liu, B., Bhattacharya, K., Stuart, A., Anandkumar, A., 2020. Fourier neural operator for parametric partial differential equations. arXiv preprint arXiv:2010.08895 .

- Lin et al. (2023) Lin, G., Moya, C., Zhang, Z., 2023. B-deeponet: An enhanced bayesian deeponet for solving noisy parametric pdes using accelerated replica exchange sgld. Journal of Computational Physics 473, 111713.

- Lin et al. (2021) Lin, G., Wang, Y., Zhang, Z., 2021. Multi-variance replica exchange stochastic gradient mcmc for inverse and forward bayesian physics-informed neural network. arXiv preprint arXiv:2107.06330 .

- Lu et al. (2019) Lu, L., Jin, P., Karniadakis, G.E., 2019. Deeponet: Learning nonlinear operators for identifying differential equations based on the universal approximation theorem of operators. arXiv preprint arXiv:1910.03193 .

- Lu et al. (2021) Lu, L., Jin, P., Pang, G., Zhang, Z., Karniadakis, G.E., 2021. Learning nonlinear operators via deeponet based on the universal approximation theorem of operators. Nature Machine Intelligence 3, 218–229.

- Mishra et al. (2022a) Mishra, R., Choudhary, A., Fatima, S., Mohanty, A., Panigrahi, B., 2022a. A fault diagnosis approach based on 2d-vibration imaging for bearing faults. Journal of Vibration Engineering & Technologies , 1–14.

- Mishra et al. (2022b) Mishra, R.K., Choudhary, A., Fatima, S., Mohanty, A.R., Panigrahi, B.K., 2022b. A self-adaptive multiple-fault diagnosis system for rolling element bearings. Measurement Science and Technology 33, 125018.

- Moya and Lin (2023) Moya, C., Lin, G., 2023. Dae-pinn: a physics-informed neural network model for simulating differential algebraic equations with application to power networks. Neural Computing and Applications 35, 3789–3804.

- Moya et al. (2023) Moya, C., Zhang, S., Lin, G., Yue, M., 2023. Deeponet-grid-uq: A trustworthy deep operator framework for predicting the power grid’s post-fault trajectories. Neurocomputing 535, 166–182.

- Nagabandi et al. (2020) Nagabandi, A., Konolige, K., Levine, S., Kumar, V., 2020. Deep dynamics models for learning dexterous manipulation, in: Conference on Robot Learning, PMLR. pp. 1101–1112.

- Proctor et al. (2018) Proctor, J.L., Brunton, S.L., Kutz, J.N., 2018. Generalizing koopman theory to allow for inputs and control. SIAM Journal on Applied Dynamical Systems 17, 909–930.

- Qin et al. (2021) Qin, T., Chen, Z., Jakeman, J.D., Xiu, D., 2021. Data-driven learning of nonautonomous systems. SIAM Journal on Scientific Computing 43, A1607–A1624.

- Qin et al. (2019) Qin, T., Wu, K., Xiu, D., 2019. Data driven governing equations approximation using deep neural networks. Journal of Computational Physics 395, 620–635.

- Raissi et al. (2018) Raissi, M., Perdikaris, P., Karniadakis, G.E., 2018. Multistep neural networks for data-driven discovery of nonlinear dynamical systems. arXiv preprint arXiv:1801.01236 .

- Ranade et al. (2021) Ranade, R., Gitushi, K., Echekki, T., 2021. Generalized joint probability density function formulation inturbulent combustion using deeponet. arXiv preprint arXiv:2104.01996 .

- Schaeffer (2017) Schaeffer, H., 2017. Learning partial differential equations via data discovery and sparse optimization. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 473, 20160446.

- Sun et al. (2020) Sun, Y., Zhang, L., Schaeffer, H., 2020. Neupde: Neural network based ordinary and partial differential equations for modeling time-dependent data, in: Mathematical and Scientific Machine Learning, PMLR. pp. 352–372.

- Sutton (1990) Sutton, R.S., 1990. Integrated architectures for learning, planning, and reacting based on approximating dynamic programming, in: Machine learning proceedings 1990. Elsevier, pp. 216–224.

- Sutton and Barto (2018) Sutton, R.S., Barto, A.G., 2018. Reinforcement learning: An introduction. MIT press.

- Wang et al. (2021a) Wang, S., Teng, Y., Perdikaris, P., 2021a. Understanding and mitigating gradient flow pathologies in physics-informed neural networks. SIAM Journal on Scientific Computing 43, A3055–A3081.

- Wang et al. (2021b) Wang, S., Wang, H., Perdikaris, P., 2021b. Learning the solution operator of parametric partial differential equations with physics-informed deeponets. Science advances 7, eabi8605.

- Wang et al. (2019) Wang, T., Bao, X., Clavera, I., Hoang, J., Wen, Y., Langlois, E., Zhang, S., Zhang, G., Abbeel, P., Ba, J., 2019. Benchmarking model-based reinforcement learning. arXiv preprint arXiv:1907.02057 .

- Wittenmark et al. (2002) Wittenmark, B., Åström, K.J., Årzén, K.E., 2002. Computer control: An overview. IFAC Professional Brief 1, 2.

- Zhang et al. (2022) Zhang, Z., Leung, W.T., Schaeffer, H., 2022. Belnet: Basis enhanced learning, a mesh-free neural operator. arXiv preprint arXiv:2212.07336 .