Learning Sparse Fixed-Structure Gaussian Bayesian Networks

Abstract

Gaussian Bayesian networks (a.k.a. linear Gaussian structural equation models) are widely used to model causal interactions among continuous variables. In this work, we study the problem of learning a fixed-structure Gaussian Bayesian network up to a bounded error in total variation distance. We analyze the commonly used node-wise least squares regression LeastSquares and prove that it has the near-optimal sample complexity. We also study a couple of new algorithms for the problem:

-

•

BatchAvgLeastSquares takes the average of several batches of least squares solutions at each node, so that one can interpolate between the batch size and the number of batches. We show that BatchAvgLeastSquares also has near-optimal sample complexity.

-

•

CauchyEst takes the median of solutions to several batches of linear systems at each node. We show that the algorithm specialized to polytrees, CauchyEstTree, has near-optimal sample complexity.

Experimentally111Code is available at https://github.com/YohannaWANG/CauchyEst, we show that for uncontaminated, realizable data, the LeastSquares algorithm performs best, but in the presence of contamination or DAG misspecification, CauchyEst/CauchyEstTree and BatchAvgLeastSquares respectively perform better.

1 Introduction

Linear structural equation models (SEMs) are widely used to model interactions among multiple variables, and they have found applications in diverse areas, including economics, genetics, sociology, psychology and education; see [Mue99, Mul09] for pointers to the extensive literature in this area. Gaussian Bayesian networks are a popularly used form of SEMs where: (i) there are no hidden/unobserved variables, (ii) each variable is a linear combination of its parents plus a Gaussian noise term, and (iii) all noise terms are independent. The structure of a Bayesian network refers to the directed acyclic graph (DAG) that prescribes the parent nodes for each node in the graph.

This work studies the task of learning a Gaussian Bayesian network given its structure. The problem is obviously quite fundamental and has been subject to extensive prior work. The usual formulation of this problem is in terms of parameter estimation, where one wants a consistent estimator that exactly recovers the parameters of the Bayesian network in the limit, as the the number of samples approaches infinity. In contrast, we consider the problem from the viewpoint of distribution learning [KMR+94]. Analogous to Valiant’s famous PAC model for learning Boolean functions [Val84], the goal here is to learn, with high probability, a distribution that is close to the ground-truth distribution , using an efficient algorithm. In this setting, pointwise convergence of the parameters is no longer a requirement; the aim is rather to approximately learn the induced distribution. Indeed, this relaxed objective may be achievable when the former may not be (e.g., for ill-conditioned systems) and can be the more relevant requirement for downstream inference tasks. Diakonikolas [Dia16] surveys the current state-of-the-art in distribution learning from an algorithmic perspective.

Consider a Gaussian Bayesian network with variables with parameters in the form of coefficients222We write to emphasize that is the parent of in the Bayesian network. ’s and variances ’s. For each , the linear structural equation for variable , with parent indices , is as follows:

for some unknown parameters ’s and ’s. If a variable has no parents, then is simply an independent Gaussian. Our goal is to efficiently learn a distribution such that

while minimizing the number of samples we draw from as a function of , and relevant structure parameters. Here, denotes the total variation or statistical distance between two distributions:

One way to approach the problem is to simply view as an -dimensional multivariate Gaussian. In this case, it is known that the Gaussian defined by the empirical covariance matrix , computed with samples from , is -close in TV distance to with constant probability [ABDH+20, Appendix C]. This sample complexity is also necessary for learning general -dimensional Gaussians and hence general Gaussian Bayesian networks on variables.

We focus therefore on the setting where the structure of the network is sparse.

Assumption 1.1.

Each variable in the Bayesian network has at most parents. i.e. .

Sparsity is a common and very useful assumption for statistical learning problems; see the book [HTW19] for an overview of the role of sparsity in regression. More specifically, in our context, the assumption of bounded in-degree is popular (e.g., see [Das97, BCD20]) and also very natural, as it reflects the belief that in the correct model, each linear structural equation involves a small number of variables333More generally, one would want to assume a bound on the “complexity” of each equation. In our context, as each equation is linear, the complexity is simply proportional to the in-degree..

1.1 Our contributions

-

1.

Analysis of MLE LeastSquares and a distributed-friendly generalization BatchAvgLeastSquares

The standard algorithm for parameter estimation of Gaussian Bayesian networks is to perform node-wise least squares regression. It is easy to see that LeastSquares is the maximum likelihood estimator. However, to the best of our knowledge, there did not exist an explicit sample complexity bound for this estimator. We show that the sample complexity for learning to within TV distance using LeastSquares requires only samples, which is nearly optimal.We also give a generalization dubbed BatchAvgLeastSquares which solves multiple batches of least squares problems (on smaller systems of equations), and then returns their mean. As each batch is independent from the others, they can be solved separately before their solutions are combined. Our analysis will later show that we can essentially interpolate between “batch size” and “number of batches” while maintaining a similar total sample complexity – LeastSquares is the special case of a single batch. Notably, we do not require any additional assumptions about the coefficients or variance terms in the analyses of LeastSquares and BatchAvgLeastSquares.

-

2.

New algorithms based on Cauchy random variables: CauchyEst and CauchyEstTree

We develop a new algorithm CauchyEst. At each node, CauchyEst solves several small linear systems of equations and takes the component-wise median of the obtained solution to obtain an estimate of the coefficients for the corresponding structural equation. In the special case of bounded-degree polytree structures, where the underlying undirected Bayesian network is acyclic, we specialize the algorithm to CauchyEstTree for which we give theoretical guarantees. Polytrees are of particular interest because inference on polytree-structured Bayesian networks can be performed efficiently [KP83, Pea86]. On polytrees, we show that the sample complexity of CauchyEstTree is also . Somewhat surprisingly, our analysis (as the name of the algorithm reflects) involves Cauchy random variables which usually do not arise in the context of regression. -

3.

Hardness results

In Section 5, we show that our sample complexity upper-bound is nearly optimal in terms of the dependence on the parameters , , and . In particular, we show that learning the coefficients and noises of a linear structural equation model (on variables, with in-degree at most ) up to an -error in -distance with probability 2/3 requires samples in general. We use a packing argument based on Fano’s inequality to achieve this result. -

4.

Extensive experiments on synthetic and real-world networks

We experimentally compare the algorithms studied here as well as other well-known methods for undirected Gaussian graphical model regression, and investigate how the distance between the true and learned distributions changes with the number of samples. We find that the LeastSquares estimator performs the best among all algorithms on uncontaminated datasets. However, CauchyEst, CauchyEstTree and BatchMedLeastSquares outperform LeastSquares and BatchAvgLeastSquares by a large margin when a fraction of the samples are contaminated. In non-realizable/agnostic learning case, BatchAvgLeastSquares, CauchyEst, and CauchyEstTree performs better than the other algorithms.

1.2 Outline of paper

In Section 2, we relate KL divergence with TV distance and explain how to decompose the KL divergence into terms so that it suffices for us to estimate the parameters for each variable independently. We also give an overview of our two-phased recovery approach and explain how to use recovered coefficients to estimate the variances via VarianceRecovery. For estimating coefficients, Section 3 covers the estimators based on linear least squares (LeastSquares and BatchAvgLeastSquares) while Section 4 presents our new Cauchy-based algorithms (CauchyEst and CauchyEstTree). To complement our algorithmic results, we provide hardness results in Section 5 and experimental evaluation in Section 6.

For a cleaner exposition, we defer some formal proofs to Appendix B.

1.3 Further related work

Bayesian networks, both in discrete and continuous settings, were formally introduced by Pearl [Pea88] in 1988 to model uncertainty in AI systems. For the continuous case, Pearl considered the case when each node is a linear function of its parents added with an independent Gaussian noise [Pea88, Chapter 7]. The parameter learning problem – recovering the distribution of nodes conditioned on its parents from data – is well-studied in practice, and maximum likelihood estimators are known for various simple settings such as when the conditional distribution is Gaussian or the variables are discrete-valued. See for example the implementation of fit in the R package bnlearn [Scu20].

The focus of our paper is to give formal guarantees for the parameter learning in the PAC framework introduced by Valiant [Val84] in 1984. Subsequently, Haussler [Hau18] generalized this framework for studying parameter and density estimation problems of continuous distributions. Dasgupta [Das97] first looked at the problem of parameter learning for fixed structure Bayesian networks in the discrete and continuous settings and gave finite sample complexity bounds for these problems based on the VC-dimensions of the hypothesis classes. In particular, he gave an algorithm for learning the parameters of a Bayes net on binary variables of bounded in-degree in distance using a quadratic in samples. Subsequently, tight (linear) sample complexity upper and lower bounds were shown for this problem [BGMV20, BGPV20, CDKS20]. To the best of our knowledge, a finite PAC-style bound for fixed-structure Gaussian Bayesian networks was not known previously.

The question of structure learning for Gaussian Bayesian networks has been extensively studied. A number of works [PB14, GH17, CDW19, PK20, Par20, GDA20] have proposed increasingly general conditions for ensuring identifiability of the network structure from observations. Structure learning algorithms that work for high-dimensional Gaussian Bayesian networks have also been proposed by others (e.g., see [AZ15, AGZ19, GZ20]).

2 Preliminaries

In this section, we discuss why we upper bound the total variational distance using KL divergence and give a decomposition of the KL divergence into terms, one associated with each variable in the Bayesian network. This decomposition motivates why our algorithms and analysis focus on recovering parameters for a single variable. We also present our general two-phased recovery approach and explain how to estimate variances using recovered coefficients in Section 2.6.

2.1 Notation

A Bayesian network (Bayes net in short) is a joint distribution over variables defined by the underlying directed acyclic graph (DAG) . The DAG encodes the dependence between the variables where and if and only if is a parent of . For any variable , we use the set to represent the indices of ’s parents. Under this notation, each variable of is independent of ’s non-descendants conditioned on . Therefore, using Bayes rule in the topological order of , we have

Without loss of generality, by renaming variables, we may assume that each variable only has ancestors with indices smaller than . We also define as the number of parents of and to be average in-degree. Furthermore, a DAG is a polytree if the undirected version of is a acyclic.

We study the realizable setting where our unknown probability distribution is Markov with respect to the given Bayesian network. We denote the true (hidden) parameters associated with by . Our algorithms recover parameter estimates such that the induced probability distribution is close in total variational distance to . For each , is the set of ground truth parameters associated with variable , is the coefficients associated to , is the variance of , is our estimate of .

In the course of the paper, we will often focus on the perspective a single variable of interest. This allows us to drop a subscript for a cleaner discussion. Let us denote such a variable of interest by and use the index . Without loss of generality, by renaming variables, we may further assume that the parents of are . By 1.1, we know that . We can write where . We use matrix to denote the covariance matrix defined by the parents of , where and is the Cholesky decomposition of . Under this notation, we see the vector is distributed as a multivariate Gaussian. Our goal is then to produce estimates for each . For notational convenience, we can group the coefficients into and . The vector captures the entry-wise gap between our estimates and the ground truth.

We write to mean and to mean the size of a set . For a matrix , denotes its -th entry. We use to both mean the operator/spectral norm for matrices and -norm for vectors, which should be clear from context. We hide absolute constant multiplicative factors and multiplicative factors poly-logarithmic in the argument using standard notations: , , and .

2.2 Basic facts and results

We begin by stating some standard facts and results.

Fact 2.1.

Suppose . Then, for any , .

Fact 2.2.

Consider any matrix with rows . For any and any vector with i.i.d. entries, we have that .

Fact 2.3 (Theorem 2.2 in [Gut09])).

Let be i.i.d. -dimensional multivariate Gaussians with covariance (i.e. ). If is the matrix formed by stacking as rows of , then where is a random matrix with i.i.d. entries.444The transformation stated in [Gut09, Theorem 2.2, page 120] is for a single multivariate Gaussian vector, thus we need to take the transpose when we stack them in rows. Note that and are identically distributed.

Lemma 2.4 (Equation 2.19 in [Wai19]).

Let , where each . Then, and for any , we have .

Lemma 2.5 (Consequence of Corollary 3.11 in [BVH+16]).

Let be a matrix with i.i.d. entries where . Then, for some universal constant , .

Lemma 2.6.

Let be a matrix with i.i.d. entries. Then, for any constant and ,

Proof.

See Appendix B. ∎

Lemma 2.7.

Let be a matrix with i.i.d. entries and be a vector with i.i.d. entries, where and are independent. Then, for any constant ,

Proof.

See Appendix B. ∎

The next result gives the non-asymptotic convergence of medians of Cauchy random variables. We use this result in the analysis of CauchyEst, and it may be of independent interest.

Lemma 2.8.

[Non-asymptotic convergence of Cauchy median] Consider a collection of i.i.d. random variables . Given a threshold , we have

Proof.

See Appendix B. ∎

2.3 Distance and divergence between probability distributions

Recall that we are given sample access to an unknown probability distribution over the values of and the corresponding structure of a Bayesian network on variables. We denote as the true (hidden) parameters, inducing the probability distribution , which we estimate by . In this work, we aim to recover parameters such that our induced probability distribution is as close as possible to in total variational distance.

Definition 2.9 (Total variational (TV) distance).

Given two probability distributions and over , the total variational distance between them is defined as .

Instead of directly dealing with total variational distance, we will instead bound the Kullback–Leibler (KL) divergence and then appeal to the Pinsker’s inequality [Tsy08, Lemma 2.5, page 88] to upper bound via . We will later show algorithms that achieve .

Definition 2.10 (Kullback–Leibler (KL) divergence).

Given two probability distributions and over , the KL divergence between them is defined as .

Fact 2.11 (Pinsker’s inequality).

For distributions and , .

Thus, if samples are needed to learn a distribution such that , samples are needed to ensure .

2.4 Decomposing the KL divergence

For a set of parameters , denote as the subset of parameters that are relevant to the variable . Following the approach of [Das97]555[Das97] analyzes the non-realizable setting where the distribution may not correspond to the causal structure of the given Bayesian network. As we study the realizable setting, we have a much simpler derivation., we decompose into terms that can be computed by analyzing the quality of recovered parameters for each variable .

For notational convenience, we write to mean , to mean the values given to parents of variable by , and to mean . Let us define

where each and represent the parameters that relevant to variable from and respectively. By the Bayesian network decomposition of joint probabilities and marginalization, one can show that

See Appendix A for the full derivation details.

2.5 Bounding for an arbitrary variable

We now analyze with respect to the our estimates and the hidden true parameters for any . For derivation details, see Appendix A.

With respect to variable , one can derive . Thus,

| (1) |

where is the covariance matrix associated with variable , is the coefficients and variance associated with variable , are the estimates for , and .

Proposition 2.12 (Implication of KL decomposition).

Let be a constant. Suppose has the following properties for each :

| (Condition 1) |

| (Condition 2) |

Then, for all . Thus, .666For a cleaner argument, we are bounding . This is qualitatively the same as showing since one can repeat the entire analysis with .

Proof.

Consider an arbitrary fixed . Denote . Observe777This inequality is also used in [ABDH+20, Lemma 2.9]. that for . Since , Eq. Condition 2 implies that . Then,

| By Condition 2 | ||||

| Since | ||||

| Holds when | ||||

Meanwhile,

| By Condition 1 | ||||

| Since | ||||

| Holds when | ||||

Putting together, we see that . ∎

2.6 Two-phased recovery approach

Algorithm 1 states our two-phased recovery approach. We estimate the coefficients of the Bayesian network in the first phase and use them to recover the variances in the second phase.

Motivated by Proposition 2.12, we will estimate parameters for each variable in an independent fashion888Given the samples, parameters related to each variable can be estimated in parallel.. We will provide various coefficient recovery algorithms in the subsequent sections. These algorithms will recover coefficients that satisfy Condition 1 for each variable . We evaluate them empirically in Section 6. For variance recovery, we use VarianceRecovery for each variable by computing the empirical variance999Except in our experiments with contaminated data in Section 6 where we use the classical median absolute devation (MAD) estimator. See Appendix C for a description. of such that the recovered variance satisfies Condition 2.

To analyze VarianceRecovery, we first prove guarantees for an arbitrary variable and then take union bound over variables.

Lemma 2.13.

Consider Algorithm 2. Fix any arbitrary variable of interest with parents, parameters , and associated covariance matrix . Suppose we have coefficient estimates such that . Suppose . With samples, we recover variance estimate such that

Proof.

We first argue that , then apply standard concentration bounds for random variables (see Lemma 2.4).

For any sample , we see that , where is an unknown constant vector (because we do not actually know ). For fixed , we see that . Since and are independent, we have that . So, for any sample , . Therefore, . Let us define

Since , if , then . We first make two observations:

-

1.

For , .

-

2.

For , .

Using Lemma 2.4 with the above discussion, we have

The claim follows by setting . ∎

Corollary 2.14 (Guarantees of VarianceRecovery).

Consider Algorithm 2. Suppose and we have coefficient estimates such that for all . With samples, we recover variance estimate such that

The total running time is .

Proof.

For each , apply Lemma 2.13 with and , then take the union bound over all variables.

The computational complexity for a variable with parents is . Since , the total runtime is . ∎

In Section 5, we show that the sample complexity is nearly optimal in terms of the dependence on and . We remark that we use one batch of samples to use for all the nodes; this is possible as we can obtain high-probability bounds on the error events at each node.

3 Coefficient estimators based on linear least squares

In this section, we provide algorithms LeastSquares and BatchAvgLeastSquares for recovering the coefficients in a Bayesian network using linear least squares. As discussed in Section 2.6, we will recover the coefficients for each variable such that Condition 1 is satisfied. To do so, we estimate the coefficients associated with each individual variable using independent samples. At each node, LeastSquares computes an estimate by solving the linear least squares problem with respect to a collection of sample observations. In Section 3.2, we generalize this approach via BatchAvgLeastSquares by allowing any interpolation between “batch size” and “number of batches” – LeastSquares is a special case of a single batch. Since each solution to batch can be computed independently before their results are combined, BatchAvgLeastSquares facilitates parallelism.

3.1 Vanilla least squares

Consider an arbitrary variable with parents. Using independent samples, we form matrix , where the row consists of sample values , and the column vector . Then, we define as the solution to the least squares problem . The pseudocode of LeastSquares is given in Algorithm 3 and Theorem 3.1 states its guarantees.

Theorem 3.1 (Distribution learning using LeastSquares).

Let . Suppose is a fixed directed acyclic graph on variables with degree at most . Given samples from an unknown Bayesian network over , if we use LeastSquares for coefficient recovery in Algorithm 1, then with probability at least , we recover a Bayesian network over such that in time.101010In particular, this gives a guarantee for learning centered multivariate Gaussians using samples. See e.g. [ABDH+20] for an analogous guarantee.

Our analysis begins by proving guarantees for an arbitrary variable.

Lemma 3.2.

Consider Algorithm 3. Fix an arbitrary variable with parents, parameters , and associated covariance matrix . With samples, for any constants and , we recover the coefficients such that

Proof.

Since , it suffices to bound .

Without loss of generality, the parents of are . Define , , and as in Algorithm 3. Let be the instantiations of Gaussian in the samples. By the structural equations, we know that . So,

We can now establish Condition 1 of Proposition 2.12 for LeastSquares.

Lemma 3.3.

Consider Algorithm 3. With samples, we recover the coefficients such that

The total running time is .

Proof.

By setting , , and in Lemma 3.2, we have

for any . The claim holds by a union bound over all variables.

The computational complexity for a variable with parents is . Since and , the total runtime is . ∎

Theorem 3.1 follows from combining the guarantees of LeastSquares and VarianceRecovery (given in Lemma 3.3 and Corollary 2.14 respectively) via Proposition 2.12.

Proof of Theorem 3.1.

We will show sample and time complexities before giving the proof for the distance.

Let and . Then, the total number of samples needed is . LeastSquares runs in time while VarianceRecovery runs in time. Therefore, the overall running time is .

By Lemma 3.3, LeastSquares recovers coefficients such that

By Corollary 2.14 and using the recovered coefficients from LeastSquares, VarianceRecovery recovers variance estimates such that

As our estimated parameters satisfy Condition 1 and Condition 2, Proposition 2.12 tells us that . Thus, . The claim follows by setting throughout. ∎

3.2 Interpolating between batch size and number of batches

We now discuss a generalization of LeastSquares. In a nutshell, for each variable with parents, BatchAvgLeastSquares solves batches of linear systems made up of samples and then uses the mean of the recovered solutions as an estimate for the coefficients. Note that one can interpolate between different values of and , as long as (so that the batch solutions are correlated to the true parameters). The pseudocode of BatchAvgLeastSquares is provided in Algorithm 4 and the guarantees are given in Theorem 3.1.

In Section 6, we also experimented on a variant of BatchAvgLeastSquares dubbed BatchMedLeastSquares, where is defined to be the coordinate-wise median of the vectors. However, in the theoretical analysis below, we only analyze BatchAvgLeastSquares.

Theorem 3.4 (Distribution learning using BatchAvgLeastSquares).

Let . Suppose is a fixed directed acyclic graph on variables with degree at most . Given samples from an unknown Bayesian network over , if we use BatchAvgLeastSquares for coefficient recovery in Algorithm 1, then with probability at least , we recover a Bayesian network over such that in time.

Our approach for analyzing BatchAvgLeastSquares is the same as our approach for LeastSquares: we prove guarantees for an arbitrary variable and then take union bound over variables. At a high-level, for each node , for every fixing of the randomness in generating , we show that each is a gaussian. Since the iterations are independent, is also a gaussian. Its variance is itself a random variable but can be bounded with high probability using concentration inequalities.

Lemma 3.5.

Consider Algorithm 4. Fix any arbitrary variable of interest with parents, parameters , and associated covariance matrix . With and , for some universal constants and , we recover coefficients estimates such that

Proof.

Without loss of generality, the parents of are . For , define , , and as the quantities involved in the batch of Algorithm 4. Let be the instantiations of Gaussian in the samples for the batch. By the structural equations, we know that . So,

By 2.3, we can express where matrix is a random matrix with i.i.d. entries. So, we see that

For any , 2.2 tells us that

We can upper bound each term as follows:

When , Lemma 2.6 tells us that . Meanwhile, Lemma 2.5 tells us that for some universal constant . Let be the event that for any . Applying union bound with the conclusions from Lemma 2.6 and Lemma 2.5, we have

Conditioned on event , standard Gaussian tail bounds (e.g. see 2.1) give us

where the second last inequality is because of event and the last inequality is because , since .

Thus, applying a union bound over all entries of and accounting for , we have

for some universal constant .

The claim follows by observing that and applying the assumptions on and . ∎

We can now establish Condition 1 of Proposition 2.12 for BatchAvgLeastSquares.

Lemma 3.6 (Coefficient recovery guarantees of BatchAvgLeastSquares).

Consider Algorithm 4. With samples, where and , we recover coefficient estimates such that

The total running time is .

Proof.

For each , apply Lemma 3.5 with , then take the union bound over all variables.

The computational complexity for a variable with parents is . Since and , the total runtime is . ∎

Theorem 3.4 follows from combining the guarantees of BatchAvgLeastSquares and VarianceRecovery (given in Lemma 3.6 and Corollary 2.14 respectively) via Proposition 2.12.

Proof of Theorem 3.4.

We will show sample and time complexities before giving the proof for the distance.

Let and . Then, the total number of samples needed is . BatchAvgLeastSquares runs in time while VarianceRecovery runs in time. Therefore, the overall running time is .

By Lemma 3.6, BatchAvgLeastSquares recovers coefficients such that

By Corollary 2.14 and using the recovered coefficients from BatchAvgLeastSquares, VarianceRecovery recovers variance estimates such that

As our estimated parameters satisfy Condition 1 and Condition 2, Proposition 2.12 tells us that . Thus, . The claim follows by setting throughout. ∎

4 Coefficient recovery algorithm based on Cauchy random variables

In this section, we provide novel algorithms CauchyEst and CauchyEstTree for recovering the coefficients in polytree Bayesian networks. We will show that CauchyEstTree has near-optimal sample complexity, and later in Section 6, we will see that both these algorithms outperform LeastSquares and BatchAvgLeastSquares on randomly generated Bayesian networks. Of technical interest, our analysis involves Cauchy random variables, which are somewhat of a rarity in statistical learning. As in LeastSquares and BatchAvgLeastSquares, CauchyEst and CauchyEstTree use independent samples to recover the coefficients associated to each individual variable in an independent fashion.

Consider an arbitrary variable with parents. The intuition is as follows: if , then one can form a linear system of equations using samples to solve for the coefficients exactly for each . Unfortunately, is non-zero in general. Instead of exactly recovering , we partition the independent samples into batches involving samples and form intermediate estimates by solving a system of linear equation for each batch (see Algorithm 5). Then, we “combine” these intermediate estimates to obtain our estimate .

Consider an arbitrary copy of recovered coefficients . Let be a vector measuring the gap between these recovered coefficients and the ground truth, where . Lemma 4.1 gives a condition where a vector is term-wise Cauchy. Using this, Lemma 4.2 shows that each entry of the vector is distributed according to , although the entries may be correlated with each other in general.

Lemma 4.1.

Consider the matrix equation where , , and such that entries of and are independent Gaussians, elements in each column of have the same variance, and all entries in have the same variance. That is, and . Then, for all , we have that .

Proof.

As the event that is singular has measure zero, we can write . By Cramer’s rule,

where is the determinant of , is the adjugate/adjoint matrix of , and is the cofactor matrix of . Recall that the can defined with respect to elements in : For any column ,

So, . Thus, for any ,

∎

Lemma 4.2.

Consider a batch estimate from Algorithm 5. Then, is entry-wise distributed as , where . Note that the entries of may be correlated in general.

Proof.

Observe that each row of is an independent sample drawn from a multivariate Gaussian . By denoting as the samples of , we can write and thus by rearranging terms. By 2.3, we can express where matrix is a random matrix with i.i.d. entries. By substituting into , we have .111111Note that event that is singular has measure 0.

By applying Lemma 4.1 with the following parameters: , we conclude that each entry of is distributed as . However, note that these entries are generally correlated. ∎

If we have direct access to the matrix , then one can do the following (see Algorithm 6): for each coordinate , take medians121212The typical strategy of averaging independent estimates does not work here as the variance of a Cauchy variable is unbounded. of to form and then estimate . By the convergence of Cauchy random variables to their median, one can show that each converges to the true coefficient as before. Unfortunately, we do not have and can only hope to estimate it with some matrix using the empirical covariance matrix .

4.1 Special case of polytree Bayesian networks

If the Bayesian network is a polytree, then is diagonal. In this case, we specialize CauchyEst to CauchyEstTree and are able to give theoretical guarantees. We begin with simple corollary which tells us that the entry of is distributed according to .

Corollary 4.3.

Consider a batch estimate from Algorithm 5. If the Bayesian network is a polytree, then .

Proof.

Observe that each row of is an independent sample drawn from a multivariate Gaussian . By denoting as the samples of , we can write and thus by rearranging terms. Since the parents of any variable in a polytree are not correlated, each element in the column of is a Gaussian random variable.

By applying Lemma 4.1 with the following parameters: , we conclude that . ∎

For each , we combine the independently copies of using the median. For arbitrary sample and parent index , observe that . Since is just an unknown constant,

Since each term is i.i.d. distributed as , the term converges to 0 with sufficiently large , and thus converges to the true coefficient .

The goal of this section is to prove Theorem 4.4 given CauchyEstTree, whose pseudocode we provide in Algorithm 7.

Theorem 4.4 (Distribution learning using CauchyEstTree).

Let . Suppose is a fixed directed acyclic graph on variables with degree at most . Given samples from an unknown Bayesian network over , if we use CauchyEstTree for coefficient recovery in Algorithm 1, then with probability at least , we recover a Bayesian network over such that in time.

Note that for polytrees, is just a constant. As before, we will first prove guarantees for an arbitrary variable and then take union bound over variables.

Lemma 4.5.

Consider Algorithm 7. Fix an arbitrary variable of interest with parents, parameters , and associated covariance matrix . With samples, we recover coefficient estimates such that

Proof.

We can now establish Condition 1 of Proposition 2.12 for CauchyEstTree.

Lemma 4.6.

Consider Algorithm 7. Suppose the Bayesian network is a polytree. With samples, we recover coefficient estimates such that

The total running time is where is the matrix multiplication exponent.

Proof.

For each , apply Lemma 4.5 with and , then take the union bound over all variables.

The runtime of Algorithm 5 is the time to find the inverse of a matrix, which is for some . Therefore, the computational complexity for a variable with parents is . Since and , the total runtime is . ∎

We are now ready to prove Theorem 4.4.

Theorem 4.4 follows from combining the guarantees of CauchyEstTree and VarianceRecovery (given in Lemma 4.6 and Corollary 2.14 respectively) via Proposition 2.12.

Proof of Theorem 4.4.

We will show sample and time complexities before giving the proof for the distance.

Let and . Then, the total number of samples needed is . CauchyEstTree runs in time while VarianceRecovery runs in time, where is the matrix multiplication exponent. Therefore, the overall running time is .

By Lemma 4.6, CauchyEstTree recovers coefficients such that

By Corollary 2.14 and using the recovered coefficients from CauchyEstTree, VarianceRecovery recovers variance estimates such that

As our estimated parameters satisfy Condition 1 and Condition 2, Proposition 2.12 tells us that . Thus, . The claim follows by setting throughout. ∎

5 Hardness for learning Gaussian Bayesian networks

In this section, we present our hardness results. We first show a tight lower-bound for the simpler case of learning Gaussian product distributions in total variational distance (Theorem 5.2). Next, we show a tight lower-bound for learning Gaussian Bayes nets with respect to total variation distance. (Theorem 5.3). In both cases, our hardness applies to the problems of learning the covariance matrix of a centered multivariate Gaussian, which is equivalent to recovering the coefficients and noises of the underlying linear structural equation.

We will need the following fact about the variation distance between multivariate Gaussians and a Frobenius norm between the covariance matrices.

Fact 5.1 ([DMR18]).

There exists two universal constants , such that for any two covariance matrices and ,

Theorem 5.2.

Given samples from a -fold Gaussian product distribution , learning a such that in with success probability 2/3 needs samples in general.

Proof.

Let be a set with the following properties: 1) and 2) every have a Hamming distance . Existence of such a set is well-known. We create a class of Gaussian product distributions based on as follows. For each , we define a distribution such that if the -th bit of is 0, we use the distribution for the -th component of ; else if the -th bit is 1, we use the distribution . Then for any , . Fano’s inequality tells us that guessing a random distribution from correctly with 2/3 probability needs samples.

5.1 tells us that for any , for some constant . Consider any algorithm for learning a random distribution from in -distance at most for a sufficiently small constant . Let the learnt distibution be . Due to triangle inequality, 5.1, and an appropriate choice of , must be the unique distribution from satisfying for an appropriate choice of . We can find this unique distribution by computing for every covariance matrix from and guess the random distribution correctly. Hence, the lower-bound follows. ∎

Now, we present the lower-bound for learning general Bayes nets.

Theorem 5.3.

For any and such that , there exists a DAG over of in-degree such that learning a Gaussian Bayes net on such that with success probability 2/3 needs samples in general.

Let be a set with the following properties: 1) and 2) every have a Hamming distance . Existence of such a set is well-known. We define a class of distributions based on and the graph shown in Fig. 1 as follows. Each vertex of each distribution in has a noise, and hence no learning is required for the noises. Each coefficient takes one of two values corresponding to bits respectively. For each , we define to be the vector of coefficients corresponding to the bit-pattern of as above. We have possible bit-patterns, which we use to define each conditional probability . Then, we have a class of distributions for the overall Bayes net. We prune some of the distributions to get the desired subclass , such that is the largest-sized subset with any pair of distributions in the subset differing in at least bit-patterns (out of many) for the ’s.

Claim 5.4.

.

Proof.

Let and . Then, there are possible coefficient-vectors/distributions in . We create an undirectred graph consisting of a vertex for each coefficient, and edges between any pair of vertices differing in at least bit-patterns. Note that is -regular for . Turan’s theorem says that there is a clique of size . We define to be the vertices of this clique.

To show that is as large as desired, it suffices to show . The result follows by noting . ∎

We also get for any two distributions in this model with the coefficients respectively, from (1). Hence, any pair of has . Then, Fano’s inequality tells us that given a random distribution , it needs at least samples to guess the correct one with 2/3 probability. We need the following fact about the -distance among the members of .

Claim 5.5.

Let be two distinct distributions (i.e. ) with coefficient-vectors respectively. Then, .

Proof.

By Pinsker’s inequality, we have . Here we show . Let . By construction, and differ in conditional probabilities. Let be the coefficient-vectors on the coordinates where they differ. Let and be the corresponding marginal distributions on variables, and their covariance matrices. In the following, we show . This proves the claim from Fact 5.1.

Let be the adjacency matrix for , where the sources appear last in the rows and columns and in the matrix , each denote the coefficient from source to sink . Similarly, we define using a coefficient matrix . Let and denote the columns of and . Then for every , and differ in at least coordinates by construction.

By definition and , where corresponds to certain matrices not relevant to our discussion131313The missing (symmetric) submatrix of is the identity matrix added with the entries . The missing (symmetric) submatrix of is the identity matrix added with the inner products of the rows of .. Let . It can be checked that for every and . Now for every , each of the places that and differ, . Hence, their total contribution in . ∎

Proof of Theorem 5.3.

Consider any algorithm which learns a random distribution from in distance , for a small enough constant . Let the learnt distribution be . Then, from Fact 5.1, and triangle inequality of , only the unique distribution with would satisfy for every covariance matrix from , for an appropriate choice of . This would reveal the random distribution, hence the lower-bound follows. ∎

6 Experiments

General Setup

For our experiments, we explored both polytree networks (generated using random Prüfer sequences) and Erdős-Rényi graphs with bounded expected degrees (i.e. for some bounded degree parameter ) using the Python package networkx [HSSC08]. Our non-zero edge weights are uniformly drawn from the range . Once the graph is generated, the linear i.i.d. data (with variables and sample size ) is generated by sampling the model , where and is a strictly upper triangular matrix.141414We do not report the results over the varied variance synthetic data, because their performance are close to the performance of the equal variance synthetic data. We report KL divergence between the ground truth and our learned distribution using Eq. 1, averaged over 20 random repetitions. All experiments were conducted on an Intel Core i7-9750H 2.60GHz CPU.

Algorithms

The algorithms used in our experiments are as follows: Graphical Lasso [FHT08], MLE (empirical) estimator, CLIME [CLL11], LeastSquares, BatchAvgLeastSquares, BatchMedLeastSquares, CauchyEstTree, and CauchyEst. Specifically, we use BatchAvg_LS+ and BatchMed_LS+ to represent the BatchAvgLeastSquares and BatchMedLeastSquares algorithms respectively with a batch size of at each node, where is the number of parents of that node.

Synthetic data

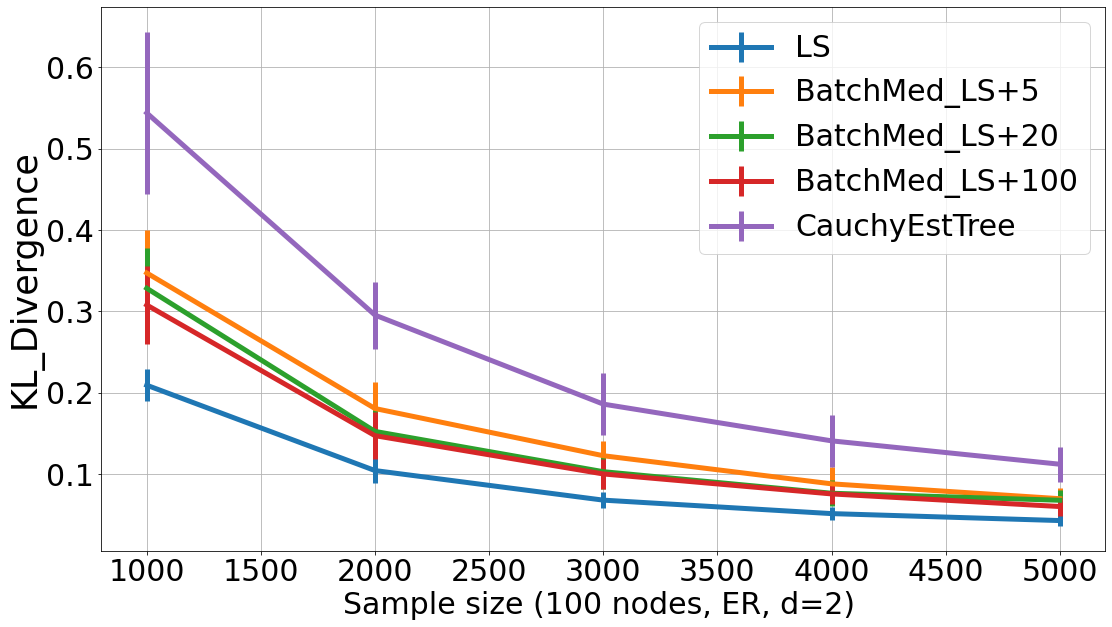

Fig. 2 compares the KL divergence between the ground truth and our learned distribution over 100 variables between the eight estimators mentioned above. The first three estimators are for undirected graph structure learning. For this reason, we are not using Eq. 1 but the common equation in [Duc07, page 13] for calculating the KL divergence between multivariate Gaussian distributions. Fig. 2(a) and Fig. 2(b) shows the results on ER graphs while Fig. 2(c) shows the results for random tree graphs. The performances of MLE and CLIME are very close to each other, thus are overlapped in Fig. 2(a). In figure Fig. 2(b), we take a closer look at the results from Fig. 2(a) for LeastSquares, BatchMedLeastSquares, BatchAvgLeastSquares, CauchyEstTree, and CauchyEst estimators for a clear comparison. In our experiments, we find that the latter five outperform the GLASSO, CLIME and MLE (empirical) estimators. With a degree 5 ER graph, CauchyEst performs better than CauchyEstTree, while LeastSquares performs best. In our experiments for our random tree graphs with in-degree 1 (see Fig. 2(c), we find that the performances between CauchyEstTree and CauchyEst are very close to each other and their plots overlap.

In our experiments, CauchyEst outperforms CauchyEstTree when the graph is generated with a higher degree parameter (e.g. ) and the resultant graph is unlikely to yield a polytree structure.

Real-world datasets

We also evaluated our algorithms on four real Gaussian Bayesian networks from R package bnlearn [Scu09]. The ECOLI70 graph provided by [SS05] contains 46 nodes and 70 edges. The MAGIC-NIAB graph from [SHBM14] contains 44 nodes and 66 edges. The MAGIC-IRRI graph contains 64 nodes and 102 edges, and the ARTH150 [ORS07] graph contains 107 nodes and 150 edges. Experimental results in Fig. 3 show that the error for LeastSquares is smaller than CauchyEst and CauchyEstTree for all the above datasets.

Contaminated synthetic data

The contaminated synthetic data is generated in the following way: we randomly choose samples with 5 nodes to be contaminated from the well-conditioned data over node graphs. The well-conditioned data has a noise for every variable, while the small proportion of the contaminated data has either or . In our experiments in Fig. 4 and Fig. 5, CauchyEst, CauchyEstTree, and BatchMedLeastSquares outperform BatchAvgLeastSquares and LeastSquares by a large margin. With more than 1000 samples, BatchMedLeastSquares with a batch size of performs similar to CauchyEst and CauchyEstTree, but performs worse with less samples. When comparing the performance between LeastSqures and BatchAvgLeastSquares over either a random tree or a ER graph, the experiment in Fig. 4(a) based on a random tree graph shows that LeastSqures performs better than BatchAvgLeastSquares when sample size is smaller than 2000, while BatchAvgLeastSquares performs relatively better with more samples. Experiment results in Fig. 5(a) based on ER degree 5 graphs is slightly different from Fig. 4(a). In Fig. 5(a), BatchAvgLeastSquares performs better than LeastSqures by a large margin. Besides, we can also observe that the performances of CauchyEst, CauchyEstTree, and BatchMedLeastSquares are better than the above two estimators and are consistent over different types of graphs. For all five algorithms, we use the median absolute devation for robust variance recovery [Hub04] in the contaminated case only (see Algorithm 8 in Appendix).

This is because both LeastSquares and BatchAvgLeastSquares use the sample covariance (of the entire dataset or its batches) in the coefficient estimators for the unknown distribution. The presence of a small proportion of outliers in the data can have a large distorting influence on the sample covariance, making them sensitive to atypical observations in the data. On the contrary, our CauchyEstTree and BatchMedLeastSquares estimators are developed using the sample median and hence are resistant to outliers in the data.

Contaminated real-world datasets

To test the robustness of the real data in the contaminated condition, we manually contaminate samples of 5 nodes from observational data in ECOLI70 and ARTH150. The results are reported in Fig. 6 and Fig. 7. In our experiments, CauchyEst and CauchyEstTree outperforms BatchAvgLeastSquares and LeastSquares by a large margin, and therefore are stable in both contaminated and well-conditioned case. Besides, note that different from the well-conditioned case, here CauchyEstTree performs slightly better than CauchyEst. This is because the Cholesky decomposition used in CauchyEst estimator takes contaminated-data into account.

Ill-conditioned synthetic data

The ill-conditioned data is generated in the following way: we classify the node sets into either well-conditioned or ill-conditioned nodes. The well-conditioned nodes have a noise, while ill-conditioned nodes have a noise. In our experiments, we choose 3 ill-conditioned nodes over 100 nodes. Synthetic data is sampled from either a random tree or a Erdős Rényi (ER) model with an expected number of neighbors . Experiments over ill-conditioned Gaussian Bayesian networks through 20 random repetitions are presented in Fig. 8. For the ill-conditioned settings, we sometimes run into numerical issues when computing the Cholesky decomposition of the empirical covariance matrix in our CauchyEst estimator. Thus, we only show the comparision results between LeastSquares, BatchAvgLeastSquares, BatchMedLeastSquares, and CauchyEstTree. Here also, the error for LeastSquares decreases faster than the other three estimators. The performance of BatchMedLeastSquares is worse than BatchAvgLeastSquares but slightly better than CauchyEstTree estimator.

Agnostic Learning

Our theoretical results treat the case that the data is realized by a distribution consistent with the given DAG. In this section, we explore learning of non-realizable inputs, so that there is a non-zero KL divergence between the input distribution and any distribution consistent with the given DAG.

We conduct agnostic learning experiments by fitting a random sub-graph of the ground truth graph. To do this, we first generate a 100-node ground truth graph , either a random tree with 4 random edges removed or a random ER graph with 9 random edges removed. Our algorithm will try to fit the data from the original Bayes net on on the edited graph . Fig. 9 reports the KL divergence learned over our five estimators. We find that BatchAvgLeastSquares estimator performs slightly better than all other estimators in both cases.

Effect of changing batch size

Next, we experiment the trade off between the batch-size (eg. batch size = [5, 20, 100]151515We use batch size up to 100 to ensure there are enough batch size from simulation data, so that either mean and median can converge.) and the KL-divergence of our BatchAvgLeastSquares and BatchMedLeastSquares estimators in detail. As shown in Fig. 10 and Fig. 11, when batch size increases, the results are closer to the LeastSquares estimator. In other words, LeastSquares can be seen as a special case of either BatchAvgLeastSquares or BatchMedLeastSquares with one batch only. Thus, when batch size increases, the performances of BatchAvgLeastSquares and BatchMedLeastSquares are closer to the LeastSquares estimator. On the contrary, the CauchyEstTree estimator can be seen as the estimator with a batch size of . Therefore, with smaller batch size (eg. batch size ), BatchAvgLeastSquares and BatchMedLeastSquares performs closer to the CauchyEstTree estimator.

Runtime comparison

We now give the amount of time spent by each algorithm to process a degree-5 ER graph on 100 nodes with 500 samples. LeastSquares algorithm takes 0.0096 seconds, BatchAvgLeastSquares with a batch size of takes 0.0415 seconds, BatchMedLeastSquares with a batch size of takes 0.0290 seconds, CauchyEstTree takes 0.6063 seconds, and CauchyEst takes 0.6307 seconds. The timings given above are representative of the relative running times of these algorithms across different graph sizes.

Takeaways

In summary, the LeastSquares estimator performs the best among all algorithms on uncontaminated datasets (both real and synthetic) generated from Gaussian Bayesian networks. This holds even when the data is ill-conditioned. However, if a fraction of the samples are contaminated, CauchyEst, CauchyEstTree and BatchMedLeastSquares outperform LeastSquares and BatchAvgLeastSquares by a large margin under different noise and graph types. If the data is not generated according to the input graph (i.e., the non-realizable/agnostic learning setting), then BatchAvgLeastSquares, CauchyEst, and CauchyEstTree have a better tradeoff between the error and sample complexity than the other algorithms, although we do not have a formal explanation.

Acknowledgements

This research/project is supported by the National Research Foundation, Singapore under its AI Singapore Programme (AISG Award No: AISG-PhD/2021-08-013).

References

- [ABDH+20] Hassan Ashtiani, Shai Ben-David, Nicholas JA Harvey, Christopher Liaw, Abbas Mehrabian, and Yaniv Plan. Near-optimal sample complexity bounds for robust learning of gaussian mixtures via compression schemes. Journal of the ACM (JACM), 67(6):1–42, 2020.

- [AGZ19] Bryon Aragam, Jiaying Gu, and Qing Zhou. Learning large-scale bayesian networks with the sparsebn package. Journal of Statistical Software, 91(1):1–38, 2019.

- [AZ15] Bryon Aragam and Qing Zhou. Concave penalized estimation of sparse gaussian bayesian networks. The Journal of Machine Learning Research, 16(1):2273–2328, 2015.

- [BCD20] Johannes Brustle, Yang Cai, and Constantinos Daskalakis. Multi-item mechanisms without item-independence: Learnability via robustness. In Proceedings of the 21st ACM Conference on Economics and Computation, pages 715–761, 2020.

- [BGMV20] Arnab Bhattacharyya, Sutanu Gayen, Kuldeep S Meel, and NV Vinodchandran. Efficient distance approximation for structured high-dimensional distributions via learning. Advances in Neural Information Processing Systems, 33, 2020.

- [BGPV20] Arnab Bhattacharyya, Sutanu Gayen, Eric Price, and NV Vinodchandran. Near-optimal learning of tree-structured distributions by chow-liu. arXiv preprint arXiv:2011.04144, 2020. ACM STOC 2021.

- [BVH+16] Afonso S Bandeira, Ramon Van Handel, et al. Sharp nonasymptotic bounds on the norm of random matrices with independent entries. Annals of Probability, 44(4):2479–2506, 2016.

- [CDKS20] C. L. Canonne, I. Diakonikolas, D. M. Kane, and A. Stewart. Testing bayesian networks. IEEE Transactions on Information Theory, 66(5):3132–3170, 2020.

- [CDW19] Wenyu Chen, Mathias Drton, and Y Samuel Wang. On causal discovery with an equal-variance assumption. Biometrika, 106(4):973–980, 2019.

- [CLL11] Tony Cai, Weidong Liu, and Xi Luo. A constrained l1 minimization approach to sparse precision matrix estimation. Journal of the American Statistical Association, 106(494):594–607, 2011.

- [Das97] Sanjoy Dasgupta. The sample complexity of learning fixed-structure bayesian networks. Mach. Learn., 29(2-3):165–180, 1997.

- [Dia16] Ilias Diakonikolas. Learning structured distributions. Handbook of Big Data, 267, 2016.

- [DMR18] Luc Devroye, Abbas Mehrabian, and Tommy Reddad. The total variation distance between high-dimensional gaussians. arXiv preprint arXiv:1810.08693, 2018.

- [Duc07] John Duchi. Derivations for linear algebra and optimization. Berkeley, California, 3(1):2325–5870, 2007.

- [FHT08] Jerome Friedman, Trevor Hastie, and Robert Tibshirani. Sparse inverse covariance estimation with the graphical lasso. Biostatistics, 9(3):432–441, 2008.

- [GDA20] Ming Gao, Yi Ding, and Bryon Aragam. A polynomial-time algorithm for learning nonparametric causal graphs. Advances in Neural Information Processing Systems, 33, 2020.

- [GH17] Asish Ghoshal and Jean Honorio. Learning identifiable Gaussian bayesian networks in polynomial time and sample complexity. In Proceedings of the 31st International Conference on Neural Information Processing Systems, pages 6460–6469, 2017.

- [Gut09] Allan Gut. An Intermediate Course in Probability. Springer New York, 2009.

- [GZ20] Jiaying Gu and Qing Zhou. Learning big gaussian bayesian networks: Partition, estimation and fusion. Journal of Machine Learning Research, 21(158):1–31, 2020.

- [Hau18] David Haussler. Decision theoretic generalizations of the PAC model for neural net and other learning applications. CRC Press, 2018.

- [HSSC08] Aric Hagberg, Pieter Swart, and Daniel S Chult. Exploring network structure, dynamics, and function using networkx. Technical report, Los Alamos National Lab.(LANL), Los Alamos, NM (United States), 2008.

- [HTW19] Trevor Hastie, Robert Tibshirani, and Martin Wainwright. Statistical learning with sparsity: the lasso and generalizations. Chapman and Hall/CRC, 2019.

- [Hub04] Peter J Huber. Robust statistics, volume 523. John Wiley & Sons, 2004.

- [JNG+19] Chi Jin, Praneeth Netrapalli, Rong Ge, Sham M Kakade, and Michael I Jordan. A short note on concentration inequalities for random vectors with subgaussian norm. arXiv preprint arXiv:1902.03736, 2019.

- [KMR+94] Michael Kearns, Yishay Mansour, Dana Ron, Ronitt Rubinfeld, Robert E Schapire, and Linda Sellie. On the learnability of discrete distributions. In Proceedings of the twenty-sixth annual ACM symposium on Theory of computing, pages 273–282, 1994.

- [KP83] H Kim and J Perl. ’a computational model for combined causal and diagnostic reasoning in inference systems’, 8th ijcai, 1983.

- [Mue99] Ralph O Mueller. Basic principles of structural equation modeling: An introduction to LISREL and EQS. Springer Science & Business Media, 1999.

- [Mul09] Stanley A Mulaik. Linear causal modeling with structural equations. CRC press, 2009.

- [ORS07] Rainer Opgen-Rhein and Korbinian Strimmer. From correlation to causation networks: a simple approximate learning algorithm and its application to high-dimensional plant gene expression data. BMC systems biology, 1(1):1–10, 2007.

- [Par20] Gunwoong Park. Identifiability of additive noise models using conditional variances. Journal of Machine Learning Research, 21(75):1–34, 2020.

- [PB14] Jonas Peters and Peter Bühlmann. Identifiability of Gaussian structural equation models with equal error variances. Biometrika, 101(1):219–228, 2014.

- [Pea86] Judea Pearl. Fusion, propagation, and structuring in belief networks. Artificial intelligence, 29(3):241–288, 1986.

- [Pea88] Judea Pearl. Probabilistic reasoning in intelligent systems: networks of plausible inference. Morgan Kaufmann Publishers, San Francisco, Calif, 2nd edition edition, 1988.

- [PK20] Gunwoong Park and Youngwhan Kim. Identifiability of Gaussian linear structural equation models with homogeneous and heterogeneous error variances. Journal of the Korean Statistical Society, 49(1):276–292, 2020.

- [RV09] Mark Rudelson and Roman Vershynin. Smallest singular value of a random rectangular matrix. Communications on Pure and Applied Mathematics: A Journal Issued by the Courant Institute of Mathematical Sciences, 62(12):1707–1739, 2009.

- [Scu09] Marco Scutari. Learning bayesian networks with the bnlearn r package. arXiv preprint arXiv:0908.3817, 2009.

- [Scu20] Marco Scutari. bnlearn, 2020. Version 4.6.1.

- [SHBM14] Marco Scutari, Phil Howell, David J Balding, and Ian Mackay. Multiple quantitative trait analysis using bayesian networks. Genetics, 198(1):129–137, 2014.

- [SS05] Juliane Schafer and Korbinian Strimmer. A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Statistical applications in genetics and molecular biology, 4(1), 2005.

- [Tsy08] Alexandre B Tsybakov. Introduction to nonparametric estimation. Springer Science & Business Media, 2008.

- [Val84] Leslie G Valiant. A theory of the learnable. Communications of the ACM, 27(11):1134–1142, 1984.

- [Wai19] Martin J Wainwright. High-dimensional statistics: A non-asymptotic viewpoint, volume 48. Cambridge University Press, 2019.

Appendix A Details on decomposition of KL divergence

In this section, we provide the full derivation of Eq. 1.

For notational convenience, we write to mean , to mean the values given to parents of variable by , and to mean . Observe that

| Marginalization | ||||

where is due to the Bayesian network decomposition of joint probabilities. Let us define

where each and represent the parameters that relevant to variable from and respectively. Under this notation, we can write .

Fix a variable of interest with parents , each with coefficient , and variance . That is, for some that is independent of . By denoting (i.e. ) and , we can write the conditional distribution density of as

We now analyze with respect to the our estimates and the hidden true parameters , where and .

With respect to variable , we see that

By defining , we can see that for any instantiation of ,

| By definition of | ||||

| Since is just a number | ||||

Denote the covariance matrix with respect to as , where . Then, we can further simplify as follows:

| From above | ||||

| From above | ||||

where is because while is because , , and .

In conclusion, we have

| (3) |

where is the covariance matrix associated with variable , is the coefficients and variance associated with variable , are the estimates for , and .

Appendix B Deferred proofs

This section provides the formal proofs that were deferred in favor for readability. For convenience, we will restate the statements before proving them.

The next two lemmata Lemma 2.6 and Lemma 2.7 are used in the proof of Lemma 3.2, which is in turn used in the proof of Theorem 3.1. We also use Lemma 2.6 in the proof of Lemma 3.5. The proof of Lemma 2.6 uses the standard result of Lemma B.1.

Lemma B.1 ([RV09]; Theorem 6.1 and Equation 6.10 in [Wai19]).

Let and be a matrix with i.i.d. entries. Denote as the smallest singular value of . Then, for any , we have .

See 2.6

Proof of Lemma 2.6.

Observe that is symmetric, thus is also symmetric and the eigenvalues of equal the singular values of . Also, note that event that is singular has measure 0.161616Consider fixing all but one arbitrary entry of . The event of this independent entry making has measure 0.

By definition of operation norm, equals the square root of maximum eigenvalue of

where the equality is because is symmetric. Since is invertible, we have , which is equal to the inverse of minimum eigenvalue of , which is in turn equal to the square of minimum singular value of .

Therefore, the following holds with probability at least :

where the second last inequality is due to Lemma B.1 and the last inequality holds when . ∎

See 2.7

Proof of Lemma 2.7.

Let us denote as the row of . Then, we see that . For any row , we see that . We will bound values of and separately.

It is well-known (e.g. see [JNG+19, Lemma 2]) that the norm of a Gaussian vector concentrates around its mean. So, . Since and are independent, we see that . By standard Gaussian bounds, we have that .

By applying a union bound over these two events, we see that for any row with probability . The claim follows from applying a union bound over all rows. ∎

See 2.8

Proof of Lemma 2.8.

Let be the number of values that are larger than , where . Similarly, let be the number of values that are smaller than . If and , then we see that .

For a random variable , we know that . For , we see that . By additive Chernoff bounds, we see that

Similarly, we have . The claim follows from a union bound over the events and . ∎

Appendix C Median absolute deviation

In this section, we give a pseudo-code of the well-known Median Absolute Deviation (MAD) estimator (see [Hub04] for example) which we use for the component-wise variance recovery in the contaminated setting. The scale factor, below, is needed to make the estimator unbiased.