Learning Representation for Anomaly Detection of Vehicle Trajectories

Abstract

Predicting the future trajectories of surrounding vehicles from their history trajectories is a critical task in autonomous driving. However, when small, carefully crafted perturbations are introduced into these history trajectories, the resulting anomalous (or adversarial) trajectories can significantly mislead the future trajectory prediction module of the ego vehicle, which may result in unsafe planning and even fatal accidents. It is therefore important for system safety to detect such anomalous trajectories of surrounding vehicles, yet few works have addressed this problem. In this work, we propose two novel methods for learning effective and efficient representations for online anomaly detection of vehicle trajectories. Unlike general time-series anomaly detection, anomalous vehicle trajectory detection must handle much richer road contexts, while the anomalous trajectories themselves exhibit fewer observable patterns. To address these challenges, our methods exploit contrastive learning techniques and trajectory semantics to capture the patterns underlying driving scenarios for effective anomaly detection under the supervised and unsupervised settings, respectively. Extensive experiments demonstrate that our supervised method based on contrastive learning and our unsupervised method based on reconstruction with a semantic latent space significantly improve anomalous trajectory detection over various baseline methods in their corresponding settings. We also demonstrate that our methods generalize to unseen patterns of anomalies.

I Introduction

Tremendous progress has been made in autonomous driving in recent years. An autonomous driving pipeline typically consists of several modules, such as sensing, perception, prediction, planning, and control. In particular, the prediction module encodes the past trajectories of other vehicles together with map context and decodes them into potential future trajectories of the surrounding vehicles, which the planning module then uses. Recent works [1, 2, 3, 4] have developed various deep learning-based trajectory prediction models that achieve strong performance in terms of the average error between predicted and ground-truth trajectories. However, improving average performance alone is not enough for autonomous driving systems, where robustness, safety, and security are critical.

Due to the complexity of real traffic situations and the limited coverage of training data, trajectory prediction inherently suffers from “long-tail” scenarios. The work in [5] further demonstrates that state-of-the-art trajectory prediction models can be significantly misled by a natural-looking but carefully crafted past trajectory of a surrounding vehicle, and discusses several defenses such as smoothing and SVM-based detection. The work in [6] shows that adversarial training can mitigate the effect of adversarial trajectories. However, few works have focused on advanced online anomaly detection methods for vehicle trajectories. We believe it is crucial to detect anomalous trajectories and scenarios in the prediction stage at runtime: online anomalous trajectory detection not only helps monitor the prediction module but also enhances the safety of the downstream planning [7] and control [8, 9] modules.

In this work, we consider two settings, supervised and unsupervised, depending on whether prior knowledge of the patterns of anomalous trajectories is available during training. Both settings can arise in real road situations, and they can make a significant difference to how representations should be learned. We therefore focus on detecting various patterns of anomalous vehicle trajectories in both settings and investigate which representations and corresponding learning techniques are most effective for this safety-critical task.

Learning representations for anomalous vehicle trajectory detection is more complicated than for general time-series anomaly detection because 1) driving scenarios contain rich contexts, such as road maps and interactions between agents, and 2) anomalous or adversarial trajectories may be associated with specific driving behaviors that are difficult to model. To tackle these challenges, an ideal anomaly detector should both represent the driving scenario effectively at the single-sample level and model the patterns underlying all normal and anomalous trajectories at the distribution level. We therefore first apply a state-of-the-art feature extractor based on graph neural networks, trained on a normal trajectory prediction dataset, to represent the trajectories together with the road contexts. On top of the extracted features, we add an encoder that captures the distribution-level patterns underlying anomalous and normal trajectories. In the supervised setting, we use a contrastive-learning-based encoder to separate the two patterns in the representation space. In the unsupervised setting, we introduce semantics of driving behavior to learn a general and effective latent space for anomaly detection in complex scenarios without labels.

We extensively compare the anomaly detection performance of different representations under various kinds of anomalies and test scenarios, and demonstrate that our proposed representations significantly improve anomalous trajectory detection over baseline methods. Our contributions are summarized as follows:

- We propose a supervised contrastive learning-based method and an unsupervised method with semantics-guided reconstruction for anomaly detection of vehicle trajectories, and demonstrate their effectiveness in their respective settings.
- We explore and compare various representations and architectures for anomalous trajectory detection under supervised and unsupervised settings, and evaluate their performance with three metrics on two datasets.
- We further demonstrate the algorithms’ generalization ability to detect unseen patterns of anomalies and provide a detailed study analyzing the effectiveness of the different modules in our methods.

II Background

II-A Anomalous and Adversarial Trajectories

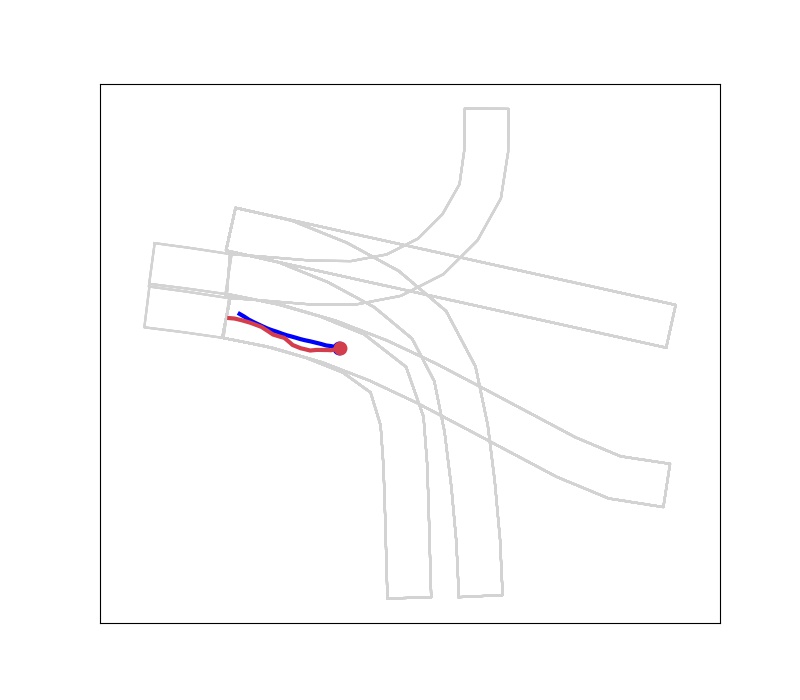

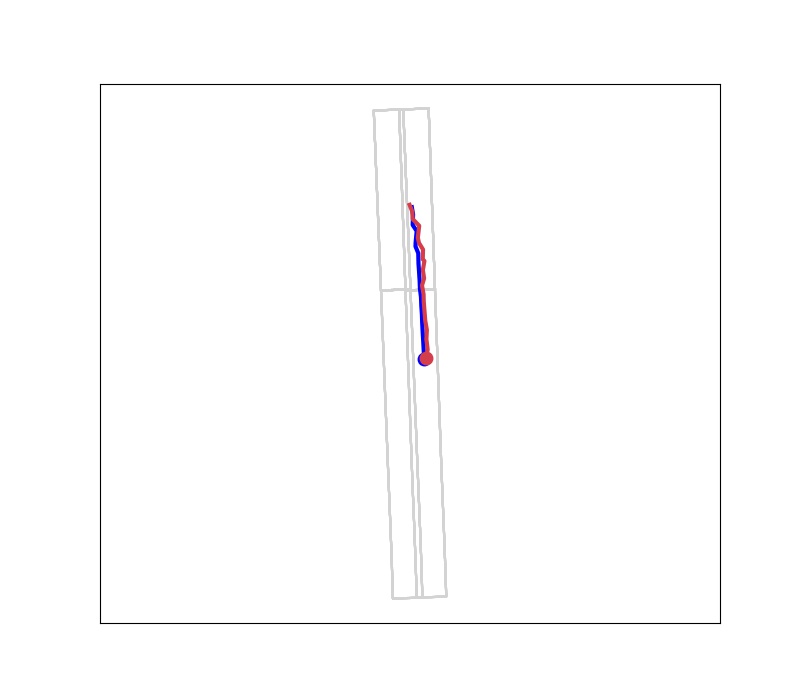

Recent work [5] shows that the trajectory prediction module in autonomous driving pipelines can be easily misled by adversarial (history) trajectories of a surrounding vehicle. In a white-box setting, an anomalous trajectory is optimized with Projected Gradient Descent (PGD) [10]. We consider two patterns of anomalous trajectories: random anomalies and directional anomalies. As shown in Fig. 1, both kinds of anomalous trajectories can effectively interfere with the prediction module and may lead to dangerous scenarios. A random anomaly is a generated trajectory that maximizes the average root mean squared error between the predicted and ground-truth trajectory waypoints. A directional anomaly is crafted to deliberately mislead the prediction of the surrounding vehicle’s future trajectory toward a wrong direction. In this work, we use the lateral directional anomaly as a targeted anomalous pattern and the random attack as a general anomalous pattern, so that we can evaluate anomaly detection algorithms comprehensively and study their generalization ability to previously unseen patterns of anomalous trajectories. The objective optimized by the targeted attack is given in Eq. (1):

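$$D_{\text{lat}} = \frac{1}{T}\sum_{t=1}^{T}\left(\hat{\mathbf{y}}_t - \mathbf{y}_t\right)^{\top}\mathcal{R}\left(\mathbf{y}_t - \mathbf{y}_{t-1}\right) \tag{1}$$

where $t$ denotes the time frame, $T$ is the number of predicted frames, $\mathcal{R}(\cdot)$ is a function that generates the unit vector in a specific direction (the lateral direction in our setting, i.e., perpendicular to the ground-truth heading $\mathbf{y}_t - \mathbf{y}_{t-1}$, with $\mathbf{y}_0$ the last observed location), and $\hat{\mathbf{y}}_t$ and $\mathbf{y}_t$ are vectors denoting the predicted and ground-truth vehicle locations, respectively. The targeted attack perturbs the history trajectory to maximize $D_{\text{lat}}$.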
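The two attack objectives and the PGD loop can be illustrated with a minimal NumPy sketch. It assumes that the lateral unit vector is the ground-truth heading rotated by +90° (toward the left) and normalized, and it swaps in a hypothetical constant-velocity predictor for the learned prediction model so that the gradient of the lateral objective can be written analytically. All function names and the step sizes `eps`, `alpha`, and `steps` are illustrative, not values from [5] or [10].

```python
import numpy as np


def lateral_unit_vectors(gt):
    """Left-pointing unit vectors perpendicular to the ground-truth heading.

    gt: (T + 1, 2) ground-truth positions; gt[0] is the last observed position,
    so the heading at future step t is gt[t] - gt[t - 1] (assumed convention).
    """
    heading = gt[1:] - gt[:-1]
    left = np.stack([-heading[:, 1], heading[:, 0]], axis=1)  # rotate by +90 degrees
    return left / np.maximum(np.linalg.norm(left, axis=1, keepdims=True), 1e-9)


def lateral_deviation(pred, gt):
    """Mean projection of the prediction error onto the lateral direction."""
    err = pred - gt[1:]
    return float(np.mean(np.sum(err * lateral_unit_vectors(gt), axis=1)))


def rmse(pred, gt):
    """Random-attack objective: RMSE between predicted and ground-truth waypoints."""
    return float(np.sqrt(np.mean(np.sum((pred - gt[1:]) ** 2, axis=1))))


def constant_velocity_predict(history, horizon):
    """Hypothetical stand-in predictor: extrapolate the last observed velocity."""
    k = np.arange(1, horizon + 1)[:, None]
    return history[-1] + k * (history[-1] - history[-2])


def cv_lateral_grad(history, gt):
    """Analytic gradient of lateral_deviation w.r.t. the history under the CV predictor."""
    horizon = gt.shape[0] - 1
    r = lateral_unit_vectors(gt)
    k = np.arange(1, horizon + 1)[:, None]
    grad = np.zeros_like(history)
    grad[-1] = np.sum((1 + k) * r, axis=0) / horizon  # pred_k depends on (1 + k) * h[-1]
    grad[-2] = -np.sum(k * r, axis=0) / horizon       # ... and on -k * h[-2]
    return grad


def pgd_attack(history, grad_fn, eps=0.5, alpha=0.1, steps=10):
    """L-infinity PGD ascent: signed gradient step, then projection onto the eps-ball."""
    x = history.copy()
    for _ in range(steps):
        x = x + alpha * np.sign(grad_fn(x))
        x = np.clip(x, history - eps, history + eps)
    return x


# A straight drive along the x-axis: 20 history waypoints and 30 future waypoints.
history = np.stack([np.arange(20.0), np.zeros(20)], axis=1)
gt = np.stack([19.0 + np.arange(31.0), np.zeros(31)], axis=1)
adv = pgd_attack(history, lambda h: cv_lateral_grad(h, gt))
print(lateral_deviation(constant_velocity_predict(history, 30), gt))  # 0.0
print(lateral_deviation(constant_velocity_predict(adv, 30), gt))      # 16.0
```

With a learned predictor, `grad_fn` would come from automatic differentiation instead of the closed-form gradient used here.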

II-B Anomaly Detection

Anomaly detection refers to the problem of finding patterns in data that do not conform to expected behavior [11]. Supervised, unsupervised, and semi-supervised approaches have been applied to anomaly detection in different scenarios.

Supervised approaches generally perform better on classification tasks, but they require prior knowledge of both normal and anomalous samples. KNN-based methods [12, 13] capture nominal data patterns from the local neighborhoods of nominal data points, and anomalous instances are expected to lie farther away from these patterns. Support vector machines (SVMs) and neural networks are commonly used to project the input into a feature space in which anomalies can be separated from normal data. Other methods, such as Bayesian networks [14] and inverse reinforcement learning [15], are also effective for supervised anomaly detection.
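As an illustration of the distance-based idea behind KNN detectors, the sketch below scores each test point by its distance to the k-th nearest nominal training point, in the spirit of [12]. It is a brute-force NumPy sketch; `knn_anomaly_scores` and `k=3` are illustrative choices, not settings from this work.

```python
import numpy as np


def knn_anomaly_scores(nominal, test, k=3):
    """Distance from each test point to its k-th nearest nominal point (larger = more anomalous).

    nominal: (n_train, d) nominal samples; test: (n_test, d). Brute force, O(n_test * n_train).
    """
    dists = np.linalg.norm(test[:, None, :] - nominal[None, :, :], axis=2)
    return np.sort(dists, axis=1)[:, k - 1]


rng = np.random.default_rng(0)
nominal = rng.normal(0.0, 0.1, size=(200, 2))  # tight nominal cluster around the origin
test = np.array([[0.0, 0.0], [3.0, 4.0]])
print(knn_anomaly_scores(nominal, test))       # first score small, second close to 5
```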

When labels of anomalies are limited or even unavailable, semi-supervised [16] and unsupervised methods must be used. Reconstruction-based methods assume that anomalies are not compressible and thus cannot be accurately reconstructed from low-dimensional projections [17]. In recent works, deep generative models, such as variational autoencoders (VAEs) [18, 19, 20], generative adversarial networks [21, 22], and adversarial autoencoders [23], have been introduced to perform reconstruction-based anomaly detection. One-class classification methods, including the one-class SVM (OC-SVM) [24, 25] and the one-class neural network (OCNN) [26], are designed to learn a discriminative boundary surrounding the normal samples.
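The reconstruction assumption can be made concrete with the simplest linear case: project onto the top principal components of normal data and score samples by their reconstruction error. This NumPy sketch uses PCA purely for illustration; the deep generative models cited above replace the linear projection with learned encoders and decoders.

```python
import numpy as np


def fit_pca(normal, n_components):
    """Mean and top principal directions of the normal training data."""
    mean = normal.mean(axis=0)
    _, _, vt = np.linalg.svd(normal - mean, full_matrices=False)
    return mean, vt[:n_components]


def reconstruction_error(x, mean, components):
    """Squared error after projecting onto, and back from, the low-dimensional subspace."""
    codes = (x - mean) @ components.T
    x_hat = codes @ components + mean
    return np.sum((x - x_hat) ** 2, axis=1)


# Normal data lie on the line y = 2x; a point off that line is hard to reconstruct.
t = np.linspace(-1.0, 1.0, 50)[:, None]
normal = t * np.array([1.0, 2.0])
mean, comps = fit_pca(normal, n_components=1)
print(reconstruction_error(np.array([[1.0, 2.0], [2.0, -1.0]]), mean, comps))  # about [0, 5]
```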

II-C Contrastive Learning

Contrastive learning [27] learns representations by contrasting positive pairs against negative pairs. Generally, augmented versions of the same original sample are regarded as positive pairs, and a memory bank is used to stabilize the learning process. Recent works show that contrastive learning can benefit representation learning significantly, and there have also been advances in using the idea of contrastive learning to enhance anomaly detection. For instance, [28] proposes TS2Vec, an unsupervised method for learning representations of time series. TS2Vec captures contextual representations by leveraging both instance-wise and temporal contrastive losses, and it shows strong performance in time-series anomaly detection. In a supervised setting, [29] demonstrates that the intermediate features of anomalous and normal data can be treated as negative pairs to help learn an effective contrastive representation.
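The margin-based pairwise loss of [27] is a compact example of contrasting positive against negative pairs: similar pairs are pulled together, and dissimilar pairs are pushed apart until their distance $D$ exceeds a margin $m$, giving $\tfrac{1}{2}D^2$ for positives and $\tfrac{1}{2}\max(0, m - D)^2$ for negatives. A NumPy sketch of that loss, with an illustrative margin value:

```python
import numpy as np


def pairwise_contrastive_loss(z1, z2, dissimilar, margin=1.0):
    """Margin-based contrastive loss of Hadsell et al. (2006) for a batch of pairs.

    z1, z2: (B, d) embeddings; dissimilar: (B,) booleans (True for a negative pair).
    """
    d = np.linalg.norm(z1 - z2, axis=1)
    pull = 0.5 * d ** 2                            # positive pairs: shrink the distance
    push = 0.5 * np.maximum(0.0, margin - d) ** 2  # negative pairs: enforce the margin
    return np.where(dissimilar, push, pull)


z1 = np.zeros((3, 2))
z2 = np.array([[0.6, 0.8], [0.6, 0.8], [0.3, 0.4]])  # distances 1.0, 1.0, 0.5
print(pairwise_contrastive_loss(z1, z2, np.array([False, True, True]), margin=2.0))
# approximately [0.5, 0.5, 1.125]
```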

II-D Adversarial Autoencoder

The variational autoencoder (VAE) [30] provides a principled method for jointly learning deep latent-variable models and corresponding inference models using stochastic gradient descent [31], and it is commonly used to generate samples in the target space from a pre-defined latent distribution. Training a VAE involves two losses: regularization and reconstruction. The regularization term uses the Kullback-Leibler (KL) divergence to encode the input as a distribution over the latent space, while the reconstruction term decodes the latent variables back to the target or original space. In contrast to VAE, which relies on the KL divergence and the evidence lower bound, the adversarial autoencoder (AAE) [32] uses adversarial learning to impose a specific distribution on the latent variables, which makes it better than VAE at imposing complicated distributions and shaping the latent space. In our work, we use an AAE architecture to model the semantics of the driving scenario.
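The difference between the two regularizers can be sketched in NumPy: a Gaussian VAE uses the closed-form KL divergence to a standard normal prior, whereas an AAE replaces it with a discriminator that separates prior samples from encoder outputs. In this sketch the discriminator outputs are passed in as probabilities; in practice they would come from a trained network, which is not shown, and the function names are illustrative.

```python
import numpy as np


def gaussian_kl(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + logvar - mu ** 2 - np.exp(logvar), axis=-1)


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps so that gradients can flow through mu and logvar."""
    return mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)


def aae_losses(d_prior, d_encoded, eps=1e-7):
    """Binary cross-entropy losses of an AAE given discriminator probabilities.

    d_prior: D(z) for samples from the target prior; d_encoded: D(z) for encoder outputs.
    Returns (discriminator loss, encoder/generator loss).
    """
    d_prior = np.clip(d_prior, eps, 1 - eps)
    d_encoded = np.clip(d_encoded, eps, 1 - eps)
    disc = -np.mean(np.log(d_prior)) - np.mean(np.log(1 - d_encoded))
    gen = -np.mean(np.log(d_encoded))  # encoder tries to make its codes look like prior samples
    return disc, gen


print(gaussian_kl(np.array([0.0, 1.0]), np.zeros(2)))  # 0.5: only the mean term contributes
print(aae_losses(np.full(4, 0.5), np.full(4, 0.5)))    # 2 log 2 and log 2 at chance level
```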

III Our Methods

III-A Feature Extractor

Anomalous trajectory detection is more complex than general time-series anomaly detection because it depends heavily on rich contexts, such as the road map and the behavior of surrounding vehicles. We therefore first feed the map information and the vehicle trajectories into a feature extractor. Similar to [2], we apply a one-dimensional convolutional network to model history trajectories and use graph neural networks to represent map contexts and interactions between agents. During training, we first train the feature extractor in a trajectory prediction pipeline and then keep it fixed for the anomaly detection task.
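To make the trajectory branch more concrete, here is a NumPy sketch of a small 1D convolutional encoder over a 20-waypoint history. It is only in the spirit of [2]: the layer count, kernel size, channel widths, the use of displacements, and temporal max-pooling are assumptions chosen for illustration, and the map and interaction graph branch is omitted.

```python
import numpy as np


def conv1d_valid(x, w, b):
    """'Valid' 1D convolution (cross-correlation).

    x: (L, C_in) sequence, w: (K, C_in, C_out) kernel, b: (C_out,) bias -> (L - K + 1, C_out).
    """
    k = w.shape[0]
    windows = np.stack([x[i:i + k] for i in range(x.shape[0] - k + 1)])  # (L-K+1, K, C_in)
    return np.einsum("lkc,kco->lo", windows, w) + b


def encode_history(waypoints, layers):
    """Encode (T_h, 2) waypoints into a fixed-size vector.

    Displacements make the feature invariant to the absolute position of the vehicle.
    """
    h = np.diff(waypoints, axis=0)                  # (T_h - 1, 2)
    for w, b in layers:
        h = np.maximum(conv1d_valid(h, w, b), 0.0)  # convolution + ReLU
    return h.max(axis=0)                            # temporal max-pooling


rng = np.random.default_rng(0)
layers = [
    (rng.normal(0, 0.5, (3, 2, 32)), np.zeros(32)),
    (rng.normal(0, 0.2, (3, 32, 128)), np.zeros(128)),
]
traj = np.cumsum(rng.normal(1.0, 0.1, (20, 2)), axis=0)  # a hypothetical 20-waypoint history
feat = encode_history(traj, layers)
print(feat.shape)  # (128,)
```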

III-B Supervised Contrastive Learning-based Method

As shown in Fig. 2, after the feature extractor outputs a representation combining vehicle trajectories and contexts, we further develop a contrastive learning (CL)-based encoder to obtain a compact representation for anomalous trajectory detection. Unlike instance-wise contrastive learning, the proposed method contrasts the patterns of two different classes. This CL-based method is supervised because the negative pairs are built by pairing anomalous and normal data. The CL-based encoder is designed to maximize the similarity between benign scenarios and minimize the similarity between benign and anomalous scenarios. Finally, a simple binary classifier on the encoded representations decides whether the input is an anomalous scenario.

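In every training mini-batch, we have $M$ scenarios containing anomalous trajectories and $N$ normal scenarios; pairs of two normal scenarios form positive pairs, and pairs of a normal and an anomalous scenario form negative pairs, as illustrated in Fig. 2. We use the inner product of two L2-normalized encoded feature vectors as their cosine similarity, and we set a temperature $\tau$ to control the concentration of the samples’ distribution [33]. More negative pairs generally improve the learned representation, but computing and optimizing a softmax over all possible negative pairs is expensive. To fully utilize the labeled data while keeping the model efficient, we apply the idea of Noise Contrastive Estimation (NCE) [34] to the optimization. We use an $(M+1)$-way softmax classifier (one way for a given positive pair and $M$ ways for negative pairs) to learn a 32-dimensional representation. The loss function is shown in Eq. (2), where $\mathbf{z}^{n}$ and $\mathbf{z}^{a}$ stand for the feature vectors of normal and anomalous scenarios, respectively. We then define the overall loss function for a mini-batch in Eq. (3):

$$\ell\left(\mathbf{z}^{n}_{i}, \mathbf{z}^{n}_{j}\right) = -\log \frac{\exp\left(\mathbf{z}^{n}_{i} \cdot \mathbf{z}^{n}_{j} / \tau\right)}{\exp\left(\mathbf{z}^{n}_{i} \cdot \mathbf{z}^{n}_{j} / \tau\right) + \sum_{k=1}^{M} \exp\left(\mathbf{z}^{n}_{i} \cdot \mathbf{z}^{a}_{k} / \tau\right)} \tag{2}$$

$$\mathcal{L}_{\text{CL}} = \frac{1}{N(N-1)} \sum_{i=1}^{N} \sum_{j \neq i} \ell\left(\mathbf{z}^{n}_{i}, \mathbf{z}^{n}_{j}\right) \tag{3}$$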
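A minimal NumPy sketch of an $(M+1)$-way softmax contrastive objective of this kind, assuming a batch with $N$ normal and $M$ anomalous encoded scenarios: features are L2-normalized so that inner products are cosine similarities, every ordered pair of distinct normal scenarios is treated as one positive, all $M$ anomalous scenarios in the batch act as its negatives, and the per-pair losses are averaged. The temperature value and function names are illustrative assumptions.

```python
import numpy as np


def l2_normalize(z):
    return z / np.linalg.norm(z, axis=1, keepdims=True)


def supervised_nce_loss(z_normal, z_anomalous, tau=0.1):
    """Mean (M+1)-way softmax cross-entropy over ordered normal-normal positive pairs.

    z_normal: (N, d) encoded normal scenarios; z_anomalous: (M, d) encoded anomalous ones.
    """
    zn, za = l2_normalize(z_normal), l2_normalize(z_anomalous)
    n = zn.shape[0]
    pos = zn @ zn.T / tau  # (N, N) positive logits
    neg = zn @ za.T / tau  # (N, M) negative logits
    total = 0.0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            logits = np.concatenate(([pos[i, j]], neg[i]))  # index 0 is the positive
            m = logits.max()                                 # for numerical stability
            log_z = m + np.log(np.sum(np.exp(logits - m)))
            total += log_z - logits[0]                       # -log softmax of the positive
    return total / (n * (n - 1))


z_n = np.array([[1.0, 0.0], [1.0, 0.0]])
z_a = np.array([[0.0, 1.0]])
print(supervised_nce_loss(z_n, z_a, tau=1.0))  # log(1 + e^-1), about 0.3133
```

The loss shrinks as normal embeddings cluster together and move away from anomalous ones, which is the separation the SVM classifier later exploits.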

At test time, we add a classifier after the CL-based encoder to produce the detection result from the 32-dimensional vector. One could instead threshold the distance between a test sample and the mean normal vector to distinguish anomalies from normal data, but in this work we apply an SVM classifier to all kinds of representations so that their results can be compared fairly. The overall pipeline is shown in Algorithm 1.

III-C Unsupervised Method using Reconstruction with Semantic Latent Space

In real road scenes, it may be difficult to obtain valid negative labels for training because prior knowledge of surrounding vehicles’ potential anomalous trajectories is hard to get, which motivates us to explore unsupervised detection algorithms. Unsupervised methods aim to learn the representation underlying normal driving scenarios and then detect unseen patterns of anomalous trajectories at runtime. Most previous works directly use VAE-based reconstruction, one-class SVM, or (unsupervised) contrastive learning to detect anomalies in time-series data. For vehicle trajectories, however, we can exploit additional contexts and domain knowledge to enhance unsupervised anomaly detection. In this work, we propose an unsupervised detection method based on the adversarial autoencoder architecture and semantics modeling in the latent space. The encoder takes the 128-dimensional features from the extractor as inputs and projects them into a low-dimensional latent space divided into three separate parts: a three-dimensional vector representing lateral intention, a one-dimensional vector representing longitudinal aggressiveness, and a six-dimensional vector for the remaining latent factors. This is where we introduce domain knowledge into latent space modeling. We use time headway, which measures the time difference between two successive vehicles passing a given point, as the longitudinal feature, and we assume that it follows a log-normal distribution based on statistics from urban transportation systems [4, 35]. The lateral intention is modeled by three simple but reasonable classes that follow a categorical distribution: moving forward, turning/changing lanes to the left, and turning/changing lanes to the right. All of this semantic information can be collected from benign input trajectories, and no knowledge of anomalies is required. The remaining latent variables are assumed to follow Gaussian distributions.
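The semantic references can be computed from benign trajectories alone. The NumPy sketch below makes several assumptions: the headway uses 1D longitudinal positions along a shared path, lateral intention is classified by the final lateral offset relative to the initial heading (so that both turns and lane changes are captured), and the log-normal parameters are fit by maximum likelihood in log space. The 1 m offset threshold and all function names are hypothetical, not values from this work.

```python
import numpy as np

LATERAL_CLASSES = ("forward", "left", "right")


def lateral_intention(traj, offset_threshold=1.0):
    """One-hot lateral intention from a (T, 2) trajectory (hypothetical rule)."""
    heading = traj[1] - traj[0]
    heading = heading / np.linalg.norm(heading)
    left_normal = np.array([-heading[1], heading[0]])
    offset = float((traj[-1] - traj[0]) @ left_normal)  # > 0 means displaced to the left
    if offset > offset_threshold:
        idx = 1
    elif offset < -offset_threshold:
        idx = 2
    else:
        idx = 0
    return np.eye(3)[idx]


def time_headway(t, s_leader, s_follower, s_point):
    """Time between the leader and the follower passing longitudinal position s_point.

    t: (T,) timestamps; s_leader, s_follower: (T,) increasing positions along the same path.
    """
    t_leader = np.interp(s_point, s_leader, t)
    t_follower = np.interp(s_point, s_follower, t)
    return float(t_follower - t_leader)


def fit_lognormal(headways):
    """Maximum-likelihood log-normal parameters (mu, sigma) of positive headways."""
    logs = np.log(headways)
    return float(logs.mean()), float(logs.std())


t = np.arange(0.0, 10.0, 0.1)
print(time_headway(t, 10.0 * t + 20.0, 10.0 * t, s_point=50.0))  # about 2.0 s
```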

To optimize the latent space, we model both the overall distributions and the semantics of a single vehicle’s trajectory. We apply the adversarial autoencoder architecture to regularize the distributions of the latent space. Specifically, for each latent vector, a discriminator is trained to distinguish the latent vectors generated by the encoder from samples drawn from the real target distribution (log-normal, categorical, or Gaussian). At the same time, we use behavior information, such as the values of time headway and lateral intention, to further endow the latent vectors with specific semantics. In this way, the model further disentangles the latent space and embeds domain knowledge into it. The semantic representation benefits both unsupervised and supervised anomalous trajectory detection. The loss for semantic latent space modeling is shown below in Eq. (4):

| (4) |

where $\hat{\mathbf{s}}$ represents the predicted semantic vectors and $\mathbf{s}$ represents the reference semantics collected from the input trajectory.
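The following NumPy sketch illustrates, under explicit assumptions, the two ingredients described above: sampling "real" latent vectors from the three target priors for the discriminators (a uniform categorical prior and hypothetical log-normal parameters), and one plausible semantic supervision term, namely cross-entropy on lateral intention plus squared error on log time headway. Neither the prior parameters nor this loss form are taken from this work, and it is not necessarily the form of Eq. (4).

```python
import numpy as np


def sample_latent_priors(batch, rng, headway_mu=0.5, headway_sigma=0.4, n_rest=6):
    """Draw 'real' samples for the three discriminators (parameter values are hypothetical).

    Returns a (batch, 3) one-hot lateral intention (uniform categorical prior),
    a (batch, 1) log-normal time headway, and a (batch, n_rest) standard Gaussian vector.
    """
    lateral = np.eye(3)[rng.integers(0, 3, size=batch)]
    headway = rng.lognormal(mean=headway_mu, sigma=headway_sigma, size=(batch, 1))
    rest = rng.standard_normal((batch, n_rest))
    return lateral, headway, rest


def semantic_loss(lateral_logits, headway_pred, lateral_ref, headway_ref):
    """Hypothetical semantic supervision: CE on lateral intention + MSE on log headway."""
    shifted = lateral_logits - lateral_logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.sum(np.exp(shifted), axis=1, keepdims=True))
    ce = -np.mean(np.sum(lateral_ref * log_probs, axis=1))
    mse = np.mean((np.log(headway_pred) - np.log(headway_ref)) ** 2)
    return float(ce + mse)


rng = np.random.default_rng(0)
lat, hw, rest = sample_latent_priors(4, rng)
print(lat.shape, hw.shape, rest.shape)              # (4, 3) (4, 1) (4, 6)
print(semantic_loss(np.zeros((4, 3)), hw, lat, hw))  # log(3): uniform logits, exact headway
```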

The overall pipeline for our unsupervised method is shown in Fig. 3. In addition to the semantic latent space modeling, we use a decoder to reconstruct the input trajectories with a smooth L1 loss as shown below in Eq. (5):

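$$\mathcal{L}_{\text{rec}} = \frac{1}{2T_h} \sum_{t=1}^{T_h} \sum_{d=1}^{2} \operatorname{smooth}_{L_1}\left(x_{t,d} - \hat{x}_{t,d}\right), \qquad \operatorname{smooth}_{L_1}(u) = \begin{cases} 0.5\,u^{2}, & |u| < 1 \\ |u| - 0.5, & \text{otherwise} \end{cases} \tag{5}$$

where $\mathbf{X} = [x_{t,d}] \in \mathbb{R}^{T_h \times 2}$ and $\hat{\mathbf{X}} = [\hat{x}_{t,d}] \in \mathbb{R}^{T_h \times 2}$ represent the input and reconstructed trajectories, respectively, and $T_h$ is the number of history waypoints. Both the encoder and the decoder are optimized with the reconstruction loss. The overall optimization pipeline is shown in Algorithm 2.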
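A NumPy sketch of a smooth L1 reconstruction loss like the one in Eq. (5), averaged over waypoints and coordinates. The transition point `beta = 1` matches the standard smooth L1 definition; the function names are illustrative.

```python
import numpy as np


def smooth_l1(u, beta=1.0):
    """Element-wise smooth L1: quadratic below beta, linear above it."""
    a = np.abs(u)
    return np.where(a < beta, 0.5 * a ** 2 / beta, a - 0.5 * beta)


def reconstruction_loss(x, x_hat, beta=1.0):
    """Mean smooth L1 between (T_h, 2) input and reconstructed trajectories."""
    return float(np.mean(smooth_l1(x - x_hat, beta)))


print(smooth_l1(np.array([0.5, 2.0])))                                # values 0.125 and 1.5
print(reconstruction_loss(np.array([[0.5, 2.0]]), np.zeros((1, 2))))  # 0.8125
```

The quadratic region keeps gradients small for near-perfect reconstructions, while the linear region prevents a few badly reconstructed waypoints from dominating training.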

In the unsupervised setting, we use the error between the input trajectory and the reconstructed trajectory as the anomaly detection signal: an input is considered anomalous if its reconstruction error exceeds a threshold. The learned representation can also be used in a supervised setting by adding a common binary classifier after the latent space.
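The detection rule needs a threshold. One common choice, shown here as an assumption rather than the procedure used in this work, is to take a high quantile of reconstruction errors on held-out normal scenarios, which fixes the false-alarm rate on normal data at roughly the chosen level. The error distribution in the example is synthetic.

```python
import numpy as np


def calibrate_threshold(normal_val_errors, target_fpr=0.05):
    """Threshold such that about target_fpr of held-out normal errors exceed it."""
    return float(np.quantile(normal_val_errors, 1.0 - target_fpr))


def detect(errors, threshold):
    """Flag inputs whose reconstruction error exceeds the threshold as anomalous."""
    return np.asarray(errors) > threshold


rng = np.random.default_rng(0)
val_errors = rng.gamma(shape=2.0, scale=0.1, size=1000)  # hypothetical normal-data errors
thr = calibrate_threshold(val_errors)
print(np.mean(detect(val_errors, thr)))  # about 0.05 by construction
print(detect([0.05, 5.0], thr))          # first False, second True
```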

IV Experiments

In this section, we present the anomaly detection results of six methods under two patterns of anomalous scenarios. Each method is tested on two commonly used datasets with three different metrics to evaluate performance comprehensively, especially under imbalanced data distributions. The results show that the representation learned by our supervised contrastive learning method significantly improves detection performance. Moreover, the semantic latent space we construct effectively models the context and explicitly encodes driving behavior, enhancing anomaly detection in both supervised and unsupervised settings. We conduct a study to show to what extent ‘semantics’ and ‘contrast’ benefit representation learning for anomalous trajectory detection. In addition, we evaluate the generalization ability of the learned representations, which is critical for detecting unseen patterns of anomalies.

IV-A Experiment Setup

IV-A1 Data Collection

We conduct experiments with both random and directional anomalous trajectories on two datasets: Argoverse 1 [36] and Argoverse 2 [37]. The Argoverse 1 motion forecasting dataset contains more than 300K driving scenarios collected in Miami and Pittsburgh, while Argoverse 2 contains longer and more complicated driving scenarios from six cities. Each scenario used in this work consists of a road graph and the trajectories of multiple agents. The history trajectories consist of 20 waypoints collected over the past 2 seconds.

To collect anomalous trajectories, we apply the attack methods described in Sec. II-A to generate different patterns of anomalies. For lateral directional anomalies, we label a past trajectory as anomalous if it leads to a prediction error of more than 1.5 meters in the lateral direction. For random anomalies, the threshold is set to an average displacement error (ADE) of 5 meters.
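The labeling rule can be sketched as follows. This NumPy sketch assumes that the lateral error is the mean signed projection of the prediction error onto the left-perpendicular of the ground-truth heading (toward the attacked side), and that ADE is computed between the prediction made from the perturbed history and the ground-truth future; `label_anomaly` and its arguments are hypothetical names.

```python
import numpy as np


def ade(pred, gt_future):
    """Average displacement error over future waypoints (meters)."""
    return float(np.mean(np.linalg.norm(pred - gt_future, axis=1)))


def mean_lateral_error(pred, gt_future, last_obs, side=1.0):
    """Mean signed lateral error; side=+1 measures deviation to the left of the heading."""
    path = np.vstack([last_obs, gt_future])
    heading = np.diff(path, axis=0)
    left = np.stack([-heading[:, 1], heading[:, 0]], axis=1)
    left /= np.maximum(np.linalg.norm(left, axis=1, keepdims=True), 1e-9)
    return side * float(np.mean(np.sum((pred - gt_future) * left, axis=1)))


def label_anomaly(pred, gt_future, last_obs, pattern):
    """Apply the thresholds of Sec. IV-A1: 1.5 m lateral error or 5 m ADE."""
    if pattern == "lateral":
        return mean_lateral_error(pred, gt_future, last_obs) > 1.5
    if pattern == "random":
        return ade(pred, gt_future) > 5.0
    raise ValueError(f"unknown anomaly pattern: {pattern}")


gt_future = np.stack([np.arange(1.0, 31.0), np.zeros(30)], axis=1)
last_obs = np.array([0.0, 0.0])
shifted = gt_future + np.array([0.0, 2.0])  # prediction pushed 2 m to the left
print(label_anomaly(shifted, gt_future, last_obs, "lateral"))  # True
print(label_anomaly(shifted, gt_future, last_obs, "random"))   # False (ADE = 2 m)
```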

IV-A2 Evaluation Metrics

We use three metrics to evaluate anomaly detection performance: ROC AUC (area under the receiver operating characteristic curve), PR AUC (area under the precision-recall curve), and F1 score. ROC AUC is a general metric that evaluates binary classification ability across all classification thresholds, but it can be overly optimistic on severely imbalanced classification problems. For the imbalanced datasets in anomaly detection, PR AUC is more informative because both precision and recall focus on the positive (anomaly) class and do not depend on the number of true negatives in the majority class. The F1 score is the harmonic mean of precision and recall. In anomaly detection, recall (detected anomalies over all anomalies) is expected to be high, so we fix the operating point at a recall of 0.8 and report the corresponding F1 score.
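The three metrics can be computed with a short NumPy sketch. ROC AUC uses the Mann-Whitney formulation (ties count one half), PR AUC is estimated by average precision, and the F1 score is taken at the first score cut-off whose recall reaches 0.8. These estimator choices are ours for illustration; library implementations such as scikit-learn's may differ slightly in tie handling and interpolation.

```python
import numpy as np


def roc_auc(y, s):
    """Probability that a random anomaly scores above a random normal sample (ties count 0.5)."""
    pos, neg = s[y == 1], s[y == 0]
    greater = np.mean(pos[:, None] > neg[None, :])
    ties = np.mean(pos[:, None] == neg[None, :])
    return float(greater + 0.5 * ties)


def _ranked(y, s):
    """Labels sorted by decreasing score and cumulative true positives."""
    y_sorted = y[np.argsort(-s, kind="stable")]
    return y_sorted, np.cumsum(y_sorted)


def average_precision(y, s):
    """PR AUC estimate: mean precision at the rank of each anomaly."""
    y_sorted, tp = _ranked(y, s)
    precision = tp / np.arange(1, len(y) + 1)
    return float(np.sum(precision * y_sorted) / y_sorted.sum())


def f1_at_recall(y, s, target_recall=0.8):
    """F1 at the first cut-off (in decreasing score order) whose recall reaches the target."""
    _, tp = _ranked(y, s)
    recall = tp / y.sum()
    k = int(np.argmax(recall >= target_recall))
    precision = tp[k] / (k + 1)
    return float(2 * precision * recall[k] / (precision + recall[k]))


y = np.array([1, 1, 1, 1, 1, 0, 0, 0, 0, 0])
s = np.array([0.9, 0.8, 0.7, 0.6, 0.2, 0.5, 0.4, 0.3, 0.1, 0.05])
print(roc_auc(y, s), average_precision(y, s), f1_at_recall(y, s))  # about 0.88, 0.925, 0.889
```

On this example the late-ranked anomaly (score 0.2) lowers PR AUC less than one might expect, but with many more normal samples each false positive above it would pull precision, and hence PR AUC, down much faster than ROC AUC.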

IV-B Effectiveness of Our CL-based Supervised Method

In the supervised setting, labels of anomalous driving scenarios are available. We use an SVM as a fixed classifier to compare different learned representations. The naive SVM method builds an SVM directly on the acceleration series of the input trajectories. For the methods with a semantic latent space and a contrastive learning encoder, we use the 128-dimensional feature produced by the feature extractor as input. The results in Tables I and II show that our contrastive learning-based supervised method greatly outperforms the other supervised methods on both directional and random anomaly patterns. Compared to the method using the semantic latent space (‘Semantics + SVM’), our CL-based supervised method (‘Sup-CL + SVM’) effectively models the distributions of normal and abnormal trajectories and separates them in the CL-based representation space, making it easy for a simple SVM classifier to detect anomalies. The results of the naive SVM (‘Naive SVM’) show that it is difficult to distinguish anomalies from normal data directly in the trajectory space, even with sufficient labels.
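For reference, the input of the naive SVM baseline can be sketched as finite-difference accelerations of the history waypoints. This NumPy sketch assumes a 0.1 s sampling interval (20 waypoints over 2 s) and flattens the x and y accelerations into one vector; this feature layout is an assumption.

```python
import numpy as np


def acceleration_series(waypoints, dt=0.1):
    """Second-order finite differences: (T_h, 2) positions -> (T_h - 2, 2) accelerations."""
    velocity = np.diff(waypoints, axis=0) / dt
    return np.diff(velocity, axis=0) / dt


def naive_svm_features(waypoints, dt=0.1):
    """Flattened acceleration series used as a fixed-length SVM input (assumed layout)."""
    return acceleration_series(waypoints, dt).reshape(-1)


t = np.arange(20) * 0.1
traj = np.stack([t ** 2, 3.0 * t], axis=1)  # x: constant 2 m/s^2, y: constant velocity
feats = naive_svm_features(traj)
print(feats.shape)                           # (36,)
print(acceleration_series(traj)[:2])         # rows close to [2, 0]
```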

IV-C Effectiveness of Our Unsupervised Method with Semantic Reconstruction

In the unsupervised setting, without prior knowledge of anomaly patterns, it is more difficult to learn an informative representation. We use the one-class SVM on the trajectory space [24] and a state-of-the-art unsupervised contrastive learning method, TS2Vec [28], as baselines. As shown in Tables III and IV, the one-class SVM on the trajectory space (‘OC-SVM’) and the unsupervised contrastive learning method (‘Unsup-CL + OC-SVM’) perform relatively poorly and can hardly detect the anomalous trajectories. Our unsupervised method with semantic reconstruction (‘Semantic Recon’) performs much better than the other unsupervised methods on every metric. In addition, when detecting random anomalous trajectories, our unsupervised method performs close to its supervised counterpart, which further demonstrates the effectiveness of our semantic latent representation. The ROC curves of all supervised and unsupervised methods are shown in Fig. 4.

IV-D Effectiveness of Components in Our Proposed Methods

We conduct further experiments to study to what extent the different components of our methods contribute to the overall performance improvement. In this study, all representations are tested in a supervised manner for comparison. We evaluate how much the feature extractor (which embeds the contexts) and the subsequent encoder (semantics- or CL-based) each contribute to the performance. ‘NN + SVM’ denotes directly adding an SVM classifier after the GNN-based feature extractor. Table V shows that using the representation directly from the feature extractor yields much poorer detection performance than our CL-based representation (‘Sup-CL + SVM’). The latent space modeling methods (‘Semantics + SVM’ and ‘Naive Latent + SVM’) also outperform the pure feature extractor (‘NN + SVM’). Moreover, Table VI reveals that our semantic latent space modeling (‘Semantics + SVM’) significantly improves the generalization ability to unseen patterns of anomalies compared to naive latent space modeling without any semantics (‘Naive Latent + SVM’) and the pure feature extractor (‘NN + SVM’).

| Methods | Anomaly Pattern | F1 score | ROC AUC | PR AUC |
|---|---|---|---|---|
| NN + SVM | Random | | | |
| Naive Latent + SVM | Random | | | |
| Semantics + SVM | Random | | | |
| Sup-CL + SVM | Random | | | |

| Methods | Anomaly Pattern | F1 score | ROC AUC | PR AUC |
|---|---|---|---|---|
| NN + SVM | Random | | | |
| Naive Latent + SVM | Random | | | |
| Semantics + SVM | Random | | | |
| Sup-CL + SVM | Random | | | |

IV-E Evaluation on Generalization Ability

In the supervised setting, one critical question is how well a representation learned from normal samples and one particular pattern of anomalies generalizes to other, unseen patterns of anomalies. Fig. 5 shows the results when the supervised methods are trained and tested on different patterns of anomalies. Compared with Fig. 4, we find that lateral directional anomalies are relatively easy to detect, even when the models are trained on the other kind of anomaly. However, when the models are trained on lateral directional anomalies but tested on random anomalous trajectories, the performance of the supervised methods drops significantly, which reveals overfitting and a lack of generalization ability, although the supervised CL-based method remains the best. In this setting, the unsupervised reconstruction with the semantic latent space even outperforms its supervised counterpart. Table VI further shows that our semantics modeling helps learn a more generalizable representation and mitigates overfitting to a particular pattern of anomalies, compared to the naive latent space.

V Conclusions

In this work, we propose a novel contrastive learning-based supervised method and a semantic reconstruction-based unsupervised method for anomalous vehicle trajectory detection. We embed both driving contexts and the distributions underlying normal (and anomalous) trajectories into the representation through these methods. Extensive experiments demonstrate that our methods significantly improve detection performance over baseline methods in the supervised and unsupervised settings, respectively. We also demonstrate that our methods generalize better to unseen attack patterns, which is valuable for practical on-road applications.

References

- [1] Y. Liu, J. Zhang, L. Fang, Q. Jiang, and B. Zhou, “Multimodal motion prediction with stacked transformers,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 7577–7586.

- [2] M. Liang, B. Yang, R. Hu, Y. Chen, R. Liao, S. Feng, and R. Urtasun, “Learning lane graph representations for motion forecasting,” in European Conference on Computer Vision. Springer, 2020, pp. 541–556.

- [3] Y. Yuan, X. Weng, Y. Ou, and K. M. Kitani, “AgentFormer: Agent-aware transformers for socio-temporal multi-agent forecasting,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 9813–9823.

- [4] R. Jiao, X. Liu, B. Zheng, D. Liang, and Q. Zhu, “TAE: A semi-supervised controllable behavior-aware trajectory generator and predictor,” arXiv preprint arXiv:2203.01261, 2022.

- [5] Q. Zhang, S. Hu, J. Sun, Q. A. Chen, and Z. M. Mao, “On adversarial robustness of trajectory prediction for autonomous vehicles,” arXiv preprint arXiv:2201.05057, 2022.

- [6] R. Jiao, X. Liu, T. Sato, Q. A. Chen, and Q. Zhu, “Semi-supervised semantics-guided adversarial training for trajectory prediction,” arXiv preprint arXiv:2205.14230, 2022.

- [7] X. Liu, R. Jiao, B. Zheng, D. Liang, and Q. Zhu, “Neural network based interactive lane changing planner in dense traffic with safety guarantee,” arXiv preprint arXiv:2201.09112, 2022.

- [8] R. Jiao, H. Liang, T. Sato, J. Shen, Q. A. Chen, and Q. Zhu, “End-to-end uncertainty-based mitigation of adversarial attacks to automated lane centering,” in 2021 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2021, pp. 266–273.

- [9] Q. Zhu, C. Huang, R. Jiao, S. Lan, H. Liang, X. Liu, Y. Wang, Z. Wang, and S. Xu, “Safety-assured design and adaptation of learning-enabled autonomous systems,” in Proceedings of the 26th Asia and South Pacific Design Automation Conference, 2021, pp. 753–760.

- [10] A. Madry, A. Makelov, L. Schmidt, D. Tsipras, and A. Vladu, “Towards deep learning models resistant to adversarial attacks,” arXiv preprint arXiv:1706.06083, 2017.

- [11] V. Chandola, A. Banerjee, and V. Kumar, “Anomaly detection: A survey,” ACM computing surveys (CSUR), vol. 41, no. 3, pp. 1–58, 2009.

- [12] S. Ramaswamy, R. Rastogi, and K. Shim, “Efficient algorithms for mining outliers from large data sets,” in Proceedings of the 2000 ACM SIGMOD international conference on Management of data, 2000, pp. 427–438.

- [13] K. Doshi and Y. Yilmaz, “Online anomaly detection in surveillance videos with asymptotic bound on false alarm rate,” Pattern Recognition, vol. 114, p. 107865, 2021.

- [14] S. Mascaro, A. E. Nicholson, and K. B. Korb, “Anomaly detection in vessel tracks using Bayesian networks,” International Journal of Approximate Reasoning, vol. 55, no. 1, pp. 84–98, 2014.

- [15] D. Li, M. L. Shehab, Z. Liu, N. Aréchiga, J. DeCastro, and N. Ozay, “Outlier-robust inverse reinforcement learning and reward-based detection of anomalous driving behaviors,” in 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2022, pp. 4175–4182.

- [16] L. Ruff, R. A. Vandermeulen, N. Görnitz, A. Binder, E. Müller, K.-R. Müller, and M. Kloft, “Deep semi-supervised anomaly detection,” arXiv preprint arXiv:1906.02694, 2019.

- [17] T. Li, Z. Wang, S. Liu, and W.-Y. Lin, “Deep unsupervised anomaly detection,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2021, pp. 3636–3645.

- [18] J. Wiederer, A. Bouazizi, M. Troina, U. Kressel, and V. Belagiannis, “Anomaly detection in multi-agent trajectories for automated driving,” in Conference on Robot Learning. PMLR, 2022, pp. 1223–1233.

- [19] Y. Tang, L. Zhao, S. Zhang, C. Gong, G. Li, and J. Yang, “Integrating prediction and reconstruction for anomaly detection,” Pattern Recognition Letters, vol. 129, pp. 123–130, 2020.

- [20] D. Park, Y. Hoshi, and C. C. Kemp, “A multimodal anomaly detector for robot-assisted feeding using an LSTM-based variational autoencoder,” IEEE Robotics and Automation Letters, vol. 3, no. 3, pp. 1544–1551, 2018.

- [21] T. Li, M. Shang, S. Wang, M. Filippelli, and R. Stern, “Detecting stealthy cyberattacks on automated vehicles via generative adversarial networks,” in 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2022, pp. 3632–3637.

- [22] T. Schlegl, P. Seeböck, S. M. Waldstein, G. Langs, and U. Schmidt-Erfurth, “f-AnoGAN: Fast unsupervised anomaly detection with generative adversarial networks,” Medical image analysis, vol. 54, pp. 30–44, 2019.

- [23] S. Pidhorskyi, R. Almohsen, and G. Doretto, “Generative probabilistic novelty detection with adversarial autoencoders,” Advances in neural information processing systems, vol. 31, 2018.

- [24] S. M. Erfani, S. Rajasegarar, S. Karunasekera, and C. Leckie, “High-dimensional and large-scale anomaly detection using a linear one-class SVM with deep learning,” Pattern Recognition, vol. 58, pp. 121–134, 2016.

- [25] M. Amer, M. Goldstein, and S. Abdennadher, “Enhancing one-class support vector machines for unsupervised anomaly detection,” in Proceedings of the ACM SIGKDD workshop on outlier detection and description, 2013, pp. 8–15.

- [26] R. Chalapathy, A. K. Menon, and S. Chawla, “Anomaly detection using one-class neural networks,” arXiv preprint arXiv:1802.06360, 2018.

- [27] R. Hadsell, S. Chopra, and Y. LeCun, “Dimensionality reduction by learning an invariant mapping,” in 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), vol. 2. IEEE, 2006, pp. 1735–1742.

- [28] Z. Yue, Y. Wang, J. Duan, T. Yang, C. Huang, Y. Tong, and B. Xu, “TS2Vec: Towards universal representation of time series,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, no. 8, 2022, pp. 8980–8987.

- [29] O. Kopuklu, J. Zheng, H. Xu, and G. Rigoll, “Driver anomaly detection: A dataset and contrastive learning approach,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2021, pp. 91–100.

- [30] D. P. Kingma and M. Welling, “Auto-encoding variational bayes,” arXiv preprint arXiv:1312.6114, 2013.

- [31] D. P. Kingma and M. Welling, “An introduction to variational autoencoders,” arXiv preprint arXiv:1906.02691, 2019.

- [32] A. Makhzani, J. Shlens, N. Jaitly, I. Goodfellow, and B. Frey, “Adversarial autoencoders,” arXiv preprint arXiv:1511.05644, 2015.

- [33] G. Hinton, O. Vinyals, and J. Dean, “Distilling the knowledge in a neural network,” arXiv preprint arXiv:1503.02531, 2015.

- [34] M. Gutmann and A. Hyvärinen, “Noise-contrastive estimation: A new estimation principle for unnormalized statistical models,” in Proceedings of the thirteenth international conference on artificial intelligence and statistics. JMLR Workshop and Conference Proceedings, 2010, pp. 297–304.

- [35] D.-H. Ha, M. Aron, and S. Cohen, “Time headway variable and probabilistic modeling,” Transportation Research Part C: Emerging Technologies, vol. 25, pp. 181–201, 2012.

- [36] M.-F. Chang, J. Lambert, P. Sangkloy, J. Singh, S. Bak, A. Hartnett, D. Wang, P. Carr, S. Lucey, D. Ramanan, et al., “Argoverse: 3D tracking and forecasting with rich maps,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 8748–8757.

- [37] B. Wilson, W. Qi, T. Agarwal, J. Lambert, J. Singh, S. Khandelwal, B. Pan, R. Kumar, A. Hartnett, J. K. Pontes, et al., “Argoverse 2: Next generation datasets for self-driving perception and forecasting,” arXiv preprint arXiv:2301.00493, 2023.