Learning Rapid Turning, Aerial Reorientation, and Balancing using Manipulator as a Tail

Abstract

In this research, we investigated the innovative use of a manipulator as a tail in quadruped robots to augment their physical capabilities. Previous studies have primarily focused on enhancing various abilities by attaching robotic tails that function solely as tails on quadruped robots. While these tails improve the performance of the robots, they come with several disadvantages, such as increased overall weight and higher costs. To mitigate these limitations, we propose the use of a 6-DoF manipulator as a tail, allowing it to serve both as a tail and as a manipulator. To control this highly complex robot, we developed a controller based on reinforcement learning for the robot equipped with the manipulator. Our experimental results demonstrate that robots equipped with a manipulator outperform those without a manipulator in tasks such as rapid turning, aerial reorientation, and balancing. These results indicate that the manipulator can improve the agility and stability of quadruped robots, similar to a tail, in addition to its manipulation capabilities.

I INTRODUCTION

The tails of animals play crucial roles in enhancing their physical abilities, particularly in quadrupeds. For instance, cheetahs use their tails to change direction during high-speed chases, allowing them to catch prey more effectively [1]. Additionally, squirrels and lizards utilize their tails to adjust body orientation in mid-air, ensuring safe landings [2, 3]. Tails are also essential for balancing, swimming, hopping, courtship, and defense [4]. These versatile tails have garnered the attention of robotic engineers and have been established as solutions for addressing various challenges in the field of robotics.

Previous works have attached tails to quadruped robots to improve their stability and speed during turning and running [5, 6, 7, 8]. Some studies suggest that tails can aid the robot’s attitude control in aerial regions [9, 10, 11, 12]. There have also been attempts to improve the overall stability of robots by incorporating tails [13, 14]. These studies demonstrate that tails can enhance robotic performance in various tasks. However, adding a tail increases the complexity of the robot, leading to issues such as higher costs and increased weight. Consequently, the use of tails in practical applications may not always be efficient.

To alleviate these drawbacks and create a highly efficient tail capable of executing various tasks, we employ a manipulator as a tail rather than designing a tail solely for that purpose. Huang et al. [15] are also exploring the use of a manipulator as a tail. By employing a manipulator as a tail, it can simultaneously perform the roles of a gripper and a tail, thereby achieving high efficiency. In recent years, numerous studies have explored the use of manipulators in quadruped robots [16, 17, 18, 19]. The majority of these studies have focused on manipulators performing manipulation tasks. However, we have identified the potential for manipulators when utilized as tails.

Among various control methods, reinforcement learning (RL) has recently emerged as one of the most popular approaches for legged robots [20, 21, 22]. By using a deep reinforcement learning-based controller, many previous studies have successfully enabled high-complexity robots to execute complex behaviors [23, 24]. Inspired by these studies, we employ deep reinforcement learning (DRL) to develop a controller.

Beyond creating a robust controller, the primary consideration when incorporating a tail on a robot is whether its presence meaningfully enhances the robot’s overall performance. Our experiments demonstrate that a robot equipped with a manipulator outperforms one without a manipulator in tasks such as rapid turning, aerial reorientation, and balancing. Specifically, when a robot running at 4.5 m/s executed a turn, the distance pushed out by centrifugal force was reduced by two-thirds compared to a robot without a manipulator. Additionally, a robot with a manipulator was able to land safely from initial angles of to at heights of 1.5 to 2.25 meters, whereas a robot without a manipulator failed to land under the same conditions. Finally, when subjected to external forces, a robot with a manipulator exhibited a higher survival rate compared to one without a manipulator.

Overall, this paper demonstrates that a manipulator can be an appropriate choice as a quadruped robot’s tail, enhancing the capabilities of a quadruped robot. Our main contributions are as follows.

-

1.

We propose the utilization of a manipulator that functions as a tail.

-

2.

We outline a method for controlling a robot equipped with a tail using reinforcement learning.

-

3.

We demonstrate that a robot equipped with a manipulator exhibits improved performance in tasks such as rapid turning, aerial reorientation, and balancing.

II METHOD

II-A Overview

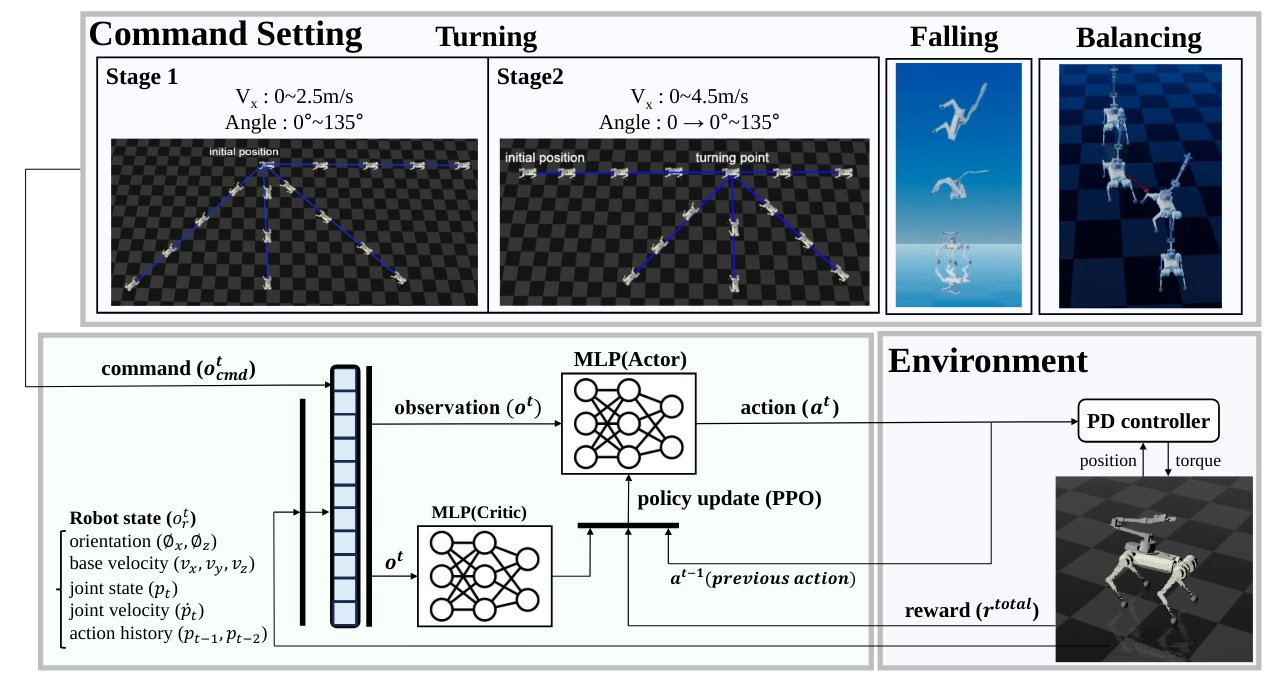

Our goal is to develop a controller for a manipulator-mounted quadruped robot that performs well on various tasks, including rapid turning, aerial reorientation for safe landing, and balancing against external force. We utilize deep reinforcement learning to train a neural network policy. An overview of this deep reinforcement learning process is illustrated in Figure 2. In our study, we employ Proximal Policy Optimization (PPO), a deep reinforcement learning algorithm, to update the policy and utilize two distinct neural network architectures. The first network, termed the ”actor,” maps observations to actions and is structured as a Multi-Layer Perceptron (MLP) with hidden layers sized [512, 256, 128]. The second network, known as the ”critic,” evaluates the current state using an MLP with hidden layers sized [512, 256, 128]. Both networks are essential for the algorithm’s decision-making process and performance evaluation, facilitating the implementation of PPO in complex environments.

In our simulation, we deploy this controller on the Minicheetah robot mounted with a WidowX250S manipulator. We use RAISIM [26] as the simulation environment.

II-B Base

For three different tasks, we employ slightly different learning algorithms. However, the overarching methodology remains largely consistent. This section outlines the general algorithm constant for all tasks, and the subsequent sections will provide a detailed description of the specific adaptations made for each task.

II-B1 Observation

As depicted in Figure 2, the observation is divided into two distinct parts: the robot state and the command. The robot state comprises the joint state, base state, and joint history. We provide the joint velocity () and joint position () for the joint state. For the base state, we include the base angular velocity (), base linear velocity (), and base orientation (). Additionally, we provide the previous joint position history for the two preceding time steps (, ). This type of observation remain constant across all tasks.

Unlike the robot state, the observation of commands() varies according to the task. For the task of rapid turning, we provide the command velocity and command yaw: . For the balancing task, we use the same command structure. In contrast, for the task of aerial reorientation, we do not provide any command observations. Since the goal is to land safely when falling in the air, no particular command is required. Without any command, the task can be sufficiently completed by termination.

| Reward | Expression | Reward Coefficient | |

|---|---|---|---|

| Joint Position | -4.0 | ||

| Joint Velocity | -0.005 | ||

| Torque | -0.002 | ||

| smoothness | -4.0 | ||

II-B2 Action

Instead of using the non-scaled output of the actor network, we scale the output by the technique used by Ji et al. [27]. Here, the action space is scaled by a predefined factor, termed the action factor, and adds to the nominal joint position as follows: , where is the desired position which would be transmitted to the PD controller (, ), is the nominal joint configuration, is the action factor, is the policy output in the neural network. We use 0.3 for action factor(). In the early iterations, this action scaling largely dominates the action, preventing it from moving excessively away from the initial state. However, as the iterations progress, this dominance diminishes.

II-B3 Reward

We utilize two types of rewards in our system: the objective reward , which grants a positive reward when the desired goal is achieved, and the constraint reward , which imposes a negative reward for unsafe or unfeasible actions. We adopt the total reward formula used by Ji et al. [27], where the total reward is calculated as . We use 0.02 for .

Given that three distinct tasks have different objectives, objective reward varies for each task. For the constraint reward , it can be divided into two types: the general constraint reward , and the task-specific constraint reward . The General constraint reward =+++ is illustrated in Table I, and the task-specific constraint reward will be illustrated in the following sections. In conclusion, the total reward function can be rewritten as follows.

| (1) |

II-C Rapid Turning

Designing a controller capable of executing rapid turns during high-speed running presents significant challenges. Frequently, the robot becomes trapped in a local minimum, where it remains stationary before executing the turn command and only moves after the command. This phenomenon occurs because the robot encounters substantial difficulty in performing rapid turns while in motion, leading it to abandon the attempt and execute the turn from a stationary position. Although various methods can successfully overcome this problem, such as gradually increasing the turning angle and velocity through curriculum learning, we have found that segmenting the training into two distinct stages is the most effective and stable approach for learning rapid turning. In the first stage, the robot learns to turn from a stationary position. In the second stage, it learns to execute rapid turns while running.

II-C1 Stage 1 (Yaw Adjustment in Standstill)

The concept of Stage 1 is illustrated at Figure 2. We train the robot to follow the turn command ranging from to in a standstill position. Additionally, the robot learns to follow the velocity command. Stage 1 continues until the iteration count reaches 2000.

To enhance the stability of the learning process, we incrementally increase the command velocity using a curriculum learning approach, which has been shown to improve the stability of learning[27].

| (2) |

II-C2 Stage2 (Rapid turning during running)

Stage2 is the main stage of rapid turning. In stage 2, the robot is trained to rotate in a fully moving state. The concept is shown at Figure 2.

We also use curriculum learning for command velocity in this stage. However, we discover that a straightforward curriculum learning approach by iteration, such as that used in Stage 1, is insufficient. This is mainly because Stage 2 presents greater instability in the learning process compared to Stage 1, due to the requirement for rapid rotation at high speeds. To improve stability, we implement a reward-based curriculum learning strategy[28]. Instead of merely updating the command velocity based on iteration count alone, we adjust the command velocity if the total reward exceeds a predefined threshold value.

| (3) |

In this experiment, the predefined threshold value is set to 4.75. This means that is increased by 1 if the total average reward of the iteration exceeds 4.75.

It is essential to determine the proper timing for executing turn commands during the learning phase. If the commands are issued randomly from the beginning of the learning phase, the likelihood of successful learning is reduced because of the continuous variation in the robot’s foot position and posture during its run. One method to address this issue is to progressively extend the range of commands using a curriculum learning approach. We update the range of commands if the total reward exceeds a predefined threshold value, similar to the Stage 2 command velocity process.

| (4) |

In this experiment, the value of is the same as the used in the velocity command. However, it is possible to set different values.

II-C3 Reward

| Reward | Expression |

|---|---|

| Linear Velocity | |

| Yaw | |

| Angle Velocity | |

| Foot Airtime | |

| Foot Clearance | |

| Base stability | |

| Orientation | |

| Arm position | |

| Coefficient | run | turn | Coefficient | run | turn |

|---|---|---|---|---|---|

| 2.0 | 2.0 | 4.5 | 4.5 | ||

| 0.0 | 3.0 | 0.5 | 0.5 | ||

| -50.0 | -50.0 | -10.0 | -10.0 | ||

| -100.0 | 0.0 | -15.0 | 0.0 |

| Reward | Expression |

|---|---|

| Orientation | |

| Linear Velocity | |

| Base height | |

| Foot Clearance | |

| Arm position |

| Coefficient | air | ground | Coefficient | air | ground |

|---|---|---|---|---|---|

| 5.0 | 5.0 | 0.0 | 2.5 | ||

| 0.0 | 5.0 | 0.0 | -100.0 | ||

| 0.0 | -150.0 |

Table II illustrates the reward details for the rapid turning task in Stage 2. We divide each iteration into two distinct sections: one for running forward and the other for turning. Due to the distinct objectives of these two sections, we employ different sets of reward coefficients. Notably, in the turning section, a reward for angular velocity is added, while rewards for body orientation and arm position are omitted. The key factor enabling the robot to turn rapidly is the angular velocity reward (). This reward provides a high incentive if the robot’s angular velocity is high during the turning phase. It is possible to increase the rotational speed of the robot without using the angular velocity reward. For example, accelerating the robot’s lateral speed or increasing the rate of change in the robot’s direction can increase the turning speed. However, the angular velocity reward provides the best performance in terms of both learning speed and turning performance. An important consideration when setting up an angular velocity reward is to set the angular velocity reward coefficient () higher than the other objective reward coefficients(). Without this adjustment, achieving high-speed turning becomes nearly impossible.

Unlike Stage 2, in Stage 1 we solely utilize the reward associated with the running section, without dividing the iteration into two sections.

The objective task reward can be written as , and the constraint task reward would be .

II-D Aerial Reorientation and Safe Landing

Unlike the rapid turning task, we found that the aerial reorientation task does not require curriculum learning or stage-wise learning. The key factors for these tasks are termination as constraint and the inertia of the tail.

II-D1 Inertia of tail

In the task, we utilize not only the WidowX250S, which is used in the other tasks, but also the ViperX300S, which is heavier and longer. We observed that, due to the limited inertia of the tail, the WidowX250S does not perform well in aerial reorientation. Consequently, we employ a robot arm with higher inertia. The WidowX250S has a total length of 1300 mm and a weight of 2.35 kg, whereas the ViperX300S has a total length of 1500 mm and a weight of 3.6 kg.

II-D2 Termination

Reinforcement learning that relies solely on a reward function can have limitations. In this task, the robot receives a substantial reward if it lands safely and incurs a significant penalty if the landing fails. Due to this extreme setup, the robot might prematurely forfeit the reward in the air, as forfeiting the reward is more advantageous than failing to land. To address this issue, precisely adjusting the reward function can be a solution, but implementing a termination constraint could be more effective.

Several studies have attempted to train robots using constrainted reinforcement learning [29, 30, 31, 32]. Notably, Chane-Sane et al. [32] propose the CaT(Constraint as Termination) algorithm which utilize terminator as the constraint in RL method. In our study, both the reward function and termination constraints are used, and no other complex algorithms are necessary. We simply terminate the iteration with a high negative reward when the robot violates certain rules. This simple algorithm, combined with reward function, successfully enables the robot to execute feasible motions. The following rules are used for termination in this experiment:

II-D3 Reward

This task consists of two stages: the aerial reorientation section, where the robot rotates its body to align its base orientation with the z-axis of the world frame, and the self-righting section, where the robot recovers its body to the nominal position after landing. We divide these sections according to body height: the aerial reorientation section corresponds to the air region (), and the self-righting section corresponds to the ground region(). Due to the different objectives of these two stages, the rewards are divided accordingly, as detailed in Table III. In particular, in the air, only orientation rewards and general constraint rewards are needed. However, on the ground, where self-righting is required, various additional rewards are included.

The objective task reward can be written as , and the constraint task reward would be .

II-E Balancing

For the balancing part, the robot is trained to endure random external impulses at random timings and in random directions while walking at speeds ranging from 0.5 m/s to 3 m/s. The range of impulses is . During training, we use the same reward setup as in the running section of the Rapid Turning task.

III RESULT

III-A Rapid turning

We compared the turning performance of robots with and without a manipulator in simulations using the RAISIM environment. In the simulation tests, we set the forward velocity of the robots between and . We then issued commands for the robots to execute turns up to while moving forward. The quality of the turns was assessed based on three criteria: the speed of the turn, the sharpness of the turn, and the displacement during turning.

Our findings indicate that the turning speed was nearly identical regardless of the presence of the manipulator. However, there was a notable difference in the sharpness of the turns. As depicted in Figure 3, the robot equipped with a manipulator made sharper turns compared to the robot without a manipulator. Furthermore, the trajectory of the robot with a manipulator was closer to the ideal trajectory than that of the robot without a manipulator. This means that the displacement during rotation was shorter when a manipulator was equipped. Specifically, during a turn, the robot without a manipulator was pushed out by up to 1.74 meters, while the robot with a manipulator was pushed out to a maximum of only 1.2 meters.

Figure 4 shows the difference in trajectory with respect to velocity. In Figure 4(a), the trajectory of the robot without a manipulator varies significantly with speed. As speed increases, the deviation from the ideal trajectory becomes more pronounced. In contrast, the trajectory of the robot with a manipulator, shown in Figure 4(b), remains relatively consistent.

III-B Aerial Reorientation and Safe Landing

Unlike the previous task, we used three different robots to evaluate performance: Minicheetah without a manipulator, Minicheetah equipped with a WidowX250S, and Minicheetah equipped with a ViperX300S. Details of these three robots are provided in the Method section. In the simulation, we dropped these robots from heights ranging from 1.5m to 2.25m and angles ranging from to .

We found that the robot without a manipulator failed in all these scenarios. Similarly, the robot equipped with a WidowX250S failed in all scenarios. However, the robot equipped with a ViperX300S succeeded in all situations.

Figure 5 illustrates the orientation shift during aerial reorientation. Regardless of the initial conditions, the robot without a manipulator achieved a maximum rotation of , the robot equipped with the WidowX250S reached up to , and the robot equipped with the ViperX300S attained up to . The graph for the robot equipped with the WidowX250S shows that its rotation speed decreases after 0.2 seconds. In contrast, the robot equipped with the ViperX300S maintains a consistent rotation speed while reorienting and culminates in a constant orientation angle phase after 0.3 seconds, which remains steady while falling.

III-C Balancing

In simulation, we randomly applied impulses to the robot while walking at 1 m/s, with forces in the x, y and z-axis directions, totaling up to 100 N·s. The x-axis aligns with the robot’s forward direction, the y-axis corresponds to the robot’s lateral direction, and the z-axis aligns with gravity. We only display the results for the impulses on the y- and z-axis because the data show that the robot can withstand forces in the x-axis direction well, both with and without manipulator.

The results are illustrated in Figure 6. The survival rate of the robot equipped with a manipulator is , while the survival rate of the robot without a manipulator is . Notably, in almost all areas within the black line, where the robot has already experienced the learning session, the robot with a manipulator successfully survived. In contrast, the robot without a manipulator has relatively often failed in these regions.

IV Discussion

IV-A Rapid Turning

In the Results section, we evaluate the turning performance of the quadruped robot equipped with a manipulator based on three criteria: the speed of the turn, the sharpness of the turn, and the displacement during turning. Among these criteria, the quadruped robot with a manipulator demonstrates superior performance in terms of sharpness and displacement during the turn. This improved performance is attributed to the robot with the manipulator being able to withstand greater centrifugal force during the turn. Figure 4 strongly supports this observation. As velocity increases, the deviation between the ideal trajectory and the trajectory of the robot without the manipulator becomes more pronounced. Given that the magnitude of the centrifugal force increases proportionally with the square of the velocity, this result is expected. However, the trajectory of the robot equipped with the manipulator remains almost constant regardless of the velocity, indicating that the quadruped robot can better endure centrifugal forces with the assistance of the manipulator.

The mechanism by which the quadruped robot utilizes its manipulator to withstand centrifugal force is illustrated in Figure 7. It shows the movement of the quadruped robot with the manipulator when executing a turn while running at a speed of 4.5 m/s. During turning, the manipulator is positioned on the side opposite to the direction of the centrifugal force. By positioning itself in this manner, the manipulator generates a counter-torque that opposes the torque produced by the centrifugal force. Consequently, this counter-torque enables the robot to execute sharper turns.

IV-B Aerial reorientation and safe landing

As illustrated in Figure 5, the rotation speed and the total rotation angle vary depending on the type of robot used. Robots equipped with manipulators rotate more rapidly and achieve a larger total rotation angle than a robot without a manipulator, although the extent of these differences varies depending on the specific manipulator used. The disparity in robot performance according to the presence of a manipulator is attributed to the additional inertia provided by the manipulator. Figure 8 depicts the overall process by which the robot utilizes its manipulator when falling upside-down. During the aerial reorientation phase shown in the figure, the robot rotates its manipulator in the opposite direction of the robot base rotation. This movement generates counter momentum, allowing the robot base to reorient its body in the air.

The rotational performance in the air also varies depending on the type of arm used. This is because the counter momentum generated by the manipulator relies on its inertia. Therefore, the robot equipped with the ViperX300S, which has a higher inertia than the WidowX250S, rotates faster and achieves a larger total rotation angle compared to the one equipped with the WidowX250S.

In Figure 5, after approximately 0.3 seconds, there is a region where the robot’s orientation remains constant for the robot equipped with the ViperX300S. This region represents the phase where the robot adjusts its legs into a posture advantageous for a safe landing. This phase can also be seen in Figure 8, where, in the middle of the figure, the robot finishes reorienting its body and adjusts its legs. We found that securing sufficient time to adjust its legs is crucial for a safe landing. For example, the robot equipped with WidowX250S fails to establish this region due to its inadequate speed of rotation in the air, resulting in unsuccessful landing attempts.

IV-C Balancing

The experimental results show that the quadruped robot has a higher survival rate when external forces are applied. Therefore, engineers can expect an enhancement in the robot’s stability if the manipulator is installed on the quadruped robot. However, when the impulse value is less than , no substantial differences are observed. Consequently, installing a manipulator solely for balance purposes would not be efficient, as situations involving an impulse greater than are relatively infrequent.

V CONCLUSIONS

In this study, we propose the integration of a 6-DoF manipulator as a multifunctional tail for quadruped robots to address the challenges posed by the complexity of traditional tails. Through simulation, we demonstrated that mounting a manipulator on a quadruped robot enhances its performance compared to a robot without one in tasks such as rapid turning, aerial reorientation, and balancing. The results indicate that the manipulator can improve the agility and stability of the quadruped robot. We believe that our study broadens the roles of manipulators, enabling robotics engineers to utilize them for additional functionalities when integrated with quadruped robots.

However, our research focuses solely on simulations, not real-world applications. Therefore, it remains to be proven that the manipulator can indeed improve the performance of quadruped robots in real-world scenarios. Additionally, we addressed only a few applications in which quadruped robots can be enhanced by using a manipulator as a tail. There are numerous other potential applications that future studies could explore.

References

- [1] A. M. Wilson, J. Lowe, K. Roskilly, P. E. Hudson, K. Golabek, and J. McNutt, “Locomotion dynamics of hunting in wild cheetahs,” Nature, vol. 498, no. 7453, pp. 185–189, 2013.

- [2] A. Jusufi, D. T. Kawano, T. Libby, and R. J. Full, “Righting and turning in mid-air using appendage inertia: reptile tails, analytical models and bio-inspired robots,” Bioinspiration & biomimetics, vol. 5, no. 4, p. 045001, 2010.

- [3] A. Jusufi, D. I. Goldman, S. Revzen, and R. J. Full, “Active tails enhance arboreal acrobatics in geckos,” Proceedings of the National Academy of Sciences, vol. 105, no. 11, pp. 4215–4219, 2008.

- [4] S. M. O’Connor, T. J. Dawson, R. Kram, and J. M. Donelan, “The kangaroo’s tail propels and powers pentapedal locomotion,” Biology letters, vol. 10, no. 7, p. 20140381, 2014.

- [5] A. Patel and M. Braae, “Rapid turning at high-speed: Inspirations from the cheetah’s tail,” in 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2013, pp. 5506–5511.

- [6] ——, “Rapid acceleration and braking: Inspirations from the cheetah’s tail,” in 2014 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2014, pp. 793–799.

- [7] N. J. Kohut, A. O. Pullin, D. W. Haldane, D. Zarrouk, and R. S. Fearing, “Precise dynamic turning of a 10 cm legged robot on a low friction surface using a tail,” in 2013 IEEE International Conference on Robotics and Automation. IEEE, 2013, pp. 3299–3306.

- [8] C. S. Casarez and R. S. Fearing, “Steering of an underactuated legged robot through terrain contact with an active tail,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 2739–2746.

- [9] T. Fukushima, R. Siddall, F. Schwab, S. L. Toussaint, G. Byrnes, J. A. Nyakatura, and A. Jusufi, “Inertial tail effects during righting of squirrels in unexpected falls: from behavior to robotics,” Integrative and Comparative Biology, vol. 61, no. 2, pp. 589–602, 2021.

- [10] E. Chang-Siu, T. Libby, M. Tomizuka, and R. J. Full, “A lizard-inspired active tail enables rapid maneuvers and dynamic stabilization in a terrestrial robot,” in 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2011, pp. 1887–1894.

- [11] Y. Tang, J. An, X. Chu, S. Wang, C. Y. Wong, and K. S. Au, “Towards safe landing of falling quadruped robots using a 3-dof morphable inertial tail,” in 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2023, pp. 1141–1147.

- [12] A. M. Johnson, T. Libby, E. Chang-Siu, M. Tomizuka, R. J. Full, and D. E. Koditschek, “Tail assisted dynamic self righting,” in Adaptive mobile robotics. World Scientific, 2012, pp. 611–620.

- [13] R. Briggs, J. Lee, M. Haberland, and S. Kim, “Tails in biomimetic design: Analysis, simulation, and experiment,” in 2012 IEEE/RSJ international conference on intelligent robots and systems. IEEE, 2012, pp. 1473–1480.

- [14] J. Norby, J. Y. Li, C. Selby, A. Patel, and A. M. Johnson, “Enabling dynamic behaviors with aerodynamic drag in lightweight tails,” IEEE Transactions on Robotics, vol. 37, no. 4, pp. 1144–1153, 2021.

- [15] H. Huang, A. Loquercio, A. Kumar, N. Thakkar, K. Goldberg, and J. Malik, “More than an arm: Using a manipulator as a tail for enhanced stability in legged locomotion,” arXiv preprint arXiv:2305.01648, 2023.

- [16] H. Ferrolho, V. Ivan, W. Merkt, I. Havoutis, and S. Vijayakumar, “Roloma: Robust loco-manipulation for quadruped robots with arms,” Autonomous Robots, vol. 47, no. 8, pp. 1463–1481, 2023.

- [17] Z. Fu, X. Cheng, and D. Pathak, “Deep whole-body control: Learning a unified policy for manipulation and locomotion,” in Conference on Robot Learning. PMLR, 2023, pp. 138–149.

- [18] J.-P. Sleiman, F. Farshidian, M. V. Minniti, and M. Hutter, “A unified mpc framework for whole-body dynamic locomotion and manipulation,” IEEE Robotics and Automation Letters, vol. 6, no. 3, pp. 4688–4695, 2021.

- [19] S. Zimmermann, R. Poranne, and S. Coros, “Go fetch!-dynamic grasps using boston dynamics spot with external robotic arm,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 4488–4494.

- [20] J. Hwangbo, J. Lee, A. Dosovitskiy, D. Bellicoso, V. Tsounis, V. Koltun, and M. Hutter, “Learning agile and dynamic motor skills for legged robots,” Science Robotics, vol. 4, no. 26, p. eaau5872, 2019.

- [21] J. Lee, J. Hwangbo, L. Wellhausen, V. Koltun, and M. Hutter, “Learning quadrupedal locomotion over challenging terrain,” Science robotics, vol. 5, no. 47, p. eabc5986, 2020.

- [22] T. Miki, J. Lee, J. Hwangbo, L. Wellhausen, V. Koltun, and M. Hutter, “Learning robust perceptive locomotion for quadrupedal robots in the wild,” Science Robotics, vol. 7, no. 62, p. eabk2822, 2022.

- [23] Y. Ma, F. Farshidian, and M. Hutter, “Learning arm-assisted fall damage reduction and recovery for legged mobile manipulators,” in 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2023, pp. 12 149–12 155.

- [24] N. Rudin, H. Kolvenbach, V. Tsounis, and M. Hutter, “Cat-like jumping and landing of legged robots in low gravity using deep reinforcement learning,” IEEE Transactions on Robotics, vol. 38, no. 1, pp. 317–328, 2021.

- [25] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal policy optimization algorithms,” arXiv preprint arXiv:1707.06347, 2017.

- [26] J. Hwangbo, J. Lee, and M. Hutter, “Per-contact iteration method for solving contact dynamics,” IEEE Robotics and Automation Letters, vol. 3, no. 2, pp. 895–902, 2018.

- [27] G. Ji, J. Mun, H. Kim, and J. Hwangbo, “Concurrent training of a control policy and a state estimator for dynamic and robust legged locomotion,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 4630–4637, 2022.

- [28] G. B. Margolis, G. Yang, K. Paigwar, T. Chen, and P. Agrawal, “Rapid locomotion via reinforcement learning,” The International Journal of Robotics Research, vol. 43, no. 4, pp. 572–587, 2024.

- [29] Y. Kim, H. Oh, J. Lee, J. Choi, G. Ji, M. Jung, D. Youm, and J. Hwangbo, “Not only rewards but also constraints: Applications on legged robot locomotion,” IEEE Transactions on Robotics, 2024.

- [30] S. Gangapurwala, A. Mitchell, and I. Havoutis, “Guided constrained policy optimization for dynamic quadrupedal robot locomotion,” IEEE Robotics and Automation Letters, vol. 5, no. 2, pp. 3642–3649, 2020.

- [31] J. Lee, L. Schroth, V. Klemm, M. Bjelonic, A. Reske, and M. Hutter, “Evaluation of constrained reinforcement learning algorithms for legged locomotion,” arXiv preprint arXiv:2309.15430, 2023.

- [32] E. Chane-Sane, P.-A. Leziart, T. Flayols, O. Stasse, P. Souères, and N. Mansard, “Cat: Constraints as terminations for legged locomotion reinforcement learning,” arXiv preprint arXiv:2403.18765, 2024.