11email: {20110980001,qldong18,yanweifu}@fudan.edu.cn

Learning Prior Feature and Attention Enhanced Image Inpainting

Abstract

Many recent inpainting works have achieved impressive results by leveraging Deep Neural Networks (DNNs) to model various prior information for image restoration. Unfortunately, the performance of these methods is largely limited by the representation ability of vanilla Convolutional Neural Networks (CNNs) backbones. On the other hand, Vision Transformers (ViT) with self-supervised pre-training have shown great potential for many visual recognition and object detection tasks. A natural question is whether the inpainting task can be greatly benefited from the ViT backbone? However, it is nontrivial to directly replace the new backbones in inpainting networks, as the inpainting is an inverse problem fundamentally different from the recognition tasks. To this end, this paper incorporates the pre-training based Masked AutoEncoder (MAE) into the inpainting model, which enjoys richer informative priors to enhance the inpainting process. Moreover, we propose to use attention priors from MAE to make the inpainting model learn more long-distance dependencies between masked and unmasked regions. Sufficient ablations have been discussed about the inpainting and the self-supervised pre-training models in this paper. Besides, experiments on both Places2 and FFHQ demonstrate the effectiveness of our proposed model. Codes and pre-trained models are released in https://github.com/ewrfcas/MAE-FAR.

Keywords:

Image Inpainting, Attention, Vision Transformer1 Introduction

Image inpainting aims to fill missing regions of images with semantically consistent and harmoniously textured contents. It has a wide range of practical applications, including object removal [14], image editing [24], and so on. Conventional inpainting algorithms [4, 29, 37, 19, 8] rely on visual low-level assumptions and structures to search similar patches for the reconstruction. But they fail to tackle complicated inpainting situations with the limited feature representation.

Deep Neural Networks (DNNs) have recently been exploited by researchers to achieve prominent improvements in image inpainting [33, 31, 6, 40, 42, 56], which thanks to the great capabilities of Convolutional Neural Networks (CNNs) [27], Generative Adversarial Networks (GAN) [15], and attention-based Transformers [41]. However, repairing images corrupted by arbitrary masks with reasonable results is still challenging, especially in high-resolution cases. Because the inpainting model needs to understand the semantic information from masked images, which demands data-driven priors and sufficient model capacities. Furthermore, the dilemmas shown below should be solved.

(i) Limited capacities for modeling good priors. Many pioneering works have tried to introduce prior information to inpainting models. Some works [6, 33, 39, 50, 42, 51] propose multi-stage models, which repair various auxiliary information and corrupted images sequentially to enhance the image inpainting. These methods learn priors in specific fields, such as structures [33, 6] or semantics [39] with good visual interpretability rather than features with more informative priors. Other methods [31, 46] leverage auxiliary losses to introduce additional prior information without extending sufficient model capacities. Complex loss functions cause sophisticated hyper-parameter tuning and a more difficult inpainting training. Besides, some transformer based inpainting methods [42, 51] heavily depend on low-resolution (LR) images generated by the time-consuming iterative sampling, and then upsample them with CNNs. Lahiri et al. [28] learn global latent priors with GAN, which can only solve simple scenes with single-object. Our method incorporates effective prior features from the transformer based representation learning [20] to enhance the inpainting, which make our method achieve superior results without overfitting the transformer results.

(ii) Informative priors for high-resolution cases. High-resolution (HR) image inpainting enjoys more practical implications with advanced electronic products and high-quality images in real-world. Some researches devote to facilitating HR image inpainting with larger receptive fields [40, 52], attention transfer for the high-frequency residual [47], and two-stage upsampling [53]. Unfortunately, these methods still tend to copy meaningless existing textures rather than really understand semantics of HR masked images without directly training with costly HR data. Our method leverages the continuous positional encoding to upsample the prior features for superior inpainting performance in HR images.

(iii) Missing discussions about representation learning for inpainting. Recently, self-supervised pre-training language models [35, 36, 5, 11] have achieved great success in Natural Language Processing (NLP) fields. Such masking and predicting idea and transformer based architectures have been also well explored in vision tasks [20, 45, 3, 58]. But these vision transformers only consider representation learning for classification tasks. To the best of our knowledge, no one has explored the application of self-supervised pre-training vision models to generative tasks, let alone image inpainting. We present comprehensive discussions about the pre-training based representation learning for image inpainting in this paper.

To address these dilemmas, we propose to guide the image inpainting with an efficient Masked AutoEncoder (MAE) pre-training model [20], which is called as prior Feature and Attention enhanced Restoration (FAR). Specifically, an MAE is firstly pre-trained with the masked visual prediction task. We replace some random masks with large and contiguous masks to make the MAE more suitable for the downstream task. Then, features from the MAE decoder are added to the inpainting CNN for the prior guided image inpainting. Moreover, we find that attention relations of MAE among masked and unmasked regions are compatible with CNN inpainting learning. So group convolutions are used to aggregate CNN features with attention scores from MAE, which can improve the inpainting performance a lot. Furthermore, our model can be effectively extended to HR inpainting with a little finetuning of bilinear resized MAE features and the Cartesian spatial grid. Besides, we discuss some pre-training and finetuning tricks to better utilize MAE for superior inpainting performance.

We highlight our contributions as follows. (1) We propose to learn image priors from pre-trained MAE features, which contain informative high-level knowledge and strengthen the inpainting model. (2) We propose to aggregate the inpainting CNN feature with attention scores from MAE to improve the performance. (3) Several pre-training and finetuning tricks are exploited to make our FAR learn better prior features and attentions from MAE. (4) Our method can be simply extended to HR cases and achieve state-of-the-art results. Extensive experiments on Places2 [57] and FFHQ [25] reveal that our proposed model performs better than other competitors.

2 Related work

Image Inpainting with Auxiliaries. Inpainting with auxiliaries has demonstrated success in many previous works. Various priors have been leveraged to enhance the inpainting, such as edges [33, 17], lines [6], gradients [46], segmentations [39, 31], low-resolution images [42, 51], and even latent priors [28]. Specifically, these methods can be categorized into two types. One is to employ the approach of first correcting auxiliary information and then guiding image inpainting with multi-stage models [33, 28, 39, 50, 6, 42, 51]. Since these methods enjoy superior performance and good interpretability, priors leveraged by them are not comprehensive enough to handle the image inpainting properly. Other methods [31, 46] supervise the inpainting model with auxiliary information directly for introducing more positive priors. But capacities of these models are still stuck to tackle tough corrupted cases. Although work [17] combines both advantages mentioned above with the dual structure and texture learning, such low-level features are still insufficient to achieve results with rich semantics.

High-resolution Image Inpainting. HR image inpainting with large mask areas is still challenging. In [53], Yu et al. propose an iterative inpainting method with a feedback mechanism to progressively fill holes. They further learn an upsampling network to handle HR inpainting results based on LR ones. Yi et al. [47] design a contextual residual aggregation mechanism for the restoration of high-frequency residuals, which are added to LR predictions. Besides, various dilated convolutions are used in [52] to enlarge receptive fields for HR inpainting. Furthermore, Suvorov et al. [40] leverage Fast Fourier Convolution (FFC) to learn a global receptive field in the frequency and achieve amazing HR inpainting results with periodic textures. However, these methods still suffer from copying meaningless textures without really ‘understanding’ semantics in HR images. In contrast to previous methods, we transfer prior features from masked autoencoders to HR cases with a continuous positional encoding, which greatly improves the quality of HR inpainting results with meaningful semantic priors.

Masked Visual Prediction. Masked Visual Prediction (MVP) is a self-supervised task for representation learning by masking and predicting image patches. This work is originated in the masked language model [10] of NLP. The Vision Transformer (ViT) [13] has studied self-supervised pre-training by masking patches. BEiT [3] and iBOT [58] learn MVP on high-level discrete tokens and self-distillation respectively. Moreover, MAE [20] proposes an efficient transformer-based masked autoencoder for visual representation learning. MaskFeat [45] further studies MVP, and proposes to use HOG features [9] to get excellent results efficiently. These MVP pre-training models can be finetuned to achieve excellent classification results. However, few discussions about MVP are explored for image generation. To the best of our knowledge, our work firstly studies MVP-based pre-training for image inpainting.

3 Method

Overview. The overall pipeline of our FAR is shown in Fig. 2. For the given masked image , we resize it into 256256 and further enlarge the mask to patch-wise of 1616. Thus we can get the masked image . Then the MAE is applied to encode the prior features as , where indicate total unmasked patches from (Sec. 3.1). Prior features are resized to of the original image size and concatenated with the Cartesian spatial grid as . After that, is encoded by gated convolutions and added to the encoder of Attention-based CNN Restoration (ACR), which is used to restore the original masked image (Sec. 3.2). Moreover, we leverage mean attention scores from the MAE decoder to aggregate unmasked features to masked regions in ACR and achieve the final inpainted image (Sec. 3.2).

In this section, to better discuss the influence of pre-trained MAE on the inpainting model, we provide ablation studies on the subset of Places2 [57] with 5 scenes (about 25,000 training images, and 500 validation images, detailed in the Appendix). All methods are trained with 150k steps in 256256. Although our MAE is pre-trained on the total Places2 training set, ablations among all MAE enhanced methods are still fair and meaningful. For the 512512 ablations, we finetune the 256256 model trained on the whole places2 for 150k steps with the dynamic resizing (Sec. 7) and test them on 1,000 validation images.

3.1 Masked Autoencoder for Inpainting

Training Settings. We use ViT-Base [13] as the backbone of MAE, which contains 12 encoder layers and 8 decoder layers. Although He et al. [20] have released pre-trained MAEs based on ImageNet-1K with random masks, there are still some domain gaps for the inpainting. Instead, we pre-train the MAE on the whole 1.8 million Places2 [57] and 68,000 FFHQ [25] for scene and face inpainting respectively. Validations of both datasets are excepted from the training set for a fair comparison. Moreover, the random mask used in the standard MAE is not amenable to inpainting. Although the masking ratio is high (75%), such noisy masks are easier to be restored by DNNs compared with continuous and large masks with even lower masking ratios [34]. Therefore, we blend continuous masks with 10% to 50% masking ratios and random masks, while the total masking rate remains at 75% as shown in Fig. 3. Specifically, both irregular and segmentation masks [6] are considered in continuous masks. Then we downsample continuous masks to 1616 patch-wise forms with max pooling to ensure all masked patches are set to 1, while unmasked ones are 0, which enlarges masked regions. The mixed masking strategy can improve the learning of prior attention as shown in Tab. 4 of Sec. 3. Other training details are discussed in Sec. 7.

Prior Features from MAE. In MAE [20], only 25% unmasked patches are applied into the encoder, while learnable masked tokens are shared in the decoder for the reconstruction. These masked tokens will be used to predict pixel values in masked regions. This trick makes the encoder enjoy much lower memory cost, and it can be pre-trained with more capacities for better performance in classification tasks. However, during the inpainting, features from the MAE encoder are insufficient. Because we should achieve masked features encoded by masked tokens with stacked attention modules. Therefore, features from decoder layers are more compatible with the inpainting task. To ensure good decoder learning, we chose to use balanced encoder-decoder ViT-Base architecture, which contains 12 encoder layers and 8 decoder layers rather than further enlarging the encoder capacity. In this work, we choose to use features from the last layer of the MAE decoder before the pixel linear projection as the prior features. Since the predicted images are blurry, which may contain limited information, especially for the HR inpainting. Thus an interesting future work would be exploring features from different transformer layers for inpainting***In our latter ablations, we find that using the feature from 6th layer can achieve superior performance in FAR..

Finetuning for Partially Masked Patches. Since the input of MAE is patch-wise tokens in 1616, we have to enlarge some partially masked patches as shown in Fig. 3(c)(d), which may lose information. Intuitively, we further finetune MAE for those partially masked patches with 50 epochs in Places2. Specifically, masked embeddings of these partially masked patches are re-encoded by a new initialized linear layer of decoder. Inputs of these partially masked patches are composed of the concatenation of RGB pixel values (masked pixels are all 0 in 3 channels) and 0-1 masking maps. From the ablations in Tab. 1, the model trained with finetuned MAE performs slightly worse than the original one in FID. As shown in Fig. 4, we think that the finetuned MAE learns more explicit results, which makes the CNN restoration overfit MAE features. So these enlarged masked regions increase training difficulty and can be seen as noise regularization.

| Partial F.T. | PSNR | SSIM | FID | LPIPS |

|---|---|---|---|---|

| 24.51 | 0.864 | 25.49 | 0.113 | |

| 24.67 | 0.864 | 26.00 | 0.111 |

3.2 Attention-based CNN Restoration (ACR)

The design of CNN modules in ACR is referred to LaMa [40], which is an encoder-decoder model including 4 downsampling convolutions, 9 Fast Fourier Convolution (FFC) blocks, and 4 upsampling convolutions. FFC has been demonstrated that it can tackle some HR inpainting cases with strong periodic textures. We further enhance ACR with prior features and attentions from MAE as follows.

Prior Features Upsampling. To overcome inpainting tasks with arbitrary resolutions, the local feature ensemble [7] is leveraged to facilitate the feature warping from MAE to ACR in various resolutions. Given 1616 patch-wise prior features with dimension from the MAE decoder, we resize them into 1/8 of the original image size with the bilinear interpolation. To indicate the continuous position in HR, the Cartesian spatial grid [23] i.e., normalized 2d coordinates is concated to resized features as

| (1) |

where represent the original image height and width respectively; means normalized 2d coordinates grid valued from -1 to 1. As shown in the ablations of Tab. 2, such positional information can improve the HR inpainting results, and is complementary to learn smooth feature representations.

| 2D-coordinate | norm-pixel | PSNR | SSIM | FID | LPIPS |

|---|---|---|---|---|---|

| 24.35 | 0.879 | 25.47 | 0.135 | ||

| 24.31 | 0.880 | 25.33 | 0.135 | ||

| 24.37 | 0.880 | 25.30 | 0.132 |

Prior Features Combination. Four gated deconvolutions [49] are leveraged to upsample . Then these upsampled features are applied to the encoder of ACR. The gated mechanism works for making ACR filter corrupted features adaptively. To integrate prior features to ACR, the general solution is to element-wise add upsampled prior features to the downsampled ones of ACR. However, we observed an unstable training process with the vanilla element-wise addition as shown in the ablations of Fig. 5(a). We try to multiply trainable parameters to the prior features for the element-wise addition to ACR encoder features in , , , and respectively. Moreover, ablation studies of initialized with 0 and 1 are shown in Fig. 5(a) and Tab. 3. The zero initialization enjoys a much more stable convergence. Therefore, the 0-initialized addition is adopted in our model, which is omitted in the follows for simplicity. We further analyze tendencies of different trained with MAE layer 8 (i.e., row 3 of Tab. 3) in Fig. 5(b). From Fig. 5(b), both high-level and low-level features are important for the prior learning, since absolute values of are large. And features in seem have less effect during the inpainting.

| MAE | init- | PSNR | SSIM | FID | LPIPS |

|---|---|---|---|---|---|

| no | 24.12 | 0.859 | 28.01 | 0.124 | |

| no | 24.14 | 0.860 | 28.01 | 0.127 | |

| 0 | 24.34 | 0.860 | 26.83 | 0.117 | |

| 1 | 24.27 | 0.861 | 26.90 | 0.115 |

Prior Attentions. Many inpainting researches show that the attention mechanism is useful for the image inpainting [48, 49, 59, 47]. The classical contextual attention [48] used in inpainting is to aggregate masked features with unmasked ones . The aggregation is based on the attention score as

| (2) |

where indicates normalized cosine similarities of masked and unmasked features; means the softmax normalization among all unmasked features. Then we can get the aggregated masked features .

Unfortunately, the improvement of the attention module is not orthogonal to other effective inpainting strategies. We add two contextual attention (CA) modules [48] to ACR in the same positions as the prior feature aggregation shown in Fig. 2. But no improvement is achieved by the trainable contextual attention as shown in Tab. 4. We think that the restricted improvement is caused by the limited capacity of CNN models. Therefore, we try to use attention relations from the decoder of MAE to overcome the limited capacity. For the decoder layer of MAE, we can get attention scores as

| (3) |

where mean query and key of the attention; is the channels; and indicates a 0-1 mask map, where 1 means masked regions. We make the scores of masked regions to 0 in Eq. (3) that means all masked regions should only pay attention to unmasked ones. Then we average values of total 8 decoder attention scores, and get the prior attention scores as

| (4) |

The aggregations for getting masked features in ACR are executed in the start and the end of FFC blocks as shown in Fig. 2. Then is added to the original features as the residual. Note that we also multiply a zero-initialized learnable parameter to the before the addition instead of using LayerNorm as discussed in [2]. Besides, from Tab. 4, random masking used in the vanilla MAE fails to learn proper attention relations compared with mixed one discussed in Sec. 3.1. The ablation study about different attention layers from MAE are discussed in the Appendix.

| MAE mask type | attention type | PSNR | SSIM | FID | LPIPS |

|---|---|---|---|---|---|

| mixed | no attention | 24.34 | 0.860 | 26.84 | 0.117 |

| mixed | trainable CA | 24.13 | 0.859 | 26.99 | 0.123 |

| random | prior attention | 24.39 | 0.861 | 26.25 | 0.117 |

| mixed | prior attention | 24.51 | 0.864 | 25.49 | 0.113 |

3.3 Loss Functions

Loss Functions of MAE. Our MAE loss is the mean squared error (MSE) between MAE predictions and ground truth pixel values for masked patches as in [20]. Besides, He et al. study to use normalized pixel values of each masked patch as the self-supervised target, which normalizes each masked patch with the mean and standard of this patch. Such a trick can improve the classification quality in their experiments. However, for the inpainting, the global relation among different patches is also important. The patch-wise normalization makes MAE learn more bias for each patch rather than the global information. Thus we study the MAE pre-training ablations with and without the patch-wise normalization as shown in Tab. 2. We find that non-normalized targets can achieve slightly better quality in HR inpainting.

Loss Functions of ACR. ACR is trained as a regular adversarial inpainting model. We adopt the same loss functions as LaMa [40], which include L1 loss, adversarial loss, feature match loss, and high receptive field (HRF) perceptual loss. Specifically, L1 loss is only used to constrain unmasked regions. The discriminator loss is based on PatchGAN [22]. And WGAN-GP [16] is used as the generator loss. The feature match loss [43] based on L1 loss between true and fake discriminator features is also used to stable the GAN training. Furthermore, we also leverage the segmentation pre-trained ResNet50 for the HRF loss as proposed in [40] to improve the inpainting quality. More details about the loss functions of ACR are in the Appendix.

4 Experiments

Datasets. Our model is trained on Places2 [57] and FFHQ [25]. For Places2, both the pre-training of MAE and training of our full model are based on the whole 1.8 million training images, and tested with 36,500 testing images. We prepare additional 1,000 testing images for 512512 experiment. Detailed settings about main experiments and ablations are in the Appendix. We pre-train another MAE on 68,000 training set of FFHQ, which is also used to train the face inpainting model. And other 2,000 images work for testing. Our pre-trained MAE on Places2 and FFHQ can be well generalized to most real-world cases.

| FFHQ Places2 Co. La. Ours EC Co. La. CT. Ours P. 25.2 26.6 26.8 23.3 22.5 24.3 23.4 24.5 S. .889 .903 .906 .839 .843 .869 .835 .871 F. 5.85 6.38 6.15 6.21 1.49 1.63 11.2 1.31 L. .085 .078 .074 .149 .246 .155 .185 .101 |

|

Implementation Detials. Our FAR is implemented with Pytorch based on two 48 GB NVIDIA RTX A6000 gpus. MAE is pre-trained on Places2 and FFHQ for 200 and 450 epochs respectively with batch size 512, while other settings follow the released codes†††https://github.com/facebookresearch/mae except the masking strategy and the partially masked finetuning. For ACR, we employ the Adam optimizer with and , the learning rates are 1e-3 and 1e-4 for the generator and the discriminator. We train our models with 850k steps on Places2 and 150k steps on FFHQ. For every 200k steps on Places2 and 100k steps on FFHQ, the learning rate is reduced by half. To save the computation, we finetune our model on images with higher resolutions, which are dynamically resized from 256 to 512 for 150k steps on Places2.

Masks Settings. To solve real-world application problems, we adopt the masking strategy in [6]. The masks consist of irregular brush masks and COCO [32] segmentation masks ranged from 10% to 50%. During the training, we combine these two types masks in 20%.

Comparison Methods. We compared our model with other state-of-the-art models including Edge Connect (EC) [33], Co-Modulation GAN (Co-Mod) [56], Large Mask inpainting (LaMa) [40], Conditional Texture and Structure Dual Generation (CTSDG) [18] and Image Completion with Transformers (ICT) [42] using the official pre-trained models. We also retrain LaMa on Places2 and FFHQ, and further finetune it with the same settings as ours in the HR inpainting for a fair comparison.

Quantitative Comparisons. We evaluate PSNR, SSIM [44], FID [21], and LPIPS [55] for both 256256 and 512512 in Tab. 5. For 256256 results shown in the left of Tab. 5, our method achieves significant improvements based on the LaMa baseline. Moreover, Co-Mod results are also competitive. For the FFHQ results, since our MAE enhanced method can achieve much more stable and faithful results for face images, PSNR and SSIM of our method is better than Co-Mod. The powerful stylegan [26] architecture helps Co-Mod learn better textures with good FID, but our results have superior human perception (lower LPIPS). For the Places2, our results achieve best results in all metrics.

For the HR 512512 results listed in the right of Tab. 5, our method can outperform all other competitors. Note that most methods fail to tackle HR inpainting tasks in complex scenes. Results of baseline method LaMa are competitive, but our methods still achieve certain advantages compared with LaMa.

| MAE | Attention | Training(sec/batch) | Inference(sec/image) |

|---|---|---|---|

| 1.0101 | 0.0425 | ||

| 1.2510 | 0.0656 | ||

| 1.2604 | 0.0665 |

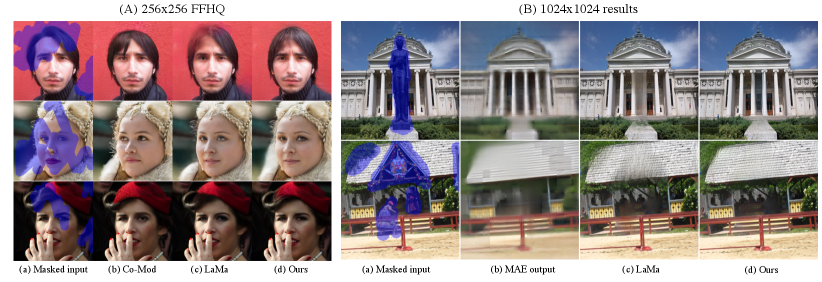

Qualitative Comparisons. We show 256256 qualitative results of Places2 and FFHQ in Fig. 6 and Fig. 7(A) respectively. For the results in Fig. 6, results of EC and CTSDG are blurry and have obvious artifacts. The results of Co-Mod and LaMa have proper qualities. But these two methods generate some unreasonable building architectures, which also cause artifacts. For the results of ICT, they fail to get good quality due to the poor LR reconstruction. Moreover, the slow sampling strategy of ICT makes it hard to test large-scale image datasets for a quantitative comparison. Our method can achieve better inpainting results in both structures and textures. For face images, our method can achieve stable inpainted results with consistent eye gaze.

For qualitative results in 512512, other compared algorithms fail to reconstruct reasonable semantics for certain objects in HR, such as car, necklace, and temple in Fig. 8, except our FAR. Such good results are benefited from informative prior features of MAE, which make the model understand the real categories of objects in HR cases. Besides, as shown in Fig. 8, our method avoids overfitting MAE outputs due to the enlarged masking strategy discussed in Sec. 3. We further provide some 1024 results in Fig. 7(B), which show that MAE can provide expressive priors for both structure and texture reconstructions.

Visualization of Attention. We show the visualization about the attention scores in Fig. 9. Patches attended with the highest attention score are shown in MAE and contextual attention maps, which can be located by the palettes in the first instance of Fig. 9. Attention scores from MAE are more consistent and reasonable compared with learning-based contextual attention [48]. Specifically, some irrelevant patches are attended by masked regions in the contextual attention, which leads to confused attention maps. Besides, although some MAE results are blurry, their attention relations are still effective.

Computation and Complexity. Benefited by the efficient design from [20] with 25% unmasked input tokens to the encoder, we should claim that the MAE pre-training is not heavier than CNNs. As discussed in implemented details, our MAE has been trained for 200 epochs in Places2 with about 10 days on two NVIDIA RTX A6000 gpus, which costs almost the same time for training a LaMa in Places2 for just 800k steps (only 28.4 epochs). Besides, we list times for both training and inference stages tested on A6000 in Tab. 6. It shows that the computation of the prior attention is negligible and can be ignored. MAE and gated convolutions increase about 0.024 seconds for predicting each image, which affects the training a little compared with the time-consuming GAN training. And the inference with 0.0665 seconds for each image is efficient enough for the user interaction, e.g., object removal.

5 Conclusions

This paper proposes an MAE enhanced image inpainting model called FAR. We utilize a masked visual prediction based vision transformer – MAE to provide features for the CNN based inpainting model, which contain rich informative priors with meaningful semantics. Moreover, we apply the prior attention scores from the pre-trained MAE to aggregate masked features, which is proved to work better than learning contextual attention from scratch. Besides, many constructive issues about the pre-trained MAE and image inpainting are discussed in this paper. Our experiments show that our method can achieve good improvements with the prior features and attentions from MAE. Social impacts of our model, especially working on the face dataset are discussed in the Appendix.

Acknowledgements. This work was supported by SMSTC (2018SHZDZX01).

Appendix 0.A Social Impact

All generated results of both the main paper and the appendix are based on learned statistics of the training dataset. Therefore, the results only reflect biases in those released data without our subjective opinion, especially for the face images from FFHQ. This work is only researched for the algorithmic discussion, and related societal impacts should not be ignored by users.

Appendix 0.B Detailed Network Settings

We provide some details for different model components in this section. Gated Convolution Block (GC). For the GC block used for upsampling prior features from MAE, which contains GateConv2D [49]BatchNormReLU. And the GateConv2D works with stride=2.

Encoder and Decoder of ACR. The encoder and decoder of ACR are consisted of vanilla Conv2DBatchNormReLU.

Fast Fourier Convolution Block (FFC). As illustrated in [40], features for FFC are split into local ones encoded by vanilla convolutions and global ones encoded by the spectral transform. The spectral transform is consisted of Fast Fourier Transform (FFT), Conv2DBatchNormReLU, and the inverse FFT. And both the real and imaginary parts are confirmed in the Conv2D after FFT. After the inverse FFT, local and global features are combined as the final output.

Appendix 0.C Loss Functions of ACR

We provide some details about the loss functions of ACR, which are referred to LaMa [40] and include L1 loss, adversarial loss, feature match loss, and high receptive field (HRF) perceptual loss [40]. L1 loss is only calculated between the unmasked regions as

| (5) |

where indicates 0-1 mask that 1 means masked regions; means the element-wise multiplication; indicate the ground truth and predicted images respectively. The adversarial loss is consisted of a PatchGAN [22] based discriminator loss and a WGAN-GP [16] based generator loss as

| (6) |

where is the gradient penalty [16] and . Moreover, the feature match loss [43] , which is based on L1 loss between discriminator features of true and fake samples as

| (7) |

Furthermore, we use the HRF loss in [40] as

| (8) |

where indicates a pretrained segmentation ResNet50 with dilated convolutions, which shows superior performance in inpainting compared with vanilla VGG as discussed in [40]. The final loss of our model can be written as

| (9) |

where set by the experience.

Appendix 0.D More Implement Details

High-Resolution (HR) Finetuning. To save the computation, we find that dynamic finetune the inpainting model from 256 to 512 resolutions can still achieve competitive results. We gradually reduce the resolution from 512 to 256, and then let them back to 512, which can be seen as a cycle. For each epoch in Places2, we finetune the model with 64 cycles.

The Subset of Places2 for Ablations. To flexibly evaluate our ablation studies, we choose to use a subset of Places2 with 5 scenes of ‘bow_window’, ‘house’, ‘village’, ‘dining_room’, and ‘viaduct’ with about 25,000 training images and 500 validation images. This subset contains indoor, outdoor, and natural scenes, which are comprehensive to evaluate the inpainting performance.

Detailed Settings of Places2. We reorganize detailed settings of main experiments and ablations in Tab. 7.

| Exp. | Res. | F.T. from | Places2(train) | Places2(eval) | |||

|---|---|---|---|---|---|---|---|

| whole | subset | whole | subset | HR subset | |||

| (1.8M) | (25,000) | (36,500) | (500) | (1,000) | |||

| MAE | 256 | – | – | – | – | ||

| Main Exp.-1 | 256 | – | |||||

| Main Exp.-2 | 512 | Main Exp.-1 | |||||

| Ablations-1 | 256 | – | |||||

| Ablations-2 | 512 | Main Exp.-1 | |||||

Appendix 0.E User Study

To test the effectiveness of our model with human perception, we conduct user studies on several models. We specifically ask 12 participants who are unfamiliar with image inpainting to judge the quality of inpainted images. FFHQ is compared with three models: Co-Mod [56], LaMa [40] and ours, while Places2 is compared with four methods: Co-Mod, LaMa, CTSDG [18] and ours. We randomly shuffle and combine the outcomes of these algorithms except the masked inputs. After that, volunteers must choose the best one from each group. On both datasets, as shown in Fig. 10, our technique outperforms other competitors. Although Co-Mod also achieves competitive results in Places2, it is trained with extra 6.2 million images from Places365-Challenge, which is much larger than the training set of other competitors.

Appendix 0.F Complete Quantitative Results

Tab. 8 presents more quantitative results for different masking rates ranging from 10% to 50% on datasets FFHQ and Places2. Except for the FID on the FFHQ, our model beats other state-of-the-art methods on other metrics, which demonstrates the superiority of our model.

| FFHQ (256256) | Places2 (256256) | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Mask | Co-Mod | LaMa | Ours | EC | Co-Mod | LaMa | CTSDG | Ours | |

| PSNR | 10~20% | 28.45 | 29.84 | 30.11 | 26.61 | 26.40 | 28.23 | 26.73 | 28.36 |

| 20~30% | 26.04 | 27.52 | 27.78 | 24.26 | 23.61 | 25.31 | 24.37 | 25.48 | |

| 30~40% | 24.29 | 25.82 | 26.07 | 22.60 | 21.73 | 23.44 | 22.71 | 23.60 | |

| 40~50% | 22.93 | 24.48 | 24.71 | 21.28 | 20.28 | 22.03 | 21.41 | 22.18 | |

| Mixed | 25.25 | 26.60 | 26.81 | 23.31 | 22.57 | 24.37 | 23.43 | 24.53 | |

| SSIM | 10~20% | 0.938 | 0.950 | 0.951 | 0.913 | 0.926 | 0.942 | 0.913 | 0.942 |

| 20~30% | 0.909 | 0.924 | 0.926 | 0.872 | 0.880 | 0.901 | 0.872 | 0.903 | |

| 30~40% | 0.876 | 0.897 | 0.899 | 0.828 | 0.831 | 0.859 | 0.828 | 0.861 | |

| 40~50% | 0.843 | 0.869 | 0.872 | 0.783 | 0.781 | 0.814 | 0.782 | 0.818 | |

| Mixed | 0.889 | 0.903 | 0.906 | 0.839 | 0.843 | 0.869 | 0.835 | 0.871 | |

| FID | 10~20% | 3.22 | 3.60 | 3.42 | 1.95 | 0.52 | 0.45 | 2.44 | 0.41 |

| 20~30% | 4.66 | 5.20 | 4.94 | 3.79 | 1.00 | 0.95 | 5.62 | 0.81 | |

| 30~40% | 5.68 | 6.57 | 6.14 | 6.98 | 1.65 | 1.73 | 11.43 | 1.40 | |

| 40~50% | 7.04 | 8.69 | 8.12 | 11.50 | 2.38 | 2.82 | 19.88 | 2.20 | |

| Mixed | 5.85 | 6.38 | 6.15 | 6.21 | 1.49 | 1.63 | 11.18 | 1.31 | |

| LPIPS | 10~20% | 0.049 | 0.045 | 0.043 | 0.073 | 0.053 | 0.047 | 0.085 | 0.042 |

| 20~30% | 0.069 | 0.062 | 0.059 | 0.111 | 0.098 | 0.083 | 0.133 | 0.073 | |

| 30~40% | 0.091 | 0.082 | 0.077 | 0.152 | 0.140 | 0.121 | 0.185 | 0.106 | |

| 40~50% | 0.113 | 0.101 | 0.095 | 0.194 | 0.184 | 0.161 | 0.237 | 0.141 | |

| Mixed | 0.085 | 0.078 | 0.074 | 0.149 | 0.246 | 0.155 | 0.185 | 0.101 | |

Appendix 0.G Quantitative Comparisons on DIV2K

We further give quantitative high-resolution results on 100 DIV2K [1] validation images with 2k resolutions in Tab. 9. Following the evaluation protocol of DIV2K in Tab. 9. Our model beats other HR inpainting methods.

| PSNR | SSIM | FID | LPIPS | |

|---|---|---|---|---|

| HiFill | 20.67 | 0.787 | 135.53 | 0.241 |

| LaMa | 21.24 | 0.865 | 118.80 | 0.200 |

| Ours | 21.64 | 0.868 | 113.98 | 0.171 |

Appendix 0.H Experiments with Center Square Masks

Both quantitative and qualitative results of 512512 Places2 test set with 40% center square masks are shown in Tab. 10 and Fig. 11 respectively. Note that our method is trained without any rectangular mask, while masks of Co-Mod include rectangular ones. Co-Mod suffers from hallucinated artifacts and LaMa tends to generate blur results. Our model beat others with best PSNR and LPIPS even without training on rectangular masks, benefited by MAE priors.

| PSNR | SSIM | FID | LPIPS | |

|---|---|---|---|---|

| Co-Mod | 17.59 | 0.755 | 52.38 | 0.262 |

| LaMa | 19.69 | 0.801 | 61.67 | 0.268 |

| Ours | 19.82 | 0.804 | 53.61 | 0.214 |

Appendix 0.I Ablations about Prior Attention from Different Layers

In Tab. 11, we test prior attentions of different layers from the start of the MAE decoder, and find that half-layer (4) just enjoys marginally better FID compared with all-layer (8) used in the main paper. These results show that using such attention priors from MAE is effective, while in general there is no significant difference in using attention from which layer.

| Attn layer | PSNR | SSIM | FID | LPIPS |

|---|---|---|---|---|

| - | 24.34 | 0.860 | 26.84 | 0.117 |

| 2 | 24.50 | 0.863 | 25.61 | 0.112 |

| 4 | 24.51 | 0.862 | 25.38 | 0.113 |

| 6 | 24.54 | 0.863 | 25.67 | 0.114 |

| 8 (ours) | 24.51 | 0.864 | 25.49 | 0.113 |

Appendix 0.J Comparing with More SOTA Methods

We further compare our method with recently proposed ZITS [12] and MAT [30] on Places2 in Tab. 12. Our method can still outperform them with mixed masks.

| 256256 | 512512 | |||||||

|---|---|---|---|---|---|---|---|---|

| PSNR | SSIM | FID | LPIPS | PSNR | SSIM | FID | LPIPS | |

| MAT | 22.37 | 0.841 | 1.68 | 0.134 | 21.68 | 0.838 | 32.43 | 0.165 |

| ZITS | 24.42 | 0.870 | 1.47 | 0.108 | 24.23 | 0.881 | 26.08 | 0.133 |

| Ours | 24.53 | 0.871 | 1.31 | 0.101 | 24.33 | 0.880 | 25.39 | 0.119 |

Appendix 0.K More Qualitative Results

More 256256 results of Places2 and FFHQ are shown in Fig. 12 and Fig. 13 respectively. For face images, we recommend to zoom-in for details near the eye regions. Our method tends to generate consistent eyes for face inpainting. We also provide more 512512 results of Places2 in Fig. 14, and some 10241024 results from DIV2K [1] in Fig. 15. For the HR inpainting, we find an interesting phenomenon that the MAE enhanced results enjoy larger receptive fields for the structural recovery in HR cases as shown in the first row of Fig. 15. Besides, for a better reading experience, 1k results shown in the main paper are slightly compressed. We show the high quality ones in Fig. 16.

Appendix 0.L Limitations and Future Works

Although our proposed FAR is powerful enough to inpaint impressive results, it still suffers from fail cases as shown in Fig. 17. MAE has some difficulty in exactly recovering the object/building boundaries or some complex man-made structures, which leads to some ambiguity. To tackle this problem, we think that structure priors can provide more exact boundaries for high-fidelity results. Besides, as mentioned in the main paper, an interesting future work would be exploring features from different MAE layers for inpainting. In our opinion, such improvements are orthogonal to other proposed components in this paper. Our pre-trained Places2 MAE will be released, which is benefit for the community to further study the representation learning based image inpainting. Moreover, although our MAE pre-trained on Places2 is generalized enough for the inpainting, pre-training MAEs on larger datasets (such as ImageNet-22K [38] or even JFT-3B [54]) may achieve superior downstream performance.

References

- [1] Agustsson, E., Timofte, R.: Ntire 2017 challenge on single image super-resolution: Dataset and study. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops (July 2017)

- [2] Bachlechner, T., Majumder, B.P., Mao, H.H., Cottrell, G.W., McAuley, J.: Rezero is all you need: Fast convergence at large depth. arXiv preprint arXiv:2003.04887 (2020)

- [3] Bao, H., Dong, L., Wei, F.: Beit: Bert pre-training of image transformers. arXiv preprint arXiv:2106.08254 (2021)

- [4] Bertalmío, M., Sapiro, G., Caselles, V., Ballester, C.: Image inpainting. Proceedings of the 27th annual conference on Computer graphics and interactive techniques (2000)

- [5] Brown, T.B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T.J., Child, R., Ramesh, A., Ziegler, D.M., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCandlish, S., Radford, A., Sutskever, I., Amodei, D.: Language models are few-shot learners. ArXiv abs/2005.14165 (2020)

- [6] Cao, C., Fu, Y.: Learning a sketch tensor space for image inpainting of man-made scenes. arXiv preprint arXiv:2103.15087 (2021)

- [7] Chen, Y., Liu, S., Wang, X.: Learning continuous image representation with local implicit image function. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8628–8638 (2021)

- [8] Criminisi, A., Pérez, P., Toyama, K.: Object removal by exemplar-based inpainting. 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2003. Proceedings. 2, II–II (2003)

- [9] Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05). vol. 1, pp. 886–893 vol. 1 (2005). https://doi.org/10.1109/CVPR.2005.177

- [10] Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

- [11] Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: Bert: Pre-training of deep bidirectional transformers for language understanding. ArXiv abs/1810.04805 (2019)

- [12] Dong, Q., Cao, C., Fu, Y.: Incremental transformer structure enhanced image inpainting with masking positional encoding (2022)

- [13] Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., Uszkoreit, J., Houlsby, N.: An image is worth 16x16 words: Transformers for image recognition at scale. CoRR abs/2010.11929 (2020), https://arxiv.org/abs/2010.11929

- [14] Elharrouss, O., Almaadeed, N., Al-Maadeed, S., Akbari, Y.: Image inpainting: A review. Neural Processing Letters 51(2), 2007–2028 (2020)

- [15] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. Advances in neural information processing systems 27 (2014)

- [16] Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., Courville, A.: Improved training of wasserstein gans. arXiv preprint arXiv:1704.00028 (2017)

- [17] Guo, X., Yang, H., Huang, D.: Image inpainting via conditional texture and structure dual generation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 14134–14143 (2021)

- [18] Guo, X., Yang, H., Huang, D.: Image inpainting via conditional texture and structure dual generation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). pp. 14134–14143 (October 2021)

- [19] Hays, J., Efros, A.A.: Scene completion using millions of photographs. ACM Transactions on Graphics (SIGGRAPH 2007) 26(3) (2007)

- [20] He, K., Chen, X., Xie, S., Li, Y., Doll’ar, P., Girshick, R.B.: Masked autoencoders are scalable vision learners. ArXiv abs/2111.06377 (2021)

- [21] Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B., Hochreiter, S.: Gans trained by a two time-scale update rule converge to a local nash equilibrium (2018)

- [22] Isola, P., Zhu, J.Y., Zhou, T., Efros, A.A.: Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 1125–1134 (2017)

- [23] Jaderberg, M., Simonyan, K., Zisserman, A., Kavukcuoglu, K.: Spatial transformer networks. CoRR abs/1506.02025 (2015), http://arxiv.org/abs/1506.02025

- [24] Jo, Y., Park, J.: Sc-fegan: Face editing generative adversarial network with user’s sketch and color. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 1745–1753 (2019)

- [25] Karras, T., Laine, S., Aila, T.: A style-based generator architecture for generative adversarial networks. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 4396–4405 (2019). https://doi.org/10.1109/CVPR.2019.00453

- [26] Karras, T., Laine, S., Aittala, M., Hellsten, J., Lehtinen, J., Aila, T.: Analyzing and improving the image quality of stylegan. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8110–8119 (2020)

- [27] Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems 25, 1097–1105 (2012)

- [28] Lahiri, A., Jain, A.K., Agrawal, S., Mitra, P., Biswas, P.K.: Prior guided gan based semantic inpainting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 13696–13705 (2020)

- [29] Levin, A., Zomet, A., Weiss, Y.: Learning how to inpaint from global image statistics. Proceedings Ninth IEEE International Conference on Computer Vision pp. 305–312 vol.1 (2003)

- [30] Li, W., Lin, Z., Zhou, K., Qi, L., Wang, Y., Jia, J.: Mat: Mask-aware transformer for large hole image inpainting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 10758–10768 (2022)

- [31] Liao, L., Xiao, J., Wang, Z., Lin, C.W., Satoh, S.: Guidance and evaluation: Semantic-aware image inpainting for mixed scenes. In: Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXVII 16. pp. 683–700. Springer (2020)

- [32] Lin, T.Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft coco: Common objects in context. In: European conference on computer vision. pp. 740–755. Springer (2014)

- [33] Nazeri, K., Ng, E., Joseph, T., Qureshi, F., Ebrahimi, M.: Edgeconnect: Structure guided image inpainting using edge prediction. In: Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (2019)

- [34] Ntavelis, E., Romero, A., Bigdeli, S., Timofte, R., Hui, Z., Wang, X., Gao, X., Shin, C., Kim, T., Son, H., et al.: Aim 2020 challenge on image extreme inpainting. In: European Conference on Computer Vision. pp. 716–741. Springer (2020)

- [35] Radford, A., Narasimhan, K.: Improving language understanding by generative pre-training (2018)

- [36] Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., Sutskever, I.: Language models are unsupervised multitask learners (2019)

- [37] Roth, S., Black, M.J.: Fields of experts: a framework for learning image priors. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05) 2, 860–867 vol. 2 (2005)

- [38] Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., Berg, A.C., Fei-Fei, L.: ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision (IJCV) 115(3), 211–252 (2015). https://doi.org/10.1007/s11263-015-0816-y

- [39] Song, Y., Yang, C., Shen, Y., Wang, P., Huang, Q., Kuo, C.C.J.: Spg-net: Segmentation prediction and guidance network for image inpainting. arXiv preprint arXiv:1805.03356 (2018)

- [40] Suvorov, R., Logacheva, E., Mashikhin, A., Remizova, A., Ashukha, A., Silvestrov, A., Kong, N., Goka, H., Park, K., Lempitsky, V.: Resolution-robust large mask inpainting with fourier convolutions. arXiv preprint arXiv:2109.07161 (2021)

- [41] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. In: Advances in neural information processing systems. pp. 5998–6008 (2017)

- [42] Wan, Z., Zhang, J., Chen, D., Liao, J.: High-fidelity pluralistic image completion with transformers. arXiv preprint arXiv:2103.14031 (2021)

- [43] Wang, T.C., Liu, M.Y., Zhu, J.Y., Tao, A., Kautz, J., Catanzaro, B.: High-resolution image synthesis and semantic manipulation with conditional gans. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 8798–8807 (2018)

- [44] Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE transactions on image processing 13(4), 600–612 (2004)

- [45] Wei, C., Fan, H., Xie, S., Wu, C., Yuille, A.L., Feichtenhofer, C.: Masked feature prediction for self-supervised visual pre-training. ArXiv abs/2112.09133 (2021)

- [46] Yang, J., Qi, Z., Shi, Y.: Learning to incorporate structure knowledge for image inpainting. CoRR abs/2002.04170 (2020), https://arxiv.org/abs/2002.04170

- [47] Yi, Z., Tang, Q., Azizi, S., Jang, D., Xu, Z.: Contextual residual aggregation for ultra high-resolution image inpainting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 7508–7517 (2020)

- [48] Yu, J., Lin, Z., Yang, J., Shen, X., Lu, X., Huang, T.S.: Generative image inpainting with contextual attention. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 5505–5514 (2018)

- [49] Yu, J., Lin, Z., Yang, J., Shen, X., Lu, X., Huang, T.S.: Free-form image inpainting with gated convolution. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 4471–4480 (2019)

- [50] Yu, Y., Zhan, F., Lu, S., Pan, J., Ma, F., Xie, X., Miao, C.: Wavefill: A wavelet-based generation network for image inpainting. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 14114–14123 (2021)

- [51] Yu, Y., Zhan, F., Wu, R., Pan, J., Cui, K., Lu, S., Ma, F., Xie, X., Miao, C.: Diverse image inpainting with bidirectional and autoregressive transformers. arXiv preprint arXiv:2104.12335 (2021)

- [52] Zeng, Y., Fu, J., Chao, H., Guo, B.: Aggregated contextual transformations for high-resolution image inpainting. arXiv preprint arXiv:2104.01431 (2021)

- [53] Zeng, Y., Lin, Z., Yang, J., Zhang, J., Shechtman, E., Lu, H.: High-resolution image inpainting with iterative confidence feedback and guided upsampling. In: European Conference on Computer Vision. pp. 1–17. Springer (2020)

- [54] Zhai, X., Kolesnikov, A., Houlsby, N., Beyer, L.: Scaling vision transformers. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12104–12113 (2022)

- [55] Zhang, R., Isola, P., Efros, A.A., Shechtman, E., Wang, O.: The unreasonable effectiveness of deep features as a perceptual metric. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 586–595 (2018)

- [56] Zhao, S., Cui, J., Sheng, Y., Dong, Y., Liang, X., Chang, E.I., Xu, Y.: Large scale image completion via co-modulated generative adversarial networks. arXiv preprint arXiv:2103.10428 (2021)

- [57] Zhou, B., Lapedriza, A., Khosla, A., Oliva, A., Torralba, A.: Places: A 10 million image database for scene recognition. IEEE transactions on pattern analysis and machine intelligence 40(6), 1452–1464 (2017)

- [58] Zhou, J., Wei, C., Wang, H., Shen, W., Xie, C., Yuille, A., Kong, T.: ibot: Image bert pre-training with online tokenizer. arXiv preprint arXiv:2111.07832 (2021)

- [59] Zhou, T., Ding, C., Lin, S., Wang, X., Tao, D.: Learning oracle attention for high-fidelity face completion. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 7680–7689 (2020)