Learning Latent Traits for Simulated Cooperative Driving Tasks

Abstract

To construct effective teaming strategies between humans and AI systems in complex, risky situations requires an understanding of individual preferences and behaviors of humans. Previously this problem has been treated in case-specific or data-agnostic ways. In this paper, we build a framework capable of capturing a compact latent representation of the human in terms of their behavior and preferences based on data from a simulated population of drivers. Our framework leverages, to the extent available, knowledge of individual preferences and types from samples within the population to deploy interaction policies appropriate for specific drivers. We then build a lightweight simulation environment, HMIway-env, for modelling one form of distracted driving behavior, and use it to generate data for different driver types and train intervention policies. We finally use this environment to quantify both the ability to discriminate drivers and the effectiveness of intervention policies.

I INTRODUCTION

For robots to successfully cooperate with humans to solve complex tasks, it is important to equip them with a rich understanding of human behavior. For example, when solving a complex task such as driving, human drivers often vary in their preferences for when and how to receive help. If a robot offers help at the wrong time or in the wrong manner, it can lead to a drop in task performance, reducing trust, and making further cooperation difficult.

An individual driver’s receptivity towards help is a function of many things, such as the specifics of the task, the current situation, and the individual’s own traits (such as tendency to be inattentive or make impulsive decisions) and preferences (such as willingness to accept help or tolerate risk). Modern driver-assistance systems (e.g. lane-keep assist, forward collision warning systems) are not adaptive, and intervene irrespective of whether the driver is a teenage novice requiring momentary, repeated help to teach them to become a better driver, or an experienced driver suffering impairment and in need of more significant assistance. This can lead to sub-optimal outcomes. Developing more human-aware solutions to cooperation is challenging, but will allow assistance systems to be better tailored towards individuals, similar to how good driving instructors learn to adapt their styles to assist drivers with different needs.

In this work, we posit that better solutions to cooperative human-machine teaming in driving can be achieved by first observing a given driver’s behaviors over time, then using a summary of that behavior (in the form of a compact learned representation) to understand how to help that driver. Rather than being reactive to the situation, our approach finds the best intervention strategy for the driver by observing their driving behaviors in the setting of a population of drivers, for whom interactions policies have been designed. Large-scale datasets are abundant in driving; however, due to practical considerations (privacy, cost, lack of human-centric measurements), they often do not include any direct measures of driver traits or preferences for receiving assistance. Moreover, the majority of typical driving trips do not reveal differences between individuals. Given these limitations, finding a representation that is useful for a machine to interact with the driver is a challenging problem.

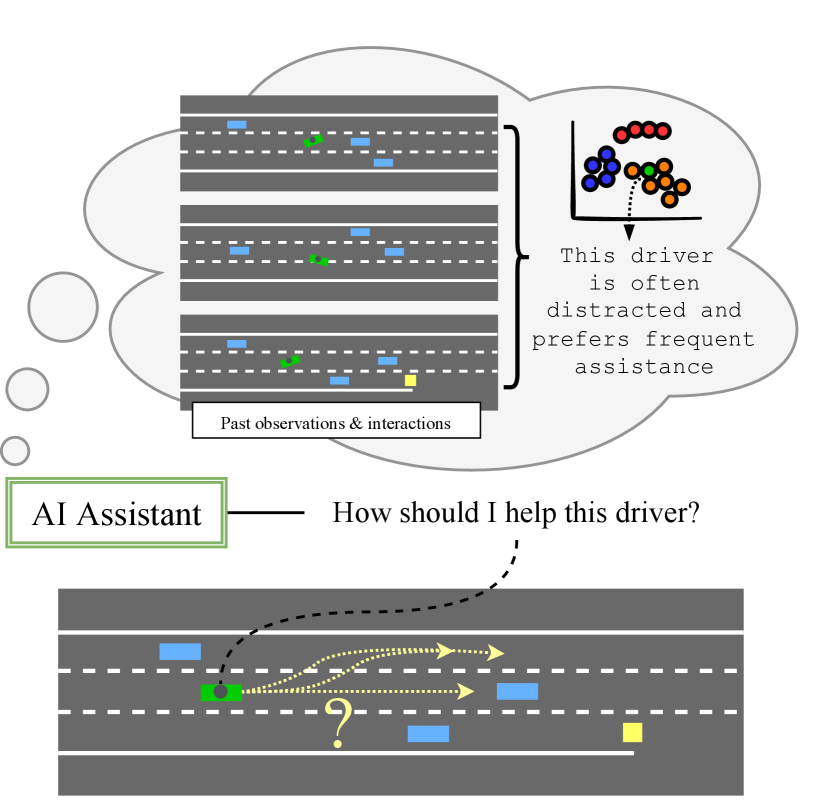

We propose to tackle this question by embedding drivers in a latent space of possible behavior types, and using this embedding to specify policies and rewards that encode the human’s intent. A consequence of this is that we can leverage the policies and rewards to form cooperative teams [1, 2] and the latent representations to deploy the best assistance modality for a given user (e.g. the type or frequency of intervention). Latent representations have shown promise for personalization in domains outside of driving, such as sentiment analysis [3], product recommendation [4, 5], intent recognition [6], and dialogue understanding [7]. We show, via simulation, that the approach holds promise in identifying traits that are important for aiding individual drivers at runtime, e.g. by helping to curb aggressive driving or to reduce distraction. Figure 1 illustrates the approach.

Key contributions.

-

1.

We propose a framework for uncovering latent structure representing the traits of a driver based on patterns of driving observed over multiple demonstrations of behavior, and we tie this latent strucuture to the correct system for intervention.

-

2.

We introduce a new, simulation environment—HMIway-env—that captures different facets of human driving behavior; in particular, we use it to model distraction and cautiousness of human drivers, validated via human subjects.

-

3.

We use this environment as a basis for learning an assistive policy for interacting with a driver.

-

4.

We study the efficacy of our approach for driver inference with respect to different modeled cognitive factors in a simulated highway driving scenario.

II RELATED WORK

Previous work on devising human-understandable moves in games of Chess was introduced in [8]. Many other papers have explored cooperative teaming using Theory-of-Mind to understand the human mental condition, in terms of benchmark frameworks [9], models for exploration and planning [10], and the watch-and-help challenge [11]. Some of these works have approached this from the perspective of an embodied AI system cooperating with humans [12], while others have extended this basic premise to learn how to teach humans in cooperative tasks [13], while yet others have appealed to more data-free approaches [14].

Approaches for learning personalized models of humans in an online fashion are also prevalent. These include personality modeling for dialog systems [7], latent belief space planning under uncertainty [15], and using latent factors in defining winning strategies for the AI system in zero-sum games with a human [6]. In driving and other motion prediction contexts, there are several examples of preference learning, including works that treat the problem as one of intent prediction [16], those involving Theory-of-Mind models mapped to a graph representation [17], and those that involve few-shot learning [18]. Several efforts have been made to characterize preference learning within reinforcement learning [19, 20], including active learning approaches [21, 22] that are highly suited for human robot interactions, but do not translate easily to drivers on the road.

In the offline setting, several papers have addressed the problem of learning an individual among a population. In the driving domain, the latent parameterization of a subject agent is learnt via an autoencoder in [23]. Other approaches focused on specific social states that pertain to right-of-way [24] or maneuvers that are evident in relatively short horizons of behavior [25, 26].

In our work, we aim to learn as much prior information as possible from observations of a population’s behavioral history and track given drivers over several examples of an individual’s driving behavior. We focus on the problem of distinguishability of multiple possible factors, including those that tie directly to states that we should modify. In particular, we learn whether an individual tends to become distracted which, in turn, gives insight into which intervention policy to use.

III BACKGROUND

III-A Adversarial Inverse Reinforcement Learning

Our objective is based on the premise of learning a reward function from a dataset, which we cast as an inverse reinforcement learning (IRL) problem. We adopt an adversarial formulation of IRL [27], where the reward function is learned in a generative adversarial network (GAN). This formulation is suited to data-rich applications and robust to recovering the actual reward, up to a constant.

Markov Decision Processes. A Markov Decision Process (MDP) is defined by the tuple , where is a state space, is an action space, is a stochastic state transition function, is a reward function, is a discount factor, and is an initial state distribution. In the IRL setup, and are unknown, and must be recovered.

MaxEnt IRL. In maximum-entropy IRL, the objective is to find a policy that is exponentially close to the set of demonstration trajectories. In other words, it maximizes the entropy-regularized -discounted reward

| (1) |

where is the entropy of the policy distribution, i.e. over a time horizon . The demonstrations are given by a set of trajectories, which denote the state-action pairs . A collection of trajectories, complemented by an identifier of the human driver, , forms a dataset of demonstrations . We assume that trajectories may be of different lengths.

In [27], the adversarial formulation of the MaxEnt problem is solved via a GAN. Within the GAN, the discriminator takes the form

| (2) |

where , is a discriminator network with parameters , is factored as , are the policy parameters, and , are the base and potential components of the reward.

IV PROBLEM STATEMENT

We acquire a set of driving data from a variety of human drivers in various driving scenarios. This data consists of the driver’s actions, the motion of the vehicle, and interactions of a human driver with a human-machine interface (HMI) encoded as an HMI input. Our goal is to learn a latent variable of driver traits, , that can help to govern how an HMI may best help a human driver, and an interaction policy, , conditioned on knowledge of and an action of the human in some state , where is an interaction choice. We consider the simplest case where the action of the AI system is a binary signal passed to the driver, but this can be extended to any continuous or discrete signal to represent a wider range of interactions.

Running example: A tale of four drivers. We outline four types of drivers, each of who behave and respond differently to interactions to speed up or slow down during a highway merge scenario:

-

1.

Lisa: a confident, often attentive driver who most likely ignores any HMI assistance.

-

2.

Marge: a cautious, often attentive driver, very receptive to HMI assistance.

-

3.

Bart: a confident, often distracted driver who most likely ignores HMI assistance.

-

4.

Homer: a cautious, often distracted driver, very receptive to HMI assistance.

By learning to differentiate between these differing types of behavior and preference, we should be able to generate a more effective interaction policy.

V LATENT MODELING OF COGNITIVE TRAITS

Our insight is that modeling human driving behaviors can be treated as a meta-learning problem that imparts structure onto a latent representation according to the differences in behavior between individuals. In general, the unique characteristics of individual drivers can easily be obscured by noise when observing a single time point, but when aggregating observations over many samples of driving, it becomes possible to distinguish one individual from another. This is especially true in the driving domain, where aggregation of several examples of one human’s driving can illuminate individual characteristics of their behavior. We learn this structure using a dataset encompassing a population of drivers, and then exploit this structure to learn, and apply, the correct intervention policy. Although we do not explore it here, our learned human reward structures and policies are known to admit rich human-AI teaming and shared autonomy strategies [1, 2].

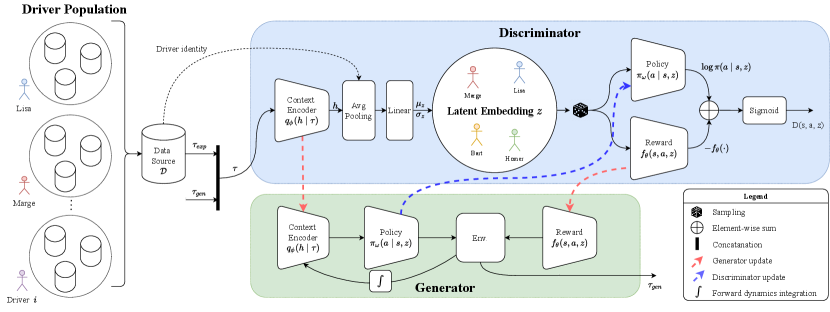

V-A Trait- and Preference-Aware Adversarial IRL

We adopt a meta-IRL approach [29] allowing to learn a reward structure and policy for the human from data, conditioned on the characteristics of particular humans. The overall framework is depicted in Fig. 2. A latent variable is introduced that captures the differences in behaviors between humans, i.e. their traits. Batches of time-series data of the human and their observations, each pre-identified per human, are fed to the learning framework. During each epoch, training alternates between a discriminator and a generator. The discriminator combines a context encoder that captures the traits of a driver and a reward function that encodes the rewards, penalties and preferences of the human agent. Roll-outs generated from a frozen-parameter policy are used to compute the loss terms that allow for the joint optimization of context encoder and reward function. Upon convergence, the discriminator learns to distinguish between the driver-generated and policy-generated trajectories. The discriminator takes the adversarial IRL form:

| (4) |

The generator, on the other hand, uses the frozen context encoder and reward function to train a trait-conditioned policy for the human. We describe each of these components below.

Context encoder. The context encoder is represented as a network, , defining the probability of given the trajectory . We represent as a long short-term memory (LSTM) network [30], taking in a trajectory history. The hidden layer is fed into two linear layers representing the mean and log-variance of the latent encoding. For all of our experiments, we adopt a single-hidden-layer LSTM with 128 units.

Pooling layer. To account for sparsity of behavior indicative of traits, we pool together examples of drivers, determined based on a unique identifier such that we capture the aggregate behavior of the human’s history of behaviors within the latent embedding. We insert this pooling layer just prior to the linear operations mapping LSTM hidden state to the statistics of , i.e. , , and hence it is subsumed within . Although we may use any aggregation operation, we choose an average-pooling operation over for our experiments. We ensure that the pools are consistently sized by maintaining a fixed pooling size, and shuffling the batches for a particular human at each training round.

Reward function. We adopt a discounted reward which maps traits, , and states, actions, and next-states to a real-valued reward. To remove reward ambiguity while learning, we adopt the form [27]. We use a two-layer multi-layer perceptron (MLP) with 32 units per layer for our base reward network and a single-layer MLP with 32 units as our potential network .

Policy. Our policy is a 32-unit feed-forward network, , that encodes the probability of the human taking action given its perception of the world and their trait. We use the policy gradient algorithm proximal policy optimization (PPO) [28] to train such a policy using the empirical reward structure and context encoder.

Discriminator losses. Finally, we describe the losses used for training the discriminator. See Table I for a complete list. Loss encourages the likelihood of the trajectories under the reward, to match those of the demonstrated trajectories, . Loss encourages high mutual information, , between the trajectories and latent variables. The contrastive loss [31] promotes cohesion of pairs of latent variables , having the same label () and separation of pairs , with dissimilar labels (. Here, labels can represent different driver types, different preferences for receiving AI help, and may be any distance measure; we choose the norm. One can soften the indicator function in order to more generally capture a continuum or partial ordering of labels. Finally, regularizes the latent variables according to a unit Gaussian. We apply weights on the final three terms: 5.0 for , 10.0 for and for .

| Reconstruct trajectory | ||

|---|---|---|

| M.I. between and trajectory | ||

| Contrastive loss between driver types or preferences | ||

| Latent regularization |

VI MODELING A DISTRACTED DRIVING INTERVENTION SYSTEM

We introduce a joint human-AI driving environment model, dubbed HMIway-env, built on highway-env [32] to capture: (a) the cautiousness exhibited by a driver, (b) the likelihood of the driver to become distracted, and (c) the receptivity of a driver to an external alert issued by a vehicle AI system. Our model captures momentary lapses in driving, such as when a driver is engaged in a secondary task (e.g. cellphone use) or is otherwise inattentive to the road. It can also encompass delays in action that might result, for example, from the driver being engaged in conversation or in a state of high cognitive load [33].

Highway-env is itself built on the OpenAI Gym environment [34], and is designed primarily for studying and training models for tactical decision-making tasks for autonomous driving. The behavior of other vehicles on the road are modelled using the intelligent driver model [35] and initial speeds are randomized in a range within the prescribed speed limits of the road on which they are spawned. Similar to the approach taken by Morton et. al. [23], the observations fed into the policy encode the positions and velocities of nearby vehicles as lidar observations.

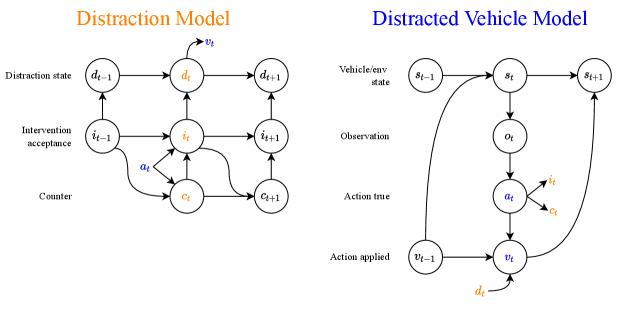

Driving behavioral traits. We simulate three traits of interest, cautiousness—the tendency of a human to treat the road scene with more or less caution, distractability—the tendency of a human to become distracted while driving, and preference for AI help. To model cautiousness, we dilate the spatial footprint of the surrounding vehicles so that the perceived distance to them is reduced. Accordingly, the obstacle inflation factor is a multiplier placed on all objects to achieve this dilation. This is meant to model the tendency of drivers to leave large headway gap with other drivers. We make the assumption that cautiousness of a driver is directly linked to their tendency to prefer help from an assistive AI system. We further model distractability via a controlled Markov model. In our model, see Fig. 3, this is represented by two states: a distraction state, , encoding whether the driver is distracted or attentive, and an acceptance state, that encodes whether the driver has been influenced by the vehicle AI’s alert signal.

VI-A AI-Mediated Driver Distraction Model

In Figure 3 (right), the controlled Markov model consists of two actions: the vehicle action , and the AI’s intervention action . We introduce a counter variable to encode how long a successful intervention by the vehicle AI remains in effect. That is, when the vehicle AI issues an alert, the effect of the alert persists for a fixed time window, during which the acceptance state remains 1.

In our model, the transitions for the acceptance state and are determined as follows:

| if | (5) | |||

| if | (6) | |||

| if | ||||

| (7) |

Relations (5) and (6) capture the behavior that, when a driver complies with an alert from the vehicle AI, their acceptance state is always set to be 1, regardless of the acceptance state at the previous time step. Additionally, is set to be 0, indicating that the vehicle AI’s intervention has been re-triggered. If the driver’s acceptance state is 0, then it continues to be 0 if no alert has been received. In (7), if the acceptance state is already 1 (which implies that the vehicle AI’s alert was already accepted by the driver at an earlier time step), then the acceptance state continues to be 1 (that is the alert continues to have an effect on the driver) as long as remains within the intervention effectiveness window. Once is greater than the window size, the acceptance state is reset to 0, if no more alerts are accepted.

| if crashed, else 0 | |

| , if in right lane, else 0 | |

| if in merging lane, else 0 | |

| if is move_left or move_right, else 0 | |

| if , else 0 | |

| if and , else 0 | |

| if with and , else 0 |

| Driver (ID) | Infl. | |||

|---|---|---|---|---|

| Lisa (0): distractibility, cautiousness | 0.2 | 0.8 | 0.01 | 3.0 |

| Marge (1): distractibility, cautiousness | 0.2 | 0.8 | 1.0 | 9.0 |

| Bart (2): distractibility, cautiousness | 0.8 | 0.2 | 0.01 | 3.0 |

| Homer (3): distractibility, cautiousness | 0.8 | 0.2 | 1.0 | 9.0 |

Mediating distraction. The distraction variable evolves as a controlled Markov chain whose transition probabilities are modulated according to the acceptance state of the driver. The amount of modulation is controlled by the intervention effective factor, , which captures the driver’s willingness to be influenced by the alert issued by the vehicle AI. Crucially, we assume that depends on the level of cautiousness of the driver. Upon accepting the alert from the vehicle AI, the baseline transition probabilities of the distraction state Markov chain are modulated in such a way the probability of becoming distracted is reduced and of becoming more attentive is increased. Specifically, if is the baseline probability of transitioning from an attentive state () to a distracted state (), the conditional (modified according to the acceptance state) transition probabilities for the Markov model is given by

| (8) |

Likewise, the probability of transitioning from a distracted to an attentive state becomes higher when the driver is willing to accept the AI agent’s alert. Therefore,

| (9) |

where is the baseline probability of transitioning from a distracted to an attentive state.

From the above equations, we can see that if the driver accepts an alert from the vehicle’s AI, the acceptance state will be set to 1, and as a result the transition probabilities in (8) and (9) are modulated and this modulation remains in effect for at least time steps.

In our framework, at every timestep , first is updated, followed by the acceptance state and then finally the distraction variable .

For , the value of , the distraction state, affects the applied vehicle action as follows,

| (10) |

with .

Human subject validation. We lastly validated the model using a human studies experiment conducted on 500 individuals via a crowd-sourced survey. Each individual was shown a small set of example roll-outs drawn from the model, and was asked to rate how safe, distracted, risky, or similar to their own driving the behavior was. The findings of this study revealed that the intended behavior of the model was broadly consistent with human expectation of distracted and cautious driving, although further analysis is needed to account for judgement variations over a wider set of roll-outs.

VII LEARNING AN INTERVENTION POLICY

We frame the intervention as a reinforcement learning (RL) problem, and train a composite policy of the human and AI system operating together. Since we express both the human and AI system in terms of their higher-level goals and constraints in terms of a system of rewards, we may take the agent (the policy) to represent both the human and AI system operating together or two policies that interact with one another in a sequential manner. To generate our results, we opt for a 2-stage training protocol.

In the first stage, we train a driver policy that takes into account the different driver types. In the second stage, the HMI policy is trained on an environment that subsumes the driver policy. The combined human-AI policy consists of two action components: (a) the human-initiated vehicle actions () and (b) the AI system’s interventional actions on the driver (). For human-initiated vehicle actions, the action space consists of a discrete set of semantic actions comprising {speed_up, slow_down, keep_speed, move_left, move_right}, where the first two actions result in changes in speed, while the last two bring about lane changes. The action space for the vehicle’s interventions is binary and consists of a discrete set of interventional actions given by {alert, no_alert}. The mechanism by which affects the vehicle is facilitated by the intermediate distraction and alert-acceptance model described in Section VI-A.

| Lisa | Marge | Bart | Homer | |

| Lisa | 445 | * | * | * |

| Marge | * | 446 | * | * |

| Bart | * | * | 444 | * |

| Homer | * | * | * | 458 |

| AvgHMI | 447 | 421 | 432 | 391 |

| NoHMI | 451 | 423 | 428 | 404 |

| Lisa | Marge | Bart | Homer | |

|---|---|---|---|---|

| Lisa | -85 | * | * | * |

| Marge | * | -80 | * | * |

| Bart | * | * | -123 | * |

| Homer | * | * | * | -117 |

| AvgHMI | -181 | -45 | -744 | -162 |

| NoHMI | -192 | -176 | -745 | -770 |

VII-A Reward Structure

Table II shows the full list of driving-related rewards used by the joint human-vehicle AI model capturing a joint policy trained for the actions of both the human and AI system.

, , , , are the reward components pertaining to driving performance, while pertains to the human and captures the rewards the vehicle AI receives for issuing sparse alerts. is the reward term that connects the vehicle AI to the human.

For training our models, we set the coefficients related to driving rewards to values such that the vehicle favors being safe on the right lane, at higher speeds and seeks to minimize lane changes.

VIII EXPERIMENTS

VIII-A Dataset Generation and Latent Trait Learning

To generate training data, we sample from four driver types spanning levels of distraction and levels of cautiousness, as per our running example. We generate timesteps per type, according to the values in Table III. We generate episodes based on a merge scenario, pictured in Fig. 1, having three available lanes, and with a maximum of 20 randomly-placed vehicles. The actions are stepped at a rate of 5 Hz, while the simulator is updated at 15 Hz.

We consider this dataset as ground truth, and execute training based on three assumptions on what aspects of the data are labeled.

- Unsupervised.

-

We assume no supervision on the data, and allow the latent embedding to be learned purely based on the learner’s ability to detect nuances in behavior.

- Supervised on driver ID.

-

We train in the general case where the types of drivers are fully labeled.

- Supervised on preferences.

-

We assume preferences of the driver are identifiable. We argue that such labels could be obtained more easily than for the case with ID supervision, via preference queries given to a subset of drivers [21].

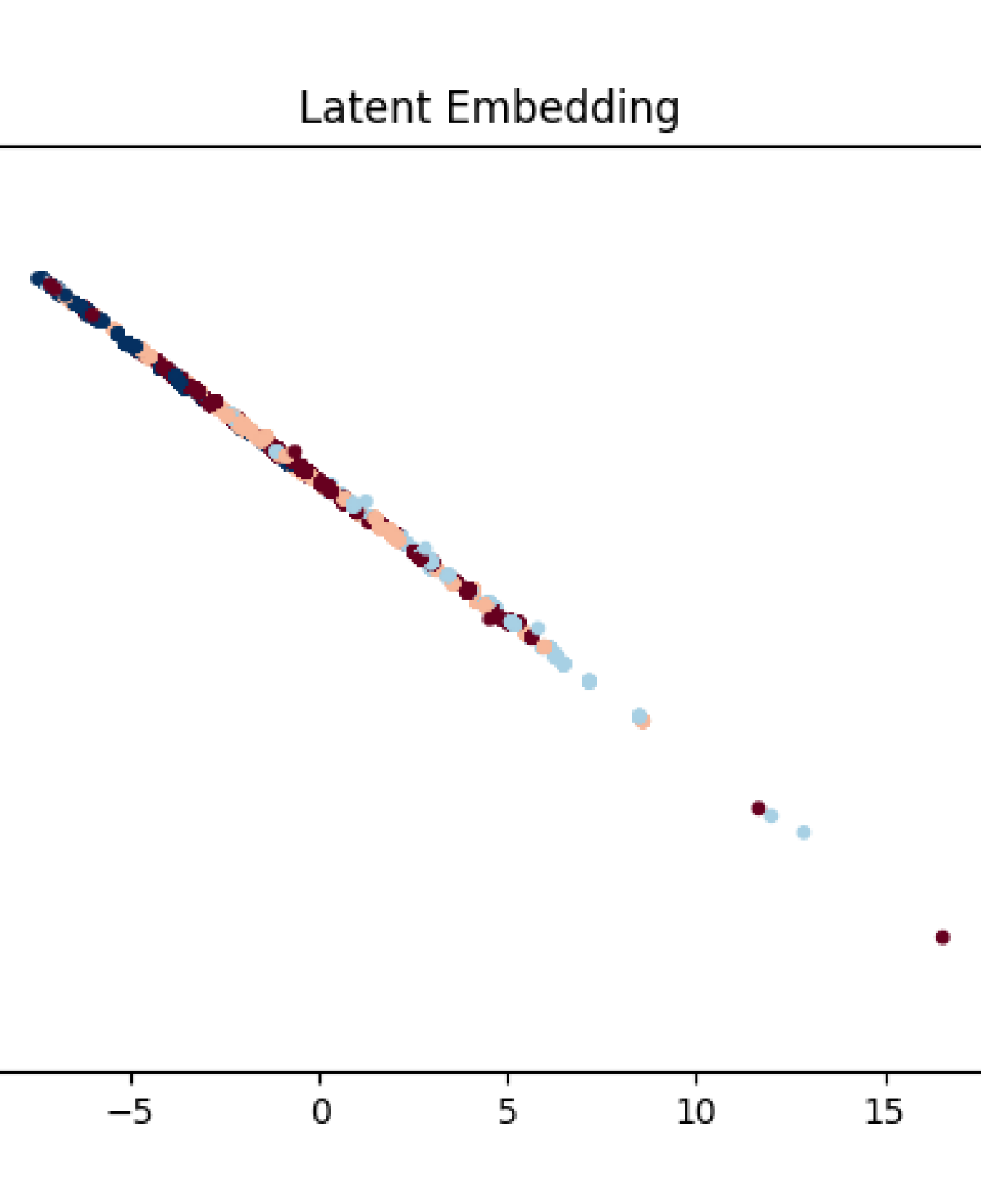

We build training data for each case and assume that 20% of the data is labeled. We use two discriminator updates per training round with a learning rate of , and allow the generator updates for 500 steps with a learning rate of . The LSTM takes in a trajectory 20 seconds in length, and we use a pooling size of 8 for each driver ID. For purposes of comparison, we terminate training after timesteps. In all experiments, we use a two-dimensional latent space.

VIII-B Distinguishability

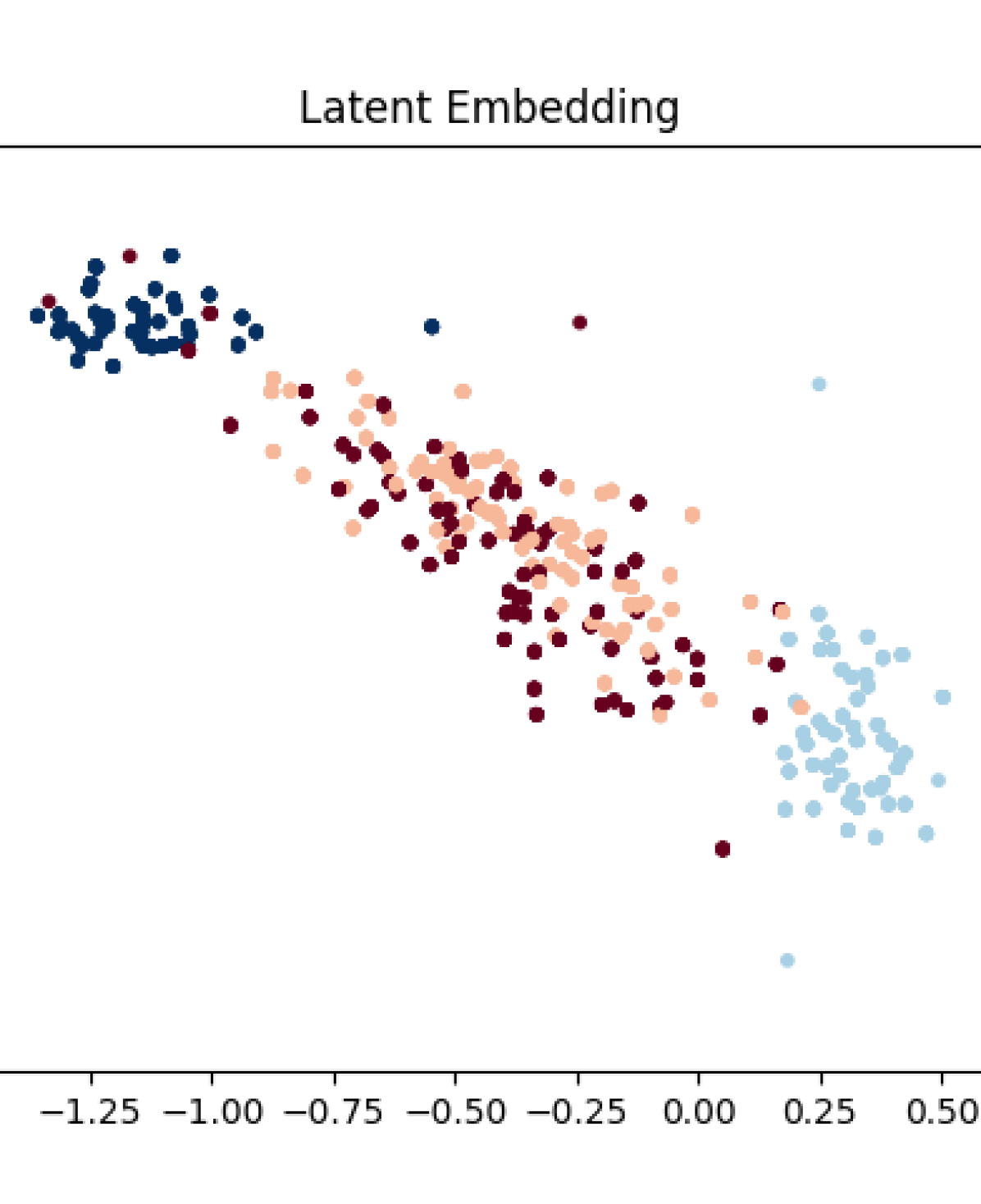

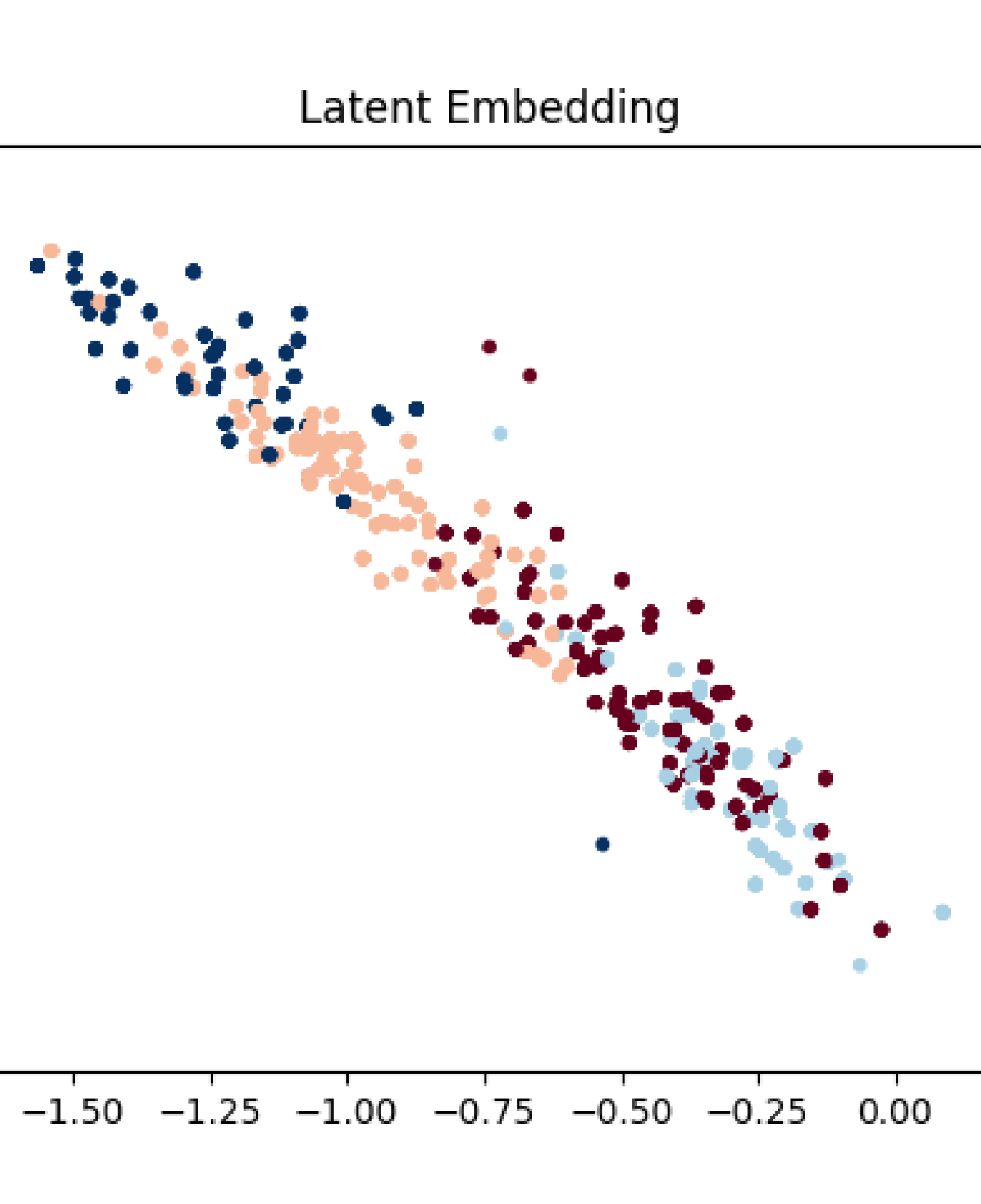

In Fig. 4, we notice that the ability to distinguish driver types is heavily influenced by the level of supervision. In the case of driver ID supervision, our training was successful at achieving good separation of latent variables associated with high-distraction levels from those that were lower (comparing cases 0 (Lisa) and 1 (Marge) with 2 (Bart) and 3 (Homer)). The two high-distraction cases (2 and 3) are also highly separated. In the case of ground-truth preferences, each of the cases yield latent embeddings that are relatively-well clustered; the data indicate that the two distinct preference settings separate into clusters, with more separation happening when the driver tends to be more distracted. We hypothesize that the failure to distinguish between different confidence levels in low-distraction cases is likely due to the chosen scenario.

VIII-C Impact of HMI Interventions

Table IV presents the performance of (1) driver-specific HMI models (rows 1-4) (2) an HMI tuned to an ‘average‘ driver type (Infl. = 6.0, = 0.505, , ), denoted as AvgHMI assisting the four driver types (row 5) and (3) the drivers with no HMI assistance (row 6). The performance is evaluated by computing the average high speed return (Table IV, left) and the average distraction reward per episode (Table IV, right).

We observe that the distraction rewards are the highest when the drivers are assisted by driver-specific HMI models. In particular, we find that the average total distraction reward over an episode is -101.2 for personalized HMI, compared with -283.0 for AvgHMI and -470.8 for NoHMI. Additionally, Bart (less cautious, less receptive and highly distracted) becomes distracted the most across all drivers regardless of the HMI model used. We also see that when the AvgHMI assists Marge and Homer, who are both highly receptive to HMI assistance, the average model is able to do a good job in keeping the distraction rewards fairly high. Since the AvgHMI is trained to assist an ‘average’ driver who tends be more distracted than Marge, but less than Homer, it is effective in increasing Marge’s distraction reward even better than the Marge-specific HMI model. However we do see that this comes at a cost: when AvgHMI assists Marge and Homer, the high speed returns are lower than driver-specific models. The decrease is more prominent for Homer who is much more distractible.

With regards to high speed returns, we observe that in general, driver-specific HMI models are able to help the drivers achieve consistently high speed returns. In particular, we see an average return of 448 over the four individuals, compared with 423 for the AvgHMI, and 427 for NoHMI. Except for Lisa, for all other drivers, there is a decrease in the high speed return when there is no assistance.

IX CONCLUSIONS

We explore a framework to capture and use latent representations of individual drivers to help decide the best strategy to assist them. We furthermore develop a simulator environment that allows for data generation from a diverse set of driver types. Our experiments show that we can uncover the latent structure of different drivers and show that the representation may be useful for interaction. We further show that our intervention strategies produce higher rewards than an HMI system designed for an average-case human.

In our work, we assume explicit structure embedded in both the data generating model and the labels. Future work will include more implicit understanding of preferences from population data that includes interactions with an AI system, where we can explore the emerging structure of human behavior from such datasets, and the interplay of weak supervision and explainability. We also aim to leverage the rewards and policies learned from humans, in addition to their latent representations, to train intervention policies and pave the way toward more personalized interventions.

References

- [1] C. Schaff and M. R. Walter, “Residual policy learning for shared autonomy,” in RSS, 2020.

- [2] K. Backman, D. Kulić, and H. Chung, “Reinforcement learning for shared autonomy drone landings,” 2022.

- [3] M. M. Tanjim, C. Su, E. Benjamin, D. Hu, L. Hong, and J. McAuley, Attentive Sequential Models of Latent Intent for Next Item Recommendation. New York, NY, USA: Association for Computing Machinery, 2020, p. 2528–2534.

- [4] K. Song, W. Gao, S. Feng, D. Wang, K.-F. Wong, and C. Zhang, “Recommendation vs sentiment analysis: A text-driven latent factor model for rating prediction with cold-start awareness,” in IJCAI, 2017, pp. 2744–2750.

- [5] Z. Yu, J. Lian, A. Mahmoody, G. Liu, and X. Xie, “Adaptive user modeling with long and short-term preferences for personalized recommendation,” in Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence. California: International Joint Conferences on Artificial Intelligence Organization, Aug. 2019.

- [6] A. Xie, D. P. Losey, R. Tolsma, C. Finn, and D. Sadigh, “Learning latent representations to influence Multi-Agent interaction,” Nov. 2020.

- [7] R. Yang, J. Chen, and K. Narasimhan, “Improving dialog systems for negotiation with personality modeling,” in Annual Meeting of the Association for Computational Linguistics. Online: Association for Computational Linguistics, Aug. 2021, pp. 681–693.

- [8] R. McIlroy-Young, S. Sen, J. Kleinberg, and A. Anderson, “Aligning superhuman AI with human behavior: Chess as a model system,” in SIGKDD, ser. KDD ’20. New York, NY, USA: Association for Computing Machinery, Aug. 2020, pp. 1677–1687.

- [9] T. Shu, A. Bhandwaldar, C. Gan, K. A. Smith, S. Liu, D. Gutfreund, E. Spelke, J. B. Tenenbaum, and T. D. Ullman, “AGENT: A benchmark for core psychological reasoning,” Feb. 2021.

- [10] P. A. Tsividis, J. Loula, J. Burga, N. Foss, A. Campero, T. Pouncy, S. J. Gershman, and J. B. Tenenbaum, “Human-Level reinforcement learning through Theory-Based modeling, exploration, and planning,” Jul. 2021.

- [11] X. Puig, T. Shu, S. Li, Z. Wang, Y.-H. Liao, J. B. Tenenbaum, S. Fidler, and A. Torralba, “Watch-And-Help: A challenge for social perception and Human-AI collaboration,” Oct. 2020.

- [12] M. Carroll, R. Shah, M. K. Ho, T. L. Griffiths, S. A. Seshia, P. Abbeel, and A. Dragan, On the Utility of Learning about Humans for Human-AI Coordination. Red Hook, NY, USA: Curran Associates Inc., 2019.

- [13] S. Omidshafiei, D.-K. Kim, M. Liu, G. Tesauro, M. Riemer, C. Amato, M. Campbell, and J. P. How, “Learning to teach in cooperative multiagent reinforcement learning,” in Proceedings of the 33rd AAAI Conference on Artificial Intelligence, ser. AAAI’19/IAAI’19/EAAI’19. AAAI Press, 2019.

- [14] D. J. Strouse, K. R. McKee, M. Botvinick, E. Hughes, and R. Everett, “Collaborating with humans without human data,” Oct. 2021.

- [15] D. Qiu, Y. Zhao, and C. Baker, “Latent belief space motion planning under cost, dynamics, and intent uncertainty,” in Robotics: Science and Systems XVI, Jul. 2020.

- [16] S. Lefevre, A. Carvalho, Y. Gao, H. Eric Tseng, and F. Borrelli, “Driver models for personalized driving assistance,” Veh. Syst. Dyn., vol. 53, no. 12, Jul. 2015.

- [17] R. Chandra, A. Bera, and D. Manocha, “StylePredict: Machine theory of mind for human driver behavior from trajectories,” Nov. 2020.

- [18] L.-Y. Gui, Y.-X. Wang, D. Ramanan, and J. M. Moura, “Few-shot human motion prediction via meta-learning,” in ECCV, 2018, pp. 432–450.

- [19] R. Akrour, M. Schoenauer, and M. Sebag, “APRIL: Active preference Learning-Based reinforcement learning,” in Machine Learning and Knowledge Discovery in Databases. Springer Berlin Heidelberg, 2012, pp. 116–131.

- [20] C. Wirth, R. Akrour, G. Neumann, and J. Fürnkranz, “A survey of Preference-Based reinforcement learning methods,” J. Mach. Learn. Res., vol. 18, no. 136, pp. 1–46, 2017.

- [21] D. Sadigh, A. Dragan, S. Sastry, and S. Seshia, “Active preference-based learning of reward functions,” in Robotics: Science and Systems XIII. Robotics: Science and Systems Foundation, Jul. 2017.

- [22] D. Sadigh, S. S. Sastry, S. A. Seshia, and A. Dragan, “Information gathering actions over human internal state,” in IROS, Oct. 2016, pp. 66–73.

- [23] J. Morton and M. J. Kochenderfer, “Simultaneous policy learning and latent state inference for imitating driver behavior,” in ITSC, Oct. 2017, pp. 1–6.

- [24] W. Schwarting, A. Pierson, J. Alonso-Mora, S. Karaman, and D. Rus, “Social behavior for autonomous vehicles,” Proc. Natl. Acad. Sci. U. S. A., vol. 116, no. 50, pp. 24 972–24 978, Dec. 2019.

- [25] N. Deo and M. M. Trivedi, “Multi-Modal trajectory prediction of surrounding vehicles with maneuver based LSTMs,” in IVS, 2018.

- [26] X. Huang, S. G. McGill, J. A. DeCastro, L. Fletcher, and G. Rosman, “DiversityGAN: Diversity-Aware vehicle motion prediction via latent semantic sampling,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 1–1, Jun. 2020.

- [27] J. Fu, K. Luo, and S. Levine, “Learning robust rewards with adverserial inverse reinforcement learning,” Feb. 2018. [Online]. Available: https://openreview.net/forum?id=rkHywl-A-

- [28] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal policy optimization algorithms,” arXiv preprint arXiv:1707.06347, 2017.

- [29] L. Yu, T. Yu, C. Finn, and S. Ermon, “Meta-inverse reinforcement learning with probabilistic context variables,” in Advances in Neural Information Processing Systems, H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, Eds., vol. 32. Curran Associates, Inc., 2019.

- [30] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [31] R. Hadsell, S. Chopra, and Y. LeCun, “Dimensionality reduction by learning an invariant mapping,” in CVPR, USA, 2006, p. 1735–1742.

- [32] E. Leurent, “An environment for autonomous driving decision-making,” https://github.com/eleurent/highway-env, 2018.

- [33] M. Chan and A. Singhal, “The emotional side of cognitive distraction: Implications for road safety,” Accident Analysis & Prevention, vol. 50, pp. 147–154, 2013.

- [34] G. Brockman, V. Cheung, L. Pettersson, J. Schneider, J. Schulman, J. Tang, and W. Zaremba, “Openai gym,” 2016.

- [35] M. Treiber, A. Hennecke, and D. Helbing, “Congested traffic states in empirical observations and microscopic simulations,” Phys. Rev. E Stat. Phys. Plasmas Fluids Relat. Interdiscip. Topics, vol. 62, no. 2 Pt A, pp. 1805–1824, Aug. 2000.