Learning Large Scale Sparse Models

Abstract

In this work, we consider learning sparse models in large scale settings, where the number of samples and the feature dimension can grow as large as millions or billions. Two immediate issues occur under such challenging scenario: (i) computational cost; (ii) memory overhead. In particular, the memory issue precludes a large volume of prior algorithms that are based on batch optimization technique. To remedy the problem, we propose to learn sparse models such as Lasso in an online manner where in each iteration, only one randomly chosen sample is revealed to update a sparse iterate. Thereby, the memory cost is independent of the sample size and gradient evaluation for one sample is efficient. Perhaps amazingly, we find that with the same parameter, sparsity promoted by batch methods is not preserved in online fashion. We analyze such interesting phenomenon and illustrate some effective variants including mini-batch methods and a hard thresholding based stochastic gradient algorithm. Extensive experiments are carried out on a public dataset which supports our findings and algorithms.

Keywords

Sparsity, Lasso, Large Scale Algorithm, Online Optimization

1 Introduction

In the face of big data, learning structured representation continues to be one of the most quintessential tasks in machine learning. In this paper, we are interested in such large scale setting, i.e., we have to manipulate thousands or millions of features and perhaps billions of samples.

To tackle the curse of dimensionality, Lasso was proposed for variable selection [29], where the constraint was utilized since it is a tight convex approximation to the norm.

On the other hand, in order to learn models based on huge scale datasets, one promising alternative is stochastic optimization techniques. Compared to batch algorithms that require to access all the samples during optimization, stochastic methods such as stochastic gradient descent (SGD) [39], stochastic average gradient (SAG) [26] and stochastic variance reduced gradient (SVRG) [18] randomly pick one sample in each iteration for updating the parameters, hence the memory cost is independent of sample size. Interestingly, for the high dimensional case where the number of features is comparable or even larger than the dataset size, some dual coordinate ascent algorithms have been proposed to mitigate the computation, such as stochastic coordinate dual ascent (SCDA) [27] and its accelerated version [28]. Recently, it becomes clear that all the methods mentioned can be formulated in the variance reduction framework [14], which facilitates a unified analysis and new hybrid algorithms [24].

In order to simultaneously address both issues (high dimensionality and huge sample size), it is natural to devise online algorithms for Lasso. Some prominent examples include forward-backward splitting (Fobos) [16], regularized dual averaging (RDA) [35] and proximal algorithms [14, 36]. However, none of them is capable of producing sparse solutions in an online setting, even though they are optimizing the norm. The intuition why Lasso fails in online manner is that, in each iteration, we perform a gradient update followed by soft-thresholding (i.e. the Lasso step). But, note that since we only pick one sample to compute the gradient, the magnitude of the gradient is small, and hence a large would shrink all of them to zero, while a small cannot promote sparsity! Since each sample is chosen randomly, it is essentially hard to find a proper penalty . In contrast, batch methods will not incur such issues since the full dataset is always loaded to compute the gradient. The coordinate descent algorithm was recently shown to achieve linear convergence for strongly convex and composite objectives, and sublinear when only convexity is guaranteed [25]. While promising, this approach is not practical for coping with huge sample size.

1.1 Related Works

Dictionary Learning. There is a large body of works devoted to learning sparse models under different settings. For example, in machine learning and computer vision, dictionary learning (also known as sparse coding) aims to simultaneously optimize an over-complete dictionary and the corresponding codes for a given dataset [33, 20]. There, the sparsity pattern naturally emerges since the dictionary is over-complete. Recently, a theoretical study on the sample complexity and local convergence was carried out which partially explains the success of the empirical algorithm [32, 1, 3].

Compressed Sensing. Parallel to the community of dictionary learning, the core problem considered by compressed sensing is inverting the linear data acquisition process by using many fewer measurements than the signal capacity, i.e., solving a linear system where the unknowns are much larger than the equations [15]. In general, there are infinite number of solutions fitting such system and we have no hope to recover the true signal. Yet, knowing that the signal of interest is sparse immediately changes the premise. In particular, [9] showed that when the sensing matrix satisfies the restricted isometry property (RIP), then one may exactly recover the underlying signal by solving an based program. This is, perhaps, the first known result that the convex relaxed program is equivalent to the NP hard problem. Based on the RIP condition, numerous practical algorithms were developed to establish exact sparse signal recovery, for example, orthogonal matching pursuit (OMP) [30], iterative hard thresholding (IHT) [6], compressive sampling matching pursuit (CoSaMP) [21], subspace pursuit (SP) [13], to name just a few. One may refer to a comprehensive review [31] and the reference therein for more details.

Underlying most of these algorithms is a simple yet effective operator termed hard thresholding, which sets all but the largest absolute values to zero. Hence, it is a good fit to solve the constrained program. [38, 4] extends this technique to the machine learning setting, where the objective function is a general loss. Yet, they are all based on a batch setting, i.e. their algorithms and theoretical results only hold if all samples are revealed in each iteration. It still remains an open question if a constrained formulation can be exactly solved in the online setting. The challenge falls into the fact that online optimization introduces variance to the gradient evaluation which is hard to characterize.

Beyond. Examples of fields that explore sparsity are exhaustive. In computer vision, researchers pursue sparsity for dictionary learning as we aforementioned, and sparse subspace clustering [17], sparse representation [34], uniform or column-wise sparse corruption [7, 37], along with numerous algorithms such as [19]. In the regime of missing entries, interesting works include sparse graph clustering [12], partially observed graphs [11] and matrix completion [8, 10].

1.2 Summary of Contributions

We propose to tackle the important but challenging problem of producing sparse solutions for large scale machine learning. Compared to the batch methods, our formulation reduces the memory cost by one order of magnitude. Compared to existing methods, our empirical study demonstrates that our improved Lasso formulation and hard thresholded SGD algorithm will always result in sparse solutions.

2 Algorithms

In this work, we are mainly interested in the Lasso formulation which has been known to promote sparse solutions. In particular, given a set of training samples , Lasso pursues a sparse solution by optimizing the following program:

| (1) |

where , and is a tunable positive parameter. We also note that the objective is decomposable, i.e.,

| (2) |

where

| (3) |

2.1 Coordinate Descent

Coordinate descent (CD) is one of the most popular methods for solving the Lasso problem. In each iteration, it updates the coordinates of in a sequential (or random) manner by fixing the remaining coordinates. Let be the iterate at the -th iteration. Denote

| (4) |

where we write the th column of as . In this way, solving the th coordinate of amounts to minimizing

| (5) |

where the closed-form solution is given by

| (6) |

Here, is the soft-thresholding operator:

| (7) |

We summarize the coordinate descent algorithm in Algorithm 1.

Positive. The CD algorithm is easy to implement, and usually produces sparse solutions for proper . Its convergence behavior was recently carried out which illustrates that a stochastic version of CD enjoys linear convergence if strong convexity of the objective function is satisfied and a sublinear convergence is attained for general convex functions [25]

Negative. In order to update the coordinate, the algorithm has to load all the samples along that dimension, for which the memory cost is . This is not practical when we are dealing with huge scale datasets.

2.2 Sub-Gradient Descent

Parallel to coordinate descent, gradient descent is another prominent algorithm in mathematical optimization [5, 23]. That is, for a differentiable convex function , one computes its gradient evaluated at and update the solution as

| (8) |

for some pre-defined learning rate . However, the norm is not differentiable at so we have resort to the sub-gradient method. While for first-order differentiable functions the gradient is uniquely determined at a given point, the sub-gradients of a non-differentiable convex function at form a set as follows:

| (9) |

In our problem, the norm is non-differentiable and one may verify that its sub-gradients at is given by

| (10) |

In our implementation, we simply choose the zero vector as the sub-gradient if . Hence, if not specified, we use the sub-gradient

| (11) |

for the norm.

Batch Method. The batch version of sub-gradient descent for (1) is easy to implement. Given an old iterate , we compute the sub-gradient of the objective function as follows:

| (12) |

Then we make gradient update

| (13) |

Notably, we can accelerate the computation of full gradient by storing the matrix and the vector , which is shown in Algorithm 2.

Positive. Again, the sub-gradient method is easy to follow and a sublinear convergence rate, i.e., was established for our non-smooth objective function [22].

Negative. The memory cost is that is not practical for large scale datasets. Also note that our goal is to learning a sparse solution . During the optimization, sub-gradient descent method always computes the sub-gradient and adds it to the iterate scaled by the step size. Hence, there is no shrinking operation to make the solution sparse. Recall that CD invokes soft-thresholding each time. Thereby, chances are that the solution produced by sub-gradient methods are not exactly sparse, i.e., very small values such as may be preserved.

Mini-Batch and Stochastic Variant. Due to the memory issue, we also consider the mini-batch and stochastic variant of sub-gradient descent. Note that the stochastic variant is virtually a special case of mini-batch when the batch size is one. So we instate the mini-batch implementation.

To begin, let be an index set which is drawn randomly at the -th iteration with batch size . Let be the submatrix of consisting of the rows of indexed by . Likewise, we define . With the notation on hand, the mini-batch sub-gradient method can be stated as follows:

| (14) | ||||

| (15) |

Remark. Note that there are two differences between the full sub-gradient method and the mini-batch (or stochastic) one. First, the gradient of the latter one has randomness so the convergence is guaranteed in expectation. Second, due to the randomness, or more precisely, the variance, the step size has to decay to zero with , while we can choose a constant step size for the batch version. The rationale is that, when the sequence converges, tends to zero. Since is not the full gradient, it cannot be zero. Hence, has to converge to . Interestingly, recent works are devoted to designing variance reduction algorithms which allow the learning rate to be a constant, see, e.g., [18]. In our work, we pick .

For the reader’s convenience, we write the algorithm in Algorithm 3.

Positive. Compared to the full sub-gradient version, the memory cost is reduced to where is the batch size that is much smaller than . In particular, if we use the stochastic variant, memory footprint is only . Also, the mini-batch/stochastic variants are able to cope with streaming data since they update the solution dynamically. Finally, the computation in each iteration is cheap.

Negative. One drawback is a worse convergence rate of , which is the price paid for the randomness [22]. Another specific drawback is that the gradient is noisy, i.e., large variance due to randomness, and hence a good that works well for the batch method may not be suitable for the stochastic one since samples are different to each other. Finally, it turns out to be more difficult to expect sparse solutions due to the vanishing step size.

2.3 Forward Backward Splitting (Fobos)

Fobos [16] is an interesting algorithm for solving the Lasso problem and tackles the shortcoming of sub-gradient methods. Recall that sub-gradient methods always compute the sub-gradient of norm and add it. The key idea of Fobos is updating the solution in two phases. First, it computes the gradient for the least-squares loss and performs gradient descent, i.e.,

| (16) |

Note that the least-squares loss is differentiable. Hence, we move forward to a new point . Second, it takes the norm into consideration by minimizing the following problem:

| (17) |

where the closed-form solution is given by

| (18) |

Intuitively, (17) is looking for a new iterate that interpolates between two goals: 1) stay close to the obtained point that minimizes the least-squares (in expectation), and 2) exhibit the sparsity pattern. As [16] suggested, the step size should be inversely proportional to , which is also the choice in Algorithm 4.

Positive. First of all, it is guaranteed that the Fobos algorithm converges with the rate as soon as the step size is . Second, since Fobos performs soft-thresholding, the solutions could be sparse. Finally, each step in Fobos can be computed efficiently and the memory cost is only .

Negative. Although Fobos appears promising, there are two issues regarding the sparsity: 1) Like the stochastic sub-gradient method, each time we only pick a random sample. Hence, it is not easy to find a large parameter (the larger the more sparse) which works for all individual sample. 2) As the learning rate vanishes with , it is evident that for the last iterations, the soft-thresholding step cannot contribute much to a sparse solution.

Variants of Fobos. To partially handle the first shortcoming above, we also implement a mini-batch version of Fobos. Since the implementation is easy, we omit details here. We also attempt a round based variant. That is, for the first iterations, we choose , while for the last iteration we choose . This partially renders us some space to employing a larger .

2.4 Constrained SGD

While it is well known that the Lasso formulation (1) is able to effectively encourage sparse solutions, it is actually impossible to characterize how sparse the Lasso solution is for a given . This makes tuning the parameter hard and time-consuming.

Alternatively, the norm counts the number of non-zero components and thereby, imposing an constraint guarantees sparsity. To this end, we are to solve the following non-convex program:

| (19) |

where is a tunable parameter that controls the sparsity. Note that at this moment, we are only minimizing the least-squares loss, since the constraint already enforces sparsity. Again, the objective function is decomposable:

| (20) |

where

| (21) |

Note that the problem above is non-convex and algorithms such as [6, 21] optimize it in a batch fashion. To be more detailed, these methods basically compute a full gradient followed by a hard thresholding step on the iterate. As mentioned earlier, it is not practical to evaluate a full gradient on a large dataset owing to computational and memory issues.

Henceforth, in this work, we attempt a stochastic gradient descent algorithm to solve (19). The high level idea follows both from the compressed sensing algorithms [6, 21] and the vanilla stochastic gradient method. That is, in each iteration , given the previous iterate , we randomly pick a sample to compute an unbiased stochastic gradient, followed by a usual gradient descent update:

| (22) |

Subsequently, to ensure the iterate satisfies the constraint, we perform hard thresholding which sets all but the largest elements (in magnitude) to zero:

| (23) |

We summarize the algorithm in Algorithm 5.

Positive. The constrained SGD is the only method in this work that ensures a -sparse solution. It is computationally efficient since the gradient descent step costs only and the hard thresholding step costs . It is also memory efficient, i.e., memory suffices.

Negative. Perhaps the only shortcoming is the difficulty in characterizing the convergence rate, especially for realistic data where some standard assumptions like restricted strong convexity and restricted smoothness are not satisfied [2]. However, our empirical study demonstrates that surprisingly, the non-convex formulation always converges fast.

To close this section, we give a comparison among all the methods in Table 1.

| Algorithm | Computation | Memory | Convergence | Sparsity |

|---|---|---|---|---|

| CD | ||||

| SubGD | ||||

| Mini-SubGD | ||||

| Stoc-SubGD | ||||

| Fobos | ||||

| Mini-Fobos | ||||

| Round-Fobos | ||||

| -SGD | unknown | perfect |

3 Experiments

3.1 Datasets

To test our Lasso and implementations, we selected the Gisette dataset . The examples in this dataset are instances of the numeric characters and that must be classified into the appropriate numeric category. All digits were scale normalized and centered in a fixed image window of prior to feature extraction.

This dataset consists of training examples, positive and negative. There are testing examples, again positive and negative. Each example consists of features. Critically though, only of these features are real, the other were added as dummy (random) features, meaning they have no predictive power. Therefore, any good Lasso solution for this dataset should set at least of the weights to . We want to determine which algorithm (if any) will produce the best tradeoff between memory cost, accuracy and sparsity of the solution.

An important point regarding the constrained SGD is that the parameter essentially determines the sparsity of resulting solution since defines the number of weighting coefficients that will be set to . For example, if we have in a dataset with features, the sparsity will be since at most of the features can have non-zero weights.

3.2 Setup

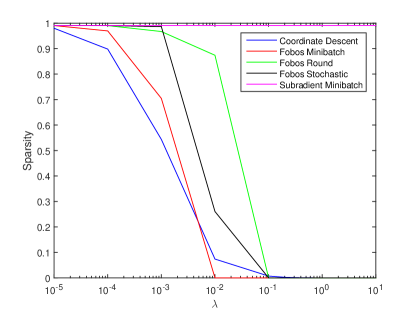

Since Lasso requires tuning of the soft thresholding parameter , we tested our different Lasso implementations along a range of different values (and in the case of the constrained SGD, the parameter ) until performance became asymptotic. values ranged from to , while the parameter in the constrained SGD ranged from to . For our mini-batch implementations, we used a batch size of random samples. We then recorded the accuracy and sparsity of each solution. Accuracy is computed as classification accuracy (the percentage of test examples correctly classified as a or a ). Sparsity is given by the percentage of non-zero weight. For example, a sparsity value of would mean that none of the weights were set to , likewise a sparsity value of would indicate that only of the weights are non-zero and are . Therefore, lower values correspond to more sparse solution. Algorithms that provide solutions preferable in both accuracy and sparsity will be deemed better solutions.

3.3 Results

As can be seen in Table 2, all Lasso implementations were able to achieve reasonably high accuracy given proper parameter tuning. Maximal accuracy values ranged from to across the different Lasso implementations. However, only Coordinate Descent and the Constrained SGD were able to produce truly sparse solutions.

| Algorithm | Accuracy | Sparsity | / |

|---|---|---|---|

| Fobos | |||

| Fobos Round | |||

| Fobos Minibatch | |||

| Coordinate Descent | |||

| Stochastic Subgradient | |||

| Minibatch Subgradient | |||

| L0 Constrained SGD |

If we look at the sparsity of the solutions generated by each Lasso implementation in Figure 1 we notice a clear trend. As we should expect, as increases, so does the sparsity. The one exception is the Subgradient Minibatch and Stochastic Subgradient (not plotted), which always result in dense solutions. Therefore, the Minibatch and Stochastic Subgradient Descent methods are not able to produce sparse solutions.

Although the various Fobos implementations are able to generate sparse solutions, the accuracy of these sparse solutions is extremely low (e.g. ). The only Lasso implementation plotted here that can produce both sparse and accurate solutions is Coordinate Descent, which has the significant drawback of being a batch method and therefore requiring extensive memory.

As previously mentioned, the constrained SGD will always produce a sparse solution since the sparsity is directly related to the parameter . Since the sparsity of the solution for this implementation is always pre-defined, it is not necessary or informative to plot here.

3.3.1 Batch Method

Overall, our batch method (Coordinate Descent) performed quite well. We obtained sparse, accurate solutions for ranging from to . This is not surprising since the batch method computes the gradient for the entire dataset, but has the unfortunate drawback of being computationally and memory inefficient.

3.3.2 Online Method

Of the online methods, only the constrained SGD performed well. Stochastic Subgradient Descent was unable to produce sparse solutions for any setting of , while the Stochastic Fobos and Round Fobos implementations were unable to achieve sparse and accurate solutions. Instead, we obtained Fobos solutions that were either accurate or sparse but not both. constrained SGD was able to produce solutions that were both sparse and accurate for ranging from (corresponding to sparsity of ) to (corresponding to a sparsity of ).

For the Fobos Round algorithm (Figure 2), the best convergence was found for small values of . Additionally, the value of the objective function was highly variable from iteration to iteration, and therefore the error does not decrease monotonically. Similar effects were found for the stochastic variant of Fobos (Figure 3), with similar rates of convergence.

Similar to the online implementations of Fobos, the stochastic Subgradient Descent (Figure 4) had the best convergence rates for small . On the other hand, the rate of convergence for the stochastic SubGD is much slower than for Fobos, so even though the value of the objective function does decrease monotonically, the stochastic Subgradient Descent provides a worse solution than the online implementations of Fobos.

The constrained SGD (Figure 5) shows the best rate of convergence out of all the online algorithms. We found the best convergence rate for larger values of , although there is probably a point of diminishing returns where becomes too large and we get a worse solution. Similar to the implementations of Fobos, the value of the objective function for constrained SGD is highly variable from iteration to iteration due to variacne.

3.3.3 Mini-batch Method

Both mini-batch methods were unable to produce good solutions. Similar to the stochastic version, the mini-batch version of Subgradient Descent was also unable to produce any sparse solutions. Additionally, similar to the other Fobos implementations, the mini-batch version was able to produce solutions that were either sparse or accurate, but not both.

For the two mini-batch methods (Fobos and Subgradient Descent) we see similar effects for the rate of convergence. Once again, small corresponds to faster convergence. Additionally, the value of the objective function decreases monotonically for both mini-batch algorithms. However, as in the online implementations, the mini-batch version of Fobos (Figure 6) converges at a much faster rate than the mini-batch version of Subgradient Descent (Figure 7).

4 Conclusion and Future Works

We investigated the quality of solutions, measured in terms of accuracy and sparsity, that are produced by various batch, mini-batch, and online implementations of Lasso and a non-convex constrained SGD. Unsurprisingly, we found that the batch method implemented here, Coordinate Descent, was able to produce solutions that were both sparse and accurate. Although Coordinate Descent produces good solutions, it is a batch method and therefore has the side effect of requiring a large amount of memory to compute the solution. Additionally, the cost of computing the gradient on each iteration is much larger than for the online algorithms.

Mini-batch and stochastic methods have the important advantage of requiring very little memory to compute solutions. As previous work has shown, the drawback is that the solutions produced are usually only either accurate or sparse, but not both. In the case of Subgradient Descent, the mini-batch and stochastic versions of the algorithm were not able to produce sparse solutions. Additionally, we were not able to obtain solutions that were both sparse and accurate for any of the Fobos implementations. However, the constrained SGD algorithm performed quite well, producing solutions that were both accurate and sparse. Perhaps somewhat surprisingly, we found that the constrained SGD algorithm also had the fastest rate of convergence among the online methods. By using hard thresholding (e.g. setting all but the largest absolute values to ) we were able to develop an online algorithm that guarantees we will obtain sparse solutions.

Although the constrained SGD algorithm is guaranteed to produce solutions that are sparse, it remains to be seen whether it will produce accurate solutions on different data sets. As mentioned previously, of the features in the Gisette data set used here were dummy features, and were therefore uninformative for classifying each example. Because of this, the Gisette data set is very well-suited for using Lasso for variable selection since the structure of the data set naturally promotes sparse solutions. It is necessary to test additional data sets to ensure the generalizability of our results.

One important advantage of the constrained implementation of SGD is the intuitive setting of the parameter as compared with in the other algorithms. This is because corresponds directly to the sparsity of the solution, so setting the parameter is really just defining the sparsity of the solution. This becomes critical in settings where we have prior knowledge about how sparse our solution should be. Rather than testing a multitude of different , one could instead test a much smaller set of , allowing us to obtain a solution more efficiently.

References

- [1] A. Agarwal, A. Anandkumar, P. Jain, and P. Netrapalli. Learning sparsely used overcomplete dictionaries via alternating minimization. arXiv preprint arXiv:1310.7991, 2013.

- [2] A. Agarwal, S. Negahban, M. J. Wainwright, et al. Fast global convergence of gradient methods for high-dimensional statistical recovery. The Annals of Statistics, 40(5):2452–2482, 2012.

- [3] S. Arora, R. Ge, and A. Moitra. New algorithms for learning incoherent and overcomplete dictionaries. arXiv preprint arXiv:1308.6273, 2013.

- [4] S. Bahmani, B. Raj, and P. T. Boufounos. Greedy sparsity-constrained optimization. The Journal of Machine Learning Research, 14(1):807–841, 2013.

- [5] D. P. Bertsekas. Nonlinear programming. Athena scientific, 1999.

- [6] T. Blumensath and M. E. Davies. Iterative hard thresholding for compressed sensing. Applied and Computational Harmonic Analysis, 27(3):265–274, 2009.

- [7] E. J. Candès, X. Li, Y. Ma, and J. Wright. Robust principal component analysis? Journal of the ACM (JACM), 58(3):11, 2011.

- [8] E. J. Candès and B. Recht. Exact matrix completion via convex optimization. Foundations of Computational mathematics, 9(6):717–772, 2009.

- [9] E. J. Candes and T. Tao. Decoding by linear programming. Information Theory, IEEE Transactions on, 51(12):4203–4215, 2005.

- [10] E. J. Candès and T. Tao. The power of convex relaxation: Near-optimal matrix completion. Information Theory, IEEE Transactions on, 56(5):2053–2080, 2010.

- [11] Y. Chen, A. Jalali, S. Sanghavi, and H. Xu. Clustering partially observed graphs via convex optimization. The Journal of Machine Learning Research, 15(1):2213–2238, 2014.

- [12] Y. Chen, S. Sanghavi, and H. Xu. Clustering sparse graphs. In Advances in neural information processing systems, pages 2204–2212, 2012.

- [13] W. Dai and O. Milenkovic. Subspace pursuit for compressive sensing signal reconstruction. Information Theory, IEEE Transactions on, 55(5):2230–2249, 2009.

- [14] A. Defazio, F. Bach, and S. Lacoste-Julien. Saga: A fast incremental gradient method with support for non-strongly convex composite objectives. In Advances in Neural Information Processing Systems, pages 1646–1654, 2014.

- [15] D. L. Donoho. Compressed sensing. Information Theory, IEEE Transactions on, 52(4):1289–1306, 2006.

- [16] J. C. Duchi and Y. Singer. Efficient online and batch learning using forward backward splitting. Journal of Machine Learning Research, 10:2899–2934, 2009.

- [17] E. Elhamifar and R. Vidal. Sparse subspace clustering. In Computer Vision and Pattern Recognition, 2009. CVPR 2009. IEEE Conference on, pages 2790–2797. IEEE, 2009.

- [18] R. Johnson and T. Zhang. Accelerating stochastic gradient descent using predictive variance reduction. In Advances in Neural Information Processing Systems, pages 315–323, 2013.

- [19] H. Lee, A. Battle, R. Raina, and A. Y. Ng. Efficient sparse coding algorithms. In Advances in neural information processing systems, pages 801–808, 2006.

- [20] J. Mairal, F. Bach, J. Ponce, and G. Sapiro. Online learning for matrix factorization and sparse coding. The Journal of Machine Learning Research, 11:19–60, 2010.

- [21] D. Needell and J. A. Tropp. Cosamp: Iterative signal recovery from incomplete and inaccurate samples. Applied and Computational Harmonic Analysis, 26(3):301–321, 2009.

- [22] Y. Nesterov. Introductory lectures on convex optimization, volume 87. Springer Science & Business Media, 2004.

- [23] Y. Nesterov. Introductory lectures on convex optimization: A basic course, volume 87. Springer Science & Business Media, 2013.

- [24] S. J. Reddi, A. Hefny, S. Sra, B. Póczos, and A. J. Smola. On variance reduction in stochastic gradient descent and its asynchronous variants. In Advances in Neural Information Processing Systems, pages 2629–2637, 2015.

- [25] P. Richtárik and M. Takáč. Iteration complexity of randomized block-coordinate descent methods for minimizing a composite function. Mathematical Programming, 144(1-2):1–38, 2014.

- [26] N. L. Roux, M. Schmidt, and F. R. Bach. A stochastic gradient method with an exponential convergence rate for finite training sets. In Advances in Neural Information Processing Systems, pages 2663–2671, 2012.

- [27] S. Shalev-Shwartz and T. Zhang. Stochastic dual coordinate ascent methods for regularized loss. The Journal of Machine Learning Research, 14(1):567–599, 2013.

- [28] S. Shalev-Shwartz and T. Zhang. Accelerated proximal stochastic dual coordinate ascent for regularized loss minimization. Mathematical Programming, pages 1–41, 2014.

- [29] R. Tibshirani. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological), pages 267–288, 1996.

- [30] J. A. Tropp and A. C. Gilbert. Signal recovery from random measurements via orthogonal matching pursuit. Information Theory, IEEE Transactions on, 53(12):4655–4666, 2007.

- [31] J. A. Tropp and S. J. Wright. Computational methods for sparse solution of linear inverse problems. Proceedings of the IEEE, 98(6):948–958, 2010.

- [32] D. Vainsencher, S. Mannor, and A. M. Bruckstein. The sample complexity of dictionary learning. The Journal of Machine Learning Research, 12:3259–3281, 2011.

- [33] J. Wright, Y. Ma, J. Mairal, G. Sapiro, T. S. Huang, and S. Yan. Sparse representation for computer vision and pattern recognition. Proceedings of the IEEE, 98(6):1031–1044, 2010.

- [34] J. Wright, A. Y. Yang, A. Ganesh, S. S. Sastry, and Y. Ma. Robust face recognition via sparse representation. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 31(2):210–227, 2009.

- [35] L. Xiao. Dual averaging method for regularized stochastic learning and online optimization. In Advances in Neural Information Processing Systems, pages 2116–2124, 2009.

- [36] L. Xiao and T. Zhang. A proximal stochastic gradient method with progressive variance reduction. SIAM Journal on Optimization, 24(4):2057–2075, 2014.

- [37] H. Xu, C. Caramanis, and S. Sanghavi. Robust pca via outlier pursuit. In Advances in Neural Information Processing Systems, pages 2496–2504, 2010.

- [38] X.-T. Yuan, P. Li, and T. Zhang. Gradient hard thresholding pursuit for sparsity-constrained optimization. arXiv preprint arXiv:1311.5750, 2013.

- [39] T. Zhang. Solving large scale linear prediction problems using stochastic gradient descent algorithms. In Proceedings of the 21st International Conference on Machine Learning. ACM, 2004.