AAMAS ’22: Proc. of the 21st International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2022), May 9–13, 2022, Auckland, New Zealand. P. Faliszewski, V. Mascardi, C. Pelachaud, M.E. Taylor (eds.). © 2022.
Affiliation: University of California, Berkeley, Berkeley, California, United States

Learning Generalizable Multi-Lane Mixed-Autonomy Behaviors in Single Lane Representations of Traffic

Abstract.

Reinforcement learning techniques can provide substantial insights into the desired behaviors of future autonomous driving systems. By optimizing for societal metrics of traffic such as increased throughput and reduced energy consumption, such methods can derive maneuvers that, if adopted by even a small portion of vehicles, may significantly improve the state of traffic for all vehicles involved. These methods, however, are hindered in practice by the difficulty of designing efficient and accurate models of traffic, as well as the challenges associated with optimizing for the behaviors of dozens of interacting agents. In response to these challenges, this paper tackles the problem of learning generalizable traffic control strategies in simple representations of vehicle driving dynamics. In particular, we look to mixed-autonomy ring roads as depictions of instabilities that result in the formation of congestion. Within this problem, we design a curriculum learning paradigm that exploits the natural extendability of the network to effectively learn behaviors that reduce congestion over long horizons. Next, we study the implications of modeling lane changing on the transferability of policies. Our findings suggest that introducing lane change behaviors that even approximately match trends in more complex systems can significantly improve the generalizability of subsequent learned models to more accurate multi-lane models of traffic.

Key words and phrases:

Reinforcement Learning, Social Simulation, Traffic Control

1. Introduction

Reinforcement learning (RL) methods will likely play a significant role in the design of future autonomous driving strategies. As is the case in several cooperative/competitive settings Mordatch and Abbeel (2018); Leibo et al. (2017); Bansal et al. (2017); Silver et al. (2016), these methods may provide significant insights into the nature of desirable interactions by automated vehicles (AVs). In particular, if properly applied, these methods may enhance our understanding of the quality of policies needed for socially optimal and energy-efficient driving to emerge. As such, efficient learning methods are needed to ensure that such systems succeed.

Due to the complexity of deploying RL methods in real-world, safety-critical environments, studies have focused primarily on analyzing such methods in simulation. In Wu et al. (2021), for instance, the authors demonstrate that reinforcement learning policies can match and even exceed the performance of controllers already shown to provide benefits in real-world settings Stern et al. (2018). This has been done for more complex problems as well, with other studies showing performance benefits of decentralized autonomous vehicles trained to operate in lane-restricted bottlenecks Vinitsky et al. (2020) and intersections Wu et al. (2019), among others Wu et al. (2017a); Vinitsky et al. (2018); Cui et al. (2021). However, these methods are often hindered by the process of modeling and simulating large-scale transportation networks and by the difficulty of jointly optimizing the behavior of multiple vehicles within these settings. Thus, robust methods for learning in simpler traffic control problems are needed to address these challenges.

In this paper, we demonstrate that, if the training task is properly defined, policies learned in simplified and computationally efficient ring road settings exhibit behaviors that generalize to more complex problems. To enable proper generalization, we identify two limiting factors that exacerbate the dynamical mismatch between the two problems and describe methods for addressing each of them while maintaining the relative simplicity and efficiency of the original task. First, to address mismatches that arise from differences in boundary conditions, we construct a curriculum learning paradigm that scales the performance of learned policies to larger rings, where boundaries pose less of a concern. Next, to introduce the perturbations that arise from lane changes and cut-ins, we present a simple approach for simulating such disturbances in single-lane settings and study how the aggressiveness and accuracy of these disturbances affect the resultant policy.

We validate the performance of our approach on a calibrated model of the I-210 network in Los Angeles, California (Figure 1). Our findings suggest that learning in larger rings via curricula and introducing simple, random lane change events greatly improve the generalizability of the resultant congestion-smoothing behaviors. An exploration of the rate at which lane changes occur also provides insights into the types of lane changes needed to generalize to other similar settings.

The primary contributions of this paper are:

- We design a curriculum learning paradigm consisting of rings of increasing length and number of AVs to efficiently learn multiagent vehicle interactions in large-scale settings.

- We explore methods for modeling lane changes in single-lane problems that improve the generalizability of learned policies to more realistic multi-lane settings.

- We demonstrate the relevance of the above methods to learning effective congestion-mitigation policies in large-scale, flow-restricted networks.

The remainder of this paper is organized as follows. Section 2 introduces relevant concepts and terminology and their relation to the present paper. Section 3 discusses the problem statement and the techniques utilized to improve the generalizability of policies learned in ring roads. Section 4 presents numerical results of the proposed methods and provides insights into the degree of generalizability achieved. Finally, Section 5 provides concluding remarks and discusses potential avenues for future work.

2. Related Work

2.1. Addressing Congestion in Ring Roads

Ring roads model the behavior of vehicles in a circular track of length $L$ with periodic boundary conditions. Within these models, the evolution of the state (or position $x_i$) of each vehicle $i$ is represented via the system of ordinary differential equations:

$$\ddot{x}_i(t) = f\big(h_i(t), \dot{x}_i(t), \dot{x}_{i-1}(t)\big), \qquad x_i(0) = x_i^0, \quad \dot{x}_i(0) = v_i^0, \tag{1}$$

where $f(\cdot)$ is a car-following model that mimics the acceleration of a human driver as a function of their ego speed $\dot{x}_i(t)$, the speed of their leader $\dot{x}_{i-1}(t)$, and the bumper-to-bumper headway between the two $h_i(t)$, and $x_i^0$ and $v_i^0$ are the initial position and speed of the vehicle, respectively.
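A minimal sketch of how Eq. (1) can be integrated numerically is given below, assuming forward-Euler integration, a fixed vehicle length of 5 m, and a placeholder car-following function. The modulo in the hypothetical `ring_headways` helper is what implements the periodic boundary: the first vehicle's leader is the last vehicle, measured across the wrap-around point. None of these names come from the authors' code.

```python
import numpy as np


def ring_headways(x, L, veh_length=5.0):
    """Bumper-to-bumper headways on a ring of circumference L.

    Vehicle i-1 leads vehicle i, and vehicle 0 follows the last vehicle,
    which is how the periodic boundary closes the loop.
    """
    leader_x = np.roll(x, 1)  # leader_x[i] == x[i - 1]; leader_x[0] == x[-1]
    return (leader_x - x) % L - veh_length


def euler_step(x, v, f, L, dt=0.1, veh_length=5.0):
    """Advance every vehicle by one forward-Euler step of Eq. (1)."""
    h = ring_headways(x, L, veh_length)
    v_lead = np.roll(v, 1)                   # speed of each vehicle's leader
    acc = f(h, v, v_lead)                    # car-following model f(h, v, v_lead)
    x_next = (x + v * dt) % L                # positions wrap around the ring
    v_next = np.maximum(v + acc * dt, 0.0)   # vehicles never reverse
    return x_next, v_next


def relax_to_leader(h, v, v_lead, k=0.5):
    """Placeholder car-following model (not a calibrated human-driver model)."""
    return k * (v_lead - v)


# Ten equally spaced vehicles on a 260 m ring, listed front to back.
L, n = 260.0, 10
x0 = (L / n) * np.arange(n)[::-1]
v0 = np.full(n, 5.0)
x1, v1 = euler_step(x0, v0, relax_to_leader, L)
print(ring_headways(x0, L).sum())  # 210.0, i.e. L minus n vehicle lengths
```

Because the gaps around a closed loop always add up to the circumference, the headways sum to $L$ minus the total vehicle length regardless of how the vehicles are spaced.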

Single-lane ring roads have served as a proxy for studying traffic instabilities for several decades. Within these problems, instabilities appear as a result of behaviors inherent to human driving. In particular, due to string instabilities Swaroop and Hedrick (1996) in human driving behaviors, small fluctuations in driving speeds produce higher-amplitude oscillations by following vehicles as they brake harder to avoid unsafe situations. This response perturbs the system from its desirable uniform flow equilibrium, in which all vehicles are equidistant and drive at a constant speed, and results in the formation of the stop-and-go waves common to highway networks, thereby reducing the throughput and efficiency of the network. This effect has been empirically validated by the seminal work of Sugiyama et al. (2008), and in simulation may be reconstructed through the use of popular car-following models (e.g., Treiber et al. (2000); Bando et al. (1995)).

The presence of string instabilities in human drivers highlights a potential benefit for upcoming autonomous driving systems. In particular, by responding to string instabilities in human driving, automated vehicles (AVs) at even low penetration rates can reestablish uniform flow driving, improving throughput and energy efficiency in the process. Several studies have attempted to design appropriate policies Sun et al. (2018); Cui et al. (2017); Wang and Horn (2019). Most relevant to the present paper, studies have also examined the characteristics of emergent behaviors learned via deep reinforcement learning in mixed-autonomy ring roads with socially optimal objectives Wu et al. (2017a). These behaviors, however, lend little insight into the nature of the behaviors needed to achieve socially optimal driving in real, large-scale networks. We highlight the limiting factors in Section 3.2 and provide methods for addressing them in Sections 3.3 and 3.4. We then discuss the implications of these factors on learned behaviors in Section 4.2.

2.2. Reinforcement Learning and MDPs

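RL problems are studied as a Markov decision process (MDP) Bellman (1957), defined by the tuple $(\mathcal{S}, \mathcal{A}, P, r, \rho_0, \gamma, T)$, where $\mathcal{S} \subseteq \mathbb{R}^n$ is an $n$-dimensional state space, $\mathcal{A} \subseteq \mathbb{R}^m$ an $m$-dimensional action space, $P: \mathcal{S} \times \mathcal{A} \times \mathcal{S} \to \mathbb{R}_+$ a transition probability function, $r: \mathcal{S} \times \mathcal{A} \to \mathbb{R}$ a bounded reward function, $\rho_0: \mathcal{S} \to \mathbb{R}_+$ an initial state distribution, $\gamma \in (0, 1]$ a discount factor, and $T$ a time horizon. Multiagent problems in particular are further expressed as Markov games Littman (1994), consisting of a collection of state and action sets for each agent in the environment.

In a Markov game, an agent is in a state $s_t \in \mathcal{S}$ in the environment and interacts with this environment by performing actions $a_t \in \mathcal{A}$. The agent's actions are defined by a policy $\pi_\theta(a_t \mid s_t)$ parametrized by $\theta$. The objective of the agent is to learn an optimal policy $\theta^* = \arg\max_\theta \eta(\pi_\theta)$, where $\eta(\pi_\theta) = \mathbb{E}\left[\sum_{t=0}^{T} \gamma^t r(s_t, a_t)\right]$ is the expected discounted return. In the present article, these parameters are updated using direct policy gradient methods Williams (1992), and in particular the TRPO algorithm Schulman et al. (2015).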
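As an illustration of the objective above, the expected discounted return can be estimated by averaging the discounted sum of rewards over sampled rollouts. This is a minimal sketch with hypothetical function names; TRPO itself maximizes a surrogate objective under a trust-region (KL-divergence) constraint rather than using this estimate directly.

```python
def discounted_return(rewards, gamma):
    """Return sum_t gamma**t * rewards[t] for a single rollout."""
    total = 0.0
    for r in reversed(rewards):  # accumulate backwards from the final step
        total = r + gamma * total
    return total


def estimate_expected_return(rollouts, gamma):
    """Monte Carlo estimate of the expected discounted return over rollouts."""
    returns = [discounted_return(r, gamma) for r in rollouts]
    return sum(returns) / len(returns)


print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1.75
```

Accumulating backwards avoids computing powers of the discount factor explicitly: each step contributes its reward plus the discounted value of everything after it.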

2.3. Transfer Learning

Transfer learning techniques in reinforcement learning provide methods of leveraging experiences acquired from training in one task to improve training on another Taylor and Stone (2009). These tasks may differ from the perspective of the agent (e.g., the observation the agent perceives or the actions it may perform) or other components of the MDP structure (e.g., the transition probability). Standard transfer learning practices include sharing policy parameters and state-action pairs between tasks. For a survey of transfer learning techniques, we refer the reader to Taylor and Stone (2009); Pan and Yang (2009).

The notion of transferring knowledge from policies learned on ring roads to more complex tasks has been explored in the past. In particular, Kreidieh et al. (2018) explore the transferability of policies learned on a closed ring road network to open highway networks with instabilities arising from an on-ramp merge. They find that policies trained on ring road networks with network densities similar to that of the target network perform well in single-lane merges without prior exposure to the target network. This work, however, achieves limited success in generating meaningful control strategies for multi-lane highway settings, where additional approximations for the dynamics of lane changing are needed. We introduce methods for addressing this challenge in the following section.

3. Experimental Setup

In this section, we introduce the mixed-autonomy control problem explored in this paper and the features within this problem that limit its generalizability to complex tasks. We then introduce methods to improve the scalability and transferability of learned policies.

3.1. Problem Definition

We begin by establishing the training environment under which policies learn to regulate congestion in simplified ring road representations of traffic. This is an extension of the specifications provided in Wu et al. (2021), with modifications aimed at improving the performance of learning methods in multiagent settings.

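Network/actions: We define the mixed-autonomy ring road problem as an extension of the model depicted in Eq. (1), in which the actions of decentralized agents dictate the desired accelerations of AVs. Let $\mathcal{AV}$ denote the set of AVs whose actions are dictated by a learning agent, or policy, $\pi_\theta$. The updated system of ordinary differential equations dictating the dynamics of the network is then:

$$\ddot{x}_i(t) = \begin{cases} \sim \pi_\theta\big(\cdot \mid s_i(t)\big) & \text{if } i \in \mathcal{AV} \\ f\big(h_i(t), \dot{x}_i(t), \dot{x}_{i-1}(t)\big) & \text{otherwise} \end{cases} \tag{2}$$

where $s_i(t)$ is the state of AV $i$ at time $t$, defined later in this section. For the car-following model $f(\cdot)$, we consider the Intelligent Driver Model (IDM) Treiber et al. (2000), a popular model for accurately simulating string instabilities in human driving. Through this model, the acceleration of a vehicle is defined by its headway $h$, velocity $v$, and relative velocity $\Delta v = v - v_l$ with respect to the preceding vehicle (whose speed is $v_l$) as:

$$a_{\text{IDM}} = \frac{dv}{dt} = a\left[1 - \left(\frac{v}{v_0}\right)^{\delta} - \left(\frac{s^*(v, \Delta v)}{h}\right)^2\right] + \epsilon \tag{3}$$

where $\epsilon$ is an exogenous noise term designed to mimic stochasticity in driving, and $s^*$ is the desired headway of the vehicle, given by:

$$s^*(v, \Delta v) = s_0 + \max\left(0,\; vT + \frac{v \, \Delta v}{2\sqrt{ab}}\right) \tag{4}$$

and $s_0$, $v_0$, $T$, $\delta$, $a$, and $b$ are given parameters provided in Table 1.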
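Eqs. (3) and (4) can be sketched as a vectorized function, shown below. The default values of `v0`, `T`, `a`, `b`, `delta`, and `s0` are illustrative placeholders, not the values of Table 1, and drawing the noise term from a zero-mean Gaussian is an assumption about its form.

```python
import numpy as np


def idm_accel(h, v, v_lead, v0=30.0, T=1.0, a=1.0, b=1.5, delta=4.0,
              s0=2.0, noise_std=0.0, rng=None):
    """Intelligent Driver Model acceleration, Eqs. (3)-(4).

    h: bumper-to-bumper headway (m, assumed > 0), v: ego speed (m/s),
    v_lead: leader speed (m/s). Parameter defaults are placeholders.
    """
    h = np.asarray(h, dtype=float)
    v = np.asarray(v, dtype=float)
    dv = v - np.asarray(v_lead, dtype=float)  # positive when closing in on the leader
    # Desired headway s*(v, dv) from Eq. (4).
    s_star = s0 + np.maximum(0.0, v * T + v * dv / (2.0 * np.sqrt(a * b)))
    acc = a * (1.0 - (v / v0) ** delta - (s_star / h) ** 2)
    if noise_std > 0.0:
        if rng is None:
            rng = np.random.default_rng()
        acc = acc + rng.normal(0.0, noise_std, size=acc.shape)  # exogenous noise
    return acc


# Free road from standstill: the acceleration is close to the maximum value a.
print(float(idm_accel(1e6, 0.0, 0.0)))
```

The interaction term $(s^*/h)^2$ is what makes IDM drivers brake sharply when closing in on a slower leader, the mechanism behind the string instabilities discussed in Section 2.1.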

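Observations: The observation space of the learning agent consists of locally observable network features. This includes the speed $\dot{x}_{i-1}$ of the vehicle immediately preceding the automated vehicle, the bumper-to-bumper headway $h_i$ to that vehicle, and the AV's ego speed $\dot{x}_i$, extended over a given number of frames $n_f$ with sampling rate $n_s$. The final state at time $t$ is:

$$s_i(t) = \Big[\, o_i(t),\; o_i(t - n_s \Delta t),\; \dots,\; o_i\big(t - (n_f - 1)\, n_s \Delta t\big) \,\Big] \tag{5}$$

where $o_i(t) = \big[\dot{x}_i(t), \dot{x}_{i-1}(t), h_i(t)\big]$ and $\Delta t$ is the simulation time step.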
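One way to assemble the stacked observation of Eq. (5) from per-step measurements is a fixed-length history buffer, sketched below. The class and parameter names (`FrameStacker`, `n_frames`, `sample_every`) are hypothetical, and padding with the oldest available sample at the start of a rollout is an assumption, not something the text specifies.

```python
from collections import deque

import numpy as np


class FrameStacker:
    """Builds an AV observation from its n_frames most recent samples,
    spaced sample_every simulation steps apart (newest frame first)."""

    def __init__(self, n_frames, sample_every):
        self.n_frames = n_frames
        self.sample_every = sample_every
        # Keep just enough history to reach the oldest requested frame.
        self.history = deque(maxlen=(n_frames - 1) * sample_every + 1)

    def push(self, v_ego, v_lead, headway):
        """Record the local features (ego speed, leader speed, headway) for one step."""
        self.history.append((v_ego, v_lead, headway))

    def observation(self):
        if not self.history:
            raise ValueError("push at least one sample before observing")
        frames = []
        for k in range(self.n_frames):
            idx = len(self.history) - 1 - k * self.sample_every
            # Early in a rollout, repeat the oldest sample (assumed padding rule).
            frames.append(self.history[max(idx, 0)])
        return np.asarray(frames, dtype=float).ravel()


stacker = FrameStacker(n_frames=3, sample_every=2)
for t in range(10):
    stacker.push(v_ego=t, v_lead=t + 100, headway=t + 200)
print(stacker.observation())  # frames from steps 9, 7 and 5
```

Bounding the deque with `maxlen` discards samples that can no longer appear in any observation, so memory stays constant over long rollouts.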

Rewards: We choose a reward function that aims to direct the flow of traffic towards its uniform flow speed while regularizing the actions performed by the AVs. Moreover, due to the difficulty of credit assignment when multiple agents interact with one another, we choose to represent this behavior as a function of features local to the individual AVs. The reward of agent $i$ at time $t$ is:

| (6) |

where $v_{\text{eq}}$ is the uniform flow speed at a given network density (see Wu et al. (2017a) for more details), and the constants are set to and .

Table 1. Intelligent Driver Model (IDM) parameters and their values.

3.2. Limitations to Generalizability

The process of learning mixed-autonomy traffic regulation policies within simulated ring roads presents several notable benefits in the context of RL. For one, the simplicity of the proposed dynamics renders the problem easy to reconstruct and computationally efficient to simulate over discrete time segments. This is particularly beneficial within the field of RL, which relies largely on reproducibility and benchmarking to progress, and within which existing methods often require millions of interactions to generate meaningful behaviors. In addition, notions of stability and social optimality render the definition of the reward, or objective function, relatively straightforward. This is in contrast to RL studies in more complex mixed-autonomy settings, which rely primarily on heuristic insights for engineering reward functions that handle, for example, the presence of on- and off-ramps, insights that may not transfer to other domains. The question nevertheless remains: are policies learned within these settings meaningful and generalizable to complex, multifeatural networks?

To answer this question, we identify two features limiting ring road policies’ applicability to multi-lane, open highway networks. We address these limitations in the following subsections.

3.2.1. Boundary conditions

The first of these challenges concerns the effect of the periodic boundary conditions unique to closed (circular) networks. In smaller ring networks, similar to those previously studied, this boundary strongly couples the actions performed by individual AVs with the long-term responses of all other vehicles in the network, including those in front of the AV. In real-world networks, however, the effect vehicles have on traffic is unidirectional, propagating against the flow of traffic. The presence of this boundary therefore admits behaviors that are optimal only within the ring, limiting the transferability of any learned behavior.

3.2.2. Perturbations by adjacent lanes

In addition to the effects of boundary conditions, perturbations to the observed state induced by lane changes are also absent in the single-lane ring. These perturbations, which include sudden reductions in headway resulting from overtaking actions and temporal fluctuations in vehicle densities, can destabilize the learned policy if not adequately captured by the source task. As such, solutions need to alleviate the shift between the two domains.

3.3. Learning Scalable Behaviors via Curricula

We begin by addressing the challenges of learning transferable open-network behaviors in environments with closed-loop / periodic boundary conditions. As mentioned in the previous subsection, the presence of this boundary condition produces strong couplings between actions by an AV and the behaviors of vehicles directly in front of it. This coupling runs directly counter to the natural convective stability of open networks and likely results in the formation of undesirable emergent behaviors.

Larger-diameter ring roads with additional automated vehicles, as noted in Orosz et al. (2009), can help dilute these boundary effects. Learning in these more complex settings, however, poses a significant challenge to existing multiagent RL algorithms. In particular, the increasingly delayed effects that individual actions have on the global efficiency of these networks, coupled with added non-stationarity and credit assignment problems, hinder the progress of gradient-based methods as they traverse the space of possible solutions. As a result, features of the learning procedure as simple as policy initialization heavily dictate the nature of the converged behavior in these larger settings.

To improve the performance of policies in larger ring problems, we design a curriculum learning paradigm that exploits the extendability of the problem and the similarity in solutions between rings of similar magnitudes. This approach is depicted in Figure 2. Within this paradigm, policies to be trained in a mixed-autonomy ring of length $L_n$ with $n$ AVs are first pretrained in a ring of length $L_1$ with a single AV for a total of $N_{\text{pre}}$ epochs. This initial problem is relatively simple to solve but does not generalize sufficiently to the $n$-AV problem due to the vast difference in effects from the periodic boundary. However, its solution serves as a beneficial initialization for policies learned in slightly larger rings with more AVs, which share more similar boundary effects. As such, a new pretrained policy is learned on the next, larger ring using the previously learned policy as a warm start, and this process is repeated on rings of increasing length and number of AVs until a suitable policy initialization can be provided to the $n$-AV problem, which is then trained for a total of $N$ epochs.
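The curriculum described above amounts to a sequence of warm-started training runs. The sketch below assumes a generic `train_fn(params, n_avs, ring_length, n_epochs)` standing in for one TRPO training run, and a hypothetical `make_stages` helper that adds one AV and one fixed-length ring segment per stage; the step between stages, the segment length, and the epoch counts are illustrative assumptions, not values from the paper.

```python
def make_stages(n_target, segment_length, pretrain_epochs, final_epochs):
    """Hypothetical schedule: stage k has k AVs on a ring of length k * segment_length."""
    stages = [(k, k * segment_length, pretrain_epochs) for k in range(1, n_target)]
    stages.append((n_target, n_target * segment_length, final_epochs))
    return stages


def train_with_curriculum(stages, train_fn, init_params=None):
    """Train on each stage in order, warm-starting from the previous stage's policy."""
    params = init_params
    for n_avs, ring_length, n_epochs in stages:
        params = train_fn(params, n_avs, ring_length, n_epochs)
    return params


# Usage with a dummy trainer that only records which stage it was called on.
calls = []


def dummy_train(params, n_avs, ring_length, n_epochs):
    calls.append((params, n_avs, ring_length, n_epochs))
    return n_avs  # stand-in for the updated policy parameters


final = train_with_curriculum(make_stages(4, 260.0, 100, 500), dummy_train)
```

Keeping the AV density fixed while the ring grows means each stage changes mainly the boundary effects, which is the quantity the curriculum is meant to dilute gradually.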

3.4. Modeling Disturbances by Lane Changes

In this subsection, we address the second of the challenges presented in Section 3.2, namely the domain shift induced by the presence of lane changes in real-world tasks. To resolve this concern, we model the effects of lane changes from the perspective of individual lanes. In particular, we take inspiration from the work of Wu et al. (2017b) and model lane changes as stochastic insertions and deletions of vehicles within the ring. As seen in Figure 3, insertions, which model lane-change-in events, are characterized by their event probability $p_{\text{in}}$ and by the entry speed $v_{\text{in}}$ and headway $h_{\text{in}}$ with respect to the trailing vehicle, while deletions, which model lane-change-out events, are characterized by their event probability $p_{\text{out}}$.

In modeling the insertion/deletion event probabilities and entry conditions, we wish to design simple representations that sufficiently account for the effects of perturbations to ensure effective generalizability. Random lane change events represent the simplest approach for modeling such effects on automated vehicles. For vehicles whose headway permits a safe lane change, the lane change events are modeled as independent and identically distributed Bernoulli random variables. Through such an approach, the components of the lane change events at a given time $t$ are defined as:

| (7) |

| (8) |

| (9) |

| (10) |

where $\lambda_{\text{in}}$ and $\lambda_{\text{out}}$ are hyperparameters for the expected number of lane-change-in and lane-change-out events at each time step, and $N_{\text{safe}}(t)$ is the number of vehicles that can safely experience an overtaking event at time $t$.
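A minimal sketch of one step of this random lane-change model follows. It assumes (the text does not state this explicitly) that each eligible vehicle's Bernoulli probability is the expected event count divided by the number of eligible vehicles, so that the expected number of events per step equals the requested value (`lam_in`, `lam_out`). The eligibility masks, the uniform placement fraction within the gap, and all names are hypothetical.

```python
import numpy as np


def sample_lane_change_events(can_cut_in, can_leave, lam_in, lam_out, rng):
    """Sample lane-change-in and lane-change-out events for a single time step.

    can_cut_in: bool array, True where the gap ahead of vehicle i admits a safe cut-in.
    can_leave:  bool array, True for vehicles allowed to exit (e.g. human drivers).
    lam_in, lam_out: expected number of insertion / deletion events per step.
    """
    def bernoulli_events(mask, lam):
        n_eligible = int(mask.sum())
        if n_eligible == 0:
            return np.zeros(0, dtype=int)
        p = min(1.0, lam / n_eligible)  # i.i.d. Bernoulli(p) per eligible vehicle
        hits = rng.random(mask.size) < p
        return np.flatnonzero(mask & hits)

    cut_ins = bernoulli_events(can_cut_in, lam_in)
    exits = bernoulli_events(can_leave, lam_out)
    # Where each inserted vehicle lands, as a fraction of the gap ahead of its follower.
    placement = rng.random(cut_ins.size)
    return cut_ins, placement, exits


rng = np.random.default_rng(0)
eligible = np.ones(1000, dtype=bool)
counts = [sample_lane_change_events(eligible, eligible, 5.0, 2.0, rng)[0].size
          for _ in range(2000)]
print(np.mean(counts))  # close to the requested 5 events per step
```

Because the per-vehicle probability is normalized by the number of eligible vehicles, the event rate stays fixed as the ring grows, which keeps the perturbation intensity comparable across curriculum stages.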

The use of random lane change actions, in addition to its design simplicity, frees the modeling procedure from any dependence on data and estimation. As a result, policies learned through such a lane change model are not biased by small datasets or poorly performing estimators and instead must learn to perform well under any potential lane change event, aggressive or otherwise. In the context of transfer learning, this approach is closest to domain randomization Tobin et al. (2017). We explore the effects of the expected lane change counts in the following section.

3.5. Simulation and Training

Simulations of the ring-road problem are performed via a discretized variant of the model depicted in Eq. (2) with a simulation time step of sec/step. We consider four variants of the ring environment, which we characterize by the number of controlled agents . In each of these tasks, we choose a range of ring circumferences that possess optimal (uniform flow) speeds in the range m/s. The corresponding range of acceptable lengths is chosen to be m. At the start of every rollout, a new length is chosen from this range to ensure that any learned solution does not overfit to a specific desired speed. To simulate the effects of lane changes, we follow the process highlighted in Wu et al. (2017b) for stochastically introducing and removing vehicles, while extending this approach to include arbitrary placements of vehicles within a leading gap during cut-ins. The lane-change interval at which vehicles enter and exit is treated as a hyperparameter and is discussed further in later sections. These simulations are reproducible online from: REDACTED FOR REVIEW PURPOSES.
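Choosing ring circumferences by their uniform-flow speed requires the equilibrium speed of Eq. (3) when all vehicles are equally spaced and travel at the same speed, so that the relative velocity is zero (noise is ignored). Setting the IDM acceleration to zero then leaves a scalar equation in $v$ that is strictly decreasing on $[0, v_0]$ and can be solved by bisection. The sketch below assumes a 5 m vehicle length and placeholder IDM parameters; it is not the authors' procedure, and the 22-vehicle scan is purely illustrative.

```python
def uniform_flow_speed(ring_length, n_vehicles, veh_length=5.0,
                       v0=30.0, T=1.0, s0=2.0, delta=4.0, tol=1e-10):
    """Equilibrium speed of identical IDM vehicles evenly spaced on a ring.

    With equal headways h and zero relative velocity, Eq. (3) vanishes when
    1 - (v / v0)**delta - ((s0 + v * T) / h)**2 == 0. The maximum acceleration a
    and comfortable deceleration b drop out at equilibrium. The left-hand side
    is strictly decreasing in v, so bisection on [0, v0] finds the root.
    """
    h = ring_length / n_vehicles - veh_length
    if h <= s0:
        return 0.0  # too dense: no positive equilibrium speed

    def residual(v):
        return 1.0 - (v / v0) ** delta - ((s0 + v * T) / h) ** 2

    lo, hi = 0.0, v0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Scan candidate circumferences (illustrative numbers) for a target speed band.
print([(L, round(uniform_flow_speed(L, 22), 2)) for L in range(220, 301, 20)])
```

Since the equilibrium speed grows monotonically with the circumference, a target band of uniform-flow speeds maps to a contiguous band of ring lengths, from which a new length can be sampled at the start of every rollout.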

For all RL experiments in this paper, we use the Trust Region Policy Optimization (TRPO) policy gradient method Schulman et al. (2015) to learn the control policy, with discount factor , step size , and a diagonal Gaussian MLP policy with hidden layers and ReLU non-linearities. The parameters of this policy are shared among all agents during execution/evaluation and jointly optimized during training.
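For concreteness, a diagonal Gaussian MLP policy with ReLU hidden layers whose parameters are shared across agents can be sketched in NumPy as follows. The hidden sizes `(64, 64)`, the initialization, and the state-independent log standard deviation are assumptions for illustration, and the TRPO update itself is omitted; `log_prob` is the quantity whose ratio between old and new parameters enters the TRPO surrogate.

```python
import numpy as np


def init_policy(obs_dim, act_dim, hidden=(64, 64), seed=0):
    """Randomly initialized MLP weights plus a state-independent log std."""
    rng = np.random.default_rng(seed)
    sizes = [obs_dim, *hidden, act_dim]
    layers = [(rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
              for m, n in zip(sizes[:-1], sizes[1:])]
    return {"layers": layers, "log_std": np.zeros(act_dim)}


def policy_mean(params, obs):
    """Mean action for a batch of observations of shape (n_agents, obs_dim)."""
    x = obs
    last = len(params["layers"]) - 1
    for i, (W, b) in enumerate(params["layers"]):
        x = x @ W + b
        if i < last:
            x = np.maximum(x, 0.0)  # ReLU on hidden layers, linear output
    return x


def sample_actions(params, obs, rng):
    """Sample one acceleration per agent; every agent uses the same parameters."""
    mean = policy_mean(params, obs)
    std = np.exp(params["log_std"])
    return mean + std * rng.standard_normal(mean.shape)


def log_prob(params, obs, actions):
    """Log-density of actions under the diagonal Gaussian, summed over action dims."""
    mean = policy_mean(params, obs)
    log_std = params["log_std"]
    z = (actions - mean) / np.exp(log_std)
    return -0.5 * np.sum(z ** 2 + 2.0 * log_std + np.log(2.0 * np.pi), axis=-1)


# Usage: observations of 4 AVs (9 features each) pass through one shared network.
params = init_policy(obs_dim=9, act_dim=1)
obs = np.random.default_rng(1).normal(size=(4, 9))
actions = sample_actions(params, obs, np.random.default_rng(2))
```

Sharing parameters lets all AVs be evaluated in a single batched forward pass, and the same network applies unchanged to rings with any number of AVs, which is what allows the curriculum stages to warm-start one another.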

4. Numerical Results

In this section, we present numerical results for the training procedures presented in the previous section. Through these results, we aim to answer the following questions:

(1) Does the curriculum learning procedure improve the scalability of decentralized policies learned in larger ring roads?

(2) To what extent does the trained policy succeed in mimicking lane-changing behaviors by human drivers in single-lane settings?

(3) What effect do the use of larger rings and the dynamics of lane changing have on the transferability of policies to realistic multi-lane highway networks?

Reinforcement learning experiments for the different setups are executed over seeds. The training performance is averaged and reported across all these seeds to account for stochasticity between simulations and policy initialization.

4.1. Ring Experiments

We begin by evaluating the performance of the training procedure and the importance of using curricula to solve larger problems. Figure 6 depicts the learning performance on the ring road task with and without the use of stochastic lane changes and curricula. This figure evaluates the policy on its ability to achieve uniform-flow equilibrium over the past rollout. The evaluation metric for an individual rollout consists of the ratio between the driving speeds of individual vehicles and the desired uniform flow speed as defined in Section 3.1. Mathematically, this can be written as:

$$\text{score} = \frac{1}{T N} \sum_{t=1}^{T} \sum_{i=1}^{N} \frac{\dot{x}_i(t)}{v_{\text{eq}}(L)} \tag{11}$$

where $T$ is the time horizon, $N$ is the number of vehicles, $L$ is the ring's circumference, and $v_{\text{eq}}(L)$ is the uniform flow speed of that ring. As we can see, while the early-stage problems perform well without curricula, policies trained on the larger ring problem struggle to achieve similar performance. On the other hand, when trained via a curriculum of gradually growing rings, the learned policies continue to achieve high scores close to the ideal value of $1$, outperforming their non-curricular counterparts by up to %. This improvement is particularly evident once lane changes are introduced to the environment, possibly due to the increased stochasticity and complexity they add to the problem.
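Assuming Eq. (11) averages the speed ratio over all vehicles and time steps, the score can be computed from a rollout's speed matrix as shown below; `v_eq` would come from the uniform-flow speed of the sampled ring length. The function name is hypothetical.

```python
import numpy as np


def uniform_flow_score(speeds, v_eq):
    """Average of v_i(t) / v_eq over a (T, N) array of vehicle speeds.

    A rollout that sits at the uniform-flow equilibrium the whole time
    scores exactly 1; stopped vehicles pull the score down.
    """
    speeds = np.asarray(speeds, dtype=float)
    if speeds.ndim != 2:
        raise ValueError("expected an array of shape (time_steps, n_vehicles)")
    return float(np.mean(speeds / v_eq))


# One vehicle briefly stopped in a wave: three of four samples at v_eq, one at 0.
print(uniform_flow_score([[4.0, 4.0], [0.0, 4.0]], v_eq=4.0))  # 0.75
```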

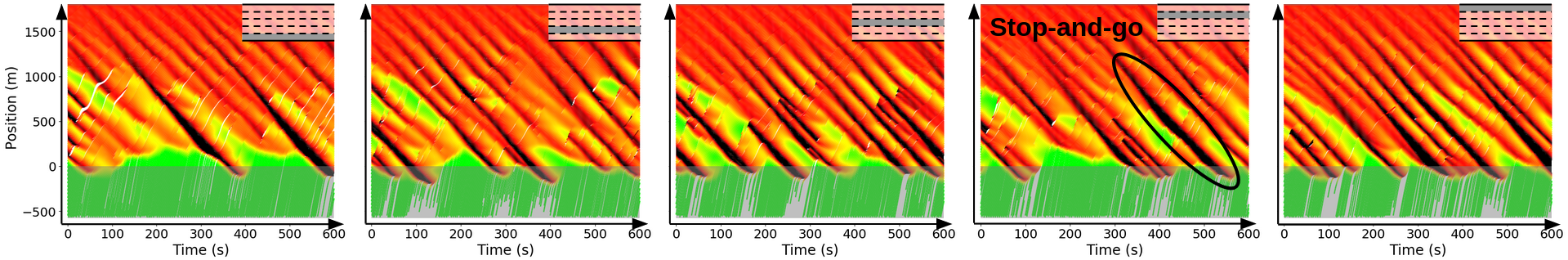

Figure 5 depicts the spatio-temporal performance of the different learned policies in mixed-autonomy ring roads of different lengths. To visualize these behaviors, we use time-space diagrams that show the evolution of vehicles across space and time, with trajectories colored by their current speeds to highlight macroscopic mobility and the presence of stop-and-go congestion. In fully human-driven settings (Fig. 5(a)), instabilities in human driving behaviors result in the formation of stop-and-go waves after a few minutes, depicted by the red diagonal lines in the figures. In larger rings, these waves span more vehicles and propagate more slowly through the network, rendering these problems more difficult to solve. Standard RL methods (Fig. 5(b)) can successfully train wave-dissipating policies in smaller environments but fail in the larger ring settings. On the other hand, introducing curricula to the training procedure (Fig. 5(c)) significantly scales the performance of these algorithms, producing far fewer waves. Finally, when trained in the presence of stochastic lane changes (Fig. 5(d)), slightly more waves are experienced due to the increased complexity of the problem.

4.2. Realistic Network Experiments

Next, we validate the generalizability of the learned policy by evaluating its zero-shot transfer to an intricate and calibrated model of human driving. The target network considered (Figure 1, right) is a simulation of a -mile section of the I-210 highway network in Los Angeles, California. This network has been the subject of considerable research, with various studies aiming to identify and reconstruct the sources of congestion within it Gomes et al. (2004); Dion et al. (2015). Here, we build on the work of Lee et al. (2021), which explores the role of mixed-autonomy traffic systems in improving the energy efficiency of vehicles within the I-210.

Simulations of the I-210 network are implemented in Flow Wu et al. (2021), an open-source framework designed to enable the integration of machine learning tools with microscopic simulations of traffic via SUMO Krajzewicz et al. (2012). These simulations are executed with step sizes of sec/step and are warm-started for sec to allow for the onset of congestion before being run within the evaluation procedure for an additional sec. Once the initial warm-up period is finished, % of vehicles are replaced with AVs whose actions are sampled from the learned policy to mimic a penetration rate of %.

Figure 9 depicts the performance of the different learned policies once transferred onto the I-210 network. In this task, we can no longer compute the performance as before, as no optimal uniform flow speed exists. Instead, we compute the average time spent stopped by individual vehicles as they traverse the network, which serves as a proxy for the average length and frequency of the stop-and-go waves that emerge in the network. Moreover, to choose values for the expectations in Eqs. (7) and (8), we compute the average number of lane changes experienced in the I-210 in the absence of autonomy and use different multiples of this expectation (half, equal, double, and quadruple) as the parameter for different algorithms. As this figure shows, both the choice of network size and the presence and magnitude of lane changes significantly impact the amount of stopped delay incurred by drivers. In terms of network size, the transition from to AVs captures a significant portion of the improvement, with subsequent gains being marginal. This suggests that the vehicles themselves, when interacting with one another, also largely nullify the effects of the boundary at training time. In terms of lane change frequency, a similar effect is seen as frequencies increase, with the policies learning more cautious maneuvers to avoid the increasing perturbations.
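The stopped-time metric can be computed from per-vehicle speed traces as sketched below. The 0.1 m/s threshold for counting a vehicle as stopped is an assumption, and the function name is hypothetical. The last lines show how the half/equal/double/quadruple lane-change settings would scale a baseline expected count; the baseline value here is a placeholder, not the I-210 measurement.

```python
import numpy as np


def mean_stopped_time(speed_traces, dt, stop_threshold=0.1):
    """Average time (s) each vehicle spends at or below stop_threshold (m/s).

    speed_traces: one 1-D speed array per vehicle, sampled every dt seconds.
    Traces may have different lengths (vehicles enter and leave the network).
    """
    per_vehicle = [dt * np.count_nonzero(np.asarray(trace) <= stop_threshold)
                   for trace in speed_traces]
    return float(np.mean(per_vehicle))


print(mean_stopped_time([[0.0, 0.0, 5.0], [5.0, 5.0, 5.0]], dt=0.5))  # 0.5

# Lane-change expectations used for training, as multiples of a measured baseline.
baseline_rate = 1.0  # hypothetical placeholder, not the I-210 measurement
lane_change_settings = {m: m * baseline_rate for m in (0.5, 1.0, 2.0, 4.0)}
```

Averaging per vehicle rather than per time step means that a vehicle caught in several waves counts more heavily than one that passes through the network freely, which matches the metric's role as a proxy for wave length and frequency.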

Figure 8 depicts the spatio-temporal performance of the learned policy in the -AV setting once transferred to the I-210 network. As noted in the previous paragraph, policies learned in the absence of lane changes significantly underperform when transferred to this network. In particular, the policy does not account for the possibility of waves forming downstream and fails to maintain a safe distance from its leader, thereby periodically contributing to the propagation of stop-and-go waves. Conversely, the policies trained in the presence of lane changes generalize nearly perfectly to the new setting, effectively dissipating the vast majority of waves that appear. Similar behaviors occur when the number of lane changes experienced in training is as high as quadruple that of the target task. In this latter setting, however, the automated vehicles begin to leave large gaps in largely uncongested conditions. These overly cautious behaviors unnecessarily degrade the mobility of the network, reducing the average speeds of vehicles and the throughput of the entire system despite, interestingly, reducing the average amount of stopped time. This finding suggests that, while exact estimates of lane-change frequencies may not be needed, significant overestimation should be avoided when designing a robust system that is not overly apprehensive of potential perturbations.

5. Conclusion

This paper explores methods for learning generalizable policies in computationally efficient ring road simulations that effectively transfer to calibrated representations of traffic. First, it introduces a curriculum learning paradigm that uses the extendability of the mixed-autonomy ring problem to learn scalable interactions between arbitrary numbers of vehicles. Next, it presents a simple method for introducing lane-change-style perturbations to the environment at training time. Finally, it demonstrates that combining these two approaches results in a robust learning procedure that produces traffic-smoothing policies that generalize to open multi-lane highway networks.

References


- Bando et al. (1995) Masako Bando, Katsuya Hasebe, Akihiro Nakayama, Akihiro Shibata, and Yuki Sugiyama. 1995. Dynamical model of traffic congestion and numerical simulation. Physical review E 51, 2 (1995), 1035.

- Bansal et al. (2017) Trapit Bansal, Jakub Pachocki, Szymon Sidor, Ilya Sutskever, and Igor Mordatch. 2017. Emergent complexity via multi-agent competition. arXiv preprint arXiv:1710.03748 (2017).

- Bellman (1957) Richard Bellman. 1957. A Markovian decision process. Journal of mathematics and mechanics 6, 5 (1957), 679–684.

- Cui et al. (2021) Jiaxun Cui, William Macke, Harel Yedidsion, Aastha Goyal, Daniel Urieli, and Peter Stone. 2021. Scalable Multiagent Driving Policies for Reducing Traffic Congestion. In Proceedings of the 20th International Conference on Autonomous Agents and MultiAgent Systems. 386–394.

- Cui et al. (2017) Shumo Cui, Benjamin Seibold, Raphael Stern, and Daniel B Work. 2017. Stabilizing traffic flow via a single autonomous vehicle: Possibilities and limitations. In 2017 IEEE Intelligent Vehicles Symposium (IV). IEEE, 1336–1341.

- Dion et al. (2015) F Dion, J Butler, L Hammon, and Y Xuan. 2015. Connected corridors: I-210 pilot integrated corridor management system concept of operations. California PATH, Berkeley, CA (2015).

- Gomes et al. (2004) Gabriel Gomes, Adolf May, and Roberto Horowitz. 2004. Congested freeway microsimulation model using VISSIM. Transportation Research Record 1876, 1 (2004), 71–81.

- Krajzewicz et al. (2012) Daniel Krajzewicz, Jakob Erdmann, Michael Behrisch, and Laura Bieker. 2012. Recent development and applications of SUMO - Simulation of Urban MObility. International Journal on Advances in Systems and Measurements 5, 3&4 (2012).

- Kreidieh et al. (2018) Abdul Rahman Kreidieh, Cathy Wu, and Alexandre M Bayen. 2018. Dissipating stop-and-go waves in closed and open networks via deep reinforcement learning. In 2018 21st International Conference on Intelligent Transportation Systems (ITSC). IEEE, 1475–1480.

- Lee et al. (2021) Jonathan W Lee, George Gunter, Rabie Ramadan, Sulaiman Almatrudi, Paige Arnold, John Aquino, William Barbour, Rahul Bhadani, Joy Carpio, Fang-Chieh Chou, et al. 2021. Integrated Framework of Vehicle Dynamics, Instabilities, Energy Models, and Sparse Flow Smoothing Controllers. arXiv preprint arXiv:2104.11267 (2021).

- Leibo et al. (2017) Joel Z Leibo, Vinicius Zambaldi, Marc Lanctot, Janusz Marecki, and Thore Graepel. 2017. Multi-agent reinforcement learning in sequential social dilemmas. arXiv preprint arXiv:1702.03037 (2017).

- Littman (1994) Michael L Littman. 1994. Markov games as a framework for multi-agent reinforcement learning. In Machine Learning Proceedings 1994. Elsevier, 157–163.

- Mordatch and Abbeel (2018) Igor Mordatch and Pieter Abbeel. 2018. Emergence of grounded compositional language in multi-agent populations. In Thirty-Second AAAI Conference on Artificial Intelligence.

- Orosz et al. (2009) Gábor Orosz, R Eddie Wilson, Róbert Szalai, and Gábor Stépán. 2009. Exciting traffic jams: Nonlinear phenomena behind traffic jam formation on highways. Physical Review E 80, 4 (2009), 046205.

- Pan and Yang (2009) Sinno Jialin Pan and Qiang Yang. 2009. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering 22, 10 (2009), 1345–1359.

- Schulman et al. (2015) John Schulman, Sergey Levine, Pieter Abbeel, Michael Jordan, and Philipp Moritz. 2015. Trust region policy optimization. In International Conference on Machine Learning. PMLR, 1889–1897.

- Silver et al. (2016) David Silver, Aja Huang, Chris J Maddison, Arthur Guez, Laurent Sifre, George Van Den Driessche, Julian Schrittwieser, Ioannis Antonoglou, Veda Panneershelvam, Marc Lanctot, et al. 2016. Mastering the game of Go with deep neural networks and tree search. Nature 529, 7587 (2016), 484–489.

- Stern et al. (2018) Raphael E Stern, Shumo Cui, Maria Laura Delle Monache, Rahul Bhadani, Matt Bunting, Miles Churchill, Nathaniel Hamilton, Hannah Pohlmann, Fangyu Wu, Benedetto Piccoli, et al. 2018. Dissipation of stop-and-go waves via control of autonomous vehicles: Field experiments. Transportation Research Part C: Emerging Technologies 89 (2018), 205–221.

- Sugiyama et al. (2008) Yuki Sugiyama, Minoru Fukui, Macoto Kikuchi, Katsuya Hasebe, Akihiro Nakayama, Katsuhiro Nishinari, Shin-ichi Tadaki, and Satoshi Yukawa. 2008. Traffic jams without bottlenecks—experimental evidence for the physical mechanism of the formation of a jam. New Journal of Physics 10, 3 (2008), 033001.

- Sun et al. (2018) Jie Sun, Zuduo Zheng, and Jian Sun. 2018. Stability analysis methods and their applicability to car-following models in conventional and connected environments. Transportation Research Part B: Methodological 109 (2018), 212–237.

- Swaroop and Hedrick (1996) Darbha Swaroop and J Karl Hedrick. 1996. String stability of interconnected systems. IEEE Transactions on Automatic Control 41, 3 (1996), 349–357.

- Taylor and Stone (2009) Matthew E Taylor and Peter Stone. 2009. Transfer learning for reinforcement learning domains: A survey. Journal of Machine Learning Research 10, 7 (2009).

- Tobin et al. (2017) Josh Tobin, Rachel Fong, Alex Ray, Jonas Schneider, Wojciech Zaremba, and Pieter Abbeel. 2017. Domain randomization for transferring deep neural networks from simulation to the real world. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 23–30.

- Treiber et al. (2000) Martin Treiber, Ansgar Hennecke, and Dirk Helbing. 2000. Congested traffic states in empirical observations and microscopic simulations. Physical Review E 62, 2 (2000), 1805.

- Vinitsky et al. (2018) Eugene Vinitsky, Aboudy Kreidieh, Luc Le Flem, Nishant Kheterpal, Kathy Jang, Cathy Wu, Fangyu Wu, Richard Liaw, Eric Liang, and Alexandre M Bayen. 2018. Benchmarks for reinforcement learning in mixed-autonomy traffic. In Conference on Robot Learning. PMLR, 399–409.

- Vinitsky et al. (2020) Eugene Vinitsky, Nathan Lichtle, Kanaad Parvate, and Alexandre Bayen. 2020. Optimizing Mixed Autonomy Traffic Flow With Decentralized Autonomous Vehicles and Multi-Agent RL. arXiv preprint arXiv:2011.00120 (2020).

- Wang and Horn (2019) Liang Wang and Berthold KP Horn. 2019. On the chain stability of bilateral control model. IEEE Transactions on Automatic Control 65, 8 (2019), 3397–3408.

- Williams (1992) Ronald J Williams. 1992. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Machine Learning 8, 3 (1992), 229–256.

- Wu et al. (2017a) Cathy Wu, Aboudy Kreidieh, Eugene Vinitsky, and Alexandre M Bayen. 2017a. Emergent behaviors in mixed-autonomy traffic. In Conference on Robot Learning. PMLR, 398–407.

- Wu et al. (2021) Cathy Wu, Abdul Rahman Kreidieh, Kanaad Parvate, Eugene Vinitsky, and Alexandre M Bayen. 2021. Flow: A Modular Learning Framework for Mixed Autonomy Traffic. IEEE Transactions on Robotics (2021).

- Wu et al. (2017b) Cathy Wu, Eugene Vinitsky, Aboudy Kreidieh, and Alexandre Bayen. 2017b. Multi-lane reduction: A stochastic single-lane model for lane changing. In 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 1–8.

- Wu et al. (2019) Yuanyuan Wu, Haipeng Chen, and Feng Zhu. 2019. DCL-AIM: Decentralized coordination learning of autonomous intersection management for connected and automated vehicles. Transportation Research Part C: Emerging Technologies 103 (2019), 246–260.