Learning Efficient Navigation in Vortical Flow Fields

Abstract

Efficient point-to-point navigation in the presence of a background flow field is important for robotic applications such as ocean surveying. In such applications, robots may only have knowledge of their immediate surroundings or be faced with time-varying currents, which limits the use of optimal control techniques for planning trajectories. Here, we apply a novel Reinforcement Learning algorithm to discover time-efficient navigation policies to steer a fixed-speed swimmer through an unsteady two-dimensional flow field. The algorithm entails inputting environmental cues into a deep neural network that determines the swimmer’s actions, and deploying Remember and Forget Experience replay. We find that the resulting swimmers successfully exploit the background flow to reach the target, but that this success depends on the type of sensed environmental cue. Surprisingly, a velocity sensing approach outperformed a bio-mimetic vorticity sensing approach by nearly two-fold in success rate. Equipped with local velocity measurements, the reinforcement learning algorithm achieved near 100% success in reaching the target locations while approaching the time-efficiency of paths found by a global optimal control planner.

Introduction.—Navigation in the presence of a background unsteady flow field is an important task in a wide range of robotic applications, including ocean surveying [1], monitoring of deep-sea animal communities [2], drone-based inspection and delivery in windy conditions [3], and weather balloon station keeping [4]. In such applications, robots must contend with unsteady fluid flows such as wind gusts or ocean currents in order to survey specific locations and return useful measurements, often autonomously. Ideally, robots would exploit these background currents to propel themselves to their destinations more quickly or with lower energy expenditure.

If the entire background flow field is known in advance, numerous algorithms exist to accomplish optimal path planning, ranging from the classical Zermelo’s equation from optimal control theory [5, 6] to modern optimization approaches [7, 8, 3, 1, 9, 10]. However, measuring the entire flow field is often be impractical, as ocean and air currents can be difficult to measure and can change unpredictably. Robots themselves can also significantly alter the surrounding flow field, for example when multi-rotors fly near obstacles [11] or during fish-like swimming [12]. Additionally, oceanic and flying robots are increasingly operated autonomously and therefore do not have access to real-time external information about incoming currents and gusts (e.g. [13, 14]).

Instead, robots may need to rely on data from on-board sensors to react to the surrounding flow field and navigate effectively. A bio-inspired approach is to navigate using local flow information, for example by sensing the local flow velocity or pressure. Zebrafish appear to use their lateral line to sense the local flow velocity and avoid obstacles by recognizing changes in the local vorticity due to boundary layers [15]. Some seal species can orient themselves and hunt in total darkness by detecting currents with their whiskers [16]. Additionally, a numerical study of fish schooling demonstrated how surface pressure gradient and shear stress sensors on a downstream fish can determine the locations of upstream fish, thus enabling energy-efficient schooling behavior [17].

Reinforcement Learning (RL) offers a promising approach for replicating this feat of navigation from local flow information. In simulated environments, RL has successfully discovered energy-efficient fish swimming [18, 19] and schooling behavior [12], and a time-efficient navigation policy for repeated quasi-turbulent flow using position information [20]. In application, RL using local wind velocity estimates outperformed existing methods for energy-efficient weather balloon station keeping [4] and for replicating bird soaring [21]. Other methods exist for navigating uncertainty in a partially known flow field such as fuzzy logic or adaptive control methods [7], however RL can be applied generally to an unknown flow field without requiring human tuning for specific scenarios.

The question remains, however, as to which environmental cues are most useful for navigating through flow fields using RL. A biomimetic approach suggest that sensing the vorticity could be beneficial [15]; however flow velocity, pressure, or quantities derived thereof are also viable candidates for sensing.

In this letter, we find that Deep Reinforcement Learning can indeed discover time-efficient, robust paths through an unsteady, two-dimensional (2D) flow field using only local flow information, where simpler strategies such as swimming towards the target largely fail at the task. We find, however, that the success of the RL approach depends on the type of flow information provided. Surprisingly, a RL swimmer equipped with local velocity measurements dramatically outperforms the bio-mimetic local vorticity approach. These results show that combining RL-based navigation with local flow measurements can be a highly effective method for navigating through unsteady flow, provided the appropriate flow quantities are used as inputs to the algorithm.

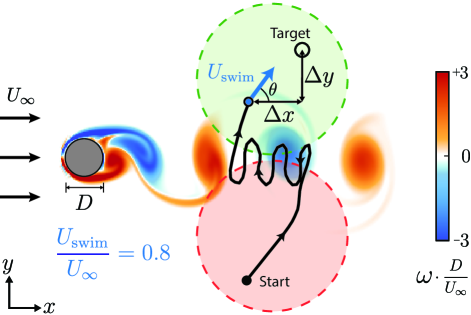

Simulated Navigation Problem.—As a testing environment for RL-based navigation, we pose the problem of navigating across an unsteady von Kármán vortex street obtained by simulating 2D, incompressible flow past a cylinder at a Reynolds number of 400. Other studies have investigated optimal navigation through real ocean flows [1], simulated turbulence [20], and simple flows for which there exist exact optimal navigation solutions [8]. Here, we investigate the flow past a cylinder to retain greater interpretability of learned navigation strategies while remaining a challenging, unsteady navigation problem.

The swimmer is tasked with navigating from a starting point on one side of the cylinder wake to within a small radius of a target point on the opposite side of the wake region. For each episode, or attempt to swim to the target, a pair of start and target positions are chosen randomly within disk regions as shown in Figure 1. Additionally, the swimmer is assigned a random starting time in the vortex shedding cycle. The spatial and temporal randomness prevent the RL algorithm from speciously forming a one-to-one correspondence between the swimmer’s relative position and the background flow, which would not reflect real-world navigation scenarios. All swimmers have access to their position relative to the target (, ) rather than their absolute position to further prevent the swimmer from relying on memorized locations of flow features during training.

For simplicity and training speed, we consider the swimmer to be a massless point with a position which advects with the time-dependent background flow . The swimmer can swim with a constant speed and can directly control its swimming direction . These dynamics are discretized with a time step using a forward Euler scheme:

| (1) | |||

| (2) |

It is also possible to apply RL-based navigation with more complex dynamics, including when the swimmer’s actions alter the background flow [12].

We chose a swimming speed of 80% of the freestream speed to make the navigation problem challenging, as the swimmer cannot overcome the local flow in some regions of the domain. A slower speed () makes navigating this flow largely intractable, while a swimming speed greater than the freestream () would allow the swimmer to overcome the background flow and easily reach the target.

Navigation Using Deep Reinforcement Learning.—In Reinforcement Learning, an agent acts according to a policy, which takes in the agent’s state as an input and outputs an action . Through repeated experiences with the surrounding environment, the policy is trained so that the agent’s behavior maximizes a cumulative reward. Here, the agent is a swimmer, the action is the swimming direction , and we seek to determine how the performance of a learned navigation policy is impacted by the type of flow information contained in the state.

To this end, we first consider a flow-blind swimmer as a baseline, which cannot sense the surrounding flow and only has access to its position relative to the target (). Next, inspired by the vorticity-based navigation strategy of the zebrafish [15], we consider a vorticity swimmer with access to the local vorticity at the current and previous time step in order to sense changes in the local vorticity (). We also consider a velocity swimmer, which has access to both components of the local background velocity (). Other states were also investigated, and are included in supplemental materials.

We employ Deep Reinforcement Learning for this navigation problem, in which the navigation policy is expressed using a deep neural network. Previously, Biferale et al. [20] employed an actor-critic approach for RL-based navigation of repeated quasi-turbulent flow. The policy was expressed using a basis function architecture, requiring a coarse discretization of both the swimmer’s position and swimming direction. Here, a single 128128 deep neural network is used for the navigation policy, which accepts the swimmers state (i.e. flow information and relative position) and outputs the swimming direction as continuous variables. The network also outputs a Gaussian variance in the swimming direction to allow for exploration during training. The policy network is randomly initialized and then trained through repeated attempts to reach the target using the V-RACER algorithm [22]. The V-RACER algorithm employs Remember and Forget Experience replay, in which experiences from previous iterations are used to update the swimmer’s current policy in a stable and data-efficient manner. Due to the random intialization of the policy, the training process is repeated five times for each swimmer to ensure reproducibility [23].

At each time step, the swimmer receives a reward according to the reward function , which is designed to produce the desired behavior of navigating to the target. We employ a similar reward function as Biferale et al. [20]:

| (3) |

The first term penalizes duration of an episode to encourage fast navigation to the target. The second two terms give a reward when the swimmer is closer to the target than it was in the previous time step. The final term is a bonus equal to 200 seconds, or approximately 30 times the duration of a typical trajectory. The bonus is awarded if the swimmer successfully reaches the target. Swimmers that exit the simulation area or collide with the cylinder are treated as unsuccessful. The second two terms are scaled by 10 to be on the same order of magnitude as the first term, which we found significantly improved training speed and navigation success rates. We also investigated a non-linear reward function, in which the second two terms are the reciprocal of the distance to the target, however it exhibited lower performance. The RL algorithm seeks to maximize the total reward, which is the sum of the reward function across all time steps in an episode:

| (4) |

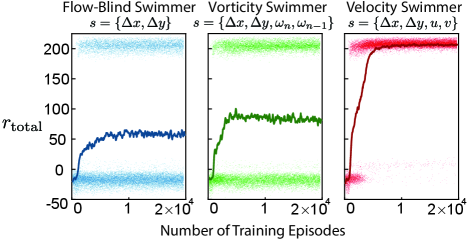

Assuming the swimmer reaches the target location, the only term in that depends on the swimmer’s trajectory is . Therefore, maximizing the cumulative reward of a successful episode is equivalent to finding the minimum time path to the target. During training however, all terms in the reward contribute to finding policies that drive the swimmer to the target in the first place. The evolution of the reward function during training for each swimmer is shown in Figure 2. All RL swimmers were trained for 20,000 episodes.

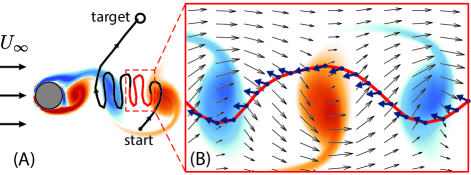

Success of RL Navigation.—After training, Deep RL discovered effective policies for navigating through this unsteady flow. An example of a path discovered by the velocity RL swimmer is shown in Figure 3. Because the swimming speed is less than the free-stream velocity, the swimmer must utilize the wake region where it can exploit slower background flow to swim upstream. Once sufficiently far upstream, the swimmer can then steer towards the target. The plot of the swimming direction inside the wake (Figure 4B) shows how the swimmer changes its swimming direction in response to the background flow, enabling it to maintain its position inside the wake region and target low-velocity regions.

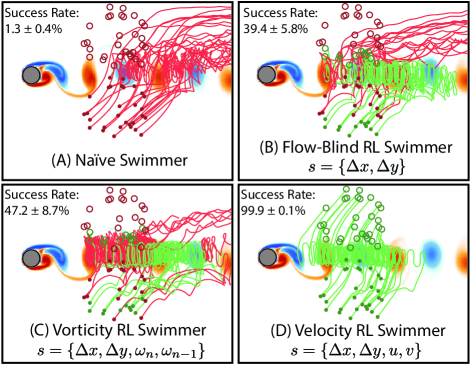

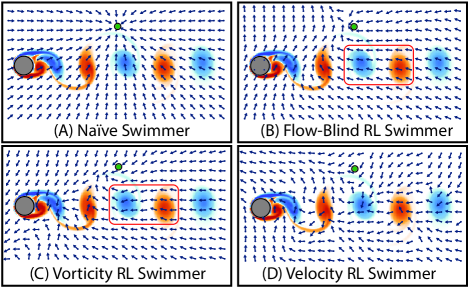

However, the ability of Deep RL to discover these effective navigation strategies depends on the type of local flow information included in the swimmer state. To illustrate this point, example trajectories and the average success rates of the flow-blind, vorticity, and velocity RL swimmers are plotted in Figure 4, and are compared with a naïve policy of simply swimming towards the target ().

A naïve policy of swimming towards the target is highly ineffective. Swimmers employing this policy are swept away by the background flow, and reached the target only 1.2% of the time on average. A reinforcement learning approach, even without access to flow information, is much more successful: the flow-blind swimmer reached the target locations nearly 40% of the time.

Giving the RL swimmers access to local flow information increases the success further: the vorticity RL swimmer averaged a 47.2% success rate. Surprisingly however, the velocity swimmer has a near 100% success rate, greatly outperforming the zebrafish-inspired vorticity approach. With the right local flow information, it appears that an RL approach can navigate nearly without fail through a complex, unsteady flow field. However, the question remains as to why some flow properties are more informative than others.

To better understand the difference between RL swimmers with access to different flow properties, the swimming direction computed by each RL policy is plotted over a grid of locations in Figure 5. The flow-blind swimmer does not react to changes in the background flow field, although it does appear to learn the effect of the mean background flow, possibly through correlation between the mean flow and the relative position of the swimmer in the domain. This provides it an advantage over the naïve swimmer. The vorticity swimmer adjusts its swimming direction modestly in response to changes in the background flow, for example by swimming slightly upwards in counter-clockwise vortices and slightly downwards in clockwise vortices. The velocity swimmer appears most sensitive to the background flow, which may help it respond more effectively to changes in the background flow.

Station-keeping inside the wake region may be important for navigating through this flow. In the upper right of the domain, the velocity swimmer learns to orient downwards and back to the wake region, while the other swimmers swim futilely towards the target. Because the vorticity depends on gradients in the background flow, that property cannot be used to respond to flow disturbances that are spatially uniform. These difference appear to explain many of the failed trajectories in Figure 4, in which the flow-blind and vorticity swimmers are swept up and to the right by the background flow.

It is worth noting that because the flow pushes the swimmers according to linear dynamics (Equation 2), the local velocity can exactly determine the swimmer’s position at the next time step. This may explain the high navigation success of the velocity swimmer, as it has the potential to accurately predict its next location. To be sure, the Deep RL algorithm must still learn where the most advantageous next location ought to be, as the flow velocity at the next time step is still unknown.

While sensing of vorticity is insufficient to detect spatially uniform disturbances, it can be useful for distinguishing the vortical wake from the freestream flow. This can explain why the vorticity swimmer performs better than the flow-blind swimmer. A similar reasoning could apply to swimmers that sense other flow quantities such as pressure or shear.

For real swimmers however, vorticity may play a larger role, for example by causing a swimmer to rotate in the flow [24] or by altering boundary layers and skin friction drag [12]. Real robots would also be subject to additional sources of complexity not considered in this simplified simulation, which would make it more difficult to determine a swimmer’s next position from local velocity measurements.

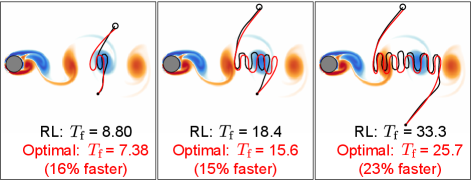

Comparison with Optimal Control.—In addition to reaching the destination successfully, it is desirable to navigate to the target while minimizing energy consumption or time spent traveling. Biferale et. al [20] demonstrated that RL can approach the performance of time-optimal trajectories in steady flow for fixed start and target positions. Here, we find that this result also holds for the more challenging problem of navigating unsteady flow with variable start and target points.

As noted in Equation 4, maximizing is equivalent to minimizing the time spent traveling to the target (), provided the swimmer successfully reaches the target. Therefore, we compare the velocity RL swimmer to the time-optimal swimmer derived from optimal control.

To find time-optimal paths through the flow, given knowledge of the full velocity field at all times, we constructed a path planner that finds locally optimal paths in two steps. First, a rapidly-exploring random tree algorithm (RRT) finds a set of control inputs that drive the swimmer from the starting location to the target location, typically non-optimally [25]. Then we apply constrained gradient-descent optimization (i.e. the fmincon function in MATLAB) to minimize the time step (and therefore overall time ) of the trajectory while enforcing that the swimmer starts at the starting point (Equation 1), obeys the dynamics at every time step in the trajectory (Equation 2), and reaches the target (). The trajectories produced by this method are local minima, so to approximate a globally optimal solution, we run the path planner 30 times and chose the fastest trajectory. Unlike with steady flow, Zermelo’s classical solution for optimal navigation is not readily applicable for unsteady flow. Other algorithms could also be used to find optimal trajectories for unsteady flow given knowledge of the entire flow field [10, 8].

A comparison between RL and time-optimal navigation for three sets of start and target points is shown in Figure 6. These points were chosen to represent a range of short and long duration trajectories. Despite only having access to local information, the RL trajectories are nearly as fast and qualitatively similar to the optimal trajectories, which were generated with the advantage of having full global knowledge of the flow field.

The surprisingly high performance of the RL approach compared to a global path planner suggests that deep neural networks can, to some extent, approximate how local flow at a particular time impacts navigation in the future. In other words, a successful RL swimmer must simultaneously navigate and identify the approximate current state of the environment. In comparison, the optimal control approach relies on knowledge of the environment in advance. There are limitations to the RL approach, however. For example, the optimal swimmer in the middle of Figure 6 enters the wake region at a different location than the RL swimmer to avoid a high velocity region, which the RL swimmer may not have been able to sense initially.

In addition to approaching the optimality of a global planner, RL navigation offers a robustness advantage. As noted in [20], RL can be robust to small changes in initial conditions. Here, we show that RL navigation can generalize to a large area of initial and target conditions as well as random starting times in the unsteady flow. RL navigation may also generalize to other flow fields to some extent [24]. In contrast, the optimal trajectories here are open loop: any disturbance or flow measurement inaccuracy would prevent the swimmer from successfully navigating the target. While robustness can be included with optimal control in other ways [7], responding to changes in the surrounding environment is the driving principle of this RL navigation policy. Indeed, the related algorithm of imitation learning has been applied to add robustness to existing path planners [26].

Conclusion.—We have shown in this Letter how Deep Reinforcement Learning can discover robust and time-efficient navigation policies which are improved by sensing local flow information. A bio-inspired approach of sensing the local vorticity provided a modest increase in navigation success over a position-only approach, but surprisingly the key to success was discovered to lie in sensing the velocity field, which more directly determined the future position of the swimmer. This suggests that RL coupled with an on-board velocity sensor may be an effective tool for robot navigation. Future investigation is warranted to examine the extent to which the success of the velocity approach extends to real-world scenarios, in which robots may face more complex, 3D fluid flows, and be subject to non-linear dynamics and sensor errors.

References

- Weizhong Zhang et al. [2008] Weizhong Zhang, T. Inanc, S. Ober-Blobaum, and J. E. Marsden, in 2008 IEEE International Conference on Robotics and Automation (2008) pp. 1083–1088, iSSN: 1050-4729.

- Kuhnz et al. [2020] L. A. Kuhnz, H. A. Ruhl, C. L. Huffard, and K. L. Smith, Deep Sea Research Part II: Topical Studies in Oceanography Thirty-year time-series study in the abyssal NE Pacific, 173, 104761 (2020).

- Guerrero and Bestaoui [2013] J. A. Guerrero and Y. Bestaoui, Journal of Intelligent & Robotic Systems 69, 297 (2013).

- Bellemare et al. [2020] M. G. Bellemare, S. Candido, P. S. Castro, J. Gong, M. C. Machado, S. Moitra, S. S. Ponda, and Z. Wang, Nature 588, 77 (2020), number: 7836 Publisher: Nature Publishing Group.

- Zermelo [1931] E. Zermelo, ZAMM - Journal of Applied Mathematics and Mechanics / Zeitschrift für Angewandte Mathematik und Mechanik 11, 114 (1931).

- Techy [2011] L. Techy, Intelligent Service Robotics 4, 271 (2011).

- Panda et al. [2020] M. Panda, B. Das, B. Subudhi, and B. B. Pati, International Journal of Automation and Computing 17, 321 (2020).

- Kularatne et al. [2018] D. Kularatne, S. Bhattacharya, and M. A. Hsieh, Autonomous Robots 42, 1369 (2018).

- Petres et al. [2007] C. Petres, Y. Pailhas, P. Patron, Y. Petillot, J. Evans, and D. Lane, IEEE Transactions on Robotics 23, 331 (2007), conference Name: IEEE Transactions on Robotics.

- Lolla et al. [2014] T. Lolla, P. F. J. Lermusiaux, M. P. Ueckermann, and P. J. Haley, Ocean Dynamics 64, 1373 (2014).

- Shi et al. [2019] G. Shi, X. Shi, M. O’Connell, R. Yu, K. Azizzadenesheli, A. Anandkumar, Y. Yue, and S. Chung, in 2019 International Conference on Robotics and Automation (ICRA) (2019) pp. 9784–9790, iSSN: 2577-087X.

- Verma et al. [2018] S. Verma, G. Novati, and P. Koumoutsakos, Proceedings of the National Academy of Sciences 115, 5849 (2018), publisher: National Academy of Sciences Section: Physical Sciences.

- Fiorelli et al. [2006] E. Fiorelli, N. E. Leonard, P. Bhatta, D. A. Paley, R. Bachmayer, and D. M. Fratantoni, IEEE Journal of Oceanic Engineering 31, 935 (2006), conference Name: IEEE Journal of Oceanic Engineering.

- Caron et al. [2008] D. A. Caron, B. Stauffer, S. Moorthi, A. Singh, M. Batalin, E. A. Graham, M. Hansen, W. J. Kaiser, J. Das, A. Pereira, A. Dhariwal, B. Zhang, C. Oberg, and G. S. Sukhatme, Limnology and Oceanography 53, 2333 (2008).

- Oteiza et al. [2017] P. Oteiza, I. Odstrcil, G. Lauder, R. Portugues, and F. Engert, Nature 547, 445 (2017), number: 7664 Publisher: Nature Publishing Group.

- Dehnhardt et al. [1998] G. Dehnhardt, B. Mauck, and H. Bleckmann, Nature 394, 235 (1998), number: 6690 Publisher: Nature Publishing Group.

- Weber et al. [2020] P. Weber, G. Arampatzis, G. Novati, S. Verma, C. Papadimitriou, and P. Koumoutsakos, Biomimetics 5, 10 (2020).

- Gazzola et al. [2014] M. Gazzola, B. Hejazialhosseini, and P. Koumoutsakos, SIAM Journal on Scientific Computing 36, B622 (2014), publisher: Society for Industrial and Applied Mathematics.

- Jiao et al. [2020] Y. Jiao, F. Ling, S. Heydari, N. Heess, J. Merel, and E. Kanso, arXiv:2009.14280 [physics, q-bio] (2020), arXiv: 2009.14280.

- Biferale et al. [2019] L. Biferale, F. Bonaccorso, M. Buzzicotti, P. Clark Di Leoni, and K. Gustavsson, Chaos: An Interdisciplinary Journal of Nonlinear Science 29, 103138 (2019), publisher: American Institute of Physics.

- Reddy et al. [2018] G. Reddy, J. Wong-Ng, A. Celani, T. J. Sejnowski, and M. Vergassola, Nature 562, 236 (2018), number: 7726 Publisher: Nature Publishing Group.

- Novati and Koumoutsakos [2019] G. Novati and P. Koumoutsakos, arXiv:1807.05827 [cs, stat] (2019), arXiv: 1807.05827.

- Henderson et al. [2019] P. Henderson, R. Islam, P. Bachman, J. Pineau, D. Precup, and D. Meger, arXiv:1709.06560 [cs, stat] (2019), arXiv: 1709.06560.

- Colabrese et al. [2017] S. Colabrese, K. Gustavsson, A. Celani, and L. Biferale, Physical Review Letters 118, 158004 (2017), publisher: American Physical Society.

- LaValle and Kuffner [2001] S. M. LaValle and J. J. Kuffner, The International Journal of Robotics Research 20, 378 (2001), publisher: SAGE Publications Ltd STM.

- Rivière et al. [2020] B. Rivière, W. Hönig, Y. Yue, and S. Chung, IEEE Robotics and Automation Letters 5, 4249 (2020), conference Name: IEEE Robotics and Automation Letters.