Learning-Augmented Search Data Structures

Abstract

We study the integration of machine learning advice to improve upon traditional data structures designed for efficient search queries. Although there has been recent effort in improving the performance of binary search trees using machine learning advice, e.g., Lin et al. (ICML 2022), the resulting constructions nevertheless suffer from inherent weaknesses of binary search trees, such as the complexity of maintaining balance across multiple updates and the inability to handle partially-ordered or high-dimensional datasets. For these reasons, we focus on skip lists and KD trees in this work. Given access to a possibly erroneous oracle that outputs estimated fractional frequencies for search queries on a set of items, we construct skip lists and KD trees that provably provide the optimal expected search time, within nearly a factor of two. In fact, our learning-augmented skip lists and KD trees are still optimal up to a constant factor, even if the oracle is only accurate within a constant factor. We also demonstrate robustness by showing that our data structures achieve an expected search time that is within a constant factor of an oblivious skip list/KD tree construction even when the predictions are arbitrarily incorrect. Finally, we empirically show that our learning-augmented search data structures outperform their corresponding traditional analogs on both synthetic and real-world datasets.

1 Introduction

As efficient data management has become increasingly crucial, the integration of machine learning (ML) has significantly improved the design and performance of traditional algorithms for many big data applications. [KBC+18] first showed that ML could be incorporated to create data structures that support faster look-up operations while also saving an order-of-magnitude of memory compared to optimized data structures oblivious to such ML heuristics. Subsequently, learning-augmented algorithms [MV20] have been shown to achieve provable worst-case guarantees beyond the limitations of oblivious algorithms for a wide range of settings. For example, ML predictions have been utilized to achieve more efficient data structures [Mit18, LLW22], algorithms with faster runtimes [DIL+21, CSVZ22, DMVW23], mechanisms with better accuracy-privacy tradeoffs [KADV23], online algorithms with better performance than information-theoretic limits [PSK18, GP19, LLMV20, WLW20, WZ20, BMS20, AGP20, ACL+21, AGKP21, IKQP21, LV21, ACI22, AGKP22, APT22, GLS+22, KBTV22, JLL+22, SGM22, AGKK23, ACE+23, SLLA23], streaming algorithms with better accuracy-space tradeoffs [HIKV19, IVY19, JLL+20, CIW22, CEI+22, LLL+23], and polynomial-time algorithms beyond hardness-of-approximation limits, e.g., NP-hardness [EFS+22, NCN23, CLR+24].

In this paper, we focus on incorporating ML advice to improve data structures for the fundamental problem of searching for elements in a large dataset. For this purpose, tree-based structures stand out as a popular choice, particularly for their logarithmic average-case performance. However, these structures have weaknesses in specific use cases that make them sub-optimal for various applications, which we now discuss.

Skip lists. One weakness of tree-based structures is that they need to be balanced for optimal performance, and thus their effectiveness is often closely tied to the order of element insertions. For example, [LLW22] motivated their study of learning-augmented binary search trees by noting that although previous results already characterized the statically optimal tree when the underlying distribution is known [Knu71, Meh77], these methods do not handle dynamic insertion operations. In contrast, skip lists, introduced by [Pug90a], maintain balance probabilistically, offering a simpler implementation while delivering substantial speed enhancements [Pug90b]. Skip lists are generally built iteratively in levels. The bottom level of the skip list is an ordinary linked list, in which the items of the dataset are organized in order. Each higher level serves to accelerate the search in the lower levels by storing only a subset of their items, also as an ordered linked list. Traditional skip lists are built by promoting each item in a level to a higher level randomly with a fixed probability $p$.

Querying for a target element begins at the first element in the highest level and continues by searching along the linked list in the highest level until finding an item whose value is at least that of the target element. If the found item is greater than the target element, the search returns to the previous element, drops to the next lower list, and repeats the process. It can be shown that the expected number of steps in the search is $O\left(\frac{1}{p}\log_{1/p} n\right)$, so that $p$ serves as a trade-off parameter between the search time and the storage costs.
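A minimal Python sketch of the search procedure just described, assuming a classic skip list in which each node is promoted with a fixed probability `p`; the class and method names are illustrative, not taken from the paper. `search` walks right on each level while the next key is still smaller than the target and then drops down, counting pointer steps:

```python
import random
from typing import List, Optional, Tuple


class Node:
    def __init__(self, key: float, height: int) -> None:
        self.key = key
        # next[level] is the successor of this node in the list at that level
        self.next: List[Optional["Node"]] = [None] * height


class SkipList:
    def __init__(self, p: float = 0.5, max_height: int = 32, seed: int = 0) -> None:
        self.p = p
        self.max_height = max_height
        self.head = Node(float("-inf"), max_height)  # sentinel present on every level
        self.height = 1  # number of levels currently in use
        self.rng = random.Random(seed)

    def _random_height(self) -> int:
        # Oblivious promotion: climb one more level with probability p each time.
        h = 1
        while h < self.max_height and self.rng.random() < self.p:
            h += 1
        return h

    def insert(self, key: float) -> None:
        update = [self.head] * self.max_height  # last node before `key` on each level
        node = self.head
        for level in reversed(range(self.height)):
            while node.next[level] is not None and node.next[level].key < key:
                node = node.next[level]
            update[level] = node
        h = self._random_height()
        self.height = max(self.height, h)
        new = Node(key, h)
        for level in range(h):
            new.next[level] = update[level].next[level]
            update[level].next[level] = new

    def search(self, key: float) -> Tuple[bool, int]:
        """Return (found, steps), where steps counts right moves plus level drops."""
        steps = 0
        node = self.head
        for level in reversed(range(self.height)):
            # Move right while the next item is still smaller than the target.
            while node.next[level] is not None and node.next[level].key < key:
                node = node.next[level]
                steps += 1
            steps += 1  # the next item is >= target (or absent): drop down a level
        candidate = node.next[0]
        return candidate is not None and candidate.key == key, steps
```

Every item sits on the bottom list and is promoted obliviously, so the step counts returned by `search` should grow on the order of $\frac{1}{p}\log_{1/p} n$; this is the baseline that the learning-augmented construction modifies.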

In many modern applications, skip lists are used because of their excellent search runtime and their space efficiency. Skip lists are often preferred over binary search trees due to their simplicity of implementation, their support for efficient range queries, and their amenability to concurrent processes [SL00, LJ13]. They are also highly efficient for dynamic datasets [GZ08, PT10] and are used in network routing [HPJ03, ASS20] and real-time analytics [BBB+20, ZCL+23]. Thus, while binary search trees have been a long-standing choice for querying ordered elements, skip lists offer a simpler, more efficient, and in some cases necessary alternative.

KD trees. Another weakness of tree-based data structures is that they generally require the data to obey an absolute ordering. However, in many cases, e.g., geometric applications or multidimensional data, the input points can only be partially ordered. Thus, in 1975, KD trees, short for $k$-dimensional trees, were proposed as a more efficient alternative to binary search trees for searching in higher-dimensional spaces, in procedures such as nearest neighbor search or ray tracing for applications in computational geometry or computer vision. A KD tree works by picking a data point and splitting along some spatial dimension to partition the space. This process is repeated until every data point is included in the tree, creating a hierarchical tree structure that enables quick access to specific data points or ranges within the dataset.
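The construction described above can be sketched in Python as follows. This is the textbook variant that cycles through the coordinates and splits at the median point, an assumption made for illustration; `build_kdtree` and `lookup_depth` are hypothetical names. Because points that tie the splitting coordinate may land on either side, the lookup explores both children on ties:

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, ...]


class KDNode:
    def __init__(self, point: Point, axis: int) -> None:
        self.point = point
        self.axis = axis  # coordinate used to split at this node
        self.left: Optional["KDNode"] = None
        self.right: Optional["KDNode"] = None


def build_kdtree(points: Sequence[Point], depth: int = 0) -> Optional[KDNode]:
    if not points:
        return None
    axis = depth % len(points[0])  # cycle through the k dimensions
    pts: List[Point] = sorted(points, key=lambda q: q[axis])
    mid = len(pts) // 2  # the median point becomes the splitting node
    node = KDNode(pts[mid], axis)
    node.left = build_kdtree(pts[:mid], depth + 1)  # coordinates <= median
    node.right = build_kdtree(pts[mid + 1:], depth + 1)  # coordinates >= median
    return node


def lookup_depth(node: Optional[KDNode], target: Point, depth: int = 0) -> Optional[int]:
    """Depth at which `target` is stored, or None if it is absent."""
    if node is None:
        return None
    if node.point == target:
        return depth
    a = node.axis
    if target[a] < node.point[a]:
        return lookup_depth(node.left, target, depth + 1)
    if target[a] > node.point[a]:
        return lookup_depth(node.right, target, depth + 1)
    # Tie on the splitting coordinate: the point may be in either subtree.
    found = lookup_depth(node.left, target, depth + 1)
    return found if found is not None else lookup_depth(node.right, target, depth + 1)
```

Median splits keep every node within depth $\lfloor\log_2 n\rfloor$ regardless of how often each point is queried, which is the balanced, distribution-oblivious baseline.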

Skewed distributions. Traditional search data structures treat each element equally when promoting the elements to higher levels. This balancing behavior facilitates good performance in expectation when a query to the skip list is equally likely to be any dataset element. On the other hand, this behavior may limit the performance of the data structure when the incoming queries are from an unbalanced probability distribution.

Real-world applications can feature a diverse range of distribution patterns. One particularly common distribution is the Zipfian distribution, which is a probability distribution that is a discrete counterpart of the continuous Pareto distribution, and is characterized by the principle that a small number of events occur very frequently, while a large number of events occur rarely.

In a Zipfian distribution, the frequency of an event is inversely proportional to its rank $i$ raised to the power $s$ (where $s$ is a positive parameter), in a dataset of $n$ elements. In particular, we have $f_i = \frac{1/i^s}{\sum_{j=1}^n 1/j^s}$. The value of $s$ determines the steepness of the distribution, so that a smaller value, i.e., closer to $0$, makes it more uniform, while a larger $s$ increases skewness.
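The normalization above is straightforward to compute directly. A minimal sketch, with an illustrative function name:

```python
from typing import List


def zipf_probabilities(n: int, s: float) -> List[float]:
    """Return f_1, ..., f_n with f_i proportional to 1 / i**s (ranks start at 1)."""
    weights = [1.0 / i**s for i in range(1, n + 1)]
    total = sum(weights)  # the normalizing constant sum_j 1 / j**s
    return [w / total for w in weights]
```

Setting `s = 0` recovers the uniform distribution, and the ratio of consecutive frequencies $f_1/f_2 = 2^s$ grows with $s$, which is what makes larger exponents more skewed.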

Zipfian distributions provide a simple means for understanding phenomena in various fields involving rank and frequency, ranging from linguistics to economics, and from urban studies to information technology. Indeed, they appear in many applications such as word frequencies in natural language [WW16, BHZ18], city populations [Gab99, VA15], biological cellular distributions [LVM+23], income distribution [San15], etc.

Unfortunately, although Zipfian distributions are common in practice, their properties are generally not leveraged by traditional search data structures, which are oblivious to any information about the query distributions. To address this limitation, we propose augmenting traditional skip lists and KD trees with "learned" advice, which (possibly erroneously) informs the data structure in advance about some useful statistics of the incoming queries. Although we model the data structure as having oracle access to the advice, in practice, such advice can often easily be acquired from machine learning heuristics trained for these statistics.

1.1 Our Contributions

We propose the incorporation of ML advice into the design of skip lists and KD trees to improve upon traditional data structure design. For ease of discussion in this section, we assume the items that may appear either in the dataset or the query set can be associated with an integer in $[n]$, which, in the case of high-dimensional data, may also be associated with a $d$-dimensional point. We allow the algorithm access to a possibly erroneous oracle that, for each $i\in[n]$, outputs a quantity $p_i$, which should be interpreted as an estimate of the proportion of search queries that will be made to the data structure for the item $i$. Hence, for each $i\in[n]$, we assume that $p_i\in[0,1]$ and $\sum_{i=1}^n p_i=1$. Note that these constraints can be easily enforced upon the oracle as a pre-processing step prior to designing the skip list or KD tree data structure. We also assume that the oracle is readily accessible, so that there is no cost for each interaction with the oracle. Consequently, we assume the algorithm has access to the predicted frequency $p_i$ by the oracle for all $i\in[n]$. On the other hand, we view a sequence of queries as defining a probability distribution $f$ over the set of queries, so that $f_i$ is the true proportion of queries to item $i$, for each $i\in[n]$. Although $f$ is the ground truth, our algorithms only have access to $p$, which may or may not accurately capture $f$.

Consistency for accurate oracles. We introduce constructions for a learning-augmented skip list and KD tree that give expected search time at most $2\cdot H(f)+C$, for some constant $C$. On the other hand, we show that any skip list or KD tree construction requires an expected search time of at least the entropy $H(f)$ of the probability vector $f$. We recall that the entropy is defined as $H(f)=-\sum_{i=1}^n f_i\log f_i$.

Thus, our results indicate that within nearly a factor of two, our learning-augmented search data structures are optimal for any distribution of queries, provided that the oracle is perfectly accurate. Moreover, even if the oracle on each estimated probability is only accurate up to a constant factor, then our learning-augmented search data structures are still optimal, up to a constant factor.
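As a concrete reference point for the entropy lower bound, $H(f)$ can be computed as below. This sketch uses base-2 logarithms, which is an assumption since the text does not fix the base; with that choice, the uniform distribution over $n$ items has entropy exactly $\log_2 n$, while a distribution concentrated on one item has entropy $0$.

```python
import math
from typing import Sequence


def entropy(f: Sequence[float]) -> float:
    """H(f) = -sum_i f_i log2 f_i, using the convention 0 * log 0 = 0."""
    return -sum(x * math.log2(x) for x in f if x > 0)
```

For example, `entropy([0.5, 0.25, 0.25])` evaluates to `1.5`: skewed query vectors have lower entropy, which is exactly the room a learning-augmented structure can exploit.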

Implications for Zipfian distributions. We describe the implications of our results for queries that follow a Zipfian distribution; analogous results hold for other skewed distributions, e.g., the geometric distribution. It is known that if the $i$-th most common query/item has proportion $\Theta\left(\frac{1}{i^s}\right)$ for some $s>1$, then the entropy of the corresponding probability vector is a constant. Consequently, if the set of queries follows a Zipfian distribution and the oracle is accurate within a constant factor, then the expected search time for an item by our search data structures is only a constant, independent of the total number of items, i.e., $O(1)$. By comparison, a traditional skip list or KD tree will have expected search time $\Theta(\log n)$.
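The claim that Zipfian queries with $s>1$ have constant entropy can be checked numerically. The sketch below, with an illustrative function name, computes $H(f)$ in bits for growing $n$; for $s=2$ the values should stabilize, whereas for $s=1$ they keep growing with $n$, as does $\log_2 n$ for uniform queries.

```python
import math


def zipf_entropy(n: int, s: float) -> float:
    """Entropy in bits of the Zipfian vector f_i = (1/i**s) / sum_j (1/j**s)."""
    weights = [i ** -s for i in range(1, n + 1)]
    z = sum(weights)
    return -sum((w / z) * math.log2(w / z) for w in weights)


for n in (10**2, 10**3, 10**4, 10**5):
    # columns: n, entropy for s = 2, entropy for s = 1, log2(n) for uniform queries
    print(n, zipf_entropy(n, 2.0), zipf_entropy(n, 1.0), math.log2(n))
```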

Robustness to erroneous oracles. So far, our discussions have centered around an oracle that either produces estimated probabilities such that $p_i=f_i$ or $p_i$ is within a constant factor of $f_i$. However, in some cases, the machine learning algorithm serving as the oracle can be completely wrong. In particular, a model that is trained on a dataset before a distribution change, e.g., seasonal trends or other temporal shifts, can produce wildly inaccurate predictions. We show that our search data structures are robust to erroneous oracles. Specifically, we show that our algorithms achieve an expected search time that is within a constant factor of an oblivious skip list or KD tree construction, even when the predictions are arbitrarily incorrect. Therefore, our data structures achieve both consistency, i.e., good algorithmic performance when the oracle is accurate, and robustness, i.e., standard algorithmic performance when the oracle is inaccurate.

Empirical evaluations. Finally, we analyze our learning-augmented search data structures on both synthetic and real-world datasets. First, we compare the performance of traditional skip lists with our learning-augmented skip lists on synthetically generated data following Zipfian distributions with various tail parameters. The datasets are created using four distinct values of the Zipfian exponent ranging from 1.01 to 2, along with a uniform dataset. During the assessment, we query a specified number of items, chosen according to their frequency weights. Our results match our theory, showing that learning-augmented skip lists have faster query times, with an average speed-up factor ranging from 1.33 up to 7.76, depending on the skewness parameter.

We then consider various datasets of internet traffic data, collected by AOL and by CAIDA and observed over various durations. For each dataset, we split the overall observation time into an early period, which serves as the training set for the oracle, and a later period, which serves as the query set for the skip list. The oracle, trained on the IP addresses in the early period, outputs the probability that a given node appears, and these probabilities then determine the position of each node in the skip list.

Our learning-augmented skip list outperforms traditional skip lists with an average speed-up factor of 1.45 on the AOL dataset and 1.63 on the CAIDA dataset. Moreover, the insertion time of our learning-augmented skip list is comparable to that of traditional skip lists on both synthetic and real-world datasets. We also observe that our history-based oracle is robust to temporal change: in the datasets used herein, the set of the most frequent elements does not change much across time intervals.

We similarly evaluate our learning-augmented KD tree data structure on Zipfian distributions with various tail parameters, and provide a heatmap of average lookup times for elements. We find that for a large variety of Zipfian parameters, our method provides large improvements over traditional KD trees. We perform a similar experiment under Zipfian distributions with added noise, and find that our data structure still provides considerable improvements in query time.

We additionally evaluate our method on point cloud samples taken from a 3D model. We bin these samples in space, and create our learning-augmented KD tree on these binned samples. When querying this tree with new binned point samples, we find a decrease in average query time compared to a traditional KD tree under the same conditions. In addition to this experiment, we provide results on real-world datasets of n-grams and neuron activations, and similarly find improvements over traditional KD trees.

Concurrent and independent work. We mention that concurrent and independent of our work, [ZKH24] used similar techniques to achieve the same guarantees on the performance of learning-augmented skip lists that are robust to erroneous predictions. However, they do not show optimality for their learning-augmented skip lists and arguably perform less exhaustive empirical evaluations. They also do not consider KD trees at all, which forms a significant portion of our contribution, both theoretically and empirically.

Comparison to [LLW22]. Our work was largely inspired by [LLW22], who observed that the classical literature characterizing statically optimal binary search trees [Knu71, Meh77] no longer applies in the dynamic setting, where elements arrive iteratively over time. Thus, they designed dynamic learning-augmented binary search trees (BSTs). Their analysis of the expected search time utilized the notion of pivots within their trees and was thus somewhat specialized to BSTs. Therefore, [LLW22] explicitly listed skip trees and advanced tree data structures as interesting open directions. Qualitatively, our results are similar to those of [LLW22], as are those of [ZKH24]. This is not altogether surprising, because the main difference between these data structures is not necessarily the search time, but either the ease of construction in the setting of skip lists, or the ability to handle multi-dimensional data in the setting of KD trees.

2 Learning-Augmented Skip Lists

In this section, we describe our construction for a learning-augmented skip list and show various consistency properties of the data structure. In particular, we show that up to a factor of two, our algorithm is optimal, given a perfect oracle. More realistically, if the oracle provides a constant-factor approximation to the probabilities of each element, our algorithm is still optimal up to a constant factor.

We first describe our learning-augmented skip list, which utilizes predictions $p_i$ for each item $i\in[n]$ from an oracle. Similar to a traditional skip list, the bottom level of our skip list is an ordinary linked list that contains the sorted items of the dataset. As before, the purpose of each higher level is to accelerate the search for an item, but the process for promoting an item from a lower level to a higher level now utilizes the predictions. Whereas traditional skip lists promote each item in a level to a higher level randomly with a fixed probability, we automatically promote the item to the next level if its predicted frequency $p_i$ meets the threshold associated with that level. Otherwise, we promote the item randomly, as in a traditional skip list. This adaptation ensures that items with high predicted query frequencies will be promoted to higher levels of the skip list and thus be more likely to be found quickly.
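The promotion rule can be sketched as follows. Because the per-level thresholds are not reproduced in this section, the threshold `2**level / n`, the fair-coin fallback, and the height cap of $\lceil\log_2 n\rceil$ are all assumptions chosen for illustration. `learned_levels` is a hypothetical helper that only assigns heights; items would then be linked into their levels exactly as in an ordinary skip list.

```python
import math
import random
from typing import Dict, Hashable, Mapping


def learned_levels(pred: Mapping[Hashable, float], seed: int = 0) -> Dict[Hashable, int]:
    """Return the top level reached by each item (0 = bottom list).

    ASSUMPTION: the threshold 2**level / n and the 1/2 coin are illustrative,
    not the exact constants of the paper's Algorithm 1.
    """
    n = len(pred)
    max_level = max(1, math.ceil(math.log2(n))) if n > 0 else 0
    rng = random.Random(seed)
    levels: Dict[Hashable, int] = {}
    for item, p_i in pred.items():
        level = 0
        while level < max_level:
            if p_i >= 2**level / n:  # frequent enough: promote deterministically
                level += 1
            elif rng.random() < 0.5:  # otherwise: oblivious coin flip
                level += 1
            else:
                break
        levels[item] = level
    return levels
```

Under these assumed thresholds, an item with predicted frequency $p_i$ climbs to roughly level $\log_2(n p_i)$ deterministically, so frequent items sit near the top where searches reach them quickly, while rare items still receive the coin flips that keep an oblivious skip list balanced.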

It is worth addressing a number of other natural approaches and their shortcomings. For example, one natural approach would be to use the "median" frequency across the items as a threshold to promote elements to higher levels. However, this promotion scheme is not ideal, because computing the median frequency at each time would either require an additional data structure for fast updates or increase the insertion time. A potential approach to resolve this issue would be to set a separate threshold probability for each level, so that only nodes with a probability higher than the corresponding threshold are promoted to the next level. However, this approach seems to result in an unnecessarily large number of levels if some item appears with very small probability, e.g., exponentially small in $n$. One could thus first filter out the low-frequency elements, leave them on the bottom level of the skip list, and then use a separate threshold probability for each level. Unfortunately, this approach fails to even match the search time performance of oblivious skip lists when the distribution is uniform, because all items will be in the same level, resulting in an expected search time of $\Omega(n)$. Hence, we ensure that each element still has a chance of being promoted to higher levels even when its probability is less than the corresponding threshold.

We again emphasize that due to the dynamic nature of the updates, existing results on statically optimal binary search trees [Knu71, Meh77] do not apply, as observed by [LLW22]. We give the full details in Algorithm 1. For the sake of presentation, we focus on the setting where the queries are made to items in the dataset. However, we remark that our results generalize to the setting where queries can be made on the search space rather than the items in the dataset, provided the oracle is also appropriately adjusted to estimate the query distribution, using the approach we describe in Section 3.

We first show an upper bound on the expected search time of our learning-augmented skip list.

Theorem 2.1.

For each $i\in[n]$, let $f_i$ and $p_i$ be the proportion of true and predicted queries to item $i$, respectively. Then with high probability over the randomness of the construction of the skip list, the expected search time over the choice of queries is at most .

To prove Theorem 2.1, we first show that each item $i$ must be contained at some level that depends on its predicted frequency $p_i$. We also show that with high probability, the total number of levels in the skip list is at most $O(\log n)$. This allows us to upper bound the expected search time for item $i$ in terms of $p_i$. We can then analyze the expected search time across the true probability distribution $f$. Putting these steps together, we obtain Theorem 2.1.

We also prove a lower bound on the expected search time of an item drawn from a probability distribution for any skip list.

Theorem 2.2.

Given a random variable so that with probability , let denote the search time for in a skip list. Then , where is the entropy of .

Theorem 2.2 uses standard entropy arguments that have previously been used to lower bound the optimal constructions of data structures such as Huffman codes. We next upper bound the entropy of a probability vector that follows a Zipfian distribution with parameter $s$.

Lemma 2.3.

Let $s>0$ be a fixed constant and let $f$ be a frequency vector such that $f_i\propto\frac{1}{i^s}$ for all $i\in[n]$. If $s>1$, then $H(f)=O(1)$, and otherwise, if $s\le 1$, then $H(f)=O(\log n)$.

By Theorem 2.1 and Lemma 2.3, we thus have the following corollary for the expected search time of our learning-augmented skip list on a set of search queries that follows a Zipfian distribution.

Corollary 2.4.

With high probability, the expected search time on a set of queries that follows a Zipfian distribution with exponent $s$ is at most $O(1)$ for $s>1$ and $O(\log n)$ for $s\le 1$.

Next, we show that our learning-augmented skip list construction is robust to somewhat inaccurate oracles. Let $f$ be the true frequency vector, so that for each $i\in[n]$, $f_i$ is the probability that a random query corresponds to $i$. Let $p$ be the predicted frequency vector, so that for each $i\in[n]$, $p_i$ is the predicted probability that a random query corresponds to $i$. For $\alpha\in(0,1]$, we call an oracle $\alpha$-noisy if for all $i\in[n]$, we have $p_i\ge\alpha\cdot f_i$. Then we have the following guarantees for an $\alpha$-noisy oracle:

Lemma 2.5.

Let $\alpha\in(0,1]$ be a constant. A learning-augmented skip list with a set of $\alpha$-noisy predictions has performance that matches that of a learning-augmented skip list constructed with a perfect oracle, up to an additive constant.

To prove Lemma 2.5, we parameterize our analysis in Theorem 2.1. Due to the guarantees of $\alpha$-noisy oracles, we can write $\log\frac{1}{p_i}\le\log\frac{1}{f_i}+\log\frac{1}{\alpha}$, which allows us to express the search time in terms of the true entropy of the distribution and a small additive constant that stems from $\alpha$. In fact, we remark that even when the predictions are arbitrarily inaccurate, our learning-augmented skip list still has expected query time $O(\log n)$, since the total number of levels is at most $O(\log n)$ with high probability. Since the expected query time of an oblivious skip list is also $\Theta(\log n)$, the expected query time of our learning-augmented skip list is within a constant multiplicative factor of it, even with arbitrarily poor predictions.
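The step above can be checked numerically under the reading of $\alpha$-noisy used here, namely $p_i\ge\alpha f_i$ for every $i$ (an assumption about the exact definition). If the search cost of item $i$ scales with $\log\frac{1}{p_i}$, averaging over true queries gives the cross-entropy $\sum_i f_i\log\frac{1}{p_i}$, which is then at most $H(f)+\log\frac{1}{\alpha}$. The sketch builds an $\alpha$-noisy prediction as the mixture $p=\alpha f+(1-\alpha)q$ for an arbitrary distribution $q$, which is one convenient way, not the only one, to satisfy the condition; all function names are illustrative.

```python
import math
import random
from typing import List, Sequence, Tuple


def normalize(v: Sequence[float]) -> List[float]:
    total = sum(v)
    return [x / total for x in v]


def entropy(f: Sequence[float]) -> float:
    return -sum(x * math.log2(x) for x in f if x > 0)


def cross_entropy(f: Sequence[float], p: Sequence[float]) -> float:
    """sum_i f_i * log2(1 / p_i): expected log-cost when levels follow p, queries follow f."""
    return sum(fi * math.log2(1 / pi) for fi, pi in zip(f, p) if fi > 0)


def noisy_gap(n: int, alpha: float, seed: int = 0) -> Tuple[float, float]:
    """Return (cross-entropy, H(f) + log2(1/alpha)) for a random alpha-noisy oracle."""
    rng = random.Random(seed)
    f = normalize([rng.random() ** 4 for _ in range(n)])  # skewed "true" frequencies
    q = normalize([rng.random() for _ in range(n)])  # arbitrary, possibly wrong, advice
    p = [alpha * fi + (1 - alpha) * qi for fi, qi in zip(f, q)]  # p_i >= alpha * f_i
    return cross_entropy(f, p), entropy(f) + math.log2(1 / alpha)
```

For a constant $\alpha$, the gap $\log\frac{1}{\alpha}$ is a constant, which is the additive loss described in Lemma 2.5.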

3 Learning-Augmented KD Trees

In this section, we present details on our novel approach to KD tree construction. First, we present the algorithm that constructs a learning-augmented KD tree. We focus on the setting where queries can be made on the search space rather than on the items in the dataset, which is much more interesting for high-dimensional datasets, since even building a balanced tree on the search space could result in prohibitively high query time, as the height of the tree would already be at least the dimension $d$. Nevertheless, assuming that we have a query probability prediction $p_i$ for each element $i$ of our dataset, the intuition behind our method is straightforward. Whereas a learning-augmented binary search tree would attempt to find a value such that the probability of a query being on either branch of the tree is balanced, high-dimensional datasets do not have an absolute ordering.

Thus, instead of relying on standard techniques to determine the splitting point of our dataset, we find a specific dimension in which there exists a split such that the probability of a query falling on either branch of the tree is balanced. However, there can still be high-frequency queries that are not in the dataset, which can significantly increase the query time if not optimized. Hence, we also add high-frequency queries that are not data points to the tree construction, in order to reject these negative queries more quickly.
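A simplified sketch of this splitting rule, for the case where queries hit data points: at each node, we try every dimension and every candidate split point and keep the one whose two subtrees carry the most similar predicted query mass. The tie-break toward the median position (so that zero-probability regions stay balanced), the exhaustive search, and the names `build_weighted_kdtree` and `lookup_depth` are illustrative assumptions. The sketch assumes distinct points and omits the insertion of high-frequency non-data queries described above.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[int, ...]


class Node:
    def __init__(self, point: Point, axis: int) -> None:
        self.point, self.axis = point, axis
        self.left: Optional["Node"] = None
        self.right: Optional["Node"] = None


def build_weighted_kdtree(points: Sequence[Point], prob: Dict[Point, float]) -> Optional[Node]:
    if not points:
        return None
    best = None  # (imbalance, distance from median, axis, index, sorted points)
    for axis in range(len(points[0])):
        pts: List[Point] = sorted(points, key=lambda q: q[axis])
        total = sum(prob[q] for q in pts)
        prefix = 0.0
        for idx, q in enumerate(pts):
            # Predicted mass strictly before vs. strictly after the candidate split point.
            imbalance = abs(prefix - (total - prefix - prob[q]))
            key = (imbalance, abs(idx - len(pts) // 2))
            if best is None or key < best[:2]:
                best = (imbalance, abs(idx - len(pts) // 2), axis, idx, pts)
            prefix += prob[q]
    _, _, axis, idx, pts = best
    node = Node(pts[idx], axis)
    node.left = build_weighted_kdtree(pts[:idx], prob)
    node.right = build_weighted_kdtree(pts[idx + 1:], prob)
    return node


def lookup_depth(node: Optional[Node], target: Point, depth: int = 0) -> Optional[int]:
    if node is None:
        return None
    if node.point == target:
        return depth
    a = node.axis
    if target[a] != node.point[a]:
        child = node.left if target[a] < node.point[a] else node.right
        return lookup_depth(child, target, depth + 1)
    # Tie on the split coordinate: the target may lie in either subtree.
    found = lookup_depth(node.left, target, depth + 1)
    return found if found is not None else lookup_depth(node.right, target, depth + 1)
```

For example, on an $8\times 8$ grid where one point carries 90% of the predicted mass, that point is chosen as the root, so its lookups cost a single comparison instead of descending a median-balanced tree.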

We prove the following guarantees on the performance of our learning-augmented KD tree, first assuming that our oracle is perfect.

Theorem 3.1.

Suppose is the space of possible input points and queries. Let and be the probability that a random query is made to , given the natural mapping between and . Let be the probability vector and be its entropy. Then given a set of input points, the expected query time for the tree created by Algorithm 3 is .

The analysis of Theorem 3.1 for our learning-augmented KD tree follows a similar structure to the proof of Theorem 2.1. However, the crucial difference is that the universe size is now exponential in the dimension $d$. Thus, constructions that consider distributions over the entire universe may suffer query time that grows with $d$, which can be prohibitively expensive for large $d$, e.g., for high-dimensional data. Hence, our algorithm requires a bit more care in the truncation of queries with low probability: instead, we build a balanced KD tree for any item with a sufficiently low probability of being queried, so that each of their query times is at most $O(\log n)$. We further remark that this implies robustness of our data structure to arbitrarily poor predictions, by a similar argument as in Section 2.

We next prove a lower bound on the expected search time of an item drawn from a probability distribution for any KD tree.

Theorem 3.2.

Given a random variable so that with probability , let denote the depth for in a learning-augmented KD tree. Then , where is the entropy of .

By Theorem 3.1 and Lemma 2.3, we thus have the following corollary for the expected query time on our learning-augmented KD tree on a set of search queries that follows a Zipfian distribution.

Corollary 3.3.

With high probability, the expected query time on a set of queries that follows a Zipfian distribution with exponent $s$ is at most $O(1)$ for $s>1$ and $O(\log n)$ for $s\le 1$.

Finally, we show near-optimality when given imperfect predictions from an $\alpha$-noisy oracle:

Lemma 3.4.

Let $\alpha\in(0,1]$ be a constant. Then the query time of our learning-augmented KD tree with $\alpha$-noisy predictions matches the performance of a learning-augmented KD tree constructed using a perfect oracle, up to an additive constant.

4 Empirical Evaluations

In this section, we describe a number of empirical evaluations demonstrating the efficiency of our learning-augmented search data structures on both synthetic and real-world datasets. We provide additional experiments in Appendix D.

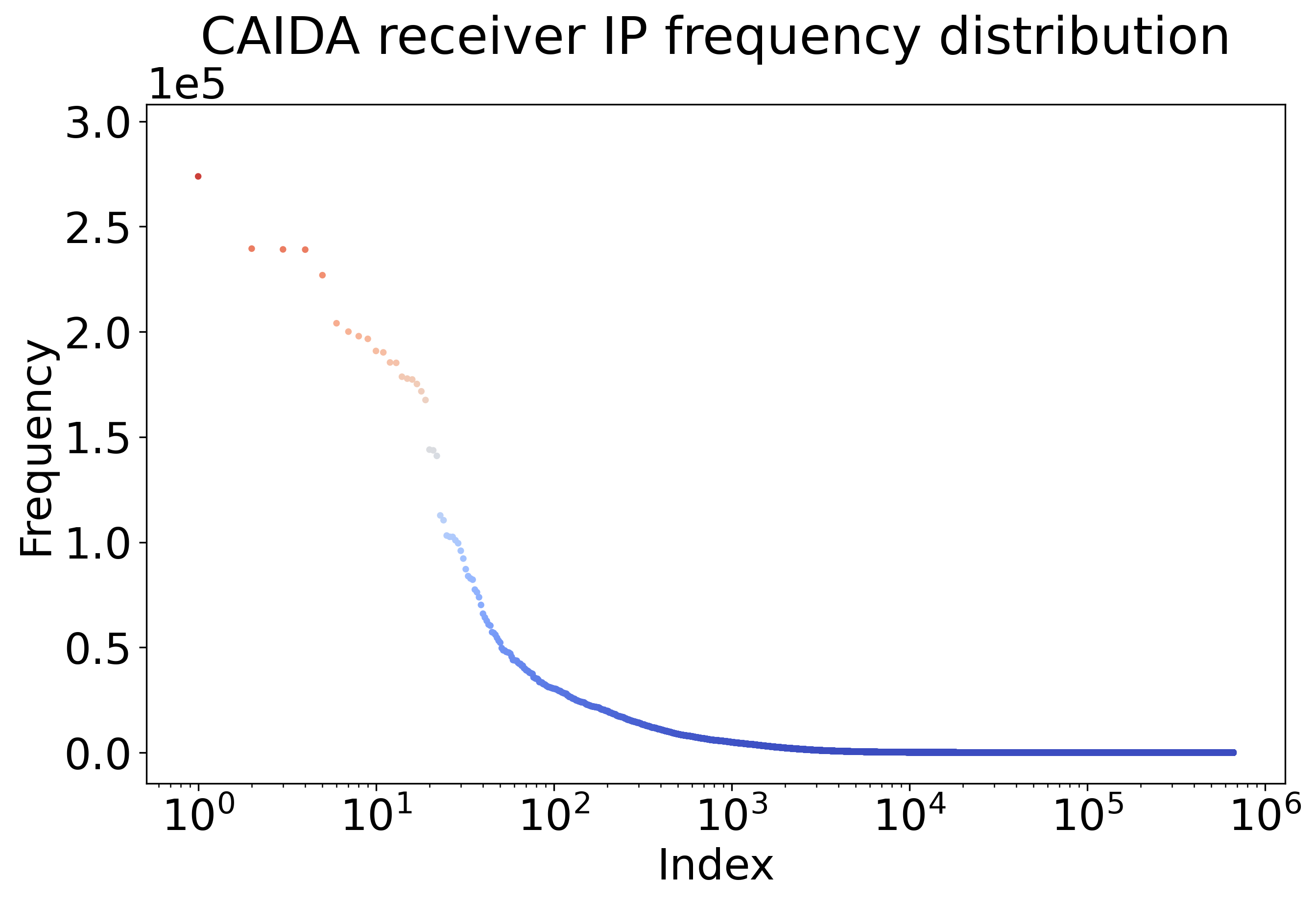

Skip lists on CAIDA dataset. In the CAIDA datasets [CAI16], the receiver IP addresses from one minute of internet flow data are extracted for testing; this minute contains over 650k unique IP addresses among 30 million queries. Since the log-log plot of the frequency of all nodes in the CAIDA datasets approximately follows a straight line in Figure 1, the CAIDA datasets can be approximately characterized by a Zipfian exponent of 1.37. The insertion time is similar between classic and augmented skip lists, while Figure 2 shows that query time is almost halved when using learning-augmented skip lists at different query sizes. These results assume that the predicted frequency of all items in the query stream is accurate, i.e., the probability vector that is used to build the skip list exactly matches the query stream. The speed-up between the query times of the largest learning-augmented and oblivious skip lists in Figure 2 is roughly , which is surprisingly, and perhaps coincidentally, close to our theoretical speed-up of roughly on a Zipfian dataset with exponent .
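The "approximately straight line" in a log-log plot suggests a simple way to estimate the exponent: fit a least-squares line to $\log(\text{frequency})$ versus $\log(\text{rank})$ and negate the slope. The sketch below is an illustrative estimator with a hypothetical function name; the text does not state how the value 1.37 was obtained, and least squares on log-log data is sensitive to the noisy low-frequency tail.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def fit_zipf_exponent(stream: Iterable[Hashable]) -> float:
    """Estimate s from a query stream by regressing log(count) on log(rank)."""
    counts = sorted(Counter(stream).values(), reverse=True)
    if len(counts) < 2:
        raise ValueError("need at least two distinct items")
    xs = [math.log(rank) for rank in range(1, len(counts) + 1)]
    ys = [math.log(c) for c in counts]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return -slope  # counts ~ C / rank**s, so the fitted slope is -s
```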

Next, we demonstrate that our proposed algorithm still outperforms the classic skip list even when the probability vector changes over time, by comparing the query time for the same set of query elements when different probability vectors are used to guide the construction of the structure. For the skip list augmented by a noisy probability vector, the probability vector of elements during a period T1 is used as the predicted frequencies. The skip list augmented by this probability vector has its own set of elements to be organized into the target skip list. Suppose the historic data from T1 contains a set of elements S1, and some future query stream contains a set of elements S2. For each element in our target set S2, if the element is present in S1, then its occurrence probability in S1 is used to build the skip list on S2; otherwise, if the element did not appear during T1 (i.e., in S1), then we assume its probability to be 0. After this, the probability vector is normalized to sum to 1, resulting in a predicted probability vector, based on historic element frequencies, that is used to build the skip list. Since there are temporal changes in the frequency of elements being queried, the predicted probability vector will differ from the true probability vector. The results are presented in Figure 3(a), which shows that even when the prediction is not perfect, the augmented skip list still performs better than a conventional skip list.
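The construction of the history-based prediction vector can be sketched as follows. `history_oracle` is a hypothetical helper: it takes the query stream from period T1 and the elements S2 of the later stream, uses empirical T1 frequencies for elements of S2 seen in S1 and 0 for unseen ones, and then renormalizes. The uniform fallback when no element of S2 appeared in T1 is an added assumption, since that case is not discussed in the text.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable


def history_oracle(history: Iterable[Hashable], targets: Iterable[Hashable]) -> Dict[Hashable, float]:
    counts = Counter(history)  # occurrences of each element during T1
    total = sum(counts.values())
    # Empirical T1 frequency for elements of S2; elements unseen in T1 get 0.
    raw = {x: (counts[x] / total if total else 0.0) for x in set(targets)}
    z = sum(raw.values())
    if z == 0:
        # ASSUMPTION: no overlap with the history, so fall back to uniform predictions.
        return {x: 1 / len(raw) for x in raw}
    return {x: v / z for x, v in raw.items()}  # renormalize to sum to 1
```

For instance, with history `["a", "a", "b", "c"]` and targets `["a", "b", "d"]`, the element `"c"` is dropped, `"d"` receives probability 0, and `"a"` and `"b"` are rescaled to 2/3 and 1/3.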

Figure 3(a) shows that the skip list with perfect learning performs best, while the skip list augmented with noisy learning performs very close to the scenario with perfect predictions. Moreover, the closer the test data is to the reference data chronologically, the closer the noisy-augmented skip list performs to the perfect-learning skip list. The CAIDA datasets used in this study contain 12 minutes of internet flow data, totaling around 444 million queries. The indices on the x-axis in Figure 3(a) mean:

- 10_2: the first 10 minutes of data are used to create the reference (i.e., the oracle), and the last 2 minutes are used to build the skip list and measure the total query time, using the former as the reference.
- 2_2: the 9th and 10th minutes of data are used as the reference, and the last 2 minutes are used for testing.
- 3_3: the 1st, 2nd, and 3rd minutes of data are used to create the reference, and the 4th, 5th, and 6th minutes of data are used for testing.
- 6_6: the first 6 minutes are used to create the reference, and the last 6 minutes are used for testing.

Further analysis of the temporal change in item frequency explains the good performance of the history-based oracle. Figure 3(b) shows the intersection index between any 2 given minutes among the 12 minutes of CAIDA data. The intersection index is defined as the ratio of the number of shared queries to the total number of queries in the 2 given minutes. Figure 3(b) shows that the number of intersecting queries decreases by only about 6% after 12 minutes, which indicates that the probabilities of the majority of the elements will be predicted with good accuracy, resulting in good oracle performance.
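One way to compute the intersection index, under the interpretation that "shared queries" are the distinct items queried in both minutes and the "total" is the number of distinct items queried in either minute (i.e., a Jaccard index). This reading is an assumption; a count-weighted version would be an equally plausible alternative. The function names are hypothetical.

```python
from typing import Hashable, Iterable, List, Sequence


def intersection_index(minute_a: Iterable[Hashable], minute_b: Iterable[Hashable]) -> float:
    """|A & B| / |A | B| over the distinct items queried in two time windows."""
    a, b = set(minute_a), set(minute_b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def intersection_matrix(minutes: Sequence[Iterable[Hashable]]) -> List[List[float]]:
    """Pairwise intersection indices, as in a minute-by-minute heatmap."""
    sets = [set(m) for m in minutes]
    return [[intersection_index(x, y) for y in sets] for x in sets]
```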

KD trees on synthetic datasets. KD trees are commonly used in computer graphics, with applications in collision detection, ray tracing, and reconstruction. We first generate datasets of points in 3-dimensional space, with frequencies given by a fixed Zipfian distribution with parameters , at which our method greatly outperforms a standard KD tree. In order to simulate constructing the tree on noisy data, we multiply the ground truth query probabilities by numbers sampled uniformly from 1 to , and then add numbers uniformly sampled from to , before renormalizing to form a valid probability distribution. We query the tree times, with point queries selected according to the ground truth Zipfian distribution. We repeat this process 32 times, and report the median of the average query depth across all runs in Figure 4. We find that our method continues to outperform traditional KD trees under moderate amounts of noise and, at worst, performs on par with a traditional KD tree.
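The noise model can be sketched as below. The multiplicative upper bound and the additive range are left as parameters (`mult_max`, `add_lo`, `add_hi`) because their exact values are not reproduced here; the function name is hypothetical.

```python
import random
from typing import List, Sequence


def noisy_predictions(truth: Sequence[float], mult_max: float, add_lo: float,
                      add_hi: float, seed: int = 0) -> List[float]:
    """Scale each probability by U(1, mult_max), add U(add_lo, add_hi), renormalize."""
    if mult_max < 1 or add_lo < 0 or add_hi < add_lo:
        raise ValueError("expects mult_max >= 1 and 0 <= add_lo <= add_hi")
    rng = random.Random(seed)
    noisy = [f * rng.uniform(1.0, mult_max) + rng.uniform(add_lo, add_hi) for f in truth]
    total = sum(noisy)
    return [x / total for x in noisy]  # a valid probability distribution again
```

The multiplicative term distorts the relative order of frequent items, while the additive term inflates rare items; renormalizing keeps the output usable as oracle advice for building the tree.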

Next, we generate datasets of points in 3-dimensional space, with frequencies given by a Zipfian distribution with parameters . In the left plot, we assign these Zipfian weights randomly. In the right plot, however, we assign Zipfian weights with ranks decreasing with the distance to a randomly chosen data point. We then query the tree times, with point queries drawn from the same Zipfian distribution. We repeat this process 32 times and report the median of the average query depth across all runs. We find that, when point frequencies are distributed smoothly over space, our method's performance improves on less skewed distributions, as seen in Figure 5.
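The two weight assignments can be sketched as below. The ordering convention for the spatial case is an assumption: here the anchor point and its nearest neighbors receive the largest weights, so weights decay with distance. The function names are hypothetical.

```python
import math
import random

def spatial_zipf_weights(points, s, rng):
    """Assign Zipfian weights by distance to a randomly chosen anchor point.

    Assumption: the point closest to the anchor (the anchor itself) gets
    rank 1, i.e., the largest weight; weights shrink with distance.
    """
    anchor = rng.choice(points)
    order = sorted(range(len(points)), key=lambda i: math.dist(points[i], anchor))
    raw = [1.0 / (r ** s) for r in range(1, len(points) + 1)]
    total = sum(raw)
    weights = [0.0] * len(points)
    for rank_index, point_index in enumerate(order):
        weights[point_index] = raw[rank_index] / total
    return weights

def random_zipf_weights(points, s, rng):
    """Assign the same Zipfian weights to the points in a random order."""
    raw = [1.0 / (r ** s) for r in range(1, len(points) + 1)]
    rng.shuffle(raw)
    total = sum(raw)
    return [w / total for w in raw]
```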

KD trees on 3D point-cloud datasets. Finally, we evaluate our method on point-cloud data generated from the Stanford Lucy mesh [Sta96], with dimensions . We first sample points uniformly on the mesh surface, bin them with resolution 10, and assign each bin a lookup frequency given by its number of occupants. This results in 32k bins. Note that the resulting frequency distribution over the binned cells is not highly skewed.
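A minimal sketch of the binning step, assuming that "resolution 10" means cubic cells with side length 10 in mesh units (this reading, and the name `cell_size`, are assumptions). Each occupied cell becomes a key whose frequency is its share of the samples; the held-out samples described next would be binned the same way to produce lookups.

```python
import math
from collections import Counter

def bin_frequencies(points, cell_size):
    """Map 3D samples to grid cells and use occupancy counts as frequencies.

    The cell key is (floor(x / cell_size), floor(y / cell_size), floor(z / cell_size)).
    """
    counts = Counter(
        tuple(math.floor(c / cell_size) for c in p) for p in points
    )
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()}
```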

We then generate a new set of surface samples on the mesh, binning them and assigning frequencies in the same way. When looking up the new samples, our method yields an average query depth of 15.1, while a traditional KD tree yields an average query depth of 17.6.

Acknowledgements

Samson Zhou is supported in part by NSF CCF-2335411. The work was conducted in part while Samson Zhou was visiting the Simons Institute for the Theory of Computing as part of the Sublinear Algorithms program.

References

- [ACC+11] Nir Ailon, Bernard Chazelle, Kenneth L. Clarkson, Ding Liu, Wolfgang Mulzer, and C. Seshadhri. Self-improving algorithms. SIAM J. Comput., 40(2):350–375, 2011.

- [ACE+23] Antonios Antoniadis, Christian Coester, Marek Eliás, Adam Polak, and Bertrand Simon. Online metric algorithms with untrusted predictions. ACM Trans. Algorithms, 19(2):19:1–19:34, 2023.

- [ACI22] Anders Aamand, Justin Y. Chen, and Piotr Indyk. (Optimal) online bipartite matching with degree information. In Advances in Neural Information Processing Systems 35: Annual Conference on Neural Information Processing Systems, NeurIPS, 2022.

- [ACL14] Laurence Aitchison, Nicola Corradi, and Peter E Latham. Zipf’s law arises naturally in structured, high-dimensional data. arXiv preprint arXiv:1407.7135, 2014.

- [ACL+21] Matteo Almanza, Flavio Chierichetti, Silvio Lattanzi, Alessandro Panconesi, and Giuseppe Re. Online facility location with multiple advice. In Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems, NeurIPS, pages 4661–4673, 2021.

- [AGKK23] Antonios Antoniadis, Themis Gouleakis, Pieter Kleer, and Pavel Kolev. Secretary and online matching problems with machine learned advice. Discret. Optim., 48(Part 2):100778, 2023.

- [AGKP21] Keerti Anand, Rong Ge, Amit Kumar, and Debmalya Panigrahi. A regression approach to learning-augmented online algorithms. In Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems, NeurIPS, pages 30504–30517, 2021.

- [AGKP22] Keerti Anand, Rong Ge, Amit Kumar, and Debmalya Panigrahi. Online algorithms with multiple predictions. In International Conference on Machine Learning, ICML, pages 582–598, 2022.

- [AGP20] Keerti Anand, Rong Ge, and Debmalya Panigrahi. Customizing ML predictions for online algorithms. In Proceedings of the 37th International Conference on Machine Learning, ICML, pages 303–313, 2020.

- [AM78] Brian Allen and J. Ian Munro. Self-organizing binary search trees. J. ACM, 25(4):526–535, 1978.

- [APT22] Yossi Azar, Debmalya Panigrahi, and Noam Touitou. Online graph algorithms with predictions. In Proceedings of the 2022 ACM-SIAM Symposium on Discrete Algorithms, SODA, pages 35–66, 2022.

- [ASS20] Chen Avin, Iosif Salem, and Stefan Schmid. Working set theorems for routing in self-adjusting skip list networks. In IEEE INFOCOM 2020-IEEE Conference on Computer Communications, pages 2175–2184. IEEE, 2020.

- [BBB+20] Dmitry Basin, Edward Bortnikov, Anastasia Braginsky, Guy Golan-Gueta, Eshcar Hillel, Idit Keidar, and Moshe Sulamy. KiWi: A key-value map for scalable real-time analytics. ACM Transactions on Parallel Computing (TOPC), 7(3):1–28, 2020.

- [BHZ18] Jeremiah Blocki, Benjamin Harsha, and Samson Zhou. On the economics of offline password cracking. In IEEE Symposium on Security and Privacy, SP, Proceedings, pages 853–871. IEEE Computer Society, 2018.

- [BMS20] Étienne Bamas, Andreas Maggiori, and Ola Svensson. The primal-dual method for learning augmented algorithms. In Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems, NeurIPS, 2020.

- [CAI16] CAIDA. The CAIDA UCSD anonymized Internet traces. https://www.caida.org/catalog/datasets/passive_dataset, 2016.

- [CCC+23] Xinyuan Cao, Jingbang Chen, Li Chen, Chris Lambert, Richard Peng, and Daniel Sleator. Learning-augmented B-trees, 2023.

- [CD07] Lawrence Cayton and Sanjoy Dasgupta. A learning framework for nearest neighbor search. In Advances in Neural Information Processing Systems 20, Proceedings of the Twenty-First Annual Conference on Neural Information Processing Systems, pages 233–240, 2007.

- [CEI+22] Justin Y. Chen, Talya Eden, Piotr Indyk, Honghao Lin, Shyam Narayanan, Ronitt Rubinfeld, Sandeep Silwal, Tal Wagner, David P. Woodruff, and Michael Zhang. Triangle and four cycle counting with predictions in graph streams. In The Tenth International Conference on Learning Representations, ICLR, 2022.

- [CFLM02] Valentina Ciriani, Paolo Ferragina, Fabrizio Luccio, and S. Muthukrishnan. Static optimality theorem for external memory string access. In 43rd Symposium on Foundations of Computer Science (FOCS), Proceedings, pages 219–227. IEEE Computer Society, 2002.

- [CIW22] Justin Y. Chen, Piotr Indyk, and Tal Wagner. Streaming algorithms for support-aware histograms. In International Conference on Machine Learning, ICML, pages 3184–3203, 2022.

- [CLR+24] Karthik C. S., Euiwoong Lee, Yuval Rabani, Chris Schwiegelshohn, and Samson Zhou. On approximability of min-sum clustering. CoRR, abs/2412.03332, 2024.

- [Cov99] Thomas M Cover. Elements of information theory. John Wiley & Sons, 1999.

- [CSVZ22] Justin Y. Chen, Sandeep Silwal, Ali Vakilian, and Fred Zhang. Faster fundamental graph algorithms via learned predictions. In International Conference on Machine Learning, ICML, pages 3583–3602, 2022.

- [DIL+21] Michael Dinitz, Sungjin Im, Thomas Lavastida, Benjamin Moseley, and Sergei Vassilvitskii. Faster matchings via learned duals. In Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems, NeurIPS, pages 10393–10406, 2021.

- [DMVW23] Sami Davies, Benjamin Moseley, Sergei Vassilvitskii, and Yuyan Wang. Predictive flows for faster Ford-Fulkerson. In International Conference on Machine Learning, ICML, volume 202, pages 7231–7248, 2023.

- [EFS+22] Jon C. Ergun, Zhili Feng, Sandeep Silwal, David P. Woodruff, and Samson Zhou. Learning-augmented k-means clustering. In The Tenth International Conference on Learning Representations, ICLR, 2022.

- [Gab99] Xavier Gabaix. Zipf’s law for cities: an explanation. The Quarterly Journal of Economics, 114(3):739–767, 1999.

- [GLS+22] Elena Grigorescu, Young-San Lin, Sandeep Silwal, Maoyuan Song, and Samson Zhou. Learning-augmented algorithms for online linear and semidefinite programming. In Advances in Neural Information Processing Systems 35: Annual Conference on Neural Information Processing Systems, NeurIPS, 2022.

- [Goo12] Google. Google Ngram Viewer. http://books.google.com/ngrams/datasets, 2012.

- [Goo22] Google Books n-gram frequency lists. https://github.com/orgtre/google-books-ngram-frequency, 2022.

- [GP06] G. Pass, A. Chowdhury, and C. Torgeson. 500k user session collection. https://www.kaggle.com/datasets/dineshydv/aol-user-session-collection-500k, 2006.

- [GP19] Sreenivas Gollapudi and Debmalya Panigrahi. Online algorithms for rent-or-buy with expert advice. In Proceedings of the 36th International Conference on Machine Learning, ICML, pages 2319–2327, 2019.

- [GZ08] Tingjian Ge and Stan Zdonik. A skip-list approach for efficiently processing forecasting queries. Proceedings of the VLDB Endowment, 1(1):984–995, 2008.

- [HIKV19] Chen-Yu Hsu, Piotr Indyk, Dina Katabi, and Ali Vakilian. Learning-based frequency estimation algorithms. In 7th International Conference on Learning Representations, ICLR, 2019.

- [HPJ03] Yih-Chun Hu, Adrian Perrig, and David B Johnson. Efficient security mechanisms for routing protocols. In NDSS, 2003.

- [Huf52] David A Huffman. A method for the construction of minimum-redundancy codes. Proceedings of the IRE, 40(9):1098–1101, 1952.

- [IKQP21] Sungjin Im, Ravi Kumar, Mahshid Montazer Qaem, and Manish Purohit. Online knapsack with frequency predictions. In Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems, NeurIPS, pages 2733–2743, 2021.

- [ISZ21] Zachary Izzo, Sandeep Silwal, and Samson Zhou. Dimensionality reduction for Wasserstein barycenter. In Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS, pages 15582–15594, 2021.

- [IVY19] Piotr Indyk, Ali Vakilian, and Yang Yuan. Learning-based low-rank approximations. In Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS, pages 7400–7410, 2019.

- [JLL+20] Tanqiu Jiang, Yi Li, Honghao Lin, Yisong Ruan, and David P. Woodruff. Learning-augmented data stream algorithms. In 8th International Conference on Learning Representations, ICLR, 2020.

- [JLL+22] Shaofeng H.-C. Jiang, Erzhi Liu, You Lyu, Zhihao Gavin Tang, and Yubo Zhang. Online facility location with predictions. In The Tenth International Conference on Learning Representations, ICLR, 2022.

- [KADV23] Mikhail Khodak, Kareem Amin, Travis Dick, and Sergei Vassilvitskii. Learning-augmented private algorithms for multiple quantile release. In International Conference on Machine Learning, ICML 2023, pages 16344–16376, 2023.

- [KBC+18] Tim Kraska, Alex Beutel, Ed H. Chi, Jeffrey Dean, and Neoklis Polyzotis. The case for learned index structures. In Proceedings of the 2018 International Conference on Management of Data, SIGMOD Conference, pages 489–504, 2018.

- [KBTV22] Misha Khodak, Maria-Florina Balcan, Ameet Talwalkar, and Sergei Vassilvitskii. Learning predictions for algorithms with predictions. In Advances in Neural Information Processing Systems 35: Annual Conference on Neural Information Processing Systems, NeurIPS, 2022.

- [KLR96] Marek Karpinski, Lawrence L. Larmore, and Wojciech Rytter. Sequential and parallel subquadratic work algorithms for constructing approximately optimal binary search trees. In Proceedings of the Seventh Annual ACM-SIAM Symposium on Discrete Algorithms, pages 36–41, 1996.

- [Knu71] Donald E. Knuth. Optimum binary search trees. Acta Informatica, 1:14–25, 1971.

- [LJ13] Jonatan Lindén and Bengt Jonsson. A skiplist-based concurrent priority queue with minimal memory contention. In Principles of Distributed Systems: 17th International Conference, OPODIS 2013, Nice, France, December 16-18, 2013. Proceedings 17, pages 206–220. Springer, 2013.

- [LLL+23] Yi Li, Honghao Lin, Simin Liu, Ali Vakilian, and David P. Woodruff. Learning the positions in CountSketch. In The Eleventh International Conference on Learning Representations, ICLR, 2023.

- [LLMV20] Silvio Lattanzi, Thomas Lavastida, Benjamin Moseley, and Sergei Vassilvitskii. Online scheduling via learned weights. In Proceedings of the 2020 ACM-SIAM Symposium on Discrete Algorithms, SODA, pages 1859–1877, 2020.

- [LLW22] Honghao Lin, Tian Luo, and David P. Woodruff. Learning augmented binary search trees. In International Conference on Machine Learning, ICML, pages 13431–13440, 2022.

- [LV21] Thodoris Lykouris and Sergei Vassilvitskii. Competitive caching with machine learned advice. J. ACM, 68(4):24:1–24:25, 2021.

- [LVM+23] Silvia Lazzardi, Filippo Valle, Andrea Mazzolini, Antonio Scialdone, Michele Caselle, and Matteo Osella. Emergent statistical laws in single-cell transcriptomic data. Physical Review E, 107(4):044403, 2023.

- [Meh77] Kurt Mehlhorn. A best possible bound for the weighted path length of binary search trees. SIAM J. Comput., 6(2):235–239, 1977.

- [Mit18] Michael Mitzenmacher. A model for learned bloom filters and optimizing by sandwiching. In Advances in Neural Information Processing Systems 31: Annual Conference on Neural Information Processing Systems, NeurIPS, pages 462–471, 2018.

- [MV20] Michael Mitzenmacher and Sergei Vassilvitskii. Algorithms with predictions. In Tim Roughgarden, editor, Beyond the Worst-Case Analysis of Algorithms, pages 646–662. Cambridge University Press, 2020.

- [NCN23] Thy Dinh Nguyen, Anamay Chaturvedi, and Huy L. Nguyen. Improved learning-augmented algorithms for k-means and k-medians clustering. In The Eleventh International Conference on Learning Representations, ICLR, 2023.

- [PSK18] Manish Purohit, Zoya Svitkina, and Ravi Kumar. Improving online algorithms via ML predictions. In Advances in Neural Information Processing Systems 31: Annual Conference on Neural Information Processing Systems 2018, NeurIPS, pages 9684–9693, 2018.

- [PT10] Jonathan J Pittard and Alan L Tharp. Simplified self-adapting skip lists. In International Conference on Intelligent Data Engineering and Automated Learning, pages 126–136. Springer, 2010.

- [Pug90a] William Pugh. Concurrent maintenance of skip lists. University of Maryland at College Park, 1990.

- [Pug90b] William Pugh. Skip lists: A probabilistic alternative to balanced trees. Commun. ACM, 33(6):668–676, June 1990.

- [San15] Agnar Sandmo. The principal problem in political economy: income distribution in the history of economic thought. In Handbook of income distribution, volume 2, pages 3–65. Elsevier, 2015.

- [SGM22] Ziv Scully, Isaac Grosof, and Michael Mitzenmacher. Uniform bounds for scheduling with job size estimates. In 13th Innovations in Theoretical Computer Science Conference, ITCS, pages 114:1–114:30, 2022.

- [Sha01] Claude Elwood Shannon. A mathematical theory of communication. ACM SIGMOBILE mobile computing and communications review, 5(1):3–55, 2001.

- [SL00] Nir Shavit and Itay Lotan. Skiplist-based concurrent priority queues. In Proceedings 14th International Parallel and Distributed Processing Symposium. IPDPS 2000, pages 263–268. IEEE, 2000.

- [SLLA23] Yongho Shin, Changyeol Lee, Gukryeol Lee, and Hyung-Chan An. Improved learning-augmented algorithms for the multi-option ski rental problem via best-possible competitive analysis. In International Conference on Machine Learning, ICML, pages 31539–31561, 2023.

- [Sta96] The Stanford 3D scanning repository, 1996.

- [VA15] Nikolay K Vitanov and Marcel Ausloos. Test of two hypotheses explaining the size of populations in a system of cities. Journal of Applied Statistics, 42(12):2686–2693, 2015.

- [WLW20] Shufan Wang, Jian Li, and Shiqiang Wang. Online algorithms for multi-shop ski rental with machine learned advice. In Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems, NeurIPS, 2020.

- [WW16] Ding Wang and Ping Wang. On the implications of Zipf’s law in passwords. In Computer Security - ESORICS 2016 - 21st European Symposium on Research in Computer Security, Proceedings, Part I, volume 9878 of Lecture Notes in Computer Science, pages 111–131. Springer, 2016.

- [WZ20] Alexander Wei and Fred Zhang. Optimal robustness-consistency trade-offs for learning-augmented online algorithms. In Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems, NeurIPS, 2020.

- [Yao82] F Frances Yao. Speed-up in dynamic programming. SIAM Journal on Algebraic Discrete Methods, 3(4):532–540, 1982.

- [ZCL+23] Xuanhe Zhou, Cheng Chen, Kunyi Li, Bingsheng He, Mian Lu, Qiaosheng Liu, Wei Huang, Guoliang Li, Zhao Zheng, and Yuqiang Chen. FEBench: A benchmark for real-time relational data feature extraction. Proceedings of the VLDB Endowment, 16(12):3597–3609, 2023.

- [ZKH24] Ali Zeynali, Shahin Kamali, and Mohammad Hajiesmaili. Robust learning-augmented dictionaries. CoRR, abs/2402.09687, 2024.

Appendix A Additional Related Works

In this section, we discuss a number of related works in addition to those mentioned in Section 1. This paper builds upon the growing body of research on learning-augmented algorithms, data-driven algorithms, and algorithms with predictions. For example, learning-augmented algorithms have been applied to a number of problems in the online setting, where the input arrives sequentially and the goal is to achieve performance competitive with the best solution in hindsight, i.e., that of an algorithm that has the complete input on hand. Among the applications in the online model, learning-augmented algorithms have been developed for the ski rental problem and job scheduling [PSK18], caching [LV21], and matching [AGKK23]. Learning-augmented algorithms have also been used to improve the performance of specific data structures such as Bloom filters [Mit18], index structures [KBC+18], and CountMin and CountSketch [HIKV19]. Specifically, [KBC+18] proposes replacing B-trees (or other index structures) with trained models for querying databases: rather than traversing the B-tree to locate a record, they use a neural network to directly predict its position. Our work differs in that we retain the underlying data structures, i.e., skip lists and KD trees, and focus on optimizing their structure to enable faster queries, which allows us to continue supporting standard operations specific to these data structures, such as traversal, order statistics, merging, and joining. Our work uses the frequency estimation oracle trained in [HIKV19] on the AOL search query dataset and the CAIDA IP traffic monitoring dataset.

Perhaps the works most closely related to ours in the area of learning-augmented algorithms are those of [LLW22, CCC+23, ZKH24]. [LLW22] noted that traditional theory on statically optimal binary search trees [Knu71, Meh77] is no longer applicable in dynamic settings, where elements are added incrementally over time. Hence, they developed learning-augmented binary search trees (BSTs) and showed that their expected search time is near-optimal. [CCC+23] then extended these techniques to general search trees, allowing for nodes with more than two children. [CCC+23] also studied the setting where the predictions may be updated, while still relying on a data structure that requires rebalancing as the data changes dynamically. [ZKH24] also considers learning-augmented skip lists that are robust to erroneous predictions; we elaborate on the differences from [ZKH24] in Section 1.1. We also note that none of these works considers KD trees, an important data structure with applications in computer vision and computational geometry, which forms a basis of our work. For a more comprehensive source of related works in learning-augmented algorithms, see https://algorithms-with-predictions.github.io/.

Beyond the context of learning-augmented algorithms, there is a large body of work on designing data structures that are optimal for their inputs. For example, while standard balanced binary search trees use $O(\log n)$ query time, optimal static trees can be constructed using dynamic programming or efficient greedy algorithms [Meh77, Yao82, KLR96], given the access frequencies. However, the computational cost of these methods often exceeds the cost of directly querying the tree. As a result, a key objective is to construct a tree whose cost is within a constant factor of the entropy of the data. Several approaches have achieved this either for worst-case data [Meh77] or when the input follows particular distributions [AM78].
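To make the dynamic-programming approach concrete, the sketch below computes the minimum expected search cost, the sum of $p_i$ times the depth of key $i$ with the root at depth 1, over all BSTs on sorted keys with access probabilities $p$. It is a plain $O(n^3)$ recurrence in the spirit of [Knu71]; the monotonicity speedup discussed in [Knu71, Yao82] reduces this to $O(n^2)$ but is omitted. Weighting only successful searches is a simplifying assumption, and the function names are hypothetical.

```python
import math

def optimal_bst_cost(p):
    """Minimum of sum_i p[i] * depth_i over BSTs on keys 0..n-1 (root depth 1)."""
    n = len(p)
    prefix = [0.0]
    for x in p:
        prefix.append(prefix[-1] + x)
    # cost[i][j] is the optimal cost for keys i..j-1 (0 when the range is empty).
    cost = [[0.0] * (n + 1) for _ in range(n + 1)]
    for length in range(1, n + 1):
        for i in range(n - length + 1):
            j = i + length
            # Choosing root r pushes every key in i..j-1 one level deeper.
            weight = prefix[j] - prefix[i]
            cost[i][j] = weight + min(cost[i][r] + cost[r + 1][j] for r in range(i, j))
    return cost[0][n]

def entropy_bits(p):
    """Shannon entropy in bits, for comparing the optimal cost with H(p)."""
    return -sum(x * math.log2(x) for x in p if x > 0)
```

For a skewed vector such as `[0.8, 0.1, 0.1]` the optimal tree places the heavy key at the root, and its cost (1.3) can be compared against the entropy of the same vector.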

More recent works have considered using results from learning theory to estimate the query frequencies, rather than assuming explicit access to their values. For example, [CD07] studied how to obtain such an oracle for learning-augmented data structures. In particular, they study generalization bounds, analyzing the number of samples from an underlying distribution necessary to produce an oracle with a small error rate. On the other hand, [ACC+11] studied algorithms for sorting and clustering that can improve their expected performance given access to multiple instances sampled from a fixed distribution. Although the high-level goal of improving algorithmic performance using auxiliary information is the same as ours, the specifics differ considerably, as [ACC+11] focuses on techniques for sorting and clustering. Similarly, [CFLM02] considers self-adjusting data structures, including skip lists, which can change dynamically as the sequence of queries arrives. However, their methods are tailored specifically to the setting where there is access to the queries, whereas our data structures must be constructed without such access and must therefore be able to handle erroneous predictions. Finally, we remark that standard results from learning theory establish the learnability of oracles for the purposes of learning-augmented algorithms [EFS+22, ISZ21].

Appendix B Missing Proofs from Section 2

In this section, we give the missing proofs from Section 2.

B.1 Expected Search Time

We first show that each item is promoted to a higher level with probability at least .

Lemma B.1.

For each item at level , the probability that is in level is at least .

Proof.

Note that if , then will be placed in level . Otherwise, conditioned on the item being at level , Algorithm 1 places at level with probability . Thus, the probability that is in level is at least . ∎

We next upper bound the expected search time for any item at any fixed level, where the randomness is over the construction of the skip list.

Lemma B.2.

In expectation, the search time for item at level is at most .

Proof.

Suppose item is in level . Let be the subset of items in level that are less than . Note that by Lemma B.1, each item of is promoted to level with probability at least . Thus, the search time for item at level is if and only if none of the previous items in were promoted, which can only happen with probability at most . Hence, the expected search time for item at level is at most

∎

We now show that each item must be contained at some level depending on the predicted frequency of the item.

Lemma B.3.

Each item is included in level .

Proof.

First, observe that all items are inserted at level . Next, note that Algorithm 1 inserts item into level if or equivalently . Thus, each item is included in level . ∎

We next analyze the expected search time for each item .

Lemma B.4.

Suppose the total number of levels is at most for some constant . Then the expected search time for item is at most .

Proof.

Finally, we analyze the expected search time across the true probability distribution .

Lemma B.5.

Suppose the total number of levels is at most for some constant . For each , let be the proportion of queries to item . Then the expected search time is at most .

Proof.

For each query, the probability that the query is item is . Conditioned on the total number of levels being at most , by Lemma B.4, the expected search time for item is at most . Thus, the expected search time is at most

∎

We now show that with high probability, the total number of levels in the skip list is at most .

Lemma B.6.

With probability at least , the total number of levels in the skip list is at most .

Proof.

For each level , let be the number of items that are deterministically promoted to exactly level , i.e., . Note that for each fixed , the highest level at which it remains is a geometric random variable with parameter , beyond the highest level at which it is deterministically placed. This is because the item is promoted to each higher level with probability . Hence with probability , is not placed at least levels above its highest deterministic placement. Therefore, the probability that an item at level is placed at level is at most . Since no fixed will have predicted frequency more than , no item will be deterministically placed at level . Hence by a union bound over all , the probability that an item is placed at level is at most

On the other hand, we have , so that

Therefore, with probability at least , the total number of levels in the skip list is at most . ∎
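The geometric tail used in this proof can be illustrated with a standard randomized skip list that ignores predictions entirely. The promotion probability is elided in this section, so the value 1/2 in the example is an assumption, as is the function name. With promotion probability 1/2, an item exceeds level $L$ with probability $2^{-L}$, so a union bound over $n$ items gives that the height exceeds $\log_2 n + c$ with probability at most $2^{-c}$.

```python
import random

def skip_list_height(n, promote_prob, rng):
    """Number of levels used by a randomized skip list on n items.

    Every item starts on level 1 and moves up one level each time an
    independent coin with success probability promote_prob succeeds,
    so each item's height is geometric; the list height is the maximum.
    """
    height = 0
    for _ in range(n):
        h = 1
        while rng.random() < promote_prob:
            h += 1
        height = max(height, h)
    return height

# Heights for n = 4096 = 2**12 items concentrate a few levels above log2(n) = 12.
rng = random.Random(0)
heights = [skip_list_height(1 << 12, 0.5, rng) for _ in range(20)]
```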

B.2 Near-Optimality

We first recall the construction of a Huffman code, a type of variable-length code that is often used for data compression. A Huffman code is known to be an optimal prefix code and can be represented by a binary tree, which we call the Huffman tree [Huf52].

To construct a Huffman code, we first create a min-heap priority queue that initially contains all the leaf nodes keyed by their frequencies, so that the least frequent items have the highest priority. The algorithm then repeatedly removes the two nodes with the lowest frequencies from the priority queue; these become the left and right children of a new internal node whose frequency is the sum of the frequencies of the two nodes. This internal node is then added back to the priority queue. The process continues until only a single node remains in the priority queue, which is the root of the Huffman tree.

A binary code is then assigned to the paths from the root to each leaf node in the Huffman tree, so that each movement along a left edge in the tree corresponds to appending a 0 to the codeword, and each movement along a right edge in the tree corresponds to appending a 1 to the codeword. Thus, the resulting binary code for each item is the path from the root to the leaf node corresponding to the item.
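The construction above can be sketched with Python's `heapq`. A running counter breaks frequency ties so that the heap never compares tree nodes; internal nodes are two-element lists and leaves are the (hashable) symbols, a representation chosen here for illustration. The helper functions compute the expected codeword length and the entropy, the two quantities related by Theorem B.7.

```python
import heapq
import itertools
import math

def huffman_codes(freqs):
    """Huffman codewords for a dict mapping symbol -> positive frequency.

    A left edge appends '0' and a right edge appends '1'.
    """
    tiebreak = itertools.count()  # keeps the heap from comparing nodes
    heap = [(f, next(tiebreak), sym) for sym, f in freqs.items()]
    heapq.heapify(heap)
    if not heap:
        return {}
    if len(heap) == 1:
        return {heap[0][2]: "0"}  # a lone symbol still needs one bit
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)   # least frequent node
        f2, _, right = heapq.heappop(heap)  # next least frequent node
        heapq.heappush(heap, (f1 + f2, next(tiebreak), [left, right]))
    codes = {}
    stack = [(heap[0][2], "")]
    while stack:
        node, prefix = stack.pop()
        if isinstance(node, list):  # internal node [left, right]
            stack.append((node[0], prefix + "0"))
            stack.append((node[1], prefix + "1"))
        else:                       # leaf: record the path as the codeword
            codes[node] = prefix
    return codes

def expected_length(freqs, codes):
    total = sum(freqs.values())
    return sum(f / total * len(codes[s]) for s, f in freqs.items())

def entropy_bits(freqs):
    total = sum(freqs.values())
    return -sum(f / total * math.log2(f / total) for f in freqs.values() if f > 0)
```

For the dyadic frequencies `{"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}` the codewords are `0`, `10`, `110`, `111`, and the expected length equals the entropy, 1.75 bits.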

Huffman coding is a type of symbol-by-symbol coding, where each individual item is encoded separately, as opposed to alternatives such as run-length encoding. It is known that Huffman coding is optimal among symbol-by-symbol codes for a known input probability distribution [Huf52] and moreover, by Shannon’s source coding theorem, that the entropy of the probability distribution is a lower bound on the expected length of a codeword of a symbol-by-symbol code:

Theorem B.7 (Shannon’s source coding theorem).

[Sha01] Given a random variable so that with probability , let denote the length of the codeword assigned to by a Huffman code. Then , where is the entropy of .

We now prove our lower bound on the expected search time of an item drawn from a probability distribution . See 2.2

Proof.

Let be a skip list. We build a symbol-by-symbol encoding using the search process in . We begin at the top level. At each step, we either terminate, move to the next item at the current level, or move down to a lower level. As in Huffman coding, we append a 0 to the codeword when we move down to a lower level, and we append a 1 to the codeword when we move to the next item at the current level. Now, the search time for an item in corresponds to the length of the codeword of in the symbol-by-symbol encoding. By Theorem B.7 and the optimality of Huffman codes among symbol-by-symbol encodings, we have that , where is the probability distribution vector of . ∎
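The encoding in this proof can be sketched directly. The skip list is represented, hypothetically, as a list of sorted key lists from the sparsest top level down to the bottom level holding every key; `None` stands for the head sentinel. The search appends `1` for each move to the next item on the current level and `0` for each move down, and stops as soon as it lands on the target, so the codeword length equals the number of search steps.

```python
from bisect import bisect_right

def search_codeword(levels, x):
    """Bit string recording the search path for key x in a skip list.

    levels: sorted key lists, top (sparsest) level first, bottom level
    containing every key. '1' = move right, '0' = move down.
    Raises KeyError if x is not stored.
    """
    bits = []
    cur = None  # head sentinel, smaller than every key
    lvl = 0
    while True:
        row = levels[lvl]
        idx = 0 if cur is None else bisect_right(row, cur)
        nxt = row[idx] if idx < len(row) else None
        if nxt is not None and nxt <= x:
            bits.append("1")  # advance along the current level
            cur = nxt
            if cur == x:
                return "".join(bits)
        elif lvl + 1 < len(levels):
            bits.append("0")  # drop to the next level down
            lvl += 1
        else:
            raise KeyError(x)
```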

B.3 Zipfian Distribution

In this section, we briefly describe the implications of our data structure to Zipfian distributions.

We first recall the following entropy upper bound for a probability distribution with support at most .

Theorem B.8.

[Cov99] Let be a probability distribution on a support of size . Then .

We can then upper bound the entropy of a probability vector that satisfies a Zipfian distribution with parameter . See Lemma 2.3

Proof.

Since is a probability distribution on the support of size , then by Theorem B.8, we have that . Thus, it remains to consider the case where . Since , we have

Note that there exists an integer such that for , we have . Since , then and thus

Hence,

∎
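As a numerical illustration of Theorem B.8 for Zipfian vectors (not a check of the constants in Lemma 2.3, which are elided here), the sketch below computes the entropy of $p_i \propto i^{-s}$ on $n = 1024$ ranks. At $s = 0$ the distribution is uniform and the entropy equals $\log_2 n = 10$ bits; for $s > 0$ it is strictly smaller and decreases as $s$ grows.

```python
import math

def zipf_pmf(n, s):
    """Zipfian distribution on ranks 1..n with p_i proportional to i**(-s)."""
    weights = [i ** (-s) for i in range(1, n + 1)]
    z = sum(weights)
    return [w / z for w in weights]

def entropy_bits(p):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

n = 1024
for s in (0.0, 0.5, 1.0, 1.5, 2.0):
    print(f"s={s}: H={entropy_bits(zipf_pmf(n, s)):.3f} bits, log2(n)={math.log2(n):.0f}")
```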

By Theorem 2.1 and Lemma 2.3, we have the following statement about the performance of our learning-augmented skip list on a set of search queries that follows a Zipfian distribution. See 2.4

B.4 Noisy Robustness

In this section, we show that our learning-augmented skip list construction is robust to somewhat inaccurate oracles. Let be the true-scaled frequency vector so that for each , is the probability that a random query corresponds to . Let be the predicted frequency vector, so that for each , is the predicted probability that a random query corresponds to . For , we call an oracle -noisy if for all , we have . See 2.5

Proof.

Suppose the total number of levels is at most for some constant . Note that this occurs with high probability for a learning-augmented skip list with a set of -noisy predictions. For each , let be the proportion of queries to item and let be the predicted proportion of queries to item . By Lemma B.5, the expected search time is at most

Since the oracle is -noisy then we have for all .

We first note that in the expected search time for is proportional to . Thus, to bound the expected search time for item , it suffices to assume for all .

Observe that for and , then implies

Hence, we have so that the expected search time for item is at most

Therefore, the expected search time is at most

Since the perfect oracle would achieve runtime , it follows that a learning-augmented skip list with a set of -noisy predictions has performance that matches that of a learning-augmented skip list built with a perfect oracle, up to an additive constant. ∎

Appendix C Missing Proofs from Section 3

First, we will show that the expected depth of a given query depends on the probability of that query, and that high-frequency queries must be found close to the root of our tree.

Lemma C.1.

Suppose is the space of possible input points and queries. Let and be the probability that a random query is made to , given the natural mapping between and . Then the level at which resides in the tree is at most .

Proof.

First, consider only the high-frequency query points and data points on which we base our construction.

In constructing the learning-augmented KD tree, we balance the contents of the child nodes such that a query to that node has a probability of of belonging to each of the children. Therefore, at a depth of , the probability of belonging to either child is . In particular, a query with probability at a depth must satisfy . Thus, we have that the depth of is , as desired.

Since the lowest probability of a high-frequency data point is , this tree must have a depth of at most .

Now, consider a low-frequency data point, which we add to the bottom of the tree. By construction, the learned portion of our tree has depth at most . Then, when inserting the additional data points as a balanced KD tree, we can accumulate at most an additional depth of . Note that implies . Thus, this low-frequency data point will have a depth of at most , as desired.

Similarly, if is not a data point and is low frequency, we achieve the same bound of . In this case, we simply terminate at a leaf node and determine that the desired query is not in the dataset.

In summary, any query which has high frequency can be found in time. Low-frequency data points can similarly be found in time, and low frequency queries can be determined to not exist in time. ∎
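The probability-balancing step in this proof can be sketched as follows. This is a hypothetical illustration, not the paper's implementation: each node stores the weighted median of its points along axis `depth % k` (ties broken by the full coordinate tuple), so each child subtree holds at most half of the node's probability mass. A point stored at depth $d$ (root at depth 0) then has probability at most $2^{-d}$, i.e., depth at most $\log_2(1/p)$. Instead of separately inserting low-frequency points as a balanced KD tree, this sketch simply falls back to a count median whenever a subset carries no probability mass.

```python
import math

def build_weighted_kd(points, probs, depth=0, depths=None):
    """Probability-balanced KD tree (hypothetical sketch).

    points: distinct k-dimensional tuples; probs: their query probabilities.
    Returns (tree, depths): tree is None or (point, axis, left, right), and
    depths maps each stored point to its depth (root = 0).
    """
    if depths is None:
        depths = {}
    if not points:
        return None, depths
    axis = depth % len(points[0])
    # Sort by the split coordinate, breaking ties by the whole tuple.
    order = sorted(range(len(points)), key=lambda i: (points[i][axis], points[i]))
    total = sum(probs[i] for i in order)
    pos = len(order) // 2  # count median, used when the subset has no mass
    if total > 0:
        acc = 0.0
        for j, i in enumerate(order):
            acc += probs[i]
            if acc >= total / 2:  # first point where cumulative mass reaches half
                pos = j
                break
    m = order[pos]
    depths[points[m]] = depth
    left_ids, right_ids = order[:pos], order[pos + 1:]
    left, _ = build_weighted_kd([points[i] for i in left_ids],
                                [probs[i] for i in left_ids], depth + 1, depths)
    right, _ = build_weighted_kd([points[i] for i in right_ids],
                                 [probs[i] for i in right_ids], depth + 1, depths)
    return (points[m], axis, left, right), depths

def lookup_depth(tree, q):
    """Depth at which q is found, or None if the search reaches an empty subtree."""
    depth = 0
    while tree is not None:
        point, axis, left, right = tree
        if q == point:
            return depth
        # Same (coordinate, tuple) order as the construction.
        tree = left if (q[axis], q) < (point[axis], point) else right
        depth += 1
    return None

def depth_bound(p):
    """Upper bound log2(1/p) on the depth of a stored point with probability p > 0."""
    return math.log2(1.0 / p)
```

A query for a point that is not stored ends at an empty subtree and returns `None`, mirroring the case in the proof where the search terminates at a leaf and reports that the query is not in the dataset.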

Lemma C.2.

Suppose is the space of possible input points and queries. Let and be the probability that a random query is made to , given the natural mapping between and . Then the level at which resides in the tree is at most .

Proof.

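This follows directly from the analysis in the proof of Lemma C.1. The learned portion of the tree has depth at most $\log_2 n$, and the balanced KD tree holding the remaining points adds at most another $\log_2 n$ levels. ∎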
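The two-part layout used in Lemmas C.1 and C.2 can be sketched as follows. Points with $p_x \ge 1/n$ form a learned top, and the remaining points sit in balanced KD subtrees below it. This is our own illustration under stated assumptions:

- all coordinates are distinct along every axis;
- the learned part uses a weighted-median split;
- each low-frequency point is routed to the empty child slot it reaches in the learned part, and every slot receives a balanced KD tree.

All function names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, ...]


@dataclass
class Node:
    point: Point
    axis: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def build(items: List[Tuple[Point, float]], depth: int, k: int) -> Optional[Node]:
    """Weighted-median KD tree; with unit weights this is an ordinary balanced KD tree."""
    if not items:
        return None
    axis = depth % k
    items = sorted(items, key=lambda item: item[0][axis])
    half, acc, m = sum(w for _, w in items) / 2, 0.0, len(items) - 1
    for i, (_, w) in enumerate(items):
        acc += w
        if acc >= half:
            m = i
            break
    return Node(items[m][0], axis,
                build(items[:m], depth + 1, k), build(items[m + 1:], depth + 1, k))


def attach_low(node: Optional[Node], pts: List[Point], depth: int, k: int) -> Optional[Node]:
    """Route low-frequency points to empty slots of the learned tree; hang a balanced KD tree in each slot."""
    if node is None:
        return build([(p, 1.0) for p in pts], depth, k)
    a, c = node.axis, node.point[node.axis]
    node.left = attach_low(node.left, [p for p in pts if p[a] < c], depth + 1, k)
    node.right = attach_low(node.right, [p for p in pts if p[a] >= c], depth + 1, k)
    return node


def build_learned_kdtree(probs: Dict[Point, float]) -> Optional[Node]:
    """High-frequency points (p_x >= 1/n) form the learned top; the rest go into balanced subtrees."""
    n = len(probs)
    k = len(next(iter(probs)))
    high = [(p, w) for p, w in probs.items() if w >= 1 / n]
    low = [p for p, w in probs.items() if w < 1 / n]
    return attach_low(build(high, 0, k), low, 0, k)


def search(node: Optional[Node], q: Point) -> Tuple[bool, int]:
    """Return (found, depth at which the search stopped); misses stop at an empty slot."""
    depth = 0
    while node is not None:
        if node.point == q:
            return True, depth
        node = node.left if q[node.axis] < node.point[node.axis] else node.right
        depth += 1
    return False, depth


# Usage: 101 points with distinct coordinates per axis and probabilities proportional to 1/rank^2.
pts = [(float(i), float((37 * i) % 101)) for i in range(101)]
raw = [1.0 / (i + 1) ** 2 for i in range(101)]
probs = {p: r / sum(raw) for p, r in zip(pts, raw)}
root = build_learned_kdtree(probs)
```

An unsuccessful search simply falls off the tree at an empty slot. This matches the termination argument in the proof of Lemma C.1. In this sketch every stored point ends up at depth at most $2\log_2 n + 1$.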

We have now shown that the depth of a given query point is bounded by both $O(\log(1/p_x))$ and $O(\log n)$. Using this fact, we next show that the expected query time of our algorithm is bounded both by the entropy of the query distribution and by $O(\log n)$.

We now analyze the performance of our learning-augmented KD tree by proving Theorem 3.1.

Proof.

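Following Lemma C.1, the points in $\mathcal{X}$ with non-negligible probability ($p_x \ge 1/n$) are guaranteed to be in the learning-augmented KD tree at depth at most $O(\log(1/p_x))$. Points in the dataset with negligible probability are also in the tree, at depth $O(\log n)$. For all other points, which are not contained in the KD tree, the query terminates at depth $O(\log n)$.

For any point $x \in \mathcal{X}$, the depth at which a query to $x$ terminates in the tree is therefore

$$\mathrm{depth}(x) = O\left(\min\left\{\log\frac{1}{p_x},\ \log n\right\}\right). \tag{1}$$

Then, the expected search time is the expected depth of a queried point,

$$\mathbb{E}[\mathrm{depth}] = \sum_{x \in \mathcal{X}} p_x \cdot \mathrm{depth}(x) = O\left(\sum_{x \in \mathcal{X}} p_x \min\left\{\log\frac{1}{p_x},\ \log n\right\}\right) = O\left(\min\left\{H(p),\ \log n\right\}\right), \tag{2}$$

where $H(p) = \sum_{x \in \mathcal{X}} p_x \log_2(1/p_x)$ is the entropy of the query distribution.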

∎

Thus, we have shown that the expected query time of our algorithm is bounded by the entropy of the query distribution. In particular, when the distribution is highly skewed, the entropy $H(p)$ can be far smaller than $\log n$.
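To see how the bound in Eq. (2) behaves, the following Python sketch evaluates $\sum_x p_x \min\{\log_2(1/p_x), \log_2 n\}$ with the hidden constants dropped. It prints this quantity next to $H(p)$ and $\log_2 n$ for Zipfian distributions. The helper names and the chosen Zipf exponents are illustrative assumptions.

```python
import math
from typing import List, Sequence


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy H(p) in bits."""
    return sum(px * math.log2(1 / px) for px in p if px > 0)


def depth_bound(p: Sequence[float], n: int) -> float:
    """sum_x p_x * min(log2(1/p_x), log2(n)): the sum inside Eq. (2), constant factors dropped."""
    return sum(px * min(math.log2(1 / px), math.log2(n)) for px in p if px > 0)


def zipf(m: int, s: float) -> List[float]:
    """Zipfian distribution over m items with exponent s (s = 0 gives the uniform distribution)."""
    raw = [1 / (r ** s) for r in range(1, m + 1)]
    total = sum(raw)
    return [w / total for w in raw]


for s in (0.0, 1.0, 2.0):
    p = zipf(1024, s)
    print(f"s={s}: H(p)={entropy(p):.2f} bits, bound={depth_bound(p, len(p)):.2f}, "
          f"log2 n={math.log2(len(p)):.0f}")
```

For the uniform case the three quantities coincide. As the exponent grows, $H(p)$ and the bound fall well below $\log_2 n$.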

C.1 Near-Optimality

The near-optimality of our learning-augmented KD trees follows from an argument similar to the proof of near-optimality of our learning-augmented skip lists. In particular, we again use Shannon's source coding theorem (Theorem B.7), which yields Theorem 3.2.

Proof.

In a learning-augmented KD tree, the search path to an element $x$ can be encoded as a binary codeword whose entries indicate whether the lower or the upper branch is taken at each node traversal. Moreover, the length of this codeword in the symbol-by-symbol encoding corresponds to the depth of $x$. Then, by Theorem B.7 and the optimality of Huffman codes among symbol-by-symbol encodings, the expected depth is $\Omega(H(p))$, as desired. ∎
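The lower bound rests on Shannon's source coding theorem. No binary prefix code has expected length below $H(p)$, and a Huffman code gets within one bit of it. The Python sketch below computes a Huffman code's expected length and compares it with the entropy. It is a standard textbook construction, not code from the paper.

```python
import heapq
import math
from typing import Sequence


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy H(p) in bits."""
    return sum(px * math.log2(1 / px) for px in p if px > 0)


def huffman_expected_length(p: Sequence[float]) -> float:
    """Expected codeword length, in bits, of a binary Huffman code for the distribution p."""
    heap = [px for px in p if px > 0]
    heapq.heapify(heap)
    cost = 0.0
    while len(heap) > 1:
        a, b = heapq.heappop(heap), heapq.heappop(heap)
        cost += a + b  # every symbol under this merge gets one more bit
        heapq.heappush(heap, a + b)
    return cost


raw = [1 / r ** 1.5 for r in range(1, 501)]
total = sum(raw)
p = [w / total for w in raw]
# Source coding theorem: H(p) <= optimal expected length < H(p) + 1.
print(f"H(p) = {entropy(p):.4f}, Huffman = {huffman_expected_length(p):.4f}")
```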

C.2 Noisy Robustness

In this section, we analyze the performance of the learning-augmented KD tree under noisy data conditions.

Previously, we analyzed the performance of the learning-augmented KD tree assuming access to a perfect prediction oracle.

Now, we analyze its performance with a noisy oracle. That is, for each $x \in \mathcal{X}$, there is a true-scaled frequency $p_x$ with which $x$ will be queried, and a prediction $\hat{p}_x$ made by the noisy oracle.

First, we analyze the multiplicative robustness of the algorithm. In this case, the oracle predicts the frequency up to some multiplicative constant $\alpha > 0$, such that $p_x = \alpha\,\hat{p}_x$.

Lemma C.3.

Suppose $\mathcal{X}$ is the space of possible input points and queries. Let $x \in \mathcal{X}$ and let $\hat{p}_x$ be the probability that a random query is made to $x$ during tree construction. Suppose that at runtime the true probability of querying $x$ is $p_x = \alpha\,\hat{p}_x$ for some $\alpha > 0$. Then the level at which $x$ resides in the tree is at most $O(\log(1/p_x) + \log\max\{1, \alpha\})$.

Proof.

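If $\alpha \le 1$, this is immediate. In this case, during construction we expected $x$ to be queried at least as often as it actually is, so our construction placed $x$ at least as high in the tree as necessary. Thus, the depth is at most the previously shown $O(\log(1/\hat{p}_x)) \le O(\log(1/p_x))$, since $\hat{p}_x \ge p_x$.

Now, suppose $\alpha > 1$. In this case, we may have placed $x$ deeper in the tree than we should have, as the construction frequency $\hat{p}_x$ is less than the true query frequency $p_x$. Then, as in Lemma C.1, the depth of $x$ is $O(\log(1/\hat{p}_x))$, where

$$\log\frac{1}{\hat{p}_x} = \log\frac{\alpha}{p_x} = \log\frac{1}{p_x} + \log\alpha, \tag{3}$$

so the depth of $x$ is $O(\log(1/p_x) + \log\alpha)$.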

∎
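The following is a quick numerical check of the multiplicative-robustness reasoning. Suppose the tree is built from predictions $\hat{p}$ while queries follow $p$. Summing the per-point bound $\log_2(1/\hat{p}_x)$ then gives $\sum_x p_x \log_2(1/\hat{p}_x) = H(p) + \mathrm{KL}(p\,\|\,\hat{p})$. This is at least $H(p)$, and it is at most $H(p) + \log_2 \alpha$ whenever every $\hat{p}_x \ge p_x/\alpha$. The noise pattern and function names below are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy H(p) in bits."""
    return sum(px * math.log2(1 / px) for px in p if px > 0)


def mispredicted_cost(p_true: Sequence[float], p_pred: Sequence[float]) -> float:
    """sum_x p_x * log2(1 / p_pred_x): depth bound for a tree built from p_pred but queried with p_true.

    Requires p_pred_x > 0 wherever p_true_x > 0.
    """
    return sum(pt * math.log2(1 / pp) for pt, pp in zip(p_true, p_pred) if pt > 0)


def normalize(w: Sequence[float]) -> List[float]:
    total = sum(w)
    return [x / total for x in w]


m = 200
p_true = normalize([r ** -1.5 for r in range(1, m + 1)])
# Hypothetical deterministic multiplicative noise: each weight scaled by a factor in [0.5, 2].
p_pred = normalize([w * (0.5 + 1.5 * ((7 * i) % 10) / 9) for i, w in enumerate(p_true)])
alpha = max(pt / pp for pt, pp in zip(p_true, p_pred))  # worst-case underestimation factor
H, cost = entropy(p_true), mispredicted_cost(p_true, p_pred)
print(f"H(p) = {H:.3f}, cost = {cost:.3f}, H(p) + log2(alpha) = {H + math.log2(alpha):.3f}")
```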

Having shown the multiplicative robustness of the learning-augmented KD tree, we next analyze the additive-multiplicative robustness of the method. An oracle is -noisy if the prediction satisfies for constants .

We now prove Theorem 3.4.

Proof.

From Lemma C.1, the depth of a point $x$ in the learning-augmented KD tree is $O(\log(1/\hat{p}_x))$, where $\hat{p}_x$ is the predicted frequency of $x$.

Then, with an -noisy oracle we have that the prediction is bounded from below by . As is a point in the tree, we can assume that the true-scaled frequency has a lower-bound of .

By choosing we ensure that the predicted is always nonnegative. Then, let

| (4) |

Then, applying Lemma C.1 again

| (5) |

∎

Appendix D Additional Empirical Evaluations

D.1 Skip Lists

In this section, we empirically compare our learning-augmented skip list with traditional skip lists on both synthetic and real-world datasets. The synthetic datasets cover a diverse range of element distributions, characterized by their skewness, which lets us assess how the effectiveness of learning augmentation depends on skewness. The real-world datasets are internet flow data from the Center for Applied Internet Data Analysis (CAIDA) and web queries from AOL. For the CAIDA datasets, the Zipfian parameter α is computed to reflect the skewness of the data distribution.

The performance metrics are insertion time and query time. Insertion time is the total time to insert all elements of the query stream into the data structure. Query time is the total time to find all elements of the query stream using the data structure.

The computer used for benchmarking is a Lenovo ThinkPad P15 with an Intel Core processor, 64GB RAM, and a 1TB solid-state drive. The tests were conducted on Ubuntu 22.04.3 LTS with GNOME version 42.9.

D.1.1 Synthetic Datasets

For the synthetic datasets, both the classic and the augmented skip lists are tested with different element counts and values of the Zipfian parameter α. We evaluate the uniform distribution and Zipfian distributions with α between 1.01 and 2, with query counts of up to 4 million. Note that, for the same query count, the number of unique elements queried varies with α, which may affect the insertion time.

Query size of synthetic data (unit: thousand queries)

| Distribution | 0.5 | 10 | 100 | 500 | 1000 | 1500 | 2000 | 2500 | 3000 | 3500 | 4000 | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Uniform | 3.02 | 0.84 | 1.01 | 1.05 | 1.11 | 1.14 | 1.17 | 1.21 | 1.22 | 1.42 | 1.4 | 1.33 |
| Zipfian, α = 1.01 | 3.63 | 2.6 | 1.04 | 1.24 | 1.03 | 1.21 | 1.2 | 1.14 | 1.3 | 1.18 | 1.3 | 1.53 |
| Zipfian, α = 1.25 | 3.28 | 3.74 | 5.87 | 2.89 | 2.47 | 3.21 | 2.95 | 3.34 | 3.55 | 3.16 | 3.12 | 3.42 |
| Zipfian, α = 1.5 | 2.42 | 8.97 | 6.93 | 6.54 | 7.99 | 5.83 | 4.65 | 3.8 | 4.92 | 5.34 | 5.93 | 5.76 |
| Zipfian, α = 1.75 | 12.43 | 10.4 | 5.76 | 9.78 | 6.76 | 7.13 | 7.31 | 7.09 | 6.63 | 5.07 | 6.98 | 7.76 |
| Zipfian, α = 2 | 8.19 | 2.5 | 5.56 | 10.1 | 4.47 | 3.91 | 7.26 | 5.33 | 9.29 | 7.65 | 5.55 | 6.35 |

| α | Unique node count |
|---|---|
| 1.01 | 2886467 |
| 1.25 | 259892 |
| 1.75 | 8386 |
| 2 | 2796 |

Table 1 shows the speed-up factor, defined as the ratio of the time taken by the classic skip list to the time taken by the augmented skip list on the same query stream. The performance of our augmented skip list generally improves as dataset skewness increases: the average speed-up factor grows from 1.33 on uniform data to 7.76 for α = 1.75 (and is 6.35 for α = 2). On uniform data, the augmented skip list performs comparably to or slightly better than the traditional skip list. On skewed data, it outperforms the traditional skip list by an average factor of up to about 7.8, depending on the skewness of the dataset.

Figure 6 shows that, for the same query-stream size, insertion time decreases as the dataset becomes more skewed. This is attributed to the smaller number of unique nodes in more skewed datasets, as shown in Table 2.
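The dependence of the unique node count on α can be reproduced qualitatively with the sketch below. It assumes a finite key universe and per-key probabilities proportional to $r^{-\alpha}$ for rank $r$. The paper does not specify its generator, so `zipf_query_stream` and its parameters are hypothetical.

```python
import random
from typing import List


def zipf_query_stream(num_queries: int, universe: int, alpha: float, seed: int = 0) -> List[int]:
    """Draw keys from {0, ..., universe - 1}; key r - 1 has probability proportional to r ** -alpha."""
    rng = random.Random(seed)
    weights = [r ** -alpha for r in range(1, universe + 1)]
    return rng.choices(range(universe), weights=weights, k=num_queries)


for alpha in (1.01, 1.25, 1.75, 2.0):
    stream = zipf_query_stream(20_000, 100_000, alpha)
    print(alpha, len(set(stream)))  # number of distinct keys that must be inserted
```

Larger α concentrates the stream on fewer keys, so fewer distinct nodes have to be inserted.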

The query time of augmented skip lists is also reduced greatly compared to the classic skip lists as shown in Figure 7.

In addition, we compare the performance of standard binary search trees and standard skip lists. In particular, we generate datasets of size $n$ for a range of values of $n$. For each fixed value of $n$, each element of the dataset is generated uniformly at random. Because the dataset is generated uniformly at random, learning-augmented data structures would perform similarly to oblivious ones. We measure the time to construct each data structure from the input dataset. As expected, skip lists perform significantly better than balanced binary search trees across all values of $n$, because the latter must constantly rebalance. In some cases, skip lists were faster than BSTs by a large factor. We illustrate our results in Figure 8.
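For reference, a minimal classic (oblivious) skip list in Python is sketched below. It is the textbook randomized construction, not the learning-augmented variant and not the code used in the experiments. Each inserted key draws a random height, so no rebalancing step is ever needed; this is the structural difference from balanced BSTs noted above. The class and parameter names (`SkipList`, `max_level`, `p`) are our own.

```python
import random
from typing import List, Optional


class _Node:
    __slots__ = ("key", "next")

    def __init__(self, key: Optional[int], level: int):
        self.key = key
        self.next: List[Optional["_Node"]] = [None] * level


class SkipList:
    """Classic randomized skip list: a key reaches level i + 1 with probability p given level i."""

    def __init__(self, max_level: int = 32, p: float = 0.5, seed: int = 0):
        self.max_level, self.p = max_level, p
        self.rng = random.Random(seed)
        self.head = _Node(None, max_level)  # sentinel spanning all levels
        self.level = 1  # number of levels currently in use

    def _random_level(self) -> int:
        lvl = 1
        while lvl < self.max_level and self.rng.random() < self.p:
            lvl += 1
        return lvl

    def insert(self, key: int) -> None:
        update = [self.head] * self.max_level  # last node before `key` on each level
        node = self.head
        for i in range(self.level - 1, -1, -1):
            while node.next[i] is not None and node.next[i].key < key:
                node = node.next[i]
            update[i] = node
        if node.next[0] is not None and node.next[0].key == key:
            return  # key already present
        lvl = self._random_level()
        self.level = max(self.level, lvl)
        new = _Node(key, lvl)
        for i in range(lvl):  # splice in; no rebalancing of other nodes
            new.next[i] = update[i].next[i]
            update[i].next[i] = new

    def contains(self, key: int) -> bool:
        node = self.head
        for i in range(self.level - 1, -1, -1):
            while node.next[i] is not None and node.next[i].key < key:
                node = node.next[i]
        node = node.next[0]
        return node is not None and node.key == key


sl = SkipList(seed=1)
keys = list(range(1000))
random.Random(2).shuffle(keys)
for key in keys:
    sl.insert(key)
assert all(sl.contains(key) for key in range(1000)) and not sl.contains(1000)
```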

D.1.2 AOL Dataset

The AOL dataset [GP06] contains around 20M web queries collected from 650k users over three months. Figure 9 shows the distribution of the queries. With an estimated α of 0.75, the AOL dataset is less skewed than CAIDA.

The AOL dataset more closely resembles a slightly skewed uniform distribution with very few highly frequent items: queries to items with frequency above 1000 account for only 5% of all AOL queries. This explains the smaller improvement on AOL shown in Figure 10. Nevertheless, the learning-augmented skip list still outperforms the traditional skip list on this slightly skewed dataset. This result is consistent with the synthetic results in Table 1, where lower α values yield lower speed-up factors.

D.2 KD Trees

For KD trees, we first describe our methodology for evaluating our data structure on synthetic data, and then describe our empirical evaluations on real-world datasets.

The computer used for KD tree benchmarking is a desktop machine with an Intel Core i9-14900KF @ 3200MHz, 64GB RAM, and a 2TB solid-state drive. The tests were conducted on Windows 10 Enterprise, version 10.0.19045 (Build 19045).

D.2.1 Synthetic Datasets with Perfect Knowledge

First, consider a dataset with a Zipfian distribution. To construct this dataset, we first select unique data points uniformly at random. We then generate Zipfian frequencies and randomly pair them with the data points; these frequencies serve as both the construction and the query frequencies. We construct a traditional KD tree and our learned KD tree on this dataset. We then evaluate query performance by sampling the known data points according to their Zipfian probabilities.

In Table 3, we use a Zipfian distribution with fixed parameters. The results show that our method outperforms a traditional KD tree on this distribution across all tested dimensions. The learned KD tree has lower average query time and lower average query depth (about 10.8 to 11.0, versus 13.3 to 13.4 for the traditional KD tree), at the cost of a longer construction time. In Figure 11, we vary the Zipfian parameters of our dataset. As expected, the advantage of our learned KD tree grows with the skewness of the distribution. Moreover, our method outperforms the traditional KD tree on all tested Zipfian distributions.
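A sketch of this data-generation procedure in Python follows. The exact domain and parameters are not given here, so the unit hypercube $[0,1)^d$, the Zipf exponent, and the function names are assumptions for illustration.

```python
import random
from typing import Dict, List, Tuple

Point = Tuple[float, ...]


def zipf_kd_dataset(n: int, dim: int, s: float, seed: int = 0) -> Dict[Point, float]:
    """n distinct points drawn uniformly from [0, 1)^dim, randomly paired with Zipf(s) probabilities."""
    rng = random.Random(seed)
    points = set()
    while len(points) < n:  # rejection sampling keeps the points unique
        points.add(tuple(rng.random() for _ in range(dim)))
    raw = [r ** -s for r in range(1, n + 1)]
    total = sum(raw)
    probs = [w / total for w in raw]
    rng.shuffle(probs)  # random pairing of frequencies with points
    return dict(zip(sorted(points), probs))


def sample_queries(dataset: Dict[Point, float], m: int, seed: int = 1) -> List[Point]:
    """Draw m queries from the known points, each with its Zipfian probability."""
    rng = random.Random(seed)
    pts = list(dataset)
    return rng.choices(pts, weights=[dataset[p] for p in pts], k=m)


data = zipf_kd_dataset(1000, 3, 1.5)
queries = sample_queries(data, 10_000)
```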

| Type | Dimension | Construction time | Avg. query time (s) | Avg. query depth |
|---|---|---|---|---|
| traditional | 1 | 1.267E-01 | 2.645E-05 | 1.330E+01 |
| learned | 1 | 2.387E-01 | 2.181E-05 | 1.086E+01 |
| traditional | 2 | 1.266E-01 | 2.709E-05 | 1.341E+01 |
| learned | 2 | 3.232E-01 | 2.221E-05 | 1.086E+01 |
| traditional | 3 | 1.253E-01 | 2.700E-05 | 1.334E+01 |
| learned | 3 | 3.981E-01 | 2.264E-05 | 1.097E+01 |
| traditional | 4 | 1.185E-01 | 2.724E-05 | 1.336E+01 |
| learned | 4 | 4.549E-01 | 2.256E-05 | 1.089E+01 |
| traditional | 5 | 1.300E-01 | 2.743E-05 | 1.336E+01 |
| learned | 5 | 5.286E-01 | 2.296E-05 | 1.096E+01 |
| traditional | 10 | 1.277E-01 | 2.896E-05 | 1.340E+01 |
| learned | 10 | 8.642E-01 | 2.403E-05 | 1.091E+01 |
| traditional | 20 | 1.295E-01 | 3.121E-05 | 1.344E+01 |
| learned | 20 | 1.543E+00 | 2.564E-05 | 1.085E+01 |
| traditional | 40 | 1.361E-01 | 3.558E-05 | 1.340E+01 |
| learned | 40 | 2.911E+00 | 2.902E-05 | 1.078E+01 |

D.2.2 Synthetic Datasets with Noisy Knowledge

In reality, it is rarely the case that we have perfect knowledge in constructing a model. In order to evaluate the performance of our method on noisy data, we create a synthetic dataset with noisy training information.

As before, we generate data points and assign them Zipfian weights. When constructing the tree, each weight is perturbed using two noise parameters drawn from uniform distributions. The resulting noisy weights are normalized to form a valid probability distribution. Points are then queried many times according to their ground-truth Zipfian probabilities. For a fixed Zipfian distribution, Figure 4 plots the effect of different ranges of the two noise parameters. The figure shows that our method still outperforms a traditional KD tree under moderate amounts of noise. Consistent with our robustness guarantees, it remains on par with the traditional KD tree even when significant noise is present.
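The exact perturbation formula is not reproduced in this section. The sketch below therefore uses a hypothetical multiplicative-plus-additive model, $w' = \max(0, a\,w + b)$, with $a$ and $b$ drawn uniformly per point, followed by renormalization. It only illustrates the pipeline (perturb, then normalize) and should not be read as the paper's exact noise model.

```python
import random
from typing import List, Tuple


def noisy_construction_probs(probs: List[float], a_range: Tuple[float, float],
                             b_range: Tuple[float, float], seed: int = 0) -> List[float]:
    """Hypothetical noise model: w' = max(0, a * w + b) with a ~ U(a_range), b ~ U(b_range)
    drawn independently for every point, then renormalized into a probability distribution."""
    rng = random.Random(seed)
    noisy = [max(0.0, rng.uniform(*a_range) * w + rng.uniform(*b_range)) for w in probs]
    total = sum(noisy)
    if total == 0:
        raise ValueError("all noisy weights are zero")
    return [w / total for w in noisy]


raw = [r ** -1.5 for r in range(1, 501)]
true_probs = [w / sum(raw) for w in raw]
construction_probs = noisy_construction_probs(true_probs, (0.5, 1.5), (0.0, 1e-3))
```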

D.2.3 Real-World Datasets

In addition to evaluating our method on synthetic data, we also evaluate results on real-world data.

First, we consider n-grams in various languages. We test on a pre-processed subset of the Google N-Gram dataset [Goo22, Goo12].

To evaluate our method, we convert each n-gram to an n-dimensional vector whose entries index the words of the n-gram. We construct the learning-augmented and traditional KD trees and report lookup performance in Table 4, with queries weighted by their ground-truth frequencies. In all cases, the learning-augmented KD tree outperforms the traditional KD tree in average lookup depth.
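The n-gram-to-vector conversion can be sketched as follows. The sketch assumes whitespace tokenization and word indices assigned in first-seen order. The vocabulary scheme and function names are our own assumptions, not the preprocessing used for the Google N-Gram data.

```python
from typing import Dict, Iterable, Tuple


def build_vocab(ngrams: Iterable[str]) -> Dict[str, int]:
    """Assign each distinct word an integer index in first-seen order (whitespace tokenization)."""
    vocab: Dict[str, int] = {}
    for ngram in ngrams:
        for word in ngram.split():
            vocab.setdefault(word, len(vocab))
    return vocab


def ngram_to_vector(ngram: str, vocab: Dict[str, int]) -> Tuple[int, ...]:
    """Map an n-gram to an n-dimensional integer point, one coordinate per word."""
    return tuple(vocab[word] for word in ngram.split())


corpus = ["the cat sat", "the dog sat", "a cat ran"]
vocab = build_vocab(corpus)
points = [ngram_to_vector(g, vocab) for g in corpus]
```

The resulting tuples can be inserted into a KD tree like any other point, with the ground-truth n-gram frequencies serving as query weights.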