Learn and Control while Switching: Guaranteed Stability and Sublinear Regret

Abstract

Over-actuated systems often make it possible to achieve specific performances by switching between different subsets of actuators. However, when the system parameters are unknown, transferring authority to different subsets of actuators is challenging due to stability and performance efficiency concerns. This paper presents an efficient algorithm to tackle the so-called “learn and control while switching between different actuating modes” problem in the Linear Quadratic (LQ) setting. Our proposed strategy is constructed upon Optimism in the Face of Uncertainty (OFU) based algorithm equipped with a projection toolbox to keep the algorithm efficient, regret-wise. Along the way, we derive an optimum duration for the warm-up phase, thanks to the existence of a stabilizing neighborhood. The stability of the switched system is also guaranteed by designing a minimum average dwell time. The proposed strategy is proved to have a regret bound of in horizon with number of switches, provably outperforming naively applying the basic OFU algorithm.

Index Terms:

Over-actuated System, Reinforcement Learning, Regret, Switched SystemI Introduction

Control allocation is a vast and richly studied field that addresses the problem of distributing control efforts over redundant actuators to achieve a specific performance. Along this line, supervisory switching control that enables operation with different subsets of actuators is a practical approach for control allocation [1, 2, 3, 4]. In this class of problems, the switching time, the switch mode (which represents a subset of actuators), or both of these parameters can be either specified as part of the general decision-making process or determined by an external unit. Three selected problem classes that illustrate the significance of switching in over-actuated systems are Fault Tolerant Control (FTC), Time-Triggered Protocol (TTP), and moving target defense (MTD). These examples differ in their approach to defining and designing these crucial variables. Designing an algorithm to efficiently switch between different actuating modes when system parameters are unknown presents a significant challenge in terms of performance. We refer to this problem as ”learn and control while switching.” In this context, it is inefficient to disregard previously acquired information about these parameters and start the learning and control process from scratch each time a mode switch occurs. Our goal is to create an algorithm that capitalizes on previously acquired knowledge when commencing a new actuation mode. More specifically, our focus lies on an online Optimism in the Face of Uncertainty (OFU) based algorithm within the framework of Linear Quadratic (LQ) settings. This class of algorithms builds a confidence ellipsoid containing the unknown system parameters, allowing us to design a feedback gain by adopting an optimistic perspective toward this defined set. A naive approach to address our proposed problem would involve the repetitive application of the OFU-based algorithm whenever a mode switch takes place. However, this method is likely to be inefficient, given that it disregards previously acquired knowledge. In this research endeavor, we introduce a novel strategy that leverages learned information. This strategy involves the projection of recently constructed ellipsoids into the mode space that the system has transitioned into. Following this projection, we establish a confidence ellipsoid for control design, incorporating both the knowledge stored within the projected ellipsoid and the online measurements gathered after initiating the actuation. The core technical challenge in constructing this ellipsoid involves confidence set construction while incorporating the already learned information stored within the projected confidence ellipsoid. In our setting, unlike [5], who use the trace norm of the system parameters for regularization in least square estimation and confidence set construction, we utilize a weighted Frobenius norm. This approach provides us with an opportunity to incorporate the projected ellipsoid into confidence ellipsoid construction. To fully incorporate this idea, we also forgo the confidence ellipsoid normalization applied in [5]. As a result, our confidence ellipsoid representation differs slightly from that of [5]. Regarding the stability guarantee in the switching setting, we need to determine a minimum average dwell time that ensures the system slows down the switching process sufficiently to guarantee boundedness of the state norm during the switching operation.

Actuator faults can cause a decay in performance (e.g., impact on energy consumption of actuator) or even more devastating incidents [6]. This kind of safety concerns is often considered within the literature on so-called Fault Tolerant Control (FTC) [7, 8], where the goal of switching is to bypass a failing actuator [9], [10]. [11].

In TTP systems [12, 13], where control must occur through communication channels whose availability varies according to a predetermined/ known a priori schedule, the goal of switching is to conform to the specifications of that protocol.

As another specific application of switching systems, we can refer to the MTD strategy in cyber-physical security of control systems. MTD, which first appeared in the computer science literature [14] is a proactive strategy using which a system can hide its dynamics from a potential attacker by randomly switching between a different subset of actuators. This strategy seems practical considering that adversarial agents have limited resources and cannot compromise all the actuators simultaneously. In [15, 16], the authors apply MTD for identifying malicious attacks on sensors and actuators. Furthermore, in [4] and [17] the authors show an effort to address this challenge for a system with known dynamics.

One significant difference between these applications is that some are instances of ”direct switching” whereas others belong to the ”indirect switching” category [18], which impacts the determination of ”when and what mode to switch to”. For example, in TTP, both the switch time and actuation mode are pre-specified and given. On the other hand, for the FTC, the switch time is simply when an anomaly is detected. For this class of problems, we propose a mechanism that picks the best actuating mode to switch to by examining the richness of learned information up to that point. As for the MTD application, given that high-frequency switches are desirable, we let the system switch as often as desired unless stability is violated. For this aim, we constrain the system to stay in a mode for some minimum average dwell time (MADT). The next actuating method is specified with a similar strategy as in the FTC case. In all cases, the algorithm guarantees closed-loop stability and that parameters are learned well-enough to ensure low regret (defined as the average difference between the closed-loop quadratic cost and the best achievable one, with the benefit of knowing the plant’s parameters). The former is addressed by designing a MADT while latter requires detailed insight into available RL algorithms in LQ control literature.

Several studies have recently attempted to address switched systems control under different assumptions when the system model is unknown. Authors in [19] designed data-driven control laws for switched systems with discrete-time linear systems and unknown switch signals. The stability of an unknown switched linear system is investigated probabilistically by observing only finite trajectories in [20]. Furthermore, in [21, 22] design of stabilizing controllers for a switched system is addressed by solely relying on system structure and experimental data and without an explicit plant identification.

Learning-based methods in LQ framework are divided into model-free ([23] [24, 25, 26, 27]) and model-based RL approaches. The former ignores parameter estimates and outputs sub-optimal policy by solely relying on the history of data and directly minimizing the cost function [26]. The latter category usually comes with guaranteed regret, thanks to system identification as a core step in control design. We use similar properties to obtain a guaranteed regret.

A naive approach to model-based adaptive control is a simple philosophy that estimates a system’s unknown parameters and treats them as actual parameters in optimal control design. This algorithm is known as certainty equivalence in the literature, and since it decouples the estimation problem from the control one, it may lead to strictly sub-optimal performance [28]. Model-Based algorithms, in general, can be divided into three main categories, namely ”Offline estimation and control synthesis,” ”Thompson Sampling-based,” and ”Optimism in the Face of Uncertainty (OFU)” algorithms. OFU that couples estimation and control design procedures have shown efficiency in outperforming the naive certainty equivalence algorithm. Campi and Kumar in [28] proposed a cost-based parameter estimator, which is an OFU based approach to address the optimal control problem for linear quadratic Gaussian systems with guaranteed asymptotic optimality. However, this algorithm only guarantees the convergence of average cost to that of the optimal control in limit and does not provide any bound on the measure of performance loss for the finite horizon. Abbasi-Yadkori and Szepesvari [29] proposed a learning-based algorithm to address the adaptive control design of the LQ control system in the finite horizon, with worst case regret bound of . Using -regularized least square estimation, they construct high-probability confidence set around unknown parameters of the system and design an algorithm that optimistically plays concerning this set [30]. Along this line, Ibrahimi and et. al. [31] later on proposed an algorithm that achieves regret bound with state-space dimension of . Authors in [32] proposed an OFU-based learning algorithm with mild assumptions and regret. Furthermore, in [33] propose an OFU-based algorithm which for joint stabilization and regret minimization that leverages actuator redundancy to alleviate state explosion during initial time steps when there is low number of data.

The objective function to be minimized in the algorithm [30] is non-convex which brings about a computational hurdle. However, recently Cohen et al., in [5], through formulating the LQ control problem in a relaxed semi-definite program (SDP) that accounts for the uncertainty in estimates, proposed a computationally efficient OFU-based algorithm. Moreover, unlike the state-norm bound given by [30] which is loose, [5] guarantees strongly sequentially stabilizing policies enabling us to come ups with tight upper-bound, which ensures small MADT (appropriate for MTD application). These advantages motivate us to use the relaxed SDP framework in our analysis rather than [30].

The remainder of the paper is organized as follows: Section II provides the problem statement and then is followed by Section III which gives a brief background review, overview of projection technique, and augmentation technique. Moving on to Section V, we first provide an overview of the projection-based strategy. Then, we detail the main algorithmic steps and summarize the stability analysis, which includes MADT design and algorithm performance, particularly in terms of the regret bound. Section VI briefly discusses the extension of the proposed algorithm to the FTC and MTD type of applications. Afterwards, numerical experiment is given in Section VII. Finally, we conclude the key achievements in Section VIII. The most rigorous analysis of the algorithms (e.g., stability and regret bounds) and technical proofs are provided in Appendix A while leaving complimentary proofs to Appendix B. .

II Problem statement

Throughout this paper, we use the following notations: denotes the operator norm i.e., . We denote trace norm by . The entry-wise dot product between matrices is denoted by . The weighted norm of a matrix with respect to is interchangeably denoted by or . We denote a dimensional identity matrix by . We denote the ceiling function by that maps to the least integer greater than or equal to .

Consider the following time-invariant discrete time LQR system

| (1) | ||||

| (2) |

where , are unknown and and are known and respectively positive definite matrices.

Let be the set of all columns, () of . An element of its power set is a subset , of columns corresponding to a submatrix of and mode . For simplicity, we assume that i.e., that the first mode contains all actuators. With this definition in place we can express the system dynamics as follows:

| (3) |

or equivalently

| (4) |

where is controllable and is a given right-continuous piecewise constant switching signal where denotes the set of all controllable modes .

The associated cost with this mode is

| (5) |

where is a block of which penalizes the control inputs of the actuators of mode

Since we are considering arbitrary, externally-determined, switching patterns (which could include switching to a particular mode at some time instant, then never switching out of it), controllability of the system in each mode is necessary to ensure controllability of the switched system. This mode-by-mode controllability assumption is also useful to enable OFU-style learning at each epoch.

The noise process satisfies the following assumption which is a standard assumption in controls community (e.g., [30], [5] and [34]).

Assumption 1

There exists a filtration such that

is a martingale difference, i.e.,

for some ;

are component-wise sub-Gaussian, i.e., there exists such that for any and

Our goal is to design a strategy which can efficiently transfer the control authority between different subsets of actuators when the system parameters are unknown.

Relying on the past measurements, the problem is designing a sequence of control input such that the average expected cost

| (6) |

is minimized where , where , represents the switch sequence. Each element in the set specifies both the mode index and the time at which the switch to that mode occurs. In the context of TTP applications, this sequence is typically predetermined, and thus the system has access to both the switch times and the corresponding modes. In contrast, for FTC, while the switch times are determined by an anomaly detector, the decision regarding which mode to switch to is made by the algorithm itself. Therefore, the algorithm plays a pivotal role in selecting the next mode, denoted as . When the system parameters are known, it may be relatively straightforward to specify the next mode that results in the lowest average expected cost. However, this task becomes significantly more challenging in the absence of such information. The MTD application introduces an even greater level of complexity, as the algorithm must autonomously decide both when and to which mode to switch. In this work, our primary focus is on TTP setting. In Section VI we will provide a brief overview of the general solutions for the other two settings.

As a measure of optimality loss having to do with the lack of insight into the model, the regret of a policy is defined as follows:

| (7) |

where is the optimal average expected cost of actuating in mode .

Proposition 1

The regret incurred by employing a fixed feedback controller on a LQ system over rounds follows an order of .

Assumption 2

-

1.

There are known constants such that,

for all .

-

2.

There is an initial stabilizing policy to start algorithm with.

Note that having access to and for all separately is quite demanding and, hence, in practice, we only require knowledge of and . The assumption of having access to an initial stabilizing policy like is a common practice in the literature (as seen in [5] and [35]). Nevertheless, it is possible to develop a stabilizing policy in real-time by relying on some a priori bounds. For further details on this approach, we encourage readers to explore [34] and [33].

Developing a ”learn and control while switching” strategy requires overcoming two core challenges: performance and stability. We propose an efficient OFU-based algorithm equipped with a projection tool that can efficiently transfer the learned information from an actuating mode to others to achieve the former goal. This strategy outperforms the naive approach of repeatedly applying the standard OFU algorithm. Fast recurrent switching between the actuating modes can cause explosion of state norm over finite horizon. Hence, in TTP setting, we operate under the assumption that the switches occur at a sufficiently slow rate on average, with the time interval between two consecutive switches being longer than some minimum average dwell time .

Before presenting the strategy in more detail (and, in a later section, the specifics of the algorithms), we start by reviewing the main ingredients of our approach to build the foundation for our analysis.

III Preliminaries

III-A Primal SDP formulation of LQR problem

Consider a linear system

| (8) | ||||

| (9) |

Observing that a steady-state joint state and input distribution exists for any stabilizing policy , we denote the covariance matrix of this joint distribution as , which is defined as follows:

where and the average expected cost of the policy is given as follows

Now, for a linear stabilizing policy that maps , the covariance matrix of the joint distribution takes the following form:

| (10) |

where . Then the average expected cost is computed as follows

Now given , the LQR control problem can be formulated in a SDP form, as follows:

| (11) |

where any feasible solution can be represented as follows:

| (12) |

in which , , and . The optimal value of (11) coincides with the average expected cost , and for (ensuring ), the optimal policy of the system, denoted as and associated with the optimum solution , can be derived from considering (10).

In [5], the relaxed form of the SDP is used to design the stabilizing control policy when the matrix is replaced by its estimates (i.e., confidence set of ). We will slightly modify the relaxed SDP formulation for our purpose.

III-B System Identification

The proposed algorithm includes a system identification step that applies self-normalized process to obtain least square estimates and uses the martingale difference property of process noise to construct high probability confidence set around the system’s parameters. To sketch a brief outline, assume the system actuates in the first mode and evolves in an arbitrary time interval . One can then simply rewrite (1) as follows:

| (13) |

where and are matrices whose rows are and respectively. And in a similar way is constructed by concatenating vertically.

Using the self-normalized process, the least square estimation error, can be defined as

| (14) |

where is some given initial estimate and is a regularization parameter. This yields the -regularized least square estimate:

| (15) |

where

is covariance matrix. Then assuming for , a high probability confidence set containing unknown parameters of system is constructed as

| (16) | |||||

where

| (17) |

It can be shown that the true matrix is guaranteed to belong to this set with probability at least , for . The right hand side of (16) is dependent on the user-defined parameters , and initial estimation error . The proof follows a combination of steps of [5] and [30] with the difference that we ignore the normalization of the confidence set. For the sake of brevity, we may sometimes use in our analysis.

III-C strong sequential stability

We review the notation of strong sequentially stabilizing introduced in [5], which will be used in the context of switched system as well.

Definition 1

Consider a linear plant parameterized by and . The closed-loop system matrix is strongly stable for and if there exists and such that and

-

1.

and

-

2.

and with

A sequence of closed-loop system matrices is strongly sequentially stable if each is strongly stable and . Furthermore, we say a sequence of control gains is strongly sequentially stabilizing for a plant if the sequence is strongly sequentially stable.

As shown in [5] applying such a strongly stabilizing sequence of controllers starting from state guarantees the boundedness of state trajectory as follows:

| (18) |

III-D Projection of the Confidence Ellipsoid

Suppose at time the central confidence ellipsoid

| (19) | |||||

is given. By projecting the central ellipsoid (19), rich with information until time , into the space of mode , the corresponding confidence set of parameters for mode is again an ellipsoid, denoted by and represented as follows:

| (20) | |||||

The characteristics and of this projected ellipsoid can be computed following the steps specified below.

Consider the ellipsoid

| (21) |

where and has rows. We aim to project this set to a lower dimensional space of mode identified by for some . To this end, we first change the order of rows in such a way that the rows corresponding to the actuators of mode , come first whereas . The reformulated ellipsoid then is written as follows:

| (24) |

Where is in similar to . Let us now rewrite the parametrization of ellipsoid (24) as follows

where in is a vectorization of and .

Suppose is the boundary of the projection of the ellipsoid on our target lower dimensional space. It is obvious that any point is the projection of a point and both of the points are on the tangent space of parallel to the direction of . Hence, we can deduce that on these points, which gives algebric equations. By solving these equation for the components of s in terms of the components of , and subsequently substituting them into we obtain the projected ellipsoid which can be written in following compact form :

| (25) |

where , , and are the block matrices of the partitioned , e.g.,

| (28) |

We can easily construct this partition noting that . By sorting the arrays of vector by order into a matrix of dimension the projected confidence ellipsoid (25) can be rewritten in the standard format of

where covariance matrix .

III-E Augmentation

Let us call the mode consisting of all actuators (i.e., mode ) the ”central mode”. Its associated confidence ellipsoid contains all information up to time and can be computed at all times even when the system operates in a different mode. Indeed when the system is in the mode with the following dynamics:

| (29) |

we can equivalently rewrite it as follows:

| (30) |

where and

| (31) |

with being the complement of .

In turn the estimation process of III-B can be performed on (30) to obtain updated estimates of computed by the central ellipsoid .

We refer to this simple mathematical operation as ”augmentation technique” using which we can update central ellipsoid regardless of the actuation mode.

IV Projection-based strategy

IV-A Overview

A possible way to tackle the ”learn and control while switching problem:” is to apply the OSLO algorithm described in [5] repeatedly, each time a switch occurs. However, such a naive approach is likely inefficient because (1) it ignores previously learned information and, (2) it runs a warm-up phase after each switch. As a (provably) better alternative, we propose a projection-based strategy which relies on the central ellipsoid to incorporate previously learned information in control design procedure. A controller that plays optimistically with respect to this set is then computed by solving a relaxed SDP.

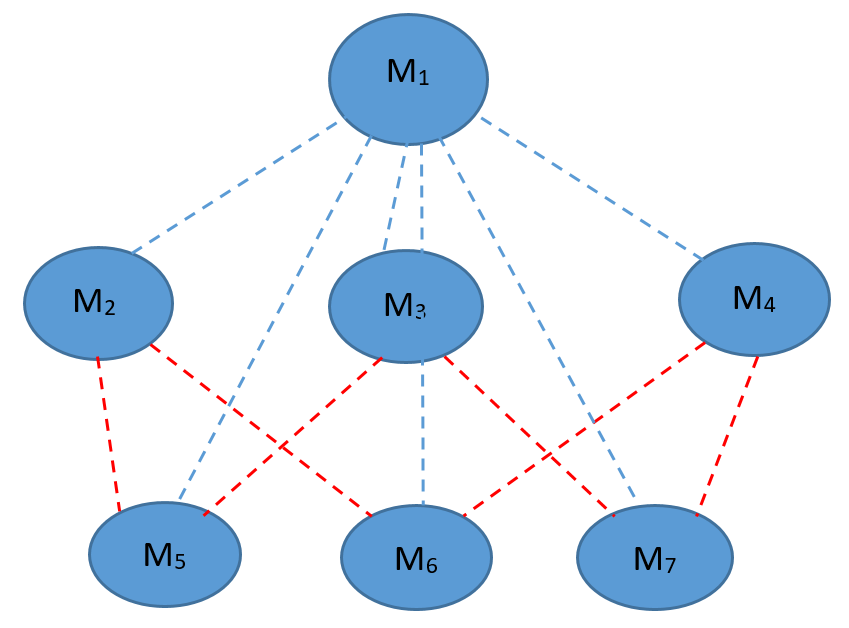

To clarify the main idea, consider a simple example of control system with three actuators which offers seven actuating modes assuming that they all satisfy the controllability condition. Let denote the mode 1 which takes advantage of all the actuators. The modes and are the ones with two actuators and single actuator respectively. We can consider as the central mode which is at the same time the parent of all other actuating modes. Figure 1 illustrates the relationships between these actuating modes.

The dashed lines indicate how the modes are interconnected. For instance, the column set of modes and are the subsets of the column set of mode . We break the strategy into two phases, the warm-up and Learn and Control while Switching (LCS) phases, whose pseudo codes have been provided in Algorithms 1 and 2. The strategy starts with Algorithm 1, which applies a given strong stabilizing policy feedback for time steps to provide Algorithm 2 with close enough parameter estimates . For warm-up duration computation, we use the recent results of [36] on the existence of a stabilizing neighborhood around unknown parameters of the system. We compute such that the controller designed by Algorithm 2 at time , which relies on the estimate , performs better than the initial stabilizing controller and ensures the achievement of a favorable overall regret. The computation of this duration is detailed in Theorem 1.

After the warm-up phase, Algorithm 2 is applied whenever a switch occurs. This algorithm possesses the capability to access the updated central ellipsoid, which it maintains through the utilization of the augmentation technique. LCSA projects the central ellipsoid to the space of the actuating mode ; the mode system operates in for the epoch (time interval between two subsequent switches of actuating mode). During operation in an epoch, the algorithm applies OFU for the actuating mode to obtain the control input. In parallel, it updates (i.e., enriches) the central ellipsoid by using the augmentation technique.

In the next section, we propose LCSA algorithm for the TTP application where the switch sequence (both switch time and modes) are given upfront. We define this sequence by where and are switch times and is total number of switches. Having provided by Algorithm 1, we reset the time and let switch time where . We denote the time interval between two subsequent switches, i.e., as epoch . This algorithm, however, can be slightly modified to address FTC and MTD classes of applications. We will discuss these modifications in Section VI.

IV-B Main steps of Algorithms

For the sake of the reader more interested in implementation than in the algorithms’ theoretical underpinnings, we start by surveying the main steps of Algorithm 1 and 2. We are mostly concerned with explaining the computations and algebraic manipulations performed in each step, and provide details about the rationale for and properties of these steps under the umbrella of performance analysis in the next section. Proofs of most of these results are relegated to the appendix.

IV-B1 System Identification

The system identification step aims at building confidence set around the system’s true but unknown parameters. This step plays a major role in both Algorithm 1 and Algorithm 2.

Confidence Set construction of warm-up phase

In Algorithm 1, using data obtained by executing control input (which consists of linear strongly stabilizing term and extra exploratory noise ), a confidence set is constructed around the true parameters of the system by following the steps of Section III-B. For warm-up phase we let in (14) which results in

| (32) |

where the covariance matrix is constructed similar to Section III-B. Recalling by assumption, a high probability confidence set around true but unknown parameters of system is constructed as

| (33) | |||||

where

| (34) |

For warm-up phase we set .

Deployment of Algorithm 1 endures until the parameter estimates reside in -stabilizing neighborhood . As per Definition 2, the first stabilizing controller designed by Algorithm 2, where has been substantiated to yield an average expected cost that is lower than that of when applied in mode 1. This, in essence, ensures that Algorithm 2 commences with a feedback gain as effective as the initial stabilizing feedback gain . Additionally, to secure joint stabilization and effectively manage regret, it is imperative to ensure the proximity of the estimate . This duration is determined by the theorem presented below.

Theorem 1

For a given -strongly stabilizing input , there exist explicit constants and such that the following holds: Let be the smallest time that satisfies:

| (35) |

then that , obtained by Algorithm 2 applied to the system , has an average expected cost that is lower than that of the policy . Furthermore, requiring is a sufficient condition for strong sequential stability of the closed-loop system under the implementation of the policy produced by Algorithm 2.

The regret associated with the implementation of the warm-up algorithm is of the order . To substantiate this assertion, let denote the specified horizon for implementing Algorithm 2. By definition, we have , where , as the condition for strong sequential stability (see proof of Lemmas 9 and 10). This, along with the definition of , indeed indicates that . Now, based on equation (35), we deduce that . Given Proposition 1, which establishes that the regret associated with a fixed strongly stabilizing policy over rounds scales as , we can conclude that within rounds, the warm-up phase incurs a regret of

Confidence Set construction of LCSA algorithm

Having been run for the mode 1, the warm-up phase outputs as an initial estimate using which the central ellipsoid as an input to Algorithm 2, is constructed as follows

where , where and . The initial confidence ellipsoid (LABEL:eq:initialCentaEl) is, in fact, equivalent to . We apply the procedure of Section III-B together with augmentation technique to update the central ellipsoid.

Suppose that at time , the system switches to mode . The associated inherited confidence ellipsoid for mode can be derived through the projection of the central ellipsoid, as discussed in Section III-D, and can be expressed as follows:

| (37) | |||||

The following theorem provides a confidence bound on the error of parameter estimation when there is an inherited confidence set.

Theorem 2

Let the linear model (4) satisfy Assumption 1 with a known . Assume the system at an arbitrary time switches to the mode and runs for the time period (i.e. ’s epoch). Let the inherited information, which is a projection of central ellipsoid, be given by (37). Then with probability at least we have

| (38) |

where

| (39) |

| (40) |

and

| (41) |

The second term in the right hand side of (39) is related to the inherited information from the central ellipsoid while the first term is the contribution of learning at the running epoch. Similarly, in the definition of provided by (41), the term is derived from inherited information, while represents the contribution of learning during the current running epoch.

IV-C Control design

The relaxed SDP problem which accounts for the uncertainity in parameter estimates is used for control design. Given the parameter estimate and the covariance matrix obtained by (40) and (41), the relaxed SDP is formulated as follows:

| (45) |

where is any scalar satisfying . And we define . Denoting as the optimal solution of (45), the controller extracted from solving the relaxed SDP is deterministic and linear in state () where

The designed controller is strongly stabilizing in the sense of Definition 1 and the sequence of feedback gains is strongly sequentially stabilizing, as affirmed by Theorem 3. The detailed analysis of the relaxation of the primal SDP formulation, incorporating the constructed confidence ellipsoid to account for parameter uncertainty, is provided in Appendix A-C which is adapted from [5] with modifications as the result of not normalizing the confidence ellipsoid.

Recurrent update of confidence sets may worsen the performance, so a criterion is needed to prevent frequent policy switches in an actuation epoch. As such, Algorithm 2 updates the estimate and policy when the condition is fulfilled where is the last time the policy got updated ( is solely used in Algorithm 2 and should not be mixed with switch times in actuating modes; s). This is a standard step in OFU-based algorithms ([30], [33], and [5]).

IV-D Projection Step

In Algorithm 2 we apply the projection technique described in Section III-D to transfer the information contained in the central ellipsoid to the mode that the system just switched to. The central ellipsoid is consistently updated in Algorithm 2 using the augmentation technique regardless of the active actuating mode.

V Performance Analysis

V-A Stability Analysis

In this section, we first proceed to restate the results of [5] showing that the sequence of feedback gains obtained by solving primal relaxed-SDP (45) is strongly sequentially stabilizing. We then define a Minimum Average Dwell Time (MADT), denoted as , which ensures the boundedness of the state norm in a switching scenario by mandating that the system remains in each mode for at least this duration. In the TTP case, this property would need to hold for the given sequence, i.e., , for . For MTD and FTC cases, on the other hand, the MADT constraints the times of switch.

Solving relaxed SDP (45) is the crucial step of the algorithm, however when it comes to regret bound and stability analysis, the dual program

| (49) |

and its optimal solution play a pivotal role.

Recalling the sequential strong stability definition, the following theorem adapted from [5] shows that the sequence of policies obtained by solving relaxed SDP (45) for system actuating in mode are strongly sequentially stabilizing for the parameters and to be defined.

Theorem 3

Let ’s for be solutions of the dual program (49) associated with an epoch occurring in time interval and the mode with corresponding and . Let and with , then the sequence of policies ’s (associated with ’s) are sequentially strongly stabilizing with probability at least .

Recalling Definition 1, a sequentially stabilizing policy keeps the states of system bounded in an epoch. The following lemma gives this upper bound in terms of the stabilizing policy parameters and .

Lemma 1

For the system evolving in an arbitrary actuating mode in time interval of by applying sequentially stabilizing controllers for any the state is upper-bounded by

| (50) |

Proof:

The proof is straight forward but for the sake of completeness we provide it here. Applying the sequentially stabilizing policy implies (18), then using Hanson-Wright concentration inequality with probability at least yields

| (51) |

which completes the proof. ∎

Having stable subsystems is a necessary but not a sufficient condition to ensure the stability of a switched system. The switches must occur at a sufficiently slow rate on average to guarantee stability. Therefore, we need to impose a requirement that the switches occur at a sufficiently slow rate. The following theorem establishes a lower bound on the time elapsed between two consecutive switches, referred to as the ”dwell time,” which serves as a sufficient condition for ensuring boundedness.

The following theorem establishes a lower bound on the average dwell time.

Theorem 4

Consider the switch sequence , and let the system (3) be controlled by -strongly sequentially stabilizing controllers for any mode . Then, for a user-defined by requiring , where

| (52) |

in which

| (53) |

the state norm will be bounded as follows:

| (54) |

where

V-B Regret Bound Analysis

Taking into account the fact that any confidence ellipsoid can be obtained through projecting the central ellipsoid , we can define the following unified “good event”, using the denomination of [30]

| (55) | ||||

where

The upper bound for is obtained from (), as derived in the proof of Lemma 11 in Appendix A-D. The event holds for any sequence of switches and is used for carrying out regret bound analysis.

By an appropriate decomposition of regret on the event , for an arbitrary switch sequence , the following upper-bound for regret is obtained (adapted from [5]):

| (56) |

where:

| (57) |

| (58) |

| (59) |

| (60) |

in which for and is the indicator function. By bounding the above terms separately, the overall imposed regret, not-counting the warm-up regret, can be obtained. The following theorem summarizes the result.

Theorem 5

The regret of projection-equipped algorithm in horizon and with number of switches is with probability at least . Moreover, this strategy is more efficient than repeatedly and naively applying the basic OFU algorithm.

VI Modifications to Address the MTD and FTC scenarios

Algorithm 2, with minor adjustments, can also address the FTC and MTD problems where switch sequence information (actuating modes and switch times) are unavailable. This section proposes possible directions to adjust the strategy to the mentioned applications.

For the FTC application, leaving aside switch time, which is supposedly determined by an external unit (e.g., a detector), the remaining challenge is determining the next actuating mode to switch to. From the set of available modes , the mode that guarantees to achieve lower regret seems a rational choice. Proofs of Lemmas 13 and 16, in the regret bound analysis section, justify that switching to a mode whose covariance matrix has the highest determinant value guarantees achieving the lower regret bound. In other words, at the time the best mode which guarantees better regret is the mode where is the projection of central ellipsoid on the space of mode .

In the MTD application, where the frequent switch is the central part of the strategy, in addition to determining the next actuating mode, we have to specify the switch instances so that the stability is not violated. To address the latter, we design a MADT (see Theorem 4) and require the algorithm to make the switches ”slow enough” by waiting on a mode for at least the MADT unit of time. Regarding the choice of the next mode, picking merely the well-learned one (by evaluating the covariance matrices) does not seem practical. The reason is that this strategy pushes the algorithm to choose the modes with more actuators (which have greater values of covariance matrix determinant) and technically ignore most of the modes, so the set of candidate modes will be smaller. In turn, this means less unpredictability and helps the attacker perform effective intrusion. To resolve this issue, one may decide to switch by optimizing the weighted sum of determinant values of covariance matrices (of the modes) and the value of some unpredictability measures. This idea basically gives a trade-off between lower regret and unpredictability. The authors in [4] use an information entropy measure produced by a probability simplex when the system is known.

VII Numerical Experiment

In this section, to demonstrate the effectiveness of the proposed “learn and control while switching” algorithm compared to repeatedly applying the basic OFU algorithm, we use a third-order linear system with three actuators as of the following plant and control matrices:

which are unknown to the learner. Weighting cost matrices are chosen as follows:

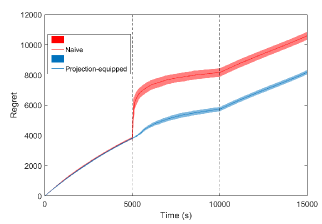

Figure 2 shows the regret bound of the algorithm for the switch sequence of where the mode 2 is specified by

We let the switch happens at times , , and seconds. We intentionally picked this switch sequence to show the regret when the confidence set is projected to a lower-dimensional space and when the system switches back to a previously explored mode. We further assume the initial estimate where is given and let the naive algorithm use this estimate at time when the system switches to the second mode. We set , , , and .

At the time seconds, when the switch happens, the projection-equipped algorithm outperforms the naive one thanks to the learned information during the first epoch, which is restored through the projection tool. At the time seconds, the system switches back to mode 1. During this last epoch, we only see a slight improvement which is because the switch in policy, triggered by fulfillment of the condition (see the line 8 of algorithm 2) rarely happens as the value of increases. In our case, we only have one time of policy improvement during the epoch .

VIII Summary and Conclusion

In this work, we equipped the OFU algorithm with a projection tool to improve the ”learn and control” task when switching between the different subsets of actuators of a system is mandated. This improvement is proved mathematically in a regret-bound sense and shown throughout the simulation compared to a case when the basic OFU algorithm is repeatedly applied. We presented this idea for a time-triggered network control system problem when the sequences of switch times and modes are upfront. We also discussed the possibilities of extending the proposed algorithm to applications such as fault-tolerant control and moving target defense problems for which there is a freedom to pick the best actuating mode when it is a time to switch. To address this, we proposed a mechanism that offers the best-actuating mode, considering how rich the learned data is. A minimum average dwell-time is also designed to address stability concerns in applications such as moving target defense that frequent switches might cause instability. Moreover, by applying recent results on the existence of a stabilizing neighborhood, we have determined an initial exploration duration such that the efficiency for the warm-up phase is guaranteed.

References

- [1] H. Ishii and B. A. Francis, “Stabilizing a linear system by switching control with dwell time,” in Proceedings of the 2001 American Control Conference.(Cat. No. 01CH37148), vol. 3. IEEE, 2001, pp. 1876–1881.

- [2] J.-X. Zhang and G.-H. Yang, “Supervisory switching-based prescribed performance control of unknown nonlinear systems against actuator failures,” International Journal of Robust and Nonlinear Control, vol. 30, no. 6, pp. 2367–2385, 2020.

- [3] M. Staroswiecki and D. Berdjag, “A general fault tolerant linear quadratic control strategy under actuator outages,” International Journal of Systems Science, vol. 41, no. 8, pp. 971–985, 2010.

- [4] A. Kanellopoulos and K. G. Vamvoudakis, “A moving target defense control framework for cyber-physical systems,” IEEE Transactions on Automatic Control, 2019.

- [5] A. Cohen, T. Koren, and Y. Mansour, “Learning linear-quadratic regulators efficiently with only sqrt(t) regret,” arXiv preprint arXiv:1902.06223, 2019.

- [6] S. S. Tohidi, Y. Yildiz, and I. Kolmanovsky, “Fault tolerant control for over-actuated systems: An adaptive correction approach,” in 2016 American control conference (ACC). IEEE, 2016, pp. 2530–2535.

- [7] M. Blanke, M. Kinnaert, J. Lunze, M. Staroswiecki, and J. Schröder, Diagnosis and fault-tolerant control. Springer, 2006, vol. 2.

- [8] R. J. Patton, “Fault-tolerant control: the 1997 situation,” IFAC Proceedings Volumes, vol. 30, no. 18, pp. 1029–1051, 1997.

- [9] M. Takahashi and T. Takagi, “Adaptive fault-tolerant control based on hybrid redundancy,” Asia-Pacific Journal of Chemical Engineering, vol. 7, no. 5, pp. 642–650, 2012.

- [10] Q. Yang, S. S. Ge, and Y. Sun, “Adaptive actuator fault tolerant control for uncertain nonlinear systems with multiple actuators,” Automatica, vol. 60, pp. 92–99, 2015.

- [11] H. Ouyang and Y. Lin, “Adaptive fault-tolerant control for actuator failures: a switching strategy,” Automatica, vol. 81, pp. 87–95, 2017.

- [12] S. Shaheen, D. Heffernan, and G. Leen, “A gateway for time-triggered control networks,” Microprocessors and Microsystems, vol. 31, no. 1, pp. 38–50, 2007.

- [13] R. Blind and F. Allgöwer, “On time-triggered and event-based control of integrator systems over a shared communication system,” Mathematics of Control, Signals, and Systems, vol. 25, no. 4, pp. 517–557, 2013.

- [14] J.-H. Cho, D. P. Sharma, H. Alavizadeh, S. Yoon, N. Ben-Asher, T. J. Moore, D. S. Kim, H. Lim, and F. F. Nelson, “Toward proactive, adaptive defense: A survey on moving target defense,” IEEE Communications Surveys & Tutorials, 2020.

- [15] S. Weerakkody and B. Sinopoli, “Detecting integrity attacks on control systems using a moving target approach,” in 2015 54th IEEE Conference on Decision and Control (CDC). IEEE, 2015, pp. 5820–5826.

- [16] ——, “A moving target approach for identifying malicious sensors in control systems,” in 2016 54th Annual Allerton Conference on Communication, Control, and Computing (Allerton). IEEE, 2016, pp. 1149–1156.

- [17] P. Griffioen, S. Weerakkody, and B. Sinopoli, “A moving target defense for securing cyber-physical systems,” IEEE Transactions on Automatic Control, vol. 66, no. 5, pp. 2016–2031, 2020.

- [18] K. S. Narendra and J. Balakrishnan, “Adaptive control using multiple models,” IEEE transactions on automatic control, vol. 42, no. 2, pp. 171–187, 1997.

- [19] M. Rotulo, C. De Persis, and P. Tesi, “Online learning of data-driven controllers for unknown switched linear systems,” arXiv preprint arXiv:2105.11523, 2021.

- [20] J. Kenanian, A. Balkan, R. M. Jungers, and P. Tabuada, “Data driven stability analysis of black-box switched linear systems,” Automatica, vol. 109, p. 108533, 2019.

- [21] T. Dai and M. Sznaier, “A moments based approach to designing mimo data driven controllers for switched systems,” in 2018 IEEE Conference on Decision and Control (CDC). IEEE, 2018, pp. 5652–5657.

- [22] ——, “A convex optimization approach to synthesizing state feedback data-driven controllers for switched linear systems,” Automatica, vol. 139, p. 110190, 2022.

- [23] S. J. Bradtke, B. E. Ydstie, and A. G. Barto, “Adaptive linear quadratic control using policy iteration,” in Proceedings of 1994 American Control Conference-ACC’94, vol. 3. IEEE, 1994, pp. 3475–3479.

- [24] S. Tu and B. Recht, “Least-squares temporal difference learning for the linear quadratic regulator,” arXiv preprint arXiv:1712.08642, 2017.

- [25] M. Fazel, R. Ge, S. Kakade, and M. Mesbahi, “Global convergence of policy gradient methods for the linear quadratic regulator,” in International Conference on Machine Learning. PMLR, 2018, pp. 1467–1476.

- [26] Y. Abbasi-Yadkori, N. Lazic, and C. Szepesvári, “Model-free linear quadratic control via reduction to expert prediction,” arXiv preprint arXiv:1804.06021, 2018.

- [27] S. Arora, E. Hazan, H. Lee, K. Singh, C. Zhang, and Y. Zhang, “Towards provable control for unknown linear dynamical systems,” arXiv preprint arXiv:1712.08642, 2018.

- [28] M. C. Campi and P. Kumar, “Adaptive linear quadratic gaussian control: the cost-biased approach revisited,” SIAM Journal on Control and Optimization, vol. 36, no. 6, pp. 1890–1907, 1998.

- [29] Y. Abbasi-Yadkori, D. Pál, and C. Szepesvári, “Online least squares estimation with self-normalized processes: An application to bandit problems,” arXiv preprint arXiv:1102.2670, 2011.

- [30] Y. Abbasi-Yadkori and C. Szepesvári, “Regret bounds for the adaptive control of linear quadratic systems,” in Proceedings of the 24th Annual Conference on Learning Theory, 2011, pp. 1–26.

- [31] M. Ibrahimi, A. Javanmard, and B. V. Roy, “Efficient reinforcement learning for high dimensional linear quadratic systems,” in Advances in Neural Information Processing Systems, 2012, pp. 2636–2644.

- [32] M. K. S. Faradonbeh, A. Tewari, and G. Michailidis, “Optimism-based adaptive regulation of linear-quadratic systems,” arXiv preprint arXiv:1711.07230, 2017.

- [33] J. A. Chekan, K. Azizzadenesheli, and C. Langbort, “Joint stabilization and regret minimization through switching in systems with actuator redundancy,” arXiv preprint arXiv:2105.14709, 2021.

- [34] S. Lale, K. Azizzadenesheli, B. Hassibi, and A. Anandkumar, “Explore more and improve regret in linear quadratic regulators,” arXiv preprint arXiv:2007.12291, 2020.

- [35] S. Dean, H. Mania, N. Matni, B. Recht, and S. Tu, “Regret bounds for robust adaptive control of the linear quadratic regulator,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- [36] H. Mania, S. Tu, and B. Recht, “Certainty equivalence is efficient for linear quadratic control,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- [37] A. Cassel, A. Cohen, and T. Koren, “Logarithmic regret for learning linear quadratic regulators efficiently,” in International Conference on Machine Learning. PMLR, 2020, pp. 1328–1337.

Appendix A Further Analysis

In this section, we dig further and provide proofs, rigorous analysis of the algorithms, properties of the closed-loop system, and regret bounds.

A-A Technical Theorems and Lemmas

The following lemma, adapted from [29] gives a self-normalized bound for scalar-valued martingales.

Lemma 2

Let be a filtration, be a stochastic process adapted to and (where is the th element of noise vector ) be a real-valued martingale difference, again adapted to filtration which satisfies the conditionally sub-Gaussianity assumption (Assumption 1) with known constant . Consider the martingale and co-variance matrices:

then with probability of at least , we have,

| (61) |

Proof:

The proof can be found in Appendix B. ∎

The following corollary generalizes the Lemma 1 for a vector-valued martingale which will be later used in Theorem 2 to derive the confidence ellipsoid of estimates.

Corollary 1

Proof:

For proof see Appendix B. ∎

Lemma 3

(Mania et al [36]) There exists explicit constants and such that for any guarantees

| (63) |

where is average expected cost of executing the controller on the system and is optimal average expected cost.

Lemma 4

(Cassel et al [37]) Suppose then is -strongly stabilizing with and .

A-B Confidence Construction (System Identification)

Proof of Theorem 1

Proof:

Note that during the warm-up phase we execute the control input which includes a more-exploration term of. The state norm of system is upperbounded by strong stabilizing property of during warm-up phase (See [5]). Now, let denote warm-up duration. The confidence ellipsoid is constructed during this phase by applying the procedure illustrated in Section III-B.

where

Furthermore, from Theorem 20 of [5], we have the following lower bound for

which results in

| (64) |

Now applying the results of Lemmas 3 and 4 and setting yield

| (65) |

When Algorithm 2 begins actuating in mode 1, given that resides within a -stabilizing neighborhood, solving the primal problem with the confidence bound constructed by results in an average expected cost improvement over that of , or at least achieves a similar performance. ∎

Proof of Theorem 2

Proof:

The least square error regularized by (37) is written as follows

| (66) |

Solving

| (68) |

yields

| (69) |

where

| (70) |

where . For an arbitrary random extended state , one can write

which leads to

which can equivalently be written as:

| (71) |

Given the fact that ,

The following lemma aims at obtaining an upper-bound for the right hand side of (39) which will be later used in regret bound analysis.

Lemma 5

A-C Properties of Relaxed SDP

This subsection attempts to go through some essential features of the primal and dual relaxed-SDP problems (45) and (49). While the former is solved by Algorithm 2 to compute a regularizing control in the face of parameter uncertainty, the latter plays a pivotal role in the stability and regret bound analysis.

Both primal and dual problems directly follow from applying next two matrix-perturbation lemmas 6 and 7 (adapted from [5]) on the exact primal SDP and its dual problems. As the following lemmas hold for any actuating mode , we deliberately dropped the superscripts .

Lemma 6

Let and be matrices of matching sizes and let for positive definite matrix . Then for any and , we have

Proof:

To discuss the solution property of primal problem we need following lemma adapted from [5].

Lemma 7

Let and be matrices of matching sizes and let for positive definite matrix . Then for any and ,

Proof:

The proof can be found [5]. ∎

By substituting , , , and in Lemmas 6 and 7, and applying them on exact primal ((11) with ) and its dual SDPs, the primal and dual relaxed-SDP problems ((45) and (49)) are obtained. Moreover, it is straightforward to show .

Furthermore, it is also shown that , the solution of SDP (11) (with and ), is a feasible solution of the relaxed SDP (45).

We can show that the primal-dual solutions of (45) and (49)) respectively satisfy and where is the corresponding optimal average expected cost of actuating in mode (See Lemma 15 in [5] for more details).

The following lemma shows how to extract the deterministic linear policy from the relaxed SDP. We will later apply this lemma in the Lemma 9 to show that for all actuating modes, the designed controller is strongly sequentially stabilizing in an epoch (a time interval between two subsequent switches).

Lemma 8

Proof:

proof is in Lemma 16 of [5]. ∎

Now we continue with carrying out the stability analysis of the system under execution of control designed by Algorithms 3 and 4.

A-D Stability Analysis

Lemma 9 shows that the designed controller is strongly stabilizing for the values of parameters specified in Theorem 3. Lemma 10 shows is closed to and as such proves the sequentiality of stability and completes the proof of the Theorem 3.

Lemma 9

For the system which actuates in an arbitrary mode , is strongly stabilizing for where and . Moreover,

Proof:

Regardless of the similarity of the proof to that of Lemma 18 in [5], we provide it for the sake of completeness and justification of the value of , and upper-bound of mentioned in Theorem 3.

First we need to appropriately upper-bound which is an input of the algorithms 3 and 4. Given (proved in Lemma 11),we have

| (76) |

where is an upper bound for all , which has already been provided by the Lemma 5:

We have , and define where and defined by (76). Given the fact that it yields

| (78) |

plugging (A-D) together with the assumptions into (77) yields,

| (79) |

Defining and using the fact that (which implies ),

Furthermore, (79) results in which together with imply .

This automatically yields and . So, our proof is complete and ’s are strongly stabilizing. ∎

Having established the strong stability by by Lemma 9, Lemma 10 shows is closed to and as such proves the sequentiality of stability and completes the proof of the Theorem 3 (See [5] for more detail).

Lemma 10

For any mode by choosing the regularization parameter we have

Proof:

Proof can be found in Appendix B. ∎

Proof of Theorem 4

Proof:

From the Lemma 1, the state norm of system actuating in mode and in an arbitrary actuation epoch happening in interval can be upper bounded as follows:

| (82) |

where is an initial state value of the actuation epoch. Having ’s we can deduce for all modes we have

| (83) |

where and .

Considering

we can upper-bound second term on right hand side of (83) by which is defined as follows:

Let us now denote the time sequences in which the switches in actuating mode happens be , then one can upper bound the state norm as follows:

in which . The term includes all dependent terms.

For stability purpose, the following inequality

| (84) |

needs to be fulfilled which yields

| (85) |

in which is MADT and is a user-define parameter. Subsequently we can write:

| (86) |

Then, the state is upper-bounded by:

| (87) |

∎

The boundedness of at each epoch is very crucial for estimation purpose. To bound it, we need to ensure that the extended state stays bounded as the sequentially stabilizing policy is executed. The following lemma gives this upper-bound and defines the parameter which are used in regret bound analysis section.

Lemma 11

Applying the Algorithms 1 and 2 yields

Proof:

Proof can be found in Appendix B. ∎

A-E Regret Bound Analysis

This section presents regret bound analysis of the proposed ”learn and control while switching strategy.” Proceeding as in [5], the regret can be decomposed into the form of (57-60). However, each term must be bounded differently than in [5] due to the switching nature of the closed-loop system.

The following lemma gives an upper bound on the ratio of covariance matrix determinant of the central ellipsoid and that of its projection to an arbitrary space associated with mode . The result will be useful in upper-bounding and terms.

Lemma 12

Given and as the covariance matrices associated with central ellipsoid and its projection to the space of mode respectively, then for any

| (88) |

holds true almost surely.

Proof:

Proof has been provided in Appendix B. ∎

Following Lemma gives an upper bound for the term .

Lemma 13

Let denote number of epochs.The upper bound for is given as follows:

| (89) |

where to be defined by

| (90) |

and

Proof:

Proof can be found in Appendix B. ∎

Lemma 14

The upper bound on is given as follows:

which holds true with the probability at least

Proof:

Proof directly follows [5] by taking into account where . ∎

To bound we have the following lemma:

Lemma 15

The term has the following upper bound when the sequence of switches is and number of epochs is

| (91) |

Proof:

Proof directly follows [5] by taking into account where . ∎

Lemma 16

The term has the following upper bound.

| (92) | ||||

Proof:

Proof can be found in Appendix B. ∎

Proof of Theorem 5

Proof:

Proof of first statement

Putting everything together gives the following upper bound for regret

where

| (93) |

Proof of second statement

The regret bound of an OFU-based algorithm is directly connected to the extent of tightness of confidence ellipsoid. We can explicitly see this effect in the term . This being said, we now compare the confidence ellipsoid built by the projection-based algorithm and the one obtained by naively and repeatedly applying the basic OFU-based algorithm.

Assume at an arbitrary time system actuates in any arbitrary mode , for which the confidence ellipsoid is given by

| (94) |

where

| (95) |

On the other when the projection is not applied the confidence set is given as follows:

| (96) |

where

| (97) |

and

where is number of time steps that system has been actuating in mode by time , and

| (98) |

Note that belongs to the both confidence sets and with same probability of . Hence, the tightest confidence set gives lower regret. Considering the fact that , there exists a for big enough such that for

This guarantees meaning that the confidence ellipsoid is tighter. ∎

Appendix B Supplementary Proofs

Proof of Lemma 2

Proof:

The proof follows from Theorem 3 and Corollary 1 in [30] which gives.

| (99) |

we have

true which completes proof. ∎

Proof of Corollary 1

Proof:

Proof of Lemma 5

Proof:

Proof of Lemma 10

Proof:

Proof of Lemma 11

Proof:

Having (87) and given the fact that and , we have

| (103) |

which yields

where

and in second inequality we applied .

Having along with which is true always by recalling the definition of , regardless of any sequences of actuating modes, it returns out that

by picking . ∎

Proof of Lemma 12

Proof:

To upper-bound, the ratio (88) where the nominator is the covariance of the central ellipsoid and the denominator is the covariance of the ellipsoid constructed by the projection of the central ellipsoid to the space of mode , one can write:

| (104) |

where for and for are eigenvalues of and respectively. We know that for any ellipsoid constructed by the covariance matrix , we have ’s as the diameters of ellipsoid where ’s are corresponding eigenvalues of . Since is the covariance matrix of an ellipsoid which is projection of the central ellipsoid with covariance matrix , there always exist a such that for all . In other words, there is always a diameter of the central ellipsoid associated with which is greater than or equals to any diameter of its projection defined by . Using this fact we can upper bound (104) as follows

The last inequality directly follows by the fact that takes its maximum value of when the projection is performed into space with dimension (i.e., projection from central ellipsoid to the mode with only one actuator). Also, we have have which completes proof. ∎

Proof of Lemma 13

Proof:

Recalling the switch time sequence with and , we have number of epochs.

Considering (57), one can write

| (105) |

where . Note that the first term of the right-hand side takes zero value except when there is a switch in either the policy or actuation mode. Therefore, We first need to find an upper bound on the total number of switches.

We know for the system actuating in any arbitrary mode and arbitrary epoch happening in time interval we have

| (106) |

where is the total number of switches in the epoch. Suppose in the last epoch the system actuates in the mode . Furthermore, without loss of generality let system actuates in the mode within the epoch . Then by applying (106) one can write

| (107) |

where the last inequality is due to the fact that and is strictly positive definite that guarantees . By following the same argument backward in switch times,it yields

| (108) |

Applying results in

| (109) |

By taking on both sides one can write

| (110) |

where

| (111) |

where denote the actuating mode and denotes the epoch and is defined as follows

| (112) |

which is result of the Lemma 12. Then it yields,

| (113) |

Furthermore, we have

| (114) |

in which . As a result, the maximum number of switch is upper-bounded by

where is the maximum number of total switches including the switches between actuating modes. The upper bound for can be written as follows:

| (115) |

which is obtained noting where and the upper-bound of state (90). ∎

Proof of Lemma 16

Proof:

Suppose the system evolves in a sequence of epochs starting with actuating mode 1, e.g . Then, one can write

| (116) | |||

| (117) | |||

| (118) | |||

| (119) |

where in the inequality (116) we applied the result of Lemma 26 in [5] and then in (117) we telescoped the summation of logarithmic terms. The inequality (118) is due to the facts that for and is strictly positive definite for all that guarantees . Finally the last inequality is direct result of applying (111-114) and (101).

Recalling definition and we have the following upper bound for the terms :

where ’s are upper-bounded by (74)

which completes the proof. ∎