Leader Confirmation Replication for Millisecond Consensus in Private Chains

Abstract

The private chain-based Internet of Things (IoT) system ensures the security of cross-organizational data sharing. As a widely used consensus model in private chains, the leader-based state-machine replication (SMR) model meets the performance bottleneck in IoT blockchain applications, where nontransactional sensor data are generated on a scale. We analyzed IoT private chain systems and found that the leader maintains too many connections due to high latency and client request frequency, which results in lower consensus performance and efficiency.

To meet this challenge, we propose a novel solution for maintaining low request latency and high transactions per second (TPS): replicate nontransactional data by followers and confirm by the leader to achieve nonconfliction SMR, rather than all by the leader. Our solution, named Leader Confirmation Replication (LCR), uses the newly proposed future log and confirmation signal to achieve nontransactional data replication on the followers, thereby reducing the leader’s network traffic and the request latency of transactional data. In addition, the generation replication strategy is designed to ensure the reliability and consistency of LCR when meeting membership changes. We evaluated LCR with various cluster sizes and network latencies. Experimental results show that in ms-network latency (2-30) environments, the TPS of LCR is 1.4X-1.9X higher than Raft, the transactional data response time is reduced by 40%-60%, and the network traffic is reduced by 20%-30% with acceptable network traffic and CPU cost on the followers. In addition, LCR shows high portability and availability since it does not change the number of leaders or the election process.

Index Terms:

confirmation replication, state-machine replication, consensus model, Raft, private chainI Introduction

With the introduction of the concept of virtual factories and intelligent manufacturing, the reliability of cross-organizational data sharing has received greater attention[1]. As a valuable data source, the IoT provides the resources that are required for manufacturing processes, intermediate goods, and manufactured goods. These resources are often enriched with sensor, identification, and communication technologies, such as RFID tags[2]. Although many IoT architectures have been generated over the past two decades, they still have problems with trust, security, overhead, and scalability[3].

The public blockchain architecture based on proof of stake (PoS)[4] and proof of work (PoW) [5] solves trust and security issues well. With good resistance to malicious behaviors, its application in virtual currency has been widely recognized. However, the throughput (lower than 30 TPS) and confirmation delay (minutes) that they provide cannot meet the demands of IoT systems[6, 7]. To address the problems, direct acyclic graph (DAG)-based approches represented by Tangle [8] improve the micro-transactions processing performance well. Its confirmation delay depends on the confirmation threshold and the dependencies of transactions[9]. To build trust between organizations while ensuring high transaction processing efficiency, permissioned private chains use leader-based Crash Fault Tolerant (CFT) consensus protocols (such as Paxos[10], Raft[11]) and reinforced identification checks[12] to achieve state-machine replication (SMR). Different from Byzantine Fault Tolerance (BFT) protocols (such as PBFT[13], PoS[4], PoW[5]), CFT does not allow the existence of Byzantine nodes in the network. Nonetheless, CFT protocols are designed for low-latency distributed systems[14, 15, 16]. For IoT blockchain systems, sensor data are transmitted using (wireless) sensor networks and are provided through well-defined interfaces, and consensus servers are deployed in Wide Area Network (WAN) environments where the network latency between nodes is unstable[17, 18, 3], as shown in Fig. 1.

As a result, this limitation has several important consequences. First, the massive IoT data lead to data retransmissions when performing SMR due to the high latency, which generates high network traffic between consensus nodes. Second, the centralized SMR incurs worker thread starvation problems on the leader node, which causes a significant decrease in the TPS performance of the blockchain system and increases the high overhead on the leader. Third, followers need to redirect the data to the leader for consistency, which generates additional latency for communicating with a remote leader.

Through research on the data generated by the IoT system, we found that operational non-transactional data are generated at a scale. Different from transactional data, these data contain up-to-date, real-time status information and are less likely to be updated[19][20][21]. Therefore, the availability and timeliness of non-transactional data are the focus of researchers[22][23][24]. To more clearly distinguish the difference between transactional data and non-transactional data, we give an example in Fig. 2.

Unfortunately, the existing CFT-based IoT blockchain system does not have optimized solutions for non-transactional data, i.e, transactional data and non-transactional data are treated the same. Most existing optimization methods for the geo-distributed consistency are implemented with multi-leader, i.e., the data store is divided into shards with different leaders. Although it can significantly reduce the load of the single leader under high latency, when any node is down, the corresponding shard will be temporarily unavailable due to the re-election. Another type of method dynamically designates leaders[25], but its performance is sensitive to the degree of dependence in the data and is likely to cause inconsistency when the number of nodes is large[26].

We want to reduce the load generated by SMR on the leader in the geo-distributed scenario without damaging the power of the leader and changing the election process, thereby improving the performance of the consistency model. It is common to implement SMR using replicated logs. Therefore, our goal is to make the followers undertake as much non-transactional log replication work as possible. The key point is how the logs replicated by the followers are confirmed by each node to reach a consensus. Among them, it is a challenge to design a novel control method for the leader in the log replication process, which can solve the log replication failure problem caused by the downtime of the follower nodes[27, 28]. Besides, how to avoid log submission conflicts when followers cannot obtain the entire cluster status in time is also an important problem that we need to solve.

In this paper, we propose Leader Confirm Replication (LCR), a method for optimizing leader-based consensus performance in geo-distributed consensus. LCR is the first SMR optimization method that does not change the number of leaders and election strategy and does not add strong control roles to non-leader nodes. It achieves consistency by proposing the future-log, which makes followers become the data-leader to replicate the non-transactional data without conflict. The leader only needs to confirm the right replicated future-log by a signal to achieve quorum. In doing so, LCR solves two major problems that we detailed above in geo-distributed scenarios: 1) It decouples the non-transactional data replication process from normal SMR, thereby reducing the leader’s resource consumption and improving the consistency efficiency of the data. 2) The entry status information included in each SMR request is increased by applying signal entries and a leader confirmation mechanism. The contributions include the following points:

1) We analyzed the cause of the concentrated load problem in the leader-based consistency model and discussed the existing optimization models.

2) We designed LCR, detailed the future-log replication model, realized nonconfliction log replication, and proved its availability. In addition, we designed generation to resolve log conflicts that can be caused by membership changes in the cluster.

3) We implemented LCR based on Raft and compared its performance with popular approaches. Then, we verified its availability and the optimization effect on the request latency, TPS, network traffic, and CPU load in ms-latency network environments.

4) We analyzed the overhead of LCR and discussed the application scenarios, which can provide ideas for follow-up studies.

We arranged this paper as follows: In Section II, we detail the related work, and in Section III, we show the problems and motivation for the research. We introduce the future-log replication method of LCR in Section IV, and we explain and prove the availability of the model in Section V. In Section VI, we present the experiments that were conducted and the analysis on LCR, and in Section VII, we discuss the protocol. We summarize our work in Section VIII.

II Related work

II-A Consensus protocols in private blockchains

The rapid development of private chain technology represented by Hyperledger has made geo-distributed blockchain systems widely used[29]. Different from the public chain, any node needs to go through a reinforced identification check before it can participate in the blockchain network[12]. Therefore, malicious behavior in the private chain will be easily detected and traced. It allows the private chain system to weaken the security design in an anonymous environment and adopts a consensus model that supports better TPS performance and scalability[30]. Therefore, the existing widely used private chain architectures use CFT protocols (e.g., Raft[11], Paxos[10]) instead of BFT protocols (e.g., PBFT, PoW, DAG), thereby improving the cluster scalability and the efficiency of the consistency[14, 31].

| Consistency | Byzantine Fault | Single node | Leader strategy | Client response | Major drawback |

|---|---|---|---|---|---|

| model | Tolerate | overhead | |||

| PoW[5] | Yes | High | No leader | Tens minutes | High overhead and long confirmation delay |

| PoS[4] | Yes | High | No leader | Tens seconds | Long confirmation delay |

| DAG[8] | Yes | High | No leader | Subsecond | High dependence on data business |

| PBFT[13] | Yes | High | Centralized | 3 RTT | Low scalability |

| Raft[11] | No | High | Centralized | 2 RTT | Single point overhead |

| Multi-Raft[32] | No | Medium | Centralized | 2 RTT | Frequent shard leader re-election |

| Mencius[33] | No | Medium | Rotational | 2 RTT | Run at slowest node |

| Multi-Paxos[34] | No | High | Centralized | 2 RTT | Single point overhead |

| EPaxos [25] | No | Low | No leader | 1 RTT | Higher CPU consumption and more round trips |

| for analyzing dependency | |||||

| SDPaxos [26] | No | Medium | Centralized | 1.5 RTT | More connections between nodes |

| LCR | No | Low | Centralized | 2 RTT (transactional) | Our solution |

| 1 RTT (non-transactional) |

However, CFT protocols are designed for low latency environments. In high network latency, most geo-distributed CFT protocols use leader-based SMR to achieve consistency, which can avoid the performance loss caused by replication conflict in the SMR process (e.g., the livelock in Paxos[35]). Nonetheless, the overhead of the leader will cause serious performance degradation when the consistency model runs in high concurrency. Although it is a good idea theoretically to dynamically change the controller of the consensus process to the follower nodes, it requires a stable network environment between each node to obtain the real-time node status. Otherwise, the consistency model will run at the speed of the slowest node[25].

II-B Optimization and application of CFT protocols in private blockchain

As we discussed in Section II.A, the CFT consistency model which is commonly used in private chains, meets leader overhead in geo-distributed environments. To address these issues, existing popular approaches reduce the leader overhead by adopting multiple leaders or reducing the frequency of the log replication times by batching operations. We summarize the consensus models mentioned in this section in TABLE 1.

II-B1 Leader or leaderless

Leaderless consistency protocols represented by Paxos are widely recognized. Researchers have optimized Paxos and proposed some variants to improve its performance in high-latency environments. Since Paxos[10] could have livelock problems in WAN environments, multi-Paxos adds the leader role to it. However, this strategy also places a higher load on the leader node. Mencius[33] makes each node take turns to act as the leader to solve this problem, but this approach makes the performance be determined by the slowest node. Therefore, EPaxos[25] maintains a leaderless design, which determines the committing method by analyzing the dependencies between operations. If there is no dependency between the operations, then it will use the fast path to reach an agreement. If there is a dependency conflict, an additional round trip is needed to execute ordering constraints to ensure causal consistency. To reduce the extra delay caused by dependency conflicts, SDPaxos[26] adds a sequencer (which can be understood as the leader) to order the data with dependency. Although it reduces the round trip by 0.5 ,it comes at the cost of a heavier load on the leader and more requests between nodes, which are caused by O-instance. Raft is a simplified version of Paxos. Its single leader strategy requires only one round trip to complete the operation submission. The simplicity of its implementation also makes it widely used in industry and blockchain systems. However, its single-node load problem is an important factor that affects its performance.

II-B2 Multiple leaders/controllers

To avoid conflicts of control rights, the multi-leader model divides the data store into multiple shards with different leaders[36, 37]. This method distributes the log replication operation originally led by one node to each node for execution. Although this approach can reduce the overhead generated by the SMR model, making each node act as a leader will make the data replication requests between nodes more frequent. Therefore, TiDB[32] uses connection reuse to ensure that the replication request and response include the metadata of logs and entries, thereby reducing the number of requests between nodes. However, it still cannot solve the frequent leader re-election problem. When any node in the multi-leader cluster is down, the cluster must invoke re-election; otherwise, the shards that it controls will be unavailable because the node must be the leader of a shard. In addition, during the leader election process, no consensus can be established in the corresponding shards, which will cause availability issues. Therefore, multi-leader strategy is more suitable for use in a low-latency, high-reliability environment.

II-B3 Batching entries

In addition to multi-leader, reducing the number of requests in the SMR protocol is another popular approach to improving the TPS. When the new entries are committed to the leader, using the pipeline to replicate them to the followers is a widely used implementation method of the SMR protocol[38, 39, 40]. Therefore, researchers designed the buffer to merge the entries and replicate them in one request, which can significantly reduce the SMR requests between nodes, thereby achieving the effect of improving the TPS[41, 42].

However, this approach has two potential problems. 1) The average response time will increase. Batching makes the first entry in the buffer wait for subsequent entries to finish writing until the buffer is full, after which the replication method can be invoked by the leader. Nevertheless, it can significantly reduce the replication requests, thereby reducing the load of the leader in high concurrency scenarios. However, when the load is low, the buffer requires a longer time to be fully filled, which will increase the response time[34]. 2) The followers must keep more connections at the same time. The reason is that the leader must wait for the entries to be applied before returning the success message to the client. Therefore, the followers that forward the client request to the leader must also keep the requests alive. However, this approach will increase the latency, especially in single-ms or higher network latency environments.

III Problem statement

The process of SMR between nodes is sensitive to network latency, which easily makes nodes prone to performance problems in geo-distributed systems. Therefore, in this section, we analyze the SMR process in a geo-distributed environment.

III-A SMR performance with million-second latency

Raft numbers the entries and copies them to the follower nodes in order[43]. Thus, Raft can use the of each node to quickly reach a consensus. However, private chains are widely used in supervised multi-subject data-sharing scenarios. The entries they generate contain only a single database operation on new keys. In IoT systems, the leader performs log replication more frequently since the operational non-transactional data are generated on a scale. In addition, the private chain systems need to be deployed in each organization’s datacenter to ensure that they can participate in the consensus[44]. However, we found that the network traffic and I/O overhead generated by the SMR model are very sensitive to network latency. The overhead on the leader will incur higher network overhead and TPS degradation. In addition, most non-transactional requests only insert new key values. The operation of verifying the input and output of the transactions will increase the leader’s computing load, resulting in a significant increase in the response time, and adversely affecting the QoS of the private chain system[45].

To better understand the impact of ms-level latency on the efficiency of consistency in geo-distributed systems, we performed experiments on a real-time cross-datacenter backup system for Kingsoft Cloud’s database, which runs with a 1.5-2 ms network latency, and a Hyperledger-based private chain system, which runs with a 3-7 ms latency. The purpose is to obtain the performance bottleneck of geo-distributed SMR that enables us to make realistic assumptions for the design of LCR-Raft.

In experiments, we send requests concurrently to Raft with numbers of users, and count its TPS and entries’ retransmission times under different network latencies, as shown in Fig. 3.

As the number of clients increases, the TPS and the entries per replication of the cluster gradually rise and reach peak performance when the number of clients is less than 25. At this stage, the cluster does not reach its transaction processing capacity, and thus, a stable response time can be guaranteed. With the increase in the number of clients, we observed that the frequency of replication was significantly reduced and the response time increased, as shown in Fig. 4. This outcome occurs because the leader backlogs clients requests or SMR requests due to high network latency, which leads to worker thread starvation or even deadlocks on the leader[46]. Therefore, the limited processing capacity of the leader is a key performance bottleneck of leader-based consistency models in geo-distributed scenarios.

III-B Network traffic feature of leader

To express the network load feature of the SMR model more clearly, we took Raft as an example to analyze its log replication process. Ongaro provides the pipeline and batching approaches to replicate entries in the initial Raft paper[11, 47]. In practice, there are three main ways to implement it: serial-batching[48], pipeline[49, 32], and full parallel[50]. For LAN environments, high routing performance and a stable network are provided. Therefore, it is a better choice to use full parallel or pipeline methods since it can provide a lower response time. However, in geo-distributed systems, network resource are more expensive. In addition, the unstable network environment also makes designers tend to adopt a conservative replication strategy. Accordingly, pipeline strategies were carefully designed by [32] to improve the efficiency of the log replication in geo-distributed scenarios. In an ideal situation, each entry is transmitted k(n-1) times by the leader. However, estimating the round-trip time (RTT) is a key problem for pipeline-based approaches[47] and is not an easy task in WAN environments[51]. For verification, we counted the entry retransmission of the pipeline-based Raft under different network latencies in Fig. 5.

Therefore, it is one of our research motivations to reduce the leader’s message transmission cost in the WAN environment.

IV Log replication in LCR

LCR designed the future-log to distribute the leader’s log replication work to followers to alleviate its concentrated load in the consistency model. The future-log can be led by any follower and applied to the state machine using a leader confirmation mechanism. Similar to the log in Raft (we call it normal-log in this paper to distinguish it from future-log), future-log is saved as a segmented log on disk and consists of entries and metadata. We call the entries in the future-log future entries and entries in the normal-log normal entries. To make it easier for readers to understand, the figures in this paper combine future-log and normal-log, because future entry and normal entry will not conflict on the index in the same node.

Therefore, LCR will not weaken the power of the leader in the replication process. In addition, since the leader uses the confirmation signal to replace the detailed content of the entry, it reduces the leader’s network resource consumption. Besides, LCR guarantees that followers have the same standing, which greatly reduces the complexity of the protocol design and makes the membership change process clearer than than multi-leader types of approaches. Here, we detail the leader confirmation mechanism with the messaging model of LCR in Fig. 6 and TABLE II.

| Steps | Operations |

|---|---|

| 1 | The follower Node 2 receives the request from the client. Node 2 will create a future entry G, assign a future index to it, and write it to the future log. |

| 2 | Node 2 replicates G to other nodes. |

| 3 | The leader receives a request from the client, it will create a normal entry A and assign it a normal index. Subsequently, the leader found that entry G in the future-log has not been applied to the normal log, the leader assigns a normal index to it and writes it to the normal log. Then, the leader appends normal entries A and signal entry G to each follower. |

| 4 | Node 1 receives the Signal log G, takes the corresponding future entry from the future log according to its future index, replaces the signal log with it, and applies G and A to the normal log. When it is completed, Node 1 responds to the leader that it has applied the index of entry A. |

| 5 | Node 2 receives the signal log, but since it cannot find the future entry G in the future log, it will return index-1 of the signal entry G as its lastAppliedIndex to the leader. |

| 6 | Node 1 knows that future entry G has been committed, and it will return a success message to the client. |

| 7 | The leader learns that more than half of the nodes have applied A; it will commit the entry whose normal index is smaller than A and notify all of the other nodes to commit these entries. Since Node 2 did not successfully apply future entry G, it transmits the raw data of G to Node 2 along with the committed index. |

Besides, LCR does not change the leader election attributes. Taking Raft as an example, LCR only needs to add the property to the request of to replicate the future-log between nodes. In the response of , the of the future-log attribute is added, which is used to acknowledge the data-leader the max index of the future-log that it has applied, as shown in Fig. 7.

Therefore, LCR is still stateless in the future-log replication process, which makes it possible to maintain a low coupling between the nodes and tolerate unstable network environments.

In this section, we answer the following questions: 1) how the future log is generated and replicated to all nodes; 2) how to resolve the conflict when normal log and future log are allocated the same index; and 3) how to reduce the occurrence of conflicts. TABLE III collects our notation.

| Name | Meaning |

|---|---|

| a sequenced number that identifies a node. | |

| the max index of entry that was saved in the segmented log of future-log or normal-log on node . | |

| the next index that need to be replicated to . | |

| a transactional data that is generated from a client. | |

| a non-transactional data that is generated from a client. | |

| the current of node . | |

| normal entry with index . | |

| future entry with index . | |

| the network latency between the cluster and client. | |

| the network latency between the consensus nodes in a cluster. | |

| the time period from normal entry packaging to replication request sending on the leader. | |

| the time period from future entry packaging to replication request sending on followers. | |

| the time period that a data-leader waits for a signal entry to apply data | |

| the latency that is composed of disk operations latency such as log writing and state machine applying. |

IV-A Future-log replication

The numbering method of future entry is similar to the commonly used creasing numbering in the SMR protocol, to make it more applicable to data consistency models. The difference is that the index of two entries that are continuously appended to the future-log can be discontinuous. In addition, the replication process of future-log is led by followers. We call the follower leading a certain future entry replication a data-leader and other nodes are data-followers. When a follower with id receives non-transactional data , the follower will become the data-leader, allocate a future log index to and assemble it into a new future entry . The index allocation method is shown in Equation (1).

| (1) |

where is the max index of future entries that saved, and is the current of ( is an increasing integer as the number of nodes changes and , which we introduce in detail in Section V).

Theorem 1. The index of future entry generated by is globally unique.

Proof. For , we can prove that

| (2) |

because

| (3) |

,

| (4) |

and

| (5) |

Therefore, for servers and , , and the index of future entry that generates must be different from another server .

When , will reject the copy request from . For with an obsoleted , when receives a message from a node with a newer , can find all of the future entries that it created according to Equation (1). Then, reallocates a nonconflicting index for these future entries with (we will detail how servers address the change in Section V.)

After is written on a future-log and committed by the data-leader, it copies to all nodes, as shown in Fig. 8.

The node will reject the future entry replication request from the nodes with a smaller or and return the newer information that it knows to them. It ensures that nodes can reach a consensus on the same future entries if and only if the nodes have the same and . The future entries generated from a node with an obsoleted or will not be applied because they cannot be accepted by quorum. It is very important because the signal entry must correspond to the same future entry on all nodes.

IV-B Signal entry and re-replication

However, the data-leader’s log replication process could have two problems: 1) The leader receives future entry , but parts of the nodes do not receive the . 2) The leader did not receive the from the data-leader.

For the first case, leader does not know which followers have received the . Therefore, the leader will use signal entry to replace . In particular, if the of follower is a future entry, will not be replaced by signal entry. As shown in Fig. 9, S5 did not obtain future entry with , and thus, it can only commit a normal entry with and set to 6 in the return value. For a follower whose indexed entry is a future entry, the leader believes that it has not received the future entry . Therefore, when the leader is packaging entries for replication, it will assemble the entry as a normal entry. Followers will trust the correctness of the leader and commit the entries.

For the second case, i.e., when follower with finds that the normal entry sent by the leader conflicts with , the follower trusts the leader. Then, it will check whether the data-leader of is itself according to Equation (6).

| (6) |

If the result is , then, is the data-leader of , and deletes from the future-log, reallocates the future entry index according to Equation (1), and rewrite it into the future-log. If it is , it will discard . Therefore, regardless of whether the result is or , will be written by , as shown in Fig. 10.

IV-C Future entry index allocation

We designed a window for LCR to manage the writing process of future entries. A window is a continuous range of index, and it has two states: closed and open. When the window is opened, the node can act as a data-leader to write future entries with an index within its window. When the window is closed, the data-leader will no longer be able to generate future entries with an index in this range. However, for node , the state of the window does not reject the entries received from other data-leaders. The condition for the window to close is that the of the normal-log (the log that replicated in the sequenced index in SMR) is greater than or equal to the starting index of the window. The window can avoid the conflict described in Section III.B to a certain extent because it can ensure that the allocated index can keep at a distance from the committed index of the leader, as shown in Fig. 11.

In addition, we have added a property to the window to ensure that there will be no conflict between future entries when the membership changes. We will introduce it in Section V.

V Generation for membership change

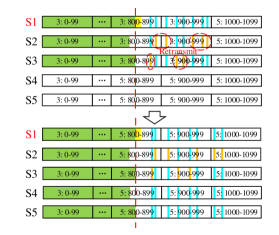

In Section IV.A, we mentioned that since the index allocation of the future entries is related to the server id, the dynamic change of the cluster size (membership change) will make the followers meet an index collision. To address the problem, we introduce the . The is an integer that contains information about the number of nodes in the cluster. The and have similar effects. Nodes with higher will reject future entries’ replication requests from nodes with lower . At the same time, the node will write its in the request and response. When the changes, the follower will re-replicate the unapplied future entries that it saves, as shown in Fig. 12.

In this section, we will explain two key concepts of : 1) The condition that triggers the change. 2) How to re-replicate the future entries for each node to ensure that the entries can keep consistent after the membership changes.

V-A Generation changing

The of a node can change under the following three conditions: 1) The node receives the membership changing configuration from the leader. 2) The node learns a newer from the replication response. 3) The node learns the new from the replication request. When the changes, it will immediately stop sending future entries replication requests and re-allocate the index of the future entries that have not been applied to the state machine in such a way that it can be correctly indexed by all nodes. In addition, to facilitate index calculation between nodes, we can use the number of nodes in the cluster as the . The ’s change rules are flexible. For example, for clusters that frequently change membership, we can take the surplus of to reflect the reduction in the number of nodes.

Theorem 2. The increase in will not cause conflicts between future-logs and normal-logs.

Proof. For node with , future entry and , when the of becomes , , the new index of is

| (7) |

Based on Equation (1), will not duplicate with other future entries in . In addition, suppose that when the increases, the leader’s of the normal-log is . Then, because if , must have been committed in the normal-log according to Section IV.B. Here, we prove that and thus prove . From Equation (2), we have the following:

| (8) |

can be presented as

| (9) |

Since and are integers, we have .

| (10) |

Therefore, the increase in will not cause to conflict with normal entries because is larger than any other normal entries. If can be applied, then must have been successfully replicated to half of the nodes that contain the leader. This circumstance means that does not conflict with the normal entries of the leader and thus will not conflict with the normal entries of other nodes, because must be greater than or equal to the of the normal-log of all other nodes.

| Property | Configuration | Comment |

|---|---|---|

| election timeout | 5000 ms | A follower becomes a candidate if it does not receive any message from |

| the leader in election timeout | ||

| max await timeout | 1000 ms | The maximum waiting timeout for replicate request. |

| heartbeat | 500 ms | A leader sends RPCs at least this often, even if there is no data to send. |

| max flying requests | 16 | Maximum number of concurrent RPC requests. |

| max entries per request | 5000 | Maximum number of entries in each RPC request. |

| max segment file size | 100 MB | Maximum number of bytes in each segment. |

| snapshot period | 60 s | Snapshot interval of each node. |

V-B Re-replication in generation changes

As shown in Section IV, the index allocation method of the future entry is related to the number of nodes. future-log windows with different cannot keep the same content in future-logs. Therefore, when the node is notified of the changing, it needs to re-allocate and re-replicate the future entries. In detail, when follower obtains the message of update, it will immediately record all future entries whose index is larger than the of the normal-log, and clear the windows with the old . At the same time, it creates a new window with updated , reallocates new indexes for these future entries, and starts to replicate the future entries with the new . Since the of the normal-log on the follower must be less than or equal to the value on the leader, the follower will not conflict with the entries that have been committed in the normal-log, as shown in Fig. 13.

S1, S2, and S3 store some future entries in two windows: index range 800-899 and , index range 900-999 and . When the cluster size changes from 3 to 5, is also updated to 5. S2 and S3 need to recreate the window with . In the window where , the nodes will reallocate the index of future entries in it to the new window according to Equation (1) .

Theorem 3. After the generation is updated, the re-replication process will not cause inconsistencies in the future-log.

Proof. In node , for an unapplied future entry with generation , , and of the normal-log is , . When is updated to , the new index of is . In this case, node will replicate to all other nodes with generation . If for node , its of the normal-log is greater than or equal to , it will ignore , which also indicates that the leader’s of the normal-log is greater than . Therefore, cannot be applied since it will not be committed by quorum. Node needs to re-allocate the index of according to the re-replication strategy in Section IV.B. If for node , its of normal-log is less than , it will accept on the premise of confirming that its . According to Theorem 1, the acceptance of will not cause conflict.

VI Experiments and analysis

Due to the complexity of Paxos implementation, Raft has become the main consensus protocol adopted by the private chain due to its advantages in implementation difficulty and efficiency. Therefore, we implemented LCR based on Raft and evaluated it under different network latencies and node numbers to test its scalability and robustness. First, we experiment on the correctness of LCR-Raft to verify its protocol’s functional support for native Raft. Second, we evaluate the performance of LCR-Raft to verify its improvement in specific business scenarios. Finally, we compare the optimization effect of LCR-Raft on the leader node in terms of the CPU and network traffic consumption with native Raft and multi-Raft.

VI-A Environments and configurations

In order to verify the performance improvement effect of LCR-Raft, we implemented it in the open-source Raft consistency model[50]. Among them, the RPC protocol is implemented using brpc[52], and RocksDB[53] 5.1.4 is used to store state machine data. All of the instances are deployed on 3, 5, 7, and 9 servers (according to the number of nodes in the different experimental configurations) with a Xeon E5-2620 v4 CPU, 64 GB of RAM, 4 TB, and 7200 RPM SATA disk. The system runs CentOS Linux release 7.5.1804, 64 bit, with Linux kernel Version 3.10.0 The switch uses H3C Mini S8G-U, with 16 Gbps switching capacity and 12 Mpps forwarding performance.

Our client runs on 2 servers with 6 core 12 thread AMD 3600 CPU and 32 GB memory, and the default number of client jobs is 40. The non-transactional data are the real-time sensor data generated from our blockchain water quality monitoring project, while the transactional data are value transferring operations. The default transactional data and non-transactional data size are 80 bytes. The base configuration of Raft, LCR, and multi-Raft is detailed in TABLE IV.

It is worthwhile to note that to compare LCR-Raft and other consistency models fairly, we use pipelines to implement SMR, i.e., each log contains only one transaction, and the consensus node immediately invokes remote replication when it generates future entries. The reason is that our work focuses on the optimization of SMR. Although the use of batch messages could improve TPS tens to hundreds of times, its effect is related to the number of clients, the request frequency, the batch size, and the dynamically adjusted request queue, which makes it unable to truly reflect the contributions of our work.

VI-B Response time

LCR decouples the replication process of future-log and normal-log. Therefore, for transactional data, the client can still use a normal-log to submit the transactional data. For non-transactional data, using a future-log can significantly reduce the leader’s overhead and the response time of normal entry requests (because normal entries will be processed immediately by the leader). Therefore, in this section, we analyze and test the latency of applying transactional and non-transactional data, and compare it with Raft to verify its optimization effect.

For non-transactional data , the latency is

| (11) |

where the is the network latency between the cluster and client, is the network latency between consensus nodes in the cluster, and are the time periods from entry packaging to replication request sending on the leader and followers, and is the time period during which the data-leader wait for a signal entry to apply the data. Here, is composed of the disk operation latency, such as log writing and state machine applying.

For transactional data , the latency is

| (12) |

In and , is generated from the network latency between client the and nodes. As shown in Figure. 6, it needs for and for to respond to the clients.

For , since the clients do not know which node is the leader, both transactional data and non-transactional data can be sent to any node. When transactional data are sent to a follower, the follower redirects the request to the leader. In this case, an additional RTT is generated from the redirection. Since this case occurs at a probability, the expected latency is . The non-transactional data need to respond to clients since they will not be redirected.

In addition to the , the differences in latency for and are , , and since and are fixed latencies. From the experiments in Figure. 4, it can be found that the additional latency is generated from (tens-ms) because all other conditions are the same besides the request frequency from the clients. The limited worker threads and high network latency make higher as the client increases. Similar to , increases because the high request frequency of non-transactional data makes followers run under high loads. Here, could be higher than 1 RTT since the leader might not send signal entries to the data-leader immediately when it receives future entries. Besides, the overhead on the leader could amplify . However, will decrease significantly because the proportion of normal entries decreases. It allows the leader to reduce the number and frequency of normal entry replication operations. Therefore, the response time of the transactional data in LCR is lower than in Raft.

We tested the effect of LCR and Raft on TPS and the response time in a 5-node, 5 ms latency environment, which is the common network latency between distributed blockchain systems. The proportion of non-transactional (LCR future entries) data was 25%. The result is shown in Fig. 15.

In terms of the TPS, LCR outperforms approximately 30% when the client count is larger than 20. For the response time, as the number of clients and the entry size increase, resource starvation becomes more serious. This circumstance makes the effect of the LCR on reducing the concentrated load more obvious. LCR can provide a 40%-60% response time decrease for transactional data (LCR normal entries), which matches the observation in Fig. 3.

VI-C LCR re-election

To show the availability of LCR, we test the node failures in the cluster. In this experiment, LCR, Raft, and multi-Raft run in a 5-node, 5 ms network latency environment. We randomly stop a non-leader node (an arbitrary node for multi-Raft) at the 10th second and reconnect it to the cluster at the 16th second. In the 25th second, we stop the leader node (an arbitrary node for multi-Raft) and no longer recover it. The result is shown in Fig. 14.

LCR: When the data leader is offline, the future entries that it generates might have not been replicated to all nodes. In this case, the followers that did not receive these future entries will ask the leader to re-replicate these entries, since the leader uses signals to replicate them (as described in Section IV.B). This circumstance will destroy the performance improvement brought by batching. However the performance can be quickly restored when these entries are handled. Nonetheless, the TPS will decrease slightly before the node recovers since the downtime of the followers reduces the frequency of future-entry generation, which reduces the optimization effect of LCR. During the 10th second to the 16th second, other followers can still act as data-leaders to replicate future entries. Therefore, the performance is not adversely affected (from approximately 1700 TPS to 1600 TPS). When the leader node fails (at the 25th second), it will trigger re-election, which makes the service unavailable during this period. When the new leader is elected (at the 31st second), the TPS performance can recover.

Multi-Raft: In multi-Raft, every node is the leader of shards. For a shard whose leader is , failure on will make unavailable because of re-election. Although the TPS does not drop to 0 (from approximately 1200 TPS to 800 TPS), the remaining TPS is contributed by the active shards. Therefore, the performance of multi-Raft is more sensitive to node failures, since when any node fails, performance fluctuations will occur. However, in LCR and Raft, a non-leader node failure will not lead to serious performance loss. In addition, the availability problem still exists in multi-Raft. Since the probability of each node being offline is the same, the total availability performance is the same as that of Raft.

Raft: Raft suffers the least performance loss when facing a failure of the follower node. However, due to the high load on the leader, its performance under a 5 ms network latency is only approximately 60% (approximately 1200 TPS to 1700 TPS) of the LCR.

VI-D LCR performance experiments

As an important performance indicator of the SMR protocol, we tested the TPS of LCR. In addition, we conducted experiments on the CPU load and network traffic to verify its effectiveness in reducing the load of the leader.

VI-D1 TPS

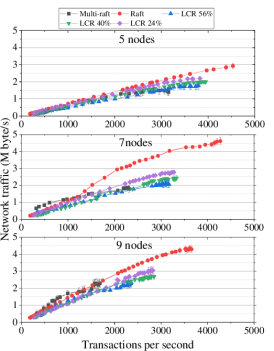

We tested the performance of LCR in terms of the TPS under different cluster sizes and different network latency environments. We designed three clusters with node numbers of 5, 7, and 9. In terms of the network latency setting, we use the command of Linux to set the simulated delay on the network card and design 41 test points, which are 0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1, 1.1, 1.2, 1.3, 1.4, 1.5, 1.6, 1.7, 1.8, 1.9, 2, 2.5, 3, 3.5, 4, 4.5, 5, 6, 7, 8, 9, 10, 12, 14, 16, 18, 20, 22, 24, 26, 28, and 30. The probability of the network latency fluctuation is 30%, 0.1 ms. We compared LCR-Raft with Raft and multi-Raft. Among them, we optimized multi-Raft using RPC reuse to improve the communication efficiency. On the client side, we created 40 clients to compete to call the RPC protocol to make the cluster work under the maximum load. For LCR, we designed 3 workloads with different non-transactional data (future entries) ratios, LCR 24%, LCR 40%, and LCR 56% to show the effect of the proportional relationship between the two on the performance of the mechanism. The experiment results are shown in Fig. 16.

To more clearly show the performance of LCR under different network latencies, we divide the performance comparison chart into three intervals (low latency, medium latency, and high latency) at 2 ms and 10 ms. When the network latency is of medium latency and high latency, the LCR has a significant improvement compared with the Raft of approximately 1.4X-1.9X. Under the workload with a higher proportion of future entries, the LCR also has a significant improvement compared to multi-Raft. In the low latency interval, Raft has better TPS performance when the latency is less than 1 ms. Therefore, the effect produced by our model is more suitable for geo-distributed systems with ms-network latency.

VI-D2 CPU loads

Since the replication process of the future-log is dominated by followers, it will increase the CPU load on the followers in LCR. Although the leader does not need to control the replication process of future-logs, it still needs to handle signal replacement and retransmission. Therefore, LCR can also generate CPU overhead to the leader. As shown in 6.1, under the same network latency, different consistency protocols have obvious gaps in the TPS. Considering that it is unfair to directly compare the CPU load when the network latency is the same, we use TPS as an x-axis to observe the CPU load of the consistency protocols when they have the same TPS. The experiment results are shown in Fig. 17.

The CPU load of the LCR leader node is similar to that of Raft. When the number of nodes is 5, the CPU load of LCR is slightly higher than that of Raft. When the number of nodes is 9, the CPU load is reduced by 5%-10%.

VI-D3 Network traffic

As with the CPU load, we also use TPS as a variable to compare their network traffic. Since LCR transfers most of the log replication work to followers, when the TPS is the same, the leader node will generate smaller network traffic. Similarly, the degree of network traffic savings is also related to the non-transactional data ratio. Therefore, we computed independent statistics on the LCR traffic under different non-transactional data ratios. The experiment results are shown in Fig. 18.

LCR can save 20%-30% of the network traffic of the leader node. We can conclude that LCR achieves the same TPS as Raft with a lower throughput rate, which can effectively provide saving in the costs of network construction.

VI-E Overhead analysis

LCR can generate additional resource costs according to the following three points. 1) Network traffic: This cost arises because the follower nodes undertake part of the log replication work. As a result, the network traffic is increased in the followers. 2) Memory consumption: the leader needs memory to cache the relational mapping to ensure the writing efficiency of the future-log. 3) Computational resource consumption: future-log replication requires index calculation and verification. We calculated statistics on these costs to measure whether the extra cost generated by LCR is acceptable.

First, we measure the network traffic. Since additional network traffic is generated on the follower nodes, we will not compare the leader’s network traffic. Here, we count the extra network traffic with different proportions of future entries and compare it with the traffic consumption of multi-Raft. The traffic consumption of multi-Raft is the average of all of the nodes. Similar to Section VI.C, we still compare the traffic consumption under the same TPS. The experiment results are shown in Fig. 19. The additional network traffic generated by LCR is much less than that generated by multi-Raft.

The consumption of the network traffic generated by LCR is much less than that of multi-Raft traffic. At the same time, we found that at the same TPS, the network consumption of the followers will decrease as the cluster size increases. This finding is in line with our expectations because each follower needs to replicate fewer future entries.

In the consistency protocol, the entries must be written to the segmented log and memory at the same time before it is applied to the state machine to ensure that the received log will not be lost. Therefore, future entries need to consume additional memory. We tested the LCR on the extra main memory consumption. The experiments were run in an environment in which the number of consensus nodes is 5, 7, and 9, the average network latency is 5 ms, and the average size of key-value pairs is 80 bytes. The experiment results are shown in Fig. 20.

The experiments show that the consumption of LCR on the main memory is acceptable (KB level) and that the proportion of future entries is positively related to the additional consumption of the main memory. When the number of future entries is too large, the growth rate of its index will far exceed that of normal entries. However, applying the future entries to the state machine must wait for the normal entries whose index is greater than it to be applied. In extreme cases, such as when the proportion of future entries reaches 99%, too many future entries will make the growth rate of the normal entries index unable to catch up with the future entries index. This circumstance will cause future entries to wait a very long time before it can be applied. To address this problem, we designed a stepping mechanism to allow the leader to confirm future-entries in advance.

For computational resources, LCR only needs extremely low complexity such as list and hash map lookup. Although we calculated statistics on leader resource consumption in Section VI.C, our model did not cause a large overhead on the leader node. However, our model will generate an additional CPU load on the follower nodes. We calculate statistics on the CPU load of followers on Raft, multi-Raft, and LCR under different TPSs in a 9-node cluster in Fig. 21.

The results show that the CPU load of LCR will not exceed the leader in Raft. This finding is acceptable since each node should have similar processing capabilities to be elected as the leader. Therefore, the CPU load of LCR will not cause performance degradation.

VII Discussion

VII-A Confirmation vs. multi leader

LCR is an SMR model based on leader confirmation, but it performs better than semi-synchronous in security. LCR did not decentralize the power of the leader. There is a large number of existing methods that use multi-leader to reduce the load on the leader, such as multi-Raft[32] and Hash Raft[36], etc. However, regardless of the implementation, it will face the following two problems: 1) When any node becomes unavailable, the cluster must invoke re-election. 2) Each pair of nodes must synchronize their online status through heartbeats. The leader confirmation method solves these two problems well. First, when the follower node is unavailable, it cannot copy and receive the log from followers and the leader. This circumstance does not require the re-election process. At the same time, compared with multi-Raft, it does not require heartbeats to maintain the online status of each node. Because it does not need the followers to know whether other nodes are online, the follower only needs to know whether the future-logs are accepted by half nodes that contain the leader. Therefore, it does not need to reuse the connection to reduce the network load between nodes, which greatly reduces the complexity of the protocol. In addition, LCR does not guarantee that every future-log has been written into the state machine when it returns a success message to the client. However it will ensure that data will be written to the state machine at some point in the future, which also makes it applicable to more complex network environments.

VII-B More than Raft

LCR is an SMR model based on leader confirmation, but it performs better than semi-synchronous in terms of consistency. LCR did not decentralize the power of the leader. There is a large number of existing methods that use multi-leader strategies to reduce the concentrated load[32, 36]. However, regardless of the implementation, it will face the following two problems: 1) When any node becomes unavailable, the cluster must invoke re-election. 2) Each pair of nodes needs to synchronize their online status through heartbeats. LCR solves these two problems well. For the follower node failure cases, the node cannot copy or receive the log from followers and the leader. It does not require re-election. At the same time, compared with multi-Raft, LCR does not require heartbeats to maintain the online status of each node. Because followers do not need to know whether other nodes are online, the only thing they care about is whether the future-entries are confirmed by the leader, which greatly reduces the complexity of the protocol.

VII-C Cost

The improvement brought by the LCR does not cause additional costs. As described in Section VI.D, the overhead does not cause consumption that exceeds the original system capacity. Because the log replication process of the SMR protocol is extremely concise, the network, CPU, and other overhead are very sensitive to consensus protocols. As we described in Section II, the increase in the latency will make the TPS drop significantly. To reduce the network latency between geo-distributed nodes, it might be necessary to establish a dedicated network, which is very difficult in terms of feasibility. Although the cost of upgrading the CPU and router is lower, it can also improve the optimization effect brought by the LCR. Therefore, the LCR can trade at a lower cost for the improvement of transactional data consistency efficiency and TPS. In addition, as described in Section VI.C, the LCR can reduce substantially the network traffic. For cluster deployment in WAN, network bandwidth or traffic must be purchased from the Internet Service Provider (ISP), and the cost is positively related to the bandwidth or traffic. Therefore, the LCR can effectively save approximately 20%-30% of the network construction costs.

VIII Conclusions

In this paper, we propose LCR for geo-distributed consensus and non-transactional data. A novel leader confirmation mechanism is proposed, which can reduce the leader’s concentrated load. LCR implements a future-log on the SMR protocol and proves its availability. It makes LCR transparent to the election mechanism, which can support various consensus protocols, and combine with other optimization schemes. We implemented LCR based on Raft and tested it in clusters with 3, 5, 7, and 9 nodes. Experimental results show that LCR improves the TPS at 1.4X-1.9X that of Raft, reduces the transactional data response time by 40%-60%, and the network traffic by 20%-30% when the network latency is greater than 1.5 ms compared with Raft. In addition, the overhead on the followers is much lower than that of multi-Raft, and the CPU consumption on the followers is lower than that of the leader node in Raft.

Acknowledgment

This work is supported by the Fundamental Research Funds for the Central Universities (grant no. HIT.NSRIF.201714), Weihai Science and Technology Development Program (2016DXGJMS15), and the Key Research and Development Program in Shandong Province (2017GGX90103).

References

- [1] S. K. Singh, S. Rathore, and J. H. Park, “Blockiotintelligence: A blockchain-enabled intelligent iot architecture with artificial intelligence,” Future Generation Computer Systems, vol. 110, pp. 721–743, 2020.

- [2] S. Schulte, D. Schuller, R. Steinmetz, and S. Abels, “Plug-and-play virtual factories,” IEEE Internet Computing, vol. 16, no. 5, pp. 78–82, 2012.

- [3] B. Cao, Y. Li, L. Zhang, L. Zhang, S. Mumtaz, Z. Zhou, and M. Peng, “When internet of things meets blockchain: Challenges in distributed consensus,” IEEE Network, vol. 33, no. 6, pp. 133–139, 2019.

- [4] A. Kiayias, A. Russell, B. David, and R. Oliynykov, “Ouroboros: A provably secure proof-of-stake blockchain protocol,” in Annual International Cryptology Conference. Springer, 2017, pp. 357–388.

- [5] S. Nakamoto and A. Bitcoin, “A peer-to-peer electronic cash system,” Bitcoin. URL: https://bitcoin.org/bitcoin.pdf, vol. 4, 2008.

- [6] E. Bandara, W. K. Ng, K. De Zoysa, N. Fernando, S. Tharaka, P. Maurakirinathan, and N. Jayasuriya, “Mystiko—blockchain meets big data,” in 2018 IEEE International Conference on Big Data (Big Data). IEEE, 2018, pp. 3024–3032.

- [7] B. Cao, Z. Zhang, D. Feng, S. Zhang, L. Zhang, M. Peng, and Y. Li, “Performance analysis and comparison of pow, pos and dag based blockchains,” Digital Communications and Networks, vol. 6, no. 4, pp. 480–485, 2020.

- [8] S. Popov, “The tangle,” White paper, vol. 1, no. 3, 2018.

- [9] Y. Li, B. Cao, M. Peng, L. Zhang, L. Zhang, D. Feng, and J. Yu, “Direct acyclic graph-based ledger for internet of things: performance and security analysis,” IEEE/ACM Transactions on Networking, vol. 28, no. 4, pp. 1643–1656, 2020.

- [10] L. Lamport et al., “Paxos made simple,” ACM Sigact News, vol. 32, no. 4, pp. 18–25, 2001.

- [11] D. Ongaro and J. Ousterhout, “In search of an understandable consensus algorithm,” in 2014 USENIX Annual Technical Conference (USENIXATC 14), 2014, pp. 305–319.

- [12] Y. Chen, J. Gu, S. Chen, S. Huang, and X. S. Wang, “A full-spectrum blockchain-as-a-service for business collaboration,” in 2019 IEEE International Conference on Web Services (ICWS). IEEE, 2019, pp. 219–223.

- [13] M. Castro, B. Liskov et al., “Practical byzantine fault tolerance,” in OSDI, vol. 99, no. 1999, 1999, pp. 173–186.

- [14] D. Huang, X. Ma, and S. Zhang, “Performance analysis of the raft consensus algorithm for private blockchains,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 50, no. 1, pp. 172–181, 2019.

- [15] J. C. Corbett, J. Dean, M. Epstein, A. Fikes, C. Frost, J. J. Furman, S. Ghemawat, A. Gubarev, C. Heiser, P. Hochschild et al., “Spanner: Google’s globally distributed database,” ACM Transactions on Computer Systems (TOCS), vol. 31, no. 3, pp. 1–22, 2013.

- [16] M. K. Aguilera, N. Ben-David, R. Guerraoui, V. J. Marathe, A. Xygkis, and I. Zablotchi, “Microsecond consensus for microsecond applications,” in 14th USENIX Symposium on Operating Systems Design and Implementation (OSDI 20), 2020, pp. 599–616.

- [17] X. Zhou, K. Wang, W. Jia, and M. Guo, “Reinforcement learning-based adaptive resource management of differentiated services in geo-distributed data centers,” in 2017 IEEE/ACM 25th International Symposium on Quality of Service (IWQoS). IEEE, 2017, pp. 1–6.

- [18] J. Hasenburg and D. Bermbach, “Geobroker: Leveraging geo-contexts for iot data distribution,” Computer Communications, vol. 151, pp. 473–484, 2020.

- [19] T. Li, Y. Liu, Y. Tian, S. Shen, and W. Mao, “A storage solution for massive iot data based on nosql,” in 2012 IEEE International Conference on Green Computing and Communications. IEEE, 2012, pp. 50–57.

- [20] D. G. Chandra, “Base analysis of nosql database,” Future Generation Computer Systems, vol. 52, pp. 13–21, 2015.

- [21] P. Viotti and M. Vukolić, “Consistency in non-transactional distributed storage systems,” ACM Computing Surveys (CSUR), vol. 49, no. 1, pp. 1–34, 2016.

- [22] D. B. Terry, A. J. Demers, K. Petersen, M. J. Spreitzer, M. M. Theimer, and B. B. Welch, “Session guarantees for weakly consistent replicated data,” in Proceedings of 3rd International Conference on Parallel and Distributed Information Systems. IEEE, 1994, pp. 140–149.

- [23] Y. Saito and M. Shapiro, “Optimistic replication,” ACM Computing Surveys (CSUR), vol. 37, no. 1, pp. 42–81, 2005.

- [24] W. Vogels, “Eventually consistent,” Communications of the ACM, vol. 52, no. 1, pp. 40–44, 2009.

- [25] I. Moraru, D. G. Andersen, and M. Kaminsky, “There is more consensus in egalitarian parliaments,” in Proceedings of the Twenty-Fourth ACM Symposium on Operating Systems Principles, 2013, pp. 358–372.

- [26] H. Zhao, Q. Zhang, Z. Yang, M. Wu, and Y. Dai, “Sdpaxos: Building efficient semi-decentralized geo-replicated state machines,” in Proceedings of the ACM Symposium on Cloud Computing, 2018, pp. 68–81.

- [27] A. Ganesan, R. Alagappan, A. Arpaci-Dusseau, and R. Arpaci-Dusseau, “Strong and efficient consistency with consistency-aware durability,” in 18th USENIX Conference on File and Storage Technologies (FAST 20), 2020, pp. 323–337.

- [28] J.-S. Ahn, W.-H. Kang, K. Ren, G. Zhang, and S. Ben-Romdhane, “Designing an efficient replicated log store with consensus protocol,” in 11th USENIX Workshop on Hot Topics in Cloud Computing (HotCloud 19), 2019.

- [29] M. Muzammal, Q. Qu, and B. Nasrulin, “Renovating blockchain with distributed databases: An open source system,” Future generation computer systems, vol. 90, pp. 105–117, 2019.

- [30] M. Vukolić, “The quest for scalable blockchain fabric: Proof-of-work vs. bft replication,” in International workshop on open problems in network security. Springer, 2015, pp. 112–125.

- [31] D. Mingxiao, M. Xiaofeng, Z. Zhe, W. Xiangwei, and C. Qijun, “A review on consensus algorithm of blockchain,” in 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, 2017, pp. 2567–2572.

- [32] D. Huang, Q. Liu, Q. Cui, Z. Fang, X. Ma, F. Xu, L. Shen, L. Tang, Y. Zhou, M. Huang et al., “Tidb: a raft-based htap database,” Proceedings of the VLDB Endowment, vol. 13, no. 12, pp. 3072–3084, 2020.

- [33] C.-S. Barcelona, “Mencius: building efficient replicated state machines for wans,” in 8th USENIX Symposium on Operating Systems Design and Implementation (OSDI 08), 2008.

- [34] L. Lamport, “The part-time parliament,” in Concurrency: the Works of Leslie Lamport, 2019, pp. 277–317.

- [35] E. Michael, D. Woos, T. Anderson, M. D. Ernst, and Z. Tatlock, “Teaching rigorous distributed systems with efficient model checking,” in Proceedings of the Fourteenth EuroSys Conference 2019, 2019, pp. 1–15.

- [36] J. Yang and H. Shen, “Blockchain consensus algorithm design based on consistent hash algorithm,” in 2019 20th International Conference on Parallel and Distributed Computing, Applications and Technologies (PDCAT). IEEE, 2019, pp. 461–466.

- [37] H. Howard, M. Schwarzkopf, A. Madhavapeddy, and J. Crowcroft, “Raft refloated: Do we have consensus?” ACM SIGOPS Operating Systems Review, vol. 49, no. 1, pp. 12–21, 2015.

- [38] Z. Zhang, H. Hu, Y. Yu, W. Qian, and K. Shu, “Dependency preserved raft for transactions,” in International Conference on Database Systems for Advanced Applications. Springer, 2020, pp. 228–245.

- [39] B. Carmeli, G. Gershinsky, A. Harpaz, N. Naaman, H. Nelken, J. Satran, and P. Vortman, “High throughput reliable message dissemination,” in Proceedings of the 2004 ACM symposium on Applied computing, 2004, pp. 322–327.

- [40] R. Friedman and E. Hadad, “Adaptive batching for replicated servers,” in 2006 25th IEEE Symposium on Reliable Distributed Systems (SRDS’06). IEEE, 2006, pp. 311–320.

- [41] W. Cao, Z. Liu, P. Wang, S. Chen, C. Zhu, S. Zheng, Y. Wang, and G. Ma, “Polarfs: an ultra-low latency and failure resilient distributed file system for shared storage cloud database,” Proceedings of the VLDB Endowment, vol. 11, no. 12, pp. 1849–1862, 2018.

- [42] D. Wang, P. Cai, W. Qian, A. Zhou, T. Pang, and J. Jiang, “Fast log replication in highly available data store,” in Asia-Pacific Web (APWeb) and Web-Age Information Management (WAIM) Joint Conference on Web and Big Data. Springer, 2017, pp. 245–259.

- [43] P. Sharma, R. Jindal, and M. D. Borah, “Blockchain technology for cloud storage: A systematic literature review,” ACM Computing Surveys (CSUR), vol. 53, no. 4, pp. 1–32, 2020.

- [44] Y. Yao, X. Chang, J. Mišić, V. B. Mišić, and L. Li, “Bla: Blockchain-assisted lightweight anonymous authentication for distributed vehicular fog services,” IEEE Internet of Things Journal, vol. 6, no. 2, pp. 3775–3784, 2019.

- [45] Y. Zhang, S. Setty, Q. Chen, L. Zhou, and L. Alvisi, “Byzantine ordered consensus without byzantine oligarchy,” in 14th USENIX Symposium on Operating Systems Design and Implementation (OSDI 20), 2020, pp. 633–649.

- [46] H. Moniz, J. Leitão, R. J. Dias, J. Gehrke, N. Preguiça, and R. Rodrigues, “Blotter: Low latency transactions for geo-replicated storage,” in Proceedings of the 26th International Conference on World Wide Web, 2017, pp. 263–272.

- [47] D. Ongaro, Consensus: Bridging theory and practice. Stanford University, 2014, vol. 1.

- [48] Sofa-jraft. [Online]. Available: https://github.com/sofastack/sofa-jraft

- [49] Etcd raft. [Online]. Available: https://github.com/coreos/etcd/tree/master/raft

- [50] Raft-java. [Online]. Available: https://github.com/wenweihu86/raft-java

- [51] S. Yasuda and H. Yoshida, “Prediction of round trip delay for wireless networks by a two-state model,” in 2018 IEEE Wireless Communications and Networking Conference (WCNC). IEEE, 2018, pp. 1–6.

- [52] Brpc. [Online]. Available: https://github.com/apache/incubator-brpc

- [53] Rocksdb. [Online]. Available: http://rocksdb.org/