lava: Label-efficient Visual Learning and Adaptation

Abstract

We present lava, a simple yet effective method for multi-domain visual transfer learning with limited data. lava builds on a few recent innovations to enable adapting to partially labelled datasets with class and domain shifts. First, lava learns self-supervised visual representations on the source dataset and ground them using class label semantics to overcome transfer collapse problems associated with supervised pretraining. Secondly, lava maximises the gains from unlabelled target data via a novel method which uses multi-crop augmentations to obtain highly robust pseudo-labels. By combining these ingredients, lava achieves a new state-of-the-art on ImageNet semi-supervised protocol, as well as on 7 out of 10 datasets in multi-domain few-shot learning on the Meta-dataset.111Code:github.com/islam-nassar/lava.git

1 Introduction

Using limited data to effectively adapt to new tasks is a challenging but essential requirement for modern deep learning systems. It enables leveraging the power of such systems while avoiding excessive data annotation which is usually costly, time consuming, and often requires domain expertise [9, 18, 55]. A promising direction is to develop methods that are capable of transferring knowledge across collective data of many tasks. In this work, we examine low-resource multi-domain visual transfer: given a visual learner pretrained on a source dataset222We use ImageNet [42] as the source dataset in all our experiments., our goal is to effectively transfer to a target dataset with potential class and/or domain shift. We focus on low-resource cases where the target dataset is very small but fully labelled (as in few-shot learning - FSL); or cases where it is sufficiently large but only partially labelled (as in semi-supervised learning - SSL). We propose a transfer method which simultaneously addresses such cases and demonstrates a strong performance on multiple transfer benchmarks.

lava’s first design goal is to employ a pretraining strategy which supports generalisation beyond classes and domains with limited labelled data. Hence, we begin by investigating the effect of source pretraining on the visual transfer performance. In line with recent research, we find that supervised pretraining leads to sub-optimal transfer[13, 2, 32]. Supervision with labels, more often than not, is too eager to learn specialised features which can successfully discriminate the source classes/domains but fails to generalise beyond them. We argue that its other limitation is the semantic-free nature of the labels used. Labels are represented using one-hot vectors explicitly encouraging to ignore label semantics. For example, the learner is encouraged to treat “bus” and “school bus” as two unrelated classes. In Fig. 2, we use DomainNet [35] clipart dataset to qualitatively demonstrate two artifacts associated with supervised pretrained representations: class collapse, whereby the pretrained representations collapse into incorrect source classes just because they share superficial similarities with target classes; and domain collapse, where the class semantics are preserved but the visual domain information is disregarded. lava’s first ingredient is introducing a two-fold approach to address the collapse problem: 1) self-supervised source pretraining to learn task-independent features leading to better transfer; and 2) using the language modality to ground the self-supervised representations to an independent semantic space: during pretraining, lava uses source class labels to learn a mapping between the visual representations of the source instances and the language semantic representation of their class labels. At transfer time, such mapping is used to infer relations between “seen” and “unseen” classes by virtue of their foreknown semantic similarities. (Sec. 3.1)

lava’s second design goal is to leverage unlabelled target data (if available) to improve transfer performance. For that, we employ multi-crop augmentation which was originally proposed to encourage learning representations that are invariant to spatial augmentations for self-supervised contrastive learning [8]. We extend the idea to semi-supervised learning exploiting the observation that images can often contain multiple semantic classes and hence using a single label per image can hurt the performance. Consider the image in Fig. 3, due to random cropping used during training, it is conceivable that the teacher model (performing the pseudo-labeling) receives a view centered around a different object than the student, leading to a label that is not compatible with the image. Therefore, we calculate pseudo-labels based on multiple local and global views of images to account for those containing multiple semantic concepts. We show that enforcing a single pseudo-label per image is sub-optimal. Instead, applying a pair-wise aggregate loss across multiple views enhances the quality of pseudo-labels. (Sec. 3.2)

lava’s main contributions are:1) a practical method which combines and extends a few recent innovations to address various transfer learning regimes; 2) provides empirical insights about transfer collapse problems associated with supervised pretraining and proposes a strategy to address them; 3) extends the multi-crop augmentation strategy to the semi-supervised setting via pseudo-labeling; 4) sets a new state-of-the-art on the ImageNet [42] SSL benchmark and demonstrates strong performance on other challenging SSL and FSL benchmarks including the Meta-Dataset [52].

2 Related Work

Few-Shot learning (FSL) Existing FSL approaches can be categorized into metric-learning methods that learn an embedding space to compare query and support samples [45, 24, 54, 34], meta-learning approaches that adapt a base learner to new classes [15, 28, 21, 43, 7, 26, 39], or a combination of both [52]. Most existing FSL methods work well when the train and test sets are from the same domain e.g. subsets of ImageNet (mini-ImageNet [54], and tiered-ImageNet [40]). They lack out-of-domain (OOD) generalization, once there is a distribution mismatch between train and test data. Recently introduced meta-dataset [52] provides a challenging benchmark for multi-domain FSL. FSL methods developed on meta-dataset therefore aim to tackle OOD generalization [29, 13]. For instance, Transformer module is employed to capture relationships between different domains in [29, 13]. Even though our approach is generic across different low-label regimes, our results on meta-dataset show that we perform favorably against recent approaches which are specifically developed for FSL.

Semi-supervised learning. A common approach for SSL is to train the model with a joint loss formulation i.e. a supervised cross-entropy loss for the labelled samples, and a un/self-supervised regularization term for unlabelled samples. Examples include UDA [56], S4L [58] and [17, 31, 53]. Another approach to SSL is using pseudo-labels which are generated by either training the model on the labelled samples and pruning the confident predictions on unlabelled data [6, 5, 46, 32, 25, 41], or by using a teacher-student configuration, where a slowly updating teacher model is used to generate soft-predictions on the unlabelled samples, which serve as a supervisory signal for the student model [37, 57, 49]. lava leverages the latter paradigm but improves pseudo-labels using a multi-crop augmentation strategy.

Semantics and Self-supervision for FSL. Rich semantics [16, 1, 38] and self-supervision [13, 22] have been explored to help FSL. [1] introduces an auxiliary task to produce class-level semantic descriptions and showed improvements on fine-grained tasks, while [38] learns rich features from large number of (image, text) pairs. On the other hand, recent work [50, 13] recognised the usefulness of self-supervised features and their ability to generalise, [22] adjusted the instance discrimination SimCLR [10] method to use the few support labels for positive and negative mining, while [13] explicitly adds SimCLR [10] episodes to the training pipeline. Unlike existing approaches, lava only uses class-level label semantics and employs self-supervision [9] as a pretraining step rather than incorporating it into the FSL task.

3 Method

We consider the problem of adapting a classifier pretrained on a set of source classes using labelled samples to a target dataset of classes by using labelled instances , and unlabelled instances , with , denoting an unlabelled, and labelled image respectively, and is the class label. Note that such setup suits both SSL and FSL settings. However, in FSL, and are strictly disjoint and the few-shot transfer utilises a fully labelled support set.

lava employs a teacher-student setup with a teacher identical in architecture to an online student (see Fig. 1). The student is trained to match the “soft” label generated by the teacher when each receives different views of a given image. The student and teacher networks, parametrized by and respectively, are updated alternatively: given a fixed teacher, the student is first updated by gradient descent to minimize the network loss; subsequently, the teacher parameters are updated as an exponential moving average (EMA) of the student’s, i.e. , where is the momentum parameter.

3.1 Generalising beyond domains and classes

Our first aim is to use the source dataset to pretrain our teacher and student with good initial representations to support out-of-distribution transfer while avoiding the collapse problems mentioned in Sec. 1.

Self-supervised Pretraining. We employ the recently proposed DINO [9] method (without modification) to learn self-supervised initialisations from after discarding the labels. DINO, like other self-supervised methods [19, 14, 10, 20, 3, 27], learn visual features which are invariant to common factors of variation (e.g. colour distortion, pose, scale) while not being tied to a specific set of classes or visual domains. Therefore, they encode richer information which better supports generalisation. At transfer time, we use the target instances without their labels to further fine-tune the DINO representations to the target dataset. We provide in the appendix a detailed procedure of fine-tuning DINO features to target instances in low-resource cases. We note that our method is not specifically tied to DINO. However, we chose it due to its demonstrated performance and its similar teacher-student setup making it seamless to integrate with our method. We ablate such choice in our experiments.

Semantic Grounding. To combat the class collapse problem and to aid generalisation to unseen classes, we employ language semantics as an independent modality to ground visual features. During the source pretraining, we additionally learn a semantic MLP module (* denotes s and t for student and teacher respectively) mapping the projection for a given labelled image to an embedding . We apply a hinge loss which minimizes the discrepancy between the semantic projection 444We use the student semantic projection to apply the loss, while is only used during inference. and the corresponding embedding obtained by applying a pretrained language model on the class label, as per:

| (1) |

where denotes cosine similarity, is a scalar hinge margin, and and are the language embeddings of the true and a randomly-sampled false class respectively. In effect, lava learns how to map the visual representations of the source instances to the language model representation space so that each instance is mapped closer to its true class language embedding and further away (up to a margin) from all other class embeddings. At transfer time, is fine-tuned (together with the backbones) without re-initialisation. This is in contrast to the classifier head which must be re-initialised to match the target classes.

Unlike the discrete nature of one-hot class labels, using language embeddings to ground visual representations acts as a continuous space to represent class labels. In such space, “bench” is close to “park bench” and “zebra” is closer to “horse” than it is to “car”. This is intuitively useful to learn visual-semantic relations which enhance generalisation to novel concepts. However, it also implicitly assumes that linguistic similarity is always a good proxy for visual similarity, which is sometimes not true e.g. “wine glass” and “wine bottle” while linguistically similar, are usually visually distinct. Hence, we explored few alternatives for the source of semantics including knowledge graph embeddings [32], “Glove” [36] and other variants of language models (see appendix for details). We find that language models trained on paraphrasing tasks [47] provide the best performance in our setup. We conjecture that this is because it helps the model to unify similar visual concepts which appear under different names across datasets (e.g. “airplane” vs “plane”, “horse cart” vs “carriage”, etc.).

3.2 Multi-crop pseudo-labelling

When transferring to a partially labelled dataset, lava leverages unlabelled samples by using the teacher to iteratively produce pseudo-labels to expand the labelled samples used to train the student. We differ from previous similar SSL approaches (e.g. MeanTeacher [49]) primarily in the way we generate the pseudo-labels: we encourage more robust pseudo-labels via multi-crop augmentation. We generate pseudo-labels based on a set of multiple sized crops of a given unlabelled image ; similarly, student predictions are generated based on another set 555Spatial augmentations (e.g. color jittering, random flips) are also applied on all the crops.. The pseudo-label is then aggregated over the combined views.

Formally, lava uses the backbone to map an unlabelled image to , followed by an MLP mapping to a projection . Finally, using a linear layer followed by a temperature sharpened softmax, is normalised into a probability distribution . Then, we apply our loss as per: , where represents the aggregate loss over targets and predictions .

Design choices. Aggregating multi-crop losses involves a few design alternatives such as: using a single pseudo-label (e.g. via voting) or averaging across the different crops; using hard pseudo-labels (e.g. using argmax or sampling) or soft pseudo-labels; and finally, the count, scale, and size of the crops are important hyperparameters as they respectively impact the diversity of pseudo-labels, the locality of the crops, and the memory consumption during training. Our empirical study found that using soft sharpened pseudo-labels and averaging over pairs of crops yields the best performance across different domains (refer to appendix for more details). More concretely, we eventually opted for: where (, ) is a pair of crops of passed to the student and teacher respectively; and is the set of all crop pairs.

| Real | Clipart | Sketch | Quickdraw | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2-shot | 4-shot | 8-shot | 2-shot | 4-shot | 8-shot | 2-shot | 4-shot | 8-shot | 2-shot | 4-shot | 8-shot | |||||

| FixMatch [46] | 23.06 | 34.68 | 42.14 | 30.21 | 41.21 | 51.29 | 12.73 | 21.65 | 33.07 | 24.51 | 32.98 | 43.91 | ||||

| SemCo [32] | 24.38 | 40.03 | 51.13 | 28.39 | 46.96 | 55.48 | 15.71 | 28.62 | 41.06 | 26.17 | 34.17 | 44.12 | ||||

| MeanTeacher++ [49] | 51.44 | 66.16 | 68.77 | 46.02 | 52.43 | 63.09 | 25.8 | 38.79 | 51.16 | 29.78 | 39.12 | 47.11 | ||||

| lava (supervised init.) | 57.47 | 69.51 | 75.41 | 38.45 | 53.05 | 64.74 | 36.15 | 45.15 | 52.15 | 32.61 | 41.67 | 48.44 | ||||

| lava (no semantic loss) | 58.57 | 67.88 | 72.12 | 48.57 | 58.75 | 65.18 | 38.76 | 47.55 | 53.91 | 35.95 | 44.01 | 54.91 | ||||

| lava | 58.79 | 68.04 | 72.19 | 48.65 | 59.05 | 65.08 | 39.12 | 47.63 | 54.39 | 36.66 | 44.12 | 54.75 | ||||

4 Experiments

We evaluate lava against state-of-the-art (SOTA) baselines in three regimes: 1) SSL transfer with domain shift on DomainNet [35]; 2) SSL without domain shift on ImageNet [42], and 3) multi-domain FSL on Meta-dataset [52].

Training. Unless otherwise stated, we use a batch size of 256 with a learning rate of and Adam [23] optimizer with a cosine scheduler. For our multi-crop pseudo-labelling, we use small scale crops and large scale crops (different for teacher than student) following same scales in [9], and a teacher momentum . We use mpnet-base-v2 [47] language model666github.com/UKPLab/sentence-transformers to obtain the label embeddings for our semantic loss. We report accuracy based on the softmax classifier (see Fig. 1), but when relevant, we compare it with the K-Nearest Neighbour (KNN with K=20) accuracy based on the representation and/or the semantic accuracy obtained by applying a cosine classifier on the semantic projection .

SSL on DomainNet. This dataset includes images from 6 visual domains spanning 345 object classes. We examine lava’s ability to transfer from ImageNet to 4 domains with decreasing similarity: real, clipart, sketch, and quickdraw. To ensure fixed settings across all baselines (e.g. labelled splits, backbone, learning rate schedule, etc.), we follow recommendations in [33] and re-implement the three closest baselines in our codebase. FixMatch [46] uses consistency regularization with confidence-based pseudo-labelling, SemCo [32] builds on FixMatch but leverages label semantics (via a knowledge graph) to account for pre-known similarities among classes, and MeanTeacher [49] uses momentum teachers for SSL. We extend it to MeanTeacher++ where we employ the same spatial augmentations we use (instead of the original gaussian noise) and we use the same backbone as ours (ViT-S/16 [51]). For all experiments, we initialise models with pretrained ImageNet weights and follow the SSL standard approach: we use a fraction of the labelled data (expressed in images/class) together with all the unlabelled data to adapt to target. We fix the training to 70 epochs 777The 70 epochs are split into 50 epochs of DINO target pretraining and 20 epochs for training lava (w.r.t unlabelled data) for lava and we use early stopping for all the baseline methods using a validation set. For each of the 4 domains, we examine low-, and moderate-shot transfer scenarios using 2, 4 and 8 images/class. We explore both self-supervised initialisation (i.e. DINO) and supervised initialisation [51] for all the baselines. We report the best results among the two for the baselines and both results for lava in Tab. 1. Finally, to examine the contribution of our semantic loss to SSL, we report results for lava when switching it off.

We observe lava outperforms baselines consistently, sometimes, with large margins. Interestingly, FixMatch and SemCo obtains their best results using supervised source pretraining rather than self-supervised (see self-supervised results in appendix). One possible explanation is that this is due to the very different method of augmentations used in DINO pretraining compared to FixMatch and SemCo. As conjectured, we see that the impact of self-supervised initialisation for lava becomes more significant when the visual domain is different from that of ImageNet. For example, we observe an impressive 10% boost in the clipart 2-shot setting from 38.4% to 48.6%, proving that self-supervised features helps generalisation beyond domains. Among the baselines, MeanTeacher++ is the closest to lava; the main two differences are our multi-crop pseudo-labelling strategy and the semantic loss. We witness a significant boost of lava over MeanTeacher especially with fewer labelled samples. This confirms the usefulness of our multi-crop pseudo-labelling strategy in low-data regimes. Finally, we get a marginal boost when using semantic loss in SSL across almost all experiments.

SSL on ImageNet. To examine SSL transfer under the same domain, we follow ImageNet evaluation protocol by using 1% and 10% of the labels to train lava. Due to the demanding computational requirements for running experiments on ImageNet, we opted to only re-run SOTA method PAWS [2] with the same ViT-S backbone as ours. PAWS combines self-supervised learning with a non-parametric method of generating pseudo-labels based on a small labelled set. For all the other baselines, unless ViT-S results are reported in the original work, we only report the Resnet50 results. We note however, that the ViT-S model has less parameters (21M) compared to Resnet50 (24M), yet recent work [51, 9] showed an approximate 1-2% improvement in favour of ViT-S. Again, we see (Tab. 2) a significant boost for lava against other methods. Interestingly, as opposed to DINO, lava achieves large gains. Note, however, that DINO reports Linear evaluation results on frozen features and does not fine-tune end-to-end, so (64.5% and 72.2%) are not directly comparable to the (69.3% and 76.4%) of lava. However, K-NN results can be directly compared to measure the differential between lava and DINO.

| Method | Arch. | Epochs | 1% | 10% |

| Different Architecture: | ||||

| FixMatch [46] | RN50 | 300 | – | 71.5 |

| MPL [37] | RN50 | 800 | – | 73.9 |

| SwAV [8] | RN50 | 800 | 53.9 | 70.2 |

| SimCLRv2++ [11] | RN50 | 1200 | 60.0 | 70.5 |

| Same Architecture: | ||||

| DINO-NN [9] | ViT-S | 300 | 61.3 | 69.1 |

| DINO [9] | ViT-S | 350 | 64.5 | 72.2 |

| PAWS-nn [2] | ViT-S | 300 | 63.5 | 72.3 |

| PAWS [2] | VIT-S | 300 | 68.9 | 75.2 |

| lava-nn | ViT-S | 300 | 67.2 | 73.3 |

| lava | ViT-S | 350 | 69.3 | 76.4 |

| K-NN | MatchNet | ProtoNet | Finetune | fo-Proto | BOHB | ProtoNet-L | ALFA-fo-Proto | CTX | lava | |

|---|---|---|---|---|---|---|---|---|---|---|

| [52] | [52] | [52] | [52] | MAML [52] | [44] | [52] | MAML[4] | [13] | (ours) | |

| ImageNet | 41.031.01 | 45.001.10 | 50.501.08 | 45.781.10 | 49.531.05 | 51.921.05 | 53.691.07 | 52.801.11 | 62.760.99 | 68.750.54 |

| Omniglot | 37.071.15 | 52.271.28 | 59.981.35 | 60.851.58 | 63.371.33 | 67.571.21 | 68.501.27 | 61.871.51 | 82.211.00 | 77.920.50 |

| Aircraft | 46.810.89 | 48.970.93 | 53.101.00 | 68.691.26 | 55.950.99 | 54.120.90 | 58.040.96 | 63.431.10 | 79.490.89 | 81.140.49 |

| Birds | 50.131.00 | 62.210.95 | 68.791.01 | 57.311.26 | 68.660.96 | 70.690.90 | 74.070.92 | 69.751.05 | 80.630.88 | 84.880.77 |

| Textures | 66.360.75 | 64.150.85 | 66.560.83 | 69.050.90 | 66.490.83 | 68.340.76 | 68.760.77 | 70.780.88 | 75.570.64 | 82.050.50 |

| Quick-Draw | 32.061.08 | 42.871.09 | 48.961.08 | 42.601.17 | 51.521.00 | 50.331.04 | 53.301.06 | 59.171.16 | 72.680.82 | 68.440.57 |

| Fungi | 36.161.02 | 33.971.00 | 39.711.11 | 38.201.02 | 39.961.14 | 41.381.12 | 40.731.15 | 41.491.17 | 51.581.11 | 55.020.67 |

| Flowers | 83.100.68 | 80.130.71 | 85.270.77 | 85.510.68 | 87.150.69 | 87.340.59 | 86.960.73 | 85.960.77 | 95.340.37 | 95.430.66 |

| Traffic-Sign | 44.591.19 | 47.801.14 | 47.121.10 | 66.791.31 | 48.831.09 | 51.801.04 | 58.111.05 | 60.781.29 | 82.650.76 | 69.241.06 |

| MSCOCO | 30.380.99 | 34.991.00 | 48.030.99 | 41.001.10 | 34.860.97 | 43.741.12 | 41.701.08 | 48.111.14 | 59.901.02 | 63.750.45 |

FSL on Meta-dataset.. We use the “ImageNet-only” protocol to evaluate multi-domain FSL on Meta-dataset [52]. Specifically, we use images of 712 out of 1000 classes of ImageNet as our source dataset, choose our hyperparameters by validating on 158 classes and evaluate using episodes coming from the remaining 130 classes of ImageNet as well as other 9 datasets. Meta-dataset measures cross-domain FSL by evaluating on datasets including fine-grained tasks (such as Birds, Aircrafts, Fungi, Flowers, Textures, Traffic Signs), characters and symbols (Omniglot), and real and quickly drawn objects (ImageNet, MSCoCO and Quickdraw). During source pretraining, we use all the instances coming from the 712 train classes without their labels for source pretraining888Unlike meta-learning methods, we do not use episodes during the training.. And, we use the same instances with their labels to train the Semantic MLP (). During transfer, we freeze the backbone and finetune lava using the support set for 300 epochs. We use the standard Meta-dataset setting where each episode contains varying number of ways (i.e. classes) and imbalanced shots (i.e. images per class). As per the common practice, we report results averaged over 600 episodes for each dataset.

As seen in Tab. 3, with such a simple strategy and without resorting to any meta-learning techniques, lava outperforms the closest baseline [13] on 7 out of 10 datasets and for the other 3, it exceeds the second best with a large margin. It is important to note here that it is not straight forward to directly compare between the FSL baselines: primarily, because different methods employ different styles of training (e.g. meta-training [45, 15, 13] vs fine-tuning), different initialisation (self-supervised [13] vs supervised [15, 45]) and different backbones (Resnet18 [52, 44] vs Resnet34 [13]). However, we think of our method as orthogonal to other methods: since we are using language semantics, which is not available for other methods, we are cautious about directly comparing with them and hence we report the results for reference and not comparison. A possible future direction is to explore how semantics (or other possible ways of grounding class relationships) help other strong methods such as “Cross Transformers”.

5 Analysis and Ablations

We gain key insights by analysing the following: 1) the multi-crop strategy dynamics and its usefulness to produce higher quality pseudo-labels; 2) the importance of self-supervised learning for out-of-domain generalisation; 3) the role of language semantics towards class generalisation; and finally 4) the effect of key hyperparameters.

Multi-crop Pseudo-labelling. To study lava’s dynamics, we conduct an “oracle” experiment where we use the ground truth labels (originally hidden to emulate an SSL setup) to calculate the true pseudo-labels accuracy of the multiple crops seen by the student and the teacher networks as the training proceeds. We calculate accuracies based on the argmax of the soft predictions and for the student and teacher respectively. Fig. 4 provides few interesting observations. First, based on the large crops, the teacher model exhibits a consistently better performance compared to the student after an initial ramp up phase (due to the EMA). This demonstrates the usefulness of the teacher-student setup, whereby the student model is always guided by a slightly better teacher. Second, as expected, we observe that the small crops (only seen by the student) have lower accuracy, on average, compared to large crops; but interestingly, the disagreement among the predictions associated with the small crops decrease as training proceeds, suggesting that the model is learning from the different small views of an image a consistent representation which truly captures its main object. Next, we repeat the same process for our closest baseline (MeanTeacher++) to examine the difference in pseudo-labelling quality obtained by each of the methods. Note that with the modifications we introduce to MeanTeacher++, the difference between the two methods in such setting is only the multi-crop pseudo-labelling. We observe in Tab. 4 that indeed, the multi-crop strategy brings a significant benefit across three different domains especially when less labelled data is available. Additionally, we captured a fine-grained view of the pseudo-labels to examine what are the images that most differ in pseudo-labels among the two methods. As expected (see Fig. 3 - bottom), those are the images which contain multiple semantic objects. We provide further examples in the appendix.

| Real | Clipart | Quickdraw | |||||||

| 2-shot | 8-shot | 2-shot | 8-shot | 2-shot | 8-shot | ||||

| Fully-supervised | 73.74 | 76.55 | 71.87 | 72.75 | 61.22 | 67.23 | |||

| Initialisation: | |||||||||

| Sup. (ImNet)∗ | 54.92 | 64.81 | 22.49 | 35.39 | 10.68 | 18.78 | |||

| DINO (ImNet) | 46.17 | 59.42 | 15.19 | 26.97 | 8.98 | 16.81 | |||

| DINO (Target)∗∗ | 50.45 | 62.54 | 40.35 | 55.03 | 30.05 | 43.64 | |||

| Fine-tuning from *: | |||||||||

| Linear Probing | 49.03 | 64.89 | 21.5 | 36 | 6.08 | 13.9 | |||

| MeanTeacher++ | 54.26 | 70.60 | 34.58 | 60.22 | 16.38 | 35.75 | |||

| lava | 57.47 | 75.41 | 38.45 | 64.74 | 32.61 | 48.44 | |||

| Fine-tuning from **: | |||||||||

| Linear Probing | 49.68 | 64.42 | 38.86 | 56.6 | 28.73 | 47.5 | |||

| MeanTeacher++ | 51.44 | 68.77 | 46.02 | 63.09 | 29.78 | 47.11 | |||

| lava | 58.79 | 72.19 | 48.65 | 65.08 | 36.66 | 54.91 | |||

Initialisation Study. Here, we are interested to examine the effect of pretraining when training lava using few labels across different visual domains. In Tab. 4, we report results using different initialisations and different fine-tuning settings across real, clipart, and quickdraw domains. In the top section, we display KNN accuracy based on the model representation when initialised with 1) Supervised ImageNet features [51]; 2) DINO ImageNet features [9]; and 3) DINO features when trained on the target dataset without labels (the standard lava initialisation). Note that for those results, the labels are only used to obtain the KNN accuracy on the validation set of the respective target dataset but never for fine-tuning so they are only meant to compare the quality of “off-the-shelf” pretrained features. First, we observe that using only ImageNet data, supervised pretraining is more useful than self-supervised across the three domains and shots with a degrading performance as the domain deviates from ImageNet. Note that 23% of DomainNet classes also exists in ImageNet, explaining why class-specific features might be helpful in such case. However, once we have access to the target instances (without their labels), we observe that self-supervised target training (i.e. lava initialisation) dramatically improves the representations to become more suited to the target domain without any labeling expense. Even when the target domain is very close to ImageNet (e.g. real), we see a 4% gain in the 2-shot regime (46.17 to 50.45). This boost is even more pronounced in highly dis-similar domains, e.g. 25% and 21% boosts for clipart and quickdraw with only 2 shots per class.

On the other hand, in the middle and bottom sections of Tab. 4, we report transfer results based on the supervised initialisation and the lava initialisation. We also report results using two other transfer methods: 1) Linear Probing on top of frozen representations [9]; and 2) MeanTeacher++ described in Sec. 4. Finally, as an upper bound, we report the fully-supervised results obtained when using the entire target dataset (with labels) to train ViT in a supervised manner, then using the few shots to obtain the KNN accuracy reported. Here, we observe that lava benefits from self-supervised DINO initialisation in all domains, but the gain is more clear when the target domain is different than ImageNet and the available labels are less. For example, we witness an impressive 10%, and 4% boosts in the 2-shot scenario for clipart and quickdraw, respectively. Additionally, to quantify what lava brings on top of the DINO initialisation, we compare lava with MeanTeacher++ and observe that lava outperforms it in all cases thanks to our multi-crop pseudo-labelling strategy. Finally, we notice that lava is almost closing the gap to fully-supervised training using all the target labels: by only using 8-shots, lava achieves 75.4% on the real domain compared to the 76.5% obtained when all the labels are used for training.

Language Semantics. Now, we examine the role of label semantics towards generalisation to unseen classes and we investigate if lava indeed mitigates the “class collapse” problem. To study the impact of label semantics, we consider the 100 FSL episodes of MSCOCO in the Meta-dataset experiment. Recall that we first pretrain on instances of 712 classes of ImageNet then transferred to a different set of classes in MSCOCO . In Fig. 5-left, we display a subset of MSCOCO together with their nearest neighbors among , when they are projected into the language model semantic space. This space is pretrained using language and so it captures the linguistic semantic similarity between different sentences/words. On the right plot, we display the average precision per class based on the softmax classification head as well as the semantic projection head999For a given image, semantic predictions are obtained by finding the class whose language embedding is nearest to .. We observe that for any given MSCOCO class, when the most semantically-similar class in ImageNet is also visually similar (e.g. “bus” and “school_bus”), the language head has significantly higher precision per class. In contrast, in cases when the nearest neighbour is not visually similar (e.g. “wine glass” and “wine bottle”), both heads have comparable performance. This suggests the benefit of the learnt semantic mapping module : during test time and without any further training, when the model receives an image sharing similar visual features associated with one of the source classes , the semantic head maps it to the most closely related linguistic concept in .

Collapse Analysis. We follow a similar setup as [13] to investigate lava’s ability to avoid transfer collapse (see Sec. 1): we begin by uniformly sampling 100 images per class from ImageNet as well as 1000 query images from clipart dataset. Subsequently, we compute the representation for all of the sampled images. Besides lava, we also compute representations obtained by a supervised learner; and a DINO-initialised learner. In Fig. 2, we report examples of query images with their 4 nearest neighbours among all the sampled images. The representation of a given query image is said to be collapsed if its nearest neighbours mostly belong to source classes. To quantify the collapse in the three methods, we calculate the percentage of the 10 nearest neighbours which are instances of source classes over the 1000 query images. We find that this figure is 25% for the supervised learner, 21.7% for DINO, and 17% for lava. We provide further details in the appendix.

Hyperparameters sensitivity. During preliminary experiments, we identified few important design choices to tune: number of large scale and small scale crops, the pseudo-labelling aggregation loss function, the teacher momentum update rate (), and the source of label semantics embeddings.We used a held-out validation set on each of real and clipart domains to tune the hyperparameters (except the source of label semantics for which we used MSCOCO validation in the FSL regime), then we obtained a single set of parameters which we used across all experiments in this paper. We refer the reader to the appendix for a complete list of hyperparameters of lava in addition to a study to demonstrate their effect on lava’s performance.

6 Conclusion

We introduced a unified strategy for multi-domain visual transfer with limited target data. lava employs label semantics and self-supervised pretraining to learn initial representations which support generalisation; and uses multi-crop augmentation to maximise the gains from unlabeled data via pseudo-labeling. We demonstrated lava’s success in image classification over multiple benchmarks. We believe our approach is generic and can be extended to other visual learning tasks such as object detection and action recognition. We leave these explorations to future work.

Acknowledgement

This work was partly supported by DARPA’s Learning with Less Labels (LwLL) program under agreement FA8750-19-2-0501 and by the Australian Government Research Training Program (RTP) Scholarship.

Appendix101010All section, table, and figure references are following the original paper.

A Training Details

In this section, we provide more details about our implementation and a full list of the hyperparameters for reproducibility.

A.1 Architecture

We build our system on top of (DINO)’s [9] github implementation. We use the small Vision Transformer model variant (ViT-S/16) in all our experiments (unless otherwise stated) as it provides the best trade-off between throughput and accuracy and has comparable number of parameters to the baselines with which we compare our method. However, our implementation also supports the larger model variants which are evaluated in [9] (e.g. ViT-S/8 , ViT-B/16, and ViT-B/8).

On top of the ViT-S backbone [CLS] token output representation , we attach our projection MLP (see Fig. 1) comprising 3 linear layers with 2048 hidden dimension each followed by a GELU activation except for the last layer which uses an output bottleneck dimension of 256 and a linear activation. Subsequently, we connect our two heads to the output of the MLP : 1) the language MLP head comprises 2 weight-normalized linear layers with GELU activation and a hidden dimension 2048 and output dimension 768; 2) a linear classifier comprising a single weight-normalized linear layer followed by a temperature sharpened softmax (the student network temperature differs from the teacher network temperature). Please refer to Tab. 6 for a list of hyperparameters.

A.2 LAVA Training Summary

lava training comprises a pretraining stage on a source dataset and an adaptation/transfer stage to a target dataset. During source pretraining, lava first learns self-supervised representations by minimizing DINO [9] loss . Then it uses the labels to learn a mapping (i.e. language MLP) between the frozen self-supervised representations and the label language embeddings using our hinge loss (Eqn. 2). During target training, lava uses a modified variant of the DINO self-supervised objective (described in detail in next section) to adapt its source representations to the target domain without any use of target labels. Subsequently, lava employs a hybrid supervised/unsupervised loss to further train on the target labelled/unlabelled instances: the hinge loss on the labelled instances and our novel multi-crop pseudo-label loss on the unlabelled ones. Importantly, the language MLP is transferred directly from source to target without the need to be reinitialised (as opposed to a linear classifier head in vanilla transfer settings). That is because it predicts a fixed size embedding in a dataset-independent semantic space (the pretrained language model space). Concisely, lava loss can be summarised as per: with modulation coefficients to control the contribution of each term to the different stages of the training pipeline.

A.3 DINO Target Adaptation

After source DINO pretraining, we adapt to target dataset by fine-tuning the source representations on target unlabelled instances using the same DINO objective. In all our experiments, we found that 50 epochs are sufficient to adapt to the target dataset. Empirically, we found that there are two crucial factors to enable the success of this procedure: first, it is important to load the source pretrained weights of the MLP and the DINO head in conjunction with the backbone weights and not just the backbone as commonly done when fine-tuning. The second factor that highly impacts the adaptation procedure is the teacher EMA momentum () which controls the speed with which the teacher model weights follow the student. We found that allowing the teacher network to update its parameters faster than it did during the source pretraining helps to better adapt to the target dataset. More specifically, instead of using the default value of used in [9], we use during adaptation. In Tab. 5, we present a comparison between the different options for teacher momentum while adapting to various datasets. We report the KNN accuracy on 3 datasets of DomainNet before adaptation (i.e. using ImageNet pretrained model) and after adaptation with two different values for the teacher momentum. We observe that adaptation with lower value of significantly improves the learnt representations.

| clipart | quickdraw | painting | |

|---|---|---|---|

| KNN before adaptation | 51.09 | 35.28 | 56.49 |

| KNN after adaptation () | 65.11 | 58.31 | 59.68 |

| KNN after adaptation () | 69.08 | 62.29 | 61.58 |

| Hyperparameter | Value |

|---|---|

| batch size | 256 |

| learning rate | 0.0005 |

| optimizer | adam |

| minimum learning rate | 0.000001 |

| warmup learning rate | True |

| scheduler | cosine decay |

| weight decay start/end | 0.04/0.4 |

| num small crops | 8 |

| num large crops | 2 |

| global crops scale | (0.4, 1.0) |

| local crops scale | (0.05, 0.4) |

| spatial augmentations | random flip, color jitter, |

| gaussian blur, solarization. | |

| out dim (c) | 65536 |

| student softmax temperature | 0.1 |

| teacher softmax temperature | 0.07 |

| warmup teacher temperature | True |

| teacher temperature start | 0.04 |

| teacher momentum start () | 0.996/0.95 |

| teacher momentum end | 1.0 |

| Hyperparameter | Value |

| learning rate | 0.000025 |

| num small crops student | 6 |

| num small crops teacher | 0 |

| num large crops student | 2 |

| num large crops teacher | 2 |

| student softmax temperature | 0.1 |

| teacher softmax temperature | 0.04 |

| warmup teacher temperature | False |

| teacher momentum start () | 0.99 |

| teacher momentum schedule | cosine |

| language model | mpnet-base-v2 |

| language model latent dimension (d) | 768 |

| hinge loss margin () | 0.4 |

B Coupling DINO and lava

We exploit the fact that DINO and lava share a similar self-distillation backbone and only differ in the projection heads architecture, their training procedure and the hyperparameters. Accordingly, to allow training both DINO and lava using the same codebase, we simply attached an additional head to lava’s MLP projection with an output space dimension matching that of DINO’s (they use 65536 output dimension by default). Subsequently, one can switch between DINO pretraining and lava training by adjusting the running configuration to match the respective setup. An additional benefit for such coupling is that it allows us to add DINO proposed loss as an auxiliary loss to our model objective during adaptation and explore if it introduces any benefits to lava. The intuition is that since we use DINO for lava source pretraining and target fine-tuning, it might be useful to continue applying its loss as an auxiliary loss so as to prevent damaging or “forgetting” the learnt self-supervised representations. However, we only found marginal benefits of doing so in the very limited label regimes (e.g. 1 and 2-shot experiments in SSL) but such benefits diminish once we have more labelled data.

C Ablation Study

Here, we are interested to examine the effect of key design choices on lava’s performance.

C.1 Semantics for FSL

Loss Ablations. In Tab. 1, we demonstrated a marginal benefit for our proposed semantic loss under the SSL setting and we concluded that the semantic loss is tailored specifically to address generalisation to new classes. To evaluate such claim, we conduct another experiment in the FSL setting on MSCOCO dataset: starting from lava’s base learner, described in Sec. 4, we run 100 test episodes of MSCOCO (using the same random seed for the episode generation) while ablating over three different losses: standard classification loss using one-hot labels as targets, our proposed semantic loss using language semantics as targets, and our proposed multi-crop pseudo-labelling loss. In Tab. 8, we report the ablation results of both SSL and FSL regimes side-by-side for comparison. First, we observe that pseudo-label loss helps in both cases but its role is more evident in SSL as expected. In contrast, we observe that the semantic loss plays an important role in FSL: when combined with pseudo-label loss, it achieves a 10% boost in accuracy when compared with the standard classification loss (67.68% vs 57.25%) while the difference between the same cases in SSL is marginal (48.89% vs 48.57%). This confirms the usefulness of rich semantics to generalise to unseen classes as conjectured earlier. Finally, we observe that using semantic and pseudo-labeling losses (lava standard setting), we obtain the best performance for both SSL and FSL cases.

| classification | semantic | pseudo-label | MSCOCO (FSL) | Clipart (SSL) | |||

| 66.41 | 43.39 | ||||||

| 54.55 | 42.74 | ||||||

| 67.68 | 48.89 | ||||||

| 57.25 | 48.57 | ||||||

| 66.41 | 48.65 |

Source of semantics.

Here, we examine different alternatives to obtain the class label embeddings (). The first choice as in the seminal work of [16] is to simply use the word embeddings of the class labels (such as Word2Vec [30] or Glove [36]) to ground the semantic relations between classes. Word embeddings are learnt in an unsupervised manner to capture contextual relations between words based on their co-occurence statistics in a large corpus of text. This results in vectors which capture contextual similarity but not necessarily visual similarity. Alternatively, Nassar et al. [32] suggested to use knowledge graphs [48] to adjust word embeddings in a way which also correlates with visual similarity. While the two methods work reasonably well, they both suffer from a coverage issue because they use a predefined set of vocabulary and so it is common that some of the target class labels do not exist in their vocabulary. Accordingly, we suggest in our work to use a pretrained language model using sub-word tokenization such as BERT [12] and its variants. Such models alleviate out-of-vocabulary problems by operating on parts of words (i.e. subwords) instead of words. Hence, to examine these different alternatives, we compare lava’s performance when using each of them. We fix the learning task to the MSCOCO FSL task where we run 100 FSL test episodes with different choices of embeddings: 1) Glove word embeddings [36], 2) Knowledge graph embeddings [32], 3) A multilingual BERT-based sentence encoder111111distiluse-base-multilingual-cased-v1 in

https://www.sbert.net/docs/pretrained_models.html, and 4) a praphrase language model [47]. We respectively obtain the following top-1 average performance on the 100 FSL MSCOCO episodes (using the same random seed for the episode generation): 63.52%, 64.12%, 66.67%, and 67.68%. Accordingly, we select the paraphrase model as the default option for lava.

C.2 Design alternatives for Multi-crop pseudo-labelling

As discussed in Sec. 3.2, we explored different design choices to aggregate the multi-crop losses. We present a comparison between the different design choices in Tab. 9. Specifically, can be calculated as pair-wise average soft pseudo-label loss as in our method (in the main text), or as the pair-wise average hard pseudo-label loss, i.e. by replacing with . Alternatively, a single average soft pseudo-label can be obtained based on all the teacher crops then used as a soft target against all the student crops predictions, i.e.

| (2) | ||||

| (3) |

or a single average hard pseudo-label, i.e. by replacing with in Eqn. 2, or finally a single majority hard pseudo-label by applying a majority vote on the hard predictions of all the teacher crops, i.e. using instead of Eqn. 3.

| real 2-shot | clipart 2-shot | real 2-shot | clipart 2-shot | real 2-shot | clipart 2-shot | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| Aggregation strategy | Small crops count | Momentum | ||||||||

| pair-wise average soft | 58.79 | 48.65 | 0 | 54.26 | 46.97 | 0 | 27.84 | 38.44 | ||

| pair-wise average hard | 57.22 | 46.25 | 4 | 56.12 | 47.9 | 0.9 | 51.02 | 46.02 | ||

| single average soft | 55.89 | 45.98 | 6 | 58.57 | 48.57 | 0.95 | 55.38 | 46.86 | ||

| single average hard | 54.12 | 45.12 | 8 | 57.77 | 48.68 | 0.99 | 58.67 | 48.57 | ||

| single majority hard | 55.95 | 46.43 | 10 | 57.97 | 48.42 | 0.999 | 57.90 | 30.81 |

D Details of Analysis Experiments and Additional Examples

In this section we provide more details about the experimental setup for the analysis experiments in Sec. 5, as well as additional qualitative examples to provide more intuition.

D.1 Multi-crop pseudo-labelling analysis

Here, we elaborate on the experimental setup for the experiment presented in Sec. 5 - Fig. 4. We train lava using the clipart 2-shot SSL setting and while training, we capture the teacher and student predictions and for each of the large and small scale crops for every training iteration. Upon convergence (20 epochs of training), we apply argmax on all the captured values to obtain the most dominant “pseudo-label” as viewed by the student/teacher based on each of the crops. Subsequently, we use the ground truth labels of the SSL unlabelled instances to calculate the true top-1 accuracy associated with each of the crops and we average it over each epoch. Additionally, we calculate the disagreement rate among the small/large crops as the ratio between the number of unique pseudo-label classes obtained for small/large crops to the total number of small/large crops used. For example using 6 small crops, if the pseudo-labels obtained were (dog, dog, dog, cat, squirrel, mouse) then the disagreement rate is . In Fig. 4, we report the accuracy based on one of the large crops seen by the student and teacher (denoted as student and teacher in the legend) together with two small crops seen by the student (denoted as small1 and small2). Even though, we use 6 small crops and 2 large crops during training, we only display the above mentioned subset to avoid clutter. Moreover, we report the disagreement rate among the small crops and the large crops (denoted as disagree_small, disagree_large).

Finally, we rank all the training instances based on the disagreement rate among their large crops, averaged over all the training iterations, to examine which images suffer the most and the least from pseudo-labelling inconsistencies due to cropping. We display an additional subset of the top and least ranked images in Fig. 6. We observe that the instances with high disagreement rate are those which contain multiple semantic objects; which confirms our intuition about the necessity of the proposed multi-crop pseudo-labelling strategy.

D.2 Collapse Analysis

Here we provide further details about the transfer collapse described in Fig. 2. Doersch et al. [13] originally suggested that collapse happens as a result of the supervised pretraining used by most recent FSL methods when training their base learner. Essentially, since the learner is trained to merely classify images into one of a predefined set of classes, the learner encodes information which is useful to discriminate such training classes but discard any other information including that which is useful to generalise to new classes or domains. We further categorise supervision collapse into two types: class and domain collapse as we illustrate below. To visually examine such problem, we follow a protocol inspired by [13]: first, we train a supervised base learner (ViT) on all the instances of the 712 Meta-dataset ImageNet train classes using standard classification cross-entropy loss and following [51] training procedure. Then, we randomly select 100 instances per each of the 712 train classes together with 1000 query instances from a given target dataset (e.g. MSCOCO or clipart). Subsequently, we use the supervised base learner and lava to obtain the latent representations (i.e. ) for each of the sampled images (712 x 100 train + 1000 test query images); and we retrieve the 10 nearest neighbours (based on cosine similarity) for each of the 1000 query images with respect to such representations. Note that the 1000 images are never seen by either of the models during training and hence we hope that a general visual learner would be able to retrieve, for each query image, neighbours which are at least semantically related. If the learner retrieves a majority of neighbours which belong to one of the train classes for a given query image, it is said that its representations have collapsed to that training class. In Fig. 2 and Fig. 7, we display collapse examples from MSCOCO dataset, where we observe that e.g. a query “bench” instance has collapsed into “oxcart” and an “orange” instance has collapsed into a “robin” in the supervised learner case, while lava retrieves plausible neighbours.

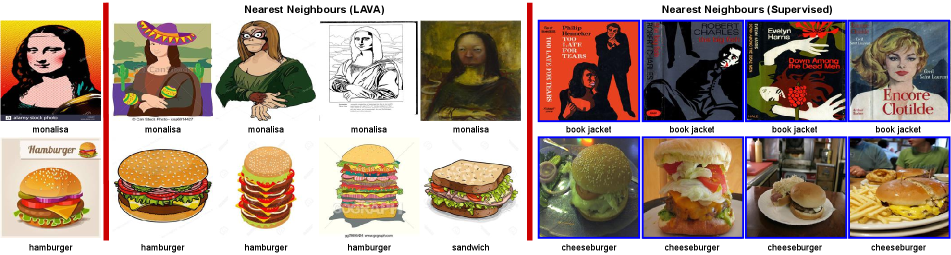

To further investigate collapse in a different visual domain, we conduct a similar experiment but using clipart as the target dataset. Interestingly, we witness two types of collapse when the domain also differs, which we denote as “class collapse” (Fig. 8) and “domain collapse” (Fig. 9). The former is similar to what was described earlier where the majority of retrieved neighbours belongs to a semantically different train class which shares superficial similarity with the query image. While the latter is when the retrieved neighbours belong to a semantically similar train class albeit in the training visual domain rather than the target domain. To elaborate, examining Fig. 8, we see “class collapse” where a “monalisa” clipart instance collapses to “book jacket” ImageNet class (top section); and a “wheel” instance collapses to “dome” (third section from top). Whereas in Fig. 9, we witness a “domain collapse” where e.g. “hamburger” clipart instances collapses to “cheese burger” which is semantically related but belongs to the “real” visual domain rather than the target “clipart” domain.

References

- [1] Mohamed Afham, Salman Khan, Muhammad Haris Khan, Muzammal Naseer, and Fahad Shahbaz Khan. Rich semantics improve few-shot learning. arXiv preprint arXiv:2104.12709, 2021.

- [2] Mahmoud Assran, Mathilde Caron, Ishan Misra, Piotr Bojanowski, Armand Joulin, Nicolas Ballas, and Michael Rabbat. Semi-supervised learning of visual features by non-parametrically predicting view assignments with support samples. arXiv preprint arXiv:2104.13963, 2021.

- [3] Philip Bachman, R Devon Hjelm, and William Buchwalter. Learning representations by maximizing mutual information across views. arXiv preprint arXiv:1906.00910, 2019.

- [4] Sungyong Baik, Myungsub Choi, Janghoon Choi, Heewon Kim, and Kyoung Mu Lee. Meta-learning with adaptive hyperparameters. arXiv preprint arXiv:2011.00209, 2020.

- [5] David Berthelot, Nicholas Carlini, Ekin D Cubuk, Alex Kurakin, Kihyuk Sohn, Han Zhang, and Colin Raffel. Remixmatch: Semi-supervised learning with distribution alignment and augmentation anchoring. arXiv preprint arXiv:1911.09785, 2019.

- [6] David Berthelot, Nicholas Carlini, Ian Goodfellow, Nicolas Papernot, Avital Oliver, and Colin Raffel. Mixmatch: A holistic approach to semi-supervised learning. arXiv preprint arXiv:1905.02249, 2019.

- [7] Luca Bertinetto, Joao F Henriques, Philip HS Torr, and Andrea Vedaldi. Meta-learning with differentiable closed-form solvers. arXiv preprint arXiv:1805.08136, 2018.

- [8] Mathilde Caron, Ishan Misra, Julien Mairal, Priya Goyal, Piotr Bojanowski, and Armand Joulin. Unsupervised learning of visual features by contrasting cluster assignments. arXiv preprint arXiv:2006.09882, 2020.

- [9] Mathilde Caron, Hugo Touvron, Ishan Misra, Hervé Jégou, Julien Mairal, Piotr Bojanowski, and Armand Joulin. Emerging properties in self-supervised vision transformers. arXiv preprint arXiv:2104.14294, 2021.

- [10] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In International conference on machine learning, pages 1597–1607. PMLR, 2020.

- [11] Ting Chen, Simon Kornblith, Kevin Swersky, Mohammad Norouzi, and Geoffrey Hinton. Big self-supervised models are strong semi-supervised learners. arXiv preprint arXiv:2006.10029, 2020.

- [12] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [13] Carl Doersch, Ankush Gupta, and Andrew Zisserman. Crosstransformers: spatially-aware few-shot transfer. arXiv preprint arXiv:2007.11498, 2020.

- [14] Alexey Dosovitskiy, Philipp Fischer, Jost Tobias Springenberg, Martin Riedmiller, and Thomas Brox. Discriminative unsupervised feature learning with exemplar convolutional neural networks. IEEE transactions on pattern analysis and machine intelligence, 38(9):1734–1747, 2015.

- [15] Chelsea Finn, Pieter Abbeel, and Sergey Levine. Model-agnostic meta-learning for fast adaptation of deep networks. In International Conference on Machine Learning, pages 1126–1135. PMLR, 2017.

- [16] Andrea Frome, Greg Corrado, Jonathon Shlens, Samy Bengio, Jeffrey Dean, Marc’Aurelio Ranzato, and Tomas Mikolov. Devise: A deep visual-semantic embedding model. 2013.

- [17] Yves Grandvalet and Yoshua Bengio. Semi-supervised learning by entropy minimization. In Proceedings of the 17th International Conference on Neural Information Processing Systems, pages 529–536, 2004.

- [18] Jean-Bastien Grill, Florian Strub, Florent Altché, Corentin Tallec, Pierre H Richemond, Elena Buchatskaya, Carl Doersch, Bernardo Avila Pires, Zhaohan Daniel Guo, Mohammad Gheshlaghi Azar, et al. Bootstrap your own latent: A new approach to self-supervised learning. arXiv preprint arXiv:2006.07733, 2020.

- [19] Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9729–9738, 2020.

- [20] R Devon Hjelm, Alex Fedorov, Samuel Lavoie-Marchildon, Karan Grewal, Phil Bachman, Adam Trischler, and Yoshua Bengio. Learning deep representations by mutual information estimation and maximization. arXiv preprint arXiv:1808.06670, 2018.

- [21] Muhammad Abdullah Jamal and Guo-Jun Qi. Task agnostic meta-learning for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11719–11727, 2019.

- [22] Prannay Khosla, Piotr Teterwak, Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, and Dilip Krishnan. Supervised contrastive learning. Advances in Neural Information Processing Systems, 33:18661–18673, 2020.

- [23] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [24] Gregory Koch, Richard Zemel, Ruslan Salakhutdinov, et al. Siamese neural networks for one-shot image recognition. In ICML deep learning workshop, volume 2. Lille, 2015.

- [25] Dong-Hyun Lee et al. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Workshop on challenges in representation learning, ICML, volume 3, page 896, 2013.

- [26] Kwonjoon Lee, Subhransu Maji, Avinash Ravichandran, and Stefano Soatto. Meta-learning with differentiable convex optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10657–10665, 2019.

- [27] Chunyuan Li, Jianwei Yang, Pengchuan Zhang, Mei Gao, Bin Xiao, Xiyang Dai, Lu Yuan, and Jianfeng Gao. Efficient self-supervised vision transformers for representation learning. arXiv preprint arXiv:2106.09785, 2021.

- [28] Zhenguo Li, Fengwei Zhou, Fei Chen, and Hang Li. Meta-sgd: Learning to learn quickly for few-shot learning. arXiv preprint arXiv:1707.09835, 2017.

- [29] Lu Liu, William Hamilton, Guodong Long, Jing Jiang, and Hugo Larochelle. A universal representation transformer layer for few-shot image classification. arXiv preprint arXiv:2006.11702, 2020.

- [30] Tomas Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781, 2013.

- [31] Takeru Miyato, Shin-ichi Maeda, Masanori Koyama, and Shin Ishii. Virtual adversarial training: a regularization method for supervised and semi-supervised learning. IEEE transactions on pattern analysis and machine intelligence, 41(8):1979–1993, 2018.

- [32] Islam Nassar, Samitha Herath, Ehsan Abbasnejad, Wray Buntine, and Gholamreza Haffari. All labels are not created equal: Enhancing semi-supervision via label grouping and co-training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7241–7250, 2021.

- [33] Avital Oliver, Augustus Odena, Colin Raffel, Ekin D Cubuk, and Ian J Goodfellow. Realistic evaluation of deep semi-supervised learning algorithms. arXiv preprint arXiv:1804.09170, 2018.

- [34] Boris N Oreshkin, Pau Rodriguez, and Alexandre Lacoste. Tadam: Task dependent adaptive metric for improved few-shot learning. arXiv preprint arXiv:1805.10123, 2018.

- [35] Xingchao Peng, Qinxun Bai, Xide Xia, Zijun Huang, Kate Saenko, and Bo Wang. Moment matching for multi-source domain adaptation. In Proceedings of the IEEE International Conference on Computer Vision, pages 1406–1415, 2019.

- [36] Jeffrey Pennington, Richard Socher, and Christopher D Manning. Glove: Global vectors for word representation. In Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pages 1532–1543, 2014.

- [37] Hieu Pham, Zihang Dai, Qizhe Xie, and Quoc V Le. Meta pseudo labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11557–11568, 2021.

- [38] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. arXiv preprint arXiv:2103.00020, 2021.

- [39] Avinash Ravichandran, Rahul Bhotika, and Stefano Soatto. Few-shot learning with embedded class models and shot-free meta training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 331–339, 2019.

- [40] Mengye Ren, Eleni Triantafillou, Sachin Ravi, Jake Snell, Kevin Swersky, Joshua B Tenenbaum, Hugo Larochelle, and Richard S Zemel. Meta-learning for semi-supervised few-shot classification. arXiv preprint arXiv:1803.00676, 2018.

- [41] Ellen Riloff. Automatically generating extraction patterns from untagged text. In Proceedings of the national conference on artificial intelligence, pages 1044–1049, 1996.

- [42] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. Imagenet large scale visual recognition challenge. International journal of computer vision, 115(3):211–252, 2015.

- [43] Andrei A Rusu, Dushyant Rao, Jakub Sygnowski, Oriol Vinyals, Razvan Pascanu, Simon Osindero, and Raia Hadsell. Meta-learning with latent embedding optimization. arXiv preprint arXiv:1807.05960, 2018.

- [44] Tonmoy Saikia, Thomas Brox, and Cordelia Schmid. Optimized generic feature learning for few-shot classification across domains. arXiv preprint arXiv:2001.07926, 2020.

- [45] Jake Snell, Kevin Swersky, and Richard Zemel. Prototypical networks for few-shot learning. In Proceedings of the 31st International Conference on Neural Information Processing Systems, pages 4080–4090, 2017.

- [46] Kihyuk Sohn, David Berthelot, Chun-Liang Li, Zizhao Zhang, Nicholas Carlini, Ekin D Cubuk, Alex Kurakin, Han Zhang, and Colin Raffel. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. arXiv preprint arXiv:2001.07685, 2020.

- [47] Kaitao Song, Xu Tan, Tao Qin, Jianfeng Lu, and Tie-Yan Liu. Mpnet: Masked and permuted pre-training for language understanding. arXiv preprint arXiv:2004.09297, 2020.

- [48] Robyn Speer, Joshua Chin, and Catherine Havasi. Conceptnet 5.5: An open multilingual graph of general knowledge. arXiv preprint arXiv:1612.03975, 2016.

- [49] Antti Tarvainen and Harri Valpola. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the 31st International Conference on Neural Information Processing Systems, pages 1195–1204, 2017.

- [50] Yonglong Tian, Yue Wang, Dilip Krishnan, Joshua B Tenenbaum, and Phillip Isola. Rethinking few-shot image classification: a good embedding is all you need? In European Conference on Computer Vision, pages 266–282. Springer, 2020.

- [51] Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In International Conference on Machine Learning, pages 10347–10357. PMLR, 2021.

- [52] Eleni Triantafillou, Tyler Zhu, Vincent Dumoulin, Pascal Lamblin, Utku Evci, Kelvin Xu, Ross Goroshin, Carles Gelada, Kevin Swersky, Pierre-Antoine Manzagol, et al. Meta-dataset: A dataset of datasets for learning to learn from few examples. arXiv preprint arXiv:1903.03096, 2019.

- [53] Vikas Verma, Kenji Kawaguchi, Alex Lamb, Juho Kannala, Arno Solin, Yoshua Bengio, and David Lopez-Paz. Interpolation consistency training for semi-supervised learning. Neural Networks, 2021.

- [54] Oriol Vinyals, Charles Blundell, Timothy Lillicrap, Daan Wierstra, et al. Matching networks for one shot learning. Advances in neural information processing systems, 29:3630–3638, 2016.

- [55] Longhui Wei, Lingxi Xie, Jianzhong He, Jianlong Chang, Xiaopeng Zhang, Wengang Zhou, Houqiang Li, and Qi Tian. Can semantic labels assist self-supervised visual representation learning? arXiv preprint arXiv:2011.08621, 2020.

- [56] Qizhe Xie, Zihang Dai, Eduard Hovy, Thang Luong, and Quoc Le. Unsupervised data augmentation for consistency training. Advances in Neural Information Processing Systems, 33, 2020.

- [57] Qizhe Xie, Minh-Thang Luong, Eduard Hovy, and Quoc V Le. Self-training with noisy student improves imagenet classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10687–10698, 2020.

- [58] Xiaohua Zhai, Avital Oliver, Alexander Kolesnikov, and Lucas Beyer. S4l: Self-supervised semi-supervised learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1476–1485, 2019.