LaTeX Deep Learning application for stellar parameters determination:

I- Constraining the hyperparameters

Abstract

Machine Learning is an efficient method for analyzing and interpreting the increasing amount of astronomical data that is available. In this study, we show, a pedagogical approach that should benefit anyone willing to experiment with Deep Learning techniques in the context of stellar parameters determination. Utilizing the Convolutional Neural Network architecture, we give a step by step overview of how to select the optimal parameters for deriving the most accurate values for the stellar parameters of stars: , , , and . Synthetic spectra with random noise were used to constrain this method and to mimic the observations. We found that each stellar parameter requires a different combination of network hyperparameters and the maximum accuracy reached depends on this combination, as well as, the Signal to Noise ratio of the observations, and the architecture of the network. We also show that this technique can be applied to other spectral types in different wavelength ranges after the technique has been optimized.

1 Introduction

Machine learning (ML) applications have been used extensively in astronomy over the last decade ([6]). This is mainly due to the large amount of data that are recovered from space and ground-based observatories. There is therefore a need to analyze this data in an automated way. Statistical approaches, dimensionality reduction, wavelet decomposition, ML, and Deep Learning (DL) are all examples of the attempts that were performed in order to derive more accurate stellar parameters such as the effective temperature (), surface gravity (), projected equatorial rotational velocity (), and metallicity () using stellar spectra in different wavelength ranges ([26, 46, 47, 59, 62, 4, 32, 15, 21, 38, 19, 42, 43]). DL is a machine learning method based on deep Artificial Neural Networks (ANN) that does not usually require a specific statistical algorithm to predict a solution but it is rather learned by experience and thus require a very large dataset ([65]) for training in order to perform properly.

An overview of the automated techniques used in stellar parameters determination can be found in [32]. We will mention some of the recent studies that involve ML/DL. The increase of the computational power and the large availability of predefined optimized ML packages (in e.g. Python, C++, and R) have allowed astronomers to shift from classical techniques to ML when using large data. One of the first trials to derive the stellar parameters using neural networks was done by [5]. This work demonstrated that networks can give accurate spectral type classifications across the spectral types range B2-M7.

[14] presented an ANN architecture that learns the function which can relate the stellar parameters to the input spectra. They obtained residuals in the derivation of the metallicity below 0.1 dex for stars with Gaia magnitude mag, which accounts for a number in the order of four million stars to be observed by the Radial Velocity Spectrograph of the Gaia satellite111The limited magnitude of the RVS is around 15.5 mag ([12]).. [48] used a ML algorithm to measure the mean longitudinal magnetic field in stars from polarized spectra of high resolution. They found a considerable improvement of the results, allowing to estimate the errors associated to the measurements of stellar magnetic fields at different noise levels.

[44] developed, and applied a Convolutional Neural Network (CNN) architecture using multi-task learning to search for and characterize strong HI Ly absorption in quasar spectra. [15] applied a deep neural network architecture to analyse both SDSS-III APOGEE DR13 and synthetic stellar spectra. This work demonstrated that the stellar parameters are determined with similar precision and accuracy as the APOGEE pipeline.

[55] introduced an automated approach for the classification of stellar spectra in the optical region using CNN. They also showed that deep learning methods with a larger number of layers allow the use of finer details in the spectrum which results in improved accuracy and better generalisation with respect to traditional ML techniques.

[59] introduced a deep-learning method, SPCANet, that derived and and 13 chemical abundances for LAMOST Medium-Resolution Survey (MRS) data. These authors found abundance precision up to 0.19 dex for spectra with Signal-to-Noise ratio (SNR) down to 10. The results of SPCANet are consistent with those from other surveys such as APOGEE, GALAH and RAVE, and are also validated with the previous literature values including clusters and field stars. [26] derived the atmospheric parameters and abundances of different species for 420,165 RAVE spectra. They showed that CNN-based methods provide a powerful way to combine spectroscopic, photometric, and astrometric data without the need to apply any priors in the form of stellar evolutionary models.

More recently, [35] introduced a multi-layer CNN to forecast solar flare events probability occurrence of M and X classes. [10] introduced an AGN recognition method based on Deep Neural Network. [1] used machine learning ML methods to generate model SEDs and fit sparse observations of low-luminosity active galactic nuclei. [50, 49] used CNNs and different ANN networks to estimate emission-line parameters and line ratios present in different filters of SITELLE spectrometer. [13] used DL combined with k-Nearest Neighbour and Decision Tree Regression algorithms to compare the accuracy of the predicted photometric redshifts of newly detected sources. [41] applied the ThetaRay Artificial Intelligence algorithms to 10 803 light curves of threshold crossing events and uncovered 39 new exoplanetary candidates targets. [8] reached a classification accuracy of 88 percent while investigating the use of a CNN for automated merger classification. [16] used an assisted inversion techniques based on CNN for solar Stokes profile inversions. In the context of Classification of galactic morphologies, [17] used a ML generative adversarial networks to convert ground-based Subaru Telescope blurred images into quasi Hubble Space Telescope images. [18] presented StelNet, a Deep Neural Network trained on stellar evolutionary tracks that quickly and accurately predicts mass and age from absolute luminosity and effective temperature for stars of solar metallicity.

In this manuscript, we present both a new method to derive stellar atmospheric parameters, and we also demonstrate the effect of each of the CNN parameters (such as the choice of the optimizers, loss function, activation function, ) on the accuracy of the results. We will provide the procedure that can be followed in order to find the most appropriate configuration independently of the architecture of the CNN. This is intended as the first in a series of papers that will help the astronomical community to understand the effect on the accuracy of the prediction from most of the parameters and the architecture of the network. CNN parameters are numerous and to find the optimal ones is a very hard task. To do so, we trained the CNNs with different configurations of the parameters using purely synthetic spectra for the 3 steps of training, cross-validation (hereafter called validation), and testing. Using synthetic spectra, we have access to the true parameters during our tests. Noisy spectra are tested in order to mimic observations.

We have limited our work to a specific type of objects, A stars, because as mentioned previously the purpose is not to show how well we can derive the labeled stellar parameters but what is the effect of specific parameters on stellar spectra analysis. By applying our models to A stars, we use previous results ([19, 32]) as a reference for the expected accuracy of the derived stellar parameters. In the same way, the wavelength range and the resolving power are chosen to be representative of values used by most available instruments. Once the calibration of the hyperparameters was performed, we have tested our optimal network configurations on a set of FGK stars in Sec. 6, using the wavelength range of [42].

The training, validation, and test data are explained in Sec. 2. Section 3 discusses the data preparation previous to training. The neural network construction and the parameters selection is explained in Sec. 4. Results are summarized in Sec. 5. The application of the optimal networks to FGK stars is performed in Sec. 6. Discussion and conclusion are gathered in Sec. 7.

2 Training spectra

| Parameters | Range |

|---|---|

| (K) | [7 000,11 000] |

| (dex) | |

| (dex) | |

| () | |

| 60 000 |

Our learning, or training databases (TDB) are constructed from synthetic spectra for stars having effective temperature between 7 000 and 10 000 K, and the wavelength range of 4450 Å to 5000 Å. This range was selected because it is in the visible domain and contains metallic and Balmer lines sensitive to all stellar parameters (, , , ), especially for the spectra types selected in this work. This region is also insensitive to microturbulent velocity which was adopted to be =2 km/s based on the work of [19, 20]. Surface gravity, , is selected to be in the range of 2.0–5.0 dex. Projected rotational velocity, , is calculated between 0–300 . The Metallicity, , is in the range of -1.5 and +1.5 dex. Table 1 displays the range of all stellar parameters. These spectra are used for both the training and the validation phases. Approximately 55 000 noise free synthetic spectra were calculated using a random selection of the stellar parameters in the range of Tab. 1. These spectra are used instead of the observations (Test data without noise). Gaussian SNR, ranging between 5 and 300, were added to these test spectra in order to check the accuracy of the technique on noisy data (Test data with noise).

Details for the calculations of the synthetic spectra can be found in [19] or [32]. In summary, 1D plane–parallel model atmospheres were calculated using ATLAS9 ([34]). These models are in local thermodynamic equilibrium (LTE) and in hydrostatic and radiative equilibrium. We have used the new opacity distribution function in the calculations ([9]) as well as a mixing length parameter of 0.5 for 7000 K8500 K, and 1.25 for 7000 K ([57]).

We have used [30] SYNSPEC48 synthetic spectra code to calculate all normalized spectra. The adopted line lists are detailed in [19]. This list is mainly compiled using the data from Kurucz gfhyperall.dat222http://kurucz.harvard.edu, VALD333http://www.astro.uu.se/vald/php/vald.php, and the NIST444http://physics.nist.gov databases.

Finally, the resolving power is simulated to R=60 000. This value falls in the range between low and high resolution spectrographs. The technique that will be shown in the next sections can be used for any resolution. The construction and the size of the TDB will be discussed in Sec. 5. The use of synthetic spectra in ML to constrain the stellar parameters has shown to suffer from the so-called synthetic gap ([15, 46]). This gap refers to the differences in feature distributions between synthetic and observed data. We have decided to limit our work to synthetic data for 2 reasons, first we would like to remove the hassle of the data preparation steps (data reduction, flux calibration, flux normalization, radial velocity correction ), and second because our intention is to find the strategy and technique that should be adopted in ML for deriving stellar parameters.

We are working on a future paper that deals with the architecture of the network as well as the choice of the kernel sizes and the number of neurons. Combining the best strategy to constrain the hyperparameters (this manuscript) as well as the most optimal architecture (future studies) will allow us to use a combination of synthetic and observational data in our training database. Having well known stellar parameters, these observational data will allow us to remove/minimize the synthetic gap and better constrain the stellar parameters.

3 Data preparation

The TDB contains spectra that span the wavelength range of 4450–5000 Å. Having a wavelength step of 0.05 Å, this results in 10800 flux points per spectrum. The TDB can then be represented by a matrix M of size . A color map of a subsample of M is displayed in Fig.1. Although the synthetic spectra are normalized, some wavelength points could have fluxes larger than unity. This is due to the noise that is incorporated during the so-called data augmentation procedure which will be explained in Sec. 4.1.1.

Training a CNN using the M matrix is time consuming, especially if one should use a larger wavelength range or a higher resolution. For that reason we have applied a dimensionality reduction technique i.e., Principal Component Analysis (PCA hereafter), in order to reduce the size of the training TDB as well as the size of the validation, test, and noisy synthetic data. Although this step is optional, we recommend its use whenever the data can be represented by a small number of coefficients. The PCA can reduce the size of each spectrum from to . The choice of depends on the many parameters, the size of the database, the wavelength range and the shape of the spectra lines. As a first step, we need to find the Principal Components, and to do so, we proceed as follows:

The matrix M is averaged along the -axis and the result is stored in a vector . Then, we calculate the eigenvectors of the variance-covariance matrix C defined as

| (1) |

where the superscript ”T” stands for the transpose operator. C has a dimension of . Sorting the eigenvectors of the variance-covariance matrix in decreasing magnitude will results in the ”Principal Components”. Each spectrum of M is then projected on these Principal Components in order to find its corresponding coefficient defined as

| (2) |

The choice of the number of coefficient is regulated by the reconstructed error as detailed in [42]:

| (3) |

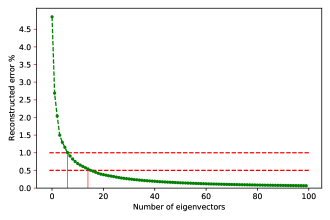

We have opted to a value for that reduces the mean reconstructed error to a value ¡0.5%. As an example, using a database of 25 000 spectra with stellar parameters ranging randomly between the values in Tab. 1 requires less than 7 coefficients to reach an accuracy ¡1%, and a value of =17 to reach a 0.5% error as shown in Fig. 2. This technique has shown its efficiency when applied to synthetic and/or real observational data with 4000 K (see [19, 42, 43], for more details).

Applying the same procedure to all our TDB and taking the maximum value to be used for all, we have adopted a constant value for =50. This value takes into account all the databases that will be dealt with in this work, especially that some will be data augmented as will be explained in Sec. 4.1.1. This means that instead of training a matrix having a dimension of , we are using one with dimension of , with . In that case, our new data consist of a matrix containing the coefficients that are calculated by projecting the spectra on the eigenvectors.

This projection procedure over the Principal Components is then applied to the validation, test, and noisy spectra datasets.

4 Deep Learning: Artificial Neural Network

This section begins with a brief description of supervised555Supervised learning refers to algorithms that calculate a predictive model using data points with known labels/outcomes. learning. Given a data set , the goal is to find a function such that is as ”close” as possible to . For example, could be the effective temperatures or surface gravity and the corresponding spectra. This ”closeness” is typically measured by defining a loss function that measures the difference between the predicted and actual values. Therefore the goal of the learning process is to find that minimizes for a given dataset . Ultimately, the success of any learning method is assessed by how well it generalizes. In other words, once the optimal is found for the training set , and given another data set , how close is to .

One of the most successful methods in tackling this kind of problems is Artificial Neural Networks (ANN), a subset of ML. As the name suggests, an ANN is a set of connected building blocks called neurons which are meant to mimic the operations of biological neurons ([2, 60]). Different kinds of ANNs can be built by varying the number of, connections between, and operations of individual neurons. The operations performed by these neurons depend on a number of parameters, called weights and some nonlinear function, called the activation. At a high level, an ANN is just the function that was described earlier. Since the network architecture is chosen at the start, finding the optimal boils down to finding the optimal weight parameters that minimize the cost function .

Regardless of the type of ANN used, the process of finding the optimal weights is more or less the same, and works as follows. After the network architecture is chosen, the weights are initialized, then a variant of gradient descent is applied to the training data. Gradient descent changes the parameters iteratively, at a certain rate proportional to their gradient, until the loss value is sufficiently small ([52]). The proportionality constant is called the learning rate. While this process is well known, there is to date no clear prescription for choice of the different components . The main difficulty arises from the fact that the loss function contains multiple minima with different generalization properties. In other words, not all minima of the loss function are equal in terms of generalization. Which minimum is reached at the end of training phase depends on the initial values chosen for the weights, the optimization algorithm used, including the learning rate and the training dataset ([63]). In the absence of clear theoretical prescriptions for the components one has to rely on experience and best practices ([7]).

One popular type of ANN is the feedforward network, where neurons are organized in layers, with the outputs of each layer fully connected to the inputs of the next. By increasing the number of layers (whence the ”deep” in ”deep learning”) many types of data can be modelled to a high degree of accuracy. Fully connected ANN, however, have some shortcomings, such as the large number of parameters, slow convergence, overfitting, and most importantly, failure to detect local patterns. Almost all the aforementioned shortcomings are solved by using convolution layers.

4.1 Convolutional Neural Network

A convolutional neural network (CNN) is a multi-layer network where at least one of the layers is a convolution layer [37]. As the name suggests, the output of a convolution layer is the result of a convolution operation, rather than matrix multiplication, as in feedforward layers, on its input. Typically, this convolution operation is performed via a set of filters. CNNs have been very successful in image recognition tasks ([61]). Most commonly, CNNs are used in conjunction with pooling layers. In this work, since the input to the CNN has been already processed with PCA to reduce the dimension of the training database, we decided to omit pooling layers in our work. Even though CNNs have been mostly used for processing image data, which can be viewed as 2-D grid data, they can also be used for 1-D data as well.

The architecture of a CNN differs among various studies. There is no perfect model, it all depends on the type and size of the input data, and on the type of the predicted parameters. In this work, we will not be constraining the architecture of the model but rather we will be providing the best strategy to constrain the parameters of the model for a specific and defined architecture.Figure 3 shows a flow-chart of a typical CNN. Table 2 represents the different layers, the output shape for each layer, and the number of parameters used in our model. In the same table, ”Conv” stands for convolutional layer, ”Flat” for flattening layer which transform the matrix of data to one dimensional, and ”Full” stands for fully connected layer. The total numbers of parameters to be trained every iteration is 764 357. The choice of such an architecture is based on aF trial and error procedure that we performed in order to find the best model that can handle all types of training databases used in this work. The strategy of selecting the number of hidden layers and the size of the convolution layers will be described in a future paper. We decided to do all our tests using the ML platform TensorFlow666https://www.tensorflow.org/ with the Keras777https://keras.io/ interface. The reason is that these two options are open-source and written in Python.

Although the calculation time is an important parameter constraining the choice of a network, we have decided not to take it into consideration while selecting the optimal Network. The reason for that is that the calculation time depends mainly on the network’s architecture which is not discussed in this paper. Two parameters are also constraining the calculation time, the number of epochs and the batch size (related to the size of the TDB). Calculation time increases with increasing epochs number and decreases with increasing batches size. The main goal of this work is to find the optimal configuration for the parameters independently of the calculation time and the Network’s architecture. As a rule of thumb, using a Database of 70 000 spectra and 50 eigenvectors, it takes around 17 hours to run the CNN over 2000 epochs using 64 batches and a Dropout of 30%. These calculations are done on a Intel Core i7-8750H CPU 2.20GHz 6 CPU.

| Layer | Output shape | # Parameters |

|---|---|---|

| Conv | 50 8 | 40 |

| Conv | 50 4 | 132 |

| Conv | 50 4 | 68 |

| Flat | 200 | 0 |

| Full | 1 024 | 205 824 |

| Full | 512 | 524 800 |

| Full | 64 | 32 832 |

| Full | 10 | 650 |

| Full | 1 | 11 |

4.1.1 Data Augmentation

Data augmentation is a regularization technique that increases the diversity of the training data by applying different transformations to the existing one. It is usually used for images classification ([56]) and speech recognition ([31]). We tested this approach in our procedure in order to take into account some modifications that could occur in the shape of the observed spectra due to a bad normalisation or inappropriate data reduction. We also took into account the fact that observed spectra are affected by noise and that the learning process should include the effect of this parameter.

For each spectrum in the TDB, 5 replicas were performed. Each of these 5 replicas has different amount of flux values but they all have the same stellar labels , , , and . The modifications are done as follows:

-

•

A Gaussian noise is added to the spectrum with a SNR ranging randomly between 5 and 300.

-

•

The flux is multiplied in a uniform way with a scaling factor between 0.95 and 1.05.

-

•

The flux is multiplied with a new scaling factor and noise was added.

-

•

The flux is multiplied by a second degree polynomial with values ranging between 0.95 and 1.05 and a having its maximum randomly selected between 4 450 and 5 000 Å.

-

•

The flux is multiplied by a second degree polynomial and Gaussian noise added to it.

The purpose of this choice is to increase the dimension of the TDB from to and to introduce some modifications in the training spectra that could appear in the observations that we need to analyze. Such modifications are the noise and the commonly observed departures from a perfect continuum normalization. Distorsions in observed spectra could appear due to bad selection in the continuum points. We have tested the two options, with and without data augmentation and the results are shown in Sec. 5. Figure. 4 displays one synthetic spectrum having =8800 K, =4.0 dex, =14 , and =0.0 dex as well as the extra 5 modifications that were performed on this spectrum. We have decided to use a continuous SNR between 5 and 300 but different modifications could be tested. As an example, [24] adapted the SNR of the spectra used in the training dataset to the SNR of the spectra for which the atmospheric parameters are needed (evaluation set). They concluded, that in case of , only two regression models are needed (SNR = 50 and 10) to cover the entire SNR range.

4.1.2 Initialisers: Kernel and Bias

The initialization defines the way to set the initial weights. There are various ways to initialize, and we will be testing the following:

-

•

Zeros: weights are initialized with 0. In that case, the activation in all neurons is the same and the derivative of the loss function is similar for every weight in every neuron. This results in a linear behavior for the model.

-

•

Ones: a similar behavior as the Zeros but using the value of 1 instead of 0.

-

•

RandomNormal: initialization with a normal distribution.

-

•

RandomUniform: initialization with a uniform distribution.

-

•

TruncatedNormal: initialization with a truncated normal distribution.

-

•

VarianceScaling: initialization that adapts its scale to the shape of weights.

-

•

Orthogonal: initialization that generates a random orthogonal matrix.

-

•

Identity: initialization that generates the identity matrix.

-

•

Lecun_uniform: LeCun uniform initializer ([36]).

-

•

Glorot_normal: Xavier normal initializer ([22]).

-

•

Glorot_uniform: Xavier uniform initializer ([22]).

-

•

he_normal: He normal initializer ([27]).

-

•

Lecun_normal: LeCun normal initializer ([36]).

-

•

he_uniform: He uniform variance scaling initializer ([27]).

For all of these initializers, the biases are initialized with a value of zero. It will be shown later that most of these initialisers give the same accuracy except for the Zeros and Ones.

4.1.3 Optimizer

Once the (parameterized) network architecture is chosen, the next step is to find the optimal values for the parameters. If we denote by the collective set of parameters, then, by definition, the optimal values, , are the ones that minimize a certain loss function ; a measure of difference between the predicted and the actual values. This optimization problem is, typically, solved in an iterative manner, by computing the gradient of the loss function with respect to the parameters.

Let denote the set of parameters at iteration t. The iterative optimization process produces a sequence of values, that converges to the optimal values . At a given step we define the history of that process as the set . The values are obtained from according to some update rule

| (4) |

where is a set of hyperparameters such as the learning rate.

Different optimization techniques use a different update rule. For example, in the so-called ”vanilla” gradient descent, the update rule depends on the most recent gradient only:

| (5) |

Other methods, include the whole history with different functional dependence on the gradient and different rates for each step (see [11] for a survey). Different optimization techniques are available in keras and we will be testing the following:

-

•

Adam: an Adaptive moment estimation that is widely used for problems with noise and sparse gradients. Practically, this optimizer requires little tuning for different problems.

-

•

RMSprop: a Root Mean Square propagation that iteratively updates the learning rates for each trainable parameter by using the running average of the squares of previous gradients.

-

•

Adadelta: it is an adaptive delta, where delta refers to the difference between the current weight and the newly updated weight. It also works as a stochastic gradient descent-method.

-

•

Adamax: an adaptive stochastic gradient descent method, and a variant of Adam and is based on the infinity norm. It is also less sensitive to the learning rates than other optimizers.

-

•

Nadam: Nesterov-accelerated Adam optimizer that is used for gradients with noise or with high curvatures. It uses an accelerated learning process by summing up the exponential decay of the moving averages for the previous and current gradient. It is also an adaptive learning rate algorithm and requires less tuning of the hyperparameters.

4.1.4 Learning Rate

As mentioned in the beginning of the section the training rate can affect the minimum reached by the loss function and therefore has a large effect on the generalization property of the solution. In this paper, we followed the recommendation of ([7]) and chose the learning rate value to be half of the largest rate that causes divergence.

4.1.5 Dropout

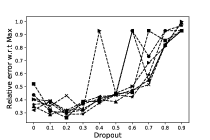

Dropout is a regularization technique for neural networks and deep learning models that prevents the network from overfitting ([58]). When Dropout is applied, randomly selected neurons are removed each iteration of the training and do not contribute to the forward propagation and no weight updates are applied to these neurons during backward propagation. Statistically this has the effect of doing ensemble average over different sub-networks obtained from the original base network. We tried to find the optimal number for the dropped out fraction of neurons. Dropout layers are put after each convolutional ones. Tests were performed with Dropout fraction ranging between 0 and 1.

4.1.6 Pooling

Pooling layers is a way to down sample the features (i.e. reducing the dimension of the data) in the database by taking patches together during the training. The most common pooling methods are the average and the max pooling [64]. The average one summarizes the mean intensity of the features in a patch and the max one considers only the most intense (ie. highest value) value in a patch. The size of the patches and the number of filters used is decided by the user. The standard way to do that is to add a pooling layer after the convolutional layer and this can be repeated one or more times in a given CNN. However, pooling makes the input invariant to small translations. In image detection, we need to know if the features exist and not their exact position. That is why this techniques has shown to be valuable when analyzing images ([25]). This is not the case in spectra because the position of the lines needs to be well known (see Sec. 5). But also, as discussed previously, pooling layers are not needed in our case because the dimension of the TDB was already reduced drastically by applying PCA.

4.1.7 Activation Functions

The activation function is a non linear transformation that is applied on the output of a layer and this output is then sent to the next layer of neurons as input. Activation functions play a crucial role in deriving the output of a model, determining its accuracy and computational efficiency. In some cases, activation functions might prevent the network from converging.

The activation function for the inner layers of a deep networks must be nonlinear, otherwise no matter how deep the network is, it would be equivalent to a single layer (i.e. regression/logistic regression). Having said that, we have tested 5 activation functions that are:

-

•

sigmoid:

-

•

tanh:

-

•

relu:

-

•

elu:

-

•

selu:

It is important to note that in this section we discuss the choice of the activation function for inner layers only. The choice of the activation for the last layer is usually more or less fixed by the type of the problem and how one is modelling it. For example, if one is performing binary classification then a sigmoid like activation is usually used (or softmax for multiclass classification) and interpreted as a probability. Whereas, for regression like problems a linear activation is usually used for the last layer. In our case, which is a purely regression problem, the last layer will have a linear activation function.

The sigmoid and tanh restrict the magnitude of the output of the layer to be . Both, however, suffer from the vanishing gradient problem ([23]). For relatively large magnitudes both functions saturate and their gradient becomes very small. Since deep networks rely on backpropagation for training the gradient, the first few layers, being a product of the succeeding layers, become increasingly small. The rectifier class of activation, relu, elu, etc… seem to minimize the vanishing gradient problem. Also, they lead to sparse representation which seems to give better results ([28, 39]).

4.1.8 Loss Functions

The Loss Function controls the prediction error of a NN as explained in Sec. 4. It is an important criterium in controlling the updates of the weights in a NN, mainly during the backward propagation. The selection of the type of the loss function is decided depending on the types of output labels. If the output is a categorical variable, one can use the Categorical Crossentropy or the Sparse Categorical Crossentropy. If we are dealing with a binary classification, Binary Crossentropy will be the normal choice for a Loss Function. Finally, in case of a regression problem like the one used in stellar spectra parameters determination, variants of Mean Squared Error Loss functions are used. In our work, we have tested the following functions:

-

•

Mean Squared Error:

-

•

Mean Squared Logarithmic Error:

-

•

Mean Absolute Error:

being the actual label, the predicted ones, and the number of spectra in the training dataset.

Loss functions selection can differ from one study to the other ([51]). For that reason we have tested the above three functions in deriving the stellar parameters.

4.1.9 Epochs

The number of Epochs is the number of times the whole dataset is used for the forward and the backward propagation. The number of Epochs controls the number of times the weights of the neurons are updated. While increasing the number of Epochs, we can move from underfitting to overfitting passing through the optimal solution for our network.

4.1.10 Batches

Instead of passing the whole training dataset into the NN, we can divide it in batches and iterate on all batches per epoch. In that case, the number of iterations will be the number of batches needed to complete one epoch. Batches are used in order to avoid the saturation of the computer memory and the decrease of iterations speed. However, the selection of the optimal batch number is not straightforward. Adopted values are usually 32, 64 or 128 ([33]).

One of the most important measures of the success for a deep neural network is how well it generalizes on some test data, not included in the training phase. In current deep neural networks the loss function has multiple minima. Many experimental studies have shown that, during the training phase, the path to reaching a minimum is as important as the final value ([40, 66, 63]). A good rule of thumb is that a ”small”, less than 1% the size of the data, batch size generalize better than ”large” batches, about 10% of the training data ([33]).

5 Results and Analysis

The effect of each CNN parameter on the accuracy of the stellar parameters has been tested. To do so, we have used the same CNN with the same parameters for all our tests while changing only the concerned one at each time. For example, to find the best epoch numbers, we fix the Activation function, the optimizer, the number of Batches, the Dropout percentage, the loss function and the kernel initialiser while iterating on the number of epochs. The same parameters are used again for finding the optimal dropout percentage and so on. The fixed values used in these calculations are the he_normal for the kernel initialiser, the mean squared error for the loss function, the ”ADAM” optimizer, the relu activation function, 50% of Dropout, 64 Batches. These tests are performed with epochs of 100, 500, 1000, 2000, 3000, 4000 and 5000. In all tests, the distribution of Training and Validation are 80% and 20% respectively.

The results will be a combination of Test errors spanning over different number of epochs for each stellar parameter and CNN configuration. The variation with the number of epochs ensures that the trends are real and not due to local minima as a result of the low number of iterations. The Tests are a collection of 110 000 synthetic spectra, half of them without noise and half with random noise as introduced in Sec. 2.

To better visualise the results and to have a better conclusion about the optimal configurations, we display in Figs. 5 to 8 the relative error of the observations. These errors are calculated by dividing the values by the maximum observation standard deviation in all configurations (ie. including all epochs simulations). This will allow us to target the minimum values and pinpoint the best parameters.

In what follows, we show the results that were performed using a training dataset of 40 000 randomly generated synthetic spectra in the ranges of Tab. 1. In Sec. 5.5, we discuss the effect of using a small or a large training database and the effect of using or not Data Augmentation.

5.1 Effective Temperature

According to Fig. 5, the use of a relu or elu activation functions leads to a similar conclusion within a difference of few percents. And this could be applied independently of the number of epochs. As for the Optimizer, Adam and Adamax optimizers seem to be consistently accurate across all epochs number. The optimal number of Batches is found to be between 32 and 64. The number of epochs is tightly related to the Batches number, however, in case of 64 Batches, the optimal number of epochs is found to be 2000. The dropout factor is, as introduced in Sec. 4.1.5, a regularization technique that avoids overfitting. This means that the optimal value depends on the size of the training database. In the case of our 40 000 sample database, the optimal dropout is found to be between 10 and 60%. Neural Networks minimize a loss function and accordingly derive the coefficient that will be used later to predict the parameters of the observations. Among the 3 loss functions that we tested, small differences are found among them. We will be using the mean squared logarithmic error for . Finally, the initialisation of the network coefficients could be done using any initialiser with a exception of Zeros and Ones. Neural networks tend to get stuck in local minima when using these two options. The ratio of the standard deviation with respect to the maximum exhibits an up and down variation that resembles a jig-saw pattern with respect to the epochs number. This is mainly due to the fact that points correspond to different runs. Also we can notice that the variation of the relative error correspond to the smallest variations between the different hyperparameters, and this is the case for all stellar parameters. Of course the search will depend on the size of the training database, the spectral region, the spectral type, the resolution

The optimal configuration that we found for corresponds to the following parameters:

-

Activation function: relu.

-

Optimizer: Adam.

-

Batches: 64.

-

Epochs: 2000.

-

Dropout: 30%.

-

Loss function: mean squared logarithmic error.

-

Kernel initialiser: he_normal.

5.2 Surface Gravity

The accuracies for gravity behave differently than the one of with respect to the various parameters. According to Fig. 6, the optimal values are found to be relu or for the Activation function; Adam, Adamax or RMSprop for the optimizer, a number of Batches between 32 and 128, an epoch number of 3000, a Dropout fraction between 0.3 and 0.4, a mean squared logarithmic error loss function, and all kinds of initialisers except for Zeros and Ones.

In case of the optimal configuration is found to be using the following parameters:

-

Activation function: .

-

Optimizer: Adamax.

-

Batches: 128.

-

Epochs: 3000.

-

Dropout: 30%.

-

Loss function: mean squared logarithmic error.

-

Kernel initialiser: he_normal.

5.3 Metallicity

The metallicity parameter, , also behaves differently than and . As seen in Fig. 7, requires a different combination of parameters in our CNN in order to reach optimal results. or relu activation functions give the least error in most epochs number situations. Adam and RMSprop optimizer lead to similar results within few percents of differences. A combination of 16 Batches and 1000 epochs is appropriate to derive with low errors. A dropout between 10% and 30%, a mean absolute error for a loss function and a RandomUniform kernel initialiser are to be used in order to reach the highest possible accuracy for . Our technique was applied to A stars and extrapolated to FGK stars (Sec. 6). However, specific considerations should be taken into account when deriving the metallicities of cool stars due to forests of molecular lines that are present in the spectra ([45]).

In case of , the optimal configuration is found to be using the following parameters:

-

Activation function: .

-

Optimizer: Adam.

-

Batches: 16.

-

Epochs: 1000.

-

Dropout: 20%.

-

Loss function: mean absolute error.

-

Kernel initialiser: RandomUniform.

5.4 Projected Equatorial Rotational Velocity

Finally, in case of the equatorial projected rotational velocity, , seems to be the optimal activation function independently of the epochs and Batches number (Fig. 8). Adam or Adamax optimizers can be used for with small differences in the derived accuracies. A combination of 32 Batches with 3000 epochs is the one that gives the minimum error for the derived values. A dropout fraction between 0.1 and 0.4 yields very close errors. A mean squared error can be used for the loss function and all kernel initialisers can also be applied except the Zeros and Ones for the same reason explained in Sec. 5.1.

In case of , the optimal configuration is found to be using the following parameters:

-

Activation function: .

-

Optimizer: Adamax.

-

Batches: 32.

-

Epochs: 3000.

-

Dropout: 30%.

-

Loss function: mean squared error.

-

Kernel initialiser: he_Uniform.

5.5 Database Size and the role of Augmentation

In order to check the dependency of the performance of the CNN on the size of the training set, three databases are used. The first database (TDB1) contains 25 000 random synthetic spectra as explained in Sec. 5 , the second database (TDB2) contains 40 000 random spectra, and the third (TDB3) contains 70 000 spectra, resulting from the same TDB1 parameter ranges. We have also checked the importance of using Data Augmentation as a regularization technique for deriving accurate parameters (see Sec. 4.1.1 for details).

For each stellar parameter, we used the optimal CNN with the configuration that was derived in Secs. 5.1 to 5.4. Each configuration was tested with TDB1, TDB2 and TDB3 with and without Data Augmentation. Fig. 9 displays the average relative standard deviation for each stellar parameter with the respect to the maximum values, for the training, validation, test and observation sets. In order to quantify these proxies for the uncertainties of the techniques, Tab. 3 collects the standard deviations for the 4 stellar parameters as a function of the training database.

According to Tab. 3, each parameter behaves differently with respect to the change of the databases. This is mainly due to the number of unique values of the parameter in the database. For that reason, is well represented by TDB1 without data augmentation whereas and require a larger database to be well represented. can be well represented with TDB3 with data augmentation whereas can be predicted with TDB2 with data augmentation. Finally, is can be predicted using TDB3 with data augmentation.

| Database | TDB1 | |||

|---|---|---|---|---|

| (K) | (dex) | (dex) | ||

| Training | 78 | 0.03 | 0.06 | 0.97 |

| Validation | 112 | 0.11 | 0.07 | 4.00 |

| Test without Noise | 98 | 0.10 | 0.07 | 3.30 |

| Test with Noise | 133 | 0.13 | 0.07 | 5.25 |

| TDB1 with Data Augmentation | ||||

| (K) | (dex) | (dex) | ||

| Training | 109 | 0.03 | 0.09 | 1.40 |

| Validation | 129 | 0.07 | 0.09 | 3.36 |

| Test without Noise | 129 | 0.10 | 0.10 | 3.66 |

| Test with Noise | 152 | 0.12 | 0.10 | 5.00 |

| TDB2 | ||||

| (K) | (dex) | (dex) | ||

| Training | 79 | 0.04 | 0.08 | 1.55 |

| Validation | 104 | 0.10 | 0.08 | 4.00 |

| Test without Noise | 99 | 0.09 | 0.09 | 2.90 |

| Test with Noise | 139 | 0.11 | 0.09 | 5.50 |

| TDB2 with Data Augmentation | ||||

| (K) | (dex) | (dex) | ||

| Training | 89 | 0.04 | 0.10 | 1.85 |

| Validation | 107 | 0.07 | 0.10 | 3.40 |

| Test without Noise | 103 | 0.09 | 0.10 | 3.12 |

| Test with Noise | 127 | 0.10 | 0.11 | 4.36 |

| TDB3 | ||||

| (K) | (dex) | (dex) | ||

| Training | 92 | 0.04 | 0.07 | 1.60 |

| Validation | 112 | 0.08 | 0.08 | 3.50 |

| Test without Noise | 105 | 0.08 | 0.08 | 2.70 |

| Test with Noise | 140 | 0.10 | 0.09 | 4.90 |

| TDB3 with Data Augmentation | ||||

| (K) | (dex) | (dex) | ||

| Training | 128 | 0.04 | 0.11 | 1.95 |

| Validation | 136 | 0.06 | 0.11 | 3.20 |

| Test without Noise | 131 | 0.07 | 0.11 | 2.70 |

| Test with Noise | 150 | 0.08 | 0.12 | 3.90 |

5.6 Accuracy for the Optimal Configuration

After selecting the optimal configuration for each stellar parameter, the predicted parameters are displayed in Fig. 10 as a function of the input ones for the training, validation, and the two sets of Test datasets. All data points are located around the line. The dispersion of the observation around that line is due to spectra with very low signal to noise. The accuracy that we found using our CNN architecture seem to be appropriate for A stars as they are comparable to most of previous studies using classical tools ([3]) or more complicated statistical tools ([19, 32]). The same is true for all parameters.

In order to verify the effect of the noise on the predicted parameters, Fig. 11 displays the variation of the accuracy of the predicted values with respect to the input SNR. The figure also displays the observations depending on the values of . The reason for that is that increasing induces blending in the spectra and thus less information to be used in the prediction. This is reflected in the case of low for which the predicted values are found to be more accurate than the case of large .

6 Extrapolating to other spectral types

In order to verify how universal the results are, we checked that the optimization of the code is not dependent on wavelength and/or spectral type, we also tested the procedure on FGK stars. To do that, we have calculated a TDB specific for FGK stars using the parameters displayed in Tab. 4. The wavelength range was selected to coincide with the one of [42]. This range is sensitive to all the concerned stellar parameters.

| Parameters | Range |

|---|---|

| (K) | [4 000,7 000] |

| (dex) | |

| (dex) | |

| () | |

| () | [5 000, 5 400] |

| 60 000 |

A database of 50 000 random synthetic spectra with known stellar labels is used in the training. About 20 000 Test data, with and without noise, were calculated in the same range of Tab. 4 to be used for verification. The optimal NNs that were introduced in Sec. 5 were used again, as a proof of concept, for the FGK TDB. The results are displayed in Tab. 5 for Training, Validation, and tests.

| Training | Validation | Test (no noise) | Test (noise) | |

|---|---|---|---|---|

| (K) | 59 | 62 | 62 | 82 |

| (dex) | 0.04 | 0.05 | 0.05 | 0.07 |

| () | 0.40 | 0.50 | 0.55 | 0.90 |

| (dex) | 0.04 | 0.05 | 0.05 | 0.06 |

Because of the low rotational velocities for FGK stars ( 100 ), the results are more accurate. That is not surprising because drastically affects the shape of the lines as in A stars. The derived errors on the stellar parameters are found to be 82 K, 0.07 dex, 0.90 and 0.06 dex for , , and , respectively (Tab. 5). These results are very promising, but we should be aware of the complications that would arise when using real observations, especially in the case of the cool M stars. These stars have been analyzed in the context of exoplanets search ([54, 46]) and show complications in their spectra mainly related to the continuum normalization. Adapting the data preparation and the CNN will be inevitable in order to take into account these effects. These results also show that when deriving the stellar parameters for specific spectral types, the wavelength region should be selected according to these spectral lines/bands most sensitive to the variations of the parameters one seeks.

7 Discussion and Conclusion

The purpose of this work is not only to find the best tool for the accurate prediction of parameters but also to show the steps that should be taken in order to reach the optimal selection of the CNN parameters. Often scientists use DL as a black box without explaining the choice of the parameters and/or architecture. In this manuscript, we have explained the reason for selecting specific hyperparameters while emphasizing the pedagogical approach. To have a more effective tool, one should change the architecture of the model. The architecture of the model depends on the type and range of the input. In this work we have fixed the architecture and iterated on the hyperparameters only.

Sections 5.1 to 5.4 show that for each stellar parameter, the setup of the network should be changed. This means that for a specific network and a specific stellar parameter, a study should be made to find the optimal configuration of hyperparameters. This is due to the contribution of the specific stellar parameter on the shape of the input spectrum. Using the PCA decomposition, we have reduced the size of the input parameters to only 50 points per spectrum while keeping more than 99.5% of the information. This is recommended in case of large databases and wide wavelength range and could avoid the use of extra pooling layers in the network. This projection technique is not only applicable for AFGK stars but can also be used for cooler stars. Further, ([29, 43]), [53] have applied a projection pursuit regression model based on the independent component analysis compression coefficients to derive , , and of M-type stars.

Although the CNN architecture was not optimized, we were able, using a strategy of finding the best hyperparameters, to reach a level of accuracy that is comparable to other adopted techniques. In fact, we found for A stars, an average accuracy of 0.08 dex for , 0.07 dex for , 3.90 for , and 127 K for . In the case of stars with less than 100 , we found the accuracy to be 90 K, 0.06 dex, 0.06 dex and 2.0 , for , , and , respectively. These accuracy values are signal to noise dependant and reduce as long as the signal to noise increases. Extrapolating the technique to FGK stars also shows that the same network could be applied to different spectral types and different wavelength ranges.

The technique that we followed in this paper could be transferable to any classification problem that involves neural network. In the future we plan to develop a strategy to find the best CNN architecture depending on the input data and the type of the predicted parameters. Once the architecture and the configuration of the parameters is settled, we will be testing the procedure on observational spectra as we did in [42], [43], [19] and [32]. Using only observational data or a combination of synthetic spectra and real observations with well known parameters will allow us to constrain the derived stellar labels while minimizing the critical synthetic gap ([15]). One more criterion that should be taken into account is when applying this technique to real observations, thorough data preparation work should be done to take into account the characteristics of each spectral type (e.g., continuum normalization in M and giant stars, and low number of lines in hot stars.).

References

- [1] Ivan Almeida, Roberta Duarte, and Rodrigo Nemmen. Deep learning model for multiwavelength emission from low-luminosity active galactic nuclei. arXiv e-prints, page arXiv:2102.05809, Feb. 2021.

- [2] Martin Anthony and Peter L. Bartlett. Neural Network Learning: Theoretical Foundations. Cambridge University Press, 1999.

- [3] E. Aydi, M. Gebran, R. Monier, F. Royer, A. Lobel, and R. Blomme. Automated procedure to derive fundamental parameters of B and A stars: Application to the young cluster NGC 3293. In J. Ballet, F. Martins, F. Bournaud, R. Monier, and C. Reylé, editors, SF2A-2014: Proceedings of the Annual meeting of the French Society of Astronomy and Astrophysics, pages 451–455, Dec. 2014.

- [4] Yu Bai, JiFeng Liu, ZhongRui Bai, Song Wang, and DongWei Fan. Machine-learning Regression of Stellar Effective Temperatures in the Second Gaia Data Release. AJ, 158(2):93, Aug. 2019.

- [5] C. A. L. Bailer-Jones. Neural Network Classification of Stellar Spectra. PASP, 109:932, Aug. 1997.

- [6] Dalya Baron. Machine Learning in Astronomy: a practical overview. arXiv e-prints, page arXiv:1904.07248, Apr. 2019.

- [7] Yoshua Bengio. Practical recommendations for gradient-based training of deep architectures. 6 2012.

- [8] Robert W. Bickley, Connor Bottrell, Maan H. Hani, Sara L. Ellison, Hossen Teimoorinia, Kwang Moo Yi, Scott Wilkinson, Stephen Gwyn, and Michael J. Hudson. Convolutional neural network identification of galaxy post-mergers in UNIONS using IllustrisTNG. MNRAS, Mar. 2021.

- [9] F. Castelli and R. L. Kurucz. New Grids of ATLAS9 Model Atmospheres. In N. Piskunov, W. W. Weiss, and D. F. Gray, editors, Modelling of Stellar Atmospheres, volume 210, page A20, Jan. 2003.

- [10] Bo Han Chen, Tomotsugu Goto, Seong Jin Kim, Ting Wen Wang, Daryl Joe D. Santos, Simon C. C. Ho, Tetsuya Hashimoto, Artem Poliszczuk, Agnieszka Pollo, Sascha Trippe, Takamitsu Miyaji, Yoshiki Toba, Matthew Malkan, Stephen Serjeant, Chris Pearson, Ho Seong Hwang, Eunbin Kim, Hyunjin Shim, Ting Yi Lu, Yu-Yang Hsiao, Ting-Chi Huang, Martín Herrera-Endoqui, Blanca Bravo-Navarro, and Hideo Matsuhara. An active galactic nucleus recognition model based on deep neural network. MNRAS, 501(3):3951–3961, Mar. 2021.

- [11] Dami Choi, Christopher J. Shallue, Zachary Nado, Jaehoon Lee, Chris J. Maddison, and George E. Dahl. On empirical comparisons of optimizers for deep learning, 2020.

- [12] M. Cropper, D. Katz, P. Sartoretti, P. Panuzzo, G. Seabroke, M. Smith, C. Dolding, H. Huckle, O. Marchal, K. Benson, and A. Gueguen. Gaia Radial Velocity Spectrometer Performance. In EAS Publications Series, volume 67-68 of EAS Publications Series, pages 69–73, July 2014.

- [13] S. J. Curran, J. P. Moss, and Y. C. Perrott. QSO photometric redshifts using machine learning and neural networks. MNRAS, Feb. 2021.

- [14] C. Dafonte, D. Fustes, M. Manteiga, D. Garabato, M. A. Álvarez, A. Ulla, and C. Allende Prieto. On the estimation of stellar parameters with uncertainty prediction from Generative Artificial Neural Networks: application to Gaia RVS simulated spectra. A & A, 594:A68, Oct. 2016.

- [15] S. Fabbro, K. A. Venn, T. O’Briain, S. Bialek, C. L. Kielty, F. Jahandar, and S. Monty. An application of deep learning in the analysis of stellar spectra. MNRAS, 475(3):2978–2993, Apr. 2018.

- [16] R. Gafeira, D. Orozco Suárez, I. Milić, C. Quintero Noda, B. Ruiz Cobo, and H. Uitenbroek. Machine learning initialization to accelerate Stokes profile inversions. A & A, 651:A31, July 2021.

- [17] Fang Kai Gan, Kenji Bekki, and Hosein Hashemizadeh. SeeingGAN: Galactic image deblurring with deep learning for better morphological classification of galaxies. arXiv e-prints, page arXiv:2103.09711, Mar. 2021.

- [18] Cecilia Garraffo, Pavlos Protopapas, Jeremy J. Drake, Ignacio Becker, and Phillip Cargile. StelNet: Hierarchical Neural Network for Automatic Inference in Stellar Characterization. arXiv e-prints, page arXiv:2106.07655, June 2021.

- [19] M. Gebran, W. Farah, F. Paletou, R. Monier, and V. Watson. A new method for the inversion of atmospheric parameters of A/Am stars. A & A, 589:A83, May 2016.

- [20] M. Gebran, R. Monier, F. Royer, A. Lobel, and R. Blomme. Microturbulence in A/F Am/Fm stars. In Gautier Mathys, Elizabeth R. Griffin, Oleg Kochukhov, Richard Monier, and Glenn M. Wahlgren, editors, Putting A Stars into Context: Evolution, Environment, and Related Stars, pages 193–198, Nov. 2014.

- [21] S. Gill, P. F. L. Maxted, and B. Smalley. The atmospheric parameters of FGK stars using wavelet analysis of CORALIE spectra. A & A, 612:A111, May 2018.

- [22] Xavier Glorot and Yoshua Bengio. Understanding the difficulty of training deep feedforward neural networks. In Yee Whye Teh and Mike Titterington, editors, Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, volume 9 of Proceedings of Machine Learning Research, pages 249–256, Chia Laguna Resort, Sardinia, Italy, 13–15 May 2010. JMLR Workshop and Conference Proceedings.

- [23] Xavier Glorot, Antoine Bordes, and Yoshua Bengio. Deep sparse rectifier neural networks. In Geoffrey Gordon, David Dunson, and Miroslav Dudík, editors, Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, volume 15 of Proceedings of Machine Learning Research, pages 315–323, Fort Lauderdale, FL, USA, 11–13 Apr 2011. JMLR Workshop and Conference Proceedings.

- [24] A. González-Marcos, L. M. Sarro, J. Ordieres-Meré, and A. Bello-García. Evaluation of data compression techniques for the inference of stellar atmospheric parameters from high-resolution spectra. MNRAS, 465(4):4556–4571, Mar. 2017.

- [25] Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep Learning. MIT Press, 2016. http://www.deeplearningbook.org.

- [26] G. Guiglion, G. Matijevič, A. B. A. Queiroz, M. Valentini, M. Steinmetz, C. Chiappini, E. K. Grebel, P. J. McMillan, G. Kordopatis, A. Kunder, T. Zwitter, A. Khalatyan, F. Anders, H. Enke, I. Minchev, G. Monari, R. F. G. Wyse, O. Bienaymé, J. Bland-Hawthorn, B. K. Gibson, J. F. Navarro, Q. Parker, W. Reid, G. M. Seabroke, and A. Siebert. The RAdial Velocity Experiment (RAVE): Parameterisation of RAVE spectra based on convolutional neural networks. A & A, 644:A168, Dec. 2020.

- [27] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. 2 2015.

- [28] K. He, X. Zhang, S. Ren, and J. Sun. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In 2015 IEEE International Conference on Computer Vision (ICCV), pages 1026–1034, 2015.

- [29] E. R. Houdebine, D. J. Mullan, F. Paletou, and M. Gebran. Rotation-Activity Correlations in K and M Dwarfs. I. Stellar Parameters and Compilations of v sin I and P/sin I for a Large Sample of Late-K and M Dwarfs. APJ, 822(2):97, May 2016.

- [30] I. Hubeny and T. Lanz. Accelerated complete-linearization method for calculating NLTE model stellar atmospheres. A & A, 262(2):501–514, Sept. 1992.

- [31] Navdeep Jaitly and E. Hinton. Vocal tract length perturbation (vtlp) improves speech recognition. 2013.

- [32] Sarkis Kassounian, Marwan Gebran, Frédéric Paletou, and Victor Watson. Sliced Inverse Regression: application to fundamental stellar parameters. Open Astronomy, 28(1):68–84, May 2019.

- [33] Nitish Shirish Keskar, Dheevatsa Mudigere, Jorge Nocedal, Mikhail Smelyanskiy, and Ping Tak Peter Tang. On large-batch training for deep learning: Generalization gap and sharp minima. 2016. cite arxiv:1609.04836Comment: Accepted as a conference paper at ICLR 2017.

- [34] R. L. Kurucz. Atomic and Molecular Data for Opacity Calculations. RMXAA, 23:45, Mar. 1992.

- [35] Vlad Landa and Yuval Reuveni. Low Dimensional Convolutional Neural Network For Solar Flares GOES Time Series Classification. arXiv e-prints, page arXiv:2101.12550, Jan. 2021.

- [36] Y. Lecun, L. Bottou, Y. Bengio, and P. Haffner. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- [37] Yann LeCun et al. Generalization and network design strategies. Connectionism in perspective, 19:143–155, 1989.

- [38] Xiang-Ru Li, Ru-Yang Pan, and Fu-Qing Duan. Parameterizing Stellar Spectra Using Deep Neural Networks. Research in Astronomy and Astrophysics, 17(4):036, Mar. 2017.

- [39] Andrew L. Maas. Rectifier nonlinearities improve neural network acoustic models. 2013.

- [40] Behnam Neyshabur, Srinadh Bhojanapalli, David Mcallester, and Nati Srebro. Exploring generalization in deep learning. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 30, pages 5947–5956. Curran Associates, Inc., 2017.

- [41] Leon Ofman, Amir Averbuch, Adi Shliselberg, Idan Benaun, David Segev, and Aron Rissman. Automated identification of transiting exoplanet candidates in NASA Transiting Exoplanets Survey Satellite (TESS) data with machine learning methods. New Astronomy, 91:101693, Feb. 2022.

- [42] F. Paletou, T. Böhm, V. Watson, and J. F. Trouilhet. Inversion of stellar fundamental parameters from ESPaDOnS and Narval high-resolution spectra. A & A, 573:A67, Jan. 2015.

- [43] F. Paletou, M. Gebran, E. R. Houdebine, and V. Watson. Principal component analysis-based inversion of effective temperatures for late-type stars. A & A, 580:A78, Aug. 2015.

- [44] David Parks, J. Xavier Prochaska, Shawfeng Dong, and Zheng Cai. Deep learning of quasar spectra to discover and characterize damped Ly systems. MNRAS, 476(1):1151–1168, May 2018.

- [45] V. M. Passegger, A. Bello-García, J. Ordieres-Meré, A. Antoniadis-Karnavas, E. Marfil, C. Duque-Arribas, P. J. Amado, E. Delgado-Mena, D. Montes, B. Rojas-Ayala, A. Schweitzer, H. M. Tabernero, V. J. S. Béjar, J. A. Caballero, A. P. Hatzes, Th. Henning, S. Pedraz, A. Quirrenbach, A. Reiners, and I. Ribas. Metallicities in M dwarfs: Investigating different determination techniques. arXiv e-prints, page arXiv:2111.14950, Nov. 2021.

- [46] V. M. Passegger, A. Bello-García, J. Ordieres-Meré, J. A. Caballero, A. Schweitzer, A. González-Marcos, I. Ribas, A. Reiners, A. Quirrenbach, P. J. Amado, M. Azzaro, F. F. Bauer, V. J. S. Béjar, M. Cortés-Contreras, S. Dreizler, A. P. Hatzes, Th. Henning, S. V. Jeffers, A. Kaminski, M. Kürster, M. Lafarga, E. Marfil, D. Montes, J. C. Morales, E. Nagel, L. M. Sarro, E. Solano, H. M. Tabernero, and M. Zechmeister. The CARMENES search for exoplanets around M dwarfs. A deep learning approach to determine fundamental parameters of target stars. A & A, 642:A22, Oct. 2020.

- [47] Stephen K. N. Portillo, John K. Parejko, Jorge R. Vergara, and Andrew J. Connolly. Dimensionality Reduction of SDSS Spectra with Variational Autoencoders. AJ, 160(1):45, July 2020.

- [48] J. C. Ramírez Vélez, C. Yáñez Márquez, and J. P. Córdova Barbosa. Using machine learning algorithms to measure stellar magnetic fields. A & A, 619:A22, Nov. 2018.

- [49] C. Rhea and L. Rousseau-Nepton. Application of Machine Learning to Optical Spectra — Kinematic Constraints. In American Astronomical Society Meeting Abstracts, volume 53 of American Astronomical Society Meeting Abstracts, page 208.01, Jan. 2021.

- [50] Carter Rhea, Laurie Rousseau-Nepton, Simon Prunet, Julie Hlavacek-Larrondo, and Sébastien Fabbro. A Machine-learning Approach to Integral Field Unit Spectroscopy Observations. I. H ii Region Kinematics. APJ, 901(2):152, Oct. 2020.

- [51] Lorenzo Rosasco, Ernesto De Vito, Andrea Caponnetto, Michele Piana, and Alessandro Verri. Are loss functions all the same? Neural Computation, 16(5):1063–1076, 2004.

- [52] Sebastian Ruder. An overview of gradient descent optimization algorithms. CoRR, abs/1609.04747, 2016.

- [53] L. M. Sarro, J. Ordieres-Meré, A. Bello-García, A. González-Marcos, and E. Solano. Estimates of the atmospheric parameters of M-type stars: a machine-learning perspective. MNRAS, 476(1):1120–1139, May 2018.

- [54] Y. Shan, A. Reiners, D. Fabbian, E. Marfil, D. Montes, H. M. Tabernero, I. Ribas, J. A. Caballero, A. Quirrenbach, P. J. Amado, J. Aceituno, V. J. S. Béjar, M. Cortés-Contreras, S. Dreizler, A. P. Hatzes, Th. Henning, S. V. Jeffers, A. Kaminski, M. Kürster, M. Lafarga, J. C. Morales, E. Nagel, E. Pallé, V. M. Passegger, C. Rodriguez-López, A. Schweitzer, and M. Zechmeister. The CARMENES search for exoplanets around M dwarfs. Not-so-fine hyperfine-split vanadium lines in cool star spectra. A & A, 654:A118, Oct. 2021.

- [55] Kaushal Sharma, Ajit Kembhavi, Aniruddha Kembhavi, T. Sivarani, Sheelu Abraham, and Kaustubh Vaghmare. Application of convolutional neural networks for stellar spectral classification. MNRAS, 491(2):2280–2300, Jan. 2020.

- [56] Connor Shorten and T. Khoshgoftaar. A survey on image data augmentation for deep learning. Journal of Big Data, 6:1–48, 2019.

- [57] Barry Smalley. Observations of convection in A-type stars. In Juraj Zverko, Jozef Ziznovsky, Saul J. Adelman, and Werner W. Weiss, editors, The A-Star Puzzle, volume 224, pages 131–138, Dec. 2004.

- [58] Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. Dropout: a simple way to prevent neural networks from overfitting. The journal of machine learning research, 15(1):1929–1958, 2014.

- [59] Rui Wang, A. Li Luo, Jian-Jun Chen, Wen Hou, Shuo Zhang, Yong-Heng Zhao, Xiang-Ru Li, Yong-Hui Hou, and LAMOST MRS Collaboration. SPCANet: Stellar Parameters and Chemical Abundances Network for LAMOST-II Medium Resolution Survey. APJ, 891(1):23, Mar. 2020.

- [60] Sun-Chong Wang. Artificial Neural Network, pages 81–100. Springer US, Boston, MA, 2003.

- [61] Junho Yim, Jeongwoo Ju, Heechul Jung, and Junmo Kim. Image classification using convolutional neural networks with multi-stage feature. In Jong-Hwan Kim, Weimin Yang, Jun Jo, Peter Sincak, and Hyun Myung, editors, Robot Intelligence Technology and Applications 3, pages 587–594, Cham, 2015. Springer International Publishing.

- [62] Bo Zhang, Chao Liu, and Li-Cai Deng. Deriving the Stellar Labels of LAMOST Spectra with the Stellar LAbel Machine (SLAM). ApJS, 246(1):9, Jan. 2020.

- [63] Chiyuan Zhang, Samy Bengio, Moritz Hardt, Benjamin Recht, and Oriol Vinyals. Understanding deep learning requires rethinking generalization. 5th International Conference on Learning Representations, ICLR 2017 - Conference Track Proceedings, 11 2016.

- [64] Yi-Tong Zhou and Rama Chellappa. Computation of optical flow using a neural network. In ICNN, pages 71–78, 1988.

- [65] Xiangxin Zhu, Carl Vondrick, Charless C. Fowlkes, and Deva Ramanan. Do we need more training data? International Journal of Computer Vision, 119(1):76–92, 2016.

- [66] Difan Zou, Yuan Cao, Dongruo Zhou, and Quanquan Gu. Gradient descent optimizes over-parameterized deep relu networks. Machine Learning, 109:467–492, 2019.