Latent Semantic Consensus For Deterministic Geometric Model Fitting

Abstract

Estimating reliable geometric model parameters from the data with severe outliers is a fundamental and important task in computer vision. This paper attempts to sample high-quality subsets and select model instances to estimate parameters in the multi-structural data. To address this, we propose an effective method called Latent Semantic Consensus (LSC). The principle of LSC is to preserve the latent semantic consensus in both data points and model hypotheses. Specifically, LSC formulates the model fitting problem into two latent semantic spaces based on data points and model hypotheses, respectively. Then, LSC explores the distributions of points in the two latent semantic spaces, to remove outliers, generate high-quality model hypotheses, and effectively estimate model instances. Finally, LSC is able to provide consistent and reliable solutions within only a few milliseconds for general multi-structural model fitting, due to its deterministic fitting nature and efficiency. Compared with several state-of-the-art model fitting methods, our LSC achieves significant superiority for the performance of both accuracy and speed on synthetic data and real images. The code will be available at https://github.com/guobaoxiao/LSC.

Index Terms:

Model fitting, deterministic fitting, latent semantic, multiple-structure data.1 Introduction

This study focuses on the problem of estimating reliable geometric model parameters from the data with severe outliers. Many computer vision tasks, such as homography/fundamental matrix estimation [1], motion segmentation [2], vanishing point detection [3], 3D reconstruction [4] and pose estimation [5], involve the geometric models.

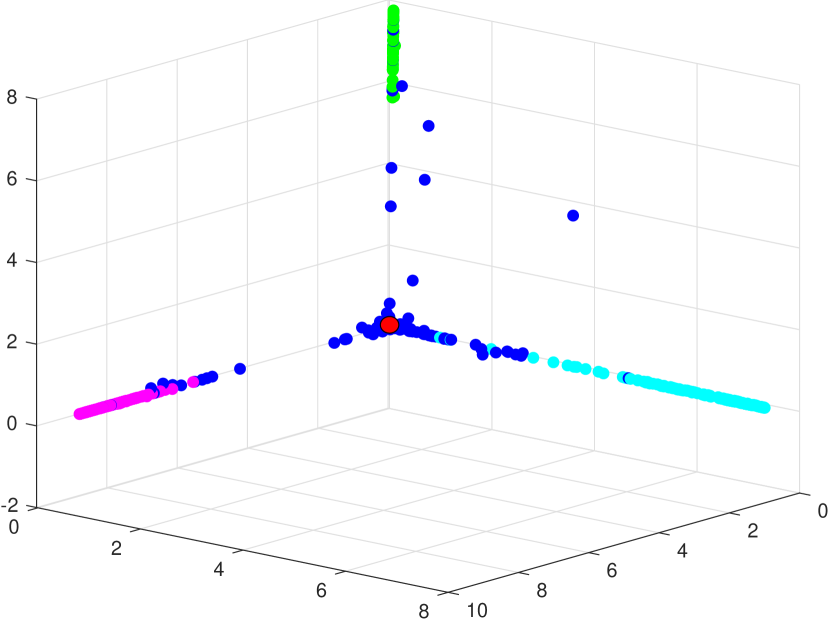

(a) Input data

(c) LSS of data points

(b) Data distribution

(d) LSS of model hypotheses

A model fitting problem typically involves two key steps: first, sampling a set of minimal subsets to generate model hypotheses. Here, a minimal subset is the smallest set of data points needed for formulating a model hypothesis, such as points for a line or a minimum of points for a homography matrix. The second step is selecting the best model hypotheses as the estimated model instances.

Among the sampling algorithms, RANSAC [6] stands out as one of the most popular, valued for its simplicity and effectiveness. However, most existing sampling algorithms, including various guided ones [7, 8], face challenges in providing intractable and consistent solutions due to their inherently random nature. Then, some deterministic sampling methods, e.g., [9, 10, 11, 12, 13], play an important roles for a model fitting method in practical applications. They not only offer tractability but also yield stable sampling results with the same input parameters. Yet, current deterministic sampling methods, i.e., [10, 11, 12], often adopt a global optimization strategy, limiting their applicability to single-structure data model fitting problems. SDF [9] and LGF [13] address multi-structural model fitting problems, but are confined to handling two-view model fitting problems, as they rely on correspondence information like matching scores, lengths, and directions.

To address these challenges, we propose the Latent Semantic Consensus (LSC) method. LSC includes a novel latent semantic consensus based sampling algorithm to handle the general multi-structural deterministic sampling problem. Specifically, we first sample a small number of minimal subset in a deterministic way. Then, we map the data points and the generated model hypotheses into two Latent Semantic Spaces (LSSs), as shown in Fig. 1. For the space of data points, inliers belonging to different model instances are mapped into several independent subspaces, while gross outliers111Outliers encompass gross outliers and pseudo-outliers: the former are outliers for all model instances in the data, while the latter are inliers for some model instances but outliers for others. are concentrated close to the origin. Simultaneously, in the LSS of model hypotheses, those corresponding to the same model instance are mapped into an independent subspace, while others cluster near the origin.

Note that, the effectiveness of LSS depends on the quality of data points and the generated model hypotheses. This is because the outliers and bad model hypotheses will affect the distributions of corresponding points in LSS. Thus, we preserve the latent semantic consensus of two LSSs, to sample high-quality minimal subsets. Initially, we remove some outliers, specifically focusing on those points close to the origin of LSS. Subsequently, we select the data points that correspond to neighbors of each point in LSS as a minimal subset, leveraging the fact that the data points belonging to the same model instance share similar distributions in LSS. Following this, we remap data points and newly generated model hypotheses to two LSSs again. Finally, we further remove some outliers and bad model hypotheses to preserve the latent semantic consensus.

For the second step of model fitting, we design a novel model selection in view of the advantage of our high-quality model hypotheses. Specifically, we explore the latent semantic information in LSS of model hypotheses. In this analysis, we refrain from scrutinizing LSS of data points to mitigate the sensitivity to unbalanced data distribution. Recall that two points share a similar value in a subspace of LSS, if they correspond to the same model instance. Thus, we propose to label the points into their respective subspaces in LSS via an integer linear program. After that, we select the best model hypothesis among the ones that share the same labels as an estimated model instance. Notably, in LSS, all high-order model fitting problems (e.g., homography/fundamental matrix estimation) are regressed to a subspace recovery problem, which is much easier to be dealt with.

Finally, we summarize the key contributions of this work as follows:

-

•

We propose to preserve latent semantic consensus in LSS of data points, for deterministically sampling high-quality minimal subsets. To the best of our knowledge, we are the first one to address general deterministic sampling problem for multi-structural data.

-

•

We design a novel model selection algorithm that formulates the model fitting problem as a simple subspace recovery problem based on LSS of model hypotheses.

-

•

The qualitative and quantitative experiments on several model fitting tasks show that the proposed fitting method is able to achieve better results over several state-of-the-art competing methods.

The rest of the paper is organized as follows: We review some related work in Sec. 2, and describe the components of the proposed fitting method in Sec. 3. We present the experimental results in Sec. 4. We perform some ablation studies on different components of LSC in Sec. 5 and draw conclusions in Sec. 6.

2 Related Work

In this section, we briefly review some related works on random and deterministic model fitting.

2.1 Random Model Fitting Methods

Random model fitting methods can be classified into sampling and model selection methods according to their process. For the first step, to hit all model instances in data, that is, there is at least an all-inlier minimal subset sampled for each model instance, the sampling problem approximately possesses a combinatorial nature, making the number of minimal subsets huge. For an example of data with the outlier ratio , RANSAC [6], one of the most popular sampling algorithms, requires to sample minimal subsets, to hit a model instance with the probability . Here is the minimum number of data points in a minimal subset. Even worse, the sampled minimal subsets contain a large number of bad ones. Then, there are many variants of RANSAC to improve the performance on more complex cases, e.g., ROSAC [14], LO-RANSAC [15], HMSS [16] and CBS [8].

For model selection methods, they can be roughly classified into the consensus analysis-based and the preference analysis-based methods, according to the space they perform. The consensus analysis-based fitting methods, e.g., MSHF [1] and CBG [17], select the best model hypotheses as the estimated model instances; The preference analysis-based methods, e.g., SWS [18], RPA [19], RansaCov [20], T-linkage [21], MultiLink [22], MCT [23] and CLSA [2], label data points and then estimate the parameters of model instances. These two kinds of model fitting methods have their own advantages and disadvantages. Specifically, the former methods are not sensitive to unbalanced data distribution, but they rely heavily on the quality of model hypotheses; in contrast, the latter methods are very sensitive to unbalanced data distribution, but they estimate model instances based on data points, so they do not rely heavily on the generated model hypotheses. Thus, there is not a model selection algorithm that has clear advantage.

Deep learning-based methods have gained popularity in various computer vision tasks, including model fitting [24, 25, 26, 27]. These methods offer a significant advantage in learning powerful representations directly from raw data, eliminating the need for hand-crafted features. However, a drawback is their dependency on large amounts of training data, and its sensitivity to outliers, particularly in multi-structural datasets.

2.2 Deterministic Model Fitting Methods

Compared with random model fitting methods whose results often vary, deterministic model fitting methods are able to provide stable solutions and they also can be tractable. Most of currently existing deterministic model fitting methods cast the fitting task as a global optimization problem. Such as, Li [10] and Chin et al. [11] adopted a tailored branch-and-bound scheme and the A* algorithm to search for a globally optimal solution, respectively; Doan et al. [28] and Le et al. [12] introduced a hybrid quantum-classical algorithm and the maximum consensus criterion for deterministic fitting, respectively; Fan et al. [29] discussed two loss functions to seek geometric models.

These above deterministic methods only work for single-structural data and they also often suffer from high computational complexity. Xiao et al. [9, 13] used the superpixel information and the min-hash technique to propose two heuristic methods for multiple-structural deterministic model fitting. However, they only work for two-view fitting problem due to the information of correspondences they depend on. That is, they cannot be used for general fitting problems, e.g., line fitting and circle fitting.

This paper also proposes a deterministic method, but, it not only can work for multiple-structural data, but also has not the limitation of two-view model fitting. That is, this paper proposes a deterministic methods by preserving latent semantic consensus for general multiple-structural model fitting problems.

It is important to note that CLSA [2] and the proposed method (LSC) both utilize latent semantic analysis to tackle model fitting problems. However, they differ significantly in the following aspects: 1) CLSA employs LSA to eliminate outliers in LSS, whereas LSC preserves latent semantic consensus in LSS for minimal subset sampling. 2) CLSA constructs LSS once, and its effectiveness depends on the input data; in contrast, LSC dynamically updates LSS based on generated model hypotheses, enhancing its effectiveness. 3) CLSA is a random model selection method, whereas LSC follows a deterministic approach for both sampling and model selection. Consequently, LSC provides more stable and superior fitting results compared to CLSA.

3 Proposed Method

In this section, we introduce the details of the Latent Semantic Consensus based model fitting method (called LSC). We first describe the problem formulation of model fitting (Sec. 3.1). Then, we introduce latent semantic for model fitting (Sec. 3.2). After that, we propose the latent semantic consensus based sampling algorithm (Sec. 3.3) and model selection algorithm (Sec. 3.4). Finally, we summarize and analyze LSC in Sec. 3.5.

3.1 Problem Formulation

A geometric model is usually provided beforehand, such as, line, circle, homography matrix, fundamental matrix, etc, for model fitting. Then, given data points , the output of geometric model fitting is the parameter of model instances and the labels of data points. Here, a model instance (or structure) is an instance of the given geometric model, and the labels of data points include inliers belonging to different model instances and outliers.

As aforementioned, a model fitting problem generally contains minimal subsets sampling for model hypothesis generation, and model selection based on the generated model hypotheses. Here, a model hypothesis is a parameter formulation of the given geometric model. For the model of a line, a model hypothesis is , where for a data point ; For the model of a circle, a model hypothesis is , where is the coordinate values of the center of a circle, and is the value of radius, and for a data point ; For the model of a homography matrix, a model hypothesis is matrix in , where for a data point , and and are the image coordinates of the two feature points in the image pair. Of course, the geometric model is not limited to line, circle and homography matrix.

3.2 Latent Semantic Analysis for Model Fitting

In this section, we delve into Latent Semantic Analysis (LSA) [30] as a key component of our deterministic model fitting approach. Drawing inspiration from topic modeling, a widely used technique in uncovering word-use patterns and document connections in word processing [31], LSA proves instrumental. Much like how words within the same topic exhibit similar distributions, our data points and model hypotheses share an analogous relationship to words and documents. In the realm of model fitting, certain data points align with inliers of a model hypothesis, mirroring the association between words and documents in topic modeling. The crux lies in recognizing that some model hypotheses correspond to actual model instances. Just as certain words form parts of a document, specific documents correspond to a broader topic. The parallels between these relationships underscore the efficacy of employing topic modeling principles for our model fitting endeavors.

Among various topic modeling methods [32, 33, 34], we opt for LSA to explore latent semantic information in our deterministic model fitting. Its simplicity, effectiveness, and non-interference with our deterministic approach make it an ideal choice.

To scrutinize the relationship between model hypotheses and data points via LSA, we initiate by constructing a preference matrix [21]. This matrix reflects the relationship between model hypotheses and data points based on the residual value. In essence, for a given data point and a model hypothesis , the preference value is defined as:

| (1) |

where is the Sampson distance [35] (residual value) between and , and is a non-zero threshold. We note that, for a large residual value, the corresponding preference value is small; Conversely, for a small residual value, the corresponding preference value is large.

Then we can construct a preference matrix by Eq. (1) for data points and model hypotheses. Note that, the initial model hypotheses are generated by neighbors of each data point ( is the number of a minimal subset), to preserve the deterministic nature of the proposed method. After that, we introduce LSA to decompose the preference matrix. That is, we decompose by singular value decomposition (SVD) as follows:

| (2) | ||||

i.e.

| (3) |

where and are the orthogonal matrices of left and right singular vectors (here is an identity matrix), respectively. is a diagonal matrix with singular values on the diagonal and the singular values are listed in non-increasing order, i.e., , .

Then, the preference matrix is approximately decomposed by the top singular values in , since the top (even ) of singular values account for over of all singular values, for SVD. i.e.,

| (4) |

Based on Eq. (4), we construct two latent semantic spaces (LSS) for data points and model hypotheses, respectively. For LSS of data points (we call DP-LSS), the points-to-points inner products are written as:

| (5) | ||||

Thus, following LSA, we utilize the rows of as coordinates for DP-LSS. By Eq. (5), data points are project onto LSS, and meanwhile, the dimension of is also reduced to .

For LSS of model hypotheses (we call MH-LSS), the hypotheses-to-hypotheses inner products are written as:

| (6) | ||||

Thus, we adopt the rows of as the coordinates for model hypotheses in MH-LSS. Also, the dimension of is reduced to .

3.3 Latent Semantic Consensus based Sampling Algorithm

In this subsection, we propose a novel latent semantic consensus based sampling algorithm (called LSC-SA). To alleviate the bad influence of outliers, we first remove outliers by analyzing the distribution of the points in DP-LSS.

The points in DP-LSS corresponding to inliers are often situated farther from the origin of coordinates, while other points tend to be closer to the origin, as depicted in Fig. 2. This is attributed to the fact that the value of is influenced by the preference value, and given that inliers typically receive a larger preference value than outliers.

Given a data point and the corresponding projected point in DP-LSS, the distance between and the origin in DP-LSS can be formulated as:

| (7) |

where is the -th row of . Then given the -th row of , i.e., , we can decompose as:

| (8) |

For , the inner products are written as:

| (9) | ||||

For , the inner products are written as:

| (10) | ||||

Therefore, the distance between points and the origin in DP-LSS depends on the preference value. Leveraging the above characteristic allows us to effectively identify and remove outliers. To this end, we introduce information theory [36] to analyze the distance . Specifically, we first measure the gap between and the maximum value in as:

| (12) |

Then, we compute the quantity of information provided by each mapped point :

| (13) |

where represents the probability of to be an outlier.

After that, we remove the points with quantity of information lower than an entropy value and retain the other points:

| (14) |

where is the estimated entropy value.

After that, we integrate latent semantic consensus into DP-LSS to guide the sampling of subsets for model hypothesis generation. Specifically, the value of a point in DP-LSS is derived from the corresponding preference value, fostering similarity among inliers belonging to the same model instance. With the remaining points , we search for (the minimum number of data points to generate a model hypothesis) neighbors for each point. Subsequently, we sample subsets from the input data points, with each subset consisting of neighbors from DP-LSS. In addition, to further refine the sampled subsets, we introduce the model hypothesis updating strategy [9]. This involves leveraging global residual information to mitigate sub-optimal results. The overall process is summarized in the proposed Latent Semantic Consensus-based Sampling Algorithm (LSC-SA), outlined in Algorithm 1.

In Algorithm 1, to select the best model hypotheses in the model hypothesis updating strategy, we assign a model hypothesis a positive weighting value [37]:

| (15) |

where is the estimated inlier noise scale of the -th model hypothesis, and is the bandwidth of the -th model hypothesis, and it is defined as [38]:

| (16) |

For the kernel function , we employ the popular Epanechnikov kernel:

| (19) |

By Eq. (15), a good model hypothesis is assigned a large weight since it includes more inliers with small residual values.

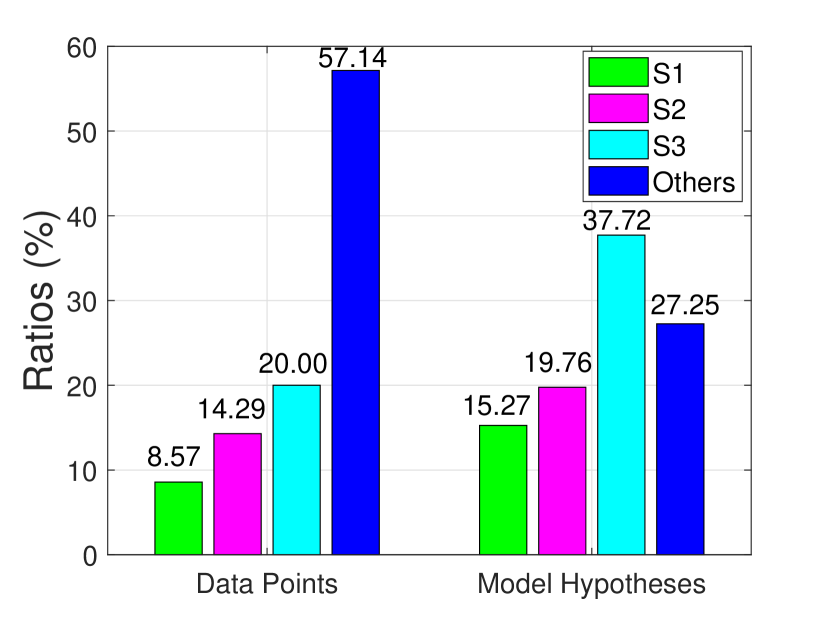

3.4 Latent Semantic Consensus based Model Selection Algorithm

In this subsection, we propose a novel latent semantic consensus based model selection algorithm (LSC-MSA). To mitigate the sensitivity on the distribution of input data points, we analyze MH-LSS for model selection. In the space of generated model hypotheses, the disparities in proportions between different categories are not as pronounced as in the data point space. As illustrated in the example in Fig. 1 (b), the smallest and largest ratios for different categories of data points are and , respectively, while the corresponding ratios for different categories of model hypotheses are and , respectively.

Firstly, we remove bad model hypotheses by the same manner as Eq. (14). This is because, MH-LSS includes the similar character as DP-LSS, that the points corresponding to good model hypotheses are often far from the origin of coordinates while the other points are close to the origin. Then, we formulate the model selection problem as a simple subspace recovery problem in MH-LSS based on the remaining model hypotheses .

Note that, in MH-LSS, the points corresponding to the model hypotheses of the same model instances generally belong to the same one-dimensional subspace. We make some discussions for this claim. Given the preference value of a model hypothesis , i.e. , and the corresponding -th row of , i.e. , we rewrite Eq. (6) as:

| (20) | ||||

Then, we can obtain the following equation:

| (21) | ||||

Finally, we obtain the coordinates of in MH-LSS as:

| (22) | ||||

We can see that, the coordinates of in MH-LSS depend on the preference value of to the input data points. Notably, the model hypotheses corresponding to the same model instance share similar preference to data points. Consequently, the projected points in MH-LSS corresponding to these hypotheses will exhibit the same subspace.

Then, we propose to cluster the remaining mapped points () to several independent subspaces for subspace recovery, where each subspace corresponds to a model instance and each cluster of points belongs to the model instance. Theoretically, we can use a normal clustering method to cluster the remaining points in MH-LSS. However, we propose a simple but effective subspace recovery strategy to label the remaining points in MH-LSS. Specifically, we directly estimate several origin-lines in MH-LSS (lines passing through ), using them for data point clustering.

Our goal is to estimate origin-lines that best capture the remaining points in MH-LSS. Notably, the proposed clustering strategy simplifies the intricate task of segmenting high-dimensional data, reducing it to the straightforward process of clustering points by estimating origin-lines in LSS.

For estimating an origin-line, it only requires to sample one point in MH-LSS to generate a model hypothesis of origin-line as the origin-line always passes through the origin of MH-LSS. To ensure with probability that at least one all-inlier minimal subset is sampled, the number of minimal subsets required to sample is computed as:

| (23) |

where denotes the ratio of gross outliers and denotes the number of data points in a minimal subset (here as only one point is needed to estimate an origin-line). In our case, the ratio of gross outliers (i.e. bad model hypotheses) is small since we have removed most of gross outliers. Thus, we only need sample a very small number of the minimal subsets for the origin-line estimation. For example, for and , is approximately equal to .

For the residual value between an origin-line (derived from a sampled point ) and a point , as shown in Fig. 3, we can compute it as follows:

| (24) | ||||

where denotes the standard inner product.

After sampling a small number of model hypotheses, we adopt an integer linear program to estimate the origin-lines due to its effectiveness. The integer linear program is used to search for significant model hypotheses that cover the largest number of inliers of multiple model instances in data. For points in and model hypotheses , we estimate the origin-lines via an integer linear program as [20]:

| (25) | ||||

where and denote the label of a point and a model hypothesis , respectively. If a point (or a model hypothesis ) is selected to be an inlier of the returned model hypothesis, the label (or ); Otherwise, (or ). represents that the point belongs to the inliers of the model hypothesis :

| (26) |

where is a threshold. We set to be a fixed value for different model fitting tasks, and the influence of its value on the proposed method will be discussed in Sec. 4.2.

After that, we can obtain the labels of the remaining model hypotheses, and we select the model hypotheses with the largest weighting scores by Eq. (15) for the same label as the final estimated model instances.

3.5 The Complete Method and Analysis

Based on the components described in the previous sections, we summarize the complete Latent Semantic Consensus based fitting method (called LSC) in Algorithm 2.

The proposed LSC method comprises a novel sampling algorithm and a new model selection algorithm. In essence, LSC not only analyzes the distributions of points in DP-LSS to generate high-quality model hypotheses but also exploits MH-LSS to accurately estimate model instances. Importantly, LSC involves deterministic nature, ensuring consistent and reliable solutions for model fitting.

For the computational complexity of LSC, in Algorithm 1, the neighborhood construction and the latent semantic space constructing take the main time. For the neighborhood construction, the time complexity is close to . For constructing the latent semantic space (i.e. Step 2), we use truncated SVD where the time complexity depends on the number of singular values retained after truncation. Thus, the computational complexity is approximately , where is the dimension of MH-LSS. Step - is based on the results of remaining model hypotheses (recall that we only deal with a very small number of high-quality model hypotheses), and their time complexity is close to . Therefore, the total complexity of LSC approximately amounts to .

4 Experiments

In this section, we investigate the performance of the proposed LSC fitting scheme on synthetic data and real images for model fitting tasks, including line fitting, circle fitting, homograhy/fundamental matrix estimation and motion segmentation. All experiments are run on MS Windows with Intel Core i- CPU and RAM in Matlab.

4.1 Dataset Setting and Evaluation Metrics

For synthetic data, we use all four datasets from [2] for line fitting, and the datasets from [1] for circle fitting.

For real images, we test all competing methods for single- and multiple-structural data. For multiple-structural data, we adopt all image pairs from the AdelaideRMF dataset [39] for homography and fundamental matrix estimation and all videos from [40] for motion segmentation. For single-structural data, we uses image pairs to construct a dataset (called MS-COCO-F) from the MS-COCO images [41] for homography estimation and image pairs to construct a dataset (called YFCC100M-F) from the YFCC100M dataset [42] for the fundamental matrix estimation task.

To evaluate the performance of a model fitting method, we follow most of model fitting methods to compute the segmentation error (SE) as follows [16]:

| (27) |

4.2 Parameter Analysis and Settings

There are two parameters for LSC, i.e., the threshold in Eq. (1) and the threshold in Eq. (26). To verify the influence of different values of parameters on the performance of LSC, we randomly select image pairs from the MS-COCO-F dataset for single-structural homography estimation, and image pairs from the YFCC100M-F dataset for single-structural fundamental matrix estimation, and all image pairs from the AdelaideRMF dataset for multiple-structural tasks.

For the threshold , we report the quantitative results (the mean and standard deviation values) of SE and the CPU Time obtained by LSC with different values of (-) for the task of homography and fundamental matrix estimation in Fig. 4. Note that, the mean and standard deviation values are obtained by LSC on different image pairs for each . We can see that, for homography estimation, LSC achieves stable values of SE with different values of on the MS-COCO-F dataset, but it obtains different values of SE on the AdelaideRMF datasets due to the influence of multiple structures. The CPU time used by LSC has not obvious change with different values of on the both datasets. We also find that LSC obtains the lowest values of SE when on the AdelaideRMF datasets. Thus, we set for homography estimation in the following experiments. For fundamental matrix estimation, LSC also achieves the same performance on the datasets with single and multiple structures as homography estimation, and it achieves the lowest values of SE when on the AdelaideRMF datasets. Thus, we set for fundamental matrix estimation in the following experiments. For the other tasks, we do not change the values of and set .

For the threshold , we report the quantitative results (the mean and standard deviation values) of SE and the CPU Time obtained by LSC with different values of (-) for the task of homography and fundamental matrix estimation in Fig. 5. Note that, we only test this experiment on the AdelaideRMF datasets since only affects the data with multiple structures. Recall that, we perform the model selection in the latent semantic space and regress all model fitting tasks to the subspace recovery problem, thus, we can set the same values of for all model fitting tasks. From Fig. 5, LSC obtains the lowest values of SE for homography and fundamental matrix estimation when . Thus, in the following experiments, we set .

4.3 Results on Synthetic Data

In this section, we compare the proposed LSC with several state-of-the-art model fitting methods, including MSHF [1], RansaCov [20], T-linkage [21], RPA [19], Multi-link [22], CBG [17] and CLSA [2], for line and circle fitting on synthetic data.

(a) 3 lines

(b) 4 lines

(c) 5 lines

(d) 6 lines

| Data | MSHF | RansaCov | T-linkage | RPA | Multi-link | CBG | CLSA | LSC | |

|---|---|---|---|---|---|---|---|---|---|

| 3 lines | 4.74 | 3.25 | 3.42 | 4.62 | 3.70 | 2.83 | 2.21 | 1.00 | |

| 2.89 | 0.09 | 1.17 | 4.15 | 1.10 | 0.19 | 1.08 | - | ||

| 1.96 | 2.88 | 88.94 | 175.31 | 2.26 | 2.85 | 1.09 | 0.86 | ||

| 6.31 | 2.72 | 9.97 | 2.77 | 6.42 | 3.12 | 2.28 | 2.63 | ||

| 4.87 | 0.81 | 8.19 | 0.63 | 6.75 | 0.32 | 0.49 | - | ||

| 4 lines | 2.12 | 3.46 | 119.12 | 222.11 | 2.49 | 3.40 | 1.23 | 0.91 | |

| 5 lines | 2.71 | 2.50 | 10.67 | 3.16 | 2.94 | 5.33 | 2.41 | 1.33 | |

| 1.18 | 0.15 | 11.18 | 1.76 | 3.85 | 0.53 | 1.38 | - | ||

| 2.11 | 3.51 | 154.61 | 360.55 | 2.84 | 3.10 | 1.42 | 1.23 | ||

| 10.78 | 8.31 | 35.17 | 40.60 | 18.02 | 6.76 | 4.62 | 3.70 | ||

| 3.89 | 1.68 | 9.92 | 8.54 | 7.55 | 0.42 | 2.14 | - | ||

| 6 lines | 2.16 | 4.37 | 193.74 | 430.03 | 3.22 | 3.84 | 1.64 | 1.35 |

4.3.1 Line Fitting

We evaluate the performance of the eight fitting methods for line fitting on four challenging synthetic datasets (see Fig. 6). We repeat each experiment times except for our proposed LSC due to its deterministic nature. We report the mean and standard deviation of SE obtained by the eight fitting methods, and the average CPU time (in seconds) used by the competing methods in Table I.

In Fig. 6 and Table I, most of competing methods are able to achieve good fitting performance, and the proposed LSC achieves the lowest SE and it also is the fastest one among all eight competing methods. The four synthetic datasets involve different challenges, e.g., the “ line” data includes cross data points, the “ line” data includes data points with different distributions, the “ line” data includes the both challenges and the “ line” data is most challenging due to its complex distributions. All eight competing methods achieve low SE on the top two data, and they also achieve the similar performance on the “ line” data expect for T-linkage. This is because that T-linkage automatically estimates the number of model instances in data, and it is hard to do that for complex distributions. For the “ line” data, the values of SE obtained by only CLSA and LSC are lower than .

For the standard deviation performance, the proposed LSC show significant superiority over the other competing methods due to its deterministic nature. For the CPU time, LSC also has advantages since it only handles a small number of high-quality model hypotheses.

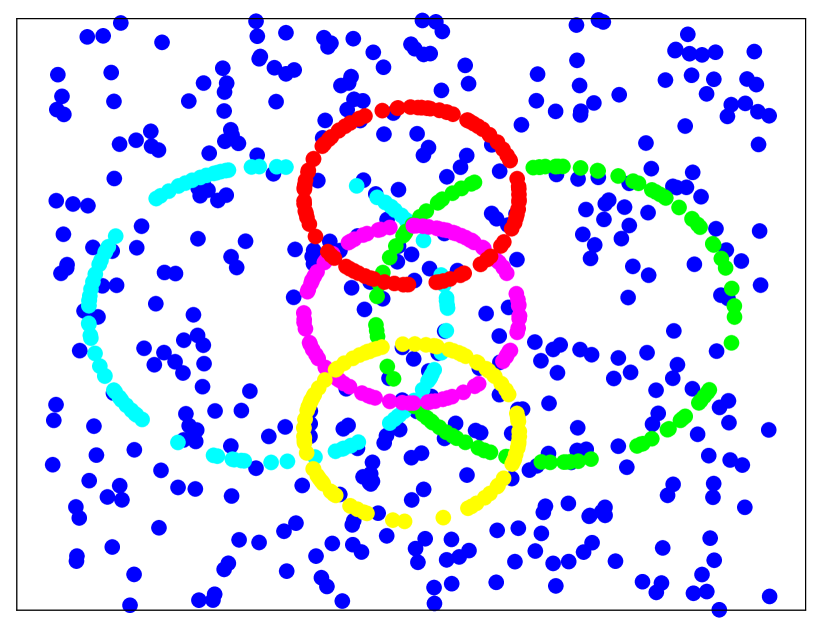

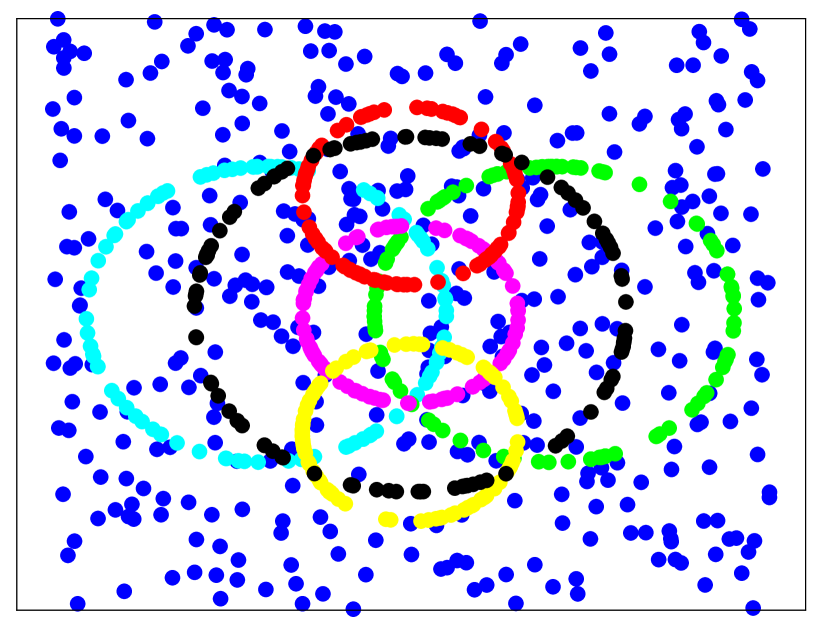

4.3.2 Circle Fitting

(a) 3 circles

(b) 4 circles

(c) 5 circles

(d) 6 circles

| Data | MSHF | RansaCov | T-linkage | RPA | Multi-link | CBG | CLSA | LSC | |

|---|---|---|---|---|---|---|---|---|---|

| 3 circles | 16.84 | 0.52 | 1.53 | 0.58 | 18.714 | 1.95 | 8.35 | 0.71 | |

| 8.59 | 0.07 | 3.91 | 0.08 | 8.179 | 0.52 | 3.06 | - | ||

| 1.66 | 2.56 | 58.44 | 45.46 | 2.26 | 7.80 | 1.37 | 0.63 | ||

| 20.55 | 2.67 | 21.08 | 5.80 | 8.85 | 1.61 | 38.55 | 1.25 | ||

| 9.53 | 0.65 | 8.96 | 6.76 | 5.92 | 0.10 | 2.77 | - | ||

| 4 circles | 2.10 | 3.35 | 89.42 | 74.67 | 2.24 | 3.40 | 5.85 | 0.76 | |

| 5 circles | 10.64 | 0.23 | 7.95 | 0.31 | 25.84 | 1.17 | 10.62 | 0.33 | |

| 7.27 | 0.03 | 7.47 | 0.10 | 6.29 | 0.13 | 7.31 | - | ||

| 1.91 | 2.96 | 100.86 | 85.12 | 2.67 | 5.60 | 1.55 | 0.98 | ||

| 17.81 | 1.99 | 19.47 | 6.68 | 30.32 | 1.94 | 31.55 | 1.00 | ||

| 6.12 | 2.21 | 10.85 | 5.12 | 3.94 | 0.17 | 7.58 | - | ||

| 6 circles | 2.07 | 3.30 | 129.12 | 117.40 | 3.49 | 5.87 | 1.68 | 1.11 |

We perform the same setting as line fitting for circle fitting (see Fig. 7), and report quantitative comparison results obtained by the eight fitting methods in Table II.

From Fig. 7 and Table II, we can see that circle fitting is more challenging that line fitting, and only RansaCov, RPA, CBG and LSC are able to achieve values of SE on all the four datasets. Note that, CLSA is able to achieve good performance for line fitting but bad performance for circle fitting because the generated model hypotheses include more bad ones and CLSA is a model selection method and its performance depends on the quality of model hypotheses. In contrast, LSC not only generates high-quality model hypotheses, but also effectively estimates model instances in data. For the standard deviation performance and the CPU time, LSC shows the same advantages as line fitting for circle fitting.

4.4 Results on Real Images

In this subsection, we evaluate the performance of LSC on all image pairs from the AdelaideRMF dataset with data for two popular fitting tasks, i.e., homography and fundamental matrix estimation. For the competing methods, we add more fitting methods, i.e., RCMSA [43], Prog-X [44], SDF [9] and LGF [13], since they can deal with two-view fitting methods. To show the effectiveness of the proposed LSC, we also test it on two large datasets (i.e., the MS-COCO-F and YFCC100M-F datasets). We compare it with two deterministic fitting methods (i.e., SDF and LGF), and we run RANSAC as a baseline. In addition, we run two deep learning based methods (i.e., OANet [45] and ConvMatch [46]).

4.4.1 Homograhy Estimation

(a) Barrsmith

(d) Neem

(b) Hartley

(e) Elderhallb

(c) Napiera

(f) Johnsona

We show some challenging multiple-structural cases obtained by LSC in Fig. 8, and report quantitative comparisons of eleven fitting methods for homography estimation on the AdelaideRMF dataset in Fig. 9.

In Fig. 9, three deterministic fitting methods (i.e., SDF, LGF and LSC) achieve better performance on SE over the other competing methods. That is, the values of SE only obtained by SDF, LGF and LSC are below . This can show the effectiveness of deterministic fitting methods. For the CPU time, the proposed LSC is the fastest among all competing methods, and it is only one method within one second. From Fig. 8, it is hard to handle the multiple-structural data. For example, there is a model instance with a few correspondences and a model instance with correspondences from two different parts on the “Barrsmith” image pair; Correspondences from two different model instances are close on the “Elderhallb” image pair; The distributions of correspondences from different model instances are totally different on the “Napiera” and “Neem” image pairs; The number of correspondences from different model instances are unbalanced on the “Hartley” and “Johnsona” image pairs. Even so, the proposed LSC is able to successfully estimate all model instances in data, and achieves the best performance on both SE and Time among all competing methods.

We report quantitative comparisons of six fitting methods for homography estimation on the MS-COCO-F dataset in Fig. 10. Two deep learning based methods achieve large values of SE, especially for OANet. This is because we directly use the best model trained by the authors to test, and this is able to evaluate the generation of a deep learning method. RANSAC, SDF, LGF and LSC are unsupervised methods, and LSC achieves the lowest value of SE and it also uses the least time.

(a) Breadcubechips

(d) Breadcartoychips

(b) Carchipscube

(e) Dinobooks

(c) Breadtoycar

(f) Boardgame

4.4.2 Fundamental Matrix Estimation

We show some challenging multiple-structural cases obtained by LSC in Fig. 11 and report quantitative comparisons of eleven fitting methods for fundamental matrix estimation on the AdelaideRMF dataset in Fig. 12.

In Fig. 12, some fitting methods, e.g., RansaCov, MSHF, RCMSA, CLSA, SDF and LGF, achieve small values of SE. But, LSC is able to improve the state-of-the-art performance on SE, and achieves the lowest values of SE among all eleven fitting methods due to the effectiveness of LSC-SA and LSC-MSA algorithms. In addition, LSC is the fastest one among all competing methods due to the small number of high-quality model hypotheses. From Fig. 11, the proposed LSC successfully estimates all model instances in data. Note that, some correspondences are wrongly labeled on some image pairs because different model instances involves different inlier noise scales and it is hard to know the scales in advance.

We report quantitative comparisons of six fitting methods for fundamental matrix estimation on the YFCC100M-F dataset in Fig. 13. All competing methods do not achieve low values of SE, especially for two deep learning based methods (i.e. OANet and ConvMatch) whose values of SE are over . Because the YFCC100M-F dataset involves many challenging cases, e.g., complex background, multiple scales of images and different distributions of correspondences. Even so, LSC achieves the best performance on both SE and Time among all six fitting methods.

(a) 1RT2TC

(b) Cars6

(c) Truck

(d) People2

| Method | SSC [47] | LRR [48] | MTPV [49] | RSIM [50] | MSSC [51] | Subset [52] | LSC |

| 2 motions: 120 sequences | |||||||

| Mean | 1.52 | 1.33 | 1.57 | 0.78 | 0.54 | 0.23 | 0.59 |

| Median | 0.00 | 0.00 | - | 0.00 | 0.00 | 0.00 | 0.00 |

| 3 motions: 35 sequences | |||||||

| Mean | 4.40 | 2.51 | 4.98 | 1.77 | 1.84 | 0.58 | 1.10 |

| Median | 0.56 | 0.00 | - | 0.28 | 0.30 | 0.00 | 0.18 |

| All: 155 sequences | |||||||

| Mean | 2.18 | 1.59 | 2.34 | 1.01 | 0.83 | 0.31 | 0.71 |

| Median | 0.00 | 0.00 | - | 0.00 | 0.00 | 0.00 | 0.00 |

4.4.3 Motion Segmentation

In this subsection, we evaluate the performance of the proposed LSC on the popular dataset, i.e., [40], for the task of motion segmentation, and we compare it with several state-of-the-art motion trajectory segmentation methods, including: SSC [47], LRR [48], MTPV [49], RSIM [50], MSSC [51] and Subset [52]. We report the segmentation errors (in percentage) obtained by all the seven competing methods in Table III, and show some motion trajectory segmentation results obtained by the proposed LSC in Fig. 14. We also report the CPU time used by Subset and LSC on the three datasets in Table IV.

In Fig. 14 and Table III, three model fitting based methods (i.e. MSSC, Subset and LSC) achieve lower values of SE than the other subspace based methods. Subset achieves a little better performance on SE than MSSC and LSC, because Subset samples minimal subsets from three kinds of model, i.e., affine, homography and fundamental matrix, while MSSC and LSC only use the information of homography. For MSSC that samples minimal subsets by RANSAC, LSC uses LSC-SA to do that. LSC achieves better performance than MSSC, and this can show the effectiveness of LSC-SA. In Table IV, LSC is much faster than Subset (about times faster than Subset).

(a) Input images

(b) Epipolar inliers

(c) Camera pose

| Method | RANSAC | LGF | LSC | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Sequence | #Image | TE | MOE | TIME | TE | MOE | TIME | TE | MOE | TIME |

| 00 | 4541 | 1.42 | 1.30 | 1.07 | 1.27 | 1.61 | 0.71 | 1.04 | 1.57 | 0.33 |

| 01 | 1101 | 3.06 | 1.87 | 2.04 | 3.10 | 3.08 | 0.62 | 2.42 | 1.71 | 0.36 |

| 02 | 4661 | 4.01 | 3.80 | 3.51 | 2.49 | 3.28 | 0.52 | 2.24 | 2.67 | 0.40 |

| 03 | 801 | 0.28 | 0.24 | 3.92 | 0.29 | 0.25 | 1.87 | 0.27 | 0.19 | 0.70 |

| 04 | 271 | 1.20 | 1.14 | 1.49 | 1.65 | 1.58 | 0.92 | 0.97 | 0.91 | 0.44 |

| 05 | 2761 | 1.35 | 1.53 | 2.44 | 0.85 | 1.27 | 1.54 | 0.86 | 0.90 | 0.40 |

| 06 | 1101 | 1.09 | 1.08 | 1.26 | 0.34 | 0.42 | 0.94 | 0.14 | 0.15 | 0.45 |

| 07 | 1101 | 1.02 | 0.98 | 2.66 | 0.67 | 1.28 | 2.51 | 0.44 | 0.48 | 0.46 |

| 08 | 4071 | 1.77 | 2.44 | 2.91 | 3.42 | 2.99 | 0.85 | 1.58 | 1.69 | 0.36 |

| 09 | 1591 | 3.43 | 6.20 | 2.48 | 2.82 | 4.55 | 0.37 | 2.19 | 2.69 | 0.26 |

| 10 | 1201 | 1.53 | 1.80 | 2.40 | 0.83 | 1.80 | 1.17 | 0.70 | 1.34 | 0.32 |

4.4.4 Camera Pose Estimation

In this subsection, we evaluate the performance of the proposed LSC on the popular dataset, i.e., [53], for the task of 6-DoF camera pose estimation. We compare LSC with the deterministic method LGF, and we also use RANSAC as baseline. We report the mean value of the translation error (in meters), maximum orientation error (in degrees) and the CPU time obtained by the three competing methods on each sequence in Table V, and we also show some camera pose results obtained by the proposed LSC in Fig. 15.

4.4.5 Image Registration

(a)

(b)

(c)

| Method | RMSE | MAE | MEE | Time (s) |

|---|---|---|---|---|

| RANSAC | 22.43 | 81.27 | 24.67 | 1.76 |

| LGF | 16.00 | 72.25 | 1.22 | 13.78 |

| LSC | 5.53 | 25.95 | 0.00 | 1.02 |

In this subsection, we evaluate the performance of the proposed LSC on remote sensing dataset [54], for the task of image registration that aims to estimate the transformation model to align source images with target images with the maximum extent. We compare LSC with the deterministic method LGF, and we also use RANSAC as baseline. We show some remote sensing image registration results obtained by the proposed LSC in Fig. 16. In Table VI, we report the mean value of the root mean square error (RMSE), maximum error (MAE), median error (MEE) and the CPU time obtained by the three competing methods [54]:

| (28) |

| (29) |

| (30) |

where and are -th pixel coordinates in source and target images, respectively. and are the number of coordinates and the transformation derived from the source and target image. denotes the Euclidean norm of vectors.

From Fig. 16 and Table VI, we can see that LSC is able to obtain good performance for image registration. For the all four evaluation metrics, LSC show obvious advantages over RANSAC and LGF. Note that, LGF is slower than RANSAC, since LGF includes a hash function that often tasks much time in complex scenes.

5 Ablation study

5.1 The impact of sampling algorithms

(a) Total

(b) Ratio

A sampling algorithm is very important for the final fitting performance, thus, we further discuss the sampling algorithm in this section. Specifically, we provide a quantitative comparison on all image pairs from the AdelaideRMF dataset with the ten state-of-the-art sampling methods, including RANSAC, NAPSAC [55], LO-RANSAC [15], G-MLESAC [56], PROSAC [14], Multi-GS [7], RCMSA [43], CBS [8], SDF [9] and LGF [13].

We report the total number of sampled minimal subsets and the ratio of all-inlier minimal subsets on the sampled minimal subsets (called the inlier-subset ratio) obtained by the competing methods within one second in Fig. 17. We can see that, most of random sampling methods achieve a large number of minimal subsets within one second, while three deterministic fitting methods (i.e., SDF, LGF and LSC-SA) only sample a few hundred subsets. This is because most of random sampling methods try to cover all model instances in data by increasing the number of subsets, in contrast, deterministic sampling methods often adopt a heuristic strategy to improve the quality of subsets while reducing the subsets including outliers. Thus, the inlier-subset ratios obtained by deterministic sampling methods are significantly higher than the ones obtained by random sampling methods.

For deterministic sampling methods, LSC-SA shows obvious advantages over SDF and LGF, that is, LSC-SA not only samples the most number of subsets among the three deterministic sampling methods, but also achieves the largest inlier-subset ratio.

5.2 The impact of initialization sampling density

To evaluate the impact of initialization sampling density on the proposed method, we test different numbers of initialization sampling subsets for the task of homography estimation and fundamental matrix estimation on the MS-COCO-F and YFCC100M-F dataset, respectively. We set the sampling number from to , increasing by each time, where represents the number of input correspondences. We report the quantitative results in Fig. 18.

We can see that, on the MS-COCO-F dataset, LSC achieves a relatively stable mean value of SE when the sampling number exceeds . On the YFCC100M-F dataset, there is no significant fluctuation in SE at different sampling numbers. In terms of the CPU time, LSC also exhibits no significant variation. Thus, LSC is not sensitive to sampling initialization across different densities.

5.3 The impact of model selection strategy

The proposed LSC includes an important components, i.e. LSC-MSA for model selection. To evaluate the impact of LSC-MSA, we test a new version LSC-DBSCAN that uses DBSCAN [57] to cluster the remaining points in MH-LSS for model selection. We perform LSC-MSA and LSC-DBSCAN on all image pairs of the AdelaideRMF dataset for the task of homography estimation and fundamental matrix estimation, and report SE in Fig. 19.

We can see that, for the homography estimation, LSC-MSA and LSC-DBSCAN are able to obtain good performance on out of the image pairs. LSC-MS still achieves low SE on the remaining four image pairs, but LSC-DBSCAN fails. For the fundamental matrix estimation, LSC-MSA and LSC-DBSCAN achieves the same values of SE on out of the image pairs, and LSC-MSA achieves lower values of SE than LSC-DBSCAN on all the remaining nine image pairs. This can show the effectiveness of LSC-MSA on the model selection for model fitting.

6 Conclusions

In this paper, we propose LSC, a deterministic fitting method designed for general multi-structural model fitting problems. LSC provides consistent and reliable solutions compared to many existing methods. Unlike current deterministic approaches, LSC handles both multi-structural data and general model fitting problems, such as line and circle fitting. Specifically, LSC leverages two latent semantic spaces of data points and model hypotheses, preserving consensus to remove outliers, generate high-quality model hypotheses, and effectively estimate model instances. Experimental results on both synthetic data and real images demonstrate that LSC outperforms several state-of-the-art model fitting methods in terms of accuracy and speed.

While LSC demonstrates commendable fitting performance, its model selection algorithm may encounter challenges when the number of models significantly increases, especially in complex fitting tasks. This challenge primarily arises from the uneven distribution of model hypotheses across various models in the input data. As the number of models escalates, this existing imbalance becomes more pronounced, posing difficulties for most fitting methods to identify models with fewer model assumptions. Moreover, optimal results often require adjusting parameter settings for various fitting tasks, driven by distinctions in data characteristics, noise levels and task complexities. These issues will be the focus of our ongoing research efforts.

7 Acknowledgment

This work was supported by the National Natural Science Foundation of China under Grants 62125201, 62072223 and 62020106007.

References

- [1] H. Wang, G. Xiao, Y. Yan, and D. Suter, “Searching for representative modes on hypergraphs for robust geometric model fitting,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 41, no. 3, pp. 697–711, 2019.

- [2] G. Xiao, H. Wang, J. Ma, and D. Suter, “Segmentation by continuous latent semantic analysis for multi-structure model fitting,” Int. J. Comput. Vis., pp. 1–23, 2021.

- [3] J.-K. Lee and K.-J. Yoon, “Joint estimation of camera orientation and vanishing points from an image sequence in a non-manhattan world,” Int. J. Comput. Vis., vol. 127, no. 10, pp. 1426–1442, 2019.

- [4] J. Ma, X. Jiang, A. Fan, J. Jiang, and J. Yan, “Image matching from handcrafted to deep features: A survey,” Int. J. Comput. Vis., vol. 129, no. 1, pp. 23–79, 2021.

- [5] K. Joo, H. Li, T.-H. Oh, and I. S. Kweon, “Robust and efficient estimation of relative pose for cameras on selfie sticks,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 44, no. 9, pp. 5460 – 5471, 2022.

- [6] M. A. Fischler and R. C. Bolles, “Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography,” Comm. ACM, vol. 24, no. 6, pp. 381–395, 1981.

- [7] T.-J. Chin, J. Yu, and D. Suter, “Accelerated hypothesis generation for multistructure data via preference analysis,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 34, no. 4, pp. 625–638, 2012.

- [8] R. Tennakoon, A. Sadri, R. Hoseinnezhad, and A. Bab-Hadiashar, “Effective sampling: Fast segmentation using robust geometric model fitting,” IEEE Trans. Image Process., vol. 27, no. 9, pp. 4182–4194, 2018.

- [9] G. Xiao, H. Wang, Y. Yan, and D. Suter, “Superpixel-guided two-view deterministic geometric fitting,” Int. J. Comput. Vis., vol. 127, no. 4, pp. 323–339, 2019.

- [10] H. Li, “Consensus set maximization with guaranteed global optimality for robust geometry estimation,” in Proc. IEEE Int. Conf. Comput. Vis., 2009, pp. 1074–1080.

- [11] T.-J. Chin, P. Purkait, A. Eriksson, and D. Suter, “Efficient globally optimal consensus maximisation with tree search,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 39, no. 4, pp. 758–772, 2017.

- [12] H. Le, T.-J. Chin, A. Eriksson, T.-T. Do, and D. Suter, “Deterministic approximate methods for maximum consensus robust fitting,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 43, no. 3, pp. 842 – 857, 2021.

- [13] G. Xiao, J. Ma, S. Wang, and C. Chen, “Deterministic model fitting by local-neighbor preservation and global-residual optimization,” IEEE Trans. Image Process., vol. 29, pp. 8988–9001, 2020.

- [14] O. Chum and J. Matas, “Matching with prosac-progressive sample consensus,” in Proc. IEEE Conf. Comput. Vis. Pattern Recogn., 2005, pp. 220–226.

- [15] O. Chum, J. Matas, and J. Kittler, “Locally optimized ransac,” Pattern Recog., pp. 236–243, 2003.

- [16] R. Tennakoon, A. Bab-Hadiashar, Z. Cao, R. Hoseinnezhad, and D. Suter, “Robust model fitting using higher than minimal subset sampling.” IEEE Trans. Pattern Anal. Mach. Intell., vol. 38, no. 2, pp. 350–362, 2016.

- [17] S. Lin, H. Luo, Y. Yan, G. Xiao, and H. Wang, “Co-clustering on bipartite graphs for robust model fitting,” IEEE Trans. Image Process., vol. 31, pp. 6605–6620, 2022.

- [18] P. Purkait, T.-J. Chin, H. Ackermann, and D. Suter, “Clustering with hypergraphs: The case for large hyperedges,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 39, no. 9, pp. 1697–1711, 2017.

- [19] L. Magri and A. Fusiello, “Multiple structure recovery via robust preference analysis,” Image Vis. Comput., vol. 67, pp. 1–15, 2017.

- [20] ——, “Multiple model fitting as a set coverage problem,” in Proc. IEEE Conf. Comput. Vis. Pattern Recogn., 2016, pp. 3318–3326.

- [21] ——, “T-linkage: A continuous relaxation of j-linkage for multi-model fitting,” in Proc. IEEE Conf. Comput. Vis. Pattern Recogn., 2014, pp. 3954–3961.

- [22] L. Magri, F. Leveni, and G. Boracchi, “Multilink: Multi-class structure recovery via agglomerative clustering and model selection,” in Proc. IEEE Conf. Comput. Vis. Pattern Recogn., 2021, pp. 1853–1862.

- [23] L. Magri and A. Fusiello, “Fitting multiple heterogeneous models by multi-class cascaded t-linkage,” in Proc. IEEE Conf. Comput. Vis. Pattern Recogn., 2019, pp. 7460–7468.

- [24] X. Liu, G. Xiao, R. Chen, and J. Ma, “Pgfnet: Preference-guided filtering network for two-view correspondence learning,” IEEE Trans. Image Process., vol. 32, pp. 1367–1378, 2023.

- [25] S. Chen, G. Xiao, Z. Shi, J. Guo, and J. Ma, “Ssl-net: Sparse semantic learning for identifying reliable correspondences,” Pattern Recog., vol. 146, p. 110039, 2024.

- [26] X. Miao, G. Xiao, S. Wang, and J. Yu, “Bclnet: Bilateral consensus learning for two-view correspondence pruning,” Proc. AAAI Conf. Artif. Intell., pp. 1–8, 2024.

- [27] J. Guo, G. Xiao, S. Wang, and J. Yu, “Graph context transformation learning for progressive correspondence pruning,” Proc. AAAI Conf. Artif. Intell., pp. 1–8, 2024.

- [28] A.-D. Doan, M. Sasdelli, D. Suter, and T.-J. Chin, “A hybrid quantum-classical algorithm for robust fitting,” in Proc. IEEE Conf. Comput. Vis. Pattern Recogn., 2022, pp. 417–427.

- [29] A. Fan, J. Ma, X. Jiang, and H. Ling, “Efficient deterministic search with robust loss functions for geometric model fitting,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 44, no. 11, pp. 8212 – 8229, 2022.

- [30] S. Deerwester, S. T. Dumais, G. W. Furnas, T. K. Landauer, and R. Harshman, “Indexing by latent semantic analysis,” J. Am. Soc. of Information Science, vol. 41, no. 6, pp. 391–407, 1990.

- [31] H. Zhang, B. Chen, Y. Cong, D. Guo, H. Liu, and M. Zhou, “Deep autoencoding topic model with scalable hybrid bayesian inference,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 43, no. 12, pp. 4306–4322, 2021.

- [32] Y. Wang, “Survey on deep multi-modal data analytics: Collaboration, rivalry, and fusion,” ACM Trans. Multimed. Comput. Commun. Appl., vol. 17, no. 1s, pp. 1–25, 2021.

- [33] H. Wang, Y. Wang, Z. Zhang, X. Fu, L. Zhuo, M. Xu, and M. Wang, “Kernelized multiview subspace analysis by self-weighted learning,” IEEE Trans. Multimed., vol. 23, pp. 3828–3840, 2020.

- [34] B. Qian, Y. Wang, R. Hong, and M. Wang, “Adaptive data-free quantization,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 7960–7968.

- [35] P. H. Torr and D. W. Murray, “The development and comparison of robust methods for estimating the fundamental matrix,” Int. J. Comput. Vis., vol. 24, no. 3, pp. 271–300, 1997.

- [36] L. Ferraz, R. Felip, B. Martínez, and X. Binefa, “A density-based data reduction algorithm for robust estimators,” in Proc. Iberian Conf. Pattern Recognit. Image Anal., 2007, pp. 355–362.

- [37] H. Wang, T.-J. Chin, and D. Suter, “Simultaneously fitting and segmenting multiple-structure data with outliers,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 34, no. 6, pp. 1177–1192, 2012.

- [38] M. Wand and M. Jones, “Kernel smoothing,” Chapman and Hall, 1994.

- [39] H. S. Wong, T.-J. Chin, J. Yu, and D. Suter, “Dynamic and hierarchical multi-structure geometric model fitting,” in Proc. IEEE Int. Conf. Comput. Vis., 2011, pp. 1044–1051.

- [40] R. Tron and R. Vidal, “A benchmark for the comparison of 3-d motion segmentation algorithms,” in Proc. IEEE Conf. Comput. Vis. Pattern Recog., 2007, pp. 1–8.

- [41] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in Proc. Eur. Conf. Comput. Vis., 2014, pp. 740–755.

- [42] B. Thomee, D. A. Shamma, G. Friedland, B. Elizalde, K. Ni, D. Poland, D. Borth, and L.-J. Li, “Yfcc100m: The new data in multimedia research,” Commun. ACM, vol. 59, no. 2, pp. 64–73, 2016.

- [43] T.-T. Pham, T.-J. Chin, J. Yu, and D. Suter, “The random cluster model for robust geometric fitting,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 36, no. 8, pp. 1658–1671, 2014.

- [44] D. Barath and J. Matas, “Progressive-X: Efficient, Anytime, Multi-Model Fitting Algorithm,” in Proc. IEEE Conf. Comput. Vis. Pattern Recogn., 2019, pp. 3779–3787.

- [45] J. Zhang, D. Sun, Z. Luo, A. Yao, L. Zhou, T. Shen, Y. Chen, L. Quan, and H. Liao, “Learning two-view correspondences and geometry using order-aware network,” in Proc. IEEE Int. Conf. Comput. Vis., 2019, pp. 5845–5854.

- [46] S. Zhang and J. Ma, “Convmatch: Rethinking network design for two-view correspondence learning,” in Proc. AAAI Conf. Artif. Intell., 2023, pp. 3472–3479.

- [47] E. Elhamifar and R. Vidal, “Sparse subspace clustering: Algorithm, theory, and applications,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 35, no. 11, pp. 2765–2781, 2013.

- [48] G. Liu, Z. Lin, S. Yan, J. Sun, Y. Yu, and Y. Ma, “Robust recovery of subspace structures by low-rank representation,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 35, no. 1, pp. 171–184, 2013.

- [49] Z. Li, J. Guo, L.-F. Cheong, and S. Z. Zhou, “Perspective motion segmentation via collaborative clustering,” in Proc. IEEE Int. Conf. Comput. Vis., 2013, pp. 1369–1376.

- [50] P. Ji, M. Salzmann, and H. Li, “Shape interaction matrix revisited and robustified: Efficient subspace clustering with corrupted and incomplete data,” in Proc. IEEE Int. Conf. Comput. Vis., 2015, pp. 4687–4695.

- [51] T. Lai, H. Wang, Y. Yan, T. J. Chin, and W. L. Zhao, “Motion segmentation via a sparsity constraint,” IEEE Trans. Intell. Transp. Syst., vol. 18, no. 4, pp. 973–983, 2016.

- [52] X. Xu, L.-F. Cheong, and Z. Li, “3d rigid motion segmentation with mixed and unknown number of models,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 43, no. 1, pp. 1–16, 2021.

- [53] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? the kitti vision benchmark suite,” in Proc. IEEE Conf. Comput. Vis. Pattern Recogn., 2012, pp. 3354–3361.

- [54] X. Jiang, J. Ma, A. Fan, H. Xu, G. Lin, T. Lu, and X. Tian, “Robust feature matching for remote sensing image registration via linear adaptive filtering,” IEEE Trans. Geosci. Remote Sens., vol. 59, no. 2, pp. 1577–1591, 2020.

- [55] D. Nasuto and J. B. R. Craddock, “Napsac: High noise, high dimensional robust estimation,” in Proc. Bri. Mach. Vis. Conf., 2002, pp. 458–467.

- [56] B. J. Tordoff and D. W. Murray, “Guided-mlesac: faster image transform estimation by using matching priors,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 27, no. 10, pp. 1523–1535, 2005.

- [57] M. Ester, H.-P. Kriegel, J. Sander, and X. Xu, “A density-based algorithm for discovering clusters in large spatial databases with noise,” IEEE Trans. Knowledge and Data Eng., pp. 226–231, 1996.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/79ad2044-a1aa-4c64-8321-b3c252d4e956/guobaoxiao.jpg) |

Guobao Xiao (Senior Member, IEEE) received the Ph.D. degree from Xiamen University, China. He is currently a Tenured Professor at Tongji University, China. He has published over papers in journals and conferences including IEEE TPAMI/TIP, IJCV, ICCV, ECCV, etc. His research interests include machine learning, computer vision and pattern recognition. He has been awarded the best PhD thesis award in China Society of Image and Graphics (a total of ten winners in China). He also served on the program committee (PC) of CVPR, ICCV, ECCV, etc. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/79ad2044-a1aa-4c64-8321-b3c252d4e956/junyu.jpg) |

Jun Yu (Senior Member, IEEE) received the B.Eng. and Ph.D. degrees from Zhejiang University, China. He is currently a Professor at Harbin Institute of Technology (Shenzhen), China. He has authored or co-authored more than 100 scientific articles. His research interests have included multimedia analysis, machine learning, and image processing. He has served as a program committee member for top conferences including CVPR, ACM MM, AAAI, IJCAI, and has served as associate editors for prestigious journals including IEEE Trans. CSVT and Pattern Recognition. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/79ad2044-a1aa-4c64-8321-b3c252d4e956/x94.png) |

Jiayi Ma (Senior Member, IEEE) received the B.S. and Ph.D. degree from the Huazhong University of Science and Technology, Wuhan, China. He is currently a Professor at Wuhan University. He has authored or co-authored more than 200 refereed journal and conference papers, including IEEE TPAMI/TIP, IJCV, CVPR, ICCV, ECCV, etc. He has been identified in the 2019-2022 Highly Cited Researcher lists from the Web of Science Group. He is an Area Editor of Information Fusion. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/79ad2044-a1aa-4c64-8321-b3c252d4e956/dengpingfan.jpg) |

Deng-Ping Fan (Senior Member, IEEE) received his PhD degree from the Nankai University in 2019. He joined Inception Institute of AI in 2019. He has published about top journal and conference papers such as TPAMI, CVPR, ICCV, ECCV, etc. His research interests include computer vision and visual attention, especially on Camouflaged Object Detection. He won the Best Paper Finalist Award at IEEE CVPR 2019, the Best Paper Award Nominee at IEEE CVPR 2020. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/79ad2044-a1aa-4c64-8321-b3c252d4e956/shaoling.jpg) |

Ling Shao (Fellow, IEEE)is a Distinguished Professor with the UCAS-Terminus AI Lab, University of Chinese Academy of Sciences, Beijing, China. He was the founding CEO and Chief Scientist of the Inception Institute of Artificial Intelligence, Abu Dhabi, UAE. He was also the Initiator, founding Provost and EVP of MBZUAI, UAE. His research interests include generative AI, vision and language, and AI for healthcare. He is a fellow of the IEEE, the IAPR, the BCS and the IET. |