Large Order-Invariant Bayesian VARs with Stochastic Volatility

We would like to thank Chenghan Hou for many helpful comments.

Abstract

Many popular specifications for Vector Autoregressions (VARs) with multivariate stochastic volatility are not invariant to the way the variables are ordered, because they use a Cholesky decomposition of the error covariance matrix. We show that the order invariance problem in existing approaches is likely to become more serious in large VARs. We propose the use of a specification which avoids this Cholesky decomposition. We show that the presence of multivariate stochastic volatility allows for identification of the proposed model and prove that it is invariant to ordering. We develop a Markov Chain Monte Carlo algorithm which allows for Bayesian estimation and prediction. In exercises involving artificial and real macroeconomic data, we demonstrate that the choice of variable ordering can have non-negligible effects on empirical results. In a macroeconomic forecasting exercise involving VARs with 20 variables, we find that our order-invariant approach leads to the best forecasts and that some choices of variable ordering can lead to poor forecasts using a conventional, non-order-invariant approach.

Keywords: large vector autoregression, order invariance, stochastic volatility, shrinkage prior

JEL classifications: C11, C52, C55

1 Introduction

Vector Autoregressions (VARs) are one of the most popular models in modern macroeconomics. In recent years, two prominent developments have occurred in the Bayesian VAR literature. First, beginning with Banbura, Giannone, and Reichlin (2010), researchers have been working with large VARs involving dozens or even hundreds of dependent variables. Second, there is an increasing recognition, in most macroeconomic datasets, of the need to allow for time variation in VAR error variances; see, among many others, Clark (2011). Both of these developments have led to VAR specifications which are not invariant to the way the variables are ordered in the VAR. This does not matter in the case where a logical ordering of the variables suggests itself (e.g., as in some structural VARs) or when the number of variables in the VAR is very small, since the researcher can then consider all possible ways of ordering the variables. But with a large number of variables it is unlikely that a logical ordering suggests itself, and it is not practical to consider every possible ordering (with $n$ variables there are $n!$ of them). These considerations motivate the present paper. In it we describe an alternative VAR specification with stochastic volatility (SV) and prove that it is invariant to the way the variables are ordered. We develop a computationally efficient Markov Chain Monte Carlo (MCMC) algorithm which is scalable and allows for Bayesian estimation and forecasting even in high dimensional VARs. We carry out empirical work with artificial and real data which demonstrates the consequences of a lack of order invariance in conventional approaches and shows that our approach does not suffer from them.

There are two basic insights that underlie our approach. The first is that it is the use of a Cholesky decomposition of the error covariance matrix that leads to order dependence. Beginning with influential early VAR-SV papers such as Cogley and Sargent (2005) and Primiceri (2005), this Cholesky decomposition has been used in numerous papers. The second is that SV can be used to identify structural VARs, see Bertsche and Braun (2020). We adopt a VAR-SV specification that avoids the use of the Cholesky decomposition. If we were working with a homoscedastic VAR, our model would be unidentified. But we let the SV achieve identification. In theory we prove order invariance and, in practice, we show that it works well.

The remainder of this paper is organized as follows. The next section discusses ordering issues in VAR-SVs. The third section introduces our multivariate SV process and proves that it is identified and order invariant. The fourth section adds the VAR component to the model and discusses Bayesian estimation of the resulting order-invariant VAR-SV. The fifth section uses artificial data to illustrate the performance of our model in terms of accuracy and computation time. The sixth section investigates the practical consequences of using the non-order invariant approach of Cogley and Sargent (2005) using artificial and real macroeconomic data. Finally, section seven carries out a forecasting exercise which demonstrates that our order-invariant approach forecasts well, but that forecasts produced by the model of Cogley and Sargent (2005) are sensitive to the way that the variables are ordered and some ordering choices can lead to poor forecast performance.

2 Ordering Issues in VAR-SVs

Ordering issues in VARs relate to the error covariance matrix, not the VAR coefficients themselves. For instance, Carriero, Clark, and Marcellino (2019) use a triangular VAR-SV which involves a Cholesky decomposition of the error covariance matrix, $\boldsymbol{\Sigma}_t$. They demonstrate that, conditional on $\boldsymbol{\Sigma}_t$, the draws of the VAR coefficients are invariant to ordering.¹ Such invariance to ordering of the VAR coefficients holds for all the models discussed in the paper. Accordingly, in this section and the next we focus on the multivariate stochastic volatility process. We say a model is invariant to ordering if the order of the variables does not affect the posterior distribution of the error covariance matrix, subject to permutations. In other words, if the ordering of variables $i$ and $j$ in the VAR is changed, then posterior inference on the error covariances and variances associated with variables $i$ and $j$ is unaffected, for all $i$ and $j$.

¹ It is worth noting that one major reason for triangularizing the system is to allow for equation-by-equation estimation of the model. This leads to large computational benefits since there is no need to manipulate the (potentially enormous) posterior covariance matrix of the VAR coefficients in a single block. In particular, Carriero, Clark, and Marcellino (2019) show how, relative to full system estimation, triangularisation can reduce the computational complexity of Bayesian estimation of large VARs from $O(n^6)$ to $O(n^4)$, where $n$ is the VAR dimension. This highlights the importance of developing VAR specifications, such as the one in this paper, which allow for equation-by-equation estimation.

Before discussing multivariate stochastic volatility approaches which are not order invariant, it is worthwhile noting that there are some multivariate SV approaches which are order invariant. Wishart or inverse-Wishart processes (e.g., Philipov and Glickman (2006), Asai and McAleer (2009) and Chan, Doucet, León-González, and Strachan (2018)) are by construction invariant to ordering. However, estimation of SV models based on these multivariate processes is computationally intensive as it typically involves drawing from high-dimensional, non-standard distributions. Consequently, these models are generally not applicable to large datasets. In contrast, the common stochastic volatility models considered in Carriero, Clark, and Marcellino (2016) and Chan (2020) are order invariant and can be estimated quickly even for large systems. However, these models are less flexible as the time-varying error covariance matrix depends on a single SV process (e.g., error variances are always proportional to each other). Another order invariant approach is based on the discounted Wishart process (e.g., Uhlig (1997), West and Harrison (2006), Prado and West (2010) and Bognanni (2018)), which admits efficient filtering and smoothing algorithms for estimation. However, this approach appears to be too tightly parameterized for macroeconomic data and it tends to underperform in terms of both point and density forecasts relative to standard SV models such as Cogley and Sargent (2005) and Primiceri (2005); see, for example, Arias, Rubio-Ramirez, and Shin (2021) for a forecast comparison exercise.

Another model that is potentially order invariant is the factor stochastic volatility model, and recently these models have been applied to large datasets. However, factor models have some well-known drawbacks such as mis-specification concerns associated with assuming a high dimensional volatility process is well approximated with a low dimensional set of factors. Kastner (2019) notes that, if one imposes the usual identification restrictions (e.g., factor loadings are triangular), then the model is not order invariant. Kastner (2019) does not impose those restrictions, arguing that identification of the factor loadings is not necessary for many purposes (e.g. forecasting).

The multivariate stochastic volatility specification that is perhaps most commonly used in macroeconomics is that of Cogley and Sargent (2005). The SV specification of the reduced-form errors, the $n$-dimensional vector $\mathbf{u}_t$, in Cogley and Sargent (2005) can be written as

$$\mathbf{u}_t = \mathbf{B}_0^{-1}\boldsymbol{\epsilon}_t, \quad \boldsymbol{\epsilon}_t \sim \mathcal{N}(\mathbf{0}, \mathbf{D}_t), \tag{1}$$

where $\mathbf{B}_0$ is a unit lower triangular matrix with elements $b_{ij}$ and $\mathbf{D}_t = \text{diag}(e^{h_{1,t}}, \ldots, e^{h_{n,t}})$ is a diagonal matrix consisting of the log-volatilities $\mathbf{h}_t = (h_{1,t}, \ldots, h_{n,t})'$. Because $\mathbf{B}_0$ is assumed to be lower triangular, the implied covariance matrix of $\mathbf{u}_t$ naturally depends on the order of the elements in $\mathbf{u}_t$. In particular, one can show that under standard assumptions, the variance of the $i$-th element of $\mathbf{u}_t$, $\text{Var}(u_{i,t})$, increases as $i$ increases. Hence, this ordering issue becomes worse in higher dimensional models. In addition, since the stochastic volatility specification in Primiceri (2005) is based on Cogley and Sargent (2005) but with a time-varying $\mathbf{B}_0$, it has a similar ordering issue.

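To illustrate this key point, we assume for simplicity that $\mathbf{D}_t = \mathbf{I}_n$. We further assume that the priors on the lower triangular elements of $\mathbf{B}_0$ are independent with prior means 0 and variances 1. Since $\mathbf{B}_0\mathbf{u}_t = \boldsymbol{\epsilon}_t$, we can obtain the elements of $\mathbf{u}_t$ recursively and compute their (prior) variances $\text{Var}(u_{i,t})$. In particular, we have

$$u_{1,t} = \epsilon_{1,t}, \qquad u_{i,t} = \epsilon_{i,t} - \sum_{j=1}^{i-1} b_{ij}u_{j,t}, \quad i = 2, \ldots, n.$$

Since $\mathbb{E}(b_{ij}) = 0$ for all $i > j$, and $b_{ij}$ is independent of $\epsilon_{i,t}$ and of $u_{1,t}, \ldots, u_{i-1,t}$, the expectation of any cross-product term in $u_{i,t}^2$ is 0. Hence, we have

$$\text{Var}(u_{i,t}) = 1 + \sum_{j=1}^{i-1}\text{Var}(u_{j,t}) = 2^{i-1}, \quad i = 1, \ldots, n.$$

In other words, the variance of the reduced-form error $u_{i,t}$ increases (exponentially) as it is ordered lower in the $n$-tuple, even though the assumptions about $b_{ij}$ and $\epsilon_{i,t}$ are identical across $i$.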
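The prior-variance calculation above can be checked by Monte Carlo. The following Python sketch (the function name is our own, hypothetical choice) sets $\mathbf{D}_t = \mathbf{I}_n$, draws a fresh unit lower triangular $\mathbf{B}_0$ with independent $\mathcal{N}(0,1)$ below-diagonal elements for every simulated $\mathbf{u}_t$, solves $\mathbf{B}_0\mathbf{u}_t = \boldsymbol{\epsilon}_t$ recursively, and compares the sample variances with $2^{i-1}$. The $\mathcal{N}(0,1)$ prior is only the illustrative assumption used in the text.

```python
import numpy as np


def simulate_cs_errors(n, n_draws, rng):
    """Simulate u_t from B0 u_t = eps_t with D_t = I_n, where B0 is unit lower
    triangular with independent N(0, 1) below-diagonal elements. A new B0 is
    drawn for each simulated u_t, so sample variances estimate the prior
    (marginal) variances Var(u_{i,t}). Column i here is the paper's i + 1."""
    u = np.zeros((n_draws, n))
    eps = rng.standard_normal((n_draws, n))
    for i in range(n):
        b = rng.standard_normal((n_draws, i))  # b_{i,1}, ..., b_{i,i-1}
        # Row i of B0 u_t = eps_t gives u_i = eps_i - sum_{j<i} b_{ij} u_j
        u[:, i] = eps[:, i] - np.sum(b * u[:, :i], axis=1)
    return u


rng = np.random.default_rng(1)
n = 6
u = simulate_cs_errors(n, 400_000, rng)
sample_var = u.var(axis=0)
theory = 2.0 ** np.arange(n)  # 1, 2, 4, 8, 16, 32
print(np.round(sample_var, 2))
print(theory)
```

Conditional on $u_{1,t}, \ldots, u_{i-1,t}$, each $u_{i,t}$ is Gaussian in this setup, so all moments are finite and 400,000 draws are enough for the sample variances to land within a few percent of $2^{i-1}$.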

3 An Order-Invariant Stochastic Volatility Model

3.1 Model Definition

In this section we extend the stochastic volatility specification in Cogley and Sargent (2005) with the goal of constructing an order-invariant model. The key reason why the ordering issue in Cogley and Sargent (2005) occurs is the lower triangular assumption for $\mathbf{B}_0$. A simple solution to this problem is to avoid this assumption and assume that $\mathbf{B}_0$ is an unrestricted square matrix. To that end, let $\mathbf{y}_t$ be an $n \times 1$ vector of variables that is observed over the periods $t = 1, \ldots, T$. To fix ideas, consider the following model with zero conditional mean:

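$$\mathbf{y}_t = \mathbf{B}_0^{-1}\boldsymbol{\epsilon}_t, \quad \boldsymbol{\epsilon}_t \sim \mathcal{N}(\mathbf{0}, \mathbf{D}_t), \tag{2}$$

where $\mathbf{D}_t = \text{diag}(e^{h_{1,t}}, \ldots, e^{h_{n,t}})$ is diagonal. Here we assume that the $n \times n$ matrix $\mathbf{B}_0$ is non-singular, but is otherwise unrestricted. Each of the log-volatilities follows a stationary AR(1) process:

$$h_{i,t} = \phi_i h_{i,t-1} + \eta_{i,t}, \quad \eta_{i,t} \sim \mathcal{N}(0, \sigma_i^2), \tag{3}$$

for $t = 2, \ldots, T$, where $|\phi_i| < 1$ and the initial condition is specified as $h_{i,1} \sim \mathcal{N}(0, \sigma_i^2/(1-\phi_i^2))$. Note that the unconditional mean of the AR(1) process is normalized to be zero for identification. We call this the Order Invariant SV (OI-SV) model.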
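A small simulator for (2)-(3) may help fix ideas. The sketch below uses our own, hypothetical naming; it draws $h_{i,1}$ from the stationary distribution, iterates the AR(1) recursion, and obtains $\mathbf{y}_t$ by solving $\mathbf{B}_0\mathbf{y}_t = \boldsymbol{\epsilon}_t$ rather than forming $\mathbf{B}_0^{-1}$ explicitly. Parameter values are arbitrary.

```python
import numpy as np


def simulate_oi_sv(B0, phi, sigma2, T, rng):
    """Simulate the OI-SV model (2)-(3).

    Returns y and h as T x n arrays whose t-th rows are y_t' and h_t'."""
    n = B0.shape[0]
    h = np.empty((T, n))
    # Stationary initial condition: h_{i,1} ~ N(0, sigma2_i / (1 - phi_i^2))
    h[0] = rng.normal(0.0, np.sqrt(sigma2 / (1.0 - phi**2)))
    for t in range(1, T):
        h[t] = phi * h[t - 1] + rng.normal(0.0, np.sqrt(sigma2))
    # eps_t ~ N(0, D_t) with D_t = diag(exp(h_t))
    eps = np.exp(h / 2.0) * rng.standard_normal((T, n))
    # Solve B0 y_t = eps_t for all t at once
    y = np.linalg.solve(B0, eps.T).T
    return y, h


rng = np.random.default_rng(4)
B0 = np.array([[1.0, 0.5], [-0.3, 1.0]])
phi = np.array([0.95, 0.9])
sigma2 = np.array([0.05, 0.1])
y, h = simulate_oi_sv(B0, phi, sigma2, 50_000, rng)
print(h.var(axis=0), sigma2 / (1 - phi**2))  # sample vs. stationary variance
```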

3.2 Identification and Order-Invariance

The question of identification of our OI-SV model can be dealt with quickly. If there is no SV, it is well known that $\mathbf{B}_0$ is not identified. In particular, any orthogonal transformation of $\mathbf{B}_0$, that is, $\mathbf{Q}\mathbf{B}_0$ with $\mathbf{Q}'\mathbf{Q} = \mathbf{I}_n$, gives the same likelihood. But in the presence of SV, Bertsche and Braun (2020) show that $\mathbf{B}_0$ is identified up to permutations and sign changes:

Proposition 1 (Bertsche and Braun (2020)).

The assumptions in Proposition 1 are stronger than necessary for identification. In fact, Bertsche and Braun (2020) show that having $n-1$ SV processes is sufficient for identification, and they derive results relating to partial identification, which occurs if there are $r$ SV processes with $r < n-1$. Intuitively, allowing for time-varying volatility generates non-trivial autocovariances in the squared reduced-form errors. These additional moments help identify $\mathbf{B}_0$. We refer readers to Lanne, Lütkepohl, and Maciejowska (2010) and Lewis (2021) for a more detailed discussion of how identification can be achieved in structural VARs via heteroscedasticity.
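The role of SV in identification can be illustrated numerically, though this is only an illustration of the intuition and not a proof of Proposition 1. In the hypothetical sketch below, replacing $\mathbf{B}_0$ by $\mathbf{Q}\mathbf{B}_0$ for a random orthogonal $\mathbf{Q}$ leaves the log-likelihood of (2) unchanged when $\mathbf{D}_t = \mathbf{I}_n$, but changes it once the log-volatilities vary over time (holding $\mathbf{h}$ fixed). The function name and all inputs are our own choices.

```python
import numpy as np


def oi_sv_loglik(y, B0, h):
    """Log-likelihood of (2): B0 y_t ~ N(0, diag(exp(h_t))); y, h are T x n."""
    T, n = y.shape
    eps = y @ B0.T                          # t-th row is (B0 y_t)'
    _, logabsdet = np.linalg.slogdet(B0)    # log |det B0|
    return (-0.5 * T * n * np.log(2.0 * np.pi) + T * logabsdet
            - 0.5 * h.sum() - 0.5 * np.sum(eps**2 * np.exp(-h)))


rng = np.random.default_rng(2)
T, n = 200, 3
B0 = np.eye(n) + 0.3 * rng.standard_normal((n, n))
y = rng.standard_normal((T, n))
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))  # random orthogonal matrix

h_const = np.zeros((T, n))           # no SV: D_t = I_n
h_sv = rng.standard_normal((T, n))   # time-varying log-volatilities

same_without_sv = np.isclose(oi_sv_loglik(y, B0, h_const),
                             oi_sv_loglik(y, Q @ B0, h_const))
same_with_sv = np.isclose(oi_sv_loglik(y, B0, h_sv),
                          oi_sv_loglik(y, Q @ B0, h_sv))
print(same_without_sv, same_with_sv)
```

Without SV the quadratic form $\mathbf{y}_t'\mathbf{B}_0'\mathbf{Q}'\mathbf{Q}\mathbf{B}_0\mathbf{y}_t$ and $|\det(\mathbf{Q}\mathbf{B}_0)|$ are unchanged, which is exactly the non-identification noted above.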

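Below we outline a few theoretical properties of the stochastic volatility model described in (2). First, we show that the model is order invariant, in the sense that if we permute the order of the dependent variables, the likelihood function implied by this new ordering is the same as that of the original ordering, provided that we permute the parameters accordingly. Stacking $\mathbf{y} = (\mathbf{y}_1', \ldots, \mathbf{y}_T')'$ and $\mathbf{h} = (\mathbf{h}_1', \ldots, \mathbf{h}_T')'$, with $\mathbf{h}_t = (h_{1,t}, \ldots, h_{n,t})'$, note that the likelihood implied by (2) is

$$p(\mathbf{y} \mid \mathbf{B}_0, \mathbf{h}) = \prod_{t=1}^T (2\pi)^{-\frac{n}{2}}\, |\mathbf{B}_0|\, |\mathbf{D}_t|^{-\frac{1}{2}} \exp\left(-\frac{1}{2}\mathbf{y}_t'\mathbf{B}_0'\mathbf{D}_t^{-1}\mathbf{B}_0\mathbf{y}_t\right),$$

where $|\mathbf{B}|$ denotes the absolute value of the determinant of the square matrix $\mathbf{B}$. Now, suppose we permute the order of the dependent variables. More precisely, let $\mathbf{P}$ denote an arbitrary permutation matrix of dimension $n$, and define $\widetilde{\mathbf{y}}_t = \mathbf{P}\mathbf{y}_t$. By the standard change of variable result, the likelihood of $\widetilde{\mathbf{y}} = (\widetilde{\mathbf{y}}_1', \ldots, \widetilde{\mathbf{y}}_T')'$ is

$$\begin{aligned}
p(\widetilde{\mathbf{y}} \mid \mathbf{B}_0, \mathbf{h}) &= \prod_{t=1}^T (2\pi)^{-\frac{n}{2}}\, |\mathbf{B}_0|\, |\mathbf{D}_t|^{-\frac{1}{2}} \exp\left(-\frac{1}{2}\widetilde{\mathbf{y}}_t'\mathbf{P}\mathbf{B}_0'\mathbf{D}_t^{-1}\mathbf{B}_0\mathbf{P}'\widetilde{\mathbf{y}}_t\right) \\
&= \prod_{t=1}^T (2\pi)^{-\frac{n}{2}}\, |\widetilde{\mathbf{B}}_0|\, |\widetilde{\mathbf{D}}_t|^{-\frac{1}{2}} \exp\left(-\frac{1}{2}\widetilde{\mathbf{y}}_t'\widetilde{\mathbf{B}}_0'\widetilde{\mathbf{D}}_t^{-1}\widetilde{\mathbf{B}}_0\widetilde{\mathbf{y}}_t\right),
\end{aligned}$$

where $\widetilde{\mathbf{B}}_0 = \mathbf{P}\mathbf{B}_0\mathbf{P}'$, and $\widetilde{\mathbf{D}}_t = \mathbf{P}\mathbf{D}_t\mathbf{P}' = \text{diag}(e^{\widetilde{h}_{1,t}}, \ldots, e^{\widetilde{h}_{n,t}})$ with $\widetilde{\mathbf{h}}_t = \mathbf{P}\mathbf{h}_t$. Note that we have used the fact that $|\mathbf{P}| = 1$ and $\mathbf{P}'\mathbf{P} = \mathbf{I}_n$, as all permutation matrices are orthogonal matrices; in particular, $\mathbf{B}_0\mathbf{P}' = \mathbf{P}'\widetilde{\mathbf{B}}_0$ and $\mathbf{D}_t^{-1} = \mathbf{P}'\widetilde{\mathbf{D}}_t^{-1}\mathbf{P}$. In addition, since $|\widetilde{\mathbf{B}}_0| = |\mathbf{B}_0|$ and $|\widetilde{\mathbf{D}}_t| = |\mathbf{D}_t|$, the first line in the above derivation is equal to the second line, which is the likelihood (2) of $\widetilde{\mathbf{y}}$ evaluated at the parameters $(\widetilde{\mathbf{B}}_0, \widetilde{\mathbf{h}})$. Hence, we have shown that $p(\widetilde{\mathbf{y}} \mid \mathbf{B}_0, \mathbf{h}) = p(\widetilde{\mathbf{y}} \mid \widetilde{\mathbf{B}}_0, \widetilde{\mathbf{h}})$. We summarize this result in the following proposition.

Proposition 2 (Order Invariance).

Let $p(\mathbf{y} \mid \mathbf{B}_0, \mathbf{h})$ denote the likelihood of the stochastic volatility model given in (2). Let $\mathbf{P}$ be an arbitrary permutation matrix and define $\widetilde{\mathbf{y}}_t = \mathbf{P}\mathbf{y}_t$ and $\widetilde{\mathbf{h}}_t = \mathbf{P}\mathbf{h}_t$, $t = 1, \ldots, T$. Then, the likelihood with dependent variables $\widetilde{\mathbf{y}}_t$ has exactly the same form but with parameters permuted accordingly. More precisely,

$$p(\widetilde{\mathbf{y}} \mid \mathbf{B}_0, \mathbf{h}) = p(\widetilde{\mathbf{y}} \mid \widetilde{\mathbf{B}}_0, \widetilde{\mathbf{h}}),$$

where $\widetilde{\mathbf{B}}_0 = \mathbf{P}\mathbf{B}_0\mathbf{P}'$ and $\widetilde{\mathbf{h}} = (\widetilde{\mathbf{h}}_1', \ldots, \widetilde{\mathbf{h}}_T')'$.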
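Proposition 2 can also be checked numerically. The Python sketch below (the function name oi_sv_loglik is our own) evaluates the log-likelihood of (2) directly, then permutes the data, the log-volatilities and $\mathbf{B}_0$ as in the proposition. Because the identity holds for any $\mathbf{y}$, $\mathbf{B}_0$ and $\mathbf{h}$, random inputs suffice; the values used are arbitrary.

```python
import numpy as np


def oi_sv_loglik(y, B0, h):
    """Log-likelihood of the OI-SV model (2): B0 y_t ~ N(0, diag(exp(h_t))).
    y and h are T x n arrays whose t-th rows are y_t' and h_t'."""
    T, n = y.shape
    eps = y @ B0.T                          # t-th row is (B0 y_t)'
    _, logabsdet = np.linalg.slogdet(B0)    # log |det B0|
    return (-0.5 * T * n * np.log(2.0 * np.pi) + T * logabsdet
            - 0.5 * h.sum() - 0.5 * np.sum(eps**2 * np.exp(-h)))


rng = np.random.default_rng(0)
T, n = 50, 4
B0 = rng.standard_normal((n, n)) + 2.0 * np.eye(n)
h = 0.5 * rng.standard_normal((T, n))
y = rng.standard_normal((T, n))

P = np.eye(n)[rng.permutation(n)]   # random permutation matrix
y_tilde = y @ P.T                   # t-th row is (P y_t)'
h_tilde = h @ P.T                   # t-th row is (P h_t)'
B0_tilde = P @ B0 @ P.T

ll_original = oi_sv_loglik(y, B0, h)
ll_permuted = oi_sv_loglik(y_tilde, B0_tilde, h_tilde)
print(ll_original, ll_permuted)
```

The permutation has Jacobian one, so the log-likelihood of $\mathbf{y}$ under $(\mathbf{B}_0, \mathbf{h})$ equals the log-likelihood of $\widetilde{\mathbf{y}}$ under $(\widetilde{\mathbf{B}}_0, \widetilde{\mathbf{h}})$ up to floating-point error.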

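Next, we derive the unconditional covariance matrix of the data implied by the stochastic volatility model in (2)-(3). More specifically, given the model parameters $\mathbf{B}_0$, $\boldsymbol{\phi} = (\phi_1, \ldots, \phi_n)'$ and $\boldsymbol{\sigma}^2 = (\sigma_1^2, \ldots, \sigma_n^2)'$, suppose we generate the log-volatility processes via (3). It is straightforward to compute the implied unconditional covariance matrix of $\mathbf{y}_t$.

Since the unconditional distribution of $h_{i,t}$ implied by (3) is $\mathcal{N}(0, \sigma_i^2/(1-\phi_i^2))$, the unconditional distribution of $e^{h_{i,t}}$ is log-normal with mean $e^{\sigma_i^2/(2(1-\phi_i^2))}$. Hence, the unconditional mean of $\mathbf{D}_t$ is $\text{diag}(e^{\sigma_1^2/(2(1-\phi_1^2))}, \ldots, e^{\sigma_n^2/(2(1-\phi_n^2))})$. It then follows that the unconditional covariance matrix of $\mathbf{y}_t$ can be written as

$$\text{Var}(\mathbf{y}_t) = \mathbf{B}_0^{-1}\,\mathbb{E}(\mathbf{D}_t)\,(\mathbf{B}_0^{-1})' = (\overline{\mathbf{B}}_0'\overline{\mathbf{B}}_0)^{-1},$$

where $\overline{\mathbf{B}}_0 = \text{diag}(e^{-\sigma_1^2/(4(1-\phi_1^2))}, \ldots, e^{-\sigma_n^2/(4(1-\phi_n^2))})\,\mathbf{B}_0$, i.e., $\overline{\mathbf{B}}_0$ is obtained by scaling the $i$-th row of $\mathbf{B}_0$ by $e^{-\sigma_i^2/(4(1-\phi_i^2))}$, $i = 1, \ldots, n$. From this expression it is also clear that if we permute the order of the dependent variables via $\widetilde{\mathbf{y}}_t = \mathbf{P}\mathbf{y}_t$, the unconditional covariance matrix of $\widetilde{\mathbf{y}}_t$ can be obtained by permuting the rows and columns of $\text{Var}(\mathbf{y}_t)$ accordingly. More precisely:

$$\text{Var}(\widetilde{\mathbf{y}}_t) = \mathbf{P}\,\text{Var}(\mathbf{y}_t)\,\mathbf{P}' = \left(\widetilde{\overline{\mathbf{B}}}_0'\,\widetilde{\overline{\mathbf{B}}}_0\right)^{-1},$$

where $\widetilde{\overline{\mathbf{B}}}_0 = \mathbf{P}\overline{\mathbf{B}}_0\mathbf{P}'$. Hence, the unconditional variance of any element in $\mathbf{y}_t$ does not depend on its position in the $n$-tuple.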
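The covariance formula and its behaviour under permutation are easy to verify numerically. In this sketch (function name hypothetical; parameter values arbitrary but satisfying $|\phi_i| < 1$), $\text{Var}(\mathbf{y}_t)$ is computed from the row-scaled matrix $\overline{\mathbf{B}}_0$ and compared with $\mathbf{B}_0^{-1}\mathbb{E}(\mathbf{D}_t)(\mathbf{B}_0^{-1})'$; the permuted model uses $\mathbf{P}\mathbf{B}_0\mathbf{P}'$, $\mathbf{P}\boldsymbol{\phi}$ and $\mathbf{P}\boldsymbol{\sigma}^2$.

```python
import numpy as np


def unconditional_cov(B0, phi, sigma2):
    """Var(y_t) = (Bbar' Bbar)^{-1}, where row i of Bbar is row i of B0
    scaled by exp(-sigma2_i / (4 (1 - phi_i^2)))."""
    scale = np.exp(-sigma2 / (4.0 * (1.0 - phi**2)))
    B_bar = scale[:, None] * B0
    return np.linalg.inv(B_bar.T @ B_bar)


B0 = np.array([[1.0, 0.4, -0.2], [0.3, 1.2, 0.1], [-0.5, 0.2, 0.9]])
phi = np.array([0.95, 0.9, 0.8])
sigma2 = np.array([0.05, 0.1, 0.2])
Sigma = unconditional_cov(B0, phi, sigma2)

# The same matrix computed as B0^{-1} E(D_t) (B0^{-1})'
B0_inv = np.linalg.inv(B0)
ED = np.diag(np.exp(sigma2 / (2.0 * (1.0 - phi**2))))
Sigma_direct = B0_inv @ ED @ B0_inv.T

# Permuting the variables permutes the rows and columns of Var(y_t)
P = np.eye(3)[[2, 0, 1]]
Sigma_perm = unconditional_cov(P @ B0 @ P.T, P @ phi, P @ sigma2)
```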

This establishes order invariance of the likelihood function defined by the OI-SV. Of course, the posterior will also be order invariant (subject to permutations and sign switches) if the prior is. This will hold in any reasonable case. All it requires is that the prior for the parameters in equation $i$ under one ordering becomes the prior for equation $j$ in a different ordering if variable $i$ in the first ordering becomes variable $j$ in the second ordering.

4 A VAR with an Order-Invariant SV Specification

4.1 Model Definition

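We now add the VAR part to our OI-SV model, leading to the OI-VAR-SV, which is given by

$$\mathbf{y}_t = \mathbf{a} + \mathbf{A}_1\mathbf{y}_{t-1} + \cdots + \mathbf{A}_p\mathbf{y}_{t-p} + \mathbf{B}_0^{-1}\boldsymbol{\epsilon}_t, \quad \boldsymbol{\epsilon}_t \sim \mathcal{N}(\mathbf{0}, \mathbf{D}_t), \tag{4}$$

where $\mathbf{a}$ is an $n \times 1$ vector of intercepts, $\mathbf{A}_1, \ldots, \mathbf{A}_p$ are $n \times n$ matrices of VAR coefficients, $\mathbf{B}_0$ is an $n \times n$ non-singular but otherwise unrestricted matrix, and $\mathbf{D}_t = \text{diag}(e^{h_{1,t}}, \ldots, e^{h_{n,t}})$ is diagonal. Finally, each of the log-volatilities $h_{i,t}$ follows the stationary AR(1) process specified in (3).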

4.2 Shrinkage Priors

We argued previously that ordering issues are likely to be most important in high dimensional models. With large VARs over-parameterization concerns can be substantial and, for this reason, Bayesian methods involving shrinkage priors are commonly used. In this sub-section, we describe a particular VAR prior with attractive properties. But it is worth noting that any of the standard Bayesian VAR priors (e.g. the Minnesota prior) could have been used and the resulting model would still have been order invariant.

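Let $\boldsymbol{\alpha}_i$ denote the VAR coefficients in the $i$-th equation, $i = 1, \ldots, n$. We consider the Minnesota-type adaptive hierarchical priors proposed in Chan (2021), which have the advantages of both the Minnesota priors (e.g., rich prior beliefs such as cross-variable shrinkage) and modern adaptive hierarchical priors (e.g., heavy tails and substantial mass around 0). In particular, we construct a Minnesota-type horseshoe prior as follows. For $\alpha_{i,j}$, the $j$-th coefficient in the $i$-th equation, let $l_{i,j} = 1$ if it is a coefficient on an 'own lag' and let $l_{i,j} = 2$ if it is a coefficient on an 'other lag'. Given the constants $C_{i,j}$ defined below, consider the following horseshoe prior on the VAR coefficients (excluding the intercepts), with $j = 2, \ldots, k$ and $k = 1 + np$:

$$\alpha_{i,j} \mid \kappa_1, \kappa_2, \lambda_{i,j} \sim \mathcal{N}(m_{i,j}, \kappa_{l_{i,j}}\lambda_{i,j}C_{i,j}), \tag{5}$$

$$\sqrt{\lambda_{i,j}} \sim \mathcal{C}^+(0,1), \tag{6}$$

$$\sqrt{\kappa_1}, \sqrt{\kappa_2} \sim \mathcal{C}^+(0,1), \tag{7}$$

where $\mathcal{C}^+(0,1)$ denotes the standard half-Cauchy distribution. $\kappa_1$ and $\kappa_2$ are the global variance components that are common to, respectively, coefficients of own and other lags, whereas each $\lambda_{i,j}$ is a local variance component specific to the coefficient $\alpha_{i,j}$. Lastly, the constants $C_{i,j}$ are obtained as in the Minnesota prior, i.e.,

$$C_{i,j} = \begin{cases} \dfrac{1}{l^2}, & \text{for the coefficient on the } l\text{-th lag of variable } i, \\[2mm] \dfrac{s_i^2}{l^2 s_r^2}, & \text{for the coefficient on the } l\text{-th lag of variable } r,\ r \neq i, \end{cases} \tag{8}$$

where $s_r^2$ denotes the sample variance of the residuals from an AR(4) model for the variable $r$, $r = 1, \ldots, n$. Furthermore, for data in growth rates, $m_{i,j}$ is set to be 0; for level data, $m_{i,j}$ is set to be zero as well except for the coefficient associated with the first own lag, which is set to be one.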
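A minimal sketch of (8) and the prior means $m_{i,j}$, assuming the non-intercept coefficients of each equation are ordered lag by lag (lag 1 of variables $1, \ldots, n$, then lag 2, and so on) and that the AR(4) residual variances $s_r^2$ have already been computed. The function name, the 0-based indexing and the `levels` flag are our own conventions, not part of the original specification.

```python
import numpy as np


def minnesota_constants(i, s2, p, levels):
    """Constants C_{i,j} in (8), prior means m_{i,j} and own-lag flags for the
    non-intercept coefficients of equation i (0-based).

    Assumed ordering: lag 1 of variables 0..n-1, then lag 2, ..., lag p.
    s2[r] is the AR(4) residual variance of variable r; levels[r] is True if
    variable r enters in levels (prior mean one on its first own lag)."""
    n = len(s2)
    C = np.empty(n * p)
    m = np.zeros(n * p)
    own = np.zeros(n * p, dtype=bool)
    for q in range(n * p):
        lag, r = q // n + 1, q % n
        if r == i:                       # own lag: C = 1 / l^2
            C[q] = 1.0 / lag**2
            own[q] = True
            if levels[i] and lag == 1:
                m[q] = 1.0
        else:                            # other lag: C = s_i^2 / (l^2 s_r^2)
            C[q] = s2[i] / (lag**2 * s2[r])
    return C, m, own


C, m, own = minnesota_constants(0, np.array([1.0, 4.0]), 2, np.array([True, False]))
print(C, m, own)
```

With two variables, two lags, $s_1^2 = 1$ and $s_2^2 = 4$, the constants for the first equation are $1$, $0.25$, $0.25$ and $0.0625$, so other-lag coefficients on a higher-variance variable are shrunk more tightly.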

It is easy to verify that if all the local variances are fixed, i.e., $\lambda_{i,j} = 1$, then the Minnesota-type horseshoe prior given in (5)–(7) reduces to the Minnesota prior. Hence, it can be viewed as an extension of the Minnesota prior that introduces a local variance component such that the marginal prior distribution of $\alpha_{i,j}$ has heavy tails. On the other hand, if $\kappa_1 = \kappa_2$, $C_{i,j} = 1$ and $m_{i,j} = 0$, then the Minnesota-type horseshoe prior reduces to the standard horseshoe prior where the coefficients have identical distributions. Therefore, it can also be viewed as an extension of the horseshoe prior (Carvalho, Polson, and Scott, 2010) that incorporates richer prior beliefs on the VAR coefficients, such as cross-variable shrinkage, i.e., shrinking coefficients on own lags differently than those on other lags.

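To facilitate estimation, we follow Makalic and Schmidt (2016) and use the following latent variable representations of the half-Cauchy distributions in (6)-(7):

$$\lambda_{i,j} \mid \nu_{i,j} \sim \mathcal{IG}(1/2, 1/\nu_{i,j}), \quad \nu_{i,j} \sim \mathcal{IG}(1/2, 1), \tag{9}$$

$$\kappa_l \mid \xi_l \sim \mathcal{IG}(1/2, 1/\xi_l), \quad \xi_l \sim \mathcal{IG}(1/2, 1), \tag{10}$$

for $i = 1, \ldots, n$, $j = 2, \ldots, k$ and $l = 1, 2$, where $\mathcal{IG}$ denotes the inverse-gamma distribution.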
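The latent-variable representation can be checked by simulation: drawing $\nu$ from $\mathcal{IG}(1/2, 1)$ and then $\lambda \mid \nu$ from $\mathcal{IG}(1/2, 1/\nu)$ should make $\sqrt{\lambda}$ standard half-Cauchy, whose quantiles are $\tan(\pi q/2)$. This is a minimal Python sketch; the helper name inv_gamma is hypothetical, and it relies on the fact that $1/G \sim \mathcal{IG}(a, b)$ when $G$ is gamma with shape $a$ and rate $b$.

```python
import numpy as np


def inv_gamma(shape, rate, rng, size=None):
    """IG(shape, rate) draws: if G ~ Gamma(shape, scale=1/rate), 1/G ~ IG(shape, rate)."""
    return 1.0 / rng.gamma(shape, 1.0 / rate, size=size)


rng = np.random.default_rng(3)
N = 200_000
nu = inv_gamma(0.5, 1.0, rng, size=N)   # nu ~ IG(1/2, 1)
lam = inv_gamma(0.5, 1.0 / nu, rng)     # lambda | nu ~ IG(1/2, 1/nu)
root_lam = np.sqrt(lam)                 # should be standard half-Cauchy

# Compare quartiles with those of C+(0, 1): tan(pi q / 2)
qs = np.array([0.25, 0.5, 0.75])
empirical = np.quantile(root_lam, qs)
theoretical = np.tan(np.pi * qs / 2.0)
print(empirical, theoretical)
```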

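Next, we specify the priors on the other model parameters. Let $\mathbf{b}_i'$ denote the $i$-th row of $\mathbf{B}_0$ for $i = 1, \ldots, n$, i.e., $\mathbf{B}_0 = (\mathbf{b}_1, \ldots, \mathbf{b}_n)'$. We consider independent Gaussian priors on the $\mathbf{b}_i$: $\mathbf{b}_i \sim \mathcal{N}(\mathbf{m}_{b_i}, \mathbf{V}_{b_i})$, $i = 1, \ldots, n$.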

For the parameters in the stochastic volatility equations, we assume the priors for :

4.3 MCMC Algorithm

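In this section we develop a posterior sampler which allows for Bayesian estimation of the order-invariant stochastic volatility model with the Minnesota-type horseshoe prior. For later reference, let $\boldsymbol{\lambda}_i = (\lambda_{i,2}, \ldots, \lambda_{i,k})'$ denote the local variance components associated with $\boldsymbol{\alpha}_i$ and define $\boldsymbol{\lambda} = (\boldsymbol{\lambda}_1', \ldots, \boldsymbol{\lambda}_n')'$. Furthermore, let $\boldsymbol{\nu}_i = (\nu_{i,2}, \ldots, \nu_{i,k})'$ denote the latent variables corresponding to $\boldsymbol{\lambda}_i$ and similarly define $\boldsymbol{\nu}$. Next, stack $\boldsymbol{\alpha} = (\boldsymbol{\alpha}_1', \ldots, \boldsymbol{\alpha}_n')'$, $\boldsymbol{\kappa} = (\kappa_1, \kappa_2)'$, $\boldsymbol{\xi} = (\xi_1, \xi_2)'$, $\boldsymbol{\phi} = (\phi_1, \ldots, \phi_n)'$ and $\boldsymbol{\sigma}^2 = (\sigma_1^2, \ldots, \sigma_n^2)'$. Finally, let $\mathbf{h}_{i\cdot} = (h_{i,1}, \ldots, h_{i,T})'$ represent the vector of log-volatilities for the $i$-th equation, $i = 1, \ldots, n$, and let $\mathbf{h}$ collect all log-volatilities. Then, posterior draws can be obtained by sampling sequentially from: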

-

1.

= ;

-

2.

= ;

-

3.

= ;

-

4.

= ;

-

5.

= ;

-

6.

= .

-

7.

;

-

8.

;

-

9.

;

Step 1. To implement Step 1, we adapt the sampling approach in Waggoner and Zha (2003) and Villani (2009) to the setting with stochastic volatility. More specifically, we aim to draw each row of $\mathbf{B}_0$ given all other rows. To that end, we first rewrite (4) as:

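$$(\mathbf{Y} - \mathbf{X}\mathbf{A})\mathbf{B}_0' = \mathbf{E}, \tag{11}$$

where $\mathbf{Y}$ is the $T \times n$ matrix of dependent variables, $\mathbf{X}$ is the $T \times k$ matrix of lagged dependent variables with $k = 1 + np$ (its $t$-th row is $(1, \mathbf{y}_{t-1}', \ldots, \mathbf{y}_{t-p}')$), $\mathbf{A} = (\mathbf{a}, \mathbf{A}_1, \ldots, \mathbf{A}_p)' = (\boldsymbol{\alpha}_1, \ldots, \boldsymbol{\alpha}_n)$ is the $k \times n$ matrix of VAR coefficients and $\mathbf{E}$ is the $T \times n$ matrix of errors whose $t$-th row is $\boldsymbol{\epsilon}_t'$. Then, for $i = 1, \ldots, n$, with $\mathbf{b}_i'$ the $i$-th row of $\mathbf{B}_0$, we have

$$(\mathbf{Y} - \mathbf{X}\mathbf{A})\mathbf{b}_i = \mathbf{e}_i \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Omega}_{h_i}),$$

where $\mathbf{e}_i$ is the $i$-th column of $\mathbf{E}$ and $\boldsymbol{\Omega}_{h_i} = \text{diag}(e^{h_{i,1}}, \ldots, e^{h_{i,T}})$. Hence, the full conditional distribution of $\mathbf{b}_i$ is given by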

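$$p(\mathbf{b}_i \mid \mathbf{y}, \mathbf{A}, \mathbf{b}_{-i}, \mathbf{h}) \propto |\mathbf{B}_0|^T \exp\left(-\frac{1}{2}(\mathbf{b}_i - \widehat{\mathbf{b}}_i)'\mathbf{K}_{b_i}(\mathbf{b}_i - \widehat{\mathbf{b}}_i)\right), \tag{12}$$

where $|\mathbf{B}_0|$ is the absolute value of the determinant of $\mathbf{B}_0$, $\mathbf{b}_{-i}$ collects all rows of $\mathbf{B}_0$ other than the $i$-th, and

$$\mathbf{K}_{b_i} = \mathbf{V}_{b_i}^{-1} + (\mathbf{Y} - \mathbf{X}\mathbf{A})'\boldsymbol{\Omega}_{h_i}^{-1}(\mathbf{Y} - \mathbf{X}\mathbf{A}), \qquad \widehat{\mathbf{b}}_i = \mathbf{K}_{b_i}^{-1}\mathbf{V}_{b_i}^{-1}\mathbf{m}_{b_i},$$

with $\mathbf{m}_{b_i}$ and $\mathbf{V}_{b_i}$ the prior mean vector and covariance matrix of $\mathbf{b}_i$.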

The above full conditional posterior distribution is non-standard and direct sampling is infeasible. Fortunately, Waggoner and Zha (2003) develop an efficient algorithm to sample $\mathbf{b}_i$ in the case when $\widehat{\mathbf{b}}_i = \mathbf{0}$. Villani (2009) further generalizes this sampling approach to non-zero $\widehat{\mathbf{b}}_i$.

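The key idea is to show that $\mathbf{b}_i$ has the same distribution as a linear transformation of an $n$-vector that consists of one absolute normal and $n-1$ normal random variables. A random variable $x$ follows the absolute normal distribution $\mathcal{AN}(\mu, \psi)$ if it has the density function

$$f(x; \mu, \psi) = c\,|x|^T \exp\left(-\frac{(x - \mu)^2}{2\psi}\right), \quad x \in \mathbb{R},$$

where $c$ is a normalizing constant.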

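To formally state the results, let $\mathbf{C}$ denote the Cholesky factor of $\mathbf{K}_{b_i}^{-1}$ such that $\mathbf{K}_{b_i}^{-1} = \mathbf{C}\mathbf{C}'$. Let $\mathbf{B}_{0,-i}$ represent the $n \times (n-1)$ matrix $\mathbf{B}_0'$ with the $i$-th column deleted, and let $\mathbf{w}$ be the orthogonal complement of $\mathbf{B}_{0,-i}$, so that $\mathbf{w}'\mathbf{b}_j = 0$ for all $j \neq i$. Furthermore, define $\mathbf{v}_1 = \mathbf{C}'\mathbf{w}/\|\mathbf{C}'\mathbf{w}\|$, where $\|\cdot\|$ denotes the Euclidean norm, and let $\mathbf{v}_2, \ldots, \mathbf{v}_n$ complete an orthonormal basis of $\mathbb{R}^n$, with $\mathbf{V} = (\mathbf{v}_1, \ldots, \mathbf{v}_n)$. Then, we claim that

$$\mathbf{b}_i \overset{d}{=} \mathbf{C}\sum_{j=1}^n \beta_j\mathbf{v}_j = \mathbf{C}\mathbf{V}\boldsymbol{\beta},$$

where $\beta_1 \sim \mathcal{AN}(\mu_1, 1)$ and $\beta_j \sim \mathcal{N}(\mu_j, 1)$, $j = 2, \ldots, n$, are independent, with $\boldsymbol{\mu} = (\mu_1, \ldots, \mu_n)' = \mathbf{V}'\mathbf{C}^{-1}\widehat{\mathbf{b}}_i$.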

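To prove the claim, let $\boldsymbol{\beta} = \mathbf{V}'\mathbf{C}^{-1}\mathbf{b}_i$, so that $\mathbf{b}_i = \mathbf{C}\mathbf{V}\boldsymbol{\beta}$ (a linear map with constant Jacobian). It suffices to show that if we substitute $\mathbf{b}_i = \mathbf{C}\mathbf{V}\boldsymbol{\beta}$ into (12), then $\beta_1, \ldots, \beta_n$ are independent with $\beta_1 \sim \mathcal{AN}(\mu_1, 1)$ and $\beta_j \sim \mathcal{N}(\mu_j, 1)$, $j = 2, \ldots, n$. To that end, note that by construction, $\mathbf{v}_1, \ldots, \mathbf{v}_n$ form an orthonormal basis of $\mathbb{R}^n$, particularly $\mathbf{V}\mathbf{V}' = \mathbf{V}'\mathbf{V} = \mathbf{I}_n$. Since $\mathbf{b}_i - \widehat{\mathbf{b}}_i = \mathbf{C}\mathbf{V}(\boldsymbol{\beta} - \boldsymbol{\mu})$ and $\mathbf{C}'\mathbf{K}_{b_i}\mathbf{C} = \mathbf{I}_n$, we first write the quadratic form in (12) as:

$$(\mathbf{b}_i - \widehat{\mathbf{b}}_i)'\mathbf{K}_{b_i}(\mathbf{b}_i - \widehat{\mathbf{b}}_i) = (\boldsymbol{\beta} - \boldsymbol{\mu})'\mathbf{V}'\mathbf{C}'\mathbf{K}_{b_i}\mathbf{C}\mathbf{V}(\boldsymbol{\beta} - \boldsymbol{\mu}) = \sum_{j=1}^n (\beta_j - \mu_j)^2.$$

Next, note that the vector of cofactors of the $i$-th row of $\mathbf{B}_0$ is orthogonal to every other row of $\mathbf{B}_0$, so by construction it spans the same space as $\mathbf{w}$. Hence, expanding the determinant along the $i$-th row, it follows that

$$|\mathbf{B}_0| \propto |\mathbf{w}'\mathbf{b}_i| = |(\mathbf{C}'\mathbf{w})'\mathbf{V}\boldsymbol{\beta}| = \|\mathbf{C}'\mathbf{w}\|\,|\beta_1|,$$

where the proportionality constant does not depend on $\mathbf{b}_i$.

Finally, substituting the quadratic form and the determinant into (12), we obtain

$$p(\boldsymbol{\beta} \mid \mathbf{y}, \mathbf{A}, \mathbf{b}_{-i}, \mathbf{h}) \propto |\beta_1|^T \exp\left(-\frac{(\beta_1 - \mu_1)^2}{2}\right) \prod_{j=2}^n \exp\left(-\frac{(\beta_j - \mu_j)^2}{2}\right).$$

In other words, $\beta_1 \sim \mathcal{AN}(\mu_1, 1)$ and $\beta_j \sim \mathcal{N}(\mu_j, 1)$, $j = 2, \ldots, n$, independently, and we have proved the claim.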

In order to use the above result, we need an efficient way to sample from $\mathcal{AN}(\mu_1, 1)$. This can be done by using the 2-component normal mixture approximation considered in Appendix C of Villani (2009). In addition, the orthogonal complement of $\mathbf{B}_{0,-i}$, namely $\mathbf{w}$, can be obtained using the singular value decomposition.² In the sampler we fix the sign of the $i$-th element of $\mathbf{b}_i$ to be positive (so that the diagonal elements of $\mathbf{B}_0$ are positive). This is done by multiplying the draw by $-1$ if its $i$-th element is negative.

² In MATLAB, the orthogonal complement of $\mathbf{B}_{0,-i}$ can be obtained using the function null.
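Putting the pieces of Step 1 together, one row update might look as follows. This is a minimal sketch, assuming $\mathbf{K}_{b_i}$ and $\widehat{\mathbf{b}}_i$ have already been computed from (12). It uses `numpy.linalg.svd` for the orthogonal complement and, as a swapped-in technique, samples the absolute normal by inverse-CDF on a fine grid rather than with the mixture approximation of Villani (2009). Function names and the test inputs are hypothetical.

```python
import numpy as np


def sample_abs_normal(mu, T, rng, size=None, n_grid=20_000):
    """Draw from AN(mu, 1), density proportional to |x|^T exp(-(x - mu)^2 / 2).

    Inverse-CDF sampling on a fine grid: a simple stand-in for the
    2-component mixture approximation of Villani (2009), Appendix C."""
    half_width = abs(mu) + np.sqrt(T) + 10.0
    x = np.linspace(-half_width, half_width, n_grid)  # even n_grid: x = 0 not on grid
    log_dens = T * np.log(np.abs(x)) - 0.5 * (x - mu) ** 2
    dens = np.exp(log_dens - log_dens.max())
    cdf = np.cumsum(dens)
    cdf /= cdf[-1]
    idx = np.searchsorted(cdf, rng.random(size))
    return x[np.minimum(idx, n_grid - 1)]


def draw_B0_row(i, B0, K, b_hat, T, rng):
    """Draw b_i (row i of B0, as a 1-D array) from
    p(b_i | ...) prop. to |det B0|^T exp(-(b_i - b_hat)' K (b_i - b_hat) / 2)."""
    n = B0.shape[0]
    C = np.linalg.cholesky(np.linalg.inv(K))    # C C' = K^{-1}
    others = np.delete(B0, i, axis=0)           # the other n - 1 rows
    w = np.linalg.svd(others)[2][-1]            # unit w with w' b_j = 0 for j != i
    v1 = C.T @ w
    v1 /= np.linalg.norm(v1)
    V = np.linalg.svd(v1[None, :])[2].T         # orthonormal basis; column 0 is +/- v1
    V[:, 0] = v1
    mu = V.T @ np.linalg.solve(C, b_hat)        # mu = V' C^{-1} b_hat
    beta = mu + rng.standard_normal(n)          # beta_j ~ N(mu_j, 1), j >= 2
    beta[0] = sample_abs_normal(mu[0], T, rng)  # beta_1 ~ AN(mu_1, 1)
    b_i = C @ (V @ beta)
    return -b_i if b_i[i] < 0 else b_i          # make the i-th element positive


rng = np.random.default_rng(5)
n, T = 3, 100
B0 = np.eye(n) + 0.2 * rng.standard_normal((n, n))
Z = rng.standard_normal((T, n))
K = np.eye(n) + Z.T @ Z                   # hypothetical posterior precision
b_hat = np.linalg.solve(K, np.ones(n))    # hypothetical K^{-1} V^{-1} m
new_row = draw_B0_row(1, B0, K, b_hat, T, rng)
B0[1] = new_row
```

Only $\beta_1$, the coordinate along $\mathbf{C}'\mathbf{w}$, feels the $|\mathbf{B}_0|^T$ term; the remaining coordinates are plain Gaussian draws, which is what keeps each row update cheap.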

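Step 2. We sample the reduced-form VAR coefficients equation by equation (one $\boldsymbol{\alpha}_i$ at a time) along the lines of Carriero, Clark, and Marcellino (2019). In particular, we extend the triangular algorithm in Carriero, Chan, Clark, and Marcellino (2021), which is designed for a lower triangular impact matrix, to the case where $\mathbf{B}_0$ is a full matrix. To that end, define $\mathbf{A}_{i=0}$ to be a matrix that has exactly the same elements as $\mathbf{A}$ except for the $i$-th column, which is set to be zero, i.e., $\mathbf{A}_{i=0} = (\boldsymbol{\alpha}_1, \ldots, \boldsymbol{\alpha}_{i-1}, \mathbf{0}, \boldsymbol{\alpha}_{i+1}, \ldots, \boldsymbol{\alpha}_n)$. Then, we can rewrite (4) as

$$\mathbf{z}_j = b_{j,i}\mathbf{X}\boldsymbol{\alpha}_i + \mathbf{e}_j, \quad \mathbf{e}_j \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Omega}_{h_j}), \quad j = 1, \ldots, n,$$

where $\mathbf{z}_j = (\mathbf{Y} - \mathbf{X}\mathbf{A}_{i=0})\mathbf{b}_j$ and $b_{j,i}$ is the $(j,i)$ element of $\mathbf{B}_0$, i.e., the $j$-th element of the $i$-th column of $\mathbf{B}_0$. Letting $\mathbf{z} = (\mathbf{z}_1', \ldots, \mathbf{z}_n')'$ and stacking $\mathbf{e} = (\mathbf{e}_1', \ldots, \mathbf{e}_n')'$, we have

$$\mathbf{z} = (\mathbf{b}^{(i)} \otimes \mathbf{X})\boldsymbol{\alpha}_i + \mathbf{e}, \quad \mathbf{e} \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Omega}_h),$$

where $\mathbf{b}^{(i)} = (b_{1,i}, \ldots, b_{n,i})'$ is the $i$-th column of $\mathbf{B}_0$ and $\boldsymbol{\Omega}_h = \text{diag}(\boldsymbol{\Omega}_{h_1}, \ldots, \boldsymbol{\Omega}_{h_n})$.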

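Next, the Minnesota-type horseshoe prior on $\boldsymbol{\alpha}_i$ is conditionally Gaussian given the local variance components $\boldsymbol{\lambda}_i$. More specifically, we can rewrite the conditional prior on $\boldsymbol{\alpha}_i$ in (5) as:

$$\boldsymbol{\alpha}_i \mid \boldsymbol{\lambda}_i, \boldsymbol{\kappa} \sim \mathcal{N}(\mathbf{m}_i, \mathbf{V}_{\alpha_i}),$$

where $\mathbf{m}_i = (m_{i,1}, \ldots, m_{i,k})'$ and $\mathbf{V}_{\alpha_i} = \text{diag}(V_{i,1}, \kappa_{l_{i,2}}\lambda_{i,2}C_{i,2}, \ldots, \kappa_{l_{i,k}}\lambda_{i,k}C_{i,k})$, with $m_{i,1}$ and $V_{i,1}$ the prior mean and variance of the intercept (the prior on the intercept is Gaussian). Then, by standard linear regression results, we have

$$(\boldsymbol{\alpha}_i \mid \mathbf{y}, \mathbf{A}_{i=0}, \mathbf{B}_0, \boldsymbol{\lambda}_i, \boldsymbol{\kappa}, \mathbf{h}) \sim \mathcal{N}(\widehat{\boldsymbol{\alpha}}_i, \mathbf{K}_{\alpha_i}^{-1}),$$

where $\mathbf{K}_{\alpha_i} = \mathbf{V}_{\alpha_i}^{-1} + \sum_{j=1}^n b_{j,i}^2\mathbf{X}'\boldsymbol{\Omega}_{h_j}^{-1}\mathbf{X}$ and $\widehat{\boldsymbol{\alpha}}_i = \mathbf{K}_{\alpha_i}^{-1}\left(\mathbf{V}_{\alpha_i}^{-1}\mathbf{m}_i + \sum_{j=1}^n b_{j,i}\mathbf{X}'\boldsymbol{\Omega}_{h_j}^{-1}\mathbf{z}_j\right)$.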

The computational complexity of this step, $O(n^4)$, is the same as in the triangular case considered in Carriero, Chan, Clark, and Marcellino (2021).
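A minimal sketch of Step 2 for a single equation, assuming $\mathbf{Y}$, $\mathbf{X}$, the current $\mathbf{A}$, $\mathbf{B}_0$ and the log-volatilities are NumPy arrays (here `h` is $T \times n$ with `h[t, j]` $= h_{j,t}$) and that the prior covariance of $\boldsymbol{\alpha}_i$ is diagonal, as implied by (5). Function names are hypothetical. The code accumulates $\mathbf{K}_{\alpha_i}$ and the right-hand side over $j = 1, \ldots, n$ without building the $nT \times k$ Kronecker design; the usage part checks the result against the stacked form $\mathbf{z} = (\mathbf{b}^{(i)} \otimes \mathbf{X})\boldsymbol{\alpha}_i + \mathbf{e}$ on simulated inputs.

```python
import numpy as np


def alpha_i_conditional(i, Y, X, A, B0, h, m_i, V_i_diag):
    """Mean and precision of the Gaussian full conditional of alpha_i,
    the i-th column of the k x n matrix A in (Y - X A) B0' = E."""
    A0 = A.copy()
    A0[:, i] = 0.0                       # A_{i=0}: column i set to zero
    Z = (Y - X @ A0) @ B0.T              # column j is z_j = (Y - X A_{i=0}) b_j
    W = np.exp(-h)                       # column j is the diagonal of Omega_{h_j}^{-1}
    K = np.diag(1.0 / V_i_diag)
    rhs = m_i / V_i_diag
    for j in range(B0.shape[0]):
        XtW = X.T * W[:, j]              # X' Omega_{h_j}^{-1}
        K += B0[j, i] ** 2 * (XtW @ X)
        rhs += B0[j, i] * (XtW @ Z[:, j])
    return np.linalg.solve(K, rhs), K


def draw_alpha_i(i, Y, X, A, B0, h, m_i, V_i_diag, rng):
    """One draw from N(mean, K^{-1}) via the Cholesky factor of K."""
    mean, K = alpha_i_conditional(i, Y, X, A, B0, h, m_i, V_i_diag)
    L = np.linalg.cholesky(K)            # K = L L'
    # mean + (L')^{-1} u, u ~ N(0, I), has covariance (L L')^{-1} = K^{-1}
    return mean + np.linalg.solve(L.T, rng.standard_normal(mean.size))


rng = np.random.default_rng(7)
T, n, p = 60, 3, 1
k = 1 + n * p
X = np.column_stack([np.ones(T), rng.standard_normal((T, n * p))])
Y = rng.standard_normal((T, n))
A = 0.1 * rng.standard_normal((k, n))
B0 = np.eye(n) + 0.3 * rng.standard_normal((n, n))
h = 0.5 * rng.standard_normal((T, n))
m_i, V_i_diag = np.zeros(k), np.full(k, 0.5)
i = 1

mean, K = alpha_i_conditional(i, Y, X, A, B0, h, m_i, V_i_diag)

# Stacked (Kronecker) form for comparison
A0 = A.copy()
A0[:, i] = 0.0
z = ((Y - X @ A0) @ B0.T).T.ravel()      # (z_1', ..., z_n')'
X_big = np.kron(B0[:, [i]], X)           # b^{(i)} kron X
omega_inv = np.exp(-h).T.ravel()         # diagonal of Omega_h^{-1}
K_direct = np.diag(1.0 / V_i_diag) + X_big.T @ (omega_inv[:, None] * X_big)
mean_direct = np.linalg.solve(K_direct, m_i / V_i_diag + X_big.T @ (omega_inv * z))

draw = draw_alpha_i(i, Y, X, A, B0, h, m_i, V_i_diag, rng)
```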

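Step 3. First, note that the elements of $\boldsymbol{\lambda}$ are conditionally independent, so we can sample them one by one without loss of efficiency. Next, combining (5) and (9), we obtain

$$p(\lambda_{i,j} \mid \alpha_{i,j}, \boldsymbol{\kappa}, \nu_{i,j}) \propto \lambda_{i,j}^{-\frac{1}{2}}\exp\left(-\frac{(\alpha_{i,j} - m_{i,j})^2}{2\kappa_{l_{i,j}}\lambda_{i,j}C_{i,j}}\right) \times \lambda_{i,j}^{-\frac{3}{2}}\exp\left(-\frac{1}{\nu_{i,j}\lambda_{i,j}}\right),$$

which is the kernel of the following inverse-gamma distribution:

$$(\lambda_{i,j} \mid \alpha_{i,j}, \boldsymbol{\kappa}, \nu_{i,j}) \sim \mathcal{IG}\left(1, \frac{1}{\nu_{i,j}} + \frac{(\alpha_{i,j} - m_{i,j})^2}{2\kappa_{l_{i,j}}C_{i,j}}\right).$$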
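Step 3 is a vectorized inverse-gamma draw. In this minimal sketch (function name hypothetical) all inputs are arrays of the same shape over the non-intercept coefficients, with `kappa_l` holding $\kappa_1$ for own lags and $\kappa_2$ otherwise. Since $\mathcal{IG}(1, b)$ has no finite mean, the sanity check compares the sample median with the exact median $b/\log 2$.

```python
import numpy as np


def draw_lambda(alpha, m, C, kappa_l, nu, rng):
    """Elementwise draw of lambda_{i,j} from
    IG(1, 1/nu_{i,j} + (alpha_{i,j} - m_{i,j})^2 / (2 kappa_{l_ij} C_{i,j}))."""
    rate = 1.0 / nu + (alpha - m) ** 2 / (2.0 * kappa_l * C)
    # If G ~ Gamma(shape, scale=1/rate), then 1/G ~ IG(shape, rate)
    return 1.0 / rng.gamma(1.0, 1.0 / rate)


# Sanity check: the median of IG(1, b) is b / log(2)
rng = np.random.default_rng(8)
N = 100_000
draws = draw_lambda(np.full(N, 0.3), np.zeros(N), np.full(N, 0.5),
                    np.full(N, 0.2), np.full(N, 2.0), rng)
b = 1.0 / 2.0 + 0.3**2 / (2.0 * 0.2 * 0.5)   # = 0.95
print(np.median(draws), b / np.log(2.0))
```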

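Step 4. Note that $\kappa_1$ and $\kappa_2$ only appear in their priors in (10) and in (5); recall that $l_{i,j} = 1$ for coefficients on own lags and $l_{i,j} = 2$ for coefficients on other lags. To sample $\kappa_1$ and $\kappa_2$, first define the index set $S_{\kappa_1}$ that collects all the indexes $(i,j)$ such that $\alpha_{i,j}$ is a coefficient associated with an own lag. That is, $S_{\kappa_1} = \{(i,j) : l_{i,j} = 1\}$. Similarly, define $S_{\kappa_2} = \{(i,j) : l_{i,j} = 2\}$ as the set that collects all the indexes $(i,j)$ such that $\alpha_{i,j}$ is a coefficient associated with a lag of other variables. It is easy to check that the numbers of elements in $S_{\kappa_1}$ and $S_{\kappa_2}$ are respectively $np$ and $(n-1)np$. Then, we have

$$p(\kappa_1 \mid \boldsymbol{\alpha}, \boldsymbol{\lambda}, \xi_1) \propto \kappa_1^{-\frac{np+1}{2} - 1}\exp\left(-\frac{1}{\kappa_1}\left(\frac{1}{\xi_1} + \sum_{(i,j) \in S_{\kappa_1}}\frac{(\alpha_{i,j} - m_{i,j})^2}{2\lambda_{i,j}C_{i,j}}\right)\right),$$

which is the kernel of the $\mathcal{IG}\left(\frac{np+1}{2}, \frac{1}{\xi_1} + \sum_{(i,j) \in S_{\kappa_1}}\frac{(\alpha_{i,j} - m_{i,j})^2}{2\lambda_{i,j}C_{i,j}}\right)$ distribution. Similarly, we have

$$(\kappa_2 \mid \boldsymbol{\alpha}, \boldsymbol{\lambda}, \xi_2) \sim \mathcal{IG}\left(\frac{(n-1)np+1}{2}, \frac{1}{\xi_2} + \sum_{(i,j) \in S_{\kappa_2}}\frac{(\alpha_{i,j} - m_{i,j})^2}{2\lambda_{i,j}C_{i,j}}\right).$$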
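A minimal sketch of Step 4, assuming the non-intercept quantities are stored as $n \times np$ arrays and that a boolean mask marks the own-lag coefficients (the set $S_{\kappa_1}$); the function name and layout are our own. The check uses the fact that $1/\kappa_1$ is gamma distributed given everything else, so its mean should be shape/rate.

```python
import numpy as np


def draw_kappa(alpha, m, C, lam, own, xi, rng):
    """Draw (kappa_1, kappa_2) from their inverse-gamma full conditionals.

    alpha, m, C, lam: n x (n p) arrays over the non-intercept coefficients;
    own: boolean array of the same shape, True on S_kappa1; xi = (xi_1, xi_2)."""
    sq = (alpha - m) ** 2 / (2.0 * lam * C)
    kappa = np.empty(2)
    for idx, mask in enumerate((own, ~own)):
        shape = (mask.sum() + 1) / 2.0     # (np + 1)/2 or ((n - 1) n p + 1)/2
        rate = 1.0 / xi[idx] + sq[mask].sum()
        kappa[idx] = 1.0 / rng.gamma(shape, 1.0 / rate)
    return kappa


# Sanity check with n = 2, p = 1: E(1 / kappa_1) should equal shape / rate
rng = np.random.default_rng(9)
n, p = 2, 1
alpha = np.array([[0.5, 0.1], [-0.2, 0.4]])
m, C, lam = np.zeros((n, n * p)), np.ones((n, n * p)), np.ones((n, n * p))
own = np.eye(n, dtype=bool)                # lag-1 coefficient on the own variable
xi = np.array([1.0, 1.0])
draws = np.array([draw_kappa(alpha, m, C, lam, own, xi, rng) for _ in range(20_000)])
shape1 = (n * p + 1) / 2.0
rate1 = 1.0 + (0.5**2 + 0.4**2) / 2.0
print(np.mean(1.0 / draws[:, 0]), shape1 / rate1)
```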

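Steps 5-6. It is straightforward to sample the latent variables $\boldsymbol{\nu}$ and $\boldsymbol{\xi}$. In particular, it follows from (9) that $(\nu_{i,j} \mid \lambda_{i,j}) \sim \mathcal{IG}(1, 1 + \lambda_{i,j}^{-1})$. Similarly, from (10) we have $(\xi_l \mid \kappa_l) \sim \mathcal{IG}(1, 1 + \kappa_l^{-1})$, $l = 1, 2$.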
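Both latent-variable updates share the form $\mathcal{IG}(1, 1 + 1/v)$, so a single helper covers Step 5 (with $v = \lambda_{i,j}$) and Step 6 (with $v = \kappa_l$). The function name and the $\kappa$ values below are hypothetical; the check again uses the exact median $b/\log 2$ of $\mathcal{IG}(1, b)$.

```python
import numpy as np


def draw_latent_scale(v, rng):
    """Elementwise draw from IG(1, 1 + 1/v): nu | lambda (Step 5), xi | kappa (Step 6)."""
    rate = 1.0 + 1.0 / np.asarray(v, dtype=float)
    return 1.0 / rng.gamma(1.0, 1.0 / rate)


rng = np.random.default_rng(10)
nu_draws = draw_latent_scale(np.full(100_000, 0.5), rng)   # Step 5 with lambda = 0.5
xi_draw = draw_latent_scale(np.array([0.04, 0.002]), rng)  # Step 6, hypothetical kappa
print(np.median(nu_draws), 3.0 / np.log(2.0))               # rate is 1 + 1/0.5 = 3
```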

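Step 7. The log-volatility vector for each equation, $\mathbf{h}_{i\cdot} = (h_{i,1}, \ldots, h_{i,T})'$, can be sampled using the auxiliary mixture sampler of Kim, Shephard, and Chib (1998) once we obtain the orthogonalized errors. More specifically, we first compute $\mathbf{E} = (\mathbf{Y} - \mathbf{X}\mathbf{A})\mathbf{B}_0'$ using (11). Then, we transform the $i$-th column of $\mathbf{E}$ via $\mathbf{e}_i^* = (\log e_{i,1}^2, \ldots, \log e_{i,T}^2)'$, where $e_{i,t}$ is the $t$-th element of $\mathbf{e}_i$. Finally, we implement the auxiliary mixture sampler in conjunction with the precision sampler of Chan and Jeliazkov (2009) to sample $\mathbf{h}_{i\cdot}$ using $\mathbf{e}_i^*$ as data.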
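The data construction in Step 7 can be sketched as follows; the auxiliary mixture sampler and the precision sampler themselves are not shown. The function name is hypothetical, and this sketch takes logs of the squared errors directly, whereas some implementations add a small offset inside the logarithm to guard against exact zeros. The usage part simulates data from (4) and confirms that (11) recovers the structural errors.

```python
import numpy as np


def ksc_data(Y, X, A, B0):
    """Orthogonalized errors E = (Y - X A) B0' from (11) and their log squares.
    Column i of the second output is the data e_i^* for h_{i.}."""
    E = (Y - X @ A) @ B0.T
    return E, np.log(E**2)


rng = np.random.default_rng(11)
T, n = 40, 3
X = np.column_stack([np.ones(T), rng.standard_normal((T, n))])
A = 0.1 * rng.standard_normal((n + 1, n))
B0 = np.eye(n) + 0.3 * rng.standard_normal((n, n))
# Structural errors with heteroscedastic scales exp(h_{i,t} / 2)
eps = np.exp(0.5 * rng.standard_normal((T, n))) * rng.standard_normal((T, n))
Y = X @ A + np.linalg.solve(B0, eps.T).T   # y_t' = x_t' A + (B0^{-1} eps_t)'
E, E_star = ksc_data(Y, X, A, B0)
```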

Steps 8-9. These two steps are standard; see, e.g., Chan and Hsiao (2014).

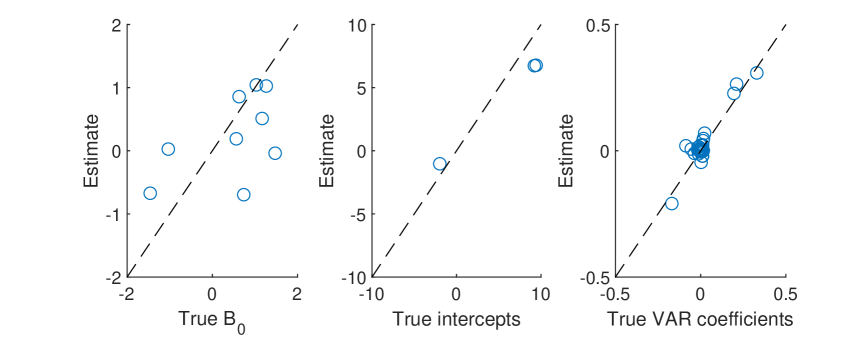

5 Experiments with Artificial Data

In this section we first present results from two artificial data experiments to illustrate how the new model performs under DGPs with and without stochastic volatility. In the first experiment, we generate a dataset from the VAR in (4) with , , and the stochastic volatility processes as specified in (3). We then estimate the model using the posterior sampler outlined in the previous section. The first dataset is generated as follows. First, the intercepts are drawn independently from , the uniform distribution on the interval . For the VAR coefficients, the diagonal elements of the first VAR coefficient matrix are iid and the off-diagonal elements are from ; all other elements of the $j$-th ($j = 2, \ldots, p$) VAR coefficient matrices are iid . For the impact matrix $\mathbf{B}_0$, the diagonal elements are iid , whereas the off-diagonal elements are iid . Finally, for the SV processes, we set and . The results of the artificial data experiments are reported in Figures 1-3.

Overall, all the estimates track the true values closely. In particular, the new model is able to recover the time-varying reduced-form covariance matrices. In addition, it is evident that the impact matrix $\mathbf{B}_0$ can be estimated accurately.

In the second experiment, we generate data from the same VAR but with the stochastic volatility component turned off so that the errors are homoscedastic (in particular, the log-volatilities are set to zero, so that $\mathbf{D}_t = \mathbf{I}_n$). In other words, we generate data from a homoscedastic, unidentified model, but estimate it using the (mis-specified) heteroscedastic model with the posterior sampler outlined in the previous sections. The results are reported in Figures 4-6.

When the DGP does not have stochastic volatility, elements of the impact matrix are much harder to pin down (as expected since these parameters are not identified). But it is interesting to note that the estimates of the time-varying variances are still able to track the true values fairly closely (as these reduced-form error variances are identified). The VAR coefficients, as well, are well-estimated.

Finally, we document the runtimes of estimating OI-VAR-SV models of different dimensions to assess how well the posterior sampler scales to higher dimensions. More specifically, we report in Table 1 the computation times (in minutes) to obtain 10,000 posterior draws from the proposed OI-VAR-SV model for several dimensions $n$ and sample sizes $T$. The posterior sampler is implemented in MATLAB on a desktop with an Intel Core i7-7700 @3.60 GHz processor and 64 GB memory. It is evident from the table that for typical applications with 15-30 variables, the OI-VAR-SV model can be estimated quickly. More generally, its estimation time is comparable to that of the triangular model in Carriero, Clark, and Marcellino (2019).

| 2.5 | 17.5 | 233 | 6.8 | 39.7 | 630 |

6 Sensitivity to Ordering in the VAR-SV of Cogley and Sargent (2005)

In the preceding sections, we have developed Bayesian methods for order-invariant inference in VARs with SV. In this section, we illustrate the importance of this by showing the degree to which results are sensitive to ordering in the VAR-SV of Cogley and Sargent (2005), hereafter CS-VAR-SV, which is one of the most popular VAR-SV specifications in empirical macroeconomics. We do this using both simulated and real macroeconomic data. To ensure comparability, we use the same prior for the CS-VAR-SV as for our OI-VAR-SV. The only difference is the prior on the impact matrix $\mathbf{B}_0$. For the CS-VAR-SV, $\mathbf{B}_0$ is unit lower triangular, and we assume the lower triangular elements have prior distribution . For the OI-VAR-SV, we assume the same prior for the off-diagonal elements of $\mathbf{B}_0$, whereas the diagonal elements are iid . MCMC estimation of the CS-VAR-SV is carried out using the algorithm of Carriero, Chan, Clark, and Marcellino (2021).

6.1 A Simulation Experiment

As discussed in Primiceri (2005), the Cholesky structure of the model in Cogley and Sargent (2005) is unable to accommodate certain co-volatility patterns. To illustrate this point, we simulate observations from the model in (3) and (4) with and . To investigate the consequences of the lower triangular restriction we choose a non-triangular data generating process (DGP). We emphasise that the presence of SV in the DGP means that the model is identified. We set

The VAR coefficients and volatilities are simulated in exactly the same way as described in Section 5. Figure 7 reports the estimated time-varying reduced-form error covariance matrices produced by the CS-VAR-SV and OI-VAR-SV models.

It is clear from the figure that the estimates from the CS-VAR-SV model tend to be flat and do not capture the time variation in the error covariances well. This is perhaps not surprising, as $\mathbf{B}_0$ is restricted to be lower triangular in the CS-VAR-SV. In contrast, the OI-VAR-SV tracks the true error covariances quite well. Since error covariances play an important role in features of interest such as impulse responses, this illustrates the potential negative consequences of working with triangularized systems.

6.2 Ordering Issues in a 20-Variable VAR

Next we provide a simple illustration of how the choice of ordering can influence empirical results in large VARs, using a dataset of 20 popular US monthly variables obtained from the FRED-MD database (McCracken and Ng, 2016). The sample period is from 1959:03 to 2019:12. These variables, along with their transformations, are listed in Appendix A. Four core variables, namely, industrial production, the unemployment rate, PCE inflation and the Federal funds rate, are ranked as the first to the fourth variables, respectively. The remaining 16 variables are ordered as in Carriero, Clark, and Marcellino (2019). We estimate the OI-VAR-SV and CS-VAR-SV models with the variables in this order (we call these models OI-VAR-SV-1 and CS-VAR-SV-1). Then we reverse the order of the variables and re-estimate them (these models are OI-VAR-SV-2 and CS-VAR-SV-2).

Estimates (posterior means) of reduced-form variances and covariances of selected variables are reported in Figure 8 and Figure 9. That is, the first figure reports selected diagonal elements of the reduced-form error covariance matrix $\boldsymbol{\Sigma}_t = \mathbf{B}_0^{-1}\mathbf{D}_t(\mathbf{B}_0^{-1})'$ under two different variable orderings, whereas the second figure reports selected off-diagonal elements of $\boldsymbol{\Sigma}_t$.

There are three points to note about these figures. First, as expected, estimates produced by the OI-VAR-SV model under the two different variable orderings are identical (up to MCMC approximation error). Second, estimates produced by the CS-VAR-SV model under the two different variable orderings are often similar to one another, but occasionally differ, particularly in periods of high volatility. Third, estimates from the CS-VAR-SV models are often similar to the OI-VAR-SV models, but sometimes are substantially different, particularly in the error covariances.

We next present results relating to $\mathbf{B}_0$, which highlight the differences between our OI-VAR-SV-1 and the CS-VAR-SV-1.³ Figure 10 reports the posterior means of $\mathbf{B}_0$ for these two models. Note that the two panels of the figure are very different. Part of this difference is due to the fact that, under the OI-VAR-SV, the SV processes are assumed to have zero unconditional means and the diagonal elements of $\mathbf{B}_0$ are unrestricted. Hence, the diagonal elements of $\mathbf{B}_0$ play a key role in adjusting the scale of the error variances. In contrast, the diagonal elements of $\mathbf{B}_0$ are restricted to be one for the CS-VAR-SV model. But the key difference lies in the upper triangular elements of $\mathbf{B}_0$. These are restricted to be zero in the CS-VAR-SV, yet several of them are estimated to be large in magnitude using our OI-VAR-SV model. The data strongly support an unrestricted impact matrix $\mathbf{B}_0$.

³ Note that we are comparing the two models under the first ordering of the variables. The comparable figure using the second ordering reveals similar patterns.

Next, we report the posterior means of the shrinkage hyperparameters $\kappa_1$ and $\kappa_2$ under the two models with two different orderings of the variables. As is clear from the table, the estimates of $\kappa_1$ and $\kappa_2$ can differ substantially when using the CS-VAR-SV model, depending on how the variables are ordered. For example, the estimate of one of these hyperparameters increases by 84% when one reverses the order of the variables. Of course, under the proposed order-invariant model the estimates are the same (up to MCMC approximation error).

| | CS-VAR-SV-1 | CS-VAR-SV-2 | OI-VAR-SV-1 | OI-VAR-SV-2 |
|---|---|---|---|---|
| | 0.0177 | 0.0170 | 0.0356 | 0.0358 |

7 A Forecasting Exercise

Since VARs are commonly used for forecasting, it is natural to investigate how the sensitivity of the CS-VAR-SV models to variable ordering affects forecasts, and whether our order-invariant specification can improve them. Accordingly, we carry out a forecasting exercise using the monthly macroeconomic dataset of sub-section 6.2 for the four models (OI-VAR-SV-1, OI-VAR-SV-2, CS-VAR-SV-1 and CS-VAR-SV-2). Forecast performance is evaluated from 1970:03 until the end of the sample. Root mean squared forecast errors (RMSFEs) are used to evaluate the quality of point forecasts, and averages of log predictive likelihoods (ALPLs) are used to evaluate the quality of density forecasts. We focus on four core variables: industrial production, the unemployment rate, PCE inflation and the Federal funds rate. Results for three different forecast horizons are presented in Table 3. Longer-horizon forecasts are computed using the iterated method.
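The iterated method computes an h-step-ahead forecast by applying the one-step VAR recursion h times, feeding each forecast back in as a lagged value. The sketch below is a minimal illustration for a single set of coefficients. It assumes a VAR(p) of the form y_t = c + A_1 y_{t-1} + ... + A_p y_{t-p} + ε_t with zero-mean shocks. In a Bayesian setting the recursion would be applied to each posterior draw (with simulated future shocks for density forecasts), which this sketch omits. The function name `iterated_forecast` and its arguments are hypothetical.

```python
import numpy as np


def iterated_forecast(intercept, A, history, h):
    """h-step-ahead point forecast from a VAR(p) by iterating the one-step recursion.

    intercept: (n,) vector; A: list of p (n, n) lag matrices [A_1, ..., A_p];
    history: (T, n) array of observations, most recent last; h: horizon >= 1.
    Future shocks are set to their mean of zero, so this is the conditional mean
    for one set of coefficients (a Bayesian version would average over draws).
    """
    if h < 1:
        raise ValueError("horizon h must be at least 1")
    intercept = np.asarray(intercept, dtype=float)
    p = len(A)
    # lags[0] = y_T, lags[1] = y_{T-1}, ..., lags[p-1] = y_{T-p+1}
    lags = [np.asarray(history[-j], dtype=float) for j in range(1, p + 1)]
    for _ in range(h):
        y_next = intercept + sum(A_j @ y_lag for A_j, y_lag in zip(A, lags))
        lags = [y_next] + lags[:-1]   # the new forecast becomes the first lag
    return lags[0]


# Usage: a hypothetical bivariate VAR(1) with A_1 = 0.5 I and no intercept.
A1 = 0.5 * np.eye(2)
history = np.array([[0.0, 0.0], [2.0, 4.0]])
print(iterated_forecast(np.zeros(2), [A1], history, h=2).tolist())  # [0.5, 1.0]
```

The alternative, direct forecasting, would estimate a separate regression for each horizon; the iterated approach reuses the one-step model for all horizons.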

Note first that, as expected, OI-VAR-SV-1 and OI-VAR-SV-2 produce the same forecasts (up to MCMC approximation error). In contrast, CS-VAR-SV-1 and CS-VAR-SV-2 often produce forecasts that differ substantially both from one another and from those produced by the order-invariant approaches. These differences are small in the RMSFEs but pronounced in the ALPLs, which suggests that ordering issues are more important for density forecasts than for point forecasts. This is not surprising: ordering issues are important for the multivariate stochastic volatility process, which plays an important role in determining predictive variances, but not for the VAR coefficients, which are the key determinants of predictive means. These findings are similar to those found in smaller VAR-SVs by Arias, Rubio-Ramirez, and Shin (2021).
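The point about predictive means versus predictive variances can be made concrete with a small sketch. Two hypothetical forecasters share the same predictive means but differ in predictive spread. RMSFE depends only on the means, so it cannot tell them apart. The log predictive likelihood, by contrast, penalises the overconfident spread. The Gaussian predictive density, the helper names `rmsfe` and `alpl_gaussian`, and all numbers are assumptions for illustration. In the paper the predictive likelihoods come from the estimated VAR-SV models.

```python
import math


def rmsfe(means, actuals):
    """Root mean squared forecast error of point forecasts (predictive means)."""
    return math.sqrt(sum((y - m) ** 2 for m, y in zip(means, actuals)) / len(actuals))


def alpl_gaussian(means, sds, actuals):
    """Average log predictive likelihood, assuming Gaussian predictive densities."""
    total = 0.0
    for m, s, y in zip(means, sds, actuals):
        total += -0.5 * math.log(2 * math.pi * s ** 2) - 0.5 * ((y - m) / s) ** 2
    return total / len(actuals)


actuals = [0.3, -0.1, 0.4, 1.2, -0.8]   # hypothetical realised values
means = [0.1, 0.0, 0.2, 0.5, -0.2]      # identical predictive means for both forecasters
sds_a = [0.5] * 5                       # forecaster A: wider predictive spread (hypothetical)
sds_b = [0.1] * 5                       # forecaster B: overconfident spread (hypothetical)

# RMSFE uses only the means, so it is identical for A and B.
print(rmsfe(means, actuals))
# ALPL (higher is better) separates them: A scores well above B here.
print(alpl_gaussian(means, sds_a, actuals), alpl_gaussian(means, sds_b, actuals))
```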

A careful examination of the ALPL results indicates that the best forecasting model is almost always an OI-VAR-SV model, and in most cases the forecast improvements are statistically significant relative to the CS-VAR-SV-1 benchmark. A comparison of CS-VAR-SV-1 with CS-VAR-SV-2 yields no simple conclusion. Recall that CS-VAR-SV-1 uses an ordering similar to that of Carriero, Clark, and Marcellino (2019). For most variables and forecast horizons, this ordering leads to higher ALPLs than the reverse ordering, a finding that is also consistent with the results in Arias, Rubio-Ramirez, and Shin (2021). There are exceptions, however, such as forecasting the Fed funds rate at two of the three horizons. The important point is not that, when using CS-VAR-SV models, one ordering is better than the other. Rather, it is that ordering matters (often in a statistically significant way) and that different variables prefer different orderings: no single ordering forecasts all of the variables best.

| Variable | Model | RMSFE | RMSFE | RMSFE | ALPL | ALPL | ALPL |
|---|---|---|---|---|---|---|---|
| Industrial production | CS-VAR-SV-1 | 0.007 | 0.007 | 0.007 | 2.071 | 2.159 | 2.266 |
| | CS-VAR-SV-2 | 0.007∗∗ | 0.007∗∗ | 0.007∗∗ | 1.522∗∗∗ | 1.651∗∗∗ | 1.767∗∗∗ |
| | OI-VAR-SV-1 | 0.007 | 0.007 | 0.007∗∗∗ | 3.660∗∗∗ | 3.458∗∗∗ | 3.360∗∗∗ |
| | OI-VAR-SV-2 | 0.007 | 0.007 | 0.007∗∗∗ | 3.659∗∗∗ | 3.459∗∗∗ | 3.358∗∗∗ |
| Unemployment rate | CS-VAR-SV-1 | 0.159 | 0.169 | 0.173 | 0.005 | 0.689 | 0.707 |
| | CS-VAR-SV-2 | 0.158 | 0.169 | 0.173 | 0.124 | 0.761 | 0.742 |
| | OI-VAR-SV-1 | 0.158 | 0.167 | 0.173 | 0.463∗∗∗ | 0.276 | 0.152 |
| | OI-VAR-SV-2 | 0.158 | 0.167∗ | 0.173 | 0.463∗∗∗ | 0.276 | 0.156 |
| PCE inflation | CS-VAR-SV-1 | 0.002 | 0.002 | 0.002 | 2.210 | 2.311 | 2.425 |
| | CS-VAR-SV-2 | 0.002∗∗∗ | 0.002 | 0.002 | 1.811∗∗∗ | 1.919∗∗∗ | 2.024∗∗∗ |
| | OI-VAR-SV-1 | 0.002∗∗ | 0.002 | 0.002∗ | 4.821∗∗∗ | 4.448∗∗∗ | 4.249∗∗∗ |
| | OI-VAR-SV-2 | 0.002∗∗ | 0.002 | 0.002 | 4.823∗∗∗ | 4.453∗∗∗ | 4.260∗∗∗ |
| Federal funds rate | CS-VAR-SV-1 | 0.492 | 1.566 | 2.216 | 0.222 | 8.473 | 16.704 |
| | CS-VAR-SV-2 | 0.497 | 1.597∗ | 2.264 | 0.327∗∗∗ | 6.708∗∗∗ | 12.474∗∗∗ |
| | OI-VAR-SV-1 | 0.493 | 1.576 | 2.233 | 0.294∗∗∗ | 6.956∗ | 13.466∗ |
| | OI-VAR-SV-2 | 0.494 | 1.570 | 2.236 | 0.296∗∗∗ | 7.039 | 13.662 |

Note: Under each criterion, the three columns correspond to the three forecast horizons. The bold figure indicates the best model in each case. ∗, ∗∗ and ∗∗∗ denote significance at the 10%, 5% and 1% levels, respectively, for a two-sided Diebold and Mariano (1995) test. The benchmark model is CS-VAR-SV-1.
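The significance stars above come from Diebold and Mariano (1995) tests against the CS-VAR-SV-1 benchmark. This section does not state the exact implementation used for Table 3 (loss function, variance estimator, small-sample corrections). The sketch below therefore shows only the basic two-sided statistic. It uses a rectangular (truncated uniform) lag window with h − 1 autocovariances and a standard normal reference distribution. For density forecasts, one natural loss is the negative log predictive likelihood. The function name `diebold_mariano` and the data are hypothetical.

```python
import math


def diebold_mariano(loss_a, loss_b, h=1):
    """Two-sided Diebold-Mariano test of equal expected loss.

    loss_a, loss_b: per-period losses of two forecasts (e.g. squared errors, or
    negative log predictive likelihoods); h: forecast horizon, which sets the
    number of autocovariances (h - 1) in the long-run variance.
    A positive statistic means forecast a has the higher average loss.
    Returns (statistic, p_value) using the standard normal approximation.
    """
    d = [a - b for a, b in zip(loss_a, loss_b)]   # loss differential d_t
    T = len(d)
    d_bar = sum(d) / T

    def autocov(k):
        return sum((d[t] - d_bar) * (d[t - k] - d_bar) for t in range(k, T)) / T

    # Rectangular lag window with h - 1 lags; it can turn negative in small samples.
    lrv = autocov(0) + 2 * sum(autocov(k) for k in range(1, h))
    if lrv <= 0:
        raise ValueError("non-positive long-run variance estimate")
    stat = d_bar / math.sqrt(lrv / T)
    p_value = math.erfc(abs(stat) / math.sqrt(2))  # equals 2 * (1 - Phi(|stat|))
    return stat, p_value


# Hypothetical negative log predictive likelihoods for a benchmark and a rival model.
bench = [1.2, 0.9, 1.5, 1.1, 1.3, 1.0, 1.4, 1.2]
rival = [0.8, 0.7, 1.0, 0.9, 1.1, 0.6, 1.0, 0.9]
stat, p = diebold_mariano(bench, rival, h=1)
print(round(stat, 2), round(p, 4))
```

With these made-up numbers the rival has uniformly lower loss, so the statistic is positive and the p-value is small.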

8 Conclusions

In this paper, we have demonstrated, both theoretically and empirically, the consequences of working with VAR-SVs that use Cholesky decompositions of the error covariance matrices, such as that used in Cogley and Sargent (2005), and are thus not order invariant. We have proposed a new, order-invariant specification that works with an unrestricted impact matrix. Such a model would be unidentified in a homoscedastic VAR, but we draw on Bertsche and Braun (2020) to establish that incorporating SV identifies the model. We have developed an MCMC algorithm that allows for Bayesian inference and prediction in our order-invariant model. In an empirical exercise involving macroeconomic variables, we demonstrate the ability of our methods to produce accurate forecasts in a computationally efficient manner.

Appendix A: Data Description

This appendix provides details of the monthly dataset used in the forecasting exercise. The variables and their transformations are the same as in Carriero, Clark, and Marcellino (2019).

| Variable | Mnemonic | Transformation |
|---|---|---|
| Real personal income | RPI | |
| Real PCE | DPCERA3M086SBEA | |
| Real manufacturing and trade sales | CMRMTSPLx | |
| Industrial production | INDPRO | |
| Capacity utilization in manufacturing | CUMFNS | |
| Civilian unemployment rate | UNRATE | |
| Total nonfarm employment | PAYEMS | |
| Hours worked: goods-producing | CES0600000007 | no transformation |
| Average hourly earnings: goods-producing | CES0600000008 | |
| PPI for finished goods | WPSFD49207 | |
| PPI for commodities | PPICMM | |
| PCE price index | PCEPI | |
| Federal funds rate | FEDFUNDS | no transformation |
| Total housing starts | HOUST | log |
| S&P 500 price index | S&P 500 | |
| U.S.-U.K. exchange rate | EXUSUKx | |
| 1 yr. Treasury-FEDFUNDS spread | T1YFFM | no transformation |
| 10 yr. Treasury-FEDFUNDS spread | T10YFFM | no transformation |
| BAA-FEDFUNDS spread | BAAFFM | no transformation |
| ISM: new orders index | NAPMNOI | no transformation |

References


- Arias, J. E., J. F. Rubio-Ramirez, and M. Shin (2021): “Macroeconomic forecasting and variable ordering in multivariate stochastic volatility models,” Federal Reserve Bank of Philadelphia Working Papers.
- Asai, M., and M. McAleer (2009): “The structure of dynamic correlations in multivariate stochastic volatility models,” Journal of Econometrics, 150(2), 182–192.
- Banbura, M., D. Giannone, and L. Reichlin (2010): “Large Bayesian vector auto regressions,” Journal of Applied Econometrics, 25(1), 71–92.
- Bertsche, D., and R. Braun (2020): “Identification of structural vector autoregressions by stochastic volatility,” Journal of Business and Economic Statistics, just-accepted, 1–39.
- Bognanni, M. (2018): “A class of time-varying parameter structural VARs for inference under exact or set identification,” FRB of Cleveland Working Paper.
- Carriero, A., J. C. C. Chan, T. E. Clark, and M. G. Marcellino (2021): “Corrigendum to: Large Bayesian vector autoregressions with stochastic volatility and non-conjugate priors,” working paper.
- Carriero, A., T. E. Clark, and M. G. Marcellino (2016): “Common drifting volatility in large Bayesian VARs,” Journal of Business and Economic Statistics, 34(3), 375–390.
- Carriero, A., T. E. Clark, and M. G. Marcellino (2019): “Large Bayesian vector autoregressions with stochastic volatility and non-conjugate priors,” Journal of Econometrics, 212(1), 137–154.
- Carvalho, C. M., N. G. Polson, and J. G. Scott (2010): “The horseshoe estimator for sparse signals,” Biometrika, 97(2), 465–480.
- Chan, J. C. C. (2020): “Large Bayesian VARs: A flexible Kronecker error covariance structure,” Journal of Business and Economic Statistics, 38(1), 68–79.
- Chan, J. C. C. (2021): “Minnesota-type adaptive hierarchical priors for large Bayesian VARs,” International Journal of Forecasting, 37(3), 1212–1226.
- Chan, J. C. C., A. Doucet, R. León-González, and R. W. Strachan (2018): “Multivariate stochastic volatility with co-heteroscedasticity,” CAMA Working Paper.
- Chan, J. C. C., and C. Y. L. Hsiao (2014): “Estimation of stochastic volatility models with heavy tails and serial dependence,” in Bayesian Inference in the Social Sciences, ed. by I. Jeliazkov, and X.-S. Yang, pp. 159–180. John Wiley & Sons, Hoboken.
- Chan, J. C. C., and I. Jeliazkov (2009): “Efficient simulation and integrated likelihood estimation in state space models,” International Journal of Mathematical Modelling and Numerical Optimisation, 1(1), 101–120.
- Clark, T. E. (2011): “Real-time density forecasts from Bayesian vector autoregressions with stochastic volatility,” Journal of Business and Economic Statistics, 29(3), 327–341.
- Cogley, T., and T. J. Sargent (2005): “Drifts and volatilities: Monetary policies and outcomes in the post WWII US,” Review of Economic Dynamics, 8(2), 262–302.
- Diebold, F. X., and R. S. Mariano (1995): “Comparing predictive accuracy,” Journal of Business and Economic Statistics, 13(3), 253–263.
- Kastner, G. (2019): “Sparse Bayesian time-varying covariance estimation in many dimensions,” Journal of Econometrics, 210(1), 98–115.
- Kim, S., N. Shephard, and S. Chib (1998): “Stochastic volatility: Likelihood inference and comparison with ARCH models,” Review of Economic Studies, 65(3), 361–393.
- Lanne, M., H. Lütkepohl, and K. Maciejowska (2010): “Structural vector autoregressions with Markov switching,” Journal of Economic Dynamics and Control, 34(2), 121–131.
- Lewis, D. J. (2021): “Identifying shocks via time-varying volatility,” Review of Economic Studies.
- Makalic, E., and D. F. Schmidt (2016): “A simple sampler for the horseshoe estimator,” IEEE Signal Processing Letters, 23(1), 179–182.
- McCracken, M. W., and S. Ng (2016): “FRED-MD: A monthly database for macroeconomic research,” Journal of Business and Economic Statistics, 34(4), 574–589.
- Philipov, A., and M. Glickman (2006): “Multivariate stochastic volatility via Wishart processes,” Journal of Business and Economic Statistics, 24, 313–328.
- Prado, R., and M. West (2010): Time Series: Modeling, Computation, and Inference. Chapman and Hall/CRC.
- Primiceri, G. E. (2005): “Time varying structural vector autoregressions and monetary policy,” Review of Economic Studies, 72(3), 821–852.
- Uhlig, H. (1997): “Bayesian vector autoregressions with stochastic volatility,” Econometrica, 65(1), 59–73.
- Villani, M. (2009): “Steady-state priors for vector autoregressions,” Journal of Applied Econometrics, 24(4), 630–650.
- Waggoner, D., and T. Zha (2003): “A Gibbs sampler for structural vector autoregressions,” Journal of Economic Dynamics and Control, 28(2), 349–366.
- West, M., and J. Harrison (2006): Bayesian Forecasting and Dynamic Models. Springer Science & Business Media.