Large Language Model Assisted Optimal Bidding of BESS in FCAS Market: An AI-agent based Approach

Abstract

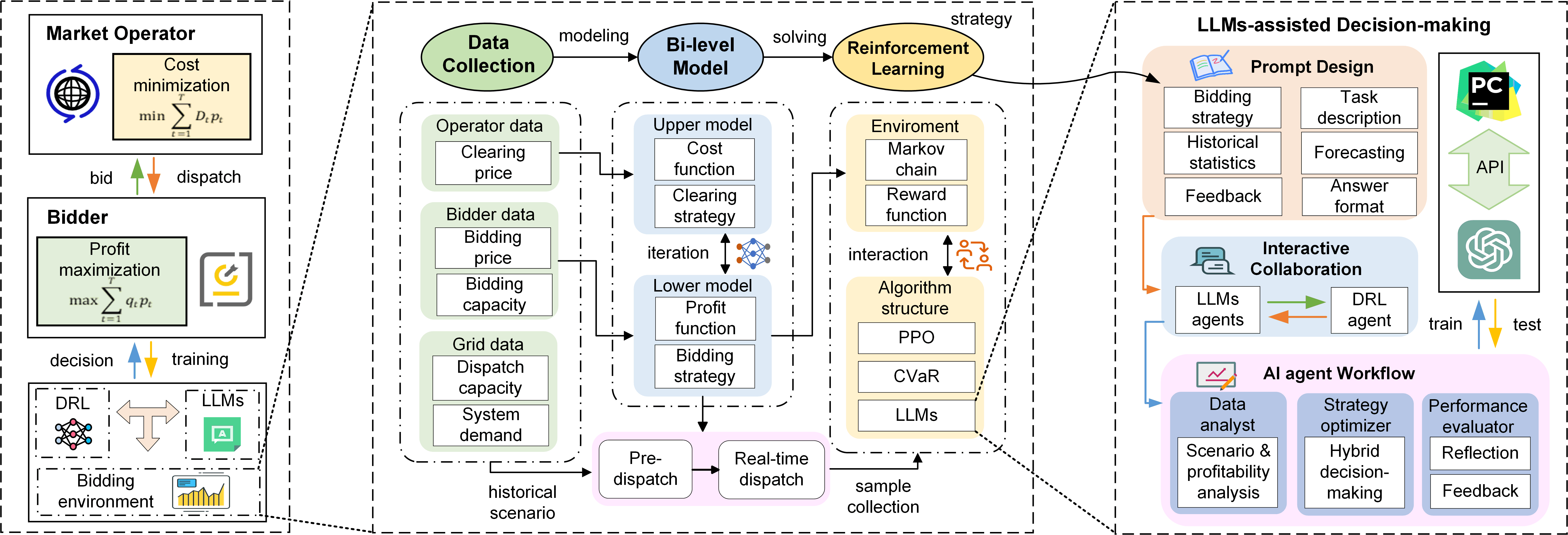

To incentivize flexible resources such as Battery Energy Storage Systems (BESSs) to offer Frequency Control Ancillary Services (FCAS), Australia’s National Electricity Market (NEM) has implemented changes in recent years towards shorter-term bidding rules and faster service requirements. However, firstly, existing bidding optimization methods often overlook or oversimplify the key aspects of FCAS market procedures, resulting in an inaccurate depiction of the market bidding process. Thus, the BESS bidding problem is modeled based on the actual bidding records and the latest market specifications and then formulated as a deep reinforcement learning (DRL) problem. Secondly, the erratic decisions of the DRL agent caused by imperfectly predicted market information increases the risk of profit loss. Hence, a Conditional Value at Risk (CVaR)-based DRL algorithm is developed to enhance the risk resilience of bidding strategies. Thirdly, well-trained DRL models still face performance decline in uncommon scenarios during online operations. Therefore, a Large Language Models (LLMs)-assisted artificial intelligence (AI)-agent interactive decision-making framework is proposed to improve the strategy timeliness, reliability and interpretability in uncertain new scenarios, where conditional hybrid decision and self-reflection mechanisms are designed to address LLMs’ hallucination challenge. The experiment results demonstrate that our proposed framework has higher bidding profitability compared to the baseline methods by effectively mitigating the profit loss caused by various uncertainties.

Index Terms:

Energy storage, deep reinforcement learning, large language model, frequency regulation, bidding strategyI Introduction

In the pursuit of mitigating climate change and transitioning to low-carbon, sustainable goals, renewable energy development has advanced yet its extensive integration into power grids has significantly impacted system stability and security. In Australia’s National Electricity Market (NEM), the Frequency Control Ancillary Services (FCAS) market purchases frequency regulation (FR) capacities from bidders to ensure stable system frequency [1]. In 2023, a new 1-second FCAS market [2] was introduced in NEM to incentivize flexible resources such as Battery Energy Storage Systems (BESSs) to offer responses within one second. Considering the high cost of BESSs [3], it is imperative to develop highly profitable bidding strategies.

Existing studies have focused on the optimization of bidding strategies in ancillary service markets. The study in [4] adopts a “predict-then-optimize” framework for a single system in a single market, utilizing linear programming (LP) based on predicted prices to seek the optimal bidding strategy of BESS in the energy market. Furthermore, [5] applies a genetic algorithm (GA) to address the bidding problem of a wind-BESS system in multiple markets, considering the joint bidding capacity allocation in energy and frequency reserve markets. [6] models a stochastic game to find Nash equilibrium bidding solutions for multiple virtual power plants (VPPs) in energy and frequency markets. However, mathematical methods are constrained by low efficiency and an over-reliance on forecasting, hence facing challenges in making timely bidding decisions with high returns in dynamic market conditions [7]. More importantly, existing solutions are not applicable in the real-world FCAS market because the actual bidding procedure has various compulsory requirements, such as bids must be step-like over multiple bands, and bidding price modification is prohibited in the real-time market which is often ignored or oversimplified. Bidders are also often assumed to be price-takers, omitting the responses of market operators.

Artificial intelligence (AI)-based methods, such as reinforcement learning (RL) and deep RL (DRL), have been applied to bidding optimization in ancillary markets [8, 9] and can improve efficiency and get rid of the over-reliance on forecasting by learning from historical experiences [10]. In [11], inverse RL (IRL) is used to seek the objective of BESSs based on bidding data in energy and ancillary service markets. Additionally, [12] utilizes multi-agent RL (MARL) to address the bidding game among multiple VPPs while ensuring fairness among bidders. However, the aleatoric uncertainty arising from dynamic environments and the epistemic uncertainty caused by limited training data remain challenges limiting the practical applications of DRL [13].

In DRL bidding problems, the aleatoric uncertainty comes from uncertain market behaviors and imperfect insight and results in DRL agents making erratic decisions under imperfectly predictable information. Conditional value-at-risk (CVaR), as a popular risk measure, can enhance economic benefits by managing market risk [14]. In [6], CVaR is integrated into a risk-averse mathematical model to reduce the risk of overbidding. If CVaR is incorporated into the DRL model to control the risk of erratic decisions of the DRL agent, the profit loss due to the aleatoric uncertainty can be effectively mitigated.

The epistemic uncertainty is from uncommon market conditions outside the training set and exposes the weakness of DRL agents performing well during training but poorly in new environments [15]. [16] suggests that Large Language Models (LLMs) are promising in driving DRL agents to make more reliable decisions in new environments, leveraging their rich knowledge and natural language processing capabilities. [17] proposes an LLMs-assist autonomous driving planning to better handle uncertain driving scenarios. [18] suggests a “GPT-in-the-loop” method, utilizing GPT to aid agent systems in interactive decision-making for better performance and interpretability. Thus, if unfaithful decisions by LLMs’ hallucination [19] can be mitigated, it will be a great opportunity to reduce DRL’s profit loss in unfamiliar market conditions through interactive collaboration between DRL and LLMs.

Therefore, this paper establishes models that comply with the actual rules of NEM, proposes a CVaR-DRL algorithm to seek the optimal bidding strategies to improve the profitability of BESS under market uncertainty and dynamic bidding risks, and builds an LLMs-assisted AI-agent interactive decision-making framework to enhance the bidding performance of the DRL agent in new scenarios through LLMs-assisted AI-agent interactive collaboration. Fig. 1 shows the framework of the bidding system. The main contributions are as follows:

-

•

To address the inability of existing bidding solutions based on models with oversimplified market procedures, price taker assumptions, linear approximations, etc., to cope with practical requirements, a bi-level bidding game problem between the market operator and the price-maker BESS is modeled based on the actual bidding records of each bidder in the FCAS market and the NEM’s two-stage multi-band bidding requirements.

-

•

To handle the weakness of traditional mathematical and DRL methods in terms of efficiency, performance and risk resilience, a CVaR-DRL model is proposed for bidding optimization to manage the risk of erratic decisions of the DRL agent and reduce the profit loss caused by uncertain market conditions and imperfect insight.

-

•

To improve the strategy timeliness, reliability, and interpretability of DRL in unfamiliar scenarios, an LLMs-assisted AI-agent interactive decision-making framework is built for online market analysis, assisted decision and interpretable feedback, where conditional hybrid decision and self-reflection mechanisms are designed to mitigate unfaithful decisions by LLMs’ hallucination.

The paper is organized as follows: Section II covers research preliminaries. Section III presents the DRL problem and proposed algorithm. Section IV analyzes case study results. Section V concludes the paper.

II Preliminaries

II-A FCAS Markets Rules and Mechanisms

In Australia’s NEM, the Australian Energy Market Operator (AEMO) ensures that the system frequency remains within the normal operation band by purchasing sufficient capacity from FCAS providers’ bids to match the FCAS demand [20]. FCAS is divided into regulation and contingency services: regulation FCAS is dispatched to correct minor frequency deviations while contingency FCAS restores larger frequency deviations when the frequency exceeds the standard band [1]. Depending on the directions of frequency correction, they can be further classified as raised services and lower services. The specific market operation has the following stages.

II-A1 Bidding Stage

The NEM operates a real-time spot market only but has a pre-dispatch stage and a real-time stage [21]. In the pre-dispatch stage, bidders are required to submit the next 24-hour bids one day in advance. A standard bid has 10 bidding bands including 10 bidding capacities and prices, and they must be monotonically increasing. In the real-time stage, the bidding prices will no longer be modified but the capacities can be flexibly adjusted via re-bids before the next trading interval [1]. This rule allows bidders to adjust the capacity allocation in dynamic real-time market conditions while ensuring the clearing prices do not experience undesired large fluctuations due to re-bidding.

II-A2 Clearing Stage

In the market clearing stage, AEMO aims to purchase sufficient bidding capacities with minimal costs. In each trading interval, AEMO will sort all bids by price and prioritize enabling bids with lower prices until the dispatched capacity exactly matches the FCAS demand. Subsequently, the highest price among all enabled bids will be selected as the clearing price [1]. It is worth noting that AEMO considers each bidder’s capacity constraints across the energy, regulation, and contingency markets, meaning that the total dispatched capacity of this bidder across these three markets cannot exceed its maximum output power limit [22].

II-A3 Settlement Stage

In the settlement stage, enabled bidders will be settled based on the enabled capacity and the clearing price. Note that the enabled capacity is different from the actually dispatched capacity. Even if a bidder is on standby as reserve capacity, it will obtain the same revenue [1].

II-B Market Clearing Model

At each trading interval , AEMO will deploy sufficient capacity from bids to meet the demand in the order of the cost. and denote the -th bidding capacity and bidding price respectively of the -th bidder at time . Then AEMO will determine the enabled capacity and the clearing price which will be settled to the -th bidder. Let denote the lower regulation, raise regulation, lower contingency, and raise contingency FCAS markets respectively. The market clearing model is as follows:

| (1a) | ||||

| s.t. | (1b) | |||

| (1c) | ||||

| (1d) | ||||

| (1e) | ||||

| (1f) | ||||

| (1g) | ||||

| (1h) | ||||

| (1i) | ||||

| (1j) | ||||

| (1k) | ||||

| (1l) | ||||

| (1m) | ||||

where the optimization objective (1a) is to minimize the costs across all FCAS markets. (1b)-(1c) aim to determine the bidding capacity of each bidder by selecting at most one bidding band from ten bands. (1d) means that AEMO can flexibly enable the capacity from the selected bidding capacity . Similar to (1b), (1e) determines the corresponding bidding price at the selected band. (1f)-(1h) aims to use the big M method to determine the maximum enabled bidding price (i.e. the clearing price ), where M is a very large value. (1i) ensures the overall enabled FCAS capacity plus the overall market supply variation is equal to the FCAS demand . (1j) ensures the joint enabled capacity of each bidder in regulation lower, contingency lower FCAS markets plus the charging capacity in energy market never exceed its maximum charging capacity . Similarly, (1k) ensures the joint enabled capacity of each bidder in regulation raise, contingency raise FCAS markets plus the discharging capacity in energy market never exceed its maximum discharging capacity . As shown in (1l), and are binaries.

II-C BESS Bidding Model

II-C1 Day-ahead Bidding Model

As required by AEMO, an initial bid with prices and capacities should be submitted 24 hours in advance. Different from the bidding capacities, the submitted bidding prices cannot be further changed.

| (2a) | ||||

| s.t. | (2b) | |||

| (2c) | ||||

| (2d) | ||||

| (2e) | ||||

| (2f) | ||||

| (2g) | ||||

| (2h) | ||||

| (2i) | ||||

| (2j) | ||||

| (2k) | ||||

| (2l) | ||||

| (2m) | ||||

| (2n) | ||||

| (2o) | ||||

| (2p) | ||||

| (2q) | ||||

| (2r) | ||||

| (2s) | ||||

where is the probability of scenario in scenario set . The optimization objective (2a) is to maximize the profits from the joint energy and FCAS markets with the minimum degradation cost within a 24-hour optimization window . Similar to (1b), (2b) is used for bidding band selection but it seeks the ranges instead of values. Specifically, since the bidding price is variable from 0 to the bidding price cap , it will span 12 prices from 0, ten bidding prices to the price cap and be located in one range from their 11 ranges and that is the reason for 11 binaries. (2c) and (2e) ensure that the bidding prices and capacities of the model are monotonically increasing. (2f)-(2g) find the bidding bands in all bids in which is located and (2d) finds the capacities in the selected bidding bands. (2h) ensures that the capacity of the BESS plus the capacities of other bidders in market plus the overall market supply variation matches the demand in market , where and are relaxation variables. (2i)-(2k) updates the state of charge (SoC) of the BESS within the SoC operation ranges, where is the FR command in market . (2l)-(2m) ensure that the charging power and the discharging power of the BESS are within the maximum charging capacity and the maximum discharging capacity and it cannot be charged and discharged simultaneously. Similar to (1j)-(1k), (2n)-(2o) ensure the joint capacities in energy and FCAS markets do not exceed the maximum capacities. (2q) calculates the coefficient to estimate the degradation cost of the BESS, where and are the battery cell cost and the maximum cycle numbers of the BESS [6]. Note that since the bid should be unique, the variables forming the bid (,, and ) are unique solutions in .

II-C2 Real-time Bidding Model

AEMO allows bidders to adjust capacities via re-bid in response to the latest changes in the real-time market, hence the real-time model aims to adjust the bidding capacities under real-time prediction and fixed bidding prices by the day-ahead model.

| (3a) | ||||

| s.t. | (3b) | |||

| (3c) | ||||

| (3d) | ||||

| (3e) | ||||

| (3f) | ||||

| (3g) | ||||

| (3h) | ||||

| (3i) | ||||

| (3j) | ||||

| (3k) | ||||

| (3l) | ||||

| (3m) | ||||

| (3n) | ||||

| (3o) | ||||

where (3a) is to determine the adjusted capacities (, and ) in the energy and FCAS markets within a 5-minute rolling window. Unlike the rough day-ahead forecasts of the 24-hour stochastic scenarios, the real-time model uses more accurate real-time deterministic forecasts as input parameters. Note that (3e)-(3f) aim to seek the located bidding bands of the clearing prices instead of the bidding prices in the day-ahead model because are considered as fixed parameters in the real-time stage. Also, the capacity adjustment in (3g) should ensure that the supply meets the demand in real time.

II-D Supply Estimation Model

Before using the above models, it is necessary to present the model that calculates the overall market supply variation mentioned in the previous models, i.e. . The clearing price is determined by both the FCAS supply and demand, taking a real-world scenario in the FCAS market as an example, bidders typically put more capacity into arbitrage during peak energy prices, and hence the clearing prices in FCAS markets will be higher to purchase capacity that can meet the demand. However, the data on clearing prices and demands are given by AEMO whereas the data on supply is incomplete. Hence, it is hard to describe the above scenarios caused by the market supply variation. To better describe the relationship between price and supply-demand balance under actual scenarios, we build a model to estimate the overall market supply variation under given clearing prices and demands .

| (4a) | ||||

| s.t. | (4b) | |||

| (4c) | ||||

| (4d) | ||||

| (4e) | ||||

| (4f) | ||||

| (4g) | ||||

| (4h) | ||||

| (4i) | ||||

| (4j) | ||||

where the optimization objective (4a) is to find the supply variation at the supply-demand balancing point. (4b)-(4e) can find the available capacity in market under the given price . Since and are given, the only unknown variable can be calculated by (4f)-(4h). can be both positive and negative, which can be divided into a positive component and a negative component .

II-E Optimization Framework

As shown in Fig. 2, we formulate the problem as a bi-level game model, where the upper-level model is the market clearing model as a leader, and the lower-level model is the BESS bidding model as a follower. First, the unknown parameter in the problem can be obtained based on the predicted clearing price and demand by solving the supply estimation model (4a). Then, in the pre-dispatch stage, the bidding price and capacity of the BESS are obtained by solving the day-ahead bidding model (2a). In the real-time stage, the bidding price and capacity of the BESS are introduced into model (1a) to obtain an initial clearing price. Then, the clearing price is introduced into model (3a) to obtain the bidding capacity. In the two-level game, model (1a) and model (3a) iteratively update their strategies until Nash equilibrium or exceed the iteration limit. Note that all the above models take the predicted market information as inputs. Finally, in the actual dispatch stage, model (4a) is solved based on the actual clearing price and demand to obtain the parameter , and then the bidding strategy from model (3a) is introduced into model (1a) to obtain the actual clearing price and enabled capacity, as well as the final actual profit of the BESS.

III Methodology

III-A MDP Formulation

To formulate the proposed problem as a DRL problem, a Markov Decision Process (MDP) including a state space , an action space , a transition probability function , and a reward function is necessary to be defined in the form of a DRL environment for the interaction with the DRL agent.

-

•

State space: A state space contains a set of states that the agent can observe from the environment. At time , the SoC of BESS , the predicted energy price , FCAS prices and demands in market are defined as states.

-

•

Action space: An action space contains a set of actions which is the bidding strategy of the agent. At time , the bidding capacities in the FCAS markets as well as the output and in the energy market are defined as actions.

-

•

Transition probability function: . The state at is uncertain under given observation and bidding strategy at .

-

•

Reward function: . When the agent takes an action and transforms to , it receives a reward . We define the profits of the BESS including the revenues in the joint energy and FCAS markets and the degradation costs as the rewards. Similar to the objective function of model (3a), the basic reward function can be written as:

(5) where and are the solutions of the market clearing model (1a), which means that there are interactions between the agent and the market.

Unlike the instantaneous rewards in FCAS, arbitrage rewards tend to be sparse and delayed. To encourage the BESS to charge before discharging and receiving rewards, the potential-based reward shaping (PBRS) [23] is employed to assist the agent in learning strategies for joint markets. The reward function (5) is modified by adding an extra reward based on prior knowledge:

| (6) |

| (7) |

where potential function measures the gap between charged energy and energy threshold . is the projector of to avoid overcharging. In general, the dense artificial reward signals drive the agent to reach the sub-goal of charging at least a certain amount of energy in each episode.

In order to ensure that the bidding strategy of the agent complies with market standards and rules, any action out of specification is limited, for we have:

| (8) |

| (9) |

| (10) |

| (11) |

where the maximum function is used to ensure the bidding capacities are monotonically increasing in each FCAS market. The minimum function is used to ensure the joint capacities in energy and FCAS markets are within the limitations of the maximum charging or discharging capacity. In addition, when is larger than , are limited to 0, and when is smaller than , are limited to 0, which ensures is always within the limitations of the SoC. Once the MDP is defined, the DRL agent will interact with the environment to obtain experience samples and learn strategies to maximize the cumulative rewards of the scenarios. After offline training, the trained model will be applied to the online bidding system as the decision engine.

III-B LLMs-assisted AI-agent Interactive Decision-making Framework

The epistemic uncertainty leads to a declined bidding performance of the DRL agent in unfamiliar scenarios outside the training set. Thus, we propose an LLMs-assisted AI-agent interactive decision-making framework to decompose the complicated bidding task into more manageable sub-tasks and deploy multiple LLMs-based agents to address the components in an orderly modular pattern. Compared to the DRL model trained on historical data, LLMs can analyze the latest market conditions in real time, enhancing the strategy timeliness. To reduce profit loss in unfamiliar scenarios, LLMs can adjust inferior strategies by conditional hybrid decisions and incrementally integrate new knowledge into DRL through MDP reshaping, thereby improving the strategy reliability. Additionally, unlike the ”black box” nature of DRL networks, LLMs can act as interpreters to provide explanations to users, thereby increasing the interpretability. An external evaluation and self-reflection mechanism are designed in the “DRL-LLMs” loop to mitigate the hallucination problem in LLMs.

III-B1 Definition of LLMs-assisted Agents

-

•

Data Analyst (Agent-1): Agent-1 aims to detect whether the state space during online operation is common in the historical training set (True) or not (False). Then it analyzes the potential profitability in each FCAS market from historical statistics, predicted price trends, and prediction quality for subsequent adjustment guidance.

-

•

Strategy Optimizer (Agent-2): The target of Agent-2 is to adopt a hybrid decision to make higher profits :

(12) To mitigate unreliable decisions by LLMs’ hallucination, we introduce another condition as an external evaluation mechanism to constrain rigidly. is enabled only when its cumulative return within a half-hour rolling horizon is higher than that of , thereby ensuring a safe profit margin. Dynamically varying conditions over time also avoid a consistent bias towards one alternative.

-

•

Performance Evaluator (Agent-3): With the ability of LLMs’ self-reflection and multi-agent interaction, Agent-3 evaluates the bidding performance of and and then provides feedback to reinforce the agents. It can also act as an interpreter for agents’ decision explanations.

III-B2 Interactive Agent Communication and Collaboration

We first define the user prompt and formalize it as which includes observation , action , reward and context to formulate the problem for LLMs.

-

•

Observation contains the state space , the action space , and the market historical statistics and prediction.

-

•

Action needs to be defined individually for each agent. For Agent-1, the actions are the detected and analyzed results on in text form. For Agent-2, the actions are the adjusted bidding capacities in numerical form. For Agent-3, the actions are the evaluation results in text form.

-

•

Reward contains the numerical performance of the decisions, which will be converted to text form as feedback.

-

•

Context includes the problem description, the task definitions, the task targets, the constraints on action , the expected answer format, and any necessary information.

For a given prompt , LLMs can observe and determine the agents’ actions in the requested format. The multi-agent workflow is organized as follows. First, Agent-1 sends its detected and analyzed results to Agent-2. Then Agent-2 performs hybrid decisions based on the current conditions and the given information. The original and the adjusted are executed synchronously in the environment to obtain the rewards and . Finally, Agent-3 provides feedback including quantitative assessments and qualitative analysis based on each agent’s performance for strategy correction at subsequent iterations.

During online operation, LLMs can realize interactive collaboration between multiple LLMs-assisted AI agents and the DRL agents by affecting the MDP in the environment. First, the tuple (,,) under the original strategy will be replaced by the new tuple (,,) under the LLMs-assisted new strategy and stored in the experience replay. Then the DRL network parameters are fine-tuned based on gradients through incremental learning from superior trajectories. Unlike the traditional method of penalizing inferior trajectories, adjusted trajectories can mitigate the profit loss in unfamiliar scenarios and drive DRL to better adapt to new environments.

III-C CVaR-DRL Algorithm

In addition to epistemic uncertainty, aleatoric uncertainty also impacts the profitability of the BESS. Due to the prediction errors, the observation of DRL agent exists certain noises in our problem instead of fixed in some traditional problems. To reduce the risk of uncertain MDP and improve the algorithm performance, the CVaR-DRL algorithm is proposed, where the proximal policy optimization (PPO) architecture is employed as the basic decision-making engine, CVaR [24] is introduced as risk metrics and the maximum entropy principle [25] is applied for model robustness enhancement.

A standard DRL aims to find a policy parameterized by that maximizes the expected cumulative reward:

| (13) |

where is a discount factor. Deterministic policy often struggles to cope with uncertainty in MDPs and tends to fall into local optimum, hence, we first combine the concept of entropy with DRL to introduce randomness into the policy so that DRL agents can fully explore the environment. Mathematically, the objective function can be modified as follows:

| (14) |

where the term maximizes the policy entropy under coefficient to encourage the agent to adopt the action distributions with high entropy and improve the generalization capabilities of policy under the uncertainty.

In addition, we introduce CVaR to quantify the expected loss under uncertainty in the worst scenarios:

| (15) |

where is the confidence level. denotes the projector of . denotes the threshold in the loss distribution. is the trajectory return under policy . Based on the optimization method in [26], (15) can be constrained by CVaR to avoid profit loss in the worst scenarios. The rewritten objective function is as follows:

| (16) |

where is the reward tolerance. Then the Lagrangian relaxation is employed to unconstrain the problem:

| (17) |

where is the Lagrange multiplier. Based on the PPO architecture, the objective function (17) can be optimized by the following gradients (18)-(20) in terms of , and :

| (18) |

| (19) | ||||

| (20) |

where is the scenario under policy . is the indicator function. is the estimated advantage function, is the importance sampling ratio, is the clip parameter and the clip function is used to remove the overestimation and underestimation by upper limit and lower limit . Typically, the advantage function is estimated by the Generalized Advantage Estimator (GAE):

| (21) |

where is the GAE parameter and V(s) is the value function.

In summary, Algorithm 1 presents the implementation and procedure of the LLMs-assisted AI-agent CVaR-DRL.

IV Case Study

The datasets in this paper are provided by AEMO, including historical clearing prices, demands, and bids in FCAS markets and energy prices in South Australia. ARIMA is employed to predict market prices and demands. The FR commands are simulated based on the regulation D signal from PJM. The scenarios are randomly sampled from the dataset in 24-hour periods. The time interval of the MDP is 5 minutes which matches the trading interval of NEM. The capacity of the BESS is 100MWh with an SoC range from 0.2 to 0.8. The charging/discharging power is 50MW with an efficiency of 0.95%. The battery cell cost is $0.5/Wh with a maximum cycle number of 10,000. is set at 40MWh. The value of is 0.9, determined by identifying the inflection point on the CVaR curve with respect to . The value of is 12,500, calculated as the average of CVaR and VaR at selected . The hyperparameter setting for the DRL algorithm is shown in Table I. LLMs employ the latest GPT-4 Turbo model.

| Hyperparameter | Value | Hyperparameter | Value |

| Batch size | 2880 | Mini-batch size | 72 |

| Actor learning rate | 0.001 | Critic learning rate | 0.001 |

| Discount factor | 0.99 | Entropy coefficient | 0.01 |

| Clip threshold | 0.2 | GAE parameter | 0.95 |

| Hidden layer | 2 | Node number | [256,256] |

| Activation function | Tanh | Optimizer | Adam |

IV-A Discussion on Market Clearing Model

The role of the market clearing model is to acquire sufficient capacities from various bidders to satisfy the market demand and establish clearing prices. Fig. 3 illustrates the clearing results for all participants in the market clearing model when the BESS bids 10MW, 30MW, and 50MW respectively. When the BESS bids 10 MW, the market clearing model has to purchase additional capacities from other bidders to ensure the supply-demand balance, resulting in higher clearing prices. As the bidding capacity of the BESS increases to 30MW, fewer capacities are needed from other bidders, leading to a decrease in clearing prices. As the BESS bids 50MW, the clearing prices are significantly lower. Thus, as a price maker, the BESS’s bidding capacities influence the market clearing prices.

IV-B BESS Cost-benefit Analysis

To assess the profitability of the mathematical and DRL models, we evaluate their benefit performance in the joint energy and FCAS markets. Fig. 4 displays the bidding results of the mathematical model, wherein the BESS bids higher capacities during price spikes in the contingency markets, while mainly allocating capacities to the regulation markets under normal conditions. Fig. 5 shows the bidding results of the DRL model, which tends to distribute its capacities more uniformly across both the contingency and regulation markets.

Fig. 6 provides statistical data on the clearing prices under both strategies, which aggregates the maximum, mean, and minimum values of each FCAS market into a 24-hour profile, along with their averaged values. Generally, the market trends show that raised markets typically have higher prices than lower markets, and regulation markets are priced above contingency markets. Nevertheless, the contingency markets exhibit greater price volatility, offering rare but large profit opportunities. In both bidding strategies, the models generally allocate more capacities to the regulation market than to the contingency markets to secure stable revenues. However, notable differences exist in handling contingency capacities: the mathematical model tends to shift regulation capacities to the contingency markets after predicting the price spikes, whereas the DRL model consistently bids a portion of capacities into the contingency market to pursue potential profit opportunities. Additionally, the clearing results are different between the models, where the peak prices achieved by the DRL model in both contingency raise and lower exceed $1,000/MW, significantly surpassing those of the mathematical model.

Table II provides a summary of the profits of the BESS under the strategies of the DRL model and the mathematical model. In the energy market, the arbitrage profits of the BESS utilizing the DRL model show an increase of 7.69% over those achieved by the mathematical model. In the regulation markets, profits from the DRL model both lower and raise regulation are increased by 62.23% and 21.90%, respectively. Additionally, the DRL model enhances profits in the contingency markets by 8.77% and 7.67% and achieves a 5.63% reduction in degradation costs. Overall, the BESS under the DRL model generates $37,163 more in profit than the mathematical model, which demonstrates the superior performance of the DRL model in maximizing bidding benefits.

| Revenue | DRL model | Mathematical model |

| Energy arbitrage | $81,240 | $75,436 |

| Regulation lower | $13,937 | $8,591 |

| Regulation raise | $98,160 | $80,525 |

| Contingency lower | $6,387 | $5,872 |

| Contingency raise | $73,199 | $67,985 |

| Degradation cost | -$47,024 | -$49,673 |

| Overall profit | $225.899 | $188,736 |

IV-C Evaluation of Bidding Risk

To assess the role of risk management in bidding strategies, we analyze the detailed distribution of bidding bands of each market for both the CVaR-DRL and the original DRL algorithms. Fig. 7 presents the box plot representation of the BESS bidding distribution for the CVaR-DRL model. The bidding bands are monotonically increasing and range from band 1 to band 10. Band1 represents the maximum capacity that can be purchased at the lowest clearing price, whereas band10 corresponds to the maximum capacity at the highest price. A higher concentration of capacity in the lower bands suggests that the BESS prioritizes having its bids accepted and disregards the risk of lower clearing prices and profits. Conversely, a concentration in the higher bands indicates a pursuit of higher clearing prices, with less consideration of the risk of bid failure. In terms of median, bidding capacities tend to be low in band1 across all markets, gradually increasing up to approximately band3, which illustrates the BESS attempts to elevate clearing prices while maintaining stable revenue. Beyond band 3, the lower extremes of capacity bids stabilize above 10MW, with the upper extremes continuing to rise slightly, indicating that in some scenarios, the BESS might incrementally increase bids to between 30MW and 35MW to further elevate the clearing prices. The outliers highlight the capacity distribution in rare cases.

Fig. 8 shows the case of the original DRL model. Compared to the proposed algorithm, the original DRL has a wider distribution both in terms of band dimension and capacity dimension. Specifically, the median on regulation capacity ceases to increase at around band 8, with the lower extreme dropping below 10MW and the upper extreme reaching up to 40MW. A similar pattern is observed in the contingency capacity, where the outliers occur significantly. This wider distribution suggests that the original DRL’s strategies are more aggressive, often disregarding the risks associated with bidding failure and potential profit loss. In contrast, the CVaR-DRL algorithm adopts a more conservative strategy by integrating the CVaR, which avoids the heavy profit loss in the worst bidding failure scenario. In terms of bidding profits, the CVaR-DRL in the same testing scenarios shows an increase of $7,879 and $1,240 in the regulation and contingency markets respectively, reflecting the profit advantages of risk management in bidding strategies.

IV-D Evaluation of LLMs’ Strategies

The offset of FCAS market patterns over time may cause the DRL agent’s original strategy to no longer adapt well to new scenarios and lead to a decline in profitability. Thus, LLMs aim to detect the scenario changes in market patterns online for better timeliness, adjust strategies within a safe profit margin for better reliability, and provide explanations to users for better interpretability.

Fig. 9 compares the bidding results under the LLMs-assisted strategy and the DRL original strategy in the new scenario outside the training set. Typically, in the price axis, the volatility of contingency market clearing prices is significantly higher than that of the regulation market because the demand is often more urgent. However, in this scenario, the regulation market experienced greater volatility. Agent-1 detected the profit opportunities arising from this abnormal market condition and hence in the capacity axis, Agent-2 partially shifted capacity from the contingency markets to regulation markets. In contrast, the original strategy of reserving more contingency capacity based on historical experience would result in profit losses in this scenario, indicating that the LLMs’ bidding strategy is more reliable in terms of profitability in such uncommon scenarios.

In the time axis, the capacity shifting occurred only during the first half of the scenario. For the rest of the period, the regulation prices had returned to common market conditions, thus the original strategy remained essentially unchanged to avoid over-reliance on LLMs’ decisions and ensure a safe profit margin considering the possibility of hallucinations. This reflects the ability of LLMs in terms of timeliness to monitor dynamic changes in market conditions online.

To better demonstrate the workflow of LLMs, we partially excerpt the prompt and answers during interaction with LLMs as shown in Fig. 10. In this process, multiple agents iteratively update their strategies through interactive collaboration based on feedback and affect the interaction between the DRL agent and the environment, thus assisting the DRL agent in making better bidding strategies in new scenarios. In addition, LLMs provide the motivation and rationale for each agent’s decision, which enhances the interpretability of the strategies.

V Conclusion

In this paper, we model the complete bidding process of BESS in the joint energy and FCAS markets. We develop the CVaR-DRL algorithm to improve the bidding robustness under aleatoric uncertainties and propose the LLMs-based framework to improve the bidding generalization capabilities under epistemic uncertainties. The experiment results show that the proposed method achieves higher bidding profits compared to the traditional mathematical methods. The CVaR-DRL better reduces the bidding risks caused by forecasting uncertainties. The LLMs enhance the bidding performance of strategies in new scenarios through online market condition analysis, hybrid decision-making, and self-reflection in the “DRL-LLMs” loop.

References

- [1] AEMO, Guide to Ancillary Services in the National Electricity Market, 2023.

- [2] AEMO, Market Ancillary Service Specification, 2023.

- [3] Y. Zheng, Z. Y. Dong, F. J. Luo, K. Meng, J. Qiu, and K. P. Wong, “Optimal allocation of energy storage system for risk mitigation of discos with high renewable penetrations,” IEEE Transactions on Power Systems, vol. 29, no. 1, pp. 212–220, 2014.

- [4] A. A. Mohamed, R. J. Best, X. Liu, and D. J. Morrow, “Single electricity market forecasting and energy arbitrage maximization framework,” IET Renewable Power Generation, vol. 16, no. 1, pp. 105–124, 2022. [Online]. Available: https://ietresearch.onlinelibrary.wiley.com/doi/abs/10.1049/rpg2.12345

- [5] L. Feng, X. Zhang, C. Li, X. Li, B. Li, J. Ding, C. Zhang, H. Qiu, Y. Xu, and H. Chen, “Optimization analysis of energy storage application based on electricity price arbitrage and ancillary services,” Journal of Energy Storage, vol. 55, p. 105508, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2352152X22015006

- [6] Z. Wang, C. Li, X. Zhou, R. Xie, X. Li, and Z. Dong, “Stochastic bidding for vpps enabled ancillary services: A case study,” Applied Energy, vol. 352, p. 121918, 2023. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0306261923012825

- [7] C. Guo, X. Wang, Y. Zheng, and F. Zhang, “Real-time optimal energy management of microgrid with uncertainties based on deep reinforcement learning,” Energy, vol. 238, p. 121873, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0360544221021216

- [8] Y. Li, N. Yu, and W. Wang, “Machine learning-driven virtual bidding with electricity market efficiency analysis,” IEEE Transactions on Power Systems, vol. 37, no. 1, pp. 354–364, 2022.

- [9] W. Chen, J. Qiu, J. Zhao, Q. Chai, and Z. Y. Dong, “Customized rebate pricing mechanism for virtual power plants using a hierarchical game and reinforcement learning approach,” IEEE Transactions on Smart Grid, vol. 14, no. 1, pp. 424–439, 2023.

- [10] Y. Ye, D. Qiu, X. Wu, G. Strbac, and J. Ward, “Model-free real-time autonomous control for a residential multi-energy system using deep reinforcement learning,” IEEE Transactions on Smart Grid, vol. 11, no. 4, pp. 3068–3082, 2020.

- [11] Q. Tang, H. Guo, and Q. Chen, “Multi-market bidding behavior analysis of energy storage system based on inverse reinforcement learning,” IEEE Transactions on Power Systems, vol. 37, no. 6, pp. 4819–4831, 2022.

- [12] X. Liu, S. Li, and J. Zhu, “Optimal coordination for multiple network-constrained vpps via multi-agent deep reinforcement learning,” IEEE Transactions on Smart Grid, 2022.

- [13] W. R. Clements, B. Van Delft, B.-M. Robaglia, R. B. Slaoui, and S. Toth, “Estimating risk and uncertainty in deep reinforcement learning,” arXiv preprint arXiv:1905.09638, 2019.

- [14] S. Alexander, T. Coleman, and Y. Li, “Minimizing cvar and var for a portfolio of derivatives,” Journal of Banking & Finance, vol. 30, no. 2, pp. 583–605, 2006, risk Management and Optimization in Finance. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0378426605000841

- [15] O. Lockwood and M. Si, “A review of uncertainty for deep reinforcement learning,” in Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, vol. 18, no. 1, 2022, pp. 155–162.

- [16] Y. Cao, H. Zhao, Y. Cheng, T. Shu, G. Liu, G. Liang, J. Zhao, and Y. Li, “Survey on large language model-enhanced reinforcement learning: Concept, taxonomy, and methods,” arXiv preprint arXiv:2404.00282, 2024.

- [17] S. Sharan, F. Pittaluga, M. Chandraker et al., “Llm-assist: Enhancing closed-loop planning with language-based reasoning,” arXiv preprint arXiv:2401.00125, 2023.

- [18] N. Nascimento, P. Alencar, and D. Cowan, “Gpt-in-the-loop: Adaptive decision-making for multiagent systems,” arXiv preprint arXiv:2308.10435, 2023.

- [19] Z. Ji, T. Yu, Y. Xu, N. Lee, E. Ishii, and P. Fung, “Towards mitigating LLM hallucination via self reflection,” in Findings of the Association for Computational Linguistics: EMNLP 2023, H. Bouamor, J. Pino, and K. Bali, Eds. Singapore: Association for Computational Linguistics, Dec. 2023, pp. 1827–1843. [Online]. Available: https://aclanthology.org/2023.findings-emnlp.123

- [20] AEMO, The National Electricity Market Fact Sheet, 2021.

- [21] J. Riesz, J. Gilmore, and I. MacGill, “Frequency control ancillary service market design: Insights from the australian national electricity market,” The Electricity Journal, vol. 28, no. 3, pp. 86–99, 2015. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S1040619015000494

- [22] AEMO, FCAS Model in NEMDE, 2021.

- [23] A. Ng, D. Harada, and S. J. Russell, “Policy invariance under reward transformations: Theory and application to reward shaping,” in International Conference on Machine Learning, 1999. [Online]. Available: https://api.semanticscholar.org/CorpusID:5730166

- [24] C. Ying, X. Zhou, H. Su, D. Yan, N. Chen, and J. Zhu, “Towards safe reinforcement learning via constraining conditional value-at-risk,” arXiv preprint arXiv:2206.04436, 2022.

- [25] B. Eysenbach and S. Levine, “Maximum entropy rl (provably) solves some robust rl problems,” arXiv preprint arXiv:2103.06257, 2021.

- [26] Y. Chow and M. Ghavamzadeh, “Algorithms for cvar optimization in mdps,” Advances in neural information processing systems, vol. 27, 2014.