LAPFormer: A Light and Accurate Polyp Segmentation Transformer

Abstract

Polyp segmentation is still known as a difficult problem due to the large variety of polyp shapes, scanning and labeling modalities. This prevents deep learning model to generalize well on unseen data. However, Transformer-based approach recently has achieved some remarkable results on performance with the ability of extracting global context better than CNN-based architecture and yet lead to better generalization. To leverage this strength of Transformer, we propose a new model with encoder-decoder architecture named LAPFormer, which uses a hierarchical Transformer encoder to better extract global feature and combine with our novel CNN (Convolutional Neural Network) decoder for capturing local appearance of the polyps. Our proposed decoder contains a progressive feature fusion module designed for fusing feature from upper scales and lower scales and enable multi-scale features to be more correlative. Besides, we also use feature refinement module and feature selection module for processing feature. We test our model on five popular benchmark datasets for polyp segmentation, including Kvasir, CVC-Clinic DB, CVC-ColonDB, CVC-T, and ETIS-Larib.

Index Terms:

Polyp Segmentation, Deep LearningI Introduction

I-A Overview

Colorectal cancer (CRC) is the most common cancer around the world [1]. Colonoscopy has always been recognised as the standard diagnostic for the early detection of colorectal cancer. Therefore, several deep learning methods have been proposed to aid clinical system in identifying colonic polyps. Among them, segmentation approach is significantly considered as the most appropriate way with promising result recently. However, colonoscopy has some limitations. In some previous reports, about 18% of polyps are missed from the diagnosis process [2, 3]. This is because it is an operator-driven procedure and solely dependent on the knowledge and skills of the endoscopist. With the current colonoscopy equipment, less experienced endoscopists cannot distinguish polyp regions during colonoscopy examinations [4]. More importantly, previous research has shown that increasing polyp detection accuracy by 1% reduces colorectal cancer risk by approximately 3%. Therefore, improving polyp detectability and robust segmentation tools are important in this problem.

Recently, with the vigorous development of deep learning technology, the accuracy of many classical problems has improved, including image segmentation problems. Various studies aimed to develop CADx models for automatic polyp segmentation. There are also a few studies aiming to build a specific model for polyp segmentation. HarDNet-MSEG [5] is one of them, which is an encoder-decoder architecture base on HarDNet [6] backbone that achieved high performance on the Kvasir-SEG dataset with processing speed up to 86 FPS. AG-ResUNet++ improved UNet++ with attention gates and ResNet backbone. Another study called Transfuse combined Transformer and CNN using BiFusion module [7]. ColonFormer [8] used MiT backbone, Uper decoder, and residual axial reverse attention to further boost the polyp segmentation accuracy. NeoUNet [9] and BlazeNeo [10] proposed effective encoder-decoder networks for polyp segmentation and neoplasm detection. Generally, research in changing model architecture is still a potential approach.

Among the recent deep learning architecture, Transformer based architecture has attracted the most attention. To efficient semantic segmentation, incorporating the advantages of a hierarchical Transformer encoder with a suitable decoder head has been widely researched. In this paper, we utilize a Transformer backbone as an encoder and propose a novel, light, and accurate decoder head for polyp segmentation task. The proposed decode head consists of a light feature fusion module which efficiently reduces the semantic gaps between feature from two level scales, and a feature selection module along with a feature refinement module which help handling feature from backbone better and calibrating feature comes from progressive feature fusion module before prediction.

I-B Our contributions

Our main contributions are:

-

•

We propose a Light and Accurate Polyp Sementation Transformer, called LAPFormer, that integrates a hierarchical Transformer backbone as encoder.

-

•

A novel decoder for LAPFormer, which leverages multi-scale features and consists of Feature Refinement Module, Feature Selection Module to produce fine polyp segmentation mask.

-

•

Extensive experiments indicate that our LAPFormer achieves state-of-the-art on CVC-ColonDB [11] and get competitive results on different famous polyp segmentation benchmarks while being less computation complexity than other Transformer-based methods.

II Related Works

II-A Semantic Segmentation

Semantic segmentation is one of the essential tasks in computer vision which is required to classify each pixel in the image. Recently, deep learning had an enormous impact on computer vision field, including semantic segmentation task. Many deep learning models are based on fully convolutional networks (FCNs) [12], which encoder gradually reduces the spatial resolution and captures more semantic context of an image with larger receptive fields. However, CNN still has small receptive fields, leading to missing context features. To overcome this limitation, PSPNet [13] proposed Pyramid Pooling Module to enhance global context, DeepLab [14] utilized atrous convolution to expand receptive fields.

II-B Vision Transformer

Transformer [15] is a deep neural network architecture, originally proposed to solve machine translation task in natural language processing. Nowadays, Transformer has a huge influence on natural language processing and other tasks, including computer vision. Vision Transformer (ViT) [16] is the first model successfully apply Transformer in computer vision by dividing an image into sequences and treating each sequence as a token, and then putting them into Transformer. Following this success, PVT [17], Swin [18], and SegFormer [19] are designed as hierarchical Transformer, generating feature maps at different scales to enhance the local feature, thus improving the performance in dense prediction tasks, including detection and segmentation.

II-C Polyp segmentation

Recent advances in deep learning have helped solve medical semantic segmentation tasks effectively, including polyp segmentation. However, it remains a challenging problem due to medical image characteristics, and polyps come in different sizes, shapes, textures, and colors. U-net [20] is a well-known medical image segmentation model consisting of residual connections to preserve local features between encoder and decoder. Inspired by U-net, most models [21], [22], [23], [5], [24], [25], for medical image segmentation use an architecture containing a CNN backbone as an encoder, and a decoder tries to fuse features at different scales to generate segmentation result. PraNet [26], SFA [27] focus on the distinction between a polyp boundary and background to improve segmentation performance. CaraNet [28] designs a attention module for small medical object segmentation. Since the successful application of Transformer in computer vision, current methods [29], [30], [8] have utilized the capability of Transformer as a backbone for polyp segmentation task and yielded promising results. Other methods [31], [7], [32] use both Transformer and CNN backbone for feature extraction and combine features from two brands, thus enhancing segmentation result.

III Proposed Method

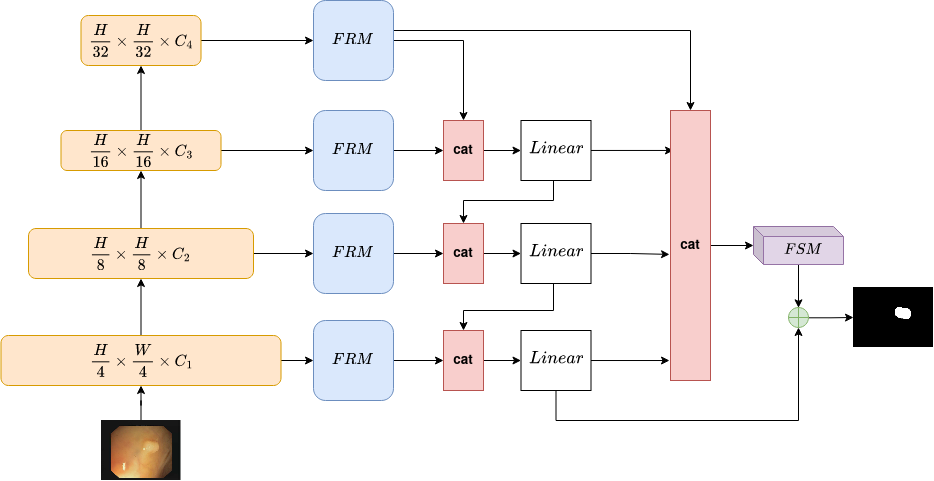

In this section, we describe the proposed LAPFormer in detail. An overview of our model is presented in Fig 1.

III-A Encoder

We choose MiT (Mix Transformer) proposed in [19] as our segmentation encoder for two main reasons. First, it consists of a novel hierarchically structured Transformer encoder which produces multi-level multi-scale feature outputs. Second, MiT uses a convolutional kernel instead of Positional Encoding (PE), therefore avoiding decreased performance when the testing resolution differs from training. Convolutional layers are argued to be more adequate for extracting location information for Transformer than other methods. Furthermore, MiT uses small image patches of size , which are proved to favor intensive dense prediction tasks like semantic segmentation.

Assume that we have input image with spatial dimensions of (represent the height, width and channel). MiT generates four different level feature with resolution where and is larger than . Basically, MiT has six variants with same architecture but varies in sizes, from MiT-B0 to MiT-B5.

III-B Progressive Feature Fusion

Dense prediction is a famous task in computer vision which consists of Object Detection, Semantic Segmentation, … Object of vastly different scales all appear in the same picture. Therefore, multi-scale features are heavily required to generate good results. The most popular way to utilize multi-scale features is to construct a feature pyramid network (FPN) [33]. However, as pointed out in [34] and [35], there are big semantic gaps between feature from non-adjacent scales, features from two scale levels apart are weakly correlated, while features between adjacent scales are highly correlated.

In FPN, features from upper scale are upscaled then directly added to features from lower scale. We argue that this is sub-optimal, when fusing features, they should be further processed before directly fusing to next level. We propose Progressive Feature Fusion module, which progressively fuses features from upper scales to lower scales, therefore, reduce the information gap between the low resolution, high semantic feature maps and high resolution, low semantic ones.

Instead of fusing feature maps with addition operation, we use concatenation operation. Feature maps of all scale are upsampled to . Then feature maps from upper scale are progressively fused with lower scale as follow:

where is features from scale ; is features from one scale lower than ; is concatenation operation; Linear is a fully-connected layer.

III-C Aggregation for Prediction

On conventional Encoder-Decoder architecture, after performing multi-scale feature fusion from feature pyramid, other models [20], [36], [23], [29] often perform prediction on the last output feature maps, which has the highest resolution (Fig 2(a)). Others adopt auxiliary predictions during training [37], [28] but during test time, they only make prediction from the highest resolution feature map (Fig 2(b)).

We argue that predicting on only the highest resolution feature maps is sub-optimal. This make the highest resolution feature maps to carry a lot of information, but information can be lost during feature fusion process. Adding information from high semantic branches (Figure 2(c)) before prediction can provide network with coarse polyp regions, therefore, easing the work of highest resolution feature maps.

III-D Feature Selection Module

After channel concatenation to increase information for prediction, it is crucial to emphasize feature maps that contain important details and suppress redundant information.

The data flow of Feature Selection Module is shown on Fig 3. Output feature maps from Aggregation for Prediction is scaled with a weighting vector which indicates the importance of each channel. Then we conduct channel reduction to keep the importance ones for prediction. The weighting vector is calculated as:

where is obtained by applying average pooling on output feature maps from Aggregation for Prediction mentioned above; are two fully-connected layers; is ReLU activation function and is Hard Sigmoid activation function.

Overall, the process of Feature Selection Module (FSM) can be formulated as:

where is output feature maps from Aggregation for Prediction; is weighting vector; is intermediate feature maps; is output of FSM module.

III-E Low level connection

Low level features are important in semantic segmentation task, and its importance is especially high in polyp segmentation, because the boundary between polyp and its surroundings are not easily distinguishable.

We further enhance prediction on polyp boundary using a skip-connection. The skip-connection connects output feature maps from FSM with the lowest level feature maps in the feature hierarchy from Progressive Feature Fusion. This is a simple but surprisingly effective way which boosts the performance of our model.

III-F Feature Refinement Module

Normally, feature maps of every scale from backbone pass through a lateral connection. It merges feature maps from upper scale into lower scale feature maps. Lateral connection is implemented using a Convolution. In Object Detection settings, this isn’t a problem, because features after feature fusion continue to be refined in the Detection Head.

However, in our Decoder settings, this is sub-optimal for multiple reasons:

-

•

All module in our Decoder part use Convolution. This hampers the learnability of model in the Decoder.

-

•

Convolution in lateral connection reduces number of channels in two top-most scale. This process can cause information lost.

-

•

Convolution in lateral connection also increases number of channels in two bottom-most scale. This process can cause information redundancy.

We propose to replace lateral connection with our Feature Refinement Module (FRM). Backbone feature maps from different levels are refined with FRM. It should be noted that each level has its own respective FRM. FRM is implemented using a Convolution, followed by a BatchNorm layer and ReLU activation function (Fig 4). This is very simple but harmonize well with our Encoder and Decoder architecture. Using Convolution brings two benefits:

-

•

Convolution further refines features coming from backbone, therefore, enhances the learning capability of Decoder.

-

•

Transformer Encoder lacks Local Receptive Field, result in lacking of local details. Convolution effectively emphasizes local features and cleans up noises.

IV Experiments

Dataset and Evaluation Metrics: We construct experiments on five polyp segmentation datasets: Kvasir [38], CVC-ClinicDB [39], CVC-ColonDB [11], CVC-T [40] and ETIS-Larib Polyp DB [41]. We follow the experimental scheme mentioned in PraNet [26] and UACANet [37] which randomly extract 1450 images both from Kvasir and CVC-ClinicDB to construct a training dataset. We used the same training dataset as in PraNet and UACANet. Then we perform evaluation on the rest of Kvasir and CVC-ClinicDB. We also evaluate on CVC-ColonDB, CVC-T and ETIS to show our model’s generalization ability on unseen datasets. For performance measuring, we use mean Dice and mean IoU score as evaluation metrics for our experiments.

Implementation details: Our implementation is based on PyTorch and MMSegmentation [42] toolbox. Training is performed on Google Colab virtual machine, with a NVIDIA Tesla V100 GPU and 16GB RAM. We used AdamW optimizer with initial learning rate of 0.0001, along with Cosine Annealing [43] scheduler. We resize images to for training and testing. For data augmentations, we employ flip, slight color jittering and cutout [44]. Our loss function is a combination of Binary Cross Entropy and Dice Loss. Our model is trained 5 times for 50 epochs with batch size of 16. Reported results are averaged over 5 runs.

IV-A Ablation Study

For ablation study, we use MiT-B1 backbone and train model for 50 epochs average over 5 runs. All results are reported under Table I

| Methods | GFLOPS | Params (M) | Kvasir | ClinicDB | ColonDB | CVC-T | ETIS | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| mDice | mIoU | mDice | mIoU | mDice | mIoU | mDice | mIoU | mDice | mIoU | |||

| SegFormer Head | 5.5 | 13.68 | 0.902 | 0.844 | 0.904 | 0.851 | 0.754 | 0.667 | 0.838 | 0.764 | 0.753 | 0.672 |

| PFF | 6.53 | 13.81 | 0.904 | 0.846 | 0.895 | 0.838 | 0.765 | 0.676 | 0.846 | 0.769 | 0.753 | 0.669 |

| PFF + A | 8.57 | 14.07 | 0.903 | 0.844 | 0.895 | 0.840 | 0.769 | 0.680 | 0.852 | 0.774 | 0.759 | 0.676 |

| PFF + A + FSM | 8.58 | 14.21 | 0.906 | 0.851 | 0.897 | 0.841 | 0.769 | 0.680 | 0.855 | 0.776 | 0.759 | 0.675 |

| PFF + A + FSM + Skip | 8.58 | 14.21 | 0.904 | 0.846 | 0.898 | 0.844 | 0.774 | 0.687 | 0.858 | 0.781 | 0.764 | 0.682 |

| LAPFormer Head | 10.54 | 16.3 | 0.910 | 0.857 | 0.901 | 0.849 | 0.780 | 0.695 | 0.859 | 0.781 | 0.768 | 0.686 |

Progressive Feature Fusion (PFF) and Aggregation for Prediction. We demonstrate the effect of Progressive Feature Fusion (PFF) in Fig 5. Visualization is done on feature maps closest to prediction head. After applying PFF, we can see that our model accurately localizes Polyp region and clears up noises from other regions. PFF effectively boosts performance of our Encoder’s across almost all datasets. Aggregating all feature maps produced by PFF helps utilize multi-scale features better and brings huge performance gain on ETIS, which consists of many small polyps. Results for both of these components are shown on the second and third row of Table I

Feature Selection Module (FSM). Applying FSM before giving prediction helps the prediction head to focus on important regions. The strength of FSM is demonstrated on Fig 6. With the same output feature maps from PFF + A, adding FSM refines the polyp segmentation region, highlights details which were not captured before. The result of FSM is shown on the forth row of Table I

Low level connection. Low level features have always been important in dense prediction tasks. For polyp segmentation, integrating low level features produces finer segmentation masks, especially the boundary regions. The importance of low level features is shown on Fig 7, adding more information from low level features refines polyp segmentation mask. This low level connection consistently boosts our model performance on almost all datasets, results are shown on the fifth row of Table I.

Feature Refinement Module (FSM). Incoperating Feature Refinement Module (FSM) increases our model’s performance on all datasets. Results after applying FSM is shown on last row of Table I. With FSM, we are able to scale our model with stronger backbone. All variations of our model are shown on Table II.

| Methods | Kvasir | ClinicDB | ColonDB | CVC-T | ETIS | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| mDice | mIoU | mDice | mIoU | mDice | mIoU | mDice | mIoU | mDice | mIoU | |

| LAPFormer-S | 0.910 | 0.857 | 0.901 | 0.849 | 0.781 | 0.695 | 0.859 | 0.781 | 0.768 | 0.686 |

| LAPFormer-M | 0.913 | 0.863 | 0.911 | 0.861 | 0.800 | 0.722 | 0.856 | 0.790 | 0.784 | 0.709 |

| LAPFormer-L | 0.917 | 0.866 | 0.915 | 0.866 | 0.815 | 0.735 | 0.891 | 0.821 | 0.797 | 0.720 |

IV-B Comparison with State-of-the-Art

We compare our results with existing approaches on 5 benchmark datasets. Table III shows the results of SOTA methods. Our model achieves competitive performance on all datasets while being incredibly lighter than other methods. FLOPs and Params are shown on Table IV.

| Method | Kvasir | ClinicDB | ColonDB | CVC-T | ETIS | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| mDice | mIoU | mDice | mIoU | mDice | mIoU | mDice | mIoU | mDice | mIoU | |

| PraNet [26] | 0.898 | 0.840 | 0.899 | 0.849 | 0.709 | 0.640 | 0.871 | 0.797 | 0.628 | 0.567 |

| Polyp-PVT [30] | 0.917 | 0.864 | 0.937 | 0.889 | 0.808 | 0.727 | 0.900 | 0.833 | 0.787 | 0.706 |

| SANet [24] | 0.904 | 0.847 | 0.916 | 0.859 | 0.753 | 0.670 | 0.888 | 0.815 | 0.750 | 0.654 |

| MSNet [25] | 0.907 | 0.862 | 0.921 | 0.879 | 0.755 | 0.678 | 0.869 | 0.807 | 0.719 | 0.664 |

| TransFuse-L* [7] | 0.920 | 0.870 | 0.942 | 0.897 | 0.781 | 0.706 | 0.894 | 0.826 | 0.737 | 0.663 |

| SSFormer-L [29] | 0.917 | 0.864 | 0.906 | 0.855 | 0.802 | 0.721 | 0.895 | 0.827 | 0.796 | 0.720 |

| ColonFormer-L [8] | 0.924 | 0.876 | 0.932 | 0.884 | 0.811 | 0.733 | 0.906 | 0.842 | 0.801 | 0.722 |

| ColonFormer-XL [8] | 0.920 | 0.870 | 0.923 | 0.875 | 0.814 | 0.735 | 0.905 | 0.840 | 0.795 | 0.715 |

| LAPFormer-S | 0.910 | 0.857 | 0.901 | 0.849 | 0.781 | 0.695 | 0.859 | 0.781 | 0.768 | 0.686 |

| LAPFormer-M | 0.913 | 0.863 | 0.911 | 0.861 | 0.800 | 0.722 | 0.856 | 0.790 | 0.784 | 0.709 |

| LAPFormer-L | 0.917 | 0.867 | 0.915 | 0.866 | 0.815 | 0.735 | 0.891 | 0.821 | 0.797 | 0.719 |

| Methods | GFLOPs | Params (M) |

|---|---|---|

| PraNet [26] | 13.11 | 32.55 |

| CaraNet [28] | 21.69 | 46.64 |

| TransUNet [31] | 60.75 | 105.5 |

| ColonFormer-S [8] | 16.03 | 33.04 |

| ColonFormer-L [8] | 22.94 | 52.94 |

| SSFormer-S [29] | 17.54 | 29.31 |

| SSFormer-L [29] | 28.26 | 65.96 |

| LAPFormer-S | 10.54 | 16.3 |

| LAPFormer-L | 18.96 | 47.22 |

V Conclusion

In this work, we propose a novel light and accurate Transformer-based model for Polyp segmentation called LAPFormer. The decoder part of LAPFormer is capable of capturing not only rich semantic features for coarse polyp region but also boundary regions of polyp to produce a fine segmentation mask. Extensive experiments show that our model achieves competitive results while being significantly less in computation cost.

VI Acknowledge

This work is partially supported by Sun-Asterisk Inc. We would like to thank our colleagues at Sun-Asterisk Inc for their advice and expertise. Without their support, this experiment would not have been accomplished.

References

- [1] J. Bernal, N. Tajkbaksh, F. J. Sanchez, B. J. Matuszewski, H. Chen, L. Yu, Q. Angermann, O. Romain, B. Rustad, I. Balasingham et al., “Comparative validation of polyp detection methods in video colonoscopy: results from the miccai 2015 endoscopic vision challenge,” IEEE transactions on medical imaging, vol. 36, no. 6, pp. 1231–1249, 2017.

- [2] N. H. Kim, Y. S. Jung, W. S. Jeong, H.-J. Yang, S.-K. Park, K. Choi, and D. I. Park, “Miss rate of colorectal neoplastic polyps and risk factors for missed polyps in consecutive colonoscopies,” Intestinal research, vol. 15, no. 3, p. 411, 2017.

- [3] J. Lee, S. W. Park, Y. S. Kim, K. J. Lee, H. Sung, P. H. Song, W. J. Yoon, and J. S. Moon, “Risk factors of missed colorectal lesions after colonoscopy,” Medicine, vol. 96, no. 27, 2017.

- [4] V. Wadhwa, M. Alagappan, A. Gonzalez, K. Gupta, J. R. G. Brown, J. Cohen, M. Sawhney, D. Pleskow, and T. M. Berzin, “Physician sentiment toward artificial intelligence (ai) in colonoscopic practice: a survey of us gastroenterologists,” Endoscopy international open, vol. 8, no. 10, pp. E1379–E1384, 2020.

- [5] C.-H. Huang, H.-Y. Wu, and Y.-L. Lin, “Hardnet-mseg: A simple encoder-decoder polyp segmentation neural network that achieves over 0.9 mean dice and 86 fps,” arXiv preprint arXiv:2101.07172, 2021.

- [6] P. Chao, C.-Y. Kao, Y.-S. Ruan, C.-H. Huang, and Y.-L. Lin, “Hardnet: A low memory traffic network,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 3552–3561.

- [7] Y. Zhang, H. Liu, and Q. Hu, “Transfuse: Fusing transformers and cnns for medical image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2021, pp. 14–24.

- [8] N. T. Duc, N. T. Oanh, N. T. Thuy, T. M. Triet, and D. V. Sang, “Colonformer: An efficient transformer based method for colon polyp segmentation,” arXiv preprint arXiv:2205.08473, 2022.

- [9] P. Ngoc Lan, N. S. An, D. V. Hang, D. V. Long, T. Q. Trung, N. T. Thuy, and D. V. Sang, “Neounet: Towards accurate colon polyp segmentation and neoplasm detection,” in International Symposium on Visual Computing. Springer, 2021, pp. 15–28.

- [10] N. S. An, P. N. Lan, D. V. Hang, D. V. Long, T. Q. Trung, N. T. Thuy, and D. V. Sang, “Blazeneo: Blazing fast polyp segmentation and neoplasm detection,” IEEE Access, 2022.

- [11] N. Tajbakhsh, S. R. Gurudu, and J. Liang, “Automated polyp detection in colonoscopy videos using shape and context information,” IEEE transactions on medical imaging, vol. 35, no. 2, pp. 630–644, 2015.

- [12] J. Long, E. Shelhamer, and T. Darrell, “Fully convolutional networks for semantic segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 3431–3440.

- [13] H. Zhao, J. Shi, X. Qi, X. Wang, and J. Jia, “Pyramid scene parsing network,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 2881–2890.

- [14] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille, “Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs,” IEEE transactions on pattern analysis and machine intelligence, vol. 40, no. 4, pp. 834–848, 2017.

- [15] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [16] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly et al., “An image is worth 16x16 words: Transformers for image recognition at scale,” arXiv preprint arXiv:2010.11929, 2020.

- [17] W. Wang, E. Xie, X. Li, D.-P. Fan, K. Song, D. Liang, T. Lu, P. Luo, and L. Shao, “Pyramid vision transformer: A versatile backbone for dense prediction without convolutions,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 568–578.

- [18] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 10 012–10 022.

- [19] E. Xie, W. Wang, Z. Yu, A. Anandkumar, J. M. Alvarez, and P. Luo, “Segformer: Simple and efficient design for semantic segmentation with transformers,” Advances in Neural Information Processing Systems, vol. 34, pp. 12 077–12 090, 2021.

- [20] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical image computing and computer-assisted intervention. Springer, 2015, pp. 234–241.

- [21] A. Srivastava, S. Chanda, D. Jha, U. Pal, and S. Ali, “Gmsrf-net: An improved generalizability with global multi-scale residual fusion network for polyp segmentation,” arXiv preprint arXiv:2111.10614, 2021.

- [22] Z. Zhou, M. M. Rahman Siddiquee, N. Tajbakhsh, and J. Liang, “Unet++: A nested u-net architecture for medical image segmentation,” in Deep learning in medical image analysis and multimodal learning for clinical decision support. Springer, 2018, pp. 3–11.

- [23] D. Jha, P. H. Smedsrud, M. A. Riegler, D. Johansen, T. De Lange, P. Halvorsen, and H. D. Johansen, “Resunet++: An advanced architecture for medical image segmentation,” in 2019 IEEE International Symposium on Multimedia (ISM). IEEE, 2019, pp. 225–2255.

- [24] J. Wei, Y. Hu, R. Zhang, Z. Li, S. K. Zhou, and S. Cui, “Shallow attention network for polyp segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2021, pp. 699–708.

- [25] X. Zhao, L. Zhang, and H. Lu, “Automatic polyp segmentation via multi-scale subtraction network,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2021, pp. 120–130.

- [26] D.-P. Fan, G.-P. Ji, T. Zhou, G. Chen, H. Fu, J. Shen, and L. Shao, “Pranet: Parallel reverse attention network for polyp segmentation,” in International conference on medical image computing and computer-assisted intervention. Springer, 2020, pp. 263–273.

- [27] Y. Fang, C. Chen, Y. Yuan, and K.-y. Tong, “Selective feature aggregation network with area-boundary constraints for polyp segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2019, pp. 302–310.

- [28] A. Lou, S. Guan, and M. Loew, “Caranet: Context axial reverse attention network for segmentation of small medical objects,” arXiv preprint arXiv:2108.07368, 2021.

- [29] J. Wang, Q. Huang, F. Tang, J. Meng, J. Su, and S. Song, “Stepwise feature fusion: Local guides global,” arXiv preprint arXiv:2203.03635, 2022.

- [30] B. Dong, W. Wang, D.-P. Fan, J. Li, H. Fu, and L. Shao, “Polyp-pvt: Polyp segmentation with pyramid vision transformers,” arXiv preprint arXiv:2108.06932, 2021.

- [31] J. Chen, Y. Lu, Q. Yu, X. Luo, E. Adeli, Y. Wang, L. Lu, A. L. Yuille, and Y. Zhou, “Transunet: Transformers make strong encoders for medical image segmentation,” arXiv preprint arXiv:2102.04306, 2021.

- [32] E. Sanderson and B. J. Matuszewski, “Fcn-transformer feature fusion for polyp segmentation,” in Annual Conference on Medical Image Understanding and Analysis. Springer, 2022, pp. 892–907.

- [33] T.-Y. Lin, P. Dollár, R. Girshick, K. He, B. Hariharan, and S. Belongie, “Feature pyramid networks for object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 2117–2125.

- [34] X. Wang, S. Zhang, Z. Yu, L. Feng, and W. Zhang, “Scale-equalizing pyramid convolution for object detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 13 359–13 368.

- [35] J. Pang, K. Chen, J. Shi, H. Feng, W. Ouyang, and D. Lin, “Libra r-cnn: Towards balanced learning for object detection,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 821–830.

- [36] O. Oktay, J. Schlemper, L. L. Folgoc, M. Lee, M. Heinrich, K. Misawa, K. Mori, S. McDonagh, N. Y. Hammerla, B. Kainz et al., “Attention u-net: Learning where to look for the pancreas,” arXiv preprint arXiv:1804.03999, 2018.

- [37] T. Kim, H. Lee, and D. Kim, “Uacanet: Uncertainty augmented context attention for polyp segmentation,” in Proceedings of the 29th ACM International Conference on Multimedia, 2021, pp. 2167–2175.

- [38] D. Jha, P. H. Smedsrud, M. A. Riegler, P. Halvorsen, T. d. Lange, D. Johansen, and H. D. Johansen, “Kvasir-seg: A segmented polyp dataset,” in International Conference on Multimedia Modeling. Springer, 2020, pp. 451–462.

- [39] J. Bernal, F. J. Sánchez, G. Fernández-Esparrach, D. Gil, C. Rodríguez, and F. Vilariño, “Wm-dova maps for accurate polyp highlighting in colonoscopy: Validation vs. saliency maps from physicians,” Computerized medical imaging and graphics, vol. 43, pp. 99–111, 2015.

- [40] D. Vázquez, J. Bernal, F. J. Sánchez, G. Fernández-Esparrach, A. M. López, A. Romero, M. Drozdzal, and A. Courville, “A benchmark for endoluminal scene segmentation of colonoscopy images,” Journal of healthcare engineering, vol. 2017, 2017.

- [41] J. Silva, A. Histace, O. Romain, X. Dray, and B. Granado, “Toward embedded detection of polyps in wce images for early diagnosis of colorectal cancer,” International journal of computer assisted radiology and surgery, vol. 9, no. 2, pp. 283–293, 2014.

- [42] M. Contributors, “MMSegmentation: Openmmlab semantic segmentation toolbox and benchmark,” https://github.com/open-mmlab/mmsegmentation, 2020.

- [43] I. Loshchilov and F. Hutter, “Sgdr: Stochastic gradient descent with warm restarts,” arXiv preprint arXiv:1608.03983, 2016.

- [44] T. DeVries and G. W. Taylor, “Improved regularization of convolutional neural networks with cutout,” arXiv preprint arXiv:1708.04552, 2017.