KTWIN: A Serverless Kubernetes-based Digital Twin Platform

Abstract

Digital Twin (DT) systems are virtual representations of physical assets that allow organizations to gain insights and improve existing processes. In practice, DTs require proper modeling, coherent development, and seamless deployment across cloud and edge landscapes, relying on established patterns to reduce operational costs. In this work, we propose KTWIN, a Kubernetes-based Serverless platform for Digital Twins. KTWIN was developed using state-of-the-art open-source Cloud Native tools, allowing DT operators to easily define models through open standards and configure details of the underlying services and infrastructure. The experiments carried out with the developed prototype show that KTWIN can provide a higher level of abstraction to model and deploy a Digital Twin use case without compromising the solution's scalability. The tests performed also show cost savings ranging between 60% and 80% compared to overprovisioned scenarios.

keywords:

Digital Twin, Serverless, Kubernetes, Event-Driven Architecture, Internet of Things

1 Introduction

Digital Twins (DTs) are dynamic virtual model representations of real-world objects, processes, or services. These systems are designed to collect highly distributed data from different devices, sensors, and other sources to create a reliable virtual model of a physical asset or process [1]. The data collected enable organizations to monitor performance, predict potential issues, and implement responsive measures using advanced analytics and Artificial Intelligence-based models. The adoption of Digital Twins spans various industries such as manufacturing, health care, smart cities, and the energy sector.

The growing number of connected devices and the increasing volume of data generated make it challenging to move and process all the data in the Cloud, especially for applications with time-sensitive requirements. Advances in Mobile Edge Computing (MEC) technologies drove the deployment of micro data centers closer to end users to meet time requirements and, at the same time, overcome the computing resource constraints and energy consumption restrictions of Internet of Things (IoT) edge devices [2]. In addition, the Mobile Cloud Computing (MCC) architecture emerged as a solution for offloading data processing from edge devices to centralized cloud data centers, thus enhancing the user experience and providing greater scalability with access to extensive cloud resources [3]. In such a complex and diverse landscape, building, deploying, and operating Digital Twin components becomes difficult; hence, it is important to establish operational patterns and rely on automation and abstractions to reduce operational burden and costs.

Building a DT system requires a solid understanding of its specific domain requirements to design the diverse number of entities and their relationships. There are several ways to define Digital Twin entities using Ontology-based models, such as OntoUML and DEXPI. More recently, the open-source community joint effort with industry has designed the Digital Twin Definition Language (DTDL) [4] which allows developers and domain experts to describe the twin graph in self-defined terms of domain models. However, since these languages focus on DT model definitions, there is a lack of ways to describe technical application aspects that compose the deployment of a Digital Twin, such as the number of allocated CPU and memory, the software implementation required to respond to specific events and the application auto-scaling settings.
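To make this gap concrete, the sketch below expresses a minimal DTDL interface as a Python dictionary and places next to it a separate block of deployment settings. The DTDL keywords (`@context`, `@id`, `@type`, `contents`) follow the public DTDL v2 specification; the model identifiers and every key under `deployment_settings` are illustrative assumptions and do not represent KTWIN's actual syntax.

```python
import json

# A minimal DTDL v2 interface. The dtmi identifiers are made-up examples.
air_quality_sensor = {
    "@context": "dtmi:dtdl:context;2",
    "@id": "dtmi:example:city:AirQualitySensor;1",
    "@type": "Interface",
    "displayName": "Air Quality Sensor",
    "contents": [
        {"@type": "Telemetry", "name": "pm25", "schema": "double"},
        {"@type": "Property", "name": "location", "schema": "string"},
        {
            "@type": "Relationship",
            "name": "locatedIn",
            "target": "dtmi:example:city:Neighborhood;1",
        },
    ],
}

# Hypothetical operational settings that plain DTDL has no place for.
deployment_settings = {
    "container_image": "registry.example.com/air-quality-sensor:1.0",
    "cpu_millicores": 250,
    "memory_mib": 128,
    "autoscaling": {"min_replicas": 0, "max_replicas": 10},
}


def relationship_targets(interface: dict) -> list[str]:
    """Return the target model ids of all Relationship entries."""
    return [
        item["target"]
        for item in interface["contents"]
        if item["@type"] == "Relationship"
    ]


if __name__ == "__main__":
    print(json.dumps(air_quality_sensor, indent=2))
    print(relationship_targets(air_quality_sensor))
```

The domain model and the operational settings live in two unrelated structures here, which is exactly the separation that a combined specification would need to bridge.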

Moreover, existing platforms are mostly vendor-specific implementations in which end-users cannot easily move their solution to another vendor stack without a considerable amount of rework, causing the vendor lock-in problem. Additionally, because the existing offerings operate mostly in the cloud, there is not enough flexibility to move services to edge locations, closer to the end user. An automated vendor-agnostic and unified edge-to-cloud platform solution could reduce the operational burden while providing a higher level of abstraction to keep DT services up and running.

Hence, in this research, we propose KTWIN, a Serverless Kubernetes-based platform that allows DT operators to easily define models through open standards and configure details of the underlying services and infrastructure, such as resource allocation, container-based service deployments, and auto-scaling policies. KTWIN utilizes Serverless principles to abstract infrastructure management, enabling on-demand scaling, efficient resource utilization, and reduced operational overhead while ensuring high availability and responsiveness for Digital Twin applications. The platform was designed using state-of-the-art Cloud Native open-source technologies, and it can be deployed in any Kubernetes cluster, in the cloud or at edge locations. KTWIN leverages the rich Kubernetes ecosystem and allows for further enhancements and open collaboration within the DT industry and academia. Additionally, KTWIN maps and orchestrates domain entity specifications to containers with implementations that can be defined by the DT owners. The benefits of using KTWIN include: (1) reduction of operational costs by automating manual operations, (2) more control and flexibility over the underlying container-based services that compose the DT landscape, and (3) deployment on any Kubernetes cluster (on-premise, at the edge, or in the cloud), reducing the chance of vendor lock-in.

The main contributions of this research are (1) the definition of an extended DTDL language to provide a higher level of abstraction in which DT owners can define domain entities and at the same time have control over the underlying services and operational layer, (2) the design and implementation of a Kubernetes Operator to orchestrate the DT components as containers as well as all the underlying services, message routing rules, and data storage, and (3) the deployment of a New York City-based Smart City Digital Twin use case to validate the usage of the platform. The prototype results show that it is possible to provide a higher level of abstraction and model design flexibility while still providing scalability and good system performance. The experiments also compare KTWIN resource usage and response time against scenarios of under-provisioned and over-provisioned resources. KTWIN achieves resource usage efficiency closer to the under-provisioned scenario, while still providing performance near the over-provisioned scenario.

The remainder of this article is organized as follows. Section 2 provides background on Digital Twin use cases and on existing services and frameworks. Section 3 presents previous work in the DT field, including frameworks, architectures, and Serverless approaches. Section 4 presents the KTWIN requirements and its architecture design, including its main components, resource definition, message routing, and event storage. Section 5 explains the implementation details of the proof-of-concept built to evaluate the proposed design. In Section 6, we present the evaluation methodology and discuss the results of the proposed experiments. Finally, Section 7 concludes the research and presents future work.

2 Background

In recent years, the Digital Twin (DT) research field has seen significant advancements and contributions from academia, bringing the benefits of DT systems to several industries. The Digital Twin market size is estimated to reach US$ 110.1 billion by 2028 [5]. This section reviews the different use cases to which Digital Twins can be applied, as well as the existing platform and framework offerings and their limitations.

2.1 Digital Twin Use Cases

A Digital Twin is a virtual representation of a physical system or process, designed to facilitate bidirectional communication between the real-world system and its digital counterpart [6]. DTs enable the development of value-added services, such as real-time monitoring, predictive maintenance, optimization, and simulation, that can be adopted by a variety of industries such as Manufacturing, Smart Cities, Energy and Healthcare.

In the Manufacturing area, a DT can be utilized to solve several field problems such as optimizing production processes, monitoring equipment health, optimizing the supply chain process, and performing predictive maintenance activities. Sierla et al. [7] build a Digital Twin to automate assembly planning and orchestrate the production resources in a manufacturing cell based on digital product descriptions. The concept introduced in the research is presented in general terms in UML (Unified Modeling Language) and the implementation provides a 3D simulation environment using Automation Markup Language for digital product descriptions. Tao and Zhang [8] propose a Digital Twin Shop-floor (DTS) providing an effective way to reach the physical-virtual convergence in the manufacturing industry and improve production efficiency, accuracy, and transparency. The system components are broken down into a Physical Shop-floor (PS), Virtual Shop-floor (VS), Shop-floor Service System (SSS), and Shop-floor Digital Twin Data (SDTD). The paper also presents the key technologies required to build the proposed system and the challenges during a DTS implementation process for future studies. However, it lacks an actual implementation and evaluation of the proposed solution.

The modernization of cities opens the way for the adoption of Digital Twin technologies to address problems of medium-sized cities around the globe. Digital Twins can be applied to improve public transportation systems and address mobility problems, enhance the decision-making process in urban planning, monitor and predict environmental and resource management indicators such as carbon emissions and waste reduction, or detect anomalies and optimize operations in power and water plants. Dembski et al. [9] present a novel Digital Twin case study applied in the town of Herrenberg, Germany. The research presents a prototype and the deployment of sensor devices in different city locations to collect data. The use case prototype includes a 3D city model, a mathematical street network model, urban mobility simulation, airflow simulation based on collected environment data, sensor network data analysis, and people's movement routes. The prototype allows developers to easily extend the urban digital flow by adding new code and modules in a visual interface. Ford and Wolf [10] explore Smart Cities with Digital Twins (SCDT) for disaster management. The authors present and test a conceptual model of an SCDT for disaster management and discuss the issues to be addressed in its development and deployment.

Digital Twins can improve the energy industry by providing valuable insights into system performance and optimization, reducing power downtime, providing efficient energy planning to match supply and demand, as well as optimizing household energy consumption. In this context, Fathy and Jaber [11] propose a data-driven multi-layer Digital Twin of the energy system that aims to mirror the actual energy consumption of households in the form of a Household Digital Twin (HDT). The model intends to improve the efficiency of energy production, modeled as an Energy Production Digital Twin (EDT), by flattening the daily energy demand levels. This is done by collaboratively reorganizing the energy consumption patterns of residential homes to avoid peak demands while accommodating the residents' needs and reducing their energy costs. In addition, Gao et al. [12] present a Digital Twin-based approach to optimize the energy consumption of an automatic stacking crane handling containers. The authors developed a virtual container yard that syncs real container yard information to improve automatic stacking crane scheduling. Then, a mathematical model followed by a Q-learning-based optimization step is applied to minimize the total energy consumption for completing all tasks. The output can be used by managers and operators to choose the appropriate strategy to accomplish energy-saving and efficiency goals.

In the Healthcare ecosystem, Digital Twins can be applied to many use cases, such as creating a model replica of a single patient for health monitoring and treatment planning, simulating and testing a medical device during the design phase, or even modeling an entire healthcare system to monitor and analyze vaccination campaigns, for instance. Pilati et al. [13] developed a Digital Twin which integrates the physical and virtual health systems to create a sustainable and dynamic vaccination center focusing on using the minimum space and resources while guaranteeing a good service for patients. The research implements a discrete event simulation model to find the best configuration in terms of human resources and queues for a real vaccination clinic to maximize the number of patients vaccinated in the shortest period.

In the context of health monitoring and treatment planning, Liu and Zhang et al. [14] propose a novel cloud-based Digital Twin healthcare framework for elderly patients (CloudDTH). The solution plans, monitors, diagnoses, and predicts the health of individuals using data acquired via wearable medical devices, toward the goal of personal health management. The proposed implementation can be divided into three phases: crisis early warning, real-time supervision, and scheduling and optimization. The data utilized to monitor and simulate individual health includes data acquired from wearable medical devices worn by elderly patients (e.g., heartbeat, body temperature, blood pressure), external environmental factors such as temperature change, and medical records.

2.2 Digital Twins: Existing Services and Frameworks

In the general context of Digital Twin platforms, Eclipse Ditto [15], Microsoft Azure Digital Twins [16], and AWS IoT TwinMaker [17] are the primary references. Azure Digital Twins is a Platform as a Service (PaaS) offered by the Microsoft Azure suite that enables organizations to create digital replicas of physical environments, systems, and processes. Microsoft's Digital Twin platform leverages the power of the Azure cloud and integrates with a wide range of other Microsoft services and tools, such as IoT Hub, Event Hubs, Event Grid, and Service Bus. The Azure Digital Twins service allows users to model entities and their relationships in a JSON-like language called Digital Twin Definition Language (DTDL), forming a conceptual graph that can be visualized and queried in the Digital Twin Explorer.

Amazon Web Services offers the AWS IoT TwinMaker service to build operational digital twins of physical and digital systems. AWS IoT TwinMaker allows organizations to create digital visualizations using measurements and analysis from a variety of real-world sensors, cameras, and enterprise applications to help them keep track of a physical factory, building, or industrial plant. The platform also provides tools to model a system by using an entity-component-based knowledge graph composed of entities, components, and relationships using a proprietary JSON-like language. In addition, the TwinMaker service allows configuring and loading data from time-series data sources, and it builds 3D visualizations on top of it.

Eclipse Ditto is an open-source framework for Digital Twins maintained by the Eclipse Foundation. Eclipse Ditto focuses on connecting physical devices to their digital counterparts, enabling interaction and synchronization between both. It provides virtual representations of devices encompassing their state, properties, and behaviors. Ditto supports multiple protocols for interacting with digital twins, allowing users to query, modify, and subscribe to changes in the twin's state.

The existing PaaS solutions offer a wide variety of features, but they do not provide enough flexibility to deploy Digital Twin solutions to different landscapes, such as edge locations, and they limit the ability of organizations to work with hybrid or multi-cloud environments. Moreover, they are mostly vendor-specific implementations, reducing interoperability and reusability [6] and preventing organizations from moving their solutions to another vendor stack without a considerable amount of rework. Lastly, all the services mentioned above offer less control over operations and infrastructure, preventing users from personalizing settings and monitoring application health and event propagation. In this context, an open-source Digital Twin platform built with Cloud Native solutions can offer organizations the flexibility and independence to build microservice-based distributed systems that support more Digital Twin use cases.

3 Previous Work in Digital Twins

This section delves into the state of the art in DT research, highlighting recent advancements, frameworks, and architectures in the field. Grieves et al. [18] formalized a DT system as a composition of three primary elements: a real-world space composed of a set of objects or systems, a virtual space representation of the real-world space, and a link through which data flows between both spaces. Tao et al. [19] enhanced the definition and established the Five-Dimensional Digital Twin model, composed of a physical entity model, a virtual entity model, a services model, a data model, and a connection model.

Several prior works have proposed frameworks for implementing DTs. Talasila et al. [20] propose a Digital Twin framework to manage Digital Twin assets, making them available as a service to other users. The proposed framework automates the management of reusable assets, storage, provision of computing infrastructure, communication, and monitoring tasks. Users operate at the level of Digital Twins and delegate the rest of the work to the Digital Twin as a Service framework. The paper does not present any real implementation or evaluation scenarios, relying on high-level design aspects of what a Digital Twin platform should contain.

Picone et al. [21] present the Edge Digital Twins (EDT) architectural model and its implementation. EDT enables lightweight replication of physical devices and provides a digital abstraction layer to facilitate the interaction with IoT devices and services, enhancing IoT digitalization and interoperability. The EDT architectural blueprint aims to complement rather than compete with existing edge modules and DT solutions (including those in the cloud). The proposal provides the following advantages: it removes from physical assets the responsibility of handling the integration with cloud DTs, reduces vendor lock-in with cloud providers by introducing EDTs as middleware layers between devices and cloud DT services, and allows applications to interact with EDTs locally over the MQTT protocol, improving performance and reliability.

Furthermore, Wang et al. [22] developed a Mobility Digital Twin (MDT) framework defined as an artificial intelligence-based data-driven cloud–edge–device framework for mobility services. The MDT consists of three static building blocks in the physical space (Human, Vehicle, and Traffic) and their associated Digital Twins in the digital space. The paper presents an example of cloud–edge architecture built with Amazon Web Services (AWS) to accommodate the proposed MDT framework and to fulfill its digital functionalities of storage, modeling, learning, simulation, and prediction.

Context-aware and adaptive Digital Twin models have also been studied by researchers. Hribernik et al. [23] discuss the potential of context-aware, autonomous, and adaptive DTs as the building blocks for tomorrow's Digital Factories. Bellavista et al. [24] propose a vision of DT design and implementation that includes adaptive, autonomous, and context-aware functionalities. The paper also proposes and implements a set of reusable microservice design patterns allowing engineers to meet these new demanding requirements while keeping complexity and management costs under control.

Minerva et al. [25] provide a comprehensive survey of technologies, scenarios, application cases, and architectural models relevant to the implementation of the DT concept. The study consolidates key definitions, specifications, and implementations of DTs across diverse technological domains, offering insight into how the concept has evolved and been adopted in various industries. The article explores the applicability of DTs in key IoT application scenarios and proposes a framework for evaluating its implementation with modern software architectures.

Bicocchi et al. [26] explored the concept of end-to-end trustworthiness in the context of DTs. The research identifies six key characteristics that enable trustworthiness, i.e., entanglement awareness, variable load resilience, edge-to-cloud continuum mobility, declarative representation, observability, and security. In addition, the authors designed a blueprint architecture that natively supports end-to-end trustworthiness and discussed a proof-of-concept implementation.

In the context of serverless computing, Wang et al. [27] discuss the usage of a Serverless architecture for IoT applications and build a prototype with Cloud-Native solutions to be deployed at the edge. The authors state that according to their experiments, the serverless approach uses fewer resources than the traditional “serverfull” computing approach. In contrast, serverless workloads presented a higher response time due to cold-start start-up time. The prototype and its evaluation were not implemented considering the Digital Twin context, focusing on a more general serverless IoT use case.

Bellavista et al. [28] introduce a microservices-based and Serverless-ready model for DTs, establishing the foundation for cost-effective DT deployment. The research results show that the combined use of microservices and serverless computing has significant potential to address challenges such as accommodating variable application requirements. The authors implemented a hypothetical Digital Twin setup combining two Serverless deployments, one relying on a cloud provider Serverless function and one using a Serverless framework at the edge, and one microservice-based deployment. The findings show that the Serverless implementation performs an order of magnitude worse than microservices. The study lacks a real Digital Twin use case implementation, does not provide any serverless abstraction for DT data model definitions, and does not discuss event storage.

The Digital Twin literature still has many unexplored study areas and lacks an actual deployment of a Digital Twin implementation built on top of Cloud-Native solutions. To the best of our knowledge, no prior work has proposed an event-driven, Serverless-based architecture for DT systems that provides Serverless abstractions to enable end users to seamlessly combine flexible model design with system-specific configurations. This work addresses these gaps by introducing a novel solution that includes (1) an extended DTDL for higher-level abstraction, enabling end users to define domain entities while maintaining control over underlying services, (2) an automated process to orchestrate the creation, modification, and deletion of DT components, including messaging, routing, and data storage, and (3) the development and deployment of a Smart City DT use case to validate the platform. This novel approach is expected to significantly enhance the adoption of DT technology by simplifying the development and deployment of new and diverse use cases.

4 KTWIN Architecture Design

KTWIN, a Kubernetes-based Serverless platform for Digital Twins, automates the orchestration of Digital Twin services, allowing operators to easily define twin data models and their relationships and reducing the complexity of deploying and managing DT systems. The following subsections outline the architectural requirements and decisions of KTWIN and detail its main components. Additionally, they describe the implemented custom resources, event routing mechanisms, and key aspects of data management and storage.

4.1 Architectural Requirements and Design Choices

Understanding the distinct needs of the personas involved in the design, development, and operational lifecycle of Digital Twins is instrumental in shaping KTWIN’s architectural requirements and in ensuring that the platform is tailored to meet their specific challenges and objectives. Therefore, we defined KTWIN revolving around three primary personas, described as follows:

1. The DT Domain Expert is primarily responsible for the strategic direction and governance of the Digital Twin ecosystem. Their responsibilities include defining the functional requirements by modeling entities and describing their behaviors, often leveraging an ontology-based language to ensure precise representation of domain-specific entities. This requires a system capable of adapting to diverse domains through open and flexible modeling tools.

2. The DT Developer focuses on implementing and validating services that encapsulate the domain logic defined by the DT Domain Expert. The developer seeks abstractions such as Function as a Service (FaaS), service containerization, and the usage of Software Development Kits (SDKs) to streamline service creation and deployment.

3. Lastly, the DT Operator's responsibilities include the deployment and maintenance of the Digital Twin solution, combining domain requirements with system-level configurations. Their role requires a unified, vendor-neutral specification language that enables seamless deployment across cloud, on-premise, and edge environments.

4.1.1 Architectural Requirements

Orchestration Abstraction. The orchestration abstraction provided by KTWIN streamlines the process of designing and managing Digital Twin systems by reducing complexity in implementation and maintenance. High-level data modeling, powered by ontology-based and open-standard tools, allows users to define and manage entities, relationships, and behaviors in a structured and reusable manner. This approach ensures cross-domain compatibility, enabling a consistent representation of Digital Twin systems. Furthermore, the simplified configuration process gives users enhanced control and flexibility over the underlying services, combining domain-specific configurations with system-specific requirements to set up and operate the platform effectively. By abstracting underlying complexities, KTWIN democratizes access to sophisticated Digital Twin capabilities, reducing barriers to adoption and minimizing implementation and maintenance costs.

Self-Contained Modules. The modularity inherent in the platform lets developers define twin behaviors as self-contained, interchangeable functions, so that individual services can be developed, deployed, updated, and scaled on their own. By defining twin implementations as independent and self-contained functions, KTWIN enhances maintainability, as updates or replacements to a module can be performed with minimal disruption. Additionally, modularity fosters reusability, allowing components to be repurposed across multiple Digital Twin implementations, reducing redundancy and accelerating development cycles.
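As an illustration of a self-contained twin behavior, the sketch below models a stateless function that receives one event and returns zero or more outgoing events. The `TwinEvent` envelope, the `handle_event` name, the event type strings, and the threshold are assumptions made for this example; they are not KTWIN's SDK.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class TwinEvent:
    """Hypothetical event envelope passed to a twin function."""

    twin_id: str
    event_type: str
    data: dict[str, Any] = field(default_factory=dict)


PM25_ALERT_THRESHOLD = 35.0  # illustrative value, not taken from the source


def handle_event(event: TwinEvent) -> list[TwinEvent]:
    """Emit an alert event when a PM2.5 reading exceeds the threshold."""
    if event.event_type != "telemetry.pm25":
        return []  # this function only understands one event type
    value = float(event.data["value"])
    if value <= PM25_ALERT_THRESHOLD:
        return []
    return [TwinEvent(event.twin_id, "alert.air_quality", {"pm25": value})]
```

Because the function keeps no state and depends only on its input, it can be replaced, redeployed, or scaled without touching other twins.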

Data Acquisition and Ingestion. Data acquisition and ingestion in KTWIN are critical for integrating real-world data into Digital Twin systems, ensuring accurate and up-to-date models. The platform supports diverse data sources, including IoT devices and edge systems, through a centralized broker that supports multiple protocols such as MQTT, HTTP, and AMQP. This capability allows KTWIN to collect, route, and process events efficiently, whether they originate from high-frequency sensors or intermittent data sources. Furthermore, the system can adapt to increasing data volumes and evolving workload demands effectively, allowing individual components to scale independently based on real-time demand. The event-driven design also ensures resilience, as services can continue functioning even during high loads or partial failures.

Services Interoperability. Interoperability enables seamless integration and operation across diverse systems and environments. By adhering to open standards and leveraging cloud-native tools, the platform ensures compatibility with a broad range of technologies, promoting portability and reducing vendor lock-in. Additionally, KTWIN supports deployment across multi-cloud, edge, and on-premises infrastructures, allowing organizations to tailor the platform to their specific operational needs. This capability ensures that Digital Twin systems can operate efficiently regardless of the underlying environment, making KTWIN a versatile solution for heterogeneous ecosystems.

The aforementioned requirements provide a clear understanding of the key architectural considerations that shaped the design and development of the KTWIN platform. They played a pivotal role in guiding the architectural decision-making process, detailed as follows.

4.1.2 Architectural Design Choices

Serverless. KTWIN-based applications require high scalability, low operational overhead, and the ability to handle variable workloads efficiently. Serverless computing platforms provide Function-as-a-Service (FaaS) by hosting individual callable functions. These platforms provide reduced hosting costs, fast deployment, automatic scaling, high availability, fault tolerance, and dynamic elasticity through automatic provisioning and management of compute infrastructure [29], making them ideal for handling variable workloads with low operational overhead. The proposed KTWIN Serverless architecture implements automated service infrastructure provisioning based on the provided ontology resource definitions. However, the usage of vendor-specific Serverless platforms comes with certain limitations, including cold start latency, execution time restrictions, and the risk of vendor lock-in [29].
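The elasticity described above can be illustrated with a concurrency-based scaling rule of the kind used by request-driven autoscalers. The function below is a simplified sketch and not the algorithm of KTWIN or of any specific platform; the parameter names and default limits are assumptions.

```python
import math


def desired_replicas(
    in_flight_requests: int,
    target_concurrency: int,
    min_replicas: int = 0,
    max_replicas: int = 10,
) -> int:
    """Return how many replicas are needed for the observed load.

    Each replica is assumed to handle `target_concurrency` requests at once.
    With `min_replicas=0` an idle service scales to zero.
    """
    if target_concurrency <= 0:
        raise ValueError("target_concurrency must be positive")
    if in_flight_requests < 0:
        raise ValueError("in_flight_requests must not be negative")
    needed = math.ceil(in_flight_requests / target_concurrency)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(needed, max_replicas))
```

Scaling to zero is what saves resources while idle, and it is also the origin of cold start latency: the first request after an idle period has to wait for a replica to start.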

Event-Driven. Event-Driven Architecture (EDA) allows services to be decoupled, to scale effectively, and to process events in real time. KTWIN employs a centralized broker that processes events regardless of their source and supports multiple communication protocols, including MQTT, HTTP, and AMQP. Additionally, its event-based routing mechanism is automatically configured based on the ontology's relationship definitions, simplifying development and operation efforts for the platform users. The KTWIN event-driven architecture provides the flexibility and resilience necessary for Digital Twin systems, allowing independent service evolution, fault tolerance, and improved extensibility. Furthermore, the event-driven microservices design integrates seamlessly with Serverless architectures, offering inherent benefits such as high availability, fault resilience, and cost efficiency.
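A minimal sketch of how routing rules could be derived from relationship definitions is shown below. It assumes that events flow from the source of a relationship to its target; that direction, the tuple layout, and the function names are assumptions for illustration and may differ from KTWIN's actual routing rules.

```python
from collections import defaultdict

# (source twin instance, relationship name, target twin instance)
Relationship = tuple[str, str, str]


def build_routing_table(relationships: list[Relationship]) -> dict[str, list[str]]:
    """Map each source twin to the twins that should receive its events."""
    table: dict[str, list[str]] = defaultdict(list)
    for source, _name, target in relationships:
        if target not in table[source]:  # avoid duplicate destinations
            table[source].append(target)
    return dict(table)


def route(event_source: str, table: dict[str, list[str]]) -> list[str]:
    """Return the destinations for an event; unknown sources have none."""
    return table.get(event_source, [])
```

With such a table, adding a relationship to the model is enough to change where events are delivered; no service code has to be touched.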

Kubernetes and Container-based Services. Kubernetes serves as a backbone for KTWIN, providing scalability, flexibility, and resilience to manage complex containerized applications across diverse environments. KTWIN leverages Kubernetes operators and Custom Resource Definitions (CRDs) to automate the management and provisioning of cluster infrastructure. Kubernetes also enables the multi-cloud and edge-based deployment strategies needed for microservice-based architectures while avoiding vendor lock-in. Lastly, Kubernetes integrates with a rich cloud-native ecosystem, including Serverless platforms. This integration enables KTWIN to leverage Function-as-a-Service (FaaS) models.

Cloud Native-based Open Platform. KTWIN leverages the rich Cloud Native ecosystem to meet scalability, flexibility, and resilience needs. The adoption of open solutions and standards benefits from an open, innovative and vendor-neutral ecosystem that encourages innovation. The rich open ecosystem also provides cost-efficient and mature tools to manage deployment, observability and security. In terms of ontology-based domain definition languages, KTWIN leverages the Digital Twins Definition Language (DTDL) to offer a flexible and open approach for describing digital twin models of smart devices.

KTWIN advances the state-of-the-art on DT platforms by addressing key limitations in existing approaches while introducing innovative capabilities that have yet to be thoroughly explored in the literature. It achieves this by (1) reducing operational costs through the automation of manual processes, streamlining deployment and management tasks, (2) offering enhanced control and flexibility over the container-based services that constitute the DT landscape, enabling tailored configurations to meet diverse requirements for DTs of any domain, and (3) supporting deployment across any Kubernetes cluster, whether on-premises, at the edge, or in the cloud, minimizing the risk of vendor lock-in, and providing organizations with greater autonomy and adaptability.

4.2 KTWIN Main Components

This section explains the high-level architecture proposed for KTWIN, which can be divided into Control Plane and Application Plane components, as depicted in Figure 1. The Control Plane components allow human operators to define the Digital Twin entities and their relationships through a generic resource definition file, allowing the deployment of DTs of any domain. The human operator interacts with the KTWIN Operator, which is responsible for orchestrating the creation of all the underlying Application Plane components and services required to deploy the Digital Twin scenario. The Operator holds the knowledge about KTWIN-related resources, meaning that it knows all the steps and underlying resources required to instantiate a new twin within the platform. The Operator executes creation, modification and deletion requests against the Orchestrator component.

The Orchestrator applies and maintains the desired resource states defined by the Operator, which involves, for example, creating a new Twin Service, maintaining the expected number of Event Dispatchers, or keeping the Event Broker routing rules up to date. The configured resources are persisted in the Orchestrator storage. In addition, the definition of the resource model includes domain-related entities, their corresponding data model attributes, the different instances of an entity, and their relationships with other entities. The entities’ relationships compose a Twin Graph. Because Application Plane components require the Twin Graph information to process and propagate events to related entities, the graph data is replicated to a fast-access cache storage. Hence, Application Plane components can consume the graph information through the Twin Graph Service, decoupling the Control Plane storage from the Application Plane components and offering higher scalability and faster response times.
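
The desired-state behaviour described above can be illustrated with a minimal reconciliation sketch. It is not KTWIN's or Kubernetes' actual code: the `reconcile` helper, the resource names, and the idea of reducing a resource to a replica count are assumptions made only to show how a gap between desired and observed state turns into actions.

```python
from typing import Dict, List, Tuple


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[Tuple[str, str, int]]:
    """Return the actions that move `observed` replica counts towards `desired`.

    Both arguments map a (hypothetical) resource name to a replica count.
    A resource missing from a mapping is treated as having zero replicas.
    """
    actions = []
    for name in sorted(set(desired) | set(observed)):
        want = desired.get(name, 0)
        have = observed.get(name, 0)
        if want > have:
            actions.append(("scale_up", name, want - have))
        elif want < have:
            actions.append(("scale_down", name, have - want))
    return actions


# Hypothetical cluster state: one dispatcher is under-provisioned, one
# leftover dispatcher is no longer wanted, and one Twin Service is missing.
desired = {"event-dispatcher": 3, "twin-service-car": 1}
observed = {"event-dispatcher": 1, "stale-dispatcher": 2}
actions = reconcile(desired, observed)
```

Running the same function again once the observed state matches the desired state yields no actions, which is the property that lets an orchestrator apply it repeatedly.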

The Application Plane components are responsible for processing the Digital Twin events and storing them for further data analysis and visualization. Real-world edge devices interact with KTWIN by sending events that represent a state change in some defined entity of the Digital Twin. Devices such as actuators can also subscribe to and receive events from KTWIN indicating that some action must be executed at the edge. The events are published to or subscribed from the Event Broker through the Gateway component. The Gateway acts as a load balancer for ingress requests and redirects the traffic according to the protocol or endpoint: HTTPS requests are redirected to the Event Broker Ingress, which publishes them to the Event Broker, while AMQP and MQTT connections are forwarded directly to the Event Broker. Incoming broker requests are routed to the corresponding target based on in-broker routing rules that send events to different in-broker queues, which are subscribed to by Event Dispatchers. The Event Dispatchers are lightweight components responsible for subscribing to events and dispatching them to the corresponding Twin Service deployed by the end user.

Twin Services are designed and deployed as Serverless functions that contain a custom implementation of some Digital Twin domain logic defined by the DT domain expert. Each Twin Service is associated with a real twin and is responsible for processing one or more event types. A Twin Service can communicate with its real-world counterpart or with another Twin Service with which it has a relationship. In addition, Twin Services can be scaled down to zero or up to thousands of instances, offering improved performance and efficiency in resource usage.

The Event Store is a highly scalable data store designed to save the large number of events generated by real-world devices and processed by Twin Services. Events to be persisted in the Event Store are queued in the broker and consumed by the Event Store Dispatcher, which dispatches them to the Event Store Service. In addition, end users can implement Twin Services that obtain the latest status of some twins using the Event Store Service APIs. The Event Store APIs also allow external systems to fetch persisted events in batches.

KTWIN provides support for clients to publish events using a variety of protocols, such as MQTT, AMQP, and HTTP. In this context, the KTWIN Dispatchers are event dispatchers built to implement protocol conversion. For instance, they convert real-world MQTT events published by devices into in-cluster events, as well as in-cluster events back into MQTT device events. They are required because KTWIN uses a set of event header metadata to route messages to the corresponding target service, which cannot be implemented using the MQTT4 protocol. In contrast, AMQP events generated by devices can be published directly into the Event Broker, since AMQP supports routing headers. Lastly, the Event Broker Ingress is a request ingress service used by Twin Services or real devices to publish events into the Event Broker without having to establish a stateful connection with it, for instance over HTTP. More information about these components and their implementation is provided in the next section.

4.3 Digital Twin Resources Definition and Event Routing

KTWIN provides resource and data abstractions for Digital Twin operators to define their own Digital Twin models and relationships, allowing the deployment of any DT domain. It uses the open-source Digital Twins Definition Language (DTDL) as the base entity definition framework and implements twin message routing rules on top of these definitions. In addition, KTWIN enhances the existing specification language by adding system-related information that can be configured to customize the deployment settings of a Digital Twin use case.

The KTWIN resource definition framework is divided into two main definition resources: Twin Interface and Twin Instance. The Twin Interface describes the attributes, relationships, and other types of content common to any Digital Twin of that type, and is therefore reusable. A Twin Instance is an instance of some interface in the real world. For example, a Twin Interface Car has attributes, such as color, year, and length, and relationships, such as owner and wheels. A Twin Instance can then be created for each car that belongs to a Digital Twin, where each vehicle is unique and has its corresponding representation in the real world. In addition, users may also define system-specific settings such as allocated resources and auto-scaling attributes.

The Twin Interface definition allows domain experts to describe properties, relationships, and commands, and to inherit from a parent interface. The Twin Instance definition allows users to create instances of interfaces and configure relationships with other instances. Tables 1 and 2 show the fields in each resource specification.

Table 1: Fields of the Twin Interface resource specification.

| Field | Description |
| --- | --- |
| Name | The unique name that identifies the Twin Interface. |
| Properties | List of properties that define the Twin Interface. A property has a name, description, and data type or schema definition (in case of an enumeration). |
| Relationships | List of relationships with other Twin Interfaces. A relationship has a name, a description, a target Twin Interface, and a multiplicity indicator. |
| Commands | List of commands and actions that can be executed in a Twin Interface. Only Twin Interfaces with a common relationship can trigger a command of a Twin Interface. A command has a name, description and schema definition. |
| Parent Twin Interface | A Twin Interface can extend another Interface. In this case, all properties, relationships, and commands are inherited by the child Interface. |
| Service Settings | Operators can define the container implementation used to process events of the specific Twin Interface, the CPU and memory reserved for the container, as well as auto-scaling metrics. |
| Routing and Persistence Settings | Operators may also define whether a specific type of event must be persisted in the Event Store, or if some Instance’s events must be propagated to an Instance with a relationship for data aggregation. |

Table 2: Fields of the Twin Instance resource specification.

| Field | Description |
| --- | --- |
| Name | The unique name that identifies the Twin Instance. |
| Twin Interface | The Twin Interface that the Instance is associated with. |
| Properties | The list of properties defined in the Twin Interface. Each Twin Instance property has the same name as defined in the Interface, together with its corresponding value. |
| Relationships | The list of relationships defined in the Twin Interface. A relationship has a name, the target Twin Interface type and the target Twin Instances. |
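
To make the two resource types more concrete, the following is a minimal sketch of how the fields in Tables 1 and 2 could be represented in memory. It is an illustration only: the class and attribute names, the string encoding of data types and multiplicity, and the `validate` helper are assumptions, not the actual KTWIN CRD schema. Service, routing, and persistence settings are left out for brevity.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Relationship:
    name: str
    target_interface: str
    multiplicity: str = "many"  # hypothetical encoding of the multiplicity indicator


@dataclass
class TwinInterface:
    name: str
    properties: Dict[str, str] = field(default_factory=dict)  # property name -> data type
    relationships: List[Relationship] = field(default_factory=list)
    commands: List[str] = field(default_factory=list)
    parent: Optional["TwinInterface"] = None

    def all_properties(self) -> Dict[str, str]:
        """Own properties plus those inherited through the parent chain."""
        inherited = self.parent.all_properties() if self.parent else {}
        return {**inherited, **self.properties}


@dataclass
class TwinInstance:
    name: str
    interface: TwinInterface
    properties: Dict[str, object] = field(default_factory=dict)        # property name -> value
    relationships: Dict[str, List[str]] = field(default_factory=dict)  # relationship -> target instances

    def validate(self) -> None:
        """Reject property values whose names the interface does not define."""
        unknown = set(self.properties) - set(self.interface.all_properties())
        if unknown:
            raise ValueError(f"unknown properties: {sorted(unknown)}")


# The Car example from the text, with a hypothetical parent interface.
vehicle = TwinInterface(name="vehicle", properties={"year": "integer"})
car = TwinInterface(
    name="car",
    properties={"color": "string", "length": "double"},
    relationships=[Relationship("owner", "person", "one"), Relationship("wheels", "wheel")],
    parent=vehicle,
)
car_001 = TwinInstance("car-001", car, {"color": "red", "year": 2020},
                       {"wheels": ["wheel-1", "wheel-2"]})
car_001.validate()  # passes: "year" is inherited from the parent interface
```

The sketch keeps the interface reusable and the instance lightweight: an instance only stores values and relationship targets, while names, types, and inheritance live in the interface.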

The resources described previously are used by the KTWIN Operator to build an event routing mechanism without any manual intervention from the DT operator. The message routing rules support the following communication flows: (1) an event generated by the real device instance and sent to the Twin Service, (2) an event generated by the Twin Service instance and sent to the corresponding real device, (3) an event generated by some Twin Service and sent to another Twin Service (these twins must have some relationship), and (4) an event generated by some Twin Service and published to the Event Store. KTWIN uses the Twin Interface and Twin Instance definitions, as well as their relationships, to build built-in event propagation and routing rules within the Event Broker.

KTWIN divides events into four subcategories that identify the context in which an event happened: real, virtual, command, and store. The subcategory is included in the event message, together with the Twin Interface and Twin Instance identifiers, to identify the source of the event and determine its targets. In the case of an MQTT connection, real devices publish events to an Event Broker topic containing the Twin Interface and the Twin Instance the event is associated with, such as ktwin.real.<twin-interface>.<twin-instance>. For HTTP and AMQP requests, this information is provided in the message headers. Events published by devices following this pattern are routed to the corresponding Twin Service. The same devices can subscribe to events generated by their Twin Service counterpart in the topic ktwin.virtual.<twin-interface>.<twin-instance>.

Twin Services with a common relationship in the twin graph can propagate events to each other using the command event category, with the following pattern in the message header: ktwin.command.<twin-interface>.<twin-instance>.<command-name>. In addition, events that are supposed to be routed to the Event Store for persistence use the following routing header: ktwin.store.<twin-interface>.<twin-instance>. Finally, in case some Twin Interface does not need to compute anything from the generated device event, users may configure KTWIN to route real events directly to the Event Store using the routing and persistence settings in the Twin Interface specification. Figure 2 shows how the routing implementation works with hypothetical examples of A and B entities.
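
The key patterns listed above can be sketched as a pair of helpers that build and decode them. This is not the KTWIN SDK: `build_key`, `parse_key`, and `RoutingKey` are hypothetical names, and the sketch assumes that interface, instance, and command identifiers contain no dots.

```python
from typing import NamedTuple, Optional

CATEGORIES = ("real", "virtual", "command", "store")


class RoutingKey(NamedTuple):
    category: str
    interface: str
    instance: str
    command: Optional[str] = None


def build_key(category: str, interface: str, instance: str,
              command: Optional[str] = None) -> str:
    """Build a key such as ktwin.real.<twin-interface>.<twin-instance>."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown event category: {category}")
    if (category == "command") != (command is not None):
        raise ValueError("a command name is required for command events only")
    parts = ["ktwin", category, interface, instance]
    if command is not None:
        parts.append(command)
    return ".".join(parts)


def parse_key(key: str) -> RoutingKey:
    """Decode a routing key; assumes identifiers contain no dots."""
    parts = key.split(".")
    if len(parts) < 4 or parts[0] != "ktwin" or parts[1] not in CATEGORIES:
        raise ValueError(f"not a KTWIN routing key: {key}")
    expected = 5 if parts[1] == "command" else 4
    if len(parts) != expected:
        raise ValueError(f"unexpected number of segments in: {key}")
    return RoutingKey(*parts[1:])


# Hypothetical interface, instance, and command names.
key = build_key("command", "parking-spot", "spot-17", "update-status")
decoded = parse_key(key)
```

Only command keys carry a fifth segment, so the parser can validate the segment count from the category alone.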

Although the routing keys contain information about the Twin Instance and the Twin Interface, the in-broker routing is mostly done at the interface level, thus reducing the number of routing rules required within the broker. In this context, the routing is implemented using a starts-with (prefix) logic, such as ktwin.<event-category>.<twin-interface>.*. Once the user-implemented service receives the event, the routing key is decoded from the message, so the application logic knows which instance owns that message. The application later uses this information to propagate data to the corresponding related entities using command events, or to persist data in the Event Store. In case some command event must be generated, the application must have access to the graph relations. These steps introduce some complexity for end users, hence KTWIN offers a Software Development Kit (SDK) to be imported by end users when writing their functions. The SDK implements the routing key decoding and the command-based event propagation based on twin graph data in a user-friendly way.
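
The interface-level prefix matching described above can be sketched as follows. The `InterfaceRouter` class and the queue names are hypothetical, and a plain dictionary stands in for the broker bindings; the point is only that one rule per category and interface serves every instance of that interface.

```python
from typing import Dict, Optional


class InterfaceRouter:
    """Maps ktwin.<event-category>.<twin-interface>.* prefixes to queue names."""

    def __init__(self) -> None:
        self._bindings: Dict[str, str] = {}

    def bind(self, category: str, interface: str, queue: str) -> None:
        # The trailing dot prevents "car" from also matching "carpark".
        self._bindings[f"ktwin.{category}.{interface}."] = queue

    def route(self, routing_key: str) -> Optional[str]:
        """Return the queue bound to the key's prefix, or None if unbound."""
        for prefix, queue in self._bindings.items():
            if routing_key.startswith(prefix):
                return queue
        return None


router = InterfaceRouter()
router.bind("real", "car", "car-service-queue")
router.bind("real", "carpark", "carpark-service-queue")

# Any number of car instances share the single "car" rule.
queues = [router.route(f"ktwin.real.car.car-{i:03d}") for i in range(3)]
```

Because the instance identifier is not part of the rule, adding Twin Instances does not add bindings; the receiving service recovers the instance from the routing key itself.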

4.4 Data Management and Storage

The Digital Twin definition graph defined by the domain experts is stored within the Control Plane store and maintained by the Orchestrator. It allows DT operators to easily manage and visualize the defined Twin Interfaces and their corresponding instances, attributes and commands. However, the Twin Graph information must also be available to the Application Plane service implementations during event processing. Hence, the Digital Twin graph data is replicated and cached in the Twin Graph Cache so that incoming Application Plane requests do not hit Control Plane components, reducing the response time and minimizing Control Plane disruption. The graph cache implementation uses a key-value in-memory data store.

Application Plane twin events are persisted into a scalable and reliable Event Store database. Events are stored in a time series format containing the history of all events generated for a specific instance during its lifespan. Events are asynchronously published to the Event Broker and routed to the Event Store Dispatcher before being persisted in the database by the Event Store Service. In addition to the event payload, the persisted data includes the timestamp at which the event occurred, and the Twin Instance and Twin Interface that the event belongs to.

In addition to data persistence, the Event Store Service provides APIs to consume past events persisted in the data store. User-defined services can query the latest stored status of some Twin Instance and perform business logic using this data while processing incoming events. Additionally, external applications can consume a batch history of events of a specific Twin Instance, or query events of several instances of the same interface, for further data analytics and machine learning. Data analytics and machine learning discussions are out of the scope of this initial research and are future research opportunities for KTWIN use cases.
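
The two access patterns described above, latest status and batch history, can be sketched with a small in-memory stand-in for the Event Store. The class and method names are hypothetical and do not reflect the actual Event Store Service API; a real deployment would delegate these queries to the database.

```python
from collections import defaultdict
from typing import Any, Dict, List, Optional, Tuple

Event = Tuple[str, float, Dict[str, Any]]  # (instance, timestamp, payload)


class InMemoryEventStore:
    def __init__(self) -> None:
        # (interface, instance) -> list of (timestamp, payload), kept sorted by timestamp
        self._series: Dict[Tuple[str, str], List[Tuple[float, Dict[str, Any]]]] = defaultdict(list)

    def append(self, interface: str, instance: str, timestamp: float,
               payload: Dict[str, Any]) -> None:
        series = self._series[(interface, instance)]
        series.append((timestamp, payload))
        series.sort(key=lambda item: item[0])  # tolerate out-of-order arrival

    def latest(self, interface: str, instance: str) -> Optional[Dict[str, Any]]:
        """Latest stored status of one Twin Instance, or None if it has no events."""
        series = self._series.get((interface, instance))
        return series[-1][1] if series else None

    def batch(self, interface: str, start: float, end: float,
              instance: Optional[str] = None) -> List[Event]:
        """Events with start <= timestamp < end, for one instance or a whole interface."""
        events: List[Event] = []
        for (iface, inst), series in self._series.items():
            if iface != interface or (instance is not None and inst != instance):
                continue
            events.extend((inst, ts, payload) for ts, payload in series if start <= ts < end)
        return sorted(events, key=lambda event: event[1])


store = InMemoryEventStore()
store.append("parking-spot", "spot-1", 20.0, {"status": "occupied"})
store.append("parking-spot", "spot-1", 10.0, {"status": "free"})
store.append("parking-spot", "spot-2", 15.0, {"status": "closed"})
```

Keying the series by interface and instance mirrors the persisted fields named in the text and lets the same store answer both per-instance and per-interface queries.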

5 KTWIN System Design and Implementation

This section describes the high-level KTWIN prototype implemented in this study. First, it presents the implementation architecture, how each component was implemented, and the corresponding chosen technologies. Next, we show how KTWIN offers Digital Twin modeling abstractions using Kubernetes Custom Resources and implements event definitions and routing using the Cloud Event specification. The section also presents how collected device data is stored within the Event Store to allow further data analysis. Finally, we conclude the section by presenting how KTWIN implements observability requirements. The implemented prototype and deployment setup are available at https://github.com/Open-Digital-Twin.

5.1 KTWIN Architecture Implementation

The KTWIN implemented prototype embraces Cloud-Native technologies allowing end-user applications to be implemented, deployed, and orchestrated as containerized microservices providing resilience and scalability. Figure 3 shows the building blocks of the implementation evaluated in this investigation.

The main personas involved in the KTWIN development and operational process are the DT Domain Expert, the DT Developer and the DT Operator. The DT Operator creates the Custom Resource Definition (CRD) files to model the Digital Twin use case within KTWIN and applies the resources using the Kubernetes CLI. The CRD files are based on the ontology definition originally provided by the DT Domain Expert. The provided files are processed by the KTWIN Operator, developed in Golang using Kubebuilder, an open-source SDK library that facilitates seamless interaction with Kubernetes Control Plane APIs. These CRD files are stored in the Kubernetes etcd storage, ensuring reliable and consistent data management. The KTWIN Operator creates the required Application Plane resources to instantiate the defined use case. The container-based functions implemented by the DT Developer are pulled from a container registry and deployed as Twin Services. Finally, the DT Domain Expert can analyze the processed data using a visualization tool that fetches the data from the Event Store.

The Twin Graph Service pulls the defined graph stored in the etcd storage through the Kubernetes Control Plane APIs, splits it into subgraphs, one per Twin Interface, that contain all of its Twin Instances and their relationships, and caches them in Redis, an in-memory key-value store. The cache service exposes the subgraph data via a RESTful API so user-defined services can fetch the graph information of a particular Twin Interface while the service starts up. The subgraph content must be immediately available and provide fast data access to reduce the impact on the cold start time of the service.
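
The subgraph split described above can be sketched as follows. A plain dictionary stands in for Redis, and the `twin-graph:<interface>` key format, the JSON encoding, and the input record shape are assumptions chosen for illustration rather than details taken from the prototype.

```python
import json
from collections import defaultdict
from typing import Any, Dict, List


def split_into_subgraphs(instances: List[Dict[str, Any]]) -> Dict[str, List[Dict[str, Any]]]:
    """Group Twin Instance records by the Twin Interface they belong to."""
    subgraphs: Dict[str, List[Dict[str, Any]]] = defaultdict(list)
    for inst in instances:
        subgraphs[inst["interface"]].append(
            {"name": inst["name"], "relationships": inst.get("relationships", {})}
        )
    return dict(subgraphs)


def cache_subgraphs(cache: Dict[str, str], subgraphs: Dict[str, List[Dict[str, Any]]]) -> None:
    """Store one serialized subgraph per interface under a hypothetical key format."""
    for interface, members in subgraphs.items():
        cache[f"twin-graph:{interface}"] = json.dumps(members, sort_keys=True)


def load_subgraph(cache: Dict[str, str], interface: str) -> List[Dict[str, Any]]:
    """What a Twin Service would fetch once at start-up for its own interface."""
    raw = cache.get(f"twin-graph:{interface}")
    return json.loads(raw) if raw is not None else []


# Hypothetical Smart City instances.
instances = [
    {"name": "pole-1", "interface": "smart-pole", "relationships": {"neighborhood": ["nb-1"]}},
    {"name": "pole-2", "interface": "smart-pole", "relationships": {"neighborhood": ["nb-1"]}},
    {"name": "nb-1", "interface": "neighborhood"},
]
cache: Dict[str, str] = {}
cache_subgraphs(cache, split_into_subgraphs(instances))
pole_subgraph = load_subgraph(cache, "smart-pole")
```

Storing one entry per interface means a service needs a single key lookup at start-up, which is consistent with the cold-start concern raised in the text.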

KTWIN Application Plane components are implemented on top of Knative [30], an open-source Kubernetes-based platform designed to build, deploy, and manage modern serverless workloads. Knative extends Kubernetes to provide a set of middleware components that enable the deployment and management of serverless applications. It is the core foundation of Cloud Run in Google Cloud Platform (GCP), bringing together the benefits of Serverless and containers, with a reported threefold increase in developer productivity [31].

The Event Broker, one of the core components of KTWIN, was implemented by instantiating a Knative Broker resource, which deploys a RabbitMQ broker and provides an endpoint for event ingress to which producers can post their events. RabbitMQ is a scalable and reliable messaging broker [32] able to handle millions of concurrent IoT connections [33]. In addition, RabbitMQ supports open standard protocols, including AMQP and MQTT, and provides MQTT-AMQP protocol interoperability [33] and header-based routing.

The Twin Services were implemented as independent application containers and deployed using the Knative Service custom resource. The CRD creates the required Kubernetes resources to allow scaling multiple replicas of the container up and down, and exposes an HTTP endpoint within the cluster. The Twin Service event subscription was implemented using a Knative Trigger custom resource. A Trigger allows users to define, for each service, which events will be forwarded to it based on message metadata. The routing rules are implemented within the Event Broker using the RabbitMQ Topology Operator and use Cloud Event [34] specification metadata fields. Knative Dispatchers subscribe to events in the Event Broker queues and post them to the corresponding Twin Services based on the provided routing rules. Events generated by Twin Services are published to the Event Broker Ingress and routed to the corresponding subscriber.

The Event Store Dispatcher is a standard subscriber created during the KTWIN installation steps that subscribes to events with the Event Store as the target. These events are posted to the Event Store Service and persisted in a ScyllaDB cluster. ScyllaDB is a NoSQL distributed database, compatible with Apache Cassandra, designed for data-intensive applications requiring high performance and low latency [35]. The ScyllaDB database was deployed using the official ScyllaDB Operator [36] to automate the NoSQL cluster deployment process and operational tasks such as scaling, auto-healing, rolling configuration changes, and version upgrades.

The implemented prototype also includes a set of MQTT publisher applications developed in Golang and deployed as containers to produce the IoT-generated data used during the evaluation scenarios. The containerized applications allow the creation of a configurable number of threads, enabling the execution of thousands of MQTT publisher devices within a single container without exceeding the Kubernetes limit of pods per node. Although KTWIN allows devices to publish messages in different protocols, such as HTTP Cloud Event-based messages or AMQP, the implemented devices use MQTT because it is widely adopted in IoT systems due to its lightweight design [37].

The devices publish MQTT events to RabbitMQ Event Broker which provides AMQP-MQTT protocol interoperability. However, RabbitMQ does not support MQTT routing based on Cloud Event specification headers. To address this limitation, KTWIN Dispatchers convert the MQTT message routing, originally based on RabbitMQ routing-key routing, to a header key routing rule. This conversion ensures that MQTT edge device messages are properly converted to Cloud Events. The same limitation does not exist for AMQP or HTTP messages, since they are compatible with Cloud Event spec. This limitation in RabbitMQ opens up possibilities for future enhancements in the proposed implementation.
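
The conversion performed by the dispatchers can be sketched in both directions. The required Cloud Event context attributes (`specversion`, `id`, `source`, `type`) come from the CloudEvents specification; everything else here is an assumption: the function names are hypothetical, and the choice of `ktwin.<category>.<twin-interface>` as the event type with the Twin Instance as the source is one plausible mapping, not necessarily the one used by the prototype.

```python
import uuid
from datetime import datetime, timezone
from typing import Any, Dict


def mqtt_to_cloud_event(routing_key: str, payload: bytes) -> Dict[str, Any]:
    """Turn a device message published under ktwin.real.<interface>.<instance>
    into a Cloud Event whose attributes can drive header-based routing."""
    prefix, category, interface, instance = routing_key.split(".", 3)
    if prefix != "ktwin" or category != "real":
        raise ValueError(f"not a real-device routing key: {routing_key}")
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "type": f"ktwin.real.{interface}",  # assumed type convention (interface level)
        "source": instance,                 # assumed: the instance is the event source
        "time": datetime.now(timezone.utc).isoformat(),
        "data": payload,
    }


def cloud_event_to_mqtt_key(event: Dict[str, Any]) -> str:
    """Turn a virtual Cloud Event back into the topic-style key a device subscribes to."""
    prefix, category, interface = event["type"].split(".", 2)
    if prefix != "ktwin" or category != "virtual":
        raise ValueError(f"not a virtual event type: {event['type']}")
    return f"ktwin.virtual.{interface}.{event['source']}"


# Hypothetical device message and the reply sent back by its Twin Service.
event = mqtt_to_cloud_event("ktwin.real.smart-pole.pole-42", b'{"lux": 310}')
reply = dict(event, id=str(uuid.uuid4()), type="ktwin.virtual.smart-pole")
reply_key = cloud_event_to_mqtt_key(reply)
```

Keeping the interface in the type and the instance in the source matches the interface-level routing described earlier, since the broker only needs to inspect the type.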

Finally, KTWIN provides observability capabilities and allows DT Operators to monitor the service’s health in Grafana dashboards. The Grafana dashboard built for this prototype displays metrics scraped by Prometheus including event throughput per event type, application CPU and memory consumption, and response time.

5.2 Kubernetes Custom Resources

A Kubernetes Custom Resource Definition (CRD) is a way to extend the Kubernetes API by defining and managing custom resource types. KTWIN makes use of CRDs to allow Digital Twin Domain Experts to define Digital Twin entities, their attributes and relationships, in addition to system-specific definitions, such as allocated CPU or memory and auto-scaling policies. The developed KTWIN prototype enhances the open-source Digital Twins Definition Language (DTDL) by adding system-specific configuration to the ontology-based definition language. The implemented prototype has two DTDL-based CRDs: Twin Interface and Twin Instance.

The Twin Interface custom resource represents some Digital Twin entity and it describes its name, attributes, relationships, commands, service auto-scaling policies, the related container image that implements the interface behavior and the amount of memory and CPU requested for the container. The Twin Instance is an instance of some interface in the real world. The instance custom resource contains the instance name, the corresponding Twin Interface identifier, and references to the related Twin Instances to which there is a relationship. Both custom resources are defined using the YAML format and can be applied to the cluster using the Kubernetes standard client kubectl.

The KTWIN Operator was built on top of Kubebuilder, which provides an SDK to interact with Kubernetes Control Plane APIs. Creating a new KTWIN custom resource instance triggers the creation of a variety of Kubernetes resources in the Application Plane. These steps are orchestrated by the KTWIN Operator and the Kubernetes Control Plane APIs. The implemented solution uses the RabbitMQ Topology Operator, which allows the creation of in-broker resources such as queues, bindings and exchanges using Kubernetes Custom Resources. The KTWIN Operator uses these custom resources to instantiate the broker elements required to implement the routing rules based on the provided Digital Twin entities, their relationships and commands.

5.3 Events Definition and Routing

KTWIN events definition and routing rely on in-broker routing mechanisms. Figure 4 shows how KTWIN enhances the routing rules implemented by Knative using RabbitMQ exchanges, queues and bindings. The Broker’s internal resources are created using the RabbitMQ Messaging Topology Kubernetes Operator which manages RabbitMQ messaging topologies using Kubernetes Custom Resources.

Figure 4 shows that devices publish events to the MQTT topic ktwin.real.<twin-interface>.<twin-instance>. RabbitMQ natively supports MQTT-AMQP interoperability by emulating the MQTT broker via a single MQTT Topic Exchange, so all publishers and subscribers are virtually connected to the same exchange with a routing key such as ktwin.real.<twin-interface>.<twin-instance>. For each Twin Interface created by the Operator, KTWIN creates routing key-based bindings in the MQTT Topic Exchange to redirect messages to the MQTT Dispatcher Queue. The MQTT Dispatcher subscribes to these routing-key-based messages, converts them to Cloud Event messages, and republishes them to the Broker Exchange. The Broker Exchange uses the Cloud Event type header to redirect messages to the corresponding queue and subscribers. The Broker Exchange bindings include routing rules for (1) events generated by the real instance and redirected to its virtual instance service, (2) events generated by a Twin Service and sent to another Twin Service (Command), (3) events propagated to the Event Store, and (4) events generated by the virtual instance and sent to the real instance. The events generated by Twin Services that must be redirected to the real devices are converted from a Cloud Event to a routing key message by the Cloud Event Dispatcher, republished to the MQTT Topic Exchange, and finally delivered to the device subscribed to the MQTT topic ktwin.virtual.<twin-interface>.<twin-instance>.

KTWIN provides a Software Development Kit (SDK) for handling events and propagating them to edge devices, event stores or related virtual services. The SDK implements all the complexity of event handling using the Twin Graph entity relation data, allowing end users to focus on the application logic. KTWIN provides an SDK version in both Golang and Python programming languages. An SDK implementation example is provided in the following sections.

5.4 Graph and Events Storage

The Twin Graph defined by end users is stored as CRDs in the Kubernetes etcd storage, an open-source, distributed, consistent key-value store for shared configuration in distributed systems or clusters. The graph data are replicated and cached in the Twin Graph Redis instance, a key-value in-memory cache providing submillisecond response times. Twin Services require access to the Twin Graph to publish data to entities with which they have relationships. This information is fetched from the Twin Graph Service RESTful APIs during service start-up, hence requiring a very fast response time to reduce the impact on the cold start. The Twin Graph information does not need to be fetched by the end-user application code, since the KTWIN SDK implements this logic and provides a user-friendly interface to consume these data.

The Event Store database stores the entire history of Twin Instance events during their life cycle. The Event Store was implemented using ScyllaDB, an open-source NoSQL database for data-intensive applications that require high performance and low latency. ScyllaDB provides high availability, scalability, and efficient resource utilization, and is used in a large number of use cases, including real-time analytics and IoT data storage. User-defined services can consume the latest state of some twin through the Event Store Service. In addition, external systems can fetch a batch of persisted Twin Instance events using the same APIs.

5.5 Observability Solution

Observability is crucial for understanding the health, performance, and behavior of distributed systems. KTWIN Observability was implemented using Prometheus and Grafana. Prometheus is an open-source monitoring and alerting toolkit designed for reliability and scalability. It scrapes metrics from various services and stores them in a time-series database, enabling real-time monitoring and alerting. Grafana is an open-source analytics and monitoring platform that allows users to define and configure dashboards to visualize Prometheus metrics. Together, Prometheus and Grafana provide a powerful observability stack that allows KTWIN users to gain insights into the system, detect issues early, and ensure optimal performance.

KTWIN provides a dashboard that allows the DT Operator to monitor the number of different event types processed, the CPU and memory usage of the service, as well as its response time. The dashboard was built and used during the evaluation scenarios of this investigation. Prometheus scrapes KTWIN components metrics data through the metrics endpoint at regular intervals defined in its configuration. The scraped data is then stored in its time-series database to be consumed by Grafana.

6 Evaluation Methodology and Results

In this section, the evaluation methodology of this research is described. The evaluation involves designing, implementing, and deploying a Smart City Digital Twin use case using KTWIN. First, this section describes in more detail the ontology of the selected use case as well as the implementation details, followed by the different evaluation scenarios, and the evaluation setup. The final sections present the evaluation results of the executed experiments, including service scalability and the KTWIN Serverless capabilities analysis.

6.1 Smart City Evaluation Use Case

In order to evaluate the proposed solution, a Smart City use case was designed, implemented, and deployed using KTWIN. The selected use case was based on the DTDL ontology for Smart Cities developed by Open Agile Smart Cities (OASC) and Microsoft [38]. The open-source ontology model was publicly released to accelerate the development of Digital Twin-based solutions for Smart Cities. The DTDL is composed of ontology-based definitions from NGSI-LD information model specification [39] and ETSI SAREF ontologies for Smart Cities (Saref4City) [40]. The final model comprises entities in Urban Mobility, Environment, Waste, Parking, Buildings, Parks, Ports, City Objects, Administrative Areas, and more. A summary of the corresponding group of Twin Interfaces created for the evaluation scenarios is presented in more detail as follows.

Administrative Area: set of entities that represent the organizational structure of a city. At the highest level, the City represents a large human settlement comprising administrative regions such as neighborhoods or districts. A Neighborhood is a geographically localized community within a city, suburb, or rural area, functioning as a smaller administrative unit. Neighborhoods can compute and aggregate data from various entities, such as monitoring air quality in specific regions or tracking the availability of public parking spots in designated areas.

Utilities: this category encompasses a set of entities designed to provide essential resources for residents. A Smart Pole is located on a specific street within a neighborhood and is equipped with various sensors to monitor environmental quality metrics, including air quality, noise levels, crowd density, traffic flow, and weather conditions. Additionally, a Smart Pole can integrate with an Electric Vehicle (EV) Charging Station to support sustainable transportation. Streetlights are associated with Smart Poles, enabling the monitoring of lighting levels across different city regions. If a lamp becomes defective, the system can detect it and provide actionable information for timely replacement.

Environment: set of entities used to measure the environmental quality observed in a certain neighborhood of the city. The Air Quality Observed entity represents the air quality in a certain region of the city during a given period. It registers the density of different gases in a certain area, such as Carbon dioxide (CO2), Carbon monoxide (CO), and Sulfur dioxide (SO2). The implemented service classifies the Air Quality Index (AQI) [41] of the area into Good, Moderate, Unhealthy for Sensitive Groups, Unhealthy, Very Unhealthy, and Hazardous based on the gas density observed by the sensors. The service also updates the air quality index of the neighborhood interface to which it is related. The weather observations of a smart pole region are registered in the Weather Observed interface. The interface contains weather-related information such as temperature, precipitation, humidity, snow height, wind direction and speed, and atmospheric pressure. The implemented service aggregates the measured data and computes additional information such as the dew point, feels-like temperature, and pressure tendency. Lastly, the Noise Level Observed entity contains information about the sound pressure level in a certain region of the city.
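
Two of the computations mentioned above can be sketched briefly. The code below is not the evaluated service: it assumes the AQI value has already been derived from the gas concentrations, uses the band limits of the US EPA scale for the six categories, and estimates the dew point with the Magnus approximation, a common formula that the prototype may or may not use. Function names are hypothetical.

```python
import math

# Upper bound of each AQI band (US EPA scale) and its category label.
AQI_BANDS = [
    (50, "Good"),
    (100, "Moderate"),
    (150, "Unhealthy for Sensitive Groups"),
    (200, "Unhealthy"),
    (300, "Very Unhealthy"),
]


def aqi_category(aqi: float) -> str:
    """Map an already computed AQI value to its category."""
    if aqi < 0:
        raise ValueError("AQI cannot be negative")
    for upper, label in AQI_BANDS:
        if aqi <= upper:
            return label
    return "Hazardous"


def dew_point_celsius(temperature_c: float, relative_humidity: float) -> float:
    """Dew point via the Magnus approximation.

    relative_humidity is a percentage in the range (0, 100].
    """
    if not 0 < relative_humidity <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    a, b = 17.62, 243.12  # Magnus coefficients for water vapour, b in degrees Celsius
    gamma = math.log(relative_humidity / 100.0) + a * temperature_c / (b + temperature_c)
    return b * gamma / (a - gamma)


category = aqi_category(120)
saturated = dew_point_celsius(20.0, 100.0)  # at 100% humidity the dew point equals the temperature
```

In a Twin Service, results like these would be written to the instance state and, for the air quality index, propagated to the related neighborhood through a command event.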

Mobility: set of entities used for monitoring and managing urban mobility. The Crowd Flow Observed entity tracks crowd congestion, including the number of people, flow direction, and average speed at locations like subway stations or bus terminals. Traffic Flow Observed monitors traffic conditions, providing data such as vehicle spacing and headway times to assess congestion levels. EV Charging Stations are smart facilities linked to Smart Poles, enabling electric vehicles to charge with status updates like available, in use, or out of service, and compatibility with specific vehicle types. Parking Spots represent designated parking areas, with dynamic statuses (free, occupied, or closed) and can be categorized as Off-Street (e.g., garages) or On-Street (e.g., public roads). Parking Groups optionally organize spots by criteria like floor or street.

Cross-Domain: a set of generic entities that can be shared across different domains. A Device can be any kind of sensor, actuator, or camera that generates data for the system. Devices are associated with most of the entities listed above. For instance, a device is used to measure the air quality of a certain area of the city or to report the availability of a parking spot. The Device interface is designed to monitor operational data from sensors; for example, each device reports its own battery level, which can be measured to monitor the device’s health.

The open-source DTDL ontology was converted to KTWIN CRD files using the KTWIN CLI implemented as part of the prototype. Each DTDL entity was mapped to a Twin Interface and a set of Twin Instances, which were later applied to the cluster to be provisioned by the KTWIN Operator. The CRD files mostly contain the entity’s data model definitions, such as attributes, data-type definitions, and existing interface relationships. The Smart City design effort also included the creation of additional properties and commands, not available in the original DTDL, to fulfill the event definition scenarios described in Table 3. Finally, a set of container-based applications was developed using the KTWIN SDK, a library that abstracts event handling, propagation, and persistence within KTWIN. The containers were implemented as stateless functions that handle events for the deployed Twin Instances and were defined in the deployment specification files. The same artifacts included parameterized settings to configure auto-scaling policies and control the allocated CPU and memory. The generated artifacts for the prototype evaluation are available on GitHub (https://github.com/Open-Digital-Twin/ktwin-article).

6.2 Evaluation Scenarios and Metrics

The evaluation scenario aims to reproduce a subset of the many events that occur during a day in the life of a Smart City. The simulation design took into account (1) events generated throughout the day at a defined frequency, which contribute fixed data traffic, and (2) events generated at specific moments of the day, which either do not follow a well-established frequency or are triggered when some event criterion in the system is met, and which contribute unpredictable data traffic. In this context, environmental measurements such as the air quality index, city weather, temperature, and observed noise level are generated at a fixed frequency throughout the day. In contrast, events directly related to city mobility, such as observed crowd and traffic flows, changes in parking spot availability, and the monitoring of available electric vehicle charging stations, occur at variable frequencies depending on the city’s peak and off-peak hours. Another example of unpredictable traffic is the monitoring of the battery level of sensor devices: each device has its own battery consumption and recharge characteristics, so it is difficult to predict when this kind of event will be generated. In-system events, such as the Air Quality Index warning alert raised when a Smart Pole identifies a low-quality air measurement, are also part of the evaluation scenario.

Table 3 shows the events implemented and analyzed in the evaluation scenario with their corresponding time interval variation for peak and off-peak hours. The simulation compresses 24 hours into 24 minutes, which means that a 10-second interval represents ten minutes in the real world, allowing several evaluation experiments to be executed in a reduced timeframe. The experiment divides the simulated day into six windows of four hours each, corresponding to 240 seconds of simulation time. Each event type may differ in frequency within each time window, which reproduces the variation of events at different moments of the day, such as a city’s peak and off-peak hours. In addition to the MQTT events generated by devices, Twin Services generate virtual events back to the edge devices and publish events for command executions and data persistence.

| Event Type | Interval |
| --- | --- |
| Air Quality observed in the Smart Pole neighborhood | 10s |
| Noise Level observed in the Smart Pole neighborhood | 10s |
| Weather observed in the Smart Pole neighborhood | 10s |
| Crowd Flow observed in the Smart Pole neighborhood | 5s - 30s |
| Traffic Flow observed in the Smart Pole neighborhood | 5s - 10s |
| Streetlight’s on/off status | 720s |
| Electric Vehicle Station availability | 10s - 80s |
| Public Parking Spot availability | 5s - 80s |
| Update available spots in public parking | 2s - 80s |
| Sensor devices battery level health status | 460s |
| Air Quality Index warning alert to Neighborhood | In-system event |
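
The time compression and the resulting event rates can be checked with a short worked example. This is a sketch under one stated assumption: every instance of an entity emits exactly one event per interval. The helper names are illustrative and not part of KTWIN.

```python
REAL_DAY_SECONDS = 24 * 60 * 60  # one real-world day
SIM_DAY_SECONDS = 24 * 60        # the 24-minute simulated day
COMPRESSION = REAL_DAY_SECONDS // SIM_DAY_SECONDS  # 60x time compression
WINDOWS_PER_DAY = 6              # six windows of four real-world hours each


def sim_to_real_seconds(sim_seconds: float) -> float:
    """Real-world duration represented by a span of simulation time."""
    return sim_seconds * COMPRESSION


def window_length_sim_seconds() -> int:
    """Length of one of the six daily windows, in simulation seconds."""
    return SIM_DAY_SECONDS // WINDOWS_PER_DAY


def events_per_second(instances: int, interval_seconds: float) -> float:
    """Aggregate rate, assuming each instance emits one event per interval."""
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    return instances / interval_seconds
```

Here `sim_to_real_seconds(10)` is 600 seconds (ten minutes) and `window_length_sim_seconds()` is 240. With 50 Air Quality Observed instances publishing every 10 seconds, `events_per_second(50, 10)` gives 5 events per second, and 1000 instances give 100, which agrees with the 5 - 100 range reported for `ktwin.real.ngsi-ld-city-airqualityobserved` in Table 4.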

Table 4 shows all cloud event types implemented and deployed using KTWIN in the evaluation scenarios. Each event type contains the identifier of the Twin Interface with which the event is associated. In addition, each event has a subtype identifier that is used to route the event to the corresponding target. The subtypes are the following: real, virtual, command, and store. Real devices generate events with the real subtype, whereas events with the virtual subtype are generated by their virtual counterparts. The store subtype indicates that the event must be routed to the Event Store, and the command subtype represents a message exchanged between two instances that share a relationship. Table 4 also contains the throughput range, in events per second, published to the Event Broker for the different city sizes deployed in the evaluation scenarios.

| Event Type | Throughput Range (events per second) |
| --- | --- |
| ktwin.command.city-pole.updateairqualityindex | 5 - 126 |
| ktwin.command.ngsi-ld-city-offstreetparking.updatevehiclecount | 0.5 - 11 |
| ktwin.command.s4city-city-neighborhood.updateairqualityindex | 5 - 118 |
| ktwin.real.ngsi-ld-city-airqualityobserved | 5 - 100 |
| ktwin.real.ngsi-ld-city-crowdflowobserved | 10 - 201 |
| ktwin.real.ngsi-ld-city-device | 7 - 169 |
| ktwin.real.ngsi-ld-city-evchargingstation | 0.2 - 4 |
| ktwin.real.ngsi-ld-city-noiselevelobserved | 5 - 100 |
| ktwin.real.ngsi-ld-city-parkingspot | 0.5 - 10 |
| ktwin.real.ngsi-ld-city-streetlight | 1.7 - 22 |
| ktwin.real.ngsi-ld-city-trafficflowobserved | 10 - 201 |
| ktwin.real.ngsi-ld-city-weatherobserved | 5.5 - 102 |
| ktwin.store.ngsi-ld-city-airqualityobserved | 5 - 125 |
| ktwin.store.ngsi-ld-city-crowdflowobserved | 10 - 208 |
| ktwin.store.ngsi-ld-city-device | 6.7 - 118 |
| ktwin.store.ngsi-ld-city-offstreetparking | 0.5 - 11 |
| ktwin.store.ngsi-ld-city-streetlight | 1.7 - 33 |
| ktwin.store.ngsi-ld-city-trafficflowobserved | 10 - 371 |
| ktwin.store.ngsi-ld-city-weatherobserved | 5 - 127 |
| ktwin.store.s4city-city-neighborhood | 5 - 122 |
| ktwin.virtual.ngsi-ld-city-device | 6.7 - 119 |
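
The naming pattern visible in Table 4 (`ktwin.<subtype>.<interface>` with an extra command name for command events) lends itself to a small parser. The sketch below is inferred from the listed event types only; it is not the KTWIN SDK API, and the route labels in `ROUTES` are hypothetical names chosen to mirror the routing described in the text.

```python
from dataclasses import dataclass
from typing import Optional

SUBTYPES = {"real", "virtual", "command", "store"}

# Hypothetical route labels; only the "store -> Event Store" rule is stated
# explicitly in the text, the remaining labels are illustrative.
ROUTES = {
    "real": "twin-service",
    "virtual": "edge-device",
    "command": "related-twin-service",
    "store": "event-store",
}


@dataclass(frozen=True)
class EventType:
    subtype: str
    interface: str
    command: Optional[str] = None


def parse_event_type(value: str) -> EventType:
    """Split an event type string such as 'ktwin.real.<interface>'."""
    parts = value.split(".")
    if len(parts) not in (3, 4) or parts[0] != "ktwin":
        raise ValueError(f"unexpected event type: {value!r}")
    subtype, interface = parts[1], parts[2]
    if subtype not in SUBTYPES:
        raise ValueError(f"unknown subtype: {subtype!r}")
    command = parts[3] if len(parts) == 4 else None
    # In Table 4, only command events carry a fourth segment.
    if (subtype == "command") != (command is not None):
        raise ValueError(f"malformed event type: {value!r}")
    return EventType(subtype, interface, command)


def route_for(event: EventType) -> str:
    """Return the (hypothetical) route label for a parsed event."""
    return ROUTES[event.subtype]
```

For example, parsing `ktwin.command.city-pole.updateairqualityindex` yields the interface `city-pole` and the command `updateairqualityindex`, while any `ktwin.store.*` event maps to the `event-store` label.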

The experiment sizing was based on the New York City dataset of Mobile Telecommunications Franchise Pole Reservation Locations [42]. The public dataset contains the locations of street light poles, traffic light poles, and utility poles reserved by companies authorized by the New York City Department of Information Technology and Telecommunications (NYCOTI). The report describes the number of poles installed in each of the five main boroughs: Manhattan, Brooklyn, Queens, Bronx, and Staten Island. Regarding the city mobility metrics, the number of off-street parking spots and electric vehicle charging stations in the evaluation scenario was based on public data maintained by the NYC Department of Transportation (DOT) [43].

In this context, the prototype evaluation used the previously mentioned New York City public data to define the sizing scenarios for each Twin Interface of the Smart City Digital Twin Definition model. The evaluation was performed for 1, 5, 10, and 20 neighborhoods to analyze how the system scales when the load increases by 5, 10, and 20 times. Because the experiment timeframe was compressed to 24 minutes, events are generated 60 times faster than in real time, so the number of neighborhoods was reduced accordingly to keep the number of events generated per second at a similar rate. In terms of the number of generated events, the largest experiment setup (20 neighborhoods) therefore represents approximately a 24-hour city of 1200 neighborhoods. The total number of Twin Instances created during the experiment is presented in Table 5.

| Twin Interface (# Twin Instances per scenario) | 1 neighborhood | 5 neighborhoods | 10 neighborhoods | 20 neighborhoods |
| --- | --- | --- | --- | --- |
| Neighborhoods | 1 | 5 | 10 | 20 |
| Smart Poles | 50 | 250 | 500 | 1000 |
| Streetlights | 50 | 250 | 500 | 1000 |
| Air Quality Observed | 50 | 250 | 500 | 1000 |
| Crowd Flow Observed | 50 | 250 | 500 | 1000 |
| Traffic Flow Observed | 50 | 250 | 500 | 1000 |
| Noise Level Observed | 50 | 250 | 500 | 1000 |
| Weather Observed | 50 | 250 | 500 | 1000 |
| EV Charging Station | 1 | 5 | 10 | 20 |
| Off-Street Parking | 1 | 5 | 10 | 20 |
| Off-Street Parking Spots | 20 | 100 | 200 | 400 |
| Sensor Devices | 220 | 1100 | 2200 | 4400 |
| Total | 593 | 2965 | 5930 | 11860 |
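
The totals in Table 5 can be reproduced with a short calculation. The sketch assumes that every Twin Interface scales linearly with the number of neighborhoods, using the single-neighborhood column as the base; the dictionary and function names are illustrative.

```python
# Twin Instances per neighborhood, taken from the 1-neighborhood column.
PER_NEIGHBORHOOD = {
    "Neighborhoods": 1,
    "Smart Poles": 50,
    "Streetlights": 50,
    "Air Quality Observed": 50,
    "Crowd Flow Observed": 50,
    "Traffic Flow Observed": 50,
    "Noise Level Observed": 50,
    "Weather Observed": 50,
    "EV Charging Station": 1,
    "Off-Street Parking": 1,
    "Off-Street Parking Spots": 20,
    "Sensor Devices": 220,
}


def instances_for(neighborhoods: int) -> dict:
    """Twin Instances per interface, assuming linear scaling."""
    return {name: n * neighborhoods for name, n in PER_NEIGHBORHOOD.items()}


def total_instances(neighborhoods: int) -> int:
    """Total number of Twin Instances for a given scenario size."""
    return sum(instances_for(neighborhoods).values())
```

Evaluating `total_instances` for 1, 5, 10, and 20 neighborhoods gives 593, 2965, 5930, and 11860, matching the Total row.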

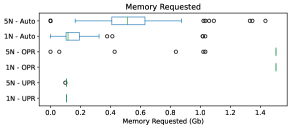

The execution of the experiment was divided into two steps. First, each of the above scenarios was executed three times with the autoscaling and scale-to-zero features enabled to verify how the prototype scales for different city sizes using Knative serverless functions. The minimum number of pods per Twin Instance was set to zero and the maximum to 18. For the Event Store service, the thresholds were set to 1 and 25 because it handles a higher throughput. The upper thresholds correspond to the maximum number of pods that could be created given the memory limits of the nodes on which they were deployed. Autoscaling was configured using the concurrency metric, which determines the number of simultaneous requests that each replica of an application can process at any given time, set to 5. Next, the scenarios with 1 and 5 neighborhoods were executed three times each with a fixed number of pods to reproduce over-provisioning of computing resources (160 pods: 14 per Twin Instance plus 20 for the Event Store) and under-provisioning (13 pods: 1 per Twin Instance plus 3 for the Event Store). Each simulation aimed to reproduce 24 hours of Smart City events in a 24-minute timeframe.
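
The effect of the concurrency setting and the pod bounds can be approximated with a simplified model. This is only a sketch: the real Knative autoscaler averages observations over time windows and has additional modes, none of which are modeled here. The function names are illustrative, and the count of ten twin services is an inference from the fixed-pod totals (140 / 14 and 10 / 1), not a figure stated in the text.

```python
import math


def desired_pods(in_flight_requests: int,
                 target_concurrency: int = 5,
                 min_pods: int = 0,
                 max_pods: int = 18) -> int:
    """Simplified concurrency-based scaling decision, clamped to the bounds."""
    if in_flight_requests < 0:
        raise ValueError("in_flight_requests must be non-negative")
    wanted = math.ceil(in_flight_requests / target_concurrency)
    return max(min_pods, min(max_pods, wanted))


def fixed_pod_total(pods_per_service: int,
                    event_store_pods: int,
                    twin_services: int = 10) -> int:
    """Total pods in a fixed-provisioning run.

    twin_services=10 is inferred from the reported totals, not stated.
    """
    return pods_per_service * twin_services + event_store_pods
```

With the default bounds, an idle service yields `desired_pods(0) == 0` (scale to zero) and 12 in-flight requests yield 3 pods, while a burst of 500 requests is capped at 18. Using the Event Store bounds, `desired_pods(0, min_pods=1, max_pods=25)` stays at 1. The fixed runs give `fixed_pod_total(14, 20) == 160` and `fixed_pod_total(1, 3) == 13`.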

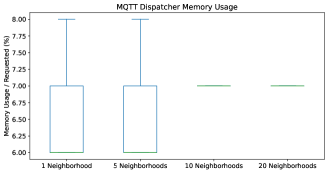

The experiments focused on evaluating performance and resource utilization using the following metrics: the number of pods, CPU, memory, and response times. The number of pods represents the number of deployed service instances and is used to understand how the system scales for different workload sizes. The CPU metrics include CPU requested, reflecting the amount of CPU requested for each service instance, and CPU usage, indicating the actual percentage of resource utilization. Similarly, the memory metrics include memory requested, representing the planned memory allocation, and memory usage, showing real consumption levels. To measure performance under varying loads, response times were analyzed across multiple percentiles: p50 (median) to gauge typical user experience, p90 and p95 to capture performance under higher loads, and p99 to evaluate system behavior during peak or extreme conditions. Together, these metrics provide a comprehensive view of the system’s scalability, efficiency, and performance across deployment configurations and neighborhood sizes.
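
For readers unfamiliar with percentile metrics, the following sketch computes p50, p90, p95, and p99 from a list of response times using the nearest-rank method. The choice of method is an assumption; the text does not state how the monitoring stack used in the experiments derives its percentiles, and other tools interpolate between samples.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the interval (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(samples: Sequence[float]) -> dict:
    """Return the four percentiles used in the evaluation."""
    return {f"p{p}": percentile(samples, p) for p in (50, 90, 95, 99)}
```

For 100 hypothetical response times of 1 ms to 100 ms, `summarize` returns 50, 90, 95, and 99 for p50, p90, p95, and p99 respectively.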

6.3 Evaluation Setup

The evaluation was performed on a private Kubernetes cluster comprising 20 machines. The nodes were divided into three groups based on their sizing and responsibilities: Core (Intel Xeon, AMD Opteron with 24-64+ threads, 16-24 GB RAM), Services (Intel i7/i5 with 4 threads, 4-16 GB RAM), and Devices (Intel i7/i5 with 4 threads, 4-16 GB RAM). Each group of machines runs different workloads, and the assignment of workload pods to the corresponding groups was implemented using Kubernetes node selectors based on predefined labels.
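
As an illustration of node-selector placement, the sketch below builds a minimal Pod manifest whose `spec.nodeSelector` pins it to one of the three node groups. The label key `example.com/node-group`, the group values, and the image name are hypothetical; the text does not give the labels used in the cluster.

```python
import json

# Hypothetical label key and values; the actual labels are not given.
NODE_GROUP_LABEL = "example.com/node-group"
NODE_GROUPS = ("core", "services", "devices")


def pod_manifest(name: str, image: str, group: str) -> dict:
    """Build a minimal Pod manifest scheduled onto nodes of one group."""
    if group not in NODE_GROUPS:
        raise ValueError(f"unknown node group: {group!r}")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # The scheduler only places the pod on nodes carrying this label.
            "nodeSelector": {NODE_GROUP_LABEL: group},
            "containers": [{"name": name, "image": image}],
        },
    }


def to_json(manifest: dict) -> str:
    """Serialize the manifest; Kubernetes accepts JSON as well as YAML."""
    return json.dumps(manifest, indent=2)
```

A node would need a matching label (for example, applied with `kubectl label nodes <node> example.com/node-group=services`) for a pod built with `group="services"` to be scheduled on it.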