Kolmogorov–Arnold networks in molecular dynamics

Abstract

We explore the integration of Kolmogorov–Arnold Networks (KANs) into molecular dynamics (MD) simulations to improve interatomic potentials. We propose that widely used potentials, such as the Lennard–Jones (LJ) potential, the embedded atom model (EAM), and artificial neural network (ANN) potentials, can be interpreted within the KAN framework. Specifically, we demonstrate that the descriptors for ANN potentials, typically constructed using polynomials, can be redefined using KAN’s non-linear functions. By employing linear or cubic spline interpolations for these KAN functions, we show that the computational cost of evaluating ANN potentials and their derivatives is reduced.

I Introduction

Molecular dynamics (MD) simulations have revolutionized the field of materials science, providing detailed atomistic insights into the structure, properties, and behavior of materials under various conditions [1, 2, 3, 4, 5]. By solving Newton’s equations of motion for a system of interacting atoms, MD simulations can model processes such as diffusion, phase transitions, mechanical deformation, and chemical reactions. Despite their widespread utility, traditional MD simulations are often limited by the accuracy and efficiency of the force fields used to approximate interatomic forces.

Ab initio molecular dynamics (AIMD), particularly those based on density functional theory (DFT), offer enhanced accuracy by explicitly accounting for the electronic structure of materials [6, 7]. However, the computational cost of AIMD is prohibitive for simulating large systems or long-time scales, restricting its application to relatively small and short simulations. This limitation underscores the need for more efficient computational methods that do not compromise on accuracy.

Machine learning (ML) has emerged as a transformative approach to address these challenges in MD simulations. By training on energies obtained from DFT calculations, ML models, particularly artificial neural networks (ANNs), can accurately predict potential energy surfaces and interatomic forces [8]. Integrating ML with MD, known as machine learning molecular dynamics (MLMD), offers a promising pathway to enhance both the accuracy and efficiency of simulations [9, 10, 11, 12, 13, 14].

Developing novel and effective ML methods tailored specifically for MD simulations is crucial to further unlocking the potential of MLMD [14, 9, 12, 13, 15, 16, 17]. For MLMD, multi-layer perceptrons (MLPs) are mainly used for ANN. Recently, Kolmogorov-Arnold networks (KANs), an alternative to MLPs, have been proposed [18]. They are extensions of Kolmogorov–Arnold representation theorem [19, 20], which states that any continuous function can be represented as a superposition of continuous functions of a single variable. This theoretical framework provides a powerful tool for decomposing complex functions into simpler, more manageable component functions.

In this paper, we explore the relations between KANs and potential energy in molecular dynamics. From the KAN perspective, the Lennard–Jones (LJ) potential, embedded atom mode (EAM), and ANN potential can all be viewed as forms of KANs. Utilizing linear or cubic spline interpolations, we can reduce the computational cost of the ANN potential.

This paper is organized as follows. In Sec. II, we introduce KANs. In Sec. III, we discuss the relation between the KANs and potentials used in molecular dynamics. The KAN descriptor is introduced in Sec. IV. The numerical results are shown in Sec. V. We introduce the linear and cubic spline interpolations to approximate the KAN descriptor for the speedup of simulations. In Sec. VI, the conclusion is given.

II Kolmogorov–Arnold Networks

II.1 Kolmogorov–Arnold representation theorem

Recently, KANs have been proposed inspired by the Kolmogorov–Arnold representation theorem [18]. The Kolmogorov–Arnold representation theorem shows that a multivariate function can be represented by a superposition of univariate functions [19, 20]. For a smooth , a function is represented as

| (1) |

where , and (See, Fig. 1).

For example, one can easily confirm that the multiplication is represented by a superposition of univariate functions like Eq. (1),

| (2) |

II.2 Kolmogorov–Arnold Networks

Liu et al. have extended the idea of the Kolmogorov–Arnold representation theorem [18]. By regarding Eq. (1) as a two-layer network with one hidden layer with neurons, the more general form of the networks can be given by

| (3) | ||||

| (4) | ||||

| (5) | ||||

| (6) | ||||

| (7) | ||||

| (8) |

which is called KAN. The schematic of KAN is shown in Fig. 2.

The multivariate function is represented by a superposition of non-linear “activation” functions . To construct function , a shape of the non-linear functions is optimized. For example, in the implementation by Liu et al., each KAN layer is composed of the sum of the spline function and the SiLU activation function. There are various kinds of basis functions, such as Legendre polynomials, Chebyshev polynomials, and Gaussian radial distribution functions [21, 22, 23, 24].

III Potential energy in molecular dynamics

III.1 Various kinds of potential energies

In the field of molecular dynamics, accurately estimating the forces on each atom is crucial. Since the force on the -th atom at is derived from the derivative of the total potential energy , i.e.,

| (9) |

An accurate and fast estimation of the potential energy is one of the most important challenges.

Various kinds of potentials have been proposed for molecular dynamics. For example, the LJ potential is a simplified model that describes the essential features of interactions between simple atoms and molecules [25]. The EAM is an approximation describing the interatomic potential and is particularly appropriate for metallic systems [26].

Calculations based on DFT, known as ab initio calculations, are among the most accurate methods for evaluating potential energy. AIMD has many applications in material design. However, reducing the computational effort required for AIMD remains a key issue for its broader application to phenomena on large lengths and time scales.

The use of ANNs, which imitate DFT energies through machine learning, is seen as a promising solution to this issue [8]. Research in machine-learning molecular dynamics has grown rapidly over the last decade [14, 9, 12, 13, 15, 16, 17, 11]. Various network structures have been proposed, including graph neural networks [27, 28, 12, 13, 9, 10, 11, 12, 13, 14] and transformer architectures [15].

III.2 Relation between Kolmogorov–Arnold networks and potential energy

III.2.1 Lennard–Jones potential

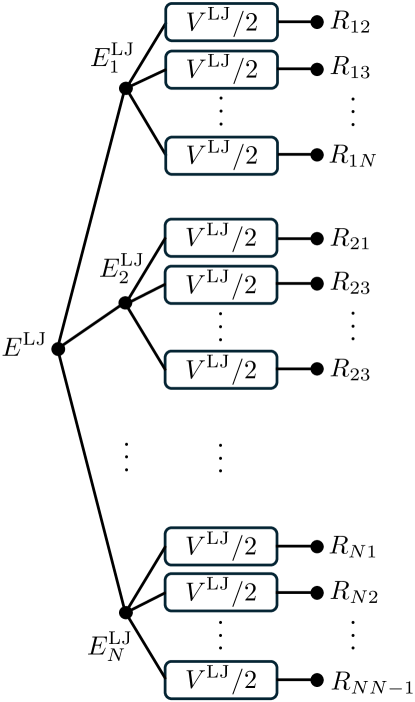

Let us discuss the relation between KAN and potential energy in molecular dynamics. Using the LJ potential, the total potential energy for atoms is expressed as

| (10) | ||||

| (11) |

where, with empirically determined coefficients and ,

| (12) | ||||

| (13) | ||||

| (14) |

The LJ potential can be represented by a type of KAN, as shown in Fig. 3.

As shown in the KAN shown in Eq. (3), a non-linear function depends on indices of both input and output layers and . In the LJ potential model, the elements of the initial input are distances between two atoms, which should have a permutation symmetry. Therefore, all non-linear functions are , similar to weight sharing in MLP networks. We call this feature “function sharing.”

III.2.2 Embedded atom model potential

The total potential energy in the EAM is divided into two contributions, namely a pairwise part and a local density part:

| (15) | ||||

| (16) | ||||

| (17) |

The functions , , and are constructed empirically or by fitting the potential energy calculated by DFT calculations. In actual simulations, the value of these functions is evaluated by linear interpolation. In terms of KAN, the local potential energy can be rewritten as

| (18) | ||||

| (19) | ||||

| (20) |

where is the element type of the -th atom. The EAM potential can be represented by a type of KAN, as shown in Fig. 4.

In terms of KAN, the EAM potential can be extended by adding more hidden layers and/or units.

III.2.3 Aarificial neural network potential

There are various kinds of ANN potentials. In this paper, we focus on Behler–Parrinello type neural networks [8]. In their formulation, the potential energy is assumed to be constructed from a sum of fictitious atomic energies, similar to the LJ and EAM potentials. Furthermore, the atomic energy is considered a functional of the atomic environment around the central atom, defined inside a sphere with a cutoff radius . The feature values of the atomic environment, called descriptors, were introduced to ensure the translational and rotational invariance of the atomic energy. Therefore, the total and atomic energies are given as

| (21) |

where , , and are the total energy, the atomic energy for the -th atom, and descriptor of the atomic environment for the centered -th atom, respectively. There are many kinds of descriptors. For example, in the case of the Chebyshev descriptor [11], the descriptor is determined as

| (22) | ||||

| (23) | ||||

| (24) |

| (25) | ||||

| (26) | ||||

| (27) | ||||

| (28) |

where

| (29) | ||||

| (30) | ||||

| (31) |

is the -th Chebyshev polynomial of the first kind, and is a cutoff function that smoothly goes to zero at . For example, the following function is used for many cases:

| (32) |

We introduce the shorthand notations as

| (33) | ||||

| (34) | ||||

| (35) | ||||

| (36) | ||||

| (37) |

When one considers MLP with two hidden layers, the -th atomic energy is given as

| (38) | |||

| (39) | |||

| (40) |

where is an activation function of -th layer such as , and is the normalized descriptor, of which element is defined as

| (41) |

where

| (42) | ||||

| (43) |

Here, and are the mean value and standard deviation in a total data set. The ANN potential can be represented as a KAN, as shown in Fig. 5.

Let us discuss the relation between the KAN and ANN potential. We focus on the first layer shown in Eq. (40). This equation is rewritten as

| (44) |

where we define and as

| (45) | ||||

| (46) | ||||

| (47) |

and

| (48) | ||||

| (49) | ||||

| (50) |

respectively. By substituting Eqs. (26) and (28) into Eq. (45), and are given as

| (51) | ||||

| (52) |

where

| (53) | ||||

| (54) | ||||

| (55) | ||||

| (56) | ||||

| (57) | ||||

| (58) |

In terms of KAN, the one-dimensional non-linear functions , , , and are regarded as activation functions in the KAN structure. The above equations show that these non-linear functions are expanded by the Chebyshev polynomials.

IV Kolmogorov–Arnold Network descriptor

IV.1 Definition

We propose a trainable descriptor called “KAN descriptor” for interatomic potentials, defined as

| (59) |

where

| (60) | |||

| (61) |

Here, and are radial (two-body) and angular (three-body) non-linear functions, which should be optimized.

The total energy is expressed as

| (62) |

where is the -th atomic potential constructed by neural networks with the KAN descriptor as shown in Fig. 6. It should be noted that both the function and the KAN descriptor are trainable. When one considers MLP with one hidden layer, the -th atomic energy is expressed as

| (63) | ||||

| (64) | ||||

| (65) |

The network architecture with KAN descriptors is shown in Fig. 7.

In the original KAN layer shown in Eq. (8), non-linear functions depend on indices of input and output neurons. However, in the KAN descriptor, the non-linear functions and do not depend on an index of input neurons and only on the kinds of atoms and an index of output neurons since atoms with the same types are indistinguishable. In Fig. 6, it looks there are distinct functions and distinct functions for . However, the number of non-linear functions of the KAN descriptor is usually much smaller than or and depends on the number of the kind of atoms. For example, consider 10 water molecules surrounding the -th hydrogen atom in an H2O system. In this case, only two functions ( and ) and three functions (, , and ) are required although .

These non-linear functions should be optimized to approximate DFT energy. The non-linear functions can be expanded using B-spline functions, Gaussian radial distribution functions, or Chebyshev polynomials. During the training procedure, the coefficients of the non-linear functions are adjusted. Once accurate non-linear functions with appropriate coefficients are obtained, values can be evaluated using linear or spline interpolation. In this study, we employ the Chebyshev polynomials as

| (66) | ||||

| (67) | ||||

| (68) | ||||

| (69) |

We mention the representation ability of the non-linear functions. The number of the trainable parameters is for the KAN descriptor . In addition, these non-linear functions depend on the kinds of atoms as same as the original Chebyshev descriptor [11]. Therefore, the KAN descriptor can avoid dimensional explosion with respect to the number of atom kinds like the symmetry functions [8].

IV.2 Relation between the Chebyshev and the Kolmogorov–Arnold network descriptors

The relations between the coefficients of the non-linear functions of the KAN descriptor and the coefficients defined in Eqs. (44)–(45) are expressed as

| (70) | ||||

| (71) | ||||

| (72) | ||||

| (73) | ||||

| (74) |

In the previous Chebyshev descriptor, , , and are calculated by a data set. In the KAN descriptor, these variables can be trainable. We note that the MLP with two-hidden layers with the Chebyshev descriptor can be regarded as the MLP with one-hidden layer with the KAN descriptor, since the KAN descriptor is trainable.

V Results

V.1 Target system and method

We consider iron with vacancies and dislocations. We use the training dataset available on GitHub as described in Ref. [29]. The KAN descriptor and MLP are implemented using the machine-learning package Flux.jl version 0.14.11, written in the Julia language[30, 31]. The sizes of the total dataset, training dataset, and test dataset are 6348, 5713, and 635, respectively. The energy of each atomic structure is calculated using Quantum Espresso, a first-principles calculation tool based on density functional theory [32]. The details of the calculation are provided in Ref. [29]. We adopt an MLP with one hidden layer for . The dimension of the input vector and the number of units in the hidden layer were set as and , respectively. To train the ANN potential, we use the limited-memory Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm implemented in the Optim.jl package[33]. For each optimization with the limited-memory BFGS, we perform 10 iterations to reduce the loss function defined as

| (75) |

Here, the batch size in the Optim.jl package is 100. and are the potential energies calculated by first-principles calculation and ANN, respectively.

V.2 Shape of the non-linear functions in KAN descriptors

Let us discuss the shape of the non-linear functions in KAN descriptors. We consider two parameter sets ( and . As shown in Fig. 8, the shape of the non-linear functions in KAN descriptors depends on .

In the region [Å], the curves strongly depend on . It should be noted that there is no data with [Å] in the training and test data set. As shown in Fig. 9, the distribution of inter-atomic distance is not uniform. In the region where no data is found in the training data set, the shape of the non-linear function in the KAN descriptor can not affect the quality of the ANN potential.

In terms of KANs, if the non-linear functions are well optimized with a fixed number of functions, the loss converges with increasing the number of the Chebyshev polynomials. As shown in Fig. 10, the limits of the expressive power of the KAN descriptors are reached with increasing the number of the Chebyshev polynomials for radial non-linear functions.

V.3 Fast evaluation of the Kolmogorov–Arnold network descriptors

We propose a method of a fast evaluation of the ANN potential with KAN descriptors. The conventional ANN potential constructed by the Chebyshev polynomial descriptor can be regarded as the ANN potential with KAN descriptors. The computational cost to evaluate the KAN descriptor is . With the use of linear or spline interpolation with points, the computational cost becomes which does not depend on the order of Chebyshev polynomials. We note that the computational cost of the forces can also be reduced with the use of interpolations.

We discuss the interpolation point dependence of the computational time and accuracy. In the top panel in Fig. 11, we show the residual norm of the KAN descriptors defined as

| (76) |

where is the input vector constructed by interpolations, and . We adopt a linear interpolation and natural cubic spline interpolation implemented in Interpolation.jl. The elapsed time is measured in MacBook Pro (14 inch, 2023) with Apple M2 Max processor. The parameters of the Chebyshev polynomial is . We should note that the code that we made written in Julia language might not be fully optimized. Therefore, we can discuss the elapsed time only qualitatively. As shown in Fig. 11, the elapsed time of each interpolation weakly depends on , which is consistent with a theoretical value . We also discuss the mean absolute loss determined as

| (77) |

where is the number of the test data set. To discuss the accuracy of the interpolated KAN descriptors, we calculate the difference between the MAE with the ANN potential with Chebyshev polynomials and that with the interpolated KAN descriptors. The MAE of the Chebyshev descriptors is [meV/atom]. As shown in the bottom panel in Fig. 11, the difference reduces with increasing interpolation points.

We should note that, for actual machine-learning MD simulations, it is more better to implement the KAN descriptor to an open-source ANN potential package such as ænet [11]. Since the implementation might not be complicated, we will propose the software in the future.

VI Conclusion

In this study, we have integrated KAN into MD simulations to enhance the efficiency of potential energy models. Our investigation reveals that the KAN framework can represent commonly used potentials, such as the LJ, EAM, and ANN potentials. This reinterpretation allows for the application of KAN’s non-linear functions to introduce the KAN descriptor for ANN potentials. Our results demonstrate that by employing linear or cubic spline interpolations for these KAN functions, computational savings can be achieved without reducing accuracy. Future work will focus on further refining the KAN descriptors and integrating them into open-source ANN potential packages such as ænet.

Acknowledgements.

This work was partially supported by JSPS KAKENHI Grants Nos. 22H05111, 22H05114, 22K03539, and 23H03996. This work was also partially supported by “Joint Usage/Research Center for Interdisciplinary Large-scale Information Infrastructures (JHPCN)” and “High Performance Computing Infrastructure (HPCI)” in Japan (Project ID: jh240028 and jh240059). We would like to thank Dr. Mitsuhiro Itakura for the variable discussions. Additionally, we are grateful to Dr. Hideki Mori for providing information about his training dataset for iron with vacancies and dislocations.References

- Rahman and Stillinger [1971] A. Rahman and F. H. Stillinger, Molecular dynamics study of liquid water, J. Chem. Phys. 55, 3336 (1971).

- Wang et al. [2010] S. Wang, S. Li, Z. Cao, and T. Yan, Molecular dynamic simulations of ionic liquids at graphite surface, J. Phys. Chem. C Nanomater. Interfaces 114, 990 (2010).

- Kubicki and Lasaga [1988] J. Kubicki and A. Lasaga, Molecular dynamics simulations of SiO 2 melt and glass; ionic and covalent models, American Mineralogist 73, 941 (1988).

- Engel et al. [2015] M. Engel, P. F. Damasceno, C. L. Phillips, and S. C. Glotzer, Computational self-assembly of a one-component icosahedral quasicrystal, Nat. Mater. 14, 109 (2015).

- Kobayashi et al. [2023] K. Kobayashi, M. Okumura, H. Nakamura, M. Itakura, M. Machida, S. Urata, and K. Suzuya, Machine learning molecular dynamics reveals the structural origin of the first sharp diffraction peak in high-density silica glasses, Sci. Rep. 13, 18721 (2023).

- Iftimie et al. [2005] R. Iftimie, P. Minary, and M. E. Tuckerman, Ab initio molecular dynamics: concepts, recent developments, and future trends, Proc. Natl. Acad. Sci. U. S. A. 102, 6654 (2005).

- Mouvet et al. [2022] F. Mouvet, J. Villard, V. Bolnykh, and U. Rothlisberger, Recent advances in first-principles based molecular dynamics, Acc. Chem. Res. 55, 221 (2022).

- Behler and Parrinello [2007] J. Behler and M. Parrinello, Generalized neural-network representation of high-dimensional potential-energy surfaces, Phys. Rev. Lett. 98, 146401 (2007).

- Nagai et al. [2020] Y. Nagai, M. Okumura, K. Kobayashi, and M. Shiga, Self-learning hybrid monte carlo: A first-principles approach, Phys. Rev. B Condens. Matter 102, 041124 (2020).

- Nagai et al. [2024] Y. Nagai, Y. Iwasaki, K. Kitahara, Y. Takagiwa, K. Kimura, and M. Shiga, High-Temperature atomic diffusion and specific heat in quasicrystals, Phys. Rev. Lett. 132, 196301 (2024).

- Artrith et al. [2017] N. Artrith, A. Urban, and G. Ceder, Efficient and accurate machine-learning interpolation of atomic energies in compositions with many species, Phys. Rev. B 96, 014112 (2017).

- Batzner et al. [2022] S. Batzner, A. Musaelian, L. Sun, M. Geiger, J. P. Mailoa, M. Kornbluth, N. Molinari, T. E. Smidt, and B. Kozinsky, E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials, Nat. Commun. 13, 2453 (2022).

- Musaelian et al. [2023] A. Musaelian, S. Batzner, A. Johansson, L. Sun, C. J. Owen, M. Kornbluth, and B. Kozinsky, Learning local equivariant representations for large-scale atomistic dynamics, Nat. Commun. 14, 579 (2023).

- Bartók et al. [2010] A. P. Bartók, M. C. Payne, R. Kondor, and G. Csányi, Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons, Phys. Rev. Lett. 104, 136403 (2010).

- Thölke and De Fabritiis [2022] P. Thölke and G. De Fabritiis, TorchMD-NET: Equivariant transformers for neural network based molecular potentials, arXiv [cs.LG] (2022).

- Sivaraman et al. [2020] G. Sivaraman, A. N. Krishnamoorthy, M. Baur, C. Holm, M. Stan, G. Csányi, C. Benmore, and Á. Vázquez-Mayagoitia, Machine-learned interatomic potentials by active learning: amorphous and liquid hafnium dioxide, Npj Comput. Mater. 6, 1 (2020).

- Wang et al. [2024] Y. Wang, T. Wang, S. Li, X. He, M. Li, Z. Wang, N. Zheng, B. Shao, and T.-Y. Liu, Enhancing geometric representations for molecules with equivariant vector-scalar interactive message passing, Nat. Commun. 15, 313 (2024).

- Liu et al. [2024] Z. Liu, Y. Wang, S. Vaidya, F. Ruehle, J. Halverson, M. Soljačić, T. Y. Hou, and M. Tegmark, KAN: Kolmogorov-arnold networks, arXiv [cs.LG] (2024).

- Kolmogorov [1956] A. Kolmogorov, On the representation of continuous functions of several variables by superpositions of continuous functions of a smaller number of variables, Proceedings of the USSR Academy of Sciences 108, 179 (1956).

- Braun and Griebel [2009] J. Braun and M. Griebel, On a constructive proof of kolmogorov’s superposition theorem, Constr. Approx. 30, 653 (2009).

- Bhattacharjee [2024] S. S. Bhattacharjee, Torchkan: Simplified kan model with variations, https://github.com/1ssb/torchkan/ (2024).

- Nagai [2024] Y. Nagai, Fluxkan: Julia version of the torchkan, https://github.com/cometscome/FluxKAN.jl (2024).

- Ss et al. [2024] S. Ss, K. Ar, G. R, and A. Kp, Chebyshev polynomial-based kolmogorov-arnold networks: An efficient architecture for nonlinear function approximation, arXiv [cs.LG] (2024).

- Li [2024] Z. Li, Kolmogorov-arnold networks are radial basis function networks, arXiv [cs.LG] (2024).

- Schwerdtfeger and Wales [2024] P. Schwerdtfeger and D. J. Wales, 100 years of the lennard-jones potential, J. Chem. Theory Comput. 20, 3379 (2024).

- Daw and Baskes [1984] M. S. Daw and M. I. Baskes, Embedded-atom method: Derivation and application to impurities, surfaces, and other defects in metals, Phys. Rev. B 29, 6443 (1984).

- Takamoto et al. [2022] S. Takamoto, C. Shinagawa, D. Motoki, K. Nakago, W. Li, I. Kurata, T. Watanabe, Y. Yayama, H. Iriguchi, Y. Asano, T. Onodera, T. Ishii, T. Kudo, H. Ono, R. Sawada, R. Ishitani, M. Ong, T. Yamaguchi, T. Kataoka, A. Hayashi, N. Charoenphakdee, and T. Ibuka, Towards universal neural network potential for material discovery applicable to arbitrary combination of 45 elements, Nat. Commun. 13, 2991 (2022).

- Batatia et al. [2022] I. Batatia, D. P. Kovács, G. N. C. Simm, C. Ortner, and G. Csányi, MACE: Higher order equivariant message passing neural networks for fast and accurate force fields, arXiv [stat.ML] (2022).

- Mori et al. [2023] H. Mori, T. Tsuru, M. Okumura, D. Matsunaka, Y. Shiihara, and M. Itakura, Dynamic interaction between dislocations and obstacles in bcc iron based on atomic potentials derived using neural networks, Phys. Rev. Mater. 7, 063605 (2023).

- Innes et al. [2018] M. Innes, E. Saba, K. Fischer, D. Gandhi, M. C. Rudilosso, N. M. Joy, T. Karmali, A. Pal, and V. Shah, Fashionable modelling with flux, CoRR abs/1811.01457 (2018), arXiv:1811.01457 .

- Innes [2018] M. Innes, Flux: Elegant machine learning with julia, Journal of Open Source Software 10.21105/joss.00602 (2018).

- Giannozzi et al. [2009] P. Giannozzi, S. Baroni, N. Bonini, M. Calandra, R. Car, C. Cavazzoni, D. Ceresoli, G. L. Chiarotti, M. Cococcioni, I. Dabo, A. Dal Corso, S. de Gironcoli, S. Fabris, G. Fratesi, R. Gebauer, U. Gerstmann, C. Gougoussis, A. Kokalj, M. Lazzeri, L. Martin-Samos, N. Marzari, F. Mauri, R. Mazzarello, S. Paolini, A. Pasquarello, L. Paulatto, C. Sbraccia, S. Scandolo, G. Sclauzero, A. P. Seitsonen, A. Smogunov, P. Umari, and R. M. Wentzcovitch, QUANTUM ESPRESSO: a modular and open-source software project for quantum simulations of materials, J. Phys. Condens. Matter 21, 395502 (2009).

- Mogensen and Riseth [2018] P. K. Mogensen and A. N. Riseth, Optim: A mathematical optimization package for Julia, Journal of Open Source Software 3, 615 (2018).