heightadjust=all, floatrowsep=columnsep \floatsetup[table]capposition=top \newfloatcommandfigureboxfigure[\nocapbeside][]

Kernel Quantization for Efficient Network Compression

Abstract

This paper presents a novel network compression framework, Kernel Quantization (KQ), targeting to efficiently convert any pre-trained full-precision convolutional neural network (CNN) model into a low-precision version without significant performance loss. Unlike existing methods struggling with weight bit-length, KQ has the potential in improving the compression ratio by considering the convolution kernel as the quantization unit. Inspired by the evolution from weight pruning to filter pruning, we propose to quantize in both kernel and weight level. Instead of representing each weight parameter with a low-bit index, we learn a kernel codebook and replace all kernels in the convolution layer with corresponding low-bit indexes. Thus, KQ can represent the weight tensor in the convolution layer with low-bit indexes and a kernel codebook with limited size, which enables KQ to achieve significant compression ratio. Then, we conduct a 6-bit parameter quantization on the kernel codebook to further reduce redundancy. Extensive experiments on the ImageNet classification task prove that KQ needs 1.05 and 1.62 bits on average in VGG and ResNet18, respectively, to represent each parameter in the convolution layer and achieves the state-of-the-art compression ratio with little accuracy loss.

1 Introduction

In recent years, deep convolutional neural networks (CNNs) have achieved astonishing success in a variety range of computer vision tasks, such as image classification [14, 11], semantic segmentation [16], action recognition [20], and video restoration [24, 8]. The promising results of CNNs are mainly attributed to the massive learnable parameters, which then benefit from abundant annotated data and computing platform improvement. Unfortunately, the increasing of learnable parameter consumes more memory and other computational resources, making it hard to deploy on resource-limited devices. According to [4], network parameters have significant redundancy, which inspired many works on network compression [10]. Among all of the network compression methods, network quantization attracts much attention for its ability in reducing the number of bits needed to represent each parameter.

In network quantization, continuous parameters are mapped to a certain amount of discrete values (codebook). Thus, each parameter is represented by an index. However, network quantization still needs at least one bit to represent each parameter, leading to the theoretical compression ratio limit of 32 times. Impressive attempts have been made to achieve this limit in [18, 3, 12]. In [6], the author proposed to use symmetric quantization and achieved the state-of-the-art performance among low-bit quantization methods, but still facing about 3% accuracy loss on larger network structures such as ResNet18 [11] and VGG [21]. Another approach is to train a low-bit network from scratch with some well-designed strategies. Zhang et al. [28] proposed to train a neural network and learn quantizer with arbitrary-bit precision.

A severe problem along with reaching the theoretical compression ratio limit is the loss of accuracy. Almost all of these methods are suffering from significant accuracy loss, especially when using three or fewer bits on large-scale datasets (e.g., ImageNet [19]). Because of too few discrete values in the codebook, the convolution kernel lacks variety (the fewer entries in the codebook, the fewer combinations the kernels have). To the best of our knowledge, for all of the conventional quantization methods, 1-bit quantization leads to significant accuracy loss and 2-bit quantization has relatively lower accuracy loss but with limited compression ratio of 16 times.

To overcome the dilemma of variety and the theoretical compression ratio, we first propose to consider the convolution kernel as the quantization unit to bind the theoretical compression ratio limit to the kernel size, which normally is , instead of each parameter. Secondly, we propose to apply 6-bit quantization to the kernel codebook to further compress the model meanwhile preserve the variety of kernels. With these two steps, we are able to significantly compress the CNN without the limitation of theoretical compression ratio and achieve comparable accuracy to the full-precision model. For a kernel, we are able to increase the theoretical limit from 32 to 288.

In summary, our major contributions in this paper are as follows:

-

•

The theoretical compression ratio limit of conventional network quantization method is introduced and analyzed, and a new perspective of both kernel-level and weight-level quantization is proposed to inspire others to break through the limitation.

-

•

A novel method, Kernel Quantization (KQ), is proposed to consider each kernel as a unit for kernel-level quantization and parameter quantization is then used to compress the kernel codebook.

-

•

The proposed method is applied on popular CNN architectures and achieves significant compression ratio (on average 1.05 and 1.62 bits for VGG and ResNet18 to represent each parameter in the convolution layer, respectively) while having better accuracy compared to conventional network quantization methods.

The remainder of the paper is organized as follows. Section 2 presents related works on network quantization. Section 3 introduces the proposed Kernel Quantization in detail and analysis the theoretical compression limits for conventional quantization methods. Section 4 provides the implement details, hyper parameter analysis and the experiment results. Section 5 concludes the paper.

2 Related Works

In 2016, [9] proposed a quantization method which generates a codebook of discretization values using k-means clustering and maps all the parameters to the closest entry in the codebook. But the proposed method needs at least 4-bit for convolution layer and 2-bit for fully connected layer. [29] proposed an incremental network quantization method that performs weight partition, group-wise quantization and re-training in an iterative manner. But their method suffers significant accuracy loss when using 2-bit quantization. [2] derived that network quantization problem is related to entropy-constrained scalar quantization in information theory and designed a network quantization scheme that minimizes the performance loss with respect to quantization given a compression ratio constraint.

A different branch of network quantization is to train a low-bit network from scratch with some well-designed strategies [18]. [12] proposed to use binary weights and activations directly for computing the parameter gradients. This method is able to reduce memory size drastically, but results in significant accuracy loss on large datasets such as ImageNet. [3] proposed to train BinaryConnect network with binary weights while keeping gradients as real values. Recently, [6] have pushed the extremely low-bit network quantization record forward by a large margin. The author proposed to generate the codebook in a symmetrical manner. [15] proposed to cast the original problem into several sub-problems. These method performs well on small networks like AlexNet, but suffers from non-negligible accuracy drop on larger models, e.g., VGG and ResNet18.

There are also works trying to exploit the benefit of representing multiple parameters with one index. [7] proposed to quantize fully connected layers in networks with vectors as quantization unit, but representing three parameters with one index did not provide promising compression ratio. In [13], the author did quantization on each row of the convolution kernel. However, such method performs well on small datasets but suffers significant performance reduction in large datasets such as ImageNet [19]. In [22] the author explored further but they introduced enormous hyper parameters that closely connected to the quantization performance and failed in finding a framework to efficiently quantize CNN.

Among all these methods, extremely low-bit quantization is still an open problem and far from being solved, especially when using large networks and large datasets. In this paper, we focus on boosting the quantization performance and breaking through the theoretical compression ratio limit, then propose the Kernel Quantization.

3 Methods

In this section, we provide the insight and detailed description of Kernel Quantization (KQ) method. The overall framework is shown in Figure 1.

3.1 Kernel-level Quantization

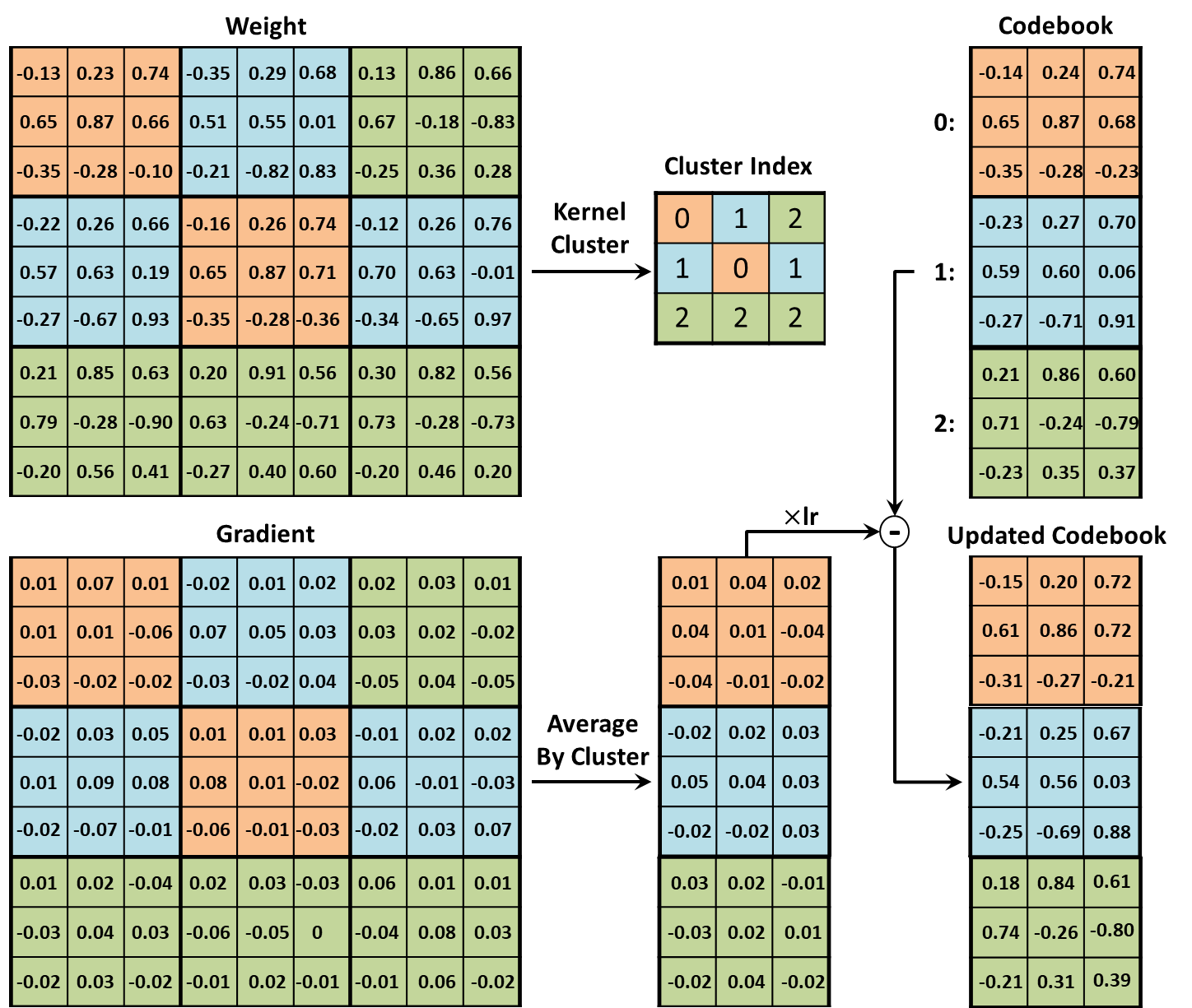

The pipeline of kernel-level quantization is shown in Figure 2. For a convolution layer with weight tensor , where denotes the kernel size, and are input and output channel, respectively. We reshape it as a matrix , where denotes the total number of kernels in , denotes the -th kernel in . We generate a entry kernel codebook . The kernel set corresponding to entry is where is the number of kernels assigned to . We adopt k-means to minimize the following distance:

| (1) |

All kernels in are mapped to corresponding entries in the kernel codebook. We represent each kernel with an index to the corresponding entry. Kernel-level quantization is performed in a layer by layer manner. During retraining, back propagation on codebook is done in a scheme like conventional quantization. We first calculate the gradient of each parameter in . Then, we calculate the elementwise average of gradients that are mapped to the same entry. The average gradients are used to update the kernel codebook values as follows,

| (2) |

where is the network loss, and are values of entry in the codebook after updating for and times, is the learning rate.

In conventional quantization methods, only the bit length needs to be carefully selected. Given , the codebook size is usually set as , because the size of the codebook is relatively small. But for kernel-level quantization, the codebook size is significantly larger. Carefully selecting the codebook size to balance accuracy and storage saves plenty of space. Thus, it is important to find the appropriate codebook size with adequate time complexity.

A naive approach is setting the codebook size to different orders of 2 in descending order until it reaches a certain threshold accuracy. This method makes full use of index bits while failed to select the appropriate size of codebook precisely. Fitting the codebook size to orders of 2 may either waste space to store redundant entries or lower the accuracy because of insufficient codebook size. Therefore, we propose a binary search approach searching for appropriate codebook size. We notice that network accuracy increases along with codebook size increasing. Thus, we set up a tolerable maximal accuracy drop for kernel-level quantization on each layer. Then we use a binary search to find the best codebook size that close to the target accuracy. In this way, simply given the parameters, the network adaptively finds the suitable codebook size and compression ratio. Thus, we precisely control the size of the codebook and the trade-off between the codebook size and network accuracy.

We define the initial entry ratio as and the threshold ratio for target accuracy . We test the reference accuracy without quantization on the current layer. The initial codebook size is , and the corresponding baseline quantized accuracy is . We define the target accuracy as

| (3) |

Then we search for the codebook size with binary search to reach the target accuracy . The upper bound of codebook size is initialized as and lower bound is initialized to zero. The testing codebook size is . Then kernel-level quantization generates a codebook with entries and quantizes the layer to test the validation accuracy. If the validation accuracy is higher than , we set , else . These steps are repeated until reaching the target accuracy or the maximum number of iteration. After finished binary search and quantized current convolution layer, the whole CNN is retrained for one epoch to finetune the model before quantizing next layer. The overall pseudo-code for the kernel quantization step is shown in Algorithm 1.

3.2 Kernel Codebook Quantization

We further compress the kernel codebook after quantized kernels on all convolution layers. The model storage after kernel-level quantization includes two parts, codebook and indexes. Total number of bits needed to store the quantized convolution layer is

| (4) |

where is the bit length for each parameter in the kernel codebook. When is large, storing entries in the codebook consumes massive space. So quantizing the kernel codebook provides us with additional compression ratio. We adopt a simple yet effective 6-bit parameter quantization method for codebook quantization.

We use the layer by layer strategy to preserve performance. First, we do k-means clustering on all parameters in the codebook. A small trick is that since different entries appear for different times in , the more times an entry appears, the more important it is. So we use the entry appearance time as the weight of the kernel and perform weighted k-means. This helps the algorithm to pay more attention to preserving important parameters. Retraining of codebook quantization follows the same scheme as kernel quantization. We update the parameters with the average gradients from different kernels mapped to the same entry. Retraining is conducted after quantizing codebook for every two layers and iterate for one epoch. After quantizing all codebooks of the network, we further run a 6-bit quantization on the fully-connected layer with the same layer by layer k-means clustering method.

It is worth to notice that KQ does not add much extra burden on testing. In the testing phase, the procedure is the same as conventional quantization methods with extra mapping. KQ first recovers the kernel codebook, then recovers the weight tensor from kernel codebook.

3.3 Theoretical Compression Ratio Limit Analysis

Conventional quantization methods use an index to map each parameter to an entry in the codebook. When the length of the index is shortened by reducing the size of the codebook, the total storage is reduced. The compression ratio of conventional quantization (denoted as ) is

| (5) |

where is the bit length of a full precision parameter, which is 32-bit in most cases, is the size of the codebook, is the length of each entry in the codebook. We obtain the theoretical limit when there are only two entries in the codebook and each parameter is represented with 1-bit. The theoretical limit of conventional quantization is 32.

KQ bypasses this limit by mapping each kernel, instead of each parameter, to an entry in the codebook. Thus, KQ uses one index to represent nine parameters (for a kernel). The compression ratio of KQ (denoted as ) is

| (6) |

When the limit is approached, is 2. The equation turns to be ( is too small compared to ), the theoretical compression ratio is .

4 Experiments

To evaluate the performance of the proposed Kernel Quantization (KQ) method, we perform experiments on the large scale benchmark image dataset: ImageNet (ILSVR2012) [19]. We apply KQ on VGG [21], ResNet18 [11] and GoogLeNet [23] architectures.

4.1 Experimental setup

We evaluate KQ on the image classification task. All of the images are resized to . The images are then randomly cropped to patches with mean subtraction and random flipping without any data augmentation. We report the top-1 accuracy on the validation set under single-center-crop testing.

We implement KQ on PyTorch [17] platform and the referenced full precision CNN models are from the torchvision package. During retraining, we use SGD optimizer with learning rate 0.001, momentum 0.9. In the experiments, we only conduct kernel quantization on kernels of size . We adopt the Yinyang k-means [5] to deal with massive samples and cluster centers. Yinyang k-means has exactly the same results compared with conventional k-means but provides a significant boost in clustering speed.

For the sake of narrative, we always name the first convolution layer as conv1, the second convolution layer as conv2 and so on. When computing the compression ratio, we count all convolution layers regardless of the kernel size. In the rest of this section, we use ”K” for Kernel-Level Quantization, “C” for Codebook Quantization, and ”K+C” for apply Codebook Quantization after Kernel-Level Quantization.

To better compare KQ with other quantization methods, we use the average number of bits per parameter (denoted as ) as the measurement. It is defined as the total number of bits needed to represent the convolution weight divided by the total number of parameters in the convolution weight:

| (7) |

It is the reciprocal of compression ratio in Equation 6 multiplied by 32 (full precision bits).

|

|

|

|

|

|

4.2 Ablation Studies

4.2.1 Kernel Reconstruction Error Analysis

Instead of finding the best match for each parameter in the codebook, kernel-level quantization only finds the kernel level best match. Theoretically, for a kernel and a codebook for conventional quantization with entries, the codebook size needed for kernel-level quantization to represent all the possible combinations in the conventional quantization method is . Network quantization aims to represent parameters with fewer bits. Thus, is a small integer in low-bit quantization. [21] discovered that stacking smaller kernels obtains the same size of receptive field as a larger kernel. As the smaller kernel has fewer parameters and is computationally efficient, contemporary CNN architectures prefer smaller kernels than larger kernels. Therefore, is as small as , making KQ easily achieves equivalent, if not better than, representation ability to conventional extremely low-bit quantization methods.

To exploit the reconstruction error of KQ and conventional methods, we statistics the distance between the original weight and quantized weight in Figure 3 under different codebook size or parameter bit length. The experiments are conducted on the 2nd, 7th, and 13th layers of VGG. We find among all three layers, KQ with only 128 entries significantly outperforms conventional 1-bit quantization. Compression ratios of KQ only are 18, 38.08, and 40.33, respectively. After applying codebook quantization, compression ratios are 33.15, 40.53, 40.98 and average numbers of bits per parameter are 0.965, 0.789, 0.780, respectively. KQ with 256, 2048 and 512 entries on three layers outperform the conventional 2-bit quantization methods, respectively. Comparing with both 1-bit and 2-bit conventional quantization, KQ achieves lower reconstruction error with higher compression ratio.

4.2.2 Hyperparameter Analysis

| Threshold Ratio | 0.25 | 0.5 | 0.75 | 1.0 | ||

|---|---|---|---|---|---|---|

| Top-1 Accuracy | 70.1% | 69.8% | 69.4% | 68.5% | ||

|

1.82 | 1.52 | 1.19 | 1.17 |

| Initial Ratio | 0.25 | 0.4 | 0.5 | 0.6 | ||

|---|---|---|---|---|---|---|

| Top-1 Accuracy | 67.9% | 69.2% | 69.4% | 69.5% | ||

|

1.12 | 1.19 | 1.19 | 1.23 |

There are two key hyperparameters in KQ, threshold ratio and initial ratio . We explore the effect of and to the performance on VGG . The results are shown in Table 1, 2.

As the value of threshold ratio gets lower, the average number of bits turns higher. This is reasonable since given a fixed initial ratio , if the threshold ratio is lower, KQ tends to compress the network more conservative with a higher target accuracy . As shown in Fig 3, the relationship between distance and logarithm of codebook size is almost linear, so adjusting is an effectively way to precisely adjust the compression ratio and compressed network accuracy.

The initial ratio controls the least average bits per parameter KQ achieves. By setting , after iterations in binary search, KQ represents last several layers with a codebook with only entries. Thus the index for each kernel only consumes 8-bit. While for and , KQ generates a codebook with and entries in last several layers, using 9-bit and 10-bit to represent each entry in the codebook, respectively. The reason we prefer using instead of iteration number is performing k-means clustering in deeper layers consumes more time.

Since when and , KQ provides the best trade-off between the accuracy and compression ratio, we adopt such settings in the experiment on VGG.

4.3 Results on VGG

VGG is widely used in a variety of computer vision tasks. It has 13 convolution layers and 3 fully connected layers connected in a sequential manner. All internal convolution layers (the first layer excluded) in VGG use convolution kernel. So we apply KQ on all convolution layers.

| Method |

|

Baseline |

|

|

|||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| HWGQ | 2 | 69.8% | 64.1% | 5.7% | |||||||

| LQ-Net | 2 | 72.0% | 68.8% | 3.2% | |||||||

| TSQ | 2 | 71.1% | 69.1% | 2.0% | |||||||

| LQ-Net | 1 | 72.0% | 67.1% | 4.9% | |||||||

| SYQ | 1 | 69.4% | 66.2% | 3.2 % | |||||||

| ours(K) | 1.19 | 71.6% | 69.4% | 2.2% | |||||||

| ours(K+C) | 1.05 | 71.6% | 69.2% | 2.4% |

As shown in Table 3, we compare our method with some of the state-of-the-art methods. Kernel-level quantization itself is able to achieve on average 1.19 bits per parameter, which is about 26.9 times compression. Comparing with the methods which use approximately 2 bits per parameter, we outperform all the methods with fewer bits. As for LQ-Net [28] and SYQ [6] with 1 bit, KQ uses similar bits while has much better performance than both methods. After further applying Codebook Quantization, our method is able to get 30.5 times compression with only another 0.2% accuracy drop. Overall speaking, KQ achieves a great balance between compression and accuracy, and outperforms all other methods.

| Kernel | Compression Ratio | Average Number of Bits | ||||

|---|---|---|---|---|---|---|

| Layer | Number | Codebook Size | K | K+C | K | K+C |

| conv1 | 60 | 33.33% | 7.94% | 10.67 | 2.54 | |

| conv3 | 1008 | 15.80% | 5.78% | 5.05 | 1.84 | |

| conv5 | 1088 | 7.14% | 4.44% | 2.28 | 1.42 | |

| conv7 | 640 | 4.45% | 3.65% | 1.42 | 1.16 | |

| conv9 | 512 | 3.32% | 3.16% | 1.06 | 1.01 | |

| conv11 | 512 | 3.32% | 3.16% | 1.06 | 1.01 | |

| conv13 | 512 | 3.32% | 3.16% | 1.06 | 1.01 | |

To better understand how KQ works on different layers, we list the layerwise compression ratio in Table 4. An obvious observation is deeper layers has smaller kernel codebook than shallower layers. This observation, on the one hand, proves there is more redundancy in the deeper layers. On the other hand, it shows the shallower layers of VGG are more important, and network compression tasks exploit major compression ratio gain in deeper layers. Since the max iteration is set to 8, , and the last five layers all have kernels, the kernel codebook size of 512 presents that it is halved from consecutively for eight times and the validation accuracy is still above the target accuracy. This implies with more iterations in binary search,KQ has potential to boost the performance further.

|

|

|

|

We further analysis the distribution of index after the kernel quantization. As shown in Figure 4, we count the appearances of each entry in the codebook on the first 4 layers of VGG. It is clear that the distributions of the statistics are non-uniform, which makes it possible to further losslessly compress the network with coding methods like Huffman coding in [9].

4.4 Results on ResNet18

| Kernel | Compression Ratio | Average Number of Bits | ||||

|---|---|---|---|---|---|---|

| Layer | Number | Codebook Size | K | K+C | K | K+C |

| conv1 | 1203 | 33.28% | 9.33% | 10.64 | 2.98 | |

| conv4 | 609 | 18.34% | 6.26% | 5.87 | 2.00 | |

| conv7 | 2974 | 22.31% | 7.57% | 7.15 | 2.42 | |

| conv10 | 6987 | 15.17% | 6.51% | 5.01 | 2.15 | |

| conv13 | 2303 | 5.92% | 4.49% | 1.95 | 1.48 | |

| conv15 | 7679 | 7.44% | 5.06% | 2.38 | 1.62 | |

We also evaluate KQ on ResNet18 architecture. Unlike the VGG, ResNet18 has batch normalization layers and skip connections directly connecting a deeper layer with a shallower layer to prevent the gradient vanishing. For ResNet18 network, we set , , and the maximum iteration is 8.

| Method |

|

Baseline |

|

|

|||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| INQ | 3 | 68.3% | 68.1% | 0.2% | |||||||

| INQ | 2 | 68.3% | 66.0% | 2.3% | |||||||

| HWGQ | 2 | 67.3% | 59.6% | 7.7% | |||||||

| LQ-Net | 2 | 70.3% | 64.9% | 5.4% | |||||||

| SYQ | 2 | 69.1% | 68.0% | 1.1% | |||||||

| QN | 2 | 70.3% | 69.1% | 1.2% | |||||||

| XNOR-net | 1 | 69.3% | 51.2% | 18.1% | |||||||

| LQ-Net | 1 | 70.3% | 62.6% | 7.7% | |||||||

| SYQ | 1 | 69.1% | 62.9% | 6.2% | |||||||

| QN | 1 | 70.3% | 66.5% | 3.8% | |||||||

| ours(K) | 2.99 | 69.7% | 69.0% | 0.7% | |||||||

| ours(K+C) | 1.62 | 69.7% | 68.7% | 1.0% |

We compare KQ with some of the state-of-the-art methods and show the results in Table 6. Compared to INQ [29], although with only kernel-level quantization, INQ has less accuracy loss with the same average number of bits, but after applying the Codebook Quantization and further lower the average bit length, KQ significantly outperforms the INQ with 0.38 fewer average number of bits and less accuracy loss. And KQ has better performance with fewer bits comparing to other 2-bit quantization methods. Under 1-bit setting, KQ achieves significant improvement with a little more bits.

In Table 5, we report the layerwise compression ratio of KQ on ResNet18. Comparing with VGG, we need larger codebook size on most of the layers. The most obvious difference is deeper layers of ResNet18 need larger codebook size than shallower layers. This is an aspect demonstrating the compactness of ResNet18. Meanwhile, KQ achieves 1.62 bits per parameter without noticeable accuracy loss on such a compact network, proving the effectiveness of KQ.

As the increasing of codebook size, we need more bits to represent each index. However, in KQ, each index represents nine parameters. For 1-bit increase of index bit length, the average number of bits per parameter only increase by but the codebook size is doubled. With this merit, KQ achieves an extremely low average number of bits per parameter on most large networks.

4.5 Comparison with Structural Quantization

| Method | Compression Ratio | Top-1 Acc Loss |

|---|---|---|

| Deep k-Means | 2 | 0.17% |

| Deep k-Means | 3 | 0.32% |

| Deep k-Means | 4 | 1.95% |

| GreBdec | 4.5 | 1.4% |

| K+C | 5.78 | -0.2% |

To further demonstrate the superiority of KQ, we evaluate KQ with the state-of-the-art structural quantization method deep k-means [13] and structural compression method GreBdec [27] using GoogLeNet [23] on ImageNet dataset. We set and for KQ on GoogLeNet. Table 7 shows the compression ratio of the above methods on convolution layers. Our method outperforms both of them with higher accuracy and compression ratio. This further proves the high efficiency of the proposed KQ algorithm. Compared with deep k-means, we credit the superiority in performance to KQ’s ability in preserving the tendency of the kernel, while [13] proposed to use each row of the kernel as quantization unit failed to do so. As one of key functions of the convolution kernel is that it can extract the texture feature as a filter from the signal. The tendency of parameters in the convolution kernel is critical in preserving this ability. Given a row in kernel, it has the same distance to and , but quantizing into is obviously better in preserving performance, [13] failed in such case while our KQ considers the kernel as a whole and better deals with these kinds of cases. Moreover, in [13], each index represents three parameters while in KQ, each parameter represents nine parameters (for a kernel with size ). This provides a higher theoretical compression ratio for KQ.

5 Conclusion

In this work, we present a novel network quantization method, Kernel Quantization (KQ), which aims to provide a highly efficient method with high compression ratio and low accuracy loss. By considering kernel as the quantization unit, KQ boosts the theoretical limit of quantization from 32 to 288 and gives researchers more space for improvement. KQ combines the kernel and weight level quantizations in a unified framework. The experiments prove that KQ needs 1.05 and 1.62 bits for VGG and ResNet18 to represent each parameter and achieves the state-of-the-art compression ratio with little accuracy loss. Although KQ’s improvement in performance is significant, there are still several future directions for research to exploit the potential of KQ better. In our current implementation, we use k-means and relative accuracy change to determine codebook. However, this could be changed to methods like [29], where kernels are quantized in descending order of importance to better preserve accuracy instead of minimizing kernel reconstruction loss. Moreover, we use k-means to further quantize codebooks and fully-connected layers. It could be possible to use lower bits with methods like [6] to quantize codebook and fully-connected layers in a symmetric way.

References

- [1] Zhaowei Cai, Xiaodong He, Jian Sun, and Nuno Vasconcelos. Deep learning with low precision by half-wave gaussian quantization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5918–5926, 2017.

- [2] Yoojin Choi, Mostafa El-Khamy, and Jungwon Lee. Towards the limit of network quantization. arXiv preprint arXiv:1612.01543, 2016.

- [3] Matthieu Courbariaux, Yoshua Bengio, and Jean-Pierre David. Binaryconnect: Training deep neural networks with binary weights during propagations. In Advances in neural information processing systems, pages 3123–3131, 2015.

- [4] Misha Denil, Babak Shakibi, Laurent Dinh, Nando De Freitas, et al. Predicting parameters in deep learning. In Advances in neural information processing systems, pages 2148–2156, 2013.

- [5] Yufei Ding, Yue Zhao, Xipeng Shen, Madanlal Musuvathi, and Todd Mytkowicz. Yinyang k-means: a drop-in replacement of the classic k-means with consistent speedup. In Proceedings of the 32Nd International Conference on International Conference on Machine Learning - Volume 37, ICML’15, pages 579–587, 2015.

- [6] Julian Faraone, Nicholas Fraser, Michaela Blott, and Philip HW Leong. Syq: Learning symmetric quantization for efficient deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4300–4309, 2018.

- [7] Yunchao Gong, Liu Liu, Ming Yang, and Lubomir D. Bourdev. Compressing deep convolutional networks using vector quantization. CoRR, abs/1412.6115, 2014.

- [8] Jun Guo and Hongyang Chao. Building an end-to-end spatial-temporal convolutional cetwork for video super-resolution. In Thirty-First AAAI Conference on Artificial Intelligence, 2017.

- [9] Song Han, Huizi Mao, and William J Dally. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv preprint arXiv:1510.00149, 2015.

- [10] Song Han, Jeff Pool, John Tran, and William Dally. Learning both weights and connections for efficient neural network. In Advances in neural information processing systems, pages 1135–1143, 2015.

- [11] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [12] Itay Hubara, Matthieu Courbariaux, Daniel Soudry, Ran El-Yaniv, and Yoshua Bengio. Binarized neural networks. In Advances in neural information processing systems, pages 4107–4115, 2016.

- [13] Zhenyu Wu Zhangyang Wang Ashok Veeraraghavan Yingyan Lin Junru Wu, Yue Wang. Deep k-means: re-training and parameter sharing with harder cluster assignments for compressing deep convolutions. ICML, 2018.

- [14] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems, pages 1097–1105, 2012.

- [15] Cong Leng, Zesheng Dou, Hao Li, Shenghuo Zhu, and Rong Jin. Extremely low bit neural network: Squeeze the last bit out with admm. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- [16] Dong Nie, Li Wang, Lei Xiang, Sihang Zhou, Ehsan Adeli, and Dinggang Shen. Difficulty-aware attention network with confidence learning for medical image segmentation. In Thirty-Third AAAI Conference on Artificial Intelligence, 2019.

- [17] Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. Automatic differentiation in PyTorch. In NIPS Autodiff Workshop, 2017.

- [18] Mohammad Rastegari, Vicente Ordonez, Joseph Redmon, and Ali Farhadi. Xnor-net: Imagenet classification using binary convolutional neural networks. In European Conference on Computer Vision, pages 525–542. Springer, 2016.

- [19] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. Imagenet large scale visual recognition challenge. International journal of computer vision, 115(3):211–252, 2015.

- [20] Yemin Shi, Yonghong Tian, Yaowei Wang, and Tiejun Huang. Sequential deep trajectory descriptor for action recognition with three-stream cnn. IEEE Transactions on Multimedia, 19(7):1510–1520, 2017.

- [21] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- [22] Sanghyun Son, Seungjun Nah, and Kyoung Mu Lee. Clustering convolutional kernels to compress deep neural networks. In Proceedings of the European Conference on Computer Vision (ECCV), pages 216–232, 2018.

- [23] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In Computer Vision and Pattern Recognition (CVPR), 2015.

- [24] Chuan Wang, Haibin Huang, Xiaoguang Han, and Jue Wang. Video inpainting by jointly learning temporal structure and spatial details. In Thirty-Third AAAI Conference on Artificial Intelligence, 2019.

- [25] Peisong Wang, Qinghao Hu, Yifan Zhang, Chunjie Zhang, Yang Liu, and Jian Cheng. Two-step quantization for low-bit neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4376–4384, 2018.

- [26] Jiwei Yang, Xu Shen, Jun Xing, Xinmei Tian, Houqiang Li, Bing Deng, Jianqiang Huang, and Xian-sheng Hua. Quantization networks. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [27] Xiyu Yu, Tongliang Liu, Xinchao Wang, and Dacheng Tao. On compressing deep models by low rank and sparse decomposition. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.

- [28] Dongqing Zhang, Jiaolong Yang, Dongqiangzi Ye, and Gang Hua. Lq-nets: Learned quantization for highly accurate and compact deep neural networks. In Proceedings of the European Conference on Computer Vision (ECCV), pages 365–382, 2018.

- [29] Aojun Zhou, Anbang Yao, Yiwen Guo, Lin Xu, and Yurong Chen. Incremental network quantization: Towards lossless cnns with low-precision weights. arXiv preprint arXiv:1702.03044, 2017.