Kernel Embedding based Variational Approach for Low-dimensional Approximation of Dynamical Systems

Abstract

Transfer operators such as Perron-Frobenius or Koopman operator play a key role in modeling and analysis of complex dynamical systems, which allow linear representations of nonlinear dynamics by transforming the original state variables to feature spaces. However, it remains challenging to identify the optimal low-dimensional feature mappings from data. The variational approach for Markov processes (VAMP) provides a comprehensive framework for the evaluation and optimization of feature mappings based on the variational estimation of modeling errors, but it still suffers from a flawed assumption on the transfer operator and therefore sometimes fails to capture the essential structure of system dynamics. In this paper, we develop a powerful alternative to VAMP, called kernel embedding based variational approach for dynamical systems (KVAD). By using the distance measure of functions in the kernel embedding space, KVAD effectively overcomes theoretical and practical limitations of VAMP. In addition, we develop a data-driven KVAD algorithm for seeking the ideal feature mapping within a subspace spanned by given basis functions, and numerical experiments show that the proposed algorithm can significantly improve the modeling accuracy compared to VAMP.

1 Introduction

It has been shown that complex nonlinear processes can be accurately described by linear models in many science and engineering fields, including wireless communications [19, 56], molecular dynamics [54, 4, 55], fluid dynamics [28, 43] and control theory [1], where the linear models can be expressed by a unified formula

| (1) |

and the expectation operator can be removed for deterministic systems. In such models, the state variable is tranformed into a feature space by the transformation , and the dynamics with lag time is characterized by a linear time-invariant system in the feature space. Then, all the dynamical properties of the system can be quantitatively analyzed after estimating the transition matrix from data via linear regression. A special case of the linear models is Markov state models [39, 35] for conformational dynamics, which is equivalent to the well-known Ulam’s method [8, 14]. In a Markov state model, the feature mapping consists of indicator functions of subsets of state and represents the transition probability from subset to subset . Besides Markov state models and the Ulam’s method, a large number of similar modeling methods, e.g., dynamic mode decomposition [38, 2, 48, 21], time-lagged independent component analysis (TICA) [30, 34, 41], extended dynamic mode decomposition (EDMD) [50, 16, 40], Markov transition models [52], variational approach of conformation dynamics (VAC) [31, 32, 33], variational Koopman models [54] and their variants based on kernel ebmeddings [15, 16] and tensors [14, 33], are proposed by using different feature mappings.

From the perspective of operator theory, all the models in the form of (1) can be interpreted as algebraic representations of transfer operators of systems, including Frobenius-Perron (FP) operators and Koopman operators, and some of them are universal approximators for nonlinear dynamical systems under the assumption that the dimension of feature space is large enough [20] or the infinite-dimensional kernel mappings are utilized [45]. However, due to the limitation of computational cost and requirements of dynamical analysis, the low-dimensional approximation of transfer operators is still a critical and challenging problem in applications [17].

One common way to solve this problem is to identify the dominant dynamical structures, e.g., metastable states [9, 37], cycles [6] and coherent sets [11], and achieve the corresponding low-dimensional representations via spectral clustering. But this strategy assumes that an accurate high-dimensional model is known a priori, which is often violated especially for large-scale systems.

Another way for deterministic systems is to seek the feature mapping by minimizing the regression error of (1) under the constraint that the state variable can also be accurately reconstructed from [23, 24]. Notice that the constraint is necessary, otherwise a trivial but uninformative model with and could be found. Some similar methods are developed for stochastic systems by considering (1) as a conditional generative model, where the parameters of can be trained based on the likelihood or the other statistical criteria [51, 25]. However, these methods are applicable only if are non-negative functions and usually involves the intractable probability density estimation.

In recent years, the variational approach has led to great progress for low-dimensional dynamical modeling, which was first proposed for time-reversible processes [31, 32, 27, 54] and extended to non-reversible processes in [53]. In contrast with the other methods, this approach provides a general and unified framework for data-driven model choice, reduction and optimization of dynamical systems based on the presented variational scores related to approximation errors of transfer operators. It can be easily integrated with deep learning to effectively analyze high-dimensional time series in an end-to-end manner [26, 3]. The existing variational principle based methods suffer from two drawbacks: First, it is necessary to assume that the transfer operator is Hilbert-Schimdt (HS) or compact as an operator between two weighted spaces so that the maximum values of variational scores exist. But there is no easy way to test the assumption especially when we do not have strong prior knowledge regarding the system. Specifically, it can be proved that the assumption does not hold for most deterministic systems. Second, even for stochastic systems which satisfies the assumption, the common variational scores are possibly sensitive to small modeling variations, which could affect the effectiveness of the variational approach.

In this work, we introduce a kernel embedding based variational approach for dynamical systems (KVAD) using the theory of kernel embedding of functions [44, 46, 47, 45], where the modeling error is measured by using the distance between kernel embeddings of transition densities. The kernel based variational score in KVAD provides a robust and smooth quantification of differences between transfer operators, and is proved to be bounded for general dynamical systems, including deterministic and stochastic systems. Hence, it can effectively overcome the difficulties of existing variational methods, and expands significantly the range of applications. Like the previous variational scores, the kernel based score can also be consistently estimated from trajectory data without solving any intermediate statistical problem. Therefore, we develop a data-driven KVAD algorithm by considering as a linear superposition of a given set of basis functions. Furthermore, we establish a relationship between KVAD, diffusion maps[5] and the singular components of transfer operators. Finally, the effectiveness the proposed algorithm is demonstrated by numerical experiments.

2 Problem formulation and preliminaries

For a Markovian dynamical system in the state space , its dynamics can be completely characterized by the transition density

| (2) |

and the time evolution of the system state distribution can be formulated as

| (3) |

Here denotes the state of the system at time and is the probability density of . The transfer operator is called the Perron-Frobenius (PF) operator333Another commonly used transfer operator for Markovian dynamics is Koopman operator [49], which describes the evolution of observables instead of probability densities, and is the dual of the PF operator. In this paper, we focus only on the PF operator for convenience of analysis., which is a linear but usually infinite-dimensional operator. Notice that the deterministic dynamics in the form of is a specific case of the Markovian dynamics, where is a Dirac function centered at , and the corresponding PF operator is given by

By further assuming that the conditional distribution of for given can always be represented by a linear combination of density basis functions , we obtain a finite-dimensional approximation of the transition density:

| (4) |

The feature mapping are real-valued observables of the state , and provide a sufficient statistic for predicting the future states. Based on this approximation, the time evolution equation (3) of the state distribution can then be transformed into a linear evolution model of the feature functions in the form of (1) with the transition matrix

| (5) |

and many dynamical properties of the Markov system, including spectral components, coherent sets and the stationary distribution, can be efficiently from the linear model.

It is shown in [20] that Eq. (4) provides a universal approximator of Markovian dynamics if the set of basis function is rich enough. But in this paper, we focus on a more practically problem: Given a small , find and with such that the modeling error of (4) is minimized.

2.1 Variational principle for Perron-Frobenius operators

We now briefly introduce the variational principle for evaluating the approximation quality of linear models (1). The detailed analysis and derivations can be found in [53].

For simplicity of notation, we assume that the available trajectory data are organized as

where are set of all transition pairs occurring in the given trajectories, and we denote the limits of empirical distributions of by and .

Due to the above analysis, the approximation quality of (4) can be evaluated by the difference between the PF operator deduced from and the actual one. In the variational principle proposed by [53], is considered as a mapping from to with inner products

From this insight, the Hilbert-Schmidt (HS) norm of the modeling error can be expressed as a weighted mean square error of conditional distributions

| (6) |

and has the decomposition

with

for . Here denotes the mean value over all transition pairs as . Because is a constant independent of modeling and can be easily estimated from data via empirical averaging, we can learn parametric models of and by maximizing as a variational score, which yields the variational approach for Markov processes (VAMP) [53].

It can be seen that the variational principle is developed under the assumption that is an HS operator.444This assumption can be relaxed to compactness of for some variants of the variational principle, but the relaxed assumption is not satisfied by deterministic systems either (see Proposition 1). However, in many practical applications, it is difficult to justify the assumption for unknown transition densities. Particularly, for deterministic systems, this assumption does not hold and the maximization of could lead to unreasonable models.

Proposition 1.

For a deterministic system , if is an infinite-dimensional Hilbert space,

-

1.

is not a compact operator from to and hence not an HS operator,

-

2.

can be maximized by an arbitrary density basis with and .

Proof.

See Appendix A. ∎

2.2 Kernel embedding of functions

Moving away from dynamical systems for a moment, here we introduce the theory of kernel embedding of functions [46, 45], which will be utilized to address the difficulty of VAMP in Section 3.

A kernel function is a symmetric and positive definite function, which implicitly defines a kernel mapping from to a reproducing kernel Hilbert space , and the inner product of satisfies the reproducing property

By using the kernel mapping, we can embed a function in the Hilbert space as

Here is an injective mapping for if is a universal kernel [47], and we can then measure the similarity between functions and by the distance between and :

where and . The most commonly used universal kernel for is the Gaussian kernel , where denotes the bandwidth of the kernel.

In the specific case where both and are probability density functions, is called the maximum mean discrepancy (MMD) and can be estimated from samples of [45].

3 Theory

In this section, we develop a new variational principle for Markovian dynamics based on the kernel embedding of trainstion densities.

Assuming that is a universal kernel and bounded by , is also bounded in with

Motivated by this conclusion, we propose a new measure for approximation errors of PF operators

| (7) |

by replacing the norm with in (6), which is finite for both deterministic and stochastic systems if . In contrast with Eq. (6), the new measure provides a more general way to quantify modeling errors of dynamics. Furthermore, from an application point of view, Eq. (7) provides a more smooth and effective representation of modeling errors of the conditional distributions.

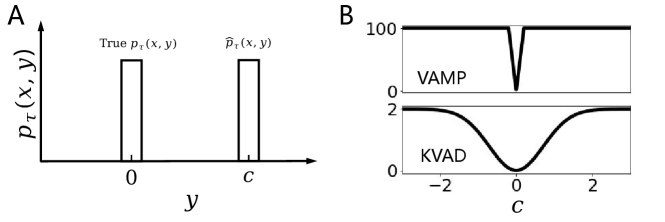

Example 2.

Let us consider a one-dimensional system with and . Suppose that for a given , and are separately uniform distributions within and as shown in Fig. 1A, where is the model parameter. In VAMP, the approximation error between the two conditional distributions are calculated as , and it can be observed from Fig. 1B that this quantity is a constant independent of except in a small range . But kernel embedding based error in (7) is a smooth function of and provides a more reasonable metric for the evaluation of .

The following proposition shows that Eq. (7) can also be derived from the HS norm of operator error by treating as a mapping from to .

Proposition 3.

Proof.

See Appendix B. ∎

As a result of this proposition, we can find optimal and by maximizing .

4 Approximation scheme

In this section, we derive a data-driven algorithm to estimate the optimal low-dimensional linear models based on the variational principle stated in Proposition 3.

4.1 Approximation with fixed

We first propose a solution for the problem of finding the optimal given that is fixed.

Proposition 4.

If is a full-rank matrix, the solution to is

| (8) |

and

| (9) | |||||

where denotes the expected value with and independently drawn from the joint distribution of transition pairs.

Proof.

See Appendix C. ∎

As in Eq. (8) is the joint distribution of transition pairs , we can get a nonparametric approximation of

| (10) |

by replacing the the transition pair distribution with its empirical estimate. This result gives us a linear model (1) with transition matrix

| (11) | |||||

with and denoting the Penrose-Moore pseudo-inverse of , which is equal to the least square solution to the regression problem .

4.2 Approximation with unknown

We now consider the modeling problem with the normalization condition

| (12) |

where and are both unknown, and we make the Ansatz to represent as linear combinations of basis functions . Furthermore, we assume without loss of generality that the whitening transformation is applied to the basis functions so that

| (13) |

(See, e.g., Appendix F in [53] for the details of whitening transformation.)

It is proved in Appendix D that there must be a solution to under constraint (12) satisfying

| (14) |

Therefore, we can model in the form of

| (15) |

Substituting this Ansatz into the KVAD score, shows that can be computed as the solution to the maximization problem:

| (16) |

with

| (17) |

being a matrix representation of . Here

is the Gram matrix of , and . This problem has the same form as principal component analysis problem and can be effectively can be solved via the eigendecomposition of matrix [13]. The resulting KVAD algorithm is as follows, and it can be verified that the normalization conditions (12) exactly holds for the estimated transition density (see Appendix E).

-

1.

Select a set of basis function with .

-

2.

Perform the whitening transformation so that (13) holds.

-

3.

Perform the truncated eigendecomposition

where , are square roots of the largest eigenvalues of , and consists of the corresponding dominant eigenvectors. This step is a bottleneck of the algorithm due to the large size Gram matrix , and the computational cost can be reduced by Nyström approximation or random fourier features [10, 36].

- 4.

4.3 Component analysis

Due to the fact that are non-trainable, the approximate PF operator obtained by the KVAD algorithm can be decomposed as

It is worth pointing out that obtained by KVAD algorithm are variational estimates of the th singular value, left singular function and right singular function of the operator defined by

| (18) |

where . Thus, the KVAD algorithm indeed performs truncated singular value decomposition (SVD) of (see Appendix F).

At the limit case where the all singular components of are exactly estimated by KVAD, we have

| (19) | |||||

for all . The distance measures the dynamical similarity of two points in the state space, and can be approximated by the Euclidean distance derived from coordinates as shown in (19). Hence, KVAD provides an ideal low-dimensional embedding of system dynamics, and can be reinterpreted a variant of the diffusion mapping method for dynamical model reduction [5] (see Appendix G).

5 Relationship with Other Methods

5.1 EDMD and VAMP

It can be seen from (11) that the optimal linear model obtained by KVAD is consistent with the model of EDMD [49] for given feature functions . However, the optimization and the dimension reduction of the observables are not considered in the conventional EDMD.

Both VAMP [53] and KVAD solve this problem by variational formulations of modeling errors. As analyzed in Sections 2.1 and 3, KVAD is applicable to more general systems, including deterministic systems, compared to VAMP. Moreover, VAMP needs to represent both and by parametric models for dynamical approximation, whereas KVAD can obtain the optimal from data without any parametric model for given . Our numerical experiments (see Section 6) show that KVAD can often provide more accurate low-dimensional models than VAMP when the same Ansatz basis functions are used.

5.2 Conditional mean embedding, kernel EDMD and kernel CCA

For given two random variables and , the conditional mean embedding proposed in [46] characterizes the conditional distribution of for given by the conditional embedding operator with

where denotes the kernel mapping and can be consistently estimated from data. When applied to dynamical data, this method has the same form as the kernel EDMD and its variants [42, 16, 15], and is indeed a specific case of KVAD with Ansatz functions and dimension (see Appendix H).

In addition, for most kernel based dynamical modeling methods, the dimension reduction problem is not thoroughly investigated. In [18], a kernel method for eigendecomposition of transfer operators (including PF operators and Koopman operators) was developed. But as analyzed in [53], the dominant eigen-components may not yield an accurate low-dimensional dynamical model. Kernel canonical correlation analysis (CCA) [22] can overcome this problem as a kernelization of VAMP, but it is also applicable only if is a compact operator from to .

Compared to the previous kernel methods, KVAD has more flexibility in model choice, where the dimension and model class of can be arbitrarily selected according to practical requirements.

6 Numerical experiments

In what follows, we demonstrate the benefits of the KVAD method for studies of nonlinear dynamical systems by two examples, and compare the results from KVAD with VAMP and kernel EDMD, where the basis functions in VAMP and kernel functions in kernel EDMD are the same as those in KVAD. For kernel EDMD, the low-dimensional linear model is achieved by leading eigenvalues and eigenfunctions as in [18], which characterizes invariant subspaces of systems.

Example 5.

Van der Pol oscillator, which is a two-dimensional system governed by

where and are standard Wiener processes. The flow field of this system for is depicted in Fig. 2A. We generate transition pairs for modeling, where the lag time , are randomly drawn from , and are obtained by the Euler-Maruyama scheme with step size .

Example 6.

Lorenz system defined by

with being standard Wiener processes. Fig. 2B plots a trajectory of this system in the state space with . We sample transition pairs from a simulation trajectory with length and lag time as training data for each , and perform simulations by the Euler-Maruyama scheme with step size .

In both examples, the feature mapping is represented by basis functions

with all components of and randomly drawn from , which are widely used in shallow neural networks [12, 7]. The kernel is selected as the Gaussian kernel with for the oscillator and for the Lorenz system.

Fig. 2 shows estimates of singular values of (KVAD), singular values of (VAMP) and absolute values of eigenvalues of (kernel EDMD) with different noise parameters, where singular values must be nonnegative real numbers but eigenvalues could be complex or negative. We see that the singular values and eigenvalues given by VAMP and kernel EDMD decay very slowly. Hence, it is difficult to extract an accurate model from the estimation results of VAMP and kernel EDMD for a small . Especially for VAMP, a large number of singular values are close to as analyzed in Proposition 1 when the systems are deterministic with . In contrast, the singular values utilized in KVAD rapidly converges to zero, which implies one can effectively extract the essential part of dynamics from a small number of feature mappings.

The first two singular components of for obtained by KVAD are shown in Fig. 3 (see Section 4.3).555The is approximated by multiple delta functions and hard to visualize. It can be observed that of the oscillator characterize transitions between left-right and up-down areas separately. Those of the Lorenz systems are related to the two attractor lobes and the transition areas.

It is interesting to note that the singular values of given by KVAD are slightly influenced by as illustrated in Fig. 2. Our numerical experience also show that the right singular functions remain almost unchanged for different (see Fig. 5 in Appendix I). This phenomenon can be partially explained by the fact that the variational score estimated by (17) is not sensitive to small perturbations of if the bandwidth of the kernel is large. More thorough investigations on this phenomenon will be performed in future.

In order to quantitively evaluate the performance of the three methods, we define the following trajectory reconstruction error:

where is the true trajectory data and is the conditional mean value of obtained by the model. The average error over multiple replicate simulations is minimized if and only if equals to the exact conditional mean value of for all . For all the three methods,

where is the least square solution to the regression problem [49]. Fig. 4 summarizes of reconstruction errors of the two systems obtained with different choices of the model dimension and noise parameter , and the superiority of our KVAD is clearly shown.

7 Conclusion

In this paper, we combine the kernel embedding technique with the variational principle for transfer operators. This provides a powerful and flexible tool for low-dimensional approximation of dynamical system, and effectively addresses the shortcomings and limitations of the existing variational approach. In the proposed KVAD framework, a bounded and well defined distance measure of transfer operators is developed based on kernel embedding of transition densities, and the corresponding variational optimization approach can be applied to a broader range of dynamical systems than the existing variational approaches.

Our future work includes the convergence analysis of KVAD and optimization of kernel functions. From the algorithmic point of view, the main remaining question is how to efficiently perform KVAD learning from big data with deep neural networks. It will be also interesting to apply KVAD to multi-ensemble Markov models [55] for data analysis of enhanced sampling.

Appendix

For convenience of notation, we define

and

for , and an inner product .

Appendix A Proof of Proposition 1

The proof of first conclusion is given in Appendices A.5 and B of [53], and we prove here the second conclusion.

We first show . Because is a positive semi-definite matrices, it can be decomposed as

| (20) |

where is a orthogonal matrix and is a diagonal matrix. Let and , we have

Under the assumption of and , we have

and

with by considering that . Consequently,

which yields the second conclusion of this proposition.

Appendix B Proof of Proposition 3

Let be an orthonormal basis of . We have

Therefore, is an HS operator with

if is bounded by , and

If , we get

and

Appendix C Proof of Proposition 4

Let us first consider the case where . Then

which leads to

and

We now suppose that and let

Becuase , we have

and

Considering that the transition density defined by is equivalent to that by as

we can obtain

and

Appendix D Proof of (14)

Appendix E Normalization property of estimated transition density

For the transition density obtained by the KVAD algorithm, we have

for . Therefore,

Appendix F Singular value decomposition of

Because is also an HS operator from to , there exists the following SVD:

| (21) |

Here denotes the th largest singular value, and are the corresponding left and right singular functions. According to the Rayleigh variational principle, for the th singular component, we have

| (22) |

under constraints

| (23) |

and the solution is .

From the above variational formulation of SVD, we can obtain the following proposition:

Proposition 7.

The singular functions of satisfies

if .

Proof.

We first show that by contradiction. If , can be decomposed as

Because

we can get that and

with

which leads to a contradiction. Therefore, .

Based on this proposition, can be approximated by

| (24) |

with (13) being satisfied. Substituting the Ansatz (24) into (22, 23) and replacing expected values with empirical estimates yields

This problem for all can be equivalently solved by the KVAD algorithm in Section 4.2. Consequently, are variational estimates of the th singular value, left singular function and right singular function of the operator .

Appendix G Proof of (19)

From (21) and the orthonormality of , we have

If the KVAD algorithm gives the exact approximation of singular components and for , we can get

Appendix H Comparison between KVAD and conditional mean embedding

We consider

for in KVAD, and assume that is invertible. Here is the th column of , and is the kernel mapping and can be explicitly represented as a function from to a (possibly infinite-dimensional) Euclidean space with [29]. For a given data set , an arbitrary can be decomposed as

with and , and

So, we can assume without loss of generality that each can be represented as a linear combination of , and therefore all invertible can generate the equivalent model. For convenience of analysis, we set and

Then

Appendix I Estimated singular components of Examples 5 and 6 with

References

- [1] S. L. Brunton, B. W. Brunton, J. L. Proctor, and J. N. Kutz, Koopman invariant subspaces and finite linear representations of nonlinear dynamical systems for control, PloS one, 11 (2016), p. e0150171.

- [2] K. K. Chen, J. H. Tu, and C. W. Rowley, Variants of dynamic mode decomposition: Boundary condition, koopman, and fourier analyses, Journal of Nonlinear Ence, 22 (2012), pp. 887–915.

- [3] W. Chen, H. Sidky, and A. L. Ferguson, Nonlinear discovery of slow molecular modes using state-free reversible vampnets, Journal of chemical physics, 150 (2019), p. 214114.

- [4] J. D. Chodera and F. Noé, Markov state models of biomolecular conformational dynamics, Curr Opin Struct Biol, 25 (2014), pp. 135–144.

- [5] R. R. Coifman and S. Lafon, Diffusion maps, Applied and computational harmonic analysis, 21 (2006), pp. 5–30.

- [6] N. D. Conrad, M. Weber, and C. Schútte, Finding dominant structures of nonreversible markov processes, Multiscale Modeling & Simulation, 14 (2016), pp. 1319–1340.

- [7] S. Dash, B. Tripathy, et al., Handbook of Research on Modeling, Analysis, and Application of Nature-Inspired Metaheuristic Algorithms, IGI Global, 2017.

- [8] G. Debdipta, T. Emma, and P. D. A., Constrained ulam dynamic mode decomposition: Approximation of perron-frobenius operator for deterministic and stochastic systems, IEEE Control Systems Letters, (2018), pp. 1–1.

- [9] P. Deuflhard and M. Weber, Robust perron cluster analysis in conformation dynamics, Linear algebra and its applications, 398 (2005), pp. 161–184.

- [10] P. Drineas and M. W. Mahoney, On the nyström method for approximating a gram matrix for improved kernel-based learning, journal of machine learning research, 6 (2005), pp. 2153–2175.

- [11] K. Fackeldey, P. Koltai, P. Névir, H. Rust, A. Schild, and M. Weber, From metastable to coherent sets—time-discretization schemes, Chaos: An Interdisciplinary Journal of Nonlinear Science, 29 (2019), p. 012101.

- [12] G.-B. Huang, Q.-Y. Zhu, and C.-K. Siew, Extreme learning machine: theory and applications, Neurocomputing, 70 (2006), pp. 489–501.

- [13] I. T. Jolliffe and J. Cadima, Principal component analysis: a review and recent developments, Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 374 (2016), p. 20150202.

- [14] O. Junge and P. Koltai, Discretization of the frobenius-perron operator using a sparse haar tensor basis: The sparse ulam method, Siam Journal on Numerical Analysis, 47 (2009), pp. 3464–3485.

- [15] Y. Kawahara, Dynamic mode decomposition with reproducing kernels for koopman spectral analysis, in Advances in neural information processing systems, 2016, pp. 911–919.

- [16] I. G. Kevrekidis, C. W. Rowley, and M. O. Williams, A kernel-based method for data-driven koopman spectral analysis, Journal of Computational Dynamics, 2 (2016), pp. 247–265.

- [17] S. Klus, F. Nüske, P. Koltai, H. Wu, I. Kevrekidis, C. Schütte, and F. Noé, Data-driven model reduction and transfer operator approximation, Journal of Nonlinear Science, 28 (2018), pp. 985–1010.

- [18] S. Klus, I. Schuster, and K. Muandet, Eigendecompositions of transfer operators in reproducing kernel hilbert spaces, Journal of Nonlinear Science, 30 (2020), pp. 283–315.

- [19] A. Konrad, B. Y. Zhao, A. D. Joseph, and R. Ludwig, A markov-based channel model algorithm for wireless networks, Wireless Networks, 9 (2003), pp. 189–199.

- [20] M. Korda and I. Mezić, On convergence of extended dynamic mode decomposition to the koopman operator, Journal of Nonlinear Science, 28 (2018), pp. 687–710.

- [21] J. N. Kutz, S. L. Brunton, B. W. Brunton, and J. L. Proctor, Dynamic Mode Decomposition: Data Driven Modeling of Complex Systems, 2016.

- [22] P. L. Lai and C. Fyfe, Kernel and nonlinear canonical correlation analysis, International Journal of Neural Systems, 10 (2000), pp. 365–377.

- [23] Q. Li, F. Dietrich, E. M. Bollt, and I. G. Kevrekidis, Extended dynamic mode decomposition with dictionary learning: A data-driven adaptive spectral decomposition of the koopman operator, Chaos: An Interdisciplinary Journal of Nonlinear Science, 27 (2017), p. 103111.

- [24] B. Lusch, J. N. Kutz, and S. L. Brunton, Deep learning for universal linear embeddings of nonlinear dynamics, Nature communications, 9 (2018), pp. 1–10.

- [25] A. Mardt, L. Pasquali, F. Noé, and H. Wu, Deep learning markov and koopman models with physical constraints, arXiv: Computational Physics, (2019).

- [26] A. Mardt, L. Pasquali, H. Wu, and F. Noé, Vampnets for deep learning of molecular kinetics, Nature communications, 9 (2018), pp. 1–11.

- [27] R. T. McGibbon and V. S. Pande, Variational cross-validation of slow dynamical modes in molecular kinetics, Journal of Chemical Physics, 142 (2015), p. 03B621_1.

- [28] I. Mezić, Analysis of fluid flows via spectral properties of the koopman operator, Annual Review of Fluid Mechanics, 45 (2013), pp. 357–378.

- [29] H. Q. Minh, P. Niyogi, and Y. Yao, Mercer’s theorem, feature maps, and smoothing, in International Conference on Computational Learning Theory, Springer, 2006, pp. 154–168.

- [30] L. Molgedey and H. G. Schuster, Separation of a mixture of independent signals using time delayed correlations, Physical Review Letters, 72 (1994), pp. 3634–3637.

- [31] F. Noe and F. Nuske, A variational approach to modeling slow processes in stochastic dynamical systems, Multiscale Modeling and Simulation, 11 (2013), pp. 635–655.

- [32] F. Nuske, B. G. Keller, G. Perezhernandez, A. S. J. S. Mey, and F. Noe, Variational approach to molecular kinetics, Journal of Chemical Theory and Computation, 10 (2014), pp. 1739–1752.

- [33] F. Núske, R. Schneider, F. Vitalini, and F. Noé, Variational tensor approach for approximating the rare event kinetics of macromolecular systems, Journal of Chemical Physics, 144 (2016), pp. 149–153.

- [34] G. Perezhernandez, F. Paul, T. Giorgino, G. De Fabritiis, and F. Noe, Identification of slow molecular order parameters for markov model construction, Journal of Chemical Physics, 139 (2013), p. 015102.

- [35] J.-H. Prinz, H. Wu, M. Sarich, B. Keller, M. Senne, M. Held, J. D. Chodera, C. Schütte, and F. Noé, Markov models of molecular kinetics: Generation and validation, Journal of chemical physics, 134 (2011), p. 174105.

- [36] A. Rahimi and B. Recht, Random features for large-scale kernel machines, in Advances in neural information processing systems, 2008, pp. 1177–1184.

- [37] S. Röblitz and M. Weber, Fuzzy spectral clustering by pcca+: application to markov state models and data classification, Advances in Data Analysis and Classification, 7 (2013), pp. 147–179.

- [38] P. J. Schmid and J. Sesterhenn, Dynamic mode decomposition of numerical and experimental data, Journal of Fluid Mechanics, 656 (2010), pp. 5–28.

- [39] C. Schütte, A. Fischer, W. Huisinga, and P. Deuflhard, A direct approach to conformational dynamics based on hybrid monte carlo, Journal of Computational Physics, 151 (1999), pp. 146–168.

- [40] C. Schútte, P. Koltai, and S. Klus, On the numerical approximation of the perron frobenius and koopman operator, Journal of Computational Dynamics, 3 (2016), pp. 1–12.

- [41] C. R. Schwantes and V. S. Pande, Improvements in markov state model construction reveal many non native interactions in the folding of ntl9, Journal of Chemical Theory and Computation, 9 (2013), pp. 2000–2009.

- [42] , Modeling molecular kinetics with tica and the kernel trick, Journal of chemical theory and computation, 11 (2015), pp. 600–608.

- [43] A. S. Sharma, I. Mezi, and B. J. Mckeon, Correspondence between koopman mode decomposition, resolvent mode decomposition, and invariant solutions of the navier-stokes equations, Phys.rev.fluids, 1 (2016).

- [44] A. Smola, A. Gretton, L. Song, and B. Schölkopf, A hilbert space embedding for distributions, in International Conference on Algorithmic Learning Theory, Springer, 2007, pp. 13–31.

- [45] L. Song, K. Fukumizu, and A. Gretton, Kernel embeddings of conditional distributions: A unified kernel framework for nonparametric inference in graphical models, IEEE Signal Processing Magazine, 30 (2013), pp. 98–111.

- [46] L. Song, J. Huang, A. Smola, and K. Fukumizu, Hilbert space embeddings of conditional distributions with applications to dynamical systems, in Proceedings of the 26th Annual International Conference on Machine Learning, 2009, pp. 961–968.

- [47] B. K. Sriperumbudur, K. Fukumizu, and G. R. Lanckriet, Universality, characteristic kernels and rkhs embedding of measures., J. Mach. Learn. Res., 12 (2011).

- [48] J. H. Tu, C. W. Rowley, D. M. Luchtenburg, S. L. Brunton, and J. N. Kutz, On dynamic mode decomposition: Theory and applications, ACM Journal of Computer Documentation, 1 (2014), pp. 391–421.

- [49] M. O. Williams, I. G. Kevrekidis, and C. W. Rowley, A data–driven approximation of the koopman operator: Extending dynamic mode decomposition, Journal of Nonlinear Science, 25 (2015), pp. 1307–1346.

- [50] M. O. Williams, I. G. Kevrekidis, and C. W. Rowley, A data driven approximation of the koopman operator: Extending dynamic mode decomposition, Journal of Nonlinear Science, (2015).

- [51] H. Wu, A. Mardt, L. Pasquali, and F. Noe, Deep generative markov state models, in Advances in Neural Information Processing Systems, 2018, pp. 3975–3984.

- [52] H. Wu and F. Noé, Gaussian markov transition models of molecular kinetics, Journal of Chemical Physics, 142 (2015), p. 084104.

- [53] , Variational approach for learning markov processes from time series data, J. Nonlinear Sci., 30 (2020), pp. 23–66.

- [54] H. Wu, F. Núske, F. Paul, S. Klus, P. Koltai, and F. Noé, Variational koopman models: Slow collective variables and molecular kinetics from short off-equilibrium simulations, The Journal of Chemical Physics, 146 (2017), p. 154104.

- [55] H. Wu, F. Paul, C. Wehmeyer, and F. Noé, Multiensemble markov models of molecular thermodynamics and kinetics, Proceedings of the National Academy of Sciences, 113 (2016), pp. E3221–E3230.

- [56] M. Yue, J. Han, and K. Trivedi, Composite performance and availability analysis of wirelesscommunication networks, IEEE Transactions on Vehicular Technology, 50 (2001), pp. p.1216–1223.