Keen2Act: Activity Recommendation in Online Social Collaborative Platforms

Abstract.

Social collaborative platforms such as GitHub and Stack Overflow have been increasingly used to improve work productivity via collaborative efforts. To improve user experiences in these platforms, it is desirable to have a recommender system that can suggest not only items (e.g., a GitHub repository) to a user, but also activities to be performed on the suggested items (e.g., forking a repository). To this end, we propose a new approach dubbed Keen2Act, which decomposes the recommendation problem into two stages: the Keen and Act steps. The Keen step identifies, for a given user, a (sub)set of items in which he/she is likely to be interested. The Act step then recommends to the user which activities to perform on the identified set of items. This decomposition provides a practical approach to tackling complex activity recommendation tasks while producing higher recommendation quality. We evaluate our proposed approach using two real-world datasets and obtain promising results whereby Keen2Act outperforms several baseline models.

1. Introduction

Users are increasingly adopting social collaborative platforms for collaborative activities. GitHub and Stack Overflow are two such popular platforms; GitHub is a collaborative software development platform that allows code sharing and version control, while Stack Overflow is a technical question-and-answer community-based website. As these social collaborative platforms gain popularity, many research studies have proposed recommender systems to improve the usability of these platforms. For example, there are works that recommend Stack Overflow questions for users to answer (Wang et al., 2015, 2016). Similarly, in GitHub, researchers have proposed methods to recommend relevant repositories to a user (Guendouz et al., 2015; Jiang et al., 2017).

Many of the existing works, however, focus largely on recommending either items or a single type of activity to users, ignoring the fact that the users can perform multiple types of activity on these platforms. For example, GitHub users may fork or watch repositories and Stack Overflow users may answer or favorite questions. Recommending multiple types of activities to a user is a challenging task. A naïve solution would be to recommend individual activities separately, treating them as independent tasks. However, there might be insufficient observations for learning the user activities at such granularity. Another possible solution is to simply learn the different types of activities together, but such solution creates sparsity issue, having to learn for all possible item-activity pairs.

To tackle these challenges, we propose a new recommendation approach called Keen2Act111Source code: https://gitlab.com/bottle_shop/scp/keen2act, which learns the users’ item-level and activity-level interests in a step-wise manner to achieve (joint) item-activity recommendation. In particular, the main contribution of Keen2Act is that it features a novel two-stage process that decomposes the activity recommendation problem into predicting a user’s interests at item level and subsequently predicting at activity level. To the best of our knowledge, this is also the first work that achieves multi-typed activity recommendation in social collaborative platforms. Finally, empirical studies on real-world GitHub and Stack Overflow datasets have shown promising results whereby the proposed approach outperforms several baseline methods.

2. Related Work

Research studies on prediction and recommendation in social collaborative platforms broadly fall into two categories: (i) finding experts to perform certain platform tasks (Riahi et al., 2012; Yang et al., 2013; Choetkiertikul et al., 2015; Xu et al., 2016; Wang et al., 2017; Huang et al., 2017; Allaho and Lee, 2014; Yu et al., 2016; Rahman et al., 2016) and (ii) recommending items to users in a platform (de Souza et al., 2014; Wang et al., 2016; Guendouz et al., 2015; Zhang et al., 2014; Jiang et al., 2017; Wang et al., 2015). Under category (i), the works on Stack Overflow mainly involve devising methods to find experts to answer questions (Riahi et al., 2012; Yang et al., 2013; Choetkiertikul et al., 2015; Xu et al., 2016; Wang et al., 2017), while for GitHub a user is identified as an expert if (s)he reviews pull-requests and code for repositories (Yu et al., 2016; Rahman et al., 2016). The works under category (ii) largely focus on recommending items to users, without specifying activities to be performed on the items. For example, several works aim to recommend relevant Stack Overflow posts (de Souza et al., 2014; Wang et al., 2015) and Github repositories (Zhang et al., 2014; Jiang et al., 2017) to users.

Our work deviates from the existing works under category (ii) in several ways. Firstly, to the best of our knowledge, Keen2Act constitutes the first work that focuses on recommending not only items but also specific activities under each recommended item. Our approach has also been applied to more than one platform (i.e., GitHub and Stack Overflow).

3. Proposed Approach

3.1. Problem Formulation

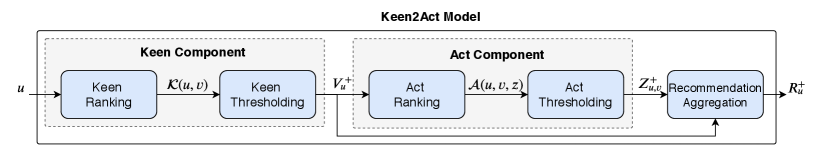

We define the activity recommendation problem as follows: For a given user, which items should (s)he choose and what activities should (s)he perform on those items? The proposed Keen2Act approach addresses this joint item-activity recommendation problem by breaking it down into two sub-problems: the Keen and Act tasks. The former aims to identify the set of items that a user is potentially interested in, while the latter aims to subsequently determine the set of activities to perform on the items of interest. An outline of our proposed Keen2Act model is given in Figure 1.

We first denote a particular user, item, and activity using the notation , and , respectively. We also let , and denote the set of all users, all items, and all activities, respectively. For a given user , the Keen component determines whether an item should be included in the set of items selected by that user, as follows:

| (1) |

where is the Keen ranking score for user-item pair , and is the Keen decision threshold for . Subsequently, the Act component determines whether an activity should be part of the set of activities performed by user on a selected item :

| (2) |

where is the Act ranking score for the tuple , and is the Act decision threshold for .

The main intuition behind the Keen followed by Act steps is that, prior to determining an activity on item , user must be sufficiently keen in item in the first place. When there is a lack of keenness (i.e., ), the user should not perform any activity at all on item . Conversely, only when the user shows a sufficient level of keenness (i.e., ), he/she can proceed with selecting which activities to be performed on item . This two-stage decision process helps not only reduce the search space (by filtering out less relevant items) but also improve the quality of the final item-activity recommendation.

The ranking scores and can each be realized using any machine learning model. In this work, we choose to use a multilinear model called Factorization Machine (FM) (Rendle, 2012), which has shown good performance in a variety of recommendation tasks based on sparse data. It is also worth noting that the decision thresholds and are parameters that are learnable from data and are specific to each item and activity , respectively.

Finally, a Recommendation Aggregation process takes place to combine and in order to arrive at the final list of recommended item-activity pairs. More specifically, for a given user-item-activity triplet , the Recommendation Aggregation process corresponds to a decision function :

| (3) |

where is an indicator function and is an AND logical operator.

3.2. Keen Component

Keen ranking. We consider the problem of identifying the parameters of the Keen model as a learning-to-rank task, and it is thus sensible to use a loss function optimized for ranking. Among the myriad of ranking loss functions, the Weighted Approximately Ranked Pairwise (WARP) loss (Weston et al., 2011) in particular has been shown as a good criterion for recommendation tasks. An appealing trait is that WARP works for data that have only implicit feedback, making it well-suited to our problem. The key idea of WARP is that, for a given positive (observed) example, the remaining negative (unobserved) examples are randomly sampled until we find a pair of positive and negative examples for which the current model incorrectly ranks the negative example higher than the positive one. We can then perform a model update only based on these violating examples.

Adopting WARP to the context of our Keen model, we can write the loss function with respect to the Keen model parameters as:

| (4) |

where transforms the rank of a positive item into a weight. Here the function can be defined as a margin-penalized rank of the following form:

| (5) |

Choosing a transformation function such as would allow the model to optimize for a better Precision@. However, directly minimizing such a loss function via gradient-based algorithms would be computationally intractable, as equation (5) sums over all items, resulting in a slow per-gradient update. We can circumvent this issue by replacing with the following sampled approximation (Weston et al., 2011): we sample items until we find a violation, i.e, , and subsequently estimate the rank, i.e., , by . In addition to the WARP loss, we need an appropriate regularization term to control our model complexity. In this work, we employ L2 regularization, which is differentiable and suitable for gradient-based methods as well. This leads to an overall, regularized ranking loss function :

| (6) |

where is a user-specified -regularization parameter. The term is used to mitigate data overfitting by penalizing large parameter values, thus reducing the model complexity.

Keen thresholding. Once the Keen ranking step is done, we need to determine the appropriate decision thresholds in order to decide whether to include item into . Ideally, we wish to identify a threshold such that the set of selected items matches the set of ground-truth, observed items as close as possible. However, it is difficult to learn the thresholds using such a set matching objective. We therefore relax this via the cross-entropy loss function, and for the Keen model this would be:

| (7) |

where is the cross entropy function, is the sigmoid function, and is an indicator function reflecting the ground truth for whether item belongs to the set of items selected by user .

3.3. Act Component

Act ranking. The ranking loss formulation for the Act model is similar to that of the Keen model except that the former deals with ranking of activities at the level of user-item pair. In particular, the WARP loss function associated with the Act model is given by:

| (8) |

where the rank function is likewise estimated by a sampled approximation . Accordingly, adding the L2 penalty to control the model complexity, the overall regularized WARP loss for the Act model is given by:

| (9) |

where is the L2-regularization parameter.

Act thresholding. Similar to the thresholding in the Keen model, we can estimate the decision threshold of the Act model using the following cross-entropy loss:

| (10) |

where is an indicator function reflecting the ground truth for whether activity belongs to the set of activities carried out by user on a selected item (i.e., ).

3.4. Recommendation Aggregation

The final step in the Keen2Act model is to generate a sorted list of recommended item-activity pairs for a given user , by aggregating the selected item set and chosen activity set as computed by the Keen and Act models respectively. To achieve this, we generate by enumerating the selected items starting from the one with the highest , and then for each selected item, we enumerate the selected activities starting from the highest . This results in a recommendation list whereby the item ranking takes precedence over the activity ranking.

3.5. Learning Procedure

To minimize the ranking losses and as well as threshold losses and , we devise an incremental learning procedure based on a variant of stochastic gradient descent called Adaptive Moment Estimation (Adam) (Kingma and Ba, 2015). Algorithm 1 summarizes the procedure, which takes in the Keen interactions and Act interactions as inputs, and outputs the model parameters and as well as thresholds and .

The overall algorithm consists of two phases: rank learning and threshold learning. In the first phase, we carry out Adam to update the model parameters and by finding a pair of positive and negative examples that violate the desired (Keen or Act) ranking. In the second phase, we also perform Adam update on and by enumerating at the item and activity levels respectively.

4. Experiment

Datasets. We experiment on two public datasets: GitHub (Gousios, 2013) and Stack Overflow222https://archive.org/details/stackexchange. For both datasets, we retrieve active users (i.e., users who have performed at least 10 activities) and their activities performed between October 2013 to March 2015. For GitHub dataset, we obtain the fork and watch activities performed by 33,453 users on over 400k repositories. For Stack Overflow dataset, we retrieve the answer and favorite activities performed by 23,612 users on over 1 million questions. Table 1 summarizes the two datasets.

Features. From the datasets, we construct two sets of features:

-

•

User features: We adapt the co-participation similarity scores introduced in (Lee and Lo, 2017) to measure the level of co-participation between a given user and other users in the platform. For example, we compute the number of times two users co-fork and co-watch a GitHub repository. As such, each user is represented with a count vector with dimensions, where is equal to the number of users in the platform.

-

•

Item features: For each item (i.e., Stack Overflow question and GitHub repository), we compute its TF-IDF vector based on its description tags. Hence, each item is represented with a TD-IDF vector with dimensions, where is equal to the total number of tags used to describe the items.

Baselines. As a few related works perform activity recommendations, we adapt and apply some of the commonly used item recommendation methods to our scenario. Specifically, we compare our model to two variants of Factorization Machine (FM):

- •

-

•

FM_WARP (Weston et al., 2011). This method uses WARP loss to maximize the rank of positive examples by repeatedly sampling negative examples until a rank violation is found.

The user by item-activity interaction matrix, user, and item features are used as input for both baseline methods. All the parameters of baseline methods are empirically set to the optimal values. Besides the baselines, we also test several variants of our model:

-

•

Keen Model. Using only the Keen model, we retrieve a set of items that the user is interested in and recommend the user to perform all activities on the retrieved items.

-

•

Act Model. Using only the Act model, we consider all possible activities for all possible user-item pair and recommend activities which meet the Act threshold.

-

•

Keen2Act. Our full model with both Keen and Act modules.

| GitHub | Stack Overflow | ||

|---|---|---|---|

| #Users | 33,453 | #Users | 23,612 |

| #Repositories | 461,931 | #Questions | 1,020,809 |

| #Fork Activities | 445,084 | #Answer Activities | 860,302 |

| #Watch Activities | 1,730,181 | #Favorite Activities | 544,617 |

Training and testing splits. In all our experiments, we randomly select 80% activities of each user to form the training set and use the remaining activities as testing set. As such, all users are observed in the training set. However, some items might be new in the test set (corresponding to a cold start problem). Note that the user and item features are computed based on the observations in the training set. We repeat this process 5 times, resulting in 5 training-testing splits based on which we evaluate our model.

Evaluation metric. We use the Mean Average Precision at top (MAP@) as a primary metric in our experiments, which is popularly used to evaluate recommendation models (Tsoumakas et al., 2009). We vary from to in order to examine the sensitivity of our model. Note that setting is equivalent to computing MAP.

| Dataset | Model | MAP@5 | MAP@10 | MAP@20 | MAP@50 | MAP |

|---|---|---|---|---|---|---|

| GitHub | FM_BPR | 0.120 | 0.128 | 0.127 | 0.113 | 0.036 |

| FM_WARP | 0.300 | 0.299 | 0.276 | 0.233 | 0.077 | |

| Keen Model | 0.298 | 0.297 | 0.269 | 0.224 | 0.074 | |

| Act Model | 0.250 | 0.243 | 0.232 | 0.219 | 0.058 | |

| Keen2Act | 0.348 | 0.347 | 0.325 | 0.284 | 0.099 | |

| Stack | FM_BPR | 0.103 | 0.109 | 0.108 | 0.100 | 0.033 |

| Overflow | FM_WARP | 0.180 | 0.183 | 0.177 | 0.160 | 0.050 |

| Keen Model | 0.238 | 0.234 | 0.230 | 0.209 | 0.054 | |

| Act Model | 0.191 | 0.191 | 0.188 | 0.186 | 0.053 | |

| Keen2Act | 0.259 | 0.249 | 0.227 | 0.210 | 0.064 |

4.1. Results and Discussion

Table 2 shows the MAP@ results averaged over the 5 runs of our activity recommendation experiments. We observe that Keen2Act consistently outperforms all the other methods. Specifically, Keen2Act outperforms FM_BPR and FM_WARP by 175% and 29% respectively in Stack Overflow, and 94% and 28% respectively in GitHub. As we increase , we also notice deterioration in MAP@ results. This can be attributed to the Precision@ metric favoring a model that outputs a ranked list with the relevant (i.e., observed) item-activity pairs leading the list. That is, as increases, it is likely that more and more irrelevant item-activity pairs would appear in between the relevant item-activity pairs, pushing the Precision@ lower.

Additionally, we can see that the improvement of Keen2Act over the baselines is greater in Stack Overflow than in GitHub. A possible reason is due to the sparsity of user activities. More specifically, GitHub users are observed to perform more activities concentrated on a small set of items (i.e., denser user by item-activity interaction matrix), whereas Stack Overflow users tend to perform fewer activities spread across a large set of items. The denser interaction matrix for GitHub allows the baseline methods to have sufficient observations to achieve competitive results.

Comparing the different variants of our proposed model, we can see that Keen2Act outperforms the Keen model, suggesting that it is inadequate to learn only the item-level interests of the users when recommending activities. Keen2Act also outperforms the Act model, which demonstrates the importance of the Keen step in learning the item-level interests before activity-level interests. It is also interesting to see that the Keen model performs fairly well in comparison to the other methods. We can attribute this to reduced sparsity in the problem space it is operating at, i.e., the Keen model only makes item-level recommendation while the other methods recommend at the activity level. Finally, it is worth noting that the MAP@ scores of various models are generally low, showcasing the complexity of the activity recommendation problem.

5. Conclusion

In this paper, we put forward a new Keen2Act modeling approach to recommending items and multiple type of activity to a user in a step-wise manner. The efficacy of the approach has been demonstrated in experiments using real-world GitHub and Stack Overflow datasets. In the future, we would like to extend Keen2Act using deep representation learning and conduct more comprehensive experiments to benchmark it against more state-of-the-art methods. We also plan to apply Keen2Act to other social platforms as well as consider a larger number of activity types.

References

- (1)

- Allaho and Lee (2014) Mohammad Y Allaho and Wang-Chien Lee. 2014. Increasing the responsiveness of recommended expert collaborators for online open projects. In 23rd ACM International Conference on Conference on Information and Knowledge Management. 749–758.

- Choetkiertikul et al. (2015) Morakot Choetkiertikul, Daniel Avery, Hoa Khanh Dam, Truyen Tran, and Aditya Ghose. 2015. Who will answer my question on Stack Overflow?. In 24th Australasian Software Engineering Conference. 155–164.

- de Souza et al. (2014) Lucas BL de Souza, Eduardo C Campos, and Marcelo de A Maia. 2014. Ranking crowd knowledge to assist software development. In 22nd International Conference on Program Comprehension. 72–82.

- Gousios (2013) Georgios Gousios. 2013. The GHTorent dataset and tool suite. In 10th Working Conference on Mining Software Repositories. 233–236.

- Guendouz et al. (2015) Mohamed Guendouz, Abdelmalek Amine, and Reda Mohamed Hamou. 2015. Recommending relevant GitHub repositories: A collaborative-filtering approach. International Conference on Networking and Advanced Systems.

- Huang et al. (2017) Chaoran Huang, Lina Yao, Xianzhi Wang, Boualem Benatallah, and Quan Z Sheng. 2017. Expert as a service: Software expert recommendation via knowledge domain embeddings in stack overflow. In IEEE International Conference on Web Services. 317–324.

- Jiang et al. (2017) Jyun-Yu Jiang, Pu-Jen Cheng, and Wei Wang. 2017. Open source repository recommendation in social coding. In 40th International ACM SIGIR Conference on Research and Development in Information Retrieval. 1173–1176.

- Kingma and Ba (2015) Diederik P Kingma and Jimmy Ba. 2015. Adam: A method for stochastic optimization. International Conference on Learning Representations.

- Lee and Lo (2017) Roy Ka-Wei Lee and David Lo. 2017. GitHub and Stack Overflow: Analyzing developer interests across multiple social collaborative platforms. In International Conference on Social Informatics. 245–256.

- Rahman et al. (2016) Mohammad Masudur Rahman, Chanchal K Roy, and Jason A Collins. 2016. CORRECT: Code reviewer recommendation in GitHub based on cross-project and technology experience. In 38th International Conference on Software Engineering Companion. 222–231.

- Rendle (2012) Steffen Rendle. 2012. Factorization machines with libFM. ACM Transactions on Intelligent Systems and Technology 3, 3 (2012), 1–22.

- Rendle et al. (2009) Steffen Rendle, Christoph Freudenthaler, Zeno Gantner, and Lars Schmidt-Thieme. 2009. BPR: Bayesian personalized ranking from implicit feedback. In 25th Conference on Uncertainty in Artificial Intelligence. 452–461.

- Riahi et al. (2012) Fatemeh Riahi, Zainab Zolaktaf, Mahdi Shafiei, and Evangelos Milios. 2012. Finding expert users in community question answering. In 21st International Conference on World Wide Web. 791–798.

- Tsoumakas et al. (2009) Grigorios Tsoumakas, Ioannis Katakis, and Ioannis Vlahavas. 2009. Mining multi-label data. In Data Mining and Knowledge Discovery Handbook. Springer, 667–685.

- Wang et al. (2017) Jian Wang, Jiqing Sun, Hongfei Lin, Hualei Dong, and Shaowu Zhang. 2017. Convolutional neural networks for expert recommendation in community question answering. Science China Information Sciences 60, 11 (2017), 110102.

- Wang et al. (2016) Lin Wang, Bin Wu, Juan Yang, and Shuang Peng. 2016. Personalized recommendation for new questions in community question answering. In IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining. 901–908.

- Wang et al. (2015) Wei Wang, Haroon Malik, and Michael W Godfrey. 2015. Recommending posts concerning API issues in developer Q&A sites. In IEEE/ACM 12th Working Conference on Mining Software Repositories. 224–234.

- Weston et al. (2011) Jason Weston, Samy Bengio, and Nicolas Usunier. 2011. WSABIE: Scaling up to large vocabulary image annotation. In 22nd International Joint Conference on Artificial Intelligence.

- Xu et al. (2016) Congfu Xu, Xin Wang, and Yunhui Guo. 2016. Collaborative expert recommendation for community-based question answering. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases. 378–393.

- Yang et al. (2013) Liu Yang, Minghui Qiu, Swapna Gottipati, Feida Zhu, Jing Jiang, Huiping Sun, and Zhong Chen. 2013. CQARank: Jointly model topics and expertise in community question answering. In 22nd ACM international conference on Information & Knowledge Management. 99–108.

- Yu et al. (2016) Yue Yu, Huaimin Wang, Gang Yin, and Tao Wang. 2016. Reviewer recommendation for pull-requests in GitHub: What can we learn from code review and bug assignment? Information and Software Technology 74 (2016), 204–218.

- Zhang et al. (2014) Lingxiao Zhang, Yanzhen Zou, Bing Xie, and Zixiao Zhu. 2014. Recommending relevant projects via user behaviour: an exploratory study on GitHub. In 1st International Workshop on Crowd-based Software Development Methods and Technologies. 25–30.