Joint Training and Reflection Pattern Optimization for Non-Ideal RIS-Aided Multiuser Systems

Abstract

Reconfigurable intelligent surface (RIS) is a promising technique to improve the performance of future wireless communication systems at low energy consumption. To reap the potential benefits of RIS-aided beamforming, it is vital to enhance the accuracy of channel estimation. In this paper, we consider an RIS-aided multiuser system with non-ideal reflecting elements, each of which has a phase-dependent reflecting amplitude, and we aim to minimize the mean-squared error (MSE) of the channel estimation by jointly optimizing the training signals at the user equipments (UEs) and the reflection pattern at the RIS. As examples the least squares (LS) and linear minimum MSE (LMMSE) estimators are considered. The considered problems do not admit simple solution mainly due to the complicated constraints pertaining to the non-ideal RIS reflecting elements. As far as the LS criterion is concerned, we tackle this difficulty by first proving the optimality of orthogonal training symbols and then propose a majorization-minimization (MM)-based iterative method to design the reflection pattern, where a semi-closed form solution is obtained in each iteration. As for the LMMSE criterion, we address the joint training and reflection pattern optimization problem with an MM-based alternating algorithm, where a closed-form solution to the training symbols and a semi-closed form solution to the RIS reflecting coefficients are derived, respectively. Furthermore, an acceleration scheme is proposed to improve the convergence rate of the proposed MM algorithms. Finally, simulation results demonstrate the performance advantages of our proposed joint training and reflection pattern designs.

Index Terms:

Reconfigurable intelligent surface (RIS), channel estimation, least squares (LS), linear minimum mean-squared error (LMMSE), reflection pattern, majorization-minimization (MM).I Introduction

The deployment of reconfigurable intelligent surfaces (RISs) has drawn a lot of attention as a cost-effective promising solution for wireless communication networks [1, 2, 3, 4, 5, 6, 7, 8]. An RIS usually consists of a large number of low-cost passive adjustable reflecting elements, each of which can be independently controlled to adjust the amplitude and/or the phase of the reflected signals such that the wireless transmission channels are sculpted to fulfill various design goals.

Recently, considerable innovative contributions have been devoted to optimize RIS-aided wireless communications. The joint optimization of the transmit beamforming at the transmitter and the reflection beamforming at the RIS has been studied to maximize the received signal-to-noise ratio (SNR) [9] and to minimize the total transmit power [10] for a single-user RIS-assisted multiple-input single-output (MISO) system. In [11] and [12], the authors considered maximizing the sum rate and the energy efficiency for a downlink RIS-aided multiuser MISO system, respectively. The secrecy rate maximization problem for RIS-assisted multi-antenna communications has been studied in [13, 14] from the physical-layer security perspective. In [15], the authors studied the sum rate maximization problem for RIS-aided full-duplex communications [16, 17]. Moreover, practical low-resolution RIS phase shifts were further considered in single-user [18] and multiuser [19] systems. In [20], the received SNR was maximized for an RIS-aided single-user system by considering the impact of practical transceiver hardware impairments. Different from the above works focusing on flat-fading channels, an RIS-aided orthogonal frequency division multiplexing (OFDM) system over frequency-selective channels was studied in [21, 22, 23, 24]. As revealed in these works, an RIS is proved beneficial for improving the performance of wireless communications.

To fully achieve the benefits of RIS-aided communications, it is necessary to obtain accurate channel state information (CSI), which, however, turns out to be technically challenging [25], since an RIS cannot transmit or receive pilots to assist the channel estimation. To overcome this difficulty, a cascaded channel estimation method was investigated in [26, 27, 28, 30, 29, 31, 32], which only requires pilot signals at the transmitter. Concretely, an “on-off” reflection pattern design, which turns on one RIS element at a time, was first developed in [26] for the cascaded channel estimation. Subsequently, it is found that the channel estimation performance of RIS-aided systems can be enhanced by turning on all the RIS elements during the training phase and configuring the reflection pattern appropriately. In this regard, the authors of [27, 28] optimized the RIS reflection pattern to minimize the mean-squared error (MSE) of the least squares (LS) channel estimator. By exploiting the channel statistics knowledge in RIS-aided systems, the authors of [29] demonstrated that the linear minimum MSE (LMMSE) channel estimator can achieve a better MSE performance. Then, in [30], the joint optimization of the training sequence at the transmitter and the reflection pattern at the RIS was analyzed for the LMMSE estimator. In addition, the channel sparsity was exploited in [31] to assist the cascaded channel estimation. In [32], a more complex RIS-aided multi-cell system was considered and the corresponding CSI acquisition method was investigated. On the other hand, the dimension of the cascaded channel in RIS-aided systems could be quite high due to the large number of RIS reflecting elements, thus leading to excessive training overhead. Several efforts have been made to address this issue [24, 32, 33, 34, 35]. For example, the authors of [24] proposed a group-wise channel estimation method, by partitioning all the RIS elements into several groups, where each group consists of a set of neighboring RIS elements sharing a common reflection coefficient, thus reducing the effective number of RIS elements and the training overhead. A novel two-timescale CSI-based protocol was recently proposed [34, 35], where the beamforming at the base station (BS) was designed based on the instantaneous CSI while the reflection coefficients at the RIS were optimized based on the slow time-varying long-term CSI. As a result, given a fixed RIS reflection pattern that remains unchanged within several coherence blocks, the dimension of the instantaneous channel that needs to be estimated in each block is independent of the number of RIS elements, so that the training overhead can be significantly reduced.

Most of the existing works on the channel estimation for RIS-assisted systems assume unit amplitude signal reflection, i.e., the magnitude of the reflection coefficient of each RIS element is always one, regardless of the phase shift, so as to maximize the reflected signal power. However, it was revealed in [36, 37, 38, 39] that such assumption is actually ideal and the magnitude of the reflection coefficient of a practical RIS element is dependent on the reflection phase [40]. In other words, one cannot adjust the reflection phase while maintaining the unit reflection magnitude at the same time.

Motivated by the above facts, we investigate the joint training and reflection pattern design for channel estimation in an RIS-aided multiuser system considering the LS and LMMSE channel estimators, by explicitly taking into account the characteristics of non-ideal RIS elements. In such cases, most methods developed in prior related works, e.g., in [27, 28, 30], that rely on an ideal RIS with unit-modulus reflection coefficient cannot be applied to the considered problems. In fact, the considered phase-dependent amplitude configuration makes the corresponding optimization nontrivial and challenging. To tackle these challenges, our main contributions are summarized as follows:

-

•

As for the LS channel estimator, we reveal that the optimal training sequences at the user equipments (UEs) are independent of the reflection pattern at the RIS and are orthogonal to each other. Then, we develop a majorization-minimization (MM)-based iterative algorithm to address the reflection pattern design, where a semi-closed form solution is obtained in each iteration.

-

•

As for the LMMSE channel estimator, the corresponding optimization problem turns out to be more difficult to solve. To obtain a tractable and also high-quality solution, we optimize the pilots and the reflection pattern in an alternating way. In particular, by invoking the MM technique, we obtain a closed-form solution and a semi-closed form solution for the pilot sequence and the reflection pattern, respectively.

-

•

The convergence of the two proposed joint training and reflection pattern optimization designs is theoretically proved. Furthermore, we propose an accelerated strategy for the developed algorithms in order to significantly reduce the number of iterations required for reaching the convergence and reduce the overall computational complexity.

The rest of this paper is organized as follows. In Section II, the RIS-aided multiuser communication system and the corresponding LS and LMMSE channel estimation methods are introduced. Section III and Section IV present the proposed MM-based joint training and reflection pattern designs for the LS and LMMSE channel estimators, respectively. In Section V, we propose an acceleration scheme to improve the convergence rate of the proposed MM algorithms. Simulation results are shown in Section VI. Finally, conclusions are provided in Section VII.

Notations: Vectors and matrices are denoted by boldface lower-case and boldface upper-case letters, respectively. and denote the sets of real and complex numbers, respectively. The superscripts , , and denote the transpose, the conjugate, and the conjugate transpose operations, respectively. and denote the Frobenius norm and the trace of a matrix, respectively. denotes the norm of a vector. , , and return the modulus, the phase, and the real part of the input complex number, respectively. and stand for the Kronecker product and the Hadamard product, respectively. returns the submatrix formed by the elements from the -th to the -th rows and from the -th to the -th columns of the input matrix. is the vectorization operation. represents the expectation operation. means that the vector follows a circularly symmetric complex Gaussian distribution with mean and covariance matrix . returns a diagonal matrix with diagonal elements being the entries of the input vector. denotes the identity matrix of size . returns the largest eigenvalue of matrix . denotes the big-O computational complexity notation.

II System Model and Problem Formulation

In this section, we first describe the model and the channel estimation criteria of the considered RIS-assisted multiuser MISO system, and then introduce the problem formulation for the joint design of the uplink training symbols and the RIS reflection coefficients.

II-A System Model

We consider the RIS-assisted uplink multiuser wireless communication system in Fig. 1, which consists of a BS with receive antennas, an RIS with reflecting elements, and single-antenna UEs. Let , , denote the channel between the RIS and the BS, the channel between the -th UE and the RIS, and the channel between the -th UE and the BS, respectively, which are assumed to follow the Rayleigh fading model. Denote the stacked channels of the UEs-RIS link and the UEs-BS link by and , respectively. Moreover, the reflection coefficient matrix at the non-ideal RIS is denoted by , with denoting the reflection coefficient of the -th element at the RIS.

To characterize the RIS phase shifts, we utilize the parallel resonant circuit-based model considered in [40]. More specifically, the equivalent impedance of the -th reflecting element, , is expressed as

| (1) |

where , , , and stand for the effective capacitance, the effective resistance, the bottom layer inductance, and the top layer inductance of the parallel resonant circuit, respectively, and represents the angular frequency of the incident signal. Then, the reflection coefficient of the -th element of the RIS is given by

| (2) |

where and stand for the reflection amplitude and the phase shift, respectively, and denotes the free space impedance. Different from the commonly used unit modulus phase shift model, the amplitude and the phase of in (2) are coupled. Specifically, according to [40], the reflection amplitude of the -th element can be expressed as the following function of the corresponding phase shift :

| (3) |

where the values of the constants , , and depend on the specific circuit parameters in (1). Fig. 2 illustrates an exemplified behaviour of the model in (3), where and .

II-B Cascaded Channel Estimation

Let us focus on the channel estimation for the considered system. An RIS cannot transmit training signals or perform channel estimation. Therefore, we cannot estimate or directly. Nevertheless, it is possible to estimate the cascaded channel of the reflected link at the BS.

We consider a block fading channel model where each block is divided into training-based channel estimation and data transmission stages. By adopting the channel estimation protocol in [28, 31], as shown in Fig. 3 at the top of the next page, we further divide the training stage into subframes, each of which consists of symbols, where and . The RIS coefficients are kept fixed within each subframe and vary for different subframes. The users transmit the same training signals periodically in different subframes. Mathematically, in the -th subframe, , the received signals of consecutive symbols at the BS, denoted by , is given by

| (4) |

where represents the fixed RIS reflection coefficients in subframe and denotes the stacked training symbols of UEs, which is expressed as

| (5) |

Here, stands for the training symbols of the -th UE, . In addition, is the additive Gaussian white noise matrix in the -th subframe, and and stand for the -th column of and , respectively. To proceed, by setting and defining

| (6) |

the received signal in (II-B) becomes

| (7) |

where and is the equivalent channel to be estimated in this work, which consists of the direct and cascaded reflected channels. Furthermore, by gathering the received signal of all the subframes, we obtain

| (8) |

where and denote the received signals and the noises of the whole training phase, respectively, and is relevant to both the training sequence and the reflection pattern, which is expressed as

| (9) |

Define the reflection pattern of subframes at the RIS by

| (10) |

each column of which represents the reflection pattern of the corresponding subframe. Then, based on the definitions in (9) and (10), we obtain the following expression for

| (11) |

where and are of size and , respectively.

The goal of channel estimation for the considered RIS-aided multiuser system is to recover the channel matrix based on the knowledge of the received signal matrix and a properly designed matrix . From (8), we obtain the LS and the LMMSE estimates of as follows [41]:

| (12) | ||||

| (13) |

where denotes the pseudoinverse of , is the correlation matrix of , and denotes the noise variance. The estimation errors corresponding to (12) and (13) are given by

| (14) | ||||

| (15) |

II-C Problem Formulation

In this paper, we aim to design the matrix , i.e., the training sequence at the UE side and the reflection coefficient at the non-ideal RIS, such that the channel estimation MSEs or are minimized. The considered joint optimization problem is formulated as

| subject to | ||||

| (16) |

where is given by (14) for the LS estimator and by (15) for the LMMSE estimator. stands for the transmit power constraint of UE and is the corresponding power budget. means that the entry satisfies the phase shift constraint given in (3). follows from the definition .

Solving the problem in (II-C) is difficult due to the coupled variables and in the objective function and the realistic constraint for the reflection coefficient. We develop efficient algorithms to tackle the problems for the LS and LMMSE channel estimators in the following two sections, respectively.

Remark 1

When considering multi-antenna UEs, we define as , where denotes the pilot matrix sent by the -th UE equipped with antennas and . The optimization problem can be formulated similarly, where the dimensions of , , , and need to be adjusted, and the methods proposed in the following sections can be modified and applied accordingly.

III LS Channel Estimation

In this section, we focus on the optimization problem in (II-C) for the LS channel estimator. Firstly, we prove that the optimal training matrix is independent of the reflection pattern and has an interesting orthogonal property. Then, we propose an efficient MM-based algorithm to optimize the RIS reflection pattern under the considered circuit model for the RIS element, where a semi-closed form solution is derived in each iteration.

III-A Closed-Form Optimal Training Matrix

Although the training matrix and the reflection pattern are coupled in the objective function in problem (II-C), as stated in the following theorem, we can determine the optimal training matrix in a closed form for the LS channel estimator.

Theorem 1

The optimal training matrix for the LS channel estimator of the considered non-ideal RIS-assisted system satisfies the condition:

| (17) |

Proof:

See Appendix A. ∎

It follows from Theorem 1 that any orthogonal training matrix is optimal for the LS channel estimator, which conforms to the results in MIMO communications[41] and ideal RIS-assisted communication systems[27]. Herein, we adopt a simple discrete Fourier transform (DFT)-based training matrix for estimating the uplink multiuser channel. Specifically, the training sequence of each UE is expressed as

| (18) |

where denotes the -th column of the DFT matrix.

III-B Optimization of RIS Reflection Pattern

In this subsection, we consider the optimization with respect to the RIS reflection pattern under the considered non-ideal phase shift constraint, which, according to the proof of Theorem 1, takes the form:

| subject to | ||||

| (19) |

Although the variable has been eliminated, it is still nontrivial to find the optimal solution of problem (III-B) mainly due to the intricate phase shift constraint. In fact, although it has been proved in [27] that the optimal reflection pattern minimizing the MSE of the LS channel estimator is an orthogonal matrix, e.g., the DFT matrix or the Hadamard matrix, for ideal unit-modulus RIS phase shifts, this conclusion does not necessarily hold for the non-ideal phase shift constraints considered in this work. Hence, to design the non-ideal RIS reflection pattern for the LS channel estimator, we develop an MM-based algorithm.

The basic idea of the MM algorithm is to find a series of simple surrogate problems whose objective functions are locally approximated to the original objective function in each iteration, and then iteratively solve the surrogate problems until convergence [42]. To do this, we first derive an appropriate surrogate function with a more tractable form to locally approximate the objective function of problem (III-B), as given in the following proposition.

Proposition 1

For the objective function , a surrogate upper bound for the -th iteration is

| (20) |

where denotes the solution to in the -th iteration of the MM algorithm, and , , and are defined as follows:

| (21) |

Proof:

See Appendix B. ∎

The major advantage of constructing the surrogate function in (20) is that the intractable inversion operation in the objective function of (III-B) is removed and we can perform the minimization of (20) by optimizing each entry of simultaneously. Specifically, replacing the objective function in (III-B) with , we obtain the following problem

| subject to | ||||

| (22) |

In particular, we show that the optimal solution to problem (III-B) can be determined in an element-wise manner.

Proposition 2

The element-wise optimal solution of problem (III-B) in the -th iteration is obtained by

| (23) |

where and is given by

| (24) |

with . The minimization of can be obtained by performing a one-dimensional search over .

Proof:

See Appendix C. ∎

With the solution of the RIS reflection pattern given in (23), the proposed MM-based algorithm for the LS channel estimator is summarized in Algorithm 1. Moreover, we show the convergence of the MM algorithm in the following theorem.

Theorem 2

The proposed MM algorithm always converges to a stationary point of the problem in (III-B).

Proof:

See Appendix D. ∎

Remark 2

In Proposition 2, we address the element-wise optimization of by performing the one-dimensional search over based on the equivalent model in (3). In fact, the reflection coefficient can be modeled by the formulation in (2) directly, and the optimization of can also be obtained by searching for the effective capacitance .

IV LMMSE Channel Estimation

In this section, we address the optimization problem in (II-C) for the LMMSE channel estimator, which can be applied if the channel correlation matrix is known. To begin with, we utilize the matrix inversion lemma [43] and transform as follows:

| (25) |

As a result, the corresponding MSE minimization problem is equivalently reformulated as

| subject to | ||||

| (26) |

Compared to the LS channel estimator, the objective function of problem (IV), denoted as , is more complex, thus making it harder to optimize and separately. Hence, in order to address the considered problem, we propose an MM-based alternating algorithm.

We first construct a tractable surrogate function, as shown in the subsequent lemma, to iteratively upper bound .

Lemma 1

For problem (IV), is upper bounded by given as follows:

| (27) |

where and is an arbitrary feasible solution to .

Proof:

See Appendix E. ∎

We observe that in (1) only consists of a quadratic term and a linear term with respect to , which makes it much easier to be handled than the original . Based on Lemma 1, we address the minimization of by iteratively minimizing , where the variables and are optimized in an alternating manner.

IV-A Optimization of the Training Matrix

We first perform the optimization of with a fixed . Substituting into and omitting the constant terms, the training sequence design under the per-UE transmitter power constraints is formulated as

| subject to | (28) |

where and represents the fixed reflection pattern. Problem (IV-A) can be formulated into a convex problem with respect to the block-diagonal matrix , as follows:

| subject to | ||||

| (29) |

and then solved. However, directly solving (IV-A) can be computationally inefficient due to the large dimension of . Therefore, we propose an MM-based solution for problem (IV-A), which only requires calculating closed-form expressions iteratively and results in a lower computational complexity.

Specifically, we employ the following upper bound to the first term in the objective function, according to [42, eq. (26)]:

| (30) |

where and is the solution of in the previous iteration. For simplicity, the value of can be set to .

By substituting (IV-A) and removing the constant terms, we transform problem (IV-A) into

| subject to | (31) |

where . By invoking the definition in (5), we can readily decompose the problem in (IV-A) into subproblems, with respect to the training sequence design at each UE. Concretely, each subproblem is given by

| subject to | (32) |

where . Note that (IV-A) is a convex quadratic optimization problem which can be readily tackled. In fact, there exists a closed-form optimal solution as shown in the following proposition.

Proposition 3

The optimal solution to problem (IV-A) can be expressed in a closed form as follows:

| (33) |

Proof:

See Appendix F. ∎

By invoking (33) for all the UEs, we accomplish the optimization of with a low computational cost.

IV-B Optimization of the RIS Reflection Pattern

Now we fix and focus on the optimization of the RIS reflection pattern. According to the upper bound in (1), we formulate the subproblem with respect to as

| subject to | ||||

| (34) |

Similar to the optimization of the training matrix tackled in the previous subsection, by employing the upper bound given in [42, eq. (26)], we obtain

| (35) |

and transform problem (IV-B) into

| subject to | ||||

| (36) |

where , is the solution of in the previous iteration, and is calculated as .

By substituting into the objective function of the problem in (IV-B), we obtain , where . Hence, we can solve problem (IV-B) in an element-wise manner. In particular, the solution to each entry of in the -th iteration can be obtained according to

| (37) |

where is derived by performing a one-dimensional search and is calculated by substituting the equivalent phase shift model in (3) into , whose expression is given by

| (38) |

Based on the two proposed solutions, the LMMSE channel estimation algorithm is summarized in Algorithm 2. Regarding the convergence, it can be verified that the algorithm generates a non-increasing sequence based on the alternating optimization method that is utilized. Moreover, the objective value of the problem in (IV) has a finite lower bound. Therefore, Algorithm 2 always converges.

V Accelerated MM Algorithm

When utilizing MM algorithms, the convergence speed usually depends on the tightness of the majorization functions. In the previous sections, in order to solve the channel estimation problems and reduce the computational complexity, the coefficients of the quadratic term, e.g., , in the proposed majorization functions were relaxed and the original objective function was majorized twice successively for the LMMSE estimation. These operations result in a slow convergence speed of the MM method due to the loose surrogate function.

In this section, we employ the squared iterative method (SQUAREM) [44] to accelerate the convergence of the proposed MM-based algorithms. SQUAREM was originally proposed in [44] to accelerate the convergence speed of the expectation-maximization (EM) algorithms. Since MM is a generalization of EM [42], SQUAREM can also be easily applied to MM algorithms [45, 46].

Without loss of generality, we focus on the acceleration of Algorithm 1 for the LS channel estimation problem. The acceleration schemes of Algorithm 2 for the LMMSE channel estimator can be obtained in a similar way. Specifically, given denoting the solution obtained in the -th iteration, we denote the update of in step 5 of Algorithm 1 by Then, the proposed SQUAREM-based accelerated MM scheme is given in Algorithm 3. Note that the updated variable in step 9 may violate the constraints, i.e., , which needs to be projected back to the feasible region (denoted by ). For the element-wise practical circuit model constraint of , the projection can be readily addressed by adjusting the reflection amplitude of each element according to its phase shift based on the equivalent relationship in (3). Moreover, the step length is chosen based on the Cauchy-Barzilai-Borwein (CBB) method, and a back-tracking based strategy is adopted to guarantee the monotonicity of the algorithm.

For the optimization of , a similar approach as Algorithm 3 can be applied, while only changing the projection function in step 9. Specifically, the projection of onto the per-UE transmit power constraint set can be expressed as where .

VI Simulation Results and Complexity Analysis

VI-A Simulation Setup

In this section, the performance of the proposed channel estimation algorithms is evaluated via numerical simulations. Under practical size limitations, the distance between adjacent elements at the RIS cannot be excessively large. Hence, the channels of the RIS elements are also usually spatially correlated [47]. We denote the spatial correlation matrices at the UEs, RIS, and BS by where stands for the spatial correlation coefficient. The correlation coefficients at the UEs, RIS, and BS are denoted as , , and , respectively. The expression of the cascaded channel correlation matrix for the LMMSE estimator is given in Appendix G. The number of training subframes and the number of training symbols in each subframe are set to and , respectively. The noise variance is normalized and the system SNR is defined as , where is identical for all the UEs for simplicity. The parameters in (3) for modeling the practical phase shift constraints are set according to [40]. The simulation parameters are given in Table I unless otherwise specified.

| Notation | Parameter | Value |

| Number of UEs | 4 | |

| Number of RIS reflecting elements | 20 | |

| Number of BS antennas | 16 | |

| Correlation coefficient at the UEs | 0.2 | |

| Correlation coefficient at the RIS | 0.4 | |

| Correlation coefficient at the BS | 0.6 | |

| Minimum reflection amplitude in (3) | 0.2 | |

| Steepness of the function curve in (3) | 2.0 | |

| Horizontal distance between and in (3) | ||

| Algorithm convergence accuracy |

VI-B Normalized MSE Performance Comparisons

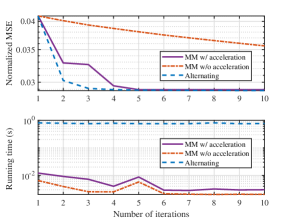

We first validate the effectiveness of the utilized MM-based algorithms. We show the normalized MSE, i.e., , of the LS channel estimation algorithm with respect to the number of iterations in the first subfigure of Fig. 4. The alternating optimization method proposed in [48] is also used to solve problem (III-B) for comparison, which optimizes each element of in an alternating manner, by performing a one-dimensional search with the other variables being fixed. It is found that the convergence speed of the MM algorithm is significantly enhanced by utilizing the proposed acceleration scheme, and the accelerated MM algorithm achieves almost the same MSE performance as the alternating scheme with a similar convergence speed. Moreover, as shown in the second subfigure, the MM-based algorithm has a much shorter running time than the alternating scheme.

For performance comparisons, we consider the following baseline schemes:

VI-B1 Ideal RIS

This corresponds to an ideal RIS, where the amplitude of the reflection coefficient of each element is fixed to while the phase shift can take any values from to . In this case, the solution to in each iteration becomes

| (39) |

for the LS channel estimator, and becomes

| (40) |

for the LMMSE channel estimator. The training matrix is determined via the proposed solutions, i.e., an orthogonal is used for the LS channel estimator and an alternating optimization algorithm based on (40) and Algorithm 2 is utilized for the LMMSE channel estimator.

VI-B2 Ideal RIS projection

This corresponds to projecting the reflection coefficients obtained by the “Ideal RIS” strategy onto the practical constraints in (3), i.e., where is the projected reflection coefficient and denotes the phase shift of the “Ideal RIS” scheme. The training symbols are also obtained utilizing the proposed algorithms.

VI-B3 Naive scheme

This corresponds to computing an orthogonal training matrix according to (18), and to deriving a reflection pattern by projecting each entry of a DFT matrix onto the practical constraints in (3), i.e., where denotes the phase shift of the -th entry of the considered DFT matrix. This naive scheme avoids the optimization process, and it is also utilized as the initial point for the proposed iterative algorithms.

VI-B4 On-off scheme

This corresponds to turning one RIS element with unit amplitude reflection coefficient and to estimating the associated effective channel at a time [26].

Fig. 5 shows the channel estimation error of the LS estimator versus the SNR. It is seen that the normalized MSEs of all the schemes decrease when increasing the SNR. Compared to the “On-off scheme”, the other schemes, where all the RIS elements are turned on during the training phase and the reflection pattern is appropriately configured, achieve much better channel estimation performances. Moreover, the proposed scheme outperforms the “Ideal RIS projection” scheme, since the proposed scheme optimizes the reflection coefficients of the RIS by incorporating the practical phase shift model directly, while the “Ideal RIS projection” scheme cannot guarantee any optimality when the practical phase shift model is considered. On the other hand, we observe that the performance achieved by “Ideal RIS projection” and “Naive scheme” is similar at various SNRs. This is because a DFT-based reflection pattern is already optimal for the LS channel estimator under the ideal unit-modulus coefficient constraints [27]. Fig. 6 illustrates the impact of for the practical phase shift model. As decreases, the phase shift model in (3) deviates from the ideal one, so that the channel estimation performance degrades. Additionally, when increases, the performance gap between the “Ideal RIS projection” and “Proposed scheme” becomes smaller, since the considered phase shift model approaches the ideal one for large .

Fig. 7 evaluates the estimation error of the LMMSE channel estimator, from which similar conclusions can be drawn as in Fig. 5, except that there exists a performance gap between the “Ideal RIS projection” and “Naive scheme” in Fig. 7. This is due to the fact that, in contrast to the LS criterion, a DFT-based orthogonal reflection pattern is no longer optimal for the LMMSE channel estimator even under the ideal unit-modulus coefficient constraint.

Finally, we compare the normalized MSE performance of the LS and LMMSE channel estimators for the considered RIS-aided multiuser system, as illustrated in Fig. 8 and Fig. 9. We observe that, by using some prior knowledge of the channel correlation, the LMMSE channel estimator is capable of achieving a lower MSE than the LS estimator. In particular, compared to the ideal phase shift model, the performance gap between the LS and LMMSE channel estimators becomes larger when considering the non-ideal phase shift model (see Appendix H for a more detailed explanation). In addition, we find from Fig. 9 that the normalized MSE of the considered system decreases when increasing the number of RIS reflecting elements. This is because the reflection pattern is well designed and the RIS can be fully exploited, which, however, requires a longer pilot sequence and leads to a higher training overhead. Finally, a more notable performance gap between the LMMSE and LS channel estimators can be observed when the number of RIS reflecting elements is relatively small.

VI-C Impact of Channel Estimation Training Overhead

In this subsection, we evaluate the impact of the channel estimation training overhead. Without loss of generality, we consider a block fading channel model where the instantaneous CSI remains static within each coherence block of slots and the long-term CSI, i.e., the channel correlation, remains unchanged during coherence blocks. For comparisons, we consider two low-overhead schemes: the grouping scheme [24] and the two-timescale scheme [34, 35]. In the former scheme, neighboring RIS elements are grouped, and they share a common reflection coefficient. Accordingly, the channel estimator provides estimates for the combined channel of each group. In this way, the effective dimension of the RIS is reduced to . In the latter scheme, the reflection pattern at the RIS is designed and fixed within coherence blocks and the effective instantaneous BS-UE channel is estimated in each coherence block, with training overhead , and then used for BS beamforming. We summarize and compare the training overhead and the implementation cost of these schemes in Table II. It can be seen that the training overhead of the proposed method can be reduced via the RIS element grouping and the two-timescale scheme has the lowest training overhead and implementation cost.

| Scheme | Estimated channel | Training overhead | Implementation cost of configuring the reflection pattern |

|---|---|---|---|

| Proposed w/o grouping | Training: Calculated once every blocks; Adjusted once every slots | ||

| Proposed w/ grouping | Transmission: Calculated once and kept fixed within each coherence block | ||

| Two-timescale | Calculated once and kept fixed within coherence blocks |

Next, we compare the average transmission rate of the schemes in Table II. Note that the two-timescale design for a multiuser system in the presence of spatially correlated channels and a non-ideal phase-shift response is very challenging to analyze and, to the best of the authors’ knowledge, it has not been studied in the existing literature. Hence, we focus on a single-UE scenario for this performance comparison. Specifically, we denote the BS-RIS channel, the RIS-UE channel, and the BS-UE channel in the -th coherence block by , , and , respectively. According to [34, Section III], the optimization of the phase shift matrix based on long-term statistics is formulated by

| subject to | (41) |

where the expectation is taken over the instantaneous CSI . By noting and exploiting the first-order Taylor expansion: we address problem (VI-C) by iteratively maximizing under the phase-shift constraint in (3), where denotes the solution obtained in the previous iteration. In each iteration, the problem has an element-wise optimal solution , where is obtained by performing a one-dimensional search over to maximize . After determining the optimal , which is kept fixed within coherence blocks, a pilot symbol is transmitted from the UE to estimate the instantaneous effective BS-UE channel in each coherence block, and, subsequently, the maximum ratio transmission strategy is utilized at the BS for data transmission. As a result, the average transmission rate during coherence blocks is given by , where denotes the transmission rate in the -th coherence block. As for the proposed scheme, in each coherence block a pilot sequence of length is transmitted from the UE to estimate the cascaded channel, by using the proposed channel estimation method, based on which the reflection coefficient matrix at the RIS and the beamforming at the BS are optimized using the method proposed in [40] for data transmission. Denoting the resulting transmission rate in the -th coherence block by , the average rate of the proposed scheme is given by .

The average transmission rates of the schemes in Table II are compared in Fig. 10, where , , and the SNRs for channel estimation and data transmission are both set to 5 dB. It is seen that, compared to the two-timescale scheme, the proposed scheme achieves a higher rate when is relatively small, since the RIS reflection matrix in the two-timescale scheme is predetermined and fixed within blocks while the proposed scheme adjusts the RIS reflection matrix based on the instantaneous CSI. By increasing , the rate of the proposed scheme without grouping first increases and then decreases, and finally becomes worse than that of the two-timescale scheme, which is due to the larger training overhead. Nevertheless, the training overhead of the proposed scheme can be reduced by utilizing the grouping scheme. In particular, the larger , the better the grouping scheme.

VI-D Complexity Analysis

As for the LS channel estimator, is iteratively updated by employing Algorithm 1. In each iteration, the main computational complexity lies in calculating the surrogate function in (20). The associated complexity is and in terms of matrix multiplications and matrix inversions, respectively, and in terms of performing the element-wise one-dimensional search for elements according to (23), where denotes the complexity of the one-dimensional search. Hence, the overall complexity of the LS channel estimator is , where denotes the number of iterations required for convergence. As for the LMMSE channel estimator, the matrices and need to be updated in each iteration of Algorithm 2. The process of updating involves the calculation of matrix multiplications, with complexity , and matrix inversions, with complexity , as well as the largest eigenvalue of a matrix, which can be handled via the efficient power iteration method [49] with complexity . Hence, the total complexity of updating is . The update of has an additional computational cost of because of the element-wise phase search. Hence, the overall complexity of the LMMSE channel estimator is given by , where denotes the number of iterations required for convergence. Considering a typical setup, where and , the total complexities of the LS and the LMMSE channel estimation schemes are and , respectively. Hence, the LMMSE channel estimator has a higher computational complexity than the LS channel estimator.

VII Conclusion

A joint design for training symbols and reflection pattern for RIS-assisted multiuser communication systems with a realistic phase-amplitude reflection model was investigated in this paper. We considered the MSE minimization problem for both LS and MMSE channel estimators, subject to the transmit power constraint at the UEs and a practical phase shift model at the RIS. For the LS criterion, we proved the optimality of the orthogonal training signals and developed an MM-based algorithm to address the reflection pattern design with a semi-closed form solution in each iteration. As for the LMMSE criterion, we proposed to iteratively optimize the training symbols and the reflection pattern, whose optimal solutions in each iteration were obtained in a closed form and a semi-closed form, respectively. The SQUAREM method was further utilized to accelerate the convergence speed of the proposed MM algorithms. Simulation results confirmed that the proposed design can effectively improve the channel estimation performance of RIS-aided channel in the presence of practical phase-amplitude reflection models. In addition, compared to the two-timescale design scheme, the proposed instantaneous channel estimation-based scheme demonstrates superior transmission rates for a low-to-medium size of the RIS, while a grouping strategy is needed when considering a large-size RIS.

Appendix A Proof of Theorem 1

Based on the expression of in (11), we have

| (42) |

Substituting (42) into , we have

| (43) |

Therefore, given an arbitrary reflection pattern , the optimization of amounts to

| subject to | (44) |

where is independent of . It can be readily shown by contradiction that the inequality constraints in (A) must be active at the optimality. Moreover, the optimal solution must have orthogonal rows. Hence, we obtain (17) and the proof is completed.

Appendix B Proof of Proposition 1

Denote the objective function of problem (III-B) by . To find a proper surrogate function of , we utilize the following upper bound [42, Eq. (25)]

| (45) |

where the matrix must satisfy for all . According to (45), we are ready to calculate the first-order and the second-order differentials of . By applying [50], we first compute the first-order differential of as

| (46) |

Then, according to [50] and , we obtain the second-order differential of by

| (47) |

Subsequently, we manipulate the first term of (B) as follows:

| (48) |

where the last equality holds based on the relationship , is the unique permutation matrix satisfying for an arbitrary matrix , and and are defined as follows:

| (49) |

Similar to (B), the second term of (B) can be written as

| (50) |

Combining the results in (B) and (50), we reexpress the second-order differential of as

| (51) |

where The remaining step is to find a matrix such that holds for all feasible . For convenience, we simply choose with . To determine , we first obtain the following upper bound to :

| (52) |

where the inequality (a) follows from with and being Hermitian matrices and the equality (b) follows from [51]. Since the three terms in are Hermitian matrices, we can further upper bound as

| (53) |

where the equality follows from , , and . With similar procedures, can be upper bounded by . Thus, we conclude that .

However, it is still hard to determine the largest eigenvalue of for every feasible solution , since there exist cases where tends to be a singular matrix and thus the largest eigenvalue of tends to be infinite. Fortunately, we can handle this difficulty by fully exploiting the specific form of the considered problem. Specifically, we impose an additional constraint to problem (III-B) with denoting an arbitrary feasible solution, which yields

| subject to | ||||

| (54) |

It can be readily shown that problems (III-B) and (B) have the same global optimal solution. Based on the additional imposed constraint, we can relax the largest eigenvalue of as and accordingly set to . Moreover, we judiciously update in each iteration with being the obtained solution in the previous iteration for facilitating a tighter bound. Note that the above operations will not affect the convergence of the MM algorithm [46].

Appendix C Proof of Proposition 2

Note that the objective function of problem (III-B) can be rewritten as , where . For a fixed , the value of depends on and is independent of the other elements in . Together with the fact that the constraints in problem (III-B) are imposed on each element of independently, we conclude that problem (III-B) can be solved for each element of independently, i.e., in an element-wise manner. Without loss of generality, replacing with and plugging the equivalent phase shift model in (3), is equal to

| (55) |

where the second equality follows from Euler’s formula. This implies that the minimization of can be addressed by performing a one-dimensional search over the phase shift .

Appendix D Proof of Theorem 2

Denote the constraint set of problem (III-B) by . We prove the convergence of Algorithm 1 by verifying the following four conditions according to [52, Sec. III]:

-

1.

;

-

2.

;

-

3.

;

-

4.

is continuous in both and .

In particular, the first two conditions guarantee the convergence of the proposed MM algorithm while conditions 3) and 4) guarantee that the algorithm converges to a stationary point.

Clearly, the considered in (20) is a continuous function and thus condition 4) holds. Next, the utilized bound is obtained according to (45) as shown in Appendix B, where the original function is upper bounded by its second-order Taylor expansion, which is in the right-hand side of (45) and denoted as . It is readily verified from (45) that and and thus we conclude that the conditions 1), 2), and 3) hold for and . Therefore, the four conditions hold and the proof is completed.

Appendix E Proof of Lemma 1

Let us introduce and rewrite the objective function in problem (IV) as . Then, based on the fact that the matrix function is jointly convex in [53], we can lower bound by its first-order Taylor expansion as

| (56) |

where and denote arbitrary feasible points. By substituting with , we obtain an upper bound for by

| (57) |

which is equal to given in Lemma 1.

Appendix F Proof of Proposition 3

The Karush-Kuhn-Tucker (KKT) conditions of problem (IV-A) are given as follows:

| (58) | ||||

| (59) | ||||

| (60) | ||||

| (61) |

where is the optimal solution to and is the optimal dual variable associated with the constraint . We now analyze the KKT conditions to find and .

F-1 Case 1

F-2 Case 2

Appendix G Expression of the Cascaded Channel Correlation

For the purpose of modeling the cascaded channel correlation matrix , we consider the Kronecker channel model [54]: and The elements of the matrices , , and are independent and identically distributed (i.i.d.) Gaussian random variables with zero mean and unit variance. The positive definite matrices and with unit diagonal entries denote the spatial correlation matrices at the BS seen from the reflected link and the direct link , respectively. Similar definitions are employed for the correlation matrices at the UEs, i.e., and , and the correlation matrices at the RIS, i.e., and . For simplicity, we set , , and . Then, we have , , and [55]. To proceed, based on the definition in (6), we have

| (64) |

On the other hand, it is easily seen that and when only one of and is equal to since is independent of and . Therefore, the correlation matrix of the cascaded channel is calculated as

| (65) |

Appendix H Explanation on the MSE Performance Gap between LS and LMMSE Channel Estimators

To explain the MSE performance gap in Figs. 8 and 9 in mathematical terms, we focus on a scenario with a single UE and assume an uncorrelated channel model, i.e., . In this case, considering an RIS with a unit amplitude response, it can be readily found that an orthogonal reflection pattern, i.e., , is optimal for both the LS and LMMSE channel estimators. The corresponding MSEs are given by

| (66) |

respectively, where denotes the SNR. As for the case with the phase reflection model in (3), on the other hand, analytical solutions for become elusive due to the intricate constraints imposed by the realistic model for the reflection coefficient. In this case, we denote the optimized obtained through the proposed iterative algorithms using the LS and LMMSE channel estimation criteria, by and , respectively. Accordingly, the MSEs are given by

| (67) |

In the sequel, we prove mathematically.

To begin with, we consider the following relationship:

| (68) |

where . The inequality is established since is the optimized solution for the LMMSE estimation and lacks optimality under the LMMSE criterion. Next, we recall that better channel estimation performance is obtained if the RIS has a unit-amplitude response, i.e., . Without loss of generality, we define and re-express as

| (69) |

To proceed, we need the following lemma.

Lemma 2

For an arbitrary positive definite matrix where , it holds that

| (70) |

The equality holds if .

Proof:

By applying the Lemma 2 to (H), we obtain

| (74) |

Accordingly, fulfills the following properties:

| (75) |

where the first equality is obtained by substituting (H), the second inequality follows from (H), and the third inequality holds since . Finally, by combining (H) and (H), we obtain . Together with the fact , we conclude that, compared to the case study with an ideal unit amplitude reflection coefficient, the performance gap between the LS and LMMSE channel estimators becomes larger in the presence of a realistic model for the RIS reflection coefficient.

References

- [1] C. Liaskos et al., “A new wireless communication paradigm through software-controlled metasurfaces,” IEEE Commun. Mag., vol. 56, no. 9, pp. 162–169, Sep. 2018.

- [2] Q. Wu and R. Zhang, “Towards smart and reconfigurable environment: Intelligent reflecting surface aided wireless network,” IEEE Commun. Mag., vol. 58, no. 1, pp. 106–112, Jan. 2020.

- [3] M. Di Renzo et al., “Smart radio environments empowered by AI reconfigurable meta-surfaces: An idea whose time has come,” EURASIP J. Wireless Commun. Netw., vol. 2019, May 2019, Art. no. 129.

- [4] E. Basar, M. Di Renzo, J. de Rosny, M. Debbah, M.-S. Alouini, and R. Zhang, “Wireless communications through reconfigurable intelligent surfaces,” IEEE Access, vol. 7, pp. 116753–116773, 2019.

- [5] W. Shi et al., “On secrecy performance of RIS-assisted MISO systems over Rician channels with spatially random eavesdroppers,” IEEE Trans. Wireless Commun., early access. doi: 10.1109/TWC.2023.3348591.

- [6] M. Di Renzo et al., “Smart radio environments empowered by reconfigurable intelligent surfaces: How it works, state of research, and the road ahead,” IEEE J. Sel. Areas Commun., vol. 38, no. 11, pp. 2450–2525, Nov. 2020.

- [7] W. Xu et al., “Edge learning for B5G networks with distributed signal processing: Semantic communication, edge computing, and wireless sensing,” IEEE J. Sel. Topics Signal Process., vol. 17, no. 1, pp. 9–39, Jan. 2023.

- [8] W. Shi, W. Xu, X. You, C. Zhao, and K. Wei, “Intelligent reflection enabling technologies for integrated and green Internet-of-Everything beyond 5G: Communication, sensing, and security,” IEEE Wireless Commun., vol. 30, no. 2, pp. 147–154, Apr. 2023.

- [9] Q. Wu and R. Zhang, “Intelligent reflecting surface enhanced wireless network: Joint active and passive beamforming design,” in Proc. IEEE Global Commun. Conf., Abu Dhabi, United Arab Emirates, Dec. 2018, pp. 1–6.

- [10] Q. Wu and R. Zhang, “Intelligent reflecting surface enhanced wireless network via joint active and passive beamforming,” IEEE Trans. Wireless Commun., vol. 18, no. 11, pp. 5394–5409, Nov. 2019.

- [11] C. Huang, A. Zappone, M. Debbah, and C. Yuen, “Achievable rate maximization by passive intelligent mirrors,” in IEEE Int. Conf. Acoust., Speech and Signal Process. (ICASSP), Calgary, AB, Canada, Apr. 2018, pp. 1–5.

- [12] C. Huang, A. Zappone, G. C. Alexandropoulos, M. Debbah, and C. Yuen, “Reconfigurable intelligent surfaces for energy efficiency in wireless communication,” IEEE Trans. Wireless Commun., vol. 18, no. 8, pp. 4157–4170, Aug. 2019.

- [13] H. Shen, W. Xu, S. Gong, Z. He, and C. Zhao, “Secrecy rate maximization for intelligent reflecting surface assisted multi-antenna communications,” IEEE Commun. Lett., vol. 23, no. 9, pp. 1488–1492, Sep. 2019.

- [14] X. Yu, D. Xu, and R. Schober, “Enabling secure wireless communications via intelligent reflecting surfaces,” in Proc. IEEE Global Commun. Conf. (GLOBECOM), Waikoloa, HI, USA, Dec. 2019, pp. 1–6.

- [15] H. Shen, T. Ding, W. Xu, and C. Zhao, “Beamformig design with fast convergence for IRS-aided full-duplex communication,” IEEE Commun. Lett., vol. 24, no. 12, pp. 2849–2853, Dec. 2020.

- [16] B. Smida et al., “Full-duplex wireless for 6G: Progress brings new opportunities and challenges,” IEEE J. Sel. Areas Commun., vol. 41, no. 9, pp. 2729–2750, Sep. 2023.

- [17] Z. He et al., “Full-duplex communication for ISAC: Joint beamforming and power optimization,” IEEE J. Sel. Areas Commun., vol. 41, no. 9, pp. 2920–2936, Sep. 2023.

- [18] Q. Wu and R. Zhang, “Beamforming optimization for intelligent reflecting surface with discrete phase shifts,” in Proc. IEEE Int. Conf. Acoust., Speech, Signal Process. (ICASSP), Brighton, U.K., May 2019, pp. 7830–7833.

- [19] C. Huang, G. C. Alexandropoulos, A. Zappone, M. Debbah, and C. Yuen, “Energy efficient multi-user MISO communication using low resolution large intelligent surfaces,” in Proc. IEEE Global Commun. Conf. (GLOBECOM), Abu Dhabi, United Arab Emirates, Dec. 2018, pp. 1–6.

- [20] H. Shen, W. Xu, S. Gong, C. Zhao, and D. W. K. Ng, “Beamforming optimization for IRS-aided communications with transceiver hardware impairments,” IEEE Trans. Commun., vol. 69, no. 2, pp. 1214–1227, Feb. 2021.

- [21] B. Zheng and R. Zhang, “Intelligent reflecting surface-enhanced OFDM: Channel estimation and reflection optimization,” IEEE Wireless Commun. Lett., vol. 9, no. 4, pp. 518–522, Apr. 2020.

- [22] S. Lin, B. Zheng, G. C. Alexandropoulos, M. Wen, F. Chen, and S. Mumtaz, “Adaptive transmission for reconfigurable intelligent surface-assisted OFDM wireless communications,” IEEE J. Sel. Areas Commun., vol. 38, no. 11, pp. 2653–2665, Nov. 2020.

- [23] Z. He, H. Shen, W. Xu, and C. Zhao, “Low-cost passive beamforming for RIS-aided wideband OFDM systems,” IEEE Wireless Commun. Lett., vol. 11, no. 2, pp. 318–322, Feb. 2022.

- [24] Y. Yang, B. Zheng, S. Zhang, and R. Zhang, “Intelligent reflecting surface meets OFDM: Protocol design and rate maximization,” IEEE Trans. Commun., vol. 68, no. 7, pp. 4522–4535, Jul. 2020.

- [25] L. Wei et al., “Channel estimation for RIS-empowered multi-user MISO wireless communications,” IEEE Trans. Commun., vol. 69, no. 6, pp. 4144–4157, Jun. 2021.

- [26] Q.-U.-A. Nadeem et al., “Intelligent reflecting surface assisted wireless communication: Modeling and channel estimation,” Dec. 2019, [Online] Available: https://arxiv.org/abs/1906.02360v2.

- [27] C. You, B. Zheng, and R. Zhang, “Intelligent reflecting surface with discrete phase shifts: Channel estimation and passive beamforming,” in Proc. IEEE Int. Commun. Conf. Workshops (ICC Wkshps), Dublin, Ireland, Jun. 2020, pp. 1–6.

- [28] Z. Zhou, N. Ge, Z. Wang, and L. Hanzo, “Joint transmit precoding and reconfigurable intelligent surface phase adjustment: A decomposition-aided channel estimation approach,” IEEE Trans. Commun., vol. 69, no. 2, pp. 1228–1243, Feb. 2021.

- [29] Q.-U.-A. Nadeem et al., “Intelligent reflecting surface-assisted multi-user MISO communication: Channel estimation and beamforming design,” IEEE Open J. Commun. Soc., vol. 1, pp. 661–680, 2020.

- [30] J.-M. Kang, “Intelligent reflecting surface: Joint optimal training sequence and reflection pattern,” IEEE Commun. Lett., vol. 24, no. 8, pp. 1784–1788, Aug. 2020.

- [31] J. Chen, Y.-C. Liang, H. V. Cheng, and W. Yu, “Channel estimation for reconfigurable intelligent surface aided multi-user mmWave MIMO systems,” IEEE Wireless Trans. Commun., vol. 22, no. 10, pp. 6853–6869, Oct. 2023.

- [32] Q. Li, M. El-Hajjar, I. Hemadeh, A. Shojaeifard, and L. Hanzo, “Low-overhead channel estimation for RIS-aided multi-cell networks in the presence of phase quantization errors,” IEEE Trans. Veh. Technol., early access, Dec. 06, 2023, doi: 10.1109/TVT.2023.3339968.

- [33] Z. Wang, L. Liu, and S. Cui, “Channel estimation for intelligent reflecting surface assisted multiuser communications: Framework, algorithms, and analysis,” IEEE Trans. Wireless Commun., vol. 19, no. 10, pp. 6607–6620, Oct. 2020.

- [34] M.-M. Zhao, Q. Wu, M.-J. Zhao, and R. Zhang, “Intelligent reflecting surface enhanced wireless networks: Two-timescale beamforming optimization,” IEEE Trans. Wireless Commun., vol. 20, no. 1, pp. 2–17, Jan. 2021.

- [35] Q. Li et al., “Achievable rate analysis of the STAR-RIS-aided NOMA uplink in the face of imperfect CSI and hardware impairments,” IEEE Trans. Commun., vol. 71, no. 10, pp. 6100–6114, Oct. 2023.

- [36] A. Rafique et al., “Reconfigurable intelligent surfaces: Interplay of unit cell and surface-level design and performance under quantifiable benchmarks,” IEEE Open J. Commun. Soc., vol. 4, pp. 1583–1599, 2023.

- [37] M. Di Renzo, F. H. Danufane, and S. Tretyakov, “Communication models for reconfigurable intelligent surfaces: From surface electromagnetics to wireless networks optimization,” Proc. IEEE, vol. 110, no. 9, pp. 1164–1209, Sep. 2022.

- [38] S. Zeng et al., “Intelligent omni-surfaces: Reflection-refraction circuit model, full-dimensional beamforming, and system implementation,” IEEE Trans. Commun., vol. 70, no. 11, pp. 7711–7727, Nov. 2022.

- [39] M. Di Renzo et al., “Digital reconfigurable intelligent surfaces: On the impact of realistic reradiation models,” 2022. Available: https://arxiv.org/abs/2205.09799.pdf

- [40] S. Abeywickrama, R. Zhang, Q. Wu, and C. Yuen, “Intelligent reflecting surface: Practical phase shift model and beamforming optimization,” IEEE Trans. Commun., vol. 68, no. 9, pp. 5849–5863, Sep. 2020.

- [41] M. Biguesh and A. B. Gershman, “Training-based MIMO channel estimation: A study of estimator tradeoffs and optimal training signals,” IEEE Trans. Signal Process., vol. 54, no. 3, pp. 884–893, Mar. 2006.

- [42] Y. Sun, P. Babu, and D. P. Palomar, “Majorization-minimization algorithms in signal processing, communications, and machine learning,” IEEE Trans. Signal Process., vol. 65, no. 3, pp. 794–816, Feb. 2017.

- [43] D. P. Palomar, J. M. Cioffi, and M. A. Lagunas, “Joint Tx-Rx beamforming design for multicarrier MIMO channels: A unified framework for convex optimization,” IEEE Trans. Signal Process., vol. 51, no. 9, pp. 2381–2401, Sep. 2003.

- [44] R. Varadhan and C. Roland, “Simple and globally convergent methods for accelerating the convergence of any EM algorithm,” Scand. J. Statist, vol. 35, no. 2, pp. 335–353, Feb. 2008.

- [45] Z. Wang, P. Babu, and D. P. Palomar, “Design of PAR-constrained sequences for MIMO channel estimation via majorization-minimization,” IEEE Trans. Signal Process., vol. 64, no. 23, pp. 6132–6144, Dec. 2016.

- [46] J. Song, P. Babu, and D. P. Palomar, “Sequence design to minimize the weighted integrated and peak sidelobe levels,” IEEE Trans. Signal Process., vol. 64, no. 8, pp. 2051–2064, Apr. 2016.

- [47] Q. Li et al., “The reconfigurable intelligent surface-aided multi-node IoT downlink: Beamforming design and performance analysis,” IEEE Internet Things J., vol. 10, no. 7, pp. 6400–6414, Apr. 2023.

- [48] S. Zhang and R. Zhang, “Capacity characterization for intelligent reflecting surface aided MIMO communication,” IEEE J. Sel. Areas Commun., vol. 38, no. 8, pp. 1823–1838, Aug. 2020.

- [49] J. H. Wilkinson, The Algebraic Eigenvalue Problem. Oxford, U.K.: Clarendon Press, 1965.

- [50] A. Hjoungnes, Complex-Valued Matrix Derivatives: With Applications in Signal Processing and Communications. Cambridge, U.K.: Cambridge Univ. Press, 2011.

- [51] R. A. Horn and C. R. Johnson, Matrix Analysis. Cambridge, U.K.: Cambridge Univ. Press, 2012.

- [52] L. Zhao, J. Song, P. Babu, and D. P. Palomar, “A unified framework for low autocorrelation sequence design via majorization–minimization,” IEEE Trans. Signal Process., vol. 65, no. 2, pp. 438–453, Jan. 2017.

- [53] S. Boyd and L. Vandenberghe, Convex Optimization. Cambridge, U.K.: Cambridge Univ. Press, 2004.

- [54] D.-S. Shiu, G. Foschini, M. Gans, and J. Kahn, “Fading correlation and its effect on the capacity of multielement antenna systems,” IEEE Trans. Commun., vol. 48, no. 3, pp. 503–513, Mar. 2000.

- [55] A. Gupta and D. Nagar, Matrix Variate Distributions. London, U.K.: Chapman Hall/CRC, 2000.