Joint Speech Activity and Overlap Detection with Multi-Exit Architecture

Abstract

Overlapped speech detection (OSD) is critical for speech applications in scenario of multi-party conversion. Despite numerous research efforts and progresses, comparing with speech activity detection (VAD), OSD remains an open challenge and its overall performance is far from satisfactory. The majority of prior research typically formulates the OSD problem as a standard classification problem, to identify speech with binary (OSD) or three-class label (joint VAD and OSD) at frame level. In contrast to the mainstream, this study investigates the joint VAD and OSD task from a new perspective. In particular, we propose to extend traditional classification network with multi-exit architecture. Such an architecture empowers our system with unique capability to identify class using either low-level features from early exits or high-level features from last exit. In addition, two training schemes, knowledge distillation and dense connection, are adopted to further boost our system performance. Experimental results on benchmark datasets (AMI and DIHARD-III) validated the effectiveness and generality of our proposed system. Our ablations further reveal the complementary contribution of proposed schemes. With score of 0.792 on AMI and 0.625 on DIHARD-III, our proposed system outperforms several top performing models on these datasets, but also surpasses the current state-of-the-art by large margins across both datasets. Besides the performance benefit, our proposed system offers another appealing potential for quality-complexity trade-offs, which is highly preferred for efficient OSD deployment.

1 Introduction

The occurrence of multiple talkers speak simultaneously is common and natural in spontaneous human conversations, especially in scenario of multi-party meetings. The presence of overlapped speech segments however has adverse impacts on most speech analysis systems (such as speech recognition, speaker identification or diarization), which are typically designed in the absence of overlapped speech. For example, it has been reported [1] there is a significant increase in word error rate in segments containing overlapped speech (12% absolute).

1.1 Prior art

To address the issue, various research approaches on overlapped speech detection (OSD) have been developed over the last decade. One popular research direction is to consider OSD as an independent front-end pre-processing task. It can be formulated as a classification problem, to identify each speech frame with binary pairwise or three-class label (non-speech, overlapped speech and single speaker speech). Following the pioneer work [2] that illustrated the success of LSTM-based OSD system, different DNN architectures have been explored, together with various classification and feature engineering techniques.

On top of LSTM-based OSD, Sajjan et al. [3] further showed that LSTM-based models with spectrogram feature provided good separation between single speaker and overlap classes; and instead of using well established speech features, Bi-LSTM applied on trainable SincNet features [4, 5]. Using CNN-based architecture, [6] investigated how frame duration influences the OSD accuracy; On basis of short frames, [7] explored the effects of different features; [8] evaluated CNN-based OSD in terms of the potential improvements to speaker diarization. Instead of performing frame-level or fixed-length OSD, [9] used deep feed-forward sequential memory networks to perform segment-level OSD by leveraging spatial information from multi-channel speech recordings. Using MFCC feature, architecture in [10], consisted of time-delay neural network layers and bidirectional LSTM layers, performed 3-class frame-level classification for overlap detection.

In parallel, another research line is to jointly optimize OSD with downstream task in an end-to-end (E2E) approach. For example, an E2E neural speaker diarization was recently presented in [11], which proposed a multitask learning framework that optimizes speaker diarization conditioned on voice activity detection (VAD) and OSD as two subtasks; by augmenting a RNN-T-based model, a multi-talker RNN-T [12] was designed to recognize speech with multiple talkers; to jointly perform OSD as well as speaker counting, an CNN-based framework was proposed in [13], convolutional recurrent neural networks (CRNN) was used in [14] and Temporal Convolutional Network architecture was proposed for joint VAD, OSD and speaker counting tasks [15].

1.2 Existing problems

Despite these efforts, handling overlapped speech is still challenging and remains an open problem, as claimed in the recent reports on DIHARD III [16] and CHIME-6 [17]. Even with increased amount of overlapped speech from augmentation scheme, the identification of OSD is still much less reliable than that of VAD due to inherent the nature of short segment length and mixture (e.g.[6, 8, 3, 18, 15]).

1.3 Multi-exit architecture

In this paper, our focus is on joint VAD and OSD task. We note that most previous research works share two things in common: 1) classification features are extracted from the same representation space; and 2) classification networks are lightweight with only a few layers. Unlike these prior works, our intuition is that vast majority OSD frames are intrinsically more difficult to be identified than VAD frames. As such, intuitively, we hypothesize that not all speech frames require the same amount of network computation to yield a confident classification.

Based on this hypothesis, we expect to design a network with capability to adapt classification features from different latent spaces for each input sample. To this end, we propose a joint VAD and OSD network based on multi-exit architecture (MEA), which is appealing for improving inference efficiency by predicting easy samples at early exits and hard samples at later exits. To the best of our knowledge, MEA is here explored for the first time on the OSD task.

The remainder of this paper is structured as follows. More details on MEA are introduced in Section 2; our proposed OSD system is described in Section 3; Section 4 reports experimental results; and the paper is concluded in Section 5.

2 Related Works

Put simply, MEA is a layered classification architecture, augmented by early exits that are inserted after intermediate layers. It is regarded as a member of dynamic network family[19], which is an emerging direction and has gained increasing attention on image classification.

MEA mainly adopts the early exiting method for dynamic inference, which allows prediction to quit the network early when samples can already be inferred with high confidence. The first work to propose attaching early exits to a deep network was [20], where standard image classification architectures such as LeNet, AlexNet and ResNet, were augmented by early exits. Later Huang et al.[21] proposed Multi-Scale DenseNet, which was the state-of-the-art MEA.

Fig.1 depicts general diagram of an MEA network with exits. The backbone network as shown in dashed box consists of layers which help introduce branch classifiers settled at different depths of the network. Thus a sequence of intermediate exits’ classifier along with final exit’s classifier are formed. Clearly, classifier at later exit is more accurate and more expensive to compute than the previous classifier. The goal of the network is to learn that maps an input space to a vector of class prediction scores , where and is the number of class. If input is predicted at the exit, its output can be expressed as where denotes the probability distribution over classes and refers to weights up to the exit.

MEAs are typically trained with a multi-task objective: one attaches a loss function to each exit, for example cross-entropy, and minimizes the sum of exit-wise losses, as if each exit formed a separate classification task. During inference, these exits are evaluated in turn and allow to terminate the inference procedure at an intermediate layer, called adaptive inference. Depending whether the computational budget is available, the inference of an multi-exit system can operate in either budget-mode or anytime-mode [22].

Note that researches in MEA-based network mostly focused on its key advantage, that is, reducing computation and save energy using adaptive inference [20, 21]. In contrast, our main objective in this paper, is to classify easy samples at the earliest possible exit, as well as improve the classification performance on hard samples, through leveraging a MEA design during training.

3 Proposed Methods

The overall architecture of our proposed system is illustrated in Fig.2. Its core modules which are built on the basis of CRNN network and training schemes are described respectively in the following subsections.

3.1 Overall Architecture

Our proposed system consists of a baseline subnet and two early exit modules. The baseline subnet (i.e., the orange dashed box in Fig.2) includes one extractor, three Conv2d modules (stacked_Conv2d) and one exit module (composed of one LSTM and one MLP sub-module). The whole process can be outlined below:

| (1) | ||||

To augment the baseline with MEA, two additional early exit modules are attached, placed after first two Conv2d modules respectively. To differentiate from the early exits, the original exit in the baseline is referred as the final exit afterwards. All module details are described below.

3.1.1 Extractor

Our system takes raw speech waveform as the input. With motivation to build an adaptive front-end, the extractor module is constructed by SincNet [5], four 2D-convolution layers, two average pooling layers and squeeze-and-excitation (SE) layers [23]. Here, instead of using traditional handcrafted speech features, we use the SincNet features with cut-off frequencies learned from the raw waveform. The feature dimension is further reduced through the subsequent layers by exploring temporal pattern among features.

3.1.2 Conv2d module

The feature outputs from extractor are further mapped by three cascaded Conv2d modules. Each module includes two convolution layers with different configuration of filter number and kernel size. Note that the output dimension of each Conv2d module is consist to that of the input, to ease attachment of multiple exits with shared LSTM parameters.

3.1.3 LSTM sub-module

Inspired by the architecture of CRNN network [24], LSTM sub-module is placed right after those CNN modules to aggregate feature sequence. Each sub-module has one average pooling layer and one Bi-LSTM layer. It is important to note that, to reduce the capacity of the model, these LSTM sub-modules share their parameters across exits.

3.1.4 MLP sub-module (Classification)

Each MLP sub-module includes two fully connected layers. The former is employed for dimension reduction while the latter noted as classifier for classification. For objective of joint VAD and OSD, this module predicts the probability of occurrence of three classes, labeled as {0: non-speech, 1: single speaker speech, 2: overlapped speech}.

Last of all, we point out that above proposed system architecture is general and can be easily extended to handle other multi-class prediction problems (say, joint activity detection and concurrent speaker counting).

3.2 Training and Inference Schemes

As aforementioned, training an MEA is not trivial. Specific training techniques are needed to address our unique optimization objective. Due to lack of prior information on input complexity, our training principle is to train all exits with objective of overall classification quality.

Nevertheless, jointly optimizing all classifiers within a chain-structured classification system is challenging. In order to improve the back propagation of gradient and make the network easier to train, different feature combination utilization schemes are considered to generate more accurate training supervision. We propose to deploy two possible schemes, knowledge distillation (KD) and dense connection (DC).

3.2.1 KD-based loss

The motivation behind the scheme is to introduce a possibility for different exits to learn from each other. As widely known, KD is a popular technique for knowledge transfer from a pre-trained large-sized network (teacher) to a small-sized one (student). For case of training MEA, we assume that an ensemble representation, as a summary statistic of individual representations from multiple exits, can play a role of teacher. It triggers us to adopt a KD-based method as in [25], which transfers knowledge by encouraging every exit to mimic the probabilistic outputs of the teacher exit.

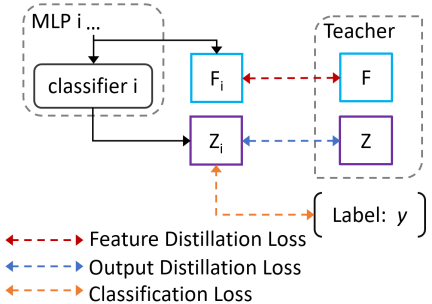

Guided by the idea, we introduce two kinds of KD-losses with respective teacher-student options (as illustrated in Fig.3). In particular, for student option, we consider representations before or after each exit classifier, denoted by (latent features) and (un-normalized probability outputs) for the th-exit respectively. As for teacher and , we adopt the ensemble information from all exits, which can be simplified as and .

With the KD-based loss, the overall training loss can be expressed as a joint loss. It combines an aggregated conventional classification loss with a probability-based distillation loss and a feature-based distillation loss , weighted by coefficients and . That is,

| (2) | ||||

Note that the additional two KD-loss items do not require access to the actual ground-truth .

3.2.2 DC-based scheme

Alternatively, another training strategy is to enforce the network to explicitly control the flow of information via feature fusion. In particular we adopt the DC scheme [21], which uses dense inter-connections to concatenate previous features from early backbone stages to features at later stages. Note that different from the skip connection scheme, DC scheme ensures feature reusability, where output feature of one layer is concatenated to the input to the next layers rather than a summation.

To accommodate this training scheme, the original Conv2d module introduced earlier is accordingly modified to a dense block (as illustrated in Fig.4). The yielded new module is called as Conv2d-DC.

3.2.3 Inference mode

Recall that our main objective is twofold: to achieve overall decent classification performance and to verify the possibility of dynamic inference with computation-accuracy tradeoffs. To this end, we propose two inference modes to access each objective individually.

The first inference mode, called normal mode, is to treat our proposed system as a conventional classification system, by only examine system outputs at the final exit. The other mode is to evaluate all exit outputs under a given threshold . That is, for a test sample, its prediction probabilities at all exits are collected in order. If any of them is higher than the given threshold, the corresponding class label is assigned to the sample, otherwise, the class label at the final exit is used instead. This inference strategy is termed exiting mode.

4 Experiments

4.1 Experimental Setup

4.1.1 Datasets

Three benchmark datasets are utilized for our system training: AMI Meeting [26], DIHARD III [16] and VoxConverse dataset [27]. Apart from the officially designated training dataset, we also generate synthesized data by weighted downmixing of two random speech samples, which offers additional 40% training data. In addition, following a common practice, speech samples are mixed on-the-fly during the training process, which augments each training sample with random noise and reverberation component based on MUSAN and RIRS_NOISES [28, 29] respectively. For system evaluation, both test sets in the DIHARD III and AMI are used to examine our system on both performance and generality. In such a setting, a summary of dataset statistics is reported in Table 1, where training data comprises both official data and our synthesized data. In addition, for the test datasets, the class ratios of both VAD and OSD are provided. Note that the imbalance ratio (IR) between VAD-class and OSD-class is severe, up to 1:11.3, on the DIHARD III test set.

| Dataset | Duration (h) | Class Percentage (%) | |||

| Train | Dev. | Test | OSD | VAD | |

| DIHARD III | 25 + 10 | 9 | 33 | 7 | 79 |

| AMI | 22 + 8.5 | 11 | 9 | 16 | 83 |

| VoxConverse | 15 + 6 | 6 | - | - | - |

4.1.2 Implementation details

Our model takes 1.5s monophonic speech chunks (with sampling rate of 16kHz) as input, and outputs 50 class labels per speech chunk. This corresponds to generate classification results every 30ms.

Full implementation details of our system can be found in Table 2, along with the count (M) of parameters of core modules. The total number of parameters of the model is up to 1.5M when the DC-based scheme is applied (otherwise, it’s around 1.3M).

| Module | Setting | Output shape | #Parameters |

| Input | - | - | |

| Extractor | SincNet(128,251,80) | 0.1 | |

| Conv2d(32,3,1) | |||

| SE(4) + Avg_pool(2,1) | |||

| Conv2d(64,3,1) | |||

| SE(4) + Avg_pool(3,2) | |||

| Conv2d | Conv2d(256,1,1) | 0.5 | |

| () | Conv2d(64,3,1) | ||

| Conv2d-DC () | Conv2d(320,1,1) | 0.7 | |

| Conv2d(80,3,1) | |||

| Conv2d(64,1,1) | |||

| LSTM | Avg_pool(32) + Permute | 0.6 | |

| Bi-LSTM(128) | |||

| MLP | Linear(128) | 0.1 | |

| () | Linear(3) |

Our system is optimized using the training loss , defined in (2). Here we adopt the proportionally-weighted cross entropy (CE) loss for and the Kullback-Leibler (KL) divergence loss for and , respectively. In addition, weights are set by and . With a batch size of 256, our system is trained for 50 epochs using Adam optimizer. The initial learning rate is 0.001 and scales with a factor of 0.6 when there is no loss decrease over 6 epochs.

| VAD | AMI | DIHARD III | ||||

| System | FA | Miss | ER | FA | Miss | ER |

| silero vad [30] | 9.4 | 1.7 | 11.0 | 17.0 | 4.0 | 21.0 |

| pyannote 1.1 [31] | 6.5 | 1.7 | 8.2 | 4.1 | 3.8 | 7.9 |

| pyannote 2.0 [32] | 3.6 | 3.2 | 6.8 | 3.9 | 3.3 | 7.3 |

| Ours | 1.3 | 5.0 | 6.3 | 2.0 | 5.0 | 7.0 |

| OSD | AMI | DIHARD III | ||||||||||

| System | FA | Miss | ER | Precision | Recall | FA | Miss | ER | Precision | Recall | ||

| pyannote 1.1 | 51.1 | 12.1 | 63.2 | 63.2 | 87.9 | 73.5 | 48.2 | 45.2 | 93.4 | 53.2 | 54.8 | 54.0 |

| Raj et al. [10] | - | - | - | 86.4 | 65.2 | 74.3 | - | - | - | - | - | - |

| pyannote 2.0 | 16.9 | 29.4 | 46.3 | 80.7 | 70.5 | 75.3 | 46.9 | 37.2 | 84.1 | 57.2 | 62.8 | 59.9 |

| Ours | 18.6 | 22.3 | 40.9 | 80.7 | 77.7 | 79.2 | 44.0 | 34.5 | 78.5 | 59.8 | 65.5 | 62.5 |

4.1.3 Inference details

Following our training configuration, our system yields up to 5 prediction labels per frame(30ms). Such multiple label options are further post-processed by applying the majority rule voting strategy. Then the one with a majority is chosen as the final predicted label.

As a follow up to label predictions, VAD and OSD segmentation can be simply inferred, where the OSD segments are formed by concatenating the frames with class {2: overlapped}; and VAD segments are obtained by combining the frames of both classes {1: single speaker, 2: overlapped speech}.

4.1.4 Evaluation metrics

Inherited from prior studies, two sets of standard evaluation metrics are adopted in this study. One set of them includes the false alarm rate (FA) missed alarm rate (Miss) and detection error rate (ER); the other contains precision, recall and -score ().

4.2 Performance at Final Exit

In this subsection, we evaluate our proposed system by checking its performance at the final exit. It includes the overall performance analysis and ablation studies on individual performance contribution from each proposed scheme.

4.2.1 Overall Performance Analysis

To better evaluate the ability of our system for joint VAD and OSD, we expand our system evaluation by benchmarking it against several top performing prior arts. For a fair comparison, those competitive prior studies are published recently for OSD on the either AMI or DIHARD III dataset, and results reported in their original publications are quoted.

All detailed comparison results are listed in Table 3 and Table 4 for VAD and OSD, respectively, where the best performance is bold-faced.

Regarding performance on the VAD task, Table 3 shows that in terms of error rate, our model performs best on both datasets. It considerately outperforms the previous SOTA, pyannote 2.0, with relative improvement of 7.3% and 4.1% on the dataset AMI and DIHARD III, respectively. Besides, the table also reveals that: 1) the VAD system [30] has high false alarm rate; 2) pyannote 2.0 [30] outperforms its early version [31]; 3) our system shows the lowest false alarm rates and the best overall error rates. Also, it is worth noting that pyannote 2.0 [30] assigns specific hyper-parameters for different dataset, which is not realistic in practical scenario.

A similar behavior is observed for performances on the OSD task. As shown in Table 4, our proposed system achieves more prominent performance gains over the existing systems. In particular, regarding score, our system surpasses pyannote 2.0 by 5.2% relative on the AMI and 4.3% on the DIHARD III. Notably, this advantage is more pronounced when measure with error rate, with relative 11.7% improvement on the AMI and 6.7% on the DIHARD III, respectively. In addition, on the more challenging DIHARD III test set, our system is considerably advantageous by outperforming prior studies over all metrics.

To ours best knowledge, the results of pyannote 2.0 are the best in the existing studies. By outperforming the pyannote 2.0, our system provides the state-of-the-art performance. In addition, it is worth noting that our system achieves such performance with even smaller model size (around 1.4 million parameters, comparing to 1.5 million used in pyannote 2.0). Lastly, again, the pyannote 2.0 requires to determine hyper-parameters by cross-validation on a validation set, which limits its generalization. In contrast, our system use common parameters for all datasets and avoid the unrealistic dataset-specific tuning as much as possible.

4.2.2 Ablation Studies

To examine individual impact of each proposed scheme, we conduct ablation studies on our system by disabling one scheme at a time. The according experimental results (in terms of -scores) are reported in Table 5.

| Model | AMI | DIHARD III | ||

| OSD | VAD | OSD | VAD | |

| FULL | 79.2 | 96.8 | 62.5 | 96.5 |

| - DC-based scheme | 79.2 | 96.9 | 62.0 | 96.6 |

| - KD-based loss | 79.0 | 96.7 | 61.6 | 96.5 |

| - MEA (Baseline) | 77.2 | 96.8 | 61.6 | 96.6 |

From the table, we make the following key observations:

-

•

high VAD performance is maintained in all ablation experiments, which suggests our system has a very strong baseline;

-

•

disabling the DC-based scheme leads to 0.5% absolute OSD performance drop on the DIHARD III test set;

-

•

further disabling the KD-based scheme results in another 0.4% absolute OSD performance drop on the DIHARD III test set;

-

•

lastly, our baseline system by removing the MEA gets 1.8% OSD performance degradation on the AMI test set, which indicates that MEA is the most important factor for our performance gains.

In all, by breaking down the performance gains on OSD task, it is proven that all our proposed schemes are beneficial and offer complementary contributions to the system performance. Additionally, among three schemes, our proposed architecture is dominant that boosts system performance the most.

4.3 Performance at All Exits

Recall that with leverage of MEA, our system provides a sequence of intermediate exits’ classifier along with final exit’s classifier. Thus far we have discussed the performance of final exit’s classifier. Now we are interested in investigating those intermediate classifiers, by changing the inference mode to the exiting mode.

In particular, since adaptive inference with reduced computation is beyond the scope of this study, our main objective herein is to investigate the feasibility of dynamic inference with computation-accuracy trade-offs. To verify the feasibility, our strategy is to analyze the exiting rate at all exits, given a high exiting threshold for the early exits. In this sense, we argue that if the proportions of early-exited samples, while small, are not negligible, then it becomes feasible to perform dynamic inference.

Motivated by the idea, in our experiments, a high threshold with value of 0.9 is set for those intermediate classifier at exit 1 and 2. Under such a setting, our inference process is switched from previous normal mode to the exiting mode. That is, test samples are firstly classified by the classifier at exit 1, those samples with high probability (no less than 0.9) are exited; the remaining samples are further classified by the classifier at exit 2, again, samples with high probability are exited; all remaining samples are classified by the last classifier at final exit.

With the said sequential inference process, the performance at all exists are evaluated. The resulting experimental results are illustrated in bar plots, as shown in Fig.5. Here the Y-axis denotes the exiting rate, which is calculated as percentage ratio of the number of samples exited at a specific exit to the total sample number, for a given class. In another words, a cluster with three adjacent bars reflects the class distribution among three exists.

From the bar plots, we can obtain a few valuable insights. Firstly, regarding the VAD task, our insights are:

-

1.

similar distribution trend can be observed for both AMI and DIHARD III dataset. That is, the most samples are classified at exit 1 and the least samples at exit 2. It reveals the possibility to perform VAD classification with the dynamic inference, an interesting insight that has not be explored in previous studies;

-

2.

at least 51% speech samples are easy that can be classified at exit 1. This implies the VAD detection may greatly benefited from the dynamic inference;

-

3.

about a quarter to one third samples are difficult that cannot be classified at early exits with high confidence.

Secondly, regarding the OSD task, we observe that:

-

1.

most samples are classified at exit 1 and final exit. And at least 41% overlapped speech samples are easy that can be classified early, showing feasible possibility to perform dynamic inference;

-

2.

more samples of DIHARD III are left to the final classifier for OSD judgment. This implies that on the OSD task, the DIHARD III dataset is more challenging than the AMI.

Lastly, a close look on both tasks shows that, except the OSD task on the DIHARD III, over half samples can be classified at the earliest exit with the lowest complexity. Another finding is that both AMI and DIHARD III show higher OSD exiting rate at late exits (0.46 vs. 0.34 and 0.59 vs.0.25), which means OSD has inherently higher complexity than VAD.

Above results clearly confirm that variant sample complexities exist in both VAD and OSD class, which corroborate our motivation for adopting MEA to address the joint VAD and OSD problem. In addition, observations above suggest a large number of samples can be reliably classified by earlier exits that have lower capacity and complexity. This is a good match for objective of the dynamic inference.

5 Conclusions

In this paper, we investigate the joint VAD and OSD problem, to classify speech frames into classes of non-speech, single speaker and overlapped speakers. Unlike any prior works, we study the problem from a new perspective by proposing a classification system with multi-exit architecture. Our design objective is twofold: improving the overall classification performance and classifying easy samples at the earliest possible exit. To boost system effectiveness, we also propose two training schemes to enhance the proposed architecture.

To verify the efficacy of our proposed system, we conduct extensive experiments on the benchmark dataset of AMI and DIHARD III. Corresponding experimental results show that our system outperforms the previous top performing systems by a considerable margin. With score of 0.792 on AMI and 0.625 on DIHARD III, our system offers (to our best knowledge) the best OSD results reported till date. Beyond that, both training schemes are also proven to be effective and complementary via ablations. Meanwhile, our preliminary study shows that at least 41% overlapped samples can be predicted at early exits with decent accuracy. This indicates our system also has the promising potential to balance performance and computational complexity, which would be further investigated in our future research.

References

- [1] E. Shriberg, A. Stolcke, and D. Baron, “Observations on overlap: Findings and implications for automatic processing of multi-party conversation,” in Seventh European Conference on Speech Communication and Technology, 2001.

- [2] J. T. Geiger, F. Eyben, B. Schuller, and G. Rigoll, “Detecting overlapping speech with long short-term memory recurrent neural networks,” in Interspeech, 2013, pp. 1668–1672.

- [3] N. Sajjan, S. Ganesh, N. Sharma, S. Ganapathy, and N. Ryant, “Leveraging lstm models for overlap detection in multi-party meetings,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018, pp. 5249–5253.

- [4] L. Bullock, H. Bredin, and L. P. García-Perera, “Overlap-aware diarization: Resegmentation using neural end-to-end overlapped speech detection,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020, pp. 7114–7118.

- [5] M. Ravanelli and Y. Bengio, “Speaker recognition from raw waveform with sincnet,” in IEEE Spoken Language Technology Workshop (SLT), 2018, pp. 1021–1028.

- [6] V. Andrei, H. Cucu, and C. Burileanu, “Detecting overlapped speech on short timeframes using deep learning,” in Interspeech, 2017, pp. 1198–1202.

- [7] M. Yousefi and J. H. L. Hansen, “Frame-based overlapping speech detection using convolutional neural networks,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020, pp. 6744–6748.

- [8] M. Kunesová, M. Hrúz, Z. Zajíc, and V. Radová, “Detection of overlapping speech for the purposes of speaker diarization,” in SPECOM, 2019, pp. 247–257.

- [9] S. Zhang, S. Zheng, W. Huang, M. Lei, H. Suo, J. Feng, and Z. Yan, “Investigation of Spatial-Acoustic Features for Overlapping Speech Detection in Multiparty Meetings,” in Interspeech, 2021, pp. 3550–3554.

- [10] D. Raj, Z. Huang, and S. Khudanpur, “Multi-class spectral clustering with overlaps for speaker diarization,” in IEEE Spoken Language Technology Workshop (SLT), 2021, pp. 582–589.

- [11] Y. Takashima, Y. Fujita, S. W. 0001, S. Horiguchi, P. García, and K. Nagamatsu, “End-to-end speaker diarization conditioned on speech activity and overlap detection,” in IEEE Spoken Language Technology Workshop (SLT), 2021, pp. 849–856.

- [12] A. Tripathi, H. Lu, and H. Sak, “End-to-end multi-talker overlapping speech recognition,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020, pp. 6129–6133.

- [13] W. Zhang, M. Sun, L. Wang, and Y. Qian, “End-to-end overlapped speech detection and speaker counting with raw waveform,” in IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), 2019, pp. 660–666.

- [14] F.-R. Stöter, S. Chakrabarty, B. Edler, and E. A. P. Habets, “Countnet: Estimating the number of concurrent speakers using supervised learning,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 27, no. 2, pp. 268–282, 2019.

- [15] S. Cornell, M. Omologo, S. Squartini, and E. V. 0001, “Detecting and counting overlapping speakers in distant speech scenarios,” in InterSpeech, 2020, pp. 3107–3111.

- [16] N. Ryant, P. Singh, V. Krishnamohan, R. Varma, K. Church, C. Cieri, J. Du, S. Ganapathy, and M. Liberman, “The Third DIHARD Diarization Challenge,” in Interspeech, 2021, pp. 3570–3574.

- [17] S. Watanabe, M. Mandel, J. Barker, E. Vincent, A. Arora, X. Chang, S. Khudanpur, V. Manohar, D. Povey, D. Raj, D. Snyder, A. S. Subramanian, J. Trmal, B. B. Yair, C. Boeddeker, Z. Ni, Y. Fujita, S. Horiguchi, N. Kanda, T. Yoshioka, and N. Ryant, “CHiME-6 Challenge: Tackling Multispeaker Speech Recognition for Unsegmented Recordings,” in 6th International Workshop on Speech Processing in Everyday Environments (CHiME), 2020, pp. 1–7.

- [18] W. Chen, V. T. Pham, E. S. Chng, and X. Zhong, “Overlapped Speech Detection Based on Spectral and Spatial Feature Fusion,” in Interspeech, 2021, pp. 4189–4193.

- [19] Y. Han, G. Huang, S. Song, L. Yang, H. Wang, and Y. Wang, “Dynamic neural networks: A survey,” CoRR, 2021. [Online]. Available: https://arxiv.org/abs/2102.04906

- [20] S. Teerapittayanon, B. McDanel, and H. T. Kung, “Branchynet: Fast inference via early exiting from deep neural networks,” CoRR, 2017. [Online]. Available: http://arxiv.org/abs/1709.01686

- [21] G. Huang, D. Chen, T. Li, F. Wu, L. van der Maaten, and K. Q. Weinberger, “Multi-scale dense networks for resource efficient image classification,” in International Conference on Learning Representations (ICLR), 2018.

- [22] M. Phuong and C. Lampert, “Distillation-based training for multi-exit architectures,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2019, pp. 1355–1364.

- [23] J. Hu, L. Shen, and G. Sun, “Squeeze-and-excitation networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7132–7141.

- [24] B. Shi, X. Bai, and C. Yao, “An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition,” CoRR, 2015. [Online]. Available: http://arxiv.org/abs/1507.05717

- [25] H. Lee and J. Lee, “Students are the best teacher: Exit-ensemble distillation with multi-exits,” CoRR, 2021. [Online]. Available: https://arxiv.org/abs/2104.00299

- [26] J. Carletta, “Unleashing the killer corpus: experiences in creating the multi-everything ami meeting corpus,” Language Resources and Evaluation, vol. 41, no. 2, pp. 181–190, 2007.

- [27] J. S. Chung, J. Huh, A. Nagrani, T. Afouras, and A. Zisserman, “Spot the conversation: speaker diarisation in the wild,” CoRR, 2020. [Online]. Available: https://arxiv.org/abs/2007.01216

- [28] D. Snyder, G. Chen, and D. Povey, “Musan: A music, speech, and noise corpus,” arXiv preprint arXiv:1510.08484, 2015.

- [29] T. Ko, V. Peddinti, D. Povey, M. L. Seltzer, and S. Khudanpur, “A study on data augmentation of reverberant speech for robust speech recognition,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2017, pp. 5220–5224.

- [30] S. Team, “Silero vad: pre-trained enterprise-grade voice activity detector (vad), number detector and language classifier,” https://github.com/snakers4/silero-vad, 2021.

- [31] H. Bredin, R. Yin, J. M. Coria, G. Gelly, P. Korshunov, M. Lavechin, D. Fustes, H. Titeux, W. Bouaziz, and M.-P. Gill, “Pyannote.audio: Neural building blocks for speaker diarization,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020, pp. 7124–7128.

- [32] H. Bredin and A. Laurent, “End-to-end speaker segmentation for overlap-aware resegmentation,” in Interspeech, 2021, pp. 3111–3115.