Joint Sensing and Communications for Deep Reinforcement Learning-based Beam Management in 6G

Abstract

User location is critical information for network management and control. However, location uncertainty is unavoidable in certain settings, which leads to localization errors. In this paper, we consider user location uncertainty in millimeter wave (mmWave) networks and investigate joint vision-aided sensing and communications using deep reinforcement learning (DRL)-based beam management for future 6G networks. In particular, we first extract pixel characteristic-based features from satellite images to improve localization accuracy. Then we propose a UK-medoids based method for clustering users with location uncertainty, and the clustering results are used for beam management. Finally, we apply a DRL algorithm for intra-beam radio resource allocation. The simulations first show that the proposed vision-aided method substantially reduces the localization error. The proposed UK-medoids and DRL based scheme (UKM-DRL) is compared with two other schemes: K-means based clustering with DRL based resource allocation (K-DRL), and UK-means based clustering with DRL based resource allocation (UK-DRL). The proposed method achieves 17.2% higher throughput and 7.7% lower delay than UK-DRL and, compared with K-DRL, more than doubles the throughput and lowers the delay by 55.8%.

Index Terms:

Vision-aided, Localization Uncertainty, Beam Management, UK-medoids, Radio Resource Allocation, Deep Reinforcement Learning

I Introduction

Future 6G networks are expected to rely on millimeter wave (mmWave) bands to provide rich bandwidth, using highly directional beams to maintain connections between base stations (BSs) and user equipment (UEs). However, using mmWave bands in 6G networks requires intelligent beam management schemes that overcome the challenges of dynamic environments, such as inefficient beam sweeping, user mobility, and location uncertainty. In beam management, users are generally clustered based on their locations, and each cluster is served by a separate beam [1]. BSs therefore need to track user trajectories and dynamically adjust beam directions to serve all UEs. The Global Positioning System (GPS) is a well-known technology for outdoor localization. However, GPS works well mainly under line-of-sight (LOS) conditions and becomes unreliable in urban canyons and similar settings. On the other hand, received signal-based outdoor localization schemes suffer from the high propagation loss of mmWave networks.

Recently, computer vision has been introduced to augment localization in wireless communications, and visual information can address challenges faced by traditional localization approaches [2]. For example, vision-aided sensing has been successfully applied to robot localization and navigation in [3]. However, despite the improvements offered by advanced localization techniques, localization errors remain unavoidable in practice; they may be caused by limited measurement accuracy, system performance fluctuations, and other factors. These location errors can in turn degrade network performance. For example, if the BS observes distorted user locations, some users may fall outside the highly directional beams of the BS, resulting in packet loss or longer delay. Therefore, a clustering algorithm that can handle location uncertainty is expected to mitigate the effect of user localization errors [4]. Finally, network management becomes increasingly complicated as network architectures evolve, and machine learning, especially reinforcement learning, has become an important tool for network optimization and control [5].

To this end, we first introduce a vision-aided sensing-based localization method, which uses satellite images to predict user locations. Then we propose a UK-medoids based clustering technique that achieves higher clustering accuracy for uncertain objects. Afterwards, we apply a deep reinforcement learning (DRL) algorithm for intra-beam radio resource allocation to satisfy the Quality-of-Service (QoS) requirements of users [6]. In terms of localization performance, the simulations show that our vision-aided method significantly reduces the localization error (RMSE) from 17.11 meters to 3.62 meters. In terms of network performance, we compare the proposed UK-medoids and DRL method (UKM-DRL) with two baseline algorithms, and UKM-DRL achieves higher throughput and lower delay.

The main contributions of this work are two-fold: first, we propose a novel vision-aided sensing-based localization method; second, we propose a UKM-DRL algorithm for joint beam management and resource allocation in mmWave networks with user location uncertainty. The remainder of this paper is organized as follows. Section II presents related work, and Section III describes the system model. Section IV introduces the dataset and the vision-aided localization technique. Section V explains the UK-medoids based clustering method and the DRL algorithm. Section VI gives simulation results, and Section VII concludes the paper.

II Related Work

User location is one of the most important pieces of information for network management [7]. Although GPS is widely used, its accuracy degrades under non-line-of-sight (NLOS) conditions in dense urban areas. Recently, vision-aided localization techniques have been proposed as promising alternatives. For example, [8] proposed an improved localization algorithm based on GPS, an inertial navigation system, and visual localization, where visual information compensates for the instability of GPS. In [9], 3D point clouds acquired from depth images are used to localize and navigate robots, and the authors proposed a fast sampling plane filtering algorithm to reduce the volume of the 3D point cloud. Meanwhile, [10] proposed two localization schemes based on deep learning and landmark detection to replace GPS. On the other hand, user clustering is an important part of beamforming in mmWave networks. A non-orthogonal multiple access system is considered in [11], where the authors optimized the sum rate with a K-means-based algorithm. In [12], a user clustering and power allocation algorithm is proposed to maximize the sum capacity, and a hierarchical clustering technique is proposed in [13] to obtain the optimal number of clusters instead of fixing it in advance.

Different from the aforementioned works, we first define a computer vision-based localization method, then propose a UK-medoids based clustering algorithm for beam management that accounts for location uncertainty, and a DRL based method for intra-beam resource management. Moreover, we systematically investigate how combining these techniques improves mmWave network performance.

III System and Wireless Environment Model

III-A Overall System Architecture

The overall system model of this work is illustrated in Fig. 1. First, we extract image features from each sample of the original dataset. These features are then used as input to a feed-forward neural network, which predicts the user locations. Next, to account for localization uncertainty, we propose a UK-medoids-based clustering method that forms user clusters for beam management. Finally, we apply DRL for intra-beam resource allocation in each beam.

III-B Wireless Environment Model

We consider a 5G NodeB (gNB) serving a group of users with different QoS requirements. The UE set is denoted by $\mathcal{U}$, and each UE is denoted by $u \in \mathcal{U}$. Based on the observed locations, the gNB forms a certain number of UE clusters, and each cluster is served by one beam. Inter-beam interference is neglected here owing to the Orthogonal Frequency Division Multiple Access (OFDMA) technique. Within each beam, Resource Block Groups (RBGs) are allocated to the attached users.

As illustrated in Fig. 2, we assume two clusters are formed to cover 6 UEs. Suppose the gNB receives distorted location information for the red car, and the two beams are formed accordingly. Because the beams are then steered in the wrong directions, the red car is covered by neither beam, which results in packet loss and longer delay. This scenario shows how localization errors can degrade the beamforming performance of mmWave networks. In this work, we mainly focus on the localization and clustering techniques; a more detailed network and channel model can be found in [6].

IV Satellite Image and Vision-aided Localization Method

In this section, we introduce our vision-aided localization method. With the pixel characteristic-based features of satellite images, we aim to improve localization accuracy.

IV-A Satellite Image Dataset

The dataset used for localization is based on measurements from a mobile communication system at the Technical University of Denmark [14]. In this dataset, a vehicle drives over a 2.4 km by 1.25 km region and collects measurements including the Signal-to-Interference-plus-Noise Ratio (SINR), Reference Signal Received Power (RSRP), Reference Signal Received Quality (RSRQ), and Received Signal Strength Indicator (RSSI). Each sample contains one satellite image showing the environment around the vehicle.

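Table I: Mean values of the four color characteristics for each object category

| Color characteristic | Grass | Building | Road |
| --- | --- | --- | --- |
| Characteristic 1 | 12.14 | 5.41 | 15.43 |
| Characteristic 2 | 21.77 | 8.74 | 27.31 |
| Characteristic 3 | 31.30 | 3.39 | 11.87 |
| Characteristic 4 | 42.13 | 3.01 | 4.38 |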

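Table II: Value ranges of the two selected color characteristics for each object category

| Color characteristic | Grass | Building | Road |
| --- | --- | --- | --- |
| First selected characteristic | [0, 80] | [0, 6] | (6, 20] |
| Second selected characteristic | [12, 85] | [1, 8] | [0, 12] |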

IV-B Pixel Characteristic-based Feature

In digital imaging, the image domain is partitioned into $M$ rows and $N$ columns during sampling. A pixel is the area at the intersection of one row and one column and is the smallest element of an image, so the image resolution is $M \times N$. In the RGB color model, each pixel is defined by three values for red, green, and blue, each ranging from 0 to 255.

The satellite images used for localization are RGB images that all have the same resolution, and each image is paired with a location sample. We count the number of pixels that represent a specific object to compute the proportion of that object in the image. Since satellite images contain common objects such as grass and buildings, this pixel characteristic-based feature can be used to characterize an image. In this work, we selected three object classes: as shown in Fig. 3, they are grass, gray buildings, and roads. We then counted the total number of pixels representing each of the three objects, giving three new features that are used to improve localization accuracy.

To distinguish the three objects, we adopted the method from [16]. We selected four color-based characteristics and observed their mean values, where each characteristic is an arithmetic operation on the three basic color values (red, green, and blue). As shown in Table I, the mean values of one characteristic are clearly different across the three categories, and the mean values of another characteristic for one category differ significantly from those of the other two categories. After inspecting the histograms of these two characteristics for the three categories, we determined the value ranges for the three categories shown in Table II.
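A minimal NumPy sketch of this counting step is shown below. It assumes a pixel is assigned to a category when both selected characteristics fall inside that category's Table II ranges, with the first table row applied to the first characteristic. The two characteristic functions (`char_a` as G - R and `char_b` as G - B) are hypothetical placeholders, since the exact expressions from [16] are not given in this section.

```python
import numpy as np

# Table II ranges per category: one (low, high, low_inclusive) tuple per characteristic.
# Assumption: the first row of Table II applies to char_a, the second row to char_b.
RANGES = {
    "grass": [(0, 80, True), (12, 85, True)],
    "building": [(0, 6, True), (1, 8, True)],
    "road": [(6, 20, False), (0, 12, True)],   # (6, 20] excludes the lower bound
}


def char_a(img):
    """Hypothetical colour characteristic: green minus red."""
    return img[..., 1] - img[..., 0]


def char_b(img):
    """Hypothetical colour characteristic: green minus blue."""
    return img[..., 1] - img[..., 2]


def in_range(values, low, high, low_inclusive):
    lower_ok = values >= low if low_inclusive else values > low
    return lower_ok & (values <= high)


def pixel_count_features(img):
    """Count pixels assigned to each category in an H x W x 3 uint8 RGB image.

    The Table II ranges overlap, so a pixel may be counted in more than one category.
    """
    img = img.astype(np.int32)          # avoid uint8 wrap-around when subtracting channels
    a, b = char_a(img), char_b(img)
    counts = {}
    for name, ((lo_a, hi_a, inc_a), (lo_b, hi_b, inc_b)) in RANGES.items():
        mask = in_range(a, lo_a, hi_a, inc_a) & in_range(b, lo_b, hi_b, inc_b)
        counts[name] = int(mask.sum())
    return counts


# Usage: a tiny synthetic image with one pixel per category and black elsewhere.
img = np.zeros((4, 4, 3), dtype=np.uint8)
img[0, 0] = (10, 60, 20)      # G-R = 50, G-B = 40 -> grass
img[1, 1] = (100, 103, 100)   # G-R = 3,  G-B = 3  -> building
img[2, 2] = (100, 110, 105)   # G-R = 10, G-B = 5  -> road
print(pixel_count_features(img))   # {'grass': 1, 'building': 1, 'road': 1}
```

The three counts returned per image are the kind of features that would be appended to the received-signal measurements; black pixels match no range and are left uncounted.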

Finally, we use 7 features as input to a feed-forward neural network (FFNN): SINR, RSRP, RSRQ, RSSI, and the numbers of pixels representing grass, gray buildings, and roads. The FFNN is then trained to predict user locations from these input features [15].
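The regression step can be illustrated with a minimal NumPy sketch: one sigmoid hidden layer and a linear output layer predicting 2-D coordinates, matching the activations reported in Section VI-A1. The hidden width, learning rate, full-batch gradient descent, and synthetic data are illustrative assumptions; this is not the trained model from [15].

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class FFNN:
    """Sigmoid hidden layer followed by a linear output layer (x, y)."""

    def __init__(self, n_in=7, n_hidden=16, n_out=2, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 0.5, (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 0.5, (n_hidden, n_out))
        self.b2 = np.zeros(n_out)

    def forward(self, X):
        H = sigmoid(X @ self.W1 + self.b1)      # hidden activations
        return H @ self.W2 + self.b2, H         # linear output, hidden layer

    def mse(self, X, Y):
        return float(np.mean((self.forward(X)[0] - Y) ** 2))

    def fit(self, X, Y, lr=0.05, epochs=2000):
        """Full-batch gradient descent on (1 / 2n) * sum of squared errors."""
        n = X.shape[0]
        for _ in range(epochs):
            Y_hat, H = self.forward(X)
            err = (Y_hat - Y) / n                     # gradient at the linear output
            dH = (err @ self.W2.T) * H * (1.0 - H)    # back-propagate through the sigmoid
            self.W2 -= lr * (H.T @ err)
            self.b2 -= lr * err.sum(axis=0)
            self.W1 -= lr * (X.T @ dH)
            self.b1 -= lr * dH.sum(axis=0)
        return self


# Usage on synthetic data: 7 standardized features -> 2-D position.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 7))                 # stand-in for SINR, RSRP, RSRQ, RSSI, 3 pixel counts
X = (X - X.mean(axis=0)) / X.std(axis=0)      # per-feature standardization
Y = X @ rng.normal(scale=0.5, size=(7, 2))    # synthetic target coordinates
model = FFNN()
mse_before = model.mse(X, Y)
model.fit(X, Y)
mse_after = model.mse(X, Y)
```

Standardizing the inputs matters here because the raw features mix very different scales (dBm-valued signal measurements and pixel counts in the thousands), which would otherwise saturate the sigmoid units.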

V UK-Medoids-based clustering and Deep Reinforcement Learning-based Radio Resource Allocation

To handle localization uncertainty, we first deploy the UK-medoids method for clustering, in which each cluster will be covered by one beam [6]. Then, DRL is applied to distribute physical resource blocks within each beam.

V-A UK-medoids

UK-medoids extends K-medoids clustering to uncertain data [17]. Like K-medoids, it selects actual data points as cluster centers instead of using the mean of each cluster. In addition, UK-medoids uses uncertain distance functions designed to better estimate the real distance between two uncertain objects.

Assume there are $n$ positions to be grouped into $K$ clusters, where the $k$-th cluster is denoted by $C_k$ and its center by $m_k$. For each user position $o_i$, the uncertainty is represented by a known probability density function (PDF) $f_i$. For two uncertain objects $o_i$ and $o_j$ with uncertainty regions $\mathcal{R}_i$ and $\mathcal{R}_j$, respectively, the uncertain distance is computed by:

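$$\hat{d}(o_i, o_j) = \int_{\mathcal{R}_i} \int_{\mathcal{R}_j} d(x, y)\, f_i(x)\, f_j(y)\, dy\, dx \tag{1}$$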

where $d(x, y)$ is a distance measure between points $x \in \mathcal{R}_i$ and $y \in \mathcal{R}_j$. The uncertain distance is thus the expected distance between the two uncertain objects.

The medoid of a cluster is then the object in the cluster with the least average dissimilarity to all other objects in the cluster, so each cluster center is updated as:

$$m_k = \arg\min_{o_i \in C_k} \sum_{o_j \in C_k} \hat{d}(o_i, o_j) \tag{2}$$

In this paper, we assume that the uncertainty region of every location is a disk centered at the observed location $c_i$ with radius $R$, over which the true location is uniformly distributed. The PDF of this uniform disk in polar coordinates is:

$$f_i(r, \theta) = \frac{1}{\pi R^2} \tag{3}$$

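where $0 \le r \le R$ and $0 \le \theta < 2\pi$ are polar coordinates relative to $c_i$, and the density is taken with respect to the area element $r\,dr\,d\theta$.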
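If the uncertain distance is evaluated by Monte Carlo sampling (one possible approach; the text does not state how it is computed), samples must be drawn from this PDF. Under the uniform-disk assumption for a disk of radius $R$, integrating out $\theta$ gives the radial density, and inverting its CDF gives a sampler:

$$
\begin{aligned}
f(r) &= \int_{0}^{2\pi} \frac{1}{\pi R^2}\, r \, d\theta = \frac{2r}{R^2}, \qquad 0 \le r \le R,\\
F(r) &= \int_{0}^{r} \frac{2s}{R^2}\, ds = \frac{r^2}{R^2},\\
r &= F^{-1}(u) = R\sqrt{u}, \qquad u \sim \mathcal{U}(0, 1), \quad \theta \sim \mathcal{U}(0, 2\pi).
\end{aligned}
$$

Drawing $r$ uniformly on $[0, R]$ instead would over-sample points near the center, because the area element $r\,dr\,d\theta$ grows with $r$.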

In this way, equation (1) can be rewritten as:

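$$\hat{d}(o_i, o_j) = \int_{0}^{2\pi}\!\int_{0}^{R}\!\int_{0}^{2\pi}\!\int_{0}^{R} d\big(c_i + (r_i\cos\theta_i,\, r_i\sin\theta_i),\; c_j + (r_j\cos\theta_j,\, r_j\sin\theta_j)\big)\, \frac{r_i}{\pi R^2}\,\frac{r_j}{\pi R^2}\; dr_j\, d\theta_j\, dr_i\, d\theta_i \tag{4}$$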

which is the expansion of equation (1) from the rectangular coordinate system to the polar coordinate system. In our work, we use the Euclidean distance as the distance measure.
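As a hedged illustration, the sketch below estimates the uncertain distance by Monte Carlo with the Euclidean distance, sampling each disk with the inverse-CDF radius $r = R\sqrt{u}$. The function names, sample count, and seed are assumptions for illustration; the text does not specify how (4) is evaluated numerically.

```python
import numpy as np


def sample_disk(center, radius, n, rng):
    """Draw n points uniformly from a disk; r = R*sqrt(u) keeps the density uniform in area."""
    r = radius * np.sqrt(rng.random(n))
    theta = rng.uniform(0.0, 2.0 * np.pi, n)
    offsets = np.column_stack((r * np.cos(theta), r * np.sin(theta)))
    return np.asarray(center, dtype=float) + offsets


def uncertain_distance(c_i, c_j, radius, n=200_000, seed=0):
    """Monte Carlo estimate of the expected Euclidean distance between two
    independent points, each uniform on a disk of the given radius."""
    rng = np.random.default_rng(seed)
    p = sample_disk(c_i, radius, n, rng)
    q = sample_disk(c_j, radius, n, rng)
    return float(np.linalg.norm(p - q, axis=1).mean())


print(uncertain_distance((0, 0), (10, 0), radius=0.0))   # no uncertainty: plain distance
print(uncertain_distance((0, 0), (10, 0), radius=2.0))   # slightly above 10
```

The second estimate exceeds the center-to-center distance, as Jensen's inequality requires ($\mathbb{E}\lVert X - Y\rVert \ge \lVert \mathbb{E}[X - Y]\rVert$). For two points in the same unit disk, the estimate approaches the known mean distance $128/(45\pi) \approx 0.905$.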

The UK-medoids algorithm is summarized in Algorithm 1. First, the uncertain distances between all pairs of objects are computed. Note that these distances need to be computed only once for the whole algorithm, which reduces the computational complexity. Then, the initial cluster centers are chosen, and the algorithm enters its main loop, which has two phases: the first assigns each object to the cluster with the nearest center, and the second recomputes the cluster centers according to equation (2). The loop continues until the cluster centers no longer change.
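Algorithm 1 can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions: the uncertain distances are estimated by Monte Carlo for uniform disks, the distance of an object to itself is set to zero, and initialization uses a deterministic farthest-first rule because the text does not specify how the initial centers are chosen. Function names and the example radius are hypothetical.

```python
import numpy as np


def uncertain_distance_matrix(centers, radius, n_samples=2000, seed=0):
    """Monte Carlo estimate of eq. (1) for every pair of uniform-disk objects.

    Computed once before clustering, as in Algorithm 1. Euclidean distance.
    """
    rng = np.random.default_rng(seed)
    centers = np.asarray(centers, dtype=float)
    n = len(centers)
    r = radius * np.sqrt(rng.random((n, n_samples)))
    theta = rng.uniform(0.0, 2.0 * np.pi, (n, n_samples))
    pts = centers[:, None, :] + np.stack((r * np.cos(theta), r * np.sin(theta)), axis=-1)
    diff = pts[:, None, :, :] - pts[None, :, :, :]        # shape (n, n, S, 2)
    D = np.linalg.norm(diff, axis=-1).mean(axis=-1)
    np.fill_diagonal(D, 0.0)    # assumption: an object is at distance 0 from itself
    return D


def uk_medoids(D, k, max_iter=100):
    """Main loop of Algorithm 1 on a precomputed uncertain-distance matrix D."""
    # Assumed initialization: the most central object, then farthest-first.
    medoids = [int(np.argmin(D.sum(axis=1)))]
    while len(medoids) < k:
        medoids.append(int(np.argmax(D[:, medoids].min(axis=1))))
    medoids = np.array(medoids)
    for _ in range(max_iter):
        labels = np.argmin(D[:, medoids], axis=1)      # phase 1: nearest medoid
        new_medoids = medoids.copy()
        for c in range(k):                             # phase 2: eq. (2)
            members = np.flatnonzero(labels == c)
            if members.size:
                cost = D[np.ix_(members, members)].sum(axis=1)
                new_medoids[c] = members[np.argmin(cost)]
        if np.array_equal(new_medoids, medoids):       # centers unchanged: stop
            break
        medoids = new_medoids
    return medoids, np.argmin(D[:, medoids], axis=1)


# Usage: two well-separated groups of observed UE positions (metres).
rng = np.random.default_rng(1)
centers = np.vstack([rng.normal((0, 0), 3, (5, 2)), rng.normal((100, 0), 3, (5, 2))])
D = uncertain_distance_matrix(centers, radius=3.62)
medoids, labels = uk_medoids(D, k=2)
print(labels)
```

Because the medoid update only reads entries of `D`, the Monte Carlo cost is paid once, which mirrors the remark above that the distances are computed a single time.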

V-B Deep Reinforcement Learning

UK-medoids returns a set of clusters, and each cluster is served by one beam [6]. DRL is then performed for intra-beam radio resource allocation, aiming to satisfy the QoS requirements of both ultra-reliable low-latency communications (URLLC) and enhanced mobile broadband (eMBB) users. The Markov decision process of the DRL is defined as follows:

1) Actions: In each beam, an action allocates an RBG to a user.

2) States: The UEs' channel quality indicator (CQI) feedback, which represents the channel condition between the UEs and the BS, is defined as the state.

3) Reward: URLLC users need low latency and high reliability, whereas eMBB users require a high data rate, so the reward function must take both types of requirements into account. The reward function is given by:

| (5) |

where the reward depends on the SINR of the transmission link between an RBG and a user in the beam, the target SINR of eMBB users, the target latency of URLLC users, and the queuing delay. Here we use a target SINR to represent the high-reliability requirements of URLLC users. The sigmoid function $\sigma(\cdot)$ normalizes the reward:

$$\sigma(x) = \frac{1}{1 + e^{-x}} \tag{6}$$

Finally, we use a Long Short-Term Memory (LSTM) network to predict Q-values in the DRL. As a type of recurrent neural network, the LSTM can better capture long-term dependencies in data, which makes it well suited to complex wireless environments.
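To make the decision step concrete, here is a minimal sketch of epsilon-greedy RBG-to-user selection and a one-step Q-learning target with a sigmoid-normalized reward as in (6). It assumes the LSTM outputs one Q-value per (RBG, user) pair, computed from the CQI state; the LSTM itself is replaced by random stand-in values, and epsilon, the discount factor gamma, and the raw reward value are illustrative assumptions rather than the settings or the reward of (5) used in this work.

```python
import numpy as np


def sigmoid(x):
    """Eq. (6): squashes a raw reward into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def select_users(q_values, epsilon, rng):
    """Epsilon-greedy action per RBG.

    q_values has shape (n_rbg, n_users); returns the user index chosen for each RBG.
    """
    n_rbg, n_users = q_values.shape
    greedy = np.argmax(q_values, axis=1)              # exploit: best user per RBG
    explore = rng.random(n_rbg) < epsilon             # which RBGs explore this TTI
    random_users = rng.integers(0, n_users, n_rbg)    # explore: uniform random user
    return np.where(explore, random_users, greedy)


def q_target(raw_reward, next_q, gamma=0.9):
    """One-step Q-learning target: sigma(raw reward) + gamma * max_a' Q(s', a')."""
    return sigmoid(raw_reward) + gamma * np.max(next_q, axis=-1)


# Usage with stand-in Q-values for 4 RBGs and 3 users in one beam.
rng = np.random.default_rng(0)
q = rng.random((4, 3))                      # placeholder for the LSTM's Q-value output
allocation = select_users(q, epsilon=0.1, rng=rng)
target = q_target(raw_reward=0.0, next_q=q[0], gamma=0.9)
```

Normalizing the reward with the sigmoid keeps the target bounded, which tends to stabilize the regression of the Q-network toward it.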

VI Simulation

VI-A Simulation Settings

VI-A1 Localization settings and dataset

We divide the dataset into 4 regions and each region has its own FFNN to predict the UE locations. The FFNN architecture is . Sigmoid activation functions are employed for hidden layers, whereas linear activation functions are used for output layers.

VI-A2 Resource Allocation Settings

We perform the simulations in the MATLAB 5G Toolbox. The mmWave network consists of one gNB, and clustering is performed every 10 TTIs based on the UE trajectories. User traffic follows a Poisson distribution, and the packet size is 32 bytes. Three beams with a 60° beam angle are predefined. The total simulation time is 1400 TTIs, and each TTI is 1/7 ms. Each simulation is repeated 5 times, and results are reported with 95% confidence intervals.
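To make these settings concrete, the sketch below converts an offered load into a per-TTI Poisson packet arrival rate using the stated 32-byte packets and 1/7 ms TTIs. It treats the load as a single Poisson stream, which is an assumption (whether the loads in Section VI-B2 are per UE or aggregate is not stated here), and the function name is hypothetical. For the 4 Mbps load, the rate is 4×10⁶ / 256 = 15,625 packets/s, or about 2.23 packets per TTI; 1400 TTIs correspond to 200 ms of simulated time and 140 clustering rounds.

```python
import numpy as np

PACKET_BITS = 32 * 8        # 32-byte packets
TTI_S = 1e-3 / 7            # one TTI lasts 1/7 ms
N_TTI = 1400                # total simulated TTIs
CLUSTER_PERIOD = 10         # clustering is re-run every 10 TTIs


def poisson_arrivals(load_bps, n_tti=N_TTI, seed=0):
    """Mean packets per TTI for an offered load, plus one Poisson arrival trace."""
    lam = load_bps / PACKET_BITS * TTI_S
    rng = np.random.default_rng(seed)
    return lam, rng.poisson(lam, n_tti)


lam, arrivals = poisson_arrivals(4e6)
sim_time_ms = N_TTI * TTI_S * 1e3        # simulated time in milliseconds
n_clusterings = N_TTI // CLUSTER_PERIOD  # number of clustering rounds
print(round(lam, 3), round(sim_time_ms, 3), n_clusterings)   # 2.232 200.0 140
```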

For the UE positions, the locations provided by the dataset are treated as exact locations, while the outputs of the localization methods are treated as locations with error. We consider four scenarios:

- Scenario 1: K-means for clustering and DRL for resource allocation, using locations with error. This scenario is denoted K-DRL+Location with Error.

- Scenario 2: The same as Scenario 1, except that UK-means is used for clustering. UK-means is another uncertain clustering method developed from K-means [18]; it updates each center as the expected value over the PDFs, and the distance between objects is the expected distance. This scenario is denoted UK-DRL+Location with Error.

- Scenario 3: Our proposed UK-medoids based clustering method and DRL for resource allocation. This scenario is denoted UKM-DRL+Location with Error.

- Scenario 4: Exact locations with K-means for clustering, which serves as the optimal baseline. This scenario is denoted K-DRL+Exact Location.

In the following, we first present the localization and coverage results, and then the network performance in terms of delay and throughput.

VI-B Simulation Results

Table III: Localization error (m) of the method in [15] and the proposed method

| Region | Method in [15] RMSE | Method in [15] MAE | Proposed RMSE | Proposed MAE |
| --- | --- | --- | --- | --- |
| Region 1 | 11.68 | 6.03 | 3.82 | 1.78 |
| Region 2 | 23.11 | 10.68 | 3.52 | 1.21 |
| Region 3 | 13.68 | 8.85 | 1.38 | 0.97 |
| Region 4 | 16.72 | 6.12 | 3.75 | 1.54 |
| Total | 17.11 | 7.83 | 3.62 | 1.51 |

VI-B1 Localization and coverage results

Localization accuracy is measured by the root mean squared error (RMSE) and the mean absolute error (MAE), shown in Table III. With the added features, the proposed method reduces the RMSE to below 4 meters in every region. Overall, the RMSE is reduced from 17.11 meters to 3.62 meters and the MAE from 7.83 meters to 1.51 meters. This improvement comes from the added vision-aided pixel features.
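For reference, a minimal sketch of the two metrics follows, assuming the per-sample error is the Euclidean distance between the predicted and true 2-D positions (the text does not state whether errors are computed per coordinate or per position).

```python
import numpy as np


def localization_errors(pred, true):
    """RMSE and MAE of 2-D position estimates, both in metres."""
    e = np.linalg.norm(np.asarray(pred, dtype=float) - np.asarray(true, dtype=float), axis=1)
    return float(np.sqrt(np.mean(e ** 2))), float(np.mean(e))


pred = [[3.0, 4.0], [0.0, 0.0], [1.0, 0.0]]
true = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
rmse, mae = localization_errors(pred, true)   # per-sample errors are 5, 0 and 1 m
```

Since RMSE squares the errors before averaging, it is never smaller than MAE and is more sensitive to large outliers, which is consistent with every row of Table III.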

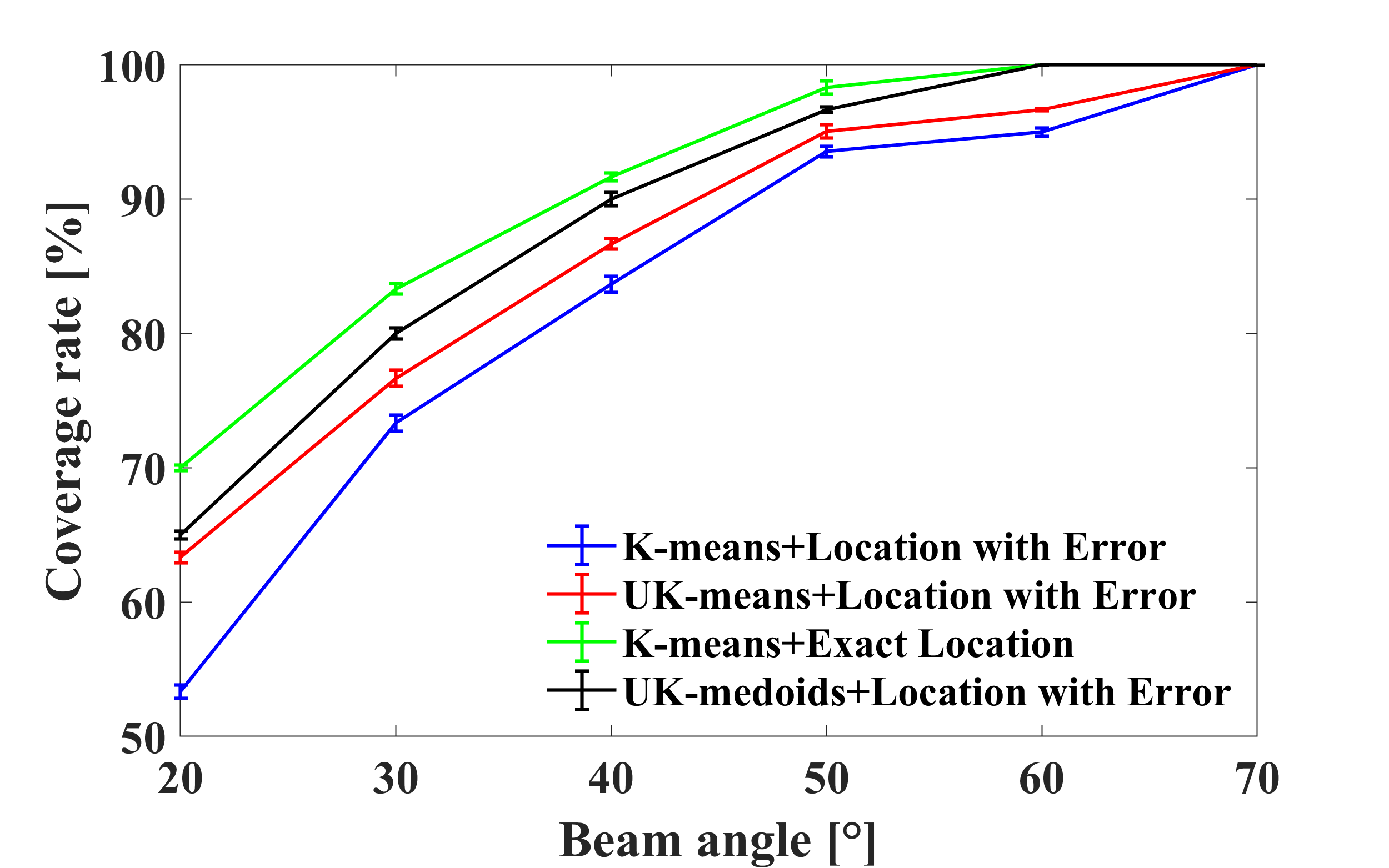

After clustering, one beam serves each cluster, so it is straightforward to determine whether a UE is covered by a beam. The coverage rate is computed as the number of covered UEs divided by the total number of UEs. Fig. 4(a) shows the coverage rate versus the number of beams when the beam angle is set to 60°. Scenarios 3 and 4 need 5 beams to achieve full coverage, while Scenarios 1 and 2 need 6 beams. Since exact locations are used, Scenario 4 has the highest coverage rate. With distorted locations, UK-medoids outperforms UK-means, while K-means has the lowest coverage rate. Fig. 4(b) presents the average coverage rate under different beam angles when the number of beams is 5, which leads to the same conclusion as Fig. 4(a). In particular, when the beam angle is 20°, UK-medoids improves the coverage rate by 7.7% over UK-means.
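A simplified sketch of the coverage computation follows. It assumes a 2-D geometry with the gNB at the origin, treats a UE as covered when its true azimuth lies within half the beam angle of some beam's pointing direction, and ignores range. In this setting the beam directions would come from clustering the observed (possibly erroneous) locations, while coverage is evaluated on the true locations. The function name and geometry are assumptions, not the evaluation code used for Fig. 4.

```python
import numpy as np


def coverage_rate(true_pos, beam_dirs_deg, beam_angle_deg, gnb=(0.0, 0.0)):
    """Fraction of UEs whose true azimuth lies inside at least one beam."""
    d = np.asarray(true_pos, dtype=float) - np.asarray(gnb, dtype=float)
    ue_az = np.degrees(np.arctan2(d[:, 1], d[:, 0]))
    beams = np.asarray(beam_dirs_deg, dtype=float)
    # Angular offset of every UE from every beam, wrapped to [-180, 180).
    offset = (ue_az[:, None] - beams[None, :] + 180.0) % 360.0 - 180.0
    covered = np.any(np.abs(offset) <= beam_angle_deg / 2.0, axis=1)
    return float(covered.mean())


# Usage: UEs at azimuths 0, 90 and 180 degrees; two 60-degree beams at 0 and 180 degrees.
ues = [[100.0, 0.0], [0.0, 100.0], [-100.0, 0.0]]
print(coverage_rate(ues, beam_dirs_deg=[0.0, 180.0], beam_angle_deg=60.0))   # 2 of 3 UEs covered
```

Wrapping the angular offset avoids a spurious miss for UEs near the ±180° boundary, where a naive difference would report an offset of almost 360°.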

VI-B2 Resource Allocation

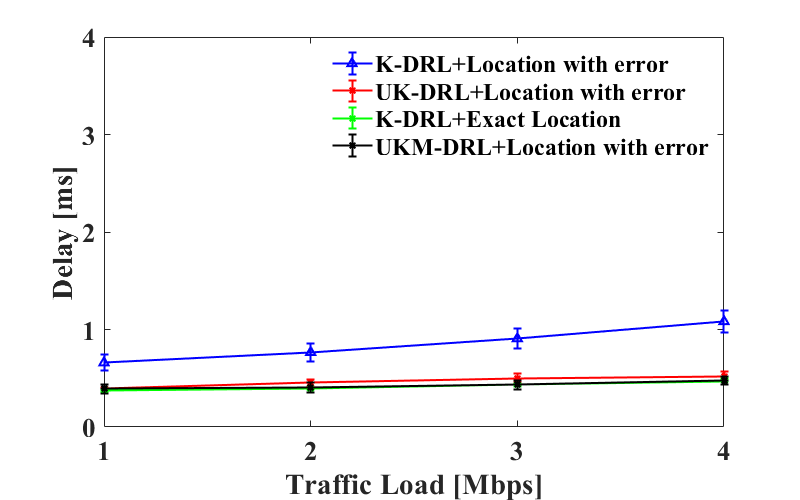

In this section, we compare network performance under different traffic loads and algorithms. As mentioned above, the localization error is 17.11 meters with the method from [15] and 3.62 meters with our proposed method. We present results under both error levels to better illustrate the advantage of the proposed UKM-DRL method.

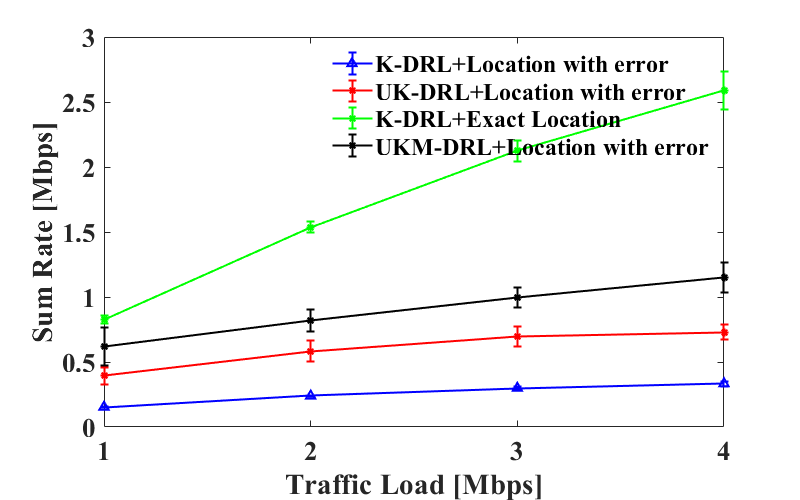

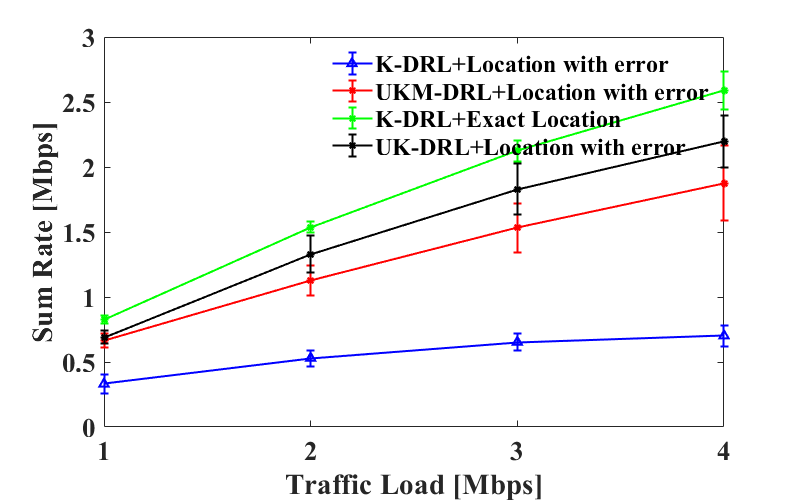

Fig. 5 shows the total data rate of the different scenarios under various traffic loads when the localization error is 17.11 meters and 3.62 meters. As the optimal baseline, Scenario 4 achieves the highest throughput, because exact locations allow the beams to cover most UEs without any uncertainty. Under both localization errors, UK-medoids outperforms UK-means. K-means produces the worst outcome because it cannot handle uncertainty. Moreover, Scenarios 1 to 3 all benefit from the reduced localization error, as indicated by their higher sum rates, which further demonstrates the value of the proposed vision-aided localization method.

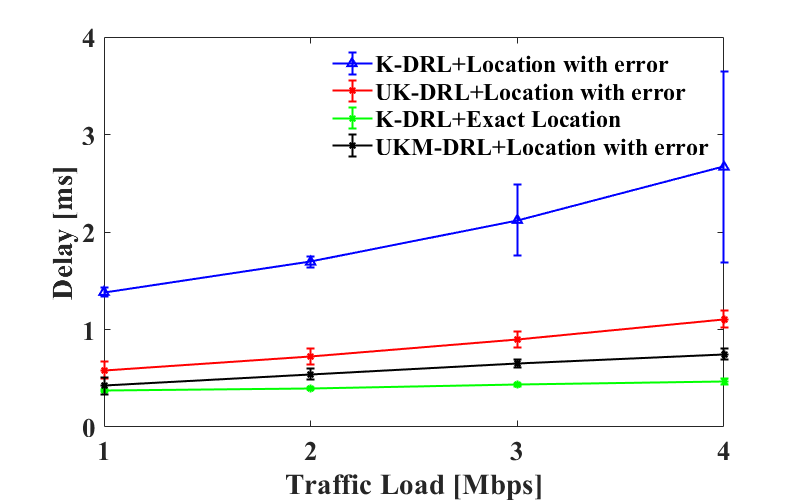

Similarly, Fig. 6 presents the delay under different traffic loads when the localization error is 17.11 meters and 3.62 meters. The results are consistent with Fig. 5: the optimal baseline has the lowest latency, and with distorted locations, UKM-DRL has lower latency than UK-DRL. Since K-means cannot handle localization errors, K-DRL has the highest latency. When the error is 3.62 meters, the latency of both UK-DRL and UKM-DRL is very close to that of the optimal baseline, and compared with the results in Fig. 6(a), the latency of Scenarios 1 to 3 is greatly reduced. When the traffic load is 4 Mbps, UKM-DRL reduces latency by 32.4% relative to UK-DRL under the 17.11-meter error and by 7.7% under the 3.62-meter error.

VII Conclusion

Machine learning is considered a promising technique for solving the pressing challenges of future 6G networks. In this paper, we investigate joint vision-aided sensing and communications for beam management in 6G networks. We first introduce a novel vision-aided localization method to improve localization accuracy. Then we propose a UK-medoids based clustering method to handle localization uncertainty and apply deep reinforcement learning for intra-beam resource allocation. The simulations demonstrate that the proposed method greatly reduces the localization error and achieves lower delay and higher data rate than the baseline algorithms. In the future, we will incorporate more vision-based methods into localization.

Acknowledgment

This work is supported by the Ontario Centers of Excellence (OCE) 5G ENCQOR program and Ciena, the NSERC Collaborative Research and Training Experience Program (CREATE) under Grant 497981, and the Canada Research Chairs Program. We would like to thank Medhat Elsayed and Hind Mukhtar for their help with earlier versions of this work.

References

- [1] J. Cui, Z. Ding, and P. Fan, “The Application of Machine Learning in mmWave-NOMA Systems,” in IEEE Vehicular Technology Conference (VTC Spring), pp. 1-6, June 2018.

- [2] T. Nishio, Y. Koda, J. Park, M. Bennis, and K. Doppler, “When Wireless Communications Meet Computer Vision in Beyond 5G,” IEEE Communications Standards Magazine, vol. 5, no. 2, pp. 76-83, June 2021.

- [3] S. Nilwong, D. Hossain, S. Kaneko, and G. Capi, “Deep Learning-Based Landmark Detection for Mobile Robot Outdoor Localization,” Machines, vol. 7, no. 2, pp. 1-14, Apr. 2019.

- [4] S. Ramakrishna and E. Mary, “A Survey of Clustering Uncertain Data Based Probability Distribution Similarity,” International Journal of Computer Science and Network Security, vol. 14, no. 9, pp. 77-81, September 2014.

- [5] H. Zhou, M. Elsayed, and M. Erol-Kantarci, “RAN Resource Slicing in 5G Using Multi-Agent Correlated Q-Learning,” in Proceedings of 2021 IEEE PIMRC, pp. 1-6, September 2021.

- [6] M. Elsayed and M. Erol-Kantarci, “Radio Resource and Beam Management in 5G mmWave Using Clustering and Deep Reinforcement Learning,” in IEEE Global Communications Conference (GLOBECOM), pp. 1-6, December 2020.

- [7] R. Di Taranto, S. Muppirisetty, R. Raulefs, D. Slock, T. Svensson, and H. Wymeersch, “Location-Aware Communications for 5G Networks: How location information can improve scalability, latency, and robustness of 5G,” IEEE Signal Processing Magazine, vol. 31, no. 6, pp. 102-112, November 2014.

- [8] J. Choi, Y. Park, J. Song, and I. Kweon, “Localization using GPS and VISION aided INS with an image database and a network of a ground-based reference station in outdoor environments,” International Journal of Control, Automation and Systems, May 2011.

- [9] J. Biswas and M. Veloso, “Depth camera based indoor mobile robot localization and navigation,” in IEEE International Conference on Robotics and Automation, pp. 1697-1702, May 2012.

- [10] S. Nilwong, D. Hossain, S.-I. Kaneko, and G. Capi, “Deep learning based landmark detection for mobile robot outdoor localization,” Machines, vol. 7, no. 2, pp. 25-38, 2019. [Online]. Available: https://www.mdpi.com/2075-1702/7/2/25

- [11] J. Cui, Z. Ding, P. Fan, and N. Al-Dhahir, “Unsupervised Machine Learning-Based User Clustering in Millimeter-Wave-NOMA Systems,” IEEE Transactions on Wireless Communications, vol. 17, pp. 7425-7440, November 2018.

- [12] B. Kim et al., “Non-orthogonal Multiple Access in a Downlink Multiuser Beamforming System,” in IEEE Military Communications Conference (MILCOM), pp. 1278-1283, November 2013.

- [13] D. Marasinghe, N. Jayaweera, N. Rajatheva, and M. Latva-Aho, “Hierarchical User Clustering for mmWave-NOMA Systems,” in 6G Wireless Summit (6G SUMMIT), pp. 1-5, February 2020.

- [14] J. T. H. L. Christiansen, “Mobile communication system measurements and satellite images,” 2019. [Online]. Available: https://dx.doi.org/10.21227/1xf4-eg98

- [15] H. Mukhtar and M. Erol-Kantarci, “Satellite Image and Received Signal-based Outdoor Localization using Deep Neural Networks,” in IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), pp. 1-6, September 2021.

- [16] S. Chowdhury, B. Verma, M. Tom, and M. Zhang, “Pixel characteristics based feature extraction approach for roadside object detection,” in International Joint Conference on Neural Networks, pp. 1-8, July 2015.

- [17] F. Gullo, G. Ponti, and A. Tagarelli, “Clustering Uncertain Data Via K-Medoids,” in Scalable Uncertainty Management, pp. 229-242, 2008.

- [18] M. Chau, R. Cheng, B. Kao, and J. Ng, “Uncertain data mining: An example in clustering location data,” in Pacific-Asia Conference on Knowledge Discovery and Data Mining, vol. 2, pp. 199-204, April 2006.