Joint Object Contour Points and Semantics for Instance Segmentation

Abstract

The attributes of object contours has great significance for instance segmentation task. However, most of the current popular deep neural networks do not pay much attention to the object edge information. Inspired by the human annotation process when making instance segmentation datasets, in this paper, we propose Mask Point R-CNN aiming at promoting the neural network’s attention to the object boundary. Specifically, we innovatively extend the original human keypoint detection task to the contour point detection of any object. Based on this analogy, we present an contour point detection auxiliary task to Mask R-CNN, which can boost the gradient flow between different tasks by effectively using feature fusion strategies and multi-task joint training. As a consequence, the model will be more sensitive to the edges of the object and can capture more geometric features. Quantitatively, the experimental results show that our approach outperforms vanilla Mask R-CNN by 3.8% on Cityscapes dataset and 0.8% on COCO dataset.

keywords:

Instance Segmentation , Multi-task Learning , Feature Fusion , Objects Contour\ul

1 Introduction

In recent years, due to the emergence of deep networks, the performance of many computer vision applications has been dramatically improved. Classification, object detection, and instances segmentation are hot topics in computer vision community, especially instances segmentation, which includes two tasks: instance recognition and semantic segmentation. Compared with object detection and image level classification, it requires more sophisticated annotation information, therefore, its framework requires a more complex design.

Recently, like Mask R-CNN [1] and MaskLab [2], the champion algorithms of COCO dataset [3] instance segmentation task, the main idea is to divide the instance segmentation pipeline into two stages: the first stage is to locate and classify the objects in the image, and the second stage is to classify the foreground in the bounding box. In this paper, we focus on improving the instance segmentation task of Mask R-CNN, which is a further extension of Faster R-CNN [4]. Specifically, it adds a parallel mask head to the original Faster R-CNN box head. First of all, it obtains the region of interest with a spatial scale of through RoIAlign operation, and then a mask tensor with a spatial scale of is predicted by a small head network with a series of convolution and upsampling operations. But this kind of prediction result is only obtained through binary classification and thereby cannot well represent the geometric properties of the object. To address this drawback, we added an auxiliary task on the basis of Mask R-CNN. As is known, in the pipeline of Mask R-CNN, for the person category in COCO dataset, it can not only perform detection and instance segmentation, alternatively, it can also perform human keypoint detection task simultaneously (i.e. keypoint R-CNN). Inspired by the process of labeling instance segmentation objects, we innovatively extend the keypoint detection task to all object categories. In other words, we add a new keypoint detection auxiliary task to Mask R-CNN, which can enhance the model’s attention to the object boundary by detecting the contour of the object. To sum up, in our method, we use mask head pixel-by-pixel classification as the main task, and focus on the object edge information by aggregating the target edge points simutanously. As a consequence, this multi-task joint training model can well transform the geometric features of the object into the constraints of the binary mask of instance segmentation, and further sharpen the edges of the instance segmentation results to improve the detection performance. Without losing generality, we validate our method on the Cityscapes dataset [5] and the large-scale object detection COCO dataset, and compared with Mask R-CNN baseline with ResNet-50, our method can effectively improve the performance of the model, the AP (Average precision) value on the Cityscapes dataset is 36.9(ours) vs 33.1, and the result on the COCO dataset is 35.4(ours) vs 34.6.

In summary, the main contributions of this work are highlighted as follows:

1. We propose the Mask Point R-CNN, illustrated in Fig. 1, which is a new exploration of instance segmentation by combining the edge information of the object.

2. Compared to Mask R-CNN, we utilize features in the mask prediction branch and keypoint prediction branch with different attributes, and perform joint training through multi-tasks features fusion.

3. Different from the human body keypoint detection, we extend the keypoint detection task to any category boundary point detection, and experimentally prove that it can enhance the network’s attention to the model boundary.

The rest of this paper is organized as follows. In Section 2, we briefly review related work on instance segmentation, multi-task learning, and keypoint detection. In Section 3, we describe the motivation and details of our proposed algorithm. Experimental details and analysis of the results are elaborated in Section 4. In Section 5, we analyze the interaction between our method subtasks and verified their effectiveness through visualization. Finally, we conclude the paper in Section 6.

2 Related Work

This section provides an overview of literatures on instance segmentation, multi-task learning, and keypoint detection.

2.1 Instance segmentation

There are two common ways of achieving instance segmentation: detection first and segmentation first. The detection first method consists of using the object detector to obtain the bounding boxes coordinates preferentially and then segment the instances in the bounding boxes. As an outstanding representative work, He . [1] proposed Mask R-CNN to perform the instance segmentation task by adding a mask prediction branch on the basis of object classification and bounding boxes regression task of Faster R-CNN [4]. Many subsequent methods were proposed based on Mask R-CNN [6, 7, 8, 9, 2]. For instance, Chen . [2]proposed MaskLab that utilized semantic segmentation and direction prediction to implement mask prediction. Huang . [6] proposed MS R-CNN(Mask Scoring R-CNN) that added a mask IoU (Intersection over Union) head by combining instance features and corresponding prediction masks in Mask R-CNN to enhance the consistency between mask quality and mask score. Liu . [7] proposed PAN (Path Aggregation Network) which combined more low-level features through Bottom-up Path Augmentation, and obtained more accurate segmentation through AFP (Adaptive Feature Pooling) and fully-connected fusion. Recently, there are also many excellent detection first methods that focus on building real-time instance segmentation systems [10, 11, 12, 13, 14, 15]. Contrary to the previous method, the segmentation first method is to classify each pixel in the image preferentially and then group them into a single instance. For instance, Hariharan . [16] proposed SDS (Simultaneous detection and segmentation) to locate segmentation candidate regions. This method uses multi-scale combinatorial grouping [17] and obtain bounding boxes simultaneously to achieve instance segmentation. The InstanceCut [18] method utilized the edge graph to divide the segmentation map into different objects. Analogously, the work aforementioned is to perform segmentation tasks by classifying the foreground and background, and our work is to get a better instance segmentation effect by paying attention to the boundary of the object.

2.2 Multi-Task learning

MTL (Multi-Task Learning) is an inductive transfer method that makes full use of domain-specific information hidden in the training signals of multiple related tasks. During backward propagation, multi-task learning allows shared hidden layer-specific features to be used by other tasks. In particular, MTL can learn features that are applicable to several different tasks. Such features are often not easy to learn in a single-task learning network. MTL is widely used in computer vision [6, 8, 9], speech, natural language processing, and other fields [19]. In [20], Su . calibrates missing features in the learning process by combining low-level raw binary attributes and intermediate attributes. In [21], Abdulnabi . proposed the use of a combination of hidden matrix and multi-matrix to decouple model parameters to allow CNN (Convolutional Neural Network) models to share visual knowledge between different categories simultaneously. In [22], Yuan . enhanced the robustness of model coefficient estimation by constructing a hierarchical sparse learning model of multi-task interconnection. In [23], Yim . added an additional task to improve the ability of DNN (Deep Neural Network) to maintain face identity, which using a human face and a binary target pose code to generate a face image with the same identity and target pose. In our method, we use the keypoint detection task in conjunction with the instance segmentation task in the original Mask R-CNN to calibrate the boundary features of the object, the purpose of which is to enhance the gradient flow between different tasks and the fusion of different attribute features.

2.3 Keypoint detection

Keypoint detection technology has been widely used in computer vision research, such as human pose detection tasks [24, 25, 26] and anchor free object detection tasks [27, 28, 29, 30]. The purpose of human body keypoint estimation task is to detect 2D keypoints of the human body, such as joints, facial features, etc., and describes human bone information by these keypoints. Lately, keypoint estimation techniques are also used in many detection tasks. Generally, the current object detection method can be divided into anchor base [31, 32, 33, 34, 35, 4] and anchor free according to whether the proposal is obtained by sliding window. Specifically, the anchor base method utilizes sliding window to generate a bunch of candidate anchor boxes, and then remove the redundant bounding boxes by NMS (Non-Maximum Suppression) [36] to get the final detection results. The anchor free method utilizes keypoint detection technology [37, 38] to obtain the center point or extreme point of the object for target positioning. By contrast, the detection points of this method are all located in the interior of the detected object, so it is more conducive to the learning of network features. Following that intuition, we utilize the keypoint detection branch to detect the edge aggregation points of the target to capture more geometric features of objects.

3 Method

In this section, we will first introduce the motivation of our method, and then briefly review the Mask R-CNN algorithm, finally, describe the design of our model in detail from three aspects: boundary point extraction, model framework, and loss function.

3.1 Motivation

Since most of the existing instance segmentation methods are implemented by pixel-by-pixel classification, these methods depend on finer label information relative to the object detection task. For this reason, making a instance segmentation dataset will be a major expenditure of time and effort. During the annotation process, the annotator needs to obtain the edge contour of the instance by connecting the edge points for building the instance’s mask, so the boundary point features of the instance can well represent its geometric information. We all know that deep learning is a typical data-driven method. Therefore, in order to improve the accuracy of instance segmentation when the amount of data is limited, inspired by the artificial annotating process, we obtain a fixed number of edge aggregation points by uniformly sampling the edge contours of the groundtruth mask, and then utilize the newly added keypoint prediction branch to predict these edge aggregation points. Finally, the binary segmentation mask is obtained by fusing the edge information prediction and the mask prediction.

3.2 Mask R-CNN

Next, we briefly review the framework of Mask R-CNN. Mask R-CNN is extended on the basis of Faster R-CNN, in addition to the shared backbone network and FPN (Feature Pyramid Networks), it in parallel adds a new branch for predicting the object mask on the bounding box recognition branch. The network can also be easily extended to other tasks, such as person keypoint detection (i.e. Keypoint R-CNN). In Keypoint R-CNN, its keypoint head is very similar to the mask head of Mask R-CNN. The mask head obtains an output resolution of through four convolutional layers with a channel of 256 which followed by an up-sampling layer, and finally employ a convolutional layer to make its dimensions consistent with the object category. Meanwhile, the keypoint head obtains an output resolution of through eight convolutional layers with 512 channels and followed by two upsampling layers. Besides, in order to implement Human Pose Estimation in Mask R-CNN, it uses one-hot mask to encode each keypoint position, and the structural design is similar to the mask branch, which uses fully convolutional network to predict a binary mask for each keypoint.

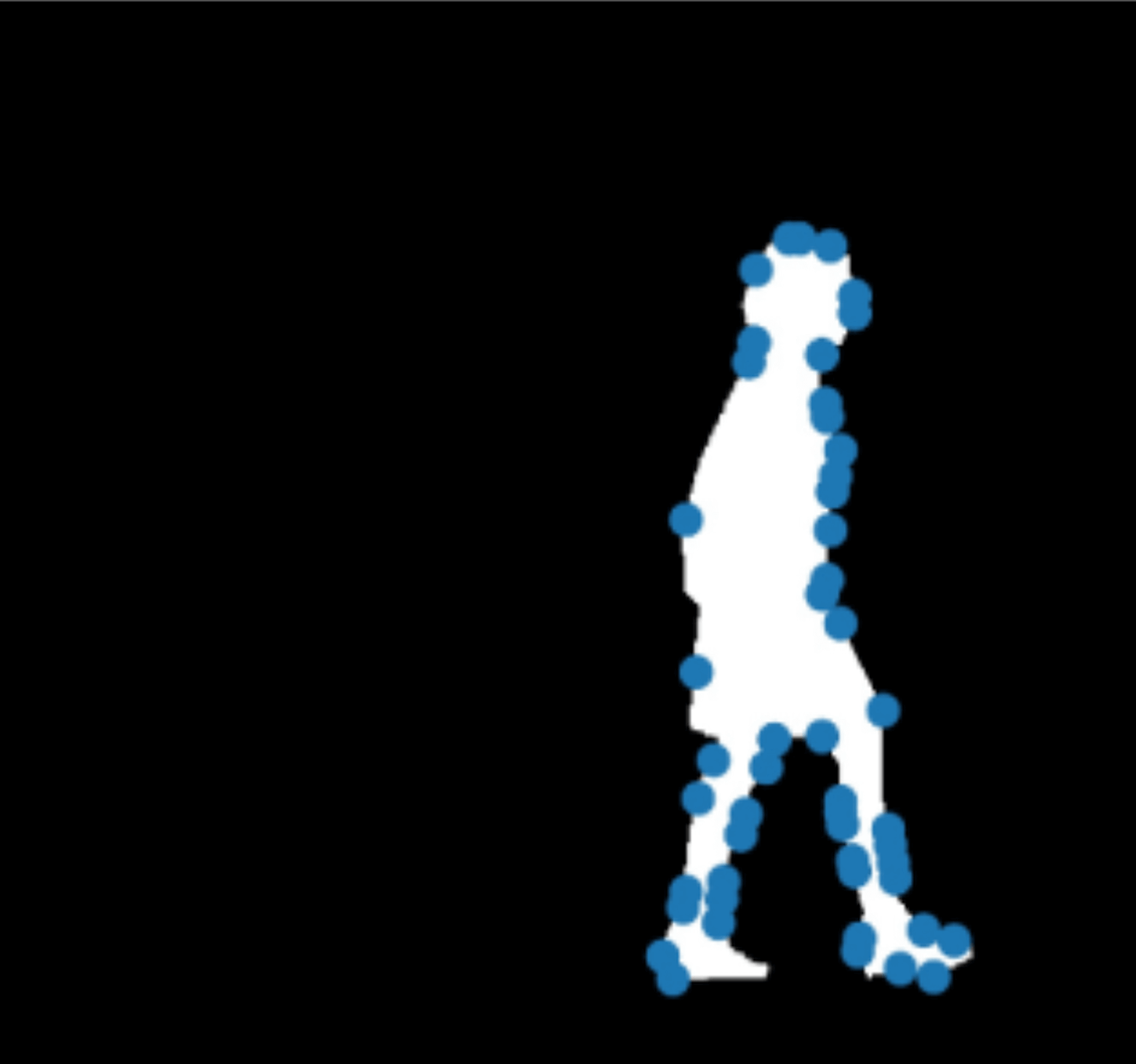

3.3 Boundary point extraction

To train our keypoint detection subtask, we need the labels of the object edge points. But the dataset used for instance segmentation only provides binary groundtruth mask, so we need to make labels for keypoint detection subtasks ourselves. In the keypoint detection task, a heat map is generated for each detection point. Thus, for different instance targets in the training data, we need to extract the contour boundary points of the object to form training labels. However, the CNN (Convolutional Neural Network) network require the input training data with a constant channel dimension, hence we need to extract a fixed number of boundary points correspondingly. In this work, we generate training labels for keypoint detection by sampling the instance contours, as shown in the Fig. 3. But due to the large difference in the scale of the instances in the training dataset, the number of points obtained by sampling may be small. In this regard, we complement it to the required amount by randomly selecting existed sampling points. Besides, we also explored the different sampling method, which will be explained in detail in the experimental section.

| Methods | AP[val] | AP | AP50 | person | rider | car | truck | bus | train | motorcycle | bicycle |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Kendall . [39] | - | 21.6 | 39.0 | 19.2 | 21.4 | 36.6 | 18.8 | 26.8 | 15.9 | 19.4 | 14.5 |

| Arnab . [40] | - | 23.4 | 45.2 | 21.0 | 18.4 | 31.7 | 22.8 | 31.1 | 31.0 | 19.6 | 11.7 |

| SGN [41] | - | 25.0 | 44.9 | 21.7 | 20.0 | 39.4 | 24.7 | 33.2 | 30.8 | 17.7 | 12.3 |

| PolygonRNN++ [42] | - | 25.4 | 45.4 | 29.3 | 21.7 | 48.2 | 21.1 | 32.3 | 23.7 | 13.6 | 13.6 |

| Neven . [43] | - | 27.6 | 50.8 | 34.5 | 26.0 | 52.4 | 21.6 | 31.1 | 16.3 | 20.0 | 18.8 |

| GMIS [44] | - | 27.6 | 44.6 | 29.2 | 24.0 | 42.7 | 25.3 | 37.2 | 32.9 | 17.5 | 11.8 |

| BshapeNet+ [fine-only] [45] | - | 27.3 | 50.4 | 29.7 | 23.3 | 46.7 | 26.0 | 33.3 | 24.8 | 20.3 | 14.1 |

| Mask R-CNN[fine-only] [1] | 31.5 | 26.2 | 49.9 | 30.5 | 22.7 | 46.9 | 22.8 | 32.2 | 18.6 | 19.1 | 16.0 |

| Mask R-CNN*[fine-only] [1] | 33.1 | 28.5 | 55.0 | 34.0 | 25.7 | 51.3 | 24.4 | 32.1 | 22.1 | 20.9 | 17.5 |

| Ours[fine-only] | 36.9 | 31.2 | 56.1 | 37.3 | 28.2 | 55.9 | 28.6 | 35.1 | 23.7 | 21.3 | 19.8 |

3.4 Model framework

According to the definition in [46], we can use symbols to describe multi-task training deep learning models, where represents the number of subtasks. For our framework , it includes the bounding box regression task, the classification task, the target segmentation task, and the newly added keypoint detection task. Suppose that our dataset contains a total of training samples, then , where is the training instance in and is its corresponding training label. In our approach, we used the same training data for different learning tasks with different training labels, i.e. . More formally, the training label for the regression task of the bounding box is the coordinate of the center point of the groundtruth and its width and height. And for the target segmentation task, the training label is the corresponding binary mask, whereas for the keypoint detection task, the training label is the edge aggregation point obtained by sampling the target boundary.

Fig. 1 shows the general architecture of our model. In our pipeline, the box head and mask head are standard components of original Mask R-CNN. Obviously, we can simultaneously perform keypoint joint training with other heads by adding the keypoint detection branch. Suppose that we utilize edge aggregation points and the center point of the object, a total of points, as the keypoint labels information for training. And then a heat map will be generated for each keypoint in the keypoint detection task of Mask R-CNN, so we can get a output mask tensor eventually. As for the mask prediction branch, a output tensor is produced through the fully convolutional network, where represents the total number of object categories. In addition, for capturing the overall edge information of the target, we do the channel-wise addition on the output features of the keypoint prediction branch for mapping the information of multiple keypoint with one hot encoding onto a single heat map. And in the next steps we reduce the heat map into a feature map with the same spatial scale as the mask prediction branch output by applying a convolution layer. In the end, we broadcast the edge information of the target to each channel of the mask prediction branch through feature fusion operation. There is one more thing should notice that we do not use ReLU (Rectified Linear Unit) activation function after the downsampling convolutional layer. As a consequence, our model can pay more attention to the segmentation edge of the object through the direct prediction of the object boundary point and the indirect fusion with the object segmentation feature output by the mask head.

The above fusion process could be mathematically described as follows:

where represents channel-wise multiplication, stands for the stride of the convolution operation, stands for the output features of the keypoint prediction branch, and stands for the output features of the mask prediction branch.

3.5 Loss function

In original Mask R-CNN, the multi-task loss is defined as three parts: , , and . For our model we calculate the loss in the keypoint detection task by using the cross entropy function, which is the same as defined in Mask R-CNN. After the keypoint detection is added to our model, the loss function can be updated as follows:

where represents the weight parameter to balance the loss of the keypoint detection task.

4 Experiments

In this section, we will first introduce our experimental dataset, evaluation criteria, and model parameter settings. Then we conduct ablation experiments from two aspects, namely the edge feature fusion and influence of keypoint. Moreover, we report the results of instance segmentation only using the edge features of the keypoint.

4.1 Dataset and metrics

We verify our algorithm on the Cityscapes dataset, which contains a variety of stereo video sequences recorded in street scenes from 50 different cities. It provides 5000 images with fine annotations of a fixed resolution of , which are splitted into 2975, 500 and 1525 images for train/val/test, and the test dataset labels are not publicly released. We evaluate results based on AP (Average precision) and AP50. We conducte plenty of ablation experiments on the validation set and provided results on the test dataset by uploading our results to the server. We compare our model with other state-of-the-art methods on Cityscapes test subset, and the results are shown Table 1. Training on “fine-only” data, our method outperforms Mask R-CNN by 2.7% on test subset. Moreover, in order to verify that our method is not limited to a specific dataset, we perform experiment on COCO dataset to verify the generalization of our method. And in COCO dataset, it provides 115k images for training and 5k images for verification, and 20k images for testing.

4.2 Implementation Details

We perform our experiments based on the torchvision detection module with a pytorch backend [47]. We take 4 images in one image batch for training. The shorter edges of the images are randomly sampled from [800, 1024] for reducing overfitting. Each training is carried out on two nvidia titan xp GPUs. For instance segmentation task the mask spatial scale is , and for keypoint detection task is . We randomly horizontal flip the input image with a probability of 0.5 to augment the dataset. Because we use the different number of GPUs, different training strategies are used for Citysacpes dataset compared with Mask R-CNN. We train our model a total of 64 epochs, with a learning rate of 0.005 for the fore 48 epochs and 0.0005 for the remaining 16 epochs. Following Mask R-CNN, we use SGD for gradient optimization with weight decay 0.0001 and momentum 0.9. ResNet-50 FPN [48] is taken as the initial model on this dataset, if not specially noted. And for keypoint detection task we use uniform sampling with 100 sampling points. For the COCO dataset we train our model for 24 epochs, with an initial learning rate of 0.005, and reduce it by 0.1 after 16 and 22 epochs, respectively.

4.3 Edge Feature Fusion

The structural design choices for feature fusion: In our framework, feature fusion between multiple tasks is required, but the mask spatial scale of instance segmentation task and keypoint detection task is different, vs . Therefor, we need to ensure that the input features of the fusion operation have the same spatial scale. There are a few design choices shown in Fig. 4 and explained as follows:

| Setting | AP | AP50 |

|---|---|---|

| Mask R-CNN re-implement | 33.1 | 60.0 |

| design (a) | 31.8 | 59.5 |

| design (b) | 36.0 | 63.0 |

| design (c) | 35.6 | 62.3 |

| design (d) | 34.9 | 62.1 |

| Setting | AP | AP50 |

|---|---|---|

| Mask R-CNN re-implement | 33.1 | 60.0 |

| maxpooling | 34.1 | 61.5 |

| avgpooling | 33.6 | 60.7 |

| 3x3 convlution | 36.0 | 63.0 |

| Setting | AP | AP50 |

|---|---|---|

| Mask R-CNN re-implement | 33.1 | 60.0 |

| add | 34.3 | 61.5 |

| max | 31.9 | 61.0 |

| multiply | 36.0 | 63.0 |

| Number of samples | AP | AP50 |

|---|---|---|

| Mask R-CNN re-implement | 33.1 | 60.0 |

| k = 50 | 34.5 | 61.7 |

| k = 100 | 36.0 | 63.0 |

| k = 150 | 35.3 | 62.1 |

| Type of samples | AP | AP50 |

|---|---|---|

| Mask R-CNN re-implement | 33.1 | 60.0 |

| corner | 35.1 | 62.1 |

| uniform | 36.0 | 63.0 |

-

(a) The output of keypoint predictor is size of , and the output of mask predictor is size of . After we do channel-wise addition on the keypoint predictor output, the feature map spatial scale is reduced to by using a convolution layer with a stride of 2.

-

(b) The output of keypoint predictor and mask predictor is the same as that of design (a). The difference is before we do channel-wise addition on the keypoint predictor output, the feature map spatial scale is reduced to by using a convolution layer with a stride of 2.

-

(c) The output of keypoint predictor is size of , and the output of mask predictor is size of . we upsample the output spatial scale of the mask predictor to using transposed convolutional layer.

-

(d) The output of keypoint predictor is size of , and the output of mask predictor is size of .

The experimental results are shown in Table 2. We can see that each methods can achieve performance improvement except method (a), because in method (a) we only use one convolution layer to process the prediction results with a single channel, which will lose a lot of original edge prediction information.

The spatial scale reduction designs for feature fusion: Based on the design of the edge fusion structure, we try to reduce the spatial scale of the keypoint prediction output by using three methods: max pooling, average pooling, and a convolution layer with kernel size of 3 and stride of 2.

Table 3 shows the results for above spatial scale reduction designs, we can see that all the three methods can improve the performance of the model, which further validate the effectiveness of our method. Notably, we can see that compared with a simple pooling operation, for our model, using a convolutional downsampling method with learnable parameters performs better.

The choices of the fusion mode: After channel-wise addition and spatial scale reduction of the keypoint branch output, we can perform the fusion operation. In our experiment, we test three methods of maximum, multiplication, and addition to choose the best fusion strategy. Results are shown in Table 4, we can see that the fusion method of multiplication can be better adapted to our model.

4.4 Influence of keypoint

The model we designed requires training tasks including box head, mask head, and keypoint head simultaneously. But the Cityscapes dataset only provides instance groundtruths masks, so we need to get the bounding box and edge aggregation point labels of the object from the mask of the instance ourselves. For a single target, the bounding box label is uniquely fixed, but for the edge aggregation point label we need to sample it from the edge contour of the target, which means that we have more alternative strategies. Specifically, we separately analyze the impact of corner sampling and uniform sampling on our method, and the results are shown Table 6. Analogously, corner sampling reflects the key geometric information of the object, whereas uniform sampling reflects the overall geometric information of the object. From the experimental results we can see that it is more conducive to the instance segmentation by using keypoint detection technology to capture the overall geometric position information of the object. Additionally, for the case of the number of edge sampling points is less than the required number due to the target being too small when sampling the edge points, we randomly select the existing sampling points for padding.

| Methods | APbb | APmask | APkeypoint |

|---|---|---|---|

| Mask R-CNN, mask-only | 37.7 | 32.4 | - |

| Mask R-CNN, keypoint-only | 36.8 | - | 55.7 |

| Ours (without center point) | 36.7 | 34.3 | 56.0 |

| Ours | 37.2 | 35.2 | 56.4 |

| Ours () | 37.9 | 35.9 | 58.2 |

| AP | AP50 | |

|---|---|---|

| 1 | 36.0 | 63.0 |

| 0.5 | 36.9 | 64.3 |

| 0.2 | 35.1 | 62.1 |

| Methods | APbb | AP | AP | AP | APmask | AP | AP | AP |

|---|---|---|---|---|---|---|---|---|

| Mask R-CNN | 37.9 | 21.7 | 41.3 | 49.6 | 34.6 | 15.8 | 37.2 | 51.1 |

| Ours | 38.3 | 21.3 | 41.8 | 50.3 | 35.4 | 15.7 | 38.4 | 51.9 |

| Methods | Backbone | APmask | AP | AP | AP |

|---|---|---|---|---|---|

| Mask R-CNN [1] | ResNet-50-FPN | 34.9 | 19.0 | 37.4 | 45.0 |

| Mask Scoring R-CNN [6] | ResNet-50-FPN | 35.8 | 16.2 | 37.4 | 51.0 |

| YOLACT [11] | ResNet-101-FPN | 31.2 | 12.1 | 33.3 | 47.1 |

| CondInst [49] | ResNet-50-FPN | 35.4 | 18.4 | 37.9 | 46.9 |

| BlendMask [13] | ResNet-50-FPN | 34.3 | 14.9 | 36.4 | 48.9 |

| PolarMask [10] | ResNet-101-FPN | 32.1 | 14.7 | 33.8 | 45.3 |

| Ours | ResNet-50-FPN | 35.7 | 18.6 | 38.1 | 46.4 |

Furthermore, we demonstrate the influence of different sampling points number on the model’s performance, the results are shown in Table 5. Specifically, we conduct experiments on 50 and 100 sampling points respectively. And we find in the course of the experiment that the further increase of the sampling points number will damage the effectiveness of the model, which can be attributed to we add too much random information in padding operation, since there are a large number of small objects in the dataset. Moreover, in our approach, we use not only the points sampled from the object contour but also the center point of the object when we perform the keypoint estimation task, which is follow the intuition that the geometric relationship between the object boundary point and the center point can be learned during the model training process for improving the estimation accuracy of the boundary point. For example, the center point of the object can be regarded as the origin in a polar coordinate system, for the objects with the same category, the distance and angle of the target boundary point relative to the center point have certain regularity. To verify our analysis, we evaluate the effect of adding the object center point using COCO evaluation metrics, as shown in the Table 7, where APbb represents the AP value of the bounding box detection results, APmask and APkeypoint represent the AP value of the mask predict results and keypoint estimation results respectively, and the subscripts , , and represent small, medium, and large objects, respectively. We can see that our method can simultaneously improve the performance of instance segmentation, keypoint estimation and object detection tasks.

4.5 Influence of of the balance coefficient of the loss function

In our model, keypoint detection is only an auxiliary task, and we hope that it can complete its duties while not affecting other tasks as much as possible. Therefore, we balance the importance of each task in the multi-task joint training by setting the attenuation coefficient of its loss function. The experimental results are shown in the Table 8, from the results in the Table, we can see that our method is very sensitive to the set balance coefficient, and we can get the best performance when we set =0.5.

4.6 Experimental results on COCO dataset

Compared with the Cityscapes dataset, the COCO dataset contains objects of more classes and scales, so it is more challenging for our algorithm. Note that we set the loss balance weight coefficient to 0.1 experimentally on COCO dataset. The experimental results are shown in Table 9. Notably, compared with baseline, our method has effective gains in both instance segmentation and target detection tasks. Moreover, in Table 10 we compare our results with state-of-the-art counterparts on COCO test-dev dataset, and it can be seen from the experimental results that we have obtained comparable performance.

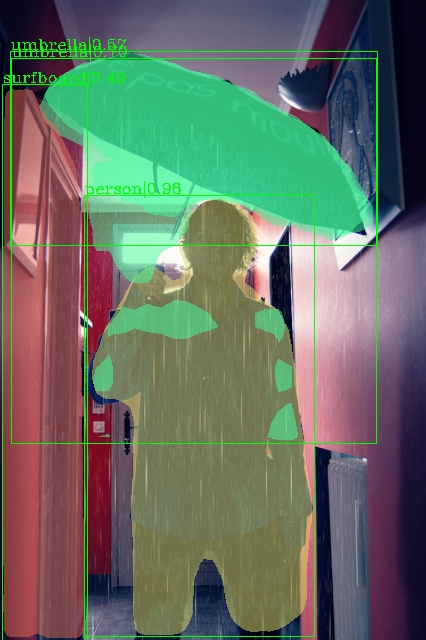

4.7 Qualitative Evaluation on COCO dataset

In order to analyze the performance of our algorithm more intuitively, we show some concrete examples of our method and Mask R-CNN baseline in Fig. 6. It can be seen from the figure that, compared with the baseline, our method can obtain a segmentation effect that fits the contour of the object better, which is thanks to our newly added keypoint detection and auxiliary task and effective fusion strategy.

5 Discussion and Future work

In this section, we will analyze the effectiveness of our method from the view of multi-task joint training and introduce our future work.

In fact, in Mask R-CNN, it has also carried out multi-task joint training of object detection, keypoint estimation, and instance segmentation on the person category in the COCO dataset. However, the experimental results show that only the performance of the keypoint estimation task is slightly improved, whereas both the performance of the detection and segmentation tasks is degraded. We deduce that this is because in the task of human pose estimation, the labels of keypoints are located within the human body, and the overall information of the instances captured by the segmentation task can help locate these keypoints. But for the object detection task including positioning and classification, and the segmentation task composed of pixel-wise classification, the keypoint information does not help, but after we set the weight balance parameters of the keypoint detection task , we can see that the detection performance of these three tasks has been greatly improved. From the above experimental results, we can infer that the performance of the model can be promoted by exploring better balance coefficients in the process of multi-task joint training.

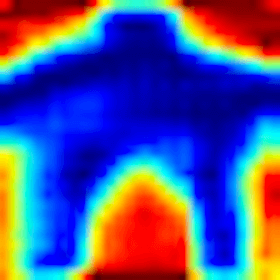

Moreover, in order to verify the effectiveness of our method more intuitively, we visualize the final feature map obtained by the keypoint head and the corresponding feature map obtained by the mask head. Specifically, we normalize the two feature maps respectively and generate corresponding heat maps, some examples are demonstrated in Fig. 5 (the darker pixels in the heat map represent higher scores). From these examples we can infer that the keypoint output feature map obtained by channel-wise addition can not only learn the boundary information of the object, but also get the overall background information. Therefore, the final output feature of the mask branch obtained by fusing the two tasks is equivalent to segment the object by combining the background and foreground features. Besides, in the joint training, we can get the edge point information of the target through the keypoint estimation task. Actually, the target can be segmented by only using the contour information mapped by these edge points. When , we can get AP value of 12.5 on Cityscapes val subset.

In addition, our work still has some problems that need to be solved. First, compared to the original Mask R-CNN, the newly added keypoint branch has more parameters, which will also increase the computational burden, therefore, in the future we need to explore more lightweight designs to optimize our methods. And as mentioned above, our model has a strong sensitivity to the setting of the balance coefficient in the multi-task joint training with different datasets, which is also a direction that needs further research.

6 Conclusion

In this paper, we propose Mask Point R-CNN for instance segmentation, which can enhance the consistency between segmentation prediction results and groundtruths masks by adding edge aggregation point auxiliary detection tasks. The experiments on the Cityscapes dataset and COCO dataset have proved the effectiveness of our method. We hope that our method can have some inspiration for other instance segmentation methods.

References

- [1] K. He, G. Gkioxari, P. Dollár, R. Girshick, Mask r-cnn, in: Proceedings of the IEEE international conference on computer vision, 2017, pp. 2961–2969.

- [2] L.-C. Chen, A. Hermans, G. Papandreou, F. Schroff, P. Wang, H. Adam, Masklab: Instance segmentation by refining object detection with semantic and direction features, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 4013–4022.

- [3] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, C. L. Zitnick, Microsoft coco: Common objects in context, in: European conference on computer vision, Springer, 2014, pp. 740–755.

- [4] S. Ren, K. He, R. Girshick, J. Sun, Faster r-cnn: Towards real-time object detection with region proposal networks, in: Advances in neural information processing systems, 2015, pp. 91–99.

- [5] M. Cordts, M. Omran, S. Ramos, T. Rehfeld, M. Enzweiler, R. Benenson, U. Franke, S. Roth, B. Schiele, The cityscapes dataset for semantic urban scene understanding, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 3213–3223.

- [6] Z. Huang, L. Huang, Y. Gong, C. Huang, X. Wang, Mask scoring r-cnn, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 6409–6418.

- [7] S. Liu, L. Qi, H. Qin, J. Shi, J. Jia, Path aggregation network for instance segmentation, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 8759–8768.

- [8] K. Chen, J. Pang, J. Wang, Y. Xiong, X. Li, S. Sun, W. Feng, Z. Liu, J. Shi, W. Ouyang, et al., Hybrid task cascade for instance segmentation, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 4974–4983.

- [9] S. Qiao, L.-C. Chen, A. Yuille, Detectors: Detecting objects with recursive feature pyramid and switchable atrous convolution, arXiv preprint arXiv:2006.02334 (2020).

- [10] E. Xie, P. Sun, X. Song, W. Wang, X. Liu, D. Liang, C. Shen, P. Luo, Polarmask: Single shot instance segmentation with polar representation, in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 12193–12202.

- [11] D. Bolya, C. Zhou, F. Xiao, Y. J. Lee, Yolact: Real-time instance segmentation, in: Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 9157–9166.

- [12] D. Bolya, C. Zhou, F. Xiao, Y. J. Lee, Yolact++: Better real-time instance segmentation, arXiv preprint arXiv:1912.06218 (2019).

- [13] H. Chen, K. Sun, Z. Tian, C. Shen, Y. Huang, Y. Yan, Blendmask: Top-down meets bottom-up for instance segmentation, in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 8573–8581.

- [14] Y. Lee, J. Park, Centermask: Real-time anchor-free instance segmentation, in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 13906–13915.

- [15] S. Peng, W. Jiang, H. Pi, X. Li, H. Bao, X. Zhou, Deep snake for real-time instance segmentation, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 8533–8542.

- [16] B. Hariharan, P. Arbeláez, R. Girshick, J. Malik, Simultaneous detection and segmentation, in: European Conference on Computer Vision, Springer, 2014, pp. 297–312.

- [17] P. Arbeláez, J. Pont-Tuset, J. T. Barron, F. Marques, J. Malik, Multiscale combinatorial grouping, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2014, pp. 328–335.

- [18] A. Kirillov, E. Levinkov, B. Andres, B. Savchynskyy, C. Rother, Instancecut: from edges to instances with multicut, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 5008–5017.

- [19] L. Zhao, Q. Sun, J. Ye, F. Chen, C.-T. Lu, N. Ramakrishnan, Feature constrained multi-task learning models for spatiotemporal event forecasting, IEEE Transactions on Knowledge and Data Engineering 29 (5) (2017) 1059–1072.

- [20] C. Su, F. Yang, S. Zhang, Q. Tian, L. S. Davis, W. Gao, Multi-task learning with low rank attribute embedding for person re-identification, in: Proceedings of the IEEE international conference on computer vision, 2015, pp. 3739–3747.

- [21] A. H. Abdulnabi, G. Wang, J. Lu, K. Jia, Multi-task cnn model for attribute prediction, IEEE Transactions on Multimedia 17 (11) (2015) 1949–1959.

- [22] C. Yuan, W. Hu, G. Tian, S. Yang, H. Wang, Multi-task sparse learning with beta process prior for action recognition, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2013, pp. 423–429.

- [23] J. Yim, H. Jung, B. Yoo, C. Choi, D. Park, J. Kim, Rotating your face using multi-task deep neural network, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015, pp. 676–684.

- [24] L. Pishchulin, E. Insafutdinov, S. Tang, B. Andres, M. Andriluka, P. V. Gehler, B. Schiele, Deepcut: Joint subset partition and labeling for multi person pose estimation, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 4929–4937.

- [25] B. Xiao, H. Wu, Y. Wei, Simple baselines for human pose estimation and tracking, in: Proceedings of the European conference on computer vision (ECCV), 2018, pp. 466–481.

- [26] D. Osokin, Real-time 2d multi-person pose estimation on cpu: lightweight openpose, arXiv preprint arXiv:1811.12004 (2018).

- [27] X. Zhou, D. Wang, P. Krähenbühl, Objects as points, arXiv preprint arXiv:1904.07850 (2019).

- [28] X. Zhou, J. Zhuo, P. Krahenbuhl, Bottom-up object detection by grouping extreme and center points, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 850–859.

- [29] H. Law, J. Deng, Cornernet: Detecting objects as paired keypoints, in: Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 734–750.

- [30] K.-K. Maninis, S. Caelles, J. Pont-Tuset, L. Van Gool, Deep extreme cut: From extreme points to object segmentation, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 616–625.

- [31] J. Redmon, S. Divvala, R. Girshick, A. Farhadi, You only look once: Unified, real-time object detection, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 779–788.

- [32] J. Redmon, A. Farhadi, Yolo9000: better, faster, stronger, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 7263–7271.

- [33] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, A. C. Berg, Ssd: Single shot multibox detector, in: European conference on computer vision, Springer, 2016, pp. 21–37.

- [34] J. Redmon, A. Farhadi, Yolov3: An incremental improvement, arXiv preprint arXiv:1804.02767 (2018).

- [35] R. Girshick, Fast r-cnn, in: Proceedings of the IEEE international conference on computer vision, 2015, pp. 1440–1448.

- [36] N. Bodla, B. Singh, R. Chellappa, L. S. Davis, Soft-nms–improving object detection with one line of code, in: Proceedings of the IEEE international conference on computer vision, 2017, pp. 5561–5569.

- [37] F. Yu, D. Wang, E. Shelhamer, T. Darrell, Deep layer aggregation, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 2403–2412.

- [38] A. Newell, K. Yang, J. Deng, Stacked hourglass networks for human pose estimation, in: European conference on computer vision, Springer, 2016, pp. 483–499.

- [39] A. Kendall, Y. Gal, R. Cipolla, Multi-task learning using uncertainty to weigh losses for scene geometry and semantics, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7482–7491.

- [40] A. Arnab, P. H. Torr, Pixelwise instance segmentation with a dynamically instantiated network, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 441–450.

- [41] S. Liu, J. Jia, S. Fidler, R. Urtasun, Sgn: Sequential grouping networks for instance segmentation, in: Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 3496–3504.

- [42] D. Acuna, H. Ling, A. Kar, S. Fidler, Efficient interactive annotation of segmentation datasets with polygon-rnn++, in: Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, 2018, pp. 859–868.

- [43] D. Neven, B. D. Brabandere, M. Proesmans, L. V. Gool, Instance segmentation by jointly optimizing spatial embeddings and clustering bandwidth, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 8837–8845.

- [44] Y. Liu, S. Yang, B. Li, W. Zhou, J. Xu, H. Li, Y. Lu, Affinity derivation and graph merge for instance segmentation, in: Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 686–703.

- [45] B. R. Kang, H. Lee, K. Park, H. Ryu, H. Y. Kim, Bshapenet: Object detection and instance segmentation with bounding shape masks, Pattern Recognition Letters 131 (2020) 449–455.

- [46] Y. Zhang, Q. Yang, A survey on multi-task learning, arXiv preprint arXiv:1707.08114 (2017).

- [47] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, et al., Pytorch: An imperative style, high-performance deep learning library, in: Advances in Neural Information Processing Systems, 2019, pp. 8024–8035.

- [48] K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [49] Z. Tian, C. Shen, H. Chen, Conditional convolutions for instance segmentation, arXiv preprint arXiv:2003.05664 (2020).