Joint Edge Optimization Deep Unfolding Network

for Accelerated MRI Reconstruction

Abstract

Magnetic Resonance Imaging (MRI) is a widely used imaging technique, however it has the limitation of long scanning time. Though previous model-based and learning-based MRI reconstruction methods have shown promising performance, most of them have not fully utilized the edge prior of MR images, and there is still much room for improvement. In this paper, we build a joint edge optimization model that not only incorporates individual regularizers specific to both the MR image and the edges, but also enforces a co-regularizer to effectively establish a stronger correlation between them. Specifically, the edge information is defined through a non-edge probability map to guide the image reconstruction during the optimization process. Meanwhile, the regularizers pertaining to images and edges are incorporated into a deep unfolding network to automatically learn their respective inherent a-priori information. Numerical experiments, consisting of multi-coil and single-coil MRI data with different sampling schemes at a variety of sampling factors, demonstrate that the proposed method outperforms other state-of-the-art methods.

Introduction

Magnetic resonance imaging (MRI) is a widely used imaging technique in the medical field. However, it has the limitation of a long scanning time. To accelerate the scanning, on one side, the raw data in k-space are partially sampled and various signal processing methods (such as compressed sensing) are proposed to reconstruct high-quality MR image from the undersampled data. On the other side, parallel imaging technique that utilizes multiple coils to scan the anatomy simultaneously is developed. Therefore, the typical parallel MRI reconstruction problem with classical compressed sensing can be modeled as follows:

| (1) |

where is the number of coils, is the MR image to be reconstructed, is the coil sensitivity estimation map, is the Fourier transform operator, is the undersampled matrix, is the partially sampled k-space data, is a trade-off parameter, and is the regularization term that usually enforces sparsity.

In the past years, various regularization terms with corresponding optimization algorithms were proposed in the MRI reconstruction problem and achieved good performance. Among these model-based methods, most of them focus on searching the suitable transform to prompt sparsity (e.g. total variation [1], spline wavelet transforms [2], adaptive learned dictionary [3] and combined transforms [4]) or elaborately designing the variants of sparsity regularizers (e.g. regularizer [5], regularizer [6], and group sparsity [7]). In addition to sparsity, researchers are also exploring some other priors of MR images such as low rank [8], non-local similarity [9], etc. Nevertheless, these methods constrain the entire image with a unified prior indiscriminately and ignore the diverse structures inside the image, which may result in blurred edges or some unwanted artifacts, such as the fake edges in smooth regions. Additionally, edges are important in images as they dominate the content of an image. As shown in Figure 1, a high-quality image is typically accompanied by a distinct and sharp edge, whereas a low-quality image is often characterized by a blurred or indistinct edge. Better preservation of edges is crucial for better image reconstruction.

Considering the above issues, some works have been proposed to impose extra structure or edge priors to reduce the artifacts and improve the quality of the final reconstructed image. Methods with edge priors for MRI reconstruction can be roughly classified into two categories: the traditional model-based methods and the deep learning-based methods. The first category incorporates regularization of the edge into the optimization model, e.g. adaptive edge-preserving regularization [10] and geometrically structured approximation [11]. This kind of methods have two issues, the first is the introduce of edge prior term will increase the complexity of the numerical algorithm or even cause the non-convergence issue. The second issue is that the design of edge prior is critical, a less adequate assumption could be counterproductive. Though the deep learning based methods do not need to face the above dilemma, they have a challenge about how to effectively utilize the edge prior. For most deep learning based methods with edge prior, as far as we know, edges are used as the guidance by being concatenated with the input in a direct [12, 13, 14] or a fancy way [15]. The focus is either on the extraction of the edges or the fusion mechanism between the edge and the MR image. However, how the concatenation play the role of guidance is unclear and is often ignored. The potential of edge has not been fully exploited.

In order to effectively utilize the edge prior as well as avoid the interference of inaccurate prior assumption, the proposed MRI reconstruction model needs to have the following two properties. First, to avoid the influence of inaccurate assumption, the regularizations of the MR image to be recovered do not need to have a explicit expression like in traditional model based methods. Second, to fully exploit the potential of the edge information, effective correlations between edges and the target MR image should be established. Ground on the above discussions, in this paper, we propose a joint edge optimization parallel MRI reconstruction model that seeks a tighter coupling between edges and images to obtain a high-quality reconstructed MR image. A co-regularizer between the MR image and the edge-related variables is utilized to link the two closely, so as to achieve a better reconstruction during the iterate optimization with the assistance of their respective constraints. Specifically, the edge-related variables are characterized as a probability map that measures the probability of a region being a non-edge (smooth region). Meanwhile, to reduce reliance on hand-crafted priors, the regularizations regarding the target MR image and the probability map are incorporated into the proximal mapping operator simulated by neural network modules. The deep unfolding schema is utilized to automatically learn the inherent a-priori information of the MR image and non-edge probability map in the optimization process. Overall, the main contributions of our proposed method are as follows:

-

•

Unlike previous deep learning-based approaches that simply concatenate the edge with MR images to incorporate edge priors, our proposed joint edge optimization MRI reconstruction model employs a more intimate integration way to fuse the edge and images for mutually guidance and enhancement.

-

•

Unlike traditional model-based approaches that rely on manually designed regularizations, our proposed model automatically learns inherent priors of images and edges through the network with a deep unfolding schema.

-

•

In our experiments, datasets from different imaging scenarios are utilized for validation. The quantization metrics are superior compared to other state-of-the-art methods under different acceleration factor and different sampling schemes.

Related work

Accelerated parallel imaging

In accelerated parallel imaging, the algorithm aims to reconstruct high-quality MR images from partially sampled multi-coil k-space data. The methods of MRI reconstruction in parallel imaging scenarios can be categorized into traditional model-based and data-driven methods. In traditional methods, GRAPPA [16] uses interpolation in k-space; SENSE [17] exploits sensitivity estimation maps in the spatial domain to eliminate aliasing artifacts. In addition to this, some of the methods solve the optimization model (1) based on the theory of compressed sensing in parallel imaging scenarios, such as ESPIRiT [18], which combines a multi-coil degradation mechanism and a constrained regularity term for the reconstruction. However, the reconstructed image quality of traditional methods is not stable and the imaging speed is slow.

With the development of deep learning techniques, artificial neural networks have achieved excellence in many tasks in the field of computer vision [19, 20, 21]. Meanwhile, the data-driven methods appear and the researchers explore the potential of neural networks in the field of medical imaging. In the accelerated MRI reconstruction task, Wang et al. [22] and Schlemper et al. [23] proposed for the early time to use convolutional neural networks to learn the mapping relationship between zero-filled undersampled images to high-quality reconstructed images. Hyun et al.[24] utilized U-Net [25] which is an efficient encoder-decoder architecture in segmentation tasks, as the reconstruction modeling framework. Similar works have been done by Sun et al. [26] utilizing recursive dilated networks. Some methods [27][28] restore zero-filled degraded images based on the generative adversarial network architecture as a means of removing aliasing artifacts and redundant noise. In addition, since the original MRI data is in complex form, researchers [29] applied complex convolution operation to jointly process the real and imaginary parts of the MR image to preserve the magnitude and phase information of the image. Moreover, Considering the importance of edge information restoration, EAMRI [15] utilizes the edge detector to extract the image edge, which is used as guidance for constructing the attention map. However, the ”off-the-shelf” edge detectors have their own limitations which will migrate to the reconstruction algorithm. Despite their reconstruction result is generally better than traditional methods with lower inference time, the data-driven reconstruction methods described above are not conducive to the clinical practice due to the lack of interpretability resulting from the black-box nature of neural networks.

Advances in Deep Unfolding for MRI reconstruction

The innovative deep unfolding methods are initially introduced to sparse coding approximations [30], fusing optimization-driven iterative algorithms with the expressive power of neural networks. This methodology has seen successful application across a variety of low-level image processing tasks [31].

For instance, the Denoising Convolutional Neural Network (DPDNN) [32] and the Model-based Deep Learning architecture (MoDL) [33] utilize deep unfolding schema by employing neural networks to embody model-based regularization terms within image domain. E2EVarNet [34] integrate a gradient descent optimization for k-space data enhancements in MRI reconstruction, unfolding the first-order derivatives of regularization function with the neural network. HQS-Net [35] uses a half quadratic splitting method to process the reconstruction model and integrates the neural network module into the optimization process to design an efficient and lightweight reconstruction method; HUMUS-Net [36] integrates the multi-scale network structure and the attention mechanism into the depth-expanded reconstruction method to achieve high-quality reconstruction results, but due to the large size of the parameters of the network model, it is not that efficient in training. Meanwhile, methods like ISTA-Net [37] and ADMM-CSNET [38] take a leap forward by unfolding iterative soft-thresholding and alternating direction multiplier algorithms, respectively, into learning-based models that combine optimized algorithm with data-driven component. VS-Net [39] unfolding the iterative refinement optimization process with neural network to constructing a lightweight architecture, while MGDUN [40] uses neural network structures to semantically replace traditional sampling and modality conversion processes, particularly highlighting its prowess in tasks such as cross-modality super-resolution.

A noteworthy aspect of deep unfolding schema is its inherent reduction of hand-craft design, leaning towards an autonomous optimization. Moreover, the deployment of deep unfolding has not only augmented the clarity and interpretability of computational models but also enhanced the precision of the eventual solutions.

Method

Model

The signal intensity of MR images typically demonstrates a piecewise smooth distribution, which is attributed to the correlation with the underlying tissue structure. With this piecewise smooth property, a redundant system can provide a sparse representation of the MR image, generating coefficients with large amplitude along the locations of image edges and coefficients with near-zero (or zero) amplitude within the smooth regions (non-edge regions). Based on this, the edges and the image can be effectively associated with each other. In this paper, we introduce a probability map named to quantify the probability of a region being smooth (non-edge) and utilize it to constrain the values of transformation coefficient inside the smooth regions to be close to zero. In addition, as MR images depict the structural information of anatomical tissues, they have certain geometrical structures. Consequently, the smooth regions within these MR images are not randomly distributed but conform to specific patterns and characteristics. Therefore, the probability map that measures the likelihood of a region being smooth also should fulfill certain properties and can be imposed with regularizations to satisfy the corresponding properties.

Based on the aforementioned discussion, our proposed joint edge optimization MRI reconstruction model can be formulated as follows:

| (2) | ||||

where denotes a pixel-wise product111 is omitted in the following text for brevity., is the introduced non-edge probability map related to the edge information. The value of ranges from to . When its value is , it indicates that the likelihood of the pixel belonging to a non-edge is , and we do not constrain the corresponding transformation coefficient. On the other hand, when the value is , it suggests that there is a high probability that this pixel belongs to a smooth (non-edge) region, and we expect the transformation coefficient to be close to . Conversely, when the images are updated, the value of will be decreased or increased accordingly based on whether the corresponding pixel belongs to edge or not. denotes the stationary Haar wavelet transformation. , , and are the trade-off parameters of different regularization terms.

The optimization model (2) consists of four terms. The first is the fidelity term, which ensures the data consistency between the reconstructed MR image and the measured k-space data. The third term is the co-regularizer between image and edge-related variables , linked by a transformation , aiming to promote the piece-wise smoothness property of image . The second term and the forth term are the regularizers of the image and the probability map respectively. Instead of imposing hand-crafted priors, we learn the inherent priors of images and edges automatically through the network.

Algorithms

In Model (2), two variables are included, i.e., the reconstructed image and the non-edge probability map . To solve this optimal model, we decouple the two variables and optimize them alternatively by solving the following subproblem (3) and (4):

| (3) |

| (4) | ||||

where denotes the stage index of the iteration. As seen in the above subproblems, the co-regularizer between the reconstructed image and the non-edge probability map exists in both two subproblems. Hence in the alternating optimization process of two variables, they will guide each other for better recovery. For the solution of the two subproblems, we will introduce them in detail in the following.

Edge Information Solver

Inspired by the Half Quadratic Splitting (HQS) [41] method, the subproblem (3) is re-formulated into the following form as:

| (5) |

where is the introduced auxiliary variable, is the trade-off parameter.

Based the above optimization problem, we form the optimization problem for the auxiliary variable V by extracting the terms related to V as follows:

| (6) |

For the optimization of auxiliary variables , it is actually the proximal mapping of regularizer . We use the efficient proximal gradient descent (PGD) to solve the problem as follow:

| (7) |

After updating , the non-edge probability map can be solved by the following optimization model:

| (8) |

which has a closed-form solution as follow:

| (9) |

where denotes element-wise division.

Reconstructed Image Solver

Similar to the solution of the non-edge probability map , we also solve subproblem (4) based on the HQS by introducing the auxiliary variable for the reconstructed image . Subproblem (4) can be rewritten as follow:

| (10) | ||||

where is the trade-off parameter.

Similarly, based the above optimization problem, we construct the optimization problem for the auxiliary variable Z by extracting the terms related to as follows:

| (11) |

which is also a proximal mapping of regularizer , denoted as:

| (12) |

Subsequently, we extract the related terms to build the optimization model for as follows:

| (13) | ||||

As to the optimization of image , it can be obtained by the classical conjugate gradient algorithm. However as the iterative solution process involves repeated undersampling operator , Fourier transform and wavelet transform , the computational burden is an unavoidable issue. Inspired by the effectiveness of the optimization model using lightweight single-step gradient descent in DPDNN [32], we adopt the single-step gradient descent for approximating the reconstructed image . The iterative schema for updating the reconstructed image is shown below:

| (14) | ||||

where is the step size of the single-step gradient descent. denotes the corresponding conjugate operation.

Deep Unfolding Network

The principle of deep unfolding is that the modules of optimize-based approach which need to be manually designed are difficult to determine, so the deep unfolding schema utilize the neural networks to replaced the undetermined module or operator, introducing the representing capability of deep neural networks into the optimizing process. In traditional model-based methods, the design of the regularization terms and are crucial for the final reconstruction. In this paper, to avoid the reliance on hand-crafted prior and the interference of inaccurate assumptions, the neural networks are utilized to automatically learn the inherent priors. Specifically, since the regularization terms and only occur in the proximal operator in Eq. (7) and Eq. (12), we consider the substitution of neural networks for the proximal operator. Thus for Eq. (7) we utilize an edge recovery network (ERN) to replace the proximal gradient operator . And for Eq. (12), the proximal gradient operator is substituted using image denoising network (IDN). Therefore, the optimization for the auxiliary variables and can be rewritten in the following form:

| (15) |

| (16) |

where and are the parameters of the edge recovery network ERN and the image denoising network IDN at the iteration, respectively. As to the specific network structure design of ERN and IDN, we utilize the standard U-Net architecture [25] which is suitable for the image-to-image conversion task due to its encoder-decoder structure that extracts features from images at different scales through multi-layer up-sampling and down-sampling operations. Moreover, the standard U-Net architecture is combined with the residual connection from the module input to output in our method so as to avoid gradient vanishing issue [42].

In the following, we present an overall reconstruction framework for our joint edge optimization model. The optimization process consists of iterative stages, each containing four optimization steps. First, the edge auxiliary variable is optimized using the edge recovery network (ERN). Then, the non-edge probability map is optimized based on the closed-form solution of Eq. (9), known as edge optimization (EO). The reconstructed image auxiliary variable is optimized using the image denoising network (IDN), and finally, the reconstructed image is optimized using single-step gradient descent by Eq. (14), known as image optimization (IO). The detailed optimizing steps at each stage are described in Algorithm 1. As for the initialization of the image , we process the partially sampled k-space data of the multi-coils using the zero-filled method to obtain the image domain data and later fuse the multi-coils data into one channel using sensitivity estimation maps, i.e., ; As for the initialization of the non-edge probability map , first the stationary haar wavelet transform operator transforms the initial MR image to the wavelet domain, then the coefficients of the high-frequency channel are normalized to [0,1] with a maximum-minimum normalization operator . Finally, the initial is obtained by inverting the normalized coefficients as follows:

| (17) |

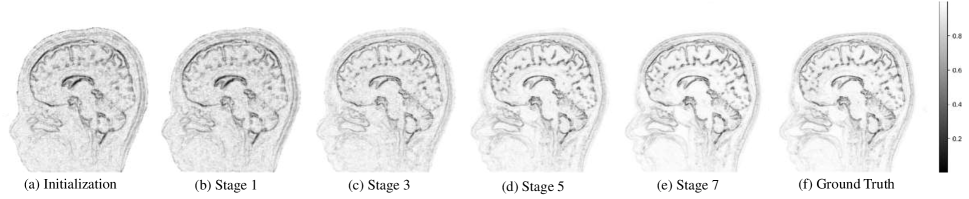

where is the coefficients of the high-frequency channel by pixel-wise absolute operation. The iterative reconstruction framework is shown in Figure 2, where and are used as inputs to the -stage deep unfolding reconstruction network to obtain the optimized edge information and the final reconstructed MR image . In Figure 3, we visualize the non-edge probability map while showing the evolution procedure at different iteration stages.

Training Strategy

Subsequent to the construction of the deep unfolding iterative framework, the training of our reconstruction model follows an end-to-end supervised learning paradigm. Similar with the process of initialization, fully sampled k-space data are exploited to create ground truth image and the non-edge probability map . The training strategy utilizes a multi-component loss function, composed of the mean square error (MSE) for fidelity in image reconstruction and the L1 norm for precision in edge information recovery. Consequently, the total loss function for the joint edge optimization within the reconstruction model is formulated as follows:

| (18) |

Here, symbolizes the number of samples in the training dataset sample scale while and are the respective trade-off parameters that modulate the loss components’ contribution to . In addition, adjusting all of the undetermined parameters in the iterative process manually is a cumbersome task accompanied by a degree of uncertainty. Therefore, to elevate the model training efficiency, all of the undetermined parameters along with the parameters from the edge recovery network (ERN) and the image denoising network (IDN), denoted by and , are collected as trainable entities. This framework ensures the self-adjusting capability of the hyper-parameters throughout the back-propagation process, fostering their inherent adaptability within the reconstruction architecture. With regards to multi-stage iterative model configurations, our investigative results utilizes a non-shared parameter strategy. Such an approach amplifies the quality of the reconstructed image in the context of our joint edge information optimization framework. Accordingly, the learnable parameter set for the proposed reconstruction model is expressed as , encompassing all the iterations and facilitating a tailored optimization at each discrete stage of the unfolding process.

| Method | Cartesian | Random | ||

| R=6 | R=10 | R=6 | R=10 | |

| Zero-Filled | 28.19 | 26.66 | 28.26 | 26.70 |

| U-Net | 37.78 | 35.34 | 38.23 | 35.11 |

| DCCNN | 39.05 | 37.22 | 39.29 | 37.33 |

| MoDL | 39.56 | 37.33 | 39.24 | 37.35 |

| VS-Net | 39.81 | 37.54 | 40.12 | 37.48 |

| RecurrentVarNet | 39.66 | 37.41 | 39.74 | 37.52 |

| EAMRI | 39.76 | 37.55 | 39.43 | 37.49 |

| ours | 39.92 | 37.61 | 40.15 | 37.60 |

Experimental Evaluation

Implementation Details

For our experiments, we employed two NVIDIA RTX 3090 Ti GPUs for our hardware and Pytorch is used as the deep learning framework for building the training and inferring pipeline. The training process utilized the ADAM optimizer [43], which is known for its adaptive learning capabilities. An initial learning rate of 0.01 was chosen to ensure a strong starting point for optimization, and we applied a cosine decay learning rate schedule to reduce the learning rate smoothly over epochs for enhancing the model’s convergence ability. Training was conducted with a small batch size of 2. We repeatedly passed the dataset through the model for 180 epochs to ensure thorough learning and convergence. Our model architecture chosen K = 7 stages in total, based on empirical evidence suggesting this to be optimal for the performance of our method. Each stage’s parameters operated under a non-shared strategy, which provided the flexibility needed to adapt to each specific set of the optimized stages. The ERN and IDN networks were built upon the original U-Net architecture, capitalizing on its symmetrical design with max-pooling layers in the encoder for undersampling and an equal number of transconvolution layers in the decoder for upsampling, ensuring detailed feature extraction and restoration. We opted for Kaiming initialization [44] for setting up the neural network parameters, a method designed to maintain adaptive variance as a countermeasure against gradients diminishing too rapidly during training. Regarding the loss function, we integrated trade-off parameters and , weighted as 1 and 0.1 to balance the total loss function.

| Method | Cartesian Random | Cartesian Equidistant | ||

| R=6 | R=10 | R=6 | R=10 | |

| Zero-Filled | 28.46 | 26.87 | 28.30 | 26.74 |

| U-Net | 30.94 | 28.52 | 30.87 | 28.44 |

| DCCNN | 31.20 | 28.66 | 31.21 | 28.59 |

| RefineGAN | 31.28 | 28.73 | 31.29 | 28.69 |

| ADMM-CSNet | 31.16 | 28.70 | 31.22 | 28.65 |

| HQS-Net | 31.42 | 28.84 | 31.33 | 28.73 |

| EAMRI | 31.45 | 28.80 | 31.37 | 28.74 |

| ours | 31.52 | 28.93 | 31.44 | 28.87 |

Dataset

For a comprehensive assessment, we engage in comparative experiments using both multi-coil and single-coil MRI data. Our ablation study and parameter strategy analysis are conducted on the multi-coil dataset for the parallel imaging scenario.

The multi-coil dataset employed is sourced from the dataset made public in conjunction with the MoDL study [33]. This dataset is derived from a 3D T2 CUBE sequence captured using a 12-coil parallel imaging setup. It encompasses a total of 360 slices allocated for training, complemented by a validation set consisting of 164 slices. Each image slice adheres to a resolution of 256x232. The sensitivity maps, which are indispensable for various parallel imaging reconstruction techniques, were calculated using the ESPIRIT algorithm [18] that utilize the center region of k-space to reconstruct the MR image, aiming at considering the most informative part of the MRI signal for image reconstruction.

In addition to multi-coil data, our methodology was also validated on the fastMRI single-coil knee dataset [46] including varied contrasts such as proton-density weighting with fat-saturated (PDFS) and proton-density (PD) imaging modalities. We randomly sampled 20% of both training and testing samples from the aforementioned repository. The single-coil dataset setting in our experiment included 4034 slices for training purposes and 745 slices designated for testing. Adhering to common evaluation protocols, all slices were uniformly cropped centrally to a standardized dimension of 320x320 pixels.

Quantitative and Qualitative Evaluation

To comprehensively assess the effectiveness of our proposed methodology, we conduct evaluations using both multi-coil and single-coil MRI datasets. The Peak Signal-to-Noise Ratio (PSNR) is utilized as the principal metric for quantitative analysis. In total, we have conducted comparisons with nine additional methods. Among them, U-Net[24], DCCNN[23] and RefineGAN[27] use a pure data-driven paradigm to reconstruct MR images in the image domain using neural network models. RefineGAN uses generative adversarial network and bi-domain cyclic loss function. MoDL[33], VS-Net[39], RecurrentVarNet[45], ADMM-CSNet[38] and HQS-Net[35] use deep unfolding paradigm, which utilize neural networks for unfolding the optimization process of MR images. EAMRI[15] takes the image edge information into account in the reconstruction method and they extract the edge information based on the edge detection operator to guide the generation of the attention map. All competing methods are fine-tuned to their optimal settings for a fair comparison.

In the context of multi-coil MRI data, we explore scenarios involving Cartesian and random undersampling schemes at acceleration factors of 6x and 10x, as documented in Table 1. The experiment results indicate that regardless of the undersampling templates or acceleration rates, our method outperforms the other techniques in terms of PSNR values. This is further supported by error maps presented in Figure 4, which clearly demonstrate that the proposed approach achieves the minimum reconstruction error. For single-coil MRI data, experiments are imparted at both 6x and 10x acceleration using random and equidistant Cartesian undersampling schemes. The quantitative results presented in Table 2 reveal our method’s superior performance in PSNR metric outpacing other algorithms. Supplementing our quantitative analysis is a visual examination of the single-coil reconstruction outcomes, shown in Figure 5, which showcase that our method not only produces reconstructions with precise edge details but also exhibits minimal errors when compared to the fully-sampled reference. Overall, these visual results illustrate our method’s adaptability and performance across variously accelerated MRI scenarios on both multi-coil and single-coil configurations.

Ablation Study

Effectiveness of Joint Edge Optimization

To verify the effectiveness of the joint edge information optimization mechanism, we compare the proposed model with the classical reconstruction model (1) with no edge information involved. Similar to the optimization of the proposed method, the model (1) is transformed by introducing auxiliary variables:

| (19) |

Afterward, the variables are separated to learn the optimization of auxiliary variables using an image denoising network. Moreover, the reconstructed image is updated using a single-step gradient descent with the following iterative format:

| (20) |

| (21) | ||||

Aside from the implementation of edge information optimization, the experimental configuration for model (19) aligns with the proposed method. We conduct a compared experiments on a multi-coil dataset with various accelerated factor ranging from 2 to 10 using random undersampling template. The data presented in Figure 6 clearly demonstrates the superiority of incorporating edge information in the image reconstruction process. We observe that integrating edge optimization markedly improves performance as evidenced by the increasement of PSNR metric. This enhancement supports the argument for the joint edge optimization mechanism’s beneficial role in improving image reconstruction quality.

Effectiveness of Deep Unfolding Network

The architecture of our proposed method employs a deep unfolding network strategy by integrating an ERN module and an IDN module. These two network modules simulate the proximal operators associated with undetermined regularization and parameters in the proposed joint edge optimization model. To validate the effectiveness of the two deep unfolding modules, we conduct an ablation study on ERN and IDN on the multi-coil dataset at 6x random sampling rates. The results are tabulated in Table 3 offering a comparison of reconstruction outcomes. From the compared results, the model’s performance enhancement was observed when deep unfolding modules are involved. When the ERN is involved, the PSNR value attain the increment of dB, validating the advantage of unfolding the edge-related proximal operator for image reconstruction. In addition, a further improvement of dB in PSNR values is observed compared to solely relying on edge-related proximal operator unfolding, which shows the positive effect of the ERN modules. These increments in performance metrics confirm the efficacy of the deep unfolding framework. By capitalizing on the inherent representational capabilities of neural networks, our method excels in reconstructing MR images of superior quality.

| IDN | ERN | PSNR | SSIM |

|---|---|---|---|

| ✗ | ✗ | 35.34 | 0.9278 |

| ✗ | ✓ | 38.61 | 0.9677 |

| ✓ | ✗ | 39.45 | 0.9753 |

| ✓ | ✓ | 40.15 | 0.9843 |

Discussion on the Iterative Parameter Strategy

In a multi-stage deep unfolding reconstruction architecture, there are typically two parameter strategies available for selection: the recursive shared-parameter form and the non-shared form that assigns independent parameters to each iteration stage. The shared-parameter form is characterized by a compact parameter set, promoting speed up inference by minimizing the computational footprint. Conversely, the non-shared form delivers enhanced flexibility, with each stage fine-tuned for optimal performance at the potential cost of increased parameter count.

Our experimental involved testing the performance impact of both strategies across different iteration stages ranging from 1 to 10 on a multi-coil dataset subjected to 6x random sampling. The outcomes of this investigation are graphically presented in Figure 7, providing quantitative evidence for the comparative analysis. The result curves illustrate a superior performance yield when deploying the non-shared parameter configuration within our proposed model. This suggests that the capability to customize each stage’s parameters independently facilitates more effective optimization. Furthermore, an analysis of the trend in reconstruction quality as a function of iterative stages reveals a positive trajectory up until the seventh iteration in the non-shared configuration. Additional iterations beyond this threshold will lead to a decrease in overall performance, a phenomenon we attribute to the increasing parameter scale that lead the model to be overfitting. These observations affirm the importance of tailoring the iterative parameter strategy to the specific requirements of the reconstruction task. Our proposed method, employing the non-shared parameter strategy, appears to reconcile the model’s complexity with the imperative for high-quality image reconstruction.

Conclusion

In this paper, we propose a joint edge optimization deep unfolding network for accelerated MRI reconstruction. In order to explicitly and efficiently utilize the edge information, we design a novel edge utilization mechanism to explicitly optimize the edge information and use it to guide the reconstruction of high-quality MR images. Specifically, we correlate the edge information with the non-edge probability map and design a reconstruction model that incorporates edge-independent constraint terms along with co-regularizer terms between edge and MR image. In addition, we utilize the deep neural networks to unfold the optimization process of the reconstruction model aiming to automatically learn the prior information of MR images and edges. The ablation study verifies the effectiveness of the proposed edge utilization mechanism and the deep unfolding network modules. In the quantitative and qualitative evaluation, our method outperforms the compared methods under different sampling schemes and sampling factors. In future work, we will explore the possibility of joint edge optimization deep unfolding networks for other low-level vision tasks.

References

- [1] L. I. Rudin, S. Osher, and E. Fatemi, “Nonlinear total variation based noise removal algorithms,” Physica D: nonlinear phenomena, vol. 60, no. 1-4, pp. 259–268, 1992.

- [2] Z. Shen, “Wavelet frames and image restorations,” in Proceedings of the International Congress of Mathematicians 2010 (ICM 2010) (In 4 Volumes) Vol. I: Plenary Lectures and Ceremonies Vols. II–IV: Invited Lectures. World Scientific, 2010, pp. 2834–2863.

- [3] S. Ravishankar and Y. Bresler, “MR image reconstruction from highly undersampled k-space data by dictionary learning,” IEEE Transactions on Medical Imaging, vol. 30, no. 5, pp. 1028–1041, 2010.

- [4] Y. Luo, J. Ling, Y. Gong, and J. Long, “A cosparse analysis model with combined redundant systems for MRI reconstruction,” Medical Physics, vol. 47, no. 2, pp. 457–466, 2020.

- [5] B. Ning, X. Qu, D. Guo, C. Hu, and Z. Chen, “Magnetic resonance image reconstruction using trained geometric directions in 2d redundant wavelets domain and non-convex optimization,” Magnetic Resonance Imaging, vol. 31, no. 9, pp. 1611–1622, 2013.

- [6] Y. Wang, J. Wang, and Z. Xu, “On recovery of block-sparse signals via mixed lq norm minimization,” EURASIP Journal on Advances in Signal Processing, vol. 2013, no. 1, pp. 1–17, 2013.

- [7] C. Chen and J. Huang, “Exploiting the wavelet structure in compressed sensing MRI,” Magnetic Resonance Imaging, vol. 32, no. 10, pp. 1377–1389, 2014.

- [8] J. Yao, Z. Xu, X. Huang, and J. Huang, “An efficient algorithm for dynamic MRI using low-rank and total variation regularizations,” Medical Image Analysis, vol. 44, pp. 14–27, 2018.

- [9] X. Qu, Y. Hou, F. Lam, D. Guo, J. Zhong, and Z. Chen, “Magnetic resonance image reconstruction from undersampled measurements using a patch-based nonlocal operator,” Medical Image Analysis, vol. 18, no. 6, pp. 843–856, 2014.

- [10] M. Belge, M. E. Kilmer, and E. L. Miller, “Wavelet domain image restoration with adaptive edge-preserving regularization,” IEEE Transactions on Image Processing, vol. 9, no. 4, pp. 597–608, 2000.

- [11] H. Ji, Y. Luo, and Z. Shen, “Image recovery via geometrically structured approximation,” Applied and Computational Harmonic Analysis, vol. 41, no. 1, pp. 75–93, 2016.

- [12] K. Nazeri, E. Ng, T. Joseph, F. Qureshi, and M. Ebrahimi, “Edgeconnect: Structure guided image inpainting using edge prediction,” in Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, 2019, pp. 0–0.

- [13] A. Luthra, H. Sulakhe, T. Mittal, A. Iyer, and S. Yadav, “Eformer: Edge enhancement based transformer for medical image denoising,” arXiv preprint arXiv:2109.08044, 2021.

- [14] M. Chen and Z. Liu, “Edbgan: Image inpainting via an edge-aware dual branch generative adversarial network,” IEEE Signal Processing Letters, vol. 28, pp. 842–846, 2021.

- [15] H. Yang, J. Li, L. M. Lui, S. Ying, J. Shi, and T. Zeng, “Fast MRI reconstruction via edge attention,” arXiv preprint arXiv:2304.11400, 2023.

- [16] M. A. Griswold, P. M. Jakob, R. M. Heidemann, M. Nittka, V. Jellus, J. Wang, B. Kiefer, and A. Haase, “en-USGeneralized autocalibrating partially parallel acquisitions (GRAPPA).” en-USMagnetic Resonance in Medicine, p. 1202–1210, Jun 2002. [Online]. Available: http://dx.doi.org/10.1002/MRm.10171

- [17] K. P. Pruessmann, M. Weiger, M. B. Scheidegger, and P. Boesiger, “SENSE: sensitivity encoding for fast MRI,” Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, vol. 42, no. 5, pp. 952–962, 1999.

- [18] M. Uecker, P. Lai, M. J. Murphy, P. Virtue, M. Elad, J. M. Pauly, S. S. Vasanawala, and M. Lustig, “en-USESPIRiT–an eigenvalue approach to autocalibrating parallel MRI: where SENSE meets GRAPPA.” en-USMagnetic Resonance in Medicine, vol. 71, no. 3, p. 990–1001, Mar 2014. [Online]. Available: http://dx.doi.org/10.1002/MRm.24751

- [19] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” nature, vol. 521, no. 7553, pp. 436–444, 2015.

- [20] Y. Luo, Q. Huang, J. Ling, K. Lin, and T. Zhou, “Local and global knowledge distillation with direction-enhanced contrastive learning for single-image deraining,” Knowledge-Based Systems, vol. 268, p. 110480, 2023.

- [21] Y. Luo, B. You, G. Yue, and J. Ling, “Pseudo-supervised low-light image enhancement with mutual learning,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [22] S. Wang, Z. Su, L. Ying, X. Peng, S. Zhu, F. Liang, D. Feng, and D. Liang, “Accelerating magnetic resonance imaging via deep learning,” in 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI). IEEE, 2016, pp. 514–517.

- [23] J. Schlemper, J. Caballero, J. V. Hajnal, A. N. Price, and D. Rueckert, “A deep cascade of convolutional neural networks for dynamic MR image reconstruction,” IEEE Transactions on Medical Imaging, vol. 37, no. 2, pp. 491–503, 2017.

- [24] C. M. Hyun, H. P. Kim, S. M. Lee, S. Lee, and J. K. Seo, “en-USDeep learning for undersampled MRI reconstruction,” en-USPhysics in Medicine Biology, p. 135007, May 2017. [Online]. Available: http://dx.doi.org/10.1088/1361-6560/aac71a

- [25] O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional networks for biomedical image segmentation,” in Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18. Springer, 2015, pp. 234–241.

- [26] L. Sun, Z. Fan, Y. Huang, X. Ding, and J. Paisley, “Compressed sensing MRI using a recursive dilated network,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, no. 1, 2018.

- [27] T. M. Quan, T. Nguyen-Duc, and W.-K. Jeong, “Compressed sensing MRI reconstruction using a generative adversarial network with a cyclic loss,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1488–1497, 2018.

- [28] G. Yang, S. Yu, H. Dong, G. Slabaugh, P. L. Dragotti, X. Ye, F. Liu, S. Arridge, J. Keegan, Y. Guo et al., “DAGAN: Deep de-aliasing generative adversarial networks for fast compressed sensing MRI reconstruction,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1310–1321, 2017.

- [29] S. Wang, H. Cheng, L. Ying, T. Xiao, Z. Ke, H. Zheng, and D. Liang, “DeepcomplexMRI: Exploiting deep residual network for fast parallel MR imaging with complex convolution,” Magnetic Resonance Imaging, vol. 68, pp. 136–147, 2020.

- [30] K. Gregor and Y. LeCun, “Learning fast approximations of sparse coding,” in Proceedings of the 27th International Conference on International Conference on Machine Learning, 2010, pp. 399–406.

- [31] V. Monga, Y. Li, and Y. C. Eldar, “Algorithm unrolling: Interpretable, efficient deep learning for signal and image processing,” IEEE Signal Processing Magazine, vol. 38, no. 2, pp. 18–44, 2021.

- [32] W. Dong, P. Wang, W. Yin, G. Shi, F. Wu, and X. Lu, “Denoising prior driven deep neural network for image restoration,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, no. 10, pp. 2305–2318, 2018.

- [33] H. K. Aggarwal, M. P. Mani, and M. Jacob, “Modl: Model-based deep learning architecture for inverse problems,” IEEE Transactions on Medical Imaging, vol. 38, no. 2, pp. 394–405, 2018.

- [34] A. Sriram, J. Zbontar, T. Murrell, A. Defazio, C. L. Zitnick, N. Yakubova, F. Knoll, and P. Johnson, “End-to-end variational networks for accelerated MRI reconstruction,” in Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part II 23. Springer, 2020, pp. 64–73.

- [35] B. Xin, T. Phan, L. Axel, and D. Metaxas, “Learned half-quadratic splitting network for MR image reconstruction,” in International Conference on Medical Imaging with Deep Learning. PMLR, 2022, pp. 1403–1412.

- [36] Z. Fabian, B. Tinaz, and M. Soltanolkotabi, “Humus-net: Hybrid unrolled multi-scale network architecture for accelerated MRI reconstruction,” Advances in Neural Information Processing Systems, vol. 35, pp. 25 306–25 319, 2022.

- [37] J. Zhang and B. Ghanem, “ISTA-Net: Interpretable optimization-inspired deep network for image compressive sensing,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 1828–1837.

- [38] Y. Yang, J. Sun, H. Li, and Z. Xu, “ADMM-CSNet: A deep learning approach for image compressive sensing,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 3, pp. 521–538, 2018.

- [39] J. Duan, J. Schlemper, C. Qin, C. Ouyang, W. Bai, C. Biffi, G. Bello, B. Statton, D. P. O’regan, and D. Rueckert, “VS-Net: Variable splitting network for accelerated parallel MRI reconstruction,” in Medical Image Computing and Computer Assisted Intervention–MICCAI 2019: 22nd International Conference, Shenzhen, China, October 13–17, 2019, Proceedings, Part IV 22. Springer, 2019, pp. 713–722.

- [40] G. Yang, L. Zhang, M. Zhou, A. Liu, X. Chen, Z. Xiong, and F. Wu, “Model-guided multi-contrast deep unfolding network for MRI super-resolution reconstruction,” in Proceedings of the 30th ACM International Conference on Multimedia, 2022, pp. 3974–3982.

- [41] D. Geman and C. Yang, “Nonlinear image recovery with half-quadratic regularization,” IEEE Transactions on Image Processing, vol. 4, no. 7, pp. 932–946, 1995.

- [42] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- [43] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [44] K. He, X. Zhang, S. Ren, and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on imagenet classification,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 1026–1034.

- [45] G. Yiasemis, J.-J. Sonke, C. Sánchez, and J. Teuwen, “Recurrent variational network: a deep learning inverse problem solver applied to the task of accelerated MRI reconstruction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 732–741.

- [46] J. Zbontar, F. Knoll, A. Sriram, M. Muckley, M. Bruno, A. Defazio, M. Parente, K. Geras, J. Katsnelson, H. Chandarana, Z. Zhang, M. Drozdzal, A. Romero, M. Rabbat, P. Vincent, J. Pinkerton, D. Wang, N. Yakubova, E. Owens, C. Zitnick, M. Recht, D. Sodickson, and Y. Lui, “en-USfastMRI: An open dataset and benchmarks for accelerated MRI.” en-USarXiv: Computer Vision and Pattern Recognition,arXiv: Computer Vision and Pattern Recognition, Nov 2018.