Joint Device Selection and Power Control for Wireless Federated Learning

Abstract

This paper studies the joint device selection and power control scheme for wireless federated learning (FL), considering both the downlink and uplink communications between the parameter server (PS) and the terminal devices. In each round of model training, the PS first broadcasts the global model to the terminal devices in an analog fashion, and then the terminal devices perform local training and upload the updated model parameters to the PS via over-the-air computation (AirComp). First, we propose an AirComp-based adaptive reweighing scheme for the aggregation of local updated models, where the model aggregation weights are directly determined by the uplink transmit power values of the selected devices and which enables the joint learning and communication optimization simply by the device selection and power control. Furthermore, we provide a convergence analysis for the proposed wireless FL algorithm and the upper bound on the expected optimality gap between the expected and optimal global loss values is derived. With instantaneous channel state information (CSI), we formulate the optimality gap minimization problems under both the individual and sum uplink transmit power constraints, respectively, which are shown to be solved by the semidefinite programming (SDR) technique. Numerical results reveal that our proposed wireless FL algorithm achieves close to the best performance by using the ideal FedAvg scheme with error-free model exchange and full device participation.

Index Terms:

Federated Learning, over-the-air computation, device selection, power control, semidefinite relaxation.I Introduction

The future 6th generation (6G) wireless communication systems are envisioned to support various intelligent applications and services, which are empowered by the significant increase of wireless edge devices (e.g., mobile phones and sensors) with growing computation and communication capabilities [1]. A vast amount of data generated by the edge devices can be utilized to train machine learning (ML) models to further enhance the intelligence of those applications and services [2]. Conventional machine learning methods, particularly those based on deep neural networks (DNN), are centralized and require to collect all the raw data to the central server or cloud in order to train the artificial intelligence (AI) model [3]. However, certain privacy and security issues arise during the data migrations, and limited wireless resource also poses some new technical challenges for the design of wireless AI systems [4, 5].

To address the issues of the centralized ML, federated learning (FL), a new distributed ML paradigm, was proposed in [6], which achieves great success and becomes increasingly popular in both the academia and industry. In FL, the distributed terminal devices, orchestrated by a single parameter server (PS), collaboratively train an AI model in an iterative fashion. During the whole iterative process, all participated terminal devices only exchange the model parameters with the PS and keep the raw data locally to protect the privacy and security. Nonetheless, FL faces several new challenges when being implemented in the wireless scenarios: data heterogeneity: different from the centralized ML, FL highly suffers from the data heterogeneity [7, 8], which arises when the distributions of the generated data vary from device to device; and communication complexity: the total training process for FL consumes a large amount of communication resources for serving large bunches of terminal devices, since the desired ML model is in general of high dimension and the number of updating rounds in the training process is thus considerably large [9, 10].

Aimed at enhancing the communication efficiency of the FL, comprehensive studies have been done from a communication perspective [11, 12, 14, 13, 15, 16, 19, 20, 22, 17, 21, 23, 24]. The authors in [11] proposed a joint communication and learning framework and formulated an optimization problem considering the user selection and wireless resource allocation to minimize the FL training loss. In [12], the authors identified the temporal dependency and varying significance of the training rounds, and proposed a joint device selection and bandwidth allocation scheme to maximize the weighted sum of selected clients in the long-term view. In [13], the authors proposed an update-aware device scheduling and resource allocation policy and analyzed the convergence of the FL algorithm with device scheduling. In [14], under the latency constraint, the authors proposed a joint device scheduling and resource allocation scheme to minimize the global loss of wireless FL, which achieves a desirable trade-off between the number of required training rounds and the latency per round. In [15], the authors proposed a joint wireless resource and quantization bits allocation policy to minimize the quantization error while guaranteeing the transmission outage probability of the uplink transmissions for the wireless FL. In [16], the authors proposed a control algorithm to determine the learning parameter to minimize the optimality gap between the expected and optimal loss function values. However, all the aforementioned works are conducted under the digital communications, and consequently the communication overhead and latency still increase with the number of participated edge devices [17]. Recently, by investigating the waveform superposition nature of the wireless multiple access (MAC) channels [18], over-the-air computation (AirComp) enabled analog uplink transmission for model aggregation methods have been proposed for the FL [19, 20, 22, 17, 21, 23, 24]. Specifically, in [19], the authors proposed a joint device scheduling and beamforming design to minimize the mean square error (MSE) of the aggregated signals to accelerate the convergence of the AirComp FL. In [20], the authors proposed an energy-aware device scheduling algorithm under the energy constraint to optimize the AirComp FL performance. In [17], the authors proposed a broadband AirComp FL and investigated the corresponding power control and device scheduling problem, and the authors in [21] further adopts a one-bit quantization scheme to modify the policy adopted in [17]. The authors in [22] proposed a gradient aware power control scheme to enhance the performance of the AirComp FL. In [23], the authors proposed a power control to minimize the optimality gap between the expected and optimal global loss values of the AirComp FL, and parallelly in [24], the authors proposed a joint device selection and power control for the AirComp FL with uniform power scaling and equal weights model aggregation.

All the above researches about FL over wireless channels only considered the uplink transmissions for the model parameters from terminal devices to the PS, assuming the availability of accurate global model at the devices through perfect downlink transmissions. However, the downlink transmissions usually suffer from quantization error, limited transmit power, limited bandwidth, and additive noise, which degrades the performance of the considered FL systems [25, 26, 27, 28, 29]. Therefore, broadcasting of inaccurate global model is also an important issue for the implementation of the FL algorithms. Without considering the wireless channels, the authors in [25] proposed a linear projection method to broadcast a compressed global model to the devices, and the authors in [26] derived the sufficient conditions for controlling the signal-to-noise ratios (SNRs) of both the downlink and uplink transmission to maintain the linear convergence rate of the FL algorithm [26]. Different from [25, 26] and under wireless implementations of FL, the authors in [27, 28] provided the convergence analysis of both the digital and analog downlink transmissions and showed the advantages of the analog downlink transmission, and the same result was presented in [29] numerically. To the best of our knowledge, there are few existing works addressing how to efficiently mitigate the impact of both the downlink and uplink wireless communications on the FL algorithm.

This paper provides a comprehensive analysis on the wireless FL algorithm, considering the analog (uncoded) downlink transmissions for global model broadcasting and AirComp enabled analog uplink transmissions for model aggregation, due to the superiority of the analog downlink and uplink transmissions for wireless FL as mentioned above. Compared to the previous works that only account for the uplink communications of FL [19, 20, 22, 17, 21, 23, 24] and only focus on the convergence analysis for FL [26, 27, 28], the goals of this paper are not only to investigate the impact of both the downlink and uplink wireless communications on the convergence behavior of the considered FL algorithm, but also to mitigate this impact to enhance the FL performance. The main contribution of this paper is summarized as follows:

-

•

We propose an AirComp-based adaptive reweighing scheme for model aggregation, where the adaptive weights are directly determined by the uplink transmit power values of the participated devices in each communication round. With respect to our proposed policy, we analyze the convergence behavior of the wireless FL algorithm and derive the upper bound on the expected optimality gap between the expected and optimal global loss values, which determines how bias the FL converges and theoretically quantifies the impact of both the downlink and uplink wireless communications on the convergence of the wireless FL algorithm, and thus needs to be minimized to enhance the FL performance.

-

•

With only instantaneous channel state information (CSI) known per round of the learning process, we formulate the optimality gap minimization problem as optimizing over the device selection and power control round by round, under both the individual and sum uplink transmit power constraints, respectively. Though the optimality gap minimization problem is a mixed integer programming (MIP) problem, we transform it into a continuous quadratically constrained ratio of two quadratic functions (QCRQ) minimization problem, which is efficiently solved by the semidefinite relaxation (SDR) technique. Furthermore, based on the optimal SDR solution, we derive the optimal device selection and power control to minimize the optimality gap for both the individual and sum uplink power constraint cases.

The reminder of this article is organized as follows. Section II presents the system model. In Section III, we provide the convergence analysis results of the wireless FL system in terms of the optimality gap. In Section IV, we formulate the optimality gap minimization problem and find the optimal device selection and power control to minimize the optimality gap. Numerical results are presented in Section V. Finally, Section VI concludes this paper.

Notation: The bold lower-case letter denotes a vector; the bold upper-case letter denotes a matrix; the calligraphic upper-case letter denotes a set. denotes the expectation operator. denotes the gradient operator. For a vector , denotes the Euclidean norm of ; denotes the transpose of ; denotes the Hermitian transpose of a complex vector . denotes the inner product between vectors and . and denote the null vector and all-one vector, respectively. For a matrix , , , and denote the transpose, trace, and rank, of , respectively. denotes the -th element of . denotes a diagonal matrix with being its diagonal elements. means that matrix is positive semidefinite. denotes the identity matrix. and denote the sets of real numbers and complex numbers, respectively. We denote a circularly symmetric complex Gaussian (CSCG) distribution with the real and imaginary components with variance by . For a set , denotes its cardinality.

II System Model

II-A Preliminaries

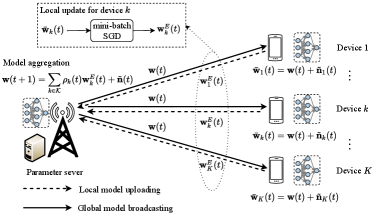

We consider a wireless FL system, as shown in Fig. 1, where one PS coordinates a set of terminal devices through wireless channels to cooperatively train a shared ML model (e.g., DNN), denoted by of dimension . Each terminal device collects its own labeled training data, and constitutes a local data set with data samples, where is the -th input data with dimensions and is the labeled output corresponding to . Then, the total training data set is given as of size .

Though the PS has no access to the data samples distributed at the terminal devices due to the privacy concern, the goal of training a global model can be achieved by solving the following distributed learning problem

| (1) |

where is the fraction of data samples for device and is the local loss function defined as

| (2) |

with being an empirical sample-wise loss function defined by the learning task that quantifies the loss of the model for sample .

For the implementation of FL algorithms, the system solves the distributed learning problem (1) in an iterative fashion following the widely used broadcasting-computation-aggregation (BCA) principle, which involves the following three steps in each iteration: the PS broadcasts a global model to the terminal devices; ) terminal device updates the global model to a local model after several local learning iterations based on its local data samples; the PS aggregates ’s to generate a new global model . In the following, we discuss the considered wireless FL algorithm.

II-B Wireless FL Algorithm

This paper considers the scenario where both the downlink and uplink communications are performed in the analog manner and the quasi-static fading channel model is adopted, i.e., both the downlink and uplink channels remain unchanged during each communication round (corresponding to one FL iteration containing all the three BCA steps) and are independent across different communication rounds following the Rayleigh distribution. Specifically, at the -th communication round, the three steps of the wireless FL algorithm are described as follows:

-

(1)

Broadcasting: In this step, the PS broadcasts the vector to terminal devices, containing the information of the global model and with the downlink transmit power value satisfying

(3) where is the downlink transmit power budget at the -the round. Hence, the received vector at the -th terminal device is given as

(4) where is the complex-valued downlink channel coefficient between the PS and terminal device , and is the independent identically distributed (i.i.d.) CSCG noise vector following the distribution with being the downlink noise power. With perfect knowledge of and the CSI of the downlink channels, terminal device estimates the global model by descaling the received signal , i.e.,

(5) where is the estimated global model for terminal device at the -th round, is the selection indicator for the device at the -th round ( implies that the device is selected to participate in the training at the -th round, and otherwise, ), and is the equivalent noise at the device . Then, terminal device sets its current local model to .

-

(2)

Local Model Update: In this step, terminal device updates its local model by minimizing its local objective (2) via a local optimization algorithm, e.g., the mini-batch stochastic gradient descent (SGD) method, based on its collected data set . When implementing the mini-batch SGD algorithm, the local dataset at the device is divided into several mini-batch with size , and the device runs SGD iterations with each SGD iteration corresponding to one mini-batch. At the -th SGD iteration, , the local model is updated as

(6) where is the learning rate at the -th round, is a mini-batch with size and its data points being independently and uniformly chosen from , and is the stochastic gradient of the local loss function with respect to (w.r.t.) the -th model parameter and randomly sampled mini-batch , i.e.,

(7) with being the -th training data in mini-batch . The initial local model parameter at device before training is set as in (5), i.e., . After SGD iterations, the local model parameter at device is updated as .

-

(3)

Model Aggregation: In this step, the terminal devices upload their updated local model parameters ’s to the PS, and the PS aggregates the received local models to generate a new global model. For the uplink transmissions of ’s, the terminal devices transmit their local model parameters concurrently through AirComp by exploiting the waveform superposition nature of the wireless MAC channel, since the information of interest at the PS for aggregation is just the weighted summation of the local model parameters. Specifically, the updated model parameter at the device is multiplied with a pre-processing factor , which is given as

(8) where is the uplink transmit power value at device , is the complex uplink channel coefficient from device to the PS. With perfect CSI of the uplink channels, the received vector at the PS is given as

(9) where is the i.i.d. CSCG noise vector following the distribution . In this paper, the uplink transmit power values at the devices satisfy the following two types of constraints: For the individual uplink transmit power constraint at each device, the transmit power value of device is supposed to satisfy

(10) where is the individual power budget at the -th round of device ; for the sum uplink transmit power constraint over all devices, the transmit power values of the devices are supposed to satisfy

(11) where is the sum power budget at the -th round of all the participated devices.

The PS scales the received signal with a factor to aggregate and update the global model parameter as

(12) where is set as the summation of all the products , i.e., , with perfect knowledge on all and at the PS. Hence, based on (9) and (12), the model aggregation is given as111The proposed model aggregation scheme requires perfect CSI about both the downlink and uplink channels. Nevertheless, our design and analysis can be extended to the case with imperfect CSI, and the impact of imperfect CSI will be studied for future work.

(13) where is the weight of for aggregation satisfying and is the equivalent noise.

Remark 1.

Due to the dynamic nature of the wireless channels, the terminal devices may encounter a relatively weak downlink channel, i.e., , when downloading the global model from the PS. Then, the received model at device will be severely damaged by the equivalent noise , as shown in (5). Similarly, the devices may also encounter a relatively weak uplink channel, i.e., , when uploading the updated model to the PS. Then, the device may not be capable of transmitting due to the limited transmit power budget according to (8), (10), and (11). Thus, device selection according to the dynamic uplink and downlink channel conditions under the limited transmit power budget is necessary for the wireless FL systems.

As mentioned in Remark 1, when practically implementing the wireless FL algorithm, not all devices are able to participate the training over the whole time. Hence, when considering both the downlink and uplink communications between the PS and the terminal devices, we need to develop an efficient device selection scheme while guaranteeing the convergence of the considered algorithm.

Remark 2.

In our proposed weighted model aggregation scheme (13), under the wireless environment, the adaptive weights in each round are determined directly by the uplink transmit power values of the participated terminal devices, which are controllable and can be optimized to mitigate the impact of wireless communications on the convergence of the considered wireless FL algorithm. This motivates us to design a proper joint device selection and power control scheme.

III Convergence Analysis

In this section, we analyze the convergence behavior of the considered wireless FL algorithm presented in Section II for the general smooth non-convex learning problems. We first present the assumptions and preliminaries, and then present the theoretical results on convergence for the considered wireless FL algorithm in terms of the optimality gap between the expected and optimal global loss values.

III-A Preliminaries

First, we make the following standard assumptions that are commonly adopted in the convergence analysis of the BCA-type FL algorithms [30, 23, 24, 15, 31].

Assumption 1 (L-smooth).

The global loss function is differentiable and the gradient is uniformly Lipschitz continuous with a positive Lipschitz constant , i.e., ,

| (14) |

which is equivalent to

| (15) |

Assumption 2 (Unbiased and bounded variance mini-batch SGD).

The mini-batch SGD is unbiased, i.e.,

| (16) |

and the variance of stochastic gradients in each device is bounded, i.e.,

| (17) |

Assumption 3 (Bounded Gradient Divergence).

The variance of the local gradients for each local device is bounded as

| (18) |

where measures the data heterogeneity [32].

Based on the global model broadcasting in (5), the mini-batch local SGD (6) and (7), and the proposed adaptive reweighing model aggregation scheme (13), the PS updates the global model at the -th round as

| (19) | ||||

where follows the local mini-batch SGD iterations in (6), is to due to , and follows (5) and .

Define the local model difference as

| (20) | ||||

and define the virtual noisy global model as

| (21) |

Thus, we can further rewrite as

| (22) |

III-B Theoretical Results on Convergence

We first present the following lemmas and their proofs that are used in the proof of Theorem 1.

Lemma 1.

The virtual noisy global model defined in (21) is unbiased, i.e.,

| (23) |

and the variance is bounded as

| (24) |

Proof:

Lemma 2.

The expectation of the square norm of the model difference at each round for device is bounded by

| (26) | ||||

Lemma 3.

The sum of the expected square norm of the difference between the local updated model at each SGD iteration and the previous global model is bounded by

| (27) | ||||

Lemma 4.

The expectation of the square norm of the gradient of the global loss function at each round is bounded by

| (28) |

where is the optimal global loss function value.

The proofs of Lemma 2, 3, 4 follow the same ideas in [15], [31], [33], with a slight modification according to the problem that we consider. Now, we present the main convergence analysis results in the following theorem.

Theorem 1.

Proof:

Please see Appendix. A. ∎

Remark 3.

The expected optimality gap between the expected and optimal global loss values given in the right hand side (RHS) of (1) reveals several important insights:

-

1.

When the learning rate is set small enough, i.e., , we have , which implies . In this case, the proposed wireless FL algorithm converges to a biased solution, and the expected optimality gap is upper bounded only by the the last two terms on the RHS of (1), which is a linear combination of the performance gap in each round given in (1).

-

2.

The performance gap in each round consists of 4 terms - with clear physical meanings: term is caused by gradient variance and data heterogeneity, term is caused solely by data heterogeneity, and terms and are caused by the downlink and uplink wireless communications, respectively.

-

3.

If the FL algorithm is performed in the ideal environment, where the broadcasting and model aggregation are not decayed by wireless channels, including the channel fading and additive noise, terms and equals to zero. In this case, the proposed FL algorithm still converges to a biased solution due to the impact of gradient variance and data heterogeneity, which coincides the analysis of inconsistency issue of FL in [34].

IV Optimality Gap Minimization

In this section, we present the joint device selection and power control to minimize the optimimality gap between the expected and optimal global loss values derived in Theorem 1 for the considered wireless FL system under both the individual and sum power constraints, respectively.

IV-A Problem Formulation

As mentioned in Remark 3, by properly choosing the learning rate to guarantee the convergence of the proposed wireless FL algorithm, the optimality gap between the expected and optimal global loss values derived in (1) becomes

| (31) |

which determines the performance of the algorithm when converges and needs to be minimized. Specifically, with instantaneous CSI in each round, we minimize the performance gap over the device selection indicator , downlink transmit power value and uplink transmit power value round by round. Besides, we ignore the constant terms and in that is related to the gradient variance and data heterogeneity, and focus on minimizing the sum of terms and in , since the goal of this paper is to minimize the impact of the downlink and uplink wireless communications on the convergence of the considered wireless FL algorithm. Hence, by dropping the notation for simplicity, we can formulate the following performance gap minimization problem as

| (32a) | ||||

| s. t. | (32b) | |||

| (32c) | ||||

| (32d) | ||||

where , , (32b) denotes the feasible set of the selection indicators, and (32c) is the downlink transmit power constraint with . For the individual power constraint, in (32d) is given as

| (33) |

which is the matrix-vector form of (10), with , being a diagonal matrix with and all other entries being zero; for the sum power constraint, in (32d) is given as

| (34) |

which is the matrix-vector form of (11), with .

Remark 4.

Intuitively, directly minimizing in (31) over multiple rounds with non-causally known CSI could result in better performance than minimizing round by round. However, the unavailability of non-causal information of model parameter and at the PS and device , which determines the downlink and uplink transmit power constraints (32c) and (32d), hinders us to directly apply joint optimization over multiple rounds. Though some existing literature introduces additional assumption that or is upper bounded by some constants [16, 35], which can avoid the requirement of non-causal model information, such assumption is not satisfied for many practical situations, especially when the model dimension is large, and is thus beyond scope of this paper. Hence, this paper chooses to minimize round by round with only causal CSI, since is a linear combination of , as discussed above.

IV-B Optimal Solution

In this section, we propose a joint device selection and power control algorithm to solve problem (32). Obviously, the formulated optimization problem (32) is a typical MIP problem, where the difficulty for solving this problem is the binary selection variables and the non-convex objective function (32a).

However, we could replace the device selection with the uplink transmit power value , based on the observation that the selection indicator is always coupled with uplink transmit power value , and independent of the downlink transmit power value , as shown in (32). Hence, the device is selected only when its uplink transmit power value is positive. Thus, we can drop the selection indicator in (32a) and (32d) and its associated constraint (32b). Besides, to minimize the objective function (32a), the constraint (32c) should be met with equality, i.e., , since (32a) monotonically decreases with . Hence, problem (32) can be equivalently transformed as

| (35a) | ||||

| s. t. | (35b) | |||

For the individual uplink power constraint case, is given as

| (36) |

and for the sum uplink power constraint case, is given as

| (37) |

By rearranging the objective function (35a) in the matrix-vector form, problem (35) is equivalent to

| (38) | ||||

| s. t. |

where with and . Now, problem (38) is a typical QCRQ minimization problem. It has been proved in [36] that with the given constraint (35b) for both the individual and sum uplink power constraint cases (36) and (37), the QCRQ problem (38) can be equivalently rewritten as the following homogeneous version by introducing a real auxiliary variable with , i.e.,

| (39a) | ||||

| s. t. | (39b) | |||

| (39c) | ||||

where for the individual uplink power constraint case, in (39c) is given as

| (40) |

and for the sum uplink power constraint case, in (39c) is given as

| (41) |

Though problem (39) is still non-convex, it can be approximated by its SDR [37]. First, we make the change of variables , and rewrite (39) as

| (42a) | ||||

| s. t. | (42b) | |||

| (42c) | ||||

where and are given as

| (43) |

For the individual uplink power constraint case, in (42c) is given as

| (44) |

where is given as

| (45) |

and for the sum uplink power constraint case, in (42c) is given as

| (46) |

where is given as

| (47) |

Next, by introducing a new variable , which is positive semidefinite (PSD) with rank-one, problem (42) is equivalent to

| (48a) | ||||

| s. t. | (48b) | |||

| (48c) | ||||

| (48d) | ||||

| (48e) | ||||

where for the individual uplink transmit power constraint case, we obtain

| (49) |

for the sum uplink transmit power constraint case, we obtain

| (50) |

Now, problem (48) is still non-convex due to the non-convex rank-one constrain (48d). However, we can ignore the rank-one constraint (48d) and consider the relaxed version of problem (48) as

| (51a) | ||||

| s. t. | (51b) | |||

which is convex and can be efficiently solved by using CVX [38], where we denote the optimal solution to problem (51) as . In the following Theorem 2, we show how to directly derive the optimal solution to the original problem (35) based on for both the individual and sum uplink transmit power constraint cases.

Theorem 2.

Given the optimal solution to problem (51) for both the individual and sum uplink transmit power constraint cases, we construct its -th order leading principal submatrix by deleting its st row and column, which is rank-one and can be decomposed as . Then, the optimal solution to the original problem (35) is given as , where is the -th diagonal element of .

Proof:

The rank-one property of can be explored by utilizing the strong duality between problem (51) and its dual problem, and the special structure of , , , and , similar to the proof of Theorem 1 in [39]. Then, given the rank-one decomposition of as , it’s easy to verify that there always exists a rank-one decomposition of as with . Finally, according to Theorem 3.2 in [36], the optimal solution to the original problem (35) is obtained as . ∎

Finally, the proposed wireless FL algorithm is summarized in Algorithm 1. During each communication round, our proposed device selection and power control scheme only requires to solve one semidefinite programming (SDP) problem in (51). Since the number of the constraints is less than the problem size , the computation complexity of solving problem (51) is [37, 40], which is polynomial w.r.t. the number of the devices.

V Numerical Results

This section evaluates the performance of our proposed wireless FL algorithm for image classification on the well-known MNIST [41] and CIFAR-10 [42] datasets, respectively, where MNIST dataset consists of 10 classes of black-and-white handwriting digits ranging from “0” to “9” with 60000 training and 10000 test samples, and CIFAR-10 dataset consists of 10 classes of colored objects with 50000 training and 10000 test samples.

Here, both the uplink and downlink channels follow the i.i.d. Rayleigh fading, i.e., and are modeled as i.i.d., CSCG random variables with zero mean and unit variance. The downlink and upink noise variances are set as . Given the model dimension , the downlink transmit power budget is set as and the individual uplink transmit power budget is set as , . We consider local devices, and set , , and [28]. The learning rates for both the two tasks are set as with a decaying rate of 0.995 for every 30 communication rounds, which satisfies the condition in Remark 3. We consider balanced and unbalanced data size settings, where we set for the balanced data size setting, and we set being uniformly distributed in the range of for the unbalanced data size setting.

For the proposed scheme, we only present the results with the individual uplink transmit power constraint, and the cases with the sum uplink transmit power constraint are omitted for conciseness, since their results are the same as the individual uplink transmit constraints cases. For performance comparison, we consider three baselines: the ideal FedAvg scheme [6], where the broadcasting and model aggregation are not decayed by the wireless channels including the channel fading and additive noise, with full device participation and fixed weight in (1) for device ; the device selection with MSE threshold scheme, where maximum downlink power transmission and MSE threshold based device selection [19] are adopted; the truncated channel inversion scheme, where maximum downlink power transmission and truncated channel inversion for the uplink transmission and model aggregation in [28] and [43] are adopted.

V-A MNIST dataset

For this task, we aim to train a convolution neural network (CNN) as the classifier model, which consists of three convolution layers (each with 8, 16, and 32 channels, respectively), each followed by a max pooling layer with stride 2; fully connected layer with 10 units; and finally a softmax output layer. All convolutional layers are also followed by batch normalization layers and mapped by ReLU activation. We consider both the i.i.d. and non-i.i.d. cases for this task. For the i.i.d. case, we randomly assign training samples to each device ; while for the non-i.i.d. case, we split the training samples into 5 disjoint subsets with each subset containing 2 classes of images, and each subset is chose to randomly assign training samples to devices. Hence, each device only contains two classes of images and different devices contains different classes of images.

Fig. 2 compares the performance of all the four schemes w.r.t. the communication round in terms of the test accuracy with balanced data size on the MNIST dataset. For the i.i.d. case in Fig. 2(a), it is observed that the test accuracies of the ideal FedAvg scheme, the proposed scheme, and the device selection with MSE threshould scheme increase with the communication round and finally converge. The proposed scheme achieves comparable test accuracy performance to the ideal FedAvg scheme, where the average accuracy over the last 20 communication rounds for the ideal FedAvg scheme is and the average accuracy over the last 20 communication rounds for the proposed scheme is . Besides, the proposed scheme outperforms the device selection with MSE threshould scheme, whose average accuracy over the last 20 communication rounds is . It is observed that all the above three schemes outperform the truncated channel inversion scheme, whose average accuracy over the last 20 communication rounds is . For the truncated channel inversion scheme, the test accuracy first increase with the communication round during round the first 125 rounds, and then becomes unstable during the rest communication rounds, and does not guarantee convergence. A possible explanation for this phenomenon is that the device selection of the truncated channel inversion scheme is only based on the uplink channel conditions, and the equal weight aggregation in this scheme gives more weight for the local model that damaged by the downlink communications, which degrades the training performance.

For the non-i.i.d. case in Fig. 2(b), all the schemes suffer from performance degradation compared to the i.i.d. case in Fig. 2(a), except for the ideal FedAvg scheme, whose average accuracy over the last 20 communication rounds is . The average accuracy over the last 20 communication rounds for the proposed scheme is , which is slightly worse than that of the ideal FedAvg scheme, but still larger than that of the device selection with MSE threshold scheme, whose average accuracy over the last 20 communication rounds is . The truncated channel inversion scheme still has the worst performance, whose convergence is still not guaranteed. Besides, the increasing trend of the proposed scheme becomes less stable compared to that in the i.i.d. case in Fig. 2(a), which is obviously caused by the non-i.i.d. data distributions.

Fig. 3 compares the performance of the four schemes w.r.t. the communication round in terms of the test accuracy with unbalanced data size on the MNIST dataset. For the i.i.d. case in Fig. 3(a), similar to the balanced data size scenario in Fig. 2(a), the test accuracies of the ideal FedAvg scheme, the proposed scheme, and the device selection with MSE threshold scheme increase with the communication round and finally converge. The average accuracy over the last 20 communication rounds of the proposed scheme is , which is slightly lower than the test accuracy of the ideal FedAvg scheme, whose average accuracy over the last 20 communication rounds is , but still larger than both the device selection with MSE threshold and truncated channel inversion schemes and, whose average accuracies over the last 20 communication rounds are and , respectively.

For the non-i.i.d. case in Fig. 3(b), all the four schemes suffer from performance degradation compared to the i.i.d. case in Fig. 3(a), where the average accuracies over the last 20 communication rounds for the ideal FedAvg, proposed, device selection with MSE threshold, and truncated channel inversion schemes are , , , and , respectively. Obviously, the performance of the proposed scheme is still slightly worse than the ideal FedAvg scheme. Note that even the increasing trends of the test accuracy for the truncated channel inversion scheme are still unstable for both the i.i.d. and non-i.i.d. cases, they shows the convergence trend, which is slightly different from the balanced data size case in Fig. 2. This is possibly due to that the equal weight aggregation for the truncated channel inversion scheme combats the participation of the local model damaged by the downlink communications in certain level, since the weight for perfect model aggregation is inherently different in this unbalanced data size case. However, the performance of our proposed scheme still outperforms the truncated channel inversion scheme with unbalanced data size.

V-B CIFAR-10 dataset

For this more challenging task, we aim to train a more complex CNN model, which consists of three convolution layers (each with 32, 32, and 64 channels, respectively), each followed by a max pooling layer with stride 2; two fully connected layers (the first with 64 units and mapped by ReLU activation, and the second with 10 units); and final softmax output layer. All convolutional layers are also followed by batch normalization layers and mapped by ReLU activation. We consider the similar i.i.d. and non-i.i.d. cases to that in Section V-A, where we assign training samples in the same way.

Fig. 4 compares the performance of all the four schemes w.r.t. the communication round in terms of the test accuracy with balanced data size on the CIFAR-10 dataset. For the i.i.d. case in Fig. 4(a), it is observed that the test accuracies of the ideal FedAvg scheme, the proposed scheme, and the device selection with MSE threshold scheme increase with the communication round and finally converge, while the truncated channel inversion scheme fails to converge. The proposed scheme still achieves comparable test accuracy performance to the ideal FedAvg scheme, where the average accuracy over the last 20 communication rounds for the ideal FedAvg scheme is and the average accuracy over the last 20 communication rounds for the proposed scheme is , and outperforms the performance of the device selection with MSE threshold scheme, whose average accuracy over the last 20 communication rounds is . For the non-i.i.d. case in Fig. 4(b), the convergences of the ideal FedAvg scheme, the proposed scheme, and the device selection with MSE threshold scheme are still guaranteed. However, all the above three schemes suffer from performance degradation compared to the i.i.d. case in Fig. 2(a), and their average accuracies over the last 20 communication rounds drop to , , and , respectively. In this case, the truncated channel inversion scheme still fails to converge.

Fig. 5 compares the performance of the four schemes w.r.t. the communication round in terms of the test accuracy with unbalanced data size on the CIFAR-10 dataset. For the i.i.d. case in Fig. 5(a), similar to the balanced data size scenario in Fig. 4(a), the test accuracies of the ideal FedAvg scheme, the proposed scheme, and the device selection with MSE threshold scheme still increase with the communication round and finally converge, while the truncated channel inversion scheme still fails to converge. The performance of the proposed scheme is still slightly worse than the ideal FedAvg scheme, where the average accuracies over the last 20 communication rounds for the ideal FedAvg and proposed schemes are and , respectively. The proposed scheme still outperforms the device selection with MSE threshold scheme, whose average accuracies over the last 20 communication rounds is . For the non-i.i.d. case in Fig. 5(b), the truncated channel inversion scheme still fails to converge, while the convergences of the rest three schemes are still be guaranteed. Similar to the balanced data size case in Fig. 4, those three schemes suffer from performance degradation compared to the i.i.d. case in Fig. 5(a), where the average accuracies over the last 20 communication rounds for the ideal FedAvg scheme, proposed scheme, and the device selection with MSE threshold scheme drop to , , and , respectively. Obviously, the performance of the proposed scheme is still slightly worse than the ideal FedAvg scheme, and better than the device selection with MSE threshold scheme. Intuitively, the reason why the truncated channel inversion scheme fails to converge for the CIFAR-10 dataset in Fig. 4 and 5 is the selection criterion is only based on the uplink channel conditions and it hardly able to mitigate the impact of both the downlink and uplink communications on the convergence of FL for a more complex task.

VI Conclusion

In this paper, we studied the joint device selection and power control for the wireless FL system, considering both the analog downlink and uplink communications between the PS and terminal devices. We first proposed an AirComp-based adaptive reweighing scheme for model aggregation, where the weights are determined by the selected devices and their uplink transmit power values. Then, we analyzed the convergence behavior of the proposed algorithm, in terms of the expected optimality gap between the expected and optimal global loss values, and revealed how the downlink and uplink wireless communications affects the convergence of the considered FL algorithm. Based on the obtained theoretical results, we formulated an optimality gap minimization problem, for both the individual and sum uplink transmit power constraints, respectively, where we minimized the optimality gap round by round with instantaneous CSI in each round and derived the optimal joint device selection and power control by using the SDR technique for both the two constraint cases. Finally, numerical results showed that the proposed scheme performs very close to the ideal FedAvg scheme, and significantly outperforms other baseline schemes, i.e., the device selection with MSE threshold and conventional truncated channel inversion AirComp schemes, for both the i.i.d./non-i.i.d data distributions, and balanced/unbalanced data sizes across the devices.

Appendix A Proof of Theorem 1

First, according to (15), we have

where follows from (22). By taking expectation on both sides of (A), we obtain

| (53) |

Next, we bound the terms and in the RHS of (A) in the following.

First, we study the term .

| (54) |

where follows the inequality , follows (24) in Lemma 1, follows the latter inequality again, and uses the fact that with being the CSCG noise vector, and follows the Jensen’s inequality.

Then, we study the term .

| (56) |

where is due to the fact that both and are zero mean vectors and independent of , follows Assumption 2, follows the identity , and follows the inequality .

For (A), we further need to bound the terms and . First, we obtain

| (57) |

where is due to , follows the Cauchy-Schwarz inequality, and follows Assumption 3 and .

References

- [1] K. B. Letaief, W. Chen, Y. Shi, J. Zhang and Y. A. Zhang, “The roadmap to 6G: AI empowered wireless networks,” IEEE Commun. Mag., vol. 57, no. 8, pp. 84-90, Aug. 2019.

- [2] M. Chiang and T. Zhang, “Fog and IoT: An overview of research opportunities,” IEEE Internet Things J., vol. 3, no. 6, pp. 854–864, Dec. 2016.

- [3] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, no. 7553, pp. 436-444, May 2015.

- [4] C.-X. Jiang, H.-J. Zhang, Y. Ren, Z. Han, K.-C. Chen, and L. Hanzo, “Machine learning paradigms for next-generation wireless networks,” IEEE Wireless Commun., vol. 24, no. 2, pp. 98-105, Apr. 2017.

- [5] C. Zhang, P. Patras, and H. Haddadi, “Deep learning in mobile and wireless networking: A survey,” IEEE Commun. Surveys Tuts., vol. 21, no.3, pp. 2224-2287, 3rd Quart., 2019.

- [6] H. B. McMahan, E.Moore, D. Ramage, S. Hampson, and B. A. Y. Arcas, “Communication-efficient learning of deep networks from decentralized data,” in Proc. Int. Conf. Artif. Int. Stat. (AISTATS), 2017, pp. 1273–1282.

- [7] J. Konen, H. B. McMahan, F. X. Yu, P. Richtárik, A. T. Suresh, and D. Bacon, “Federated learning: Strategies for improving communication efficiency,” 2016. [Online]. Available: https://arxiv.org/pdf/1610.05492.pdf

- [8] W. Y. B. Lim, N. C. Luong, D. T. Hoang, Y. Jiao, Y.-C. Liang, Q. Yang, D. Niyato, and C. Miao, “Federated learning in mobile edge networks: A comprehensive survey,” IEEE Commun. Surveys Tuts., vol. 22, no. 3, pp. 2031–2063, 2nd Quart., 2020.

- [9] Y. Zhao, M. Li, L. Lai, N. Suda, D. Civin, and V. Chandra, “Federated learning with non-iid data,” 2018. [Online]. Available: https://arxiv.org/pdf/1806.00582.pdf

- [10] T. Li, A. K. Sahu, M. Zaheer, M. Sanjabi, A. Talwalkar, and V. Smith, “Federated optimization in heterogeneous networks,” 2018. [Online]. Available: https://arxiv.org/pdf/1812.06127.pdf

- [11] M. Chen, Z. Yang, W. Saad, C. Yin, H. V. Poor, and S. Cui, “A joint learning and communications framework for federated learning over wireless networks,” IEEE Trans. Wireless Commun., vol. 20, no. 1, pp. 269–283, Jan. 2021.

- [12] J. Xu and H. Wang, “Client selection and bandwidth allocation in wireless federated learning networks: A long-term perspective,” IEEE Trans. Wireless Commun., vol. 20, no. 2, pp. 1188–1200, Feb. 2021.

- [13] M. M. Amiri, D. Gndz, S. R. Kulkarni, and H. V. Poor, "Convergence of update aware device scheduling for federated learning at the wireless edge," IEEE Trans. Wireless Commun., vol. 20, no. 6, pp. 3643-3658, June 2021.

- [14] W. Shi, S. Zhou, Z. Niu, M. Jiang, and L. Geng, “Joint device scheduling and resource allocation for latency constrained wireless federated learning,” IEEE Trans. Wireless Commun., vol. 20, no. 1, pp. 453–467, Jan. 2021.

- [15] Y. Wang, Y. Xu, Q. Shi,and T.H. Chang, T. H, “Quantized federated learning under transmission delay and outage constraints,” 2021. [Online]. Available: https://arxiv.org/pdf/2106.09397.pdf

- [16] S. Wang, T. Tuor, T. Salonidis, K. K. Leung, C. Makaya, T. He, and K. Chan, “Adaptive federated learning in resource constrained edge computing systems,” IEEE J. Sel. Areas Commun., vol. 37, no. 6, pp.1205–1221, Jun. 2019.

- [17] G. Zhu, Y. Wang, and K. Huang, “Broadband analog aggregation for low-latency federated edge learning,” IEEE Trans. Wireless Commun., vol. 19, no. 1, pp. 491–506, Jan. 2020.

- [18] B. Nazer and M. Gastpar, “Computation over multiple-access channels,” IEEE Trans. Info. Theory, vol. 53, no. 10, pp. 3498–3516, Oct. 2007.

- [19] K. Yang, T. Jiang, Y. Shi, and Z. Ding, “Federated learning via over-the-air computation,” IEEE Trans. Wireless Commun., vol. 19, no. 3, pp. 2022–2035, Mar. 2020.

- [20] Y. Sun, S. Zhou, Z. Niu and D. Gündüz, "Dynamic scheduling for over-the-air federated edge learning with energy constraints," IEEE J. Sel. Areas Commun., vol. 40, no. 1, pp. 227-242, Jan. 2022.

- [21] G. Zhu, Y. Du, D. Gndz, K. Huang, “One-bit over-the-air aggregation for communication-efficient federated edge learning: Design and convergence analysis,” IEEE Trans. Wireless Commun., vol. 20, no. 3, pp. 2120-2135, Mar. 2020.

- [22] N. Zhang and M. Tao, “Gradient statistics aware power control for over-the-air federated learning,” IEEE Trans. Wireless Commun., vol. 20, no. 8, pp. 5115-5128, Aug. 2021.

- [23] X. Cao, G. Zhu, J. Xu, and S. Cui, “Optimized power control for over-the-air federated edge learning,” in IEEE Int. Conf. Commun. (ICC), 2020, pp. 1–6.

- [24] X. Fan, Y. Wang, Y. Huo, and Z. Tian, “Joint optimization of Communications and federated learning over the air,” 2021. [Online]. Available: https://arxiv.org/pdf/2104.03490.pdf

- [25] S. Caldas, J. Konecny, H. B. McMahan, and A. Talwalkar, “Expanding the reach of federated learning by reducing client resource requirements,” 2018. [Online]. Available: https://arxiv.org/pdf/1812.07210.pdf

- [26] X. Wei and C. Shen. “Federated learning over noisy channels: Convergence analysis and design examples.” 2021. [Online]. Available: https://arxiv.org/pdf/2101.02198.pdf

- [27] M. M. Amiri, D. Gndz, S. R. Kulkarni, and H. Vincent Poor, “Federated learning with quantized global model updates,” 2020. [Online]. Available: https://arxiv.org/pdf/2006.10672.pdf

- [28] M. M. Amiri, D. Gndz, S. R. Kulkarni, and H. Vincent Poor, “Convergence of federated learning over a noisy downlink,” IEEE Trans. Wireless Commun., early access. doi: 10.1109/TWC.2021.3103874.

- [29] J.-H. Ahn, O. Simeone, and J. Kang, “Cooperative learning via federated distillation over fading channels,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), 2020, pp. 8856–8860.

- [30] X. Li, K. Huang, W. Yang, S. Wang, and Z. Zhang, “On the convergence of fedavg on non-iid data,” in Int. Conf. Learn. Represent. (ICLR), 2020.

- [31] X. Lian, C. Zhang, H. Zhang, C.-J. Hsieh, W. Zhang, and J. Liu, “Can decentralized algorithms outperform centralized algorithms? a case study for decentralized parallel stochastic gradient descent,” in Proc. Adv. Neural Inf. Process. Syst. (NeurIPS), 2017, pp. 5336-5346.

- [32] X. Zhang, M. Hong, S. Dhople, W. Yin and Y. Liu.“Fedpd: A federated learning framework with optimal rates and adaptivity to non-iid data.” 2020. [Online]. Available: https://arxiv.org/pdf/2005.11418.pdf

- [33] Y. J. Cho, J. Wang, and G. Joshi. “Client selection in federated learning: Convergence analysis and power-of-choice selection strategies,” 2020. [Online]. Available: https://arxiv.org/pdf/2010.01243.pdf

- [34] J. Wang, Q. Liu, H. Liang, G. Joshi, and H. V. Poor, “Tackling the objective inconsistency problem in heterogeneous federated optimization,” 2020. [Online]. Available: https://arxiv.org/pdf/2007.07481.pdf

- [35] X. Cao, G. Zhu, J. Xu, and S. Cui, “Transmission power control for over-the-air federated averaging at network edge”, 2021. [Online]. Available: https://arxiv.org/pdf/2111.05719.pdf

- [36] A. Beck and M. Teboulle, “On minimizing quadratically constrained ratio of two quadratic functions,” J. Convex Anal., vol. 17, no. 3, pp. 789–804, Mar. 2010.

- [37] Z.-Q. Luo, W.-K. Ma, A. M.-C. So, Y. Ye, and S. Zhang, “Semidefinite relaxation of quadratic optimization problems,” IEEE Signal Process. Mag., vol. 27, no. 3, pp. 20–34, May 2010.

- [38] M. Grant and S. Boyd. (2016). CVX: Matlab software for disciplined convex programming. [Online]. Available: http://cvxr.com/cvx

- [39] F. Jiang, J. Chen, and A. L. Swindlehurst, “Optimal power allocation for parameter tracking in a distributed amplify-and-forward sensor network,” IEEE Trans. Signal Process., vol. 62, no. 9, pp. 2200–2211, Mar. 2014.

- [40] C. Helmberg, F. Rendl, R. Vanderbei, and H. Wolkowicz, “An interiorpoint method for semidefinite programming,” SIAM J. Optim., vol. 6, no. 2, pp. 342–361, 1996.

- [41] Y. LeCun, C. Cortes, and C. Burges, “The MNIST database of handwritten digits,” http://yann.lecun.com/exdb/mnist/, 1998.

- [42] A. Krizhevsky and G. Hinton, “Learning multiple layers of features from tiny images,” M.S. thesis, Univ. Toronto, Toronto, ON, Canada, Tech. Rep., 2009. [Online]. Available: http://www.cs.toronto.edu/ kriz/ learning-features-2009-TR.pdf

- [43] S. Xia, J. Zhu, Y. Yang, Y. Zhou, Y. Shi and W. Chen, “Fast convergence algorithm for analog federated learning,” in IEEE Int. Conf. Commun. (ICC), 2020, pp. 1–6.