Joint Denoising and Demosaicking with

Green Channel Prior for Real-world Burst Images

Abstract

Denoising and demosaicking are essential yet correlated steps to reconstruct a full color image from the raw color filter array (CFA) data. By learning a deep convolutional neural network (CNN), significant progress has been achieved to perform denoising and demosaicking jointly. However, most existing CNN-based joint denoising and demosaicking (JDD) methods work on a single image while assuming additive white Gaussian noise, which limits their performance on real-world applications. In this work, we study the JDD problem for real-world burst images, namely JDD-B. Considering the fact that the green channel has twice the sampling rate and better quality than the red and blue channels in CFA raw data, we propose to use this green channel prior (GCP) to build a GCP-Net for the JDD-B task. In GCP-Net, the GCP features extracted from green channels are utilized to guide the feature extraction and feature upsampling of the whole image. To compensate for the shift between frames, the offset is also estimated from GCP features to reduce the impact of noise. Our GCP-Net can preserve more image structures and details than other JDD methods while removing noise. Experiments on synthetic and real-world noisy images demonstrate the effectiveness of GCP-Net quantitatively and qualitatively.

Index Terms:

Real-world burst images, jointly denoising and demosaicking, green channel prior.I Introduction

Most consumer grade digital cameras capture natural images using a single-chip CCD/CMOS sensor covered by a color filter array (CFA), resulting in incomplete color sampling at each photoreceptor. The process of interpolating the missing colors from mosaicked CFA data is called color demosaicking. The captured data is inevitably corrupted by noise, especially under low-light conditions. Denoising and demosaicking play crucial roles to obtain high quality images in the camera ISP (image signal processing) pipeline, and a variety of image denoising and demosaicking methods [1, 2, 3, 4, 5] have been proposed.

Previous demosaicking and denoising methods are usually designed independently and implemented sequentially in the ISP. However, the demosaicking errors will complicate the denoising process, or the denoising artifacts can be amplified in the demosaicking process. Therefore, joint denoising and demosaicking (JDD) has received considerable research interests [6, 7, 8, 9, 10, 11, 12, 13]. Traditional JDD methods resort to image priors, such as piecewise smoothness [6] and non-local self-similarity [7], and employ an optimization model for this joint task. Those handcrafted priors, however, are not accurate enough to reproduce the complex image local structures. Recent JDD methods are mostly data-driven learning methods, where a deep convolutional neural network (CNN) is trained on pairwise dataset with noisy mosaicked images and their clean full color ground truths [9, 10, 12, 13, 14]. By learning deep priors from a large amount of data, those CNN based methods achieve much better JDD performance than traditional model based methods.

Existing JDD methods, including those CNN based ones, mostly work on a single CFA image, and we call them JDD-S methods, which have several limitations when applying to real-world CFA data. First, their performance will deteriorate significantly on CFA images with strong noise level. This often occurs for low-end devices such as smartphone cameras due to the small sensor and lens. The situation becomes worse under low-light imaging conditions. Second, current JDD-S methods [9, 10, 12, 13, 14] usually assume additive white Gaussian noise (AWGN) in the training process, which cannot accurately describe the distribution of real-world noise. As a result, strong visual artifacts will appear in the JDD outputs of real-world noisy CFA images.

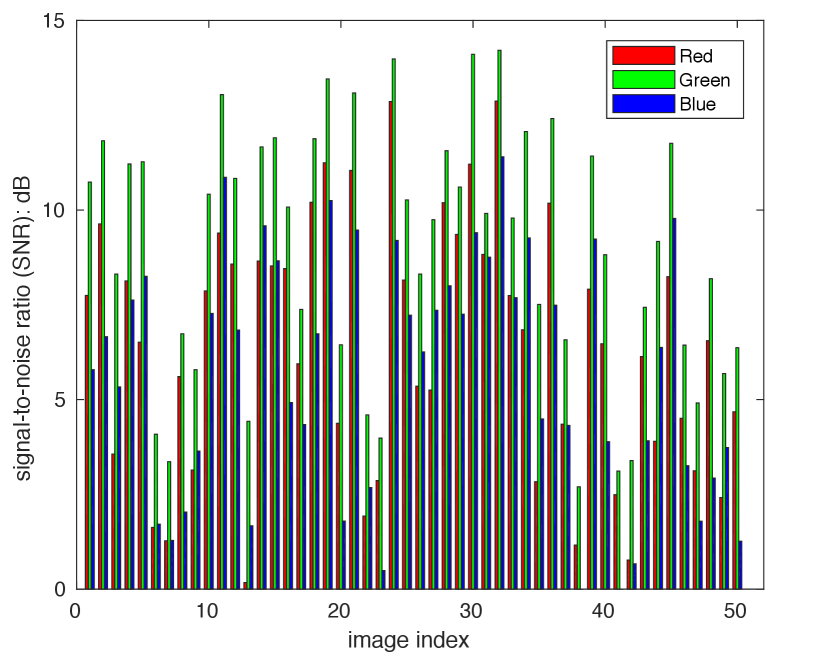

Recently, it has been shown that the denoising performance can be significantly improved by using a set of burst images instead of a single image, especially for the low-light imaging conditions [16, 17, 18, 19, 20]. Inspired by the success of burst image denoising, we propose to perform JDD with real-world burst images, which is called JDD-B. With some realistic noise modeling methods [16, 21], we can synthesize noisy burst images with clean ground truth by reversing the ISP pipeline on high quality video sequences and adding noise into them. Such pairwise data can be used to train the JDD-B model. It is well-known that the green channel of images captured by single-chip digital cameras has better quality than red and blue channels. On one hand, the green channel has twice the sampling rate than red/blue channels in most CFA patterns (e.g., Bayer pattern). On the other hand, the sensitivity of green is better than red/blue [22]. As a result, the green channel has more texture information and higher SNR than red/blue channels in most natural images, which is demonstrated in Fig. 1 by using the SIDD dataset [15]. We call the above fact and prior knowledge the green channel prior (GCP), and use the GCP to design our JDD-B network, namely GCP-Net, to improve the JDD-B performance on real-world burst images.

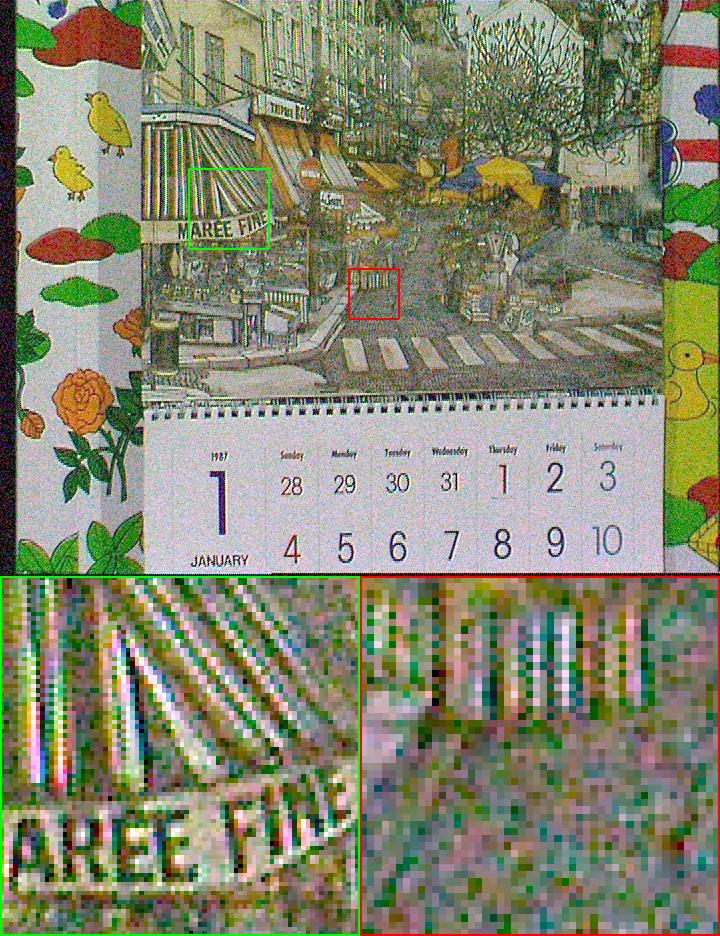

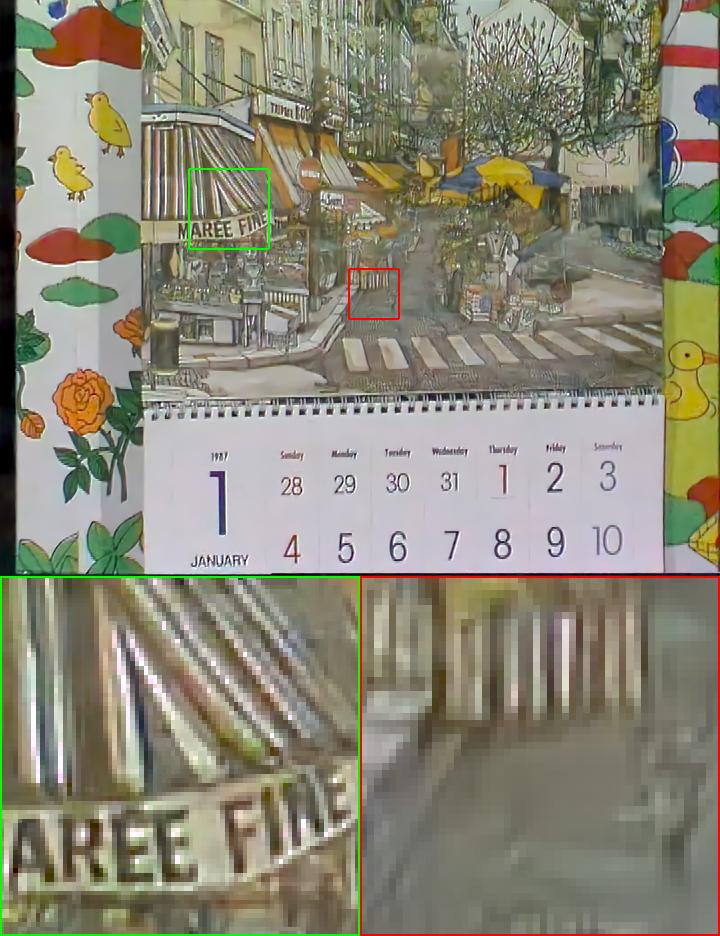

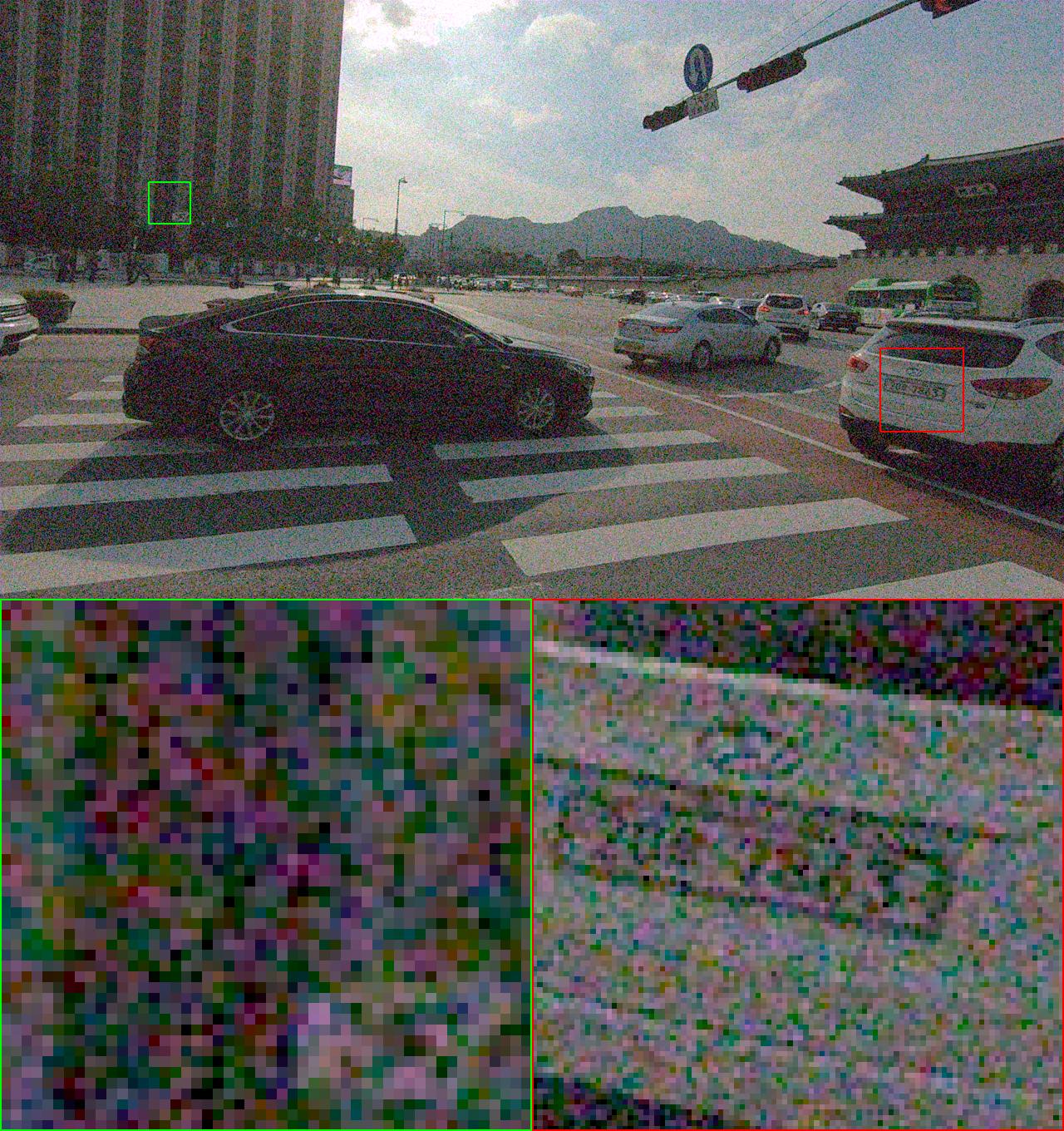

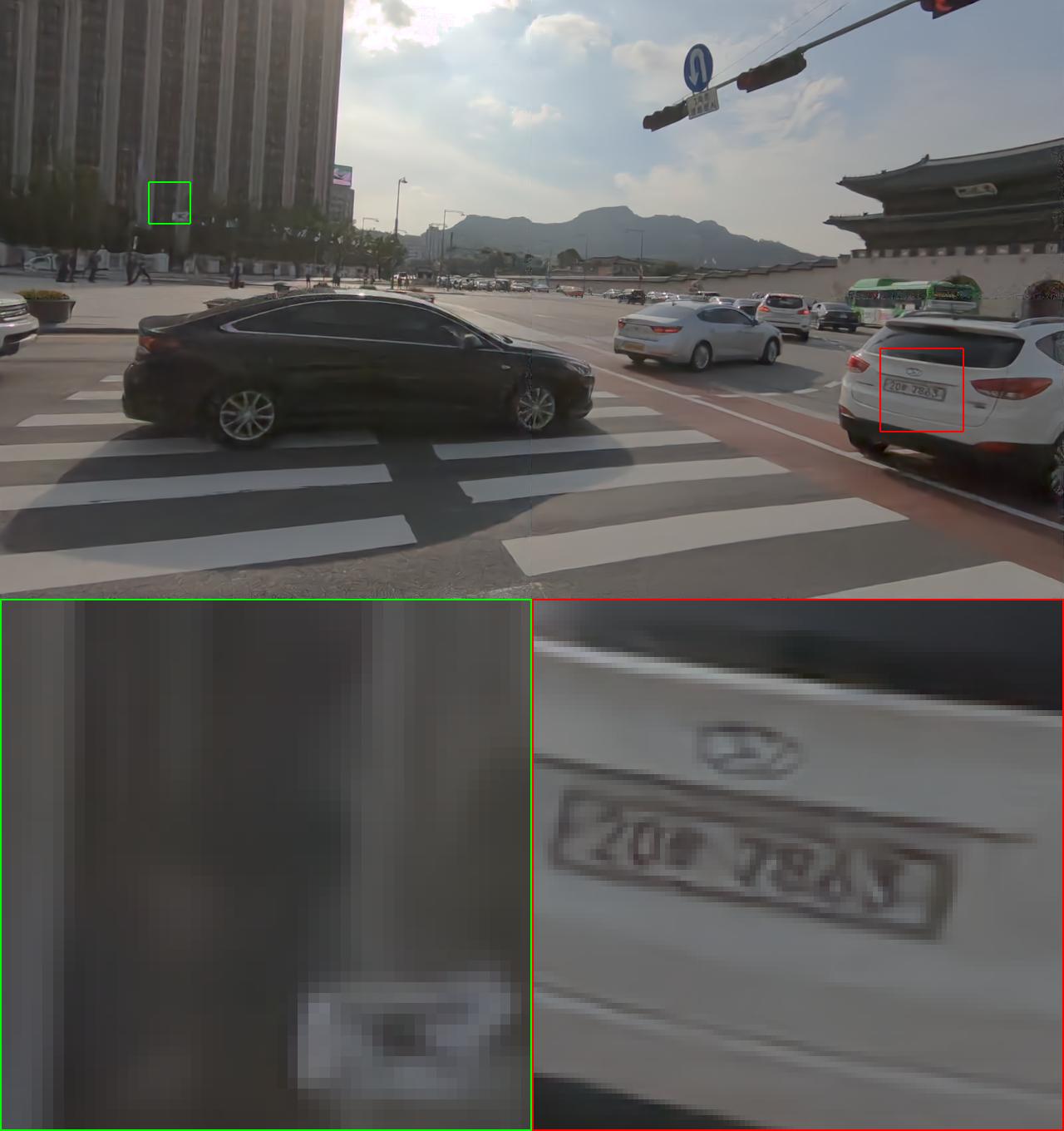

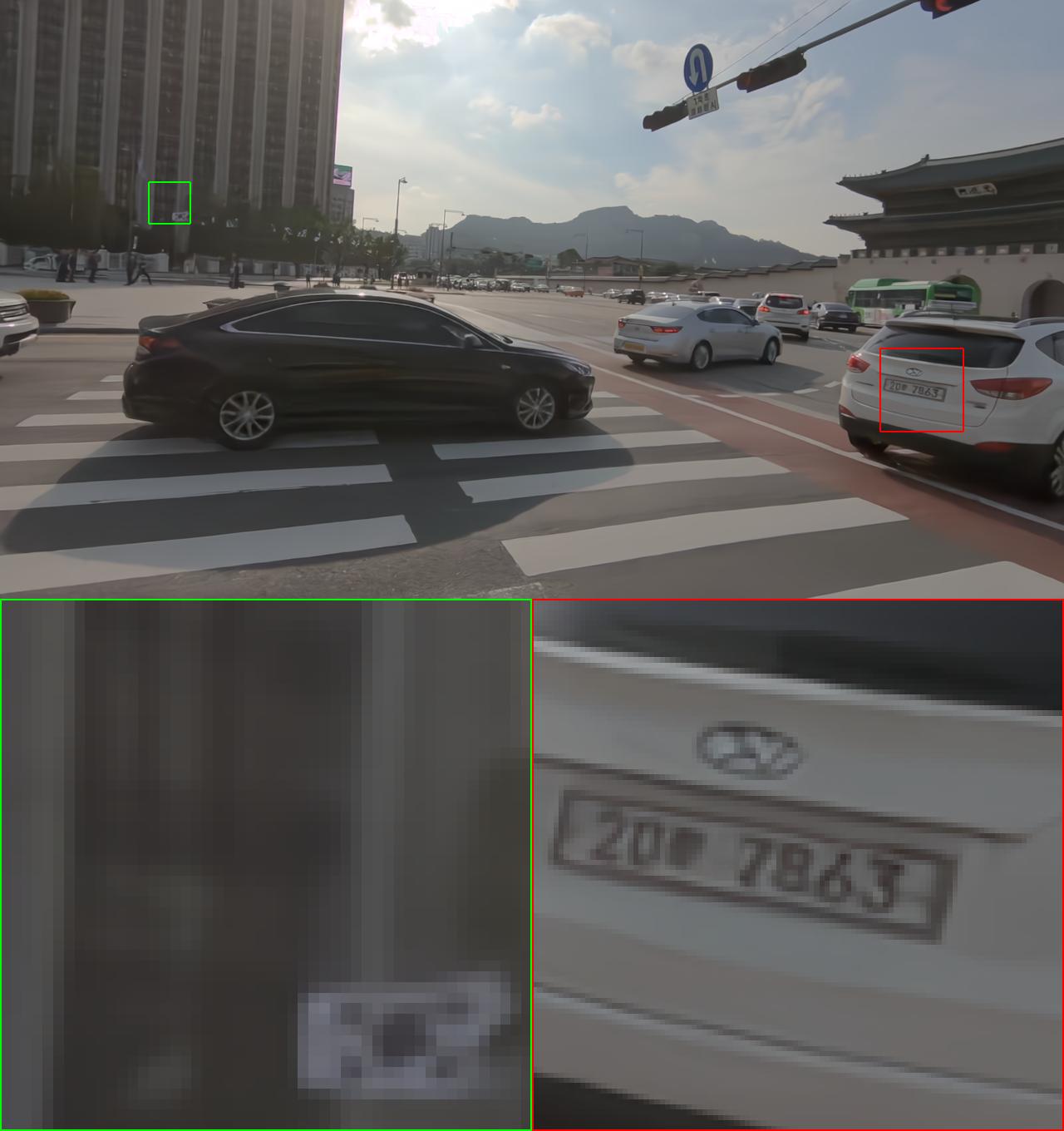

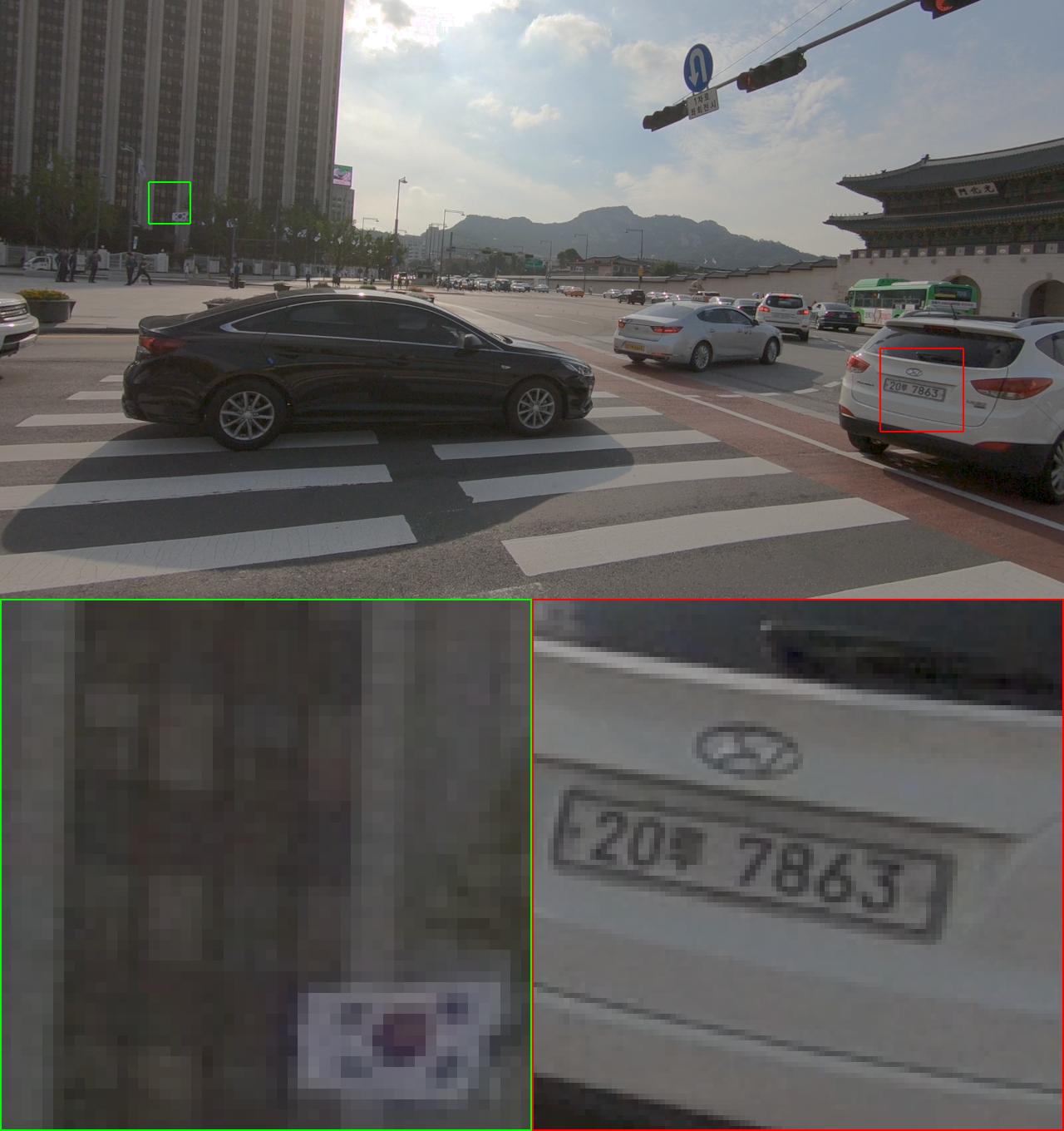

Specifically, in GCP-Net, we extract the GCP features from green channel to guide the deep feature modeling and upsampling of the whole image. The GCP features are also utilized to estimate the offset within frames to relief the impact of noise. As shown in Fig. 2, with GCP, the JDD-B results can preserve more structures and details while removing noise. Our GCP-Net achieves state-of-the-art JDD-B performance on both synthetic noisy images and real-world burst images captured by smartphones.

II Related Work

II-A Joint Denoising and Demosaicking for Single Image

Image denoising and demosaicking are two important steps in camera ISP pipeline. A few methods have been proposed for joint denoising and demosaicking on a single raw image (JDD-S) [9, 23, 10, 11, 12, 13, 14]. In [13], Qian et al. showed that the performance of JDD-S is generally better than performing denoising and demosaicking separately. In [9], a learning-based method was proposed for JDD-S. Henz et al. [10] proposed an auto-encoder architecture to model the color-image capturing process on each monochromatic sensor. Kokkinos et al. [12] proposed a plug-and-play framework for the JDD-S task. To enhance the performance on real-world images, Ehret et al. [11] proposed a mosaic-to-mosaic framework by finetuning the network using mosaic burst images. It should be noted that though Ehret et al. utilized burst images to fine-tune the network, the input of the network is still a single image. Liu et al. [14] proposed a self-guided JDD-S network by considering the advantages of the higher sampling rate of green channel and using this prior to guide the upsampling process. In this paper, we further analyze the noise level imbalance among different color channels in real-world photographs, and perform the JDD task using burst images instead of a single image.

II-B Burst Image Restoration

Compared with single image restoration tasks, burst image processing encounters new challenges on estimating the offsets among different frames caused by camera movement and moving objects. According to the employed alignment frameworks, we partition burst image restoration methods into three categories, i.e., pre-aligned methods [17, 18], kernel-based methods [16, 24, 25, 26] and feature-based alignment [27, 28, 19, 20].

Pre-alignment methods mostly employ optical flow to estimate the motions and perform warping to compensate for temporal offset. The frame-to-frame method [18] utilizes the TV- algorithm [29] to estimate optical flow within frames. ToFlow [17] utilizes the SpyNet [30] as the flow estimation module, which is jointly trained with the denoising module. However, the restoration performance of those methods is largely affected by the accuracy of estimated optical flow, while accurate flow is difficult to obtain especially under large motion and severe noise. Kernel-based methods use convolutional neural networks to predict spatially varying kernels, which perform aligning and denoising simultaneously [16]. Compared with the original KPN [16], Xu et al. [24] and Marinc et al. [24] proposed to learn the deformable kernels and multiple kernels. Xia et al. [26] proposed to predict a set of global basis kernels and the corresponding mixing coefficients to effectively exploit larger denoising kernels.

Comparing with the above two categories of alignment methods, performing alignment in feature domain is a more promising strategy and has achieved SOTA performance on video super-resolution tasks. Liu et al. [27] proposed to use a localization net to estimate spatial transform parameters from deep feature and directly wrap the feature to align shift. TDAN [28] and EDVR [19] were proposed to estimate offset of deformable convolution which is utilized to align the shift in features domain. Comparing with [27] which needs the ground-truth information of spatial transform parameters, TDAN and EDVR do not need such parameters while still achieving SOTA performance. RviDeNet [20] also utilizes DConv to align multi-scale features for burst denoising. In this work, we perform alignment in feature domain and utilize deformable convolution to implicitly compensate for offsets. Moreover, we design an inter-frame module which not only utilizes multi-scale information, but also considers temporal constraint.

III Methods

III-A Problem Specification

Our goal is to recover a clean full-color image, denoted by , from a burst of real-world noisy CFA images, denoted as . The subscript “ref” represents the index of reference frame. Usually, the noisy counterpart of is the center frame in .

The noise in real-world raw images is signal-dependent [31] due to the photon arrival statistics and the imprecision in readout circuitry. The noise introduced by photon sensing, i.e., shot noise, can be modeled as the Poisson distribution, while the noise introduced in readout circuitry, i.e., read noise, can be modeled by the Gaussian distribution. Denote by the desired clean raw image captured at time . The corresponding noisy raw image can be written as [31]:

| (1) |

where and and are the scale parameters for shot noise and read noise, respectively. represents Gaussian distribution.

III-B Green Channel Prior

As discussed in [22], the CMOS sensor has different sensitivity to light of different wavelengths or colors, and in most illumination conditions, green channels are brighter than red and blue channels in Bayer pattern CFA images. Since the real-world noise contains the Poissonian shot noise (see Eq. 1), and the signal-to-noise ratio (SNR) has a square root relationship between signal and noise, the brighter green channel often has a higher SNR than red/blue channels. To validate this, we compute the average SNR of different color channels of 50 real-world noisy raw images randomly chosen from the SIDD [15] benchmark dataset (which contains high-ISO noisy images captured by smartphone cameras), and show the SNR comparison in Fig. 1. One can see that the SNR of green channel is higher than that of red/blue channels for most of the noisy images. In addition, the green channel has twice the sampling rate of red/blue channels. Overall, the green channel preserves better image structure and details than the other two channels. In this paper, we call the prior knowledge that the green channel has higher SNR, higher sampling rate and hence better channel quality than red/blue channels the green channel prior (GCP), which is carefully exploited in this paper to design our JDD-B network.

III-C Network Structure

With GCP, we propose a new network, namely GCP-Net, for JDD-B. Without loss of generality, we assume that the Bayer CFA pattern is used. For each raw image , we reshape it as four R, G, G, B sub-images of the same size so that . We denote the noise map of as , whose value at each location is the standard deviation of signal-dependent noise at that position. The input of our GCP-Net is a sequence of noisy raw images and their corresponding noise maps . The output is the clean full-resolution linear RGB image .

The overview of GCP-Net is illustrated in Fig. 3. GCP-Net consists of two branches, i.e., a GCP branch and a reconstruction branch. In the GCP branch, the green features are extracted from the concatenation of noisy green channels, denoted by , and their noise level maps, denoted by . This process can be written as:

| (2) |

where consists of several Conv+LReLU blocks. These GCP features are utilized as the guided information for the reconstruction branch. We utilize layer-wise guiding strategy and denote the GCP feature of the -th layer as .

The reconstruction branch utilizes the burst images, the corresponding noise maps and the GCP features to estimate the clean full color image. As illustrated in Fig. 3, it consists of three parts: the intra-frame (IntraF) module, the inter-frame (InterF) module and the merge module. The IntraF module is designed to model the deep features of each frame and it utilizes GCP features to guide the feature extraction. The InterF module is to compensate for the shift between frames by using the DConv in feature domain. To reduce the influence of noise in alignment, offset is estimated from the cleaner GCP features. The merge module is designed to aggregate the aligned features and use the GCP features to perform adaptive upsampling for the full-resolution image reconstruction. The details of these modules are presented in the following sections.

III-D Intra-frame Module

The architecture of the IntraF module is shown in Fig. 4. For the -th frame, the input of IntraF includes the noisy raw image , the corresponding noise level map and the GCP feature . Firstly, one simple convolution layer is used to model the initial features as . Then, the initial features are passed to the concatenation of four green channel attention (GCA) blocks, where the GCP features are used to guide the feature extraction and a dual attention mechanism is designed to better deal with the channel-dependent and spatial-dependent noise. We adopt a layer-wise guiding strategy for GCA blocks and empirically find that such a strategy is favorable to the restoration results. Without loss of generality, we use the -th GCA block to present the modeling process. The output features are denoted as:

| (3) |

where represents the -th GCA block.

The detailed structure of the GCA block is shown in Fig. 5. Inspired by [32, 33], the GCP information can be exploited by using pixel-wise scaling and bias, and the enhanced feature can be expressed as:

| (4) |

where and are two learned modulation parameters of the guided layers. We denote the unit to implement Eq. 4 as the green guided (GG) unit. The green-guided features are estimated by two residual blocks, denoted by ,

| (5) |

where is the learned features. As normal Conv layers treat spatial and channel features equally, it is not appropriate to handle the real-world noise which is channel and spatial dependent. To further enhance the representational power of standard Conv+ReLU blocks, channel attention and spatial attention [34, 35, 36] are designed to model the cross-channel and spatial relationship of deep features.

III-D1 Channel attention (CA)

The features of size are firstly converted into a channel descriptor using global average pooling (GAP). To make use of the aggregated information, the channel descriptor is processed by two convolutional layers with kernel size , followed by a sigmoid activation to obtain the activations . The output of CA is the rescaled feature using .

III-D2 Spatial attention (SA)

The SA block is designed to model the spatial dependencies of deep features by rescaling the features using the estimated spatial attention map . Instead of using average pooling and max pooling [36], is adaptively obtained by using two convolutional layers, followed by the sigmoid activation.

The output of the -th GCA block is obtained by:

| (6) |

III-E Inter-frame Module

The extracted features by the IntraF module are then aligned to the reference frame feature in the InterF module. The InterF module aims at modeling the temporal dependency between frames, whose architecture is shown in Fig. 6. We use the deformable convolution to compensate for the offset within frames. To relieve the affect of severe noise and to better model the correlation between neighboring frames, we use the GCP features to estimate the offset. Similar to EDVR [19] and RViDeNet [20], pyramidal processing is utilized to handle possible large motions. Moreover, to better exploit the temporal constraint in the offset estimation, we introduce an LSTM regularization in the offset estimation.

For each pyramidal scale of the -th frame, the inter-frame GCP feature, denoted by , is obtained by using

| (7) |

where is the concatenation operator and is the Conv layer. Then, the temporal regularization is introduced by using ConvLSTM [37], which is a popular 2D sequence data modeling method. The ConvLSTM updates the hidden state and the cell state with:

| (8) |

The updated inter-frame feature can be written as:

| (9) |

As discussed in [38], the LSTM mechanism has limited ability to deal with complex motions. To handle large motions, multi-scale information is aggregated to estimate more accurately the offset :

| (10) |

where and are two convolutional layers, is the upsampling operator with factor 2.

The aligned features at each position can then be obtained by:

| (11) |

in which is the sampling location of deformable convolution kernel, is the modulation scalar. Following [39], since is fractional, bilinear interpolation is applied. The final aligned feature at scale is obtained by:

| (12) |

where refers to general Conv+LReLU layers.

III-F Merge Module

The merge module is designed to merge the aligned features and output the estimated clean RGB image . The aligned features are firstly concatenated and adaptively merged as follows:

| (13) |

where is the merge function by using one simple convolution layer. Then we upsample the features to full-resolution features using GCP adaptive upsampling. Similar to the green guided operator in GCA block, the GCP adaptive upsampling can be expressed as:

| (14) |

where are the green guided features of size , represents the upsampling operator by a factor , and are the two learned modulation parameters. Transpose convolution is used for the upsampling interpolation.

The final estimation can be written as:

| (15) |

where is designed to estimate the final clean RGB image. In this paper, we utilize a three scale U-Net [40] architecture for to exploit the multi-scale information as well as enlarging the receptive field. All the Conv kernels are of size , followed by the nonlinear function LReLU. The upsampling and downsampling operators in are strided convolutions and transpose convolutions.

III-G Loss Function

For the estimated clean , we define the reconstruction loss in the linear color space as follows:

| (16) |

where is the Charbonnier penalty function [41], is set to . As discussed in KPN [16], computing loss in sRGB color space can produce a perceptually more relevant estimation. Therefore, we also introduce a loss in the sRGB color space:

| (17) |

where is the operator which transforms linear RGB color space to sRGB space. In this paper, contains white balance, color correction and gamma compression as in [21]. To sum up, the overall loss to optimize our model is:

| (18) |

where is the trade-off parameter and we simply set to 1 in our experiments.

IV Experiment

IV-A Implement Details

Obtaining ground-truth images for training is difficult for real-world image restoration tasks. In some single-image based restoration works [42, 15, 13], real-world degraded data are collected and the corresponding ground-truth images are physically and/or mathematically estimated for pair-wise training. However, for the burst-images based JDD-B task, the misalignment problem and the coherence between denoising and demosaicking make the ground-truth estimation much more difficult. Therefore, we synthesize training data by using an open high quality video dataset, i.e., Vimeo-90K [17].

Since camera sensor outputs are in the linear color space, we first convert the sRGB images into linear RGB space by using the unprocessing operation in [21], which can be written as . The unprocessing operation includes inverse gamma compression, inverse color correction, inverse tone mapping and inverse white balance. The converted linear RGB frame is taken as the ground-truth image. By using Eq. 1, the noisy raw image can be synthesized as:

| (19) |

where is the mosaic matrix which downsamples a linear RGB image to a Bayer CFA image. Without loss of generality, the RGGB mosaic pattern is used as to generate the data.

| # of GG Units | 0 | 1 | 2 | 3 | 4 | 5 | 4 (w/o GCP upsampling) | using RB to guide |

|---|---|---|---|---|---|---|---|---|

| Clip000 | 32.02 | 32.15 | 32.14 | 32.15 | 32.29 | 32.31 | 32.10 | 30.98 |

| Clip011 | 33.14 | 33.26 | 33.36 | 33.26 | 33.28 | 33.27 | 33.22 | 32.79 |

| Clip015 | 35.64 | 35.79 | 35.83 | 35.80 | 35.79 | 35.79 | 35.74 | 35.18 |

| Clip020 | 32.48 | 32.59 | 32.64 | 32.61 | 32.63 | 32.62 | 32.60 | 32.11 |

| Average | 33.32 | 33.45 | 33.49 | 33.46 | 33.50 | 33.50 | 33.41 | 32.77 |

In our experiments, a number of neighboring frames are used as the input and the central frame is chosen as the reference frame. Following the setting in [16], the noise level parameters and in Eq. 1 are uniformly sampled from the ranges of and , respectively. We adopt the method in [43] to initialize the GCP-Net and use the ADAM [44] algorithm with = 0.9 and = 0.99 to update the network. The size of mini-batch is 2 and the size of each noisy raw patch is with 4 color channels (RGGB). The reconstructed RGB patch is of size with three color channels (RGB). The learning rate is initialized as and it is decreased using the cosine function [45]. It takes about two days to train our model under the PyTorch framework using two Nvidia GeForce RTX 2080 Ti GPU.

IV-B Ablation Study

In this section, we perform ablation studies to discuss the effect of major components in GCP-Net and the setting of some parameters. The Vid4 [46] and REDS4 [19] datasets are used in the experiments.

IV-B1 The effectiveness of GCP

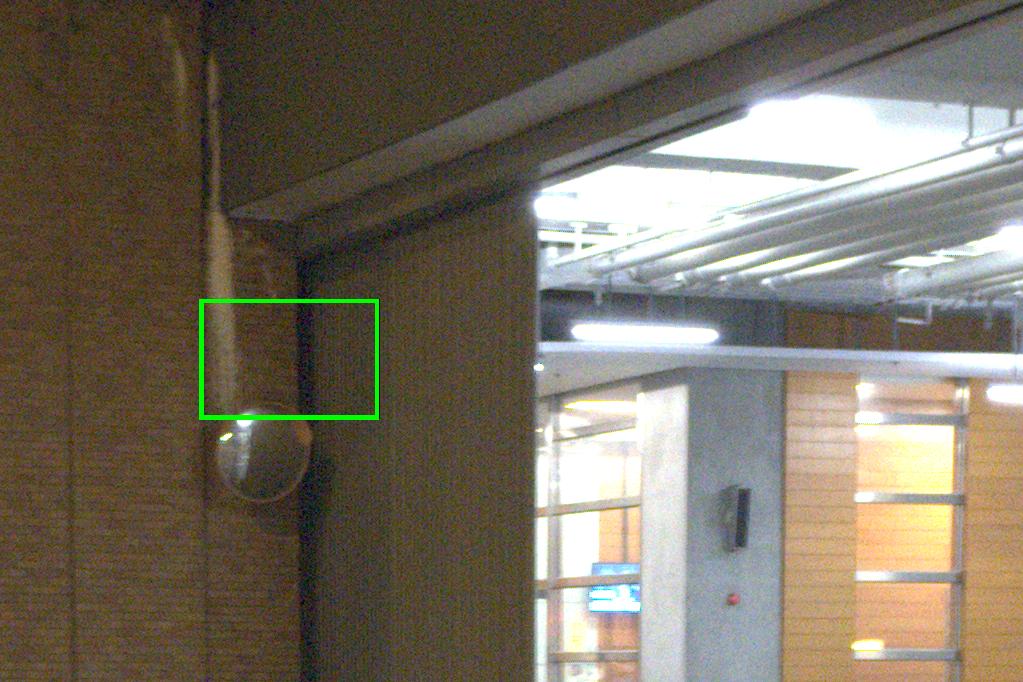

In GCP-Net, GCP features are used to guide the deep feature extraction and the upsampling process. By removing and adding the GG unit (see Fig. 5) in the GCA block, we can analyze the influence of GCP on deep feature extraction. Fig. 7 shows a patch of a noisy image captured in night time by a smartphone camera, the extracted deep features without and with the GG unit, and the JDD results. As expected, using the GCP features to guide the feature extraction is favorable to suppress the noise and preserve more detailed textures, as shown in Figs. 7 (e) and (f).

To quantitatively verify the contribution of GCP, we implement five variants of GCP-Net with different number of GG units and GCA blocks. The quantitative results on the REDS4 dataset are shown in Table I. We can see that using one GG unit, we can obtain 0.13dB gain over the result without using the GCP guidance. The PSNR value can be further improved by increasing the number of GG units from one to four, and the performance gets saturated when the number of GG units is five. Therefore, we use four GG units and GCA blocks in our GCP-Net. We also train a GCP-Net without using the adaptive upsampling in GCA and the result is shown in Table I. One can see that the adaptive upsampling can obtain about 0.1dB gain for the JDD task.

We also train a network by using the red and blue channels to guide the feature extraction. The results are shown in the last column of Table I. We see that using the red and blue channels to guide feature extraction cannot enhance the performance. Instead, it leads to serious performance degradation (about 0.6dB) compared with the network without using GCP. This is not surprising since the red and blue channels have lower SNR and contain less textures (please see Figs. 2(d)-(g)) so that the network fails to extract more guiding information to enhance the deep features.

(a) Noisy image

(b) VBM3D+DMN

(c) KPN+DMN

(d) EDVR*

(e) RViDeNet+DMN

(f) RViDeNet*

(g) Ours

(h) GT

IV-B2 One-stage structure vs. two-stage structure for JDD

There are two types of network structures for JDD: one-stage and two-stage. One-stage algorithms [10, 14] learn to directly estimate the clean demosaicked image, while two-stage algorithms [9, 23, 13, 11, 5] sequentially learn the denoisng task and the demosaicking task. In this part, we evaluate which structure is more effective to reconstruct the full color images from a burst of noisy mosaic images with similar trainable parameters.

We train two variants of GCP-Net as the two-stage networks for evaluation, namely, GCP-Net-DE+DM and GCP-Net-DM+DE. GCP-Net-DE+DM first performs burst denoising to obtain the clean mosaic image and then applies demosaicking. The intermediate denoising loss is applied on the estimated , which is obtained by adding one conv layer after the merge function in Eq. 13. The denoised image is then taken as the input for the remaining layers to perform demosaicking. For GCP-Net-DM+DE, it firstly performs demosaicking on every noisy raw image and then performs burst denoising on the demosaicked images. The demosaicking output of the -th frame is a noisy demosaicked image , which is estimated from the output of the last GCA block in the IntraF module. Since there’s no ground-truth for , we pretrain the first stage of GCP-Net-DM+DE for single image demosaicking and then fine-tune the whole network for JDD task.

Table II lists the average PSNR results of the variants of GCP-Net on the Vid4 and REDS4 datasets. We can see that our one-stage GCP-Net consistently outperforms its two-stage variants. This is mainly because denoising and demosaicking are two highly relevant tasks and the two-stage network is not effective to exploit the correlation information of these two tasks. Similar to those multi-task learning works [47], learning simultaneously the relevant tasks could result in better performance. Thus, we choose to use one-stage structure in GCP-Net.

| Testset | Noise Level | DE + DM | DM + DE | JDD (Ours) |

|---|---|---|---|---|

| Vid4 | High | 31.89 | 31.67 | 32.30 |

| Low | 33.46 | 33.19 | 33.91 | |

| REDS4 | High | 33.00 | 32.80 | 33.50 |

| Low | 35.05 | 34.79 | 35.57 |

IV-B3 Role of inter-frame module

To demonstrate the contribution of the proposed inter-frame module (see Fig. 6), we implement four variants of GCP-Net, i.e., GCP-Net-w/o-GCP, GCP-Net-w/o-inter, GCP-Net-w/o-MS and GCP-Net-w/o-LSTM. Specifically, GCP-Net-w/o-GCP represents the network without using GCP in the inter-frame module. That is, the offset is directly estimated from the original features, instead of GCP features. In GCP-Net-w/o-inter, we remove the inter-frame module and take the concatenation of the output features of IntraF module as the input of the merge module. GCP-Net-w/o-MS is implemented by removing the multi-scale offset estimation in the interF module, and GCP-Net-w/o-LSTM represents the network without temporal regularization in the offset estimation.

Table. III reports the PSNR results of GCP-Net and its four variants with different interF modules. As expected, full GCP-Net achieves the best performance, showing that compensating for the shift between frames is crucial for burst image restoration. Estimating offset from better quality GCP features can obtain 0.05dB improvement on JDD-B. Compared with GCP-Net-w/o-MS, utilizing multi-scale information benefits to handle large and complex motions, which results in 0.50.6dB improvement on the REDS4 dataset. By introducing temporal regularization in the offset estimation part, our full model further improves the JDD-B results by 0.06dB.

| Noise | w/o GCP | w/o Inter | w/o MS | w/o LSTM | full |

|---|---|---|---|---|---|

| High | 33.45 | 32.69 | 32.96 | 33.44 | 33.50 |

| Low | 35.51 | 34.80 | 35.01 | 35.52 | 35.57 |

(a) Noisy image

(b) VBM3D+DMN

(c) KPN+DMN

(d) EDVR*

(e) RViDeNet+DMN

(f) RViDeNet*

(g) Ours

(h) GT

IV-B4 Selection of frame number

In this section, we evaluate the performance of GCP-Net trained with different number of frames, denoted as GCP-Net- with . The results are listed in Tables IV and V. Compared with the network using a single image as input, the network using three frames as input can achieve performance gain by a great margin (i.e., 1.11.5dB on Vid4 and 0.30.4dB on REDS4). By using more frames, GCP-Net-5 further improves GCP-Net-3 by about 0.5dB at high noise level and about 0.4dB at low noise level. By further increasing the frame number from 5 to 7, GCP-Net-7 achieves slight improvement (0.1dB) on the Vid4 dataset and comparable results on the REDS4 dataset. Considering the computational efficiency and the performance gains, we choose to use frames in our model.

| Noise Level | Methods | Calendar | City | Foliage | Walk | Average |

|---|---|---|---|---|---|---|

| High noise level | FlexISP | 20.89/0.6108 | 25.61/0.6015 | 22.41/0.5908 | 23.73/0.5125 | 23.16/0.5789 |

| ADMM | 23.03/0.7187 | 29.80/0.7726 | 26.06/0.6709 | 28.68/0.8459 | 26.89/0.7520 | |

| VBM3D+DMN | 23.07/0.7279 | 30.22/0.7881 | 25.76/0.6344 | 28.38/0.8274 | 26.86/0.7444 | |

| KPN+DMN | 24.31/0.7989 | 30.76/0.8225 | 28.09/0.7984 | 30.78/0.8818 | 28.48/0.8254 | |

| EDVR+DMN | 24.78/0.8228 | 31.98/0.8711 | 28.19/0.8283 | 31.30/0.9001 | 29.07/0.8556 | |

| RviDeNet+DMN | 25.82/0.8602 | 34.03/0.9153 | 30.35/0.8914 | 33.20/0.9324 | 30.85/0.8998 | |

| EDVR* | 24.59/0.8314 | 32.07/0.8670 | 28.19/0.8260 | 31.78/0.9066 | 29.16/0.8578 | |

| RviDeNet* | 27.03/0.8797 | 34.40/0.8206 | 31.14/0.9065 | 34.09/0.9400 | 31.67/0.9117 | |

| GCP-Net-1 | 26.09/0.8478 | 33.08/0.8921 | 29.06/0.8471 | 32.95/0.9240 | 30.29/0.8777 | |

| GCP-Net-3 | 26.83/0.8722 | 34.68/0.9230 | 30.87/0.8971 | 34.41/0.9438 | 31.70/0.9090 | |

| GCP-Net-5, ours | 26.96/0.8797 | 35.58/0.9367 | 31.70/0.9154 | 34.97/0.9504 | 32.30/0.9205 | |

| GCP-Net-7 | 27.02/0.8865 | 35.60/0.9409 | 32.05/0.9240 | 35.10/0.9529 | 32.44/0.9261 | |

| Low noise level | FlexISP | 22.28/0.7292 | 28.26/0.7692 | 24.57/0.7333 | 27.17/0.6958 | 25.57/0.7319 |

| ADMM | 23.61/0.7339 | 30.50/0.7914 | 26.80/0.6944 | 30.27/0.8659 | 27.79/0.7714 | |

| VBM3D+DMN | 23.90/0.7606 | 32.31/0.8558 | 26.86/0.6847 | 30.13/0.8573 | 28.30/0.7896 | |

| KPN+DMN | 25.16/0.8425 | 33.09/0.8920 | 30.52/0.8868 | 32.95/0.9233 | 30.43/0.8860 | |

| EDVR+DMN | 25.68/0.8579 | 34.17/0.9206 | 30.21/0.8931 | 33.32/0.9340 | 30.85/0.9014 | |

| RviDeNet+DMN | 26.26/0.8775 | 35.84/0.9443 | 32.23/0.9312 | 34.86/0.9536 | 32.30/0.9267 | |

| EDVR* | 25.17/0.8630 | 34.51/0.9207 | 30.25/0.8927 | 34.03/0.9416 | 30.99/0.9220 | |

| RviDeNet* | 28.03/0.9024 | 36.54/0.9501 | 33.23/0.9428 | 36.31/0.9628 | 33.53/0.9395 | |

| GCP-Net-1 | 27.16/0.8819 | 35.46/0.9347 | 31.42/0.9104 | 35.38/0.9525 | 32.35/0.9199 | |

| GCP-Net-3 | 27.58/0.8943 | 36.77/0.9517 | 32.97/0.9383 | 36.52/0.9639 | 33.47/0.9371 | |

| GCP-Net-5, ours | 27.58/0.8976 | 37.45/0.9584 | 33.67/0.9479 | 36.96/0.9677 | 33.91/0.9429 | |

| GCP-Net-7 | 27.66/0.9010 | 37.59/0.9607 | 33.86/0.9520 | 37.00/0.9689 | 34.02/0.9456 |

| Noise Level | Methods | Clip000 | Clip011 | Clip015 | Clip020 | Average |

|---|---|---|---|---|---|---|

| High noise level | FlexISP | 23.44/0.5257 | 24.09/0.4820 | 24.37/0.4338 | 23.75/0.5129 | 23.91/0.4886 |

| ADMM | 26.35/0.6897 | 28.97/0.7928 | 29.08/0.8204 | 27.74/0.7919 | 28.04/0.7737 | |

| VBM3D+DMN | 26.04/0.6672 | 27.87/0.7536 | 28.30/0.7708 | 26.52/0.7432 | 27.19/0.7337 | |

| KPN+DMN | 27.36/0.7211 | 29.22/0.7703 | 31.14/0.8299 | 28.36/0.7798 | 29.02/0.7753 | |

| EDVR+DMN | 29.20/0.8164 | 30.76/0.8314 | 33.15/0.8966 | 30.19/0.8498 | 30.83/0.8443 | |

| RviDeNet+DMN | 30.28/0.8519 | 32.06/0.8553 | 33.91/0.8883 | 31.30/0.8737 | 31.89/0.8673 | |

| EDVR* | 29.33/0.8279 | 31.67/0.8535 | 33.47/0.8870 | 30.99/0.8731 | 31.37/0.8607 | |

| RviDeNet* | 31.35/0.8816 | 32.57/0.8672 | 34.88/0.9064 | 31.87/0.8853 | 32.67/0.8851 | |

| GCP-Net-1 | 30.30/0.8557 | 32.94/0.8729 | 34.91/0.9069 | 32.32/0.8926 | 32.62/0.8820 | |

| GCP-Net-3 | 31.46/0.8818 | 32.97/0.8731 | 35.37/0.9115 | 32.30/0.8926 | 33.03/0.8898 | |

| GCP-Net-5, ours | 32.29/0.9035 | 33.28/0.8783 | 35.79/0.9177 | 32.63/0.8979 | 33.50/0.8994 | |

| GCP-Net-7 | 32.39/0.9044 | 33.21/0.8773 | 35.86/0.9180 | 32.58/0.8975 | 33.51/0.8993 | |

| Low noise level | FlexISP | 25.86/0.6932 | 27.49/0.6638 | 27.85/0.6314 | 26.70/0.6878 | 26.97/0.6690 |

| ADMM | 27.38/0.7139 | 30.43/0.8123 | 31.17/0.8463 | 29.24/0.8143 | 29.56/0.7967 | |

| VBM3D+DMN | 27.68/0.7308 | 30.17/0.8061 | 31.36/0.8402 | 28.78/0.8008 | 29.50/0.7945 | |

| KPN+DMN | 29.36/0.8078 | 31.36/0.8270 | 33.23/0.8717 | 30.83/0.8480 | 31.20/0.8386 | |

| EDVR+DMN | 29.95/0.8484 | 31.59/0.8539 | 34.05/0.8959 | 31.16/0.8756 | 31.69/0.8684 | |

| RviDeNet+DMN | 32.20/0.9034 | 33.97/0.8932 | 35.58/0.9134 | 33.45/0.9121 | 33.80/0.9056 | |

| EDVR* | 31.44/0.8922 | 33.59/0.8917 | 35.54/0.9169 | 33.16/0.9129 | 33.43/0.9034 | |

| RviDeNet* | 33.58/0.9285 | 34.63/0.9044 | 36.98/0.9327 | 34.23/0.9230 | 34.86/0.9221 | |

| GCP-Net-1 | 32.66/0.9129 | 34.99/0.9092 | 36.99/0.9329 | 34.67/0.9286 | 34.82/0.9209 | |

| GCP-Net-3 | 33.66/0.9287 | 34.95/0.9087 | 37.33/0.9351 | 34.54/0.9274 | 35.12/0.9250 | |

| GCP-Net-5, ours | 34.45/0.9414 | 35.27/0.9144 | 37.74/0.9403 | 34.82/0.9311 | 35.57/0.9318 | |

| GCP-Net-7 | 34.48/0.9415 | 35.14/0.9116 | 37.77/0.9397 | 34.77/0.9309 | 35.54/0.9309 |

IV-C Comparison Methods

Since currently there is no JDD-B method publically available, for fair comparison, we combine the representative burst image denoising methods with a state-of-the-art demosaicking method, DemosaicNet (DMN) [9], to compare with our GCP-Net. We choose four widely used burst denoising algorithms: VBM3D [48], KPN [16], EDVR [19] and RViDeNet [20]. For VBM3D, the noise level is needed as the input, and we use the method in [49] for noise level estimation. For the KPN, EDVR and RViDeNet models, they are retrained using the same training data as GCP-Net. The retrained EDVR and RViDeNet models adopt 20 residual blocks to perform feature extraction and 40 residual blocks in the reconstruction module. The size of learned per-pixel kernel of retrained KPN is 7 x 7.

We also adjust the structures of EDVR and RViDeNet by adding one upsampling operator in the reconstruction step, and train these models for the JDD-B task. These models are denoted as EDVR* and RViDeNet*, respectively. In addition, we compare with two state-of-the-art JDD-S algorithms: FlexISP [7] and ADMM [8].

Noise image (iPhone X)

Noise patch

KPN+DMN

EDVR+DMN

EDVR*

RviDeNet+DMN

RviDeNet*

Ours

Noise image (Pixel 2)

Noise patch

KPN+DMN

EDVR+DMN

EDVR*

RviDeNet+DMN

RviDeNet*

Ours

Noise image (iPhone 7)

Noise patch

KPN+DMN

EDVR+DMN

EDVR*

RviDeNet+DMN

RviDeNet*

Ours

IV-D Results on Synthetic Data

Two video datasets, i.e., Vid4 [46] and REDS4 [19], are adopted in the experiments. Vid4 is widely used as the test set in the study of video super-resolution, and the video clips (resolution: ) in Vid4 have small motion. The videos in REDS4 dataset have better quality and resolution (720p) but with bigger motions. Video clips are firstly converted to raw space using the unprocessing operator introduced in Sec IV-A. Then the noise is added to the raw images by using Eq. 1. Tables IV and V list the PSNR/SSIM results of different algorithms under different noise levels. Following [16], both PSNR and SSIM are computed after gamma correction to better reflect perceptual quality. One can see that the proposed GCP-Net achieves the best PSNR/SSIM measures. Visual comparisons on Vid4 and REDS4 are presented in Fig. 8 and Fig. 9, respectively.

From Tables IV and V, we can see that the performance of JDD-S methods FlexISP and ADMM is generally far below the learning based multi-frame algorithms. However, the multi-frame based VBM3D+DMN fails to compete with the single frame based ADMM, especially on the REDS4 dataset. This is mainly because VBM3D+DMN assumes AWGN and it cannot handle the large-motion in the videos of REDS4. The CNN-based methods KPN+DMN, EDVR+DMN and RviDeNet+DMN achieve much better performance than JDD-S methods because they can learn to handle the misalignments between adjacent frames and exploit the temporal redundancy for denoising. Nonetheless, one can still see that these methods generate noticeable color artifacts and zippers (see Figs. 8(b)(c)(e) and Figs. 9(b)(c)(e)) near edges and complex textures. This is mainly because they perform burst denoising and color demosaicking separately without considering the correlations between the two tasks. For JDD-B algorithms, i.e., EDVR* and RviDeNet*, their restoration results contain less zippering and color artifacts compared with EDVR+DMN and RviDeNet+DMN, which proves the effectiveness of jointly handling denoising and demosaicking task. However, the results of EDVR* and RviDeNet* suffer from the over-smoothing problem (see Figs. 9(d)(f)). Benefiting from the GCP guidance, our GCP-Net performs the best in the JDD task, making good balance between noise removal and structure preservation (see Fig. 9(g)).

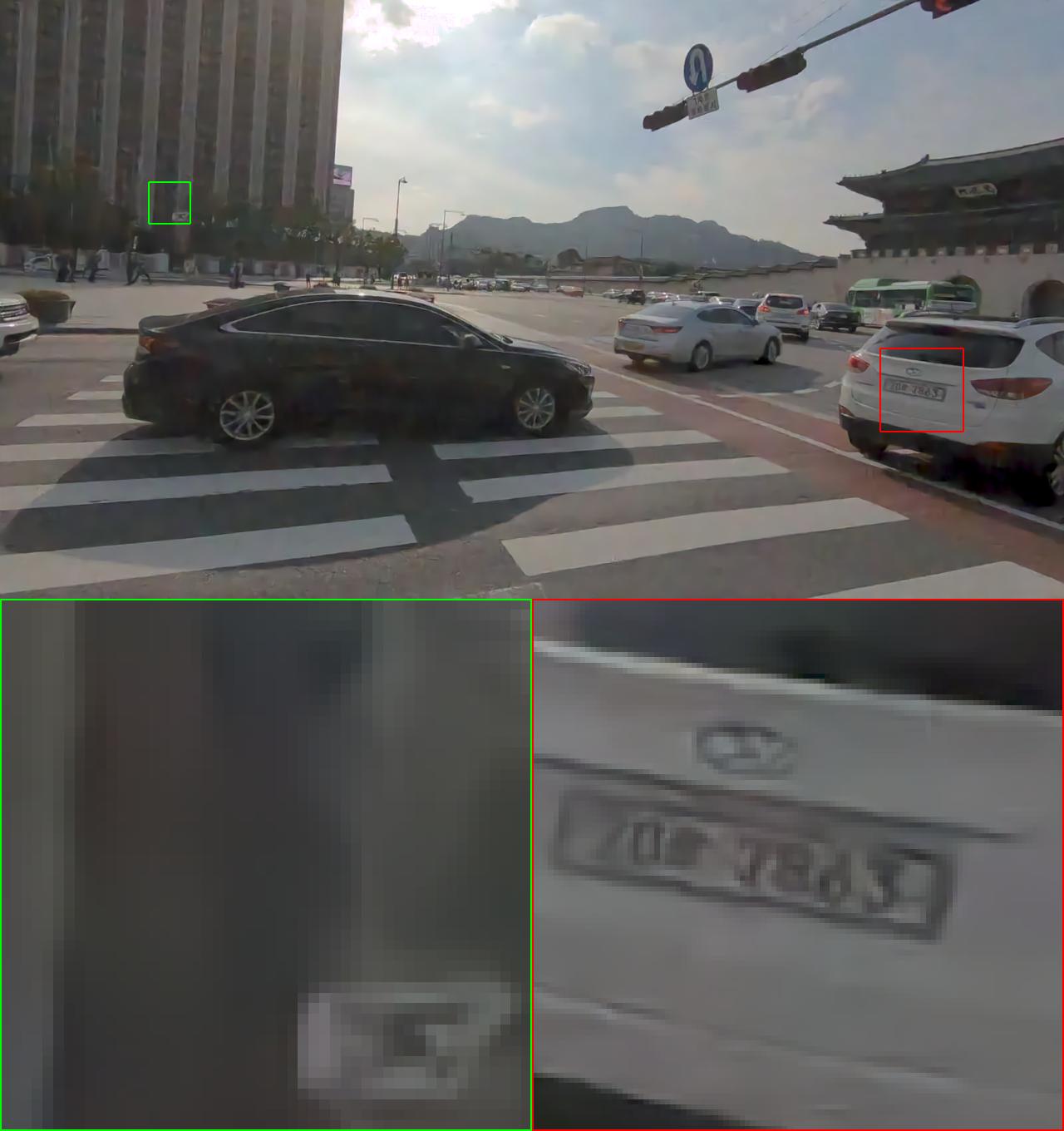

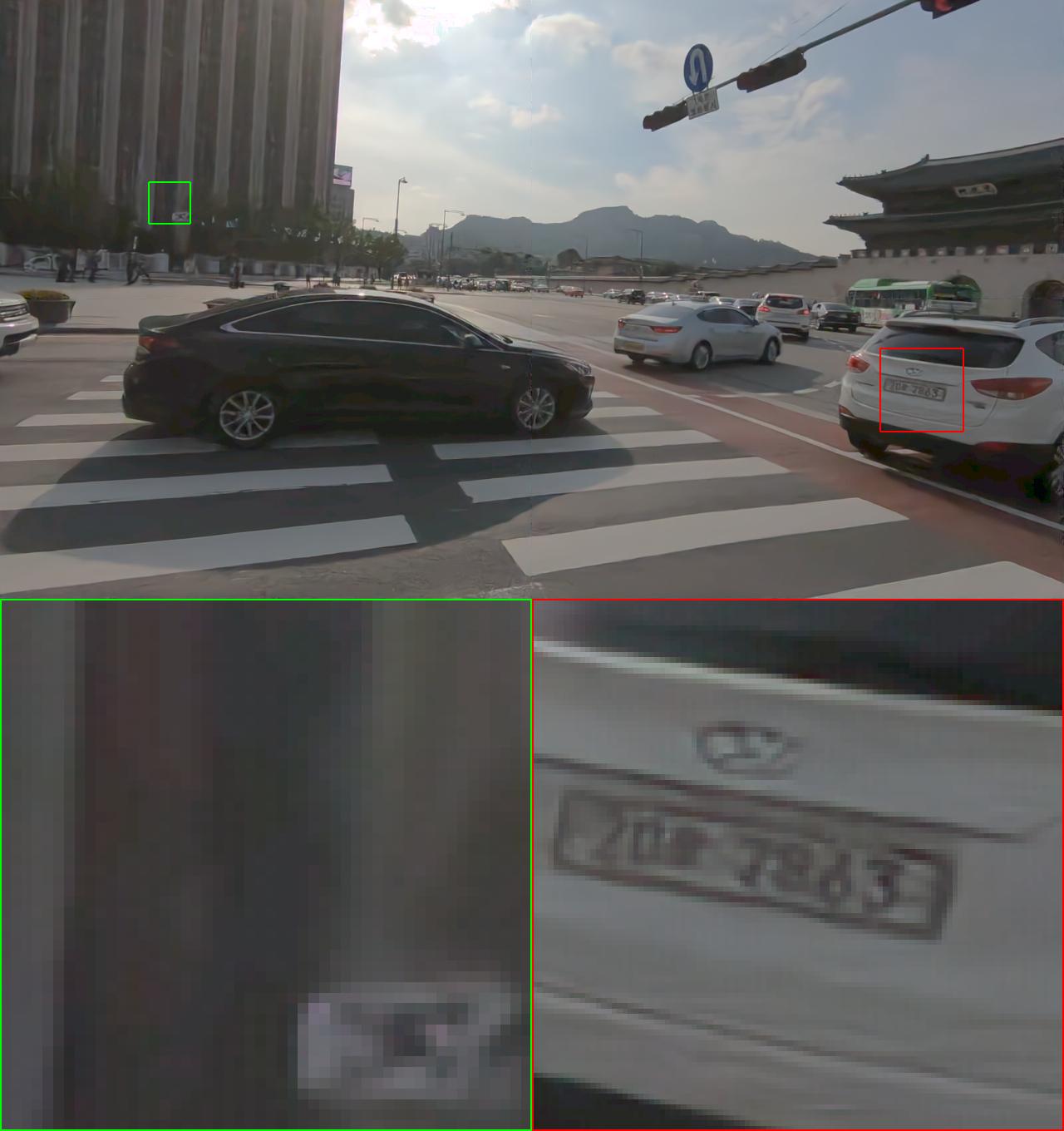

IV-E Results on Real-world Data

We also compare different JDD algorithms using real-world burst raw images captured by several smartphone cameras, including iPhone 7, iPhone X and Pixel 2. Since there is no ground-truth for the collected images, we can only provide qualitative comparison. The restored images are converted to sRGB domain for visualization by using the ISP operations, including white balance, color transfer and gamma compression. The white balance parameters, color matrix and the noise level are collected from the camera metadata.

In Fig. 10, we show the JDD results of several noisy images captured by the three smartphone cameras under normal lighting conditions as well as nighttime environments with a wide range of ISO values. Similar conclusions to the synthetic experiments can be made. KPN+DMN, EDVR+DMN and RviDeNet+DMN can remove noise but will produce zippering artifacts and smooth the image details. By jointly performing denoising and demosaicking, EDVR* and RviDeNet* can reduce the zippering effect but still produce over-smoothed reconstruction. In the zoom-in patch of the second image in Fig. 10, we can also see that RviDeNet* generates artifacts in the high noisy area. Our proposed GCP-Net can effectively remove noise while retaining fine textures. It is also free of the moire pattern. In the last row of Fig. 10, we show the JDD results on night-shot burst images with large motion. We can see that the restoration result of KPN+DMN contains much ghosting artifact, which is mainly caused by the large motion of object (i.e., car) in the burst images. RviDeNet* also generates some motion induced artifacts around the moving objects. The proposed GCP-Net can work stably under such large-motion scenes. This is because we utilize the pyramid offset estimation and the offset is estimated on GCP features which dilute the impact of noise.

IV-F Model Size

Table VI lists the number of model parameters and the number of floating point operations (FLOPs) of our GCP-Net and the comparison methods, i.e., DMN, KPN, EDVR and RviDeNet. The FLOPs are calculated on 5 frames of size . Because of the GCP branch and some attention modules, GCP-Net has more parameters than DMN, KPN and EDVR, but its model is much smaller than RviDeNet. Though the number of parameter and FLOPs of GCP-Net are 2 times and 1.5 times that of EDVR, it achieves significantly better JDD results than EDVR (3.5dB on Vid4 and 2dB on REDS4). Since RviDeNet utilizes a pre-denoising network and non-local modules, it has very high computational cost but achieves lower JDD performance than GCP-Net. Overall, GCP-Net achieves a good balance between JDD effectiveness and efficiency.

| DMN | KPN | EDVR | RviDeNet | GCP-Net | |

|---|---|---|---|---|---|

| #. Params | 0.56M | 5.58M | 6.28M | 57.98M | 13.79M |

| FLOPs | 2.2G | 4.2G | 55.0G | 482.2G | 78.8G |

V Conclusion

Most of the previous joint denoising and demosaicking (JDD) methods worked on a single color filter array (CFA) image. In this paper, we proposed an effective network, namely GCP-Net, for JDD on real-world burst images (JDD-B). Our method took the advantages of green channel prior (GCP), which referred to the fact that the green channel of CFA raw images usually had higher quality and sampling rate than the red and blue channels. The GCP features were used to guide the intra-frame feature extraction, inter-frame fusion and upsampling process of the multi-frame JDD-B task. Our experiments on synthetic data and real-world data quantitatively and qualitatively demonstrated that GCP-Net achieved superior performance to existing state-of-the-art algorithms of JDD. It can remove the heavy noise from images captured in low-light condition, preserve the textures and details without generating much visual artifact.

References

- [1] T. Ehret and G. Facciolo, “A study of two cnn demosaicking algorithms,” Image Processing On Line, vol. 9, pp. 220–230, 2019.

- [2] B. Yang and D. Wang, “An efficient adaptive interpolation for bayer cfa demosaicking,” Sensing and Imaging, vol. 20, no. 1, p. 37, 2019.

- [3] N. Yan and J. Ouyang, “Cross-channel correlation preserved three-stream lightweight cnns for demosaicking,” arXiv preprint arXiv:1906.09884, 2019.

- [4] S. Liu, J. Chen, Y. Xun, X. Zhao, and C.-H. Chang, “A new polarization image demosaicking algorithm by exploiting inter-channel correlations with guided filtering,” IEEE Transactions on Image Processing, vol. 29, pp. 7076–7089, 2020.

- [5] Q. Jin, G. Facciolo, and J.-M. Morel, “A review of an old dilemma: Demosaicking first, or denoising first?” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2020, pp. 514–515.

- [6] L. Condat and S. Mosaddegh, “Joint demosaicking and denoising by total variation minimization,” in 2012 19th IEEE International Conference on Image Processing. IEEE, 2012, pp. 2781–2784.

- [7] F. Heide, M. Steinberger, Y.-T. Tsai, M. Rouf, D. Pajk, D. Reddy, O. Gallo, J. Liu, W. Heidrich, K. Egiazarian et al., “Flexisp: A flexible camera image processing framework,” ACM Transactions on Graphics (TOG), vol. 33, no. 6, pp. 1–13, 2014.

- [8] H. Tan, X. Zeng, S. Lai, Y. Liu, and M. Zhang, “Joint demosaicing and denoising of noisy bayer images with admm,” in 2017 IEEE International Conference on Image Processing (ICIP). IEEE, 2017, pp. 2951–2955.

- [9] M. Gharbi, G. Chaurasia, S. Paris, and F. Durand, “Deep joint demosaicking and denoising,” ACM Transactions on Graphics (TOG), vol. 35, no. 6, pp. 1–12, 2016.

- [10] B. Henz, E. S. Gastal, and M. M. Oliveira, “Deep joint design of color filter arrays and demosaicing,” in Computer Graphics Forum, vol. 37, no. 2. Wiley Online Library, 2018, pp. 389–399.

- [11] T. Ehret, A. Davy, P. Arias, and G. Facciolo, “Joint demosaicking and denoising by fine-tuning of bursts of raw images,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 8868–8877.

- [12] F. Kokkinos and S. Lefkimmiatis, “Iterative joint image demosaicking and denoising using a residual denoising network,” IEEE Transactions on Image Processing, vol. 28, no. 8, pp. 4177–4188, 2019.

- [13] G. Qian, J. Gu, J. S. Ren, C. Dong, F. Zhao, and J. Lin, “Trinity of pixel enhancement: a joint solution for demosaicking, denoising and super-resolution,” arXiv preprint arXiv:1905.02538, 2019.

- [14] L. Liu, X. Jia, J. Liu, and Q. Tian, “Joint demosaicing and denoising with self guidance,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 2240–2249.

- [15] A. Abdelhamed, S. Lin, and M. S. Brown, “A high-quality denoising dataset for smartphone cameras,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 1692–1700.

- [16] B. Mildenhall, J. T. Barron, J. Chen, D. Sharlet, R. Ng, and R. Carroll, “Burst denoising with kernel prediction networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 2502–2510.

- [17] T. Xue, B. Chen, J. Wu, D. Wei, and W. T. Freeman, “Video enhancement with task-oriented flow,” International Journal of Computer Vision, vol. 127, no. 8, pp. 1106–1125, 2019.

- [18] T. Ehret, A. Davy, J.-M. Morel, G. Facciolo, and P. Arias, “Model-blind video denoising via frame-to-frame training,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 11 369–11 378.

- [19] X. Wang, K. C. Chan, K. Yu, C. Dong, and C. Change Loy, “Edvr: Video restoration with enhanced deformable convolutional networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2019, pp. 0–0.

- [20] H. Yue, C. Cao, L. Liao, R. Chu, and J. Yang, “Supervised raw video denoising with a benchmark dataset on dynamic scenes,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 2301–2310.

- [21] T. Brooks, B. Mildenhall, T. Xue, J. Chen, D. Sharlet, and J. T. Barron, “Unprocessing images for learned raw denoising,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 11 036–11 045.

- [22] X. Tan, S. Lai, Y. Liu, and M. Zhang, “Green channel guiding denoising on bayer image,” The Scientific World Journal, vol. 2014, 2014.

- [23] R. Tan, K. Zhang, W. Zuo, and L. Zhang, “Color image demosaicking via deep residual learning,” in Proc. IEEE Int. Conf. Multimedia Expo (ICME), 2017, pp. 793–798.

- [24] X. Xu, M. Li, and W. Sun, “Learning deformable kernels for image and video denoising,” arXiv preprint arXiv:1904.06903, 2019.

- [25] T. Marinč, V. Srinivasan, S. Gül, C. Hellge, and W. Samek, “Multi-kernel prediction networks for denoising of burst images,” in 2019 IEEE International Conference on Image Processing (ICIP). IEEE, 2019, pp. 2404–2408.

- [26] Z. Xia, F. Perazzi, M. Gharbi, K. Sunkavalli, and A. Chakrabarti, “Basis prediction networks for effective burst denoising with large kernels,” arXiv preprint arXiv:1912.04421, 2019.

- [27] D. Liu, Z. Wang, Y. Fan, X. Liu, Z. Wang, S. Chang, and T. Huang, “Robust video super-resolution with learned temporal dynamics,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 2507–2515.

- [28] Y. Tian, Y. Zhang, Y. Fu, and C. Xu, “Tdan: Temporally deformable alignment network for video super-resolution,” arXiv preprint arXiv:1812.02898, 2018.

- [29] C. Zach, T. Pock, and H. Bischof, “A duality based approach for realtime tv-l 1 optical flow,” in Joint pattern recognition symposium. Springer, 2007, pp. 214–223.

- [30] A. Ranjan and M. J. Black, “Optical flow estimation using a spatial pyramid network,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 4161–4170.

- [31] A. Foi, M. Trimeche, V. Katkovnik, and K. Egiazarian, “Practical poissonian-gaussian noise modeling and fitting for single-image raw-data,” IEEE Transactions on Image Processing, vol. 17, no. 10, pp. 1737–1754, 2008.

- [32] K. He, J. Sun, and X. Tang, “Guided image filtering,” in European conference on computer vision. Springer, 2010, pp. 1–14.

- [33] T. Park, M.-Y. Liu, T.-C. Wang, and J.-Y. Zhu, “Semantic image synthesis with spatially-adaptive normalization,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 2337–2346.

- [34] J. Hu, L. Shen, and G. Sun, “Squeeze-and-excitation networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7132–7141.

- [35] S. Anwar and N. Barnes, “Real image denoising with feature attention,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 3155–3164.

- [36] S. W. Zamir, A. Arora, S. Khan, M. Hayat, F. S. Khan, M.-H. Yang, and L. Shao, “Learning enriched features for real image restoration and enhancement,” arXiv preprint arXiv:2003.06792, 2020.

- [37] S. Xingjian, Z. Chen, H. Wang, D.-Y. Yeung, W.-K. Wong, and W.-c. Woo, “Convolutional lstm network: A machine learning approach for precipitation nowcasting,” in Advances in neural information processing systems, 2015, pp. 802–810.

- [38] X. Xiang, Y. Tian, Y. Zhang, Y. Fu, J. P. Allebach, and C. Xu, “Zooming slow-mo: Fast and accurate one-stage space-time video super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 3370–3379.

- [39] J. Dai, H. Qi, Y. Xiong, Y. Li, G. Zhang, H. Hu, and Y. Wei, “Deformable convolutional networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 764–773.

- [40] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical image computing and computer-assisted intervention. Springer, 2015, pp. 234–241.

- [41] W.-S. Lai, J.-B. Huang, N. Ahuja, and M.-H. Yang, “Deep laplacian pyramid networks for fast and accurate super-resolution,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 624–632.

- [42] T. Plotz and S. Roth, “Benchmarking denoising algorithms with real photographs,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1586–1595.

- [43] K. He, X. Zhang, S. Ren, and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on imagenet classification,” in Proceedings of the IEEE international conference on computer vision, 2015, pp. 1026–1034.

- [44] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [45] I. Loshchilov and F. Hutter, “Sgdr: Stochastic gradient descent with warm restarts,” arXiv preprint arXiv:1608.03983, 2016.

- [46] C. Liu and D. Sun, “On bayesian adaptive video super resolution,” IEEE transactions on pattern analysis and machine intelligence, vol. 36, no. 2, pp. 346–360, 2013.

- [47] P. Liu and R. Fang, “Wide inference network for image denoising via learning pixel-distribution prior,” arXiv preprint arXiv:1707.05414, 2017.

- [48] D. Kostadin, F. Alessandro, and E. Karen, “Video denoising by sparse 3d transform-domain collaborative filtering,” in European signal processing conference, vol. 149, 2007, p. 2.

- [49] G. Chen, F. Zhu, and P. Ann Heng, “An efficient statistical method for image noise level estimation,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 477–485.