Iterative execution of discrete and inverse discrete Fourier transforms with applications for signal denoising via sparsification

Abstract

We describe a family of iterative algorithms that involve the repeated execution of discrete and inverse discrete Fourier transforms. One interesting member of this family is motivated by the discrete Fourier transform uncertainty principle and involves the application of a sparsification operation to both the real domain and frequency domain data with convergence obtained when real domain sparsity hits a stable pattern. This sparsification variant has practical utility for signal denoising, in particular the recovery of a periodic spike signal in the presence of Gaussian noise. General convergence properties and denoising performance relative to existing methods are demonstrated using simulation studies. An R package implementing this technique and related resources can be found at https://hrfrost.host.dartmouth.edu/IterativeFT.

1Department of Biomedical Data Science

Dartmouth College

Hanover, NH 03755, USA

[email protected]

1 Problem statement

We consider a class of iterative discrete Fourier transform [1] techniques described by Algorithm (1). The structure of this algorithm is broadly motivated by iterative methods such as the alternating direction method of multipliers (ADMM) [2] and expectation-maximization (EM) [3]. The family of algorithms we consider take a real-valued vector as input and then repeatedly perform the following sequence of actions:

-

•

Execute a function on .

-

•

Perform a discrete Fourier transform, , on the output of . The element of the complex-valued vector output by the discrete Fourier transform, , is defined as:

(1) -

•

Execute a function on the complex vector output by the discrete Fourier transform.

-

•

Transform the output of back into the real domain via the inverse discrete Fourier transform, . The element of the real-valued vector output by the inverse discrete Fourier transform, , is defined as:

(2)

This iteration can expressed as:

| (3) |

Convergence of the algorithm is determined by a function that compares to , i.e., the output of on the current iteration to the version from the prior iteration. When convergence is obtained, the output from the last execution of is returned. See Algorithm (1) for a detailed definition. For this general family of algorithms, a key question relates to what combinations of , , and functions and constraints on enable convergence. We are specifically interested in scenarios involving convergence to a value that is relevant for a specific data analysis application, e.g., a denoising or optimization problem.

Inputs:

-

•

Input data

-

•

The maximum number of iterations

Outputs:

-

•

Output data

-

•

Number of iterations completed

1.1 Trivial cases

If both and are the identity function, then convergence occurs after a single iteration and the entire algorithm operates as the identity function, i.e., . Similarly, if just one of or is the identity function, then the algorithm simplifies to the repeated execution of the non-identity function, e.g., if is the identity function then . In general, we will assume that neither nor are the identity function and that the number of iterations until convergence, , is a function of , i.e., if is a random vector, then is a random variable.

1.2 Generalizations

A number of generalizations of Algorithm (1) are possible:

-

1.

Matrix-valued input: Instead of accepting a vector as input, the algorithm could accept a matrix (or higher-dimensional array) with and replaced by two-dimensional counterparts.

-

2.

Complex-valued input: Instead of just real values, elements of the input could be allowed to take complex or hypercomplex (e.g., quaternion or octonion) values with a correponding change to the complex, or hypercomplex, discrete Fourier transform.

-

3.

Alternative invertable discrete transform: The discrete Fourier transform could be replaced by another invertable discrete transform, e.g., discrete wavelet transform [4]. More broadly, a similar approach could be used with any invertable discrete function.

We explore the first generalization in this paper (see Section 4.2) but restrict our interest to the real-valued discrete Fourier transform and leave the other generalizations to future work.

2 Related techniques

Algorithm (1) is related to both standard discrete Fourier analysis techniques and iterative algorithms that alternate between dual problem representations. Although a detailed comparison is not possible without defining , , and , some general observations are possible.

2.1 Relationship to standard discrete Fourier analysis

The discrete Fourier transform is widely used in many areas of data analysis with common applications including signal processing [5], image analysis [6], and efficient evaluation of convolutions [7]. The standard structure for these applications is the transformation of real-valued data (e.g, time-valued signal or image) into the frequency domain via a discrete Fourier transform, computation on the frequency domain representation, and final transformation back into the time/location domain via an inverse discrete Fourier transform. For example, to preserve specific frequencies in a signal, the original data can be transformed into the frequency domain via a discrete Fourier transform, the coefficients for non-desired frequencies set to zero, and a filtered time domain signal generated via an inverse transformation. A similar structure is found in the application of other invertable discrete transforms. A key difference between this type of application and the approach discussed in this paper relates to number of executions of the forward and inverse transforms and the nature of the functions applied to the data in each domain, i.e., and . Specifically, standard discrete Fourier analysis involves just the single application of forward and inverse transforms separated by execution of a single function. In contrast, the method described by Algorithm (1) involves the repeated execution of forward and inverse transforms interleaved by the execution of and functions until the desired convergence conditions are obtained.

2.2 Relationship to other iterative algorithms

Algorithm (1) shares features with a range of methods (e.g., ADMM [2], Dykstra’s algorithm [8], and EM [3]) that alternate between coupled representations of a problem on each iteration. For ADMM, an optimization problem is solved by alternating between 1) estimating the value of the primal variable that minimizes the Lagrangian with the Lagrangian variables fixed and 2) updating the Lagrangian or dual variables using the most recent primal variable value. Dykstra’s algorithm is also used to solve optimization problems and, for many scenarios, is exactly equivalent to ADMM [9]. For EM, a likelihood maximization problem is solved by alternatively 1) finding the probability distribution of latent variables that maximizes the expected likelihood using fixed parameter values and 2) finding parameter values that maximize the expected likihood given the most recent latent variable distribution. ADMM, Dykstra’s algorithm and EM can all therefore be viewed as techniques that alternatively update different parameter subsets of a common function. In contrast, Algorithm (1) alternates between two different functions, and , that are applied to the same data before and after an invertable discrete transform. Thus, while Algorithm (1) is broadly similar to these techniques, it cannot be directly mapped to these methods. We are not aware of existing iterative algorithms that are equivalent to Algorithm (1).

3 Iterative convergence under sparsification

An interesting subclass of the general iterative method detailed in Algorithm 1 involves the use of sparsification functions for both and with identifying convergence when a stable sparsity pattern is achieved in the output of . Iterating between real domain and frequency domain sparsification is motivated by the discrete Fourier transform uncertainty principal [10], which constrains the total number of zero values in the real domain data and frequency domain representation generated by a discrete Fourier transform. Attempts to induce sparsity in one domain will reduce sparsity in the other domain with the implication that setting both and to sparsification functions will not simply result in the generation of a vector of all 0 elements. Instead, for scenarios where the iterative algorithm converages, the solution will represent a stable compromise between real and frequency domain sparsity.

We can explore the general convergence properties of Algorithm 1 using sparsification functions for and via the following simulation design:

-

•

Set to a length vector of random variables.

-

•

Define to generate a sparse version of where the proportion of elements with the smallest absolute values are set to 0.

-

•

Define to generate a sparse version of where the proportion of complex coefficients with the smallest magnitudes are set to .

-

•

Define to identify convergence when the indices of 0 values in the output of are identical on two sequential iterations.

-

•

The discrete and inverse discrete Fourier transforms are realized using the Fast Fourier Transform.

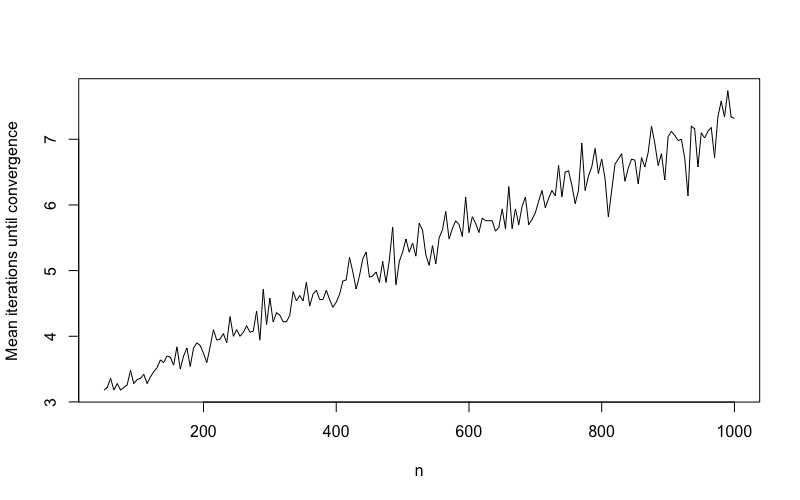

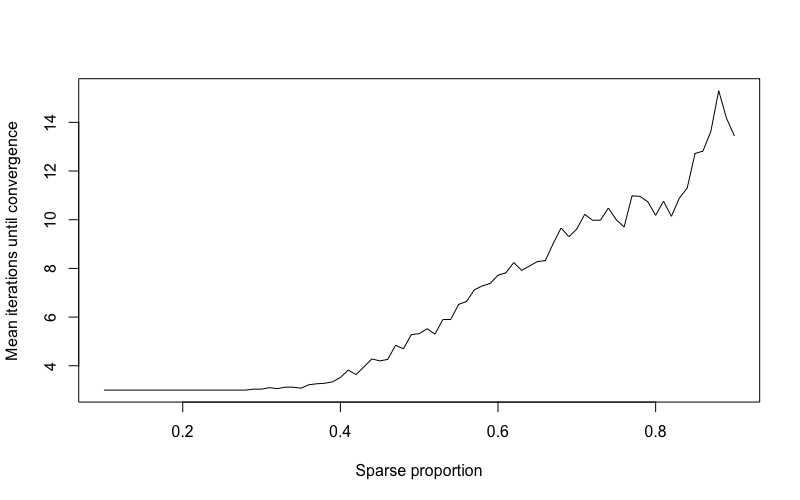

Both general and sparsification versions of Algorithm 1 are supported by the IterativeFT R package available at https://hrfrost.host.dartmouth.edu/IterativeFT. Following this design, we applied the algorithm to 50 simulated vectors for each distinct value in the range from 50 to 1,000 using the sparse proportion of and the maximum number of iterations . Figure 1 below displays the mean number of iterations until convergence as a function of . Figure 2 illustrates the results from a similar simulation that used a fixed of 500 and sparse proportion value ranging from to . For all of the tests visualized in Figures 1 and 2, the algorithm converged to a stable pattern of sparsity in . Not surprisingly, the number of iterations required to achieve a stable sparsity pattern increased with the growth in either or . If and are changed to set all elements with absolute value or magnitude below the mean to 0 (see simulation design in Section 4), the relationship between mean iterations until convergence and is similar to that shown in Figure 1. Changing the generative model for to include a non-random periodic signal (e.g., sinusodial signal or spike signal) also generates similar convergence results.

4 Detection of spike signals using iterative convergence

To assess the pratical utility of a sparsification version of Algorithm 1 for signal denoising, we applied the technique to data generated as either a one-dimensional vector or two-dimensional matrix containing a periodic spike signal with Guassian noise. For the one-dimensional cases, , , and were specified as follows:

-

•

Define to generate a sparse where all elements are set to 0.

-

•

Define to generate a sparse where all elements are set to (here the operation represents the magnitude of the complex number ).

-

•

Define to identify convergence when the indices of 0 values in the output of are identical on two sequential iterations.

For the matrix case, , , and can be executed on a vectorized version of . In the remainder of this paper, we will refer to the version of Algorithm 1 that uses these , , and functions as the IterativeFT method.

4.1 Detection of spike signal in vector-valued input

For the one-dimensional case, the input vector was generated as the combination of a periodic spike signal and Gaussian noise , , with:

-

•

Elements of set to 0 for all and generated as random variables for where is the total number of cycles and is the period.

-

•

Elements of are generated as independent random variables with distribution .

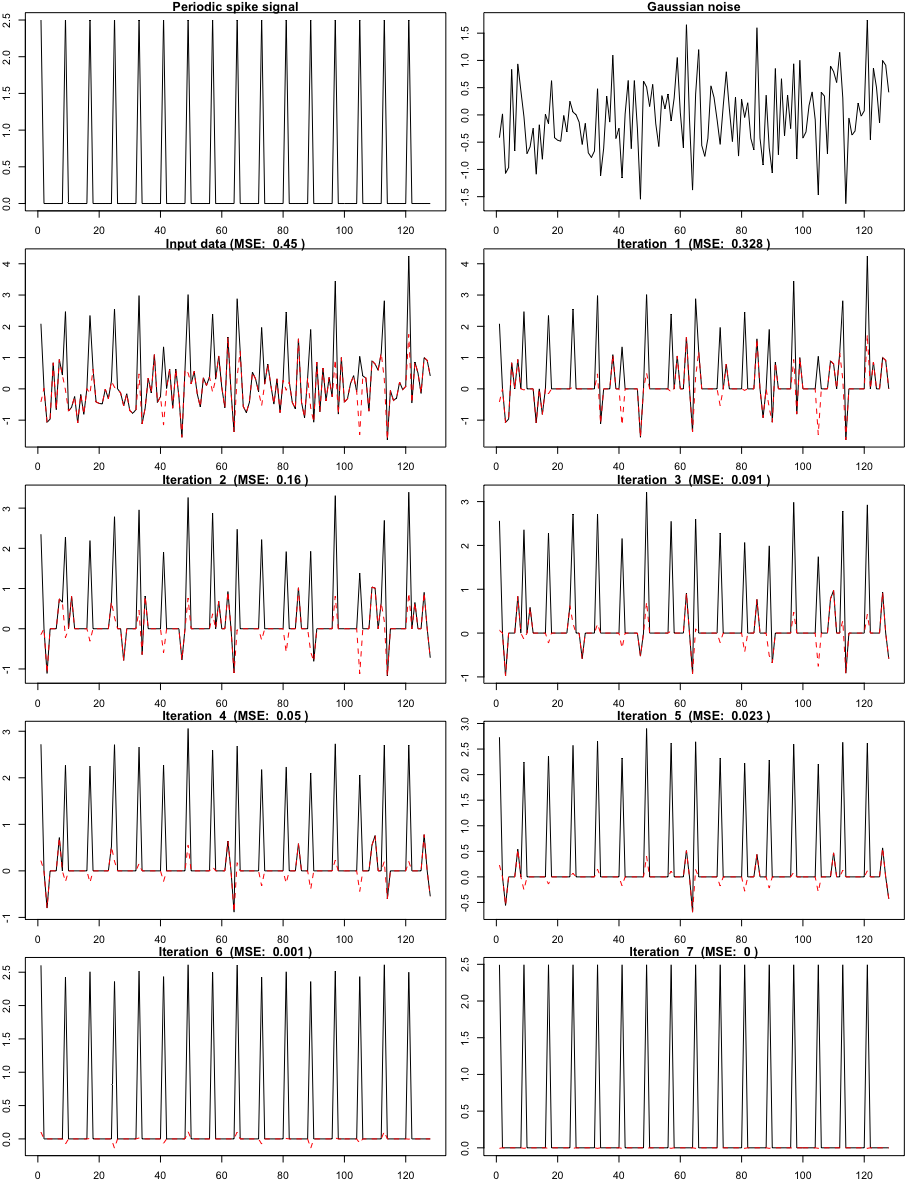

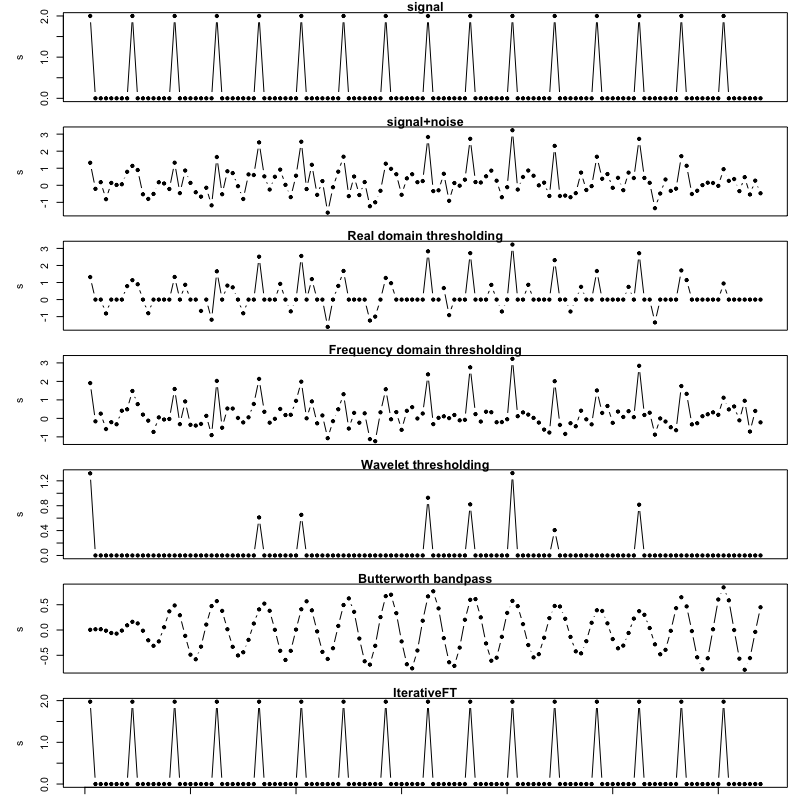

Figure 3 shows an example of generated according to this simulation model with and . For this specific example, the method converges in seven iterations and perfectly recovers the periodic spike signal.

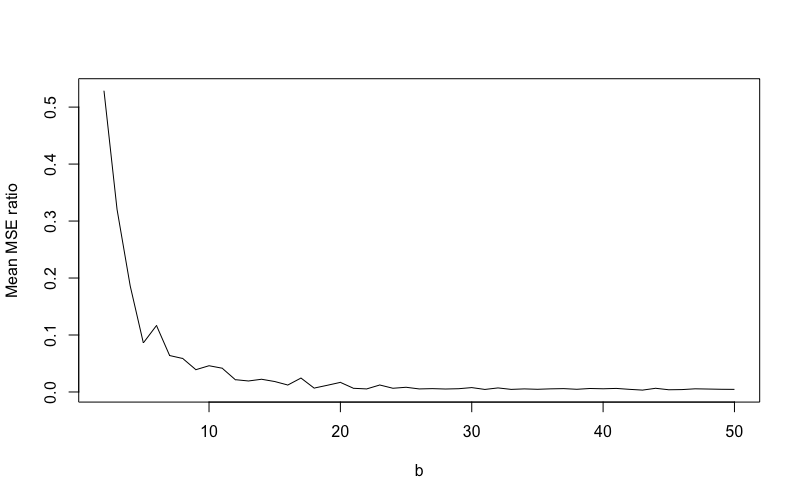

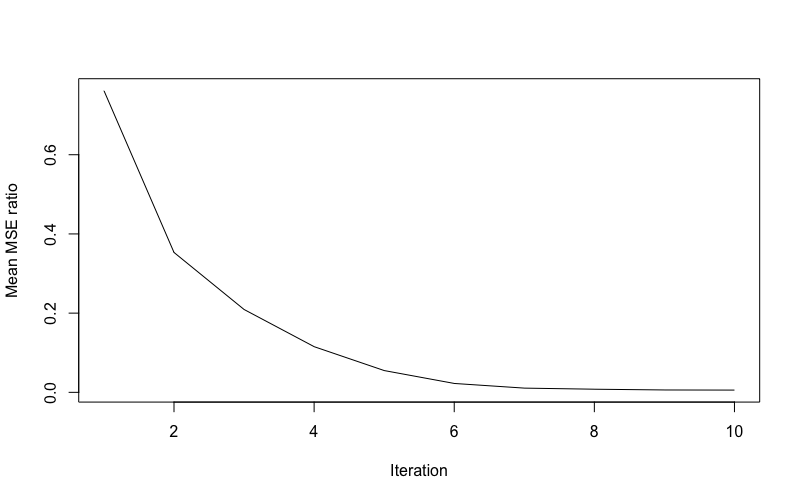

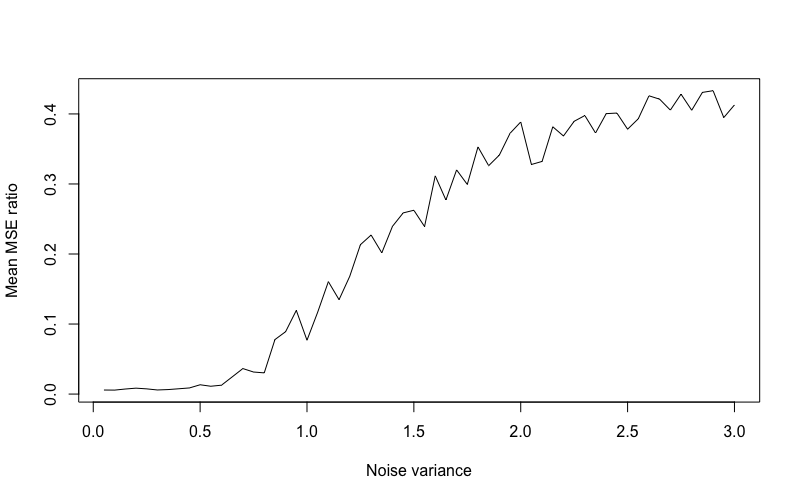

To more broadly charaterize signal recovery for this simulation design, multiple vectors were generated for different values of , and . Figure 4 shows the relationship between the MSE ratio achieved on convergence averaged across 50 simulated vectors and the number of cycles, , captured in . The MSE ratio is specifically computed as where is the mean squared error (MSE) between the output of the method after convergence and the spike signal and is the MSE for the output from the first execution of . For this simulation design, the average MSE ratio is very close to 0 for , which reflects near perfect recovery of the input periodic spike signal.

Figure 5 captures the association between the average MSE ratio and the number of iterations completed by the algorithm ( was fixed at 20 for this simulation). These results demonstrate that signal recovery consistently improves on each iteration of the algorithm with the lowest MSE achieved upon convergence. Figure 6 captures the association between the average MSE ratio achieved on convergence and Gaussian noise variance ( was fixed at 20 for this simulation). These results demonstrate the expected increase in signal recovery error with increase noise variance and, importantly, show that the method still achieves improved noise recovery relative to just a single execution of at high levels of noise.

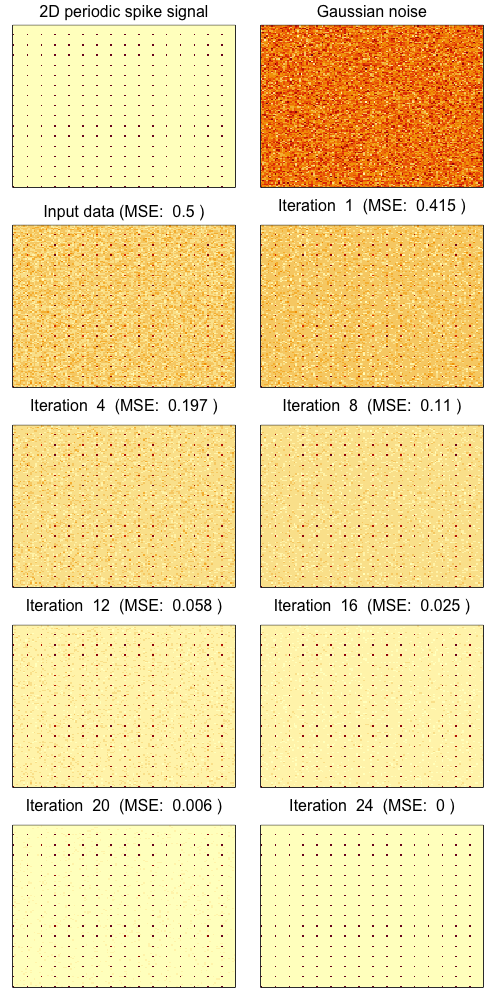

4.2 Detection of spike signal in matrix-valued input

To explore the generalization where the input is a matrix rather than a vector, we also tested a simulation model where an input matrix is generated as follows:

- •

-

•

Create the signal matrix as .

-

•

Generate an noise matrix whose elements are independent random variables.

-

•

Create the input matrix as .

Figure 7 shows an example of generated according to this simulation model with and . For this specific example, the IterativeFT method converges in 24 iterations and perfectly recovers the periodic spike signal matrix. The convergence properties for this type of matrix input when evaluated across multiple simulations mirror those for the vector input as shown in Figures 4, 5, and 6.

4.3 Analysis of spike signal detection

-

•

The elements of the generated input vector will be stochastically larger for elements that are non-zero in the periodic signal vector given that the expected value of is based solely on :

E of sum is sum of E so -

•

These non-zero signal vector elements will therefore be less likely to be set to 0 by the sparsification version of , which results in the frequency spectra of being more strongly based on the frequency spectra of than the spectra of .

-

•

These characteristics of the frequency spectra of the output from mean that the output of will be stochastically larger for elements that correspond to the spectra of .

-

•

The sparsification version of will therefore be more likely to retain frequency components associated with and set to 0 frequency components corresponding to .

-

•

Repeated iterations will reinforce these patterns until a stable sparsity structure is obtained in , which will tend to correspond to the very sparse frequency spectra of .

To understand why the iterative application of both and on real and frequency representations of the data is needed one can consider scenarios where only one of or is performed (as outlined in Section 1.1, such scenarios are inherently non-iterative):

-

•

Only is executed: Although a single execution of the sparsification version of will tend to reduce the error between and , noise of a sufficient amplitude will result in the incorrect removal of some parts of (i.e., negative noise may lower the value of a spike below the threshold) and incorrect retention of some parts of (i.e., the amplitude of the random noise may be larger than the threshold), e.g. Figure 3. The performance of a single execution is assessed more comprehensively in 5.

-

•

Only is executed: In this scenario, only a single execution of is performed on , followed by execution of before finally transforming back via , i.e., the output is given by . If one had prior knowledge of the spectra of , then it would be possible specifically target the desired frequencies via , e.g., a bandpass filter (though as shown in Section 5 below, even prior knowledge of an appropriate frequency band may not yield good denoising performance). Lacking this prior knowledge, however, sparsification via can only be based the magnitude of frequency domain coefficients and, while it will tend to preserve the portions of the spectra of associated with , random noise will cause the incorrect removal of spectral components that are due to and the incorrect retention of spectral components due to . The performance of a single execution is assessed more comprehensively in 5.

5 Comparative evaluation of denoising performance

To evaluate the performance of the IterativeFT method relative to existing signal denoising techniques, a simulation study was undertaken on data generated according to several spike signal patterns with varying signal-to-noise ratios and spike frequencies.

5.1 Simulation design

The input data was generated as a length vector where the elements of were generated as independent random variables and the signal followed one of three different periodic spike signal patterns:

- 1.

-

2.

Alternating sign spike: The sparsity pattern is similar to the uniform spike model above but the non-zero values alternate between and . The first panel in Figure 11 below illustrates this signal pattern.

-

3.

Alternating size spike: The sparsity pattern is similar to the uniform spike model above but the non-zero values alternate between and (these values yield a signal power equivalent to that for a signal with sized spikes). The first panel in Figure 14 below illustrates this signal pattern.

For the simulation results presented in Sections 5.3-5.5 below, and . The noise variance, , was set to yield a signal-to-noise ratio ranging between 0.05 and 2. The value of 1024 corresponds to a Nyquist frequency of 512 Hz and the spike period was set to yield a ratio of spike frequency to Nyquist frequency ranging between 0.0625 (for ) and 1 (for ). For the single examples in Figures 8, 11 and 14, , (i.e., a ratio of spike frequency to Nyquist frequency of 0.25) and was set to yield a signal-to-noise ratio of 1.

5.2 Comparison methods

We compared the denoising performance of the IterativeFT method (Algorithm 1 using the , and functions defined in Section 4) against four other techniques:

-

•

Real domain thresholding: This method performs a single thresholding operation on using .

-

•

Frequency domain thresholding: This method performs a single frequency domain thresholding operation using , i.e., the denoised output is generated as .

-

•

Butterworth bandpass filtering: This method is realized by a Butterworth [11] 3-order bandpass filter (as implemented by the butter() function in v0.3-5 of the gsignal R package [12]) where the band is set to the spike signal frequency (as a fraction of the Nyquist frequency) , e.g, if the spike signal is 0.25 of the Nyquist frequency, the band is set to 0.2 to 0.3.

-

•

Wavelet filtering: This method is based on the usage example for the threshold.wd() function in v4.7.4 of the wavethresh [13] R package. Specifically, a discrete wavelet transform is first applied to using the Daubechies least-asymmetric orthonormal compactly supported wavelet with 10 vanishing moments and interval boundary conditions [14]. This wavelet transform is realized using the wd() function in the wavethresh R package with bc=”interval” and the default wavelet family and smoothness settings. The wavelet coefficients at all levels are then thresholded using a threshold value computed according to a universal policy and madmad deviance estimate on the finest coefficients followed by application of an inverse wavelet transformation.

5.3 Results for uniform spike model

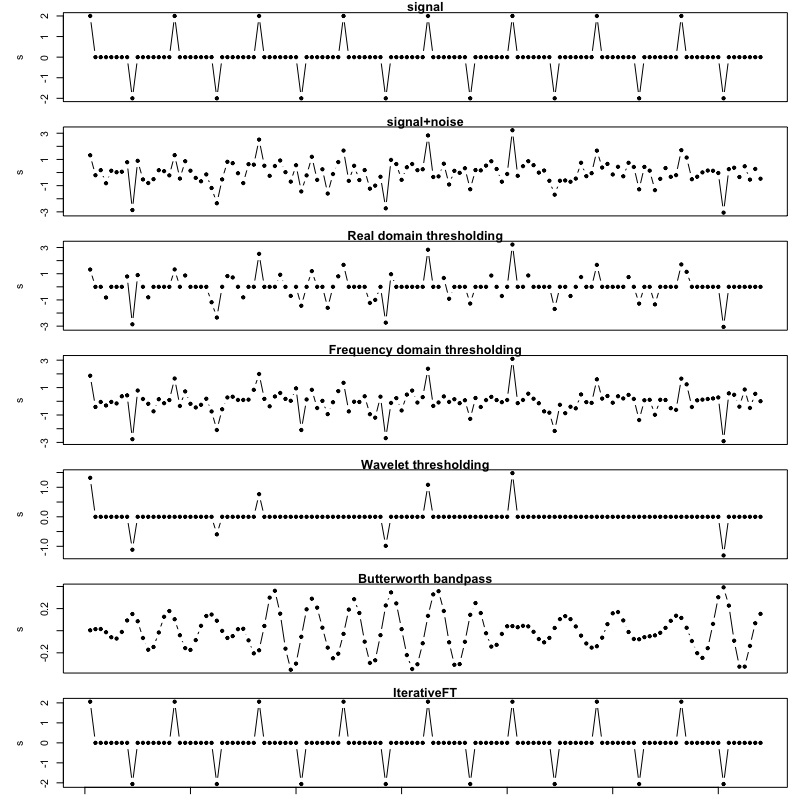

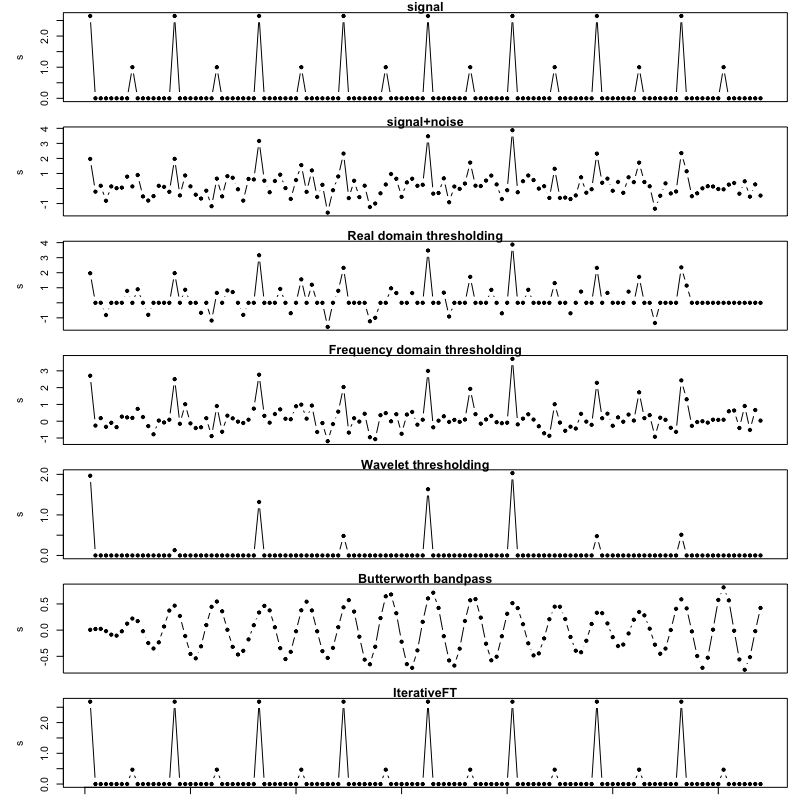

Figures 8-10 illustrate the comparative denoising performance for the evaluated techniques on data simulated according to the uniform spike model. Figure 8 shows the outputs for a single example vector with a signal-to-noise (SNR) ratio of 1 and ratio of spike signal frequency to Nyquist frequency of 0.25. For this example, the IterativeFT method is able to perfectly recover the spike signal. In contrast, the other evaluated techniques have only marginal signal recovery performance.

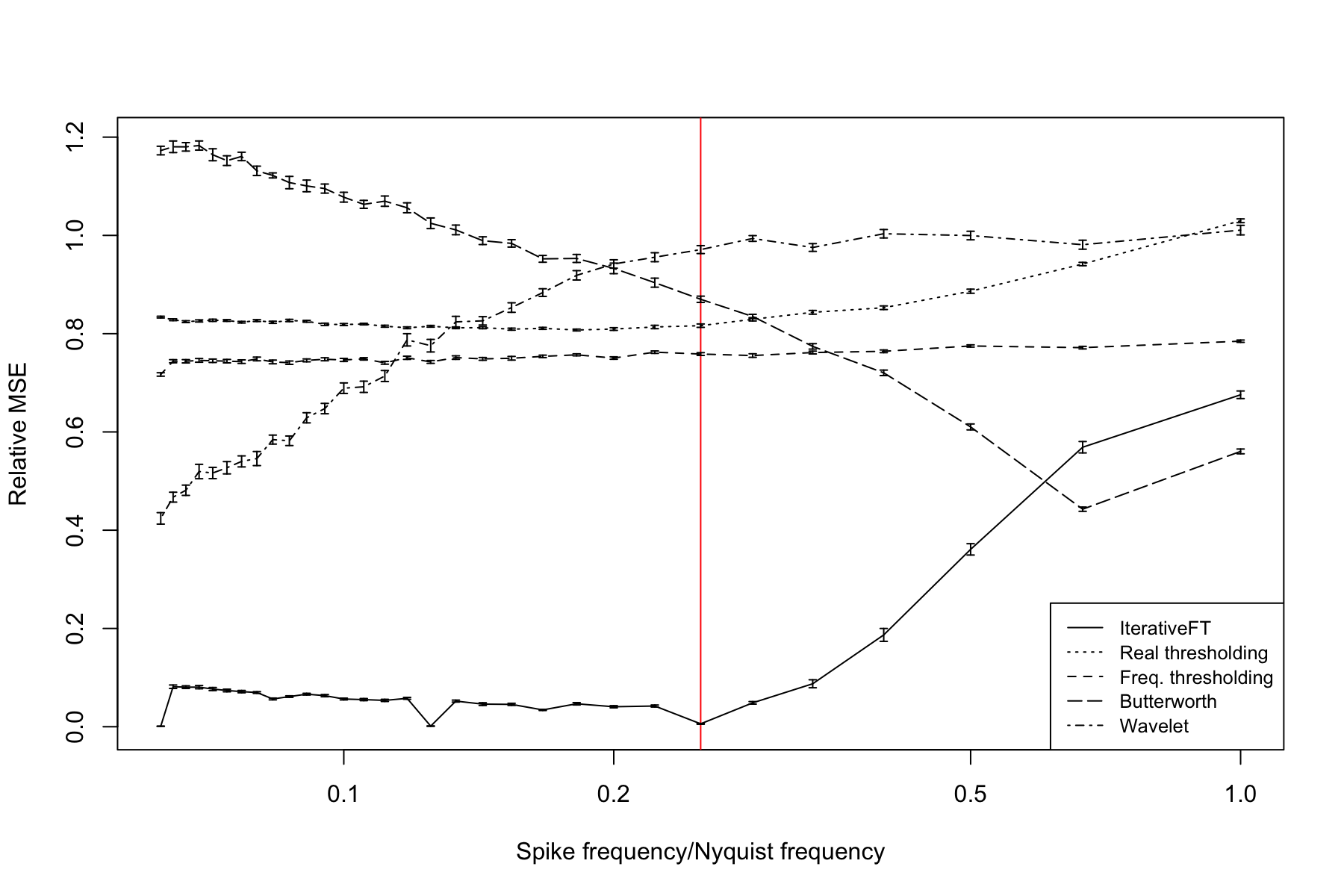

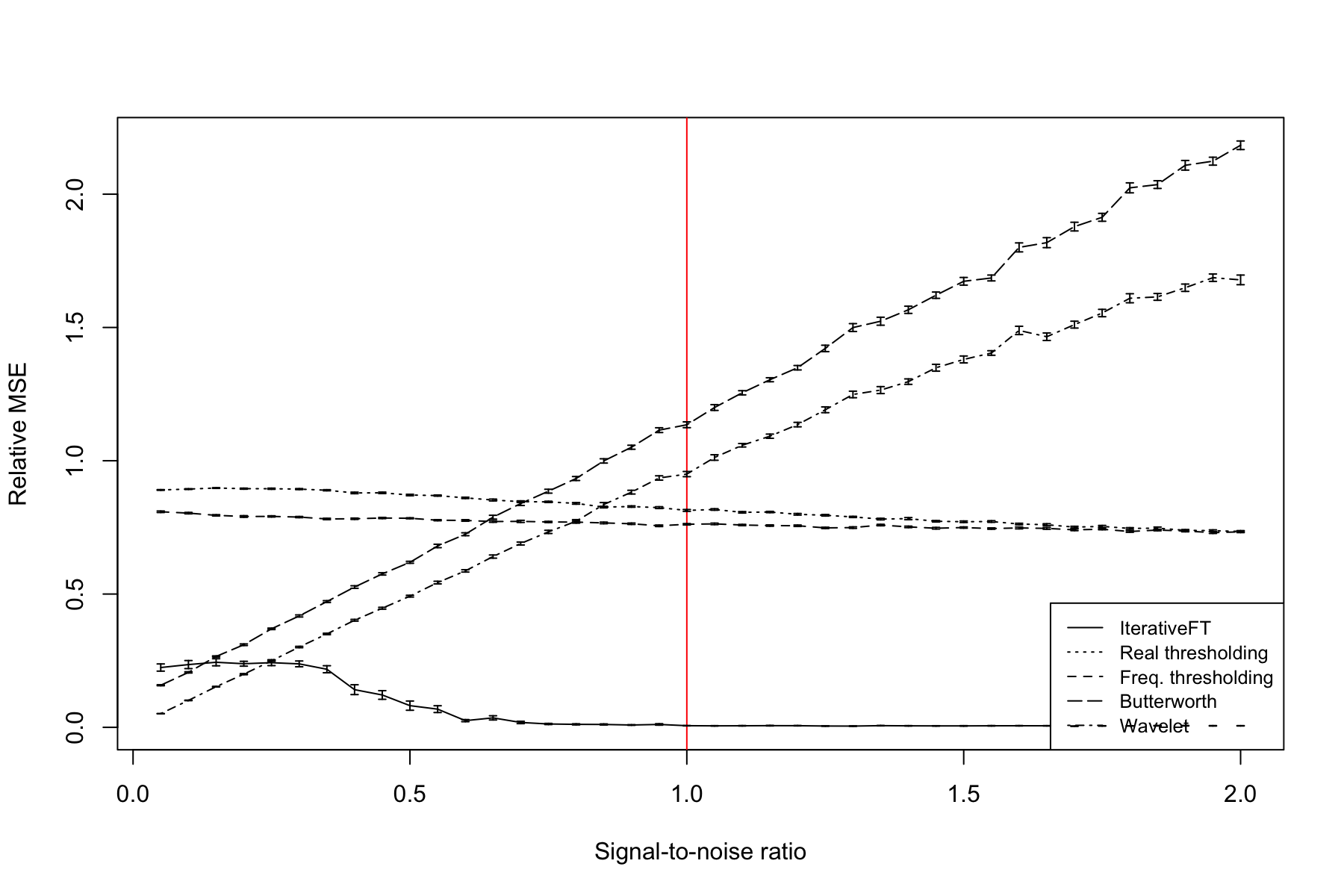

Figure 9 shows the relationship between denoising performance and spike signal frequency at a signal-to-noise (SNR) value of 1. Denoising performance is plotted on the y axis as the mean MSE across 25 simulated inputs between the denoised data and the underlying signal and is plotted relative to the MSE measured on the undenoised data. A relative MSE value equal to 1 indicates null performance, i.e., the denoising method is equivalent to leaving the data unchanged; values above 1 indicate that the method further corrupts the signal. For this simulation model, the IterativeFT technique offers dramatically better denoising performance than the other four techniques with the exception of the high frequency domain where the Butterworth bandpass filter has slightly better performance. The simple real and frequency domain thresholding methods, i.e., a single application of either the or sparsification functions, are slightly better than null and relatively insensitive to signal frequency. The wavelet filter offers the good performance at low frequency values with performance decreasing towards the null level as the signal frequency increases. By contrast, the Butterworth filter is worse than null at low frequency values with performance steadily improving as frequency increases. The three notable dips in relative MSE for the IterativeFT method correspond to spike periods of 32, 16 and 8, which are all factors of 1024, the length of the input vector.

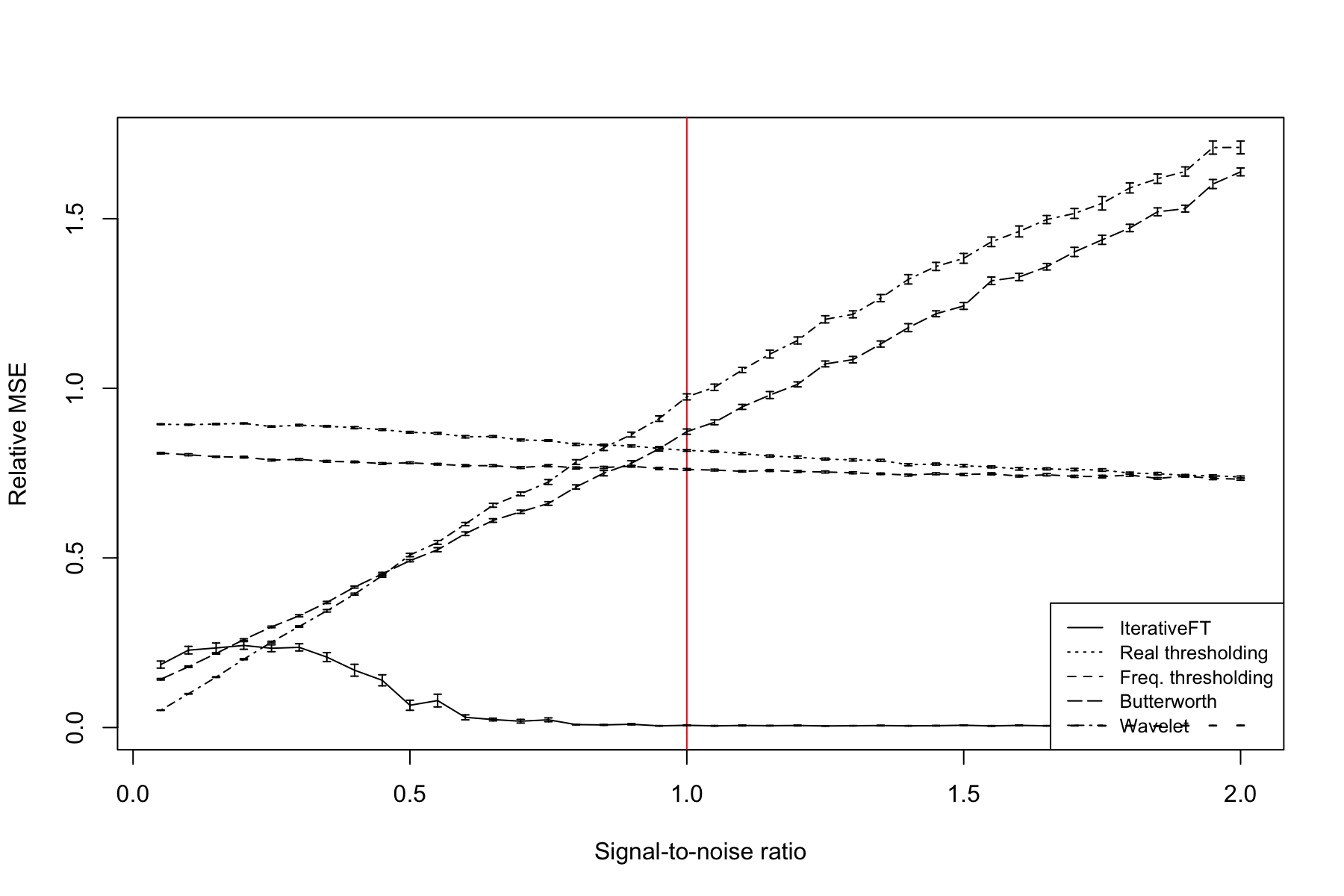

Figure 10 illustrates the relationship between denoising performance and the SNR value at a spike frequency that is 0.25 of the Nyquist frequency. Similar to Figure 9, both of the simple thresholding methods have performance that is only marginally better than null but, as expected, does improve slightly increasing SNR. The IterativeFT method is substantially better than all comparison techniques at SNR values above 0.5. At low SNR values, performance of the IterativeFT method decreases and falls below both the wavelet and Butterworth filtering techniques at SNR values below 0.25. The relative performance of the wavelet and Butterworth methods shows a steady decline with increasing SNR, which is surprising the error should decrease as SNR increases. This unexpected trend is due to the fact that the relative rather than absolute MSE is shown. In particular, the absolute MSE for these methods does decrease as the SNR increases, however, the MSE relative to no modifications increases with both filtering methods giving worse than null performance at SNR values greater than 1.1.

5.4 Results for alternating sign spike model

Figures 11-13 illustrate comparative denoising performance on data simulated according to the alternating sign spike model. Figure 11 shows the outputs for a single example vector with a signal-to-noise (SNR) ratio of 1 and ratio of spike signal frequency to Nyquist frequency of 0.25. Similar to the uniform spike example in Figure 8, the IterativeFT method perfectly recovers the spike signal with poor performance by the comparative techniques.

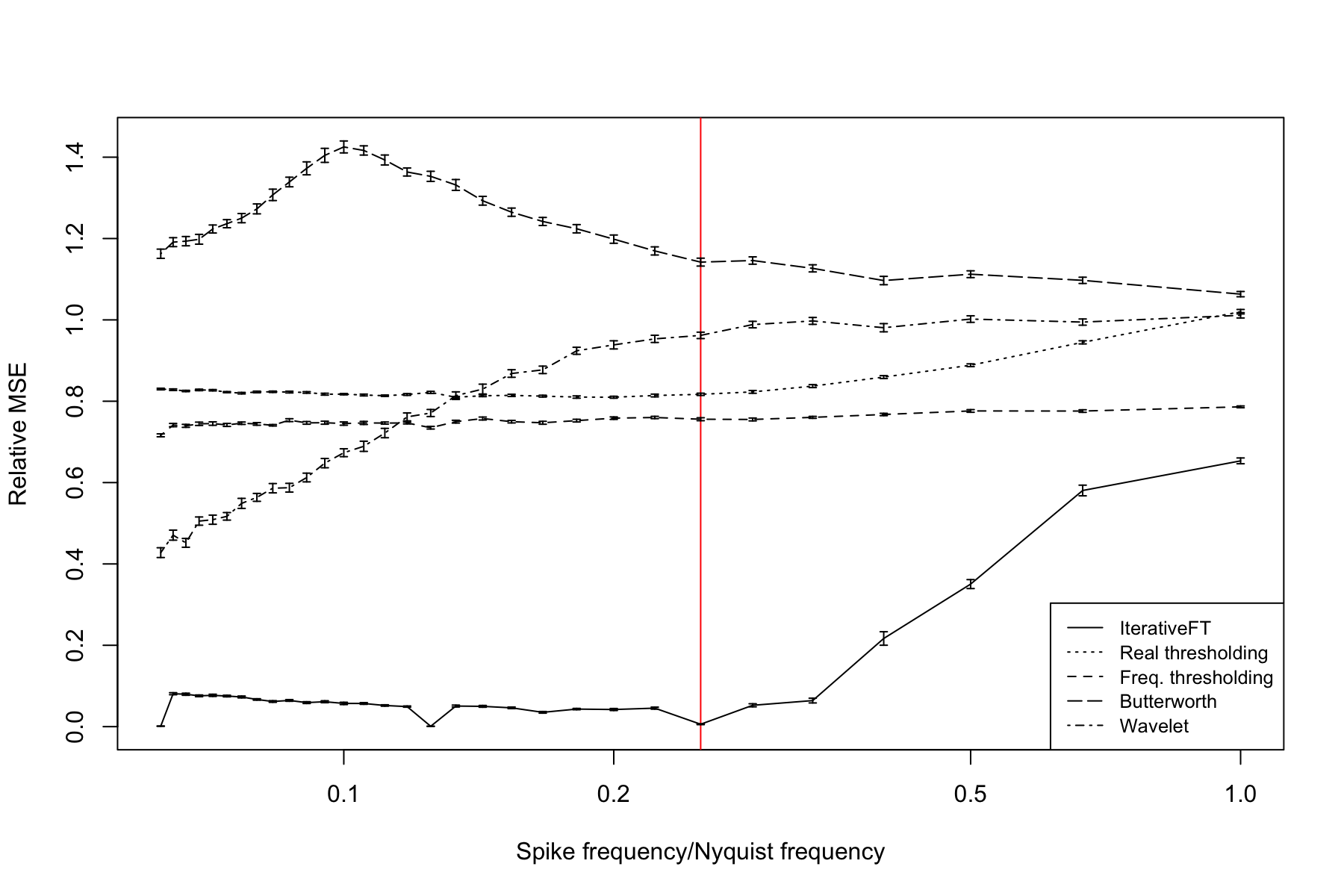

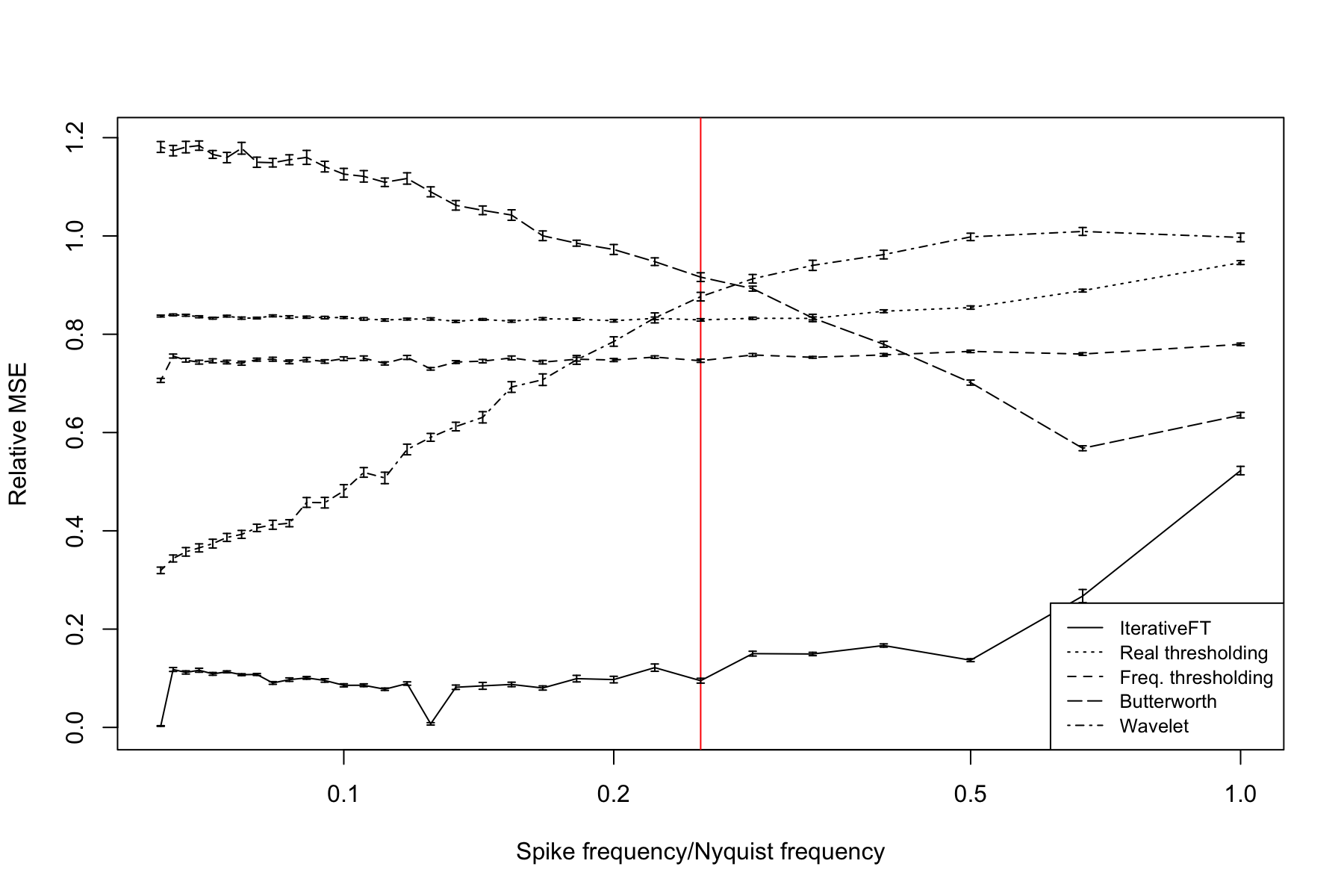

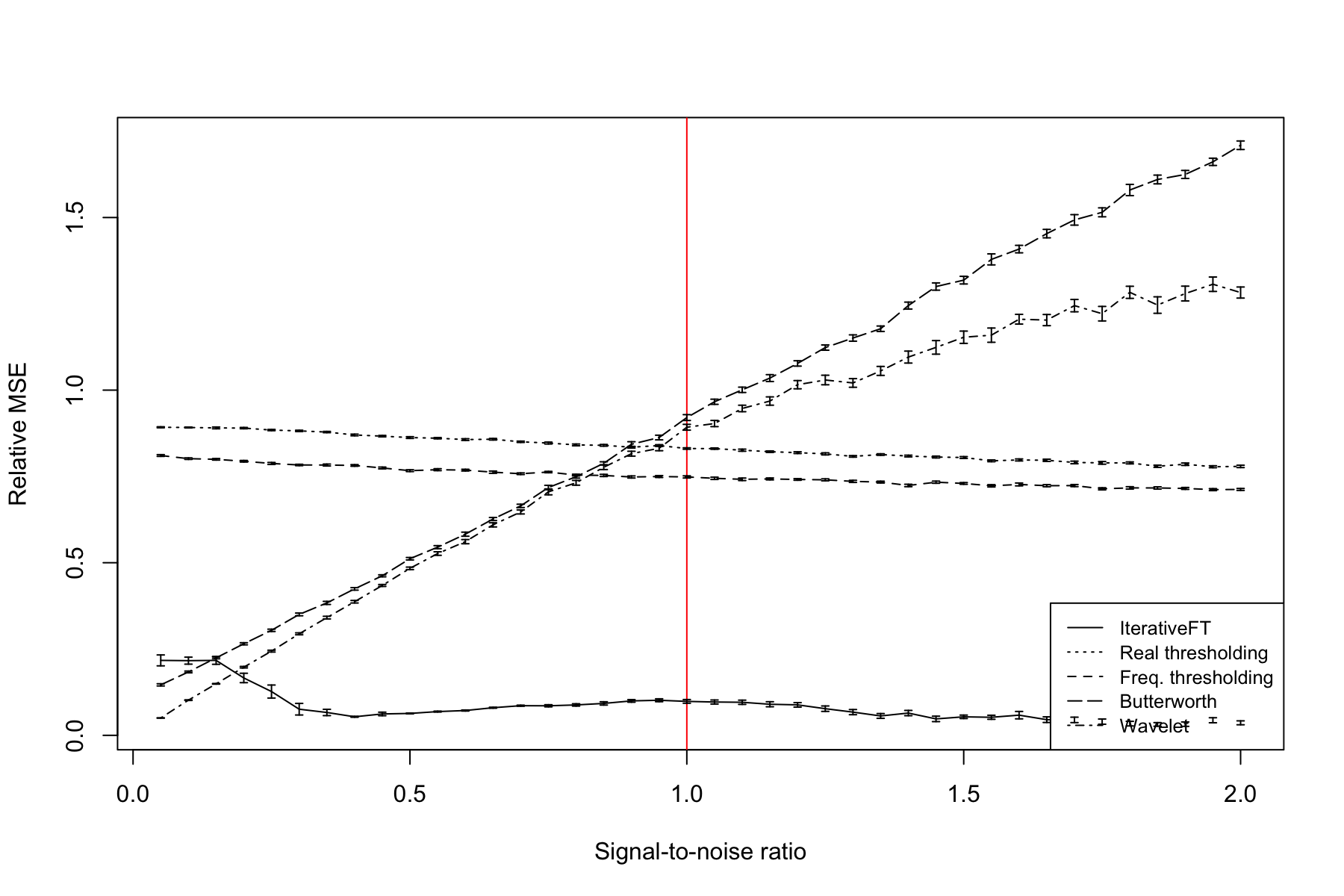

Figures 12 and 13 visualize the performance of the evaluated methods on the alternating sign model relative to signal frequency and SNR. The results are generally similar to those for the uniform spike model with the exception that the Butterworth bandpass filter has worse than null performance across all tested signal frequencies.

5.5 Results for alternating size spike model

Figures 14-16 illustrate comparative denoising performance on data simulated according to the alternating size spike model. Figure 14 shows the outputs for a single example vector with a signal-to-noise (SNR) ratio of 1 and ratio of spike signal frequency to Nyquist frequency of 0.25. Similar to the uniform spike and alternating sign examples, the IterativeFT method provides excellent signal recovery though it does underestimate the magnitude of the smaller spike; the other evaluated methods are worse by comparison.

Figures 15 and 16 visualize the performance of the evaluated methods on the alternating size model relative to signal frequency and SNR. The results are generally similar to those for the uniform spike model with the exception that performance for the Butterworth bandpass filter is never better than the IterativeFT method.

6 Conclusion

In this paper we explored a family of iterative algorithms based on the repeated execution of discrete and inverse discrete Fourier transforms on real valued vector or matrix data. One interesting member of this family, which we refer to as the IterativeFT method, is motivated by the discrete Fourier transform uncertainty principle and involves the application of a thresholding operation to both the real domain and frequency domain data with convergence obtained when real domain sparsity hits a stable pattern. As we demonstrated through simulation studies, the IterativeFT method can effectively recover periodic spike signals across a wide range of spike signal frequencies and signal-to-noise ratios. Importantly, the performance of the IterativeFT method is significantly better than standard non-iterative denoising techniques including real and frequency domain thresholding, wavelet filtering and Butterworth bandpass filtering. An R package implementing this technique and related resources can be found at https://hrfrost.host.dartmouth.edu/IterativeFT. Areas for future work include on this method include:

- •

-

•

Expanding the comparative evaluation to include other denoising techniques, e.g., basis pursuit [15], and a broader range of periodic signal and noise models, e.g., composition of spike signals at multiple frequencies/amplitudes, mixtures of harmonic and spike signals, etc.

-

•

Exploring other classes of , and functions and associated analysis problems, e.g., soft thresholding.

-

•

Exploring the generalization of the IterativeFT method to complex or hypercomplex-valued inputs.

-

•

Exploring the generalization of the IterativeFT method to other invertable discrete transforms, e.g., discrete wavelet transform [4].

Acknowledgments

This work was funded by National Institutes of Health grants R35GM146586 and R21CA253408. We would like to thank Anne Gelb and Aditya Viswanathan for the helpful discussion.

References

- [1] Ronald N Bracewell. The Fourier transform and its applications. McGraw Hill, Boston, 3rd ed edition, 2000.

- [2] Stephen Boyd, Neal Parikh, Eric Chu, Borja Peleato, and Jonathan Eckstein. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn., 3(1):1–122, jan 2011.

- [3] A. P. Dempster, N. M. Laird, and D. B. Rubin. Maximum likelihood from incomplete data via the em algorithm. Journal of the Royal Statistical Society. Series B (Methodological), 39(1):1–38, 1977.

- [4] Stphane Mallat. A Wavelet Tour of Signal Processing, Third Edition: The Sparse Way. Academic Press, Inc., USA, 3rd edition, 2008.

- [5] D Sundararajan. Fourier Analysis-A Signal Processing Approach. 1st ed. 2018 edition.

- [6] Ann Maria John, Kiran Khanna, Ritika R Prasad, and Lakshmi G Pillai. A review on application of fourier transform in image restoration. In 2020 Fourth International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), pages 389–397, 2020.

- [7] Lu Chi, Borui Jiang, and Yadong Mu. Fast fourier convolution. In H. Larochelle, M. Ranzato, R. Hadsell, M.F. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 4479–4488. Curran Associates, Inc., 2020.

- [8] Richard L. Dykstra. An algorithm for restricted least squares regression. Journal of the American Statistical Association, 78(384):837–842, 1983.

- [9] Ryan J. Tibshirani. Dykstra’s algorithm, admm, and coordinate descent: Connections, insights, and extensions. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, pages 517–528, Red Hook, NY, USA, 2017. Curran Associates Inc.

- [10] David L. Donoho and Philip B. Stark. Uncertainty principles and signal recovery. SIAM Journal on Applied Mathematics, 49(3):906–931, 1989.

- [11] S. Butterworth. On the Theory of Filter Amplifiers. Experimental Wireless & the Wireless Engineer, 7:536–541, October 1930.

- [12] Van Boxtel, G.J.M., et al. gsignal: Signal processing, r package version 0.3-5 edition, 2021.

- [13] Guy Nason. wavethresh: Wavelets Statistics and Transforms, 2022. R package version 4.7.2.

- [14] Albert Cohen, Ingrid Daubechies, and Pierre Vial. Wavelets on the interval and fast wavelet transforms. Applied and Computational Harmonic Analysis, 1(1):54–81, 1993.

- [15] Shaobing Chen and D. Donoho. Basis pursuit. In Proceedings of 1994 28th Asilomar Conference on Signals, Systems and Computers, volume 1, pages 41–44 vol.1, 1994.