tablesection algorithmsection

Iterative Ensemble Kalman Methods:

A Unified Perspective with Some New Variants

Abstract

This paper provides a unified perspective of iterative ensemble Kalman methods, a family of derivative-free algorithms for parameter reconstruction and other related tasks. We identify, compare and develop three subfamilies of ensemble methods that differ in the objective they seek to minimize and the derivative-based optimization scheme they approximate through the ensemble. Our work emphasizes two principles for the derivation and analysis of iterative ensemble Kalman methods: statistical linearization and continuum limits. Following these guiding principles, we introduce new iterative ensemble Kalman methods that show promising numerical performance in Bayesian inverse problems, data assimilation and machine learning tasks. ††† Applied Mathematics and Computational Science, King Abdullah University of Science and Technology. ††∗ Department of Statistics, University of Chicago.

1 Introduction

This paper provides an accessible introduction to the derivation and foundations of iterative ensemble Kalman methods, a family of derivative-free algorithms for parameter reconstruction and other related tasks. The overarching theme behind these methods is to iteratively update via Kalman-type formulae an ensemble of candidate reconstructions, aiming to bring the ensemble closer to the unknown parameter with each iteration. The ensemble Kalman updates approximate derivative-based nonlinear least-squares optimization schemes without requiring gradient evaluations. Our presentation emphasizes that iterative ensemble Kalman methods can be naturally classified in terms of the nonlinear least-squares objective they seek to minimize and the derivative-based optimization scheme they approximate through the ensemble. This perspective allows us to identify three subfamilies of iterative ensemble Kalman methods, creating unity into the growing literature on this subject. Our work also emphasizes two principles for the derivation and analysis of iterative ensemble Kalman methods: statistical linearization and continuum limits. Following these principles we introduce new iterative ensemble Kalman methods that show promising numerical performance in Bayesian inverse problems, data assimilation and machine learning tasks.

We consider the application of iterative ensemble Kalman methods to the problem of reconstructing an unknown from corrupt data related by

| (1.1) |

where represents measurement or model error and is a given map. A wide range of inverse problems, data assimilation and machine learning tasks can be cast into the framework (1.1). In these applications the unknown may represent, for instance, an input parameter of a differential equation, the current state of a time-evolving signal and a regressor, respectively. Ensemble Kalman methods were first introduced as filtering schemes for sequential data assimilation [21, 22, 42, 47, 50] to reduce the computational cost of the Kalman filter [34]. Their use for state and parameter estimation and inverse problems was further developed in [3, 40, 43, 55]. The idea of iterating these methods was considered in [15, 18]. Iterative ensemble Kalman methods are now popular in inverse problems and data assimilation; they have also shown some potential in machine learning applications [25, 29, 37].

Starting from an initial ensemble iterative ensemble Kalman methods use various ensemble-based empirical means and covariances to update

| (1.2) |

until a stopping criteria is satisfied; the unknown parameter is reconstructed by the mean of the final ensemble. The idea is analogous to classical Kalman methods and optimization schemes which, starting with a single initialization use evaluations of derivatives of to iteratively update until a stopping criteria is met. The initial ensemble is viewed as an input to the algorithm, obtained in a problem-dependent fashion. In Bayesian inverse problems and machine learning it may be obtained by sampling a prior, while in data assimilation the initial ensemble may be a given collection of particles that approximates the prediction distribution. In either case, it is useful to view the initial ensemble as a sample from a probability distribution.

There are two main computational benefits in updating an ensemble of candidate reconstructions rather than a single estimate. First, the ensemble update can be performed without evaluating derivatives of effectively approximating them using statistical linearization. This is important in applications where computing derivatives of is expensive, or where the map needs to be treated as a black-box. Second, the use of empirical rather than model covariances can significantly reduce the computational cost whenever the ensemble size is smaller than the dimension of the unknown parameter . Another key advantage of the ensemble approach is that, for problems that are not strongly nonlinear, the spread of the ensemble may contain meaningful information on the uncertainty in the reconstruction.

1.1 Overview: Three Subfamilies

This paper identifies, compares and further develops three subfamilies of iterative ensemble Kalman methods to implement the ensemble update (1.2). Each subfamily employs a different Kalman-type formulae, determined by a choice of objective to minimize and a derivative-based optimization scheme to approximate with the ensemble. All three approaches impose some form of regularization, either explicitly through the choice of the objective, or implicitly through the choice of the optimization scheme. Incorporating regularization is essential in parameter reconstruction problems encountered in applications, which are typically under-determined or ill-posed [41, 50].

The first subfamily considers a Tikhonov-Phillips objective associated with the parameter reconstruction problem (1.2), given by

| (1.3) |

where and are symmetric positive definite matrices that model, respectively, the data measurement precision and the level of regularization —incorporated explicitly through the choice of objective— and represents a background estimate of Here and throughout this paper we use the notation for symmetric positive definite and vector The ensemble is used to approximate a Gauss-Newton method applied to the Tikhonov-Phillips objective Algorithms in this subfamily were first introduced in geophysical data assimilation [1, 15, 18, 24, 39, 48] and were inspired by iterative, derivative-based, extended Kalman filters [5, 6, 33]. Extensions to more challenging problems with strongly nonlinear dynamics are considered in [49, 60]. In this paper we will use a new Iterative Ensemble Kalman Filter (IEKF) method as a prototypical example of an algorithm that belongs to this subfamily.

The second subfamily considers the data-misfit objective

| (1.4) |

When the parameter reconstruction problem is ill-posed, minimizing leads to unstable reconstructions. For this reason, iterative ensemble Kalman methods in this subfamily are complemented with a Levenberg-Marquardt optimization scheme that implicitly incorporates regularization. The ensemble is used to approximate a regularizing Levenberg-Marquardt optimization algorithm to minimize Algorithms in this subfamily were introduced in the applied mathematics literature [30, 31] building on ideas from classical inverse problems [26]. Recent theoretical work has focused on developing continuous-time and mean-field limits, as well as various convergence results [8, 9, 14, 27, 37, 51]. Methodological extensions based on Bayesian hierarchical techniques were introduced in [10, 11] and the incorporation of constraints has been investigated in [2, 12]. In this paper we will use the Ensemble Kalman Inversion (EKI) method [31] as a prototypical example of an algorithm that belongs to this subfamily.

| Objective | Optimization | Derivative Method | Ensemble Method | New Variant |

|---|---|---|---|---|

| GN | IExKF (2.1) | IEKF (3.1) | IEKF-SL (4.1) | |

| LM | LM-DM (2.2) | EKI (3.2) | EKI-SL (4.2) | |

| LM | LM-TP (2.3) | TEKI (3.3) | TEKI-SL (4.3) |

The third subfamily, which has emerged more recently, combines explicit regularization through the Tikhonov-Phillips objective and an implicitly regularizing optimization scheme [14, 13]. Precisely, a Levenberg-Marquardt scheme is approximated through the ensemble in order to minimize the Tikhonov-Phillips objective In this paper we will use the Tikhonov ensemble Kalman inversion method (TEKI) [13] as a prototypical example.

1.2 Statistical Linearization, Continuum Limits and New Variants

Each subfamily of iterative ensemble Kalman methods stems from a derivative-based optimization scheme. However, there is substantial freedom as to how to use the ensemble to approximate a derivative-based method. We will focus on randomized-maximum likelihood implementations [24, 35, 50], rather than square-root or ensemble adjustment approaches [3, 59, 28]. Two principles will guide our derivation and analysis of ensemble methods: the use of statistical linearization [60] and their connection with gradient descent methods through the study of continuum limits [51].

The idea behind statistical linearization is to approximate the gradient of using pairs in such a way that if is linear and the ensemble size is sufficiently large, the approximation is exact. As we shall see, this idea tacitly underlies the derivation of all the ensemble methods considered in this paper, and will be explicitly employed in our derivation of new variants. Statistical linearization has also been used within Unscented Kalman filters, see e.g. [60].

Differential equations have long been important in developing and understanding optimization schemes [44], and investigating the connections between differential equations and optimization is still an active area of research [54, 56, 57, 61]. In the context of iterative ensemble methods, continuum limit analyses arise from considering small length-steps and have been developed primarily in the context of EKI-type algorithms [8, 52]. While the derivative-based algorithms that motivate the ensemble methods result in an ODE continuum limit, the ensemble versions lead to a system of SDEs. Continuum limit analyses are useful in at least three ways. First, they unveil the gradient structure of the optimization schemes. Second, viewing optimization schemes as arising from discretizations of SDEs lends itself to design of algorithms that are easy to tune: the length-step is chosen to be small and the algorithms are run until statistical equilibrium is reached. Third, a simple linear-case analysis of the SDEs may be used to develop new algorithms that satisfy certain desirable properties. Our new iterative ensemble Kalman methods will be designed following these observations.

While our work advocates the study of continuum limits as a useful tool to design ensemble methods, continuum limits cannot fully capture the full richness and flexibility of discrete-based implementable algorithms, since different algorithms may result in the same SDE continuum limit. This insight suggests that it is not only the study of differential equations, but also their discretizations, that may contribute to the design of iterative ensemble Kalman algorithms.

1.3 Main Contributions and Outline

In addition to providing a unified perspective of the existing literature, this paper contains several original contributions. We highlight some of them in the following outline and refer to Table 1 for a summary of the algorithms considered in this paper.

-

•

In Section 2 we review three iterative derivative-based methods for nonlinear least-squares optimization. The ensemble-based algorithms studied in subsequent sections can be interpreted as ensemble-based approximations of the derivative-based methods described in this section. We also derive informally ODE continuum limits for each method, which unveils their gradient flow structure.

-

•

In Section 3 we describe the idea of statistical linearization. We review three subfamilies of iterative ensemble methods, each of which has an update formula analogous to one of the derivate-based methods in Section 2. We analyze the methods when is linear by formally deriving SDE continuum limits that unveil their gradient structure. A novelty in this section is the introduction of the IEKF method, which is similar to, but different from, the iterative ensemble method introduced in [60].

-

•

The material in Section 4 is novel to the best of our knowledge. We introduce new variants of the iterative ensemble Kalman methods discussed in Section 3 and formally derive their SDE continuum limit. We analyze the resulting SDEs when is linear. The proposed methods are designed to ensure that (i) no parameter tuning or careful stopping criteria are needed; and (ii) the ensemble covariance contains meaningful information of the uncertainty in the reconstruction in the linear case, avoiding the ensemble collapse of some existing methods.

-

•

In Section 5 we include an in-depth empirical comparison of the performance of the iterative ensemble Kalman methods discussed in Sections 3 and 4. We consider four problem settings motivated by applications in Bayesian inverse problems, data assimilation and machine learning. Our results illustrate the different behavior of some methods in small noise regimes and the benefits of avoiding ensemble collapse.

-

•

Section 6 concludes and suggests some open directions for further research.

2 Derivative-based Optimization for Nonlinear Least-Squares

In this section we review the Gauss-Newton and Levenberg-Marquardt methods, two derivative-based approaches for optimization of nonlinear least-squares objectives of the form

| (2.1) |

We derive closed formulae for the Gauss-Newton method applied to the Tikhonov-Phillips objective , as well as for the Levenberg-Marquardt method applied to the data-misfit objective and the Tikhonov-Phillips objective These formulae are the basis for the ensemble, derivative-free methods considered in the next section.

As we shall see, the search directions of Gauss-Newton and Levenberg-Marquardt methods are found by minimizing a linearization of the least-squares objective. It is thus instructive to consider first linear least-squares optimization before delving into the nonlinear setting. The following well-known result, that we will use extensively, characterizes the minimizer of the Tikhonov-Phillips objective in the case of linear .

Lemma 2.1.

It holds that

| (2.2) |

where does not depend on and

| (2.3) | ||||

| (2.4) |

Equivalently,

| (2.5) | ||||

| (2.6) |

where is the Kalman gain matrix given by

| (2.7) |

Proof.

Bayesian Interpretation

Lemma 2.1 has a natural statistical interpretation. Consider a statistical model defined by likelihood and prior Then Equation (2.2) shows that the posterior distribution is Gaussian, and Equations (2.3)-(2.4) characterize the posterior mean and precision (inverse covariance). We interpret Equation (2.5) as providing a closed formula for the posterior mode, known as the maximum a posteriori (MAP) estimator.

More generally, the generative model

| (2.9) | ||||

gives rise to a posterior distribution on with density proportional to Thus, minimization of corresponds to maximizing the posterior density under the model (2.9).

2.1 Gauss-Newton Optimization of Tikhonov-Phillips Objective

In this subsection we introduce two ways of writing the Gauss-Newton update applied to the Tikhonov-Phillips objective . We recall that the Gauss-Newton method applied to a general least-squares objective is a line-search method which, starting from an initialization sets

where is a search direction defined by

| (2.10) |

where is a length-step parameter whose choice will be discussed later. In order to apply the Gauss-Newton method to the Tikhonov-Phillips objective, we write in the standard nonlinear least-squares form (2.1). Note that

where

Therefore we have

| (2.11) |

The following result is a direct consequence of Lemma 2.1.

Lemma 2.2 ([6]).

The Gauss-Newton method applied to the Tikhonov-Phillips objective admits the characterizations:

| (2.12) |

and

| (2.13) |

where and

Proof.

The search direction of Gauss-Newton for the objective is given by

| (2.14) | ||||

| (2.15) | ||||

| (2.16) | ||||

| (2.17) |

Applying Lemma 2.1, using formulae (2.4) and (2.6), we deduce that

which establishes the characterization (2.12). The equivalence between (2.12) and (2.13) follows from the identity (2.7), which implies that and ∎

We refer to the Gauss-Newton method with constant length-step applied to as the Iterative Extended Kalman Filter (IExKF) algorithm. IExKF was developed in the control theory literature [33] without reference to the Gauss-Newton optimization method; the agreement between both methods was established in [6]. In order to compare IExKF with an ensemble-based method in Section 3, we summarize it here.

Input: Initialization , length-step .

For do:

-

1.

Set

-

2.

Set

(2.18) or, equivalently,

(2.19)

Output:

The next proposition shows that in the linear case, if is set to 1, IExKF finds the minimizer of the objective (1.3) in one iteration, and further iterations still agree with the minimizer.

Proposition 2.3.

Proof.

Choice of Length-Step

When implementing Gauss-Newton methods, it is standard practice to perform a line search in the direction of to adaptively choose the length-step For instance, a common strategy is to guarantee that the Wolfe conditions are satisfied [16, 45]. In this paper we will instead simply set for some fixed value of and we will follow a similar approach for all the derivative-based and ensemble-based algorithms we consider. There are two main motivations for doing so. First, it is appealing from a practical viewpoint to avoid performing a line search for ensemble-based algorithms. Second, when is small each derivative-based algorithms we consider can be interpreted as a discretization of an ODE system, while the ensemble-based methods arise as discretizations of SDE systems. These ODEs and SDEs allow us to compare and gain transparent understanding of the gradient structure of the algorithms. They will also allow us to propose some new variants of existing ensemble Kalman methods. We next describe the continuum limit structure of IExKF.

Continuum Limit

It is not hard to check that the term in brackets in the update (2.19)

is the negative gradient of , which reveals the following gradient flow structure in the limit of small length-step

| (2.20) | ||||

with preconditioner

We remark that in the linear case, where is the posterior covariance given by (2.6), which agrees with the inverse of the Hessian of the Tikhonov Phillips objective.

2.2 Levenberg-Marquardt Optimization of Data-Misfit Objective

In this subsection we introduce the Levenberg-Marquardt algorithm and describe its application to the data misfit objective . We recall that the Levenberg-Marquardt method applied to a general least-squares objective is a trust region method which, starting from an initialization sets

where

Similar to Gauss-Newton methods, the increment is defined as the minimizer of a linearized objective, but now the minimization is constrained to a ball in which we trust that the objective can be replaced by its linearization. The increment can also be written as

where

| (2.21) |

The parameter plays an analogous role to the length-step in Gauss-Newton methods. Note that the Levenberg-Marquardt increment is the unconstrained minimizer of a regularized objective. It is for this reason that we say that Levenberg-Marquardt provides an implicit regularization.

We next consider application of the Levenberg-Marquardt method to the data-misfit objective which we write in standard nonlinear least-squares form:

| (2.22) |

Lemma 2.4.

The Levenberg-Marquardt method applied to the data misfit objective admits the following characterization:

| (2.23) |

where

Proof.

Note that the increment is defined as the unconstrained minimizer of

| (2.24) | ||||

The result follows from Lemma 2.1. ∎

Similar to the previous section, we will focus on implementations with constant length-step , which leads to the following algorithm.

Input: Initialization , length-step .

For do:

-

1.

Set

-

2.

Set

(2.25)

Output:

When , the following linear-case result shows that ILM-DM reaches the minimizer of in one iteration. However, in contrast to IExKF, further iterations of ILM-DM will typically worsen the optimization of , and start moving towards minimizers of

Proposition 2.5.

Proof.

The proof is identical to that of Proposition 2.3, noting that in the linear case ∎

Example 2.6 (Convergence of ILM-DM with invertible observation map).

Suppose that is invertible and Then, writing

and noting that [4], it follows that , where is the unique solution to That is, the iterates of ILM-DM converge to the unique minimizer of the data misfit objective

Choice of Length-Step

When implementing Levenberg-Marquardt algorithms, the length-step parameter is often chosen adaptively, based on the objective. However, similar to Section 2.1, we fix to be a small value which leads to an ODE continuum limit.

Continuum Limit

We notice that, in the limit of small length-step , update (2.25) can be written as

The term is the negative gradient of , which reveals the following gradient flow structure

| (2.26) | ||||

where the preconditioner is interpreted as the prior covariance in the Bayesian framework.

2.3 Levenberg-Marquardt Optimization of Tikhonov-Phillips Objective

In this subsection we describe the application of the Levenberg-Marquardt algorithm to the Tikhonov-Phillips objective .

Lemma 2.7.

The Levenberg-Marquardt method applied to the data misfit objective admits the following characterization:

where

Proof.

Note that the increment is defined as the unconstrained minimizer of

| (2.27) | ||||

| (2.28) |

which has the same form as Equation (2.24) replacing with with and with ∎

Setting leads to the following algorithm.

Input: Initialization , length-step .

For do:

-

1.

Set

-

2.

Set

(2.29)

Output:

Proposition 2.8.

Suppose that is linear and . The output of Algorithm 3 satisfies

| (2.30) |

Proof.

The result is a corollary of Proposition 2.5. To see this, note that can be viewed as a data-misfit objective with data forward model and observation matrix Then, ILM-TP can be interpreted as applying ILM-DM to and the objective as its Tikhonov-Phillips regularization. ∎

Example 2.9 (Convergence of ILM-TP with linear invertible observation map.).

It is again instructive to consider the case where is invertible. Then, following the same reasoning as in Example 2.6 we deduce that the iterates of ILM-TP converge to the unique minimizer of

Continuum Limits

3 Ensemble-based Optimization for Nonlinear Least-Squares

In this section we review three subfamilies of iterative methods that update an ensemble employing Kalman-based formulae, where denotes the iteration index and is a fixed ensemble size. Each ensemble member is updated by optimizing a (random) objective defined using the current ensemble and/or the initial ensemble . The optimization is performed without evaluating derivatives by invoking a statistical linearization of a Gauss-Newton or Levenberg-Marquardt algorithm. In analogy with the previous section, the three subfamilies of ensemble methods we consider differ in the choice of the objective and in the choice of the optimization algorithm. Table 2 summarizes the derivative and ensemble methods considered in the previous and the current section.

| Objective | Optimization | Derivative Method | Ensemble Method |

|---|---|---|---|

| GN | IExKF | IEKF | |

| LM | ILM-DM | EKI | |

| LM | ILM-TP | TEKI |

Given an ensemble we use the following notation for ensemble empirical means

and empirical covariances

Two overarching themes that underlie the derivation and analysis of the ensemble methods studied in this section and the following one are the use of a statistical linearization to avoid evaluation of derivatives, and the study of continuum limits. We next introduce these two ideas.

Statistical Linearization

If is linear, we have

which motivates the approximation in the general nonlinear case

| (3.1) |

where here and in what follows denotes the pseudoinverse of Notice that (3.1) can be regarded as a linear least-squares fit of pairs normalized around their corresponding empirical means and . We remark that in order for the approximation in (3.1) to be accurate, the ensemble size should not be much smaller than the input dimension .

Continuum Limit

We will gain theoretical understanding by studying continuum limits. Specifically, each algorithm includes a length-step parameter and the evolution of the ensemble for small can be interpreted as a discretization of an SDE system. We denote by the sample paths of the underlying SDE. For each , we have , and we view as an approximation of for . Similarly as above, we define as the corresponding empirical covariances at time .

3.1 Ensemble Gauss-Newton Optimization of Tikhonov-Phillips Objective

3.1.1 Iterative Ensemble Kalman Filter

Given an ensemble , consider the following Gauss-Newton update for each :

| (3.2) |

where is the length-step, and is the minimizer of the following (linearized) Tikhonov-Phillips objective (cf. equation (2.17))

| (3.3) |

Notice that we adopt the statistical linearization (3.1) in the above formulation. Applying Lemma 2.1, the minimizer can be calculated as

| (3.4) |

or, in an equivalent form,

| (3.5) |

where

Combining (3.2) and (3.5) leads to the Iterative Ensemble Kalman Filter (IEKF) algorithm.

Input: Initial ensemble sampled independently from the prior, length-step .

For do:

-

1.

Set

-

2.

Draw and set

(3.6)

Output: Ensemble means

We highlight that IEKF is a natural ensemble-based version of the derivative-based IExKF Algorithm 1 with update (2.18). Algorithm 4 is a slight modification of the iterative ensemble Kalman algorithm proposed in [60]. The difference is that [60] sets rather than . Our modification guarantees that Algorithm 4 is well-balanced in the sense that if , and is linear, then the output of Algorithm 4 satisfies that, as

where is the minimizer of given in Equation (1.3). This is analogous to Proposition 2.3 for IExKF. A detailed explanation is included in Section 3.1.2 below.

Other statistical linearizations and approximations of the Gauss-Newton scheme are possible. We next give a high-level description of the method proposed in [48], one of the earliest applications of iterative ensemble Kalman methods for inversion in the petroleum engineering literature. Consider the alternative characterization of the Gauss-Newton update (3.4). However, instead of using a different preconditioner for each step, [48] uses a fixed preconditioner . Note that can be viewed as an approximation of

This leads to the following algorithm.

Input: Initial ensemble sampled independently from the prior, length-step .

-

1.

Set

For do:

-

1.

Set

-

2.

Draw and set

Output: Ensemble means

We note that IEKF-RZL is a natural ensemble-based version of the derivative-based IExKF Algorithm 1 with update (2.19). We have empirically observed in a wide range of numerical experiments that Algorithm 4 is more stable than Algorithm 5, and we now give a heuristic argument for the advantage of Algorithm 4 in small noise regimes.

-

•

The update formula in Algorithm 4 is motivated by the large approximation

which only requires that is a good approximation of .

-

•

The update formula in Algorithm 5 may be derived by invoking a large approximation of several terms. In particular, the error arising from the approximation

gets amplified when is small.

3.1.2 Analysis of IEKF

In the literature [48, 60], the length-step is sometimes set to be 1. Here we state a simple observation about Algorithm 4 when and is linear. We further assume that for all . Then , and the update (3.6) can be simplified as

where . If are sampled independently from the prior and we let , we have and, by the law of large numbers, for any ,

which is the posterior mean (and mode) in the linear setting. In other words, in the large ensemble limit, the ensemble mean recovers the posterior mean after one iteration. However, while the choice may be effective in low dimensional (nonlinear) inverse problems, we do not recommend it when the dimensionality is high, as might not be a good approximation of . Thus, we introduce Algorithms 4 and 5 with a choice of length-step resembling the derivative-based Gauss-Newton method discussed in Section 2.1. Further analysis of a new variant of Algorithm 4 will be conducted in a continuum limit setting in Section 4.

Remark 3.1.

Some remarks:

-

1.

Although this will not be the focus of our paper, the IEKF Algorithm 4 (together with the EKI Algorithm 6 and TEKI Algorithm 7 to be discussed later) enjoys the ‘initial subspace property’ studied in previous works [31, 51, 13] by which, for any and any initialization of

This can be shown easily for the IEKF Algorithm 4 by expanding the term in in the update formula (3.6).

-

2.

Since we assume a Gaussian prior on , a natural idea is to replace by and by in the update formula (3.6). We pursue this idea in Section 4.1, where we introduce a new variant of Algorithm 4 and analyze it in the continuum limit setting. While the initial subspace property breaks down, this new variant is numerically promising, as shown in Section 5.

3.2 Ensemble Levenberg-Marquardt Optimization of Data-Misfit Objective

3.2.1 Ensemble Kalman Inversion

Given an ensemble , consider the following Levenberg-Marquardt update for each :

| (3.7) |

where is the minimizer of the following regularized (linearized) data-misfit objective (cf. equation (2.24))

| (3.8) |

and will be regarded as a length-step. Notice that we adopt the statistical linearization (3.1) in the above formulation. Applying Lemma 2.1, we can calculate the minimizer explicitly:

| (3.9) |

or, in an equivalent form,

| (3.10) |

We combine (3.7) and (3.10), substitute , and make another level of approximation . This leads to the Ensemble Kalman Inversion (EKI) method [31].

Input: Initial ensemble , length-step .

For do:

-

1.

Set

-

2.

Draw and set

(3.11)

Output: Ensemble means

We note that EKI is a natural ensemble-based version of the derivative-based ILM-DM Algorithm 2. However, an important difference is that the Kalman gain in ILM-DM only uses the iterates to update and is kept fixed. In contrast, the ensemble is used in EKI to update and .

3.2.2 Analysis of EKI

In view of the definition of , we can rewrite the update (3.11) as a time-stepping scheme:

| (3.12) |

where are independent. Taking the limit , we interpret (3.12) as a discretization of the SDE system

| (3.13) |

If is linear, the SDE system (3.13) turns into

| (3.14) |

Proposition 3.2.

For the SDE system (3.13), assume is linear, and suppose that the initial ensemble is drawn independently from a continuous distribution with finite second moments. Then, in the large ensemble size limit (mean-field), the distribution of has mean and covariance , which satisfy

| (3.15) | ||||

| (3.16) |

Furthermore, the solution can be computed analytically:

| (3.17) | ||||

| (3.18) |

In particular, if has full column rank (i.e., , ), then, as ,

| (3.19) | ||||

| (3.20) |

If has full row rank (i.e., , ), then, as

| (3.21) | ||||

| (3.22) |

Proof.

The proof technique is similar to [23]. We will use that

where First, note that (3.15) follows directly from (3.14) using that in the mean field limit can be replaced by . To obtain the evolution of , note that

where the last term accounts for the Itô correction. This simplifies as

which gives Equation (3.16). To derive exact formulas for and , we notice that

and

Remark 3.3.

Some remarks:

-

1.

In fact, (3.22) always holds, without rank constraints on . The proof follows the similar idea as in [51]. This can be viewed from Equation (3.24), where we can perform eigenvalue decomposition , and show that , where are diagonal matrices and as . The statement that (or if has full column rank) is referred to as ‘ensemble collapse’. This can be interpreted as ‘the images of all the particles under collapse to a single point as time evolves’. Our numerical results in Section 5 show that the ensemble collapse phenomenon of Algorithm 6 is also observed in a variety of nonlinear examples and outside the mean-field limit, with moderate ensemble size . These empirical results justify the practical significance of the linear continuum analysis in Proposition 3.2.

-

2.

Under the same setting of Proposition 3.2, if we further require that the initial ensemble is drawn independently from the prior distribution , then in the mean-field limit we have and , leading to

which are the true posterior mean and covariance, respectively. However, we have observed in a variety of numerical examples (not reported here) that in nonlinear problems it is often necessary to run EKI up to times larger than to obtain adequate approximation of the posterior mean and covariance. Providing a suitable stopping criteria for EKI is a topic of current research [52, 32] beyond the scope of our work.

3.3 Ensemble Levenberg-Marquardt Optimization of Tikhonov-Phillips Objective

3.3.1 Tikhonov Ensemble Kalman Inversion

Recall that we define

Then, given an ensemble , we can define

and empirical covariances

Furthermore, we define the statistical linearization :

| (3.25) |

It is not hard to check that

with defined in (3.1).

Given an ensemble , consider the following Levenberg-Marquardt update for each :

where is the minimizer of the following regularized (linearized) Tikhonov-Phillips objective (cf. equation (2.28))

| (3.26) |

and will be regarded as a length-step. We can calculate the minimizer explicitly, applying Lemma 2.1:

| (3.27) |

or, in an equivalent form,

| (3.28) |

Similar to EKI, in Equation (3.28) we substitute , and make the approximation . This leads to Tikhonov Ensemble Kalman Inversion (TEKI), described in Algorithm 7.

Input: Initial ensemble , length-step .

For do:

-

1.

Set

-

2.

Draw and set

(3.29)

Output: Ensemble means

We note that TEKI is a natural ensemble-based version of the derivative-based ILM-TP Algorithm 3. However, the Kalman gain in ILM-TP keeps fixed, while in TEKI and are updated using the ensemble.

3.3.2 Analysis of TEKI

When is small, we can rewrite the update (3.29) as a time-stepping scheme:

| (3.30) |

where are independent. Taking the limit , we can interpret (3.30) as a discretization of the SDE system

| (3.31) |

If is linear, is also linear and the SDE system (3.31) can be rewritten as

| (3.32) |

Proposition 3.4.

For the SDE system (3.31), assume is linear, and that the initial ensemble is made of independent samples from a distribution with finite second moments. Then, in the large ensemble limit (mean-field), the distribution of has mean and covariance , which satisfy:

| (3.33) | ||||

| (3.34) |

Furthermore, the solution can be computed analytically:

| (3.35) | ||||

| (3.36) |

In particular, as ,

| (3.37) | ||||

| (3.38) | ||||

Notice that converges to the true posterior mean.

4 Ensemble Kalman methods: New Variants

In the previous section we discussed three popular subfamilies of iterative ensemble Kalman methods, analogous to the derivative-based algorithms in Section 2. The aim of this section is to introduce two new iterative ensemble Kalman methods which are inspired by the SDE continuum limit structure of the algorithms in Section 3. The two new methods that we introduce have in common that they rely on statistical linearization, and that the long-time limit of the ensemble covariance recovers the posterior covariance in a linear setting. Subsection 4.1 contains a new variant of IEKF which in addition to recovering the posterior covariance, it also recovers the posterior mean in the long-time limit. Subsection 4.2 introduces a new variant of the EKI method. Finally, Subsection 4.3 highlights the gradient structure of the algorithms in this and the previous section, shows that our new variant of IEKF can also be interpreted as a modified TEKI algorithm, and sets our proposed new methods into the broader literature.

4.1 Iterative Ensemble Kalman Filter with Statistical Linearization

In some of the literature on iterative ensemble Kalman methods [48, 60], the length-step ( in Algorithms 4 and 5) is set to be 1. Although this choice of length-step allows to recover the true posterior mean in the linear case after one iteration (see Section 3.1.2), it leads to numerical instability in complex nonlinear models. Alternative ways to set include performing a line-search that satisfies Wolfe’s condition, or using other ad-hoc line-search criteria [24]. These methods allow to be adaptively chosen throughout the iterations, but they introduce other hyperparameters that need to be selected manually.

Our idea here is to slightly modify Algorithm 4 so that in the linear case its continuum limit has the true posterior as its invariant distribution. In this way we can simply choose a small enough and run the algorithm until the iterates reach a statistical equilibrium, avoiding the need to specify suitable hyperparameters and stopping criteria. Our empirical results show that this approach also performs well in the nonlinear case.

In the update Equation (3.6), we replace each of the by a perturbation of the prior mean , and we replace by the prior covariance matrix in the definition of . Details can be found below in our modified algorithm, which we call IEKF-SL.

Input: Initial ensemble , step size .

For do:

-

1.

Set .

-

2.

Draw , and set

(4.1)

Output: Ensemble means

It is natural to regard the update (4.1) as a time-stepping scheme. We rewrite it in an alternative form, in analogy to (3.4):

| (4.2) |

where

We interpret Equation (4.2) as a discretization of the SDE system

| (4.3) |

where

The diffusion term can be derived using the fact that

If is linear and the empirical covariance has full rank for all , then , , and the SDE system can be decoupled and further simplified:

| (4.4) |

Proposition 4.1.

For the SDE system (4.3), assume is linear and holds. Assume that the initial ensemble is made of independent samples from a continuous distribution with finite second moments. Then, for , the mean and covariance of satisfy

| (4.5) | ||||

| (4.6) |

Furthermore, as ,

| (4.7) | ||||

| (4.8) | ||||

In other words, and converge to the true posterior mean and covariance, respectively.

Proof.

It is clear that, for fixed are independent and identically distributed. The evolution of the mean follows directly from (4.4), and the evolution of the covariance follows from (4.4) by applying Itô’s formula. It is then straightforward to derive (4.7) and (4.8) from (4.5) and (4.6), respectively. ∎

4.2 Ensemble Kalman Inversion with Statistical Linearization

Recall that in the formulation of EKI, we define a regularized (linearized) data-misfit objective (3.8), where we have a regularizer on with respect to the norm . However, in view of Proposition 3.2, under a linear forward model , the particles will ‘collapse’, meaning that the empirical covariance of will vanish in the large limit. One possible solution to this is ‘covariance inflation’, namely to inject certain amount of random noise after each ensemble update. However, this requires ad-hoc tuning of additional hyperparameters. An alternative approach to avoid the ensemble collapse is to modify the regularization term in the Levenberg-Marquardt formulation (3.8). The rough idea is to consider another regularizer on in the data space, as we describe in what follows.

We define a new regularized data-misfit objective, slightly different from (3.8):

| (4.9) |

where

| (4.10) |

and is defined in (3.1). The regularization term can be decomposed as

The first term can be regarded as a regularization on with respect to the prior covariance . The second term can be regarded as a regularization on with respect to the noise covariance . Applying Lemma 2.1, we can calculate the minimizer of (4.9):

| (4.11) |

where the second equality follows from the definition of , and the third equality follows from the matrix identity (2.7). This leads to Algorithm 9.

Input: Initial ensemble , step size .

For do:

-

1.

Set .

-

2.

Draw and set

(4.12)

Output: Ensemble means

For small , we interpret the update (4.12) as a discretization of the coupled SDE system

| (4.13) |

where

For our next result we will work under the assumption that is constant, which in particular requires that the empirical covariance has full rank for all Importantly, under this assumption and the SDE system is decoupled:

| (4.14) |

Proposition 4.2.

For the SDE system (4.13), assume is linear and holds. Assume that the initial ensemble is made of independent samples from a continuous distribution with finite second moments. Then the mean and covariance of satisfy

| (4.15) | ||||

| (4.16) |

In particular, if has full column rank (i.e., , ), then, as ,

| (4.17) | ||||

| (4.18) |

If has full row rank (i.e., , ), then, as

| (4.19) | ||||

| (4.20) |

Proof.

Note that here, in contrast to the setting of Proposition 3.2, the distribution of does not depend on the ensemble size and we have simply and with The evolution of follows directly from (4.14). To obtain the evolution of , we use a similar technique as in the proof of Proposition 3.2. Applying Itô’s formula,

which recovers (4.16).

4.3 Gradient Structure and Discussion

LM Algorithms in the Continuum Limit

Levenberg-Marquardt algorithms have a natural gradient structure in the continuum limit. This was shown in Equation (2.26), where the preconditioner corresponds to the regularizer that is used in the Levenberg-Marquardt algorithm (2.21). Ensemble-based Levenberg-Marquardt algorithms also possess a gradient structure. To see this, we consider an update , where is the unconstrained minimizer of the same objective as (4.9)

except that we allow any positive (semi)definite matrix to act as a ‘regularizer’. The resulting continuum limit is given by the SDE system

| (4.22) |

where are continuous time analogs of . Notice that is exactly , so that we may rewrite (4.22) as

| (4.23) |

which is a perturbed gradient descent with preconditioner . We recall that in EKI and in EKI-SL. Other choices of are possible and will be studied in future work.

Gauss-Newton Algorithms in the Continuum Limit

Gauss-Newton algorithms also have a natural gradient structure in the continuum limit. As we shown in Equation (2.20), the Gauss-Newton method applied to a Tikhonov-Phillips objective can be regarded as a gradient flow with a preconditioner that is the inverse Hessian matrix of the objective. Ensemble-based Gauss-Newton methods also possess a similar gradient structure. Recall that in Equation (4.3) we formulate the continuum limit of IEKF-SL:

| (4.24) |

Notice that the drift term is exactly , so we may rewrite (4.24) as

| (4.25) |

which is a perturbed gradient descent with preconditioner , the inverse Hessian of .

A Unified View of Levenberg-Marquardt and Gauss-Newton Algorithms in the Continuum Limit

As a conclusion of above discussion, in the continuum limit Levenberg-Marquardt algorithms (e.g., EKI, EKI-SL) are (perturbed) gradient descent methods, with a preconditioner determined by the regularizer used in the Levenberg-Marquardt update step. Gauss-Newton algorithms (e.g., IEKF, IEKF-SL) are also (perturbed) gradient descent, with a preconditioner determined by the inverse Hessian of the objective.

Interestingly, there are cases when the two types of algorithms coincide, even with the same amount of perturbation in the gradient descent step. A way to see this is to set the Levenberg-Marquardt regularizer to be the inverse Hessian of the objective. In equation (4.23), if we consider the Tikhonov-Phillips objective (i.e., replace by , by , by ), and set , then (4.23) and (4.25) will coincide, leading to the same SDE system. This is the reason why we do not introduce a ‘TEKI-SL’ algorithm, as it is identical to IEKF-SL.

Relationship to Ensemble Kalman Sampler (EKS)

Another algorithm of interest is the Ensemble Kalman Sampler (EKS) [23, 17]. Although not discussed in this work, the EKS update is similar to (4.24). In fact, if we replace by the ensemble covariance , and use the fact that by definition, we immediately recover the evolution equation of EKS:

Then an Euler-Maruyama discretization is performed to compute the update formula in discrete form. However, as we find out in several numerical experiments, the length-step needs to be chosen carefully. If the noise has a small scale, EKS often blows up in the first few iterations, while other algorithms with the same length-step do not. Also, if we consider a linear forward model , EKS still requires a mean field assumption in order for it to converge to the posterior distribution, while IEKF-SL approximately needs particles where is the input dimension.

5 Numerical Examples

In this section we provide a numerical comparison of the iterative ensemble Kalman methods introduced in Sections 3 and 4. Our experiments highlight the variety of applications that have motivated the development of these methods, and illustrate their use in a wide range of settings.

5.1 Elliptic Boundary Value Problem

In this subsection we consider a simple nonlinear Bayesian inverse problem originally presented in [20], where the unknown parameter is two dimensional and the forward map admits a closed formula. These features facilitate both the visualization and the interpretation of the solution.

5.1.1 Problem Setup

Consider the elliptic boundary value problem

| (5.1) | ||||

It can be checked that (5.1) has an explicit solution

| (5.2) |

We seek to recover from noisy observation of at two points and . Precisely, we define and consider the inverse problem of recovering from data of the form

| (5.3) |

where . We set a Gaussian prior on the unknown parameter . In our numerical experiments we let the true parameter be and use it to generate synthetic data with noise level

5.1.2 Implementation Details and Numerical Results

We set the ensemble size to be . The initial ensemble is drawn independently from the prior. The length-step is fixed to be 0.1 for all methods. We run each algorithm up to time , which corresponds to 100 iterations.

We plot the level curves of the posterior density of in Figure 1. The forward model is approximately linear, as can be seen from the contour plots in Figure 1 or directly from Equation (5.2). Hence it can be used to validate the claims we made in previous sections.

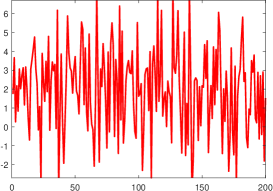

Figure 2 compares the time evolution of the empirical covariance of the different methods. The IEKF-RZL algorithm consistently blows up in the first few iterations due to the small noise which, as discussed in Section 3.1, is an important drawback. Due to the poor performance of IEKF-RZL in small noise regimes, we will not include this method in subsequent comparisons. The EKI and TEKI plots show the ‘ensemble collapse’ phenomenon discussed in Sections 3.2 and 3.3. Note that the size of the empirical covariances of EKI and TEKI decreases monotonically, and by the 100-th iteration their size is one order of magnitude smaller than that of the new variants IEKF-SL and EKI-SL. The ensemble collapse of EKI and TEKI can also be seen in Figure 1, where we plot all the ensemble members after 100 iterations. In contrast, Figure 2 shows that the size of the empirical covariances of the new variants IEKF-SL and EKI-SL stabilizes after around 40 iterations, and Figure 1 suggests that the spread of the ensemble matches that of the posterior. These results are in agreement with the theoretical results derived in Sections 4.2 and 4.1 in a linear setting. Although we have not discussed the IEKF method when is small, its ensemble covariance does not collapse in the linear setting, as can be seen in Figure 2.

In Figures 3 and 4 we show the performance of the different methods along the full iteration sequence. Here and in subsequent numerical examples we use two performance assessments. First, the relative error, defined as where is the ensemble’s empirical mean, which evaluates how well the ensemble mean approximates the truth. Second, we assess how each ensemble method performs in terms of its own optimization objective. Precisely, we report the data-misfit objective for EKI and EKI-SL, and the Tikhonov-Phillips objective for IEKF, TEKI and IEKF-SL. We run 10 trials for each algorithm, using different ensemble initializations (drawn from the same prior), and generate the error bars accordingly. Since this is a simple toy problem, all methods perform well. However, we note that the first iterations of EKI and TEKI reduce the objective faster than other algorithms.

5.2 High-Dimensional Linear Inverse Problem

In this subsection we consider a linear Bayesian inverse problem from [31]. This example illustrates the use of iterative ensemble Kalman methods in settings where both the size of the ensemble and the dimension of the data are significantly smaller than the dimension of the unknown parameter.

5.2.1 Problem setup

Consider the one dimensional elliptic equation

| (5.4) | ||||

We seek to recover from noisy observation of at equispaced points We assume that the data is generated from the model

| (5.5) |

where are independent. By defining and letting be the observation operator defined by , we can rewrite (5.5) as

where . The forward problem (5.4) is solved on a uniform mesh with meshwidth by a finite element method with continuous, piecewise linear basis functions. We assume that the unknown parameter has a Gaussian prior distribution, with covariance operator with homogeneous Dirichlet boundary conditions. This prior can be interpreted as the law of a Brownian bridge between and . For computational purposes we view as a random vector in and the linear map is represented by a matrix The true parameter is sampled from this prior (cf. Figure 5), and is used to generate synthetic observation data with noise level .

5.2.2 Implementation Details and Numerical Results

We set the ensemble size to be . The initial ensemble is drawn independently from the prior. The length-step is fixed to be 0.05 for all methods. We run each algorithm up to time , which corresponds to 600 iterations.

We note that the linear inverse problem considered here has input dimension , which is much larger than the ensemble size . Combining the results from Figure 5, 6 and 7, EKI and EKI-SL clearly overfit the data. In contrast, TEKI over-regularizes the data, and we easily notice the ensemble collapse. The IEKF and IEKF-SL lie in between, and approximate the truth slightly better.

5.3 Lorenz-96 Model

In this subsection we investigate the use of iterative ensemble Kalman methods to recover the initial condition of the Lorenz-96 system from partial and noisy observation of the solution at two positive times. The experimental framework is taken from [14] and is illustrative of the use of iterative ensemble Kalman methods in numerical weather forecasting.

5.3.1 Problem Setup

Consider the dynamical system

| (5.6) |

Here denotes the coordinate of the current state of the system and is a constant forcing with default value of . The dimension is often chosen as 40. We want to recover the initial condition

of (5.6) from noisy partial measurements at discrete times :

| (5.7) |

where is the subset of observed coordinates, and are assumed to be independent. In our numerical experiments we set , , , . We set the prior on to be a Gaussian . The true parameter is shown in Figure 8, and is used to generate the observation data according to (5.7) with noise level

5.3.2 Implementation Details and Numerical Results

We set the ensemble size to be . The initial ensemble is drawn independently from the prior. The length-step is fixed to be 0.05 for all methods. We run each algorithm up to time , which corresponds to 600 iterations.

This is a moderately high dimensional nonlinear problem, where the forward model involves a black-box solver of differential equations. Figure 8 clearly indicates an ensemble collapse for the EKI and TEKI methods. Although they are still capable of finding a descending direction of the loss direction (see Figures 9 and 10), iterates get stuck in a local minimum. In contrast, we can see the advantage of IEKF, EKI-SL and TEKI-SL in this setting: intuitively, a broader spread of the ensemble allows these methods to ‘explore’ different regions in the input space, and thereby to find a solution with potentially lower loss.

We notice that in practice ‘covariance inflation’ is often applied in EKI and TEKI algorithms, by manually injecting small random noise after each ensemble update. In general, this technique will ‘force’ a non-zero empirical covariance, prevent ensemble collapse and boost the performence of EKI and TEKI. However, the amount of noise injected is an additional hyperparameter that should be chosen manually, which should depend on the input dimension, observation noise, etc. Here we give a fair comparison of the different methods introduced, under the same time-stepping setting, with as few hyperparameters as possible.

5.4 High-Dimensional Nonlinear Regression

In this subsection we consider a nonlinear regression problem with a highly oscillatory forward map introduced in [19], where the authors investigate the use of iterative ensemble Kalman methods to train neural networks without back propagation.

5.4.1 Problem Setup

We consider a nonlinear regression problem

where , and is defined by

| (5.8) |

where are random matrices with independent entries. We set , and . We want to recover from . We assume that the unknown has a Gaussian prior . The true parameter is set to be , where is the all-one vector. Observation data is generated as .

By definition, is highly oscillatory, and we may expect that the loss function, either Tikhonov-Phillips or data misfit objective, will have many local minima. Figure 11 visualizes the Tihonov-Phillips objective function with respect to two randomly choosen coordinates while other coordinates are fixed to value of 2. The data misfit objective exhibits a similar behavior.

5.4.2 Implementation Details and Numerical Results

We set the ensemble size to be . The initial ensemble is drawn independently from the prior. The length-step is fixed to be 0.05 for all methods. We set for the observation noise. We run each algorithm up to time , which corresponds to 600 iterations.

We notice that this is a difficult problem, due to its high dimensionality and nonlinearity. All methods except for IEKF-SL are not capable of reconstructing the truth. In particular, from Figures 12, 13 and 14, both EKI and TEKI do a poor job with relative error larger than 1, while IEKF and EKI-SL have slightly better performance. It is worth noticing from Figure 14 that IEKF-SL has a larger Tikhonov-Phillips objective function, with a much lower relative error. This may suggest that other types of regularization objectives can be used, other that the Tikhonov-Phillips objective, to solve this problem.

6 Conclusions and Open Directions

In this paper we have provided a unified perspective of iterative ensemble Kalman methods and introduced some new variants. We hope that our work will stimulate further research in this active area, and we conclude with a list of some open directions.

-

•

Continuum limits have been formally derived in our work. The rigorous derivation and analysis of SDE continuum limits, possibly in nonlinear settings, deserves further research.

-

•

We have advocated the analysis of continuum limits for the understanding and design of iterative ensemble Kalman methods, but it would also be desirable to develop a framework for the analysis of discrete, implementable algorithms, and to further understand the potential benefits of various discretizations of a given continuum SDE system.

-

•

From a theoretical viewpoint, it would be desirable to further analyze the convergence and stability of iterative ensemble methods with small or moderate ensemble size. While mean-field limits can be revealing, in practice the ensemble size is often not sufficiently large to justify the mean-field assumption. It would also be important to further analyze these questions in mildly nonlinear settings.

-

•

An important methodological question, still largely unresolved, is the development of adaptive and easily implementable line search schemes and stopping criteria for ensemble-based optimization schemes. An important work in this direction is [32].

- •

-

•

Ensemble methods are cheap in comparison to derivative-based optimization methods and Markov chain Monte Carlo sampling algorithms. It is thus natural to use ensemble methods to build ensemble preconditioners or surrogate models to be used within more expensive but accurate computational approaches.

-

•

Finally, a broad area for further work is the application of iterative ensemble Kalman filters to new problems in science and engineering.

Acknowledgments

NKC is supported by KAUST baseline funding. DSA is thankful for the support of NSF and NGA through the grant DMS-2027056. The work of DSA was also partially supported by the NSF Grant DMS-1912818/1912802.

References

- [1] S. I. Aanonsen, G. Nævdal, D. S. Oliver, A. C. Reynolds, B. Vallès and others. The ensemble Kalman filter in reservoir engineering–a review. Spe Journal, 14, 03, 393-412, 2009.

- Albers et al. [2019] D. J. Albers, P.-A. Blancquart, M. E. Levine, E. E. Seylabi and A. M. Stuart. Ensemble Kalman methods with constraints. Inverse Problems, 35(9), 2019.

- Anderson et al. [2001] J. L. Anderson. An ensemble adjustment Kalman filter for data assimilation. Monthly Weather Review, 129(12), 2884-2903, 2001.

- [4] B. D. O. Anderson and J. B. Moore. Optimal Filtering. Information and System Sciences Series, 1979.

- [5] B. M. Bell. The iterated Kalman smoother as a Gauss–Newton method. SIAM J. Optim., 4, 626–636, 1994.

- Bell and Cathey [1993] B. M. Bell and F. W. Cathey. The iterated Kalman filter update as a Gauss-Newton method. IEEE Transactions on Automatic Control, 38(2):294–297, 1993.

- [7] D. P. Bertsakas. Incremental least squares method and the extended Kalman filter. SIAM J. Optim 6, 807–822, 1996.

- Blömker et al. [2019] D. Blömker, C. Schillings, P. Wacker and S. Weissmann. Well posedness and convergence analysis of the ensemble Kalman inversion. Inverse Problems, 2019.

- Bloömker et al. [2018] D. Blömker, C. Schillings and P. Wacker. A strongly convergent numerical scheme from ensemble Kalman inversion. SIAM Journal on Numerical Analysis, 56(4):2537–2562, 2018.

- Chada [2018] N. K. Chada. Analysis of hierarchical ensemble Kalman inversion. arXiv preprint arXiv:1801.00847, 2018.

- [11] N. K. Chada, M. A. Iglesias, L. Roininen and A. M. Stuart. Parameterizations for ensemble Kalman inversion. Inverse Problems, 34(5), 2018.

- Chada et al. [2019a] N. K. Chada, C. Schillings and S. Weissmann. On the incorporation of box-constraints for ensemble Kalman inversion. arXiv preprint arXiv:1908.00696, 2019a.

- Chada et al. [2019b] N. K. Chada, A. M. Stuart and X. T. Tong. Tikhonov regularization within ensemble Kalman inversion. SIAM J. Numer. Anal., 58(2), 1263–1294, 2020b.

- Chada et al. [2019b] N. K. Chada and X. T. Tong. Convergence acceleration of ensemble Kalman inversion in nonlinear setting. arXiv preprint arXiv:1911.02424, 2019b.

- Chen et al. [2012b] Y. Chen and D. S. Oliver. Ensemble randomized maximum likelihood method as an iterative ensemble smoother. Mathematical Geosciences, 2012b.

- Dennis et al. [2019b] J. E. Dennis and R. B. Schnabel. Numerical Methods for Unconstrained Optimization and Nonlinear Equations. SIAM, 1996b.

- Ding and Li [2019] Z. Ding and Q. Li. Ensemble Kalman sampling: mean-field limit and convergence analysis. arXiv preprint arXiv:1910.12923, 2019.

- [18] A. Emerick and A. C. Reynolds. Ensemble smoother with multiple data assimilation, Computers and Geosciences, 55, 3–15, 2013.

- [19] E. Haber, F. Lucka and L. Ruthotto. Never look back-a modified enkf method and its application to the training of neural networks without back propagation. arXiv preprint arXiv:1805.08034, 2018.

- [20] O. G. Ernst, B. Sprungk and H. J. Starkloff. Analysis of the ensemble and polynomial chaos Kalman filters in Bayesian inverse problems, SIAM/ASA Journal on Uncertainty Quantification, 3(1), 823–851, 2015.

- [21] G. Evensen. Data Assimilation: The Ensemble Kalman Filter. Springer, 2009.

- [22] G. Evensen and P. J. Van Leeuwen. Assimilation of geosataltimeter data for the agulhas current using the ensemble Kalman filter with a quasi-geostrophic model, Monthly Weather Review, 128, 85–86, 1996.

- Garbuno-Inigo et al. [2019] A. Garbuno-Inigo, F. Hoffmann, W. Li and A. M. Stuart. Interacting Langevin diffusions: Gradient structure and ensemble Kalman sampler. arXiv preprint arXiv:1903.08866, 2019.

- Gu and Oliver [2007] Y. Gu and D. S. Oliver. An iterative ensemble Kalman filter for multiphase fluid flow data assimilation. Spe Journal, 12(04):438–446, 2007.

- Haber et al. [2018] E. Haber, F. Lucka and L. Ruthotto. Never look back-A modified EnKF method and its application to the training of neural networks without back propagation. arXiv preprint arXiv:1805.08034, 2018.

- [26] M. Hanke. A regularizing Levenberg-Marquardt scheme, with applications to inverse groundwater filtration problems. Inverse Problems, 13, 79–95, 1997.

- Herty and Visconti [2018] M. Herty and G. Visconti. Kinetic methods for inverse problems. arXiv preprint arXiv:1811.09387, 2018.

- Grooms [2020] I. Grooms A note on the formulation of the Ensemble Adjustment Kalman Filter. arXiv preprint arXiv:2006.02941, 2020.

- Guth et al. [2020] P. A. Guth, C. Schillings and S. Weissmann. Ensemble Kalman filter for neural network based one-shot inversion. arXiv preprint arXiv:2005.02039, 2020.

- [30] M. A. Iglesias. A regularising iterative ensemble Kalman method for PDE-constrained inverse problems. Inverse Problems, 32, 2016.

- [31] M. A. Iglesias, K. J. H. Law and A. M. Stuart. Ensemble Kalman methods for inverse problems. Inverse Problems, 29, 2013.

- [32] M. A. Iglesias and Y. Yang. Adaptive regularisation for ensemble Kalman inversion with applications to non-destructive testing and imaging. arXiv preprint arXiv:2006.14980, 2020.

- Jazwinski [2007] A. H. Jazwinski. Stochastic Processes and Filtering Theory. Courier Corporation, 2007.

- [34] R. E. Kalman. A new approach to linear filtering and prediction problems, Trans ASME (J. Basic Engineering), 82, 35–45, 1960.

- Kelly and Stuart [2014] D. Kelly, K. J. H. Law and A. M. Stuart. Well-posedness and accuracy of the ensemble Kalman filter in discrete and continuous time. Nonlinearity, 27(10), 2014.

- [36] B. Katltenbacher, A. Neubauer and O. Scherzer. Iterative Regularization Methods for Nonlinear Ill-Posed Problems. Radon Series on Computational and Applied Mathematics, de Gruyter, Berlin, 1st edition, 2008.

- Kovachki and Stuart [2019] N. B. Kovachki and A. M. Stuart. Ensemble Kalman inversion: A derivative-free technique for machine learning tasks. Inverse Problems, 2019.

- [38] Y. Lee. regularization for ensemble Kalman inversion. arXiv preprint arXiv:2009.03470, 2020

- Li and Reynolds [2007] G. Li and A. C. Reynolds. An iterative ensemble Kalman filter for data assimilation. In SPE annual technical conference and exhibition. Society of Petroleum Engineers, 2007.

- Lorentzen et al. [2001] R. J. Lorentzen, K.-K. Fjelde, J. Froyen, A. C. Lage, G. Noevdal and E. H Vefring. Underbalanced drilling: Real time data interpretation and decision support. In SPE/IADC drilling conference. Society of Petroleum Engineers., 2001.

- [41] S. Lu and S. V. Pereverzev. Multi-parameter regularization and its numerical realization. Numerische Mathematik 118(1), 1–31, 2011.

- [42] A. J. Madja and J. Harlim. Filtering Complex Turbulent Systems. Cambridge University Press, 2012.

- Naevdal et al. [2002] G. Naevdal, T. Mannseth and E. H Vefring. Instrumented wells and near-well reservoir monitoring through ensemble Kalman filter. In Proceedings of 8th European Conference on the Mathematics of Oil Recovery, Freiberg, Germany, 2001.

- [44] A. S. Nemirovsky. Problem Complexity and Method Efficiency in Optimization. Wiley-Interscience series in discrete mathematics, 1983.

- Nocedal and Wright [2006] J. Nocedal and S. Wright. Numerical Optimization. Springer Science and Business Media, 2006.

- [46] D. Oliver, A. C. Reynolds and N. Liu. Inverse Theory for Petroleum Reservoir Characterization and History Matching. Cambridge University Press, 1st edn, 2008.

- [47] S. Reich and C. J. Cotter. Ensemble filter techniques for intermittent data assimilation. l Large Scale Inverse Problems. Computational Methods and Applications in the Earth Sciences, 13, 91–134, 2013.

- Reynolds et al. [2006] A. C. Reynolds, M. Zafari and G. Li. Iterative forms of the ensemble Kalman filter. In ECMOR X-10th European conference on the mathematics of oil recovery, pages cp–23. European Association of Geoscientists & Engineers, 2006.

- [49] P. Sakov, D. S. Oliver and L. Bertino. An Iterative EnKF for strongly nonlinear systems. Monthly Weather Review, 140, 1988–2004, 2012.

- Sanz-Alonso et al. [2019] D. Sanz-Alonso, A. M. Stuart and A. Taeb. Inverse Problems and Data Assimilation. arXiv preprint arXiv:1810.06191, 2019.

- [51] C. Schillings and A. M. Stuart. Analysis of the ensemble Kalman filter for inverse problems. SIAM J. Numer. Anal., 55(3), 1264–1290, 2017.

- [52] C. Schillings and A. M. Stuart. Convergence analysis of ensemble Kalman inversion: the linear, noisy case. Applicable Analysis, 97(1), 107–123, 2018.

- [53] T. Schneider, A. M. Stuart and J-L. Wu. Imposing sparsity within ensemble Kalman inversion. arXiv preprint arXiv:2007.06175, 2020.

- [54] B. Shi, S. Du, M. I. Jordan and W. J. Su. Understanding the acceleration phenomenon via high-resolution differential equations. arXiv preprint arXiv:1810.08907, 2018

- [55] J. A. Skjervheim and G. Evensen. An Ensemble Smoother for Assisted History Matching. SPE Reservoir Simulation Symposium, 21–23 February, The Woodlands, Texas, 2001.

- [56] W. Su, S. Boyd and E. Candes. A differential equation for modeling Nesterov’s accelerated gradient method: Theory and insights. Advances in neural information processing systems., 2510-2518, 2014.

- [57] W. Su, S. Boyd and E. Candes. A differential equation for modeling Nesterov’s accelerated gradient method: Theory and insights. The Journal of Machine Learning Research., 17(1),5312-5354, 2016.

- Tarantola [2015] A. Tarantola. Inverse Problem Theory and Methods for Model Parameter Estimation. SIAM, 2015.

- [59] M. K. Tippett, J. L. Anderson, C. H. Bishop and J. S. Whitaker. Ensemble square root filters. Monthly Weather Review, 131(7), 1485-90, 2003.

- Ungarala [2012] S. Ungarala. On the iterated forms of Kalman filters using statistical linearization. Journal of Process Control, 22(5):935–943, 2012.

- [61] A. Wibisono, A. C. Wilson and M. I. Jordan. A variational perspective on accelerated methods in optimization. Proceedings of the National Academy of Sciences, 113(47):E7351–E7358, 2016.