by-sa

”It Brought the Model to Life”: Exploring the Embodiment of Multimodal I3Ms for People who are Blind or have Low Vision

Abstract.

3D-printed models are increasingly used to provide people who are blind or have low vision (BLV) with access to maps, educational materials, and museum exhibits. Recent research has explored interactive 3D-printed models (I3Ms) that integrate touch gestures, conversational dialogue, and haptic vibratory feedback to create more engaging interfaces. Prior research with sighted people has found that imbuing machines with human-like behaviours, i.e., embodying them, can make them appear more lifelike, increasing social perception and presence. Such embodiment can increase engagement and trust. This work presents the first exploration into the design of embodied I3Ms and their impact on BLV engagement and trust. In a controlled study with 12 BLV participants, we found that I3Ms using specific embodiment design factors, such as haptic vibratory and embodied personified voices, led to an increased sense of liveliness and embodiment, as well as engagement, but had mixed impact on trust.

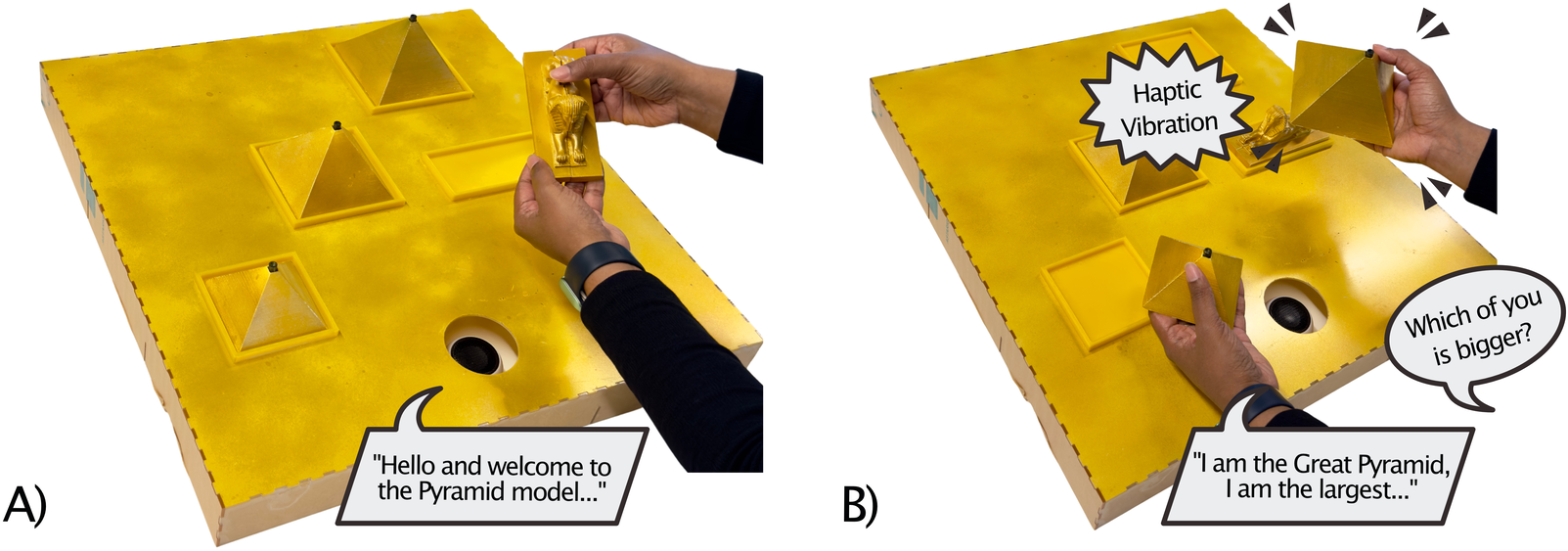

This figure includes two images showcasing one of the interactive 3D-printed models (I3Ms) we designed. Seen is the Egyptian Pyramid I3M operating in High Embodied Mode (HEM). It includes a base made of acrylic that is painted gold. It includes four 3D-printed objects – the Sphinx, and the Pyramids of Khufu, Menkaure, and Khafre. They are also painted gold. In A), the model introduces itself as a user picks up the Sphinx; In B), the user holds the Great Pyramid and Pyramid of Menkaure and asks which is larger, to which the Great Pyramid emits localised haptics and responds using an embodied personified voice and first-person narration.

1. Introduction

The lack of equitable access to graphical information, such as educational materials and navigation maps, can significantly reduce opportunities and overall quality of life for people who are blind or have low vision (BLV) (Butler et al., 2017; Sheffield, 2016). In recent years, 3D printing has been used to create accessible graphics (Stangl et al., 2015; Buehler et al., 2016; Holloway et al., 2018; Hu, 2015). Unlike traditional accessible graphics, 3D printing enables the fabrication of tangible models that directly represent three-dimensional objects and concepts. This allows a broader range of content to be effectively conveyed non-visually. 3D-printed models have demonstrated improved tactual understanding and mental model development compared to tactile graphics, as well as increased engagement (Holloway et al., 2018).

It is now becoming common to add button or touch-triggered audio labels to 3D-printed models (Ghodke et al., 2019; Holloway et al., 2018; Shi et al., 2016; Davis et al., 2020), creating interactive 3D-printed models (I3Ms). Audio labels support independent exploration and reduce the need for braille labels, which many blind people cannot read (of the Blind, 2009), may not fit on the model (Holloway et al., 2018), and can distort the model surface (Holloway et al., 2018; Shi et al., 2016). However, while useful, audio labels can only provide limited, predetermined information. With advances in intelligent agents, recent work has begun integrating conversational agents into I3Ms (Shi et al., 2017; Cavazos Quero et al., 2019; Reinders et al., 2020, 2023). These models afford greater agency and independence to BLV users, allowing them to generate their own queries and potentially access unlimited information about the model. One study involving the co-design of an I3M that combined touch gestures, conversational dialogue, and haptic vibratory feedback, found that participants desired an experience that felt more personal and ‘alive’ (Reinders et al., 2023).

Previous research has shown that imbuing machines with human-like behaviours and characteristics, that is – embodying them – can make them appear more lifelike and alive, increasing social perception and presence (Cassell, 2001; Lankton and Tripp, 2015; Lester et al., 1997; Nowak and Biocca, 2003; Shamekhi et al., 2018). Such embodiment has been found to increase subjective user engagement (Cassell, 2001; Shamekhi et al., 2018) and trust (Bickmore and Cassell, 2001; Bickmore et al., 2013; Minjin Rheu and Huh-Yoo, 2021; Shamekhi et al., 2018). A variety of conversational embodiment factors have been identified to increase embodiment, including speech that mimics human voices (Cassell, 2001), greeting users (Luria et al., 2019; Cassell, 2001; Shamekhi et al., 2018; Lester et al., 1997; Nowak and Biocca, 2003), conversational turn-taking (Cassell, 2001; Kontogiorgos et al., 2020), small talk (Liao et al., 2018; Pradhan et al., 2019; Shamekhi et al., 2018; Cassell, 2001; Bickmore and Cassell, 2001), and exhibiting personality (Lester et al., 1997). Visual embodiment attributes, such as giving the agent a face (Shamekhi et al., 2018; Bickmore and Cassell, 2001; Bickmore et al., 2013; Kontogiorgos et al., 2020), gestures (Cassell, 2001; Bickmore and Cassell, 2001), and employing gaze (Kontogiorgos et al., 2020; Shamekhi et al., 2018), have also been found to increase embodiment, as has physical embodiment (Luria et al., 2017; Kidd and Breazeal, 2004).

However, virtually all prior research has been conducted with sighted users and has not considered BLV users, who may not be able to fully discern or perceive visual characteristics or physical embodiment. Since the usefulness of an accessible graphic or interface depends on users’ willingness to engage with and accept the information it provides (Phillips and Zhao, 1993; Wu et al., 2017; Abdolrahmani et al., 2018), engagement and trust are critical for BLV users. We believe that embodiment, and its links with engagement and trust, may hold significant potential for I3Ms, which are designed to be spoken to, picked up, and touched. Embodied I3Ms could enable BLV students or self-learners to engage in deeper, more meaningful experiences with content, and therefore serve as a catalyst for their broader adoption. Here, we present the first exploration into the design of more embodied I3Ms and the impact of embodiment on the engagement and trust of BLV users. We believe our study is the first to explore embodiment in the context of both BLV users and I3Ms.

We selected five non-visual design factors and created two I3Ms – the Saturn V Rocket and Egyptian Pyramids – that could be configured in two states – High Embodied Mode (HEM) and Low Embodied Mode (LEM). These states differed based on the embodiment design factors. Those factors relating to conversational embodiment (introductions and small talk, embodied personified voices and embodied narration style) have previously been found to increase embodiment. Physical embodiment factors (embodied haptic vibratory feedback and location of speech output) were more novel and were motivated by feedback from BLV users interacting with I3Ms (Reinders et al., 2023). We conducted a within-subject user study with 12 BLV participants, using established questionnaires and subjective ratings to examine how lively, engaging and trustworthy participants perceived each model to be. The main findings of our study include:

-

•

Participants perceived the HEM I3Ms as having a greater sense of liveliness, appearing more embodied compared to LEM I3Ms;

-

•

HEM I3Ms appeared to be more engaging. This adds to research showing that more embodied conversational agents and social robots increase subjective user engagement, establishing that this relationship extends to I3Ms in the context of BLV users;

-

•

Differences in trust between LEM and HEM I3Ms were inconclusive, suggesting the impact of embodiment on trust may be more limited.

Our findings, which represent the first exploration into the embodiment and social perception of embodied I3Ms, provide initial design recommendations for creating I3Ms that BLV users find engaging. These recommendations will be of critical interest to the accessibility research community and to practitioners designing I3Ms for accessible exhibits in public spaces, such as museums and galleries, or as accessible materials for classroom use.

2. Related Work

This work builds on research on: accessible graphics and interactive 3D-printed models for BLV users; conversational agents; and embodied agents.

2.1. Accessible Graphics

BLV people face challenges accessing graphical information, which impacts education opportunities (Butler et al., 2017), makes independent travel difficult (Sheffield, 2016), and causes disengagement with culture and the creative arts (Bartlett et al., 2019). These barriers can lead to reductions in confidence and overall quality of life (Keeffe, 2005).

Graphical information can be made available in formats that improve non-visual access. Traditionally, accessible graphics – known as raised line drawings or tactile graphics – have been used to assist BLV people in accessing information. Tactile graphics are frequently used to facilitate classroom learning (Aldrich and Sheppard, 2001; Rosenblum and Herzberg, 2015) and orientation and mobility (O&M) training (Blades et al., 1999; Rowell and Ungar, 2005). Work has been conducted on the development of interactive tactile graphics, including the NOMAD (Parkes, 1994), IVEO (ViewPlus, [n. d.]), and Talking Tactile Tablet (Miele et al., 2006; Inc, [n. d.]). These devices combine printed tactile overlays with touch-sensitive surfaces, enabling BLV users to explore the graphics tactually and access audio labels by interacting with predefined touch areas. However, as these systems rely on printed tactile graphics, their scope is limited to two-dimensional content.

3D-printed models are an increasingly common alternative to tactile graphics. They enable a broader range of material to be produced, particularly for concepts that are inherently three-dimensional in nature. In recent years, the production cost and effort of 3D-printed models have fallen to levels comparable to tactile graphic production. 3D-printed models are increasingly being applied in various accessible graphic areas: mapping and navigation (Gual et al., 2012; Holloway et al., 2018, 2019b, 2022; Nagassa et al., 2023); special education (Buehler et al., 2016); art galleries (Karaduman et al., 2022; Butler et al., 2023); books (Kim and Yeh, 2015; Stangl et al., 2015); mathematics (Brown and Hurst, 2012; Hu, 2015); graphic design (McDonald et al., 2014); science (Wedler et al., 2012; Hasper et al., 2015); and programming (Kane and Bigham, 2014). Compared to tactile graphics, 3D-printed models have been shown to improve tactual understanding and mental model development among BLV people (Holloway et al., 2018). However, as with traditional tactile graphics, the provision of written descriptions or braille labelling presents challenges. The limited space on models and the low-fidelity of 3D-printed braille can significantly impact the utility and readability of labels (Brown and Hurst, 2012; Taylor et al., 2015; Shi et al., 2016).

2.2. Interactive 3D-Printed Models

To address labelling challenges, limitations on the type of content that can be produced, and to create more engaging and interactive experiences, there is growing interest in the development of interactive 3D-printed models (I3Ms). By combining 3D-printed models with low-cost electronics and/or smart devices, many I3Ms now include button or touch-triggered audio labels that provide verbal descriptions of the printed model (Landau, 2009; Shi et al., 2016; Reichinger et al., 2016; Giraud et al., 2017; Götzelmann et al., 2017; Holloway et al., 2018; Ghodke et al., 2019; Davis et al., 2020). Such I3Ms have been applied across various BLV-accessible graphic areas, including: art (Holloway et al., 2019a; Iranzo Bartolome et al., 2019; Butler et al., 2023); education (Ghodke et al., 2019; Shi et al., 2019; Reinders et al., 2020); and mapping and navigation (Götzelmann et al., 2017; Holloway et al., 2018; Shi et al., 2020). I3Ms with audio labels are especially useful for BLV users who are not fluent braille readers. Stored as text and synthesised in real-time, audio labels are easier to update compared to labels on non-interactive models. Many I3Ms also support multiple levels of audio labelling (Holloway et al., 2018; Shi et al., 2019; Reinders et al., 2020), extracted through unique button presses or touch gestures, enabling them to convey far more information than the written descriptions supplied alongside tactile graphics and non-interactive models.

I3Ms are inherently multimodal. Multimodality can improve the adaptability of a system (Reeves et al., 2004), and when modalities are combined, they can increase the resolution of information the system conveys (Edwards et al., 2015) and enable more natural interactions (Bolt, 1980). For BLV users, combining modalities has been shown to improve confidence and independence (Cavazos Quero et al., 2021). Modality adaptability allows BLV users to choose interaction methods based on context, ability, or effort. For example, a user may be uncomfortable engaging in speech interaction in public due to privacy concerns (Abdolrahmani et al., 2018), opting instead to use button or gesture-based inputs. Richer resolutions of information can be achieved when combining modalities, e.g., the tactile features of a 3D-printed model with haptic vibratory and auditory outputs. Combining modalities is critical to overcoming the ‘bandwidth problem’, in which BLV users’ non-visual senses cannot match the capacity of vision, necessitating their combined use (Edwards et al., 2015).

While early I3Ms primarily relied on button or gesture-based triggered audio labels, recent research has explored integrating speech interfaces and conversational agents. This shift is driven by research finding that BLV people find voice interaction convenient (Azenkot and Lee, 2013), along with widespread adoption (Pradhan et al., 2018) and high usage (Abdolrahmani et al., 2018) of conversational agents among BLV users. For instance, Quero et al. (Cavazos Quero et al., 2019) combined a tactile graphic of a floor plan with a conversational agent that focused on indoor navigation; however, voice interaction was performed through a connected smartphone rather than the graphic itself. Other works have developed voice-controlled agents to guide BLV users in exploring 3D-printed representations of gallery pieces (Iranzo Bartolome et al., 2019; Cavazos Quero et al., 2018). These systems, however, have primarily focused on basic command-driven interactions more analogous to voice menus rather than conversational dialogue. Shi et al. (Shi et al., 2017, 2019) proposed incorporating conversational agents into I3Ms to allow BLV users to expand their understanding of the modelled content.

Recent research into I3Ms has begun to explore modalities beyond audio and touch. Quero et al. (Cavazos Quero et al., 2018) designed an I3M representing an art piece that integrated localised audio, wind, and heat output. However, participants faced challenges in interpreting the semantic mapping of modalities, e.g., whether heat represented the morning sun or the shine of starlight. In our previous work, we found that BLV users desired I3Ms that combined touch, haptic vibratory feedback, and conversational dialogue (Reinders et al., 2020). Additionally, we co-designed an I3M with BLV co-designers to explore how these modalities could create natural interactions (Reinders et al., 2023), inspired by the ‘Put-That-There’ paradigm (Bolt, 1980). This work led to five I3M design recommendations, including: support interruption-free tactile exploration; leverage prior interaction experience with personal technology; support customisation and personalisation; support more natural dialogue; and tightly coupled haptic feedback. These studies motivated our current work, with participants finding the I3M engaging, and several beginning to embody it.

2.3. The Embodiment of Agents

Dourish (Dourish, 2001) presents a seminal view that embodiment “denotes a form of participative status”, where embodied interaction in natural forms of communication is influenced both by physical presence and context. They posit that this perspective applies to “spoken conversations just as much as to apples or bookshelves”. Within the design of agent-based systems, embodiment is largely understood as the use of different modalities – e.g., voice, visual output, gestures, gaze – to imbue machines with human-like behaviours and characteristics, making them appear more ‘alive’; with an enhanced perception of social presence that is capable of approximating human-human social interaction (Cassell, 2000; Lester et al., 1997; Lankton and Tripp, 2015; Biocca, 1999). Such agents are often described as being more ‘lifelike’ or possessing ‘lifelikeness’ (Lester et al., 1997; Cassell et al., 1999; Cassell and Vilhjálmsson, 1999; Cassell and Thorisson, 1999; Lester et al., 1999).

Research into embodiment has predominantly focused on sighted users. As systems become more embodied, users’ perceptions of social presence can increase, motivating users to treat them more favourably (Reeves et al., 2004). Lankton et al. (Lankton and Tripp, 2015) described how social presence can make systems appear more sociable, warm, and personal, while Cassell (Cassell, 2001) noted that embodying technology allows users to locate intelligence, illuminating what would otherwise be an ‘invisible computer’. Importantly, embodied agents exhibiting higher levels of social presence and perception have been shown to enhance user perception of engagement (Shamekhi et al., 2018; Luger and Sellen, 2016; Heuwinkel, 2013; Cassell, 2001) and trust (Bickmore and Cassell, 2001; Shamekhi et al., 2018; Bickmore et al., 2013; Minjin Rheu and Huh-Yoo, 2021).

In the HCI community, efforts to embody intelligent agents have focused on enhancing social perception through conversational, visual, and physical attributes. Conversational embodiment includes mimicking human voices (Cassell, 2001), small talk (Liao et al., 2018; Pradhan et al., 2019; Cassell, 2001; Shamekhi et al., 2018; Bickmore and Cassell, 2001), greetings (Cassell, 2001; Luria et al., 2019; Shamekhi et al., 2018; Lester et al., 1997), and conversational turn-taking (Cassell, 2001; Kontogiorgos et al., 2020). Lester et al. (Lester et al., 1997) described the persona effect, proposing that social presence can increase when agents exhibit personality, making them appear more lifelike. Many conversational agents, like Siri, incorporate attributes of conversational embodiment.

Visually embodied agents, which are often also conversationally embodied, associate systems with virtual avatars or characters, many of which have faces (Cassell, 2001; Kontogiorgos et al., 2020; Bickmore et al., 2013), and are capable of gesturing (Cassell, 2001; Bickmore and Cassell, 2001) and gaze (Kontogiorgos et al., 2020; Shamekhi et al., 2018; Bickmore and Cassell, 2001). Visual embodiment extends beyond the visual feedback that mainstream conversational agents emit, e.g., rings of light or animations used to indicate that agents are processing or ‘thinking’. Depending on the task, visually and conversationally embodied agents can improve social perceptions (Shamekhi et al., 2018; Luria et al., 2019; Cassell, 2001; Nowak and Biocca, 2003), trust (Bickmore and Cassell, 2001; Bickmore et al., 2013; Minjin Rheu and Huh-Yoo, 2021; Shamekhi et al., 2018), and engagement (Shamekhi et al., 2018; Cassell, 2001).

Embodied agents can extend beyond virtual embodiment and include physical bodies (Kontogiorgos et al., 2020; Luria et al., 2017). Lura et al. (Luria et al., 2017) found that users’ situational awareness was higher when using a physically embodied robot to perform smart-home tasks compared to an unembodied voice agent. Robots capable of emitting human-like warmth have been associated with increased perceptions of friendship and presence (Nie et al., 2012). Recent research has explored the use of haptic vibratory feedback to create lifelike cues, such as heartbeats (Borgstedt, 2023) and handshaking (Bevan and Stanton Fraser, 2015). Physically embodied agents can be perceived as having higher social presence than non-physically embodied agents (Kidd and Breazeal, 2004). Additionally, they have been found to be more forgivable during unsuccessful interactions; however, depending on their realism, thi can become distracting in high-stakes scenarios (Kontogiorgos et al., 2020).

2.4. The Embodiment of I3Ms

The design of embodied agents has traditionally focused on the perception of sighted users. However, in the last decade, work has begun exploring how human-human conversational cues can be converted into non-visual formats for BLV users. Many of these efforts utilise haptic belts/headsets (Rader et al., 2014; McDaniel et al., 2018) or AR glasses (Qiu et al., 2016, 2020) to convey body movements like head shaking, nodding, and gaze. Despite this, the impact of agent embodiment, and specifically embodied I3Ms, on BLV users’ perceptions remains unstudied.

In our previous work, we found that a number of participants desired I3Ms that felt more lively and human (Reinders et al., 2023). Participants suggested integrating a conversational agent with a personality, incorporating haptic vibratory feedback to make the I3M feel alive, and enabling speech to originate directly from the model itself. This desire for more human-like interactions aligns with findings with conversational agents. Choi et al. (Choi et al., 2020) reported that many BLV users valued human-like conversation with conversational agents as critical in relationship building, while Abdolrahmani et al. (Abdolrahmani et al., 2018) observed that BLV users preferred agents they could talk with as if they were other people rather than pieces of technology. Karim et al. (Karim et al., 2023) recommended that agents have customisable personalities, recognising that such features may not be relevant in all scenarios, such as group settings. Collins et al. (Collins et al., 2023), however, found hesitance among BLV users towards embodied AI agents in VR applications. Whether these perspectives and desires extend to I3Ms is unknown.

Further impetus for studying I3M embodiment comes from the links between embodiment, engagement, and trust with embodied agents that have been previously identified for sighted users. Trust and engagement are especially critical for BLV users, as the usefulness of accessible graphics, aids, or tools depends on users’ willingness to engage with and accept/rely on the information they provide. This directly determines the extent to which users rely on these tools (Phillips and Zhao, 1993; Wu et al., 2017; Abdolrahmani et al., 2018). Therefore, it is crucial to explore whether I3Ms can be effectively embodied and whether embodiment fosters greater trust and engagement between users and their I3Ms.

3. Embodied Design Factors

Our work was influenced by interpretations of embodiment and embodied interaction proposed by Dourish (Dourish, 2001) and Cassell (Cassell, 2001). Interfaces can be embodied using a range of design characteristics, including visual embodiment, conversational embodiment, and physical embodiment. However, as BLV users, particularly those who are totally blind, may not be able to fully discern visual characteristics, we approached I3M embodiment from a purely non-visual perspective. Traditional visual embodiment design factors, such as virtual avatars or gaze, were not considered. We drew from existing literature to identify a range of design factors shown to enhance perceived levels of social perception and embodiment. These were split between conversational and physical embodiment.

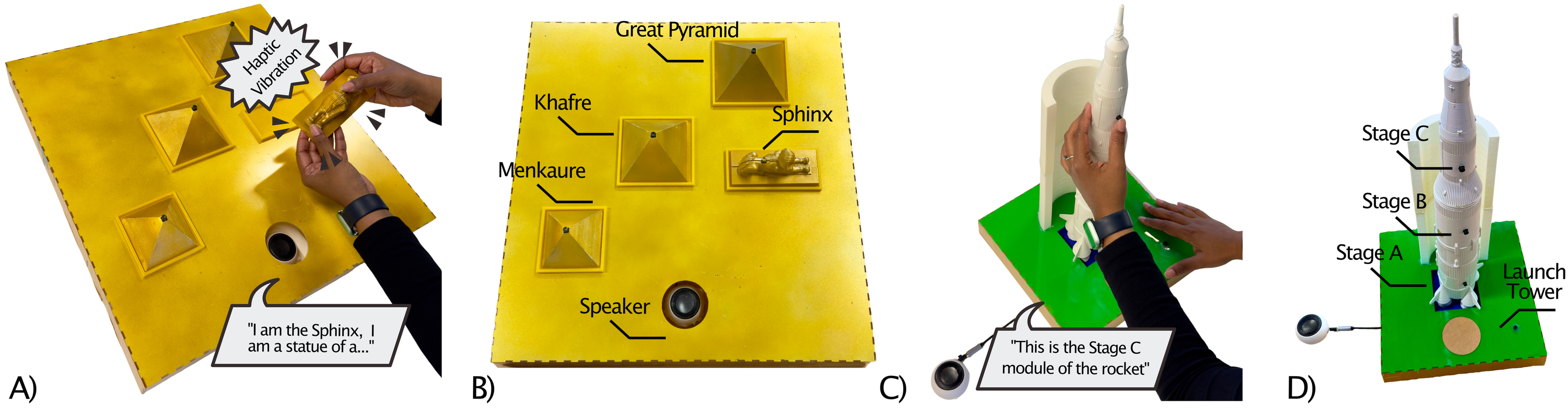

3.1. Model Selection & Design

To investigate conversational and physical embodiment, we created two I3Ms – (1) the Egyptian Pyramids and (2) the Saturn V Rocket [Figure 3]. These subjects were selected because they represent the types of materials commonly found in museums, galleries, or in science or history classes. Additionally, they facilitated the design of models with multiple components that could be individually picked up, detached, and manipulated, which has been shown to increase engagement (Reinders et al., 2020). Each I3M can be configured in two states – High Embodied Mode (HEM) or Low Embodied Mode (LEM) – based on five non-visual design factors (Sections 3.2 & 3.3).

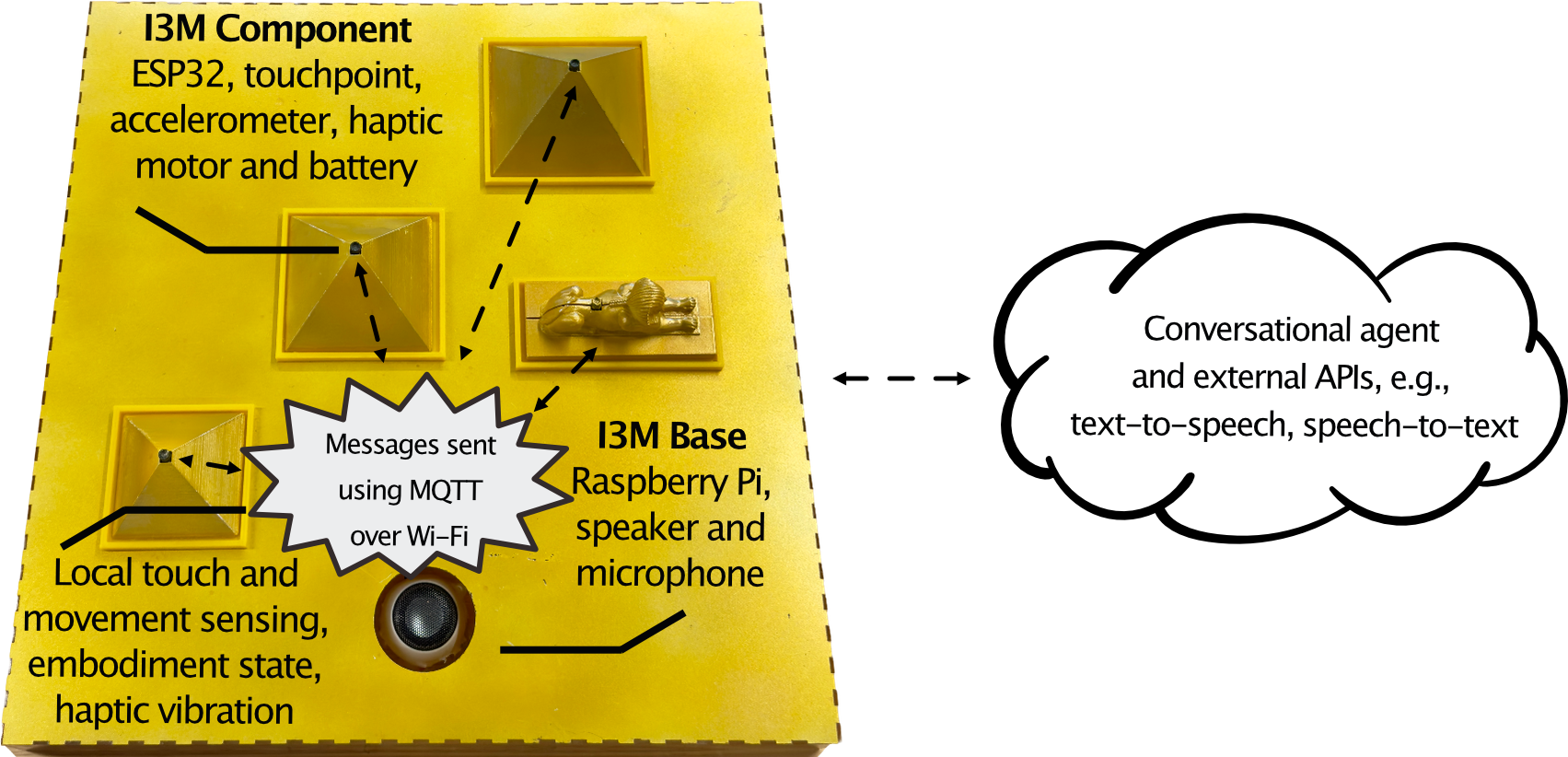

This figure includes an image outlining the architecture of the HEM I3Ms. Shown is a picture of the Egyptian Pyramid I3M, with annotations on top pointing to the various components and hardware it includes. The I3M Base includes a Raspberry Pi, speaker, and microphone. Four I3M Components are highlighted, each including an ESP32, touchpoint, accelerometer, haptic motor and battery. A bubble is depicted in between the Base and Components, highlighting the communication of messages using MQTT over Wi-Fi. These messages include local touch and movement sensing, embodiment state, and haptic vibrations. To the right is a bubble encompassing all the external APIs and libraries that the I3Ms use. This includes Dialogflow for the conversational agent, as well as text-to-speech and speech-to-text libraries.

The base of each I3M was constructed from laser-cut acrylic, serving as a stand to hold the constituent components of the I3M and to house a Raspberry Pi, speaker, and microphone (Figure 2). The Pi powered each I3M and handled the following responsibilities:

-

•

Maintaining a Wi-Fi connection with each I3M component and operating as a message broker (MQTT) to facilitate messaging between the Raspberry Pi and each component.

-

•

Managing the embodied state of the I3M by setting the design factors to operate in either HEM or LEM mode.

-

•

Controlling speech and auditory output through the connected speaker and microphone, using the Picovoice Porcupine wake word library and Google Cloud Speech-to-Text and Text-to-Speech for speech input and synthesis.

-

•

Connecting to the I3M’s conversational agent, built using Google Dialogflow, and integrating ChatGPT to perform external searches triggered by Dialogflow’s fallback intent.

Each I3M had four 3D-printed components. For the Saturn V Rocket, these were the Stage A, Stage B, and Stage C modules, and the Launch Tower. For the Egyptian Pyramid, these included the Sphinx, Great Pyramid, Pyramid of Menkaure, and Pyramid of Khafre. Each component had an ESP32 microcontroller embedded in the print, providing localised touch and movement sensing and haptic vibratory output. The microcontroller had integrated Wi-Fi, capacitive touch-sensing GPIO pins, and was connected to a touchpoint 3D-printed with conductive filament, a 3.7V 400mAh lithium polymer battery, an MPU6050 accelerometer and gyroscope, a DRV2605L haptic motor controller, and a vibrating haptic disc.

This figure includes four images showcasing our two I3Ms. In A), the Egyptian Pyramids I3M is configured in HEM. The user is pressing the touchpoint on the Sphinx, which emits localised haptics, and, using an embodied personified voice and first-person narration, responds through a speaker contained within the I3M’s enclosure. In B), the I3M consists of four 3D-printed components – the Great Pyramid, Sphinx, and Pyramids of Khafre/Menkaure – each of these is labelled, as is the speaker that is embedded inside the I3M enclosure/case in HEM. In C), the Saturn V Rocket I3M is configured in LEM. It includes a base made of acrylic that is painted green, representing the ground. Shown is a user pressing the Stage C module touchpoint. Speech output is played back through a speaker that is housed externally from the I3Ms enclosure — “This is the Stage C module of the rocket”; In D), the Saturn V Rocket I3M consists of four 3D-printed components, the Stage A, Stage B and Stage C rocket modules, which can be detached, and the rockets Launch Tower. The rocket components can be stacked on top of one another as they have magnets attached.

3.1.1. Touch Gestures.

Each I3M component, such as the Sphinx, had a touchpoint that protruded from its surface and was printed using a touch capacitive filament. Touch sensors underwent an automatic calibration process upon I3M startup. Touch gestures were implemented based on findings from our previous co-design work (Reinders et al., 2023). To enable independent tactile exploration, touchpoints needed to be activated before gestures could be used. Users could perform an Activate Press gesture by holding the touchpoint down for one second. This would activate the sub-component and play an audio label identifying it. Once activated, users could perform a Double Press gesture to cycle through 12 audio labels that provided different facts and information about the sub-component. For example, for the Sphinx, this included details about its location, the material it was made from, its construction date, purpose, size, and physical appearance. The final gesture supported was a Long Press, performed by holding down the touchpoint for two seconds. This gesture invoked the I3M’s conversational agent.

3.1.2. Conversational Agent.

Users could perform a Long Press or use the wake word – ‘Hey Model’ – to invoke the I3M’s conversational agent. The attached microphone recorded user queries, which were processed by the agent built using Google Dialogflow. The agent was trained to answer questions related to each component using a corpus containing all the information extractable via touch gestures. This dataset contained an average of 500 words of facts per component. For example, for the Sphinx, this included details about its missing nose, potential astronomical significance, historical restorations, and symbolic meaning. Like audio labels, speech responses were output through the I3M’s speaker, synthesised using the same high-quality voice. If the agent could not answer a query directly, it offered to perform an external search. Upon approval, the agent would send the query to ChatGPT, configured with a context to ensure consistency with the other agent outputs. For example, “You are an intelligent assistant that answers questions in 25 words or less about ancient Egypt and the Pyramids, including the Pyramid of Menkaure, Khafre, Khufu, and the Sphinx of Giza”.

3.1.3. Haptic Vibratory Feedback.

Each model component contained a haptic disc capable of delivering localised vibratory feedback, generated using the DRV2605 Waveform library. Haptic vibrations were emitted as system feedback when touch gestures were performed, following established recommendations (Reinders et al., 2023). These corresponded to the type of gesture executed: Activate (Strong Buzz, 150ms), Double (Strong Short Double Click), or Long Press (Strong Buzz, 500ms).

3.2. Conversational Embodiment Design Factors

In earlier work, we observed that I3M conversational agents should speak with high-quality, human-like voices (Reinders et al., 2023). When designing our I3Ms, the researchers made the decision that voice quality should be independent of embodiment state, to prevent preferences for high-quality voices from overpowering other design factors.

3.2.1. DF#1: Introductions and Small Talk.

Choi et al. (Choi et al., 2020) found that BLV people prefer agents that engage in human-like conversation, as this can help in relationship building. Embodied agents have been designed to introduce themselves to users (Shamekhi et al., 2018; Cassell, 2001; Luria et al., 2019; Lester et al., 1997) and engage in small talk (Liao et al., 2018; Pradhan et al., 2019; Cassell, 2001; Shamekhi et al., 2018). In our I3Ms, when configured in HEM, the conversational agent introduces itself to the user, and could detect and respond to small talk during interactions, using a version of Dialogflow’s small talk module. To avoid interrupting independent tactile exploration, HEM I3Ms only engage in user-initiated small talk, adhering to an established design recommendation (Reinders et al., 2023). This design factor can be configured as follows:

-

•

HEM: When turned on, the I3M is introduced, e.g., “Hello and welcome to the Pyramid model. Let’s learn about ancient Egyptian history together!”. Additionally, when a user initiates small talk, the I3M responds appropriately, e.g., when greeted with “Hello Sphinx”, it replies, “Hi, how are you?”.

-

•

LEM: When an I3M is turned on, a loading message is played, e.g., “Loading Pyramid model”. When a user engages in small talk, the I3M does not respond.

3.2.2. DF#2: Embodied Personified Voices.

Influenced by works where users personified and imbued characters into conversational agents (Purington et al., 2017; Pradhan et al., 2019; Lester et al., 1997), we designed HEM so that model components could ‘speak with their own unique voice’. This was achieved by assigning distinct synthesised voices with each model component, emulating aspects of a one-for-one social presence (Luria et al., 2019). HEM components cannot converse among themselves, in line with Luria et al. (Luria et al., 2019), who observed that users felt discomfort when two active social presences interacted with each other. In contrast, LEM I3Ms employ a singular voice, operating under a one-for-all social presence, where all model components are inhabited as a group.

-

•

HEM: Each I3M component speaks with its own unique synthesised voice, using a one-for-one social presence.

-

•

LEM: All I3M components speak with a unified synthesised voice, using a one-for-all social presence.

3.2.3. DF#3: Embodied Narration Style.

To further explore the personification of the models, we scripted speech output to be narrated from either a first or third-person perspective. This was influenced by work showing that some users anthropomorphise agents by using first and second-person pronouns (Liao et al., 2018; Coeckelbergh, 2011).

-

•

HEM: I3M components phrase verbal responses using first-person narration, e.g., “I am the Great Sphinx of Egypt. I am a statue of a reclining sphinx, a mythical creature. I have the head of a human and the body of a lion. Many suggest that my nose was lost to erosion, vandalism, or damage”. This also extended to external search responses fetched using ChatGPT, which had a modified context, e.g., “You are the Great Sphinx of Egypt and serve as an intelligent assistant, you answer questions in 25 words or less from a first-person perspective about yourself, ancient Egypt, and the Pyramids”.

-

•

LEM: Verbal responses are generated using objective third-person narration, e.g., “This is the Great Sphinx of Egypt. It is a statue of a reclining sphinx, a mythical creature with the head of a human and the body of a lion. Many suggest its nose was lost to erosion, vandalism, or damage”.

3.3. Physical Embodiment Design Factors

I3Ms are inherently designed to be perceived physically, i.e., picked up and tactually observed or manipulated. We sought to explore ways in which the tangible nature of I3Ms could be enhanced by physically embodying presence.

3.3.1. DF#4: Embodied Vibratory Feedback.

In our previous research, a BLV user described an I3M component as ‘lifeless’ except when it was emitting haptic vibrations (Reinders et al., 2023). This influenced our focus on richer haptic vibratory feedback, supported by other work exploring how haptics imbue lifelike cues (Nie et al., 2012; Bevan and Stanton Fraser, 2015; Borgstedt, 2023). We designed HEM I3Ms to emit localised haptic vibratory feedback, creating a sense of physical presence. In contrast, LEM I3Ms generate haptics, but only as system feedback confirming gesture inputs. This decision aligns with the recommendations in (Reinders et al., 2023), since model usability could be impacted if haptics were turned off entirely.

-

•

HEM: I3M components generate haptic vibratory feedback to embody a sense of physical presence. Components use haptics to highlight themselves during interactions, e.g., when a component identifies itself it emits a localised vibration (Transition Ramp Up - 0 to 100%), or when it is referenced during an auditory response (Strong Buzz, 1000ms).

-

•

LEM: Haptics are used only to confirm when a touch gesture has been correctly performed. Components do not use localised haptics to embody physical presence.

3.3.2. DF#5: Location of Speech Output.

Pradhan et al. (Pradhan et al., 2019) identified that an agent’s proximity can influence how human-like it is perceived. Agents closer to the user, or capable of operating across multiple devices to broadcast ubiquity, are often thought of as more present. Due to size constraints, we could not embed speakers directly into I3M components. However, the location of the auditory output can be configured to vary by the position of the speaker.

-

•

HEM: The speaker used by the I3M to output speech is housed within the enclosure.

-

•

LEM: The speaker used by the I3Ms to output speech is positioned externally from the enclosure, approximately 30cm to the left side of the model, reducing proximity.

4. User Study – Methodology

4.1. Hypotheses

We designed a controlled user study to explore and understand whether I3Ms configured with different embodied design factors influence BLV end-users’ perceptions of model embodiment, engagement, and trustworthiness. The study utilised both the Saturn V Rocket I3M and Egyptian Pyramids I3M, each configurable into two states – High Embodied Mode (HEM) and Low Embodied Mode (LEM) – as described in Section 3. We hypothesised that:

-

•

H#1: HEM I3Ms are perceived as more embodied than LEM I3Ms

-

•

H#2: HEM I3Ms are perceived as more engaging than LEM I3Ms

-

•

H#3: HEM I3Ms are perceived as more trustworthy than LEM I3Ms

4.2. Participants

Twelve BLV participants were recruited from our lab’s participant contact pool (Table 1). This sample size () falls within the range commonly seen in BLV accessibility studies, which often involve anywhere between 6-12 participants (Holloway et al., 2022; Nagassa et al., 2023; Shi et al., 2017, 2020) due to the low incidence of blindness in the general population and associated recruitment challenges (Butler et al., 2021).

Participants ranged in age from 27 to 78 years ( = 50, = 16.6). Nine participants self-reported as totally blind, while three reported being legally blind with low levels of light perception. Participants also varied in their prior experience with tactile graphics: Many reported substantial exposure and confidence (), some reported some use but lacked confidence (), and others reported limited or no exposure ( and , respectively).

Familiarity with 3D-printed models was slightly less common. All participants regularly used conversational agents, including Google Assistant (), Siri (), Alexa (), and ChatGPT (). These interfaces were accessed on various devices, such as smartphones (), smart speakers/displays (), smartwatches (), computers () and tablets ().

Participant demographic information, level of vision, accessible formats used, familiarity with tactile graphics and 3D models, and use of conversational agents. Participant #: 1 2 3 4 5 6 7 8 9 10 11 12 Level of Vision: Legally Blind ✓ - ✓ - - - - - - - ✓ - Totally Blind - ✓ - ✓ ✓ ✓ ✓ ✓ ✓ ✓ - ✓ Accessible Formats Used: Braille ✓ ✓ ✓ ✓ ✓ ✓ - ✓ ✓ ✓ ✓ ✓ Audio ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ Tactile Graphics ✓ ✓ ✓ ✓ ✓ ✓ - ✓ ✓ ✓ ✓ ✓ 3D Models - ✓ ✓ ✓ ✓ ✓ - - - - ✓ ✓ Familiarity (1: Not Familiar - 4: Very Familiar): Tactile Graphics 2 3 3 4 4 4 1 3 4 1 4 3 3D Models 1 2 3 4 4 4 1 2 3 1 4 3 Conversational Interfaces Used: Alexa - ✓ - - - ✓ - ✓ - ✓ - - ChatGPT ✓ ✓ - - ✓ - - ✓ - - - - Google Assistant ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ - ✓ ✓ Siri - ✓ ✓ ✓ ✓ ✓ ✓ ✓ ✓ - ✓ ✓

4.3. Study Measures

We used a series of questionnaires and asked participants to subjectively rate the I3Ms in order to investigate our hypotheses.

For Hypothesis H#1, to measure how embodied participants perceived the I3Ms, we utilised the Godspeed Questionnaire Series (GQS) (Bartneck et al., 2009). Originally devised to measure users’ social perceptions of robot and agent-based systems, GQS subscales such as anthropomorphism and intelligence have recently been applied to measure aspects of embodiment and liveliness in conversational agents (Shamekhi et al., 2018) and robots with human-like abilities (Kontogiorgos et al., 2020). We selected four subscales – anthropomorphism, animacy, likeability, and intelligence – as we felt each provides insight into components of embodied sociability. For example, anthropomorphism captures the attribution of human-like characteristics, animacy reflects perceptions of liveliness, likeability gauges formation of positive impressions, and intelligence focuses on perception of ability. Additionally, we also explored model embodiment by asking participants for their subjective perceptions by expressing the concept of ‘embodiment/embodied’ using the terms ‘lively/liveliness’ to ensure clarity. We felt this terminology would have more meaning to participants, and aligns with previous works that have used the similar terms ‘lifelike/lifelikeness’ to describe embodied agents (Lester et al., 1997; Cassell et al., 1999; Cassell and Vilhjálmsson, 1999; Cassell and Thorisson, 1999; Lester et al., 1999).

To explore Hypothesis H#2, we used two engagement measures – the User Engagement Scale [Short Form] (UES-SF) and the Playful Experiences Questionnaire (PLEXQ). The UES-SF measures user engagement as the depth of a user’s perceived investment with a system (O’Brien, 2016). It consists of 12 five-point Likert items across four subscales – focused attention, perceived usability, aesthetic appeal, and reward (O’Brien et al., 2018). The UES-SF has been widely applied across HCI to measure engagement in contexts such as interactive media (Carlton et al., 2019), video games (Wiebe et al., 2014), and 3D-printed building plans for BLV people (Nagassa et al., 2023). We used all four subscales. To complement the UES-SF, we also used PLEXQ, which measures playfulness, pleasurable experiences, and playful engagement (Boberg et al., 2015). PLEXQ is commonly used to assess how engaging games and game-like experiences are (Bischof et al., 2016; Cho et al., 2024). We used eight subscales that we felt were most relevant to I3Ms – captivation, challenge, control, discovery, exploration, humor, relaxation, and sensation.

To supplement these measures of perceived engagement, we also captured two behavioural metrics (time spent and interactions performed during tasks), as more time spent with the model and more interactions may indicate greater immersion and enjoyment (O’Brien and Lebow, [n. d.]; Doherty and Doherty, 2018). Note that comparing these between the LEM and HEM conditions was meaningful as the length of responses in both conditions was similar and every interaction type was available in both modes.

To investigate Hypothesis H#3 and measure trust, we utilised the Human-Computer Trust Model (HCTM). The HCTM conceptualises trust as a multifaceted construct, encompassing users’ perceptions of the perceived risk, benevolence, competence, and reciprocity during interactions. These perceptions can influence users’ reliance on a system and their likelihood of continued use (Siddharth Gulati and Lamas, 2019). Previous work has demonstrated the utility of HCTM in assessing trustworthiness in conversational agents like Siri (Gulati et al., 2018), machine learning systems (Guo et al., 2022), and other human-like technologies such as large language models (Salah et al., 2023) and chatbots (Degachi et al., 2023).

Based on pilot study feedback, we made minor adjustments to specific subscale items in the UES-SF and PLEXQ measures. For instance, the aesthetic appeal (UES-SF) and sensation (PLEXQ) subscales were adjusted, as concepts of “attractiveness”, “aesthetics”, and “visuals” held little meaning to our pilot user in BLV contexts. They recommended adding the phrase “to my senses” to these items. Per (O’Brien et al., 2018)’s guidance on modifying the UES-SF, we did not report an overall UES score, instead focusing on individual components of engagement. These adjustments also motivated our decision to add our own questions gathering participants’ subjective ratings of the I3Ms, supplementing the validated scales and providing additional nuance and insight. The modified UES-SF and PLEXQ, along with GSQ and HCTM, are provided in the Appendices.

4.4. Experiment Conditions

Our user study used a within-subject design. All participants were exposed to (one) LEM and (one) HEM-configured I3M. To control for bias related to model type (Rocket/Pyramids) and design factor state (LEM/HEM), the order in which the I3Ms were presented, along with their associated LEM/HEM configuration, was counterbalanced. The activities that participants completed with each I3M remained the same, regardless of its LEM/HEM state, and could be completed in either mode without significant difficulty.

4.5. Procedure

Each user study session lasted approximately two hours and included at least one researcher being present. Sessions began with the researcher providing an overview of the research project, and were divided into the following stages:

-

(1)

Training. Participants were guided through a 10-minute training exercise, which allowed them to familiarise themselves with how I3Ms operate. We designed and built an I3M that represented a non-descript sphere for training. Participants were first asked to explore the I3M tactually when it was turned off, before being taught how to extract basic information from the I3M using touch gestures, and asking questions through the conversational interface. The training I3M did not operate in either LEM or HEM mode and would, using a low-quality synthesised voice, only respond by confirming when interactions had been successfully performed (e.g., “Double Press”, “Recording query”). Operating outside of LEM/HEM states was a deliberate design decision to allow training of basic interaction functionality without biasing future LEM/HEM exposure.

-

(2)

Exposure to LEM/HEM I3Ms.

-

(a)

Activities. Participants were introduced to their first I3M, configured in one of the LEM/HEM states, and completed a walkthrough activity. The I3M identified and described each component it included, with participants given the opportunity to tactually explore the I3M throughout. These walkthroughs were carefully curated so that participants encountered the majority of system functionality specific to the HEM/LEM design factors111The only exception to this was the small talk component of the introductions and small talk design factor, which, unlike all other design factors that were explicit, required user initiation (see Section 3.2.1).. After the walkthrough, participants were given up to five minutes to explore the models, during which they could interact with the I3M in any way they wished. Participants were then asked to complete four researcher-directed information-gathering tasks (e.g., finding out how long it took to build the Great Pyramid, what happened to the nose of the Sphinx, or the significance of the Pyramids). Participants could access this information using their choice of either touch gesture interaction or using the model’s conversational agent. Before concluding, participants were given up to an additional five minutes for a free play task designed to mimic undirected, real-world use. They were instructed to discover something interesting about the modelled concept that they were not aware of prior.

-

(b)

Questionnaire Scales. Participants were taken through the questionnaire scales – GSQ, PLEXQ, UES-SF, and HCTM. In addition, participants were asked to rate how lively, engaging, and trustworthy the I3M was, using 5-point Likert scales. On average, these took 15 minutes to complete.

-

(c)

Remaining Model. Participants would then complete 2(a) and 2(b) again with the second I3M, configured in the remaining LEM/HEM state. Participants spent an average of 30 minutes with each I3M.

-

(a)

-

(3)

Semi-Structured Interview. At the end of the session, participants were asked questions about specific interactions with the LEM/HEM I3Ms and were asked to rank how lively, engaging and trustworthy each model was. We also asked about the role each design factor played, and whether they impacted perceptions of how lively, engaging, and trustworthy the I3Ms were. On average, it took 20 minutes to answer these questions.

4.6. Data Collection & Analysis

All sessions were video-recorded and subsequently transcribed. Collected data included responses to scale questions, semi-structured interview questions, and participant comments made during task completion. The time participants spent completing tasks, as well as the number of interactions they performed, were also recorded. Descriptive statistics were calculated on all subscale responses – GSQ, UES-SF, PLEXQ, and HCTM – as well as for interview questions that ranked the I3Ms and individual embodiment design factors.

For data that did not follow a normal distribution (e.g., our scale data), we conducted non-parametric statistical tests, specifically Wilcoxon signed-rank tests. Binomial tests were performed on the rankings of the I3Ms and the impact ratings of individual embodiment design factors. Paired t-tests were conducted on data that was normally distributed (e.g., time taken to complete tasks). We opted for one-tailed tests because our hypotheses were explicit in nature (described in Section 4.1). This approach was deliberate, allowing us to dedicate more power to detecting effects in one direction.

The analysis should be interpreted in light of both the exploratory nature of our user study and our small sample size ( 12). This influenced our approach in two ways. First, from the outset, due to our small sample size, we decided not to use 0.05 as the sole determinant of significance (Shamekhi et al., 2018; Cramer and Howitt, 2004), instead marking results 0.05 as significant (∗) and 0.05 0.1 as marginally significant (∧). Second, given the exploratory nature of our work, in order to reduce the risk of overlooking meaningful results (false negatives), we chose not to apply corrections for multiple comparisons (although this does increase the risk of false positives).

5. Results

Results are presented based on our hypotheses (Section 4.1) and separated across the embodiment of the I3Ms, engagement, and trust. Each section presents quantitative results, including questionnaire scales, rankings of the models and HEM/LEM-configured design factors, and qualitative results, in the form of participant responses from the semi-structured interview.

5.1. Embodiment of I3Ms

5.1.1. GSQ Scales.

HEM I3Ms elicited higher mean scores compared to I3Ms with LEM across all GSQ subscales – anthropomorphism ( +0.67), animacy ( +0.46), likeability ( +0.27), and intelligence ( +0.29). We conducted one-tailed Wilcoxon signed-rank tests for each GSQ subscale (Table 2), using design configuration (HEM, LEM) as the independent variable to assess significance. The positive effect of HEM was statistically significant across all GSQ subscales – anthropomorphism (), animacy (), likeability (), and intelligence ().

| Godspeed Questionnaire | |||||||||||

| Descriptive Statistics | Wilcoxon Test | ||||||||||

| HEM I3Ms | LEM I3Ms | ||||||||||

| Scale | M | SD | V | M | SD | V | z-score | p-value | |||

| Anthropomorphism | 4.00 | 3.75 | 1.36 | 1.85 | 3.00 | 3.08 | 1.35 | 1.83 | 2.288 | 0.011∗ | |

| Animacy | 5.00 | 4.17 | 1.14 | 1.31 | 4.00 | 3.71 | 1.37 | 1.87 | 1.895 | 0.029∗ | |

| Likeability | 5.00 | 4.58 | 0.68 | 0.47 | 4.50 | 4.31 | 0.78 | 0.60 | 2.428 | 0.008∗ | |

| Intelligence | 5.00 | 4.46 | 0.71 | 0.50 | 4.50 | 4.17 | 0.90 | 0.81 | 2.333 | 0.010∗ | |

| Did you find that the I3M felt lively? | |||||||||||

| Liveliness | 5.00 | 4.50 | 0.65 | 0.42 | 4.00 | 4.00 | 0.91 | 0.83 | 2.121 | 0.017∗ | |

| Did the design factors impact your perception of how lively the HEM I3M was? | |||||||

| Design Factor | Descriptive Statistics | Binomial Test | |||||

| M | SD | V | k, n | p-value | 95th CI | ||

| DF#1: Introductions & Small Talk | 4.00 | 4.17 | 0.55 | 0.31 | 11, 12 | ¡0.001∗ | 0.661, 1.000 |

| DF#2: Embodied Personified Voices | 4.00 | 4.25 | 0.72 | 0.52 | 10, 12 | 0.003∗ | 0.562, 1.000 |

| DF#3: Embodied Narration Style | 4.00 | 4.17 | 0.90 | 0.81 | 10, 12 | 0.003∗ | 0.562, 1.000 |

| DF#4: Embodied Vibratory Feedback | 4.50 | 4.33 | 0.85 | 0.72 | 11, 12 | ¡0.001∗ | 0.661, 1.000 |

| DF#5: Location of Speech Output | 4.00 | 4.00 | 0.82 | 0.67 | 8, 12 | 0.057∧ | 0.391, 1.000 |

5.1.2. How Lively Were The I3Ms?

Participants rated how lively each I3M was immediately after being exposed to it. HEM I3Ms were perceived as more lively ( +0.50) compared to the LEM configuration (Table 2). This was statistically significant (). In the post-activity interview, participants ranked the I3Ms based on perceived liveliness. Two-thirds of participants () indicated that the HEM I3Ms had higher liveliness compared to the LEM I3Ms, with the remainder split between no difference () and the LEM configuration (). A binomial test revealed the difference between the number of participants who ranked the HEM I3Ms higher and those who either selected the LEM I3M or could tell no difference was statistically significant (, , ).

Most participants were emphatic in their selection. P2 described how the HEM I3M’s design factors “brought it [the model] to life”, continuing, “it [the HEM I3M] created a relationship, [it is] like dealing with something that is alive, it *is* talking to you”. P8 referred to the HEM I3M as “more human-like and interactive, more natural”, while P11 noted that the HEM I3M “was more like an entity… less of a computer program”. Similarly, P4 mentioned that the HEM I3M “seemed to want to interact with me … [whereas] the other one could have been talking to the moon”. Six participants explicitly referred to the HEM I3M as being “more human-like” in their explanations (P3, P4, P8, P10, P11 and P12), with P3 also stating that the LEM I3M was “too machine-like”. However, P5 felt that the HEM and LEM configurations appeared just as lively as one another, stating that “they were both pretty active”. One participant (P1) selected the LEM Rocket I3M as the most lively, citing specific elements of that model’s design as the determining factor. P1 explained, “the rocket… [its] three sections made it more real” (P1).

5.1.3. Impact of Design Factors on Liveliness.

Participants rated how each HEM design factor influenced their perception of I3M liveliness using a 5-point Likert scale (Table 3). All factors appeared to influence perceptions of the liveliness of the HEM I3Ms. Embodied vibratory feedback elicited the highest mean score, while location of speech output scored the lowest. Binomial tests revealed that the difference between the number of participants who agreed or strongly agreed, and those who were neutral or below, was statistically significant for all factors, apart from location of speech output, which was marginally significant (, , ).

5.2. Engagement of I3Ms

5.2.1. UES & PLEXQ Scales.

HEM I3Ms elicited higher mean scores across three of the four UES-SF subscales (Table 4) – focused attention ( +0.17), perceived usability ( +0.45), and aesthetic appeal ( +0.14). Wilcoxon results for these subscales were significant for both perceived usability () and aesthetic appeal (), but marginally significant for focused attention (). The reward subscale, however, showed a higher mean score for LEM I3Ms ( +0.11), and was non-significant.

HEM I3Ms exhibited marginally higher mean scores across seven PLEXQ subscales. Significant results were found for control ( +0.22, ) and sensation ( +0.17, ), while humor ( +0.16) was marginally significant (). One subscale, exploration, showed a higher mean score for LEM I3Ms ( +0.08).

| User Engagement Scale | |||||||||||

| Descriptive Statistics | Wilcoxon Test | ||||||||||

| HEM I3Ms | LEM I3Ms | ||||||||||

| Scale | M | SD | V | M | SD | V | z-score | p-value | |||

| Focused Attention | 4.00 | 3.86 | 0.95 | 0.90 | 4.00 | 3.69 | 1.00 | 0.99 | 1.500 | 0.067∧ | |

| Perceived Usability | 5.00 | 4.56 | 0.55 | 0.30 | 4.00 | 4.11 | 0.84 | 0.71 | 3.025 | 0.001∗ | |

| Aesthetic Appeal | 5.00 | 4.53 | 0.60 | 0.36 | 4.00 | 4.39 | 0.64 | 0.40 | 1.889 | 0.029∗ | |

| Reward | 5.00 | 4.64 | 0.54 | 0.29 | 5.00 | 4.75 | 0.43 | 0.19 | -2.000 | 0.977 | |

| Playful Experiences Questionnaire | |||||||||||

| Captivation | 3.00 | 3.03 | 1.34 | 1.80 | 3.00 | 3.00 | 1.20 | 1.44 | 0.233 | 0.408 | |

| Challenge | 5.00 | 4.61 | 0.49 | 0.24 | 5.00 | 4.58 | 0.55 | 0.30 | 0.333 | 0.369 | |

| Control | 4.00 | 4.25 | 0.76 | 0.58 | 4.00 | 4.03 | 0.76 | 0.58 | 2.138 | 0.016∗ | |

| Discovery | 5.00 | 4.50 | 0.60 | 0.36 | 5.00 | 4.42 | 0.72 | 0.52 | 0.905 | 0.183 | |

| Exploration | 4.00 | 4.42 | 0.60 | 0.35 | 5.00 | 4.50 | 0.60 | 0.36 | -1.732 | 0.954 | |

| Humor | 4.00 | 4.22 | 0.85 | 0.73 | 4.00 | 4.06 | 0.97 | 0.94 | 1.328 | 0.092∧ | |

| Relaxation | 5.00 | 4.31 | 0.91 | 0.82 | 4.00 | 4.22 | 0.82 | 0.67 | 0.676 | 0.249 | |

| Sensation | 5.00 | 4.53 | 0.60 | 0.36 | 4.00 | 4.36 | 0.67 | 0.45 | 1.897 | 0.029∗ | |

| Did you find that the I3M felt engaging? | |||||||||||

| Engagement | 5.00 | 4.67 | 0.47 | 0.22 | 4.00 | 4.25 | 0.60 | 0.35 | 2.236 | 0.013∗ | |

5.2.2. How Engaging Were The I3Ms?

When asked to rate how engaging each I3M was, results indicated that HEM I3Ms were more engaging ( +0.42) than LEMs (Table 4). This difference was statistically significant (). In the post-activity interview, the majority of participants () ranked the HEM I3Ms as more engaging than the LEM condition, while the remaining participants () found no difference. A binomial test revealed that these rankings were statistically significant (, , ).

Participants clearly articulated their reasons, with seven directly referencing the HEM I3M as being either “more engaging” or “interactive” in their explanations (P2, P3, P5, P8, P9, P10, and P12). P2, who felt the HEM I3M was more engaging, described the difference as “one is more [like] reading an encyclopedia and the other [the HEM I3M] is an experience”, adding that they found the HEM I3M to be more ‘playful’. P7 expanded on this, “[I] wanted to ask [the HEM I3M] more questions, I wanted to get more information, whereas [I] just accepted [the LEM I3M] as fact”. P8 went a step further, stating that the LEM I3M was “boring” while the HEM I3M was “more enthusiastic”. P10 compared the HEM I3M to their screen reader, “Jaws is neutral, it is not interactive, it gets hypnotic. Using different voices is way more engaging!”. Despite being more interested in the subject matter of their LEM I3M, P8 ultimately found the HEM I3M more engaging, explaining, “I was interested in Egypt more than space … but the way the rocket acted made it more engaging”.

As a further indication of engagement, participants spent more time interacting with the HEMs ( 99.5 seconds) compared to the LEMs ( 68.8) during the free play exercise (Table 5). A one-tailed paired t-test revealed this to be statistically significant (, ). Participants also engaged in more interactions with the HEMs ( 3.3) compared to the LEMs ( 2.2). Wilcoxon results indicated this difference was marginally significant ().

Participants also chose to spend more time using the HEM I3Ms during both the model exploration ( +29.8 seconds) and researcher-directed ( +54.4) tasks. Paired t-tests revealed that the effect of the HEMs was statistically significant for time spent during model exploration (, ) and marginally significant for time spent completing the researcher-directed tasks (, ).

Regarding interactions, participants performed more interactions with the HEMs during these tasks. Paired t-tests indicated marginal significance for both model exploration ( +1.8, , ) and researcher-directed tasks ( +0.5, , ). Notably, during the researcher-directed tasks, participants occasionally chose to continue interacting beyond what was required to complete a task, performing additional interactions. These instances favoured the HEMs () over the LEMs ().

| Task Completion (Time Spent) | |||||||||

| Descriptive Statistics | Statistical Test | ||||||||

| HEM I3Ms | LEM I3Ms | ||||||||

| Task | M | SD | M | SD | test-statistic | p-value | |||

| Model Exploration | 240.0 | 228.9 | 63.2 | 180.0 | 199.1 | 40.8 | 2.025 | 0.035∗ | |

| Researcher-Directed | 592.5 | 592.3 | 120.5 | 557.5 | 537.9 | 150.7 | 1.595 | 0.070∧ | |

| Free Play | 92.5 | 99.5 | 39.9 | 60.0 | 68.8 | 17.0 | 2.899 | 0.007∗ | |

| Task Completion (Interactions Undertaken) | |||||||||

| Model Exploration | 13.0 | 11.9 | 2.6 | 10.0 | 10.1 | 3.0 | 1.583 | 0.071∧ | |

| Researcher-Directed | 4.5 | 4.8 | 0.9 | 4.0 | 4.3 | 0.5 | 1.732 | 0.056∧ | |

| Free Play | 2.0 | 3.3 | 2.1 | 2.0 | 2.2 | 0.7 | 1.483 | 0.069∧ | |

5.2.3. Impact of Design Factors on Engagement.

Participants rated how each design factor, presented in the HEM state, impacted their perception of I3M engagement (Table 6). All five design factors appeared to influence how engaging the HEM I3Ms were perceived and were statistically significant – introductions & small talk and location of speech output (both had , , ), and the remaining three factors (all , , ).

| Did the design factors impact your perception of how engaging the HEM I3M was? | |||||||

| Design Factor | Descriptive Statistics | Binomial Test | |||||

| M | SD | V | k, n | p-value | 95th CI | ||

| DF#1: Introductions & Small Talk | 4.00 | 4.08 | 0.76 | 0.58 | 11, 12 | ¡0.001∗ | 0.661, 1.000 |

| DF#2: Embodied Personified Voices | 4.00 | 4.25 | 0.72 | 0.52 | 10, 12 | 0.003∗ | 0.562, 1.000 |

| DF#3: Embodied Narration Style | 4.50 | 4.25 | 0.92 | 0.85 | 10, 12 | 0.003∗ | 0.562, 1.000 |

| DF#4: Embodied Vibratory Feedback | 4.00 | 4.25 | 0.72 | 0.52 | 10, 12 | 0.003∗ | 0.562, 1.000 |

| DF#5: Location of Speech Output | 4.00 | 4.25 | 0.60 | 0.35 | 11, 12 | ¡0.001∗ | 0.661, 1.000 |

5.3. Trustworthiness of I3Ms

5.3.1. HCTM Scales.

| Human Computer Trust Model | |||||||||||

| Descriptive Statistics | Wilcoxon Test | ||||||||||

| HEM I3Ms | LEM I3Ms | ||||||||||

| Scale | M | SD | V | M | SD | V | z-score | p-value | |||

| Perceived Risk | 1.00 | 1.58 | 0.72 | 0.52 | 2.00 | 1.86 | 0.98 | 0.95 | 2.428 | 0.008∗ | |

| Benevolence | 4.00 | 4.14 | 0.95 | 0.90 | 4.00 | 4.03 | 0.90 | 0.80 | 1.633 | 0.051∧ | |

| Competence | 5.00 | 4.50 | 0.55 | 0.31 | 4.00 | 4.31 | 0.66 | 0.43 | 2.646 | 0.004∗ | |

| Reciprocity | 4.00 | 4.31 | 0.70 | 0.49 | 4.00 | 4.08 | 0.80 | 0.63 | 1.929 | 0.027∗ | |

| Did you find that the I3M felt trustworthy? | |||||||||||

| Trustworthiness | 5.00 | 4.58 | 0.49 | 0.24 | 5.00 | 4.50 | 0.65 | 0.42 | 0.577 | 0.282 | |

HEM I3Ms outperformed LEM I3Ms across all four HCTM subscales (Table 7) – perceived risk222The perceived risk subscale relates to the willingness of a user to engage with a system despite possible risks. A lower perceived risk score is desired, signifying that the user is more willing to interact with the system. ( -0.28), benevolence ( +0.11), competence ( +0.19), and reciprocity ( +0.23). Wilcoxon results were significant for three HCTM subscales – perceived risk (), competence (), and reciprocity (). The remaining subscale, benevolence, was marginally significant ().

5.3.2. How Trustworthy Were The I3Ms?

When rating the trustworthiness of each I3M (Table 7), results were less clear. A minor increase in mean score for HEM I3Ms ( +0.08) over LEM I3Ms was observed; however, this difference was nonsignificant (). In the post-activity interview ranking, the majority of the participants () indicated that there was no major discernible difference in trust between the HEM and LEM models. The remaining participants () ranked the HEM I3Ms higher. A binomial test showed that this result was nonsignificant (, , ).

Participants described feeling indecisive, often basing their interpretation of trust on the believability of the information provided by the models. P2 noted that both models were “just giving [them] facts” and that their manner of acting or speaking did not matter. P4 explained that as both HEM and LEM I3Ms were “accessing information from the internet … that [they] trusted it to only access certain [appropriate] things”. Despite reporting no major differences in their ratings, P11 suggest that “incorrect facts, [when] talking in first person reduces trust”, while P5, who also rated no difference, focused on the salient physical design of the I3Ms, expressing concern about ‘the height of the rocket … knocking it over”.

5.3.3. Impact of Design Factors on Trust.

Participants were divided on how the individual HEM design factors influenced their perceptions of the trustworthiness of the I3Ms (Table 8). Embodied vibratory feedback elicited the highest mean score, while embodied narration style scored the lowest. Apart from embodied vibratory feedback, which was marginally significant (, , ), all other design factors were nonsignificant.

| Did the design factors impact your perception of how trustworthy the HEM I3M was? | |||||||

| Design Factor | Descriptive Statistics | Binomial Test | |||||

| M | SD | V | k, n | p-value | 95th CI | ||

| DF#1: Introductions & Small Talk | 4.00 | 3.67 | 0.62 | 0.39 | 7, 12 | 0.158 | 0.315, 1.000 |

| DF#2: Embodied Personified Voices | 3.50 | 3.67 | 0.75 | 0.56 | 6, 12 | 0.335 | 0.245, 1.000 |

| DF#3: Embodied Narration Style | 3.00 | 3.42 | 0.95 | 0.91 | 5, 12 | 0.562 | 0.181, 1.000 |

| DF#4: Embodied Vibratory Feedback | 4.00 | 3.83 | 0.69 | 0.47 | 8, 12 | 0.057∧ | 0.391, 1.000 |

| DF#5: Location of Speech Output | 3.50 | 3.67 | 0.75 | 0.56 | 6, 12 | 0.335 | 0.245, 1.000 |

6. Discussion

6.1. Hypothesis #1: HEM I3Ms are perceived as more embodied than LEM I3Ms

Participants felt that HEM I3Ms were more lively, with an increased perception of embodiment compared to the LEM I3Ms. When ranking the I3Ms on liveliness, 8/12 selected HEM I3Ms (Section 5.1.2). This was also statistically significant, with most participants providing emphatic explanations for their selections, supporting H#1.

Results from the Godspeed Questionnaire Series also supported H#1 and allowed us to explore different dimensions and key aspects of embodiment, adding nuance to our understanding of how the I3Ms appeared embodied (Table 2). All GQS subscales were statistically significant. It is particularly noteworthy that the anthropomorphism and animacy subscales were rated more positively for HEM I3Ms, as these subscales deal with the attribution of human-like behaviours and perception of life (Bartneck et al., 2009), which are key components of embodiment. The likeability and intelligence subscales were also perceived more favourably for HEM I3Ms.

Overall, our findings and participant comments help to support H#1 that BLV users do perceive I3Ms configured with HEM design factors as more embodied, ‘present’, and ‘lively’. These findings align with work on the embodiment of other interfaces in non-BLV contexts, including robots (Kontogiorgos et al., 2020) and conversational agents (Luria et al., 2017; Shamekhi et al., 2018; Luria et al., 2019), which has found that imbuing machines with human-like behaviours can enhance their perceived embodiment and sense of presence, creating a sense of ‘being there’ (Shamekhi et al., 2018).

6.2. Hypothesis #2: HEM I3Ms are perceived as more engaging than LEM I3Ms

Participants rated higher levels of engagement with HEM I3Ms compared to LEM I3Ms. When ranking which I3M was more engaging, the overwhelming majority (10/12) selected HEM (Section 5.2.2). These differences were statistically significant, supporting H#2.

We used the User Engagement Scale and Playful Experiences Questionnaire (Table 4) to explore different dimensions of engagement to add to and help contextualise our understanding of how engaging participants found the I3Ms. The UES-SF indicated that HEM I3Ms had higher average scores than LEM I3Ms across 3/4 subscales. Of these subscales, two were statistically significant, and one was marginally significant. These subscales focus on the extent to which users feel absorbed in an interaction (focused attention) and the usability/negative affect experienced during interactions (perceived usability), both of which are critical dimensions of engagement (O’Brien et al., 2018). The aesthetic appeal subscale, which the researchers adjusted to be more meaningful in BLV contexts – encompassing aesthetics beyond those purely visual – was also statistically significant. This is particularly noteworthy, with HEM-configured I3Ms providing more engaging sensory experiences. The reward subscale, centred on valued experiential outcomes, was not significant with respect to our hypothesis, and was the only subscale where LEM I3Ms received higher mean scores. This may indicate that the additional presence and feedback of HEM I3Ms could, in some circumstances, reduce initial curiosity.

It is our belief that several PLEXQ subscales may have had reduced meaning to participants, potentially as a result of the controlled nature and limited time exposure of the study. For example, subscales that focus on finding something hidden (discovery) or unwinding through playful experiences (relaxation) may have been less relevant. Interestingly, one subscale, exploration, elicited higher mean values for LEM I3Ms, possibly for similar reasons to the UES-SF reward subscale. Despite this, several PLEXQ results added support to H#2, with subscales related to excitement (sensation), enjoyment/amusement (humor), and power (control) being more favourably observed with HEM I3Ms.

Across all tasks, participants spent more time interacting with the HEM I3Ms and performed a greater number of interactions compared to the LEM I3Ms. We feel this was particularly noteworthy during the undirected free play task, which was designed to mimic real-world use. Time spent and the number of interactions were statistically significant and marginally significant, respectively. These metrics have previously been used as behavioural measures of engagement in HCI (O’Brien and Lebow, [n. d.]; Doherty and Doherty, 2018), and provide further evidence that participants were more engaged with the HEM I3Ms. They also complement the UES-SF, which ties engagement to the depth of a user’s investment with a system (O’Brien, 2016).

Based on emphatic participant discussions and clear preferences when directly asked, our study provides evidence supporting H#2 that HEM I3Ms are perceived as more engaging. Our scale data adds important nuance to this understanding, with key UES-SF and PLEXQ subscales showing that HEM I3Ms were more favourably perceived. Although ratings for both HEM and LEM I3Ms were generally high, which aligns with works showing that BLV users find 3D-printed models and I3Ms engaging (Nagassa et al., 2023; Reinders et al., 2020; Shi et al., 2019), our results suggest that HEM I3Ms are perceived as even more engaging. More broadly, our findings align with prior work demonstrating that interfaces imbued with more human-like behaviours can positively impact end-user perceptions of engagement (Lester et al., 1997; Cassell et al., 1999; Shamekhi et al., 2018).

6.3. Hypothesis #3: HEM I3Ms are perceived as more trustworthy than LEM I3Ms

Our participants were mixed on whether there were any discernible differences in the trustworthiness of HEM and LEM I3Ms. Hesitance was observed when participants were asked to rank which I3M was the more trustworthy model, with 9/12 indicating no major discernible difference between them (Section 5.3.2). Participants expressed indecisiveness, often basing their interpretation of trust solely on the believability of the information output from the models, rather than their interactions with the I3Ms.

On the other hand, results from the Human-Computer Trust Model (Table 7) suggest that HEM I3Ms may lead to greater trust, as all four HCTM subscales were either significant () or marginally significant (). Results did, however, reveal only very minor differences in mean scores between HEM I3Ms and LEM I3Ms. Despite this, these scales relate to the willingness of users to engage with a system despite the possible risks (perceived risk), whether a system possesses the functionalities needed to depend on it (competence), and a willingness to spend more time using it when support situations arise (reciprocity). The benevolence subscale, which was marginally significant, focuses on whether users believe that a system has the abilities required to help them achieve their goals.

Despite the statistical significance of the HCTM results, we believe that, overall, our results provide only mixed support for Hypothesis H#3. It is the researchers’ view that the HCTM questionnaire and subjective ratings may have been interpreted differently by participants. While the scale data appears to have successfully captured different dimensions of trust based on interactions with the HEM/LEM models, many participants, when asked about the concept of trust subjectively (Section 5.3.2), focused solely on the believability of the information provided by the models, independent of their HEM/LEM state. They tended to prioritise believability of information over considerations as to whether their interactions with the models and their behaviours influenced perceptions of reliance or competence. Our findings also suggest that the HEM design factors tested do not appear to play a strong role in influencing trust. As visually embodied conversational agents have been shown to be subjectively more trustworthy in non-BLV contexts (Heuwinkel, 2013; Bickmore et al., 2013; Sidner et al., 2018; Shamekhi et al., 2018; Gulati et al., 2018), it is clear that more research is needed in order to better understand the impact of embodiment on trustworthiness of I3Ms for BLV users, particularly in real-world situations.

6.4. How Did The Design Factors Impact Embodiment, Engagement and Trust?

Broadly speaking, our embodiment design factors and their implementations were well-received, contributing to how embodied and engaging the HEM I3Ms were perceived. However, connections to trust were mixed. These are discussed below in order of importance.

DF#4: Embodied Vibratory Feedback. Participants shared their enthusiasm regarding haptics. Referring to the liveliness of the HEM I3Ms, P3 described how the use of haptics made them feel “more three-dimensional… realistic”. Participants also discussed how haptics made the HEM I3Ms more engaging, including P5, “[haptics] added an extra sense of interaction”. These emphatic reactions align with other work, where haptics added life to I3Ms (Reinders et al., 2023), and support the growing body of research exploring how haptics can create physically embodied, lifelike cues (Nie et al., 2012; Bevan and Stanton Fraser, 2015; Borgstedt, 2023).

DF#2: Embodied Personified Voices. Participants highlighted how unique voices shaped their perception of embodiment. P9 was emphatic about how it made the HEM model feel more alive, “[it] made [the components] seem like they were their own things”. P6 detailed how the one-for-one social presence of the HEM model gave components their own ‘character’. Regarding engagement, several participants found the voices helpful for tracking which I3M component was active and talking. P2 explained, “different voices … broke the [HEM] model up into separate parts, it defined the objects better”. P8 reflected, “[a] single voice does not stack up”. This aligns with work by Choi et al. (Choi et al., 2020)’s finding that many BLV users value human-like conversation with agents.

DF#3: Embodied Narration Style. Participants frequently linked first-person narration to engagement and embodiment, creating a transformative experience. P2 described it as making interactions feel “like you were talking to someone, vs it talking to you”. Similarly, P4 remarked that it made conversations seem “more human-like … on a one-on-one basis”. While P9 noted that it enhanced HEM presence, they initially felt that “… it was a bit strange anthropomorphizing [a system]… like when Microsoft talks like a human”. The positive reception of first-person narration aligns with other research that has found that users anthropomorphise agents by using first and second-person pronouns (Liao et al., 2018; Coeckelbergh, 2011).

DF#5: Location of Speech Output. Participants noted that closer coupling with the I3M and its speaker impacted engagement. P4 found the HEM I3M’s audio output “easier to take in”, while P8 appreciated being able to “focus more directly on [the HEM I3M]”. P3 described HEM as “more intimate and engaging”. The researchers believe that embedding speakers inside each printed component could further enhance embodiment and engagement. P5 suggested this would make the I3Ms “feel more alive”, echoing feedback from previous work (Reinders et al., 2023). One possibility may be to use ultrasonics to project speech output (Iravantchi et al., 2020), acting as digital ventriloquism.