Is It Still Fair? Investigating Gender Fairness in Cross-Corpus Speech Emotion Recognition

Abstract

Speech emotion recognition (SER) is a vital component in various everyday applications. Cross-corpus SER models are increasingly recognized for their ability to generalize performance. However, concerns arise regarding fairness across demographics in diverse corpora. Existing fairness research often focuses solely on corpus-specific fairness, neglecting its generalizability in cross-corpus scenarios. Our study focuses on this underexplored area, examining the gender fairness generalizability in cross-corpus SER scenarios. We emphasize that the performance of cross-corpus SER models and their fairness are two distinct considerations. Moreover, we propose the approach of a combined fairness adaptation mechanism to enhance gender fairness in the SER transfer learning tasks by addressing both source and target genders. Our findings bring one of the first insights into the generalizability of gender fairness in cross-corpus SER systems.

Index Terms:

speech emotion recognition, fairness, cross-corpus, transfer learning.I Introduction

Speech emotion recognition (SER) has made significant strides by reshaping the landscape of human-computer interaction and enabling emotion-aware applications [1, 2, 3]. Having more generalized SER models has led to growing interest in cross-corpus SER modeling, which aims at developing models capable of generalizing across diverse corpora and linguistic contexts. This entails many strategies to compensate features, domains, or label mismatches, using techniques such as transfer, semi-supervised, and few-shot learning [4, 5, 6, 7]. While these models have shown remarkable recognition performance on the target corpus but what about the fairness in performance?

In recent years, there has been an increasing emphasis on the trustworthiness of intelligent models, particularly concerning aspects such as privacy, fairness, safety, etc. Specifically, The notion of fairness has gained substantial attention and become a focal point of extensive discussions. Numerous studies within the SER domain have recognized the issue of fairness, highlighting the model exhibits biases with sensitive attributes. To address these fairness concerns, various techniques have been proposed, including the use of adversarial networks, reweighing schemes, and the development of loss functions designed to mitigate biases [8, 9, 10, 11]. Some studies have specifically aimed at neutralizing different attributes, such as gender or age, within SER approaches [12, 10]. Most of these efforts have been focused on single-dataset (source-only) scenarios. However, the unfairness can manifest across multiple levels including sample, data distribution, modality, labeling, and domain-related aspects [9, 10, 12]. The effectiveness of the model's fairness performance when deployed on another corpus has received limited, if any, exploration in the context of cross-domain SER scenarios. Emotions, with their intricate nature and cultural influences, manifest a diverse spectrum of expressions and interpretations across different domains and subjects. Hence, it becomes uncertain whether a SER model excelling in one emotional domain will demonstrate similar performance in another, particularly when accounting for cultural-linguistic variations.

However, a SER model tailored to a specific source corpus may introduce unfairness related to sensitive attributes like gender or age in the target corpus, amplifying some emotions while neglecting others. Cross-corpus SER models, designed for generalization across domains or cultural contexts [13, 14], must also ensure fairness across diverse demographics within these domains. This study emphasizes the dual considerations of performance and fairness in cross-corpus SER models. We investigate the generalizability of fairness in cross-lingual SER models, employing a few state-of-the-art transfer learning and fairness techniques from the literature. Gender is specifically examined as the protected attribute of interest. Our study evaluates whether a cross-lingual SER model, which demonstrates fairness within its source corpus by showing no gender sensitivity, maintains this fairness when applied to the target corpus. This study introduces a novel perspective in cross-corpus SER, underscoring the importance of integrating fairness considerations alongside performance when deploying SER systems across corpora.

In this study, for cross-corpus SER fairness investigations, we utilize two large naturalistic speech corpora, MSP-Podcast [15] and BIIC-Podcast [16]. Our experimental results reveal two insights, first despite exhibiting source-specific fairness with various transfer learning approaches, cross-corpus SER generalization can introduce gender biases for the target corpus which questions the fairness of the SER model. Second, we propose a simple fairness mechanism of combined fairness adaptation that integrates fairness towards the source and the target corpus while modeling a cross-corpus SER. Our proposed cross-corpus setting based Combined Fairness Adaptation (CFA) idea achieves significantly better gender fairness (GF) compared to the considered baselines of this study. The preliminary findings of this study demonstrate the effectiveness and necessity of research in this direction.

| Gender-Specific Performance | Fairness | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Neutral | Happiness | Anger | Sadness | Neutral | Happiness | Anger | Sadness | |||||||||

| M | F | M | F | M | F | M | F | SP | EO | SP | EO | SP | EO | SP | EO | |

| FS [5] | 67.08 | 69.66 | 66.69 | 70.00 | 74.22 | 70.94 | 70.54 | 65.58 | 0.305 | 0.215 | 0.385 | 0.355 | 0.343 | 0.335 | 0.339 | 0.423 |

| 58.44 | 48.33 | 51.93 | 64.47 | 57.74 | 70.42 | 51.69 | 62.22 | 0.528 | 0.625 | 0.512 | 0.624 | 0.558 | 0.599 | 0.503 | 0.435 | |

| GAN [17] | 61.47 | 67.67 | 57.23 | 62.73 | 69.50 | 70.30 | 59.45 | 59.92 | 0.348 | 0.302 | 0.386 | 0.304 | 0.306 | 0.329 | 0.304 | 0.396 |

| 58.33 | 44.44 | 64.39 | 47.57 | 63.72 | 47.86 | 46.08 | 66.04 | 0.570 | 0.667 | 0.559 | 0.632 | 0.436 | 0.465 | 0.417 | 0.512 | |

| PA [7] | 75.22 | 73.16 | 64.01 | 68.85 | 65.41 | 68.29 | 60.36 | 65.06 | 0.352 | 0.341 | 0.375 | 0.351 | 0.380 | 0.384 | 0.356 | 0.322 |

| 65.11 | 57.99 | 63.58 | 57.75 | 58.01 | 71.79 | 63.57 | 52.51 | 0.541 | 0.515 | 0.540 | 0.565 | 0.534 | 0.459 | 0.567 | 0.549 | |

| PA-FairW [18] | 73.17 | 79.42 | 74.37 | 75.73 | 69.88 | 60.12 | 74.02 | 63.88 | 0.133 | 0.253 | 0.195 | 0.205 | 0.279 | 0.242 | 0.253 | 0.221 |

| 69.43 | 57.37 | 59.72 | 69.42 | 48.23 | 64.09 | 65.33 | 52.26 | 0.346 | 0.476 | 0.462 | 0.498 | 0.335 | 0.428 | 0.412 | 0.511 | |

| PA-ReW [19] | 73.25 | 74.05 | 73.91 | 70.43 | 59.93 | 63.39 | 65.63 | 68.01 | 0.121 | 0.256 | 0.135 | 0.194 | 0.159 | 0.168 | 0.277 | 0.224 |

| 67.25 | 59.05 | 58.91 | 69.43 | 49.93 | 58.39 | 54.63 | 65.01 | 0.320 | 0.401 | 0.411 | 0.512 | 0.321 | 0.416 | 0.404 | 0.415 | |

II Fairness Generalizability Analyses

II-A Naturalistic Corpora

The MSP-Podcast (MSP-P) [15] corpus contains 166 hours of emotional American English speech (v1.10), sourced from audio-sharing websites. This resource is valuable for SER research due to its extensive size and emotionally balanced dialogues from different individuals. It includes annotations for primary and secondary emotions and emotional attributes. We select 49,018 samples from this corpus, evenly divided between 24,466 male and 24,552 female subject samples.

The BIIC-Podcast (BIIC-P) [16] corpus is a SER database (v1.0) in Taiwanese Mandarin. It contains 157 hours of speech samples from podcasts and follows a data collection methodology similar to the MSP-P corpus. The annotations cover primary and secondary emotional categories, as well as three emotional attributes. Here, we utilize 18,706 samples of the data with 9,654 male and 9,326 female subject samples.

This work considers four primary emotion categories (Neutral, Happiness, Anger, and Sadness) samples for binary SER tasks with predefined train-valid-test sets of the corpora. For a detailed analysis, we analyze each emotion individually rather than as a 4-category SER task.

II-B Experimental Settings and Evaluation Metrics

In our experiments, we use MSP-P as the emotion-labeled source corpus and BIIC-P as the target corpus. We employ popular wav2vec2.0 [20] feature vectors along with different transfer learning and fairness considering architectures from the literature to investigate fairness generalizability on the target corpus in cross-corpus SER models. Optimization is performed using the Adam optimizer with a learning rate of 0.0001 and a decay factor of 0.001, while training involves back-propagation with binary cross-entropy loss. Training proceeds for a maximum of 50 epochs with a batch size of 64 and includes early stopping. Evaluation of SER model performance is based on the Unweighted Average Recall (UAR). To measure fairness, we utilize established metrics: Statistical Parity () and Equal Odds (). This ranges from -1 to 1, where values closer to 0 indicate better fairness. These metrics are commonly used in fairness studies [9, 21]. ensures the same proportion of positive outcomes across groups, regardless of their true characteristics (e.g., gender, race). requires the model to have equal true positive and false positive rates for different groups, ensuring fair performance. By analysing both we are making more consistent investigation.

II-C Fairness Generalizability Evaluations

To examine the generalizability of gender fairness (GF) in cross-corpus SER models, we perform cross-test fairness assessments using state-of-the-art (SOTA) methods in two stages. First, we evaluate several cross-corpus techniques using effective SOTA transfer learning (TL) methods to assess GF generalizability. Second, we apply fairness approaches from the literature to the best-performing TL model to assess GF generalizability after incorporating a source-specific fairness approach. Table I summarizes SER performances (UAR) and fairness evaluations ( and ) across four primary emotions for male and female speakers. Results are averaged from ten experiment repetitions. To assess whether the cross-corpus model that demonstrates GF on the source also maintains GF on the target corpus (beyond just performance), Table I presents the results for both the MSP-P and BIIC-P test sets for the respective cross-corpus model.

GF Generalizability with Various TL Approaches: To analyze GF generalizability using TL approaches, we consider three SOTA methods: Few-shot (FS) [5], which leverages knowledge from source corpora and adapts the model to the target domain, GAN-based (GAN) [17], which employs adversarial training, and phonetically-anchored (PA) [7], which utilizes learning in a shared phonetic space for SER models. Table I highlights the gender-specific performance of these TL models, revealing disparities between males and females over the target BIIC-P corpus. For instance, in the Anger category, UARs for males are 57.74%, 63.72%, and 58.01%, and for females, 70.42%, 47.86%, and 71.79% for FS, GAN, and PA models, respectively. This performance discrepancy is also evident in the source MSP-P test set. Fairness metrics in Table I show gender unfairness across emotional classes over both corpora test sets. For example, in Anger, the values are 0.380 and 0.534 for PA models for MSP-P and BIIC-P, respectively. This behavior can be seen in other emotion categories as well. The results highlight the challenges in GF generalizability for cross-corpus SER TL models, emphasizing the need to address fairness in such scenarios distinctly.

Cross-Corpus GF Generalizability with Fairness Techniques: Given our emphasis on GF in cross-corpus SER settings, we consider two state-of-the-art fairness methods from the literature: Fairway [18], Reweigh [19]. These fairness methodologies are source-specific. However, existing literature lacks fairness techniques specifically designed for cross-corpus settings. Therefore, we proceed to test these existing fairness techniques in conjunction with our best-performing TL method (PA) from the previous section. Table II shows the results of PA-FairW (PA +Fairway) and PA-ReW (PA + Reweigh). Upon analyzing the performance of these models over MSP-P and BIIC-P test sets, we observe that although there is a decrease in the and values for MSP-P (indicating improvement), but no significant reduction is observed for BIIC-P. For instance, for Anger, for MSP-P, (PA-ReW) yields and values of 0.159 and 0.168, respectively, while on BIIC-P set, the and values are still high with 0.321 and 0.416, respectively (shows no improvement). Similar behavior is observed in other emotion categories as well. These findings indicate that even if the model exhibits better source-specific GF, these approaches fail to generalize GF across cross-evaluations.

III Fair Cross-Corpus SER

Building on insights from Section II, cross-corpus SER models face challenges with GF generalizability in target corpora. To address this, we propose a strategy (Fig. 1) for the GF generalized cross-corpus SER. The architecture has two key blocks: an emotion classification (EC) block and a combined fairness adaptation (CFA) block. For testing our idea, in the EC block, we use a basic SER architecture; processing wav2vec2.0 [20] features with a transformer and four fully-connected layers for binary emotion classification. The CFA block adds an auxiliary adversarial network for gender classification (GC) with a reverse gradient layer which transforms EC features into a gender-neutral state by minimizing GC's ability to predict gender from both corpora. Other experimental settings and evaluation metrics match those in Section II-B.

The training process unfolds in stages. Initially, we train the primary SER model on the source corpus, focusing on capturing gender-neutral emotional features while disregarding gender-specific information. Subsequently, we create mini-batches that combine data from both the MSP-P and BIIC-P for gender-neutral adversarial training. The EC aims to generate gender-neutral embeddings, while the GC aims to make accurate gender predictions. This training process uses a binary cross-entropy loss function for classifying gender, penalizing the classifier for making gender predictions based on shared feature representations. In each training iteration, we update both the primary model's weights and the gender classifier's parameters. The inserted reverse gradient layer in learning modifies the gradients backpropagated from the GC branch to make the shared feature representations more gender-neutral. Equation 1 shows the binary classification loss used for EC and GC tasks.

|

|

(1) |

where is the intermediate embeddings produced by the primary EC model. be the true labels for emotions (0 for target, 1 for other) for EC and the gender of speakers (0 for male, 1 for female) for GC task. represents the EC or GC, be the predicted target attribute probability distribution given , and be the parameters. To enforce a similarity between source and target genders, we use a contrastive loss that encourages samples with the same gender to be close in the feature space and those with different genders to be distant from each other. The goal is to make the learned representations of gender-related features similar between the source and target domains. The Equation 4 is used to integrate a source and target similarity loss.

| (2) |

where D is the Euclidean distance between the embeddings of samples and . m is the margin and here is fixed to 1. This loss encourages the model to minimize the distance between samples with the same gender () and maximize the distance between samples with different genders (). Equation 5 illustrates the total loss for the PA-CFA model, which combines the EC loss and the GC loss.

| (3) |

where and are the binary emotion classification and gender classification loss. is the loss for the gender similarity. and are set to 0.5. Here, during training, target gender labels are needed, but not during inference.

| Gender-Specific Performance | Fairness | |||||||||||||||

| Neutral | Happiness | Anger | Sadness | Neutral | Happiness | Anger | Sadness | |||||||||

| M | F | M | F | M | F | M | F | SP | EO | SP | EO | SP | EO | SP | EO | |

| PA-ReW | 72.63 | 75.96 | 68.82 | 67.63 | 68.42 | 70.05 | 63.67 | 68.14 | 0.161 | 0.159 | 0.198 | 0.257 | 0.111 | 0.203 | 0.154 | 0.248 |

| 59.03 | 68.44 | 59.31 | 64.57 | 52.98 | 45.37 | 61.81 | 53.03 | 0.391 | 0.401 | 0.412 | 0.469 | 0.363 | 0.423 | 0.398 | 0.402 | |

| Base-CFA | 73.25 | 70.74 | 75.30 | 71.02 | 75.33 | 76.32 | 71.18 | 73.70 | 0.256 | 0.264 | 0.190 | 0.164 | 0.130 | 0.153 | 0.132 | 0.133 |

| 67.60 | 64.88 | 63.05 | 62.40 | 69.28 | 71.56 | 64.94 | 60.86 | 0.335* | 0.294** | 0.212** | 0.263** | 0.256** | 0.205** | 0.193** | 0.275** | |

| PA-CFA | 74.44 | 71.33 | 76.93 | 70.47 | 79.74 | 82.42 | 70.69 | 74.32 | 0.211 | 0.231 | 0.119 | 0.216 | 0.107 | 0.195 | 0.106 | 0.201 |

| 68.24 | 65.75 | 61.55 | 64.83 | 68.15 | 70.14 | 65.36 | 62.35 | 0.205** | 0.211** | 0.223 ** | 0.206** | 0.260** | 0.287** | 0.236** | 0.241** | |

| PA-Adv | 74.63 | 71.91 | 75.04 | 71.06 | 72.61 | 76.08 | 69.99 | 72.35 | 0.295 | 0.301 | 0.21 | 0.234 | 0.192 | 0.205 | 0.184 | 0.217 |

| 62.89 | 66.05 | 63.94 | 66.38 | 70.18 | 68.84 | 61.23 | 64.21 | 0.281 | 0.345 | 0.273 | 0.357 | 0.322 | 0.306 | 0.251 | 0.365 | |

To align genders between source and target domains, we apply a contrastive loss to encourage similar gender samples to be closer in feature space and dissimilar ones to be farther apart. Equation 4 illustrates how we integrate this source and target similarity loss.

| (4) |

where D is the Euclidean distance between the embeddings of samples and . m is the margin and here is fixed to 1. This loss encourages the model to minimize the distance between samples with the same gender () and maximize the distance between samples with different genders (). Equation 5 illustrates the total loss for the PA-CFA model, which combines the EC loss and the GC loss.

| (5) |

where and are the binary emotion classification and gender classification loss. is the loss for the gender similarity. and are set to 0.5. Here, during training, target gender labels are needed, but not during inference.

IV Experiment and Analyses

Table II shows gender-specific performance and fairness metrics for Base-CFA with a basic SER model and PA-CFA, combined with the PA TL model. Table II demonstrates that on cross-evaluation (on BIIC-P test set), our proposed CFA baseline Base-CFA method outperforms PA-ReW in terms of GF generalizability across most emotion categories. For example, for Anger, Base-CFA achieves balanced gender-specific performance (M:69.28%, F:71.56%) compared to PA-ReW (M:52.98%, F:45.37%). In terms of fairness metrics, CFA shows a significant reduction in both and across all emotions compared to the PA-ReW. For instance, the SP value for Anger decreases by 0.107, reflecting improved GF. Additionally, PA-CFA, which combines phonetic-based constraints with CFA, captures language-specific information in cross-lingual tasks, exceeds the performance of PA-ReW. For example, for Anger, drops from 0.363 to 0.260, a consistent trend across both fairness metrics.

We validate our approach by training a model without the gender similarity loss (), called PA-Adv. Table II shows that PA-CFA still achieves better fairness. For example, in Anger, PA-Adv has a of 0.322, while PA-CFA achieves 0.260, highlighting the effectiveness of in improving fairness by aligning gender features across corpora. For reference, Table III presents the overall UAR performance of the CFA model. From Table III, we can see that PA-CFA shows a slight decrease in performance compared to PA, but it remains competitive over all emotion categories. We believe this minor performance drop is a worthwhile trade-off for improved GF. For reference, the overall UAR represents recall across all classes, irrespective of gender. In Table III, UAR values are shown separately for males and females, so the overall UAR shown in Table III is not an average of gender-specific UARs.

Analysis: We utilize t-SNE plots to explore gender attribute feature spaces in PA-CFA and PA-ReW, shown in Figure 2, aiming to understand why PA-CFA performs better. In Figure 2(a), comparing Anger in Figure 2(a) to Figure 2(b), we see distinct male-female clusters in 2(a) and a mixed distribution in 2(b). This indicates coexisting male and female features from different corpora, across all emotions studied. It underscores the importance of integrating CFA and questions the effectiveness of source-specific fairness in cross-corpus SER.

| Neutral | Happiness | Anger | Sadness | |

|---|---|---|---|---|

| PA | 74.53 | 74.87 | 76.46 | 72.37 |

| PA-CFA | 72.97 | 72.37 | 75.30 | 70.82 |

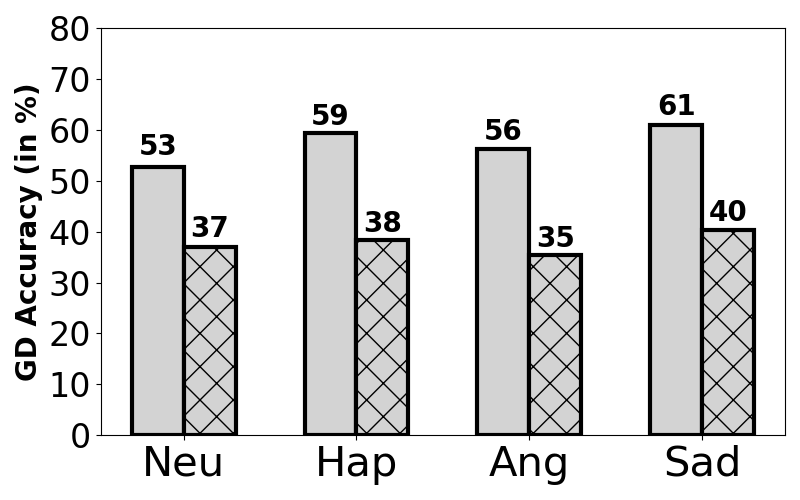

We also analyze embeddings from both the PA-ReW and proposed PA-CFA models to assess gender-related information across the MSP-P and BIIC-P contexts. Figure 3 presents the gender detection (GD) accuracy, with MSP-P on the left and BIIC-P on the right. PA-ReW exhibits varying GD accuracy: lower for MSP-P and higher for BIIC-P, suggesting residual gender information specific to BIIC-P despite lower MSP-P performance. Conversely, PA-CFA shows improved gender neutralization, with similar GD accuracy across both corpora (e.g., for Anger, 36% MSP-P, 35% BIIC-P in Figure 3(b)). In PA-CFA, there are improvements in emotions like Neutral and Anger for MSP-P GD accuracy compared to PA-ReW, reflecting compensation in MSP-P performance due to enhanced gender fairness across MSP-P and BIIC-P corpora.

V Conclusion

This research addresses overlooked fairness concerns in cross-corpus SER, particularly focusing on gender neutrality. Our findings reveal that cross-corpus SER models, while fair within their source corpus, introduce biases when generalized across different corpora. We propose an initial approach, combined fairness adaptation (CFA), to enhance gender neutrality across both source and target corpora in emotion transfer tasks. Initial experiments demonstrate the efficacy of our approach in creating gender-fair cross-corpus SER systems. Future research will refine our fairness mechanism through feature-side analysis to pinpoint specific areas where fairness issues arise in cross-corpus SER settings.

References

- [1] L. Devillers, C. Vaudable, and C. Chastagnol, ``Real-life emotion-related states detection in call centers: a cross-corpora study,'' in Eleventh Annual Conference of the International Speech Communication Association, 2010.

- [2] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, u. Kaiser, and I. Polosukhin, ``Attention is all you need,'' in Proceedings of the 31st International Conference on Neural Information Processing Systems, ser. NIPS'17. Red Hook, NY, USA: Curran Associates Inc., 2017, p. 6000–6010.

- [3] J. Acosta, ``Using emotion to gain rapport in a spoken dialog system,'' Ph.D. dissertation, University of Texas at El Paso, El Paso, TX, USA, December 2009.

- [4] S. Parthasarathy and C. Busso, ``Semi-supervised speech emotion recognition with ladder networks,'' IEEE/ACM transactions on audio, speech, and language processing, vol. 28, pp. 2697–2709, 2020.

- [5] Y. Ahn, S. J. Lee, and J. W. Shin, ``Cross-corpus speech emotion recognition based on few-shot learning and domain adaptation,'' IEEE Signal Processing Letters, vol. 28, pp. 1190–1194, 2021.

- [6] S. Latif, R. Rana, S. Khalifa, R. Jurdak, and B. W. Schuller, ``Self supervised adversarial domain adaptation for cross-corpus and cross-language speech emotion recognition,'' IEEE Transactions on Affective Computing, 2022.

- [7] S. G. Upadhyay, L. Martinez-Lucas, B.-H. Su, W.-C. Lin, W.-S. Chien, Y.-T. Wu, W. Katz, C. Busso, and C.-C. Lee, ``Phonetic anchor-based transfer learning to facilitate unsupervised cross-lingual speech emotion recognition,'' in ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023, pp. 1–5.

- [8] A. Coston, K. N. Ramamurthy, D. Wei, K. R. Varshney, S. Speakman, Z. Mustahsan, and S. Chakraborty, ``Fair transfer learning with missing protected attributes,'' in Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, 2019, pp. 91–98.

- [9] M. Schmitz, R. Ahmed, and J. Cao, ``Bias and fairness on multimodal emotion detection algorithms,'' arXiv preprint arXiv:2205.08383, 2022.

- [10] W.-S. Chien and C.-C. Lee, ``Achieving fair speech emotion recognition via perceptual fairness,'' in ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023, pp. 1–5.

- [11] G. Maheshwari and M. Perrot, ``Fairgrad: Fairness aware gradient descent,'' Transactions on Machine Learning Research, 2023.

- [12] C. Gorrostieta, R. Lotfian, K. Taylor, R. Brutti, and J. Kane, ``Gender de-biasing in speech emotion recognition.'' in Interspeech, 2019, pp. 2823–2827.

- [13] G. Costantini, E. Parada-Cabaleiro, D. Casali, and V. Cesarini, ``The emotion probe: On the universality of cross-linguistic and cross-gender speech emotion recognition via machine learning,'' Sensors, vol. 22, no. 7, p. 2461, 2022.

- [14] M. A. Pastor, D. Ribas, A. Ortega, A. Miguel, and E. Lleida, ``Cross-corpus training strategy for speech emotion recognition using self-supervised representations,'' Applied Sciences, vol. 13, no. 16, p. 9062, 2023.

- [15] R. Lotfian and C. Busso, ``Building naturalistic emotionally balanced speech corpus by retrieving emotional speech from existing podcast recordings,'' IEEE Transactions on Affective Computing, vol. 10, no. 4, pp. 471–483, 2017.

- [16] S. G. Upadhyay, W.-S. Chien, B.-H. Su, L. Goncalves, Y.-T. Wu, A. N. Salman, C. Busso, and C.-C. Lee, ``An intelligent infrastructure toward large scale naturalistic affective speech corpora collection,'' in 2023 10th International Conference on Affective Computing and Intelligent Interaction (ACII). IEEE, 2023.

- [17] B.-H. Su and C.-C. Lee, ``Unsupervised cross-corpus speech emotion recognition using a multi-source cycle-gan,'' IEEE Transactions on Affective Computing, 2022.

- [18] J. Chakraborty, S. Majumder, Z. Yu, and T. Menzies, ``Fairway: a way to build fair ml software,'' in Proceedings of the 28th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, 2020, pp. 654–665.

- [19] F. Kamiran and T. Calders, ``Data preprocessing techniques for classification without discrimination,'' Knowledge and information systems, vol. 33, no. 1, pp. 1–33, 2012.

- [20] A. Baevski, Y. Zhou, A. Mohamed, and M. Auli, ``wav2vec 2.0: A framework for self-supervised learning of speech representations,'' Advances in Neural Information Processing Systems, vol. 33, pp. 12 449–12 460, 2020.

- [21] R. K. Bellamy, K. Dey, M. Hind, S. C. Hoffman, S. Houde, K. Kannan, P. Lohia, J. Martino, S. Mehta, A. Mojsilovic et al., ``Ai fairness 360: an extensible toolkit for detecting,'' Understanding, and Mitigating Unwanted Algorithmic Bias, vol. 2, 2018.