IRMA: Iterative Repair for graph MAtching

Abstract.

The alignment of two similar graphs from different domains is a well-studied problem. In many practical usages, there is no reliable information or labels over the vertices or edges, leaving structural similarity as the only information available to match such a graph. In such cases, one often assumes a small amount of already aligned vertices - called a seed. Current state-of-the-art scalable seeded alignment algorithms are based on percolation. Namely, aligned vertices are used to align their neighbors and gradually percolate in parallel in both graphs. However, percolation-based graph alignment algorithms are still limited in scale-free degree distributions.

We here propose ‘IRMA’ - Iterative Repair for graph MAtching to show that the accuracy of percolation-based algorithms can be improved in real-world graphs with a limited additional computational cost, and with lower run time when used in a parallel version. IRMA starts by creating a primary alignment using an existing percolation algorithm, then it iteratively repairs the mistakes in the previous alignment steps. We prove that IRMA improves on single-iteration algorithms. We then numerically show that it is significantly better than all state-of-the-art seeded graph alignment algorithms on the graphs that they tested.

In scale-free networks, many vertices have a very low degree. Such vertices have a high probability of erroneous alignments. We show that combining iterations with high recall but low precision in the alignment leads in the long run to higher recall and precision for the entire alignment.

1. Introduction

1.1. Graph Matching/alignment

In the simplest form of graph matching (GM - often denoted also Graph Alignment), one is given two graphs and known to model the same data (i.e., there is an equivalence between the graphs vertices). For example, may be the friendship graph from the Facebook social network and the friendship graph from the Twitter social network for the same people. In both cases, the vertices are users and there is an edge between two vertices if the corresponding users are friends in the relevant social network. An assumption common to all GM algorithms is that a friend in one network has a higher than random probability of being a friend in the second network.

The goal of GM is to create a bijection , such that maps a vertex in to a vertex in if they represent the same real-world entities. In the example above, should connect the profiles in Facebook and Twitter of the same person. We note by the ground-truth, i.e., the set of all vertices pairs that represent the same entity in both graphs. Given a bijection , if , and , and the edge , and , the common edge will be defined as a shared edge. The quality measure for the quality of is usually the number of shared edges.

Finding a full bijection is not always optimal, since some vertices may be absent from one of the two graphs. We thus look for a partial bijection: , such that the fraction of shared edges is maximal. A single edge bijection is obviously a simple solution to that. Thus, a trade-off between the number of shared edges and the fraction of mapped vertices is often required. Such a trade-off is obtained by adding constraints on the number of elements in .

1.2. Seeded Graph Matching

In the presence of limited initial information on the bijection, one can use seeded GM. In seeded GM, the input contains beyond also a small , which is a group of vertices pairs known to represent the same real-world entities in and in . A similar problem emerges when the vertices have additional information named labels or meta-data (malhotra2012studying(16), ; nunes2012resolving(20), ). For example, the users on Facebook and Twitter may have additional attributes, such as age, address, and user names. It is obvious that a user named “Bob Marley” in Facebook is much more likely to represent the same person as a user with the same name on Twitter than a user named “Will Smith”. Pairs of vertices with unique and similar attributes can be used as a seed. Even a very small seed can simplify the matching. Here, we focus on seeded GM based, with no labels over the vertices. We propose an iterative approach to seeded GM, which improves the accuracy of existing approaches. Specifically, we improve the ExpandWhenStuck algorithm (further denoted EWS) (EXPAND, ) using an Iterative Repair for graph MAtching (IRMA) algorithm. We show that the results are not only better than EWS, but also of all current seeded GM solvers.

2. Problem Statement

Formally, we assume two graph and , with no attributes on vertices or edges (in contrast for example with knowledge graphs - where each edge has a relation type, or labeled graphs, where each vertex has a label or attributes). We further assume a subset (defined for example as all vertices with a degree above some threshold). Given a bijection , where , and . The edge , is shared if . We are looking for the bijection maximizing the number of shared edges among all edges, s.t. . We focus on real world-graphs with scale-free degree distributions.

3. Related Work

GM is used in different disciplines. In social networking, GM can be used for the de-anonymization of data sets from different domains (korula2013efficient(14), ; yartseva2013performance(30), ). GM is used on proteins from different species in biology to detect functional equivalences (klau2009new(13), ; singh2008global(25), ), and to discover a resemblance between images in computer vision (torresani2008feature(26), ; egozi2012probabilistic(7), ; wiskott1997face(28), ).

The main progress in GM algorithms is based on machine–learning methods, and can be broadly divided into two main categories: profile-based and network-based methods. Profile-based methods rely on the vertices meta-data (e.g., username (zafarani2013connecting, ), spatio-temporal patterns (riederer2016linking, ), posts (goga2013exploiting, ), or writing style (narayanan2012feasibility, ), etc.) to link accounts across different sites. Network-based methods rely on the graph topological structure (koutra2013big, ; tan2014mapping, ; zhou2018deeplink, ). In general, machine learning based methods are characterized by a high run time, in both training and deployment. Multiple theoretical bounds for a GM solution were proposed (cullina2016improved, ; shirani2017seeded, ; cullina2017exact, ; kazemi2015can, ). They make use of several parameters such as seed size, degree distribution, graphs-overlap, etc. Polynomial run-time complexity algorithms were developed that in exchange for scalability may achieve a good alignment only under certain assumptions (fishkind2012seeded, ; mossel2020seeded, ; ding2021efficient, ; fishkind2019seeded, ). GM were also extensively discussed in the context of knowledge graphs, for example for cross-lingual entity alignment or joining entities that represent the object.

An important aspect of GM is scaling, since its practical use is often in large graphs. Current state-of-the-art scalable seeded GM methods are based on gradual percolation, starting from the seed and expanding through common neighbors. This class of algorithms is referred to as Percolation Graph Matching (PGM) methods (korula2013efficient(14), ; yartseva2013performance(30), ; henderson2011s(10), ). Despite having in some cases additional information in the form of labels, (henderson2011s(10), ) showed the crucial importance of relying on edges during the process of GM. Both (chiasserini2016social, ) and (EXPAND, ) present an improvement to (yartseva2013performance(30), ) and are currently among the state-of-the-art PGM algorithms.

We follow here the notations of EWS with minor changes. In the following text, we refer to the input graphs as and . When we mention a pair or , we always refer to a pair of vertices . We denote the pairs as neighboring pairs if the edge and the edge . Finally, a pair conflicts if it conflicts an existing pair in (i.e., and or vice versa).

In PGM algorithms, one maintains a score for each candidate pair, and uses the score to gradually build the set of the matched pairs - . Adding a constant value to the score (also called the mark) of all neighboring pairs of some given pair is called ‘spreading out marks’. is defined as the number of marks that received from other pairs until time , where the unit of time is the insertion of one pair to . As there is a high chance for two pairs to have the same amount of marks, we define to give a priority to pairs with similar degree:

| (1) |

for an infinitesimal , where is the degree of a vertex in graph .

In a nutshell, EWS (see code in Figure 1) starts by adding all the seed pairs into , and spreading out marks to all their neighbors in the appropriate graphs , and . Then, at each time step , EWS chooses a candidate pair that does not conflict , adds it to and spreads out marks to all its neighbors. When there are no pairs left with more than one mark (line 15 in Figure 1), EWS creates an artificial noisy seed (), and uses it to further spread out marks (line 6 in Figure 1). contains all pairs that are: 1) neighbors of matched pairs 2) do not conflict 3) never have been used to spread out marks. The novelty of EWS is the generation of an artificial seed whenever there are no more pairs with more than one mark. The artificial seed is mostly wrong. Yet, EWS manages to use it to match new correct pairs and continue the percolation. At the end of EWS, the set of mapped pairs is returned along with that contains counters of marks for all marked pairs. The last is not needed in EWS, but is used in IRMA. The main contribution of EWS is a dramatic reduction in the size of the required seed set for random networks (graph with vertices and a probability of for each edge). We here show that in scale free degree distribution, seeded GM algorithms still have a low resolution, and propose a novel method to address that.

ExpandWhenStuck 1: is the initial set of seed pairs, ; 2: is the set of used pairs 3: is the set of all marked pairs along with their number of marks 4:while () do 5: for all pairs do 6: Add the pair [u,v] to and add one mark to all of its neighbouring pairs; 7: end for 8: while there exists an unmatched pair with at least 2 marks in that does not conflict do 9: among those pairs select the one maximizing ; 10: Add p=[u,v] to the set ; 11: if [u,v] then 12: Add one mark to all of its neighbouring pairs and add the pair [u,v] to ; 13: end if 14: end while 15: all neighboring pairs [u,v] of matched pairs M s.t. [u,v], and ; 16:end while 17:return , ;

4. Novelty

Most seeded GM algorithm relay on mark spreading from known pairs to unknown pairs. Such algorithms rely on two basic assumptions. First, most true pairs have a large enough number of neighbors that can pass them marks. Second, false pairs have a low probability of receiving a large number of marks. Both assumptions fail in scale-free degree distribution networks. Random pairs of very high degree vertices will receive a lot of marks. In contrast, low degree true pairs will receive practically no marks. IRMA proposes solutions to these two limitations:

-

•

IRMA contains an iterative approach to correct for erroneous pairing. We show that even if a pair of vertices received a high number of marks. Over time, it will receive less mark on average than true pairs. Thus, an iterative approach is proposed to correct erroneous matching of vertices.

-

•

In order to reach low degree vertices, IRMA has an Expansion/Exploration phase allowing to pair low degree pairs, even with a low accuracy. We show that such a phase increases on the long run both the accuracy and the recall.

We show that these two elements drastically improve the performance of seeded GM.

5. Methods

5.1. Evaluation Methods and Stopping Criteria

We use precision and recall to evaluate the performance of algorithms: (i) Precision refers to the fraction of true matches in the set of matched vertices (i.e., pairs in that are in ) - , and (ii) Recall is the size of the intersect of and out of the size of - , where is the set of all pairs of vertices that represent the same entity in both of the graphs. To compare the performance of GM algorithms, we also report the F1–score. To approximate the quality of a solution during the run time, assuming no known ground truth - namely, given an input to the seeded-GM problem and two possible maps , we use as a score to compare the quality of their mapping -

5.2. Data Sets

For fully simulated graphs, we use the above sampling method over Erdos-Renyi graphs (Erdos-Renyi, ), defined as a graph with vertices, where every edge of the possible exists with a probability of . It is common to mark two graphs created by sampling from an Erdos-Renyi graph as (pedarsani2011privacy, ). To test our algorithm on graphs that better represent real-world data, yet to control their level of similarity, we used sampling over the following real-world graphs (further denoted as graph 1, graph 2, and so on, according to their order here (Table 1)).

| Number | Name | Nodes | Edges | Average degree |

| 1 | Fb-pages-media | 27,900 | 206,000 | 14 |

| 2 | Soc-brightkite | 56,700 | 212,900 | 7.8 |

| 3 | Soc-epinions | 26,600 | 100,100 | 7.9 |

| 4 | Soc-gemsec-HU | 47,500 | 222,900 | 9.4 |

| 5 | Soc-sign-Slashdot081106 | 77,300 | 516,600 | 12.1 |

| 6 | Deezer_europe_edges | 28,300 | 92,800 | 6.6 |

-

(1)

Fb-pages-media - Data collected about Facebook pages (November 2017). (http://networkrepository.com/fb-pages-media.php).

-

(2)

Soc-brightkite - Brightkite is a location-based social networking service provider where users shared their locations by checking-in. (http://networkrepository.com/soc-brightkite.php).

-

(3)

Soc-epinions - Controversial Users Demand Local Trust Metrics: An Experimental Study on epinions.com Community (http://networkrepository.com/soc-epinions.php).

-

(4)

Soc-gemsec-HU - The data was collected from the music streaming service Deezer (November 2017). These datasets represent friendship graphs of users from 3 European countries. (http://networkrepository.com/soc-gemsec-HU.php).

-

(5)

Soc-sign-Slashdot081106 - Slashdot Zoo signed social network from November 6 2008. It is noteworthy that this graph was also used in (EXPAND, ) (http://networkrepository.com/soc-sign-Slashdot081106.php).

-

(6)

Deezer_europe_edges - A social network of Deezer users which was collected from the public API in March 2020. (http://snap.stanford.edu/data/feather-deezer-social.html).

5.3. IRMA

The EWS is greedy. At step , it adds to the pair maximizing . If at time the algorithm chooses some wrong pair that obeys for the correct pair , may receive many marks from neighbors later in the algorithm, such that . We argue that such a scenario is highly likely, yet, will never get into as it conflicts with another pair already in .

We thus propose to use the scores at the end of the algorithm () to improve the matching. We suggest an iterative improvement of the match, using the final score of the previous iteration. is defined as the number of marks gained by pair during the -th iteration until time ; is defined accordingly as

| (2) |

Based on these scores, one can establish:

| (3) |

and,

| (4) |

IRMA starts with the initialization of to be the seed set, then it performs a standard EWS iteration (See pseudo code for the Repairing-Iteration and IRMA in Figures 2 and 3, respectively). In the following iterations, at time , while there is a candidate pair with , IRMA adds to the pair maximizing and does not conflict , and spread marks out of it.

IRMA stops the iterations when the mapping quality stops increasing. Formally, the -th iteration starts by initializing , then, at time , it adds to the candidate pair obeying: , and spread marks out of it - updating . The iteration ends when no candidate pair , that does not conflicts , satisfies: .

Since in real-life scenario, we do not know the real mapping, we use to estimate the quality of the score at the current iteration (Section 5.1). We compute where is the matching at the end of the -th iteration. Whenever , IRMA stops and returns . In practice, IRMA stops when , where was empirically set to . Note that this does not ensure convergence of the mapping, only of its score.

Repairing Iteration 1: is an input of all marks from the previous iteration 2:, ; 3:Spread out marks from all pairs in 4:while there exists an unmatched pair with at least 2 marks in or that does not conflict do 5: Among those pairs select the pair ; 6: Add to the set ; 7: Add one mark in to all of its neighboring pairs and add the to 8:end while 9:return

IRMA (Iterative Repair for graph MAtching) 1: 2: 3:while do 4: 5: 6:end while 7:return

5.4. Expansion/Exploration Boost

Each IRMA iteration ends, when there are no pairs left with at least marks, that do not conflict . This threshold is a trade-off between the precision and the recall. If one sets the threshold to a high value, will only contain pairs with high confidence, yet the percolation will stop early - leading to high precision but low recall. Similarly, setting the threshold to one will increase recall at the expense of precision.

However, in scale free distribution, many vertices have a low degree and mya never be reached. We thus suggest performing a “exploration iteration” with a threshold of mark, once IRMA converged. This leads to a drop in precision, but an increase in the recall. Then, one can run regular ”repairing” iterations again to gradually restore the precision. The idea is that while wrong pairs could not comply with the next iterations’ threshold of marks, correct pairs might lead to unexplored areas of the graphs. Such pairs gain marks by their newly revealed neighbors, a-posteriori justifying their insertion to .

5.4.1. Break Condition

Since the iterations performed after the ‘noisy iteration’ are meant to filter out pairs with lower certainty, we can no longer expect to increase between iterations. In practice, the second stage of IRMA (after the expansion boost), restores the precision within a few iterations and rapidly stops improving . We thus empirically set the IRMA to always stop after four repairing iterations, after the expansion boost.

5.5. Parallel Version

In order to develop a GPU version of IRMA, we propose a parallel version. The bottleneck of EWS is in spreading out marks from , which costs updates to the queue of marks. Ideally, we would like to perform multiple mark spreading steps in parallel. However, this impossible since the pair chosen at time depends on the marks spread out in the previous step. Section 6.3 in (EXPAND, ) presents a paralleled version where the main loop has been split into epochs. This version of EWS starts by spreading out marks from the seed, then at each epoch the algorithm greedily takes all possible pairs from the queue one by one - without spreading any mark. When the queue is eventually empty, it simultaneously spread marks from all pairs selected at the current epoch, creating the queue to the next epoch. This method has the advantage of being extremely fast, allowing input graphs with millions of vertices, and has been argued not to fundamentally affect the performance of the algorithm.

We expand this logic to parallelize IRMA. The -th iteration gets as input and starts by greedily adding all possible pairs from the queue into one by one - without spreading any mark. Then, when the queue is empty, we simultaneously spread out marks from all pairs in creating . Iteration returns and .

5.6. Experimental Setup

For each graph (See Section 5.2), we use different graphs-overlap () values in the range of , and various combinations of seed size, and value to test that our results are robust to the graph choice, the value of and the seed size.

When comparing the performance along with different seed sizes, we start with seed sizes of and respectively for graphs . The first seed size is then multiplied by and (see figures 4, 5, 8). We report for each graph the precision, the recall or the F1 score for each realization. There is no division to training and test, nor parameter hypertuning, since there are no free parameters, and no ground truth used except for the seed in IRMA.

To examine the performance of seeded GM algorithms, one needs a pair of graphs, where at least a part of the vertices correspond to the same entities. Given and , we generate , and by twice randomly and independently removing edges with a probability of . We then remove vertices with no edges in and in separately. The edge overlap between and increases with . We name the ‘graphs-overlap’.

6. Results

6.1. EWS on Real-World Graphs

IRMA can be applied to any percolation algorithm. We here use EWS (EXPAND, ) for each iteration, but any other algorithms can be used. We first tested the accuracy of EWS on a set of real-world graphs (section 5.2). We use each of those graphs to create two partially overlapping graphs by twice removing edges from the same graph. The fraction of edges from the original graph () maintained in each of the partially overlapping graphs is denoted . We use values of in the range of .

We computed the precision, recall and F1-score of EWS over all the graphs with graphs overlap () value of as a function of seed size (Figure 4 a-c, respectively for graph 2, Other graphs are similar). Lower values of , produced recall values of less than 0.3 even for a very large seed. All accuracy measures are highly sensitive to . The reason is that a correct pair , corresponding to vertex with degree , has an expected number of common neighbors, which is approximately the number of marks it will get. In random graphs, most vertices have similar degree (a normal distribution of degrees), and if , EWS typically works. More precisely, (EXPAND, ) present a simplified version to the EWS and show that the high accuracy in requires an average degree of above . However, in scale free degree distributions, the vast majority of vertices obey . As such, low graphs-overlap values prevent most vertices from gaining enough marks.

The precision (and also recall and F1) is typically a monotonic non-decreasing function of the seed size, with no clear threshold (Figure. 4). The ratio between the precision and the recall as defined here are equal to the ratio between and , i.e., the algorithm fails to percolate through the entire graph. Again, in scale free degree distributions, many low-degree vertices will never collect enough marks. As a result, often contains only part of the possible pairs to match.

a

. .

. .

.

b

c

6.2. Parallel Version of EWS

The parallel version of EWS splits each iteration into epochs, in which it adds to every possible candidate pair without spreading out marks, and then spreads out marks simultaneously for the next epoch (Figure 7 for some graphs. Other graphs are similar). The parallel version requires a larger seed in order to achieve results similar to the original version. Together with the former findings, we can conclude that while EWS has an excellent accuracy in , it has two main limitations: A) Significantly lower performances on real-world graphs than in , and difficulty to handle graphs-overlap lower than in real-world networks. B) A large difference in real-world networks between the parallel and sequential versions.

We here propose that an iterative version of EWS can reduce these limitations.

6.3. IRMA Algorithm

IRMA is an iterative Graph Matching solver (section 5.3). We first run EWS, and then perform repairing iterations. In each iteration, IRMA uses the marks received at the end of the former iteration to build a new and better map (Figures 2 and 3). The intuition to keep adding recommendations to mapped pairs and use them on the next iteration is simple. Correct pairs share more common neighbors than the wrong ones in the average case, therefore correct pairs are more likely to gain more recommendations, even if they initially contradict another assignment.

6.4. IRMA improves accuracy along iterations

is defined by (the number of marks gained until time ) and uses the difference in the vertices degree to break ties (Eq. 1). While the often in real time a wrong pairing obtains a higher score than a real pairing, consistently, the score of wrong pairs at infinity (or the end of the analysis) is lower (Data not shown).

6.4.1. Proof of improvement

We demonstrate the advantage of IRMA over EWS by showing that performing even one iteration of a ‘Parallel Repairing Iteration’ lowers the probability of mapping wrong pairs when running over graphs.

is based on (the number of marks gained during the -th iteration until time ) see Eq. 2. Intuitively, if is better than , the marks received during the second iteration should be more accurate than in the first iteration, improving , therefore improving . As argued by (EXPAND, ) when analyzing their algorithm, EWS has a complex property of generating noisy seed whenever it gets stuck, which is hard to analyze. For that reason, we analyze a simpler version of that algorithm - ExpandOnce where an artificial seed is generated only at the beginning of the algorithm to ensure a smooth percolation. In addition, When a pair crosses the minimum threshold of marks, it immediately is inserted into (assuming it is not conflicting an existing pair). ExpandOnce keeps percolating by randomly choosing one pair at time that has not been choose before, and uses it to spread out marks.

Theorem 1: Given , let be a wrong pair inserted into at time and let be a right pair conflicting . Assuming at some time a correct pair has been used to spread out marks, the following applies:

| (5) |

Whenever we choose a wrong pair before the right pair , we will eventually avoid the latter insertion of , since it will conflict . Theorem 1 suggests that using will reduce the probability of a mistake in the next iteration - eventually reducing the number of wrong pairs in .

Proof of Theorem 1:

Eq. 5 in Theorem 1 is equivalent to:

| (6) |

In other words, the expected number of marks that will get from now on, will be higher than the expected number of marks will receive. Let be a pair used to spread out marks at time :

-

•

If is a correct pair, and represent the same vertex ( and represented by the same vertex as well). gets one mark if there are edges and . This requires the edge to exist in (which happens with probability ) and to be sampled in (happens with probability ). On the other hand, gets a mark if . This requires two different edges to exist in (probability of ) and to be sampled to and accordingly (probability of ).

-

•

If is a wrong pair, and are represented by the different vertices , (note that cannot conflict ). The pair gets one mark if there are edges and . This happens with probability . The pair gets one mark if there are edges and , which also happens with probability . In fact, it is possible that one of the pairs will have the form . In that case, gets a mark with probability while gets none, as can never be a neighboring pair of .

Let us denote by the number of right pairs used to spread out marks until time , and by be the number of wrong pairs used to spread out marks until time . Let us further denote and similarly . Using the analysis above, one can compute:

| (7) |

| (8) |

Combining Eq. 7 and 8, we obtain:

| (9) |

To prove Eq. 6, it remains to show that . Since we assumed that some correct pair has been used to spread out marks at time , we have . is the probability of an edge to exist, and as we only focus on sparse graphs we assume , leading to:

| (10) |

6.5. Experimental validation

IRMA builds during the -th iteration using

It then uses the advantage of each iteration over the previous one. We have shown the expected advantage of the first Repairing-Iteration over EWS, so is more accurate than on average. The proof above did not use any assumption on the way the initial marks were produced. Thus, the same logic should apply to all following iterations. Thus, is expected to be more accurate than , and the same holds for all following iterations.

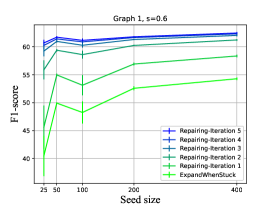

To test the improvement in accuracy, we computed the F1 score for all the real-world networks above (Figure 5). Sub-plots a and b consists of several runs of IRMA with different sizes of seeds, with a fixed graph and graphs-overlap (). Each value is the average of 5 repetitions. The iterations are colored from green to blue. The lowest values are always of EWS, and the results keeps improving until they converge. Using the same experiment over other graphs, we generated sub-plot c, where each line is one IRMA run and it represents the difference in F1 (axis y) between following iterations as a function of the iteration (axis x). All positive values (as they all are) represent an improvement.

The first, most noticeable observation is that IRMA indeed gradually repairs the output map of EWS. In fact, the F1-score results are a monotonic non-decreasing function of the iterations. Moreover, the convergence is very rapid, and it rarely takes more than 5 or 6 iterations until convergences is obtained. Finally, the most interesting thing is that IRMA is less sensitive to initial seed. EWS often achieves lower performances on a larger seeds following fluctuations in the seed composition (see sub-figure a for such a case). Despite accepting EWS’s output as an initial map, IRMA exhibits a dependency on the problem properties (as the input graph and the graphs-overlap) rather than the output of EWS, and has much smoother F1 values. Importantly, the recall (and thus the F1 value) of IRMA, as presented here, does not rise to 1. Instead, it rises to the maximal number of vertices that can have at least two marks. However, it does so even for small seeds.

a

. .

. .

.

b

c

6.5.1. Stopping condition

While the convergence of IRMA in F1 is clear from Figure 5, in real life scenarios, the real map is not known, and F1 cannot be used as a stopping condition. We thus propose to use as a quality measure for , and hence as a stopping condition for the algorithm. We recall that is defined as i.e., the number of edges in the common subgraph induced by the . Since correct pairs share more common neighbors on average, we expect better maps to have higher . Similarly, if , one can assume that is not significantly better than . We run IRMA on graphs 1 with and seed size of 50 respectively, and compared to the F1-score of to test the connection between them (Sub-figure LABEL:fig:exploring_break_cond_a), other settings showed similar results. One can clearly observe that both indices are converging simultaneously and have very similar dynamics (albeit different values as expressed by the different axes in Figure Sub-figure LABEL:fig:exploring_break_cond_a), suggesting that can serve as a proxy for the score.

a

. .

. .

. .

.

b

c

d

6.5.2. Computational cost of IRMA

For a pair , the number of marks to spread out is where is the degree of . Thus, the number of updates to the priority queue from inserting new pairs to is . The cost of each insertion is proportional to the log of the size of the priority-queue, which is bound , so the cost of each insertion is bound by , where is the number of vertices. The total number of updates () is between and , where is the degree. The first case is for random matching (i.e., and are independent), and the second case is for perfect matching . In low variance degree distributions, both cases are equivalent. However, in power-low degree distributions, the second case may be much higher than the first. Thus, one can bound the cost of the algorithm by , but often the bound is tighter - . The Repairing-Iteration has a similar cost, since it is based on the same logic of pulling pairs from the priority queue and spreading out marks. Note that we avoid the problematic case in EWS that the expanded seed may be of , which adds a factor to the cost of spreading marks. In their parallel versions, the boundary is similar, but in practice the Repairing-Iteration takes about the run time of EWS, and as IRMA runs Repairing-Iteration a few times, it has an overall run time 2-3 times higher than EWS, but of the same order. Note that the cost of IRMA as is the case for EWS is much more sensitive to the details of the propagation than to the specific number of vertices or edges. For example, IRMA has similar run times for graphs 1 and 2 used here, although graph 2 has twice as many vertices.

6.6. Expansion to Low Degree Vertices

The Seeded GM problem is a variant of the Maximum Common Edges Subgraph (MCES) problem, with the twist that in seeded GM the target is to use the edge match as a tool to discover an a priori existing match and not the maximal sub-graph. Thus, in contrast with MCES, in seeded GM, one tries to maximize not only the recall, but also the precision. Hence, at some stage, IRMA does not add low-probability pairs.

In IRMA, we use a threshold of 2 marks to add a candidate pair into . Since the Repairing-Iteration method filters wrong matched pairs, it is possible to allow the algorithm to make mistakes in some cases to find new correct pairs. We thus suggest to perform an exploration iteration with a threshold of 1 mark, after convergence, followed by regular repairing iterations to restore the precision.

Since repairing iterations filter out many wrong pairs, is no longer a good condition for testing the convergence. We tested three possible break conditions to the second stage of IRMA: A) The quality of a bijection can be defined through , where is the number of pairs in the set of matched pairs at the end of the iteration - , and is the number of edges among members of shared by and .B) As the target is to reduce down, we tested the condition to avoid many redundant iterations. C) Empirically after a few iterations there is no significant improvement from to . We tested a constant number of iterations.

We computed the difference in accuracy along snapshots after the exploration iteration and compared it to these different measures (Figure 6). We used graphs 1,2,5 and 6 with seed size of 50, 400, 300 and 120 respectively and with all value in and fixed 6 repairing iterations. Sub-plots a and b represent the difference in precision as a function of the difference in and , respectively. A clear correlation between the measures can be observed, yet the variance around the origin (i.e., when IRMA converges) is relatively large. Sub-plot d indicates that the most reliable prediction to the convergence of precision is the number of iteration. We use that as the stopping criteria after the exploration iteration.

We used these additional standard IRMA iterations after the exploration iteration, and tested the precision, recall and F1-score along IRMA’s iterations on graphs 1-3 with seed size of 50, 400 and 800 respectively and with value of and respectively (Figure 7). The relation between the precision and the recall is the ratio between and . Both recall and precision are monotonic non decreasing up to the exploration iteration, followed by a drop in precision together with the increase in recall in the exploration iteration, and the restoration of the precision in 3-4 iterations. Note that while precision is fully restored (and some times even improves), the recall stays higher than before the exploration iteration. In theory, one could repeat the process of doing exploration and repair iterations. However, in practice, the gain of multiple cycles is very small.

a

. .

. .

.

b

c

a

. .

. .

.

b

c

6.7. Comparison to existing methods

Current state of the art seeded GM for scale free graphs include beyond EWS two main methods. Yu et al (yu2021power, ) proposed The D-hop method based on diffusing the seed more than one neighbor away (denoted as PLD). Chiasserini et al (chiasserini2016social, ) published the DDM de-anonymization algorithm (which is equivalent to seed GM) based on parallel graph partitioning. The two studies report different measures on different graphs.

To show that IRMA obtains better results than both algorithms, we repeated their analysis using the setting used in each algorithm, and the accuracy measure. The analysis was performed on the FaceBook and AS datasets (Figure 9). PLD uses a cutoff on the vertices degree that depends on the details of the algorithm, but in their setting is higher than 3. WE thus show our results on vertices of degree above 2 or above 3. In all cases, the precision of IRMA is higher on the same set of verices.

6.8. Parallel Solution

In the parallel version to EWS. The main idea is to split the algorithm into epochs such that marks are spread out only between the epochs, allowing parallel spreading. Using the same concept, we developed a parallel version to Repairing-Iteration in which is rebuilt based only on the marks of the former iteration. The parallel version of IRMA starts by running Parallel-EWS and then performs iterations of Parallel-Repairing-Iteration (see section 5.3).

When comparing (Figure. 8) the parallel-EWS, Parallel-IRMA and regular IRMA (both use exploring iteration). The parallel-IRMA has much better precision and recall than the Parallel-EWS. In sub-plot E, one can see an extreme case where Parallel-IRMA with a seed of 25 pairs is more accurate than Parallel-EWS with 400 pairs in the seed. The Parallel-IRMA and the standard IRMA have very similar recall and precision, making the parallel IRMA an excellent trade-off between run-time and performances. Note that the parallel IRMA has the same run time as the parallel EWS up to multiplication by a small constant, and is much faster, yet much more accurate than the non-parallel EWS.

7. Discussion

We have here extended the EWS seeded-GM algorithm to include iterative corrections of the matching. EWS spreads of marks to common neighbors of previously matched pairs. It is greedy in the sense that once a pair has been matched, it cannot be changed.

We have here shown that real matches tend to accumulate more marks than wrong ones, even when a wrong match has been inserted to the match before the real one, preventing it from being added to the match. As such, one can repair at the end of each iteration the paired matches, and repeat the iteration. The resulting iterative algorithm is named IRMA, which has a better accuracy than the current state-of-the-art GM algorithms.

While in graphs existing seeded GM algorithms get a very high accuracy even for a small seed, the same does not hold for scale-free networks, as are most real-world networks. There are two main reasons for the failure of seeded GM in scale free networks: A) pairs of very high degree vertices (that are not real pairs) easily accumulate wrong marks. In a greedy approach, high-degree vertices will receive a lot of marks from common neighbors, since they tend to have a lot of random common neighbors. B) Very low degree vertices have few neighbors, and as such receive very few marks, and may never be explored.

IRMA solves the first problem by iteratively fixing errors. However, a simple iterative correction cannot solve the second problem. To address the second problem, an expansion iteration was added, where IRMA accumulates a large number of low-degree pairs with a large fraction of wrong-pairs. This is then followed by multiple correction iterations to improve the accuracy, while maintaining a high recall.

Each iteration of IRMA is a full iteration of EWS, and typical applications require 5-10 iterations. The run time could be expected to be 5-10 time longer than EWS. However, in practice, the gain in accuracy of IRMA is much higher than the accuracy difference between the parallel and iterative versions of EWS. As such a parallel version of IRMA can be used which is faster than regular EWS with higher precision and recall.

We have tested alternative methods to fix wrong pairs in each iteration, including the analysis of negative marks (marks from contradicting neighbors), or the relation between marks and degree. However, eventually, the simplest approach to accumulate marks, even after a pair was selected, ended up being the one best improving the precision and recall.

The extension of seeded GM algorithms to scale-free network with limited overlap (low values of ) is essential for their application in real-world networks. However, the model we have used here for the generation of partially overlapping graphs (random sampling from a larger common graph), may not represent the real difference between networks. Thus, an important extension of the current method would be to test it on real world partially overlapping networks models (i.e two different networks, with parts of the vertices representing the same entity).

Another important caveat of IRMA is that in the current version it is applied to unweighted undirected graphs. The extension to directed and weighted graphs is straightforward. However, this would add multiple free parameters to the analysis (such as the difference between the in and out degree distributions, and the weight distribution). We have thus discussed here the simpler case. We expect the results here to hold for more general models, and plan to further test it.

References

- [1] Carla-Fabiana Chiasserini, Michele Garetto, and Emilio Leonardi. Social network de-anonymization under scale-free user relations. IEEE/ACM Transactions on Networking, 24(6):3756–3769, 2016.

- [2] Daniel Cullina and Negar Kiyavash. Improved achievability and converse bounds for erdos-renyi graph matching. ACM SIGMETRICS Performance Evaluation Review, 44(1):63–72, 2016.

- [3] Daniel Cullina and Negar Kiyavash. Exact alignment recovery for correlated erdh o sr’enyi graphs. arXiv preprint arXiv:1711.06783, 2017.

- [4] Jian Ding, Zongming Ma, Yihong Wu, and Jiaming Xu. Efficient random graph matching via degree profiles. Probability Theory and Related Fields, 179(1):29–115, 2021.

- [5] Amir Egozi, Yosi Keller, and Hugo Guterman. A probabilistic approach to spectral graph matching. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(1):18–27, 2012.

- [6] P Erdös and A Rényi. On random graphs i. Publicationes Mathematicae Debrecen, 6:290–297, 1959.

- [7] Donniell E Fishkind, Sancar Adali, Heather G Patsolic, Lingyao Meng, Digvijay Singh, Vince Lyzinski, and Carey E Priebe. Seeded graph matching. arXiv preprint arXiv:1209.0367, 2012.

- [8] Donniell E Fishkind, Sancar Adali, Heather G Patsolic, Lingyao Meng, Digvijay Singh, Vince Lyzinski, and Carey E Priebe. Seeded graph matching. Pattern Recognition, 87:203–215, 2019.

- [9] Oana Goga, Howard Lei, Sree Hari Krishnan Parthasarathi, Gerald Friedland, Robin Sommer, and Renata Teixeira. Exploiting innocuous activity for correlating users across sites. In Proceedings of the 22nd International Conference on World Wide Web, pages 447–458, 2013.

- [10] Keith Henderson, Brian Gallagher, Lei Li, Leman Akoglu, Tina Eliassi-Rad, Hanghang Tong, and Christos Faloutsos. It’s who you know: graph mining using recursive structural features. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 663–671, 2011.

- [11] Ehsan Kazemi, S. Hamed Hassani, and Matthias Grossglauser. Growing a graph matching from a handful of seeds. Proceedings of the VLDB Endowment, 8(10):1010–1021, 2015.

- [12] Ehsan Kazemi, Lyudmila Yartseva, and Matthias Grossglauser. When can two unlabeled networks be aligned under partial overlap? In 2015 53rd Annual Allerton Conference on Communication, Control, and Computing (Allerton), pages 33–42. IEEE, 2015.

- [13] Gunnar W Klau. A new graph-based method for pairwise global network alignment. BMC Bioinformatics, 10(1):1–9, 2009.

- [14] Nitish Korula and Silvio Lattanzi. An efficient reconciliation algorithm for social networks. arXiv preprint arXiv:1307.1690, 2013.

- [15] Danai Koutra, Hanghang Tong, and David Lubensky. Big-align: Fast bipartite graph alignment. In 2013 IEEE 13th International Conference on Data Mining, pages 389–398. IEEE, 2013.

- [16] Anshu Malhotra, Luam Totti, Wagner Meira Jr, Ponnurangam Kumaraguru, and Virgilio Almeida. Studying user footprints in different online social networks. In 2012 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, pages 1065–1070. IEEE, 2012.

- [17] Elchanan Mossel and Jiaming Xu. Seeded graph matching via large neighborhood statistics. Random Structures & Algorithms, 57(3):570–611, 2020.

- [18] Arvind Narayanan, Hristo Paskov, Neil Zhenqiang Gong, John Bethencourt, Emil Stefanov, Eui Chul Richard Shin, and Dawn Song. On the feasibility of internet-scale author identification. In 2012 IEEE Symposium on Security and Privacy, pages 300–314. IEEE, 2012.

- [19] André Nunes, Pável Calado, and Bruno Martins. Resolving user identities over social networks through supervised learning and rich similarity features. In Proceedings of the 27th Annual ACM Symposium on Applied Computing, pages 728–729, 2012.

- [20] Pedram Pedarsani and Matthias Grossglauser. On the privacy of anonymized networks. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 1235–1243, 2011.

- [21] Christopher Riederer, Yunsung Kim, Augustin Chaintreau, Nitish Korula, and Silvio Lattanzi. Linking users across domains with location data: Theory and validation. In Proceedings of the 25th International Conference on World Wide Web, pages 707–719, 2016.

- [22] Farhad Shirani, Siddharth Garg, and Elza Erkip. Seeded graph matching: Efficient algorithms and theoretical guarantees. In 2017 51st Asilomar Conference on Signals, Systems, and Computers, pages 253–257. IEEE, 2017.

- [23] Rohit Singh, Jinbo Xu, and Bonnie Berger. Global alignment of multiple protein interaction networks with application to functional orthology detection. Proceedings of the National Academy of Sciences, 105(35):12763–12768, 2008.

- [24] Shulong Tan, Ziyu Guan, Deng Cai, Xuzhen Qin, Jiajun Bu, and Chun Chen. Mapping users across networks by manifold alignment on hypergraph. In Twenty-Eighth AAAI Conference on Artificial Intelligence, 2014.

- [25] Lorenzo Torresani, Vladimir Kolmogorov, and Carsten Rother. Feature correspondence via graph matching: Models and global optimization. In European Conference on Computer Vision, pages 596–609. Springer, 2008.

- [26] Laurenz Wiskott, Norbert Krüger, N Kuiger, and Christoph Von Der Malsburg. Face recognition by elastic bunch graph matching. IEEE Transactions on Pattern Analysis and Machine Intelligence, 19(7):775–779, 1997.

- [27] Lyudmila Yartseva and Matthias Grossglauser. On the performance of percolation graph matching. In Proceedings of the First ACM Conference on Online Social Networks, pages 119–130, 2013.

- [28] Liren Yu, Jiaming Xu, and Xiaojun Lin. The power of d-hops in matching power-law graphs. In Abstract Proceedings of the 2021 ACM SIGMETRICS/International Conference on Measurement and Modeling of Computer Systems, pages 77–78, 2021.

- [29] Reza Zafarani and Huan Liu. Connecting users across social media sites: a behavioral-modeling approach. In Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining, pages 41–49, 2013.

- [30] Fan Zhou, Lei Liu, Kunpeng Zhang, Goce Trajcevski, Jin Wu, and Ting Zhong. Deeplink: A deep learning approach for user identity linkage. In IEEE INFOCOM 2018-IEEE Conference on Computer Communications, pages 1313–1321. IEEE, 2018.