(cvpr) Package cvpr Warning: Single column document - CVPR requires papers to have two-column layout. Please load document class ‘article’ with ‘twocolumn’ option (cvpr) Package cvpr Warning: Package ‘hyperref’ is not loaded, but highly recommended for camera-ready version

Invisible-to-Visible: Privacy-Aware Human Segmentation using Airborne Ultrasound via Collaborative Learning Probabilistic U-Net

Abstract

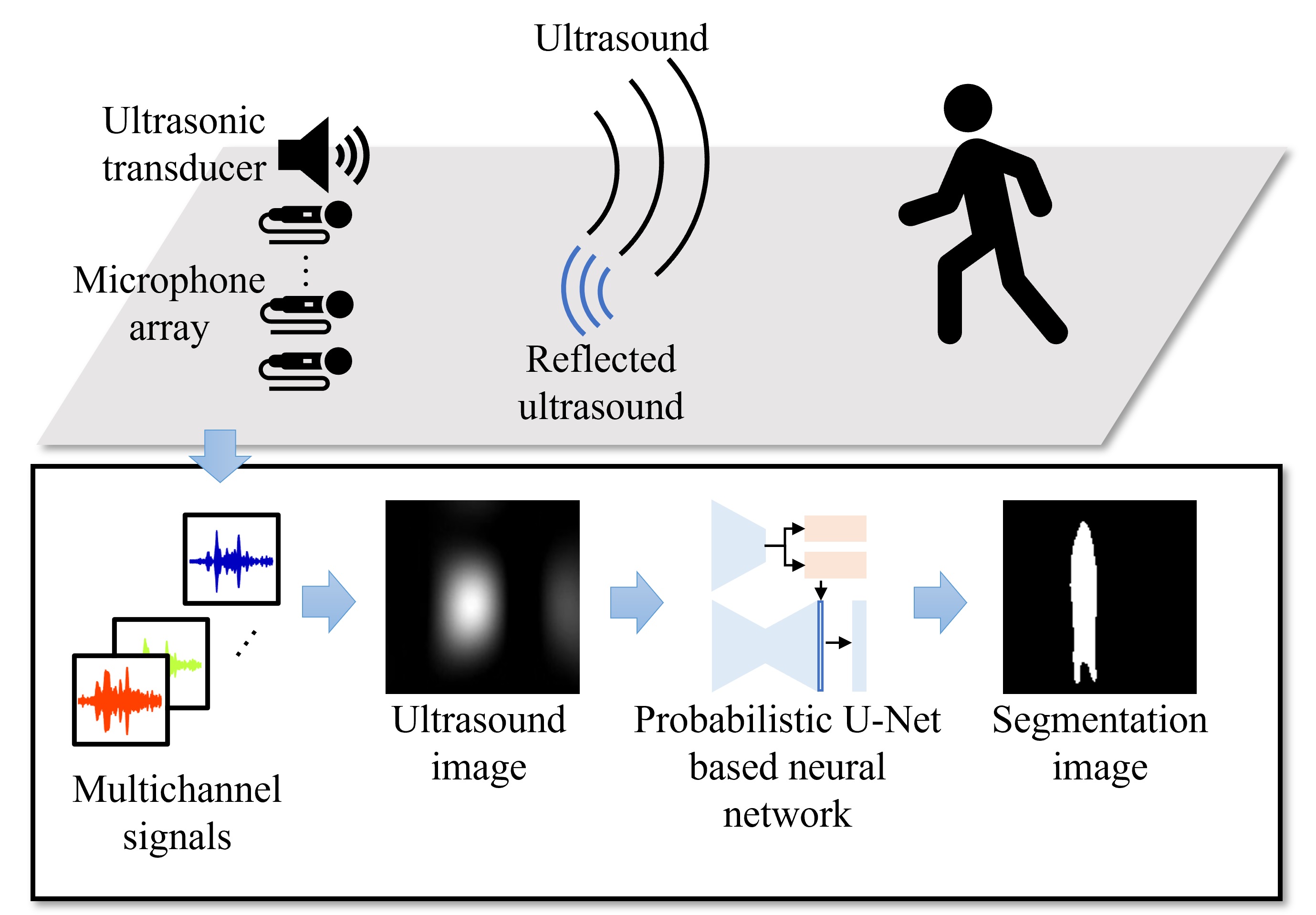

Color images are easy to understand visually and can acquire a great deal of information, such as color and texture. They are highly and widely used in tasks such as segmentation. On the other hand, in indoor person segmentation, it is necessary to collect person data considering privacy. We propose a new task for human segmentation from invisible information, especially airborne ultrasound. We first convert ultrasound waves to reflected ultrasound directional images (ultrasound images) to perform segmentation from invisible information. Although ultrasound images can roughly identify a person’s location, the detailed shape is ambiguous. To address this problem, we propose a collaborative learning probabilistic U-Net that uses ultrasound and segmentation images simultaneously during training, closing the probabilistic distributions between ultrasound and segmentation images by comparing the parameters of the latent spaces. In inference, only ultrasound images can be used to obtain segmentation results. As a result of performance verification, the proposed method could estimate human segmentations more accurately than conventional probabilistic U-Net and other variational autoencoder models.

1 Introduction

Segmentation has attracted wide attention because of its applications, such as medical image diagnoses, robotics, and action recognition [18, 5, 31, 24, 26, 34]. A camera is a well-developed sensor for segmentation tasks. Although camera-based segmentation has been widely investigated and achieved high precision, camera images do not preserve privacy for human segmentation.

Audio signals is a privacy-preserved segmentation [28, 12, 16, 20, 27, 33]. Predicting depth maps and segmentations from audio signals have been proposed [28, 12]. These methods can generate images from invisible physical information and can be used to recognize human actions by analyzing segmentation images. Although these methods can visualize sounding objects, detecting non-sounding objects are difficult. From the human recognition standpoint, people who do not make sounds, such as not talking or walking, cannot be detected.

Airborne ultrasound echoes could be used to detect non-sounding people. There are methods for detecting the surrounding information by analyzing ultrasound echoes [17, 4, 21, 11, 8, 22, 32]. Although these methods can estimate the position of objects, methods that specialize in human recognition and estimate human segmentation have not been well investigated. If human segmentation can be estimated from echoes, it would be possible to detect non-sounding people and it can be used to estimate human action.

In this study, we propose a method for estimating human segmentation from airborne ultrasound detected by multichannel microphones using a neural network. The concept of our work is illustrated in Figure 1. In the sensing section, an ultrasonic transducer at 62 kHz resonance, and 16 channels of microelectromechanical system (MEMS) microphone array are used. The ultrasound emitted by the ultrasonic transducer is reflected by a human body and then captured by microphones. The reflected ultrasound directional images (hereinafter, ultrasound images) can be obtained by analyzing the differences between multichannel ultrasound signals. Because the ultrasound image represents the intensity of the reflected wave at each pixel, it has ambiguous shapes that are far from humans. Therefore, we introduce a deep neural network to obtain human segmentation images from ultrasound images. We use probabilistic models for the task because the ultrasound images have different edge positions from segmentation images. Kohl et al. [15] proposed a probabilistic U-Net that combines variational autoencoder (VAE) [14] and U-Net [25]. During the training phase, the probabilistic U-Net learns a network to match latent spaces of input and the input/ground truth by comparing the latent spaces with Kullback-Leibler divergence (KLD). A latent vector, sampled from the latent space of input/ground truth, combined with the last activation map of a U-Net. The spatial positions of the ultrasound and segmentation images are similar and the edges are different. Hence, the latent distributions of ultrasound and segmentation images are roughly close because of shrinking the spatial dimensions by convolutions. Thus, reducing the distance between latent distributions with precisely estimating the edges is difficult. Therefore, we propose a collaborative learning probabilistic U-Net (CLPU-Net), which uses mean squared error (MSE) instead of KLD to minimize the distance between latent distributions. Experiments showed that human segmentation images could be generated from ultrasound images. To the best of our knowledge, this is the first work to estimate human segmentation images from airborne ultrasounds.

The main contributions to this work are as follows:

-

•

A human body sensing system using an airborne ultrasound and a microphone array for privacy-aware human segmentation.

-

•

An architecture of CLPU-Net that learns a network with matching latent variables from segmentation and ultrasound images.

-

•

We showed that despite a simple mean-squared error, the distance between latent variables can be shortened by expressing the distribution of high-dimensional latent space with mean and variance.

2 Related work

2.1 Privacy-preserved human segmentation

The camera-based methods have been well investigated and have achieved high accuracy. However, privacy concerns should be considered for applications such as home surveillance. Cameraless human segmentation methods have also been investigated to address privacy issues. Wang et al. [29] proposed a method for estimating human segmentation images, joint heatmaps, and part affinity fields using Wi-Fi signals. They used three transmitting-receiving antenna pairs and thirty electromagnetic frequencies with five sequential samples. The channel state information [9] was analyzed to input networks. The networks comprise upsampling blocks, residual convolutional blocks, U-Net, and downsampling blocks. Although this method achieved privacy-friendly fine-grained person perception with Wi-Fi antennas and routers, the Wi-Fi signals are highly affected by the surrounding environment due to the multipath effect.

Alonso et al. [1] proposed an event camera-based semantic segmentation method. The event camera detects pixel information when the brightness changes, such as when the subject moves. They do not capture personal information more clearly than the RGB cameras because event cameras only capture changes in intensities on a pixel-by-pixel basis. In [1], event information from event cameras was formed as -channel images. The first two channels were histograms of positive and negative events, whereas the other four channels were the mean and standard deviation of normalized timestamps at each pixel for the positive and negative events. They showed that an Xception-based encoder–decoder architecture could learn semantic segmentation from the -channel information. Although this method achieves semantic segmentation from privacy-preserved event cameras, it is difficult to detect people who are not moving.

Irie et al. [12] proposed a method that generates segmentation images from sounds. They recorded sounds using -channel microphone arrays. They used Mel frequency cepstral coefficients and angular spectrum from sounds for estimating segmentation images. This method can estimate human and environmental objects only from sounds. However, estimating segmentation images for non-sounding people is difficult in principle because this method analyzes the sound emitted from objects.

2.2 Airborne ultrasonic sensing

Airborne ultrasound could be used to detect non-sounding people. The positions of people can be detected by analyzing ultrasound echos reflected by them. Although the multipath effect influences detection, it is less effective than radio waves because the propagation speed of ultrasound is slower than that of radio waves.

Airborne ultrasonic sensors have been used to detect distance in various industries, such as the automobile [30] and manufacturing industries [7, 3, 13]. Ultrasonic sensors emit short pulses at regular intervals. The ultrasounds are reflected if there are objects in the propagation path. The distance of objects can be determined by analyzing the time differences between emitted and reflected sounds [2].

When sounds are captured using microphone arrays, the directions of objects can be detected in addition to their distances [19]. Sound localization using beamforming algorithms has been developed. A delay-and-sum (DAS) method is a common beamforming algorithm [23]. The DAS method can estimate the direction of sound sources by adding array microphone signals delayed by a given amount of time. By using ultrasonic transducers and microphone arrays, the positions of objects can also be estimated using the DAS with regarding a reflected position as a sound source. Although this method can detect the position of objects, it is difficult to obtain the actual shape of objects from echoes of a single pulse.

Hwang et al. [11] performed three-dimensional shape detection using wideband ultrasound and neural networks. Although the analysis of multiple frequencies can precisely detect the positions and shapes of objects, the sound system for emitting wideband frequency becomes large, and the number of data increases because of the high sampling rate required to sense wideband ultrasound. Therefore, we consider obtaining segmentation images from the positional information on reflected objects analyzed by narrowband frequency ultrasound using a neural network.

3 Ultrasound sensing in the proposed method

First, we describe the hardware setup of our ultrasound sensing system. Then, we describe the preprocessing for converting ultrasound waves to ultrasound images.

3.1 Ultrasound sensing system

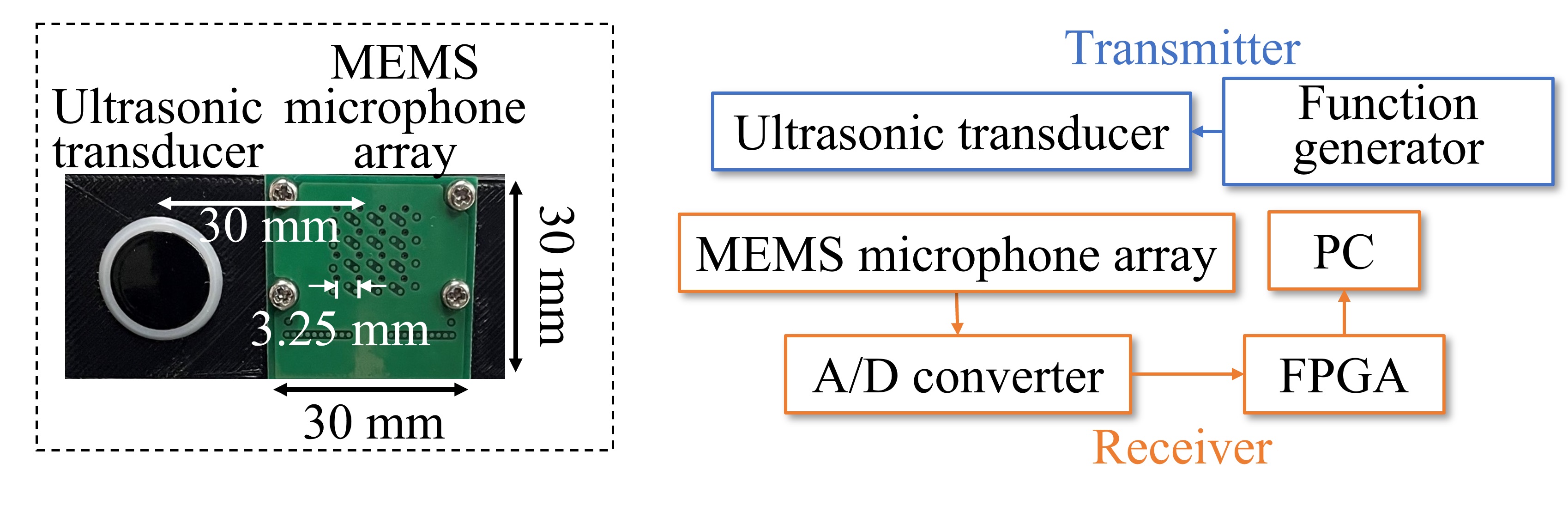

The hardware setup is shown in Figure 2. The transmitter comprised a function generator and an ultrasonic transducer at 62 kHz resonance. The ultrasonic transducer was driven with burst waves of cycles and intervals at using the function generator. The receiver comprised a MEMS microphone array, an analog-to-digital converter, a field-programmable gate array (FPGA), and a PC. A grid MEMS microphone array, whose microphones were mounted on a substrate at intervals, was used. The analog signals captured by the microphones were converted to digital signals and imported into the PC through the FPGA. The distance between the microphone array and ultrasonic transducer was set to .

3.2 Data preprocessing

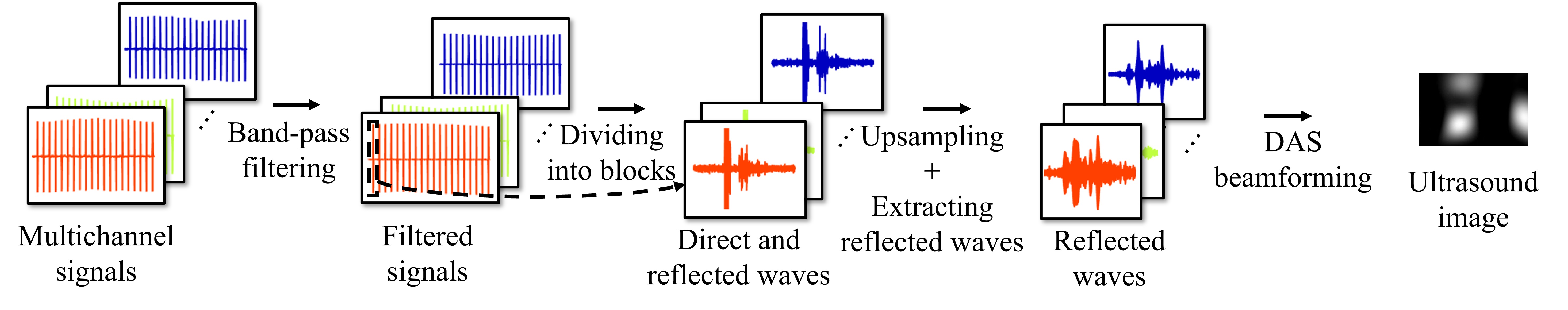

The diagram of the data preprocessing is shown in Figure 3. First, a band-pass filter with a center frequency of and bandwidth of was used for signals captured by the microphones. The filtered ultrasound signals were divided into blocks including direct and reflected waves. Then, we upsampled the ultrasound waves four times at each block to improve the accuracy of direction estimation. Following that, we produced ultrasound images from reflected ultrasounds via DAS beamforming. The beamformed signal can be defined as

| (1) |

where is the time, is the number of microphones, is the signal received by the -th microphone, and is the time delay for the -th microphone, which is determined by the speed of sound and distance between the -th microphone and observing points. We set the observing points in the range of – degrees in the azimuthal direction and – degrees in the polar direction. Then, we calculated beamformed signals and obtained the reflected directional heat maps. To reduce the noise from reflected waves from objects other than people, we calculated ultrasound heat maps by subtracting a reference map from reflected directional heat maps as

| (2) |

where is the pixel of the heat maps, and is the coefficient, which is determined by

| (3) |

where is the index of the maximum pixel of . Notably, the reference map was calculated using the data without people, and was normalized as

| (4) |

when it was converted to ultrasound images .

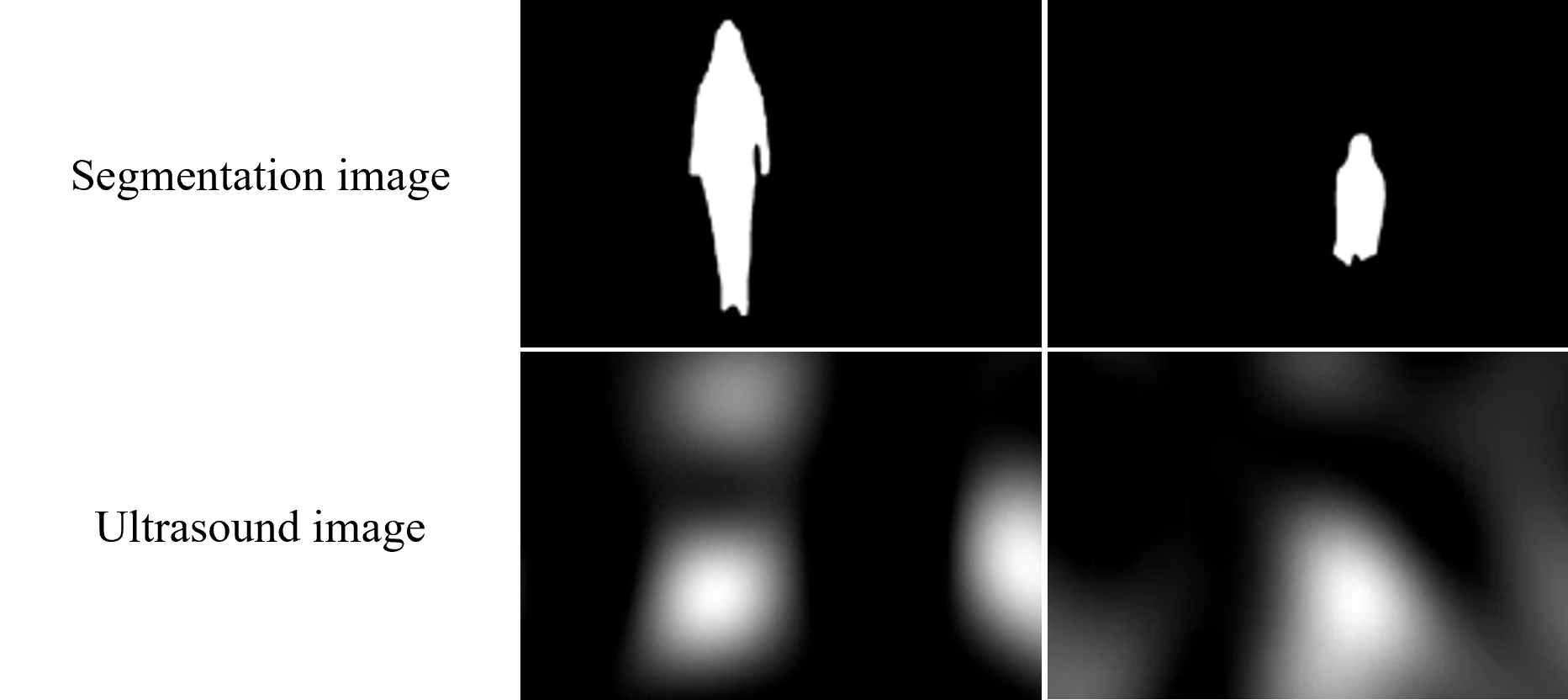

Examples of segmentation and ultrasound images are shown in Figure 4. The segmentation images in the top row were annotated from RGB images. The ultrasound images, which represent the intensity of the reflected ultrasound at each pixel, had ambiguous shapes at the positions corresponding to a person. Furthermore, there were artifacts in the region without any people.

4 Human segmentation via ultrasound

We first describe an overview of the proposed network. Second, we briefly explain probabilistic U-Net, which is the basis of the proposed method. Finally, we describe the proposed network in detail.

4.1 Overview

As shown in Fig. 4, the ultrasound images do not have the shape of a person, and their edges are very different from those of the segmentation images. When there is a lot of ambiguity in the input image or when the difference between input and output is large, it is better to use the probabilistic method than the deterministic method. Thus, we consider performing human segmentation based on probabilistic models. Kohl et al. [15] proposed a probabilistic U-Net combining VAE [14] with U-Net. This method learns prior and posterior networks, which output the latent spaces of input and input/segmentation images, respectively, to be close. The latent variables sampled from the latent space of the posterior network are added to the last layer of U-Net. This method deals with large discrepancies between the input and output images by sampling latent variables from the latent space output by the prior network during the inference phase. However, it is difficult to handle with the existing prior network because the difference in appearance between the ultrasound and segmentation images is huge. Thus, we propose collaborative learning probabilistic U-net based on probabilistic U-Net. Details of the proposed method are described below.

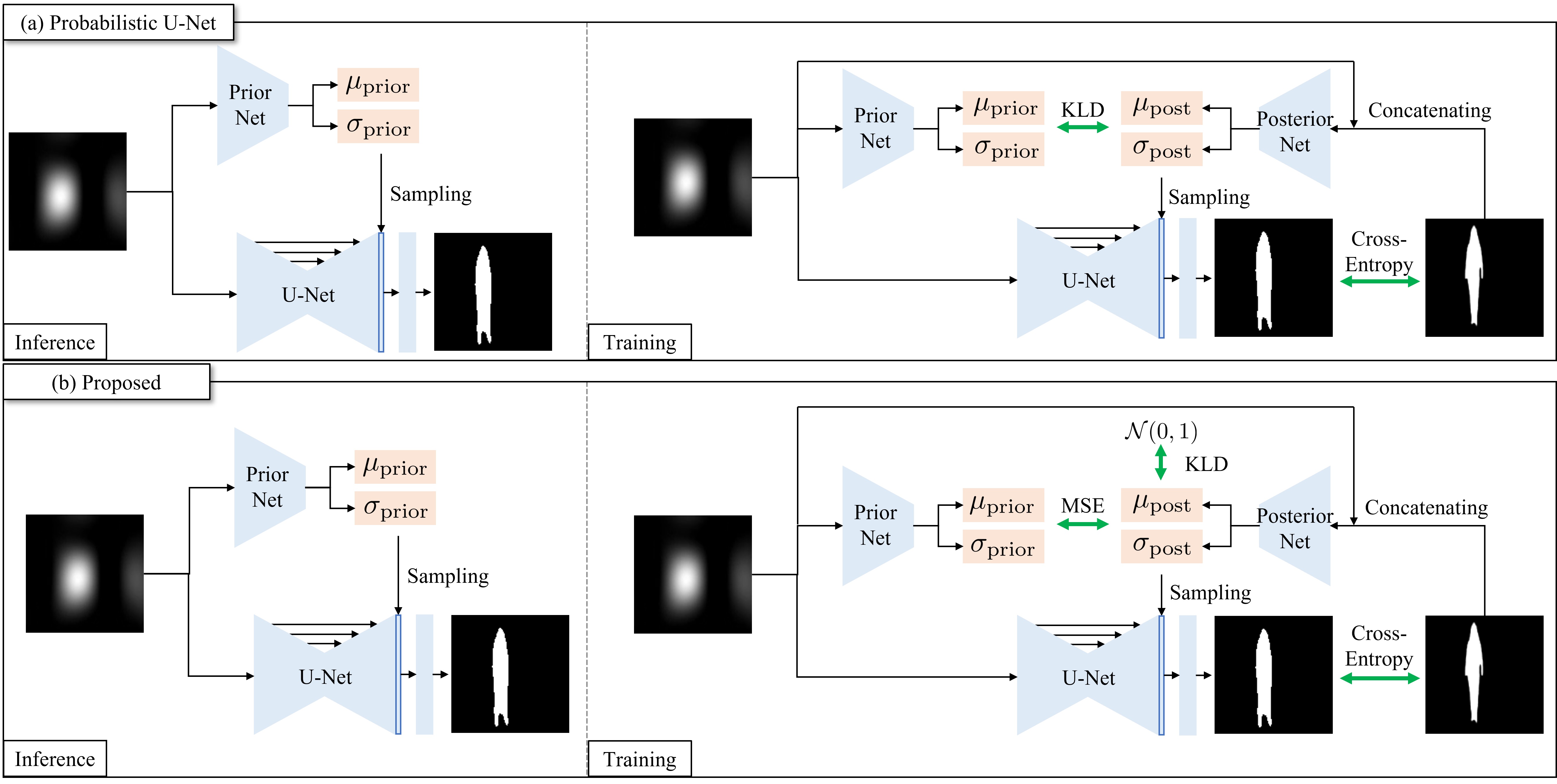

4.2 Probabilistic U-Net [15]

In this section, we explain the probabilistic U-Net. The architecture of the probabilistic U-Net is shown in Figure 5(a). During the inference phase, the input image is input into the prior network and U-Net. The prior network, which is parameterized by , encodes the input image and outputs the parameters of the mean and variance . Then the data was randomly sampled from the prior distribution as

| (5) |

and broadcasted to the N-channel feature map to match the shape to the segmentation image. The feature maps are concatenated at the last layer of the U-Net, and the output image is estimated by convoluting the feature maps from the prior network and U-Net.

During the training phase, the posterior network is added to encode the information about the segmentation image. The posterior network parameterized by encodes the data that contains the input image and segmentation image . The parameters of the mean and variance of the posterior distribution are obtained using the posterior network. Then, the data is sampled from the posterior distribution as

| (6) |

and combined to the feature map at the last layer of the U-Net. The loss function consists of two terms. The first term is cross entropy to minimize the error between the estimated image and ground truth. The second term is KLD to close the distribution between the prior distribution and posterior distribution . The losses are combined as a weighted sum,

where outputs the estimated segmentation image and is the weight parameter.

4.3 CLPU-Net

Although the appearance of ultrasound and ground truth segmentation images differ significantly at the edges, the appearance of the other parts, particularly positions, is relatively similar. Therefore, it is important to learn latent space by focusing on the difference in edges. In probabilistic U-Net, prior distribution and posterior distribution are penalized using KLD. Reducing the distance between the distributions to estimate edges with high accuracy by comparing the distributions obtained after the spatial dimensions decreased at the prior/posterior network. Because the ultrasound and segmentation images are roughly matched other than edges. Thus, we propose a method to use mean squared error (MSE) of means and variances instead of KLD.

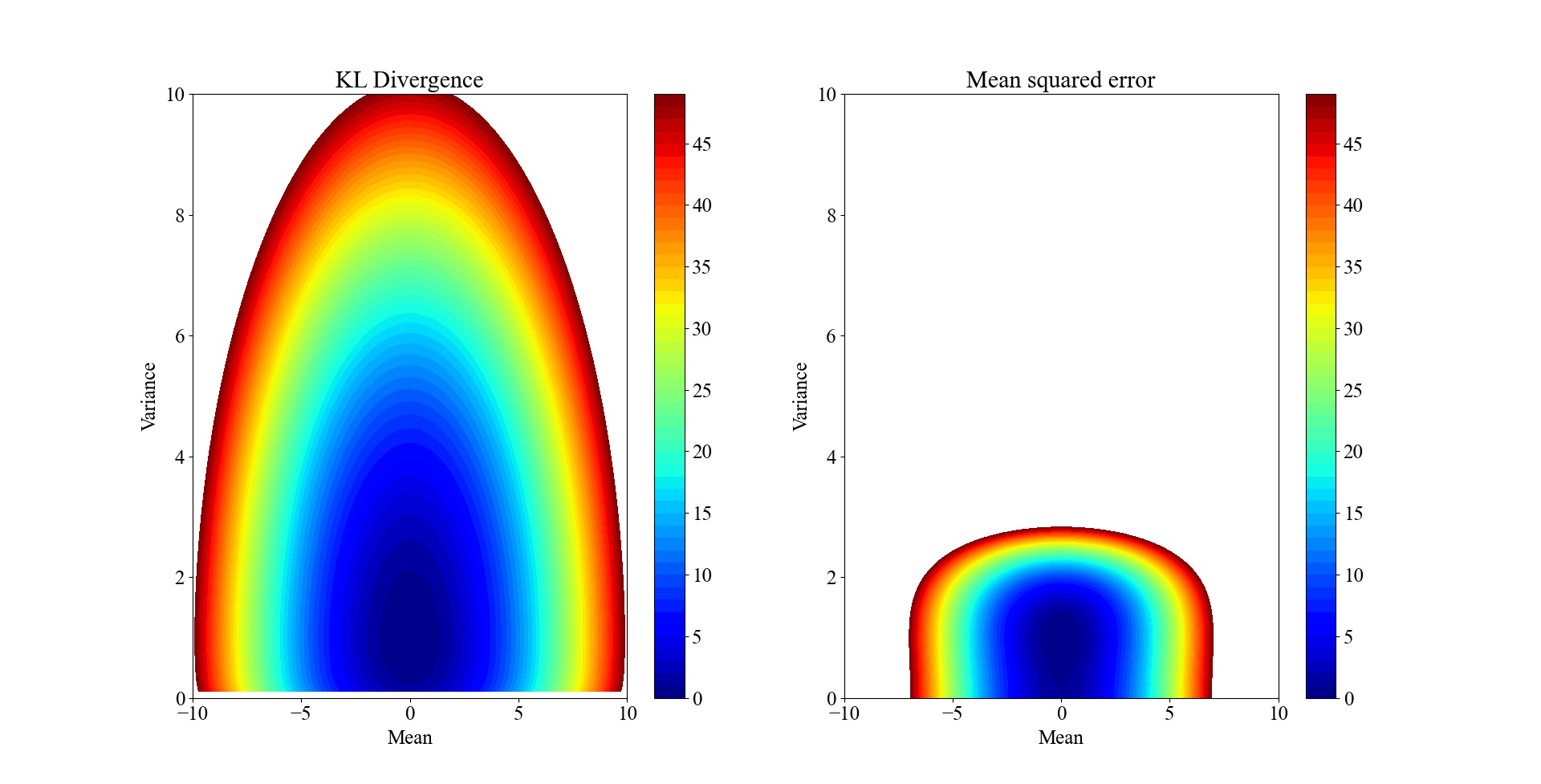

The errors between the standard normal distribution calculated by KLD and MSE are shown in Figure 6. The MSE loss was calculated as

| (7) |

where and are the mean and variance of the standard normal distribution. Since the value around the error of 0 for the MSE loss changes more rapidly than that of the KLD (Fig. 6), the MSE loss is more sensitive than the KLD. Therefore, converging to an optimal result by KLD is difficult because of the small gradient of loss landscape, since the ultrasound and segmentation images are roughly matched other than edges. Thus, we propose a method that uses MSE loss of the means and variances to penalize the differences of the latent spaces. Additionally, KLD regularization is added to the posterior distribution to approach a standard normal distribution.

The proposed network is illustrated in Figure 5(b). The comparison method between the prior and posterior distributions at the training phase distinguishes the probabilistic U-Net. The loss function of the proposed method is as follows:

| (8) |

where is the weights adjusting the scale. The first term is

where is the ultrasound image and is the standard normal distribution. The second term is

| (9) |

where is the dimension of the latent vector.

5 Experiments

In this section, we describe the dataset configuration, experimental setup, and evaluation results.

| Condition number | 1 | 2 | 3 | 4 | 5 | 6 | total |

|---|---|---|---|---|---|---|---|

| Number of images | 7,792 | 7,768 | 7,634 | 7,727 | 7,777 | 7,796 | 46,494 |

5.1 Experimental setup

5.1.1 Datasets

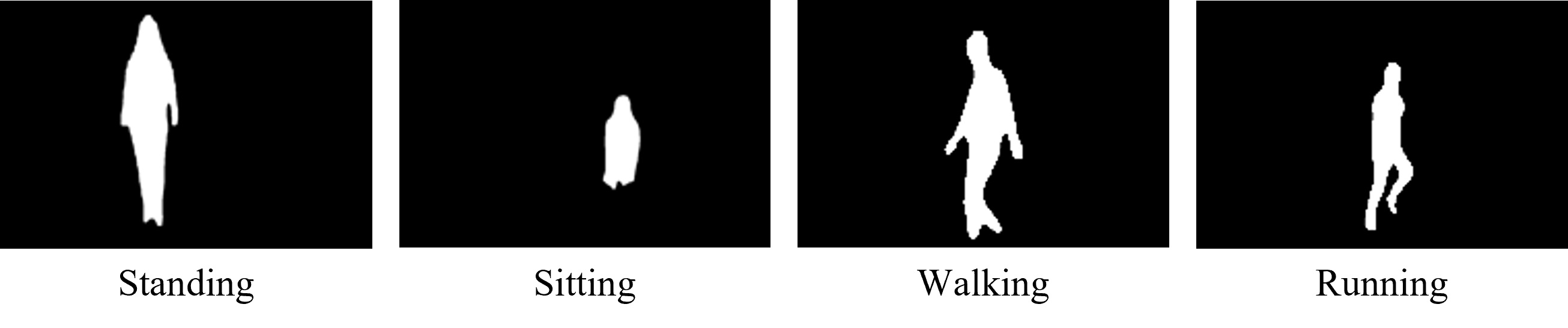

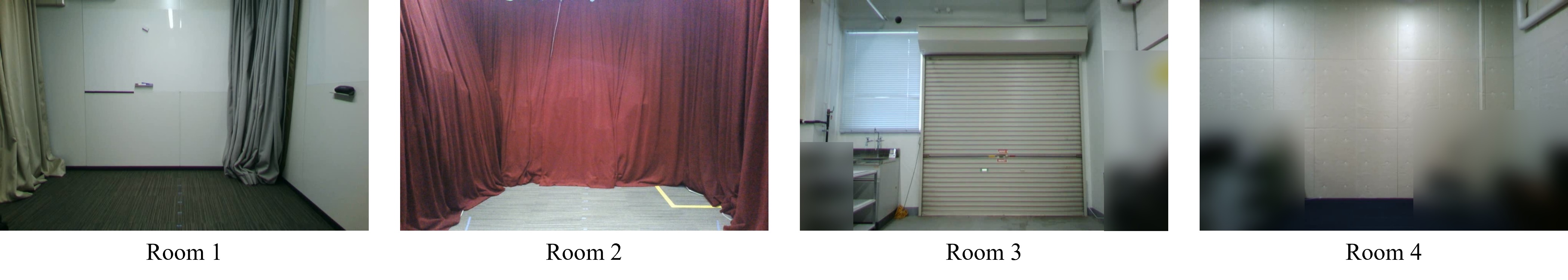

We created a dataset111 This data acquisition experiment was judged by our institution to be not the subject of the examination by the Institutional Review Board. Participants in data acquisition have given their written consent. because no datasets have been previously used airborne ultrasound to detect humans. For , we captured the ultrasounds at sampling from channel microphones and videos at frames per second (fps) from the RGB camera (a built-in camera of Let’s Note, CF-SV7, Panasonic), which was located under the microphone array. The resolution was , and the videos were used for creating segmentation images for the training phase. The data were extracted at fps because the time interval of the ultrasound generation was bursts per second and the frame rate of the video was fps. We automatically produced segmentation images using Mask R-CNN [10]. We used the dataset that people, who were located from to away from the sensing devices, performed motions such as standing, sitting, walking, and running. Examples of the segmentation images were shown in Figure 7. These are the images for standing, sitting, walking, running, respectively. There were six participants and they performed in four different rooms. The rooms are shown in Figure 8. Rooms 1 and 3 were surrounded by relatively acoustically reflective walls and rooms 2 and 4 were surrounded by relatively acoustically absorbent materials. The number of images at each condition is shown in Table 1. The condition numbers to indicate the participants’ number. Images were captured about 7,700 images at each condition, the total number was 46,494.

| Model | IoU | Accuracy | Precision | Recall | F1-score |

|---|---|---|---|---|---|

| VAE | 0.265 | 0.880 | 0.351 | 0.526 | 0.403 |

| Joint-VAE | 0.278 | 0.889 | 0.376 | 0.507 | 0.392 |

| Probabilistic U-Net | 0.329 | 0.912 | 0.485 | 0.490 | 0.456 |

| CLPU-Net | 0.388 | 0.921 | 0.536 | 0.546 | 0.519 |

5.1.2 Evaluation

We evaluated the proposed method using k-fold cross-validation. To confirm the robustness of the unknown person data, the dataset was divided based on the person, which corresponds to the conditions listed in Tab. 1. Therefore, was set to six, and all six patterns were trained and evaluated, for example learning at condition number 2 to 6 and evaluating at condition number 1. The performance of the model was evaluated using an intersection-over-union (IoU), accuracy, precision, recall, and F1-score.

5.1.3 Implementation details

The prior and posterior networks consisted of four blocks of layers. A block consisted of three pairs of a 2-dimensional convolution layer and ReLU and an average pooling layer. The output channels of the four blocks were in order. All input images were resized to pixels. The dimension of means and variances was set to . The batch size was and the initial learning rate was . We used an Adam optimizer with in training. was and was , respectively, which are determined by a grid search.

5.2 Experimental results

We first describe the performance of the proposed CLPU-Net and compare it with other methods. Then, we described the comparison of the accuracy by distances between the sensor and person. Then, we explain the comparison of the accuracy by rooms. Finally, quantitative results are described.

5.2.1 Performance of CLPU-Net

We first evaluated the performance of the proposed CLPU-Net. Then, we compared our model with probabilistic U-Net, VAE, Joint-VAE [6] to confirm the validity of learning with ultrasound and segmentation images. The VAE and Joint-VAE were trained using segmentation images and inferred using ultrasound images. The CLPU-Net and probabilistic U-Net were trained using ultrasound and segmentation images and inferred using ultrasound images. Table 2 illustrates the qualitative results. Each metric was averaged for all six patterns of the k-fold cross-validation. The proposed CLPU-Net was marked highest in all metrics of the four models.

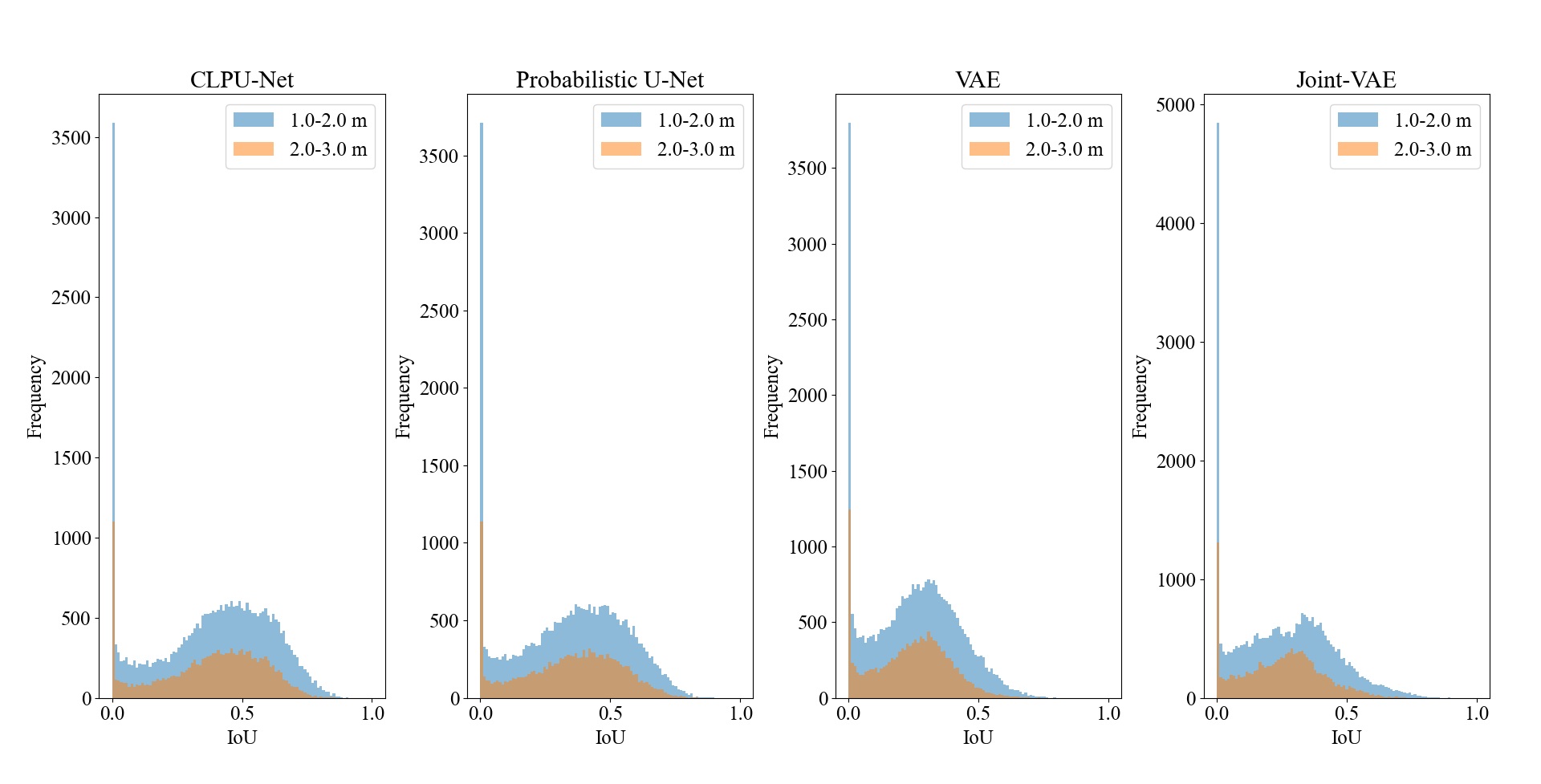

5.2.2 Comparison by distances between sensor and person

The amplitude of the reflected ultrasound decreases as the distance between the sensors and person increases. Therefore, we investigated the robustness of the distance between the sensor and a person. Figure 9 shows the IoU histogram, which categorizes the dataset into two types; distances between m and less than m and distances between m and less than m. The horizontal axis represents the IoU values, and the vertical axis represents the frequency, which means the number of images at each bin. The bin width was set to . The blue bins were the data of distances between m and less than m, and the orange bins were the data of distances between m and less than m. Although the frequencies differ due to the difference in the number of images for the conditions, the trends in these frequency distributions were not very different. Therefore, the accuracy is not affected by the distance within a range of to m. We verified that robustness is sufficient in the range of use because we assume an application will be used at home.

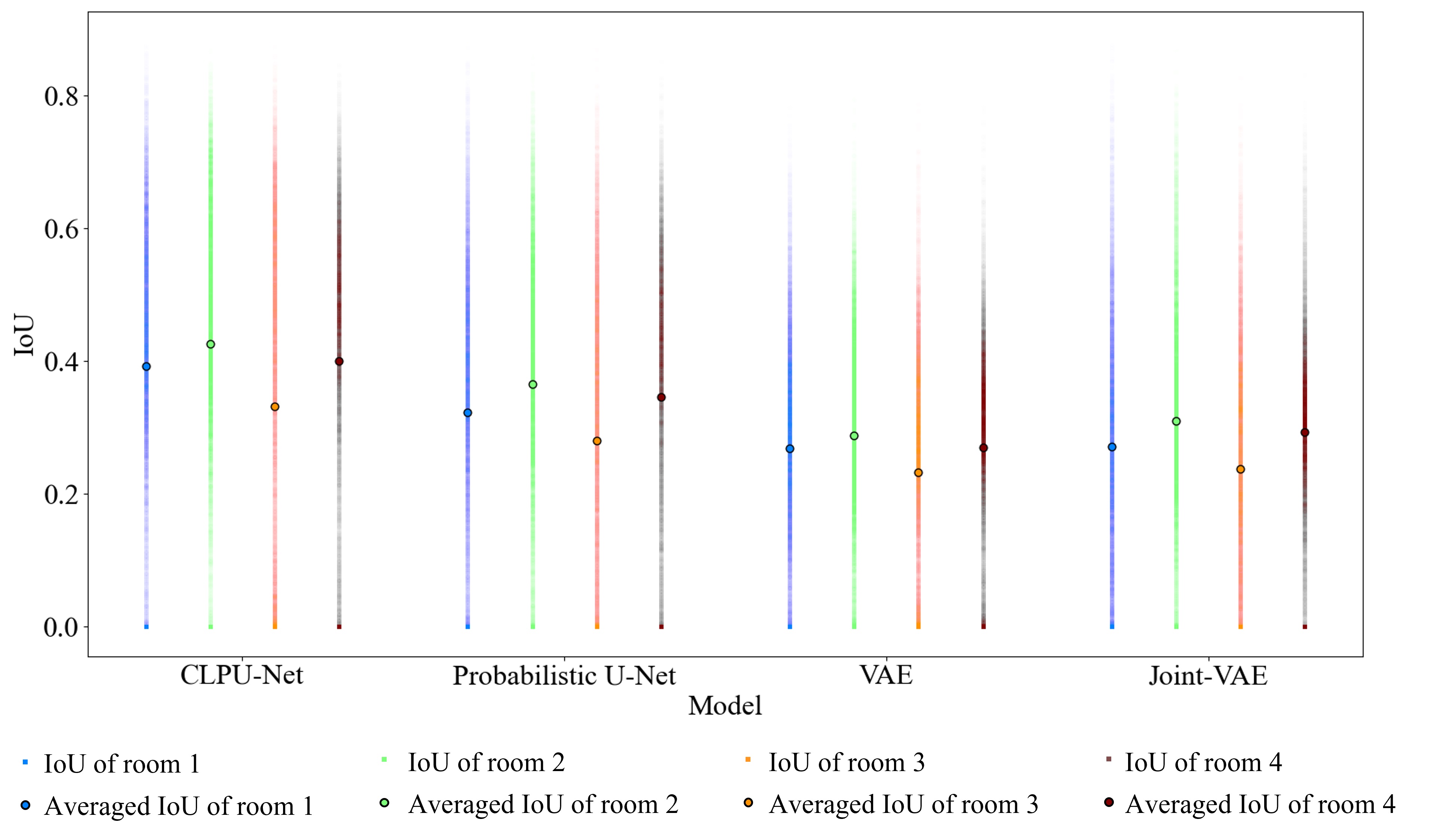

5.2.3 Comparison by rooms

There are various acoustic absorption characteristics in rooms assuming an application will be used at home. Therefore, we investigated the robustness against the room differences. The IoU for each room is illustrated in Figure 10. The IoU of all estimated images were plotted as semi-transparent squares, and the averaged IoU was plotted as a circle with a black line. The blue, green, orange, and brown represent the IoU of rooms , , , and , respectively. The accuracies of rooms and , which have a relatively high acoustic absorption rate, tend to be higher and the accuracies of rooms and , which have a relatively low acoustic absorption rate, tend to be lower. The decrease in accuracy in reverberant rooms seems to be the effect of higher-order reflected and environmental ultrasounds. The accuracies can be improved in reverberant rooms by changing the analysis section of ultrasound waves at preprocessing according to the distance between the sensor and person.

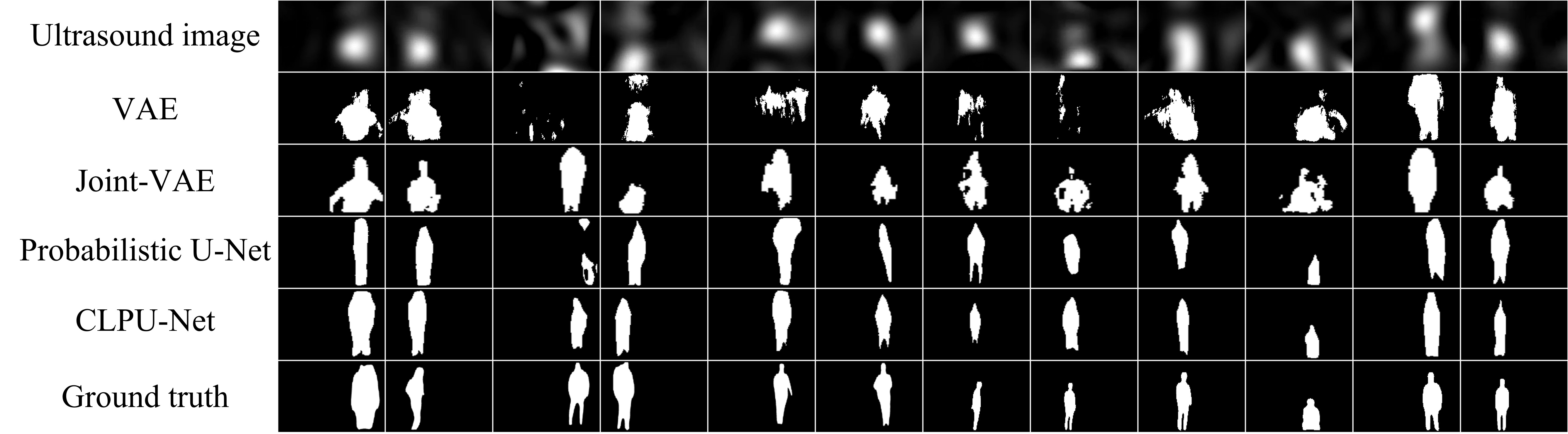

5.2.4 Quantitative result

The quantitative result is shown in Figure 11. In the VAE and Joint-VAE, the shapes are not properly estimated and there are no people in some estimated images. In these methods, the information on ultrasound images was excluded at the training phase, and they cannot estimate segmentation images from ultrasound images. Therefore, using the information in the segmentation images at the training phase affects the estimation. Although the probabilistic U-Net can capture the shapes better than those of the VAE and Joint-VAE, the estimation fails if the input and segmentation images have a large discrepancy. Alternatively, the proposed method has been estimated under such conditions. Some images from CLPU-Net, such as st, th, th, and th columns from the right, are closer to the ground truth. In contrast, those images from probabilistic U-Net tend to swell or shrink.

6 Conclusions

We proposed privacy-aware human segmentation from airborne ultrasound using CLPU-Net. Our method used the MSE of the means and variances, which are the output of the prior and posterior networks, instead of KLD for comparison of the latent spaces of the prior and posterior networks in probabilistic U-Net. This enables optimization suitable for the ultrasound image obtained by our proposed device. This method can be used to detect human actions in situations where privacy is required, such as home surveillance because the sound/segmentation images cannot be reconstructed to RGB images. In the future, we will increase various data and improve the ultrasound image generation process to improve the accuracy in unknown environments.

References

- [1] Inigo Alonso and Ana C. Murillo. EV-SegNet: Semantic segmentation for event-based cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pages 1624–1633, 2019.

- [2] Alessio Carullo and Marco Parvis. An ultrasonic sensor for distance measurement in automotive applications. IEEE Sensors Journal, 1(2):143–147, 2001.

- [3] D.E. Chimenti. Review of air-coupled ultrasonic materials characterization. Ultrasonics, 54(7):1804–1816, 2014.

- [4] Amit Das, Ivan Tashev, and Shoaib Mohammed. Ultrasound based gesture recognition. In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 406–410, 2017.

- [5] G.N. Desouza and A.C. Kak. Vision for mobile robot navigation: a survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(2):237–267, 2002.

- [6] Emilien Dupont. Learning disentangled joint continuous and discrete representations. In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems (NeurIPS), volume 31. Curran Associates, Inc., 2018.

- [7] Yiming Fang, Lujun Lin, Hailin Feng, Zhixiong Lu, and Grant W. Emms. Review of the use of air-coupled ultrasonic technologies for nondestructive testing of wood and wood products. Computers and Electronics in Agriculture, 137:79–87, 2017.

- [8] Biying Fu, Jakob Karolus, Tobias Grosse-Puppendahl, Jonathan Hermann, and Arjan Kuijper. Opportunities for activity recognition using ultrasound doppler sensing on unmodified mobile phones. In Proceedings of the 2nd international Workshop on Sensor-based Activity Recognition and Interaction, pages 1–10, 2015.

- [9] Daniel Halperin, Wenjun Hu, Anmol Sheth, and David Wetherall. Tool release: Gathering 802.11n traces with channel state information. SIGCOMM Computer Communication Review, 41(1):53, 2011.

- [10] Kaiming He, Georgia Gkioxari, Piotr Dollar, and Ross Girshick. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), pages 2961–2969, 2017.

- [11] Gunpil Hwang, Seohyeon Kim, and Hyeon-Min Bae. Bat-G net: Bat-inspired high-resolution 3D image reconstruction using ultrasonic echoes. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems (NeurIPS), volume 32. Curran Associates, Inc., 2019.

- [12] Go Irie, Mirela Ostrek, Haochen Wang, Hirokazu Kameoka, Akisato Kimura, Takahito Kawanishi, and Kunio Kashino. Seeing through sounds: Predicting visual semantic segmentation results from multichannel audio signals. In International Conference on Acoustics, Speech, and Signal Processing (ICASSP), pages 3961–3964, 2019.

- [13] R. Kažys, A. Demčenko, E. Žukauskas, and L. Mažeika. Air-coupled ultrasonic investigation of multi-layered composite materials. Ultrasonics, 44:e819–e822, 2006.

- [14] Diederik P Kingma and Max Welling. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114, 2014.

- [15] Simon Kohl, Bernardino Romera-Paredes, Clemens Meyer, Jeffrey De Fauw, Joseph R. Ledsam, Klaus Maier-Hein, S. M. Ali Eslami, Danilo Jimenez Rezende, and Olaf Ronneberger. A probabilistic U-Net for segmentation of ambiguous images. In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems (NeurIPS), volume 31. Curran Associates, Inc., 2018.

- [16] Gierad Laput, Karan Ahuja, Mayank Goel, and Chris Harrison. Ubicoustics: Plug-and-play acoustic activity recognition. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, pages 213–224, 2018.

- [17] Manni Liu, Linsong Cheng, Kun Qian, Jiliang Wang, Jin Wang, and Yunhao Liu. Indoor acoustic localization: A survey. Human-centric Computing and Information Sciences, 10, 2020.

- [18] Shervin Minaee, Yuri Boykov, Fatih Porikli, Antonio Plaza, Nasser Kehtarnavaz, and Demetri Terzopoulos. Image Segmentation Using Deep Learning: A Survey. arXiv e-prints, page arXiv:2001.05566, 2020.

- [19] Marco Moebus and Abdelhak M. Zoubir. Three-dimensional ultrasound imaging in air using a 2D array on a fixed platform. In 2007 IEEE International Conference on Acoustics, Speech and Signal Processing - ICASSP ’07, volume 2, pages II–961–II–964, 2007.

- [20] Bruna Salles Moreira, Angelo Perkusich, and Saulo OD Luiz. An acoustic sensing gesture recognition system design based on a hidden markov model. Sensors, 20(17), 2020.

- [21] Thomas S Murray, Daniel R Mendat, Kayode A Sanni, Philippe O Pouliquen, and Andreas G Andreou. Bio-inspired human action recognition with a micro-Doppler sonar system. IEEE Access, 6:28388–28403, 2017.

- [22] Kranti Kumar Parida, Siddharth Srivastava, and Gaurav Sharma. Beyond image to depth: Improving depth prediction using echoes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 8268–8277, 2021.

- [23] Vincent Perrot, Maxime Polichetti, François Varray, and Damien Garcia. So you think you can DAS? A viewpoint on delay-and-sum beamforming. Ultrasonics, 111:106309, 2021.

- [24] Intisar Rizwan I Haque and Jeremiah Neubert. Deep learning approaches to biomedical image segmentation. Informatics in Medicine Unlocked, 18:100297, 2020.

- [25] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-Net: Convolutional networks for biomedical image segmentation. In Nassir Navab, Joachim Hornegger, William M. Wells, and Alejandro F. Frangi, editors, Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, pages 234–241, Cham, 2015. Springer International Publishing.

- [26] Feng Shi, Jun Wang, Jun Shi, Ziyan Wu, Qian Wang, Zhenyu Tang, Kelei He, Yinghuan Shi, and Dinggang Shen. Review of artificial intelligence techniques in imaging data acquisition, segmentation, and diagnosis for covid-19. IEEE Reviews in Biomedical Engineering, 14:4–15, 2021.

- [27] Jae Mun Sim, Yonnim Lee, and Ohbyung Kwon. Acoustic sensor based recognition of human activity in everyday life for smart home services. International Journal of Distributed Sensor Networks, 11(9), 2015.

- [28] Arun Balajee Vasudevan, Dengxin Dai, and Luc Van Gool. Semantic object prediction and spatial sound super-resolution with binaural sounds. In Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm, editors, Computer Vision – ECCV 2020, pages 638–655, Cham, 2020. Springer International Publishing.

- [29] Fei Wang, Sanping Zhou, Stanislav Panev, Jinsong Han, and Dong Huang. Person-in-WiFi: Fine-grained person perception using WiFi. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pages 5451–5460, 2019.

- [30] W. Wang, Y. Song, J. Zhang, and H. Deng. Automatic parking of vehicles: A review of literatures. International Journal of Automotive Technology, 15(6):967–978, 2014.

- [31] Linlin Xia, Jiashuo Cui, Ran Shen, Xun Xu, Yiping Gao, and Xinying Li. A survey of image semantics-based visual simultaneous localization and mapping: Application-oriented solutions to autonomous navigation of mobile robots. International Journal of Advanced Robotic Systems, 17(3):1729881420919185, 2020.

- [32] Yadong Xie, Fan Li, Yue Wu, and Yu Wang. HearFit: Fitness monitoring on smart speakers via active acoustic sensing. In IEEE INFOCOM 2021-IEEE Conference on Computer Communications, pages 1–10. IEEE, 2021.

- [33] Koji Yatani and Khai N Truong. Bodyscope: A wearable acoustic sensor for activity recognition. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, pages 341–350, 2012.

- [34] Hong-Bo Zhang, Yi-Xiang Zhang, Bineng Zhong, Qing Lei, Lijie Yang, Ji-Xiang Du, and Duan-Sheng Chen. A comprehensive survey of vision-based human action recognition methods. Sensors, 19(5), 2019.