Investigating Conceptual Blending of a Diffusion Model

for Improving Nonword-to-Image Generation

Abstract.

Text-to-image diffusion models sometimes depict blended concepts in the generated images. One promising use case of this effect would be the nonword-to-image generation task which attempts to generate images intuitively imaginable from a non-existing word (nonword). To realize nonword-to-image generation, an existing study focused on associating nonwords with similar-sounding words. Since each nonword can have multiple similar-sounding words, generating images containing their blended concepts would increase intuitiveness, facilitating creative activities and promoting computational psycholinguistics. Nevertheless, no existing study has quantitatively evaluated this effect in either diffusion models or the nonword-to-image generation paradigm. Therefore, this paper first analyzes the conceptual blending in a pretrained diffusion model, Stable Diffusion. The analysis reveals that a high percentage of generated images depict blended concepts when inputting an embedding interpolating between the text embeddings of two text prompts referring to different concepts. Next, this paper explores the best text embedding space conversion method of an existing nonword-to-image generation framework to ensure both the occurrence of conceptual blending and image generation quality. We compare the conventional direct prediction approach with the proposed method that combines -nearest neighbor search and linear regression. Evaluation reveals that the enhanced accuracy of the embedding space conversion by the proposed method improves the image generation quality, while the emergence of conceptual blending could be attributed mainly to the specific dimensions of the high-dimensional text embedding space.

[]Figure 1. Fully described in the text.

1. Introduction

Text-to-image diffusion models (Sohl-Dickstein et al., 2015; Rombach et al., 2022) generate images depicting blended concepts when an interpolated embedding between embeddings of multiple text prompts is input (Melzi et al., 2023). Figure 1 illustrates the conceptual blending exhibited by a diffusion model, Stable Diffusion (Rombach et al., 2022; Vision and at Ludwig Maximilian University of Munich, 2022). As it uses Contrastive Language-Image Pretraining (CLIP) (Radford et al., 2021) text encoder for computing conditioning text embeddings, it sometimes generates blended concepts (e.g., ``calf in a cave'') when inputting the midpoint of the CLIP text embeddings of two prompts referring to different concepts (``calf'' and ``cave'').

This conceptual blending suggests various promising use cases, although the effect itself has not been well-studied. One such use case is the nonword-to-image generation task (Matsuhira et al., 2023b, a, 2024) which aims to generate images intuitively imageable from a given non-existing word (nonword). Generating such images for a nonword can facilitate creative activities including brand naming and computational psycholinguistics. One approach for this task suggested by an existing study (Matsuhira et al., 2023b, a, 2024) is to generate images depicting concepts of similar-sounding words, assuming that humans associate nonwords with the meanings of such words. Here, conceptual blending can improve intuitiveness when nonwords are associated with multiple words. For instance, if a nonword ``calve'' (/”kæv/111This paper describes word pronunciation using International Phonetic Alphabet (IPA) symbols. These symbols are also used as input of the existing nonword-to-image generation method to calculate pronunciation similarity.) is associated with two similar-sounding words ``calf'' (/”kæf/) and ``cave'' (/”keIv/), it can be more intuitive to generate images depicting the blended concept of the two words than depicting only either concept.

Nonetheless, none of the existing literature provides a clear answer as to under which conditions diffusion models exhibit conceptual blending, and how it emerges in nonword-to-image generation results. Therefore, this paper conducts two evaluations to assess the occurrence of conceptual blending in each situation. The first one evaluates the capability of a pretrained Stable Diffusion to exhibit conceptual blending by detecting the presence of blended concepts in the generated images. Using these detection metrics, the second one evaluates the occurrence of the effect in nonword-to-image generation results. Here, we explore the best text embedding space conversion method in the nonword-to-image generation framework adopting the same pretrained Stable Diffusion model. The conventional framework (Matsuhira et al., 2023b, a, 2024) took a direct approach to train a Multi-Layer Perceptron (MLP) to transfer embeddings between spaces. However, this yields large information loss, which could lead to inaccurate and poor-quality image generation and a reduced chance of conceptual blending. Alternatively, we propose a more accurate method by combining -nearest neighbor search and linear regression. Our evaluation aims to investigate how the reduced loss affects conceptual blending and image generation quality.

Accordingly, this paper makes the following two contributions:

-

•

We quantitatively evaluate a pretrained Stable Diffusion to discover under which conditions it exhibits conceptual blending given an interpolated embedding between two concepts.

-

•

We explore the best text embedding space conversion method in the existing nonword-to-image generation framework to analyze factors that affect the emergence of conceptual blending as well as the image generation quality.

2. Related Work

2.1. Text-to-Image Diffusion Models

A diffusion model (Sohl-Dickstein et al., 2015) is one of the generative models used in most recent text-to-image generation methods (Ramesh et al., 2022; Betker et al., 2023; Nichol et al., 2022; Vision and at Ludwig Maximilian University of Munich, 2022; Saharia et al., 2022). In contrast to conventional generative models (Goodfellow et al., 2014; Kingma and Welling, 2013), it is characterized by its step-by-step image generation procedure which gradually removes noise from a noisy image until a clear image is obtained. It generates images conditioned on a text prompt by utilizing the text embedding computed for the prompt in each denoising step. A latent diffusion model (Rombach et al., 2022) is a more computationally efficient variant. It differs from diffusion models in that it performs the denoising procedure on the latent space instead of the image pixel space. Stable Diffusion (Vision and at Ludwig Maximilian University of Munich, 2022) is one implementation of such a latent diffusion model which adopts the CLIP (Radford et al., 2021) text encoder as the conditioning text embedding calculator and is trained using a large-scale dataset crawled from the Web called LAION-5B (Schuhmann et al., 2022).

2.2. Conceptual Blending

Conceptual blending stems from cognitive linguistics, denoting a cognitive task to blend different concepts in minds to form a new concept inheriting their characteristics (Fauconnier and Turner, 1998; Ritchie, 2004). In Informatics, Melzi et al. (Melzi et al., 2023) were the first to focus on it in diffusion models. Through a case study, they found that a pretrained Stable Diffusion exhibits conceptual blending when generating images from interpolated text embeddings between two concepts, even without additional training. Yet, there has been no other work on conceptual blending in diffusion models and it is still unclear under which conditions these models blend concepts.

One reason would be its seemingly limited use cases. The general text-to-image generation paradigm assumes that users can give clear instructions in the form of text prompts into the model. Hence, if users demand images depicting blended concepts, they can instruct the model by typing detailed texts (e.g., ``an image blending both a calf and a cave''). However, some applications cannot expect users to put such a detailed text prompt, making it hard to satisfy their needs for blending concepts.

One such case is the nonword-to-image generation task (Matsuhira et al., 2023b, a, 2024) which will be described in Section 2.3, where the input contains primarily non-existing words (nonwords). Before improving the nonword-to-image generation performance, Section 3 of this paper quantitatively studies the emergence of the conceptual blending targeting Stable Diffusion.

2.3. Nonword-to-Image Generation Task

The nonword-to-image generation task attempts to generate images intuitively imageable from a given non-existing word (nonword) (Matsuhira et al., 2023b, a, 2024). The difference to the general text-to-image generation task is that the nonword-to-image generation task has no explicit ground-truth concepts that must be depicted in the generated images since nonwords have no general interpretation of their meanings. However, as psycholinguistic studies suggest (Sapir, 1929; Köhler, 1929; Hinton et al., 1995), humans tend to associate specific meanings even with nonwords in a somewhat predictable way. Hence, generating intuitive images for nonwords can profit in various applications including brand naming and language learning, while also fostering computational psycholinguistics.

One existing study (Matsuhira et al., 2023b, a, 2024) tackled this by focusing on the human nature of associating a nonword with its similar-sounding words and generating images containing the concepts of such words. They trained a nonword encoder called NonwordCLIP that computes CLIP embeddings for the spelling or pronunciation of nonwords considering their phonetically similar words and inserted it into the Stable Diffusion architecture in place of the CLIP text encoder. To correct the domain gap between the CLIP embedding space output by their language encoder (pooled embedding space) and that required by Stable Diffusion (last-hidden-state embedding space), they trained an MLP to convert embeddings in the former space into the latter. However, such a direct approach yields large information loss because the CLIP pooled embedding space is a compressed space of the last-hidden-state embedding space and thus less informative. This loss can affect the occurrence of conceptual blending and image generation quality.

Hence, Section 4 proposes a more accurate text embedding space conversion method. To bypass the information loss, the proposed method combines -nearest neighbor search and linear regression. Our evaluation in Section 4 compares the proposed method with the conventional MLP-based approach in terms of both conceptual blending and image generation quality.

3. Investigating Conceptual Blending

This section quantitatively evaluates under which conditions conceptual blending emerges in Stable Diffusion. To assess this, we detect whether an image generation result exhibits conceptual blending by identifying the concepts depicted in each generated image.

3.1. Experimental Setup

3.1.1. Task

Given an interpolated embedding between two text prompts describing concepts A and B, respectively, we measure how often a text-to-image diffusion model exhibits conceptual blending of the two concepts. This paper selects Stable Diffusion222Stable Diffusion-v1-4 on the model card: https://github.com/CompVis/stable-diffusion/blob/main/Stable_Diffusion_v1_Model_Card.md (Accessed August 7, 2024) (Vision and at Ludwig Maximilian University of Munich, 2022) as a diffusion model which uses the CLIP333CLIP ViT-L/14 on the model card: https://github.com/openai/CLIP/blob/main/model-card.md (Accessed August 7, 2024) (Radford et al., 2021) text encoder to compute the CLIP last-hidden-space text embeddings. For each interpolated embedding, images are generated for analysis.

3.1.2. Evaluation Data

A list of 1,000 existing English nouns representing different concepts is prepared, referred to as EvalNouns1000 hereafter. These nouns are randomly taken from the MRC Psycholinguistic Database (Coltheart, 1981) with the restrictions of word imageability and frequency. Low-imageable and low-frequent words are filtered out to ensure that the concepts can be depicted clearly in images and that the words are not rare in everyday use (See supplementary materials for more details). Next, for two nouns (denoting concepts A and B) selected from EvalNouns1000, an interpolated embedding in the CLIP last-hidden-state space, , is calculated as a linear interpolation between the text embeddings of the two nouns/concepts. In detail, for each pair of the embeddings and corresponding to concepts A and B, the interpolated embedding is calculated as

| (1) |

where denotes an interpolation ratio ranging between 0 and 1. We use the prompt ``a photo of a <WORD>'' for calculating embeddings and where ``<WORD>'' denotes each concept. 1,000 such pairs are created by randomly choosing two nouns from EvalNouns1000. The interpolation ratio for each pair is randomly assigned from 0.1 to 0.9 with the step size 0.1. For each pair, images are generated using the interpolated embedding.

3.2. Detecting a Single Visual Concept

Conceptual blending can be regarded as image generation depicting multiple visual concepts. Hence, to detect this, we first need to classify whether each image depicts a single visual concept.

For this classification, we exploit CLIP score (Radford et al., 2021) which measures the cross-modal similarity between an image and a text. CLIP score is a cosine similarity between the embeddings of an image and a text encoded by a pretrained CLIP444This paper uses CLIP ViT-L/14 for the calculation of CLIP score, too. (Radford et al., 2021). A higher CLIP score between an image and a text indicates a higher likelihood of the image matching the text. Measuring this score between a generated image and a text that describes concept A enables detecting whether the single concept A is depicted in the image.

[]Figure 2. Fully described in the text.

|

[]Figure 3. Fully described in the text.

We approach this by finding the boundary on the CLIP score axis that best classifies the presence of a concept in a generated image. This paper defines it as the naïve Bayes decision boundary between the CLIP scores of matching and mismatching image-text pairs. The matching pairs are those of a generated image and its text prompt used for generating the image. The mismatching pairs are those of a generated image and a text prompt unrelated to the image generation. Here, 10,000 matching pairs are created by generating ten images for each concept in EvalNouns1000 with a prompt ``a photo of a <WORD>''. The mismatching pairs are created by shuffling the correspondence of the matching pairs. In calculating CLIP scores, we perform prompt engineering to increase the number of samples and thus the precision of the scores (See supplemental materials for more details). Figure 2 shows the distribution of the CLIP scores between each pair in the matching and mismatching pairs. The decision boundary indicated in the figure classifies whether a given image-text pair matches or mismatches, detecting the presence of the concept denoted by the text in the image. This single-concept classifier with the threshold is used in later sections to detect conceptual blending in images.

3.3. Detecting Conceptual Blending

Next, a set of generated images for each interpolated embedding is inspected to see whether it exhibits conceptual blending. We detect conceptual blending by identifying the depicted concepts in each image using the single-concept classifiers introduced in Section 3.2.

We first define two types of conceptual blending. Here, two image generation cases are distinguished involving conceptual blending between two concepts, A and B: Blended Concept Depiction (BCD) and Mixed Concept Depiction (MCD). BCD corresponds to the narrow sense of conceptual blending, where at least of the generated images show the blended concept of concepts A and B. MCD denotes a broader sense of conceptual blending, corresponding to the cases where at least images show concept A while at least images which do not necessarily show concept A, show concept B. We measure MCD as well as BCD because, although less direct than BCD, its emergence can still be a clue that both concepts A and B have been considered in the image generation result.

To detect both cases, we first detect the respective presence of concepts A and B in each of the images using the classifier introduced in Section 3.2. Then, we count BCD cases in which both concepts A and B are identified simultaneously in at least of the images. Meanwhile, we also count MCD cases where concepts A and B are identified in at least of the images, respectively. Figure 3 shows examples of BCD and MCD cases detected on image generation results.

| Interpolation Ratio of Concept A to Concept B | ||||||||||

| Case | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | Overall |

| Concept A | 0.152 | 0.311 | 0.411 | 0.709 | 0.948 | 0.981 | 1.000 | 1.000 | 1.000 | 0.732 |

| Concept B | 1.000 | 1.000 | 1.000 | 0.991 | 0.914 | 0.731 | 0.472 | 0.277 | 0.265 | 0.738 |

| BCD | 0.143 | 0.301 | 0.348 | 0.521 | 0.621 | 0.593 | 0.416 | 0.257 | 0.257 | 0.389 |

| MCD | 0.152 | 0.311 | 0.411 | 0.701 | 0.862 | 0.722 | 0.472 | 0.277 | 0.265 | 0.471 |

| Support | 105 | 103 | 112 | 117 | 116 | 108 | 125 | 101 | 113 | 1,000 |

3.4. Results

Table 1 shows the ratios of cases where Concept A, Concept B, BCD, and MCD are detected respectively in each image generation result. These are measured under the settings and (See supplemental materials for results under different settings).

We first observed a BCD ratio of 0.621 and an MCD ratio of 0.862 when inputting the midpoint between the embeddings of concepts A and B. This indicates that more than 60% and 85% of image generation results depicted blended and mixed concepts, respectively. When aggregating all interpolation ratios, they dropped to 0.389 and 0.471, respectively. These ratios are still high because Stable Diffusion is not explicitly trained to visualize conceptual blending.

The results also revealed that the BCD and MCD ratios achieved the highest scores at the interpolation ratio of 0.5. This aligns with the previous finding (Melzi et al., 2023), suggesting that inputting the midpoint embedding maximizes the occurrence of conceptual blending.

Furthermore, the detected BCD cases appeared to contain two further subcases. One is the case where two concepts were depicted simultaneously in an image (e.g., ``calf'' and ``cave'' shown in Fig. 1), and the other is where the textures of two concepts were blended (e.g., ``armour'' and ``spider'' shown in Fig. 3). The former is more likely to occur when concepts A and B are often co-photographed in a real-world scene, whereas the latter is likely to occur when either of the concepts can grammatically work as an adjective. Thus, Stable Diffusion depicts ``calf'' and ``cave'' simultaneously since ``calf'' can be in a ``cave'' in the real world (See Fig. 1). Meanwhile, since ``armour'' can work as the stem of an adjective as in ``armoured'', it can generate images depicting ``an armoured spider''.

4. Nonword-to-Image Generation

Based on the findings reported in Section 3.4, this section investigates conceptual blending in nonword-to-image generation results.

[]Figure 4. Fully described in the text.

4.1. Generalized Framework

Figure 4 shows a generalized framework proposed by an existing study (Matsuhira et al., 2023b, a, 2024) which utilizes a CLIP text encoder and Stable Diffusion to generate images for a nonword. First, a nonword encoder encodes a target nonword into the CLIP pooled embedding space to be in a location interpolating its similar-sounding words. To realize this, the existing study trains a language encoder NonwordCLIP by distilling the CLIP text encoder to project a nonword into the CLIP pooled embedding space. This distillation is performed in the pooled embedding space to ensure compatibility with the CLIP image encoder which also encodes images into the pooled embedding space. During this distillation, a phonetic prior is inserted into the nonword encoder to approximate nonword embeddings to those of the phonetically similar existing words.

After this nonword projection, an embedding space conversion method converts the pooled embedding into a corresponding last-hidden-state embedding before generating images using Stable Diffusion. This process is required by Stable Diffusion because it generates images from the CLIP last-hidden-state embedding space, not the pooled embedding space.

This paper adopts the same framework to first project nonwords into the pooled embedding space rather than projecting directly into the last-hidden-state embedding space, and then convert embeddings from the former space to the latter. We stick to this framework because using the CLIP pooled embedding space is a more common tradition than using other embedding spaces, and is also more flexible for extension to other image generation paradigms with different text-to-image generation models. For example, the same framework can be used for audio-to-image generation using a pretrained audio encoder distilled from the CLIP pooled embedding space (Wu et al., 2022) even if a generative model requires the CLIP penultimate layer's hidden-state embeddings, only with a minor adjustment.

4.2. Proposed Embedding Space Conversion

To convert embeddings between the two CLIP embedding spaces, the existing study (Matsuhira et al., 2023b, a, 2024) trained an MLP that reconstructs a last-hidden-state embedding from a pooled embedding. However, since the CLIP pooled embedding space is a compressed space of the last-hidden-state embedding space, it is impossible to perfectly reconstruct last-hidden-state embeddings directly from pooled embeddings. This can lead to poor-quality image generation and a reduced chance of generating blended concepts.

To bypass this information loss, the proposed method takes a different approach; We combine nearest-neighbor search and linear regression to convert a pooled embedding into its last-hidden-state embedding. In the proposed method, last-hidden-state embeddings are calculated as interpolation in the last-hidden-state embedding space using the neighborhood relationships in the pooled embedding space. This approach improves the conversion accuracy because the last-hidden-state embedding is estimated not directly from the pooled embedding but based on the interpolation of the neighborhood embeddings in the last-hidden-state embedding space.

The proposed method first performs -nearest neighbor search for a target nonword embedding in the pooled embedding space, obtaining text embeddings . Then, a linear regressor is trained to predict from its nearest-neighbor embeddings, predicting optimized coefficients , which minimizes the loss of

| (2) |

Lastly, we estimate the last-hidden-state embedding by performing a linear combination in the last-hidden-state embedding space using the optimized coefficients, which is formulated as

| (3) |

Our regressor does not employ a constant variable as the resulting intercept is valid only for regression in the pooled embedding space.

This estimation works only if the data distributions in two embedding spaces are similar. Our case should meet this requirement since the CLIP pooled embedding space is a space linearly compressed from the CLIP last-hidden-state embedding space (Radford et al., 2021).

4.3. Evaluating Embedding Space Conversion

This section evaluates the proposed embedding space conversion method in terms of information loss, neighborhood relationships, and image generation quality.

4.3.1. Task

Given an interpolated embedding in the pooled embedding space, the task is to estimate its last-hidden-state embedding that preserves the positional relationships with its neighbors with minimum loss. In this evaluation, we first prepare pairs of interpolated embeddings in both pooled and last-hidden-state embedding spaces. Equation (1) and its analogy to the pooled output space are used for calculating the interpolated embeddings in each space. For each pair, the last-hidden-state interpolated embedding is regarded as the ground truth for the pooled interpolated embedding. This evaluation does not use actual outputs of the nonword encoder as shown in the generalized framework because there is no direct way to prepare corresponding last-hidden-state embeddings.

4.3.2. Implementation

The proposed method performs -nearest neighbor search on 26,143 data samples in the pooled embedding space and uses the corresponding 26,143 samples for the estimation in the last-hidden-state embedding space. These samples, which we refer to as TrainWords26143, are taken from the existing study (Matsuhira et al., 2023b, a, 2024). TrainWords26143 consists of 26,143 words listed on the Spell Checker Oriented Word Lists (SCOWL)555http://wordlist.aspell.net/ (Accessed August 7, 2024). The previous work selected those words based on word frequency and pronunciation availability. In this paper, we create an embedding from each word using a prompt ``a photo of a <WORD>''. We also confirmed that our EvalNouns1000 is a subset of TrainWords26143. This evaluation tests the hyperparameter with different integers ranging from 1 to 1,000.

We compare the proposed method with the MLP-based method identical to the one used in the existing study (Matsuhira et al., 2023b, a, 2024). Their training data for the MLP used a set of 26,455 words. This set is almost identical to TrainWords26143, with the only difference being that it contains an additional 312 words for which no pronunciation was available. To increase the number of samples, they created three prompts for each word in the wordlist: ``<WORD>'', ``a photo of <WORD>'', and ``a photo of a <WORD>'', although this augmentation is not performed in our method.

4.3.3. Evaluation Data and Metrics

As evaluation data, we use the 1,000 matching pairs created in Section 3, which are pairs of two words randomly selected from EvalNouns1000, to prepare 1,000 interpolated embeddings.

As a metric for the information loss, we compute the L2 distance between a ground-truth embedding and an estimated embedding in the last-hidden-state embedding space averaged over all samples, which we call an L2 error. To see how well the neighborhood relationships are preserved, we also report Spearman's rank correlation between the rankings of neighborhood embeddings within TrainWords26143 averaged over all samples. The rankings for ground-truth and estimated embeddings are obtained by searching their nearest-neighbor embeddings in the pooled and last-hidden-state embedding spaces, respectively. All the nearest-neighbor searches during this evaluation are performed on an L2 distance basis. The metric is measured under two different s: 2 and 5 (See supplementary materials for results under more various settings). Before calculating these metrics, we flatten each last-hidden-state embedding shaped into a dimensional vector.

Image generation quality is measured using Fréchet Inception Distance (FID) (Heusel et al., 2017). This evaluation measures two FIDs: and . Calculating the former uses images generated from text embeddings of real text prompts which should depict clear visual concepts. In contrast, calculating the latter uses images generated from ground-truth interpolated embeddings which can exhibit conceptual blending as confirmed in Section 3. As a reference for calculating these metrics, ten images are generated from each of the 1,000 text embeddings of EvalNouns1000 and the 1,000 ground-truth interpolated embeddings introduced above666We calculate FID using a Python package pytorch-fid: https://pypi.org/project/pytorch-fid/ (Accessed August 7, 2024) with a prompt.

4.3.4. Results

| Method | L2 Error () | () | () | () | () | |

|---|---|---|---|---|---|---|

| MLP (Matsuhira et al., 2023b, a, 2024) | 245.38 | 0.846 | 0.783 | 16.03 | 11.59 | |

| Ours ( | 1) | 47.12 | 0.902 | 0.702 | 13.02 | 10.95 |

| Ours ( | 2) | 37.38 | 0.880 | 0.788 | 12.25 | 7.58 |

| Ours ( | 5) | 24.63 | 0.884 | 0.765 | 12.71 | 4.57 |

| Ours ( | 10) | 19.29 | 0.882 | 0.771 | 13.21 | 3.52 |

| Ours ( | 100) | 10.85 | 0.888 | 0.781 | 13.80 | 1.96 |

| Ours ( | 200) | 10.30 | 0.886 | 0.791 | 13.85 | 1.87 |

| Ours ( | 300) | 10.65 | 0.890 | 0.793 | 13.76 | 1.86 |

| Ours ( | 400) | 11.68 | 0.890 | 0.797 | 13.66 | 1.95 |

| Ours ( | 500) | 14.49 | 0.880 | 0.802 | 13.64 | 2.19 |

| Ours ( | 1,000) | 39.14 | 0.882 | 0.799 | 13.73 | 4.11 |

|

[]Figure 5. Fully described in the text.

Results are shown in Table 2. First, we can see that the L2 error of the proposed method was always lower than that of the comparative MLP-based method with a great margin. This demonstrates the strong advantage of our interpolation approach over the direct prediction approach. The rank correlations of distances within a few neighborhood samples also showed a large gain over MLP, while tended to decrease as increased (See more results in supplementary materials). This can be explained as the curse of dimensionality, where the L2 distances measured in high-dimensional spaces become less diverse. Yet, the higher correlations of the proposed method within small s indicate that it preserved neighborhood relationships better than the comparative method.

Furthermore, the proposed method showed better scores for both FID metrics than the comparative method. This indicates that our more precise and accurate last-hidden-state embedding estimation has improved image generation quality, too. Notably, showed 1.86 point at minimum when . This small value indicates that the generated images using the proposed method were almost identical to those generated using the ground-truth interpolated embeddings. Since we have confirmed in Section 3 that the ground-truth embeddings can yield BCD in up to 60% image generation results, it suggests that the proposed method with or can also yield conceptual blending in a similar frequency, which will be assessed more deeply in the next section.

4.4. Assessing Nonword-to-Image Generation

Lastly, we assess conceptual blending in image generation results generated for actual nonwords.

4.4.1. Implementation

As an encoder to compute CLIP pooled embeddings for nonwords, we retrain the NonwordCLIP-P (Matsuhira et al., 2023b, a, 2024) pronunciation encoder with a customized dataset. Since the original training dataset contained various sentences from image captioning datasets, the trained model tended to be biased on the word frequency in the dataset. For instance, in the case of the nonword ``calve'' (/”kæv/) which we assumed to have the two most similar-sounding words ``calf'' (/”kæf/) and ``cave'' (/”keIv/), its nonword embeddings were always encoded in a similar position to ``calf'' because the dataset contained ``calf'' more frequently than ``cave''.

To avoid this, we construct a dataset in which each word appears almost an equal number of times. The dataset consists of 5,496 highly-imageable and -frequent nouns and noun phrases created by combining the MRC Psycholinguistic Database (Coltheart, 1981), a Python package wordfreq (Speer et al., 2018), and an English lexical database WordNet (Miller, 1995) (See supplementary materials for more details). We augment the dataset twice using the two prompts ``<WORD>'' and ``a photo of a <WORD>'', resulting in the training data of 10,992 samples.

4.4.2. Evaluation Data and Metrics

BCG and MCG ratios introduced in Section 3 are measured to assess conceptual blending in nonword-to-image generation results. To calculate these, this evaluation lacks ground-truth concepts A and B since the nonword embeddings are not computed by interpolating the embeddings of two concepts. Hence, to prepare pseudo-concepts A and B, we search the top-two nearest-neighbor words for each nonword embedding in the CLIP pooled embedding space. For instance, if the top-two nearest-neighbor embeddings of the nonword embedding ``calve'' are ``calf'' and ``cave'', our metrics detect occurrences of conceptual blending of ``calf'' and ``cave'' in the image generation result for ``calve''.

To align the metric calculation condition to Section 3, the nearest neighbors are searched from words in EvalNouns1000, and the hyperparameters for the metrics are set as and . When searching the second nearest-neighbor word, we exclude words that are too close to the first nearest-neighbor word from the candidates. This is to avoid concepts A and B being semantically too similar (e.g., ``bloom'' and ``blossom'') and mis-detecting the image generation of only concept A as the emergence of conceptual blending. The threshold to judge closeness is set as the first percentile of the distribution of the L2 distance between each of pairs of two samples in EvalNouns1000.

| Method | 1st-NN Concept | 2nd-NN Concept | BCD | MCD | |

|---|---|---|---|---|---|

| MLP (Matsuhira et al., 2023b, a, 2024) | 0.930 | 0.847 | 0.669 | 0.789 | |

| Ours ( | 1) | 0.913 | 0.620 | 0.545 | 0.566 |

| Ours ( | 2) | 0.913 | 0.669 | 0.550 | 0.607 |

| Ours ( | 5) | 0.959 | 0.769 | 0.603 | 0.727 |

| Ours ( | 10) | 0.926 | 0.798 | 0.579 | 0.727 |

| Ours ( | 100) | 0.913 | 0.781 | 0.595 | 0.719 |

| Ours ( | 200) | 0.938 | 0.810 | 0.624 | 0.760 |

| Ours ( | 300) | 0.884 | 0.810 | 0.607 | 0.723 |

| Ours ( | 400) | 0.884 | 0.818 | 0.612 | 0.740 |

| Ours ( | 500) | 0.876 | 0.810 | 0.628 | 0.723 |

| Ours ( | 1,000) | 0.913 | 0.868 | 0.707 | 0.789 |

For nonwords, we use Sabbatino et al.'s 270 randomly created English nonwords (Sabbatino et al., 2022), among which our evaluation uses only 242 nonwords whose embeddings are not located in a close position to existing words. Specifically, filtering is performed to restrict nonwords to be located in positions where the ratio of the distances to the top-one and top-two nearest-neighbor words, which corresponds to the interpolation ratio in Section 3, is between 0.4 and 0.6. The others are excluded because, as confirmed in Table 1, they are less likely to yield conceptual blending and can disturb metrics.

4.4.3. Results

The results are shown in Table 3. First, we confirmed that the proposed method yielded a maximum BCG ratio of 0.707 and an MCD ratio of 0.789 when was set to 1,000. This BCG ratio is much larger than the maximum value observed in Table 1 when the interpolation ratio was 0.5. The MCG and BCG ratios of the proposed method had another local maximum at , where the previous evaluation suggested the most accurate embedding conversion in the L2 error. These results indicate that the accurate embedding space conversion method did increase the chance of conceptual blending, but also suggest other factors that control the emergence of the effect. This can also be deduced by seeing the comparative method which yielded better BCD and MCD ratios than the proposed method with while producing a larger L2 error in Table 2.

To seek insights into those factors, we next look at actual nonword-to-image generation results exhibiting conceptual blending. Figure 5 shows ten images for each of the three nonwords ``calve'' (/”kæv/), ``broin'' (/”brOIn/), and ``blour'' (/”blaU@r/) generated using each method. According to the top-two nearest-neighbor words, the nonword ``calve'' has similar sounding words ``calf'' and ``cave'', ``broin'' has ``brain'' and ``bone'', and ``blour'' has ``flower'' and ``flour''. The figure indicates that all the methods can depict the blended concepts of the two similar-sounding words. Meanwhile, they mainly differed in image generation qualities and abstractness of the depicted objects, as indicated in the FID metrics in the previous evaluation. Most notably, the proposed method with tended to exaggerate the texture of visual concepts, making the images very abstract. Also, as especially observable in Figs. 5 and 5, the MLP-based method tended to lack details of objects compared to the proposed method. Such abstractness can have overrated the concept detection metrics, as our classifier used in the metrics is prone to misdetection for images containing abstract objects that are recognizable in various ways.

As for why the inaccuracy of the embedding conversion did not affect conceptual blending, the dimensionality of the CLIP last-hidden-state embedding space can be the key. Last-hidden-state embeddings of the CLIP model used in this paper have the shape , where denotes the maximum token length of a transformer model and each dimension corresponds to each token of the input text prompt. In our experimental setup, the input prompt used to create the evaluation data was always restricted to ``a photo of a <WORD>''. This prompt was generally tokenized into less than ten tokens, suggesting that the dimensions of only the first less than ten tokens were more essential than the other dimensions.

Considering this, we calculate the dimension-wise L2 error metric in the same setting as Table 2, as shown in Fig. 6. As expected, the MLP-based approach yielded more information loss than the proposed method in most dimensions. However, the loss became comparable especially in the dimension of the first token corresponding to the [CLS] token, which usually represents the global feature of a last-hidden-state embedding. A similar trend can be seen in the position of the seventh token, where [EOS] (End Of Sentence) token typically falls. These results indicate that the embedding conversion accuracy in the dimension of [CLS] and [EOS] tokens would be the most responsible for the occurrence of conceptual blending in text-to-image diffusion models. Stable Diffusion could be referring dominantly to these two dimensions for determining concepts to blend in our experimental setup.

[]Figure 6. Fully described in the text.

5. Limitations

The results represented in Tables 1 and 3 are affected by the precision of the classifier used to detect conceptual blending. Therefore, constructing a more precise classifier would yield a more reliable measurement for blending. Besides, the proposed nonword-to-image generation method inherits limitations from the original Stable Diffusion regarding the ability to blend concepts. Due to this, image generation for nonwords would not always blend concepts even if they had multiple similar-sounding words.

6. Conclusion

This paper first quantitatively analyzed under which conditions text-to-image diffusion models exhibit conceptual blending. We targeted a pretrained model, Stable Diffusion (Rombach et al., 2022; Vision and at Ludwig Maximilian University of Munich, 2022), finding that it blends concepts in a high percentage of generated images when inputting an embedding interpolating between the text embeddings of two text prompts referring to different concepts. Next, we explored the best embedding space conversion method in the nonword-to-image generation framework (Matsuhira et al., 2023b, a, 2024) to analyze factors that affect conceptual blending and image generation quality. We compared the conventional direct prediction approach with the proposed method combining -nearest neighbor search and linear regression. The evaluation showed that the embedding space conversion accuracy improved by the proposed method contributed to better image generation quality. It also suggested key dimensions in the high-dimensional text embedding space that could trigger the text-to-diffusion models to determine which concepts to blend.

As future work, it would be interesting to investigate the correlation of the conceptual blending in diffusion models and nonword-to-image generation results with human cognition. Furthermore, evaluations with diverse prompts could yield additional insights into the occurrence of this phenomenon.

Acknowledgements.

This work was partly supported by Microsoft Research CORE16 program and JSPS Grant-in-aid for Scientific Research (22H03612 and 23K24868). The computation was carried out using the General Projects on supercomputer ``Flow'' at Information Technology Center, Nagoya University. This work was financially supported by JST SPRING, Grant Number JPMJSP2125. The author C. Matsuhira would like to take this opportunity to thank the ``THERS Make New Standards Program for the Next Generation Researchers''.References

- (1)

- Betker et al. (2023) James Betker, Gabriel Goh, Li Jing, Tim Brooks, Jianfeng Wang, Linjie Li, Long Ouyang, Juntang Zhuang, Joyce Lee, Yufei Guo, Wesam Manassra, Prafulla Dhariwal, Casey Chu, Yunxin Jiao, and Aditya Ramesh. 2023. Improving image generation with better captions. https://cdn.openai.com/papers/dall-e-3.pdf (Accessed August 7, 2024), OpenAI.

- Coltheart (1981) Max Coltheart. 1981. The MRC psycholinguistic database. Q. J. Exp. Psychol. Section A 33, 4 (1981), 497–505. https://doi.org/10.1080/14640748108400805

- Fauconnier and Turner (1998) Gilles Fauconnier and Mark Turner. 1998. Conceptual integration networks. Cog. Sci. 22, 2 (1998), 133–187. https://doi.org/10.1016/S0364-0213(99)80038-X

- Goodfellow et al. (2014) Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 27 (12 2014), 9 pages.

- Heusel et al. (2017) Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Adv. Neural Inf. Process. Syst. 30 (2017), 6626–6637.

- Hinton et al. (1995) Leanne Hinton, Johanna Nichols, and John J. Ohala. 1995. Sound Symbolism. Cambridge University Press, Cambridge, England, UK. https://doi.org/10.1017/CBO9780511751806

- Kingma and Welling (2013) Diederik P. Kingma and Max Welling. 2013. Auto-encoding variational bayes. Comput. Res. Reposit., arXiv Preprints, arXiv:1312.6114. https://doi.org/10.48550/arXiv.1312.6114

- Köhler (1929) Wolfgang Köhler. 1929. Gestalt Psychology. H. Liveright, New York, NY, USA.

- Matsuhira et al. (2023a) Chihaya Matsuhira, Marc A. Kastner, Takahiro Komamizu, Takatsugu Hirayama, Keisuke Doman, and Ichiro Ide. 2023a. Nonword-to-image generation considering perceptual association of phonetically similar words. In Proc. 1st Int. Workshop Multimed. Content Gener. Eval.: New Methods Pract. (Ottawa, ON, Canada). 115–125. https://doi.org/10.1145/3607541.3616818

- Matsuhira et al. (2023b) Chihaya Matsuhira, Marc A. Kastner, Takahiro Komamizu, Takatsugu Hirayama, Keisuke Doman, Yasutomo Kawanishi, and Ichiro Ide. 2023b. IPA-CLIP: Integrating phonetic priors into vision and language pretraining. Comput. Res. Reposit., arXiv Preprints, arXiv:2303.03144. https://doi.org/10.48550/arxiv.2303.03144

- Matsuhira et al. (2024) Chihaya Matsuhira, Marc A. Kastner, Takahiro Komamizu, Takatsugu Hirayama, Keisuke Doman, Yasutomo Kawanishi, and Ichiro Ide. 2024. Interpolating the text-to-image correspondence based on phonetic and phonological similarities for nonword-to-image generation. IEEE Access 12 (2024), 41299–41316. https://doi.org/10.1109/ACCESS.2024.3378095

- Melzi et al. (2023) Simone Melzi, Rafael Peñaloza, and Alessandro Raganato. 2023. Does Stable Diffusion dream of electric sheep?. In Proc. 7th Image Schema Day (Rhodes, Greece) (CEUR Workshop Proceedings 3511). 11 pages.

- Miller (1995) George A. Miller. 1995. WordNet: A lexical database for English. Commun. ACM 38, 11 (1995), 39–41. https://doi.org/10.1145/219717.219748

- Nichol et al. (2022) Alex Nichol, Prafulla Dhariwal, Aditya Ramesh, Pranav Shyam, Pamela Mishkin, Bob McGrew, Ilya Sutskever, and Mark Chen. 2022. GLIDE: Towards photorealistic image generation and editing with text-guided diffusion models. In Proc. 39th Int. Conf. Mach. Learn., Proc. Mach. Learn. Res. (Baltimore, MD, USA), Vol. 162. 16784–16804.

- Radford et al. (2021) Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. 2021. Learning transferable visual models from natural language supervision. In Proc. 38th Int. Conf. Mach. Learn., Proc. Mach. Learn. Res. (Online), Vol. 139. 8748–8763.

- Ramesh et al. (2022) Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with CLIP latents. Comput. Res. Reposit., arXiv Preprints, arXiv:2204.06125. https://doi.org/10.48550/arXiv.2204.06125

- Ritchie (2004) L. David Ritchie. 2004. Lost in ``conceptual space'': Metaphors of conceptual integration. Metaphor and Symb. 19, 1 (2004), 31–50. https://doi.org/10.1207/S15327868MS1901_2

- Rombach et al. (2022) Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proc. 2022 IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (New Orleans, LA, USA). 10684–10695. https://doi.org/10.1109/CVPR52688.2022.01042

- Sabbatino et al. (2022) Valentino Sabbatino, Enrica Troiano, Antje Schweitzer, and Roman Klinger. 2022. ``splink'' is happy and ``phrouth'' is scary: Emotion intensity analysis for nonsense words. In Proc. 12th Workshop Comput. Approaches Subj. Sentiment Soc. Media Anal. (Dublin, Ireland). 37–50. https://doi.org/10.18653/v1/2022.wassa-1.4

- Saharia et al. (2022) Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily Denton, Seyed Kamyar Seyed Ghasemipour, Burcu Karagol Ayan, S. Sara Mahdavi, Rapha Gontijo Lopes, Tim Salimans, Jonathan Ho, David J. Fleet, and Mohammad Norouzi. 2022. Photorealistic text-to-image diffusion models with deep language understanding. Comput. Res. Reposit., arXiv Preprints, arXiv:2205.11487. https://doi.org/10.48550/arxiv.2205.11487

- Sapir (1929) Edward Sapir. 1929. A study in phonetic symbolism. J. Exp. Psychol. 12, 3 (1929), 225–239. https://doi.org/10.1037/h0070931

- Schuhmann et al. (2022) Christoph Schuhmann, Romain Beaumont, Richard Vencu, Cade W. Gordon, Ross Wightman, Mehdi Cherti, Theo Coombes, Aarush Katta, Clayton Mullis, Mitchell Wortsman, Patrick Schramowski, Srivatsa R. Kundurthy, Katherine Crowson, Ludwig Schmidt, Robert Kaczmarczyk, and Jenia Jitsev. 2022. LAION-5B: An open large-scale dataset for training next generation image-text models. Adv. Neural Inf. Process. Syst. 35 (2022), 25278–25294.

- Sohl-Dickstein et al. (2015) Jascha Sohl-Dickstein, Eric Weiss, Niru Maheswaranathan, and Surya Ganguli. 2015. Deep unsupervised learning using nonequilibrium thermodynamics. In Proc. 32nd Int. Conf. Mach. Learn., Proc. Mach. Learn. Res. (Lille, Nord, France), Vol. 37. 2256–2265.

- Speer et al. (2018) Robyn Speer, Joshua Chin, Andrew Lin, Sara Jewett, and Lance Nathan. 2018. LuminosoInsight/wordfreq: v2.2. https://doi.org/10.5281/zenodo.1443582

- Vision and at Ludwig Maximilian University of Munich (2022) Computer Vision and Learning Research Group at Ludwig Maximilian University of Munich. 2022. Stable Diffusion. https://github.com/CompVis/stable-diffusion/ (Accessed August 7, 2024).

- Wu et al. (2022) Ho-Hsiang Wu, Prem Seetharaman, Kundan Kumar, and Juan Pablo Bello. 2022. Wav2CLIP: Learning robust audio representations from CLIP. In Proc. 2022 IEEE Int. Conf. Acoust. Speech Signal Process. (Singapore). 4563–4567. https://doi.org/10.1109/ICASSP43922.2022.9747669

Appendix A Supplementary Materials

A.1. Dataset Creation

A.1.1. EvalNouns1000

EvalNouns1000 is a list of 1,000 nouns created by our paper as evaluation data. This wordlist is used in our paper mainly for the following two purposes:

-

•

To create 10,000 matching and 10,000 mismatching pairs in Section 3.

-

•

To create 1,000 interpolated embeddings in Section 4.3.

As mentioned in our paper, these nouns are randomly taken from the MRC Psycholinguistic Database [2] with the restrictions of word imageability and frequency. This database provides an imageability score for each noun ranging from 100 to 700, where a high score indicates that the noun is highly imageable. Although it also provides word frequency scores, we do not use them but instead use the Python package wordfreq [24]. This is to ensure EvalNouns1000 to be a subset of another dataset TrainWords26143, which will be described later. Selecting words with 500 or more imageability scores and 3.5 or more Zipf frequency values resulted in 1,183 words in total. EvalNouns1000 is created by randomly sampling 1,000 words from these words.

A.1.2. TrainWords26143

TrainWords26143 is a list of 26,143 words compiled by an existing study on nonword-to-image generation [9–11]. Our paper uses this wordlist mainly for the following three purposes:

-

•

Used by the proposed embedding space conversion method to create anchors of -nearest neighbor search and linear regression.

-

•

These anchors are also used for calculating Spearman's rank correlation metrics in Section 4.3.

-

•

A minor-modified one is used to train a comparative Multi-Layer Perceptron (MLP).

As mentioned in our paper, the existing study created this wordlist using the Spell Checker Oriented Word Lists (SCOWL)777http://wordlist.aspell.net/ (Accessed August 7, 2024) and 26,143 words were selected based on word frequency and pronunciation availability. Specifically, a Python package wordfreq [24] was used to remove words having Zipf frequency less than . Also, the Carnegie Mellon University (CMU) dictionary888https://github.com/menelik3/cmudict-ipa/ (Accessed August 7, 2024) was looked up for checking the pronunciation availability.

The modified wordlist used to train the MLP consists of 26,455 words, which was created by adding 312 words filtered out during the pronunciation availability check.

A.1.3. Training Data of NonwordCLIP

To train a NonwordCLIP [9–11] in Section 4, we constructed a dataset in which each word appears almost an equal number of times. As mentioned in our paper, the dataset consists of 5,496 highly-imageable and -frequent nouns and noun phrases created by combining the MRC Psycholinguistic Database [2], wordfreq [24], and an English lexical database WordNet [13].

First, from the MRC database, we collected highly-imageable nouns having an imageability score of 500 or more. Next, we used WordNet to augment the vocabulary based on Liu et al. (Liu et al., 2014)'s procedure, in which synonym and hyponym relationships on WordNet were used to extend the imageability dictionary. Specifically, for each noun in an imageability dictionary, their method propagated the same imageability score to the synonyms and hyponyms of the noun. Following this policy, for each word in our imageable noun list, we propagated its imageability score to its first, second, and third synonym nouns and all hyponym nouns. Natural Language ToolKit (NLTK) (Bird et al., 2009) was used to access the WordNet hierarchy and to judge whether each WordNet node is a noun or a noun phrase. After this augmentation, we used wordfreq to obtain nouns having 3.5 or more Zipf frequency values.

Lastly, we further augmented the dataset twice using the two prompts ``<WORD>'' and ``a photo of a <WORD>'', resulting in training data of 10,992 samples.

A.2. Prompt Engineering for Calculating CLIP Score

In Section 3.2, Contrastive Language-Image Pretraining (CLIP) score [15] was calculated to detect the presence of a single concept in an image. To increase the precision of the scores, we adopted prompt engineering like the one adopted in the original paper [15] to solve an image classification task999https://github.com/openai/CLIP/blob/main/notebooks/Prompt_Engineering_for_ImageNet.ipynb (Accessed August 7, 2024). The original paper used 80 templates describing images containing a target concept, all of which ends with a period, such as ``a bad photo of a <WORD>.''. Our paper increased the number of templates to 160 by creating a variant without the period in the ending position for each template, such as ``a bad photo of a <WORD>''.

For each pair of a concept and an image, we calculated the final CLIP score by averaging the 160 CLIP similarity scores computed for each prompt.

A.3. Detailed Experimental Results

A.3.1. Results under Different s

Tables 4, 5, and 6 show the ratios of conceptual blending evaluated in Section 3 under different s. Our conclusions mentioned in the paper are consistent throughout all s, while the ratio decreases as increases because setting a larger makes the detection criterion more strict.

| Interpolation Ratio of Concept A to Concept B | ||||||||||

| Case | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | Overall |

| Concept A | 0.286 | 0.466 | 0.580 | 0.855 | 0.974 | 0.991 | 1.000 | 1.000 | 1.000 | 0.802 |

| Concept B | 1.000 | 1.000 | 1.000 | 1.000 | 0.957 | 0.870 | 0.648 | 0.495 | 0.496 | 0.829 |

| BCD | 0.286 | 0.466 | 0.562 | 0.744 | 0.819 | 0.778 | 0.600 | 0.465 | 0.487 | 0.584 |

| MCD | 0.286 | 0.466 | 0.580 | 0.855 | 0.931 | 0.861 | 0.648 | 0.495 | 0.496 | 0.631 |

| Interpolation Ratio of Concept A to Concept B | ||||||||||

| Case | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | Overall |

| Concept A | 0.152 | 0.311 | 0.411 | 0.709 | 0.948 | 0.981 | 1.000 | 1.000 | 1.000 | 0.732 |

| Concept B | 1.000 | 1.000 | 1.000 | 0.991 | 0.914 | 0.731 | 0.472 | 0.277 | 0.265 | 0.738 |

| BCD | 0.143 | 0.301 | 0.348 | 0.521 | 0.621 | 0.593 | 0.416 | 0.257 | 0.257 | 0.389 |

| MCD | 0.152 | 0.311 | 0.411 | 0.701 | 0.862 | 0.722 | 0.472 | 0.277 | 0.265 | 0.471 |

| Interpolation Ratio of Concept A to Concept B | ||||||||||

| Case | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | Overall |

| Concept A | 0.010 | 0.107 | 0.134 | 0.291 | 0.716 | 0.898 | 0.984 | 1.000 | 1.000 | 0.578 |

| Concept B | 1.000 | 1.000 | 0.982 | 0.897 | 0.698 | 0.380 | 0.208 | 0.099 | 0.053 | 0.587 |

| BCD | 0.000 | 0.097 | 0.116 | 0.162 | 0.302 | 0.204 | 0.184 | 0.099 | 0.053 | 0.138 |

| MCD | 0.010 | 0.107 | 0.134 | 0.214 | 0.466 | 0.306 | 0.208 | 0.099 | 0.053 | 0.181 |

A.3.2. Results under Different s

Table 7 shows the transition of the rank correlation metric used in Section 4.3 with different s. The hyperparameter denotes how many nearest-neighbor embeddings in both the CLIP pooled and last-hidden-state embedding spaces are used to calculate the rank correlation. As a reference, we also measured the rank correlation metric between the nearest-neighbor ranking for the ground-truth interpolated embedding in the pooled embedding space and that for the ground-truth interpolated embedding in the last-hidden-state embedding space, averaged over all samples. This metric, shown as ``Ground Truth'' in the table, measures the alignment of the sample distributions in the two embedding spaces.

The results in the table indicate that the comparative MLP-based method yielded higher correlations than the proposed method under a large , and they were even higher than the metrics measured using the ground-truth interpolated embeddings presumably due to the curse of dimensionality.

| Method | ||||||

|---|---|---|---|---|---|---|

| MLP [9–11] | 0.846 | 0.783 | 0.711 | 0.464 | 0.444 | |

| Ours ( | 1) | 0.902 | 0.702 | 0.586 | 0.352 | 0.363 |

| Ours ( | 2) | 0.880 | 0.788 | 0.669 | 0.376 | 0.365 |

| Ours ( | 5) | 0.884 | 0.765 | 0.735 | 0.395 | 0.366 |

| Ours ( | 10) | 0.882 | 0.771 | 0.689 | 0.397 | 0.366 |

| Ours ( | 100) | 0.888 | 0.781 | 0.694 | 0.373 | 0.365 |

| Ours ( | 200) | 0.886 | 0.791 | 0.697 | 0.373 | 0.365 |

| Ours ( | 300) | 0.890 | 0.793 | 0.699 | 0.373 | 0.365 |

| Ours ( | 400) | 0.890 | 0.797 | 0.700 | 0.373 | 0.365 |

| Ours ( | 500) | 0.880 | 0.802 | 0.701 | 0.373 | 0.364 |

| Ours ( | 1,000) | 0.882 | 0.799 | 0.696 | 0.367 | 0.359 |

| Ground Truth | 0.868 | 0.809 | 0.703 | 0.373 | 0.365 | |

A.4. Similarity between Embedding Spaces

In Section 4.2, our paper assumed similar data distributions in the CLIP pooled embedding space and the CLIP last-hidden-state embedding space to convert embeddings from one space to another. To support this assumption, this section quantitatively evaluates how similar the two embedding spaces are.

First, we describe how embeddings in each space are computed by the CLIP adopted in our paper. In the forwarding step of CLIP, it first computes a -dimensional last-hidden-state embedding for a given text prompt. Each of the 77 dimensions corresponds to the tokens of the tokenized text prompt. For instance, if the prompt is ``a photo of a calf'' and its tokenized result is [[CLS], `a', `photo', `of', `a', `calf', [EOS]], the first and seventh dimensions of the embedding correspond to the [CLS] token and the [EOS] token, respectively, Next, a 768-dimensional pooled embedding is computed by linearly projecting the -dimensional [EOS] token embedding chosen from the -dimensional whole last-hidden-state embedding. This means that the last-hidden-state embedding of only the [EOS] position is linearly predictable from a pooled embedding.

To quantify the actual inter-space similarity, we conducted Canonical Correlation Analysis (CCA) between the two spaces using the whole dataset of TrainWords26143 as data samples. Due to the huge dimensionality of the last-hidden-state embedding space, CCA is applied token-wise; Correlations are measured between the pooled embedding space and each dimension of the 77 token positions of the last-hidden-state embedding space.

[]Figure 8. Fully described in the text.

The result is shown in Fig. 7. The figure shows more than a 0.90 maximum correlation between the pooled embedding and each slice of the corresponding last-hidden-state embedding later than the fifth token position, while the other earlier slices yield almost no correlation. The fifth and earlier slices rarely vary among each sample under our experimental setting since they always correspond to the tokens [[CLS], `a', `photo', `of', `a']. On the other hand, the slices after them vary, truly representing the text features expressed in the last-hidden-embedding space. Therefore, the high correlation of the pooled embedding space to the slices after them allows us to conclude that the data distributions in the two spaces are similar enough to ensure that the linear combination in Eq. (3) works.

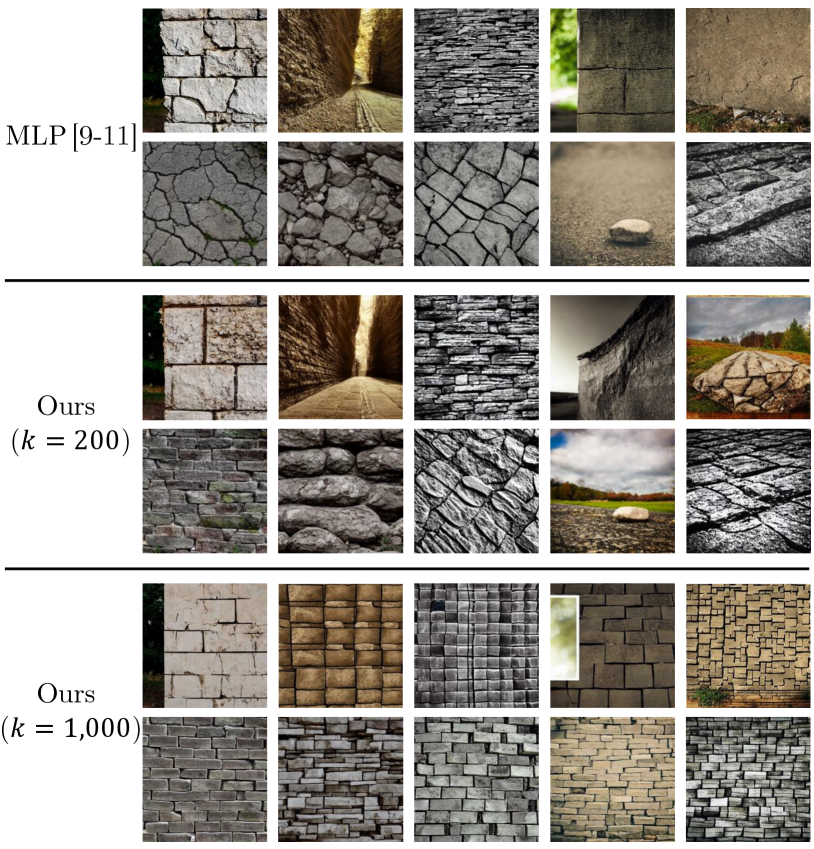

A.5. Image Generation Examples

A.5.1. Image Generation from Interpolated Embeddings

Figure 8 shows more conceptual blending examples yielded by inputting interpolated embeddings into Stable Diffusion. These cases of conceptual blending are detected by our classifier constructed in Section 3.

Figures 9 and 10 compare images generated from embeddings computed by different text embedding space conversion methods evaluated in Section 4.3. Also, at the bottom right of each figure, the images generated from the ground-truth last-hidden-state embeddings used in Section 3 are attached for comparison.

A.5.2. Nonword-to-Image Generation

Figure 11 showcases more nonword-to-image generation results generated by different methods used in the evaluation in Section 4.4. As mentioned in Section 4.4.2, these nonwords are taken from Sabbatino et al. [19]'s work, in which they annotated evoked emotion labels to each of them.

|

|

|

|

[]Figure 9. Fully described in the text.

[]Figure 10. Fully described in the text.

[]Figure 11. Fully described in the text.

|

|

[]Figure 12. Fully described in the text.

References

- (1)

- Bird et al. (2009) Steven Bird, Ewan Klein, and Edward Loper. 2009. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit. O'Reilly Media, Inc., Sebastopol, CA, USA.

- Liu et al. (2014) Ting Liu, Kit Cho, G. Aaron Broadwell, Samira Shaikh, Tomek Strzalkowski, John Lien, Sarah Taylor, Laurie Feldman, Boris Yamrom, Nick Webb, Umit Boz, Ignacio Cases, and Ching-sheng Lin. 2014. Automatic expansion of the MRC psycholinguistic database imageability ratings. In Proc. 9th Int. Conf. Lang. Resour. Eval. (Reykjavik, Iceland). 2800–2805.