Inverse Uncertainty Quantification by Hierarchical Bayesian Modeling and Application in Nuclear System Thermal-Hydraulics Codes

Abstract

In nuclear Thermal Hydraulics (TH) system codes, a significant source of input uncertainty comes from the Physical Model Parameters (PMPs), and accurate uncertainty quantification in these input parameters is crucial for validating nuclear reactor systems within the Best Estimate Plus Uncertainty (BEPU) framework. Inverse Uncertainty Quantification (IUQ) method has been used to quantify the uncertainty of PMPs from a Bayesian perspective. This paper introduces a novel hierarchical Bayesian model for IUQ which aims to mitigate two existing challenges: the high variability of PMPs under varying experimental conditions, and unknown model discrepancies or outliers causing over-fitting issues for the PMPs.

The proposed hierarchical model is compared with the conventional single-level Bayesian model based on the PMPs in TRACE using the measured void fraction data in the Boiling Water Reactor Full-size Fine-mesh Bundle Test (BFBT) benchmark. A Hamiltonian Monte Carlo Method - No U-Turn Sampler (NUTS) is used for posterior sampling in the hierarchical structure. The results demonstrate the effectiveness of the proposed hierarchical structure in providing better estimates of the posterior distributions of PMPs and being less prone to over-fitting. The proposed hierarchical model also demonstrates a promising approach for generalizing IUQ to larger databases with a broad range of experimental conditions and different geometric setups.

keywords:

Hierarchical Bayesian Model , Inverse Uncertainty Quantification , Thermal Hydraulics , Markov Chain Monte Carlo , Surrogate Models[label1]organization=Department of Nuclear, Plasma and Radiological Engineering, University of Illinois Urbana Champaign \affiliation[label2]organization=Department of Nuclear Engineering, North Carolina State University

1 Introduction

Best-estimate codes have been widely used in the nuclear system modeling and licensing process. These codes are designed to model all relevant physical processes in a realistic way and provide better insights into the accident progress. While a single calculation with a best-estimate code is considered the most accurate representation of reality, uncertainties in its results make it unsuitable for direct safety analysis. Consequently, Best-Estimate Plus Uncertainty (BEPU) methods are under fast development for the licensing process. BEPU approaches have been the focus of several OECD/NEA projects, such as UMS (Amri and Gulliford (2013)), BEMUSE (Perez et al. (2011)), etc. The results of these projects have shown that the uncertainty methods have a good maturity for the evaluation of the large-break loss-of-coolant accident (LB-LOCA) transients.

As highlighted in the final BEMUSE project report (Perez et al. (2011)), significant efforts must be directed towards quantifying input uncertainty—an essential component of probabilistic uncertainty analysis in BEPU approaches. Since most current BEPU methodologies rely on propagating uncertainties from input parameters to predictive model outputs, determining and justifying the uncertainty range associated with each uncertain parameter is crucial. Historically, the input uncertainty range is often determined by subjective expert judgment and therefore requires further development to provide a more scientific and rigorous method. This challenge was investigated in the international PREMIUM project (Skorek and Crecy (2013) Mendizábal et al. (2016)), while no consensus was reached by participants on guidelines and methodologies for input uncertainty quantification (UQ). The lack of consensus also motivated another OECD/NEA project SAPIUM (Systematic APproach for Input Uncertainty quantification Methodology) (Baccou et al. (2020)), which aims to develop a systematic approach for input UQ methodology in nuclear thermal-hydraulics (TH) codes.

The input uncertainties related to the numerical simulation of nuclear TH phenomena have multiple origins and can be classified into the different categories according to their origins (Smith (2013) Barth (2011) Wang (2020)):

-

1.

Numerical uncertainties arising from the schemes employed in solving partial differential equations (PDEs). These uncertainties may originate from temporal and spatial discretization, errors due to iterative convergence of approximation algorithms, or round-off errors. Discretization uncertainty has been studied extensively and a number of techniques have been proposed for modeling this uncertainty.

-

2.

Uncertainties in the physical properties of materials and working fluids. Variations in properties such as density, specific heat, thermal conductivity, speed of sound, thermal expansion, and others can impact simulation outcomes. Accurate knowledge of these properties is crucial for the mathematical modeling and computer simulation of associated heat and mass transport processes.

-

3.

Uncertainties in geometries, initial/boundary conditions. The geometry information of relevant objectives in a model may not be exactly known to us. This type of uncertainty can be obtained from uncertainty in measurements or the manufacture tolerance. Initial and boundary conditions imposed to models can be caused by the inaccuracy in measuring them during experiment. The initial and boundary conditions may include the power level, pressure, temperature, flow rate, wall roughness, etc.

-

4.

Uncertainties in physical phenomena. Uncertainty may arise due to lack of knowledge (epistemic uncertainty) in the formulation of the model or intentional simplifications of the model. In addition, empirical equations of state and constitutive equations (closure laws) utilized in two-fluid models or other fluid dynamics models may exhibit inherent probabilistic variations (aleatory uncertainty) in their parameters.

In this study, we focus on the uncertainties in physical phenomena present in the TRACE code (Bajorek et al. (2008)), which employs empirical correlations within the two-phase flow model to characterize transfer terms in balance equations. These correlations are obtained from different sets of experiments. However, their accuracy is subject to certain experimental conditions and may decrease due to different conditions. Thus, it is important to study the accuracy as well as the influence of those physical model correlations (Wang et al. (2019b)). As has been shown in many benchmark studies on BEPU applications (Glaeser et al. (2011) Wang et al. (2017)), these uncertain parameters may have significant influences on Quantity-of-Interests (QoIs), thus it is important to improve our knowledge their uncertainties. Inverse UQ (sometimes also called input UQ) methods can be used to estimate the statistics of those parameters given experimental data, and the obtained statistics will useful for more accurate uncertainty and validation analysis.

Addressing input uncertainty distributions in this work can be broadly characterized as solving an inverse problem in modeling and simulation. This involves estimating model input parameters (with uncertainty) by comparing model outputs to experimental data. The inverse problem is an important approach to provide insights into parameters that cannot be directly observed. It has been extensively applied in various disciplines, including data assimilation, engineering optimization, medical imaging, and geophysics (Tarantola (2005)). A common approach for addressing inverse problems in complex engineering systems is Bayesian inference. A comprehensive framework for input UQ for computer models, based on Bayesian formulation, was first introduced by Kennedy and O’Hagan (2001). In the filed of nuclear system TH, input UQ has mainly focused on the parametric uncertainty from closure equations in TH codes. w̧u2021comprehensive performed a comprehensive literature review on the IUQ methods for physical model parameters in nuclear system TH codes. This review paper summarized 12 existing IUQ methods from different research groups and compared them using various evaluation criteria.

The landscape of IUQ has been considerably shaped by the progressive advancements in machine learning (ML) and artificial intelligence (AI). These technologies have significantly improved the precision and dependability of simulation models in various sectors, tackling a multitude of intricate challenges Wang et al. (2023b). Their successful deployment spans several disciplines, including healthcare (Chen et al. (2019)), agriculture (Wu et al. (2022)), reliability engineering (Chen et al. (2020); Wang et al. (2024); Chen et al. (2017); Chen (2020)), industrial engineering (Chen et al. (2018)), and artificial intelligence (Liu et al. (2019); Liu and Neville (2023); Liu et al. (2024); Wu et al. (2024); Chen et al. (2023)). The adaptability and success of ML/AI approaches in these areas provide compelling evidence of their potential and impart crucial learnings for advancing IUQ initiatives within the nuclear power industry.

Previous IUQ research in the nuclear engineering domain has primarily utilized single-level Bayesian inference which uses Markov Chain Monte Carlo (MCMC) to explore the parameter posterior distributions. These approaches are often constrained to relatively small datasets. The calculated posterior distributions are valid only for the selected experimental cases and may vary when different datasets are used. To address this issue, this paper presents a hierarchical Bayesian modeling approach. Hierarchical Bayesian models are particularly relevant when dealing with observations that are organized into distinct groups (Gelman et al. (2013)). In such scenarios, calibration parameters may differ across groups, and employing traditional Bayesian inference methods may introduce substantial errors. Hierarchical models enable the definition and identification of “hyperparameters” to ensure that both “group” characteristics and “individual” characteristics are taken into account (Wang et al. (2023a)).

A practical challenge for inverse problems is the large computational cost due to the long-running best-estimate codes, and the large number of samples needed in MCMC algorithms. Accurate and fast-running surrogate models can be used to significantly reduce the computational cost. The general idea is to train the surrogate models using a limited number of full model (the expensive computer code) simulations, usually with a certain learning algorithm. Many types of surrogate models have been developed, such as Polynomial Chaos Expansion (PCE) and Stochastic Collocation (SC) used by Wu et al. (2017a), Gaussian Process (GP) model by Wu et al. (2018a) Wang et al. (2019a) and Wu et al. (2017c), Neural Networks by Liu et al. (2022) and Liu et al. (2021), etc. In this work, Polynomial Regression (PR) and GP are used as surrogate models and compared. The PR model might not be as accurate as other more complex surrogate models but it has proven to be sufficient for this work. The primary motivation for using PR is that it provides easily obtained gradient information of predictions, which is useful for the following gradient-based MCMC algorithm called No-U-Turn Sampler (NUTS) (Hoffman and Gelman (2014)). NUTS takes advantage of gradient information from the likelihood to achieve much faster convergence than traditional sampling methods, and it works well on high dimensional and complex posterior distributions (Salvatier et al. (2016)). The high-dimensional sampling space introduced by the hierarchical model requires the NUTS sampler to be used in this work.

The rest of the paper is organized as follows. Section 2 will give an overview of IUQ method and its essential components. Section 3 will introduce the hierarchical Bayesian framework and its details, and then in Section 4, the hierarchical framework is applied to a case study for TRACE physical model parameters using the BFBT benchmark steady-state void fraction data. Section 5 will be the summary and conclusions.

2 Overview of Inverse Uncertainty Quantification Methodology

2.1 Overview

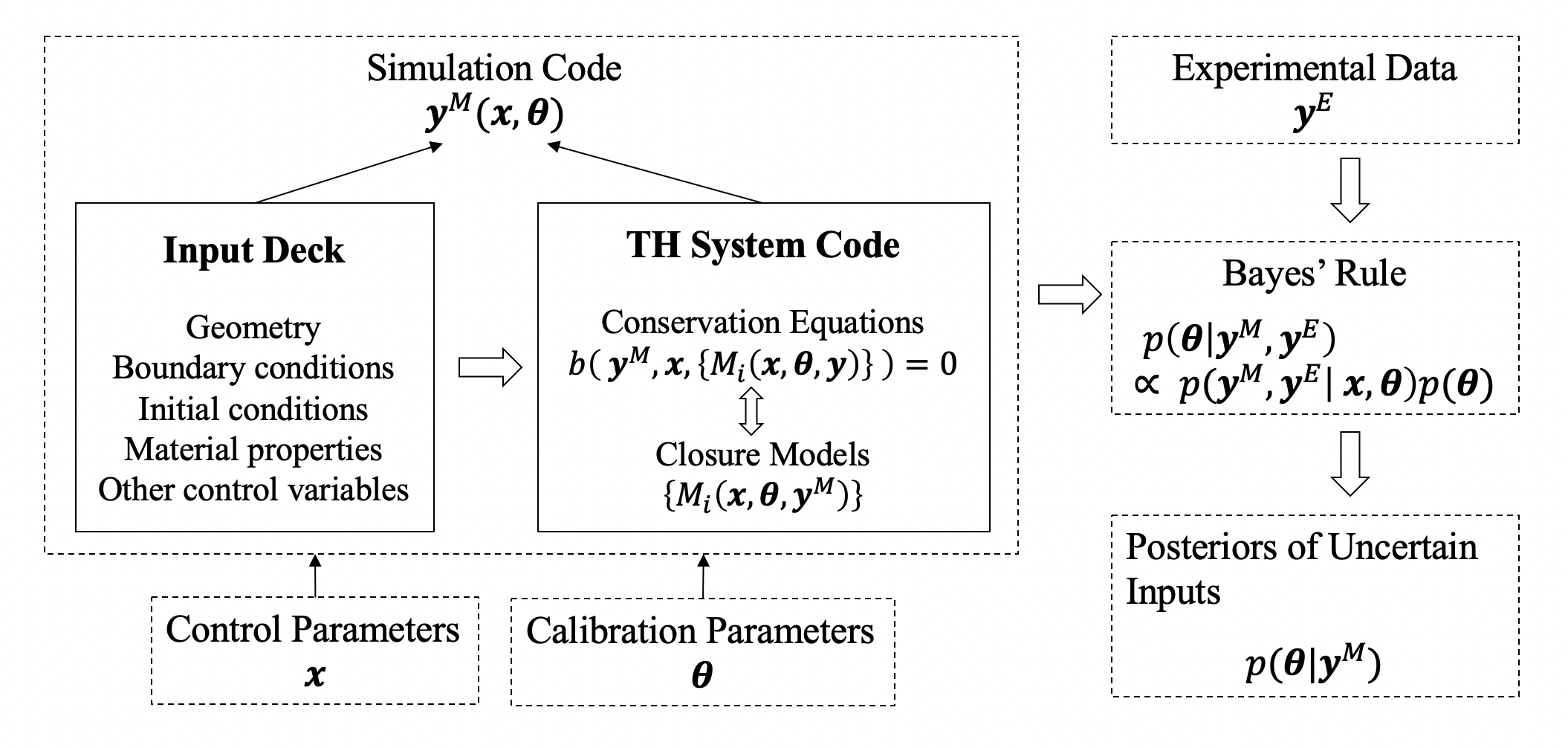

Figure 1 shows the major components used in the IUQ framework, where x represents the control parameters, such as boundary conditions, initial conditions, etc. represents calibration parameters, in this work it specifically represents the selected physical model parameters in the closure models of TH codes.

The input deck serves as the input for thermal-hydraulics (TH) codes, defining a specific system. It includes the geometrical configuration (i.e., nodalization), the materials and fluids involved, the initial and boundary conditions, and potentially the settings for the numerical solver. Control parameters x are selected from those specifications. The TH simulation codes TRACE consists of 6 conservation equations, which are then closed with additional set of closure models (Wicaksono (2018)). The output of the simulation code can be combined with corresponding experimental data in the Bayes’ rule, resulting in a joint posterior probability density distribution (PDF) for the selected inputs.

The detailed procedures of the IUQ framework can be organized into the following stages:

-

1.

Define the problem under investigation and select appropriate experimental data and simulation codes. In this study, the BFBT (Boiling Water Reactor Full-size Fine-mesh Bundle Test) benchmark is used as the basis, with corresponding TRACE models developed.

-

2.

Determine influential input parameters within the chosen TH codes.

-

3.

Conduct a two-step sensitivity analysis to identify significant input parameters. Initially, implement a relatively straightforward perturbation method to all parameters, selecting those that are active in the model. Subsequently, employ a more precise sensitivity analysis technique, Sobol indices, to identify the influential parameters. Surrogate models may be used in this stage.

-

4.

Develop a hierarchical Bayesian inference model to quantify the posteriors of uncertain inputs. This stage integrates experimental data with simulation outcomes to establish input distributions that result in improvement agreement with experimental data. Surrogate model and MCMC sampling algorithms are used in this stage.

-

5.

Validate the derived posteriors.

2.2 Model Updating Equation

A key assumption in the Bayesian IUQ framework is the model updating equation. Following the work of Kennedy and O’Hagan (2001), we represent the relationship between the computer model outputs and the observations in the equation:

| (1) |

where is the observation error that is usually assumed to be independent and identically distributed as . It should be noted that this assumption may not always hold in reality, especially in time-dependent problems (Wang et al. (2018b)). is the model discrepancy term, which is caused by incomplete or inaccurate physics employed in the model. Here, the parametric uncertainty is derived from the parameter, and other forms of uncertainties are incorporated in the model discrepancy term.

Following the model updating equation, the posterior PDF of the calibration parameters can be found using Bayes’ rule:

| (2) |

where is the prior distribution of the calibration parameters, and is the likelihood function. From equation 1, we know that follows a multivariate normal distribution. So the posterior can be written as:

| (3) |

The covariance matrix is defined as:

| (4) |

where is the experimental uncertainty, is the model uncertainty, and is the code uncertainty, introduced by using surrogate models to replace the full model. is the prior distribution of calibration parameters and can be treated as a non-informative uniform distribution over a certain range.

It can be very challenging to estimate the model discrepancy . In cases where model discrepancy is negligible, we can simply ignore the term. However, ignoring the model discrepancy when it does exist can cause over-fitting issue of the calibration parameters, meaning that the IUQ process will favor a distribution that yields the best agreement between the measurement data and the model simulation, rather than the “true” value. A method called the Modular Bayesian Approach (MBA) was developed to tackle the model discrepancy problem in IUQ (Wu et al. (2018b) Wu et al. (2018c) Wang et al. (2018a)).

2.3 Sensitivity Analysis

One question we need to answer at the very beginning of IUQ is the identification of input parameters requiring study. Sensitivity Analysis (SA) examines how uncertain inputs contribute to variations in model QoIs. There are typically two classes of SA: local SA and global SA (Saltelli (2002)). Local SA addresses the local impact of input variations, whereas global SA considers the entire variation range of inputs and can also provide insights into how interactions between input parameters influence QoIs. Generally, local SA is computationally less expensive than global SA; thus, in the IUQ framework, we use local SA for initial parameter selection and global SA for a more accurate study to determine the final list of inputs to be investigated.

Sobol indices method provides a straightforward measure of sensitivity in arbitrarily complex computational models. It is a variance-based method where the variance of the output is decomposed as a sum of contributions of each input variable and their combinations. This decomposition of variance is referred to as ANOVA (ANalysis Of VAriance). Sobol indices method has been successfully applied to many UQ related applications (Aly et al. (2019) Wang et al. (2017) Wu et al. (2017b)) and the details of the method will not be repeated in this paper. The calculation of Sobol indices in this paper will follow the samping-based approach outlined by Saltelli et al. (2010). This approach requires number of samples. is usually a value of several thousand for sufficient accuracy and is the dimension of the input, and GP surrogate model is used here due to a large number of code runs required.

2.4 Surrogate Models

Surrogate models, also known as metamodels or emulators, play a critical role in Bayesian calibration of computer models, particularly when dealing with computationally demanding simulations such as nuclear reactor analyses. These surrogate models are constructed using a limited number of full model runs at specifically chosen input parameter values, combined with a learning algorithm. Many types of surrogate models have been utilized in the Bayesian calibration process, Different types of surrogate models have different characteristics, so they may fit in different engineering scenarios. Factors to consider when selecting an appropriate surrogate model include input dimensionality, the non-linearity of the input-output relationship, and whether the problem is time-dependent or steady-state.

In this work, we primarily employ two types of surrogate models: GP and PR. GP has been widely used as surrogate models since the seminal work of Bayesian calibration by Kennedy and O’Hagan (2001) utilizing GP modeling. One notably characteristic of GP is that it not only provides a mean estimator at any untried input location, but also the corresponding variance estimator, which can serve as the code uncertainty term () in Equation 4. In other surrogate models, since this term is not available, we will need to treat the covariance term as a calibration parameter. Details of the GP surrogate model will not be repeated in this paper, interested readers may refer to Rasmussen (2004) for more details on GP and Wu et al. (2018b) Wang et al. (2019a) for similar IUQ applications using GP.

In addition to the GP model, as a more convenient but less accurate alternative, Polynomial Regression model is also used in the current work. The incentive of using PR derives from the requirement of gradient information in some advanced and efficient MCMC algorithms. As a parametric regression model, it is straightforward to calculate the gradient at any given location. The PR model with degree of for two variables has the following form:

| (5) |

where all dth order polynomials and interaction terms are considered. The parameter can be easily estimated by Ordinary Least Squares method, similar to other linear regression models.

The accuracy of the surrogate models needs to be assessed before use. We are particularly interested in the predictive accuracy at untried points, which can be achieved by quantifying the predictive error using an additional set of validation data. Various metrics, such as the Mean Squared Error, Leave-One-Out-Error, Coefficient of Determination, and the Mean Absolute Error, can be employed to measure the discrepancy between the predicted values and the actual values. By comparing these metrics, we can ascertain the effectiveness of the surrogate model in capturing the underlying patterns and relationships in the data, ensuring its reliability for subsequent analysis and applications.

3 Hierarchical Bayesian Framework for Inverse Uncertainty Quantification

Prior IUQ research in the field of nuclear engineering primarily employs single-level Bayesian inference models and MCMC algorithms to calculate the posterior distributions of specific parameters. However, these methods are often limited to relatively small datasets. The derived posterior distributions are only applicable to the chosen data and may fluctuate given different datasets due to the fact that the calibrated parameters are over-fitted to the selected data. This paper presents a hierarchical Bayesian model to address this limitation. The hierarchical Bayesian model is particularly relevant when we have observations that are organized in groups in which the calibration parameters may differ.

3.1 Hierarchical Bayesian Model

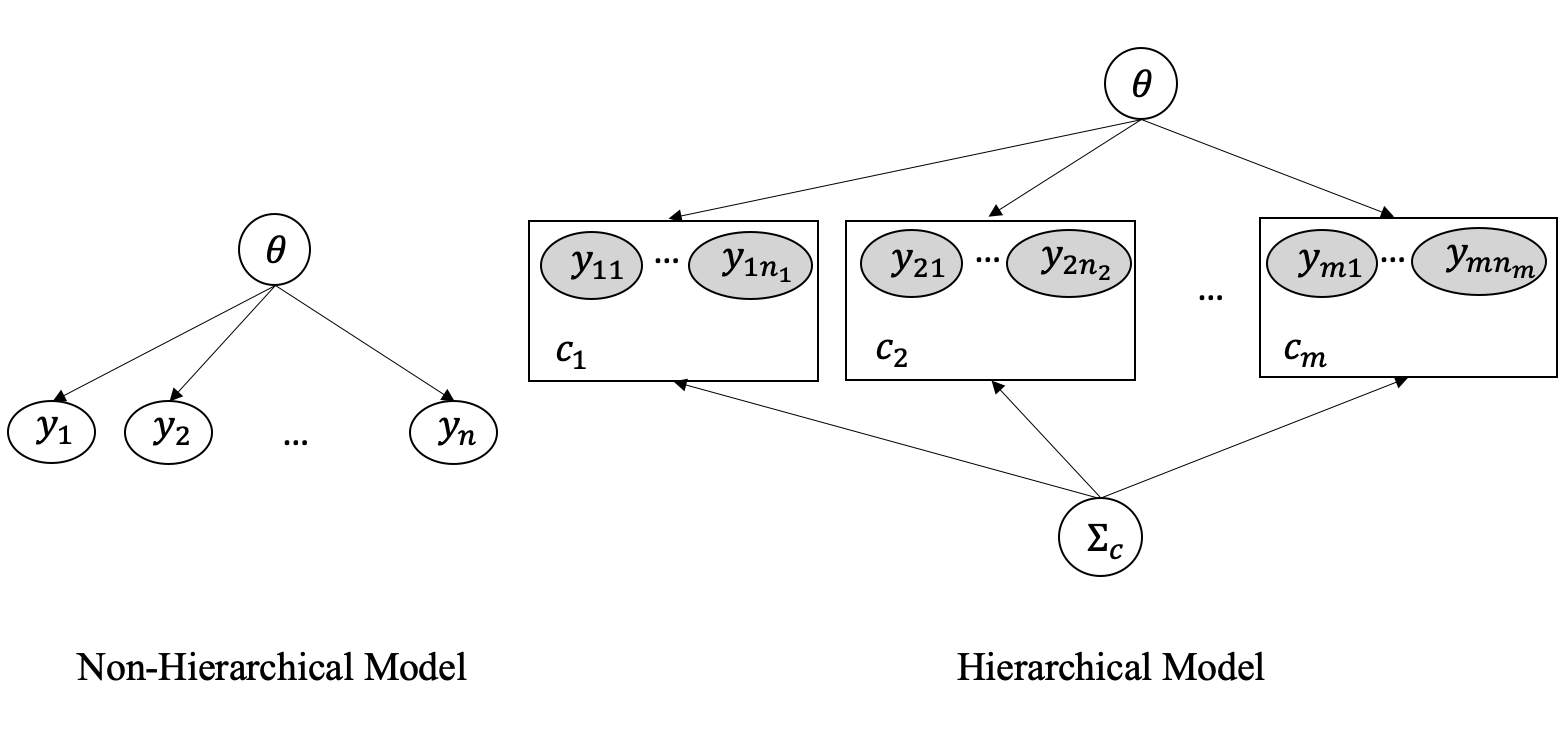

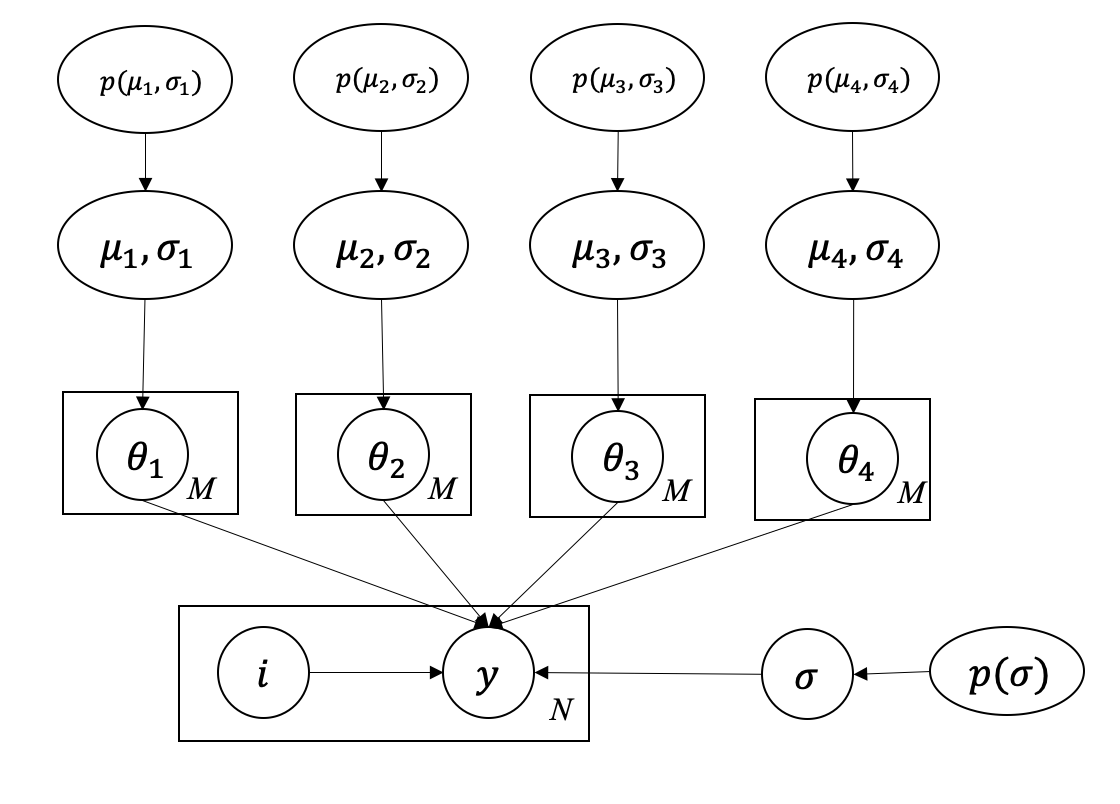

In the traditional Bayesian calibration setting, we have assumed that the observations are independent of each other so that the joint likelihood function can easily be formulated as the product of each individual likelihood. However, in many situations, such independence may not hold. Hierarchical model is also called a multi-level model or mixed-effect model. A simple illustration of the structure of the hierarchical model is shown in Figure 2. Observations are from different clusters determined by (1) cluster parameter which might be different among clusters, and (2) parameter shared across clusters. If the probability distribution of can be parameterized by , can be seen as the cluster-specific parameter, or per-group parameter, to be estimated.

Consider the simple example illustrated in Figure 2, where the observations are assumed to follow a normal distribution. In this scenario, the parameters to be estimated are the mean and variance. Let’s assume that all clusters share the same variance, denoted as , but have different means for each cluster. So the is a shared parameter ( in Figure 2) and is per-group parameter ( in Figure 2). Now we would like to specify the distribution over the cluster-specific . We can assume the distributions are also normal, or other task-specification distributions, without loss of generality. If normal distributions are assumed, two parameters (global mean and global standard deviation , correspond to the in Figure 2) would be required. So we can describe the parameters and observations as:

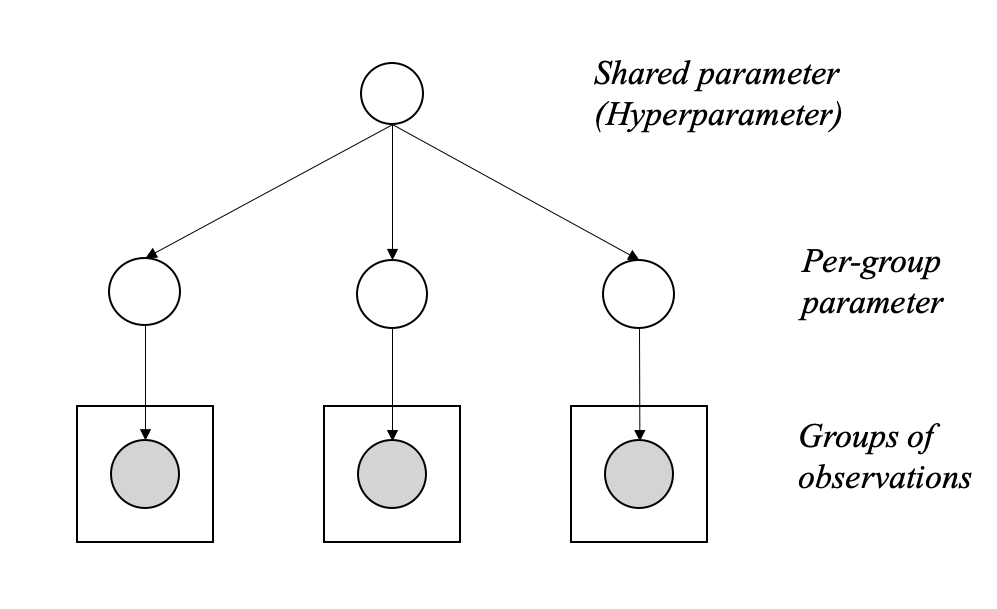

Now we can re-arrange the structure of the above hierarchical model to better illustrate the process of the two preceding equations: the per-group parameters are generated from shared parameters, and the observations are generated from per-group parameters. This relationship is shown in Figure 3.

In summary, the application of hierarchical models is highly beneficial when there’s an observable existence of group-level characteristics, and the data’s structure can be effectively modeled or assumed. When dealing with hierarchically structured data, utilizing a non-hierarchical model may not be appropriate for two reasons: (1) a model containing insufficient parameters may not successfully fit the dataset, and (2) a model with an abundance of parameters may cause over-fitting of the dataset. In contrast, hierarchical models, having a substantial number of parameters for fitting the data accurately and a population distribution to model the inter-dependencies of the parameters, can avoid the issue of over-fitting. This is achieved as the parameters are “constrained” by the population distribution, preventing them from exactly replicating the data.

3.2 Bayesian Inference in Hierarchical Models

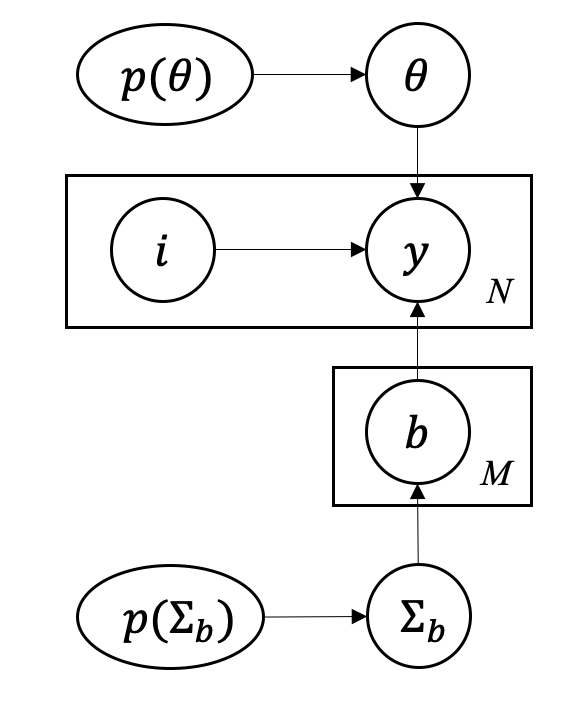

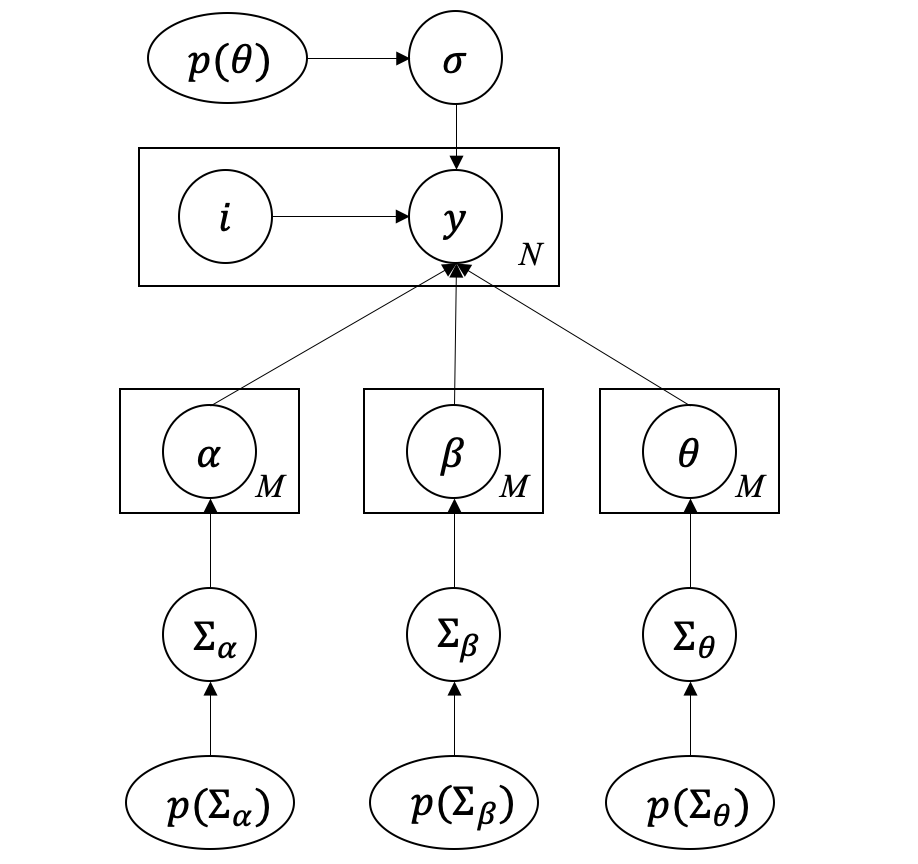

In this section we describe how to estimate parameters in a hierarchical Bayesian model structure. The hierarchical model structure in Figure 2 can be plotted into a more succinct and formal graphical representation, as shown in Figure 4. In the figure, is the prior distribution of , is the cluster index, is the observation, and there are observations in total. The per-group parameter is governed by its distribution , which has a prior distribution .

It should be noted that within this model, our primary goal is to learn about and , as opposed to the individual group-specific parameters , but also has to be estimated in order to estimate . So we need to marginalize over the parameters after we have obtained the joint posterior distributions. There are generally two approaches to estimate the posteriors, we can either use Maximum Likelihood Estimation (MLE) to obtain best-fit point estimations or use sampling to get full posterior distributions for these parameters.

In the MLE method, the likelihood of and , marginalized over , can be expressed as:

| (6) |

In case full posterior distributions are desired, we can integrate the prior distribution and apply Bayes’ rule. The prior distribution can be decomposed into the following form:

| (7) |

which is based on the fact that influences observations only through . So the marginalized joint posterior distribution can be expressed as:

| (8) |

Hierarchical models provide a flexible framework for modeling the complex interactions but come with higher computational requirements in inference. The parameter space to be estimated can be high-dimensional: we need to estimate cluster-specific parameter , which may contain up to hundreds. Traditional random-walk based MCMC algorithms such as Metropolis-Hastings (MH) or advanced MH algorithms tailored for relatively high dimensional problems still suffer from serious convergence issues because that the random behavior of the proposal function in very inefficient in high-dimension domains. Hamiltonian Monte Carlo (HMC) and No-U-Turn Sampler (NUTS), which will be introduced in the following section, would be useful in such high-dimensional conditions.

3.3 Markov Chain Monte Carlo sampling

Estimating the posterior in Equation 3 requires numerical sampling method as analytical solution is not available. Statistical tools that can be used here include MLE, Maximum A Posteriori (MAP), Variational Inference (VI), Laplace Approximation (LA), and various MCMC methods. MLE, MAP, LA all give point estimates and VI, LA are both approximate solutions rather than exact solutions. MCMC, although more time consuming, can estimate the exact posteriors for arbitrary distributions. It is a class of algorithms for sampling from an arbitrary probability distribution, for example, the probability distribution of equation 3, where the density function needs to be known only up to a normalizing constant.

The MH algorithm is one of the most widely used MCMC methods for sampling from a target distribution. In this approach, a family of potential transitions from one state to the next is determined using a proposal distribution. However, when the number of parameters to be estimated increases to 20 or 30, the random walk-based methods, such as the traditional MH algorithm, suffer from a very low acceptance rate. To address these limitations, the HMC method has been developed as an alternative to the conventional MH algorithm. HMC is an MCMC technique that avoids the random walk behavior and the sensitivity to correlated parameters by utilizing first-order gradient information to inform the sequence of steps taken during the sampling process (Andrieu and Thoms (2008) Hoffman and Gelman (2014)). By employing an auxiliary variable scheme, HMC can transform the problem of sampling from a target distribution into simulating Hamiltonian dynamics, effectively suppressing the random walk behavior. As a result, HMC offers a more efficient approach to sampling from high-dimensional distributions and provides a smoother transition between states, thus enhancing the performance of MCMC methods in scenarios with a large number of parameters to be estimated.

In HMC, an auxiliary momentum variable is introduced for each model model parameter . In most cases, these momentum variables are drawn independently from standard normal distribution, yielding the joint density , where is the logarithm of the joint density of the variables of interest . We can interpret this model in physical terms as a fictitious Hamiltonian system where is a particle’s position in D-dimensional space, is the momentum of that particle in the d-th dimension, is a position-dependent negative potential energy function, is the kinetic energy of the particle, and is the negative energy of the particle. The evolution of the Hamiltonian dynamics of this system can be simulated via the “leapfrog” integrator (Hoffman and Gelman (2014)), which will update the and accordingly. The details of the algorithm is shown in Algorithm 1.

Suppose we are drawing samples using the HMC algorithm. For each sample, we first re-sample the momentum variables from , which can be interpreted as a Gibbs sampling update. Then, the leapfrog function is used times to update the variables and , and a proposal position-momentum pair of and is generated. The proposal can be accepted or rejected according to the Metropolis algorithm. The leapfrog functions plays an important role in improving the efficiency of sampling. By taking leapfrog steps per sample, the proposal generated for has a high probability of being accepted. However, as we can see from the definition of leapfrog function, derivative of the joint density function of is required. The performance of the HMC also depends on hand-chosen values of and . If the two parameters are not properly selected, the Markov chain may be slow to move between regions of high and low densities.

NUTS is an extension of HMC that eliminates the need to manually tune these parameters in HMC. The idea is to use a recursive algorithm to build a set of likely candidate points that spans the target distribution, and stop automatically when it starts to retrace its steps. A detailed description about NUTS can be found in Hoffman and Gelman (2014). In this work, the NUTS is implemented with an open-source package PyMC3.8 (Salvatier et al. (2016)). A significant advantage of NUTS is that it can be used without any hand-tuning, making it suitable for a wide range of engineering applications. However, as can be seen in the algorithm, the gradient of the log-likelihood with respect to the sampling parameter is required. Therefore, the surrogate model should also be capable of providing the gradient of outputs if NUTS is to be employed.

3.4 Application to Synthetic Data

3.4.1 Problem Definition

The synthetic data example is constructed in a way that is similar to the thermal-hydraulics system code application using BFBT void fraction data, which we will be utilizing in a subsequent sections. Suppose there are three parameters to be estimated , and we know that observations are from a quadratic function ( is determined):

and there 100 groups of data, , each group contains 5 data, . The true values of have slight variability among different groups, they are from a common normal distribution:

Now the objective of this problem is to estimate the above distributions of , only knowing and the quadratic relationship between and . Conceptually, can be considered as the physical model parameters (PMPs), while the quadratic relationship can be likened to the complex simulations of nuclear system codes.

3.4.2 Hierarchical Structure

Given that the parameters differ across groups, a Bayesian hierarchical model is employed to address this issue. The hyperparameters of are defined as . A graphical representation of this model structure can be seen in Figure 5. denotes the hyperparameters of , which is and . Same notations apply for and . Consequently, we have a set of shared parameters () and cluster-specific parameters (). Relatively wide uniform priors are imposed on those shared parameters:

The input parameters can then be sampled from the normal distribution defined by :

In this formulation, we are faced with the challenge of estimating parameters in the Bayesian inference process. Utilizing random sampling-based MCMC algorithms would be impractical due to the high dimensionality of this problem. Therefore, to address this challenge, the NUTS method is employed using the PyMC3.8 package (Salvatier et al. (2016)).

3.4.3 Results and Discussions

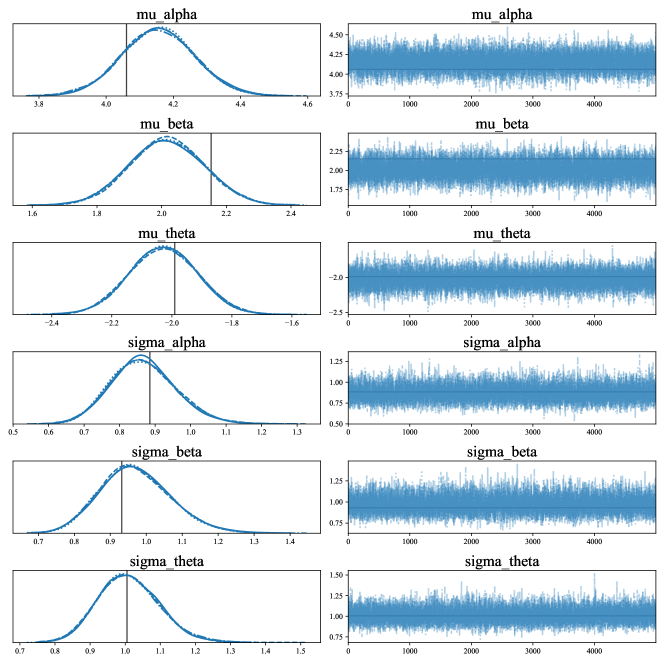

The posterior distributions of the hyper-parameters sampled by NUTS are shown in Figure 6. The vertical lines on the left side are the true values from the samples, and the figures on the right are the sampling trace plots. These trace plots demonstrate well-mixed chains, and the consistency across the four parallel sampling chains is obvious, as represented by the different line styles in the left figures. Although a minor discrepancy can be observed for the first two parameters, the true parameters remain within the corresponding probability distributions.

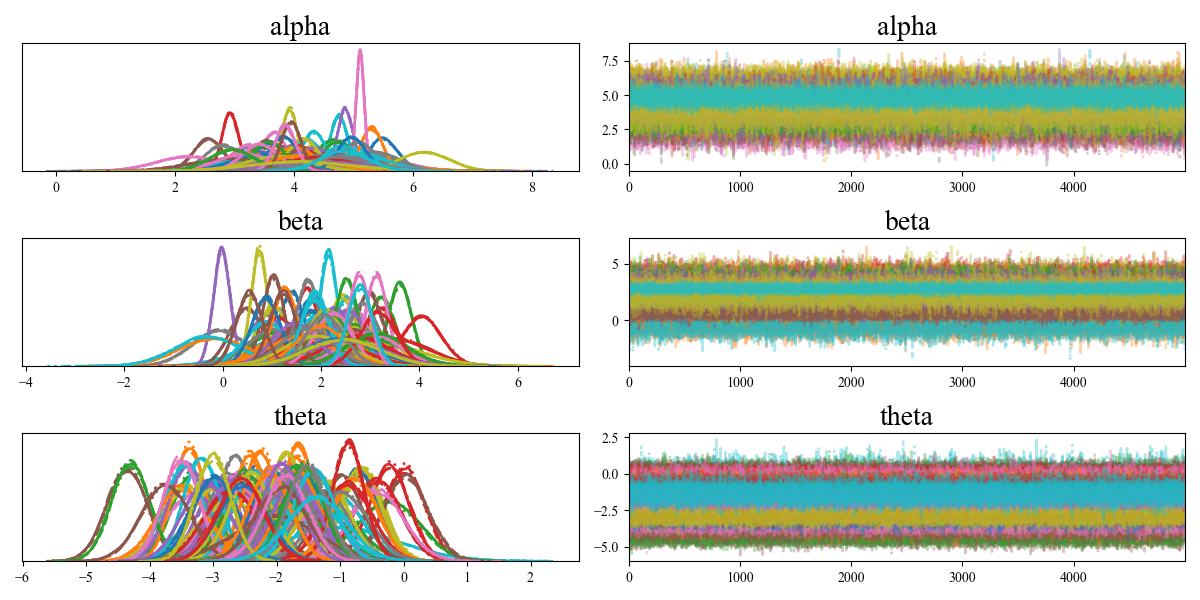

It is also interesting to see the calibration results for all cluster-specific parameters. Figure 7 shows all the posterior distributions for each group’s as well as their trace plots. It can be seen that these individual parameters do have relatively large differences. It can be reasonably inferred that if we were to build a model with many group-specific parameters, the model would “over-fit” since the estimated parameters will only be valid in certain groups. If we were to use a simple non-hierarchical model, the model would not be able to capture true distributions of the calibration parameters.

Figure 7 provides a closer examination of the IUQ results for all cluster-specific parameters, showcasing all posterior distributions for each group’s along with their trace plots. It is clear that these individual parameters exhibit considerable variability. This variability suggests that, if we constructed a model with numerous group-specific parameters, such a model would “over-fit“, as the estimated parameters would only be valid within certain groups. Consequently, a simple non-hierarchical model would not be able to capture the true distributions of the calibration parameters accurately.

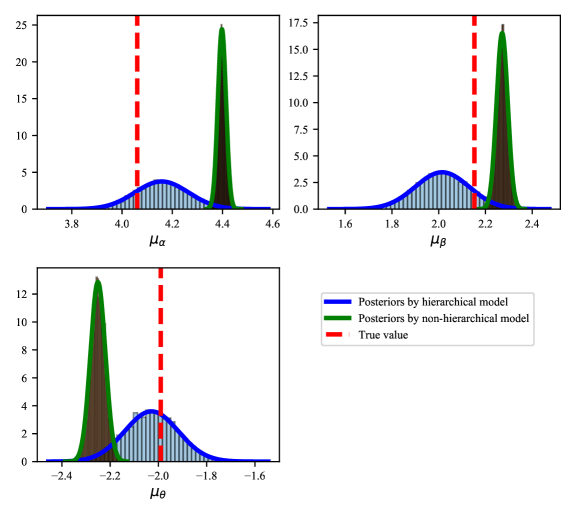

For comparison purposes, a simple non-hierarchical model for this toy example is also constructed. In this non-hierarchical model, three global parameters , and are estimated and no groups are involved. A comparison between the posterior distributions obtained by the hierarchical model and the non-hierarchical model is shown in Figure 8.

We can see that the non-hierarchical model struggles to handle the variability of the calibration parameters, resulting in inaccurate estimations. In contrast, the hierarchical model successfully includes the true values in its posterior distributions. This comparison demonstrates the effectiveness of hierarchical models when dealing with data that exhibits grouping or clustering.

4 Application to Thermal-Hydraulics System Code

In this section, we will apply the hierarchical Bayesian framework to IUQ problem for the PMPs in TRACE code, using the BFBT benchmark dataset as a practical case study.

4.1 BFBT Benchmark and TRACE simulations

The international OECD/NRC BWR full-size fine-mesh bundle test (BFBT) benchmark (Neykov et al. (2006)) was created to encourage advancement in sub-channel analysis of two-phase flow in rod bundles, which has great relevance to the nuclear reactor safety evaluation. The BFBT test program includes experiments performed under steady-state and transient conditions, with measurements for single and two-phase pressure losses, void fraction, and critical power. The benchmark has been widely used in the validation process of various computational approaches (Wang et al. (2019b), Borowiec et al. (2017) Wu et al. (2018c)).

The void fractions are measured at four elevations along the test section, three of them are measured by X-ray densitometer, and the upper one is measured by a X-ray CT scanner. The void fraction data measured from the lower to upper location are referred to as ‘Void Fraction 1’ to ‘Void Fraction 4’, respectively. BFBT benchmark includes various assembly types and in each assembly type, experiments were conducted at various boundary conditions and void fraction data were measured.

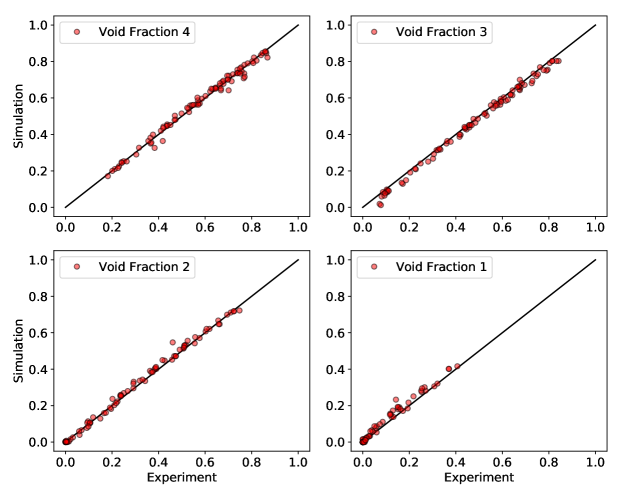

The BFBT benchmark consists of 5 assembly types in all. The Assembly-4 is chosen in the current work. It consists of 86 experimental cases under various boundary conditions (flow rate, inlet temperature, power, and inlet pressure). The TRACE model is constructed according to the experimental geometry and boundary conditions. A comparison between the simulated void fraction results and experimental measurements are shown in Figure 9. The experimental data on ‘Void Fraction 1’, ‘Void Fraction 2’, and ‘Void Fraction 3’ by densitometers are corrected according to Glück (2008). We can see that TRACE model results agree well with experimental data and no obvious model discrepancy exists.

4.2 Selection of input parameters

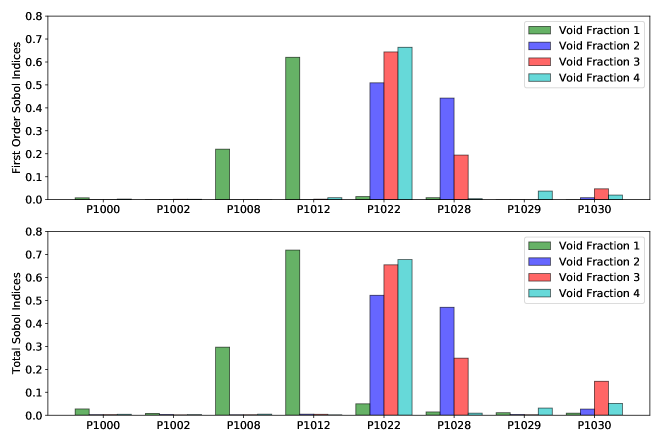

TRACE provides the option to adjust 36 physical model parameters (PMPs) by a multiplicative factor, however, not all of those parameters will be active in the BFBT bundle assembly model because some parameters involve phenomena that do not occur in BFBT benchmark, e.g. reflood. A simple perturbation method is firstly used to perturb each parameter in the range of while fixing other parameters. 50 uniform samples in that range are used to test the effect of this parameter on the simulated void fraction data. The resulting output variance is calculated for each parameter. The results show that most of the variances are 0 or very close to 0. Finally, eight parameters with variances larger than are selected and shown in Table 1.

| Parameter | coefficient Definition |

|---|---|

| P1000 | Liquid to interface bubbly-slug heat transfer |

| P1002 | Liquid to interface transition heat transfer |

| P1008 | Single phase liquid to wall heat transfer |

| P1012 | Subcooled boiling heat transfer |

| P1022 | Wall drag |

| P1028 | Interfacial drag (bubbly/slug rod bundle-bestion) |

| P1029 | Interfacial drag (bubbly/slug Vessel) |

| P1030 | Interfacial drag (annular/mist Vessel) |

The Sobol indices method is subsequently employed for an further screening, and the first and total Sobol indices are shown in Figure 10. The different colors represent the corresponding indices for a certain measurement location. We can see that four out of eight have more significant influences on the model outputs, so the four parameters P1008, P1012, P1022, and P1028 are selected in the sensitivity analysis step and will be treated as uncertain inputs.

4.3 Construction of surrogate models

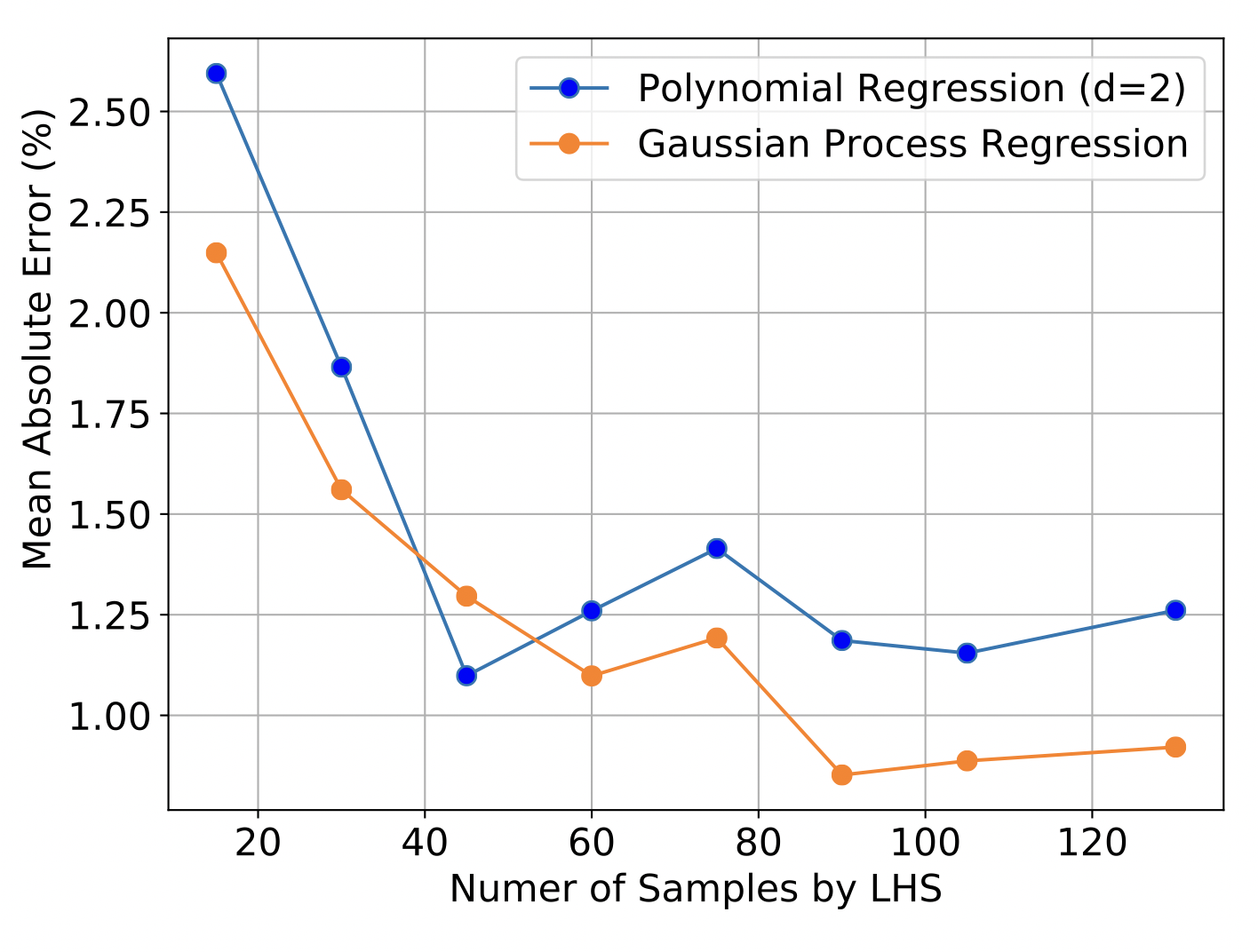

In this section, we provide the specifics of surrogate models that were introduced earlier. The task is to create a surrogate model with 4 inputs and 4 outputs. In order to determine the number of samples required for an accurate surrogate model, a convergence study is needed. Below we show a convergence study to find the sufficient number of Latin Hypercube Sampling (LHS) samples required by surrogates. Two types of surrogates are considered, GP and PR with a degree of 2. An additional 50 samples are taken as a validation set. Figure 11 displays the Mean Absolute Error (MAE). The graph indicates that the out-of-sample errors plateau once the sample number surpasses 100. Therefore, in this study, we choose 100 LHS samples to build the surrogate models. For the results presented below, PR model is used because they can provide convenient gradient information.

In order to determine the range of the input parameter for sampling, an iterative sampling procedure is used in this work. In the posterior sampling phase, it’s possible that the posterior distribution of the calibration parameter may exceed the specified prior range. This occurrence is particularly common in the context of certain PMPs, such as the interfacial drag coefficient, where deviations from their nominal value can be large. In these instances, the posterior distribution may be artificially truncated by the upper bound of the initial range.

To circumvent this issue, we have employed an iterative resampling procedure in Wang (2020). If the posterior distribution is truncated by the upper bound, this suggests that the true posterior distribution may exceed the current range. In such a scenario, it is necessary to extend the initial upper bound of this parameter to a higher value. Doing so ensures that the prior range can accommodate the final posterior distribution. This process involves performing new LHS of the original code, the construction of a surrogate model, and the application of MCMC sampling. As the calibration parameters selected in this paper are all multiplicative factors, the lower bound remains 0.

4.4 Hierarchical Model Structure

In TRACE, dimensionless multipliers are usually used because it provides a straightforward way to compare those coefficients with the nominal value of , and there is no need to calculate their absolute values. Traditional calibration methods implicitly assume that is a global variable for all experimental cases . This assumption greatly simplifies the problem being considered, however, it may be problematic in the context of closure models in TH codes. The closure models are derived from Separate Effects Tests and are strongly dependent on boundary conditions and flow regimes, thus the “true” parameter may have different distributions (from run to run over the physical experiments), resulting in different posteriors (including the uncertainty information as well as the best-fitting value information of the parameter) sampled by MCMC algorithms.

Ignoring this fact and sampling a single global variable might lead to unrealistic posteriors. An accurate way to treat this problem is to partition the experimental data and simulation outputs according to each correlation equation in a certain range of boundary conditions. However, the work will be tedious and is not meaningful in BEPU applications, because simple input distributions are desired rather than many input distributions dependent on other variables. As a middle ground between the two treatments, we propose to use hierarchical models to account for both similarities and differences among calibration parameters over all considered physical experiments.

The hierarchical model used in this work allows for the calibration parameters to vary for each experimental case , reflecting the different boundary conditions. At the same time, we think that those parameters (multipliers) should not display substantial disparities and they can follow a shared distribution, for example, normal distribution. This treatment permits variability for input parameters, which is more realistic and can help reduce the over-fitting issue.

In the hierarchical model proposed for this application, we assume that each experimental case forms a ‘group‘ or ‘cluster‘ since they have different boundary conditions but they have the same assembly type and similar underlying physics. Thus, we can view the 86 experimental cases as forming 86 distinct clusters. Furthermore, each group includes four measurements, leading to the model structure shown in Figure 12.

In Figure 12, represent our selected four PMPs, ‘P1008’, ‘P1012’, ’P1022’, ’P1028’, respectively. M is the number of groups and N is the total number of measurement points. In this case because there are four measurements in each group. is measurement error. For each calibration parameter , since they can be different across groups, so we suppose they are from a common normal distribution:

This approach accounts for possible variations of across different experiments that could arise due to potential errors or discrepancies. It is worth noting that some experimental data may exhibit a significant discrepancy for reasons that remain unknown or unaccounted for. Such data points could disproportionately influence the likelihood function, thereby making the posterior distribution highly sensitive to these points. We can view these points as “outliers”. By employing a hierarchical framework, we can focus on the distribution of , making the model robust against outliers. Adding suitable prior information to and allows us to efficiently perform Bayesian inference for these parameters using the NUTS algorithm.

4.5 Comparison between the Non-Hierarchical and Hierarchical Models

4.5.1 Results of the Non-hierarchical Model

In order to provide a basis for comparison, we first display the results derived from a non-hierarchical model. Here, only four input parameters are required to estimate uncertainty in the Bayesian model. We use the NUTS sampler to sample the posterior distribution, with the polynomial regression model serving as the foundation. Uniform priors on the interval (0,3) are utilized for all four parameters according to Equation 3. For the NUTS sampling algorithm, 100,000 samples are generated and the first 20,000 samples are discarded as burn-in. Multiple chains are used to check the convergence of the Markov chain. It is worth to mention that the non-hierarchical model doesn’t require such many samples, this is only used for the purpose of comparing with hierarchical model.

As we mentioned before, the non-hierarchical model might produce different outcomes when provided with various datasets. Some “outliers” may have significant impacts on the results because they may have larger errors thus create more “weight” on the likelihood function of the posterior formulation. This effect is evident when we partition the data into two sets.

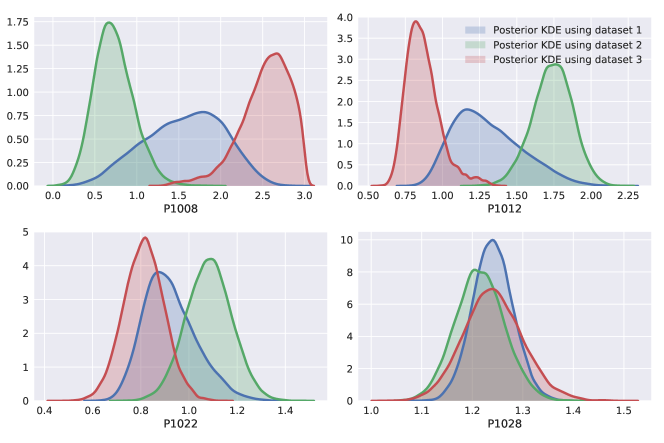

Figure 13 presents the outcomes obtained using a non-hierarchical model with varied datasets. Dataset 1 includes all the data, while Dataset 2 consists of a randomly selected half of Dataset 1’s data. The posterior resulting from Dataset 2 yields the green distribution plot. Dataset 3 contains the remaining data from Dataset 1 and is represented by the red distribution plot. This figure clearly illustrates the significant effect of data selection using the traditional method.

4.5.2 Results of the Hierarchical Model

In the hierarchical model, the priors of the parameters are formulated as:

| (9) | ||||

Other parameters (P1012, P1022, and P1028) are the same and will not be repeated here. It is worth to mention that the range for is quite large considering that the void fractions are all lower than .

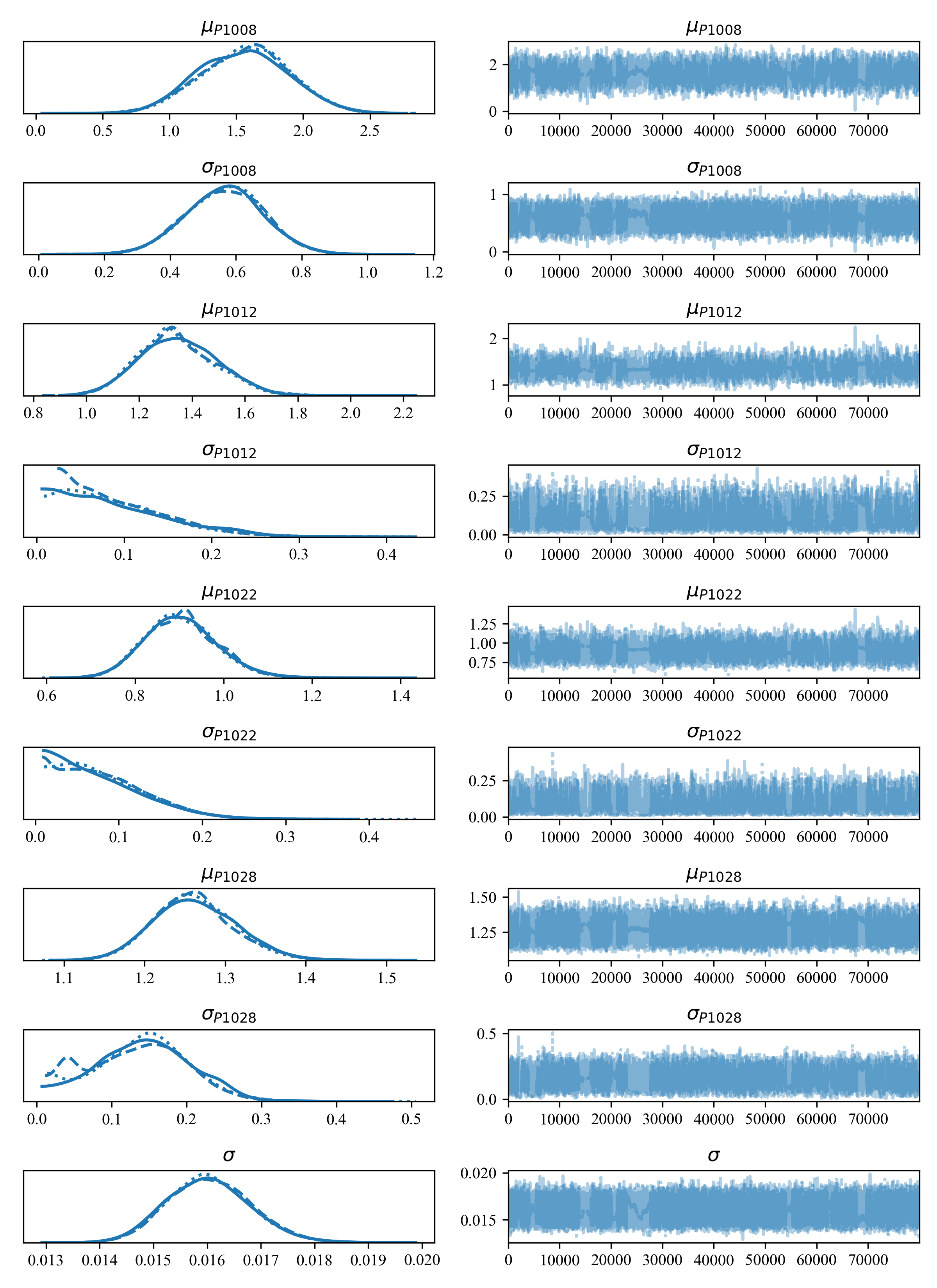

The posteriors and trace plots of all shared parameters () calculated by this hierarchical structure using NUTS are shown in Figure 14. 100,000 steps are used in the MCMC algorithm and the first 20,000 are used as the burn-in period to help convergence. We can see that multiple chains (indicated by different line styles) show similar results and good convergences are achieved. The last parameter in Figure 14 is the total variance term in the model updating equation. We can see its posterior mean corresponds to a 4% void fraction error, which is consistent with the reported measurement error in BFBT benchmark.

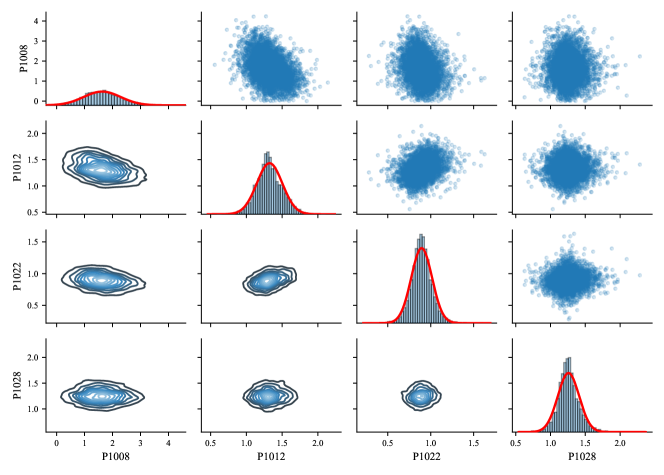

Now we can use these parameters in Figure 14 to generate samples of the calibration parameters. Note that this is different from the posteriors of cluster-specific calibration parameters because we are not interested in obtaining a distribution for each experimental case. In BEPU applications, we are more interested in a single distribution to describe a parameter. This can be done by the same data generating process shown in Equation 9. For example, recall that the samples of are generated from . samples are firstly drawn from the posterior of . Then, for each sample, a random normal number is generated where the mean and standard deviation are based on these two parameter. The total of these numbers will form the posterior of calibration parameters. Take and the posteriors as well as pair-wise distributions are shown in Figure 15. The red curves are fitted normal distributions, and the statistics of the fitted distribution for the four parameters are shown in Table LABEL:tab:5fitted. The fitted normal distribution will be very convenient in future UQ or SA. The pair-wise distributions in Figure 15 show positive correlation between P1008 and P1012, and negative correlation between P1012 and P1022. Further studies focusing on the meaning and impacts of input correlations in the calibration process are required in future.

| Parameters | Distributions | Dist. Parameter 1 | Dist. Parameter 2 |

|---|---|---|---|

| Normal | |||

| Normal | |||

| Normal | |||

| Normal |

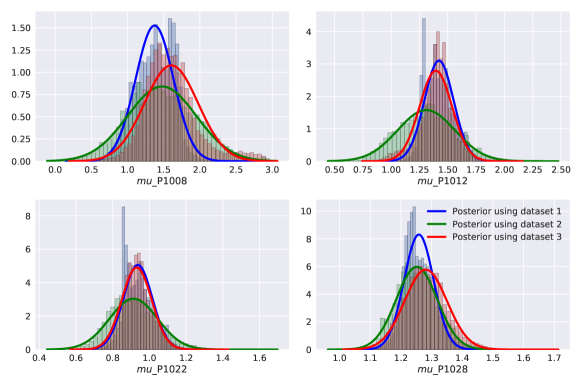

As a comparison to Figure 13 by the non-hierarchical model, Figure 16 shows the posteriors using the same 3 datasets described in the previous section. We can see that the hierarchical model is significantly more robust against the impact of data selection. This resilience can be attributed to the fact that the hierarchical model is largely immune to the effects of outliers. If we assume a global variable, every single data point contributes equally to the likelihood. This can make the likelihood particularly sensitive to extreme data. In contrast, the hierarchical model permits variability within calibration parameters, making it less vulnerable to outliers and capable of producing consistent results given sufficient data.

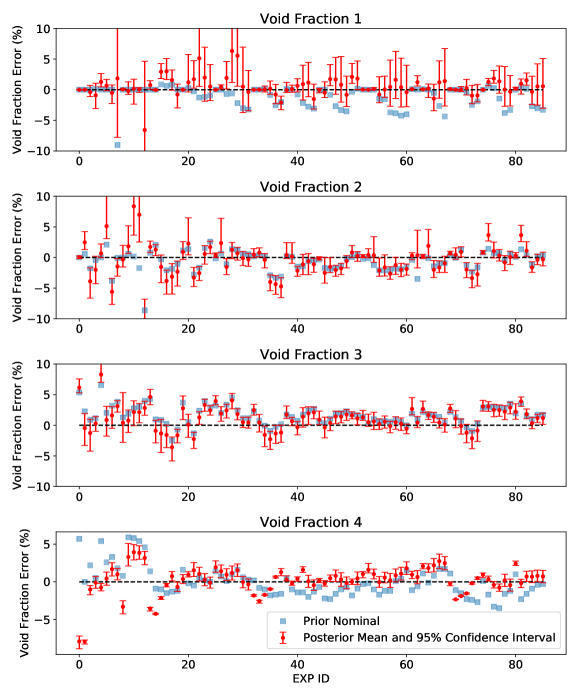

The validity of the obtained posteriors can be confirmed through a process known as a posterior predictive check (PPC). Essentially, this involves conducting a forward Uncertainty Quantification (UQ) process: employing the posteriors as input distributions and propogating these uncertainties through the computational model. This enables us to evaluate the mean and the confidence interval of the model’s responses for validation.

Figure 17 shows the validation of the posteriors. The input samples were drawn from the fitted normal distribution shown in Table LABEL:tab:5fitted. The confidence interval can be seen as the uncertainties in the model responses caused by the parametric uncertainty. We can see that the Void Fraction 1 has relatively larger uncertainty than other locations, and experimental cases with smaller IDs generally have larger uncertainties than those with larger experimental IDs. This can be related to the fact that the inlet pressure increases with the experimental ID, and it is widely known that the TH simulations under low pressures can be problematic.

The posteriors’ validity is further confirmed in Figure 17 by the improved agreement between simulation results and experimental data. This improvement is readily observable from the Void Fraction 1 and Void Fraction 4. The discrepancies between the experiment and two models (the original model and the calibrated model) are reported in Table 3. The original model employs the nominal value of calibration parameters (1.0), and the calibrated model uses the posterior samples of calibration parameters. As shown in Table 3, the calibrated model shows a better alignment than the original model for 3 out of 4 measurement locations.

| Error types | QoIs | Original model | Calibrated model |

|---|---|---|---|

| Mean Squared Error [%] | Void Fraction 1 | 3.93 | 2.67 |

| Void Fraction 2 | 2.88 | 3.51 | |

| Void Fraction 3 | 5.09 | 4.77 | |

| Void Fraction 4 | 4.03 | 3.77 | |

| Mean Absolute Error [%] | Void Fraction 1 | 1.18 | 0.99 |

| Void Fraction 2 | 1.19 | 1.60 | |

| Void Fraction 3 | 1.84 | 1.71 | |

| Void Fraction 4 | 1.51 | 1.31 |

5 Conclusions

This work aims at developing a systematic approach for quantifying the input parametric uncertainties in nuclear TH codes to address the “lack of input uncertainty information” issue using a novel hierarchical Bayesian model. The traditional single-level IUQ approaches are often constrained to relatively small datasets and the calculated posterior distributions are applicable only for the selected experimental cases and may vary when different datasets are used. Outliers also have relative large impacts on the single-level IUQ approach. The proposed hierarchical model considers the variability of calibration parameters and assumes that each calibration parameter is generated from a common population distribution. Using the hierarchical model can help make the model robust against outliers and avoid over-fitting.

The hierarchical model in IUQ is beneficial for nuclear TH applications because the PMPs exhibit group-specific behaviours that require hierarchical structures. The proposed approach is applied to a BFBT benchmark dataset using the TRACE code. In order to deal with the high dimension calibration problem under the hierarchical model, NUTS is used to more efficiently sample the posterior distribution. PPC is used to verify that hierarchical models deliver better alignment with experimental data compared to the original model. Additionally, the IUQ results obtained from the hierarchical model were found to be more robust against outliers and can provide consistent results irrespective of data selection. The hierarchical model also shows a promising approach for generalizing to larger databases with broad ranges of experimental conditions and different geometric setups.

In the next steps, we intend to expand the capacity of our current IUQ framework to accommodate a larger pool of observations. Nevertheless, the computational demands posed by the hierarchical model and the MCMC algorithms represent a significant bottleneck, particularly when the hierarchical structure involves hundreds of groups. More efficient MCMC algorithms such as Variational Inference should be explored and validated. In addition, model discrepancy always poses challenges for IUQ of computer code and Modular Bayesian Approach can be useful when model discrepancy exists. In future works, we need to consider a combination of the Modular Bayesian approach and hierarchical model to in order to apply the IUQ framework for broader applications when model discrepancy exists.

References

- Aly et al. (2019) Aly, Z., Casagranda, A., Pastore, G., Brown, N.R., 2019. Variance-based sensitivity analysis applied to the hydrogen migration and redistribution model in bison. part ii: Uncertainty quantification and optimization. Journal of Nuclear Materials 523, 478–489.

- Amri and Gulliford (2013) Amri, A., Gulliford, J., 2013. Overview of OECD/NEA BEPU Programmes. Technical Report.

- Andrieu and Thoms (2008) Andrieu, C., Thoms, J., 2008. A tutorial on adaptive mcmc. Statistics and computing 18, 343–373.

- Baccou et al. (2020) Baccou, J., Zhang, J., Fillion, P., Damblin, G., Petruzzi, A., Mendizábal, R., Reventos, F., Skorek, T., Couplet, M., Iooss, B., et al., 2020. Sapium: A generic framework for a practical and transparent quantification of thermal-hydraulic code model input uncertainty. Nuclear Science and Engineering 194, 721–736.

- Bajorek et al. (2008) Bajorek, S., et al., 2008. Trace v5. 0 theory manual, field equations, solution methods and physical models. United States Nuclear Regulatory Commission .

- Barth (2011) Barth, T., 2011. A brief overview of uncertainty quantification and error estimation in numerical simulation. NASA Ames Research Center, NASA Report .

- Borowiec et al. (2017) Borowiec, K., Wang, C., Kozlowski, T., Brooks, C.S., 2017. Uncertainty quantification for steady-state psbt benchmark using surrogate models. Transactions of the American Nuclear Society 117, 119–122.

- Chen (2020) Chen, S., 2020. Some recent advances in design of bayesian binomial reliability demonstration tests. University of South Florida.

- Chen et al. (2019) Chen, S., Kong, N., Sun, X., Meng, H., Li, M., 2019. Claims data-driven modeling of hospital time-to-readmission risk with latent heterogeneity. Health care management science 22, 156–179.

- Chen et al. (2017) Chen, S., Lu, L., Li, M., 2017. Multi-state reliability demonstration tests. Quality Engineering 29, 431–445.

- Chen et al. (2018) Chen, S., Lu, L., Xiang, Y., Lu, Q., Li, M., 2018. A data heterogeneity modeling and quantification approach for field pre-assessment of chloride-induced corrosion in aging infrastructures. Reliability Engineering & System Safety 171, 123–135.

- Chen et al. (2020) Chen, S., Lu, L., Zhang, Q., Li, M., 2020. Optimal binomial reliability demonstration tests design under acceptance decision uncertainty. Quality Engineering 32, 492–508.

- Chen et al. (2023) Chen, S., Wu, J., Hovakimyan, N., Yao, H., 2023. Recontab: Regularized contrastive representation learning for tabular data. arXiv preprint arXiv:2310.18541 .

- Gelman et al. (2013) Gelman, A., Carlin, J.B., Stern, H.S., Dunson, D.B., Vehtari, A., Rubin, D.B., 2013. Bayesian data analysis. CRC press.

- Glaeser et al. (2011) Glaeser, H., Bazin, P., Baccou, J., Chojnacki, E., Destercke, S., 2011. Bemuse phase vi report, status report on the area, classification of the methods, conclusions and recommendations. Nuclear Energy Agency Committee on the Safety of Nuclear Installations .

- Glück (2008) Glück, M., 2008. Validation of the sub-channel code f-cobra-tf: Part ii. recalculation of void measurements. Nuclear Engineering and Design 238, 2317–2327.

- Hoffman and Gelman (2014) Hoffman, M.D., Gelman, A., 2014. The no-u-turn sampler: adaptively setting path lengths in hamiltonian monte carlo. Journal of Machine Learning Research 15, 1593–1623.

- Kass et al. (1998) Kass, R.E., Carlin, B.P., Gelman, A., Neal, R.M., 1998. Markov chain monte carlo in practice: a roundtable discussion. The American Statistician 52, 93–100.

- Kennedy and O’Hagan (2001) Kennedy, M.C., O’Hagan, A., 2001. Bayesian calibration of computer models. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 63, 425–464.

- Liu and Neville (2023) Liu, J., Neville, J., 2023. Stationary algorithmic balancing for dynamic email re-ranking problem, in: Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, pp. 4527–4538.

- Liu et al. (2024) Liu, J., Yang, T., Neville, J., 2024. Cliqueparcel: An approach for batching llm prompts that jointly optimizes efficiency and faithfulness. arXiv preprint arXiv:2402.14833 .

- Liu et al. (2019) Liu, J., Yuan, Q., Yang, C., Huang, H., Zhang, C., Yu, P., 2019. Dapred: Dynamic attention location prediction with long-short term movement regularity .

- Liu et al. (2022) Liu, Y., Hu, R., Zou, L., Nunez, D., 2022. Sam-ml: Integrating data-driven closure with nuclear system code sam for improved modeling capability. Nuclear Engineering and Design 400, 112059.

- Liu et al. (2021) Liu, Y., Wang, D., Sun, X., Dinh, N., Hu, R., 2021. Uncertainty quantification for multiphase-cfd simulations of bubbly flows: a machine learning-based bayesian approach supported by high-resolution experiments. Reliability Engineering & System Safety 212, 107636.

- Mendizábal et al. (2016) Mendizábal, R., de Alfonso, E., Freixa, J., Reventós, F., 2016. Oecd/nea premium benchmark final report.

- Neykov et al. (2006) Neykov, B., Aydogan, F., Hochreiter, L., Ivanov, K., Utsuno, H., Kasahara, F., Sartori, E., Martin, M., 2006. Nupec bwr full-size fine-mesh bundle test (bfbt) benchmark. OECD Papers 6, 1–132.

- Perez et al. (2011) Perez, M., Reventos, F., Batet, L., Guba, A., Tóth, I., Mieusset, T., Bazin, P., De Crécy, A., Borisov, S., Skorek, T., et al., 2011. Uncertainty and sensitivity analysis of a lbloca in a pwr nuclear power plant: Results of the phase v of the bemuse programme. Nuclear Engineering and Design 241, 4206–4222.

- Rasmussen (2004) Rasmussen, C.E., 2004. Gaussian processes in machine learning, in: Advanced lectures on machine learning. Springer, pp. 63–71.

- Saltelli (2002) Saltelli, A., 2002. Making best use of model evaluations to compute sensitivity indices. Computer physics communications 145, 280–297.

- Saltelli et al. (2010) Saltelli, A., Annoni, P., Azzini, I., Campolongo, F., Ratto, M., Tarantola, S., 2010. Variance based sensitivity analysis of model output. design and estimator for the total sensitivity index. Computer Physics Communications 181, 259–270.

- Salvatier et al. (2016) Salvatier, J., Wiecki, T.V., Fonnesbeck, C., 2016. Probabilistic programming in python using pymc3. PeerJ Computer Science 2, e55.

- Skorek and Crecy (2013) Skorek, T., Crecy, A.d., 2013. PREMIUM-Benchmark on the quantification of the uncertainty of the physical models in the system thermal-hydraulic codes. Technical Report.

- Smith (2013) Smith, R.C., 2013. Uncertainty quantification: theory, implementation, and applications. volume 12. Siam.

- Tarantola (2005) Tarantola, A., 2005. Inverse problem theory and methods for model parameter estimation. volume 89. siam.

- Wang et al. (2024) Wang, B., Lu, L., Chen, S., Li, M., 2024. Optimal test design for reliability demonstration under multi-stage acceptance uncertainties. Quality Engineering 36, 91–104.

- Wang (2020) Wang, C., 2020. A hierarchical Bayesian calibration framework for quantifying input uncertainties in thermal-hydraulics simulation models. Ph.D. thesis.

- Wang et al. (2018a) Wang, C., Wu, X., Borowiec, K., Kozlowski, T., 2018a. Bayesian calibration and uncertainty quantification for trace based on psbt benchmark. Transactions of the American Nuclear Society 118, 419–422.

- Wang et al. (2017) Wang, C., Wu, X., Kozlowski, T., 2017. Sensitivity and uncertainty analysis of trace physical model parameters based on psbt benchmark using gaussian process emulator. Proc. 17th Int. Topl. Mtg. Nuclear Reactor Thermal Hydraulics (NURETH-17) , 3–8.

- Wang et al. (2018b) Wang, C., Wu, X., Kozlowski, T., 2018b. Surrogate-based bayesian calibration of thermal-hydraulics models based on psbt time-dependent benchmark data, in: Proc. ANS Best Estimate Plus Uncertainty International Conference, Real Collegio, Lucca, Italy.

- Wang et al. (2019a) Wang, C., Wu, X., Kozlowski, T., 2019a. Gaussian process–based inverse uncertainty quantification for trace physical model parameters using steady-state psbt benchmark. Nuclear Science and Engineering 193, 100–114.

- Wang et al. (2019b) Wang, C., Wu, X., Kozlowski, T., 2019b. Inverse uncertainty quantification by hierarchical bayesian inference for trace physical model parameters based on bfbt benchmark. Proceedings of NURETH-2019, Portland, Oregon, USA .

- Wang et al. (2023a) Wang, C., Wu, X., Xie, Z., Kozlowski, T., 2023a. Scalable inverse uncertainty quantification by hierarchical bayesian modeling and variational inference. Energies 16, 7664.

- Wang et al. (2023b) Wang, H., Fu, T., Du, Y., Gao, W., Huang, K., Liu, Z., Chandak, P., Liu, S., Van Katwyk, P., Deac, A., et al., 2023b. Scientific discovery in the age of artificial intelligence. Nature 620, 47–60.

- Wicaksono (2018) Wicaksono, D.C., 2018. Bayesian Uncertainty Quantification of Physical Models in Thermal-Hydraulics System Codes. Technical Report. EPFL.

- Wu et al. (2024) Wu, J., Chen, S., Zhao, Q., Sergazinov, R., Li, C., Liu, S., Zhao, C., Xie, T., Guo, H., Ji, C., et al., 2024. Switchtab: Switched autoencoders are effective tabular learners. arXiv preprint arXiv:2401.02013 .

- Wu et al. (2022) Wu, J., Tao, R., Zhao, P., Martin, N.F., Hovakimyan, N., 2022. Optimizing nitrogen management with deep reinforcement learning and crop simulations, in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 1712–1720.

- Wu et al. (2018a) Wu, X., Kozlowski, T., Meidani, H., 2018a. Kriging-based inverse uncertainty quantification of nuclear fuel performance code bison fission gas release model using time series measurement data. Reliability Engineering & System Safety 169, 422–436.

- Wu et al. (2018b) Wu, X., Kozlowski, T., Meidani, H., Shirvan, K., 2018b. Inverse uncertainty quantification using the modular bayesian approach based on gaussian process, part 1: Theory. Nuclear Engineering and Design 335, 339–355.

- Wu et al. (2018c) Wu, X., Kozlowski, T., Meidani, H., Shirvan, K., 2018c. Inverse uncertainty quantification using the modular bayesian approach based on gaussian process, part 2: Application to trace. Nuclear Engineering and Design 335, 417–431.

- Wu et al. (2017a) Wu, X., Mui, T., Hu, G., Meidani, H., Kozlowski, T., 2017a. Inverse uncertainty quantification of trace physical model parameters using sparse gird stochastic collocation surrogate model. Nuclear Engineering and Design 319, 185–200.

- Wu et al. (2017b) Wu, X., Wang, C., Kozlowski, T., 2017b. Global sensitivity analysis of trace physical model parameters based on bfbt benchmark, in: Proceedings of the MC-2017, International Conference on Mathematics Computational Methods Applied to Nuclear Science Engineering, Jeju, Korean.

- Wu et al. (2017c) Wu, X., Wang, C., Kozlowski, T., 2017c. Kriging-based surrogate models for uncertainty quantification and sensitivity analysis, in: Proceedings of the MC-2017, International Conference on Mathematics Computational Methods Applied to Nuclear Science Engineering, Jeju, Korean.