Intrinsically Motivated Self-supervised Learning in Reinforcement Learning

Abstract

In vision-based reinforcement learning (RL) tasks, it is prevalent to assign auxiliary tasks with a surrogate self-supervised loss so as to obtain more semantic representations and improve sample efficiency. However, abundant information in self-supervised auxiliary tasks has been disregarded, since the representation learning part and the decision-making part are separated. To sufficiently utilize information in auxiliary tasks, we present a simple yet effective idea to employ self-supervised loss as an intrinsic reward, called Intrinsically Motivated Self-Supervised learning in Reinforcement learning (IM-SSR). We formally show that the self-supervised loss can be decomposed as exploration for novel states and robustness improvement from nuisance elimination. IM-SSR can be effortlessly plugged into any reinforcement learning with self-supervised auxiliary objectives with nearly no additional cost. Combined with IM-SSR, the previous underlying algorithms achieve salient improvements on both sample efficiency and generalization in various vision-based robotics tasks from the DeepMind Control Suite, especially when the reward signal is sparse.

I Introduction

Reinforcement learning has achieved significant success in many fields with visual observations [1, 2, 3, 4]. Sample efficiency and representation learning in vision-based reinforcement learning tasks have, though, hitherto been a challenging problem [2, 5], especially when applying RL into real-world robotics [6]. Assigning an auxiliary task with a surrogate loss, which introduces the powerful and promising self-supervised learning (SSL) methods in computer vision to RL, is an efficacious technique for representation learning and sample efficiency. With self-supervised auxiliary objectives, the RL algorithms learn more semantic representations and achieve faster convergence.

Albeit combining with SSL naively can improve sample efficiency in vision-based RL, there is still room for improvements. Especially in a real-world setting with sparse rewards, exploration becomes desiderata for RL to obtain non-trivial reward signals, which remains a predicament that previous SSL-RL baselines can not solve efficiently. Adequate information in self-supervised auxiliary tasks can offer practical assistance; however, such paramount information has been disregarded, since the representation learning part is separated from the decision-making part.

Based on that, we propose a framework for SSL-RL to assign the surrogate self-supervised loss into policy learning as an intrinsic reward, namely Intrinsically Motivated Self-Supervised learning in Reinforcement learning (IM-SSR). It is a simple yet effective modification with nearly no additional cost, which can be effortlessly plugged in any reinforcement learning with self-supervised auxiliary tasks. Intuitively, the self-supervised loss measures the quality of the observation representation learning, which would be relatively large for the states rarely encountered or vulnerable to nuisance. Also we theoretically decompose the self-supervised loss as a metric in the feature space: one motivates exploration for novel states, the other improves robustness from nuisance elimination. Thereby, it is reasonable to design the self-supervised loss as an intrinsic reward to award extra bonuses for those states. Combining with this intrinsic reward, the agent is encouraged to explore novel and vulnerable states, thus improving sample efficiency along side generalization ability.

Empirical experiments are conducted on robotics control tasks in DeepMind Control Suite [7] based on a generalization benchmark DMControl-GB111https://github.com/nicklashansen/dmcontrol-generalization-benchmark. Complex testing tasks are included, like environments with unseen natural video backgrounds, which can evaluate the generalization ability of the algorithm to various real-world settings. Two of the classical vision-based reinforcement learning algorithms with self-supervised methods, CURL [2] and SODA [1], are chosen to be baselines. Combined with IM-SSR, the underlying baselines improve sample efficiency and generalization, especially when the reward signals are sparse, resulting in new state-of-the-art performance.

We summarize our contributions as follows:

-

•

We present a general framework, IM-SSR, which is an effective idea to utilize the self-supervised loss in auxiliary tasks as an intrinsic reward. The philosophy of associating self-supervised learning with reinforcement learning is promising since the information in auxiliary tasks should not be disregarded.

-

•

We formally show that the self-supervised loss can be decomposed as exploration for novel states and robustness in representations from nuisance elimination.

-

•

With nearly no additional cost, we offer an approach to promote improvements on the previous underlying reinforcement learning algorithms with self-supervised auxiliary loss, which achieves new state-of-the-art performance on vision-based reinforcement learning.

II Methodology

As demonstrated in Figure 1, the framework of reinforcement learning combined with self-supervised learning is: First, we maintain a replay buffer with transitions. Various kinds of augmentations can be employed to obtain different views of the original observations. It remains ambiguous whether to use the original observation or an augmented view into the encoder for RL, which we demonstrate as above just for simplicity. In reinforcement learning part, we encode inputs into latent variable and train the policy based on and related transitions; in self-supervised learning part, an auxiliary task is conducted to constrain the consistency of encoded from different views of observations. The self-supervised loss in auxiliary task is generally denoted as and explicitly formulated in Appendix B. To utilize the self-supervised learning part, we introduce on each observation as an intrinsic reward added to the original extrinsic reward in Equation (1).

| (1) |

When , i.e., , the framework degenerates into previous SSL-RL methods, which have no interplay between SSL and RL.

Motivation. Intuitively, the self-supervised loss contains information about how well the learned representation is. The loss would be relatively large for rarely encountered states, which motivates us to award novel or vulnerable states. The bonus motivates the agent to explore, which helps with the searching for non-trivial rewards. Based on that, sample efficiency can be improved significantly, especially in sparse-rewarded tasks. We have to clarify that IM-SSR utilizes the inherent yet unemployed information in SSL, which is different from those manually designed exploration methods. Also, generalization ability is a by-product gained from building robust representations for vulnerable states.

Summary. To utilize inherent information in SSL, we present a generic framework, IM-SSR, where the SSR can be replaced by any suitable self-supervised learning method. For instance, IM-CURL means a CURL baseline combined with the intrinsic reward modification. Our method requires no additional network architectures nor any modification on the self-supervised task, thus making it easy to be plugged-in.

The pseudo-code of IM-SSR is as follows.

Implementation details. The intrinsic rewards need to be normalized, since it is the relative value inside one batch/update that matters, not the exact quantity. The hyper-parameter can be elaborately designed to decay; however, we implement the method with a fixed or a naive decaying schedule without fine-tuning and it still achieves desired performance. Our method can be easily deployed on any SSL-RL framework. In the experiments, we strictly follow the same way as the corresponding baseline does and use exactly the same augmentation method. Details of the related SSL-RL baselines are shown in Appendix B.

III Analysis on Self-supervised Loss in Reinforcement Learning

We formally show that it is reasonable to employ the self-supervised loss as an intrinsic reward, which can be interpreted as an exploration bonus and robustness improvement from nuisance elimination.

III-A Notations and Preliminaries

A Markov Decision Process (MDP) is defined for the information transmission in Figure 2. denotes an optimal representation in the latent space, which is necessary and sufficient for the reinforcement learning task . Another random variable denotes nuisance for the task, i.e., or ; also, we assume and . Observation is generated by an implicit function , i.e., . For instance, denotes the stacked pixel observation captured from the environment; is the desired optimal representation for downstream tasks, such as state-based proprioceptive features; denotes task-irrelevant information like textures, shadows and backgrounds, which may be a culprit for the representation learning, thus seriously depreciating sample efficiency and generalization ability to unseen environments of the algorithm.

We now consider a generic setting for contrastive learning as an auxiliary self-supervised task along side reinforcement learning. The encoding function is denoted by . A main principle for contrastive learning is that features from different views of the same observation should be forced to be close in the feature space, i.e., the ideal encoder would satisfy . The contrastive loss depends on the SSL architecture, which has the form like Equation (5) in CURL or Equation (6) in SODA as shown in Appendix B. Then we use to denote the underlying pair-wise contrastive loss as a metric defined on a metric space , measuring the distance between different latent representations . It satisfies the well-known properties: ; ; .

III-B Decomposition and Interpretation of Contrastive Loss

For each observation , we analyze the pair-wise contrastive loss. Augmentations of are denoted by . Since the augmentation function is designed to perturb nuisance and maintain significant information of the original observation, we assume , s.t. . The corresponding features are and . We further introduce an optimal representation function . Ideally, it would ignore information related to nuisance and maintain the information in related to downstream tasks, i.e., . We may use a fixed to denote .

| (2) | ||||

The loss can be decomposed as above, where denotes the composite function of and , and the second inequality is from the triangle inequality of the metric. Each term on the right hand side can be further bounded by

| (3) | ||||

The first term, interpreted as the projected distance of , is indeed an exploration bonus. It is the distance in feature space between different projection functions and , which contrastive learning is trying to minimize; therefore, the distance would be smaller if the state has been encountered. From the perspective of exploration in reinforcement learning, this term can be treated as a prediction error, and it would bring extra bonus for novel states that the contrastive part is not trained on. The prediction error is often considered to motivate exploration like RND [8] does; however, it requires additional networks for distillation. In the most desired case, this term should vanish, as the function is optimized to .

The second term, related to nuisance elimination, encourages the agent to visit vulnerable states and introduces randomness into the optimization. States that are more sensitive to distortions will give larger distance between and , which is encouraged to be visited or revisited. To be clarified, since the nuisance in the considered MDP is independent of , our method can not directly improve the generalization ability of the baseline; however, it can contribute by building more robust representations for vulnerable states. Robustness in representations makes the learned representations more invariant to distortions, thus improving the generalization ability to untrained environments. While the function converges to , the information in is getting ignored, and the whole term of nuisance elimination would also vanish.

To summarize, the intrinsic reward, i.e., pair-wise contrastive loss of the auxiliary tasks in reinforcement learning, can be interpreted as: exploration for novel states that improves sample efficiency, and robustness in representations from nuisance elimination that improves generalization ability.

| (4) | ||||

Inequality (4) is tightly bounded, since is optimized towards and will ignore the information in ideally. Apart from that, with a decaying parameter in front of the intrinsic reward, our method is able to converge to the optimal policy asymptotically. Specifically, can be a pair-wise loss of Equation (5) in CURL or Equation (6) in SODA, which will serve as an intrinsic reward in IM-SSR. The pair-wise loss can also be designed by ourselves to utilize the information in encoders, which will be further explored in Appendix D.

IV Experiments

| DMControl Suite (training) | 0.2T Training Frames | 0.5T Training Frames | 1.0T Training Frames | |||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| CURL | IM-CURL | SODA | IM-SODA | CURL | IM-CURL | SODA | IM-SODA | CURL | IM-CURL | SODA | IM-SODA | |||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

IV-A Experimental Settings

Empirically, we conduct experiments on DMControl suite. All experiments are evaluated over 5 random seeds, except for those mentioned in the related caption. DMControl-GB provides diverse test environments for policy evaluation, which are important for sim-to-real in robotics. The agent is trained in the unmodified training environment, while it is evaluated in some new environments as well as the unmodified environment of DMControl suite. The new test environments can be summarized as two types: changing the color randomly and replacing the background with natural videos. Specifically, it includes color_easy, color_hard, video_easy, video_hard, as shown in Figure 3 and we select training, color_hard and video_hard as the representative environments.

Primarily, to validate the priority in sample efficiency of IM-SSR, we compare IM-CURL, IM-SODA with CURL and SODA in the training environment. Especially, we elaborately design tasks with sparse rewards to further prove the significant exploration brought by IM-SSR. We conduct all experiments with 500K training frames. We denote the total training frames as T, using 0.2T as early-term, 0.5T as mid-term and 1.0T as final-term to represent the training progress of 20%, 50% and 100%. We report the mean and standard deviation in the tables. Additional results and more details can be found in Appendix A and C.

Besides, to verify that IM-SSR is helpful for robustness and generalization, we compare IM-SODA and SODA in challenging generalization environments. First, in Section IV-C, we intend to evaluate the generalization ability of sim-to-real, where agents are trained in simulated unmodified mujoco environment training, and tested in real-world like environment color_hard and video_hard. Second, we design tasks which are trained on one scene and tested on another to further validate the generalization in transferring. The detailed analysis of generalization tasks and results can be found in Appendix E.

IV-B Sample Efficiency

In this subsection, we demonstrate that IM-SSR can improve sample efficiency on the training environment as Figure 3(a). The results shown in Table I can be summarized:

-

•

IM-SSR surpasses its underlying baselines in the early-term in most cases and maintains its priority in the mid-term significantly.

-

•

The improvements in mid-term are much larger than in other terms, since IM-SSR converges much faster than underlying baselines. The baseline may still struggle in learning representations or searching for non-trivial rewards, while IM-SSR has already learned semantic representations and obtained positive and effective reward signals to be trained on.

-

•

In the final-term, we expect IM-SSR and baselines to achieve similar results like in walker_walk. However, we surprisingly find that in several tasks like cartpole_swingup_sparse, IM-SSR achieves higher mean and much lower variance in performance than the underlying baselines, which means IM-SODA is much more stable in these environments.

-

•

There are few cases that IM-SSR provides slight improvements, which mainly attribute to the dense reward. When setting the reward signal to be sparse, the improvements of IM-SSR become significant. Related experiments are implemented in Section IV-D.

IV-C Generalization

We demonstrate that IM-SSR can improve robustness and generalization, and the evaluation is conducted on color_hard and video_hard environments as Figure 3(c) and Figure 3(e).

| random colors | video backgrounds | |||||||||||||||||||||||||||||||||||||

| DMControl-GB (evaluating) | 0.2T | 0.5T | 1.0T | 0.2T | 0.5T | 1.0T | ||||||||||||||||||||||||||||||||

| SODA | IM-SODA | SODA | IM-SODA | SODA | IM-SODA | SODA | IM-SODA | SODA | IM-SODA | SODA | IM-SODA | |||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||

As shown in Table II, in the early-term and mid-term, IM-SODA outperforms SODA nearly on all tasks, which proves that its generalization ability in unseen challenging evaluation environments is much better. Since video_hard is relatively harder, it makes both algorithms perform poorly in some environments. Still, the peak of IM-SODA is higher than SODA, which also shows better generalization ability of IM-SODA.

IV-D Sparse Cases

Although methods like CURL and SODA achieve comparable performance in major tasks in DMControl, they still perform poorly on tasks with sparse rewards like cartpole_swingup_sparse and pendulum_swingup. As discussed above, simply combining RL with self-supervised learning cannot help solve environments with sparse rewards, but the agent of IM-SSR is encouraged to explore for novel states that have non-trivial rewards potentially.

To verify this hypothesis, we visualize the training process on these two environments with sparse reward signals in Figure 4. Each row in Figure 4 corresponds to an environment, pendulum_swingup is on the top, while cartpole_swingup_sparse is on the bottom. The first column shows the comparison between CURL and IM-CURL on training, while other columns on the right show the comparison between SODA and IM-SODA on training, color_hard and video_hard respectively. IM-SSR improves the sample efficiency significantly in almost all cases. Specifically, the result on the left top panel shows that in IM-CURL pendulum_swingup converges to much higher averaged returns at about 400K steps, while CURL still performs extremely bad at 500K steps.

What makes the improvement to be marginal or significant? As we notice in Table I, sometimes IM-SSR and the corresponding baselines achieve similar performance. The decomposition and interpretation of pair-wise contrastive loss in Equation (4) can be an explanation, especially the exploration term. When the reward signal is dense, the baseline already learns well without exploring for novel states, and there is not much room for IM-SSR to improve. However, when the reward signal is sparse, the significance of exploration emerges and the intrinsic reward does help.

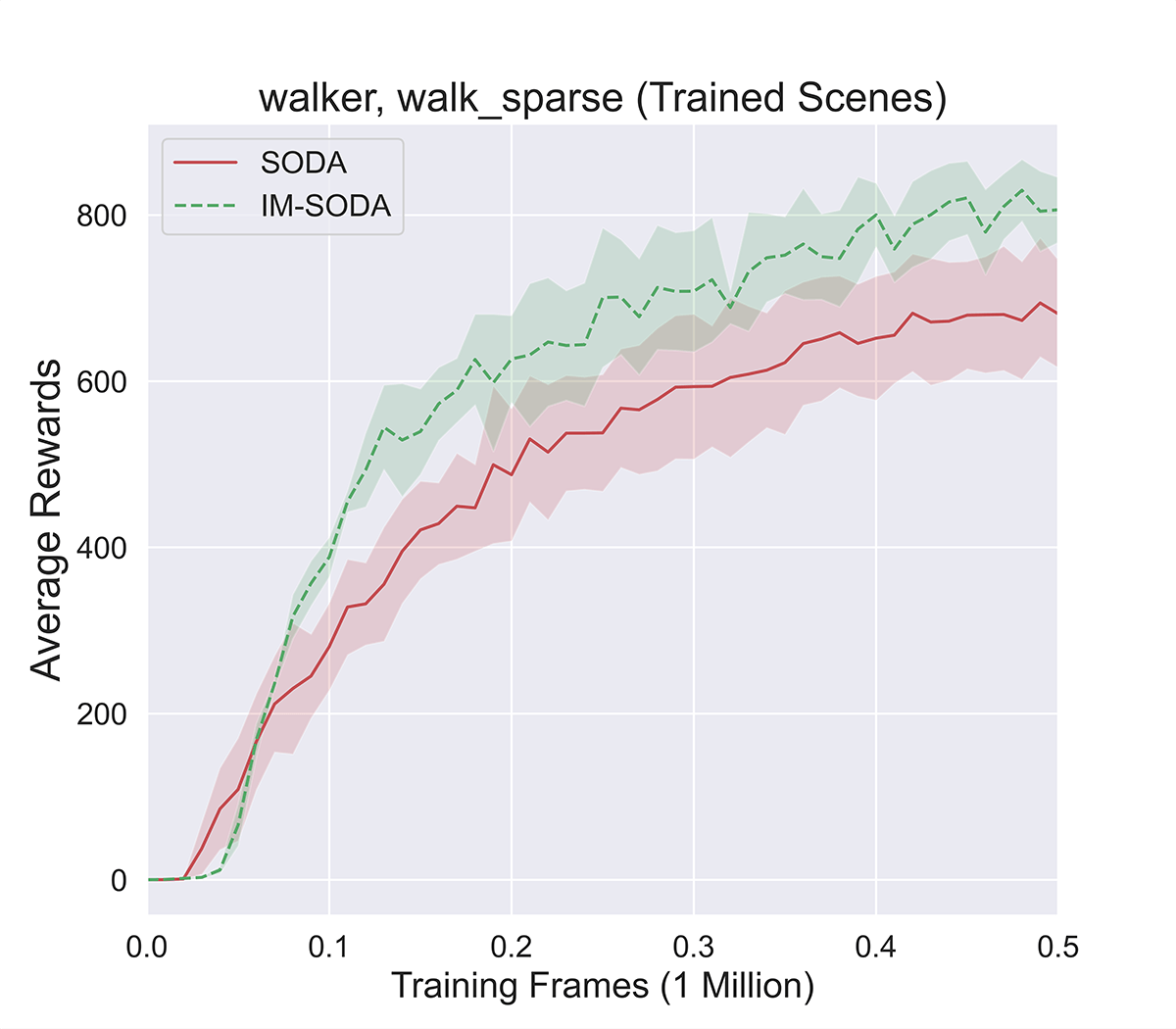

To verify this idea, we take walker_walk as an example and modify the reward to be sparse as either 0 or 1. The new task is called walker_walk_sparse. In walker_walk, IM-SSR and the corresponding baselines have already achieved high performance in early-term. However, as shown in Figure 5 with designed sparse settings, IM-CURL and IM-SODA perform much better than CURL and SODA due to the lack of reward supervision.

V Related Work

Self-Supervised Learning for Visual Representation. Self-supervised learning has attracted interests of researchers in Computer Vision [9, 10, 11, 12, 13, 14]. MoCo (Momentum Contrastive) [9] builds a dynamic queue with the help of the momentum encoder. It trains the encoder by matching queries and keys using InfoNCE loss. SimCLR [10] introduces a learnable nonlinear transformation between the representation and the contrastive loss. With the projection layers, we can remove the momentum encoder and the memory bank. BYOL [11] trains an online network to predict the target network representation of the same image with the different views. Amazingly, they remove negative samples. SimSiam [13] explores what makes good representation in BYOL by modifying the predictor and removing the momentum encoder.

Auxiliary Task in Reinforcement Learning. Directly using images as the input in deep reinforcement learning always causes sample inefficiency, researchers propose to jointly train the policy and a self-supervised loss to solve the inefficiency problem to improve performances [15, 1, 2, 5, 16, 17]. Inspired by [9, 10], CURL [2] uses contrastive learning as the auxiliary tasks. They just treat the different randomly cropped views of the same image as the positive pair. SODA [1] introduces a new overlay augmentation that they use the augmentation and randomly crop the same image as the positive pair, which shows State-of-The-Art performance in generalization [7]. In [11], they use a online network to predict the target network and do not need negative pairs. Besides, they do not use the same batch data to optimize the RL loss and the auxiliary task loss. PAD [16] uses inverse dynamics prediction error to optimize the encoder and introduces policy adaptation tricks to improve generalization performance. Besides, SVEA [17] stables the learning of Q-value to improve generalization. However, the policy training part and SSL part are separated, which prevents the policy from referencing to representation learning to make decision.

Exploration. Exploration is an important topic in reinforcement learning. A natural and effective way is count-based exploration bonuses [18]. When the space is becoming larger and larger, simple counting fails, and lots of work have studied how to generalize count exploration to large state spaces [8, 19, 20]. Another class of exploration is based on the errors in predicting dynamics [8, 21, 22]. For example, Random Network Distillation [8] treats the error of predicting features of the next observations given by a fixed random model as the bonus. When policy gives an action to find a non-seen state, it will be awarded. However, exploration based on predicted errors needs additional network architectures, while our method only borrows the representation learning error as the intrinsic reward.

VI Conclusions and Future Work

In summary, we present a simple yet effective idea to introduce the information in self-supervised learning into policy learning as intrinsically motivated self-supervised learning in reinforcement learning (IM-SSR). Theoretical analysis is made to interpret decomposition of the self-supervised loss, as exploration for novel states and robustness from nuisance elimination. The implementation of the IM-SSR is quite simple, without any extra modification on architectures nor costs in computation, yet the improvement is significant. Both IM-CURL and IM-SODA achieve faster convergence than CURL and SODA. Besides, IM-SODA generalizes much better than SODA in unseen real-world like environments. We also emphasize the IM-SSR performs much better than the underlying baselines in sparse reward environments. Therefore, we are offering an approach to further improve sample efficiency and generalization of suitable SSL-RL baselines, with nearly no additional cost.

Albeit it is promising to introduce interaction between SSL and RL, intrinsic reward is not the only way to implement this idea; thereby, many other approaches remain to be developed. Also, an adaptive schedule can be designed for the intrinsic parameter to achieve a more robust and tuning-free algorithm. In the future, we desire to further extend IM-SSR to more robotics tasks in more complex environments.

Appendix A Performance Curves

To show the effectiveness of IM-SSR, we visualize the performance curves in DMControl environments. To further verify the generalization ability of IM-SSR from simulation to real world, evaluation on complex unseen environments is conducted based on DMControl-GB. As we can see, almost in all environments, IM-SSR outperforms the baseline methods. We mainly follow the experimental setting in CURL and SODA, which trains the agent in unmodified training environments and test in various environments: unmodified training, color_hard and video_hard. The x-axis denotes training frames (1 million).

Additional results related to PAD and SVEA are in Figure 8 and Figure 10, where . We focus on the performance on pendulum_swingup.

The performance curves clearly demonstrate the priority of IM-SSR on sample efficiency over baselines. The green curve which represents IM-SSR is higher than the red baseline almost every time. Especially in tasks with sparse rewards, like the first and the third columns, IM-SSR obtains non-trivial rewards much earlier and surpasses the underlying baseline significantly. Also in generalization evaluation, IM-SODA shows better generalization ability in color_hard and video_hard.

Appendix B Basic SSL-RL Architectures

Our method can be easily deployed on any SSL-RL framework. In this paper, we use CURL [2], SODA [1] and PAD [16] as the basic SSL architectures in the IM-SSR framework, which are built based on SAC [23].

CURL. Vision-based RL is disturbed by sample-inefficiency [5]. Inspired by the success of self supervised learning in computer vision [9, 10], CURL [2] proposes to training an extra contrastive objective as an auxiliary task to make encoder to learn high level representation faster and better. More specifically, they maximize the agreement between augmented versions of the same observation by InfoNCE loss as Equation (5):

| (5) |

where is the encoder query, is parameters to be optimized and denotes the key that matches [2]. Intuitively, the value of the loss is low when is similar to its positive key and dissimilar to other negative keys (denoted as ). In CURL implementation, we simply treat different augmented views of the same state as the positive pair.

SODA. SODA [1] maximizes the mutual information between latent representations of augmented and non-augmented data by employing BYOL-like [11] architecture, which does not need negative samples at all. The RL policy and the self-supervised auxiliary task share a common encoder . is an augmented observation of . They use the encoder and an projection network to extract , and use the target encoder and target projection network to extract , where is an exponential moving average (EMA) of . The objective of SODA is to predict from by , which is formulated as a consistency loss:

| (6) |

where , and .

PAD. PAD [16] explores to use self-supervision in new environments for continue training the policy for generalization. They employ the inverse dynamics model as the SSL part. Specifically, at each step, a transition sequence (,,) is observed and an inverse model takes and to predict , which can adapt the policy to the new environments without any reward signal. Formally, the inverse dynamics objective for continuous actions can be written as:

| (7) |

where MSE means mean squared error and means the inverse prediction model.

SVEA. SVEA [17] improves the generalization of RL algorithms by stabling the learning of the Q-values. To be more specific, SVEA minimizes a nonnegative objective , where

| (8) | ||||

is the target q-value in Bellman equation. and is the constant coefficients and means we use the augmented data to compute the Q-values.

Data Augmentations. In order to smoothly apply SSL to RL tasks, we need to augment the same observation. CURL only augments data by random crop, while SODA proposes a novel and stronger augmentation method, random overlay, which linearly interpolates between an observation and another image. In CURL and PAD, the inputs of different encoders are different crops of the same observation; in SODA, both inputs are the same crop, while the momentum encoder’s input is additionally processed by the random overlay augmentation method. More details can be found in [1, 16, 2]. Noting that our IM-SSR uses the same augmentation method as baselines.

Update Details. Besides, there are differences in the update of auxiliary tasks: Some, like CURL, update the auxiliary task with same sampled transitions as in RL update, while others do not, like SODA. We find it interesting that using different transitions improves the performance, which remains to be further studied. Also, the update frequency of RL and SSL can be different. We mention these differences in the pseudo-code and strictly follow the same way as the corresponding baseline does.

Appendix C Implementation Details

C-A Baseline Details

We show the implementation details for CURL, SODA and PAD in this subsection. Specifically, we present in detail about the hyperparameters for both algorithms in Table III and the choice of the for intrinsic reward in Table IV. Noting that the basic SSL-RLs are mainly based on the official released implementation222https://github.com/MishaLaskin/curl 333https://github.com/nicklashansen/dmcontrol-generalization-benchmark.

We utilize a simple decaying schedule for , by multiplying a rate every 100k steps, like . All experiments follow this rule, except for cartpole_swingup_sparse, which uses a fixed parameter without any complicated designed schedule. Such a hyper-parameter, which is fixed or naively scheduled, can still obtain solid performance, thus proving the stability of IM-SSR. In the future, adaptive schedule can be used to adjust . Besides, similar to RND [8], we use the predicted observation error of the next state.

For data augmentation, both CURL and PAD only use random cropping while SODA proposes a new augmentation method for generalization: Random Overlay [1], which linearly interpolates between an observation and another natural image as Figure 9 shows. We can formally write it as:

| (9) |

where is the observation, is the natural image and is the interpolation coefficient. In SODA [1], is set as . We emphasize that we use exactly the same augmentations as the baseline methods.

C-B Hyper-parameters

| Hyper-parameter | Value |

|---|---|

| Frame rendering | |

| Frame after crop | |

| Stacked frames | 3 |

| Number of conv. layers | 11 (SODA, PAD, SVEA) |

| 4 (CURL) | |

| Number of filters in conv. | 32 |

| Action repeat | 2 (finger_spin) |

| 8 (cartpole_swingup_sparse, pendulum_swingup) | |

| 4 (otherwise) | |

| Discount factor | |

| Episode time steps | 1,000 |

| Learning algorithm | Soft Actor-Critic |

| Number of training steps | 500,000 |

| Replay buffer size | 500,000 (SODA, PAD, SVEA) |

| 100,000 (CURL) | |

| Optimizer (RL/aux.) | Adam |

| Optimizer | Adam |

| Learning rate (RL) | 1e-3 |

| Learning rate | 1e-4 |

| Learning rate SODA | 3e-4 |

| Batch size in RL | 128 |

| Batch size in SSL | 128 (CURL) |

| 256 (SODA, PAD, SVEA) | |

| Actor update freq. | 2 |

| Critic update freq. | 1 |

| Auxiliary update freq. | 1 (CURL, PAD) |

| 2 (SODA) | |

| Momentum coef. (SODA) |

| cartpole | finger | pendulum | reacher | walker | walker | |

|---|---|---|---|---|---|---|

| swingup_sparse | spin | swingup | easy | walk | walk_sparse | |

| IM-CURL | 0.01 | 0.005 | 0.05 | 0.005 | 0.005 | 0.1 |

| IM-SODA | 0.01 | 0.005 | 0.1 | 0.001 | 0.05 | 0.1 |

C-C Sparse Reward Settings

In Section IV-D, we modify the reward signal in walker_walk to compare the improvements between dense rewards and sparse rewards. The original reward signal designed in DMControl Suite is a combination of terms related to upright torso, torso height and horizontal velocity [7]. The returned reward is scaled from 0 to 1, which is computed by the following codes.

To maintain the desired combination of rewards in walking, we keep the same setting of the dense reward, and only change it from the continuous version in to a discrete version in . The sparse reward is defined as follows.

| (10) |

Appendix D Further Extensions

Various architectures of SSL-RL can also be explored, such as SVEA [24] whose self-supervised learning part is merged into the Q-learning procedure. Hence, we cannot directly utilize the SSL loss as an intrinsic reward, since no auxiliary loss is available.

However, the philosophy of IM-SSR to utilize the paramount information in SSL can still be realized by information maintained in encoders. We simply add an MSE loss as the metric to evaluate the distance between encoded augmentations and encoded original observations, and then use the pair-wise MSE as the intrinsic reward. The additional computation is still very little.

The detailed implementation is as follows. denotes the encoded variable of the original observation by encoder in Q function, while denotes the encoded variable of the original observation by the encoder in target function. By this way, we can calculate and normalize them within a batch to design the intrinsic reward.

The results show that IM-SVEA achieves better performance than SVEA in Figure 10. The improvements in video_hard can be ignored, which may be attributed to the baseline SVEA who fails.

This section motivates us to explore various approaches to design the intrinsic reward, when the formulation of SSL loss is not desired or even there is no available auxiliary loss. More attempts on how to efficiently utilize the information in the SSL part are needed in the future.

Appendix E Generalization Benchmarks

E-A Motivations on Generalization Tasks

We include two types of experiments related to generalization, one is more likely to sim-to-real, and another is more likely to transferring. In Section IV-C, we train agents based on unmodified environments and tested on unseen challenging environments which is designed for simulation to reality. We also implemented experiments that are trained on one scene and tested on another unseen scene with a dynamical changing camera pose, which is designed for transferring in real world.

First, we would like to explain for generalization tasks in Section IV-C based on DMControl-GB. Researchers mainly focus on the original DMControl baseline in previous works, which is still away from real life. If we have a task in the real world, sometimes we do not train directly in the real world; we can model the object and simulate the task in a mujoco-like world instead. The physical objects are already included in the simulated environment, and the main differences will be nuisances we mentioned in the paper, like color, background, and others. Therefore, it is reasonable to treat the benchmark as a useful generalization benchmark, which helps transfer from simulation to real life.

In this work, to the best of our ability, we try to simulate transferring from one scene to another. The implementation of transferring tasks is based on DMControl Generalization Benchmark [1] and Distracting Control Suite [25]. Additional challenging experiments are implemented, where the agent is trained based on natural videos instead of simulated mujoco environments and tested in another unseen scene. Apart from the changing of scenes, camera poses are also dynamic in training and testing as shown in Figure 11.

E-B Performance in Transferring Tasks

As is shown in Figure 12, we conduct experiments on walker_walk_sparse to compare between the trained scene and another unseen test scene. The performance show that IM-SODA performs better than SODA as desired.

Appendix F Sensitivity Analysis on

Here, we fix the schedule of and explore the utility of various in IM-SSR. Figure 13(a) shows that different will lead to different performance, where a suitable leads to the best performance. As in shown in Figure 13(b), with the increasing of , the final performance will increase first and then decrease. The magnitude of matters for a proper modification on the original reward. If the is too big, the intrinsic reward will be the most important target of the agent, and the extrinsic reward will be neglected, which results in low performance. If the is too small, the performance is closer to CURL, which is a special case for .

References

- [1] N. Hansen and X. Wang, “Generalization in reinforcement learning by soft data augmentation,” arXiv preprint arXiv:2011.13389, 2020.

- [2] A. Srinivas, M. Laskin, and P. Abbeel, “Curl: Contrastive unsupervised representations for reinforcement learning,” arXiv preprint arXiv:2004.04136, 2020.

- [3] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, et al., “Human-level control through deep reinforcement learning,” nature, vol. 518, no. 7540, pp. 529–533, 2015.

- [4] K. Schmeckpeper, O. Rybkin, K. Daniilidis, S. Levine, and C. Finn, “Reinforcement learning with videos: Combining offline observations with interaction,” arXiv preprint arXiv:2011.06507, 2020.

- [5] D. Yarats, A. Zhang, I. Kostrikov, B. Amos, J. Pineau, and R. Fergus, “Improving sample efficiency in model-free reinforcement learning from images,” arXiv preprint arXiv:1910.01741, 2019.

- [6] J. Ibarz, J. Tan, C. Finn, M. Kalakrishnan, P. Pastor, and S. Levine, “How to train your robot with deep reinforcement learning: lessons we have learned,” The International Journal of Robotics Research, vol. 40, no. 4-5, pp. 698–721, 2021.

- [7] Y. Tassa, Y. Doron, A. Muldal, T. Erez, Y. Li, D. d. L. Casas, D. Budden, A. Abdolmaleki, J. Merel, A. Lefrancq, et al., “Deepmind control suite,” arXiv preprint arXiv:1801.00690, 2018.

- [8] Y. Burda, H. Edwards, A. Storkey, and O. Klimov, “Exploration by random network distillation,” arXiv preprint arXiv:1810.12894, 2018.

- [9] K. He, H. Fan, Y. Wu, S. Xie, and R. Girshick, “Momentum contrast for unsupervised visual representation learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9729–9738.

- [10] T. Chen, S. Kornblith, M. Norouzi, and G. Hinton, “A simple framework for contrastive learning of visual representations,” in International conference on machine learning. PMLR, 2020, pp. 1597–1607.

- [11] J.-B. Grill, F. Strub, F. Altché, C. Tallec, P. H. Richemond, E. Buchatskaya, C. Doersch, B. A. Pires, Z. D. Guo, M. G. Azar, et al., “Bootstrap your own latent: A new approach to self-supervised learning,” arXiv preprint arXiv:2006.07733, 2020.

- [12] A. v. d. Oord, Y. Li, and O. Vinyals, “Representation learning with contrastive predictive coding,” arXiv preprint arXiv:1807.03748, 2018.

- [13] X. Chen and K. He, “Exploring simple siamese representation learning,” arXiv preprint arXiv:2011.10566, 2020.

- [14] J. Zbontar, L. Jing, I. Misra, Y. LeCun, and S. Deny, “Barlow twins: Self-supervised learning via redundancy reduction,” arXiv preprint arXiv:2103.03230, 2021.

- [15] E. Shelhamer, P. Mahmoudieh, M. Argus, and T. Darrell, “Loss is its own reward: Self-supervision for reinforcement learning,” arXiv preprint arXiv:1612.07307, 2016.

- [16] N. Hansen, R. Jangir, Y. Sun, G. Alenyà, P. Abbeel, A. A. Efros, L. Pinto, and X. Wang, “Self-supervised policy adaptation during deployment,” arXiv preprint arXiv:2007.04309, 2020.

- [17] N. Hansen, H. Su, and X. Wang, “Stabilizing deep q-learning with convnets and vision transformers under data augmentation,” Advances in Neural Information Processing Systems, vol. 34, 2021.

- [18] A. L. Strehl and M. L. Littman, “An analysis of model-based interval estimation for markov decision processes,” Journal of Computer and System Sciences, vol. 74, no. 8, pp. 1309–1331, 2008.

- [19] M. G. Bellemare, S. Srinivasan, G. Ostrovski, T. Schaul, D. Saxton, and R. Munos, “Unifying count-based exploration and intrinsic motivation,” arXiv preprint arXiv:1606.01868, 2016.

- [20] J. Fu, J. D. Co-Reyes, and S. Levine, “Ex2: Exploration with exemplar models for deep reinforcement learning,” arXiv preprint arXiv:1703.01260, 2017.

- [21] J. Schmidhuber, “A possibility for implementing curiosity and boredom in model-building neural controllers,” in Proc. of the international conference on simulation of adaptive behavior: From animals to animats, 1991, pp. 222–227.

- [22] Y. Burda, H. Edwards, D. Pathak, A. Storkey, T. Darrell, and A. A. Efros, “Large-scale study of curiosity-driven learning,” arXiv preprint arXiv:1808.04355, 2018.

- [23] T. Haarnoja, A. Zhou, P. Abbeel, and S. Levine, “Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor,” in International Conference on Machine Learning. PMLR, 2018, pp. 1861–1870.

- [24] N. Hansen, H. Su, and X. Wang, “Stabilizing deep q-learning with convnets and vision transformers under data augmentation,” 2021.

- [25] A. Stone, O. Ramirez, K. Konolige, and R. Jonschkowski, “The distracting control suite–a challenging benchmark for reinforcement learning from pixels,” arXiv preprint arXiv:2101.02722, 2021.