Intrinsic and extrinsic thermodynamics for stochastic population processes with multi-level large-deviation structure

Abstract

A set of core features is set forth as the essence of a thermodynamic description, which derive from large-deviation properties in systems with hierarchies of timescales, but which are not dependent upon conservation laws or microscopic reversibility in the substrate hosting the process. The most fundamental elements are the concept of a macrostate in relation to the large-deviation entropy, and the decomposition of contributions to irreversibility among interacting subsystems, which is the origin of the dependence on a concept of heat in both classical and stochastic thermodynamics. A natural decomposition is shown to exist, into a relative entropy and a housekeeping entropy rate, which define respectively the intensive thermodynamics of a system and an extensive thermodynamic vector embedding the system in its context. Both intensive and extensive components are functions of Hartley information of the momentary system stationary state, which is information about the joint effect of system processes on its contribution to irreversibility. Results are derived for stochastic Chemical Reaction Networks, including a Legendre duality for the housekeeping entropy rate to thermodynamically characterize fully-irreversible processes on an equal footing with those at the opposite limit of detailed-balance. The work is meant to encourage development of inherent thermodynamic descriptions for rule-based systems and the living state, which are not conceived as reductive explanations to heat flows.

I Introduction

The statistical derivations underlying most thermodynamic phenomena are understood to be widely applicable, and are mostly developed in general terms. Yet where thermodynamics is offered as an ontology to understand new patterns and causes in nature – the thermodynamics of computation Szilard:MD:29 ; Landauer:IHGCP:61 ; Bennett:TC:82 ; Wolpert:stoch_thermo_comp:19 or stochastic thermodynamics Seifert:stoch_thermo_rev:12 [Footnote 1], or where these methods are taken to define a foundation for the statistical physics of reproduction or adaptation England:statphys_selfrepl:13 ; Perunov:adaptation:15 – these problems are framed in terms of two properties particular to the domain of mechanics: the conservation of energy and microscopic reversibility. Other applications of the same mathematics, with designations such as intensity of choice Luce:choice:59 ; McFadden:quantal_choice:76 , tipping points, or early warning signs Lenton:climate_tip_ew:12 , are recognized as analogies to thermodynamics, on the understanding that they only become the “thermodynamics of” something when they derive its causes from energy conservation connected to the entropies of heat.

Footnote 1: Stochastic thermodynamics is the modern realization of a program to create a non-equilibrium thermodynamics that began with Onsager Onsager:RRIP1:31 ; Onsager:RRIP2:31 and took much of its modern form under Prigogine and coworkers Glansdorff:structure:71 ; Prigogine:MT:98 . Since the beginning its core method has been to derive rules or constraints for non-stationary thermal dynamics from dissipation of free energies defined by Gibbs equilibria, from any combination of thermal baths or asymptotic reservoirs. The parallel and contemporaneous development of thermodynamics of computation can be viewed as a quasistatic analysis with discrete changes in the boundary conditions on a thermal bath corresponding to logical events in algorithms. Early stochastic thermodynamics combined elements of both traditions, with the quantities termed “non-equilibrium entropies” corresponding to the information entropies over computer states, distinct from quasistatic entropies associated with heat in a locally-equilibrium environment, with boundary conditions altered by the explicitly-modeled stochastic state transitions. More recently stochastic thermodynamics incorporated time-reversal methods originally developed for measures in dynamical systems Searles:fluct_thm:99 ; Evans:fluct_thm:02 ; Gallavotti:dyn_ens_NESM:95 ; Gallavotti:dyn_ens_SS:95 leading to a variety of fluctuation theorems Jarzynski:fluctuations:08 ; Chetrite:fluct_diff:08 ; Esposito:fluct_theorems:10 and nonequilibrium work relations Crooks:NE_work_relns:99 ; Crooks:path_ens_aves:00 ; Kurchan:NEWRs:07 , still however relating path probability ratios either to dissipation of heat or to differences in equilibrium free energies.

This accepted detachment of mathematics from phenomenology, with thermodynamic phenomena interpreted in their historical terms and mathematics kept interpretation-free, contrasts with the way statistical mechanics was allowed to expand the ontological categories of physics at the end of the 19th century. The kinetic theory of heat Joule:sci_papers:44 ; Clausius:mech_the_heat:65 ; Boltzmann:second_law:86 was not established as an analogy to gambling [Footnote 2] used to describe patterns in the fluid caloric. [Footnote 3] Thermodynamics instead took the experienced phenomena involving heat, and where there had formerly been only names and metaphors to refer to them, it brought into existence concepts capturing their essential nature, not dissolving their reality as phenomena Rota:lect_notes:08 , but endowing it with a semantics. The distinction is between formalization in the service of reduction to remain within an existing ontology, and formalization as the foundation for discovery of new conceptual primitives. Through generalizations and extensions that could not have been imagined in the late 19th century GellMann:RG:54 ; Wilson:RG:74 ; Weinberg:phenom_Lagr:79 ; Polchinski:RGEL:84 (revisited in Sec. VII), essentially thermodynamic insights went on to do the same for our fundamental theory of objects and interactions, the nature of the vacuum and the hierarchy of matter, and the presence of stable macro-worlds at all.

Footnote 2: The large-deviation rate function underlies the solution of the gambler’s ruin problem of Pascal, Fermat, and Bernoulli Hald:prob_pre_1750:90 .

Footnote 3: However, as late as 1957 Jaynes Jaynes:ITSM_I:57 ; Jaynes:ITSM_II:57 needed to assert that the information entropy of Shannon referred to the same quantity as the physical entropy of Boltzmann, and was not merely the identical mathematical function. Even the adoption of “entropy production” to refer to changes in the state-variable entropy by irreversible transformations – along the course of which the state-variable entropy is not even defined – is a retreat to a substance syntax; I would prefer the less euphonious but categorically better expression “loss of large-deviation accessibility”.

This paper is written with the view that the essence of a thermodynamic description is not found in its connection to conservation laws, microscopic reversibility, or the equilibrium state relations they entail, despite the central role those play in the fields mentioned Landauer:IHGCP:61 ; England:statphys_selfrepl:13 ; Perunov:adaptation:15 ; Seifert:stoch_thermo_rev:12 . At the same time, it grants an argument that has been maintained across a half-century of enormous growth in both statistical methods and applications Fermi:TD:56 ; Bertini:macro_NEThermo:09 : that thermodynamics should not be conflated with its statistical methods. The focus will therefore be on the patterns and relations that make a phenomenon essentially thermodynamic, which statistical mechanics made it possible to articulate as concepts. The paper proposes a sequence of these and exhibits constructions of them unconnected to energy conservation or microscopic reversibility.

Essential concepts are of three kinds: 1) the nature and origin of macrostates; 2) the roles of entropy in relation to irreversibility and fluctuation; and 3) the natural apportionment of irreversibility between a system and its environment, which defines an intrinsic thermodynamics for the system and an extrinsic thermodynamics that embeds the system in its context, in analogy to the way differential geometry defines an intrinsic curvature for a manifold distinct from extrinsic curvatures that may embed the manifold in another manifold of higher dimension. [Footnote 4]

Footnote 4: This analogy is not a reference to natural information geometries Amari:inf_geom:01 ; Ay:info_geom:17 that can also be constructed, though perhaps those geometries would provide an additional layer of semantics to the constructions here.

All of these concepts originate in the large-deviation properties of stochastic processes with multi-level timescale structure. They will be demonstrated here using a simple class of stochastic population processes, further specified as Chemical Reaction Network models as more complex relations need to be presented.

Emphasis will be laid on the different roles of entropy as a functional on general distributions versus entropy as a state function on macrostates, and on the Lyapunov role of entropy in the 2nd law Boltzmann:second_law:86 ; Fermi:TD:56 versus its large-deviation role in fluctuations Ellis:ELDSM:85 , through which the state-function entropy is most generally definable Touchette:large_dev:09 . Both Shannon’s information entropy Shannon:MTC:49 and the older Hartley function Hartley:information:28 , will appear here as they do in stochastic thermodynamics generally Seifert:stoch_thermo_rev:12 . The different meanings these functions carry, which sound contradictory when described and can be difficult to compare in derivations with different aims, become clear as each appears in the course of a single calculation. Most important, they have unambiguous roles in relation to either large deviations or system decomposition without need of a reference to heat.

Main results and order of the derivation

Sec. II explains what is meant by multi-level systems with respect to a robust separation of timescales, and introduces a family of models constructed recursively from nested population processes. Macrostates are related to microstates within levels, and if timescale separations arise that create new levels, they come from properties of a subset of long-lived, metastable macrostates. Such states within a level, and transitions between them that are much shorter than their characteristic lifetimes, map by coarse-graining to the elementary states and events at the next level.

The environment of a system that arises in some level of a hierarchical model is understood to include both the thermalized substrate at the level below, and other subsystems with explicit dynamics within the same level. Sec. II.3 introduces the problem of system/environment partitioning of changes in entropy, and states [Footnote 5] the first main claim: the natural partition employs a relative entropy Cover:EIT:91 within the system and a housekeeping entropy rate Hatano:NESS_Langevin:01 to embed the system in the environment. It results in two independently non-negative entropy changes (plus a third entirely within the environment that is usually ignored), which define the intensive thermodynamics of the focal system and the extensive, or embedding, thermodynamics of the system into the whole.

Footnote 5: The technical requirements are either evident or known from fluctuation theorems Speck:HS_heat_FT:05 ; Harris:fluct_thms:07 ; variant proofs are given in later sections. Their significance for system decomposition is the result of interest here.

Sec. III expresses the relation of macrostates to microstates in terms of the large-deviations concept of separation of scale from structure Touchette:large_dev:09 . Large-deviations scaling, defined as the convergence of distributions for aggregate statistics toward exponential families, creates a formal concept of a macroworld having definite structure, yet separated by an indefinite or even infinite range of scale from the specifications of micro-worlds.
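As a minimal numerical sketch of large-deviations scaling (an illustration added here, not a construction taken from the paper), consider the fraction of heads in N tosses of a coin with bias p. The log-probability of observing a fraction x separates into a scale factor N multiplying a fixed structure function I(x), which for this toy case is the Kullback-Leibler divergence between Bernoulli distributions. The function names and the choice of example are assumptions made for illustration.

```python
import math

def log_binom_pmf(N, k, p):
    """Exact log-probability of k successes in N Bernoulli(p) trials."""
    return (math.lgamma(N + 1) - math.lgamma(k + 1) - math.lgamma(N - k + 1)
            + k * math.log(p) + (N - k) * math.log(1 - p))

def rate_function(x, p):
    """Large-deviation rate function: KL divergence of Bernoulli(x) from Bernoulli(p)."""
    return x * math.log(x / p) + (1 - x) * math.log((1 - x) / (1 - p))

p, x = 0.5, 0.7
for N in (10, 100, 1000, 10000):
    k = round(x * N)
    # -(1/N) log P approaches the N-independent rate function as N grows
    print(N, -log_binom_pmf(N, k, p) / N, rate_function(x, p))
```

The first printed column is the scale and the last is the structure; the middle column converges to the last with corrections of order (log N)/N.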

Large-deviations for population processes are handled with the Hamilton-Jacobi theory Bertini:macro_NEThermo:09 following from the time dependence of the cumulant-generating function (CGF), and its Legendre duality Amari:inf_geom:01 to a large-deviation function called the effective action Smith:LDP_SEA:11 . Macrostates are identified with the distributions that can be assigned large-deviation probabilities from a system’s stationary distribution. Freedom to study any CGF makes this definition, although concrete, flexible enough to require choosing what Gell-Mann and Lloyd GellMann:EC:96 ; GellMann:eff_complx:04 term “a judge”. The resulting definition, however, does not depend on whether the system has any underlying mechanics or conservation laws, explicit or implicit.
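The Legendre duality between a cumulant-generating function and a large-deviation function can be sketched in the simplest static setting. The example below assumes a Poisson variable with mean lam, whose CGF is lam(e^θ − 1), and recovers its rate function numerically by maximizing θx − CGF(θ). This is only a one-variable illustration with hypothetical function names; the paper's effective action is the time-dependent, multi-variable counterpart and is not computed here.

```python
import math

def cgf_poisson(theta, lam):
    """Cumulant-generating function of a Poisson variable with mean lam."""
    return lam * (math.exp(theta) - 1.0)

def legendre_dual(x, lam, lo=-10.0, hi=10.0, iters=200):
    """Maximize theta*x - CGF(theta) by ternary search (the objective is concave)."""
    objective = lambda th: th * x - cgf_poisson(th, lam)
    for _ in range(iters):
        a = lo + (hi - lo) / 3.0
        b = hi - (hi - lo) / 3.0
        if objective(a) < objective(b):
            lo = a
        else:
            hi = b
    return objective(0.5 * (lo + hi))

def rate_poisson(x, lam):
    """Closed-form Legendre dual: x log(x/lam) - x + lam."""
    return x * math.log(x / lam) - x + lam

print(legendre_dual(3.0, 2.0), rate_poisson(3.0, 2.0))
```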

To make contact with multi-level dynamics and problems of interest in stochastic thermodynamics Seifert:stoch_thermo_rev:12 ; Polettini:open_CNs_I:14 ; Polettini:stoch_macro_thermo:16 , while retaining a definite notation, Sec. IV assumes generators of the form used for Chemical Reaction Networks (CRNs) Horn:mass_action:72 ; Feinberg:notes:79 ; Krishnamurthy:CRN_moments:17 ; Smith:CRN_moments:17 . The finite-to-infinite mapping that relates macrostates to microstates has counterparts for CRNs in maps from the generator matrix to the transition matrix, and from mass-action macroscopic currents to probability currents between microstates.

This section formally distinguishes the Lyapunov Fermi:TD:56 and large-deviation Ellis:ELDSM:85 roles of entropy, and shows how the definition of macrostates from Sec. III first introduces scale-dependence in the state function entropy that was not present in the Lyapunov function for arbitrary multi-scale models of Sec. II. In the Hamilton-Jacobi representation, Lyapunov and large-deviation entropy changes occur within different manifolds and have different interpretations. These differences are subordinate to the more fundamental difference between the entropy state function and entropy as a functional on arbitrary distributions over microstates. A properly formulated 2nd law, which is never violated, is computed from both deterministic and fluctuation macrostate-entropies. The roles of Hartley informations Hartley:information:28 for stationary states in stochastic thermodynamics Speck:HS_heat_FT:05 ; Seifert:FDT:10 ; Seifert:stoch_thermo_rev:12 enter naturally as macrostate relative entropies.

Sec. V derives the proofs of monotonicity of the intrinsic and extrinsic entropy changes from Sec. II, using a cycle decomposition of currents in the stationary distribution. [Footnote 6] Whether only cycles or more complex hyperflows Andersen:generic_strat:14 are required in a basis for macroscopic currents distinguishes complexity classes for CRNs. Because cycles are always a sufficient basis in the microstate space, the breakdown of a structural equivalence between micro- and macro-states for complex CRNs occurs in just the terms responsible for the complex relations between state-function and global entropies derived in Sec. IV.

Footnote 6: The decomposition is related to that used by Schnakenberg Schnakenberg:ME_graphs:76 to compute dissipation in the stationary state, but more cycles are required in order to compute dynamical quantities.

Sec. VI illustrates two uses of the intensive/extensive decomposition of entropy changes. It studies a simple model of polymerization and hydrolysis under competing spontaneous and driven reactions in an environment that can be in various states of disequilibrium. First a simple linear model of the kind treated by Schnakenberg Schnakenberg:ME_graphs:76 is considered, in a limit where one elementary reaction becomes strictly irreversible. Although the housekeeping entropy rate becomes uninformative because it is referenced to a diverging chemical potential, this is a harmless divergence analogous to a scale divergence of an extensive potential. A Legendre dual to the entropy rate remains regular, reflecting the existence of a thermodynamics-of-events about which energy conservation, although present on the path to the limit, asymptotically is not a source of any relevant constraints.

A second example introduces autocatalysis into the coupling to the disequilibrium environment, so that the system can become bistable. The components of entropy rate usually attributed to the “environment” omit information about the interaction of reactions responsible for the bistability, and attribute too much of the loss of large-deviation accessibility to the environment. Housekeeping entropy rate gives the correct accounting, recognizing that part of the system’s irreversibility depends on the measure for system relative entropy created by the interaction.

Sec. VII offers an alternative characterization of thermodynamic descriptions when conservation and reversibility are not central. In 20th century physics the problem of the nature and source of macroworlds has taken on a clear formulation, and provides an alternative conceptual center for thermodynamics to relations between work and heat. System decomposition and the conditional independence structures within the loss of large-deviation accessibility replace adiabatic transformation and heat flow as the central abstractions to describe irreversibility, and define the entropy interpretation of the Hartley information. Such a shift in view will be needed if path ensembles are to be put on an equal footing with state ensembles to create a fully non-equilibrium thermodynamics. The alternative formulation is meant to support the development of a native thermodynamics of stochastic rule-based systems and a richer phenomenology of living states.

II Multi-level systems

To provide a concrete class of examples for the large-deviation relations that can arise in multi-scale systems, we consider systems with natural levels, such that within each level a given system may be represented by a discrete population process. The population process in turn admits leading-exponential approximations of its large-deviation behavior in the form of a continuous dynamical system. These dynamical systems are variously termed momentum-space WKB approximations Assaf:mom_WKB:17 , Hamilton-Jacobi representations Bertini:macro_NEThermo:09 , or eikonal expansions Freidlin:RPDS:98 . They exist for many other classes of stochastic process besides the one assumed here Martin:MSR:73 ; Kamenev:DP:02 , and may be obtained either directly from the Gärtner-Ellis theorem Ellis:ELDSM:85 for cumulant-generating functions (the approach taken here), by direct WKB asymptotics, or through saddle-point methods in 2-field functional integrals.

A connection between levels is made by supposing that the large-deviation behavior possesses one or more fixed points of the dynamical system. These are coarse-grained to become the elementary states in a similar discrete population process one level up in the scaling hierarchy. Nonlinearities in the dynamical system that produce multiple isolated, metastable fixed points are of particular interest, as transitions between these occur on timescales that are exponentially stretched, in the large-deviation scale factor, relative to the relaxation times. The resulting robust criterion for separation of timescales will be the basis for the thermodynamic limits that distinguish levels and justify the coarse-graining.

II.0.1 Micro to macro, within and between levels

Models of this kind may be embedded recursively in scale through any number of levels. Here we will focus on three adjacent levels, diagrammed in Fig. 1, on the separation of timescales within a level, and on the coarse-grainings that define the maps between levels. The middle level, termed the mesoscale, will be represented explicitly as a stochastic process, and all results that come from large-deviations scaling will be derived within this level. The microscale one level below, and the macroscale one level above, are described only as needed to define the coarse-graining maps of variables between adjacent levels. Important properties such as bidirectionality of escapes from metastable fixed points in the stationary distribution, which the large-deviation analysis in the mesoscale supplies as properties of elementary transitions in the macroscale, will be assumed self-consistently as inputs from the microscale to the mesoscale.

Within the description at a single level, the discrete population states will be termed microstates, as is standard in (both classical and stochastic) thermodynamics. Microstates are different in kind from macrostates, which correspond to a sub-class of distributions (defined below), and from fixed points of the dynamical system. There is no unique recipe for coarse-graining descriptions to reduce dimensionality in a multi-scale system, because the diversity of stochastic processes is vast. Here, to obtain a manageable terminology and class of models, we limit to cases in which the dynamical-system fixed points at one level can be put in correspondence with the microstates at the next higher level. Table 1 shows the terms that arise within, and correspondences between, levels.

| macroscale | mesoscale | microscale |
|---|---|---|
| | microstate | H-J fixed point |
| | thermalization of the microscale | H-J relaxation trajectories |
| | elementary transitions between microstates | H-J first-passages |
| | arbitrary distribution on microstates | coarse-grained distribution on fixed points |
| | macrostate (distribution); H-J variables | |
| microstate | H-J fixed point | |
| … | … | |

II.1 Models based on population processes

The following elements furnish a description of a system:

The multiscale distribution:

The central object of study is any probability distribution $\rho$, defined down to the smallest scale in the model, and the natural coarse-grainings of $\rho$ produced by the dynamics. To simplify notation, we will write $\rho$ for the general distribution, across all levels, and let the indexing of $\rho$ indicate which level is being used in a given computation.

Fast relaxation to fixed points at the microscale:

The counterpart, in our analysis of the mesoscale, to Prigogine’s Glansdorff:structure:71 ; Prigogine:MT:98 assumption of local equilibrium in a bath, is fast relaxation of the distribution on the microscale, to a distribution with modes around the fixed points. In the mesoscale these fixed points become the elementary population states indexed $n$, and the coarse-grained probability distribution is denoted $\rho_n$. If a population consists of individuals of types indexed $p \in 1, \ldots, P$, then $n \equiv [n_p]$ is a vector in which the non-negative integer coefficient $n_p$ counts the number of individuals of type $p$.

Elementary transitions in the mesoscale:

First-passages occurring in the (implicit) large-deviations theory, between fixed points $n$ and $n'$ at the microscale, appear in the mesoscale as elementary transitions $n \rightarrow n'$. In Fig. 1, the elementary states are grid points and elementary transitions occur along lines in the grid in the middle layer. We assume as input to the mesoscale the usual condition of weak reversibility Polettini:open_CNs_I:14 , meaning that if an elementary transition $n \rightarrow n'$ occurs with nonzero rate, then the transition $n' \rightarrow n$ also occurs with nonzero rate. Weak reversibility is a property we will derive for first passages within the mesoscale, motivating its adoption at the lower level.

Thermalization in the microscale:

The elementary transitions $n \rightarrow n'$ are separated by typical intervals exponentially longer in some scale factor than the typical time in which a single transition completes. (In the large-deviation theory, they are instantons.) That timescale separation defines thermalization at the microscale, and makes microscale fluctuations conditionally independent of each other, given the index $n$ of the basin in which they occur. Thermalization also decouples components $\rho_n$ in the mesoscale at different $n$ except through the allowed elementary state transitions.

A System/Environment partition within the mesoscale:

Generally, in addition to considering the thermalized microscale a part of the “environment” in which mesoscale stochastic events take place, we will choose some partition of the type-indices $p$ to distinguish one subset, called the system ($s$), from one or more other subsets that also form part of the environment ($e$). Unlike the thermalized microscale, the environment-part in the mesoscale is slow and explicitly stochastic, like the system. The vector $n$ indexes a tensor product space $s \otimes e$, so we write $n \equiv (n_s, n_e)$.

Marginal and conditional distributions in system and environment:

On $s \otimes e$, $\rho_n$ is a joint distribution. A marginal distribution $\rho^s$ for the system is defined by $\rho^s_{n_s} \equiv \sum_{n | n_s} \rho_n$, where $n | n_s$ fixes the $s$ component of $n$ in the sum. From the joint and the marginal a conditional distribution $\rho^{e|s}$ at each $n_s$ is given by $\rho^{e|s}_n \equiv \rho_n / \rho^s_{n_s}$. The components of $\rho^{e|s}$ can fill the role often given to chemostats in open-system models of CRNs. Here we keep them as explicit distributions, potentially having dynamics that can respond to changes in $\rho^s$.
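The marginal and conditional constructions described above can be sketched for a small discrete state space. The joint distribution, the dictionary layout keyed by pairs (n_s, n_e), and the function names are all hypothetical choices made for illustration; nothing here is specific to the paper's models.

```python
from collections import defaultdict

# Hypothetical joint distribution rho[(n_s, n_e)] over two system states
# and three environment states; the numbers are illustrative only.
rho = {
    (0, 0): 0.10, (0, 1): 0.20, (0, 2): 0.10,
    (1, 0): 0.30, (1, 1): 0.05, (1, 2): 0.25,
}

def system_marginal(joint):
    """Sum the joint distribution over the environment component n_e."""
    marginal = defaultdict(float)
    for (n_s, _n_e), prob in joint.items():
        marginal[n_s] += prob
    return dict(marginal)

def env_conditional(joint, marginal):
    """Divide each joint entry by the system marginal at its n_s."""
    return {n: prob / marginal[n[0]] for n, prob in joint.items()}

rho_s = system_marginal(rho)
rho_e_given_s = env_conditional(rho, rho_s)
print(rho_s)
print(rho_e_given_s)
```

Each slice of the conditional at fixed n_s is itself a normalized distribution over n_e, which is what lets it stand in for a chemostat-like boundary condition on the system.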

Notations involving pairs of indices:

Several different sums over the pairs of indices associated with state transitions appear in the following derivations. To make equations easier to read, the following notations are used throughout:

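$\langle n, n' \rangle$ is an unordered pair of indices.

$\sum_n \sum_{n' \neq n}$ counts every pair in both orders.

$\sum_{\langle n, n' \rangle}$ counts every unordered pair once.

Therefore for any function $f(n, n')$, $\sum_n \sum_{n' \neq n} f(n, n') = \sum_{\langle n, n' \rangle} \left[ f(n, n') + f(n', n) \right]$.

$\sum_{n | n_s}$ is a sum on the $n_e$ component of $n$.

$\sum_{\langle n, n' \rangle | n_s}$ counts all unordered pairs with common $s$-component $n_s$.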

II.2 Stochastic description within the mesoscale

The coarse-grained distribution at the mesoscale evolves in time under a master equation

$$\dot{\rho}_n = \sum_{n'} T_{n n'} \rho_{n'} \tag{1}$$

Here and below, $\dot{\rho}$ indicates the time derivative. The generator $T$ is a stochastic matrix on the left, which we write $1^T T = 0$, where $1^T$ is the row-vector on index $n$ corresponding to the uniform (unnormalized) measure. $w_{n n'} \equiv T_{n n'}$ (following a standard notation Polettini:open_CNs_I:14 ) is the component of $T$ giving the transition rate from state $n'$ to state $n$.

For all of what follows, it will be necessary to restrict to systems that possess a stationary, normalizable distribution denoted $\underline{\rho}$, satisfying $T \underline{\rho} = 0$. The stationary distribution will take the place of conservation laws as the basis for the definition of macrostates. Moreover, if $\underline{\rho}$ is everywhere continuous, and the number of distinct events generating transitions in the mesoscale (in a sense made precise below) is finite, first passages between fixed points corresponding to modes of $\underline{\rho}$ will occur at rates satisfying a condition of detailed balance. The joint, marginal, and conditional stationary distributions are denoted $\underline{\rho}_n$, $\underline{\rho}^s_{n_s}$, $\underline{\rho}^{e|s}_n$.
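A minimal sketch of a master-equation generator and its stationary distribution follows, assuming a four-state birth-death chain with hypothetical rates. The generator is stored with the convention that entry [n][m] is the rate from state m to state n, so that columns sum to zero and the generator acts on column vectors. For a one-dimensional chain the stationary distribution can be written down by balancing each edge, which avoids any linear-algebra dependency; this shortcut is a property of the toy model, not of general population processes.

```python
def build_generator(birth, death):
    """T[n][m] is the rate from state m to state n; every column sums to zero.
    birth[m] is the rate m -> m+1 and death[m] is the rate m+1 -> m."""
    size = len(birth) + 1
    T = [[0.0] * size for _ in range(size)]
    for m in range(size - 1):
        T[m + 1][m] += birth[m]
        T[m][m] -= birth[m]
        T[m][m + 1] += death[m]
        T[m + 1][m + 1] -= death[m]
    return T

def stationary_birth_death(birth, death):
    """Stationary distribution from edge-by-edge balance of a 1D chain."""
    weights = [1.0]
    for b, d in zip(birth, death):
        weights.append(weights[-1] * b / d)
    total = sum(weights)
    return [x / total for x in weights]

def apply_generator(T, rho):
    """Right-hand side of the master equation: (T rho)_n = sum_m T[n][m] rho[m]."""
    size = len(rho)
    return [sum(T[n][m] * rho[m] for m in range(size)) for n in range(size)]

birth = [2.0, 1.0, 0.5]   # illustrative rates only
death = [1.0, 1.5, 2.0]
T = build_generator(birth, death)
rho_bar = stationary_birth_death(birth, death)
print(rho_bar, apply_generator(T, rho_bar))
```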

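The marginal stochastic process on $s$:

The system-marginal distribution $\rho^s$ evolves under a master equation $\dot{\rho}^s = T^s \rho^s$, for which the transition matrix $T^s$ has components that are functions of the instantaneous environmental distribution, given by

$$T^s_{n_s n'_s} \equiv \sum_{n | n_s} \sum_{n' | n'_s} w_{n n'} \rho^{e|s}_{n'} \tag{2}$$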
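The way a marginal transition matrix inherits its components from the instantaneous conditional distribution of the environment can be checked numerically. The sketch below assumes a 2 x 2 joint state space with arbitrary positive rates (all hypothetical), builds the off-diagonal system rates by averaging joint rates over the environment conditional, and confirms that the resulting marginal master equation reproduces the marginal of the joint dynamics.

```python
import itertools

# States n = (n_s, n_e) on a 2 x 2 grid; all numbers are illustrative.
states = list(itertools.product(range(2), range(2)))

# w[(n, m)] is a hypothetical rate for the transition from state m to state n.
w = {(n, m): 1.0 + 0.5 * n[0] + 0.25 * m[1]
     for n, m in itertools.permutations(states, 2)}

rho = {(0, 0): 0.1, (0, 1): 0.3, (1, 0): 0.4, (1, 1): 0.2}

def joint_rho_dot(w, rho):
    """Right-hand side of the joint master equation, as gain minus loss."""
    return {n: sum(w[(n, m)] * rho[m] - w[(m, n)] * rho[n]
                   for m in rho if m != n)
            for n in rho}

def system_marginal(values):
    """Sum a function on joint states over the environment component."""
    out = {}
    for (n_s, _), v in values.items():
        out[n_s] = out.get(n_s, 0.0) + v
    return out

def marginal_rates(w, rho):
    """Off-diagonal system rates (a, b): rate from system state b to a, averaged
    over the instantaneous conditional distribution of the environment given b."""
    rho_s = system_marginal(rho)
    rates = {}
    for (n, m), rate in w.items():
        if n[0] != m[0]:
            key = (n[0], m[0])
            rates[key] = rates.get(key, 0.0) + rate * rho[m] / rho_s[m[0]]
    return rates

rho_s = system_marginal(rho)
T_s = marginal_rates(w, rho)
marginal_of_joint = system_marginal(joint_rho_dot(w, rho))
marginal_direct = {a: sum(T_s[(a, b)] * rho_s[b] - T_s[(b, a)] * rho_s[a]
                          for b in rho_s if b != a)
                   for a in rho_s}
print(marginal_of_joint, marginal_direct)
```

Transitions that change only the environment component cancel in the marginal, which is why only rates with differing system components enter `marginal_rates`.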

Time-independent overall stationary distribution as a reference:

Now we make an assumption that is crucial to being able to define a Lyapunov function for the whole multi-level system distribution $\rho$: namely, that the parameters in the mesoscale transition matrix $T$ and hence the stationary distribution $\underline{\rho}$ are time-independent. All dynamics is made explicit as dynamics of distributions $\rho^s$ and $\rho^{e|s}$, but the explicitly-written distributions are the only source of dynamics: once the microscale has thermalized, there are no further un-written sources of time-dependence in the system.

Detailed balance propagating up from the microscale:

Finally we assume, propagating up from the microscale, a condition of detailed balance that we will prove as a property of first-passages in the mesoscale, and then apply recursively:

$$w_{n' n} \, \underline{\rho}_n = w_{n n'} \, \underline{\rho}_{n'} \tag{3}$$

Note that condition (3) is not an assumption of microscopic reversibility in whatever faster stochastic process is operating below in the microscale. To understand why, using constructions that will be carried out explicitly within the mesoscale, see that even with rate constants $w_{n n'}$ satisfying Eq. (3), the system-marginal transition rates (2) need not satisfy a condition of detailed balance. Indeed we will want to be able to work in limits for the environment’s conditional distributions $\rho^{e|s}$ in which some transitions can be made completely irreversible: that is, $T^s_{n_s n'_s} \neq 0$ but $T^s_{n'_s n_s} = 0$. Even from such irreversible dynamics among microstates, first-passage rates with detailed balance in the stationary distribution will result, and it is that property that is assumed in Eq. (3).

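From these assumptions on $T$ and $\underline{\rho}$, it follows that the relative entropy of any distribution $\rho$ from the stationary distribution $\underline{\rho}$ is non-decreasing,

$$
\begin{aligned}
- \frac{d}{dt} D ( \rho \parallel \underline{\rho} )
&= \sum_n \sum_{n' \neq n} \log \left[ \frac{ ( \rho / \underline{\rho} )_{n'} }{ ( \rho / \underline{\rho} )_n } \right] w_{n n'} \rho_{n'} \\
&= \sum_{\langle n, n' \rangle} \log \left[ \frac{ ( \rho / \underline{\rho} )_{n'} }{ ( \rho / \underline{\rho} )_n } \right] \left( w_{n n'} \rho_{n'} - w_{n' n} \rho_n \right) \\
&= \sum_{\langle n, n' \rangle} \log \left[ \frac{ ( \rho / \underline{\rho} )_{n'} }{ ( \rho / \underline{\rho} )_n } \right] \left[ ( \rho / \underline{\rho} )_{n'} - ( \rho / \underline{\rho} )_n \right] w_{n n'} \underline{\rho}_{n'} \ge 0 .
\end{aligned}
\tag{4}
$$

The result is elementary for systems with detailed balance, because each term in the third line of Eq. (4) is individually non-negative. We use relative entropy to refer to minus the Kullback-Leibler divergence $D ( \rho \parallel \underline{\rho} )$ of $\rho$ from $\underline{\rho}$ Cover:EIT:91 , to follow the usual sign convention for a non-decreasing entropy.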
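The monotonicity of relative entropy under detailed balance can be illustrated with a short simulation. The sketch assumes a four-state birth-death chain with hypothetical rates, integrates the master equation with explicit Euler steps, and records minus the Kullback-Leibler divergence from the stationary distribution at each step. With a time step small enough that each Euler update is itself a stochastic map preserving the stationary distribution, the recorded values cannot decrease.

```python
import math

birth = [2.0, 1.0, 0.5]   # rate m -> m+1 (illustrative)
death = [1.0, 1.5, 2.0]   # rate m+1 -> m (illustrative)
size = len(birth) + 1

# Stationary distribution of the chain from edge-by-edge balance.
weights = [1.0]
for b, d in zip(birth, death):
    weights.append(weights[-1] * b / d)
rho_bar = [x / sum(weights) for x in weights]

def euler_step(rho, dt):
    """One explicit Euler step of the master equation, written via edge currents."""
    new = list(rho)
    for m in range(size - 1):
        current = birth[m] * rho[m] - death[m] * rho[m + 1]  # net flow m -> m+1
        new[m] -= dt * current
        new[m + 1] += dt * current
    return new

def relative_entropy(rho, ref):
    """Minus the Kullback-Leibler divergence of rho from ref (always <= 0)."""
    return -sum(p * math.log(p / q) for p, q in zip(rho, ref) if p > 0)

rho = [1.0, 0.0, 0.0, 0.0]   # start concentrated on one state
dt = 0.01                    # dt times the largest exit rate stays below 1
history = []
for _ in range(2000):
    history.append(relative_entropy(rho, rho_bar))
    rho = euler_step(rho, dt)

print(history[0], history[-1])
```

The sequence rises from the log of the stationary weight of the initial state toward zero, the value attained only at the stationary distribution.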

II.3 System-environment decompositions of the entropy change

The exchange of heat for work is central to classical thermodynamics because energy conservation is a constraint on joint configurations across sub-systems, either multiple thermal systems in contact or a mechanical subsystem having only deterministic variables, some of which set the boundary conditions on thermal subsystems that also host fluctuations. Only the state-function entropy, however, is a “function” of energy in any sense, so the only notion of a limiting partition of irreversible effects between subsystems derivable from energy conservation is the one defined by adiabatic transformations passing through sequences of macrostates.

In more general cases, with or without conservation laws, the boundary conditions on a system are imposed only through the elements of the marginal transition matrix $T^s$. The problem remains, of understanding how one subsystem can limit entropy change in another through a boundary, but it is no longer organized with reference to adiabatic transformations.

We wish to understand what constitutes a thermodynamically natural decomposition of the mesoscale process into a system and an environment. A widely-adopted decomposition Seifert:stoch_thermo_rev:12 ; Polettini:open_CNs_I:14 ; Polettini:stoch_macro_thermo:16 for systems with energy conservation [Footnote 7] separates a Shannon entropy of $\rho^s$ from heat generation associated with ratios of forward and reverse transition rates by the local equilibrium assumption for the bath. We begin by writing down this information/heat decomposition, and arguing that it is not the natural partition with respect to irreversibility.

Footnote 7: The decomposition is the same one used to define an energy cost of computation Landauer:IHGCP:61 ; Bennett:TC:82 by constructing logical states through the analogues to heat engines Szilard:MD:29 ; Smith:NS_thermo_II:08 .

Entropy relative to the stationary state rather than Shannon entropy:

The following will differ from the usual construction in replacing Shannon entropy with a suitable relative entropy, without changing the essence of the decomposition. Two arguments can be given for preferring the relative entropy. First, it would be clear, for a system with a continuous state space in which $\rho^s$ would become a density, that the logarithm of a dimensional quantity is undefined. Hence some reference measure is always implicitly assumed. A uniform measure is not a coordinate-invariant concept, and a measure that is uniform in one coordinate system makes those coordinates part of the system specification. Since discrete processes are often used as approximations to continuum limits, the same concerns apply. Second, and more generally, a logarithmic entropy unit is always given meaning with respect to some measure. Only for systems such as symbol strings, for which a combinatorial measure on integers is the natural measure, is Shannon entropy the corresponding natural entropy. For other cases, such as CRNs, the natural entropy is relative entropy referenced to the Gibbs equilibrium Smith:NS_thermo_I:08 , and its change gives the dissipation of chemical work. For the processes described here, the counterpart to Shannon entropy that solves these consistency requirements, but does not yet address the question of naturalness, is the relative entropy referenced to the steady state marginal $\underline{\rho}^s$. Its time derivative is given by

| (5) |

The quantity (5) need not be either positive or negative in general.
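The coordinate-dependence argument for preferring relative entropy can be checked on a case with closed-form answers. The following is a minimal sketch, assuming one-dimensional Gaussian densities and a hypothetical change of units by a factor `c`; none of these names or values come from the construction in the text. It shows that the differential Shannon entropy shifts by the logarithm of the unit change, while the relative entropy between two densities does not.

```python
import math

def gaussian_entropy(sigma):
    """Differential (Shannon) entropy of N(mu, sigma^2), in nats."""
    return 0.5 * math.log(2.0 * math.pi * math.e * sigma**2)

def gaussian_kl(mu_p, sigma_p, mu_q, sigma_q):
    """Relative entropy D(p || q) between two 1-D Gaussians, in nats."""
    return (math.log(sigma_q / sigma_p)
            + (sigma_p**2 + (mu_p - mu_q)**2) / (2.0 * sigma_q**2)
            - 0.5)

def rescale(mu, sigma, c):
    """Parameters of the same Gaussian expressed in the coordinate y = c * x."""
    return c * mu, c * sigma

c = 1000.0        # hypothetical unit change (assumption for illustration)
p = (0.3, 1.0)    # (mean, standard deviation) of the distribution of interest
q = (0.0, 2.0)    # (mean, standard deviation) of the reference distribution
p_scaled = rescale(*p, c)
q_scaled = rescale(*q, c)

# Shannon entropy depends on the coordinate; relative entropy does not.
entropy_shift = gaussian_entropy(p_scaled[1]) - gaussian_entropy(p[1])
kl_before = gaussian_kl(*p, *q)
kl_after = gaussian_kl(*p_scaled, *q_scaled)
print(entropy_shift, math.log(c), kl_before, kl_after)
```

The shift in Shannon entropy equals `log(c)`, which is the implicit uniform reference measure making itself visible; the relative entropy carries its reference measure explicitly and is unchanged.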

A second term that separates out of the change in total relative entropy (4) comes from changes in environmental states through events that do not result in net change of the system state. [Footnote 8: Note may depend on the system index-component shared by both and , so these rates can depend on system state. Catalysis acts through such dependencies.] The relative entropy of the conditional distribution at a particular index from its stationary reference has time derivative

| (6) |

Unlike the change of system relative entropy (5), Eq. (6) is non-negative term-by-term, in the same way as Eq. (4).

The remaining terms to complete the entropy change (4) come from joint transformations in system and environment indices and , and in usual treatments have the interpretation of dissipated heats. [Footnote 9: When more than one environment transition couples to the same system transition, there can be reasons to further partition these terms; an example is given in Sec. VI.1.] They are functions of the pair of indices . An average change in relative entropy of the environment, over all processes that couple to a given system state-change, is

| (7) |

(Note that if we had wished to use the un-referenced Shannon entropy in place of the relative entropy (5) – for instance, in an application to digital computing – we could shift the measures to the dissipation term to produce what is normally considered the “environmental” heat dissipation, given by

| (8) |

The quantity (8) is regarded as a property of the environment (both slow variables and the thermal bath) because it is a function only of the transition rates and of the marginal distributions .)

II.3.1 The information/heat decomposition of total relative-entropy change

Eq. (4) is decomposed in terms of the quantities in equations (5–7) as

| (9) |

The total is non-negative and the third line is independently non-negative, as already mentioned. The sum of the first two lines is also non-negative, a result that can be proved as a fluctuation theorem for what is normally called “total entropy change” Esposito:fluct_theorems:10 . [Footnote 10: More detailed proofs for a decomposition of the same sum will be given below.]

Here we encounter the first property that makes a decomposition of a thermal system “natural”. The term in is not generally considered, and it does not need to be considered, because thermal relaxation at the microscale makes transitions in at fixed conditionally independent of transitions that change . Total relative entropy changes as a sum of two independently non-decreasing contributions.

The first and second lines in Eq. (9) are not likewise independently non-negative. Negative values of the second or first line, respectively, describe phenomena such as randomization-driven endothermic reactions, or heat-driven information generators. To the extent that they do not use thermalization in the microscale to make the system and environment conditionally independent, as the third term in Eq. (9) is independent, we say they do not provide a natural system/environment decomposition.

II.3.2 Relative entropy referencing the system steady state at instantaneous parameters

Remarkably, a natural division does exist, based on the housekeeping heat introduced by Hatano and Sasa Hatano:NESS_Langevin:01 . The decomposition uses the solution to , which would be the stationary marginal distribution for the system at the instantaneous value of . As for the whole-system stationary distribution , we restrict to cases in which exists and is normalizable. [Footnote 11: This can be a significant further restriction when we wish to study limits of sequences of environments to model chemostats. For systems with unbounded state spaces, such as arise in polymerization models, it is quite natural for a chemostat-driven system to possess no normalizable steady-state distributions. In such cases other methods of analysis must be used Esposito:copol_eff:10 .]

Treating as fixed and considering only the dynamics of with as a reference, we may consider a time derivative in place of Eq. (5). Note that the rates in the transition matrix no longer need satisfy any simplified balance condition in relation to , such as detailed balance. Non-negativity of was proved by Schnakenberg Schnakenberg:ME_graphs:76 by an argument that applies to discrete population processes with normalized stationary distributions of the kind assumed here. We will derive a slightly more detailed decomposition proving this result for the case of CRNs, in a later section.
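The monotone behavior of the relative entropy referenced to the stationary distribution, in the absence of detailed balance, can be illustrated numerically. The following is a minimal sketch, assuming a hypothetical three-state generator with a driven cycle and a forward-Euler discretization of the master equation; the rate values, step size, and function names are illustrative assumptions and are not taken from the text.

```python
import numpy as np

# Hypothetical rates (assumption): rates[j, i] is the rate from state i to state j.
# The cycle 0 -> 1 -> 2 -> 0 is favored, so detailed balance does not hold.
rates = np.array([[0.0, 0.5, 2.0],
                  [3.0, 0.0, 0.1],
                  [0.2, 1.5, 0.0]])

def generator(rates):
    """Master-equation generator: off-diagonal rates minus total escape rates."""
    return rates - np.diag(rates.sum(axis=0))

def stationary(T):
    """Solve T @ pi = 0 with sum(pi) = 1 by replacing one balance equation."""
    A = T.copy()
    A[-1, :] = 1.0
    b = np.zeros(T.shape[0])
    b[-1] = 1.0
    return np.linalg.solve(A, b)

def relative_entropy(rho, ref):
    """D(rho || ref) in nats; assumes strictly positive entries."""
    return float(np.sum(rho * np.log(rho / ref)))

T = generator(rates)
pi = stationary(T)

# Detailed balance would require rates[j, i] * pi[i] == rates[i, j] * pi[j].
flux = rates * pi                      # flux[j, i] = rate(i -> j) * pi[i]
breaks_detailed_balance = not np.allclose(flux, flux.T)

# Forward-Euler steps; for this small dt, (I + dt * T) is a stochastic matrix.
dt = 0.01
rho = np.array([0.9, 0.05, 0.05])
history = [relative_entropy(rho, pi)]
for _ in range(2000):
    rho = rho + dt * (T @ rho)
    history.append(relative_entropy(rho, pi))

print(breaks_detailed_balance, history[0], history[-1])
```

Each Euler step is a stochastic map that preserves `pi`, so the recorded relative entropy cannot increase from one step to the next, even though the stationary state carries a net probability current.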

The dissipation term that complements the change in is obtained by shifting the “environmental” entropy change (8) by to obtain the housekeeping heat [Footnote 12: These terms are used because this is how they are known. No energy interpretation is assumed here, so a better term would be “housekeeping entropy change”.]

| (10) |

Non-negativity of Eq. (10) is implied by a fluctuation theorem Speck:HS_heat_FT:05 , and a time-local proof with interesting further structure for CRNs will be given below.
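Non-negativity of a housekeeping entropy rate can be checked numerically for a small chain. The sketch below assumes the form commonly used in the stochastic-thermodynamics literature for processes in which every transition has a nonzero reverse rate: the sum over ordered pairs of states of probability current weighted by the log-ratio of forward to backward stationary fluxes. That form, and the rate values and function names, are assumptions made for illustration; the organization of Eq. (10) in terms of Hartley information may differ.

```python
import numpy as np

def stationary_distribution(rates):
    """Stationary vector of the generator built from rates[j, i] = rate(i -> j)."""
    T = rates - np.diag(rates.sum(axis=0))
    A = T.copy()
    A[-1, :] = 1.0                 # replace one balance equation by normalization
    b = np.zeros(len(rates))
    b[-1] = 1.0
    return np.linalg.solve(A, b)

def housekeeping_rate(rates, rho, pi):
    """Sum over i != j of rho[i] w(i->j) ln[ w(i->j) pi[i] / (w(j->i) pi[j]) ]."""
    total = 0.0
    n = len(rho)
    for i in range(n):
        for j in range(n):
            if i != j:
                forward = rates[j, i] * pi[i]
                backward = rates[i, j] * pi[j]
                total += rho[i] * rates[j, i] * np.log(forward / backward)
    return float(total)

# Hypothetical driven three-state cycle (assumption), without detailed balance.
driven = np.array([[0.0, 0.5, 2.0],
                   [3.0, 0.0, 0.1],
                   [0.2, 1.5, 0.0]])
pi_driven = stationary_distribution(driven)

# Evaluate the rate on several arbitrary (non-stationary) distributions.
rng = np.random.default_rng(0)
samples = [housekeeping_rate(driven, rng.dirichlet(np.ones(3)), pi_driven)
           for _ in range(20)]

# Symmetric rates satisfy detailed balance with a uniform stationary state.
balanced = driven + driven.T
pi_balanced = stationary_distribution(balanced)
balanced_value = housekeeping_rate(balanced, np.array([0.7, 0.2, 0.1]), pi_balanced)

print(min(samples), balanced_value)
```

The rate is non-negative for every sampled distribution and vanishes identically when the rates satisfy detailed balance, which is the sense in which it measures the embedding of the system in a driving environment rather than the system's own relaxation.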

The total change in relative entropy (4) is then the sum

| (11) |

Each line in Eq. (11) is now independently non-negative. The first measures a gain of entropy within the system , conditionally independent of changes in the environment given the marginal transition matrix . The third measures a gain of entropy in the environment independent of any changes in the system at all. The second, housekeeping entropy rate, measures a change of entropy in the environment that is conditionally independent of changes of entropy within the system, given as represented in . Any of the terms may be changed, holding the conditioning data or , or omitted, without changing the limits for the others. They respect the conditional independence created by thermalization in the microscale, and by that criterion constitute a natural decomposition of the system.

II.3.3 Intrinsic and extrinsic thermodynamics

We take and as a specification of the intrinsic thermodynamics of the system , analogous to the role of intrinsic curvature of a manifold in differential geometry. The vector (indexed by pairs of system indices) of housekeeping entropy differentials, , correspondingly defines the way is thermodynamically embedded in the mesoscale system, analogous to the role of components of an embedding curvature for a submanifold within a larger manifold.

II.3.4 System Hartley information as a temporal connection

The natural decomposition (11) differs from the information/heat decomposition (9) in what is regarded as inherent to the system versus the environment. The attribution of Shannon entropy as a “system” property follows from the fact that it involves only , and its change counts only actual transitions with rate . Likewise, the “environment” heat (8) is a function only of the actual distribution and the realized currents.

Those events that occur within or , however, fail to capture the additional features of : that specific transitions are coupled as a system, and that they have the dependence on of Eq. (2). The stationary distribution is the function of reflecting its status as a system.

The differences between the three entropy-change terms (7,8,10) are differences of the Hartley informations Hartley:information:28 , respectively for or . In the natural decomposition (11), they are not acted upon by the time derivative, but rather define the tangent plane of zero change for terms that are acted upon by the transition matrix, and resemble connection coefficients specifying parallel transport in differential geometry.

III Hamilton-Jacobi theory for large deviations

The central concepts in thermodynamics are that of the macrostate, and of the entropy as a state function from which properties of macrostates and constraints on their transformations are derived. In classical thermodynamics Fermi:TD:56 ; Kittel:TP:80 , macrostates are introduced in association with average values of conserved quantities (e.g. energy, particle numbers), because conservation laws naturally generate conditional independence between subsystems in contact, given a simple boundary condition (the partition of the conserved quantity between them).

Here we wish to separate the construction that defines a macrostate from the properties that make one or another class of macrostates dynamically robust in a given system (conservation laws are important for the latter). The defining construction can be quite general, but it must in all cases create a dimensional reduction by an indefinite (or in the limit infinite) factor, from the dimensionality of the microstate space that is definite but arbitrarily large, to the dimensionality of a space of macrostate variables that is fixed and independent of the dimensionality of microstates. Only dimensional reductions of this kind are compatible with the large-deviation definition of macroworlds as worlds in which structure can be characterized asymptotically separate from scale Touchette:large_dev:09 . Robustness can then be characterized separately within the large-deviation analysis in terms of closure approximations or spectra of relaxation times for various classes of macrostates.

Dimensional reduction by an indefinite degree is achieved by associating macrostates with particular classes of distributions over microstates: namely, those distributions produced in exponential families to define generating functions. The coordinates in the tilting weights that define the family are independent of the dimension of the microstate space for a given family and become the intensive state variables (see Amari:inf_geom:01 ). They are related by Legendre transform to deviations that are the dual extensive state variables. Relative entropies such as , defined as functionals on arbitrary distributions, and dual under Legendre transform to suitable cumulant-generating functions, become state functions when restricted to the distributions for macrostates. The extensive state variables are their arguments and the intensive state variables their gradients. Legendre duality leads to a system of Hamiltonian equations Bertini:macro_NEThermo:09 for time evolution of macrostate variables, and from these the large-deviation scaling behavior, timescale structure, and moment closure or other properties of the chosen system of macrostates are derived.

In the treatment of Sec. II, the relative entropy increased deterministically without reference to any particular level of system timescales, or the size-scale factors associated with various levels. As such it fulfilled the Lyapunov role of (minus) the entropy, but not the large-deviation role that is the other defining characteristic of entropy Ellis:ELDSM:85 ; Touchette:large_dev:09 . The selection of a subclass of distributions as macrostates introduces level-dependence, scale-dependence, and the large-deviation role of entropy, and lets us construct the relation between the Lyapunov and large-deviation roles of entropy for macroworlds, which are generally distinct. As a quantity capable of fluctuations, the macrostate entropy can decrease along subsets of classical trajectories; these fluctuations are the objects of study in stochastic thermodynamics. The Hamiltonian dynamical system is a particularly clarifying representation for the way it separates relaxation and fluctuation trajectories for macrostates into distinct sub-manifolds. In distinguishing the unconditional versus conditional nature of the two kinds of histories, it shows how these macro-fluctuations are not “violations” of the 2nd law, but rather a partitioning of the elementary events through which the only properly formulated 2nd law is realized. [Footnote 13: See a brief discussion making essentially this point in Sec. 1.2 of Seifert:stoch_thermo_rev:12 . Seifert refers to the 2nd law as characterizing “mean entropy production”, in keeping with other interpretations of entropies such as the Hartley information in terms of heat. The characterization adopted here is more categorical: the Hartley function and its mean, Shannon information, are not quantities with the same interpretation; likewise, the entropy change (11) is the only entropy relative to the boundary conditions in that is the object of a well-formulated 2nd law. The “entropy productions” resulting from the Prigogine local-equilibrium assumption are conditional entropies for macrostates defined through various large-deviation functions, shown explicitly below.]

III.1 Generating functions, Liouville equation, and the Hamilton-Jacobi construction for saddle points

III.1.1 Relation of the Liouville operator to the cumulant-generating function

The -dimensional Laplace transform of a distribution on discrete population states gives the moment-generating function (MGF) for the species-number counts . For time-dependent problems, it is convenient to work in the formal Doi operator algebra for generating functions, in which a vector of raising operators are the arguments of the MGF, and a conjugate vector of lowering operators are the formal counterparts to . MGFs are written as vectors in a Hilbert space built upon a ground state , and the commutation relations of the raising and lowering operators acting in the Hilbert space are .

The basis vectors corresponding to specific population states are denoted . They are eigenvectors of the number operators with eigenvalues :

| (12) |
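The operator relations used here can be made concrete with finite matrices. The following is a minimal sketch, assuming a single species, a hypothetical truncation of the population at `N` states, and the usual Doi convention in which the raising operator shifts the count without a combinatorial factor while the lowering operator multiplies by the count. It checks the number-operator eigenvalues, the commutator, and the fact that the projection built from the exponential of the lowering operator assigns weight one to every number state.

```python
import numpy as np

N = 8  # hypothetical truncation (assumption): population states n = 0, ..., N-1

# Raising operator: a_dag |n) = |n+1), with no combinatorial factor.
a_dag = np.zeros((N, N))
for n in range(N - 1):
    a_dag[n + 1, n] = 1.0

# Lowering operator: a |n) = n |n-1).
a = np.zeros((N, N))
for n in range(1, N):
    a[n - 1, n] = float(n)

# Number operator and commutator on the truncated space.
number_op = a_dag @ a
commutator = a @ a_dag - a_dag @ a

# Row vector (0| exp(a): a is nilpotent, so the exponential series terminates.
glauber = np.zeros(N)
term = np.eye(N)                 # holds a^k / k! at the start of iteration k
for k in range(N):
    glauber += term[0]           # row 0 picks out the component along (0|
    term = term @ a / (k + 1)

print(np.diag(number_op))
print(np.diag(commutator))
print(glauber)
```

The commutator equals the identity except in the last diagonal entry, which is an artifact of the truncation and not a property of the algebra. Because `glauber` has every entry equal to one, contracting it with a vector of probabilities returns the total probability, which is why this projection serves as the trace in the generating-function representation.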

Through by-now-standard constructions Smith:LDP_SEA:11 , the master equation is converted to a Liouville equation for time evolution of the MGF,

| (13) |

in which the Liouville operator is derived from the elements of the matrix .

To define exponential families and a cumulant-generating function (CGF), it is convenient to work with the Laplace transform with an argument that is a vector of complex coefficients. The corresponding CGF, , [Footnote 14: We adopt the sign for corresponding to the free energy in thermodynamics. Other standard notations, such as for the CGF Amari:inf_geom:01 , are unavailable because they collide with notations used in the representation of CRNs below.] for which the natural argument is , is constructed in the Doi Hilbert space as the inner product with a variant on the Glauber norm,

| (14) |

will be called the tilt of the exponential family, corresponding to its usage in importance sampling Siegmund:IS_seq_tests:76 .

An important quantity will be the vector of expectations of the number operators in the tilted distribution

| (15) |

Time evolution of the CGF follows from Eq. (13), as

| (16) |

Under the saddle-point or leading-exponential approximation that defines the large-deviation limit (developed further below), the expectation of in Eq. (16) is replaced by the same function at classical arguments

| (17) |

From coherent-state to number-potential coordinates:

With a suitable ordering of operators in (with all lowering operators to the right of any raising operator), is the exact value assigned to in the expectation (16), and only the value for depends on saddle-point approximations. In special cases, where are eigenstates of known as coherent states, the assignment to is also exact. Therefore the arguments of in Eq. (17) are called coherent-state coordinates.

However, , which we henceforth denote by , is the affine coordinate system in which the CGF is locally convex, [Footnote 15: also provides the affine coordinate system in the exponential family, which defines contravariant coordinates in information geometry Amari:inf_geom:01 .] and it will be preferable to work in coordinates , which we term number-potential coordinates, because for applications in chemistry has the dimensions of a chemical potential. We abbreviate exponentials and other functions acting component-wise on vectors as , and simply assign . Likewise, is defined in either coordinate system by mapping its arguments: .

III.1.2 Legendre transform of the CGF

To establish notation and methods, consider first distributions that are convex with an interior maximum in . Then the gradient of the CGF

| (18) |

gives the mean (15) in the tilted distribution.
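The identity between the gradient of the CGF and the mean in the tilted distribution can be verified directly for a small example. The sketch below assumes a single species, an arbitrary hypothetical distribution on a finite range of counts, and the conventional sign in which the CGF is the logarithm of the moment-generating function; the free-energy sign convention adopted in the text would flip the overall sign. All names and numerical values are illustrative assumptions.

```python
import numpy as np

n_values = np.arange(30)                        # population counts 0..29
weights = (n_values + 1.0) * 0.8 ** n_values     # hypothetical, arbitrary shape
rho = weights / weights.sum()

def log_mgf(theta):
    """log of sum_n rho_n exp(theta * n): conventional-sign CGF (assumption)."""
    return float(np.log(np.sum(rho * np.exp(theta * n_values))))

def tilted_distribution(theta):
    """Member of the exponential family through rho with tilt theta."""
    w = rho * np.exp(theta * n_values)
    return w / w.sum()

theta = 0.15
h = 1e-5

# Central finite difference for the gradient of the CGF.
numerical_gradient = (log_mgf(theta + h) - log_mgf(theta - h)) / (2.0 * h)

# Mean of the count in the tilted distribution.
tilted_mean = float(np.sum(n_values * tilted_distribution(theta)))
untilted_mean = float(np.sum(n_values * rho))

print(numerical_gradient, tilted_mean, untilted_mean)
```

A positive tilt moves the mean above its untilted value, and the finite-difference gradient agrees with the tilted mean to within discretization error.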

The stochastic effective action is the Legendre transform of , defined as

| (19) |

For distributions over discrete states, to leading exponential order, is a continuously-indexed approximation to minus the log-probability: . Its gradient recovers the tilt coordinate ,

| (20) |

and the CGF is obtained by inverse Legendre transform.

| (21) |

The time evolution of can be obtained by taking a total time derivative of Eq. (19) along any trajectory, and using Eq. (18) to cancel the term in . The partial derivative that remains, evaluated using Eq. (17), gives

| (22) |

Eq. (22) is of Hamilton-Jacobi form, with filling the role of the Hamiltonian. (The reason for this sign correspondence, which affects nothing in the derivation, will become clear below.)

Multiple modes, Legendre-Fenchel transform, and locally-defined extrema

Systems with interesting multi-level structure do not have or globally convex, but rather only locally convex. For these the Legendre-Fenchel transform takes the place of the Legendre transform in Eq. (19), and if constructed with a single coordinate , may have discontinuous derivative.

For these one begins, rather than with the CGF, with , evaluated as a line integral of Eq. (20) in basins around the stationary points at . Each such basin defines an invertible pair of functions and . We will not be concerned with the large-deviation construction for general distributions in this paper, which is better carried out using a path integral. We return in a later section to the special case of multiple metastable fixed points in the stationary distribution, and the modes associated with the stationary distribution , and provide a more complete treatment.

III.1.3 Hamiltonian equations of motion and the action

Partial derivatives with respect to and commute, so the relation (20), used to evaluate of Eq. (22), gives the relation

| (23) |

The dual construction for the time dependence of from Eq. (18) gives

| (24) |

The evolution equations (23,24) describe stationary trajectories of an extended-time Lagrange-Hamilton action functional which may be written in either coherent-state or number-potential coordinates, as [Footnote 16: The same action functionals are arrived at somewhat more indirectly via 2-field functional integral constructions such as the Doi-Peliti method.]

| (25) |

From the form of the first term in either line, it is clear that the two sets of coordinates relate to each other through a canonical transformation Goldstein:ClassMech:01 .
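The structure of the Hamiltonian equations can be seen in the simplest population process. The following is a minimal sketch, assuming a hypothetical one-species birth-death process (constant birth rate, per-capita death rate) written in number-potential coordinates, with the conventions that the rate of change of the count is the derivative of the Hamiltonian with respect to the tilt and the rate of change of the tilt is minus its derivative with respect to the count. These sign conventions, the rate values, and the function names are assumptions for illustration and may differ from those of Eqs. (23,24) by overall signs.

```python
import math

ALPHA = 20.0          # hypothetical birth rate for  0 -> A  (assumption)
BETA = 1.0            # hypothetical per-capita death rate for  A -> 0  (assumption)
LAM = ALPHA / BETA    # fixed point of the deterministic relaxation

def hamiltonian(theta, n):
    """Large-deviation Hamiltonian in number-potential coordinates (theta, n)."""
    return ALPHA * (math.exp(theta) - 1.0) + BETA * n * (math.exp(-theta) - 1.0)

def n_dot(theta, n):
    """dn/dt = dH/dtheta."""
    return ALPHA * math.exp(theta) - BETA * n * math.exp(-theta)

def theta_dot(theta, n):
    """dtheta/dt = -dH/dn."""
    return -BETA * (math.exp(-theta) - 1.0)

def escape_theta(n):
    """Tilt on the nontrivial zero-energy branch: theta = ln(n / LAM)."""
    return math.log(n / LAM)

def f_divergence(n):
    """n ln(n / LAM) - (n - LAM), whose gradient in n should equal escape_theta."""
    return n * math.log(n / LAM) - (n - LAM)

checks = []
for n in (5.0, 20.0, 60.0):
    theta = escape_theta(n)
    h = 1e-4
    gradient = (f_divergence(n + h) - f_divergence(n - h)) / (2.0 * h)
    checks.append({
        "energy": hamiltonian(theta, n),        # vanishes on the escape branch
        "escape_velocity": n_dot(theta, n),     # time-reverse of relaxation
        "relaxation_velocity": n_dot(0.0, n),   # theta = 0 gives mass action
        "theta_dot": theta_dot(theta, n),
        "theta_dot_from_n": n_dot(theta, n) / n,  # d/dt of ln(n / LAM)
        "theta": theta,
        "gradient": gradient,
    })

for row in checks:
    print(row)
```

For this linear process the escape branch is the exact time-reverse of relaxation, and the tilt along it is the gradient of the divergence between the count and its fixed-point value. That coincidence is special to this example and need not hold for nonlinear or non-complex-balanced networks.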

Circulation-free vector field of for the stationary distribution

In order for to be a continuum approximation to , if exists and is smooth everywhere, the vector field obtained from stationary trajectories of the action (25) must have zero circulation in order to be a well-defined gradient through Eq. (20). To check that this is the case, consider the increment of under a small interval under Eq. (23):

| (26) |

The gradient in of therefore increments in time as

| (27) |

Contraction of Eq. (27) with the antisymmetric symbol in and vanishes

| (28) |

so the circulation of is the same everywhere as at the fixed points.

From Eq. (20), it is required to be the case that , the inverse of the Fisher metric Amari:inf_geom:01 , symmetric by construction and thus giving . The only difference between the fixed point and any other point is that for distant points we are relying on Hamiltonian trajectories to evaluate , whereas at the fixed point the Fisher metric may be calculated by means not relying on the large-deviation saddle-point approximation. Therefore Eq. (28) may be read as a check that symmetry of the Fisher metric is preserved by Hamiltonian trajectories directly from the symmetric partial derivative of in Eq. (27).

III.2 The stationary distribution and macrostates

Up to this point only the Lyapunov role (4) of the relative entropy has been developed. While the increase of has the appearance of the classical 2nd law, we can understand from three observations that this relative entropy is not the desired generalization of the entropy state function of classical thermodynamics to express the phenomenology of multi-level systems:

1. The relative entropy is a functional on arbitrary distributions, like the Shannon entropy that is a special case. It identifies no concept of macrostate, and has no dependence on state variables.

2. In a multilevel system that may have arbitrarily fine-grained descriptions, there is no upper limit to , and no appearance of the system scale at any particular level, which characterizes state-function entropies.

3. Eq. (4) describes a deterministic increase of relative entropy; the large-deviation role of entropy as a log-probability for macrostate fluctuations Ellis:ELDSM:85 does not appear.

The step that has not yet been taken in our construction is, of course, the identification of a macrostate concept. Here we depart from the usual development based on conservation laws, and follow Gell-Mann and Lloyd GellMann:EC:96 ; GellMann:eff_complx:04 in claiming that the concept of macrostate is not inherent in features of a system’s dynamics, but requires one to explicitly choose a procedure for aggregation or coarse-graining – what they call a “judge” – as part of the commitment to which phenomenology is being described.

We will put forth the definition of macrostates as the tilted distributions arising in generating functions for the stationary distribution . In the case of generating functions for number, these are the distributions appearing in Eq. (14), which we will denote by . They are the least-improbable distributions with a given non-stationary mean to arise through aggregate microscopic fluctuations, and therefore dominate the construction of the large-deviation probability.

The extensive state variable associated with this definition of macrostate is the tilted mean from Eq. (18), which we will denote . If and are sharply peaked – the limit in which the large-deviation approximation is informative – the relative entropy of the macrostate is dominated at the saddle point of , where , and thus

| (29) |

The general relative entropy functional, applied to the macrostate, becomes the entropy state function , which takes as its argument the extensive state variable . Moreover, because probability under is concentrated on configurations with the scale that characterizes the system, tilted means that are not suppressed by very large exponential probabilities will have comparable scale. If is also the scale factor in the large-deviations function (a property that may or may not hold, depending on the system studied), then in scale, and the entropy state function now has the characteristic scale of the mesoscale level of the process description.

The three classes of distributions that enter a thermodynamic description are summarized in Table 2.

| notation | definition | comment |
|---|---|---|
| , , | whole-system, -marginal, -conditional distributions in the global stationary state | |
| | marginal system steady-state distribution | function of instantaneous environment conditional distribution |
| | macrostate tilted to saddle-point value | defined relative to global stationary distributions ; may be defined for whole-system, , or |

III.2.1 Coherent states, dimensional reduction, and the -divergence

A special case, which is illustrative for its simplicity and which arises for an important sub-class of stochastic CRNs, is the case when is a coherent state, an eigenvector of the lowering operator . Coherent states are the generating functions of product-form Poisson distributions, or cross-sections through such products if the transitions in the population process satisfy conservation laws. They are known Anderson:product_dist:10 to be general solutions for CRN steady states satisfying a condition termed complex balance, and the fixed points associated with such stationary distributions are also known to be unique and interior (no zero-expectations for any ) Feinberg:notes:79 .

Let be the eigenvalue of the stationary coherent state: . Then the mean in the tilted distribution lies in a simple exponential family, (component-wise), and the tilted macrostate is also a coherent state: .

The logarithm of a product-form Poisson distribution in Stirling’s approximation is given by

| (30) |

, known as the -divergence, is a generalization of the Kullback-Leibler divergence to measures such as which need not have a conserved sum. The Lyapunov function from Eq. (4) reduces in the same Stirling approximation to

| (31) |

giving in Eq. (29). On coherent states, the Kullback-Leibler divergence on distributions, which may be of arbitrarily large dimension, reduces to the -divergence on their extensive state variables which have dimension .
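The reduction from a log-probability over counts to a divergence on the mean can be checked numerically in the one-species case. The sketch below assumes a Poisson distribution with a hypothetical mean `lam` and uses the conventional-sign CGF (the logarithm of the moment-generating function), which may differ in sign from the convention of the text. It compares three quantities: the closed-form divergence, a numerical Legendre transform of the Poisson CGF, and the exact negative log-probability, whose difference from the divergence is the sub-leading Stirling correction.

```python
import math

def f_divergence(n, lam):
    """n ln(n / lam) - (n - lam): divergence between unnormalized measures."""
    return n * math.log(n / lam) - (n - lam)

def neg_log_poisson(n, lam):
    """Exact -log of the Poisson probability, using lgamma for log(n!)."""
    return lam - n * math.log(lam) + math.lgamma(n + 1.0)

def legendre_of_poisson_cgf(n, lam, lo=-10.0, hi=10.0, iterations=200):
    """max over theta of [theta * n - lam * (exp(theta) - 1)] by ternary search.

    The objective is strictly concave in theta, so ternary search converges.
    """
    def objective(theta):
        return theta * n - lam * (math.exp(theta) - 1.0)
    for _ in range(iterations):
        left = lo + (hi - lo) / 3.0
        right = hi - (hi - lo) / 3.0
        if objective(left) < objective(right):
            lo = left
        else:
            hi = right
    return objective(0.5 * (lo + hi))

lam = 50.0   # hypothetical stationary mean (assumption)
results = []
for n in (20.0, 50.0, 90.0):
    divergence = f_divergence(n, lam)
    legendre = legendre_of_poisson_cgf(n, lam)
    stirling_gap = neg_log_poisson(n, lam) - divergence
    leading_correction = 0.5 * math.log(2.0 * math.pi * n)
    results.append((n, divergence, legendre, stirling_gap, leading_correction))
    print(n, divergence, legendre, stirling_gap, leading_correction)
```

The Legendre transform reproduces the divergence, and the exact log-probability differs from it only by a term logarithmic in the count, which is negligible at leading exponential order.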

The coherent states play a much more general role than their role as exact solutions for the restricted case of complex-balanced CRNs. In the Doi-Peliti 2-field functional integral formalism Doi:SecQuant:76 ; Doi:RDQFT:76 ; Peliti:PIBD:85 ; Peliti:AAZero:86 for generating functionals over discrete-state stochastic processes, the coherent states form an over-complete basis in the Peliti representation of unity. The saddle-point approximation on trajectories, which yields the classical actions (25) and the resulting Hamilton-Jacobi equations, approximates expectations in the exact distribution by those in the nearest coherent-state basis element. Observables in macrostates are thus mapped to observables in coherent states, although in cases when the coherent state is not an exact solution, the saddle-point condition may be sensitive to which observable is being evaluated.

III.2.2 Multiple fixed points and instantons

Systems with multiple metastable fixed points correspond to non-convex and thus multiple modes. For these, monotone decrease of in Eq. (4) does not entail monotonicity of the -divergence in Eq. (31). In such systems, first passages between basins of attraction are solutions to the Hamiltonian equations (23,24) with momentum coordinate . Along these increases, and that increase is what is sometimes termed the “violation of the 2nd law”.

For unimodal , the large-deviation trajectories have a separate use and interpretation from the relaxation trajectories at that give the classical 2nd law in Eq. (49). For multi-modal a special sub-class of trajectories, those known as instantons and responsible for first-passages between fixed-points Cardy:Instantons:78 ; Coleman:AoS:85 , must be used to refine the interpretation of classical relaxation trajectories. That refinement relates the transient increases in the large-deviation function to the deterministic 2nd law (4) that continues to apply.

This section briefly introduces the Legendre duality that defines first-passage probabilities in metastable systems, arriving at the chain rule for entropy that separates the roles of classical and instanton trajectories. Let be a fixed point of the Hamiltonian equations for , and denote by the values of classical state variables obtained along trajectories from . Call these escape trajectories. The set of all is partitioned among basins of repulsion from fixed points. Saddle points and escape separatrices are limit points of escapes from two or more basins.

Within one such basin, we may construct as a Legendre transform of a summand in the overall CGF, as

| (32) |

ranges only over the values that arise on escape trajectories from , which generally are bounded Smith:CRN_CTM:20 , and within that range

| (33) |

Next, let the function [Footnote 17: Note that the maps and need not be reflexive. That is, we may have for any , because escape and relaxation separatrices may differ.] denote the fixed point to which a trajectory with relaxes, starting from . From the large-deviation identification of , recognize that the large-deviation function for the stationary distribution is given by , where denotes the special macrostate for which the stationary value is a fixed-point value .

Then the expression (29) for the Kullback-Leibler divergence appearing in Eq. (4) may be approximated to leading exponential order as

| (34) |

Eq. (34) is the chain rule for relative entropy. is the conditional entropy of the macrostate relative to the macrostate to which it is connected by a Hamiltonian trajectory. is the unconditioned entropy of relative to .

Relaxation along trajectories describes a classical 2nd law only for the conditional part of the relative entropy. Deterministic relaxation of the unconditioned entropy is derived from the refinement of the (as it turns out, only apparent) classical trajectory with an instanton sum. The general method is described in depth in Cardy:Instantons:78 and Coleman:AoS:85 Ch.7, using path integrals that require too much digression to fit within the scope of this paper. The structure of the instanton sum, and in particular the way it creates a new elementary stochastic process at the macroscale for which is the Lyapunov function, will be explained in Sec. IV.2.2 following Smith:LDP_SEA:11 , after the behavior of along and trajectories has been characterized.

IV Population processes with CRN-form generators

The results up to this point apply to general processes with discrete state spaces, normalizable stationary distributions, and some kind of tensor-product structure on states. For the next steps we restrict to stochastic population processes that can be written in a form equivalent to Chemical Reaction Networks Horn:mass_action:72 ; Feinberg:notes:79 ; Gunawardena:CRN_for_bio:03 . CRNs are an expressive enough class to include many non-mechanical systems such as evolving Darwinian populations Smith:evo_games:15 , and to implement algorithmically complex processes Andersen:NP_autocat:12 . Yet they possess generators with a compact and simple structure Krishnamurthy:CRN_moments:17 ; Smith:CRN_moments:17 , in which similarities of microstate and macrostate phenomena are simply reflected in the formalism.

IV.1 Hypergraph generator of state-space transitions

Population states give counts of individuals, grouped according to their types which are termed species. Stochastic Chemical Reaction Networks assume independent elementary events grouped into types termed reactions. Each reaction event removes a multiset [Footnote 18: A multiset is a collection of distinct individuals, which may contain more than one individual from the same species.] of individuals termed a complex from the population, and places some (generally different) multiset of individuals back into it. The map from species to complexes is called the stoichiometry of the CRN. Reactions occur with probabilities proportional per unit time to rates. The simplest rate model, used here, multiplies a half-reaction rate constant by a combinatorial factor for proportional sampling without replacement from the population to form the complex, which in the mean-field limit leads to mass-action kinetics.

Complexes will be indexed with subscripts , and an ordered pair such as labels the reaction removing and creating . With these conventions the Liouville-operator representation of the generator from Eq. (13) takes the form Krishnamurthy:CRN_moments:17 ; Smith:CRN_moments:17

| (35) |

are half-reaction rate constants, organized in the second line into an adjacency matrix on complexes. is a column vector with components that, in the Doi algebra, produce the combinatorial factors and state shifts reflecting proportional sampling without replacement. Here is the matrix of stoichiometric coefficients, with entry giving the number of individuals of species that make up complex . Further condensing notation, are column vectors in index , and are row vectors on index . is understood as the component-wise product of powers over the species index . is a transpose (row) vector, and vector and matrix products are used in the second line of Eq. (35).

The generator (35) defines a hypergraph Berge:hypergraphs:73 in which is the adjacency matrix on an ordinary graph over complexes known as the complex graph Gunawardena:CRN_for_bio:03 ; Baez:QTRN_eq:14 . The stoichiometric vectors , defining complexes as multisets, make the edges in directed hyper-edges relative to the population states that are the Markov states for the process. (Footnote 19: Properly, the generator should be called a “directed multi-hypergraph” because the complexes are multisets rather than sets.) The concurrent removal or addition of complexes is the source of both expressive power and analytic difficulty provided by hypergraph-generators.

A master equation (1) acting in the state space rather than on the generating function may be written in terms of the same operators, as

| (36) |

Here a formal shift operator is used in place of an explicit sum over shifted indices, and creates shifts by the stoichiometric vector . refers to the matrix with diagonal entries given by the components .

In the master equation the combinatorial factors must be given explicitly. These are written as a vector with components that are falling factorials from , denoted with the underscore as .

The matrix elements of Eq. (1) may be read off from Eq. (36) in terms of elements in the hypergraph, as

| (37) |

between all pairs separated by the stoichiometric difference vector . If multiple reactions produce transitions between the same pairs of states, the aggregate rates become

| (38) |

In this way a finite adjacency matrix on complexes may generate an infinite-rank transition matrix , which is the adjacency matrix for an ordinary graph over states. We will see that for CRNs, the hypergraph furnishes a representation for macrostates similar to the representation given by the simple graph for microstates.
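As a rough illustration of how a finite set of rate constants on complexes generates a large transition matrix on states, the following sketch builds a truncated matrix for a hypothetical one-species network. The truncation at `n_max`, the column convention (entry `T[n_new, n_old]` is the rate from `n_old` to `n_new`), the network, and all numerical values are assumptions of the sketch, not the paper's choices.

```python
import numpy as np


def falling_factorial(n, k):
    """n (n-1) ... (n-k+1); zero whenever n < k."""
    result = 1
    for i in range(k):
        result *= n - i
    return result


def transition_matrix(complex_sizes, rate_constants, n_max):
    """Truncated transition matrix for one species.

    complex_sizes[i] is the number of individuals in complex i.
    rate_constants[(i, j)] is the rate constant for the reaction that
    removes complex i and creates complex j.
    """
    size = n_max + 1
    T = np.zeros((size, size))
    for (i, j), k in rate_constants.items():
        shift = complex_sizes[j] - complex_sizes[i]  # stoichiometric difference
        for n in range(size):
            target = n + shift
            if 0 <= target <= n_max:  # drop moves that leave the truncation
                rate = k * falling_factorial(n, complex_sizes[i])
                T[target, n] += rate  # reactions with equal shifts aggregate
                T[n, n] -= rate       # probability leaving state n
    return T


# Hypothetical network on complexes {0, A, 2A, 3A}:
# 0 <-> A and 2A <-> 3A, so two reactions share each shift of +1 or -1.
complex_sizes = [0, 1, 2, 3]
rate_constants = {(0, 1): 2.0, (1, 0): 1.0, (2, 3): 0.5, (3, 2): 0.1}
T = transition_matrix(complex_sizes, rate_constants, n_max=20)
column_sums = T.sum(axis=0)
```

Four rate constants fill a 21 by 21 matrix here, and the matrix would grow without bound if the truncation were lifted. The `+=` accumulation plays the role of the aggregate rate when several reactions connect the same pair of states.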

From Eq. (38), marginal transition rates for the system may be defined using the average (2) over . Note that the dependence of the activity products on species within the system remains that of a falling factorial, even if the average over activities of species in the environment is complicated. Denote by and the restrictions of the activity and shift functions to the species in the system , and by the rate constants after computing the sum in Eq. (2) over the index for species in the environment.

IV.1.1 Descaling of transition matrices for microstates

Proofs of monotonic change, whether of total relative entropy (1) or for the components of entropy change partitioned out in Sec. II.3, take a particularly simple form for CRNs generated by finitely many reactions. They make use of finite cycle decompositions of the current through any microstate or complex, which are derived from descaled adjacency matrices.

The descaling that produces a transition matrix that annihilates the uniform measure on both the left and the right is

| (39) |

for the whole mesoscale, or

| (40) |

for the subsystem , by definition of the stationary states. in Eq. (40) is the adjacency matrix on complexes that gives rate constants from Eq. (2). These descalings are familiar as the ones leading to the dual time-reversed generators in the fluctuation theorem for housekeeping heat Speck:HS_heat_FT:05 .
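The defining property of the descaling can be checked numerically on a small example. The sketch below assumes a convention in which the transition matrix acts on column vectors of probabilities, so that descaling is right-multiplication by the diagonal matrix of the stationary distribution; the three-state rate matrix and all function names are hypothetical.

```python
import numpy as np


def generator_from_rates(W):
    """Build a generator whose columns sum to zero from off-diagonal
    rates, with W[i][j] the rate of the jump from state j to state i."""
    W = np.asarray(W, dtype=float)
    return W - np.diag(W.sum(axis=0))


def stationary_distribution(T):
    """Right null vector of T, normalized to unit sum."""
    eigvals, eigvecs = np.linalg.eig(T)
    idx = np.argmin(np.abs(eigvals))  # eigenvalue closest to zero
    pi = np.real(eigvecs[:, idx])
    return pi / pi.sum()


def descale(T, pi):
    """Descaled matrix T diag(pi) (assumed convention)."""
    return T @ np.diag(pi)


# Hypothetical three-state process without detailed balance.
W = [[0, 1, 3],
     [2, 0, 1],
     [1, 4, 0]]
T = generator_from_rates(W)
pi = stationary_distribution(T)
T_hat = descale(T, pi)

left_action = T_hat.sum(axis=0)   # uniform row vector applied on the left
right_action = T_hat.sum(axis=1)  # uniform column vector applied on the right
```

Both `left_action` and `right_action` vanish up to rounding: the left action because probability is conserved, and the right action because the uniform vector is mapped by the descaling onto the stationary distribution.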

We return to use the descaled microstate transition matrices (39,40) in monotonicity proofs for general CRNs in Sec. V, but before doing that, we use the descaling in the state space to motivate an analogous and simpler descaling for macrostates at the level of the hypergraph. That descaling illustrates the cycle decomposition on finitely many states, though it only yields the -Divergence of Eq. (31) as a Lyapunov function for the complex-balanced CRNs.

IV.1.2 Descaling of transition matrices for macrostates

The coherent states, which are the moment-generating functions of Poisson distributions and their duals in the Doi Hilbert space, are defined as

| (41) |

where is a vector of (generally complex) numbers and is its Hermitian conjugate. They are eigenstates respectively of the raising and lowering operators with eigenvalues and .

On the constant trajectories corresponding to a fixed point of the Hamiltonian equations (23, 24), and , the fixed-point number. We may descale the coherent-state parameters and , which correspond to classical state variables, by defining

| (42) |

In vector notation, , . The scaling (42) was introduced by Baish Baish:DP_duality:15 to study dualities of correlation functions in Doi-Peliti functional integrals.

The compensating descaling of the adjacency matrix

| (43) |

may be compared with Eq. (39) for . A similar descaling may be done for using . Henceforth we omit the duplicate notation, and carry out proofs with respect to whatever is the stationary distribution for a given adjacency matrix and hypergraph.

IV.1.3 Equations of motion and the manifold

The Hamiltonian equations derived by variation of the action (44) can be written

| (45) |

The general solution is shown first in each line and then particular limiting forms are shown.

The sub-manifold contains all relaxation trajectories for any CRN. It is dynamically stable because is a stochastic matrix on the left, and thus in the second line of Eq. (45). The image of is called the stoichiometric subspace, and its dimension is denoted .

An important simplifying property of some CRNs is known as complex balance of the stationary distributions. (Footnote 20: Complex balance can be ensured at all parameters if a topological character of the CRN known as deficiency equals zero, but may also be true for nonzero-deficiency networks for suitably tuned parameters. In this work nothing requires us to distinguish these reasons for complex balance.) It is the condition that the fixed point and not only . Since corresponds to and thus , and in the first line of Eq. (45) at any . Complex-balanced CRNs (with suitable conditions on to ensure ergodicity on the complex network Gunawardena:CRN_for_bio:03 ) always possess unique interior fixed points Feinberg:notes:79 ; Feinberg:def_01:87 and simple product-form stationary distributions at these fixed points Anderson:product_dist:10 .

For non-complex-balanced stationary solutions, although escapes may have as initial conditions, that value is not dynamically maintained. Recalling the definition (12) of the number operator, the field , so non-constant is required for instantons to escape from stable fixed points and terminate in saddle fixed points, in both of which limits .

All relaxations and also the escape trajectories from fixed points (the instantons) share the property that for a CRN with time-independent parameters. (Footnote 21: We can see that this must be true because is a Hamiltonian conserved under the equations of motion, and because instantons trace the values of and in the stationary distribution , which must then have to satisfy the Hamilton-Jacobi equation (22).) This submanifold separates into two branches, with for relaxations and for escapes.

IV.1.4 The Schlögl cubic model to illustrate

The features of CRNs with multiple metastable fixed points are exhibited in a cubic 1-species model introduced by Schlögl Schlogl:near_SS_thermo:71 , which has been extensively studied Dykman:chem_paths:94 ; Krishnamurthy:CRN_moments:17 ; Smith:CRN_moments:17 as an example of dynamical bistability.

The reaction schema in simplified form is

| (46) |

We choose rate constants so that the mass-action equation for the number of particles, given by , is

| (47) |

The three fixed points are , of which and are stable, and is a saddle.

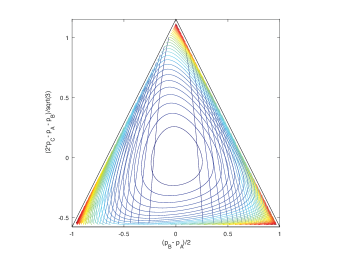

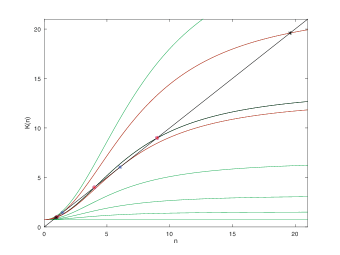

The relaxation and escape branches of the manifold are shown in Fig. 2. Because the Schlögl model is 1-dimensional, the condition fixes along escape trajectories. Because the stochastic model is a birth-death process, it is also exactly solvable vanKampen:Stoch_Proc:07 . We will return to the example in Sec. VI.2 to study properties of the intensive and extensive thermodynamic potentials for it.
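Because the process is a birth-death chain, its stationary distribution follows from a one-step recursion, which the sketch below evaluates. Everything specific in it is an assumption made for illustration: the reaction set (0 <-> A and 2A <-> 3A), the choice of rate constants so that the mean-field rate is the cubic (n1 - n)(n2 - n)(n3 - n), the fixed-point values 50, 150, 300, and the truncation at `n_max`. None of these is read from Eqs. (46, 47).

```python
import numpy as np


def schlogl_stationary(n1, n2, n3, n_max):
    """Stationary distribution of an assumed Schlogl-type birth-death
    chain on n = 0..n_max, computed in log space for stability."""
    # Assumed rate constants: coefficients of (n1 - n)(n2 - n)(n3 - n).
    k01 = n1 * n2 * n3                 # 0 -> A
    k10 = n1 * n2 + n1 * n3 + n2 * n3  # A -> 0
    k23 = n1 + n2 + n3                 # 2A -> 3A
    k32 = 1.0                          # 3A -> 2A
    n = np.arange(n_max + 1, dtype=float)
    birth = k01 + k23 * n * (n - 1)                   # rate n -> n + 1
    death = k10 * n + k32 * n * (n - 1) * (n - 2)     # rate n -> n - 1
    # Balance on each link: rho(n + 1) death(n + 1) = rho(n) birth(n).
    log_ratio = np.log(birth[:-1]) - np.log(death[1:])
    log_rho = np.concatenate(([0.0], np.cumsum(log_ratio)))
    log_rho -= log_rho.max()
    rho = np.exp(log_rho)
    return rho / rho.sum(), log_rho


def interior_modes(log_rho):
    """Indices that are strict local maxima of the log-probability."""
    return [
        m for m in range(1, len(log_rho) - 1)
        if log_rho[m] > log_rho[m - 1] and log_rho[m] > log_rho[m + 1]
    ]


rho, log_rho = schlogl_stationary(n1=50, n2=150, n3=300, n_max=600)
modes = interior_modes(log_rho)
```

Under these assumptions the distribution has two modes, one near each stable mean-field fixed point. They are displaced slightly from the mean-field values because the stochastic rates use falling factorials rather than powers.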

IV.2 Large-deviation and Lyapunov roles of the effective action

The role of entropy in the understanding of Boltzmann and Gibbs was that of a Lyapunov function Fermi:TD:56 ; Kittel:TP:80 , accounting for unidirectionality from microscopically reversible mechanics. The much later understanding of entropy in relation to fluctuations Ellis:ELDSM:85 ; Touchette:large_dev:09 – precisely the opposite of deterministic evolution – is that of a large-deviation function.

The chain rule in Eq. (34) of Sec. III.2.2 relates the conditional relative entropy associated with quasi-deterministic relaxation to an additional unconditioned entropy of metastable states that are stable fixed points of the Hamiltonian dynamical system. Here we complete the description of the relation between the Lyapunov and large-deviation roles of the macrostate entropy (29), and show how the sum over instantons rather than a single Hamiltonian trajectory results in deterministic increase of the unconditioned relative entropy . In the Hamilton-Jacobi representation this means constructing relations between the and the branches of the manifold. For special cases, such as CRNs with detailed balance or one-dimensional systems, the mapping is one of simple time reversal of the fields in trajectories. More generally, even for complex-balanced CRNs where the Lyapunov and large-deviation functions are the same, the relations between relaxation and escape trajectories become more variable.

IV.2.1 Convexity proof of the Lyapunov property of macrostate entropy in Hamilton-Jacobi variables

Begin with the dynamics of the relative entropy from Eq. (29), as the state variable evolves along a relaxation solution to the Hamiltonian equations of motion. The properties of follow from its construction as a large-deviation function along escape trajectories. From Eq. (20), the time derivative of along an escape trajectory is given by

| (48) |

Hamiltonian trajectories are least-improbable paths of fluctuation, so escapes are conditionally dependent along trajectories. The conditional probability to extend a path, having reached any position along the path, is always positive, giving in Eq. (48).

Next compute along a relaxation trajectory, for simplicity considering (an equivalent construction exists for in terms of ). The continuum limit of the relative entropy from Eq. (4) replaces , and continuously-indexed and are defined through the large-deviation functions.

Writing the CRN Liouville operator (35) in coherent-state arguments, the time dependence is evaluated as

| (49) |

In Eq. (49) abbreviates from Eq. (22), for the that is the continuum approximation of . The third through fifth lines expand explicitly in terms of rate constants following Eq. (35), to collocate all terms in in the Liouville operator. The fourth line expands and to linear order in in neighborhoods of the saddle point of . The matrix is the inverse of the Fisher metric that is the variance Amari:inf_geom:01 , so the product of the two is just the identity .

In the penultimate line of Eq. (49), is the value of for the escape trajectory passing through , and now is the velocity along the relaxation trajectory rather than the Hamilton-Jacobi escape solution at . So the net effect of the large-deviation approximation on relative entropy has been to replace escape with relaxation velocity vectors at a fixed value of Legendre dual to .

Lemma: .

Proof: The proof follows from four observations:

1. : As noted, for both escapes and relaxations, .

2. Convexity: Both the potential value for the escape trajectory, and the velocity of the relaxation trajectory, are evaluated at the same location . The Liouville function , with all , is convex on the -dimensional sub-manifold of fixed . is bounded above at fixed , and in order for cycles to be possible, shift vectors giving positive exponentials must exist for all directions of in the stoichiometric subspace. Therefore at large in every direction, and the region at fixed is bounded. The boundary at fixed is likewise convex with respect to as affine coordinates, and is its interior.

3. Chord: The vector is thus a chord spanning the submanifold of co-dimension 1 within the -dimensional manifold of fixed .

4. Outward-directedness: The equation of motion gives as the outward normal function to the surface . The outward normal at is the classical relaxation trajectory. Every chord of the surface lies in its interior, implying that for any , and thus .

The conditional part of the relative entropy, , is thus shown to be monotone increasing along relaxation trajectories, which is the Lyapunov role for the entropy state function familiar from classical thermodynamics. That increase ends when terminates in the trajectory fixed point .

IV.2.2 Instantons and the loss of large-deviation accessibility from first passages

The deterministic analysis of Eq. (49) is refined by the inclusion of instanton trajectories through the following sequence of observations, completing the discussion begun in Sec. III.2.2. Relevant trajectories are shown in Fig. 1.

1. The 2nd law as formulated in Eq. (4) is approximated in the large-deviation limit not by a single Hamiltonian trajectory, but by the sum of all Hamiltonian trajectories, from an initial condition. Along a single trajectory, could increase or decrease.

2. increases everywhere in the submanifold of the manifold , by Eq. (49). This is the classical increase of (relative) entropy of Boltzmann and Gibbs. decreases everywhere in the submanifold of the manifold , by Eq. (48). This is the construction of the log-probability for large deviations. These escape paths, however, simply lead to the evaluations of , the stationary distribution.

- 3.

-

4.